Abstract

The question of molecular similarity is core in cheminformatics and is usually assessed via a pairwise comparison based on vectors of properties or molecular fingerprints. We recently exploited variational autoencoders to embed 6M molecules in a chemical space, such that their (Euclidean) distance within the latent space so formed could be assessed within the framework of the entire molecular set. However, the standard objective function used did not seek to manipulate the latent space so as to cluster the molecules based on any perceived similarity. Using a set of some 160,000 molecules of biological relevance, we here bring together three modern elements of deep learning to create a novel and disentangled latent space, viz transformers, contrastive learning, and an embedded autoencoder. The effective dimensionality of the latent space was varied such that clear separation of individual types of molecules could be observed within individual dimensions of the latent space. The capacity of the network was such that many dimensions were not populated at all. As before, we assessed the utility of the representation by comparing clozapine with its near neighbors, and we also did the same for various antibiotics related to flucloxacillin. Transformers, especially when as here coupled with contrastive learning, effectively provide one-shot learning and lead to a successful and disentangled representation of molecular latent spaces that at once uses the entire training set in their construction while allowing “similar” molecules to cluster together in an effective and interpretable way.

1. Introduction

The relatively recent development and success of “deep learning” methods involving “large”, artificial neural networks (e.g., [1,2,3,4]) has brought into focus a number of important features that can serve to improve them further, in particular with regard to the “latent spaces” that they encode internally. One particular recognition is that the much greater availability of unlabeled than labelled (supervised learning) data can be exploited in the creation of such deep nets (whatever their architecture), for instance in variational autoencoders [5,6,7,8,9], or in transformers [10,11,12].

A second trend involves the recognition that the internal workings of deep nets can be rather opaque, and especially in medicine there is a desire for systems that explain precisely the features they are using in order to solve classification or regression problems. This is often referred to as “explainable AI” [13,14,15,16,17,18,19,20,21,22]. The most obviously explainable networks are those in which individual dimensions of the latent space more or less directly reflect or represent identifiable features of the inputs; in the case of images of faces, for example, this would occur when the value of a feature in one dimension varies smoothly with, and thus can be seen to represent, an input feature such as hair color, the presence or type of spectacles, the presence or type of a moustache, and so on [23,24,25,26]. This is known as a disentangled representation (e.g., [27,28,29,30,31,32,33,34,35,36,37,38]). To this end, it is worth commenting that the ability to generate more or less realistic facial image structures using orthogonal features extracted from a database or collection of relevant objects that can be parametrized has been known for some decades [39,40,41,42].

Given an initialization, the objective function of a deep network necessarily determines the structure of its latent space. Typical variational autoencoders are trained by maximizing the evidence lower bound (ELBO), whose regularization term is a Kullback–Leibler (KL) divergence [43,44,45,46], although many other variants with different objective functions have been suggested (e.g., [45,47,48,49,50,51,52,53,54]). However, a third development is the recognition that training with such unlabeled data can also be used to optimize the (self-) organization of the latent space itself. A particular objective of one kind of self-organization is one in which individual inputs are used to create a structure in which similar input examples are also closer to each other in the latent space; this is commonly referred to as self-supervised [12,55,56,57], or contrastive [58,59,60,61,62,63,64,65,66], learning. In image processing this is often performed by augmenting training data with rotated or otherwise distorted versions of a given image, which then retain the same class membership or “similarity” despite appearing very different [61,67,68,69]. Our interests here are in molecular similarity.

Molecular Similarity

Molecular (as with any other kind of) similarity [70,71,72] is a somewhat elusive but, importantly, unsupervised concept in which we seek a metric to describe, in some sense, how closely related two entities are to each other from their structure or appearance alone. The set of all small molecules of possible interest for some purpose, subject to constraints such as commercial availability [73], synthetic accessibility [74,75], or “drug-likeness” [76,77], is commonly referred to as “chemical space”, and it is very large [78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97]. In cheminformatics the concept of similarity is widely used to prioritize the choice of molecules “similar” to an initial molecule (usually a “hit” with a given property or activity in an assay of interest) from this chemical space or by comparison with those in a database, on the grounds that “similar” molecular structures tend to have “similar” bioactivities [98].

The problem with this is that the usual range of typical metrics of similarity, whether using molecular fingerprints or vectors of the values of property descriptors, tend to give quite different values for the similarity of a given pair of molecules (e.g., [99]). In addition, and importantly, such pairwise evaluations are done individually, and their construction takes no account of the overall structure and population of the chemical space.

Deep Learning for Molecular Similarity

In a recent paper [5], we constructed a subset of chemical space using six million molecules taken from the ZINC database [100] (www.zincdocking.org/, accessed on 28 February 2021), employing a variational autoencoder to construct the latent space used to represent 2D chemical structures. The latent space is a space between the encoder and the decoder with a certain dimensionality D such that the position of an individual molecule in the latent space, and hence in the chemical space, is simply represented by a D-dimensional vector. A brief survey [5] implied that molecules near each other in this chemical space did indeed tend to exhibit evident and useful structural similarities, though no attempt was made there either to exploit contrastive learning or to assess degrees of similarity systematically. Thus, it is correspondingly unlikely that we had optimized the latent space from the points of view of either optimal feature extraction or explainability.

The most obvious disentanglement for small molecules, which is equivalent to feature extraction in images, is surely the extraction of molecular fragments or substructures, that can then simply be “bolted together” in different ways to create any other larger molecule(s). Thus, it is reasonable that a successful disentangled representation would involve the principled extraction of useful substructures (or small molecules) taken from the molecules used in the training. In this case we have an additional advantage over those interested in image processing, because we have other effective means for assessing molecular similarity, and these do tend to work for molecules with a Tanimoto similarity (TS) greater than about 0.8 [99]; such molecules can then be said to be similar, providing positive examples for contrastive learning (although in this case we use a different encoding strategy). Pairwise comparisons returning TS values lower than say 0.3 may similarly be considered to represent negative examples.
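The Tanimoto thresholds just described can be illustrated with a minimal sketch. It assumes that each fingerprint is supplied as a collection of on-bit indices; the function names `tanimoto` and `label_pair` and the toy fingerprints are hypothetical and serve only to show how cut-offs of about 0.8 and 0.3 would label a pair. It is not the pairing procedure used in this work, which (as noted above) relies on a different encoding strategy.

```python
def tanimoto(bits_a, bits_b):
    """Tanimoto similarity between two fingerprints given as on-bit indices."""
    a, b = set(bits_a), set(bits_b)
    union = len(a | b)
    # Two empty fingerprints share no information; return 0.0 by convention.
    return len(a & b) / union if union else 0.0


def label_pair(ts, positive_cutoff=0.8, negative_cutoff=0.3):
    """Label a pair from its Tanimoto similarity using the cut-offs in the text."""
    if ts > positive_cutoff:
        return "positive"
    if ts < negative_cutoff:
        return "negative"
    return "undetermined"


# Toy fingerprints (hypothetical bit indices, not real molecules).
fp_query = {1, 2, 3, 4, 5}
fp_close = {1, 2, 3, 4, 5, 6}   # 5 shared bits out of 6 in the union
fp_far = {7, 8, 9}              # no shared bits

for name, fp in (("close", fp_close), ("far", fp_far)):
    ts = tanimoto(fp_query, fp)
    print(name, round(ts, 3), label_pair(ts))
```

Pairs falling between the two cut-offs are left unlabelled here, reflecting the fact that fingerprint-based similarity is least reliable in that intermediate range.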

Nowadays, transformer architectures (e.g., [3,11,12,101,102,103,104,105,106,107,108,109,110]) are seen as the state of the art for deep learning of the type of present interest. As per the definition of the contrastive learning framework mentioned in [61,66], we add an extra autoencoder in which the encoder behaves as a projection head. The outputs of the transformer encoder, which we regard as representations, are of a relatively high dimension, so computing the similarity between them directly can still take a relatively large computational effort. To this end, we add a simple encoder network that maps the representations to a lower dimensional latent space on which the contrastive loss is computationally easier to define. Then, to convert the latent vector back to representations suitable for feeding into the transformer decoder network, we add a simple decoder network.

In sum, therefore, it seemed sensible to bring together both contrastive learning and transformer architectures so as to seek a latent space optimized for substructure or molecular fragment extraction. Consequently we refer to this method as FragNet. The purpose of the present paper is to describe our implementation of this, recognizing that SMILES strings represent sequences of characters just as do the words used in natural language processing. During the preparation of this paper, a related approach also appeared [111] but used graphs rather than a SMILES encoding of the structures.

2. Results

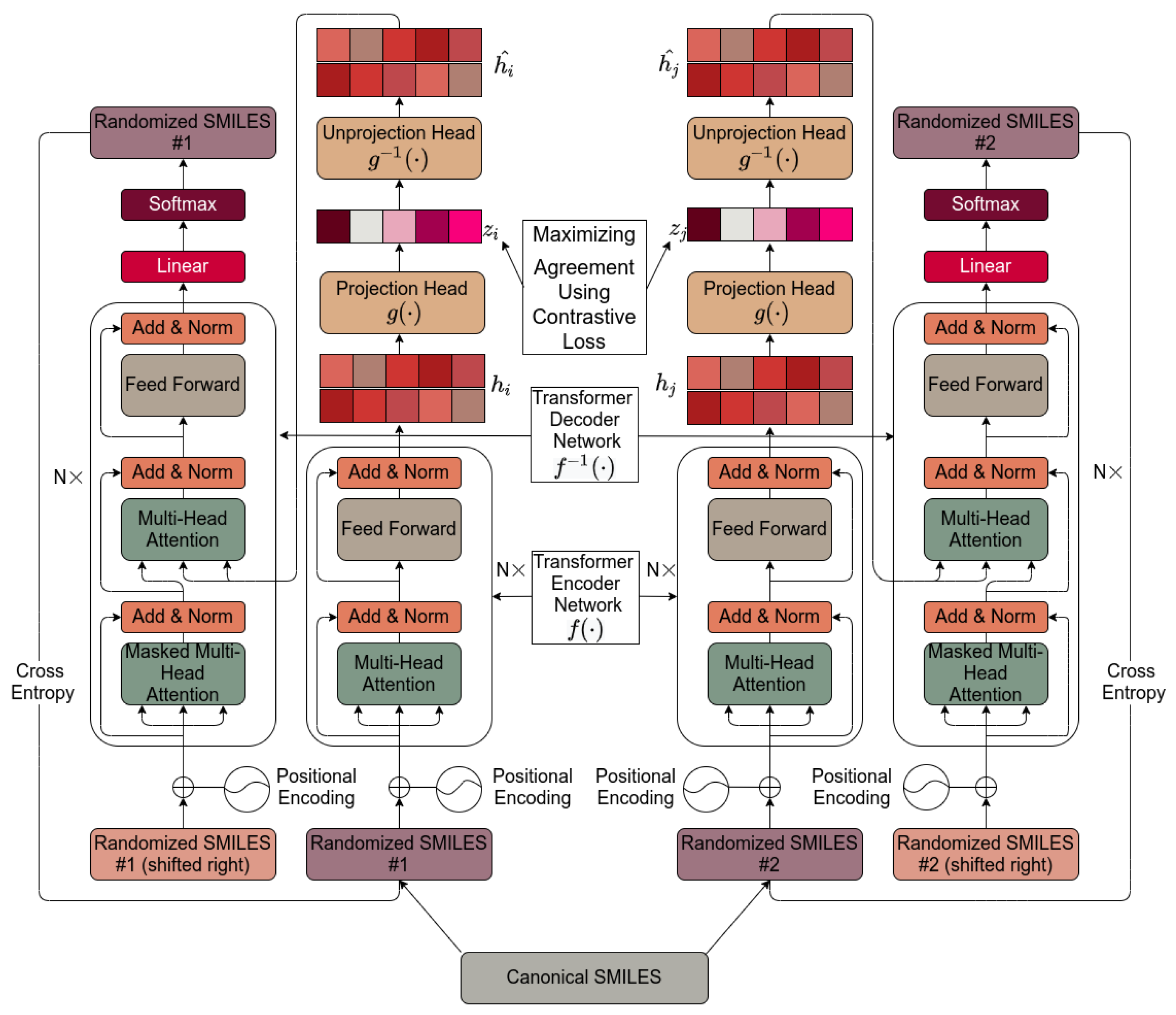

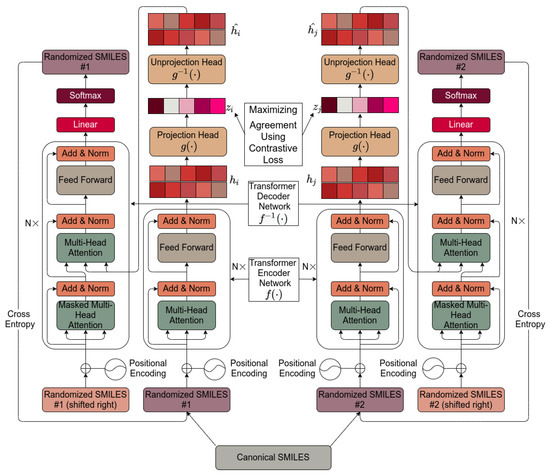

Figure 1 shows the basic architecture chosen, which is based on that set down in [112] and is described in detail in Section 4. Pseudocode for the algorithm used is given in Scheme 1.

Figure 1.

The transformer-based architecture used in the present work. The internals are described in Section 4.

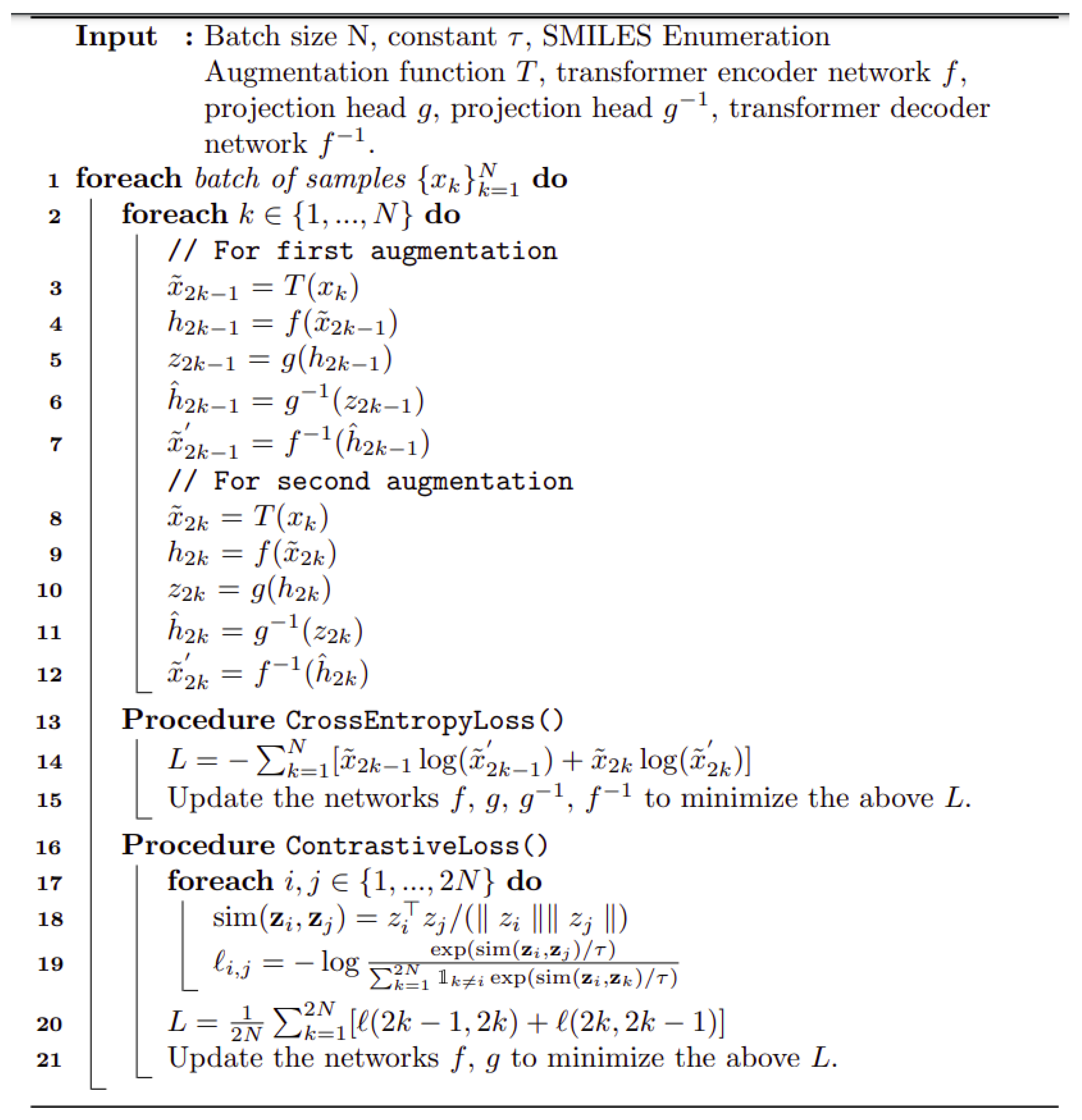

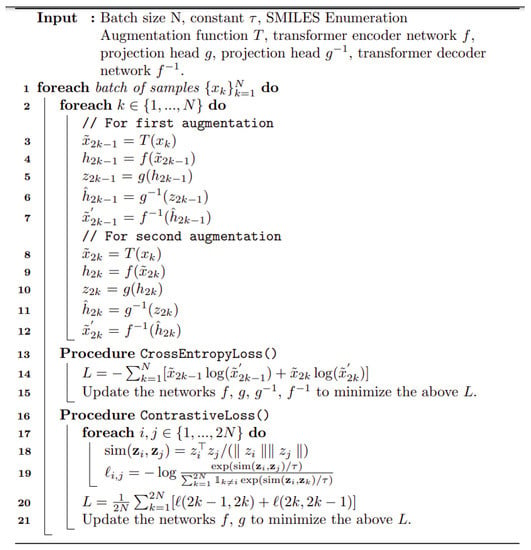

Scheme 1.

Pseudocode for the transformer algorithm as implemented here.

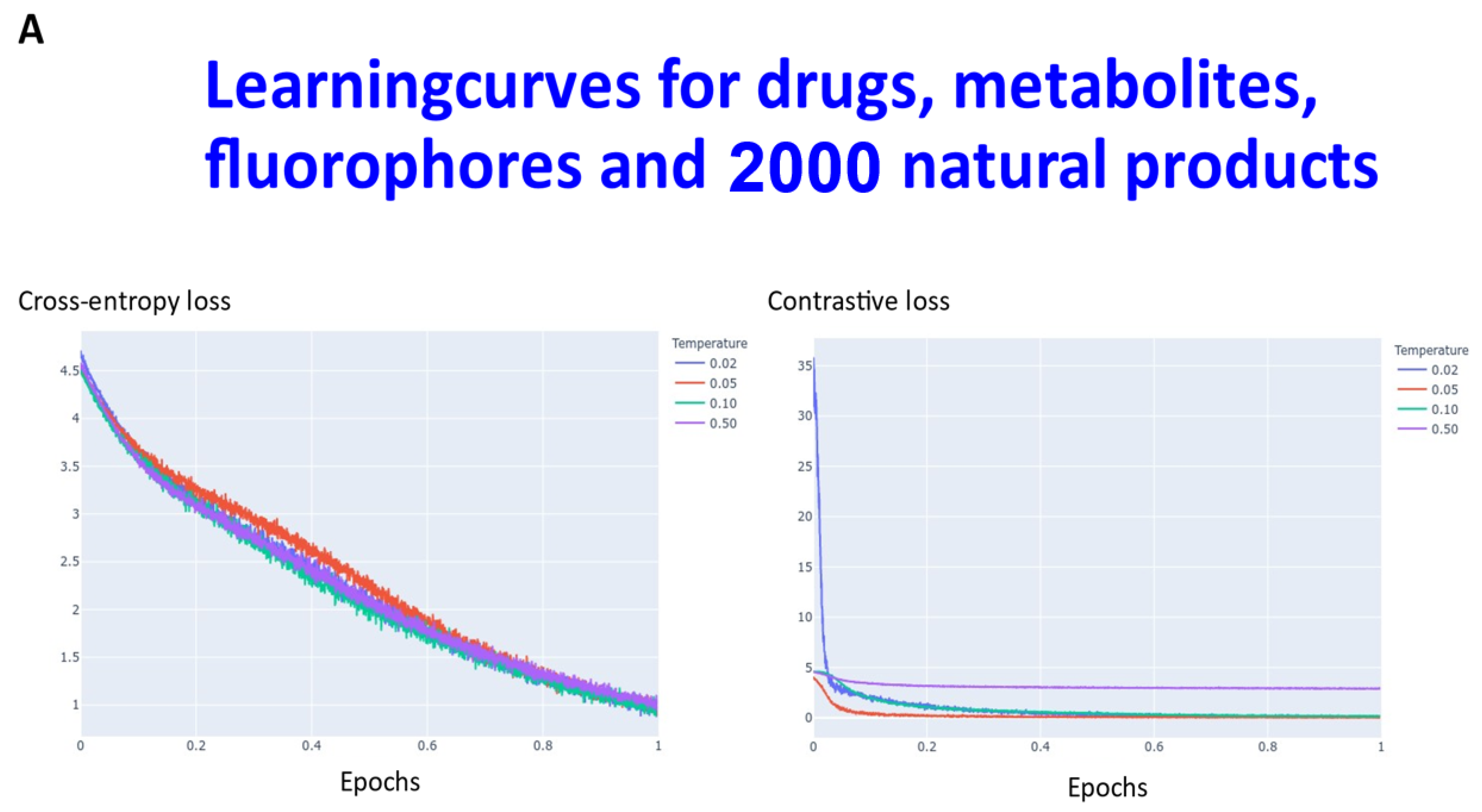

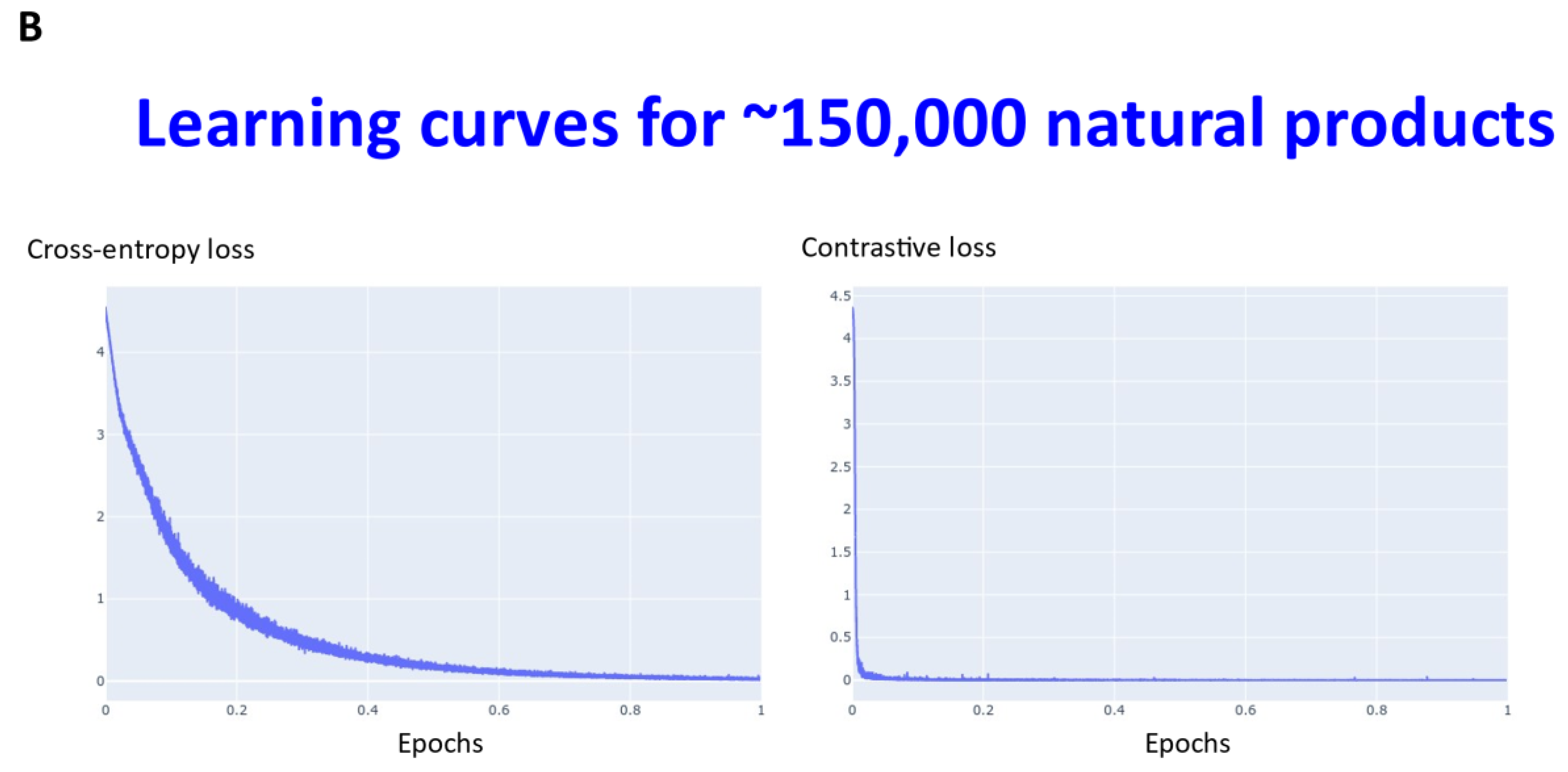

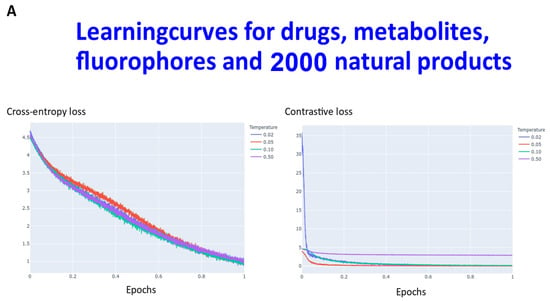

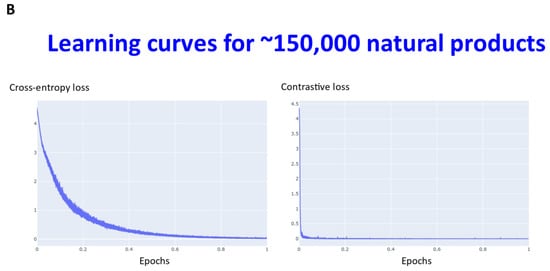

Transformers are computationally demanding (our largest network had some 4.68 M parameters), and so (as described in Section 4) instead of using 6M ZINC molecules (that the memory available in our computational resources could not accommodate), we studied datasets consisting overall of ~160,000 natural products, fluorophores, endogenous metabolites, and marketed drugs (the dataset is provided in [113]). We compared contrastive learning with the conventional objective function in which we used the evidence lower bound of the KL divergence. The first dataset (Materials and Methods) consisted of ~5000 (actually 4643) drugs, metabolites, and fluorophores, and 2000 UNPD natural products molecules, while the second consisted of the full set of ~150 k natural products. “Few-shot” learning (e.g., [114,115,116]) means that only a very small number of data points are required to train a learning model, while “one-shot” learning (e.g., [117,118,119,120,121]) involves the learning of generalizable information about object categories from a single related training example. In appropriate circumstances, transformers can act as few-shot [3,122,123], or (as here) even one-shot learners [124,125]. We thus first compared the learning curves of transformers trained using cross entropy versus those trained using contrastive loss (Figure 2). In each case, the transformer-based learning essentially amounts to one-shot learning, especially for the contrastive case, and so the learning curve is given in terms of the effective fraction of the training set. We note that recent studies happily imply that large networks of the present type are indeed surprisingly resistant to overtraining [126]. In Figure 2A the optimal temperature used seemed to be 0.05 and this was used for the larger dataset (Figure 2B). The clock time for training an epoch on a single NVIDIA-V100-GPU system was ca. 30 s and 23 min for the two datasets illustrated in Figure 2A and Figure 2B, respectively.

Figure 2.

Learning curve for training our transformers on (A) drugs, metabolites, fluorophores, and 2000 natural products, and (B) a full set of natural products. Because the transformer is effectively a one-shot learner, and the batch size varied, the abscissa is shown as a single epoch. The batch size was varied, as described in Section 4, and was (A) 50 (latent space of 64 dimensions) and (B) 20 (latent space of 256 dimensions), leading to an actual number of batches of (A) 92 and (B) 7500.

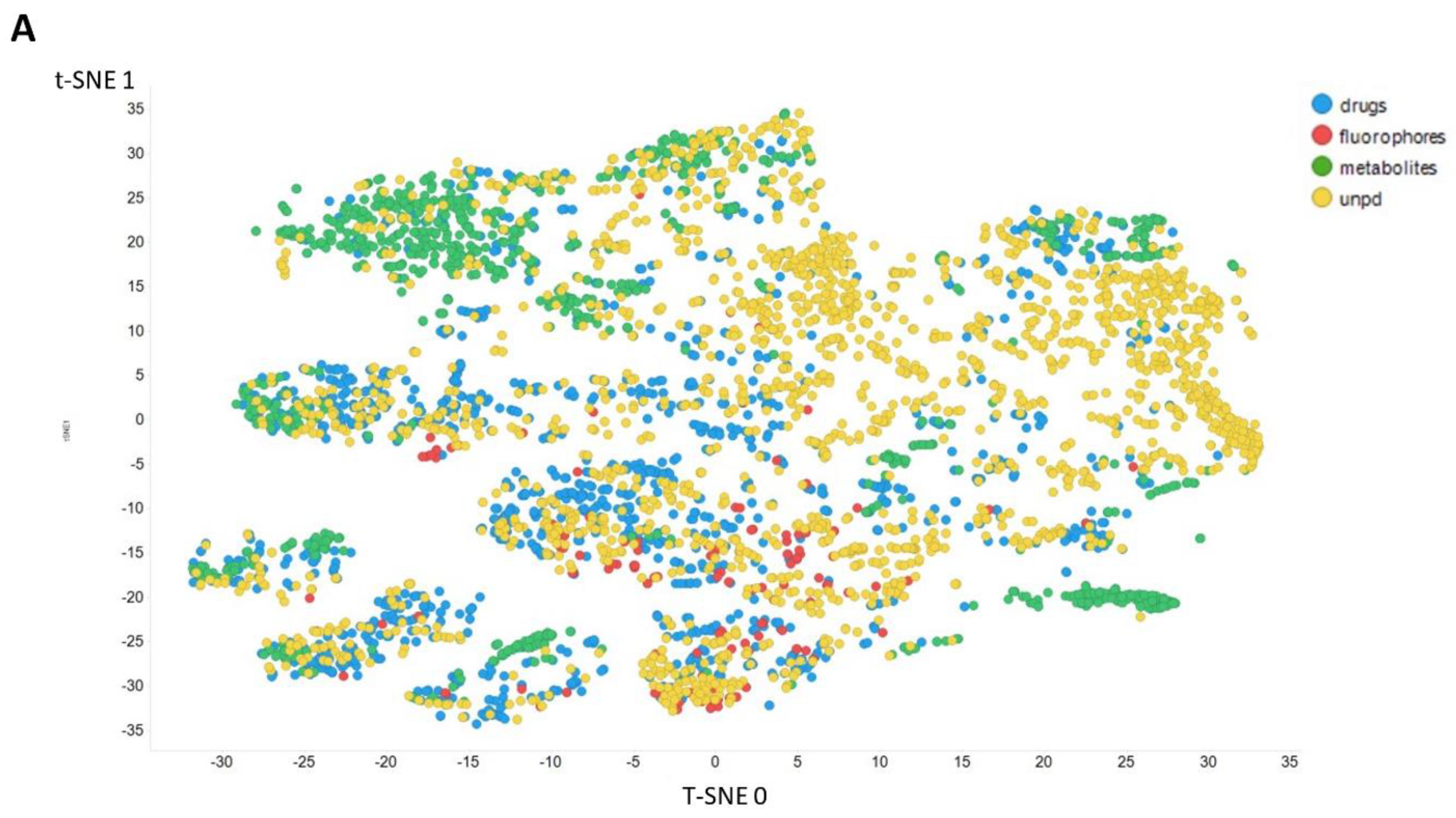

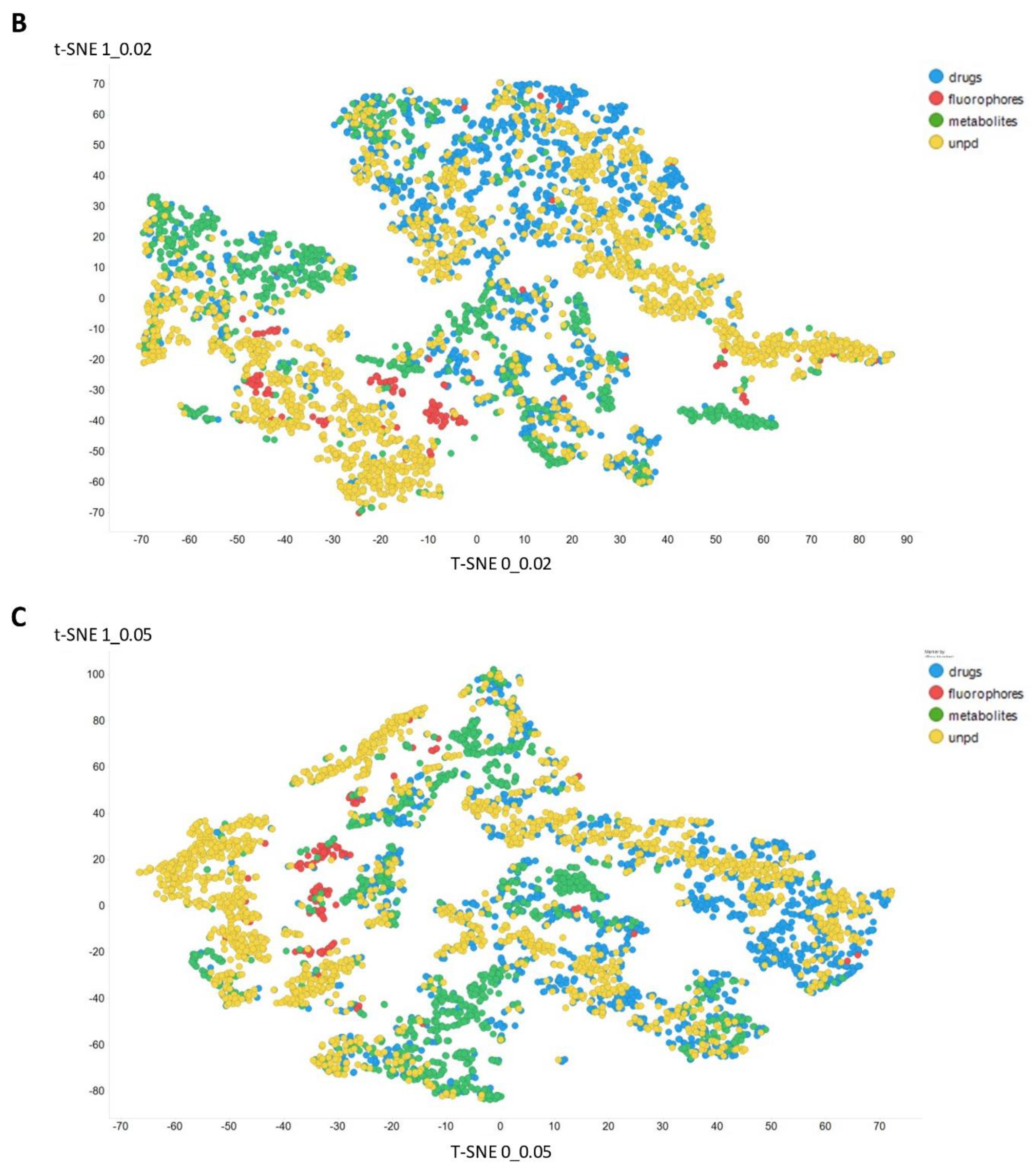

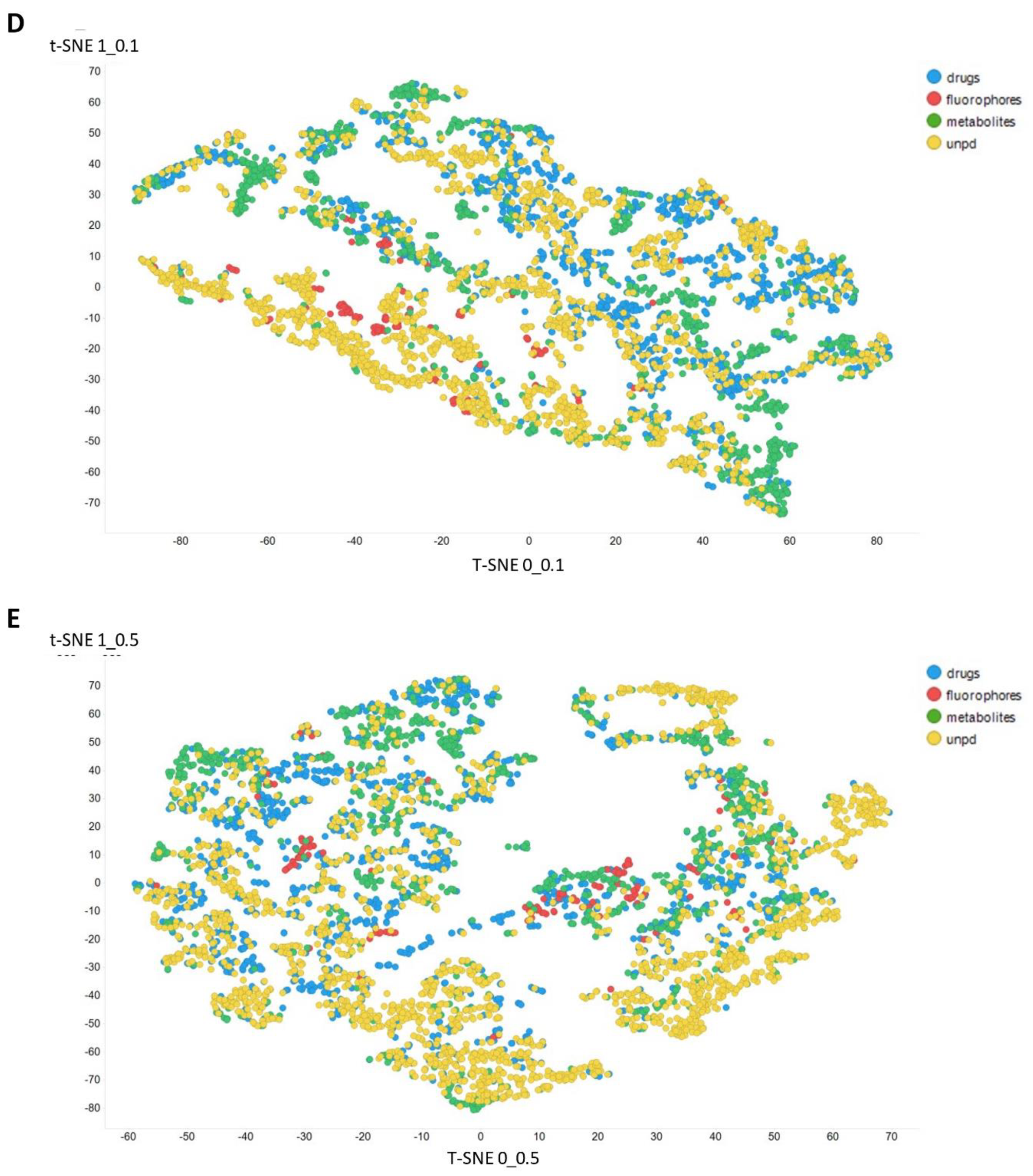

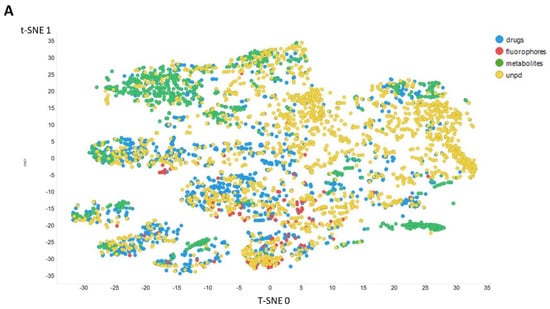

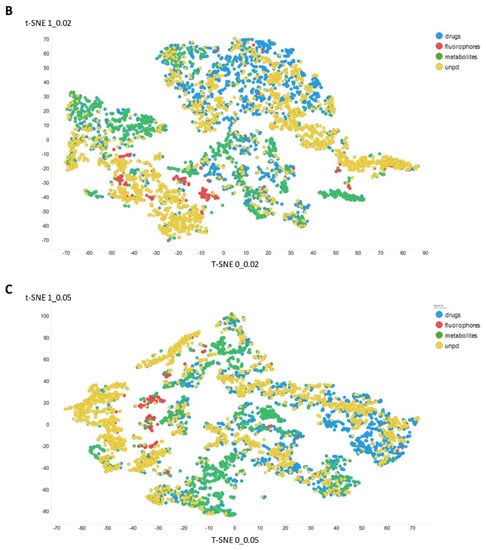

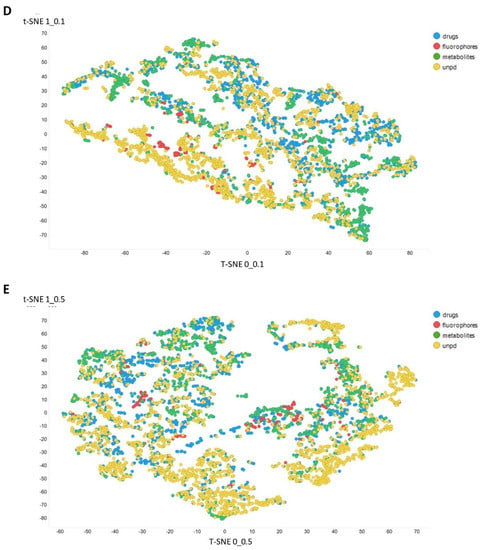

Figure 3 gives an overall picture using t-SNE [127,128] of the dataset used. Figure 3A recapitulates that published previously, using standard VAE-type ELBO/K-L divergence learning alone, while panels Figure 3B–E show the considerable effect of varying the temperature scalar (as in [112]).

Figure 3.

Effect of adjusting the temperature parameter in the contrastive learning loss on the distribution of molecules in the latent space as visualized via the t-SNE algorithm. For clarity, only a random subset of 2000 natural products is shown. (A) Learning based purely on the cross-entropy objective function. (B–E) The temperature scalar (as in [112]) was varied between 0.02 and 0.5 as indicated. (Reducing t below led to numerical instabilities.) All drugs, fluorophores, and Recon2 metabolites are plotted, along with a randomly chosen 2000 natural products (as in [113]).

It can clearly be seen from Figure 3B–E that as the temperature was increased in the series 0.02, 0.05, 0.1, and 0.5, the tightness and therefore the separability of the clusters progressively decreased. For instance, by mainly looking at the fluorophores (red colors) in the plotted latent space for each of the four temperatures, the separability as well as tightness of the cluster was best for the 0.02 and 0.05 temperatures. Later, as the temperature increased to 0.1, the data points became more dispersed, and finally at a temperature of 0.5, the data points were the most dispersed. Therefore, we suggest that (while the effect is not excessive) the reduced temperature may lead to the data points being more tightly clustered. However, the apparent dependency is not linear.

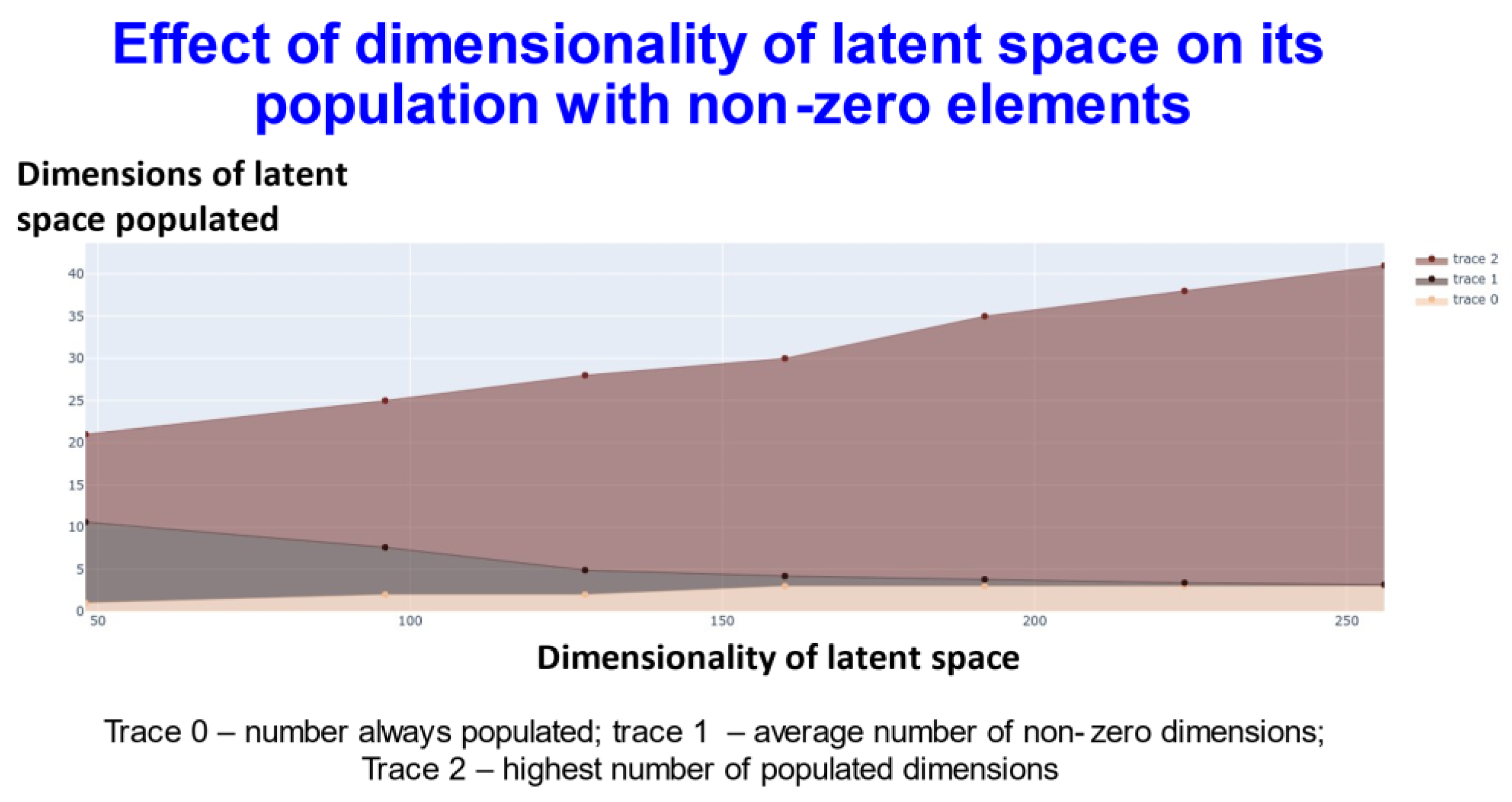

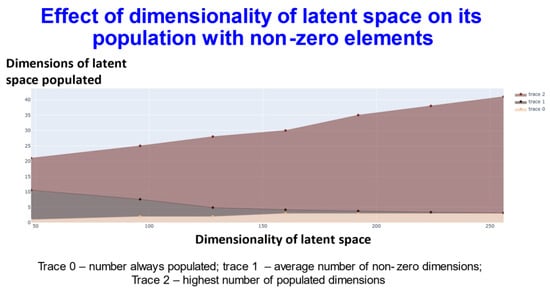

We also varied the number of dimensions used in the latent space, which served to provide some interesting insights into the effectiveness of the disentanglement and the capacity of the transformer (Figure 4).

Figure 4.

Relationship between the extent of population of different dimensions and the dimensionality of the latent space using transformers with contrastive learning.

In Figure 4, trace 0 gives the number of dimensions that were always non-zero; in other words, every molecule had non-zero values in at least that number of dimensions (the value on the y-axis). Thus, for the 256-dimensional latent space, three dimensions were always non-zero. Trace 1 gives the average number of dimensions that were non-zero across the dataset. Finally, trace 2 gives the highest number of dimensions recorded as populated for a latent space of that dimensionality. This shows (and see below) that while GPU memory requirements limited the number of molecules that we could train in a batch, the capacity of the network was very far from being exceeded, and in many cases some of the dimensions were not populated with non-zero values at all. At one level this might be seen as obvious: if we have 256 dimensions and each could take only two values, there are $2^{256}$ (~$10^{77}$) positions in this space. This large dimensionality at once explains the power and the storage capacity of large neural networks of this type.
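The population statistics and the capacity estimate discussed above can be sketched in a few lines. This is an illustration only: the function name `population_stats`, the dictionary keys, and the toy latent vectors are assumptions, and because the description of trace 0 admits more than one reading, the sketch reports both the per-molecule counts (minimum, mean, maximum) and the number of dimensions that are non-zero for every molecule.

```python
def population_stats(latents, eps=0.0):
    """Summarize how many latent dimensions are populated (non-zero).

    latents: list of equal-length latent vectors, one per molecule.
    eps: magnitudes at or below this threshold are treated as zero.
    """
    n_dims = len(latents[0])
    # Number of non-zero dimensions for each molecule.
    counts = [sum(1 for v in vec if abs(v) > eps) for vec in latents]
    # Dimensions that are non-zero for every molecule / for no molecule.
    always = sum(
        1 for d in range(n_dims) if all(abs(vec[d]) > eps for vec in latents)
    )
    never = sum(
        1 for d in range(n_dims) if all(abs(vec[d]) <= eps for vec in latents)
    )
    return {
        "min_per_molecule": min(counts),
        "mean_per_molecule": sum(counts) / len(counts),
        "max_per_molecule": max(counts),
        "always_populated": always,
        "never_populated": never,
    }


# Toy 4-dimensional latent vectors for three hypothetical molecules.
toy_latents = [
    [0.5, 0.0, 0.0, 0.1],
    [0.2, 0.3, 0.0, 0.0],
    [0.9, 0.0, 0.0, 0.0],
]
print(population_stats(toy_latents))

# Capacity estimate from the text: 256 binary dimensions give 2**256 positions.
binary_positions = 2 ** 256
print(len(str(binary_positions)), "decimal digits")
```

Python integers have arbitrary precision, so the count of positions can be evaluated exactly rather than approximated in floating point.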

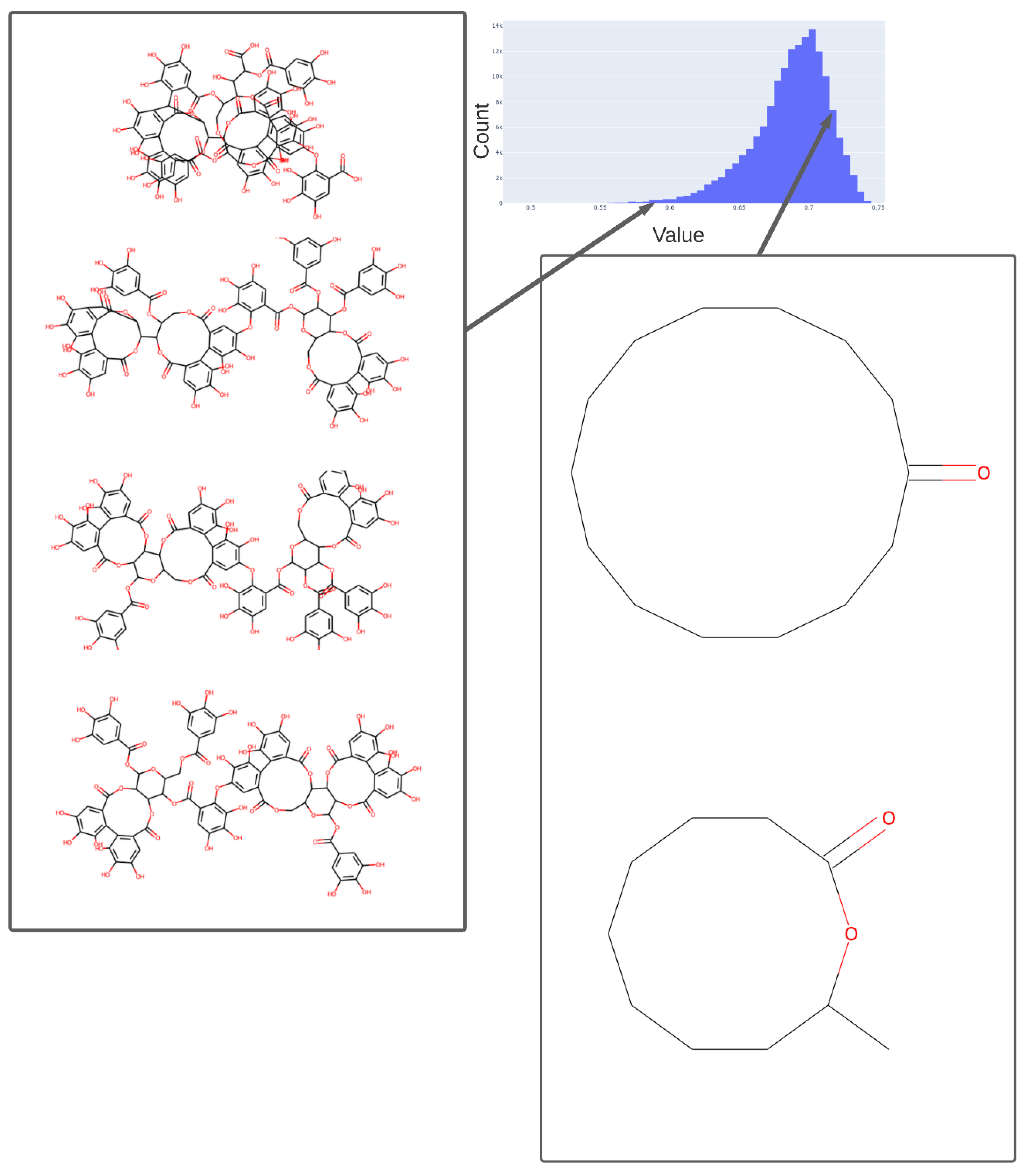

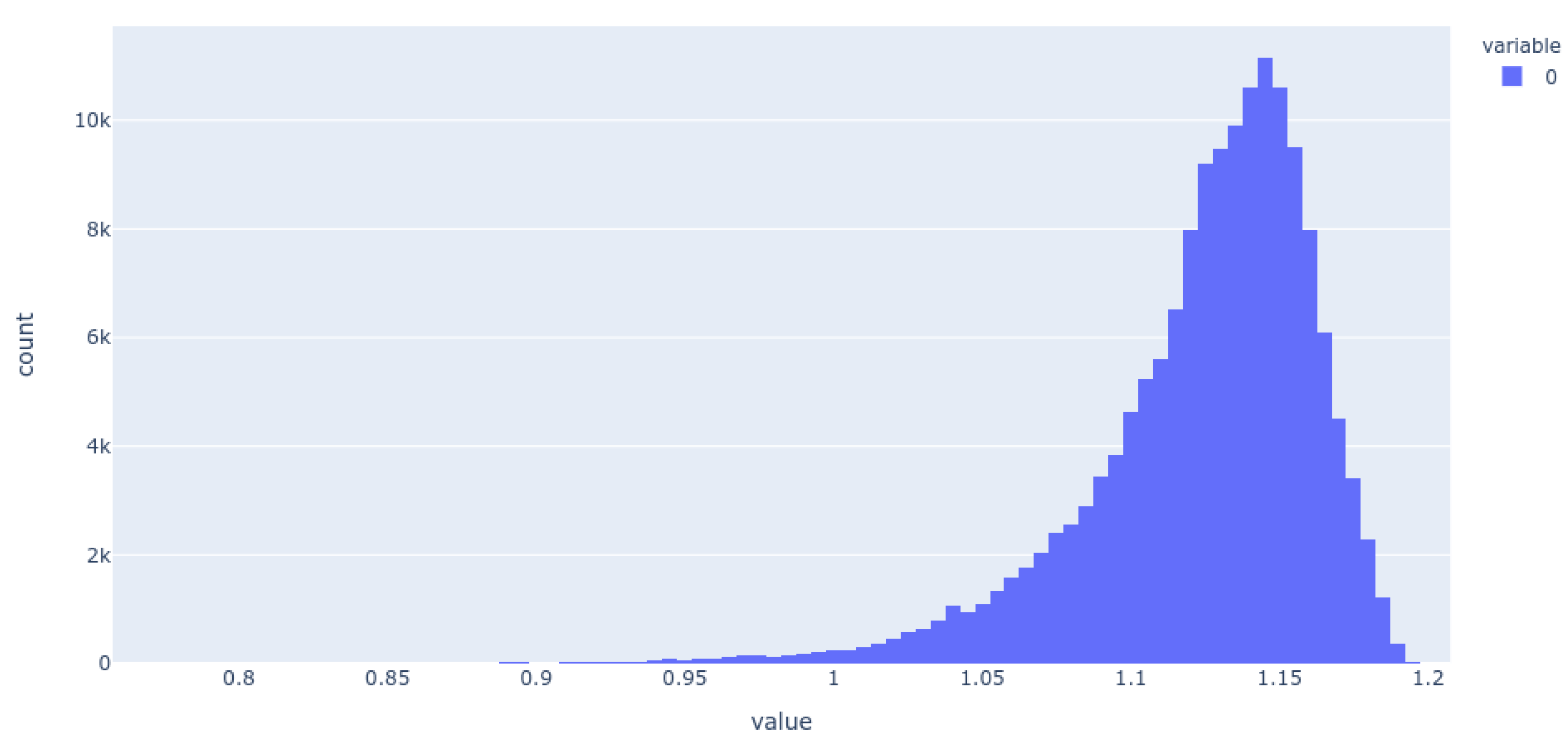

We illustrate this further by showing the population of just three of the dimensions (for the 256-dimension case), viz dimensions 254 (Figure 5), 182 (Figure 6), and 25 (Figure 7) (these three were always populated with non-zero values).

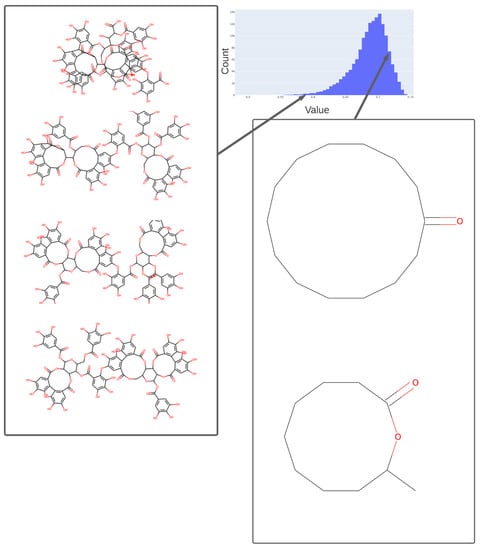

Figure 5.

Values adopted in dimension 254 of the trained 256-D transformer, showing the values of various tri-hydroxy-benzene-containing compounds (left) ca. 0.59 and two lactones (ca. 0.73). The arrows indicate the bins (0.58, 0.73) in the histogram of values in this dimension from which the representative molecules shown were taken.

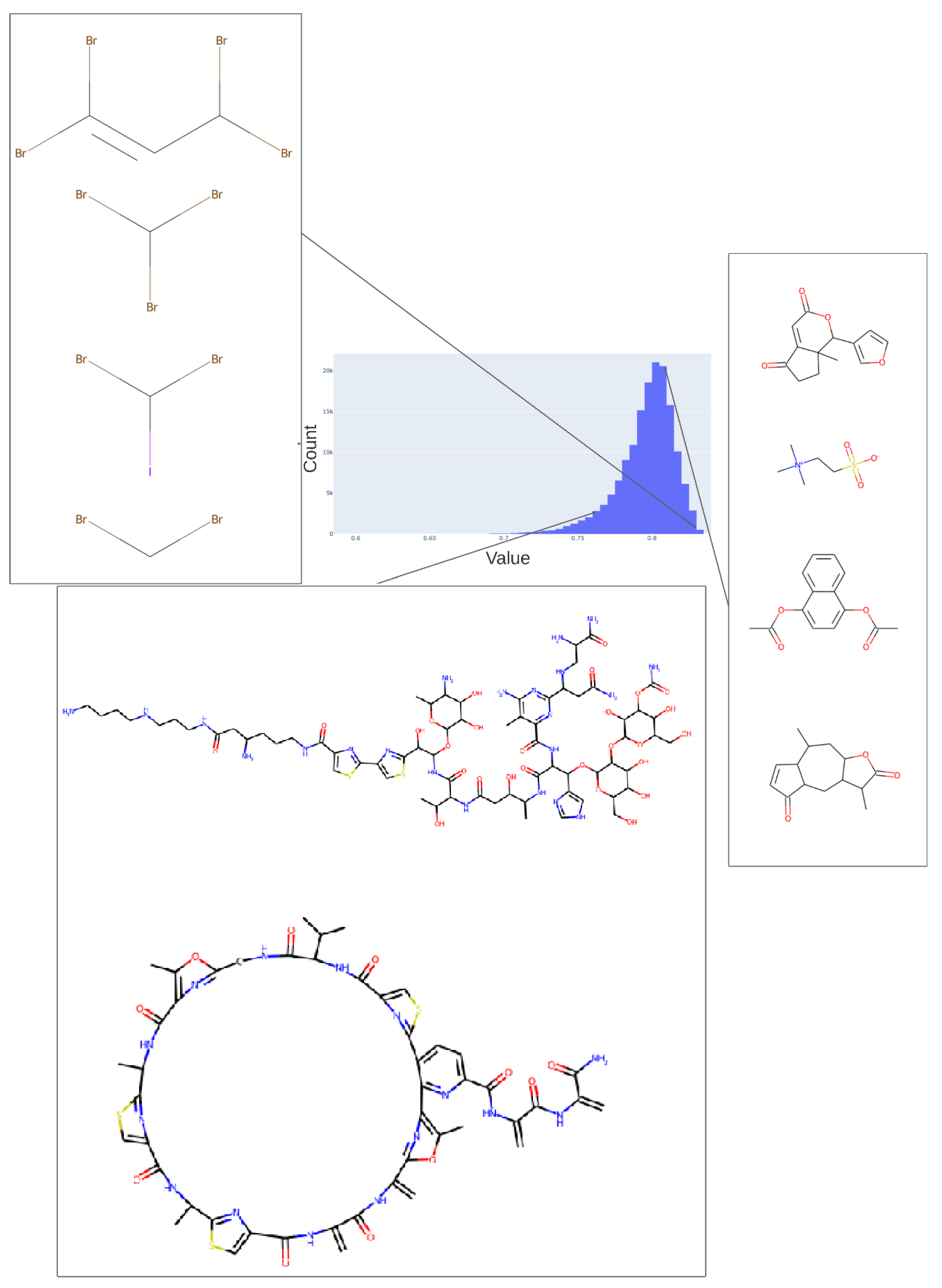

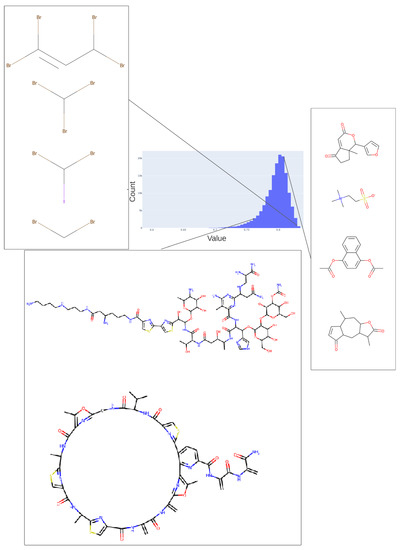

Figure 6.

Values adopted in dimension 182 of the trained 256-D transformer, showing the values of various halide-containing (~0.835) and other molecules. As in Figure 5, we indicate the bins in the histogram of values (0.76, 0.81, 0.83) in this dimension from which the representative molecules shown were taken.

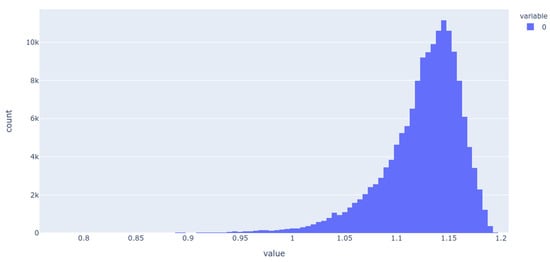

Figure 7.

Histogram of the population of dimension 25 for the 256-D dataset. It is evident that most molecules adopt only a small range of non-zero values in this dimension.

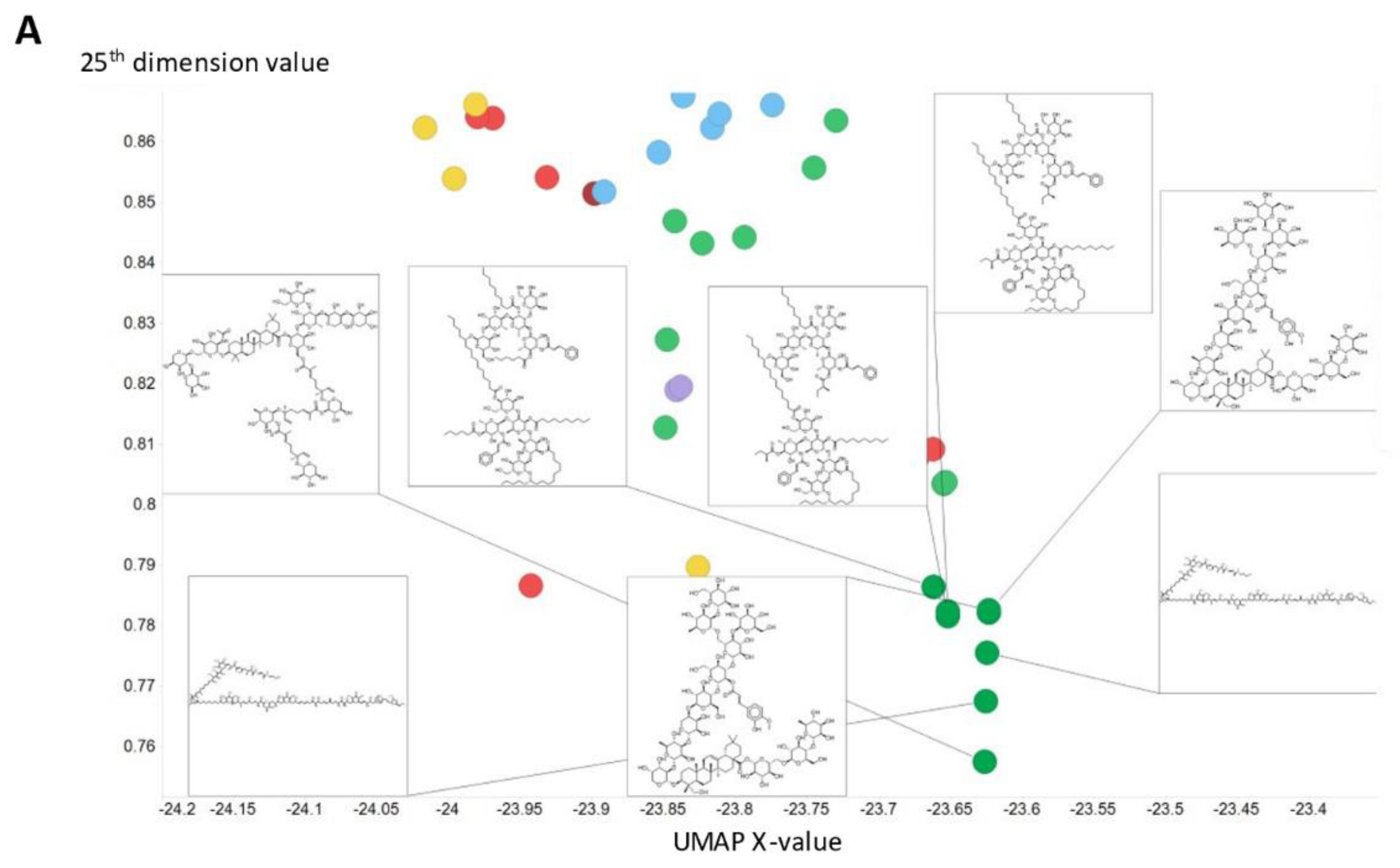

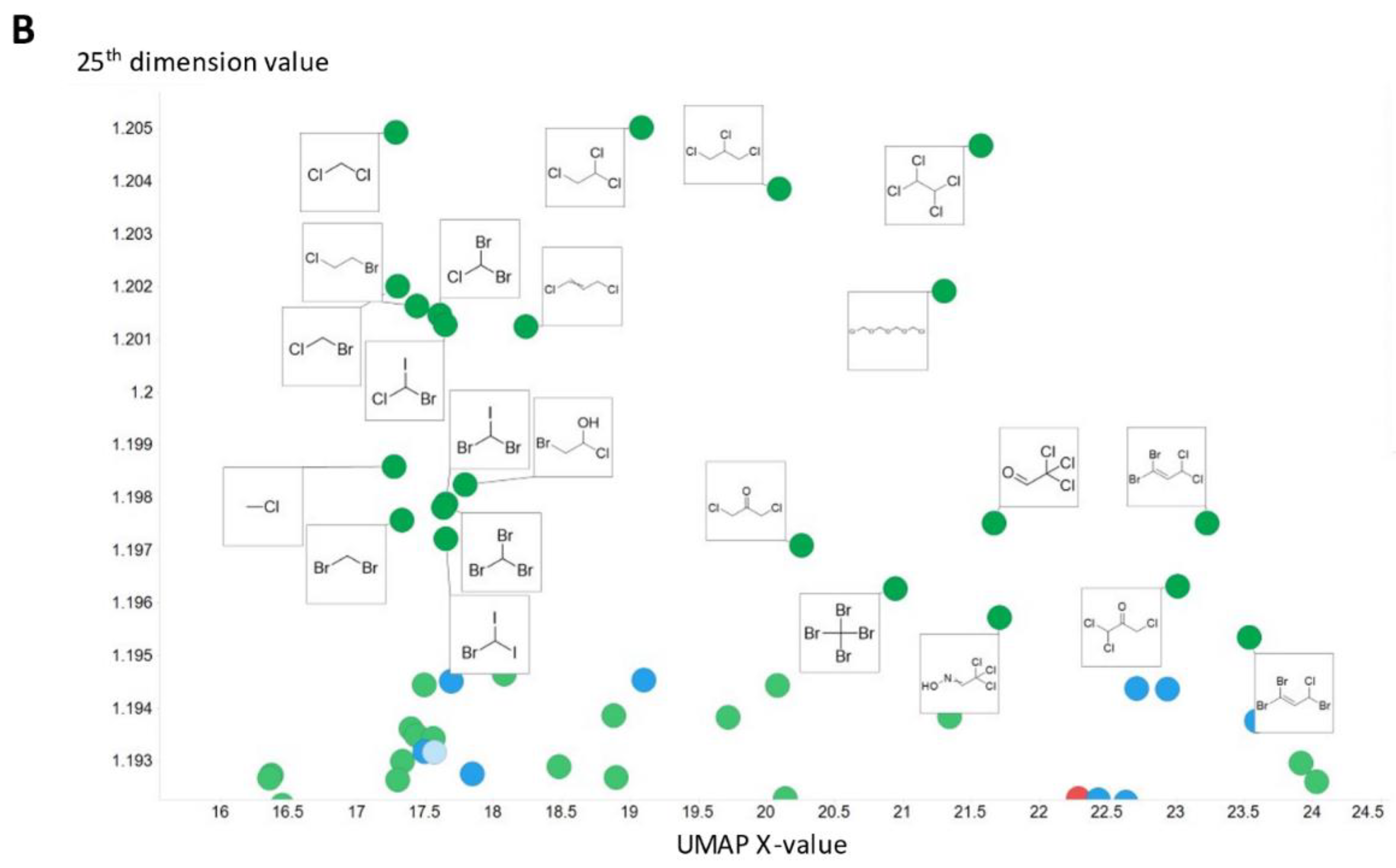

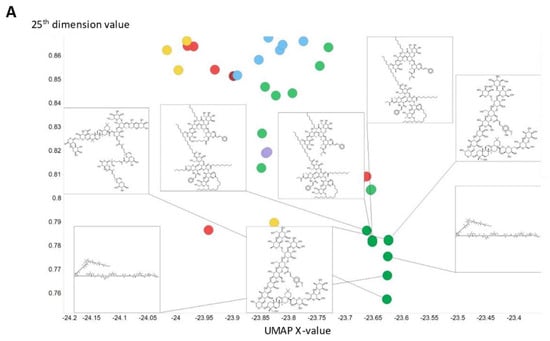

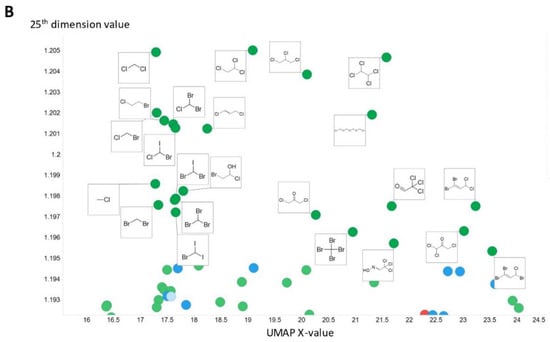

To illustrate in more detail the effectiveness of the disentanglement, we illustrated a small fraction of the values of the 25th dimension alone, as plotted against a UMAP [129,130] X-coordinate. Despite the tiny part of the space involved (shown on the y-axis), it is clear that this dimension alone has extracted features that involve tri-hydroxylated cyclohexane- (Figure 8A) or halide-containing moieties (Figure 8B).

Figure 8.

Effective disentanglement of molecular features into individual dimensions, using the indicated values of 25th dimension of the latent space of the 2nd dataset. In this case we used a latent space of 256 dimensions and a temperature t of 0.05. (A) Trihydroxycyclohexane derivatives, (B) halide-containing moieties.

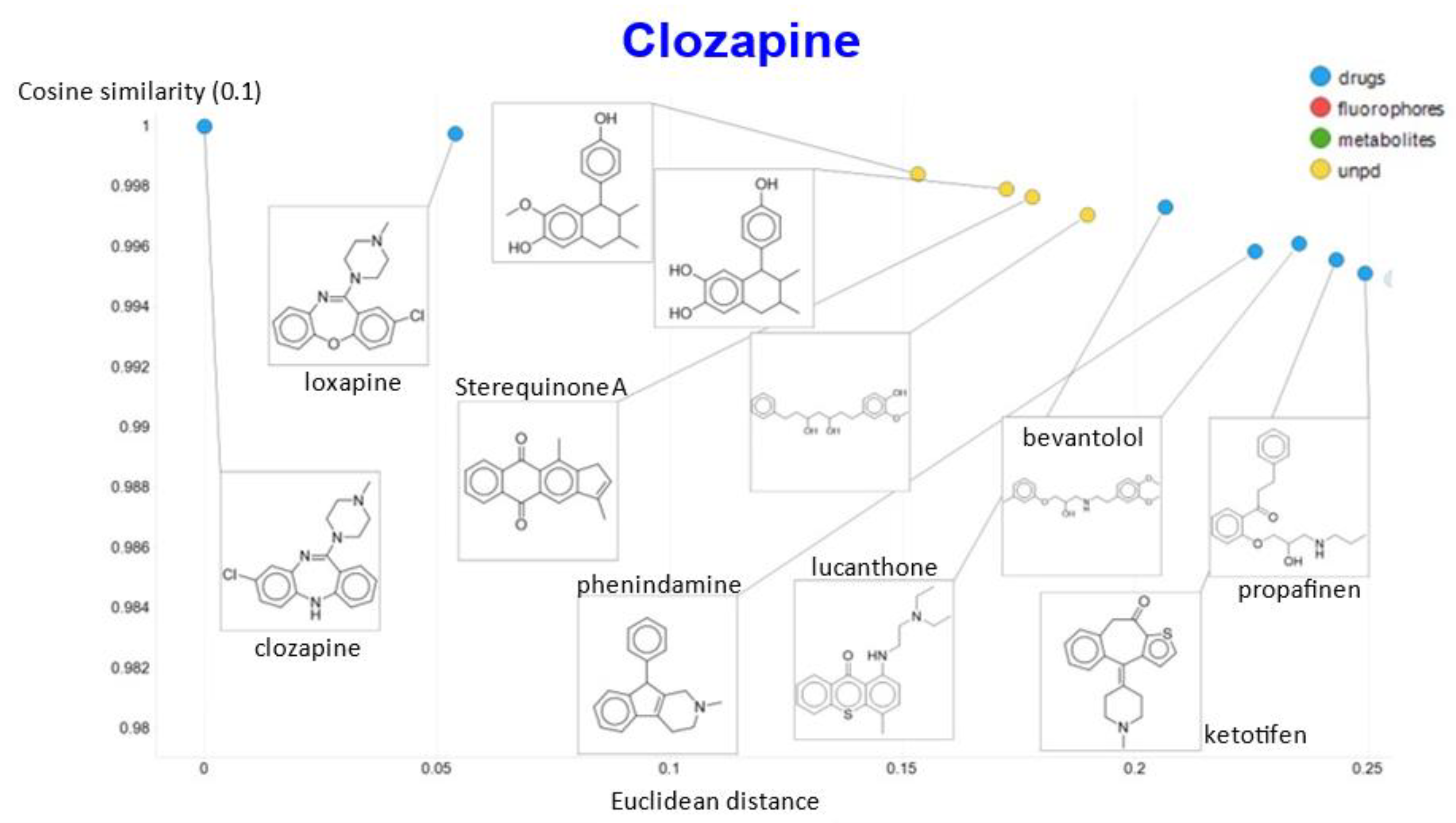

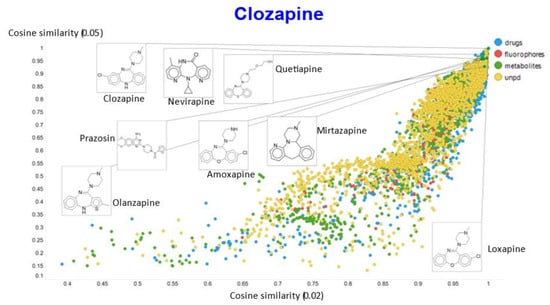

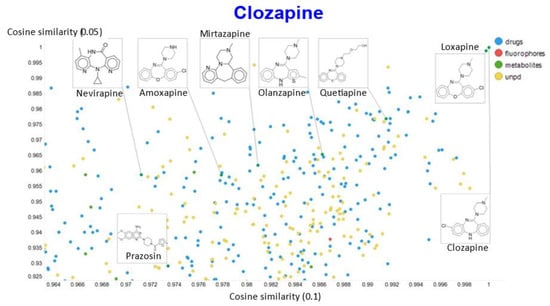

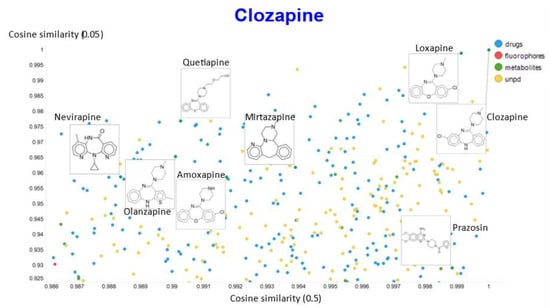

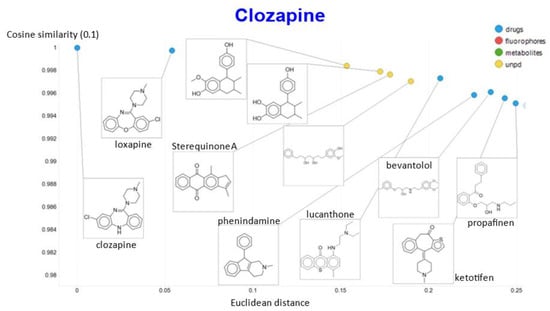

Another feature of this kind of chemical similarity analysis involves picking a molecule of interest and assessing what is “near” to it in the high-dimensional latent space, as judged by conventional measures of vector distance. We variously used the cosine similarity or the Euclidean distance. As before [5], we chose clozapine as our first “target” molecule and used it to illustrate different features of our method.
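A minimal sketch of such a nearest-neighbor query is given below, using only the Python standard library. The helper names (`cosine_similarity`, `euclidean_distance`, `rank_neighbors`) and the two-dimensional toy vectors are hypothetical; real latent vectors would have 64 or 256 dimensions. The example is chosen so that the two measures rank the same library differently, since cosine similarity ignores vector length whereas Euclidean distance does not.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def euclidean_distance(u, v):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def rank_neighbors(query, library, metric="cosine"):
    """Return library names ordered from most to least similar to the query."""
    if metric == "cosine":
        # Higher cosine similarity means closer, so sort in descending order.
        return sorted(
            library, key=lambda n: cosine_similarity(query, library[n]), reverse=True
        )
    # Smaller Euclidean distance means closer, so sort in ascending order.
    return sorted(library, key=lambda n: euclidean_distance(query, library[n]))


# Toy latent vectors (hypothetical names, not real molecules).
query = [1.0, 0.0]
library = {
    "same_direction_longer": [2.0, 0.0],
    "nearby_slightly_rotated": [0.9, 0.1],
    "orthogonal": [0.0, 1.0],
}
print(rank_neighbors(query, library, "cosine"))
print(rank_neighbors(query, library, "euclidean"))
```

In this toy case the vector pointing in exactly the same direction ranks first by cosine similarity, whereas the slightly rotated but nearby vector ranks first by Euclidean distance.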

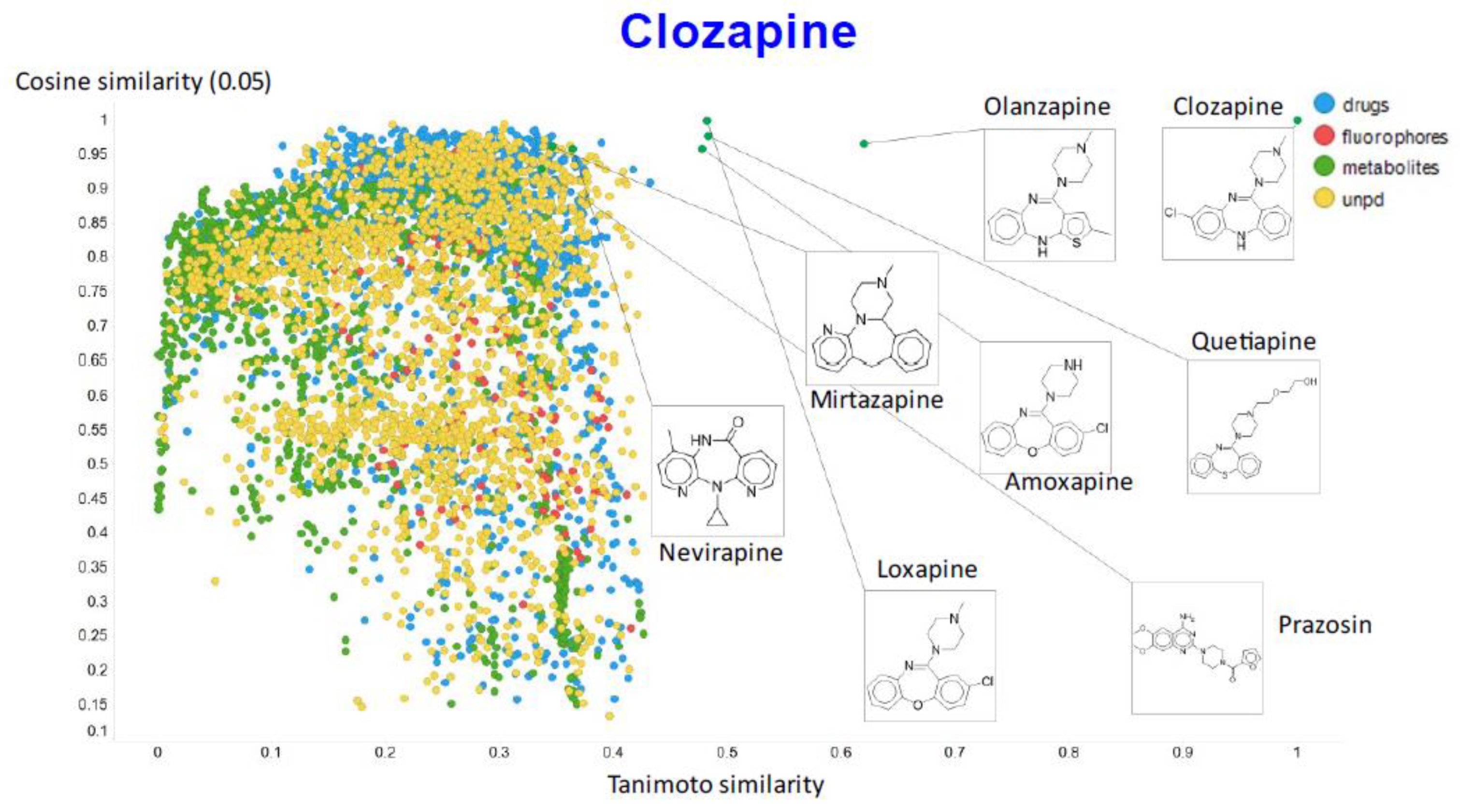

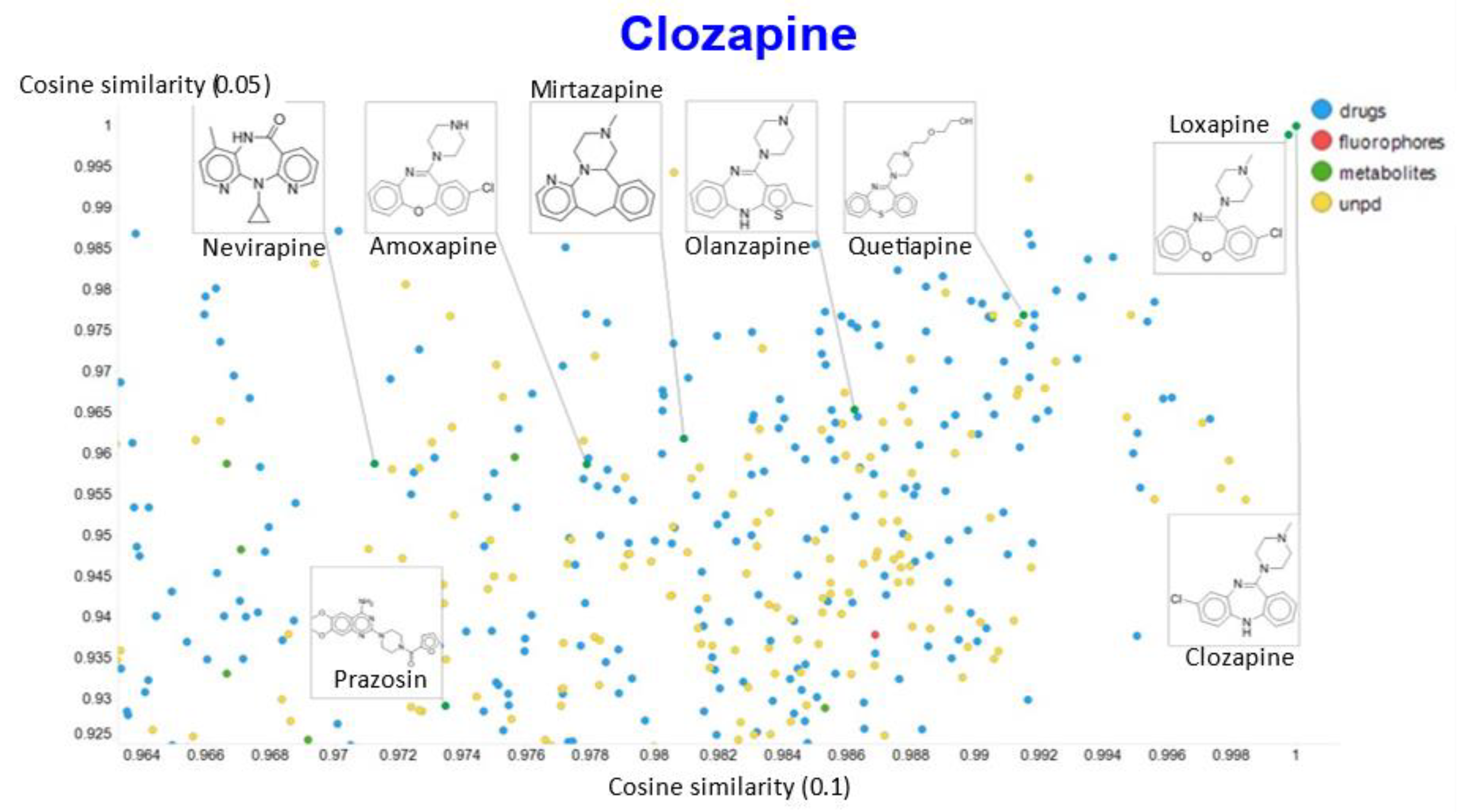

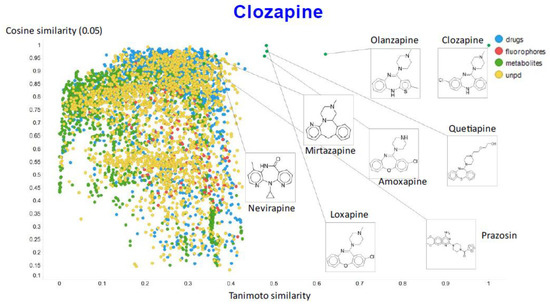

Figure 9 illustrates the relationship (using a temperature factor of 0.05) between the cosine similarity and the Tanimoto similarity for clozapine, using RDKit’s RDKFingerprint encoding (https://www.rdkit.org/docs/source/rdkit.Chem.rdmolops.html, accessed on 28 February 2021).

Figure 9.

Relationship between cosine similarity and Tanimoto similarity for clozapine in our chemical space, using a temperature of 0.05.

It is clear that (i) very few molecules showed up as being similar to clozapine in Tanimoto space, and (ii) prazosin (which competes with it for transport [131]) had a high cosine similarity despite having a very low Tanimoto similarity. In particular, none of the molecules with a high Tanimoto similarity had a low cosine similarity, indicating that our method does recognize molecular similarities effectively.

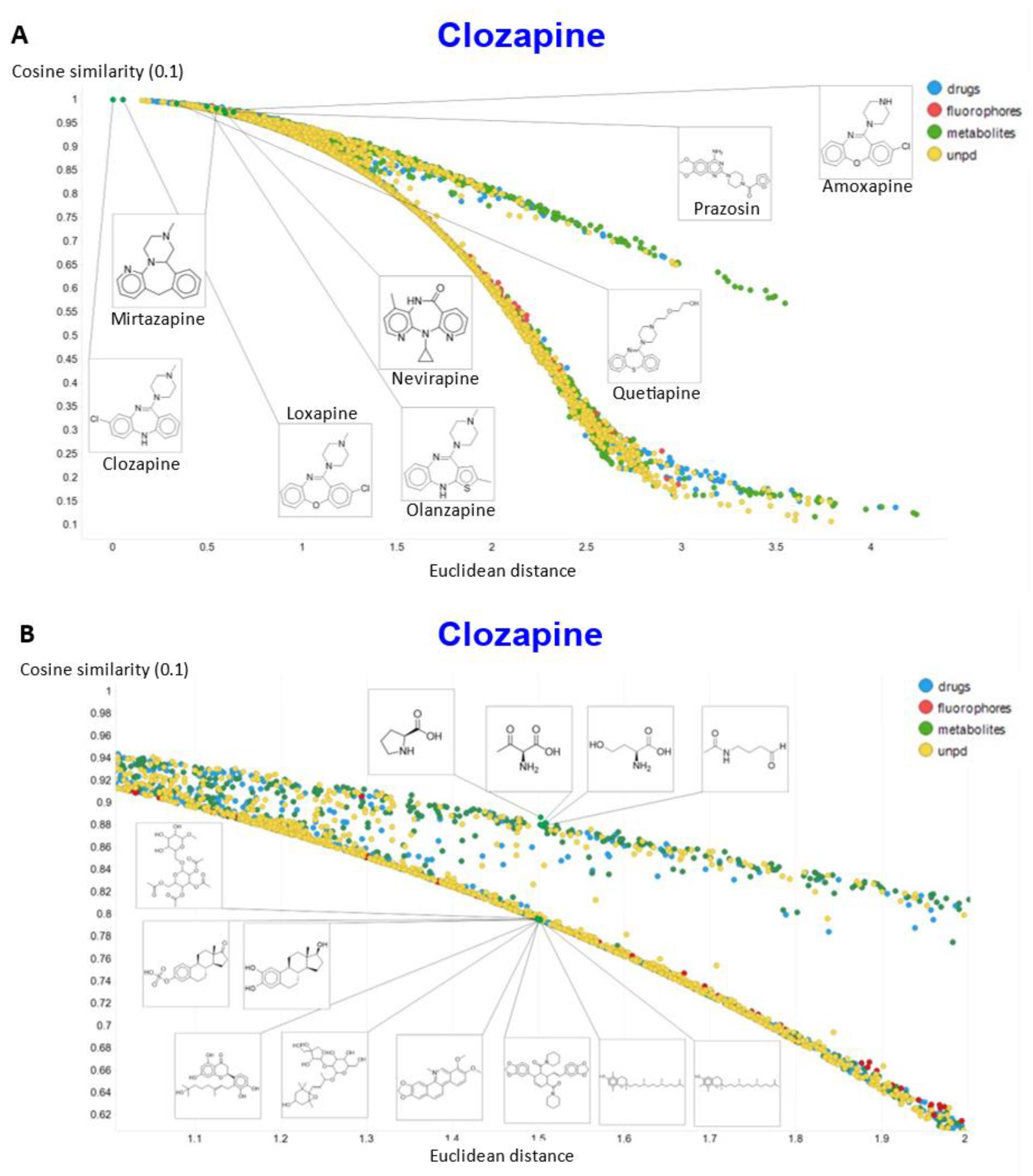

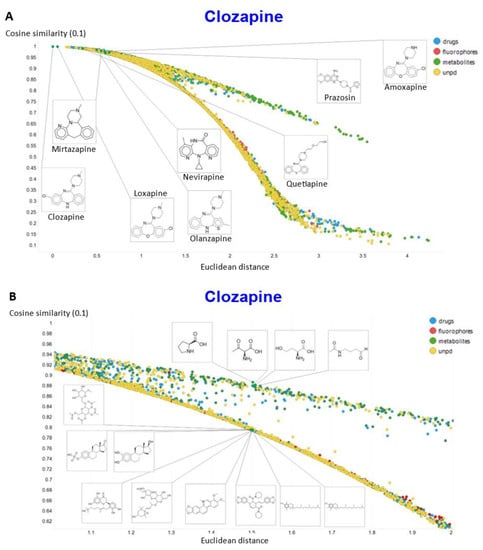

To show other features, Figure 10A shows the plots of the cosine similarity against the Euclidean distance; they were tolerably well correlated, with an interesting bifurcation, implying that the cosine similarity is probably to be preferred. This is because a zoomed-in version (Figure 10B) shows that the two sets of molecules with a similar Euclidean distance around 1.5 really are significantly different from each other between the two sets, where the cosine similarities also differ. By contrast, the molecules with a similar cosine similarity within a given arm of the bifurcation really are similar. The zooming in also makes it clear that the upper fork tends to have a significantly greater fraction of “Recon2” metabolites than does the lower fork, showing further how useful the disentangling that we have effected can be.

Figure 10.

Relationship between cosine similarity and Euclidean distance for clozapine in our chemical space using a temperature of 0.1. (A) Overview. (B) Illustration of molecules in the bifurcation.

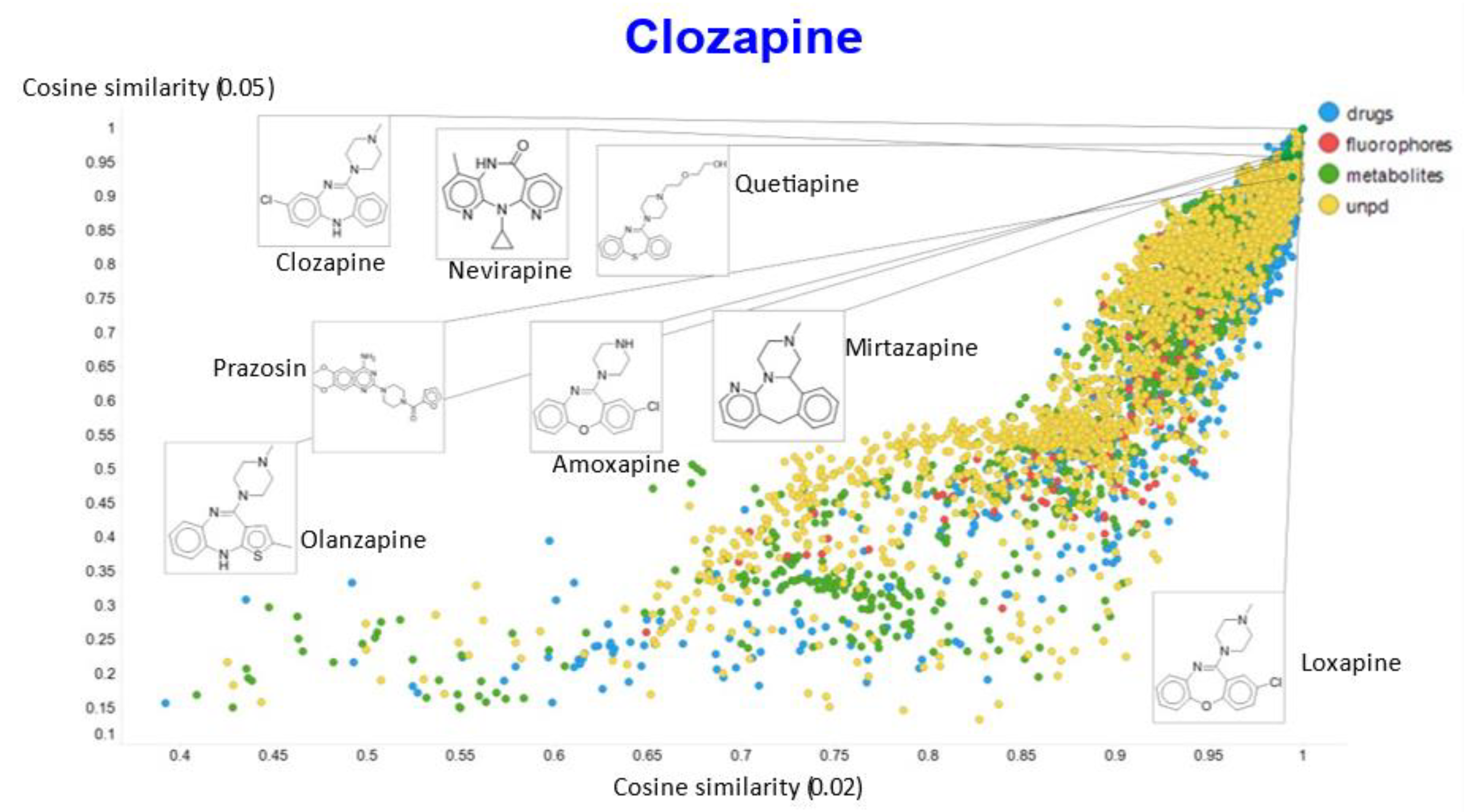

In a similar vein, varying the temperature scalar caused significant differences in the values of the cosine similarities for clozapine vs. the rest of the dataset (Figure 11).

Figure 11.

Relationship between cosine similarity for values of the temperature parameter of 0.05 and 0.02 for clozapine in our chemical space.

A similar plot is shown, at a higher resolution, for the cosine similarities with temperature scalars of 0.05 and 0.1 (Figure 12) and 0.05 vs. 0.5 (Figure 13). The closeness of clozapine to the other “apines”, as judged by cosine similarity, did vary somewhat with the value of the temperature. However, the latter value brings prazosin to be very close to clozapine, indicating the substantial effects that the choice of the temperature scalar can exert.

Figure 12.

Relationship between cosine similarity for values of the temperature parameter of 0.05 and 0.1 for clozapine in our chemical space.

Figure 13.

Relationship between cosine similarity for values of the temperature parameter of 0.05 and 0.5 for clozapine in our chemical space.

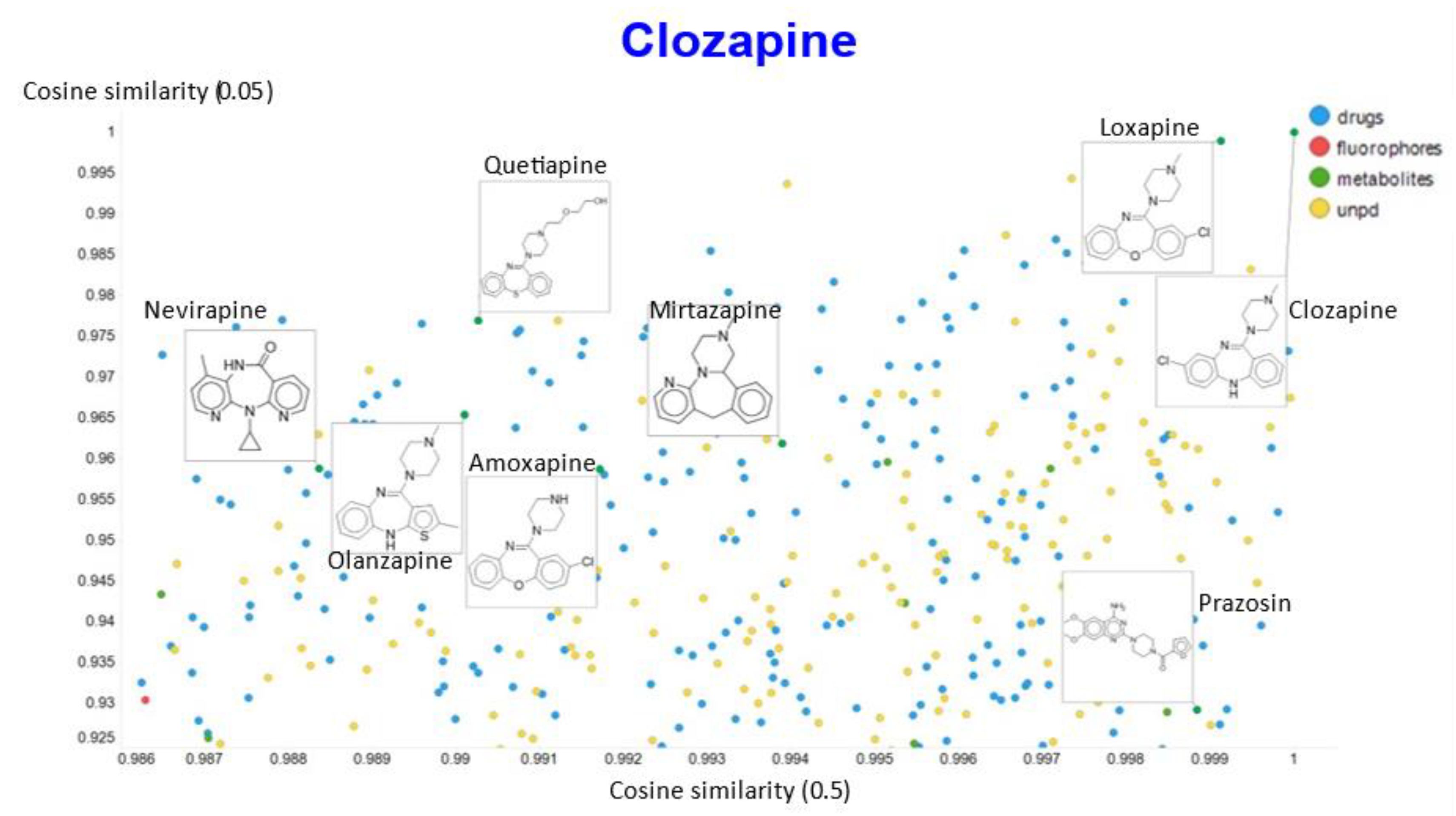

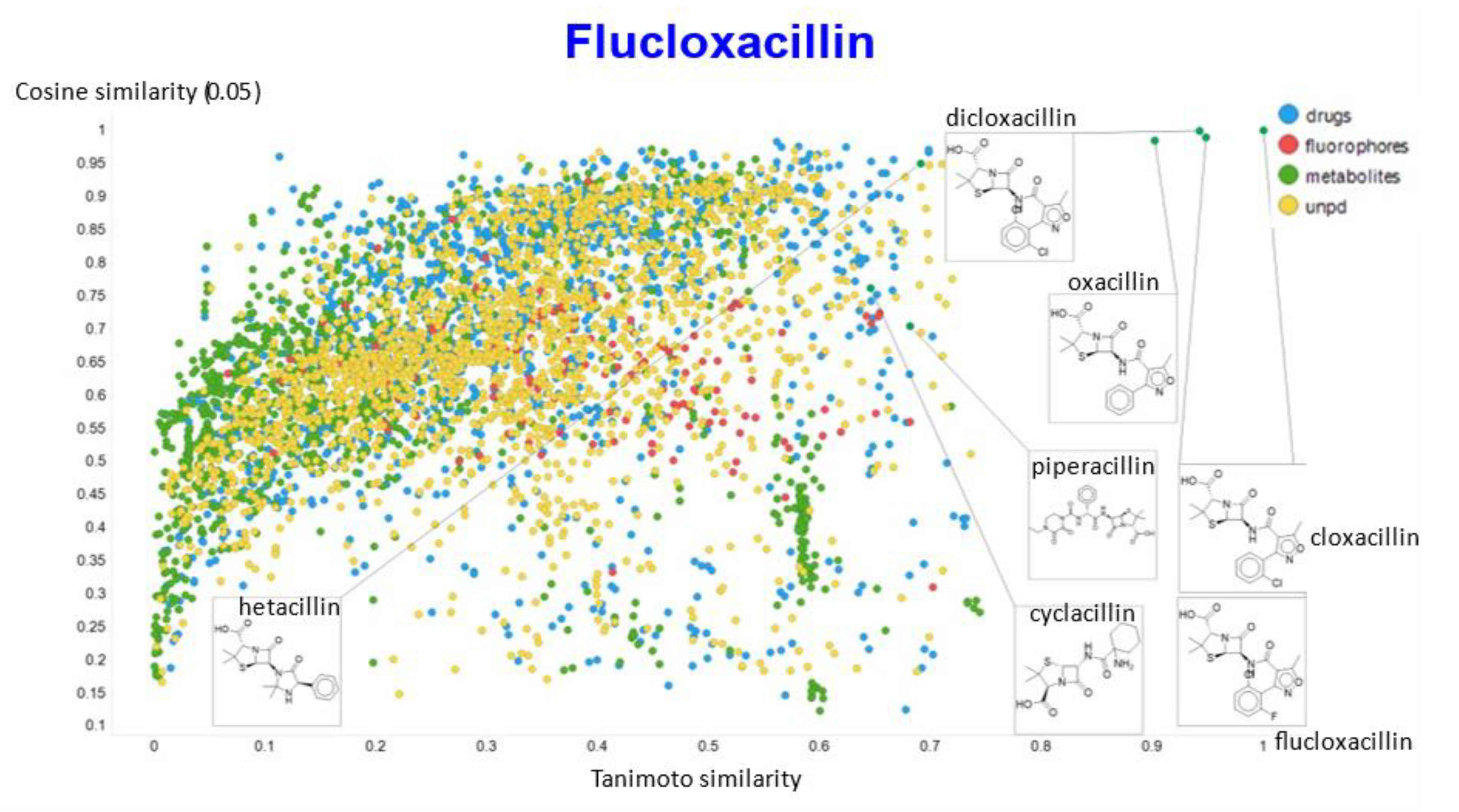

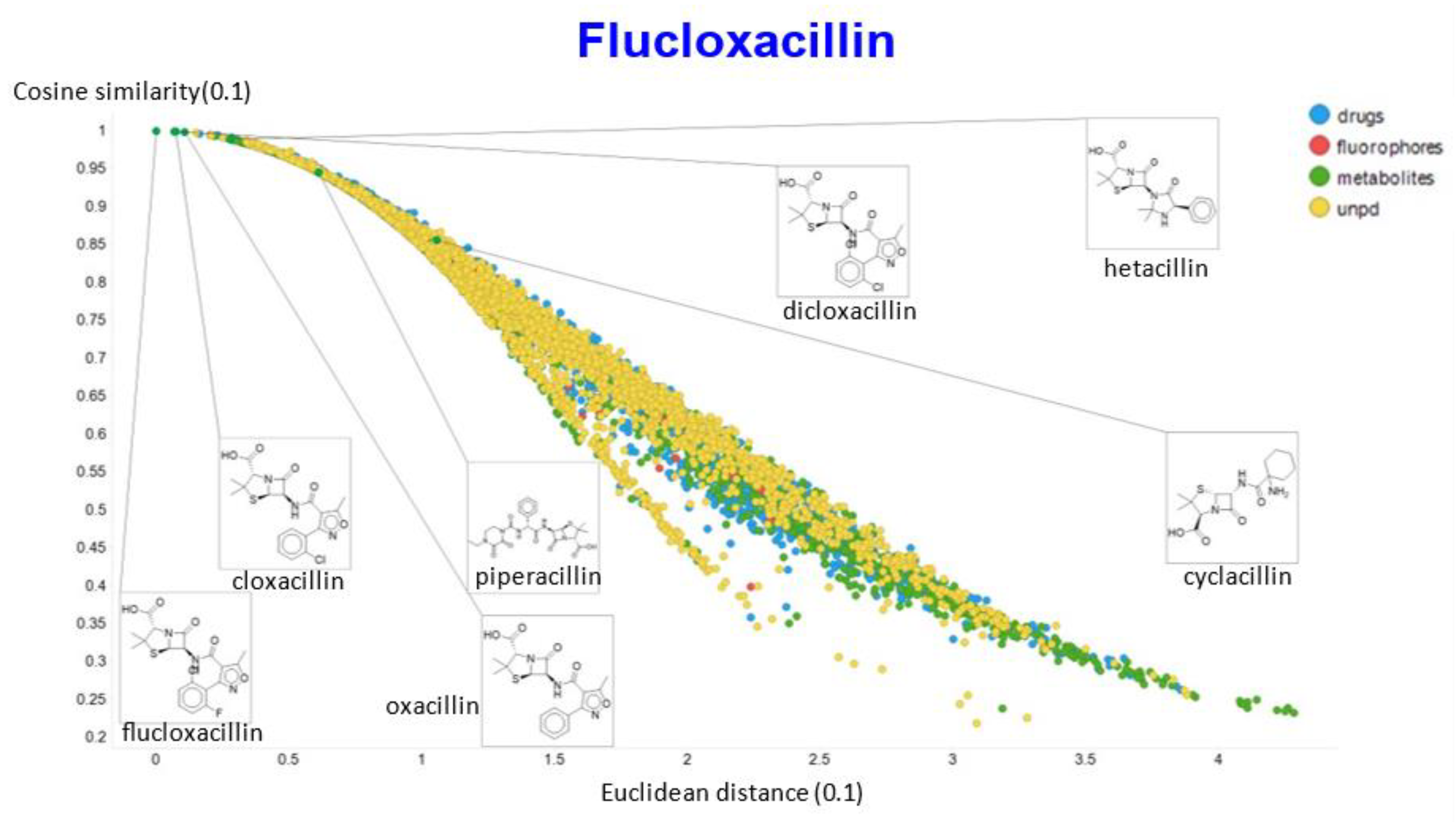

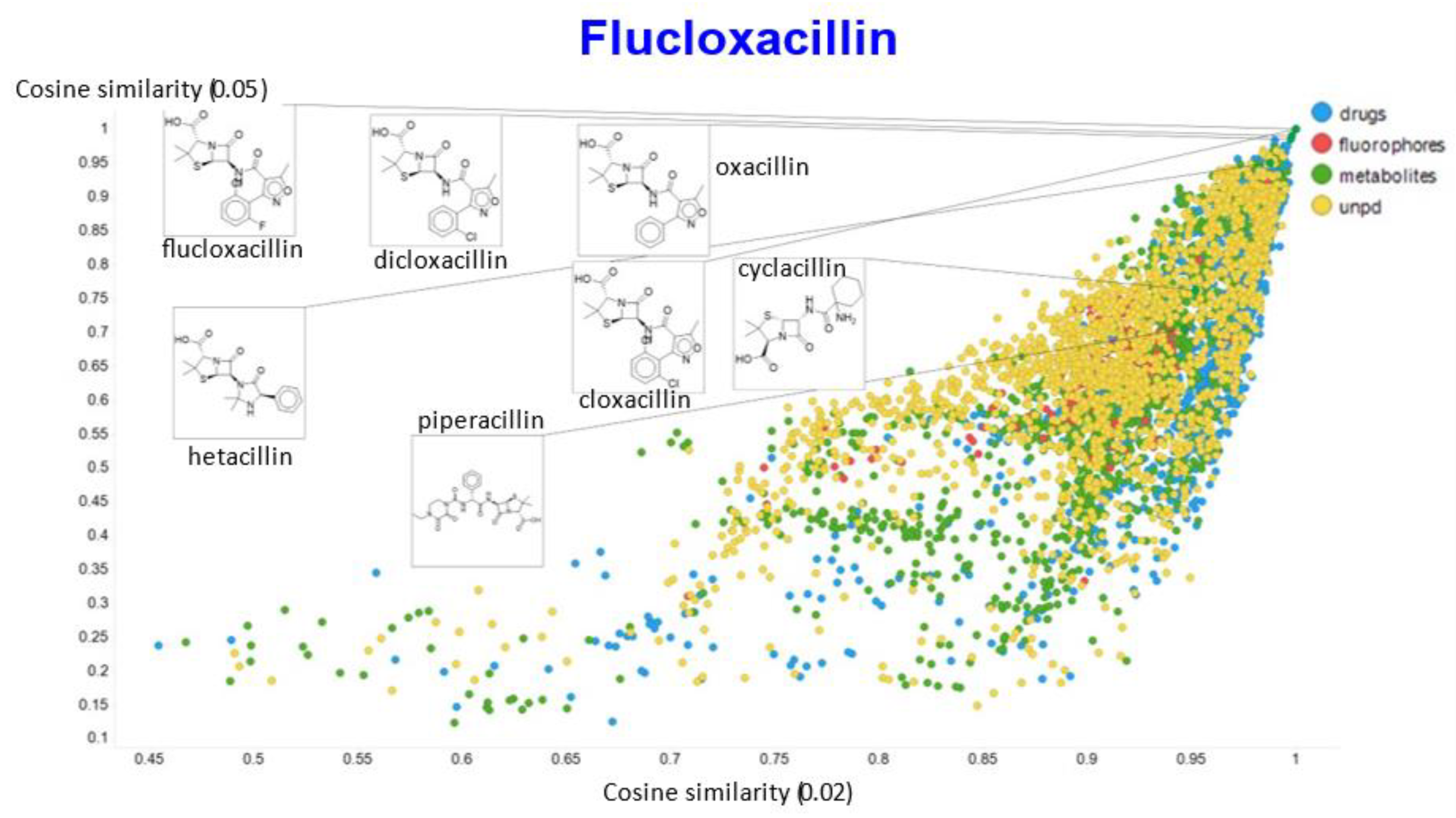

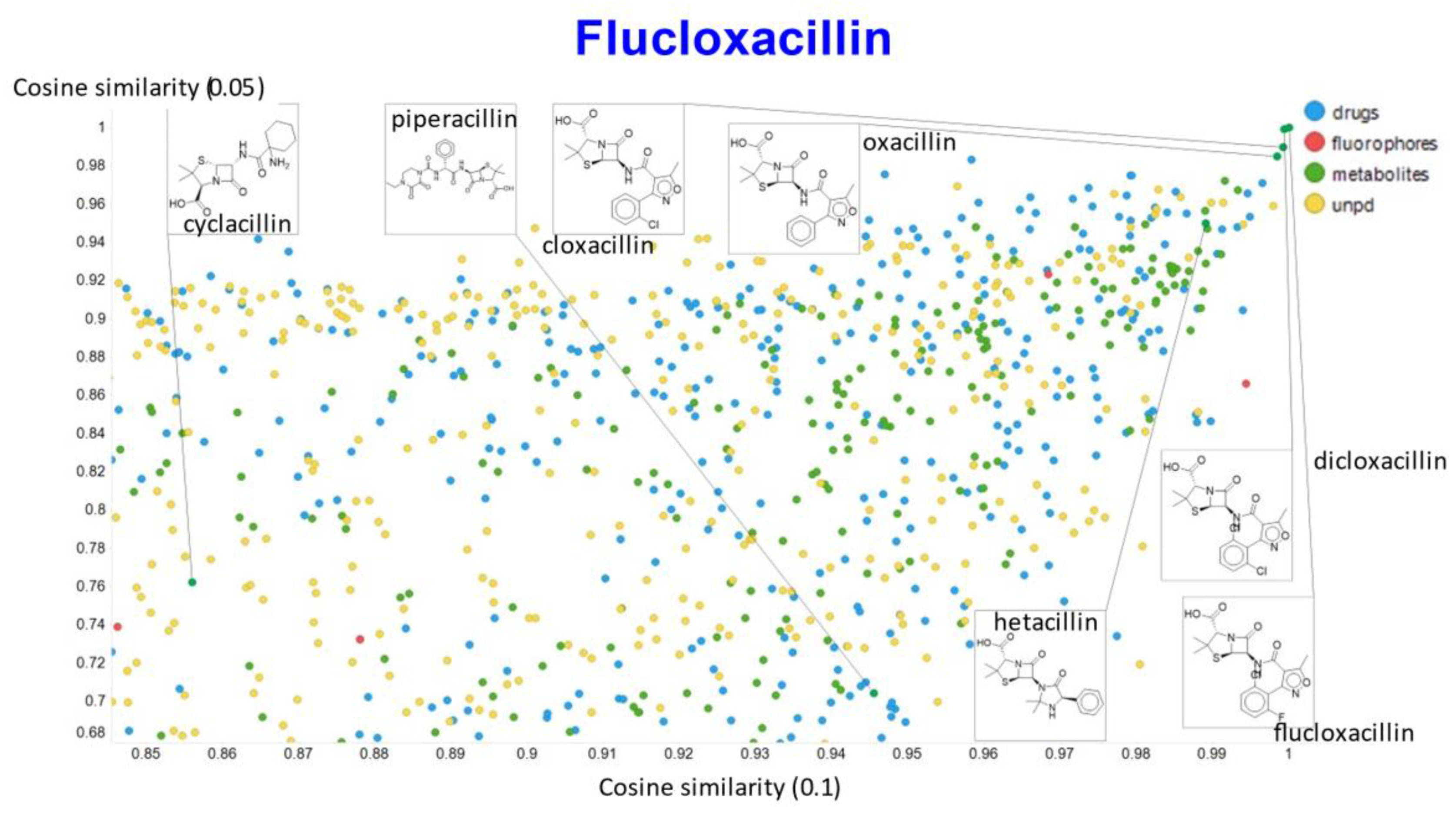

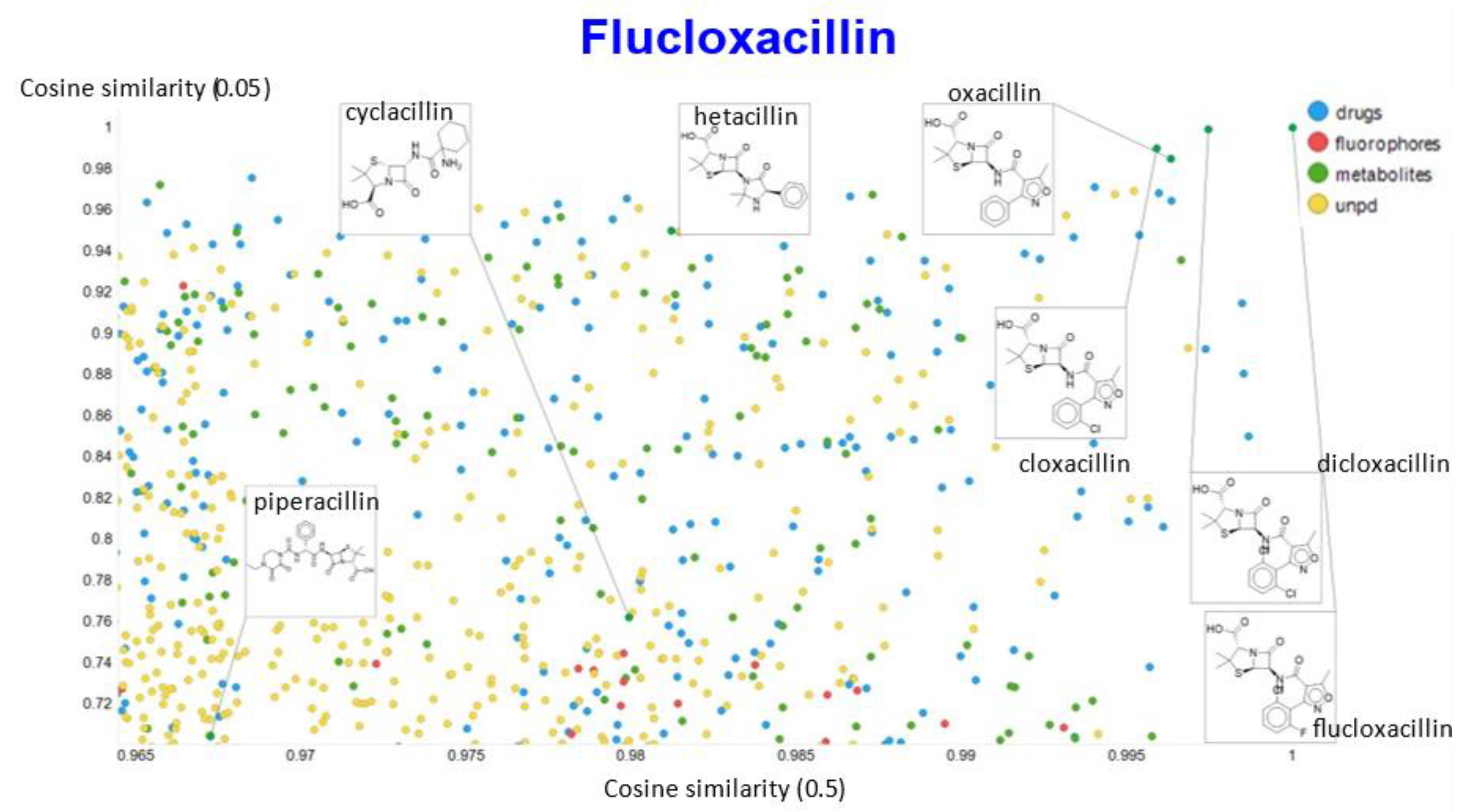

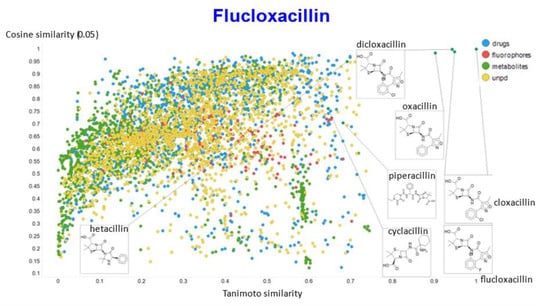

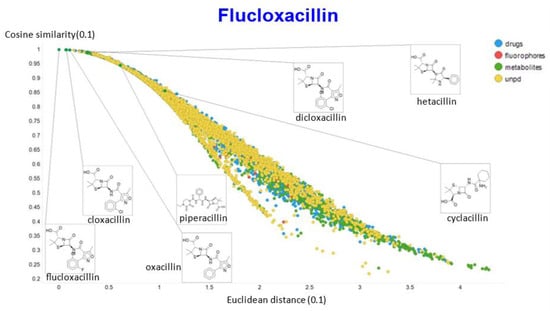

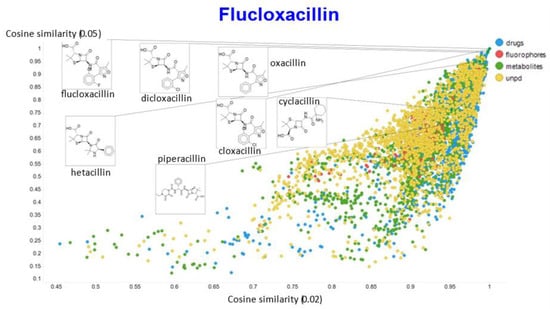

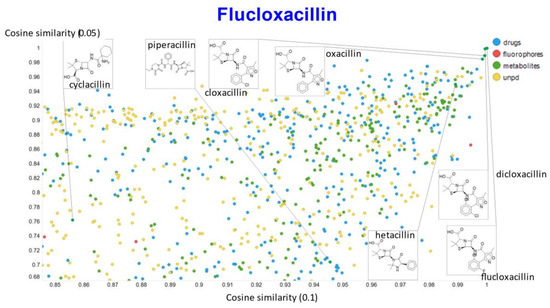

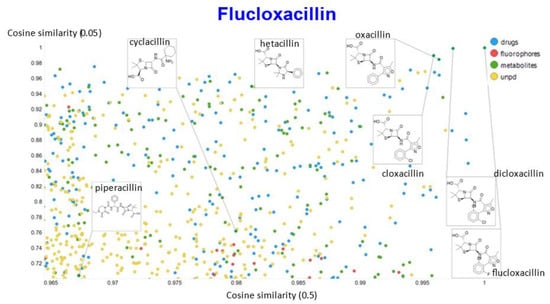

A similar exercise was undertaken for “acillin”-type antibiotics based on flucloxacillin, with the results illustrated in Figure 14, Figure 15, Figure 16, Figure 17 and Figure 18.

Figure 14.

Relationship between cosine similarity and Tanimoto similarity (temperature = 0.05) for flucloxacillin in our chemical space.

Figure 15.

Relationship between cosine similarity and Euclidean distance for flucloxacillin in our chemical space, with a temperature parameter of 0.1.

Figure 16.

Relationship between cosine similarity for values of the temperature parameter of 0.05 and 0.02 for flucloxacillin in our chemical space.

Figure 17.

Relationship between cosine similarity for values of the temperature parameter of 0.05 and 0.1 for flucloxacillin in our chemical space.

Figure 18.

Relationship between cosine similarity for values of the temperature parameter of 0.05 and 0.5 for flucloxacillin in our chemical space.

In the case of flucloxacillin, the closeness of the other “acillins” varied more or less monotonically with the value of the temperature parameter. Thus for particular drugs of interest, it is likely best to fine tune the temperature parameter accordingly. In addition, the bifurcation seen in the case of clozapine was far less substantial in the case of flucloxacillin.

That the kinds of molecule that were most similar to clozapine do indeed share structural features is illustrated (Figure 19) for a temperature of 0.1 in both cosine and Euclidean similarities, where the 10 most similar molecules include six known antipsychotics, plus four related natural products that might be of interest to those involved in drug discovery.

Figure 19.

Molecules closest to clozapine when a temperature of 0.1 is used, as judged by both cosine similarity and Euclidean distance.

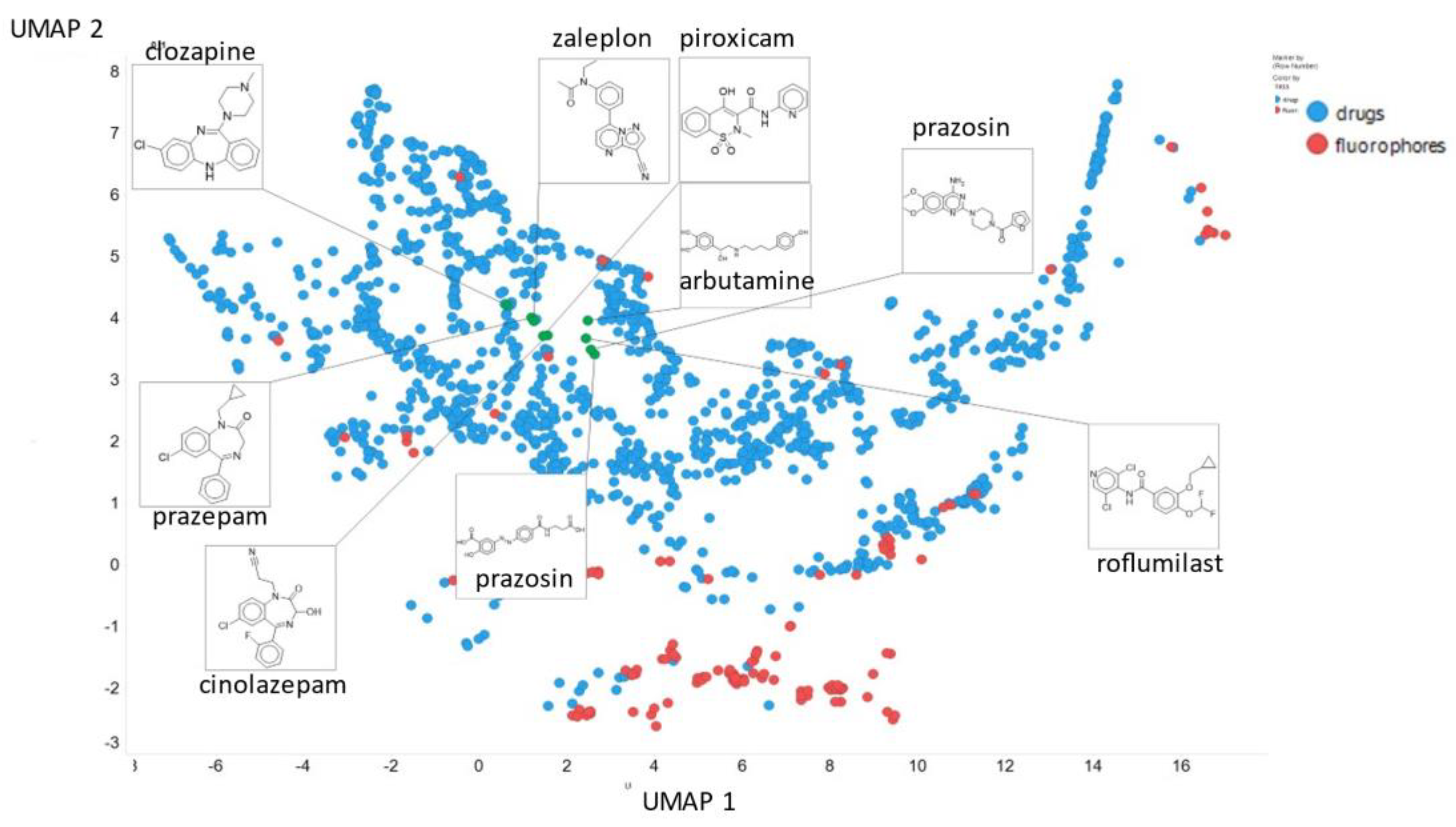

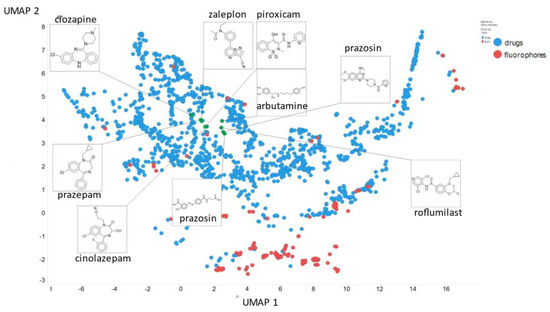

Finally, here we show (using for clarity drugs and fluorophores only (Figure 20)) the closeness of chlorpromazine and prazosin in UMAP space when the NT-Xent temperature factor is 0.1.

Figure 20.

Positions of chlorpromazine, prazosin and some other molecules in UMAP space when the NT-Xent temperature factor is 0.1.

3. Discussion

The concept of molecular similarity is at the core of much of cheminformatics, on the simple grounds that structures that are more similar to each other tend to have more similar bioeffects, an elementary idea typically referred to as the “molecular similarity principle” (e.g., [98,132,133,134]). Its particular importance commonly comes in circumstances where one has a “hit” in a bioassay and wishes to select from a library of available molecules of known structure which ones to prioritize for further assays that might detect a more potent hit. The usual means of assessing molecular similarity are based on encoding the molecules as vectors of numbers based either on a list of measured or calculated biophysical or structural properties, or via the use of so-called molecular fingerprinting methods (e.g., [135,136,137,138,139,140,141,142]). We ourselves have used a variety of these methods in comparing the “similarity” between marketed drugs, endogenous metabolites and vitamins, natural products, and certain fluorophores [91,99,113,143,144,145,146,147,148].

At one level, the biggest problem with these kinds of methods is that all comparisons are done pairwise, and no attempt is thereby made to understand chemical space “as a whole”. In a previous paper [5], based in part on other “deep learning” strategies (e.g., [80,96,149,150,151,152,153,154,155,156,157,158,159]) we used a variational autoencoder (VAE) [6], to project some 6M molecules into a latent chemical space of some 192 dimensions. It was then possible to assess molecular similarity as a simple Euclidean distance.

A popular and more powerful alternative to the VAE is the transformer. Originally proposed by Vaswani and colleagues [11], transformers have come to dominate the list of preferred methods, especially those used with strings such as those involved in natural language processing [106,160,161,162,163]. Since chemical structures can be encoded as strings such as SMILES [164], it is clear that transformers might be used with success to attack problems involving small molecules, and they have indeed been so exploited (e.g., [10,12,104,165,166,167,168]). In the present work, we have adopted and refined the transformer architecture.

A second point is that in the previous work [5], we made no real attempt to manipulate the latent space so as to “disentangle” the input representations, and if one is to begin to understand the working of such “deep” neural networks it is necessary to do so. Of the various strategies available, those using contrastive learning [11,62,66,169,170,171] seem to be the most apposite. In contrastive learning, one informs the learning algorithm whether two (or more) individual examples come from the same or different classes. Since in the present case we do know the structures, it is relatively straightforward to assign “similarities”, and we used a SMILES augmentation method for this.

The standard transformer does not have an obvious latent space of the type generated by autoencoders (variational or otherwise). However, the SimCLR architecture admits its production using one of the transformer heads. To this end, we added a simple autoencoder to our transformer such that we could create a latent space with which to assess molecular similarity more easily. In the present case, we used cosine similarity, Tanimoto similarity, and Euclidean distance.

There is no “correct” answer for similarity methods, and as Everitt [172] points out, results are best assessed in relation to their utility. In this sense, it is clear that our method returns very sensible groupings of molecules that may be seen as similar by the trained chemical eye, and which in the cases illustrated (clozapine and flucloxacillin) clearly group molecules containing the base scaffold that contributes to both their activity and to their family membership (“apines” and “acillins”, respectively).

There has long been a general recognition (possibly as part of the search for “artificial general intelligence” (e.g., [173,174,175,176,177,178,179])) that one reason that human brains are more powerful than are artificial neural networks may be, at least in part, simply because the former contain vastly more neurons. What is now increasingly clear is that very large transformer networks can both act as few-shot learners (e.g., [3,108]) and demonstrate extremely powerful generative properties, albeit within somewhat restricted domains. Even though the limitations on the GPU memory that we could access meant that we studied only some 160,000 molecules, our analysis of the contents of the largest transformer trained with contrastive learning indicated that it was nonetheless very sparsely populated. This both illustrates the capacity of these large networks and leads necessarily to an extremely efficient means of training.

Looking to the future, as more computational resources become available (with transformers using larger networks for their function), we can anticipate the ability to address and segment much larger chemical spaces, and to use our disentangled transformer-based representation for the encoding of molecular structures for a variety of both supervised and unsupervised problem domains.

4. Materials and Methods

We developed a novel hybrid framework by combining three things, namely transformers, an auto-encoder, and a contrastive learning framework. The complete framework is shown in Figure 1. The architecture chosen was based on the SimCLR framework of Hinton and colleagues [61,112], to which we added an autoencoder so as to provide a convenient latent space for analysis and extraction. Programs were written in PyTorch within an Anaconda environment. They were mostly run on one GPU of a 4-GPU (NVIDIA V100) system. The dataset used included ~150,000 natural products [91,99,148], plus fluorophores [113], Recon2 endogenous human metabolites [143,144,146,147], and FDA-approved drugs [99,143,144,145], as previously described. Visualization tools such as t-SNE [127,128] and UMAP [129,130] were implemented as previously described [113]. The dataset was split into training, validation, and test sets as described below.

Each component of the framework is explained in turn below:

4.1. Molecular SMILES Augmentation

Contrastive learning is all about uniting positive pairs and discriminating between negative pairs. The first objective is thus to develop an efficient way of determining positive and negative data pairs for the model. We adopted the SMILES enumeration data augmentation technique of Bjerrum [180], in which any given canonical SMILES string can be used to generate multiple SMILES strings that represent the same molecule. We used this technique to sample two different SMILES strings $x_i$ and $x_j$ from every canonical SMILES string in the dataset, which we regarded as positive pairs.

4.2. Base Encoder

Once the augmented, randomized SMILES had been obtained, their respective positional encodings were added to them. The positional encoding is a sine or cosine function of the position of a token in the input sequence, and is included so that the order of the sequence is taken into account. The next component of the framework is the encoder network, which takes in the sum of the input sequence and its positional encoding and extracts the representation vectors for those samples. As stated by Chen and colleagues [112], there is complete freedom when it comes to the choice of architecture for the encoder network. Therefore, we used a transformer encoder network, which has in recent years become the state of the art for language modelling tasks and has subsequently been extended significantly to chemical domains as well.
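As an illustration of a sinusoidal positional encoding, the sketch below follows the widely used formulation from the original transformer paper, in which even-numbered channels use a sine and odd-numbered channels a cosine of the position scaled by a channel-dependent wavelength. The base of 10000, the function names, and the toy embedding sizes are assumptions here; the exact settings used in this work are not specified in the text.

```python
import math


def positional_encoding(seq_len, d_model, base=10000.0):
    """Return a seq_len x d_model table of sinusoidal positional encodings."""
    table = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        # Step over even channel indices; each sine/cosine pair shares one angle.
        for i in range(0, d_model, 2):
            angle = pos / (base ** (i / d_model))
            table[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                table[pos][i + 1] = math.cos(angle)
    return table


def add_positional_encoding(embeddings):
    """Add the positional table element-wise to a list of token embeddings."""
    seq_len, d_model = len(embeddings), len(embeddings[0])
    table = positional_encoding(seq_len, d_model)
    return [
        [e + p for e, p in zip(token, pos_row)]
        for token, pos_row in zip(embeddings, table)
    ]


# Toy example: three tokens with 4-dimensional (all-zero) embeddings, so the
# output equals the positional table itself.
toy_embeddings = [[0.0] * 4 for _ in range(3)]
for row in add_positional_encoding(toy_embeddings):
    print([round(x, 4) for x in row])
```

Because the encoding depends only on position and channel, two randomized SMILES strings of the same molecule receive the same positional table wherever their lengths overlap, while their token embeddings differ.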

As set down in the original transformers paper [11], the transformer encoder comprises two sub-blocks. The first sub-block has a multi-head attention layer followed by a layer normalization layer. The multi-head attention layer lets the model attend to the values at other positions when encoding the representation for one particular position. The layer normalization layer then normalizes the sum of the sub-block's inputs (carried by the residual connection) and the outputs of the multi-head attention layer. The second sub-block consists of a feed-forward network applied to every position. As in the first sub-block, layer normalization is applied to the position-wise sum of the outputs of the feed-forward layer and the residually connected output of the previous sub-block.

The output of the transformer encoder network is an array of feature-embedding vectors, which we call the representation (hi). The representation has dimension sequence length × dmodel; that is, the transformer encoder network generates a feature-embedding vector for every position in the input sequence. Normally, these transformer encoder blocks are repeated N times, with the output representation of one block serving as the input to the next. Here, we employed 4 transformer encoder blocks.
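The "add and normalize" pattern of the two sub-blocks can be sketched as follows. This is a simplified illustration only: `uniform_attention` and `relu_feed_forward` are hypothetical placeholders for the real multi-head attention and feed-forward layers, and the learnable gain and bias of layer normalization are omitted.

```python
import math


def layer_norm(vector, eps=1e-5):
    """Normalize one position's feature vector to zero mean and unit variance."""
    mean = sum(vector) / len(vector)
    var = sum((v - mean) ** 2 for v in vector) / len(vector)
    return [(v - mean) / math.sqrt(var + eps) for v in vector]


def add_and_norm(x, sublayer_out):
    """LayerNorm(x + Sublayer(x)), applied independently at every position."""
    return [layer_norm([a + b for a, b in zip(x_row, s_row)])
            for x_row, s_row in zip(x, sublayer_out)]


def uniform_attention(x):
    """PLACEHOLDER for multi-head attention: each position receives the mean of all positions."""
    mean_row = [sum(col) / len(x) for col in zip(*x)]
    return [list(mean_row) for _ in x]


def relu_feed_forward(row):
    """PLACEHOLDER for the position-wise feed-forward network."""
    return [max(0.0, v) for v in row]


def encoder_block(x, attention, feed_forward):
    x = add_and_norm(x, attention(x))                       # first sub-block
    x = add_and_norm(x, [feed_forward(row) for row in x])   # second sub-block
    return x


x = [[1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0, 0.0], [3.0, 1.0, -2.0, 1.0]]
out = encoder_block(x, uniform_attention, relu_feed_forward)
```

The output keeps the input shape (sequence length × feature dimension), which is what allows several such blocks to be stacked.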

4.3. Projection Head

The projection head is a simple encoder neural network that projects the feature-embedding representation of shape (input sequence length × dmodel) down to a lower-dimensional representation of shape (1 × dmodel). Here, we used an artificial neural network of 4 layers with the ReLU activation function. This gave an output projection vector zi, which was then used for defining the contrastive loss.

4.4. Contrastive Loss

As the contrastive loss for our experiments, we used the normalized temperature-scaled cross entropy (NT-Xent) loss [64,112,181,182]:

$$\ell_{i,j} = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(z_i, z_k)/\tau\right)}$$

where zi and zj are the projection vectors of a positive pair, obtained when two transformer models are run in parallel; 𝟙 is a Boolean evaluating to 1 if k is not the same as i; and τ is the temperature parameter. Lastly, sim() is the similarity metric used to estimate the similarity between zi and zj. The idea behind this loss function is that when a batch of N samples is drawn for training, each sample is augmented as described in Section 4.1, giving 2N samples in total. For every sample there is therefore one other sample generated from the same canonical SMILES, which we treated as its positive pair, and 2N − 2 remaining samples, each of which we treated as a negative pair.
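A minimal, dependency-free sketch of the NT-Xent computation is given here for orientation. It assumes cosine similarity for sim() (as in SimCLR) and a temperature of 0.5; neither value is stated in the text, and the batch layout (positive partners stored next to each other) is likewise an assumption.

```python
import math


def cosine_similarity(u, v):
    """ASSUMED choice for sim(); the text only calls it 'the similarity metric'."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_pair(z, i, j, temperature):
    """Loss for anchor i with positive j; the sum runs over every k != i."""
    numerator = math.exp(cosine_similarity(z[i], z[j]) / temperature)
    denominator = sum(
        math.exp(cosine_similarity(z[i], z[k]) / temperature)
        for k in range(len(z)) if k != i
    )
    return -math.log(numerator / denominator)


def nt_xent(z, temperature=0.5):
    """Mean loss over 2N projections laid out as [x_1i, x_1j, x_2i, x_2j, ...]."""
    total = 0.0
    for k in range(0, len(z), 2):
        total += nt_xent_pair(z, k, k + 1, temperature)
        total += nt_xent_pair(z, k + 1, k, temperature)
    return total / len(z)


# Positives aligned and negatives orthogonal: low loss.
aligned = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
# Positives orthogonal and one negative identical to the anchor: high loss.
misaligned = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
print(nt_xent(aligned), nt_xent(misaligned))
```

The loss falls as the two views of a molecule move closer together relative to the other 2N − 2 samples in the batch, which is the clustering pressure the latent space relies on.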

4.5. Unprojection Head

Unlike SimCLR or other previous contrastive learning frameworks, we also included a simple decoder network followed by a transformer decoder network, through which the model was also taught to generate a molecular SMILES representation when queried with latent space vectors. With this architecture, we thus developed a novel framework that can not only build well-clustered latent spaces based on the structural similarities of molecules but can also navigate those latent spaces to generate other highly similar molecules.

4.6. Base Decoder

This final component of our architecture, the base decoder, consists of a transformer decoder network, a final linear layer, and a softmax layer. The transformer decoder network adds one more block of multi-head attention, which takes in the attention vectors K and V from the output of the unprojection head. Moreover, masking is applied in the first attention block to the output embedding, which is shifted right by one position. With this, the model is only allowed to take into consideration the feature embeddings from previous positions. The final linear layer is a simple neural network that converts the position vector outputs of the transformer decoder network into a logit vector. A softmax layer then converts this array of logit values into probability scores, and the atom or bond corresponding to the index with the highest probability is produced as the output. Once the complete SMILES sequence of a molecule has been generated, it is compared with the original input sequence using cross-entropy as the loss function.
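The masking, right-shift, softmax, and cross-entropy steps of the decoder can be illustrated with a small sketch. The toy vocabulary, the start token `<s>`, and the logit values are invented for illustration and are not taken from the model.

```python
import math


def causal_mask(seq_len):
    """mask[t][s] is True when position t may attend to position s (s <= t)."""
    return [[s <= t for s in range(seq_len)] for t in range(seq_len)]


def shift_right(tokens, start_token):
    """Decoder input: the target sequence shifted one position to the right."""
    return [start_token] + tokens[:-1]


def softmax(logits):
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_token(logits, vocabulary):
    """Return the token with the highest softmax probability, and that probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return vocabulary[best], probs[best]


def cross_entropy(logits_per_position, target_indices):
    """Mean negative log-probability assigned to the target token at each position."""
    losses = [-math.log(softmax(logits)[target])
              for logits, target in zip(logits_per_position, target_indices)]
    return sum(losses) / len(losses)


vocabulary = ["C", "O", "=", "(", ")"]   # HYPOTHETICAL toy vocabulary
logits = [2.0, 0.5, -1.0, 0.0, 0.0]      # HYPOTHETICAL logits for one position
token, prob = pick_token(logits, vocabulary)
loss = cross_entropy([logits], [0])      # target token is "C"
```

The lower-triangular mask is what prevents a position from seeing tokens that come after it, so the decoder can be trained on whole sequences while still generating one token at a time.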

4.7. Default Settings

We referred to the first dataset of ~5 k molecules containing natural products, drugs, fluorophores, and metabolites as SI1 and that of ~150 k natural product molecules as SI2.

For both datasets, the optimizer was Adam [183], the learning rate was 10⁻⁵, and dropout [184] was 20%. Our model has 4 encoder and decoder blocks, and each transformer block has 4 attention heads in its multi-head attention layer. For the SI1 dataset, the longest molecule (in its SMILES encoding) had length 412. We therefore set the input sequence length after data preprocessing to 450. The vocabulary size was 79, and dmodel was set to 64. With these settings the total number of parameters in our model was 342,674, and we chose the largest batch size that would fit on our GPU set-up, which was 40. We randomly split the dataset in the ratio 3:2 for training and validation. In this particular scenario, however, we augmented the canonical SMILES and trained only on the augmented SMILES. Our model was shown none of the original canonical SMILES during training and validation. Canonical SMILES were used only for obtaining the projection vectors during testing and the analyses of the latent space.

For the SI2 dataset, the maximum molecule length was 619, and we therefore trained the model with an input sequence length of 650. The total vocabulary size of the dataset was 69. The dimensionality dmodel of the model was varied for this dataset from around 48 to 256. For most of our analyses, however, we chose a 256-dimensional latent space (dmodel = 256), and we therefore report the settings for this case only. The batch size was set to 20, and the model had a total of 4,678,864 trainable parameters. In this case, the dataset was split such that 125,000 molecules were used for training and 25,000 reserved for validation.
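The preprocessing implied by these settings (padding every sequence to a fixed length and splitting 3:2) might look like the sketch below. Character-level tokenization, the `<pad>` token, and the fixed random seed are assumptions made for illustration; the text does not describe how the SMILES strings were tokenized.

```python
import random

PAD = "<pad>"  # HYPOTHETICAL padding token


def pad_tokens(tokens, length):
    """Right-pad a token list to a fixed length; reject sequences that are too long."""
    if len(tokens) > length:
        raise ValueError(f"sequence of {len(tokens)} tokens exceeds {length}")
    return tokens + [PAD] * (length - len(tokens))


def split_3_to_2(items, seed=0):
    """Shuffle and split in the ratio 3:2 (60% training, 40% validation)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = (len(shuffled) * 3) // 5
    return shuffled[:cut], shuffled[cut:]


# ASSUMPTION: one token per character; multi-character atoms such as "Cl"
# would need a proper SMILES tokenizer.
padded = pad_tokens(list("CC(=O)O"), 450)

# Indices stand in for the ~5k SI1 molecules.
train_ids, val_ids = split_3_to_2(range(5000))
```

With a maximum observed length of 412, padding to 450 leaves headroom for start and end tokens and for randomized SMILES that are slightly longer than their canonical form.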

5. Conclusions

The combination of transformers, contrastive learning, and an autoencoder head allows the production of a powerful and disentangled learning system that we have applied to the problem of small molecule similarity. It also admitted a clear understanding of the sparseness with which the space was populated even by over 150,000 molecules, giving optimism that these methods, when scaled to greater numbers of molecules, can learn many molecular properties of interest to the computational chemical biologist.

Author Contributions

Conceptualization, all authors; methodology, all authors; software, A.D.S.; resources, D.B.K.; data curation, A.D.S.; writing—original draft preparation, D.B.K.; writing—review and editing, all authors; funding acquisition, D.B.K. All authors have read and agreed to the published version of the manuscript.

Funding

A.D.S. was an intern sponsored by the University of Liverpool. D.B.K. is also funded by the Novo Nordisk Foundation (grant NNF10CC1016517).

Conflicts of Interest

The authors declare that they have no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

2. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

3. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

4. Senior, A.W.; Evans, R.; Jumper, J.; Kirkpatrick, J.; Sifre, L.; Green, T.; Qin, C.; Zidek, A.; Nelson, A.W.R.; Bridgland, A.; et al. Improved protein structure prediction using potentials from deep learning. Nature 2020, 577, 706–710.

5. Samanta, S.; O’Hagan, S.; Swainston, N.; Roberts, T.J.; Kell, D.B. VAE-Sim: A novel molecular similarity measure based on a variational autoencoder. Molecules 2020, 25, 3446.

6. Kingma, D.; Welling, M. Auto-encoding variational Bayes. arXiv 2014, arXiv:1312.6114v1310.

7. Kingma, D.P.; Welling, M. An introduction to variational autoencoders. arXiv 2019, arXiv:1906.02691v02691.

8. Wei, R.; Mahmood, A. Recent advances in variational autoencoders with representation learning for biomedical informatics: A survey. IEEE Access 2021, 9, 4939–4956.

9. Wei, R.; Garcia, C.; El-Sayed, A.; Peterson, V.; Mahmood, A. Variations in variational autoencoders—A comparative evaluation. IEEE Access 2020, 8, 153651–153670.

10. Van Deursen, R.; Tetko, I.V.; Godin, G. Beyond chemical 1d knowledge using transformers. arXiv 2020, arXiv:2010.01027.

11. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

12. Chithrananda, S.; Grand, G.; Ramsundar, B. Chemberta: Large-scale self-supervised pretraining for molecular property prediction. arXiv 2020, arXiv:2010.09885.

13. Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. arXiv 2017, arXiv:1703.01365.

14. Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv 2013, arXiv:1312.6034.

15. Azodi, C.B.; Tang, J.; Shiu, S.H. Opening the black box: Interpretable machine learning for geneticists. Trends Genet. 2020, 36, 442–455.

16. Core, M.G.; Lane, H.C.; van Lent, M.; Gomboc, D.; Solomon, S.; Rosenberg, M. Building explainable artificial intelligence systems. AAAI 2006, 1766–1773.

17. Holzinger, A.; Biemann, C.; Pattichis, C.S.; Kell, D.B. What do we need to build explainable AI systems for the medical domain? arXiv 2017, arXiv:1712.09923v09921.

18. Samek, W.; Montavon, G.; Vedaldi, A.; Hansen, L.K.; Müller, K.-R. Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer: Berlin, Germany, 2019.

19. Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable deep learning models in medical image analysis. arXiv 2020, arXiv:2005.13799.

20. Tjoa, E.; Guan, C. A survey on explainable artificial intelligence (XAI): Towards medical XAI. arXiv 2019, arXiv:1907.07374.

21. Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

22. Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI-explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

23. Parmar, G.; Li, D.; Lee, K.; Tu, Z. Dual contradistinctive generative autoencoder. arXiv 2020, arXiv:2011.10063.

24. Peis, I.; Olmos, P.M.; Artés-Rodríguez, A. Unsupervised learning of global factors in deep generative models. arXiv 2020, arXiv:2012.08234.

25. Klys, J.; Snell, J.; Zemel, R. Learning latent subspaces in variational autoencoders. arXiv 2018, arXiv:1812.06190.

26. He, Z.; Kan, M.; Zhang, J.; Shan, S. PA-GAN: Progressive attention generative adversarial network for facial attribute editing. arXiv 2020, arXiv:2007.05892.

27. Shen, X.; Liu, F.; Dong, H.; Lian, Q.; Chen, Z.; Zhang, T. Disentangled generative causal representation learning. arXiv 2020, arXiv:2010.02637.

28. Esser, P.; Rombach, R.; Ommer, B. A note on data biases in generative models. arXiv 2020, arXiv:2012.02516.

29. Kumar, A.; Sattigeri, P.; Balakrishnan, A. Variational inference of disentangled latent concepts from unlabeled observations. arXiv 2017, arXiv:1711.00848.

30. Kim, H.; Mnih, A. Disentangling by factorising. arXiv 2018, arXiv:1802.05983.

31. Locatello, F.; Bauer, S.; Lucic, M.; Rätsch, G.; Gelly, S.; Schölkopf, B.; Bachem, O. Challenging common assumptions in the unsupervised learning of disentangled representations. arXiv 2018, arXiv:1811.12359.

32. Locatello, F.; Tschannen, M.; Bauer, S.; Rätsch, G.; Schölkopf, B.; Bachem, O. Disentangling factors of variation using few labels. arXiv 2019, arXiv:1905.01258v01251.

33. Locatello, F.; Poole, B.; Rätsch, G.; Schölkopf, B.; Bachem, O.; Tschannen, M. Weakly-supervised disentanglement without compromises. arXiv 2020, arXiv:2002.02886.

34. Oldfield, J.; Panagakis, Y.; Nicolaou, M.A. Adversarial learning of disentangled and generalizable representations of visual attributes. IEEE Trans. Neural Netw. Learn. Syst. 2021.

35. Pandey, A.; Schreurs, J.; Suykens, J.A.K. Generative restricted kernel machines: A framework for multi-view generation and disentangled feature learning. Neural Netw. 2021, 135, 177–191.

36. Hao, Z.; Lv, D.; Li, Z.; Cai, R.; Wen, W.; Xu, B. Semi-supervised disentangled framework for transferable named entity recognition. Neural Netw. 2021, 135, 127–138.

37. Shen, Y.; Yang, C.; Tang, X.; Zhou, B. Interfacegan: Interpreting the disentangled face representation learned by gans. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

38. Tang, Y.; Tang, Y.; Zhu, Y.; Xiao, J.; Summers, R.M. A disentangled generative model for disease decomposition in chest x-rays via normal image synthesis. Med. Image Anal. 2021, 67, 101839.

39. Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 681–685.

40. Cootes, T.F.; Taylor, C.J.; Cooper, D.H.; Graham, J. Active shape models—Their training and application. Comput. Vis. Image Underst. 1995, 61, 38–59.

41. Hill, A.; Cootes, T.F.; Taylor, C.J. Active shape models and the shape approximation problem. Image Vis. Comput. 1996, 14, 601–607.

42. Salam, H.; Seguier, R. A survey on face modeling: Building a bridge between face analysis and synthesis. Vis. Comput. 2018, 34, 289–319.

43. Bozkurt, A.; Esmaeili, B.; Brooks, D.H.; Dy, J.G.; van de Meent, J.-W. Evaluating combinatorial generalization in variational autoencoders. arXiv 2019, arXiv:1911.04594v04591.

44. Alemi, A.A.; Poole, B.; Fischer, I.; Dillon, J.V.; Saurous, R.A.; Murphy, K. Fixing a broken ELBO. arXiv 2019, arXiv:1711.00464.

45. Zhao, S.; Song, J.; Ermon, S. InfoVAE: Balancing learning and inference in variational autoencoders. arXiv 2017, arXiv:1706.02262v02263.

46. Leibfried, F.; Dutordoir, V.; John, S.T.; Durrande, N. A tutorial on sparse Gaussian processes and variational inference. arXiv 2020, arXiv:2012.13962.

47. Rezende, D.J.; Viola, F. Taming VAEs. arXiv 2018, arXiv:1810.00597v00591.

48. Dai, B.; Wipf, D. Diagnosing and enhancing VAE models. arXiv 2019, arXiv:1903.05789v05782.

49. Li, Y.; Yu, S.; Principe, J.C.; Li, X.; Wu, D. PRI-VAE: Principle-of-relevant-information variational autoencoders. arXiv 2020, arXiv:2007.06503.

50. Higgins, I.; Matthey, L.; Pal, A.; Burgess, C.; Glorot, X.; Botvinick, M.; Mohamed, S.; Lerchner, A. β-VAE: Learning basic visual concepts with a constrained variational framework. In Proceedings of the ICLR 2017, Toulon, France, 24–26 April 2017.

51. Burgess, C.P.; Higgins, I.; Pal, A.; Matthey, L.; Watters, N.; Desjardins, G.; Lerchner, A. Understanding disentangling in β-VAE. arXiv 2018, arXiv:1804.03599.

52. Havtorn, J.D.; Frellsen, J.; Hauberg, S.; Maaløe, L. Hierarchical vaes know what they don’t know. arXiv 2021, arXiv:2102.08248.

53. Kumar, A.; Poole, B. On implicit regularization in β-VAEs. arXiv 2021, arXiv:2002.00041.

54. Yang, T.; Ren, X.; Wang, Y.; Zeng, W.; Zheng, N.; Ren, P. GroupifyVAE: From group-based definition to VAE-based unsupervised representation disentanglement. arXiv 2021, arXiv:2102.10303.

55. Gatopoulos, I.; Tomczak, J.M. Self-supervised variational auto-encoders. arXiv 2020, arXiv:2010.02014.

56. Rong, Y.; Bian, Y.; Xu, T.; Xie, W.; Wei, Y.; Huang, W.; Huang, J. Self-supervised graph transformer on large-scale molecular data. arXiv 2020, arXiv:2007.02835.

57. Saeed, A.; Grangier, D.; Zeghidour, N. Contrastive learning of general-purpose audio representations. arXiv 2020, arXiv:2010.10915.

58. Aneja, J.; Schwing, A.; Kautz, J.; Vahdat, A. NCP-VAE: Variational autoencoders with noise contrastive priors. arXiv 2020, arXiv:2010.02917.

59. Artelt, A.; Hammer, B. Efficient computation of contrastive explanations. arXiv 2020, arXiv:2010.02647.

60. Ciga, O.; Martel, A.L.; Xu, T. Self supervised contrastive learning for digital histopathology. arXiv 2020, arXiv:2011.13971.

61. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. arXiv 2020, arXiv:2002.05709.

62. Jaiswal, A.; Babu, A.R.; Zadeh, M.Z.; Banerjee, D.; Makedon, F. A survey on contrastive self-supervised learning. arXiv 2020, arXiv:2011.00362.

63. Purushwalkam, S.; Gupta, A. Demystifying contrastive self-supervised learning: Invariances, augmentations and dataset biases. arXiv 2020, arXiv:2007.13916.

64. Van den Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748v03742.

65. Verma, V.; Luong, M.-T.; Kawaguchi, K.; Pham, H.; Le, Q.V. Towards domain-agnostic contrastive learning. arXiv 2020, arXiv:2011.04419.

66. Le-Khac, P.H.; Healy, G.; Smeaton, A.F. Contrastive representation learning: A framework and review. arXiv 2020, arXiv:2010.05113.

67. Wang, Q.; Meng, F.; Breckon, T.P. Data augmentation with norm-VAE for unsupervised domain adaptation. arXiv 2020, arXiv:2012.00848.

68. Li, H.; Zhang, X.; Sun, R.; Xiong, H.; Tian, Q. Center-wise local image mixture for contrastive representation learning. arXiv 2020, arXiv:2011.02697.

69. You, Y.; Chen, T.; Sui, Y.; Chen, T.; Wang, Z.; Shen, Y. Graph contrastive learning with augmentations. arXiv 2020, arXiv:2010.13902.

70. Willett, P. Similarity-based data mining in files of two-dimensional chemical structures using fingerprint measures of molecular resemblance. Wires Data Min. Knowl. 2011, 1, 241–251.

71. Stumpfe, D.; Bajorath, J. Similarity searching. Wires Comput. Mol. Sci. 2011, 1, 260–282.

72. Maggiora, G.; Vogt, M.; Stumpfe, D.; Bajorath, J. Molecular similarity in medicinal chemistry. J. Med. Chem. 2014, 57, 3186–3204.

73. Irwin, J.J.; Shoichet, B.K. ZINC--a free database of commercially available compounds for virtual screening. J. Chem. Inf. Model. 2005, 45, 177–182.

74. Ertl, P.; Schuffenhauer, A. Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. J. Cheminform. 2009, 1, 8.

75. Patel, H.; Ihlenfeldt, W.D.; Judson, P.N.; Moroz, Y.S.; Pevzner, Y.; Peach, M.L.; Delannee, V.; Tarasova, N.I.; Nicklaus, M.C. Savi, in silico generation of billions of easily synthesizable compounds through expert-system type rules. Sci. Data 2020, 7, 384.

76. Bickerton, G.R.; Paolini, G.V.; Besnard, J.; Muresan, S.; Hopkins, A.L. Quantifying the chemical beauty of drugs. Nat. Chem. 2012, 4, 90–98.

77. Cernak, T.; Dykstra, K.D.; Tyagarajan, S.; Vachal, P.; Krska, S.W. The medicinal chemist’s toolbox for late stage functionalization of drug-like molecules. Chem. Soc. Rev. 2016, 45, 546–576.

78. Lovrić, M.; Molero, J.M.; Kern, R. PySpark and RDKit: Moving towards big data in cheminformatics. Mol. Inform. 2019, 38, e1800082.

79. Clyde, A.; Ramanathan, A.; Stevens, R. Scaffold embeddings: Learning the structure spanned by chemical fragments, scaffolds and compounds. arXiv 2021, arXiv:2103.06867.

80. Arús-Pous, J.; Awale, M.; Probst, D.; Reymond, J.L. Exploring chemical space with machine learning. Chem. Int. J. Chem. 2019, 73, 1018–1023.

81. Awale, M.; Probst, D.; Reymond, J.L. WebMolCS: A web-based interface for visualizing molecules in three-dimensional chemical spaces. J. Chem. Inf. Model. 2017, 57, 643–649.

82. Baldi, P.; Muller, K.R.; Schneider, G. Charting chemical space: Challenges and opportunities for artificial intelligence and machine learning. Mol. Inform. 2011, 30, 751–752.

83. Chen, Y.; Garcia de Lomana, M.; Friedrich, N.O.; Kirchmair, J. Characterization of the chemical space of known and readily obtainable natural products. J. Chem. Inf. Model. 2018, 58, 1518–1532.

84. Drew, K.L.M.; Baiman, H.; Khwaounjoo, P.; Yu, B.; Reynisson, J. Size estimation of chemical space: How big is it? J. Pharm. Pharmacol. 2012, 64, 490–495.

85. Ertl, P. Visualization of chemical space for medicinal chemists. J. Cheminform. 2014, 6, O4.

86. Gonzalez-Medina, M.; Prieto-Martinez, F.D.; Naveja, J.J.; Mendez-Lucio, O.; El-Elimat, T.; Pearce, C.J.; Oberlies, N.H.; Figueroa, M.; Medina-Franco, J.L. Chemoinformatic expedition of the chemical space of fungal products. Future Med. Chem. 2016, 8, 1399–1412.

87. Klimenko, K.; Marcou, G.; Horvath, D.; Varnek, A. Chemical space mapping and structure-activity analysis of the chembl antiviral compound set. J. Chem. Inf. Model. 2016, 56, 1438–1454.

88. Lin, A.; Horvath, D.; Afonina, V.; Marcou, G.; Reymond, J.L.; Varnek, A. Mapping of the available chemical space versus the chemical universe of lead-like compounds. ChemMedChem 2018, 13, 540–554.

89. Lucas, X.; Gruning, B.A.; Bleher, S.; Günther, S. The purchasable chemical space: A detailed picture. J. Chem. Inf. Model. 2015, 55, 915–924.

90. Nigam, A.; Friederich, P.; Krenn, M.; Aspuru-Guzik, A. Augmenting genetic algorithms with deep neural networks for exploring the chemical space. arXiv 2019, arXiv:1909.11655.

91. O’Hagan, S.; Kell, D.B. Generation of a small library of natural products designed to cover chemical space inexpensively. Pharm. Front. 2019, 1, e190005.

92. Polishchuk, P.G.; Madzhidov, T.I.; Varnek, A. Estimation of the size of drug-like chemical space based on GDB-17 data. J. Comput. Aided Mol. Des. 2013, 27, 675–679.

93. Reymond, J.L. The chemical space project. Acc. Chem. Res. 2015, 48, 722–730.

94. Rosén, J.; Gottfries, J.; Muresan, S.; Backlund, A.; Oprea, T.I. Novel chemical space exploration via natural products. J. Med. Chem. 2009, 52, 1953–1962.

95. Thakkar, A.; Selmi, N.; Reymond, J.L.; Engkvist, O.; Bjerrum, E. ‘Ring breaker’: Neural network driven synthesis prediction of the ring system chemical space. J. Med. Chem. 2020, 63, 8791–8808.

96. Thiede, L.A.; Krenn, M.; Nigam, A.; Aspuru-Guzik, A. Curiosity in exploring chemical space: Intrinsic rewards for deep molecular reinforcement learning. arXiv 2020, arXiv:2012.11293.

97. Coley, C.W. Defining and exploring chemical spaces. Trends Chem. 2021, 3, 133–145.

98. Bender, A.; Glen, R.C. Molecular similarity: A key technique in molecular informatics. Org. Biomol. Chem. 2004, 2, 3204–3218.

99. O’Hagan, S.; Kell, D.B. Consensus rank orderings of molecular fingerprints illustrate the ‘most genuine’ similarities between marketed drugs and small endogenous human metabolites, but highlight exogenous natural products as the most important ‘natural’ drug transporter substrates. ADMET DMPK 2017, 5, 85–125.

100. Sterling, T.; Irwin, J.J. ZINC 15—Ligand discovery for everyone. J. Chem. Inf. Model. 2015, 55, 2324–2337.

101. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. arXiv 2019, arXiv:1910.10683.

102. Rives, A.; Goyal, S.; Meier, J.; Guo, D.; Ott, M.; Zitnick, C.L.; Ma, J.; Fergus, R. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. bioRxiv 2019, 622803.

103. So, D.R.; Liang, C.; Le, Q.V. The evolved transformer. arXiv 2019, arXiv:1901.11117.

104. Grechishnikova, D. Transformer neural network for protein specific de novo drug generation as machine translation problem. bioRxiv 2020.

105. Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlos, T.; Hawkins, P.; Davis, J.; Mohiuddin, A.; Kaiser, L.; et al. Rethinking attention with Performers. arXiv 2020, arXiv:2009.14794.

106. Yun, C.; Bhojanapalli, S.; Rawat, A.S.; Reddi, S.J.; Kumar, S. Are transformers universal approximators of sequence-to-sequence functions? arXiv 2019, arXiv:1912.10077.

107. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

108. Fedus, W.; Zoph, B.; Shazeer, N. Switch transformers: Scaling to trillion parameter models with simple and efficient sparsity. arXiv 2021, arXiv:2101.03961.

109. Lu, K.; Grover, A.; Abbeel, P.; Mordatch, I. Pretrained transformers as universal computation engines. arXiv 2021, arXiv:2103.05247.

110. Goyal, P.; Caron, M.; Lefaudeux, B.; Xu, M.; Wang, P.; Pai, V.; Singh, M.; Liptchinsky, V.; Misra, I.; Joulin, A.; et al. Self-supervised pretraining of visual features in the wild. arXiv 2021, arXiv:2103.01988v01981.

111. Wang, Y.; Wang, J.; Cao, Z.; Farimani, A.B. MolCLR: Molecular contrastive learning of representations via graph neural networks. arXiv 2021, arXiv:2102.10056.

112. Chen, T.; Kornblith, S.; Swersky, K.; Norouzi, M.; Hinton, G. Big self-supervised models are strong semi-supervised learners. arXiv 2020, arXiv:2006.10029.

113. O’Hagan, S.; Kell, D.B. Structural similarities between some common fluorophores used in biology, marketed drugs, endogenous metabolites, and natural products. Mar. Drugs 2020, 18, 582.

114. Ji, Z.; Zou, X.; Huang, T.; Wu, S. Unsupervised few-shot feature learning via self-supervised training. Front. Comput. Neurosci. 2020, 14, 83.

115. Wang, Y.; Yao, Q.; Kwok, J.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. arXiv 2019, arXiv:1904.05046.

116. Ma, J.; Fong, S.H.; Luo, Y.; Bakkenist, C.J.; Shen, J.P.; Mourragui, S.; Wessels, L.F.A.; Hafner, M.; Sharan, R.; Peng, J.; et al. Few-shot learning creates predictive models of drug response that translate from high-throughput screens to individual patients. Nat. Cancer 2021, 2, 233–244.

117. Li, F.-F.; Fergus, R.; Perona, P. One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 594–611.

118. Rezende, D.J.; Mohamed, S.; Danihelka, I.; Gregor, K.; Wierstra, D. One-shot generalization in deep generative models. arXiv 2016, arXiv:1603.05106v05101.

119. Altae-Tran, H.; Ramsundar, B.; Pappu, A.S.; Pande, V. Low data drug discovery with one-shot learning. ACS Cent. Sci. 2017, 3, 283–293.

120. Baskin, I.I. Is one-shot learning a viable option in drug discovery? Expert Opin. Drug Discov. 2019, 14, 601–603.

121. He, X.; Zhao, K.Y.; Chu, X.W. AutoML: A survey of the state-of-the-art. Knowl. Based Syst. 2021, 212, 106622.

122. Chochlakis, G.; Georgiou, E.; Potamianos, A. End-to-end generative zero-shot learning via few-shot learning. arXiv 2021, arXiv:2102.04379.

123. Majumder, O.; Ravichandran, A.; Maji, S.; Polito, M.; Bhotika, R.; Soatto, S. Revisiting contrastive learning for few-shot classification. arXiv 2021, arXiv:2101.11058.

124. Dasari, S.; Gupta, A. Transformers for one-shot visual imitation. arXiv 2020, arXiv:2011.05970.

125. Logeswaran, L.; Lee, A.; Ott, M.; Lee, H.; Ranzato, M.A.; Szlam, A. Few-shot sequence learning with transformers. arXiv 2020, arXiv:2012.09543.

126. Belkin, M.; Hsu, D.; Ma, S.; Mandal, S. Reconciling modern machine-learning practice and the classical bias-variance trade-off. Proc. Natl. Acad. Sci. USA 2019, 116, 15849–15854.

127. Van der Maaten, L.; Hinton, G. Visualizing data using t-sne. J. Mach. Learn. Res. 2008, 9, 2579–2605.

128. Van der Maaten, L. Learning a parametric embedding by preserving local structure. Proc. AISTATS 2009, 384–391.

129. McInnes, L.; Healy, J.; Melville, J. UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv 2018, arXiv:1802.03426v03422.

130. McInnes, L.; Healy, J.; Saul, N.; Großberger, L. UMAP: Uniform manifold approximation and projection. J. Open Source Softw. 2018.

- Dickens, D.; Rädisch, S.; Chiduza, G.N.; Giannoudis, A.; Cross, M.J.; Malik, H.; Schaeffeler, E.; Sison-Young, R.L.; Wilkinson, E.L.; Goldring, C.E.; et al. Cellular uptake of the atypical antipsychotic clozapine is a carrier-mediated process. Mol. Pharm. 2018, 15, 3557–3572. [Google Scholar] [CrossRef]

- Horvath, D.; Jeandenans, C. Neighborhood behavior of in silico structural spaces with respect to in vitro activity spaces-a novel understanding of the molecular similarity principle in the context of multiple receptor binding profiles. J. Chem. Inf. Comput. Sci. 2003, 43, 680–690. [Google Scholar] [CrossRef]

- Bender, A.; Jenkins, J.L.; Li, Q.L.; Adams, S.E.; Cannon, E.O.; Glen, R.C. Molecular similarity: Advances in methods, applications and validations in virtual screening and qsar. Annu. Rep. Comput. Chem. 2006, 2, 141–168. [Google Scholar] [PubMed]

- Horvath, D.; Koch, C.; Schneider, G.; Marcou, G.; Varnek, A. Local neighborhood behavior in a combinatorial library context. J. Comput. Aid. Mol. Des. 2011, 25, 237–252.

- Gasteiger, J. Handbook of Chemoinformatics: From Data to Knowledge; Wiley/VCH: Weinheim, Germany, 2003.

- Bajorath, J. Chemoinformatics: Concepts, Methods and Tools for Drug Discovery; Humana Press: Totowa, NJ, USA, 2004.

- Sutherland, J.J.; Raymond, J.W.; Stevens, J.L.; Baker, T.K.; Watson, D.E. Relating molecular properties and in vitro assay results to in vivo drug disposition and toxicity outcomes. J. Med. Chem. 2012, 55, 6455–6466.

- Capecchi, A.; Probst, D.; Reymond, J.L. One molecular fingerprint to rule them all: Drugs, biomolecules, and the metabolome. J. Cheminform. 2020, 12, 43.

- Muegge, I.; Mukherjee, P. An overview of molecular fingerprint similarity search in virtual screening. Expert Opin. Drug Discov. 2016, 11, 137–148.

- Nisius, B.; Bajorath, J. Rendering conventional molecular fingerprints for virtual screening independent of molecular complexity and size effects. ChemMedChem 2010, 5, 859–868.

- Riniker, S.; Landrum, G.A. Similarity maps—A visualization strategy for molecular fingerprints and machine-learning methods. J. Cheminform. 2013, 5, 43.

- Vogt, I.; Stumpfe, D.; Ahmed, H.E.; Bajorath, J. Methods for computer-aided chemical biology. Part 2: Evaluation of compound selectivity using 2D molecular fingerprints. Chem. Biol. Drug Des. 2007, 70, 195–205.

- O’Hagan, S.; Swainston, N.; Handl, J.; Kell, D.B. A ‘rule of 0.5’ for the metabolite-likeness of approved pharmaceutical drugs. Metabolomics 2015, 11, 323–339.

- O’Hagan, S.; Kell, D.B. Understanding the foundations of the structural similarities between marketed drugs and endogenous human metabolites. Front. Pharmacol. 2015, 6, 105.

- O’Hagan, S.; Kell, D.B. The apparent permeabilities of Caco-2 cells to marketed drugs: Magnitude, and independence from both biophysical properties and endogenite similarities. PeerJ 2015, 3, e1405.

- O’Hagan, S.; Kell, D.B. MetMaxStruct: A Tversky-similarity-based strategy for analysing the (sub)structural similarities of drugs and endogenous metabolites. Front. Pharmacol. 2016, 7, 266.

- O’Hagan, S.; Kell, D.B. Analysis of drug-endogenous human metabolite similarities in terms of their maximum common substructures. J. Cheminform. 2017, 9, 18.

- O’Hagan, S.; Kell, D.B. Analysing and navigating natural products space for generating small, diverse, but representative chemical libraries. Biotechnol. J. 2018, 13, 1700503.

- Gawehn, E.; Hiss, J.A.; Schneider, G. Deep learning in drug discovery. Mol. Inform. 2016, 35, 3–14.

- Gómez-Bombarelli, R.; Wei, J.N.; Duvenaud, D.; Hernández-Lobato, J.M.; Sánchez-Lengeling, B.; Sheberla, D.; Aguilera-Iparraguirre, J.; Hirzel, T.D.; Adams, R.P.; Aspuru-Guzik, A. Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent. Sci. 2018, 4, 268–276.

- Sanchez-Lengeling, B.; Aspuru-Guzik, A. Inverse molecular design using machine learning: Generative models for matter engineering. Science 2018, 361, 360–365.

- Arús-Pous, J.; Probst, D.; Reymond, J.L. Deep learning invades drug design and synthesis. Chimia 2018, 72, 70–71.

- Yang, K.; Swanson, K.; Jin, W.; Coley, C.; Eiden, P.; Gao, H.; Guzman-Perez, A.; Hopper, T.; Kelley, B.; Mathea, M.; et al. Analyzing learned molecular representations for property prediction. J. Chem. Inf. Model. 2019, 59, 3370–3388.

- Zhavoronkov, A.; Ivanenkov, Y.A.; Aliper, A.; Veselov, M.S.; Aladinskiy, V.A.; Aladinskaya, A.V.; Terentiev, V.A.; Polykovskiy, D.A.; Kuznetsov, M.D.; Asadulaev, A.; et al. Deep learning enables rapid identification of potent DDR1 kinase inhibitors. Nat. Biotechnol. 2019, 37, 1038–1040.

- Khemchandani, Y.; O’Hagan, S.; Samanta, S.; Swainston, N.; Roberts, T.J.; Bollegala, D.; Kell, D.B. DeepGraphMolGen, a multiobjective, computational strategy for generating molecules with desirable properties: A graph convolution and reinforcement learning approach. J. Cheminform. 2020, 12, 53.

- Shen, C.; Krenn, M.; Eppel, S.; Aspuru-Guzik, A. Deep molecular dreaming: Inverse machine learning for de-novo molecular design and interpretability with surjective representations. arXiv 2020, arXiv:2012.09712.

- Moret, M.; Friedrich, L.; Grisoni, F.; Merk, D.; Schneider, G. Generative molecular design in low data regimes. Nat. Mach. Intell. 2020, 2, 171–180.

- Kell, D.B.; Samanta, S.; Swainston, N. Deep learning and generative methods in cheminformatics and chemical biology: Navigating small molecule space intelligently. Biochem. J. 2020, 477, 4559–4580.

- Walters, W.P.; Barzilay, R. Applications of deep learning in molecule generation and molecular property prediction. Acc. Chem. Res. 2021, 54, 263–270.

- Zaheer, M.; Guruganesh, G.; Dubey, A.; Ainslie, J.; Alberti, C.; Ontanon, S.; Pham, P.; Ravula, A.; Wang, Q.; Yang, L.; et al. Big bird: Transformers for longer sequences. arXiv 2020, arXiv:2007.14062.

- Hutson, M. The language machines. Nature 2021, 591, 22–25.

- Topal, M.O.; Bas, A.; van Heerden, I. Exploring transformers in natural language generation: GPT, BERT, and XLNet. arXiv 2021, arXiv:2102.08036.

- Zandie, R.; Mahoor, M.H. Topical language generation using transformers. arXiv 2021, arXiv:2103.06434.

- Weininger, D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 1988, 28, 31–36.

- Tetko, I.V.; Karpov, P.; Van Deursen, R.; Godin, G. State-of-the-art augmented NLP transformer models for direct and single-step retrosynthesis. Nat. Commun. 2020, 11, 5575.

- Lim, S.; Lee, Y.O. Predicting chemical properties using self-attention multi-task learning based on SMILES representation. arXiv 2020, arXiv:2010.11272.

- Pflüger, P.M.; Glorius, F. Molecular machine learning: The future of synthetic chemistry? Angew. Chem. Int. Ed. Engl. 2020.

- Shin, B.; Park, S.; Bak, J.; Ho, J.C. Controlled molecule generator for optimizing multiple chemical properties. arXiv 2020, arXiv:2010.13908.

- Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-supervised learning: Generative or contrastive. arXiv 2020, arXiv:2006.08218.

- Wanyan, T.; Honarvar, H.; Jaladanki, S.K.; Zang, C.; Naik, N.; Somani, S.; Freitas, J.K.D.; Paranjpe, I.; Vaid, A.; Miotto, R.; et al. Contrastive learning improves critical event prediction in COVID-19 patients. arXiv 2021, arXiv:2101.04013.

- Kostas, D.; Aroca-Ouellette, S.; Rudzicz, F. BENDR: Using transformers and a contrastive self-supervised learning task to learn from massive amounts of EEG data. arXiv 2021, arXiv:2101.12037.

- Everitt, B.S. Cluster Analysis; Edward Arnold: London, UK, 1993.

- Botvinick, M.; Barrett, D.G.T.; Battaglia, P.; de Freitas, N.; Kumaran, D.; Leibo, J.Z.; Lillicrap, T.; Modayil, J.; Mohamed, S.; Rabinowitz, N.C.; et al. Building machines that learn and think for themselves. Behav. Brain Sci. 2017, 40, e255.

- Hassabis, D.; Kumaran, D.; Summerfield, C.; Botvinick, M. Neuroscience-inspired artificial intelligence. Neuron 2017, 95, 245–258.

- Shevlin, H.; Vold, K.; Crosby, M.; Halina, M. The limits of machine intelligence: Despite progress in machine intelligence, artificial general intelligence is still a major challenge. EMBO Rep. 2019, 20.

- Pei, J.; Deng, L.; Song, S.; Zhao, M.; Zhang, Y.; Wu, S.; Wang, G.; Zou, Z.; Wu, Z.; He, W.; et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 2019, 572, 106–111.

- Stanley, K.O.; Clune, J.; Lehman, J.; Miikkulainen, R. Designing neural networks through neuroevolution. Nat. Mach. Intell. 2019, 1, 24–35.

- Zhang, Y.; Qu, P.; Ji, Y.; Zhang, W.; Gao, G.; Wang, G.; Song, S.; Li, G.; Chen, W.; Zheng, W.; et al. A system hierarchy for brain-inspired computing. Nature 2020, 586, 378–384.

- Nadji-Tehrani, M.; Eslami, A. A brain-inspired framework for evolutionary artificial general intelligence. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5257–5271.

- Bjerrum, E.J. SMILES enumeration as data augmentation for neural network modeling of molecules. arXiv 2017, arXiv:1703.07076.

- Sohn, K. Improved deep metric learning with multi-class n-pair loss objective. NIPS 2016, 30, 1857–1865.

- Wu, Z.; Xiong, Y.; Yu, S.; Lin, D. Unsupervised feature learning via non-parametric instance-level discrimination. arXiv 2018, arXiv:1805.01978.

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).