Variety Identification of Orchids Using Fourier Transform Infrared Spectroscopy Combined with Stacked Sparse Auto-Encoder

Abstract

1. Introduction

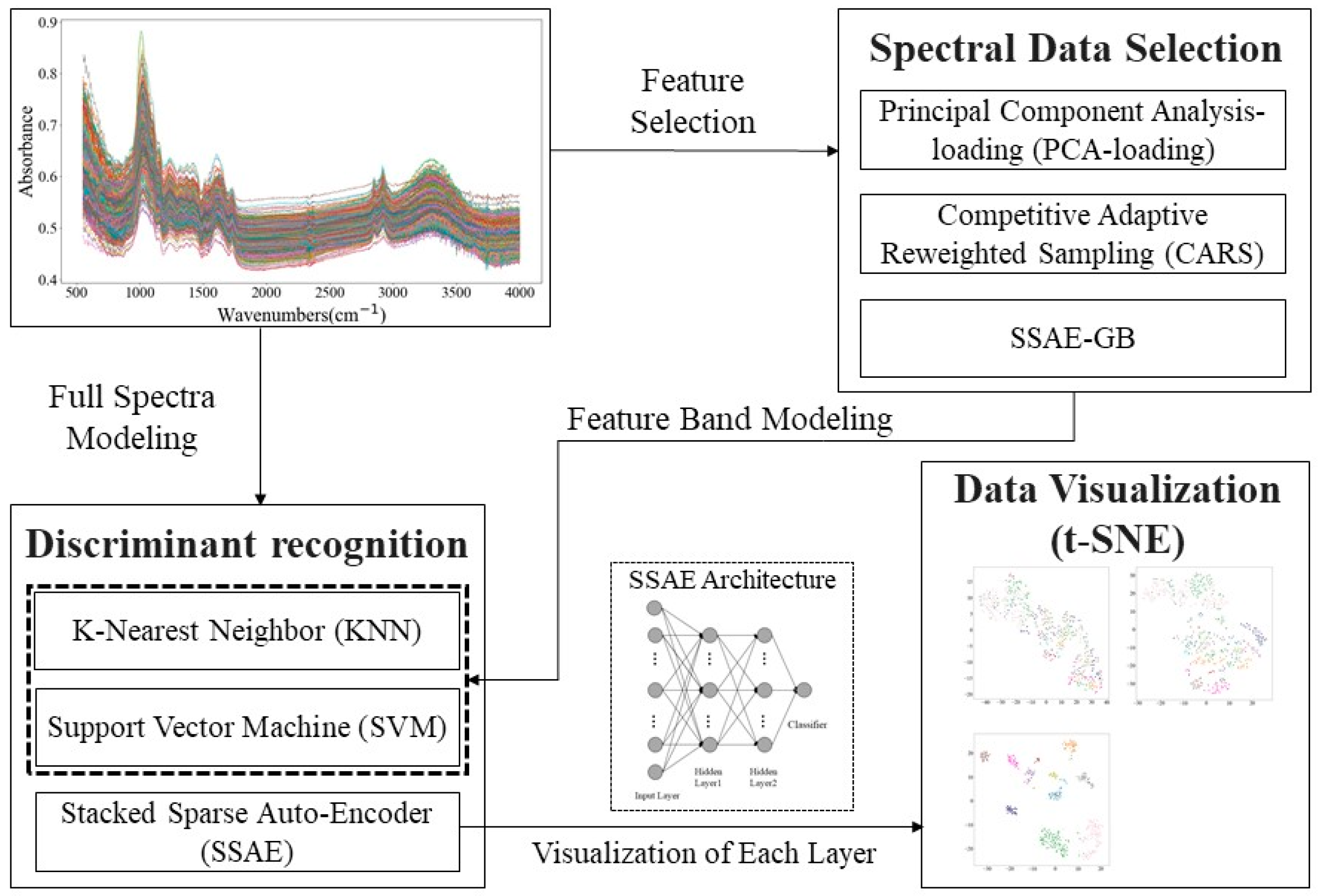

2. Results and Discussion

2.1. Spectral Profiles

2.2. Discriminant Models Based on Full Spectra

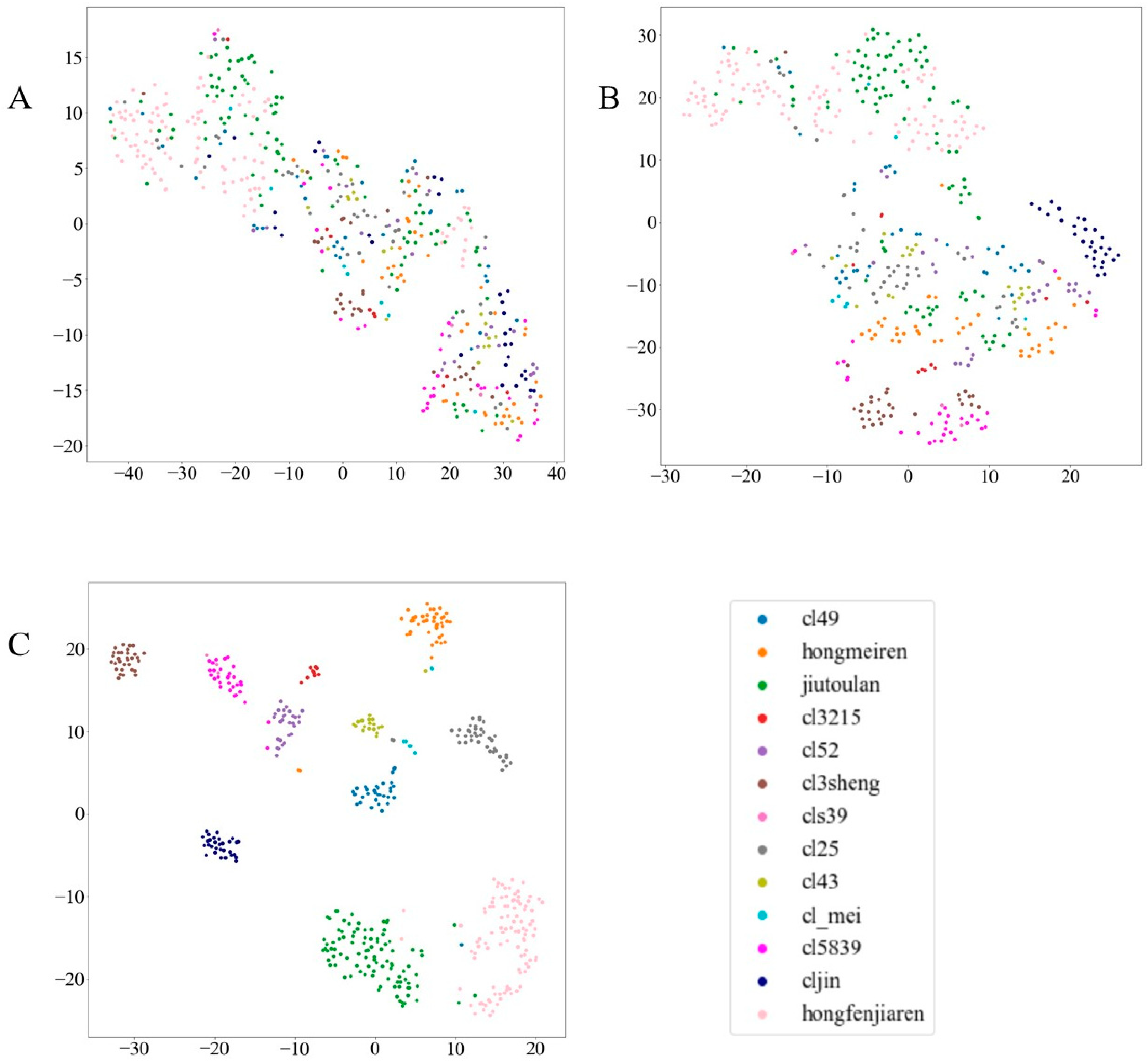

2.3. Feature Visualization with t-SNE

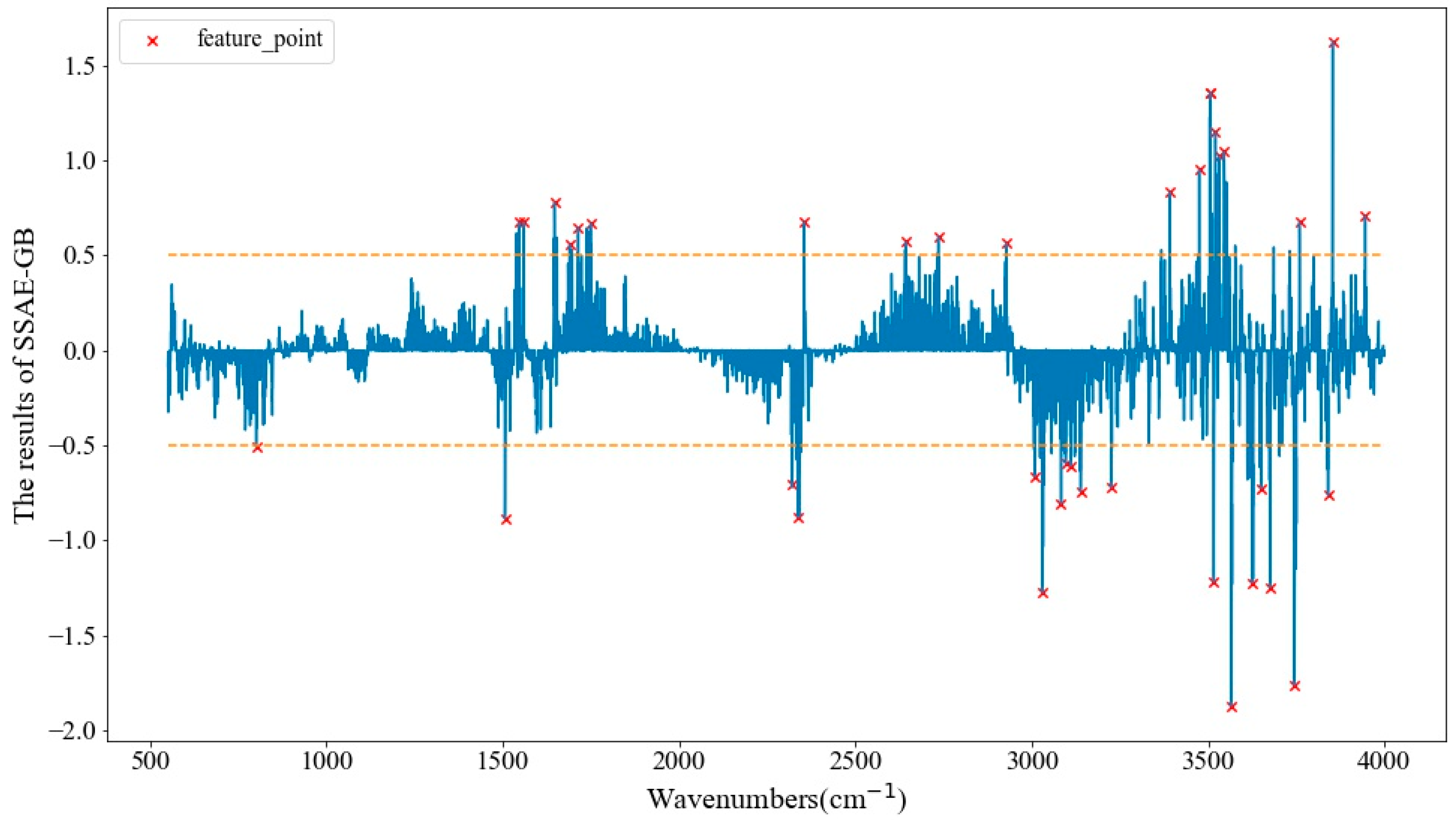
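t-SNE builds a two-dimensional map by matching pairwise similarities between samples. The code below is a minimal sketch of one part of that process. It computes the low-dimensional similarity matrix Q, which is a Student t kernel with one degree of freedom normalized over all pairs of points. Several pieces are left out: the perplexity-calibrated affinities P of the original features, the Kullback-Leibler cost between P and Q, and the gradient-descent updates. The toy coordinates are hypothetical.

```python
def tsne_q_matrix(Y):
    """Low-dimensional affinities q_ij used by t-SNE for embedding points Y.

    q_ij = (1 + ||y_i - y_j||^2)^-1 / sum_{k != l} (1 + ||y_k - y_l||^2)^-1,
    with q_ii = 0.
    """
    n = len(Y)
    # Unnormalized Student t kernel (one degree of freedom) for every pair i != j.
    kernel = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                sq_dist = sum((a - b) ** 2 for a, b in zip(Y[i], Y[j]))
                kernel[i][j] = 1.0 / (1.0 + sq_dist)
    # Normalize over all ordered pairs so that the q_ij sum to 1.
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


# Hypothetical 2-D embedding: two nearby spectra and one distant spectrum.
Y = [(0.0, 0.0), (0.5, 0.0), (5.0, 5.0)]
Q = tsne_q_matrix(Y)
```

Because the kernel has a heavy tail, dissimilar samples can sit far apart in the map without a large penalty. This helps well-separated varieties show up as distinct clusters.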

2.4. Optimal Wavenumber Selection

3. Materials and Methods

3.1. Sample Preparation and FTIR Spectra Acquisition

3.2. Multivariate Data Analysis

3.2.1. K-Nearest Neighbor
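Below is a minimal sketch of a K-nearest-neighbor classifier. It assumes Euclidean distance between spectral vectors and a simple majority vote, with ties going to the nearest tied neighbor. These choices are assumptions, not the study's documented settings. K = 3 matches the value reported for the KNN models in the results tables. The three-point "spectra" and variety labels are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Predict the label of spectrum x by majority vote of its k nearest neighbors."""
    # Euclidean distance from x to every calibration spectrum, nearest first.
    ranked = sorted(
        ((math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    neighbor_labels = [label for _, label in ranked[:k]]
    counts = Counter(neighbor_labels)
    top = max(counts.values())
    # Break ties in favor of the label of the closest tied neighbor.
    for label in neighbor_labels:
        if counts[label] == top:
            return label


# Hypothetical toy spectra (3 absorbance values each) from two varieties.
train_X = [
    (0.10, 0.20, 0.30),
    (0.12, 0.21, 0.29),
    (0.11, 0.19, 0.31),
    (0.80, 0.70, 0.60),
    (0.82, 0.69, 0.61),
]
train_y = ["A", "A", "A", "B", "B"]
print(knn_predict(train_X, train_y, (0.81, 0.70, 0.60)))  # -> B
```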

3.2.2. Support Vector Machine
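The results tables give the SVM parameters as pairs (c, g). This sketch assumes the usual LIBSVM convention: c is the penalty parameter, and g is the width of a radial basis function (RBF) kernel K(x, z) = exp(-g·||x - z||²). The code shows how g enters the decision function of a trained binary SVM. The support vectors, dual coefficients and bias are hypothetical placeholders, not fitted values. The training step, where c bounds each dual coefficient, is not shown.

```python
import math


def rbf_kernel(x, z, g):
    """Radial basis function kernel K(x, z) = exp(-g * ||x - z||^2)."""
    return math.exp(-g * sum((a - b) ** 2 for a, b in zip(x, z)))


def svm_decision(x, support_vectors, signed_alphas, bias, g):
    """Decision value f(x) = sum_i alpha_i * y_i * K(sv_i, x) + b of a binary SVM.

    signed_alphas holds alpha_i * y_i; soft-margin training bounds 0 <= alpha_i <= c,
    which is where the penalty parameter c enters.
    """
    return sum(
        coef * rbf_kernel(sv, x, g)
        for sv, coef in zip(support_vectors, signed_alphas)
    ) + bias


# Hypothetical two-class model; these values are placeholders, not fitted.
g = 0.035                      # kernel width, as reported for the full-spectrum SVM
support_vectors = [(1.0, 2.0), (4.0, 4.0)]
signed_alphas = [1.0, -1.0]    # alpha_i * y_i for a class +1 and a class -1 vector
bias = 0.0
score = svm_decision((1.0, 2.1), support_vectors, signed_alphas, bias, g)
label = 1 if score > 0 else -1
```

For more than two classes, LIBSVM combines binary machines like this one in a one-versus-one scheme. A larger g means each support vector influences only very similar spectra. For that reason, c and g are typically tuned together, for example by a cross-validated grid search.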

3.2.3. Principal Component Analysis Loading
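This is a hedged sketch of loading-based wavenumber selection. It assumes wavenumbers are ranked by the absolute value of their loading on the first principal component, and the top-ranked ones are kept. That is a simplification. Loading-based selection is often done by reading peaks and valleys off several loading curves, and the study may have applied a different rule. To stay dependency-free, PCA is computed by power iteration on the covariance matrix. The toy matrix and wavenumber axis are hypothetical.

```python
import math
import random


def first_pc_loadings(X, iters=200, seed=0):
    """Loadings of the first principal component (power iteration on the covariance)."""
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    centered = [[row[j] - means[j] for j in range(p)] for row in X]
    # Sample covariance matrix between wavenumbers (p x p).
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(p)]
        for i in range(p)
    ]
    rng = random.Random(seed)
    v = [rng.random() for _ in range(p)]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return v


def select_by_loading(X, wavenumbers, n_select):
    """Keep the n_select wavenumbers with the largest |PC1 loading|."""
    loadings = first_pc_loadings(X)
    ranked = sorted(range(len(wavenumbers)), key=lambda j: abs(loadings[j]), reverse=True)
    return sorted(wavenumbers[j] for j in ranked[:n_select])


# Hypothetical data: 4 spectra x 4 wavenumbers; only 2 bands vary between samples.
wavenumbers = [4000, 3000, 2000, 1000]
X = [
    [0.5, 0.1, 0.3, 0.2],
    [0.5, 0.5, 0.3, 0.6],
    [0.5, 0.9, 0.3, 1.0],
    [0.5, 0.3, 0.3, 0.4],
]
selected = select_by_loading(X, wavenumbers, 2)
```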

3.2.4. Competitive Adaptive Reweighted Sampling
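In its original formulation, competitive adaptive reweighted sampling runs N sampling iterations. Each iteration proceeds as follows:

- A PLS model is fitted on a Monte Carlo subset of the calibration samples.
- The absolute regression coefficients serve as variable weights.
- An exponentially decreasing function (EDF) fixes how many variables survive.
- Adaptive reweighted sampling (ARS) draws among the survivors in proportion to weight.

After all iterations, the subset with the lowest cross-validation error is retained. The sketch below covers only the EDF schedule and one EDF+ARS step. It omits the PLS fitting, the Monte Carlo sample split and the cross-validation, and the coefficient magnitudes are supplied by hand. The values p = 1000 and N = 50 are hypothetical.

```python
import math
import random


def edf_ratios(p, n_runs):
    """Exponentially decreasing function of CARS: fraction of variables kept per run.

    r_i = a * exp(-k * i), a = (p/2)**(1/(N-1)), k = ln(p/2)/(N-1), so that
    r_1 = 1 (all p variables) and r_N = 2/p (two variables).
    """
    a = (p / 2) ** (1 / (n_runs - 1))
    k = math.log(p / 2) / (n_runs - 1)
    return [a * math.exp(-k * i) for i in range(1, n_runs + 1)]


def cars_step(active, abs_coefs, n_keep, rng):
    """One CARS elimination step.

    active: indices of the variables still in play; abs_coefs: |PLS regression
    coefficient| of each active variable (assumed to come from a PLS model fitted
    on a Monte Carlo subset of the calibration samples, not shown here).
    """
    # EDF: forcibly keep only the n_keep variables with the largest weights.
    order = sorted(range(len(active)), key=lambda j: abs_coefs[j], reverse=True)[:n_keep]
    kept = [active[j] for j in order]
    weights = [abs_coefs[j] for j in order]
    # ARS: draw n_keep times in proportion to weight; keep the distinct variables.
    drawn = rng.choices(kept, weights=weights, k=n_keep)
    return sorted(set(drawn))


# Hypothetical schedule for p = 1000 wavenumbers and N = 50 sampling runs.
ratios = edf_ratios(1000, 50)
n_vars = [round(r * 1000) for r in ratios]

# Hypothetical step: 4 active wavenumber indices with made-up |coefficients|.
rng = random.Random(0)
subset = cars_step([10, 11, 12, 13], [0.0, 5.0, 0.2, 1.0], 2, rng)
```

The EDF removes many variables in early runs and few in late runs, so the search starts with a coarse elimination and then refines.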

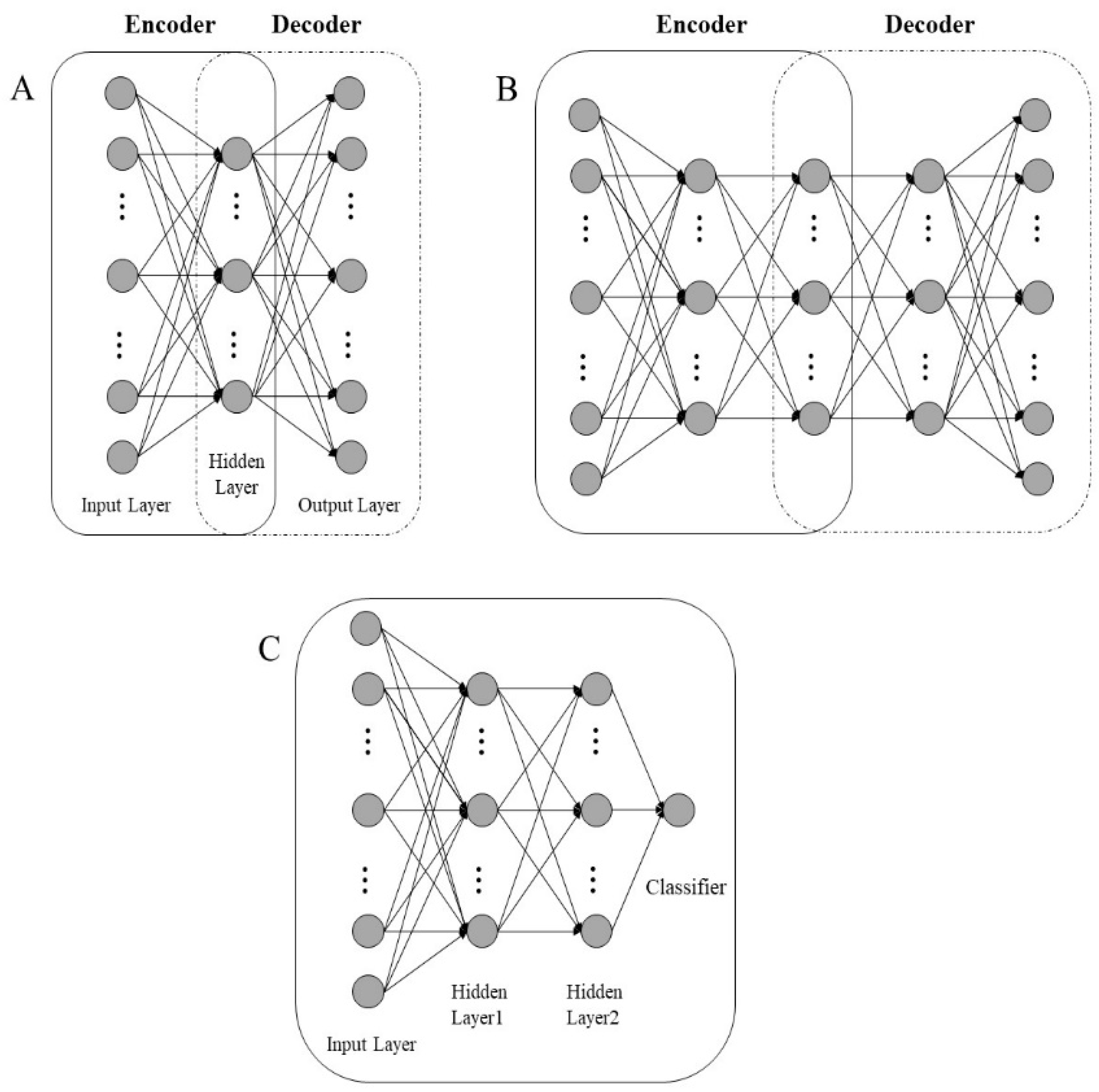

3.2.5. Stacked Sparse Auto-Encoder
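This sketch follows the standard sparse auto-encoder formulation, as in Ng's CS294A lecture notes. Each auto-encoder is trained to reconstruct its input. A penalty β·Σ_j KL(ρ || ρ̂_j) pushes the mean activation ρ̂_j of every hidden unit toward a small target ρ, where KL is the Kullback-Leibler divergence between Bernoulli distributions. Stacking trains such layers greedily, each one on the hidden features of the previous layer. The results table reports (h1, h2) = (2048, 13), presumably the sizes of the two hidden layers. The code computes only the sparsity term for a sigmoid hidden layer. It leaves out the reconstruction error, weight decay, layer-wise training and the classifier on top. The values of ρ and β and the toy weights are hypothetical.

```python
import math


def encode(x, W, b):
    """Sigmoid hidden layer of one auto-encoder: h = sigmoid(W x + b)."""
    return [
        1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + bj)))
        for row, bj in zip(W, b)
    ]


def kl_sparsity_penalty(rho, rho_hat, beta):
    """beta * sum_j KL(rho || rho_hat_j) between Bernoulli means, 0 < rho_hat_j < 1."""
    return beta * sum(
        rho * math.log(rho / r) + (1 - rho) * math.log((1 - rho) / (1 - r))
        for r in rho_hat
    )


# Hypothetical inputs: 2 samples, 2 input features, 2 hidden units.
X = [[0.2, 0.4], [0.6, 0.8]]
W = [[0.0, 0.0], [1.0, -1.0]]
b = [0.0, 0.0]
hidden = [encode(x, W, b) for x in X]
# Average activation of each hidden unit over the samples.
rho_hat = [sum(col) / len(hidden) for col in zip(*hidden)]
penalty = kl_sparsity_penalty(rho=0.05, rho_hat=rho_hat, beta=3.0)
```

The penalty is zero only when every hidden unit's mean activation equals ρ. It grows as units become active on average, which drives most units toward near-zero output for any given spectrum.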

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Sample Availability: Samples of the compounds are available from the authors.

| Model | Parameter <sup>a</sup> | Value | Calibration accuracy (%), 1 | Calibration accuracy (%), 2 | Calibration accuracy (%), 3 | Calibration accuracy (%), mean | Prediction accuracy (%), 1 | Prediction accuracy (%), 2 | Prediction accuracy (%), 3 | Prediction accuracy (%), mean |
|---|---|---|---|---|---|---|---|---|---|---|
| KNN | K | 3 | 78.0 | 81.1 | 81.1 | 80.1 | 61.6 | 60.4 | 47.8 | 56.6 |
| SVM | (c, g) | (256, 0.035) | 100.0 | 100.0 | 100.0 | 100.0 | 94.3 | 92.4 | 91.2 | 92.6 |
| SSAE | (h1, h2) | (2048, 13) | 99.1 | 99.7 | 99.4 | 99.4 | 98.7 | 98.1 | 96.9 | 97.9 |

| Method | SVM parameters <sup>a</sup> (c, g) | SVM calibration mean accuracy (%) | SVM prediction mean accuracy (%) | KNN parameter <sup>a</sup> (K) | KNN calibration mean accuracy (%) | KNN prediction mean accuracy (%) |
|---|---|---|---|---|---|---|
| PCA-loading | (256, 4) | 99.2 | 90.1 | 3 | 77.3 | 57.4 |
| CARS | (256, 0.5) | 99.9 | 95.2 | 3 | 80.1 | 61.4 |
| SSAE-GB | (256, 4) | 99.7 | 94.5 | 3 | 84.3 | 68.5 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, Y.; Chen, Y.; Feng, X.; Yang, X.; Zhang, J.; Qiu, Z.; He, Y. Variety Identification of Orchids Using Fourier Transform Infrared Spectroscopy Combined with Stacked Sparse Auto-Encoder. Molecules 2019, 24, 2506. https://doi.org/10.3390/molecules24132506