Statistical Inference for High-Dimensional Heteroscedastic Partially Single-Index Models

Abstract

1. Introduction

2. Penalized Empirical Likelihood for PLSIM

- (1)

- and , with probability tending to 1;

- (2)

- , where , and G is a matrix with fixed and ,

3. Penalized Empirical Likelihood for PLM and SIM

- (1)

- , with probability tending to 1;

- (2)

- , where , , is a matrix with fixed and stands for convergence in distribution.

- (1)

- , with probability tending to 1;

- (2)

- , where , , and is a matrix with fixed .

4. Simulations

- Step 1: Use the estimation procedure described in Section 2 of Ma and Zhu [6], a relatively simple but inefficient method, to obtain an initial estimator.

- Step 2: Obtain , , , , , and described above using fixed values of .

- Step 3: Obtain the auxiliary random vector .

- Step 4: Use Newton’s method to minimize (9) with respect to for fixed values of .

- Step 5: Use the local quadratic approximation algorithm to minimize (9) with respect to for fixed values of obtained from Step 4.

- Step 6: Iterate Steps 4 and 5 until convergence.
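The alternating structure of Steps 4 to 6 can be illustrated with a minimal sketch. Objective (9) is not reproduced in this text, so the code below swaps in a toy penalized least-squares objective, 0.5‖y − Xa − Zb‖² + n Σ p_τ(|b_j|) with the SCAD penalty of Fan and Li, purely as a stand-in. The function names (`scad_derivative`, `newton_block`, `lqa_block`, `alternate`), the choice of which block is penalized, and the thresholds are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def scad_derivative(t, tau, a=3.7):
    """Derivative of the SCAD penalty for t >= 0 (a = 3.7 is the usual default)."""
    t = np.asarray(t, dtype=float)
    tail = np.maximum(a * tau - t, 0.0) / ((a - 1.0) * tau)
    return tau * ((t <= tau) + tail * (t > tau))

def newton_block(X, r):
    """Newton step for the unpenalized block; exact here because the toy loss is quadratic."""
    return np.linalg.solve(X.T @ X, X.T @ r)

def lqa_block(Z, r, b, tau, n, eps=1e-6):
    """One local quadratic approximation (LQA) update for the penalized block."""
    b_new = np.zeros_like(b)
    active = np.abs(b) > eps              # coefficients shrunk below eps are set to zero
    if not active.any():
        return b_new
    Za = Z[:, active]
    # LQA replaces p(|b_j|) by a quadratic with curvature p'(|b_j|) / |b_j|.
    w = scad_derivative(np.abs(b[active]), tau) / np.abs(b[active])
    b_new[active] = np.linalg.solve(Za.T @ Za + n * np.diag(w), Za.T @ r)
    return b_new

def alternate(X, Z, y, tau, max_iter=200, tol=1e-8):
    """Alternate the two block updates until the iterates stop changing."""
    n = len(y)
    a = np.zeros(X.shape[1])
    b = np.linalg.lstsq(Z, y, rcond=None)[0]            # crude initial estimator
    for _ in range(max_iter):
        a_new = newton_block(X, y - Z @ b)              # analogue of Step 4
        b_new = lqa_block(Z, y - X @ a_new, b, tau, n)  # analogue of Step 5
        change = max(np.max(np.abs(a_new - a)), np.max(np.abs(b_new - b)))
        a, b = a_new, b_new
        if change < tol:                                # analogue of Step 6
            break
    return a, b
```

In this toy setting, coefficients whose LQA iterates fall below `eps` are fixed at zero, which is how the approximation produces exact sparsity; large coefficients receive zero SCAD weight and are left unshrunk.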

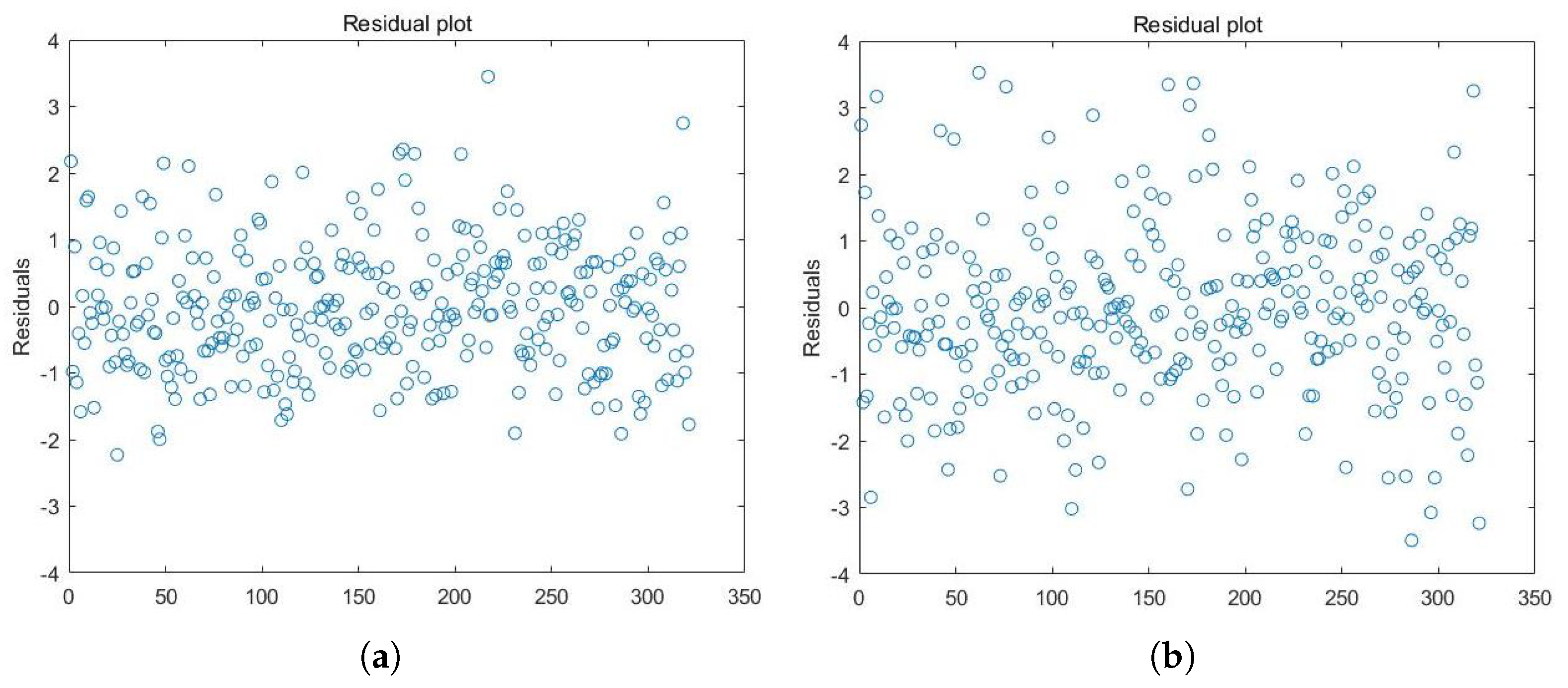

5. Real Data Application

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

- (1)

- (2)

References

- Carroll, R.; Fan, J.; Gijbels, I.; Wand, M.P. Generalized partially linear single-index models. J. Am. Stat. Assoc. 1997, 92, 477–489.

- Yu, Y.; Ruppert, D. Penalized spline estimation for partially linear single-index models. J. Am. Stat. Assoc. 2002, 97, 1042–1054.

- Zhu, L.X.; Xue, L.G. Empirical likelihood confidence regions in a partially linear single-index model. J. R. Stat. Soc. Ser. B 2006, 68, 549–570.

- Xia, Y.; Härdle, W. Semi-parametric estimation of partially linear single-index models. J. Multivar. Anal. 2006, 97, 1162–1184.

- Liang, H.; Xia, L.; Li, R.; Tsai, C.L. Estimation and testing for partially linear single-index models. Ann. Stat. 2010, 38, 3811–3836.

- Ma, Y.; Zhu, L.P. Doubly robust and efficient estimators for heteroscedastic partially linear single-index models allowing high dimensional covariates. J. R. Stat. Soc. Ser. B 2013, 75, 305–322.

- Fang, J.L.; Liu, W.R.; Lu, X.W. Empirical likelihood for heteroscedastic partially linear single-index models with growing dimensional data. Metrika 2018, 81, 255–281.

- Hao, C.; Yin, X. A Normality Test for High-dimensional Data Based on the Nearest Neighbor Approach. J. Am. Stat. Assoc. 2023, 118, 719–731.

- Liu, B.; Zhang, Q.; Xue, L.; Song, P.; Kang, J. Robust High-Dimensional Regression with Coefficient Thresholding and its Application to Imaging Data Analysis. J. Am. Stat. Assoc. 2024, 119, 715–729.

- Breiman, L. Heuristics of instability and stabilization in model selection. Ann. Stat. 1996, 24, 2350–2383.

- Tibshirani, R. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Xie, H.; Huang, J. SCAD-penalized regression in high-dimensional partially linear models. Ann. Stat. 2009, 37, 673–696.

- Wang, T.; Zhu, L.X. Consistent Model Selection and Estimation in a General Single-Index Model with “Large p and Small n”; Technical Report; Department of Mathematics, Hong Kong Baptist University: Hong Kong, China, 2011.

- Zhang, J.; Wang, T.; Zhu, L.X.; Liang, H. A dimension reduction based approach for estimation and variable selection in partially linear single-index models with high-dimensional covariates. Electron. J. Stat. 2012, 6, 2235–2273.

- Lai, P.; Wang, Q.H.; Zhou, X.H. Variable selection and semiparametric efficient estimation for the heteroscedastic partially linear single-index model. Comput. Stat. Data Anal. 2014, 70, 241–256.

- Owen, A. Empirical likelihood ratio confidence intervals for a single function. Biometrika 1988, 75, 237–249.

- Owen, A. Empirical likelihood for linear models. Ann. Stat. 1991, 19, 1725–1747.

- Kolaczyk, E.D. Empirical likelihood for generalized linear models. Stat. Sinica 1994, 4, 199–218.

- Lu, X.W. Empirical likelihood for heteroscedastic partially linear models. J. Multivar. Anal. 2009, 100, 387–395.

- Xue, L.; Zhu, L. Empirical likelihood for single-index models. J. Multivar. Anal. 2006, 97, 1295–1312.

- Matsushita, Y.; Otsu, T. Empirical likelihood for network data. J. Am. Stat. Assoc. 2023, 119, 2117–2128.

- Chen, S.; Peng, L.; Qin, Y. Effects of data dimension on empirical likelihood. Biometrika 2009, 96, 712–722.

- Tang, C.; Leng, C. Penalized high-dimensional empirical likelihood. Biometrika 2010, 97, 905–920.

- Leng, C.; Tang, C. Penalized empirical likelihood and growing dimensional general estimating equations. Biometrika 2012, 99, 706–716.

- Donoho, D.; Johnstone, I. Ideal spatial adaptation by wavelet shrinkage. Biometrika 1994, 81, 425–455.

- Hoerl, A.; Kennard, R. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67.

- Fan, J.Q.; Lv, J.C. Sure independence screening for ultrahigh dimensional feature space. J. R. Stat. Soc. Ser. B 2008, 70, 894–911.

- Hammer, S.; Katzenstein, D.; Hughes, M.; Gundaker, H.; Schooley, R.; Haubrich, R.; Henry, W.; Lederman, M.; Phair, J.; Niu, M.; et al. A trial comparing nucleoside monotherapy with combination therapy in HIV-infected adults with CD4 cell counts from 200 to 500 per cubic millimeter. N. Engl. J. Med. 1996, 335, 1081–1089.

- Lai, P.; Wang, Q. Semiparametric efficient estimation for partially linear single-index models with responses missing at random. J. Multivar. Anal. 2014, 128, 33–50.

- Zhu, L.X.; Fang, K.T. Asymptotics for kernel estimate of sliced inverse regression. Ann. Stat. 1996, 24, 1053–1068.

- Serfling, R.J. Approximation Theorems of Mathematical Statistics; John Wiley: New York, NY, USA, 1980.

- Owen, A. Empirical Likelihood; Chapman and Hall-CRC: New York, NY, USA, 2001.

| Dimensions | n | Kernel Function | Mean Count of Zero Coefficients (Correct) | Mean Count of Zero Coefficients (Incorrect) |
|---|---|---|---|---|
| (10,10) | 50 | Epanechnikov | (4.47 [55.9%], 3.82 [54.6%]) | (2.51, 3.24) |
| | | Cosine | (4.35 [54.4%], 3.69 [52.7%]) | (2.38, 3.47) |
| (10,10) | 100 | Epanechnikov | (6.93 [86.6%], 5.63 [80.4%]) | (0.52, 0.76) |
| | | Cosine | (6.86 [85.8%], 5.58 [79.7%]) | (0.55, 0.74) |
| (10,10) | 200 | Epanechnikov | (7.32 [91.5%], 6.27 [89.5%]) | (0.34, 0.51) |
| | | Cosine | (7.29 [91.1%], 6.24 [89.1%]) | (0.37, 0.58) |
| (20,20) | 50 | Epanechnikov | (9.71 [53.9%], 8.95 [52.6%]) | (4.83, 5.95) |
| | | Cosine | (9.58 [53.2%], 8.79 [51.7%]) | (4.92, 6.14) |
| (20,20) | 100 | Epanechnikov | (12.69 [70.5%], 11.81 [69.4%]) | (3.58, 5.32) |
| | | Cosine | (12.66 [70.3%], 11.67 [68.6%]) | (3.71, 5.45) |
| (20,20) | 200 | Epanechnikov | (15.81 [87.8%], 14.71 [86.5%]) | (0.42, 0.87) |
| | | Cosine | (15.28 [84.9%], 14.56 [85.6%]) | (0.45, 0.93) |
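The bracketed percentages in the table above appear to be the mean count of correctly identified zeros divided by the number of truly zero coefficients. A short check, assuming 8 and 7 true zeros for dimensions (10,10) and 18 and 17 for (20,20), is sketched below. These counts are inferred from the reported numbers and are not stated in this text, and the helper name `percent_of_true_zeros` is hypothetical.

```python
def percent_of_true_zeros(mean_count, n_true_zeros):
    """Mean number of correctly zeroed coefficients as a percentage of the true zeros."""
    return 100.0 * mean_count / n_true_zeros

# (mean count, ASSUMED number of true zeros, percentage reported in the table)
rows = [
    (4.47, 8, 55.9),    # (10,10), n = 50, Epanechnikov, first component
    (3.82, 7, 54.6),    # (10,10), n = 50, Epanechnikov, second component
    (9.71, 18, 53.9),   # (20,20), n = 50, Epanechnikov, first component
    (8.95, 17, 52.6),   # (20,20), n = 50, Epanechnikov, second component
]

# Largest absolute gap between the recomputed and the reported percentage.
max_gap = max(abs(percent_of_true_zeros(m, k) - p) for m, k, p in rows)
```

Under these assumed counts the recomputed values agree with the reported percentages to within rounding for the rows checked here.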

| Dimensions | n | Kernel Function | | | | |
|---|---|---|---|---|---|---|
| (10,10) | 50 | Epanechnikov | 4.35 (2.616) | 2.74 (1.738) | 3.91 (2.237) | −4.96 (3.521) |
| | | Cosine | 4.27 (2.853) | 2.59 (1.802) | 4.14 (2.394) | −4.58 (3.475) |
| (10,10) | 100 | Epanechnikov | 1.94 (0.128) | 0.96 (0.106) | 1.56 (0.132) | −1.93 (0.103) |
| | | Cosine | 2.06 (0.131) | 0.94 (0.114) | 1.43 (0.146) | −2.09 (0.115) |
| (10,10) | 200 | Epanechnikov | 1.95 (0.087) | 1.03 (0.082) | 1.56 (0.105) | −2.04 (0.103) |
| | | Cosine | 2.02 (0.094) | 1.06 (0.091) | 1.43 (0.113) | −2.07 (0.107) |
| (20,20) | 50 | Epanechnikov | 4.92 (3.587) | 3.17 (3.264) | 5.89 (4.316) | −6.83 (4.763) |
| | | Cosine | 4.53 (3.951) | 2.96 (3.728) | 6.34 (4.512) | −6.71 (4.625) |
| (20,20) | 100 | Epanechnikov | 2.97 (1.438) | 1.53 (0.896) | 2.16 (1.048) | −2.79 (0.951) |
| | | Cosine | 3.18 (1.502) | 1.61 (0.925) | 2.12 (1.073) | −2.85 (1.027) |
| (20,20) | 200 | Epanechnikov | 2.09 (0.135) | 1.05 (0.107) | 1.54 (0.139) | −1.96 (0.114) |
| | | Cosine | 2.12 (0.139) | 1.07 (0.119) | 1.43 (0.147) | −2.08 (0.121) |
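The simulations compare two kernel functions, Epanechnikov and Cosine. The sketch below gives their standard textbook forms on [−1, 1], together with a simple Nadaraya-Watson smoother to show how a kernel enters a nonparametric estimate. The scaling used in the paper is not shown in this text, so these definitions are an assumption, and `kernel_smooth` is a hypothetical helper rather than the authors' estimator.

```python
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel: 0.75 * (1 - u^2) on [-1, 1], zero elsewhere."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0)

def cosine(u):
    """Cosine kernel: (pi / 4) * cos(pi * u / 2) on [-1, 1], zero elsewhere."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, (np.pi / 4.0) * np.cos(np.pi * u / 2.0), 0.0)

def kernel_smooth(x0, x, y, h, kernel=epanechnikov):
    """Nadaraya-Watson estimate of E[y | x = x0] with bandwidth h.

    Assumes at least one observation falls within h of x0; otherwise the
    weights sum to zero and the ratio is undefined.
    """
    w = kernel((np.asarray(x, dtype=float) - x0) / h)
    return np.sum(w * np.asarray(y, dtype=float)) / np.sum(w)
```

Both kernels are symmetric, compactly supported, and integrate to one, which is consistent with the closely matching results reported for the two choices.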

| Dimensions | n | Method | Mean Count of Zero Coefficients (Correct) | Mean Count of Zero Coefficients (Incorrect) |
|---|---|---|---|---|
| (20,20) | 200 | PEL | (15.21 [84.5%], 13.45 [84.1%]) | (0.37, 1.03) |
| | | PVS | (14.75 [81.9%], 13.17 [82.3%]) | (0.45, 1.26) |
| | 400 | PEL | (15.89 [88.3%], 14.02 [87.6%]) | (0.35, 0.85) |
| | | PVS | (15.46 [85.8%], 13.74 [85.9%]) | (0.41, 0.92) |
| (20,30) | 200 | PEL | (15.24 [84.6%], 22.56 [86.7%]) | (0.39, 1.27) |
| | | PVS | (14.98 [83.2%], 22.12 [85.1%]) | (0.38, 1.35) |
| | 400 | PEL | (15.79 [87.7%], 23.31 [89.7%]) | (0.34, 1.14) |
| | | PVS | (15.13 [84.1%], 22.85 [87.9%]) | (0.43, 1.28) |
| (30,20) | 200 | PEL | (24.38 [87.1%], 13.51 [84.4%]) | (0.38, 1.24) |
| | | PVS | (23.85 [85.2%], 12.97 [81.1%]) | (0.44, 1.31) |
| | 400 | PEL | (25.14 [89.7%], 14.13 [88.3%]) | (0.33, 1.17) |
| | | PVS | (24.73 [88.3%], 13.54 [84.6%]) | (0.39, 1.26) |
| (30,30) | 200 | PEL | (24.25 [86.6%], 23.36 [89.8%]) | (0.40, 1.31) |
| | | PVS | (23.72 [84.7%], 22.68 [87.2%]) | (0.47, 1.38) |
| | 400 | PEL | (25.27 [90.0%], 23.15 [89.1%]) | (0.38, 1.19) |
| | | PVS | (24.54 [87.6%], 22.82 [87.7%]) | (0.45, 1.25) |

| Dimensions | n | Method | | | | | |
|---|---|---|---|---|---|---|---|
| (20,20) | 200 | PEL | 1.05 (0.132) | −1.04 (0.101) | 1.06 (0.143) | 2.07 (0.127) | 0.95 (0.106) |
| | | PVS | 1.12 (0.145) | −1.08 (0.134) | 0.93 (0.175) | 2.11 (0.142) | 1.08 (0.131) |
| | 400 | PEL | 0.98 (0.102) | −1.03 (0.091) | 1.04 (0.126) | 1.98 (0.108) | 1.06 (0.113) |
| | | PVS | 1.07 (0.134) | −1.06 (0.114) | 0.96 (0.135) | 2.08 (0.125) | 1.05 (0.117) |
| (20,30) | 200 | PEL | 0.94 (0.137) | −1.05 (0.109) | 1.10 (0.128) | 2.06 (0.121) | 1.07 (0.149) |
| | | PVS | 1.86 (0.153) | −1.12 (0.142) | 0.92 (0.136) | 1.96 (0.147) | 1.13 (0.151) |
| | 400 | PEL | 0.96 (0.125) | −1.04 (0.098) | 1.08 (0.124) | 2.03 (0.119) | 1.09 (0.128) |
| | | PVS | 1.91 (0.138) | −1.09 (0.117) | 0.92 (0.225) | 1.95 (0.124) | 1.92 (0.137) |
| (30,20) | 200 | PEL | 1.09 (0.141) | −1.13 (0.135) | 1.07 (0.127) | 1.94 (0.130) | 1.08 (0.143) |
| | | PVS | 0.89 (0.159) | −1.08 (0.162) | 1.09 (0.143) | 1.91 (0.129) | 1.13 (0.156) |
| | 400 | PEL | 1.06 (0.098) | −0.96 (0.107) | 1.03 (0.126) | 2.05 (0.115) | 1.04 (0.112) |
| | | PVS | 1.86 (0.116) | −1.58 (0.154) | 0.94 (0.139) | 1.97 (0.129) | 1.07 (0.123) |
| (30,30) | 200 | PEL | 1.14 (0.129) | −1.13 (0.138) | 0.91 (0.142) | 2.09 (0.138) | 1.12 (0.128) |
| | | PVS | 0.79 (0.148) | −1.18 (0.145) | 0.88 (0.157) | 1.90 (0.141) | 0.93 (0.135) |
| | 400 | PEL | 1.09 (0.114) | −1.07 (0.126) | 0.93 (0.119) | 2.10 (0.128) | 1.09 (0.119) |
| | | PVS | 1.15 (0.123) | −1.15 (0.131) | 1.05 (0.125) | 1.89 (0.133) | 0.92 (0.121) |

| Variable | PEL | PVS |
|---|---|---|
| homo | 0.193 ([0.065, 0.317]) | 0.131 ([0.001, 0.262]) |
| str2 | −0.322 ([−0.517, −0.016]) | −0.045 ([−0.324, 0.234]) |
| age | 0.528 ([0.314, 0.735]) | 0.153 ([−0.118, 0.424]) |
| cd820 | −0.691 ([−0.893, −0.478]) | −0.208 ([−0.413, 0.003]) |
| cd40 | 0.255 ([0.009, 0.452]) | 0.446 ([0.134, 0.758]) |
| cd420 | 0.423 ([0.126, 0.674]) | 0.857 ([0.536, 1.178]) |
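One way to read the table above is to check which bracketed intervals exclude zero. The sketch below does this, assuming the brackets are confidence intervals for the coefficients (their nominal level is not stated in this text). Variable names are written in lowercase, endpoints are listed in increasing order, and the helper `excludes_zero` is hypothetical.

```python
# Interval endpoints copied from the table; keys lowercased for consistency.
intervals = {
    "homo":  {"PEL": (0.065, 0.317),   "PVS": (0.001, 0.262)},
    "str2":  {"PEL": (-0.517, -0.016), "PVS": (-0.324, 0.234)},
    "age":   {"PEL": (0.314, 0.735),   "PVS": (-0.118, 0.424)},
    "cd820": {"PEL": (-0.893, -0.478), "PVS": (-0.413, 0.003)},
    "cd40":  {"PEL": (0.009, 0.452),   "PVS": (0.134, 0.758)},
    "cd420": {"PEL": (0.126, 0.674),   "PVS": (0.536, 1.178)},
}

def excludes_zero(interval):
    """True when both endpoints lie strictly on the same side of zero."""
    lo, hi = sorted(interval)
    return lo > 0.0 or hi < 0.0

# For each method, list the variables whose interval does not cover zero.
flagged = {
    method: [name for name, row in intervals.items() if excludes_zero(row[method])]
    for method in ("PEL", "PVS")
}
```

Under this reading, all six PEL intervals exclude zero, while the PVS intervals for str2, age, and cd820 cover zero.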

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fang, J.; Tian, Z. Statistical Inference for High-Dimensional Heteroscedastic Partially Single-Index Models. Entropy 2025, 27, 964. https://doi.org/10.3390/e27090964
