3.1. Preliminaries

The Structured State Space Sequence (S4) model leverages a continuous-time state space framework to process discrete data, mapping the input sequence $x(t) \in \mathbb{R}$ to the output sequence $y(t) \in \mathbb{R}$ through an implicit latent state $h(t) \in \mathbb{R}^{N}$, where $N$ denotes the state space dimension. The mathematical formulation is expressed as follows:

$$h'(t) = A h(t) + B x(t), \qquad y(t) = C h(t) + D x(t) \tag{1}$$

The state transition matrix $A \in \mathbb{R}^{N \times N}$ governs the evolution of the latent state, integrating historical information to maintain system memory. The input matrix $B \in \mathbb{R}^{N \times 1}$ weights the input signal to regulate its influence on latent state updates. The output matrix $C \in \mathbb{R}^{1 \times N}$ transforms latent states into observable outputs, while $D \in \mathbb{R}$ provides a direct input-to-output connection that preserves transient signal characteristics.

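To integrate the model into practical deep learning algorithms, the continuous parameters are then discretized via the zero-order hold (ZOH) rule. The definition is as follows:

$$\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right) \cdot \Delta B \tag{2}$$

where $\Delta$ represents the learnable time scale parameter for converting the continuous parameters $A$ and $B$ into the discrete parameters $\bar{A}$ and $\bar{B}$. Then Equation (1) can be rewritten as follows:

$$h_t = \bar{A} h_{t-1} + \bar{B} x_t, \qquad y_t = C h_t + D x_t \tag{3}$$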

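In addition, Equation (3) can also be transformed into convolution form:

$$\bar{K} = \left(C\bar{B},\; C\bar{A}\bar{B},\; \ldots,\; C\bar{A}^{L-1}\bar{B}\right), \qquad y = x * \bar{K} + D x \tag{4}$$

Here, $\bar{K} \in \mathbb{R}^{L}$ is the structured convolution kernel, $L$ is the length of the input sequence, and $*$ represents the convolution operation.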
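The equivalence between the recurrent and convolutional views can be checked numerically. The following is a minimal sketch rather than the S4 implementation: it assumes a diagonal state matrix (so the matrix exponential in the ZOH rule reduces to an elementwise exponential), a scalar input channel, and toy parameter values chosen only for illustration. All function names are hypothetical.

```python
import math

def zoh_discretize(a, b, delta):
    """ZOH rule for a diagonal state matrix; a and b are length-N lists, a[i] != 0."""
    a_bar = [math.exp(delta * ai) for ai in a]
    # (delta*a)^-1 (exp(delta*a) - 1) * delta*b simplifies to (exp(delta*a) - 1) / a * b
    b_bar = [(abi - 1.0) / ai * bi for abi, ai, bi in zip(a_bar, a, b)]
    return a_bar, b_bar

def ssm_recurrent(a_bar, b_bar, c, d, x):
    """Recurrent form: h_t = A_bar h_{t-1} + B_bar x_t, y_t = C h_t + D x_t."""
    h = [0.0] * len(a_bar)
    y = []
    for xt in x:
        h = [ab * hi + bb * xt for ab, bb, hi in zip(a_bar, b_bar, h)]
        y.append(sum(ci * hi for ci, hi in zip(c, h)) + d * xt)
    return y

def ssm_kernel(a_bar, b_bar, c, length):
    """Kernel entries K_bar[k] = C A_bar^k B_bar for k = 0 .. length-1."""
    return [sum(ci * (ab ** k) * bb for ci, ab, bb in zip(c, a_bar, b_bar))
            for k in range(length)]

def ssm_convolutional(a_bar, b_bar, c, d, x):
    """Convolutional form: causal convolution of x with K_bar plus the skip term D x."""
    k = ssm_kernel(a_bar, b_bar, c, len(x))
    return [sum(k[j] * x[t - j] for j in range(t + 1)) + d * x[t]
            for t in range(len(x))]

# Toy parameters (assumed values, N = 3, sequence length L = 6).
a = [-1.0, -0.5, -2.0]
b = [1.0, 0.5, -1.0]
c = [0.3, -0.7, 1.1]
d = 0.2
x = [1.0, -2.0, 0.5, 3.0, 0.0, -1.0]

a_bar, b_bar = zoh_discretize(a, b, delta=0.1)
y_rec = ssm_recurrent(a_bar, b_bar, c, d, x)
y_conv = ssm_convolutional(a_bar, b_bar, c, d, x)
```

Both functions produce the same output up to floating-point error; the recurrent form costs one state update per step, whereas the convolutional form can be computed for the whole sequence at once.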

S4 keeps its parameters fixed across time steps, which is what allows the recurrence to be computed as a convolution. Its successor S6 (Mamba) instead makes the parameters $B$, $C$ and $\Delta$ input-dependent, enabling context-aware processing tailored to varying input characteristics.

3.2. Overall Architecture

As illustrated in Figure 1, the MSS-Mamba architecture comprises four core components: shallow feature extraction, continuous spectral–spatial scan (CS3), multi-scale information fusion (MIF) and high-quality reconstruction. The network takes a single RGB image as input. During shallow feature extraction, a 2D convolution first extracts low-level feature maps of size $C \times H \times W$, where $C$, $H$ and $W$ denote channel depth, height and width.

The shallow features are subsequently fed into the CS3 module to generate sequential representations, which encode rich spatial–spectral correlations for robust multi-scale feature extraction. These sequences are then processed by the MIF module to learn high-level discriminative features. The entire process can be described as follows:

CS3 is divided into two scanning modes: BRC-S and BCR-S. Finally, the high-quality reconstruction stage synthesizes the learned features into high-fidelity hyperspectral images:

3.3. Continuous Spectral–Spatial Scan

The original Mamba demonstrates remarkable advantages in long-sequence modeling tasks, particularly in establishing a global receptive field and learning long-range dependencies. However, its application to image processing requires specialized scanning strategies to transform multidimensional data into 1D sequences. Existing vision-oriented scanning methods primarily focus on spatial dimensions and adapt poorly when extended to 3D hyperspectral data, which is characterized by intricate spatial–spectral interdependencies. To address this, we propose CS3, a novel technique that generates sequences rich in spatial–spectral information to accommodate the complexity of 3D image data while preserving cross-dimensional correlations.

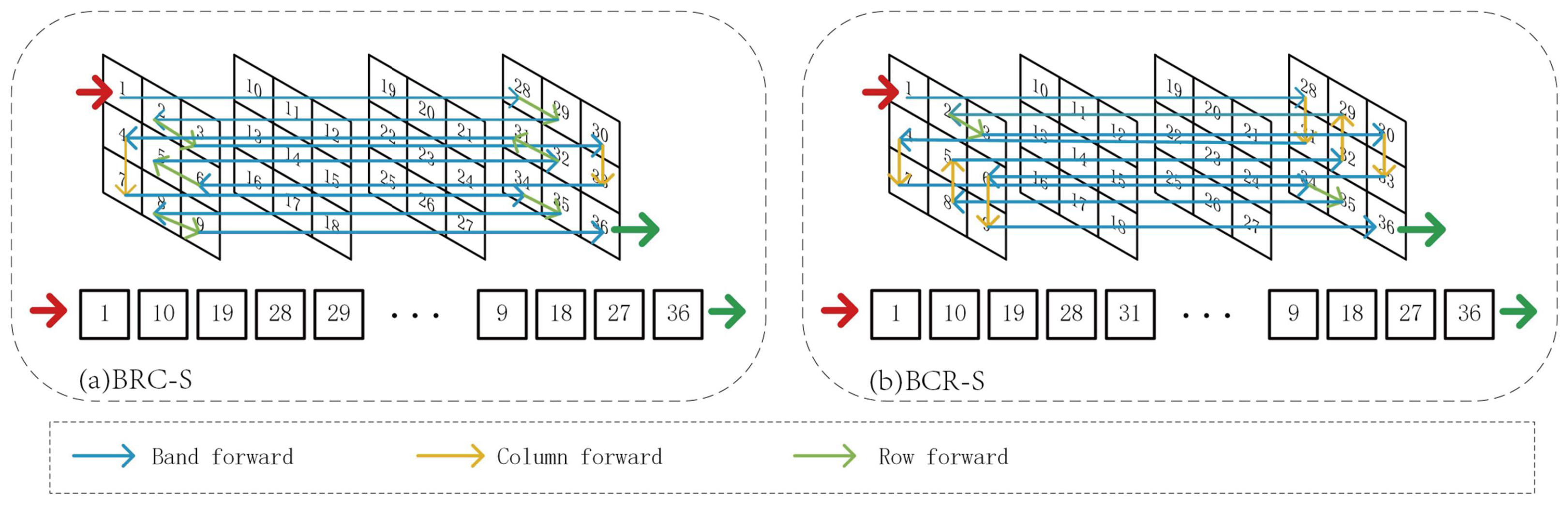

As illustrated in Figure 2, the CS3 strategy unfolds 3D feature maps in a pixel-wise manner, where each pixel has dependency relationships with surrounding pixels across rows, columns and bands. Taking BRC-S as an example, the input 3D feature map undergoes dimensional rearrangement and is then scanned into a sequence. This process sequentially scans each column following band and row orders. Specifically, BRC-S first scans band-row planes before proceeding to column traversal, as visualized in Figure 2a. The generated sequences preserve inter-pixel dependencies through tail-to-tail or head-to-head concatenation, where adjacent elements in the sequence maintain spatial–spectral correlations.

Mamba’s unidirectional recurrent processing propagates dependencies solely from preceding tokens, potentially placing spatially proximate pixels at distant sequence positions and causing local information degradation. To mitigate this, as visualized in Figure 2b, BCR-S introduces band-column-row scanning to create complementary sequences that enhance long-range modeling diversity. Collectively, CS3 encodes cross-dimensional relationships into sequences through dimensional permutations, exploiting spatial–spectral synergies.
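The two scan orders can be illustrated with a small index generator. This is a sketch under stated assumptions, not the authors' implementation: it assumes a feature cube indexed as (band, row, column), and it interprets "continuous" as a serpentine traversal in which each run reverses direction so that consecutive sequence elements are always neighbours in the cube. The name `continuous_scan` and the toy shape are hypothetical.

```python
def continuous_scan(shape, axes_fast_to_slow):
    """Visit every index of a 3D grid so that consecutive indices are neighbours.

    shape: sizes along the three axes.
    axes_fast_to_slow: axis numbers ordered from fastest to slowest varying.
    """
    fast, mid, slow = axes_fast_to_slow
    path = []
    fast_forward = True
    mid_forward = True
    for s in range(shape[slow]):
        mids = range(shape[mid]) if mid_forward else range(shape[mid] - 1, -1, -1)
        for m in mids:
            fasts = range(shape[fast]) if fast_forward else range(shape[fast] - 1, -1, -1)
            for f in fasts:
                idx = [0, 0, 0]
                idx[fast], idx[mid], idx[slow] = f, m, s
                path.append(tuple(idx))
            fast_forward = not fast_forward  # reverse direction for the next run
        mid_forward = not mid_forward        # reverse direction for the next plane
    return path

def flatten(cube, path):
    """Read a nested-list cube[band][row][col] along the given path."""
    return [cube[b][r][c] for b, r, c in path]

BAND, ROW, COL = 0, 1, 2
shape = (4, 3, 2)  # (bands, rows, columns); toy sizes for illustration

# BRC-S: bands vary fastest, then rows, then columns.
brc_path = continuous_scan(shape, (BAND, ROW, COL))
# BCR-S: bands vary fastest, then columns, then rows.
bcr_path = continuous_scan(shape, (BAND, COL, ROW))
```

In both paths every pair of consecutive indices differs by exactly one step along a single axis, which is the adjacency property the tail-to-tail and head-to-head concatenation is meant to preserve. The two orders differ in which spatial neighbours end up close together in the sequence.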

3.4. Multi-Scale Information Fusion

While Transformer-based multi-scale learning frameworks improve efficiency through image patch partitioning, they inherently suffer from two critical limitations: inadequate interaction between global and local contextual features [42], and persistent incompatibility between global receptive fields and computational efficiency. Addressing these challenges, our Multi-scale Information Fusion (MIF) block introduces a sequence tokenization approach that performs multi-scale slicing operations prior to feeding scanned sequences into Mamba. Inspired by temporal sequence modeling principles in Mamba, this strategy processes hierarchical visual patterns through adaptive window slicing while preserving sequence continuity. The proposed method significantly enhances cross-scale feature interactions by jointly optimizing multi-granularity pattern extraction and sequence dependency propagation, thereby achieving synergistic integration of global contextual awareness and local detail preservation within an efficient computational paradigm.

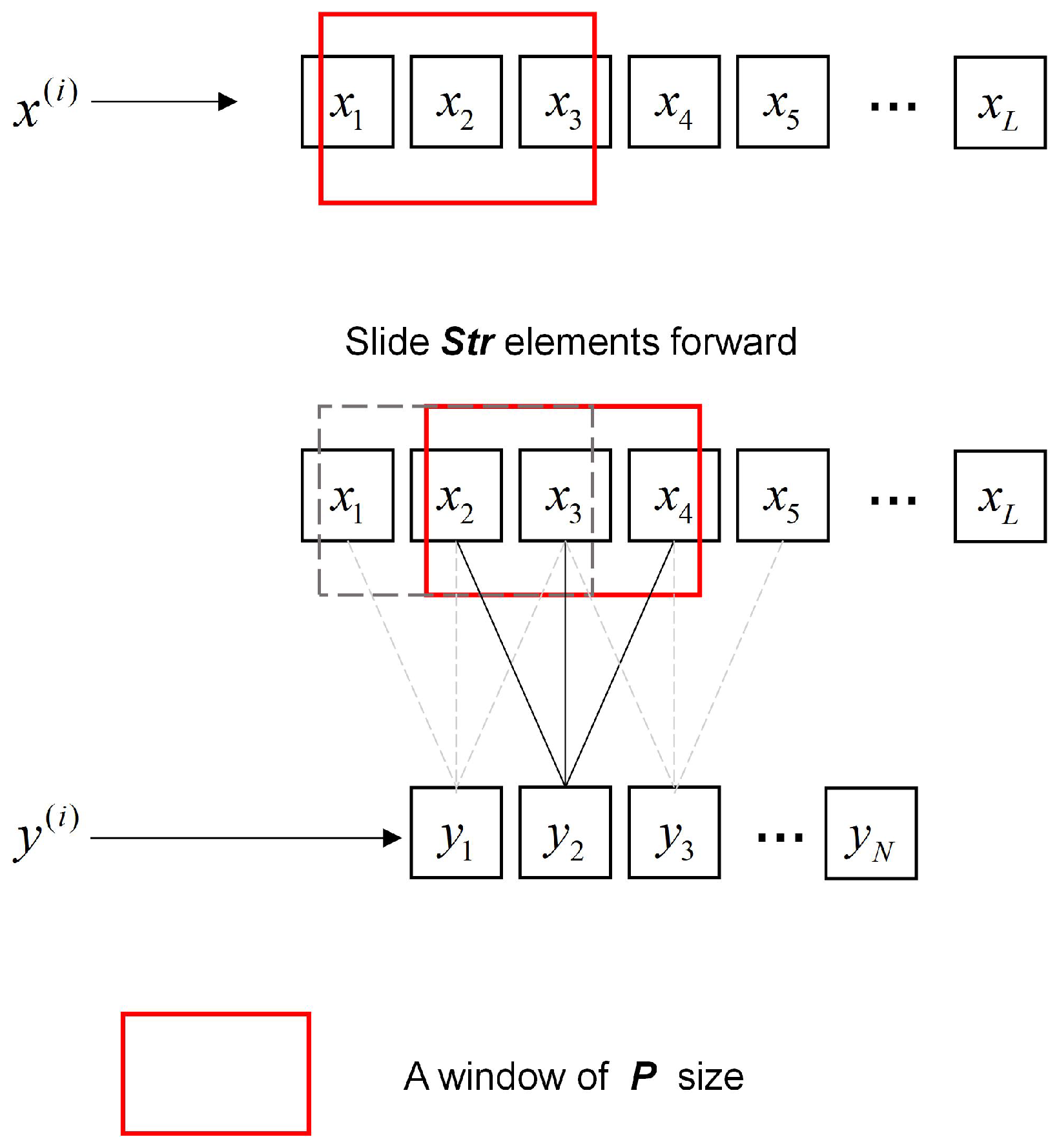

Multi-Scale Patch: As shown in Figure 1, the sequences generated by CS3 are first fed into a multi-scale patch generator. This module produces multi-scale sequences by configuring varying parameters for sequence tokenization. Specifically, larger patches retain more spatial pixels, enhancing the model’s understanding of structural trends and holistic contextual relationships to facilitate global spatial modeling. Conversely, smaller patches encompass more spectral channel pixels, strengthening the model’s ability to learn variation trends and correlations in adjacent spectral bands for local spectral modeling. To ensure effective spectral–spatial correlation learning, the patch size is set to exceed the number of feature channels. The specific form is shown in Figure 3. Formally, consider the sequence generated by BRC-S, where each column vector represents a spectral–spatial feature stream. The sequence tokenization process applies sliding window slicing with patch length $P$ and a given stride, generating $N$ patches per column. This operation transforms each column into a patch matrix, effectively expanding the original 2D sequence into a 3D tensor. Here, $N$ becomes the new sequence dimension encoding multi-scale contextual hierarchies, while $P$ serves as the variable dimension capturing localized pattern characteristics.
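The sliding window slicing of a single column can be sketched as follows. This is an illustrative assumption rather than the paper's exact procedure: it uses no padding, so the patch count is `(L - P) // stride + 1` for a column of length `L`, and the patch lengths and strides below are toy values. The function name `slice_patches` is hypothetical.

```python
def slice_patches(seq, patch_len, stride):
    """Slice a 1D sequence into overlapping patches (no padding assumed)."""
    n_patches = (len(seq) - patch_len) // stride + 1
    return [seq[i * stride : i * stride + patch_len] for i in range(n_patches)]

column = list(range(16))  # one column of a scanned sequence, toy length 16

# Larger patches: fewer tokens, each covering a wider context.
long_patches = slice_patches(column, patch_len=8, stride=4)
# Smaller patches with a smaller stride: more tokens, each covering a narrower context.
short_patches = slice_patches(column, patch_len=4, stride=2)
```

Each column of length `L` becomes an `N x P` patch matrix, so a 2D sequence with several columns becomes a 3D tensor, with `N` acting as the new sequence dimension.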

Local Information Richness (LIR): To measure the richness of local spectral information in a sequence, we propose a new metric called LIR. The specific calculation method is as follows:

The LIR metric is governed by two parameters, the patch length $P$ and the stride, which jointly determine its computational characteristics. The LIR value is positively correlated with local spectral information density; higher LIR values indicate richer localized patterns within sequences. Notably, patch dimensions modulate receptive fields differently: a larger $P$ expands spatial–spectral coverage, enabling efficient global spatial modeling with reduced computational overhead while maintaining stride sizes. Conversely, a smaller $P$ prioritizes localized spectral information capture through a decreased stride, which increases inter-patch overlaps to enhance fine-grained feature extraction. This parametric flexibility allows LIR to adaptively balance global contextual learning and local discriminative analysis.

The entire multi-scale patch process can be described by the following formula:

Here, MP is the multi-scale patch generator, which produces the high-LIR and low-LIR sequences using two different slice sizes.

Long-Short Router: The proposed Long-Short Router module dynamically allocates computational resources by learning adaptive, input-dependent weights to integrate global and local representations. As shown in Figure 1, unlike traditional Mixture-of-Experts (MoE) routers that perform discrete path selection, our router learns the continuous relative contributions of two complementary pathways through a dual-attention mechanism followed by adaptive interpolation.

Formally, the input feature map from the shallow extraction module is first reshaped into a token sequence indexed by spatial location. This sequence is then processed by the router to generate pathway weights. The router leverages parallel channel-wise and spatial-wise attention branches to capture both semantic and structural information, generating a comprehensive representation:

Here, channel attention is computed with a pointwise ($1 \times 1$) convolution, and spatial attention with a depthwise convolution. These two attentions are combined via element-wise multiplication to form a joint feature representation.

Subsequently, the combined representation is aggregated via global average pooling and projected to a 2-dimensional weight vector:

Here, the projection is a linear layer. The two output weights are broadcast and applied to modulate the contributions of the local and global pathways, respectively. This design enables instance-specific resource allocation without the need for discrete routing or expert selection.
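The pooling, projection and blending steps of the router can be sketched in a few lines. This is a minimal illustration under several assumptions that the text does not confirm: the attention branches are omitted (the tokens are taken to be the joint representation already), the two weights are normalized with a softmax, and the projection parameters are toy values. The names `route_weights` and `blend` are hypothetical.

```python
import math

def route_weights(tokens, proj_w, proj_b):
    """Global average pooling over tokens, then a linear projection to two weights.

    tokens: list of L feature vectors, each of length C.
    proj_w: 2 x C projection matrix; proj_b: length-2 bias.
    The softmax normalization is an assumption of this sketch.
    """
    n, c = len(tokens), len(tokens[0])
    pooled = [sum(tok[j] for tok in tokens) / n for j in range(c)]
    logits = [sum(w * p for w, p in zip(row, pooled)) + bias
              for row, bias in zip(proj_w, proj_b)]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def blend(local_feat, global_feat, weights):
    """Weighted sum of the local and global pathway outputs (same shape)."""
    w_local, w_global = weights
    return [[w_local * lv + w_global * gv for lv, gv in zip(l_row, g_row)]
            for l_row, g_row in zip(local_feat, global_feat)]

tokens = [[1.0, 2.0], [3.0, 4.0]]        # L = 2 tokens, C = 2 channels (toy values)
proj_w = [[0.5, -0.25], [-0.5, 0.25]]    # assumed projection weights
proj_b = [0.0, 0.0]

weights = route_weights(tokens, proj_w, proj_b)
fused = blend([[2.0, 4.0]], [[4.0, 8.0]], weights)
```

Because the weights are continuous rather than a hard selection, both pathways contribute to every output and the blend remains differentiable with respect to the router parameters.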

Then, the multi-scale sequences generated by Equation (9) are input into Mamba for feature learning. The resulting feature maps are combined in a weighted sum using the weights generated by Equation (11). Finally, the deep feature map is restored to the input format via a restore operation. The specific formulas are as follows:

Here, the result is the output deep feature map, and $i$ indexes the $i$-th Mamba layer in the hierarchical stack. Finally, the feature maps from different sequences are element-wise summed via Equation (6), and a high-quality image is reconstructed via Equation (7).