Geometric Neural Ordinary Differential Equations: From Manifolds to Lie Groups

Abstract

1. Introduction

- A systematic derivation of adjoint methods for neural ODEs on manifolds and Lie groups, highlighting the differences between, and the equivalence of, various approaches (for an overview, see Table 1);

- A summary of the state of the art of manifold and Lie group neural ODEs, obtained by formalizing the notion of extrinsic and intrinsic neural ODEs;

- A tutorial on neural ODEs on manifolds and Lie groups, with a focus on the derivation of coordinate-agnostic adjoint methods for the optimization of various neural ODE architectures. Readers will gain a conceptual understanding of the geometric nature of the underlying variables, follow a coordinate-free derivation of adjoint methods, and learn to incorporate additional geometric and physical structures. On the practical side, this will aid in the derivation and implementation of adjoint methods with non-trivial terms for various architectures, including their coordinate expressions and chart transformations.

1.1. Literature Review

1.2. Notation

2. Background

2.1. Smooth Manifolds

2.2. Lie Groups

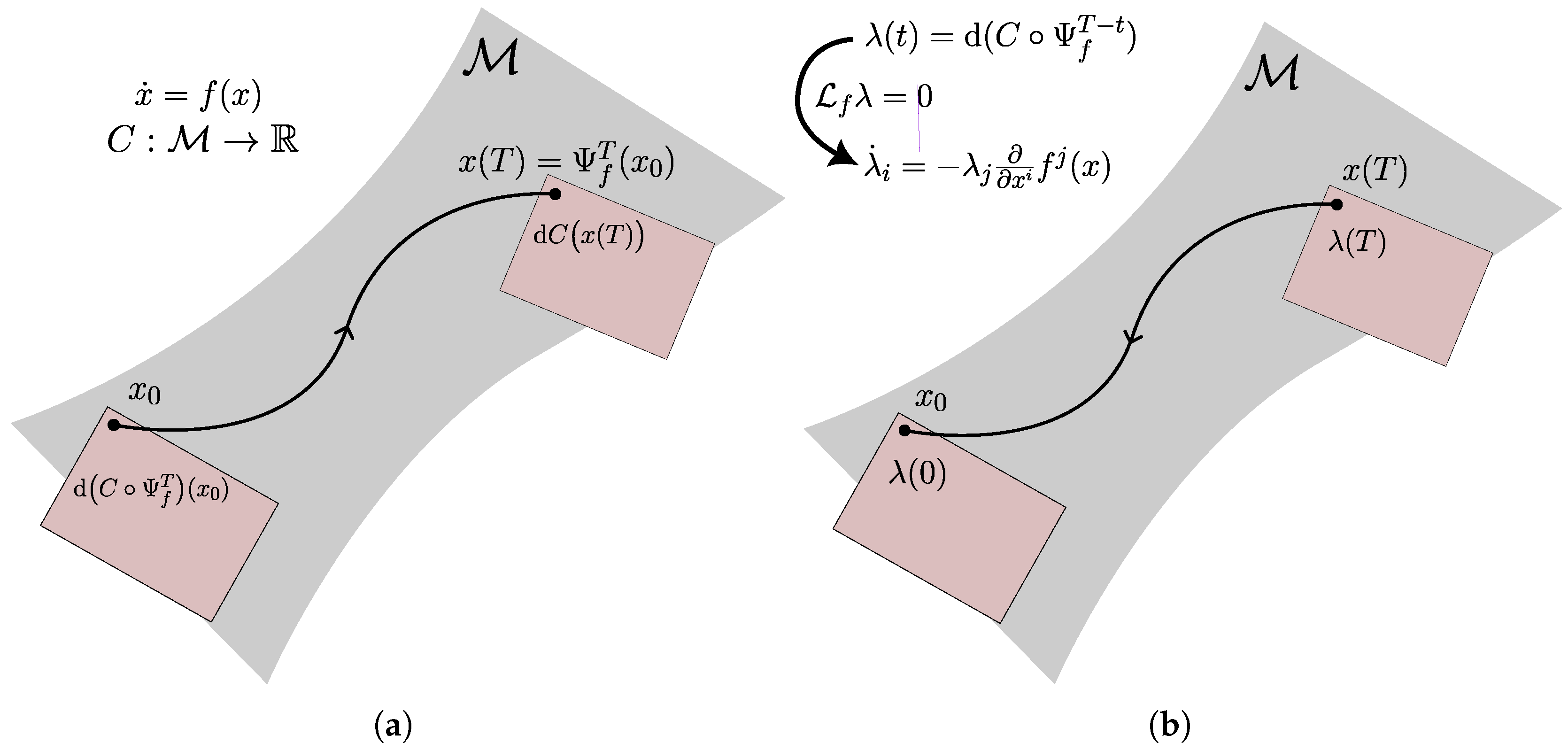

2.3. Gradient over a Flow

3. Neural ODEs on Manifolds

3.1. Constant Parameters and Running and Final Cost

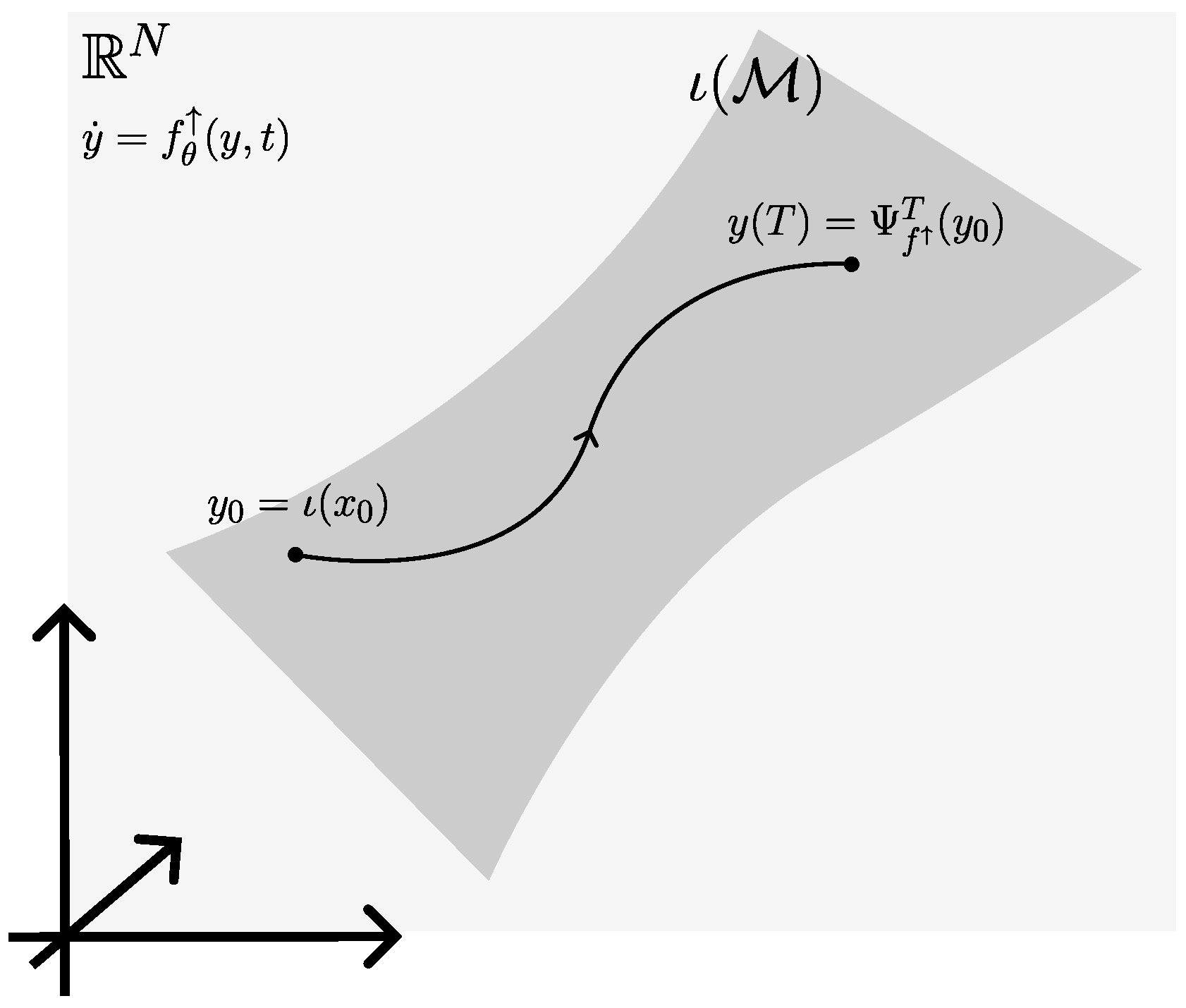

3.1.1. Vanilla Neural ODEs and Extrinsic Neural ODEs on Manifolds

3.1.2. Intrinsic Neural ODEs on Manifolds

3.1.3. Structure Preservation

3.2. Extensions

3.2.1. Nonlinear and Intermittent Cost Terms

3.2.2. Augmented Neural ODEs on Manifolds and Time-Dependent Parameters

4. Neural ODEs on Lie Groups

4.1. Extrinsic Neural ODEs on Lie Groups

4.2. Intrinsic Neural ODEs on Lie Groups

4.3. Extensions

5. Discussion

- The extrinsic formulation is readily implemented if the low-dimensional manifold and an embedding into an ambient Euclidean space are known. This comes at the possible cost of geometric inexactness and a higher dimension of the co-state and sensitivity equations.

- The co-state in the intrinsic formulation generally has a lower dimension, which reduces the dimension of the sensitivity equations. The chart-based formulation also guarantees geometrically exact integration of the dynamics. This comes at the mild cost of having to define local charts and chart transitions.

- The extrinsic formulation on matrix Lie groups can come at a much higher cost than that on manifolds, since the intrinsic dimension of G can be much lower than that of the ambient matrix space, which raises the dimension of the co-state and sensitivity equations accordingly. Geometrically exact integration procedures are more readily available for matrix Lie groups, by integrating in local exponential charts.

- The chart-based formulation on matrix Lie groups struggles when dynamics are not naturally phrased in local charts. This is common; dynamics are often more naturally phrased on the Lie algebra. This was alleviated by an algebra-based formulation on matrix Lie groups. Both are intrinsic approaches whose co-state dynamics have the lowest possible dimension. However, the algebra-based approach still lacks a readily available software implementation.
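The trade-off between extrinsic integration and integration in local exponential charts can be made concrete with a small numerical sketch. The Python code below is only an illustration under stated assumptions and is not taken from any of the cited implementations: `body_velocity` is a hypothetical stand-in for a learned vector field, and the group is chosen as SO(3), so that the extrinsic state has 9 components while the Lie algebra has 3. Explicit Euler on the matrix entries drifts away from the group, whereas a Lie-Euler step through the exponential map stays on it up to round-off.

```python
import numpy as np


def hat(w):
    """Map w in R^3 to the skew-symmetric matrix hat(w) in so(3)."""
    x, y, z = w
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])


def exp_so3(w):
    """Rodrigues' formula for the matrix exponential of hat(w)."""
    theta = np.linalg.norm(w)
    K = hat(w)
    if theta < 1e-8:
        # Second-order Taylor expansion near the identity.
        return np.eye(3) + K + 0.5 * K @ K
    a = np.sin(theta) / theta
    b = (1.0 - np.cos(theta)) / theta**2
    return np.eye(3) + a * K + b * K @ K


def body_velocity(R):
    """Hypothetical stand-in for a learned map from SO(3) to R^3 ~ so(3)."""
    return np.array([0.3, -0.2, 1.0]) + 0.5 * R[:, 0]


def extrinsic_euler(R, h, steps):
    """Explicit Euler on the 9 matrix entries of dR/dt = R hat(omega)."""
    for _ in range(steps):
        R = R + h * R @ hat(body_velocity(R))
    return R


def lie_euler(R, h, steps):
    """Lie-Euler: step in the exponential chart centred at the current R."""
    for _ in range(steps):
        R = R @ exp_so3(h * body_velocity(R))
    return R


def orthogonality_defect(R):
    """Frobenius norm of R^T R - I, which vanishes exactly on O(3)."""
    return np.linalg.norm(R.T @ R - np.eye(3))


R_ext = extrinsic_euler(np.eye(3), h=0.05, steps=200)
R_lie = lie_euler(np.eye(3), h=0.05, steps=200)
print("extrinsic defect:", orthogonality_defect(R_ext))
print("Lie-Euler defect:", orthogonality_defect(R_lie))
```

Both schemes are first-order accurate in the step size; the difference shown here concerns only whether the iterate remains a rotation matrix, which is the sense of geometric exactness used in the points above.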

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Hamiltonian Dynamics on Lie Groups

References

- Chen, R.T.Q.; Rubanova, Y.; Bettencourt, J.; Duvenaud, D. Neural Ordinary Differential Equations. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montreal, QC, Canada, 3–8 December 2018; Volume 109, pp. 31–60. Available online: http://arxiv.org/abs/1806.07366 (accessed on 13 August 2025).

- Massaroli, S.; Poli, M.; Park, J.; Yamashita, A.; Asama, H. Dissecting neural ODEs. Adv. Neural Inf. Process. Syst. 2020, 2020, 3952–3963. Available online: http://arxiv.org/abs/2002.08071 (accessed on 13 August 2025).

- Zakwan, M.; Natale, L.D.; Svetozarevic, B.; Heer, P.; Jones, C.; Trecate, G.F. Physically Consistent Neural ODEs for Learning Multi-Physics Systems. IFAC-PapersOnLine 2023, 56, 5855–5860.

- Sholokhov, A.; Liu, Y.; Mansour, H.; Nabi, S. Physics-informed neural ODE (PINODE): Embedding physics into models using collocation points. Sci. Rep. 2023, 13, 10166.

- Ghanem, P.; Demirkaya, A.; Imbiriba, T.; Ramezani, A.; Danziger, Z.; Erdogmus, D. Learning Physics Informed Neural ODEs with Partial Measurements. Proc. AAAI Conf. Artif. Intell. 2024, AAAI-25. Available online: http://arxiv.org/abs/2412.08681 (accessed on 13 August 2025).

- Massaroli, S.; Poli, M.; Califano, F.; Park, J.; Yamashita, A.; Asama, H. Optimal Energy Shaping via Neural Approximators. SIAM J. Appl. Dyn. Syst. 2022, 21, 2126–2147.

- Niu, H.; Zhou, Y.; Yan, X.; Wu, J.; Shen, Y.; Yi, Z.; Hu, J. On the applications of neural ordinary differential equations in medical image analysis. Artif. Intell. Rev. 2024, 57, 236.

- Oh, Y.; Kam, S.; Lee, J.; Lim, D.Y.; Kim, S.; Bui, A.A.T. Comprehensive Review of Neural Differential Equations for Time Series Analysis. arXiv 2025, arXiv:2502.09885. Available online: http://arxiv.org/abs/2502.09885 (accessed on 13 August 2025).

- Poli, M.; Massaroli, S.; Scimeca, L.; Chun, S.; Oh, S.J.; Yamashita, A.; Asama, H.; Park, J.; Garg, A. Neural Hybrid Automata: Learning Dynamics with Multiple Modes and Stochastic Transitions. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021; Volume 34, pp. 9977–9989. Available online: http://arxiv.org/abs/2106.04165 (accessed on 13 August 2025).

- Chen, R.T.Q.; Amos, B.; Nickel, M. Learning Neural Event Functions for Ordinary Differential Equations. In Proceedings of the Ninth International Conference on Learning Representations (ICLR 2021), Virtual, 3–7 May 2021; Available online: http://arxiv.org/abs/2011.03902 (accessed on 13 August 2025).

- Davis, J.Q.; Choromanski, K.; Varley, J.; Lee, H.; Slotine, J.J.; Likhosterov, V.; Weller, A.; Makadia, A.; Sindhwani, V. Time Dependence in Non-Autonomous Neural ODEs. arXiv 2020, arXiv:2005.01906. Available online: http://arxiv.org/abs/2005.01906 (accessed on 13 August 2025).

- Dupont, E.; Doucet, A.; Teh, Y.W. Augmented Neural ODEs. In Proceedings of the 33rd International Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Available online: http://arxiv.org/abs/1904.01681 (accessed on 13 August 2025).

- Chu, H.; Miyatake, Y.; Cui, W.; Wei, S.; Furihata, D. Structure-Preserving Physics-Informed Neural Networks with Energy or Lyapunov Structure. In Proceedings of the 33rd International Joint Conference on Artificial Intelligence (IJCAI 24), Jeju, Republic of Korea, 3–9 August 2024.

- Kütük, M.; Yücel, H. Energy dissipation preserving physics informed neural network for Allen–Cahn equations. J. Comput. Sci. 2025, 87, 102577.

- Bullo, F.; Murray, R.M. Tracking for fully actuated mechanical systems: A geometric framework. Automatica 1999, 35, 17–34.

- Marsden, J.E.; Ratiu, T.S. Introduction to Mechanics and Symmetry; Springer: New York, NY, USA, 1999; Volume 17.

- Whiteley, N.; Gray, A.; Rubin-Delanchy, P. Statistical exploration of the Manifold Hypothesis. arXiv 2025, arXiv:2208.11665. Available online: http://arxiv.org/abs/2208.11665 (accessed on 13 August 2025).

- Lou, A.; Lim, D.; Katsman, I.; Huang, L.; Jiang, Q.; Lim, S.N.; De Sa, C. Neural Manifold Ordinary Differential Equations. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online, 6–12 December 2020; Available online: http://arxiv.org/abs/2006.10254 (accessed on 13 August 2025).

- Falorsi, L.; Forré, P. Neural Ordinary Differential Equations on Manifolds. arXiv 2020, arXiv:2006.06663. Available online: http://arxiv.org/abs/2006.06663 (accessed on 13 August 2025).

- Duong, T.; Altawaitan, A.; Stanley, J.; Atanasov, N. Port-Hamiltonian Neural ODE Networks on Lie Groups for Robot Dynamics Learning and Control. IEEE Trans. Robot. 2024, 40, 3695–3715.

- Wotte, Y.P.; Califano, F.; Stramigioli, S. Optimal potential shaping on SE(3) via neural ordinary differential equations on Lie groups. Int. J. Robot. Res. 2024, 43, 2221–2244.

- Floryan, D.; Graham, M.D. Data-driven discovery of intrinsic dynamics. Nat. Mach. Intell. 2022, 4, 1113–1120.

- Andersdotter, E.; Persson, D.; Ohlsson, F. Equivariant Manifold Neural ODEs and Differential Invariants. arXiv 2024, arXiv:2401.14131. Available online: http://arxiv.org/abs/2401.14131 (accessed on 13 August 2025).

- Wotte, Y.P. Optimal Potential Shaping on SE(3) via Neural Approximators. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2021.

- Kidger, P. On Neural Differential Equations. Ph.D. Thesis, Mathematical Institute, University of Oxford, Oxford, UK, 2022.

- Gholami, A.; Keutzer, K.; Biros, G. ANODE: Unconditionally Accurate Memory-Efficient Gradients for Neural ODEs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI 19), Macao, 10–16 August 2019; Available online: http://arxiv.org/abs/1902.10298 (accessed on 13 August 2025).

- Kidger, P.; Morrill, J.; Foster, J.; Lyons, T.J. Neural Controlled Differential Equations for Irregular Time Series. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online, 6–12 December 2020; Available online: http://arxiv.org/abs/2005.08926 (accessed on 13 August 2025).

- Li, X.; Wong, T.L.; Chen, R.T.Q.; Duvenaud, D. Scalable Gradients for Stochastic Differential Equations. In Proceedings of the 23rd International Conference on Artificial Intelligence and Statistics (AISTATS 2020), Online, 26–28 August 2020; Available online: http://arxiv.org/abs/2001.01328 (accessed on 13 August 2025).

- Liu, Y.; Cheng, J.; Zhao, H.; Xu, T.; Zhao, P.; Tsung, F.; Li, J.; Rong, Y. SEGNO: Generalizing Equivariant Graph Neural Networks with Physical Inductive Biases. In Proceedings of the 12th International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024; Available online: http://arxiv.org/abs/2308.13212 (accessed on 13 August 2025).

- Greydanus, S.; Dzamba, M.; Yosinski, J. Hamiltonian Neural Networks. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Available online: http://arxiv.org/abs/1906.01563 (accessed on 13 August 2025).

- Finzi, M.; Wang, K.A.; Wilson, A.G. Simplifying Hamiltonian and Lagrangian Neural Networks via Explicit Constraints. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online, 6–12 December 2020; Available online: http://arxiv.org/abs/2010.13581 (accessed on 13 August 2025).

- Cranmer, M.; Greydanus, S.; Hoyer, S.; Research, G.; Battaglia, P.; Spergel, D.; Ho, S. Lagrangian Neural Networks. In Proceedings of the ICLR 2020 Deep Differential Equations Workshop, Addis Ababa, Ethiopia, 26 April 2020; Available online: http://arxiv.org/abs/2003.04630 (accessed on 13 August 2025).

- Bhattoo, R.; Ranu, S.; Krishnan, N.M. Learning the Dynamics of Particle-based Systems with Lagrangian Graph Neural Networks. Mach. Learn. Sci. Technol. 2023, 4, 015003.

- Xiao, S.; Zhang, J.; Tang, Y. Generalized Lagrangian Neural Networks. arXiv 2024, arXiv:2401.03728. Available online: http://arxiv.org/abs/2401.03728 (accessed on 13 August 2025).

- Rettberg, J.; Kneifl, J.; Herb, J.; Buchfink, P.; Fehr, J.; Haasdonk, B. Data-Driven Identification of Latent Port-Hamiltonian Systems. arXiv 2024, 37–99. Available online: http://arxiv.org/abs/2408.08185 (accessed on 13 August 2025).

- Duong, T.; Atanasov, N. Hamiltonian-based Neural ODE Networks on the SE(3) Manifold For Dynamics Learning and Control. In Proceedings of the Robotics: Science and Systems (RSS 2021), Online, 12–16 July 2021; Available online: http://arxiv.org/abs/2106.12782v3 (accessed on 13 August 2025).

- Fronk, C.; Petzold, L. Training stiff neural ordinary differential equations with explicit exponential integration methods. Chaos 2025, 35, 33154.

- Kloberdanz, E.; Le, W. S-SOLVER: Numerically Stable Adaptive Step Size Solver for Neural ODEs. In Artificial Neural Networks and Machine Learning—ICANN 2023; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2023; Volume 14262, pp. 388–400.

- Akhtar, S.W. On Tuning Neural ODE for Stability, Consistency and Faster Convergence. SN Comput. Sci. 2025, 6, 318.

- Zhu, A.; Jin, P.; Zhu, B.; Tang, Y. On Numerical Integration in Neural Ordinary Differential Equations. In Proceedings of the 39th International Conference on Machine Learning (ICML 2022), Baltimore, MD, USA, 17–23 July 2022; ML Research Press: Baltimore, MD, USA, 2022; Volume 162, pp. 27527–27547. Available online: http://arxiv.org/abs/2206.07335 (accessed on 13 August 2025).

- Munthe-Kaas, H. High order Runge-Kutta methods on manifolds. Appl. Numer. Math. 1999, 29, 115–127.

- Celledoni, E.; Marthinsen, H.; Owren, B. An introduction to Lie group integrators—Basics, New Developments and Applications. J. Comput. Phys. 2014, 257, 1040–1061.

- Ma, Y.; Dixit, V.; Innes, M.J.; Guo, X.; Rackauckas, C. A Comparison of Automatic Differentiation and Continuous Sensitivity Analysis for Derivatives of Differential Equation Solutions. In Proceedings of the 2021 IEEE High Performance Extreme Computing Conference (HPEC 2021), Online, 20–24 September 2021.

- Saemundsson, S.; Terenin, A.; Hofmann, K.; Deisenroth, M.P. Variational Integrator Networks for Physically Structured Embeddings. In Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics (AISTATS 2020), Online, 26–28 August 2020; pp. 3078–3087. Available online: http://arxiv.org/abs/1910.09349 (accessed on 13 August 2025).

- Desai, S.A.; Mattheakis, M.; Roberts, S.J. Variational integrator graph networks for learning energy-conserving dynamical systems. Phys. Rev. E 2021, 104, 035310.

- Bobenko, A.I.; Suris, Y.B. Discrete Time Lagrangian Mechanics on Lie Groups, with an Application to the Lagrange Top. Commun. Math. Phys. 1999, 204, 147–188.

- Marsden, J.E.; Pekarsky, S.; Shkoller, S.; West, M. Variational Methods, Multisymplectic Geometry and Continuum Mechanics. J. Geom. Phys. 2001, 38, 253–284.

- Duruisseaux, V.; Duong, T.; Leok, M.; Atanasov, N. Lie Group Forced Variational Integrator Networks for Learning and Control of Robot Systems. In Proceedings of the 5th Annual Conference on Learning for Dynamics and Control, Philadelphia, PA, USA, 15–16 June 2023; Volume 211, pp. 1–21. Available online: http://arxiv.org/abs/2211.16006 (accessed on 13 August 2025).

- Lee, J.M. Introduction to Smooth Manifolds, 2nd ed.; Springer: New York, NY, USA, 2012.

- Hall, B.C. Lie Groups, Lie Algebras, and Representations: An Elementary Introduction; Graduate Texts in Mathematics (GTM); Springer: Berlin/Heidelberg, Germany, 2015; Volume 222.

- Solà, J.; Deray, J.; Atchuthan, D. A micro Lie theory for state estimation in robotics. arXiv 2021, arXiv:1812.01537. Available online: http://arxiv.org/abs/1812.01537 (accessed on 13 August 2025).

- Visser, M.; Stramigioli, S.; Heemskerk, C. Cayley-Hamilton for roboticists. IEEE Int. Conf. Intell. Robot. Syst. 2006, 1, 4187–4192.

- Robbins, H.; Monro, S. A Stochastic Approximation Method. Ann. Math. Stat. 1951, 22, 400–407.

- Falorsi, L.; de Haan, P.; Davidson, T.R.; Forré, P. Reparameterizing Distributions on Lie Groups. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics (AISTATS 2019), Naha, Okinawa, Japan, 16–18 April 2019; Available online: http://arxiv.org/abs/1903.02958 (accessed on 13 August 2025).

- White, A.; Kilbertus, N.; Gelbrecht, M.; Boers, N. Stabilized Neural Differential Equations for Learning Dynamics with Explicit Constraints. In Proceedings of the 37th Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; Available online: http://arxiv.org/abs/2306.09739 (accessed on 13 August 2025).

- Poli, M.; Massaroli, S.; Yamashita, A.; Asama, H.; Park, J. TorchDyn: A Neural Differential Equations Library. arXiv 2020, arXiv:2009.09346. Available online: http://arxiv.org/abs/2009.09346 (accessed on 13 August 2025).

- Schaft, A.V.D.; Jeltsema, D. Port-Hamiltonian Systems Theory: An Introductory Overview; Now Publishers Inc.: Hanover, MA, USA, 2014; Volume 1, pp. 173–378.

- Rashad, R.; Califano, F.; van der Schaft, A.J.; Stramigioli, S. Twenty years of distributed port-Hamiltonian systems: A literature review. IMA J. Math. Control Inf. 2020, 37, 1400–1422.

- Wotte, Y.P.; Dummer, S.; Botteghi, N.; Brune, C.; Stramigioli, S.; Califano, F. Discovering efficient periodic behaviors in mechanical systems via neural approximators. Optim. Control Appl. Methods 2023, 44, 3052–3079.

- Yao, W. A Singularity-Free Guiding Vector Field for Robot Navigation; Springer: Cham, Switzerland, 2023; pp. 159–190.

| Name of Neural ODE | Subtype | Trajectory Cost | Subsection | Originally Introduced in |
|---|---|---|---|---|
| Neural ODEs on manifolds (Section 3) | Extrinsic | Running and final cost | Section 3.1.1 | Final cost [19], running cost [21] |
| | Intrinsic | Running and final cost, intermittent cost | Section 3.1.2 and Section 3.2.1 | Final cost [18], running cost [21], intermittent cost (this work) |
| | Augmented, time-dependent parameters | Final cost | Section 3.2.2 | Augmenting to [23], Augmenting to (this work) |
| Neural ODEs on Lie groups (Section 4) | Extrinsic | Final cost and intermittent cost | Section 4.1 | In [20] |
| | Intrinsic, dynamics in local charts | Running and final cost | Section 4.2 | In [21,24] |
| | Intrinsic, dynamics on Lie algebra | Running and final cost | Section 4.2 | In [21] |

| Function | Vanilla Neural ODE | Extrinsic Neural ODE |
|---|---|---|
| Scalar fields | | |
| Vector fields | | with tangency constraint [19], optional stabilization [55] |
| Components of -tensor fields | , see footnote 1 | |
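The tangency constraint and the optional stabilization for extrinsic vector fields can be illustrated on the unit sphere embedded in three-dimensional Euclidean space. The Python sketch below rests on several assumptions that are not part of the original text: `raw_field` is a hypothetical stand-in for an unconstrained network output, tangency is enforced by orthogonal projection, and the stabilization is a simple term proportional to the constraint violation, written in the spirit of [55] rather than as a reproduction of it.

```python
import numpy as np


def raw_field(x):
    """Hypothetical stand-in for an unconstrained network output in R^3."""
    A = np.array([[0.0, 1.0, 0.5], [-0.3, 0.2, 1.0], [0.7, -1.0, 0.1]])
    return np.tanh(A @ x)


def tangent_field(x, gamma=0.0):
    """Project raw_field onto the plane orthogonal to x, then add an
    optional term (gain gamma) that pulls x back towards |x| = 1."""
    v = raw_field(x)
    n = x / np.linalg.norm(x)
    v_tan = v - np.dot(v, n) * n
    g = 0.5 * (np.dot(x, x) - 1.0)  # g(x) = 0 exactly on the unit sphere
    return v_tan - gamma * g * x / np.dot(x, x)


def integrate(x, h, steps, gamma):
    """Explicit Euler in the embedding space R^3."""
    for _ in range(steps):
        x = x + h * tangent_field(x, gamma)
    return x


def drift(x):
    """Distance of x from the unit sphere."""
    return abs(np.linalg.norm(x) - 1.0)


x0 = np.array([1.0, 0.0, 0.0])
x_plain = integrate(x0, h=0.01, steps=2000, gamma=0.0)
x_stab = integrate(x0, h=0.01, steps=2000, gamma=5.0)
print("drift without stabilization:", drift(x_plain))
print("drift with stabilization:   ", drift(x_stab))
```

Even with an exactly tangent field, the explicit Euler iterate leaves the sphere because each step moves along a straight line in the embedding space; the stabilizing term counteracts this drift without altering the field on the sphere itself, where `g` vanishes.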

| Partition of Unity [24,49] | Soft Constraint [18,22] | Pullback [19,21] |
|---|---|---|
| Components from all local charts are summed, weighted by a partition of unity. | Function is directly represented in local charts. | Function is pulled back to local chart. |
| Allows representation of arbitrary smooth functions. | Functions are smooth where charts do not overlap, but are not well-defined at chart transitions. | Allows representation of arbitrary smooth functions. |
| Differentiating functions generally requires differentiating through chart transition maps, creating computational overload [24]. | Chart transition maps do not have to be differentiated. | Chart representations of the embedding are differentiated, possibly creating computational overload. |
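The difference between the pullback and a direct chart representation can be checked numerically on the unit sphere with its two stereographic charts. The following Python sketch is an illustration only: `ambient_f` and `direct_f` are hypothetical stand-ins for networks defined on the embedding space and on chart coordinates, respectively. A function pulled back from the embedding space takes the same value at a point regardless of the chart used, whereas one function applied directly to the coordinates of both charts does not.

```python
import numpy as np


def chart_north(x):
    """Stereographic chart from the north pole (requires x[2] != 1)."""
    return x[:2] / (1.0 - x[2])


def chart_south(x):
    """Stereographic chart from the south pole (requires x[2] != -1)."""
    return x[:2] / (1.0 + x[2])


def inv_chart_north(u):
    """Inverse of chart_north, mapping R^2 back onto the sphere."""
    s = np.dot(u, u)
    return np.array([2.0 * u[0], 2.0 * u[1], s - 1.0]) / (s + 1.0)


def inv_chart_south(u):
    """Inverse of chart_south, mapping R^2 back onto the sphere."""
    s = np.dot(u, u)
    return np.array([2.0 * u[0], 2.0 * u[1], 1.0 - s]) / (s + 1.0)


def ambient_f(x):
    """Hypothetical stand-in for a network on the embedding space R^3."""
    return np.tanh(np.dot(np.array([0.4, -1.1, 0.7]), x) + 0.2)


def direct_f(u):
    """Hypothetical stand-in for a network acting on chart coordinates."""
    return np.tanh(0.5 * u[0] - 0.2 * u[1])


p = np.array([0.6, 0.0, 0.8])  # a point covered by both charts
u_n, u_s = chart_north(p), chart_south(p)

# Pullback: compose the ambient function with the inverse chart.
pull_n = ambient_f(inv_chart_north(u_n))
pull_s = ambient_f(inv_chart_south(u_s))
print("pullback, chart mismatch:", abs(pull_n - pull_s))

# Direct representation: the same function of the coordinates in both charts.
print("direct, chart mismatch:  ", abs(direct_f(u_n) - direct_f(u_s)))
```

The mismatch of the direct representation is what the table refers to as functions not being well-defined at chart transitions.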


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wotte, Y.P.; Califano, F.; Stramigioli, S. Geometric Neural Ordinary Differential Equations: From Manifolds to Lie Groups. Entropy 2025, 27, 878. https://doi.org/10.3390/e27080878
