Abstract

We study the generalization properties of stochastic optimization methods under adaptive data sampling schemes, focusing on the setting of pairwise learning, which is central to tasks like ranking, metric learning, and AUC maximization. Unlike pointwise learning, pairwise methods must address statistical dependencies between input pairs, a challenge that existing analyses do not adequately handle when sampling is adaptive. In this work, we extend a general framework that integrates two algorithm-dependent approaches, algorithmic stability and PAC–Bayes analysis, to this setting. Specifically, we examine (1) Pairwise Stochastic Gradient Descent (Pairwise SGD), widely used across machine learning applications, and (2) Pairwise Stochastic Gradient Descent Ascent (Pairwise SGDA), common in adversarial training. Our analysis avoids artificial randomization and instead leverages the inherent stochasticity of gradient updates. Our results yield generalization guarantees of order under non-uniform adaptive sampling strategies, covering both smooth and non-smooth convex settings. We believe these findings address a significant gap in the theory of pairwise learning with adaptive sampling.

1. Introduction

The increasing availability of data makes it feasible to use increasingly large models in principle. However, this comes at the expense of an increasing computational cost of training these models in large pairwise learning applications. Some notable examples of pairwise learning problems include ranking and preference prediction, AUC maximization, and metric learning [1,2,3,4,5]. For instance, in metric learning we aim to learn an appropriate distance or similarity to compare pairs of examples, which has numerous applications such as face verification, person re-identification (Re-ID) [6,7,8,9,10], and bioactivity prediction [11]. Pairwise learning has also been applied to positive-unlabeled (PU) learning problems [12], where only positive and unlabeled examples are available. Such problems arise in one-class classification settings, with practical applications in areas such as fault detection and diagnosis in advanced engineering systems [13]. Given the broad relevance of pairwise learning, there is a pressing need to deepen our theoretical understanding of its generalization properties. This in turn can inform the design of algorithms that generalize reliably to unseen pairs and offer interpretability and trustworthiness to end users.

In both pointwise and pairwise learning settings, Stochastic Gradient Descent (SGD) and Stochastic Gradient Descent Ascent (SGDA) are widely used for large-scale minimization and min-max optimization problems in machine learning due to their favorable computational efficiency. These methods rely on stochastic sampling strategies to approximate the true gradients, and several works have explored data-dependent sampling techniques to accelerate convergence to the optimum [14,15,16,17,18,19]. SGDA, in particular, is a standard approach in solving min-max problems, finding notable applications in generative adversarial networks (GANs) [20] and adversarial training [21,22].

Adversarial perturbations are subtle, often imperceptible modifications to input data designed to deceive models and cause incorrect predictions [23]. Recent studies in pairwise learning have explored strategies to enhance adversarial robustness, applying adversarial pairwise learning methods to min-max problems across various domains, such as metric learning [24,25], ranking [25,26], and kinship verification [27]. These developments illustrate the need for robust, theoretically grounded pairwise methods that can withstand adversarial attacks while maintaining generalization performance.

Under the assumption of i.i.d. data points in classic pointwise learning, the empirical risk of a fixed hypothesis is an average of i.i.d. random variables. However, in pairwise learning the pairs derived from i.i.d. points are no longer i.i.d. Instead, when the loss is symmetric and computed over all unordered pairs, the empirical risk takes the form of a second-order U-statistic. Therefore, results on U-processes can be used in the generalization analysis of pairwise learning [3,28]. While there is much research on the generalization of pairwise learning, the effect of non-uniform, data-dependent sampling schemes has not been rigorously studied.

Non-uniform sampling can be beneficial with noisy data, where the training examples may not be equally reliable or equally informative. Some examples may be less important than others, or even misleading (e.g., mislabeled examples or examples situated in an ambiguous class-overlap region). In the rare cases when the usefulness or importance of individual training examples is known, the sampling distribution can be designed and fixed before training, which may improve the representativeness of the sample. For instance, in the case of infrequent observations [29], inverse frequency sampling prioritizes rare examples that may be underrepresented in the training set, ensuring their proper influence. In most cases, however, the relative importance of training examples is not known a priori; hence, it is desirable to learn the sampling distribution together with the model.

Adaptive sampling refers to a sampling distribution that depends on the training sample. Such non-uniform, data-dependent sampling has shown great potential in the literature on randomized algorithms for both SGD-based [14] and SGDA-based [30] optimizers, in both pointwise and pairwise settings. Importance sampling [14] is one of the most widely used such strategies, and a few others are reviewed in Section 2. Accordingly, recent work [31,32] has begun to develop a better understanding of the generalization behavior of such algorithms, which we continue here in the setting of pairwise learning. The main bottlenecks in the analysis of adaptive sampling-based stochastic optimizers are that (i) a correction factor is often used to ensure the unbiasedness of the gradient [14], and this factor itself depends on the training data, which complicates the analysis; and (ii) in the pairwise setting we must also account for the statistical dependencies between data pairs, which arise because each point participates in multiple pairs.

To tackle these problems, we develop a PAC–Bayesian analysis of the generalization of pairwise stochastic optimization methods, which removes the need for a correction factor, and we use U-statistics to capture the statistical structure of pairwise loss functions. The PAC–Bayes framework allows us to obtain generalization bounds that hold uniformly for all posterior sampling schemes, under a mild condition on a pre-specified prior sampling scheme (chosen to be uniform sampling). For randomized methods such as Pairwise SGD and Pairwise SGDA, the sampled index pairs are treated as hyperparameters that follow a sampling distribution.

Our main contributions are listed in Table 1, summarizing the generalization bounds of the order for these randomized algorithms under different assumptions, where n is the sample size.

Table 1.

Summary of generalization rates obtained for two pairwise stochastic optimization algorithms (Pairwise SGD, Pairwise SGDA) under two sets of assumptions (Lipschitz (L), smooth (S), convex (C)) on the pairwise loss function, together with the chosen number of iterations T and step size. The sample size is n. This summary shows that smaller step sizes and more iterations are needed when the smoothness assumption is removed (more details in Section 4).

Our technical contributions are summarized as follows:

- We bound the generalization gap of randomized pairwise learning algorithms that operate with arbitrary data-dependent sampling, in a PAC–Bayesian framework, under a sub-exponential stability condition.

- We apply the above general result to Pairwise SGD and Pairwise SGDA with arbitrary sampling. For both of these algorithms, we verify the sub-exponential stability in both smooth and non-smooth problems.

- We exemplify how our bounds can inform algorithm design, and we demonstrate how to extract meaningful information from the resulting algorithms.

Our work builds on well-established tools, including a specific flavor of PAC–Bayesian analysis [31], U-statistic decomposition, and a moment bound for uniformly stable pairwise learning algorithms [33], aimed at bringing theoretical insight into an important, yet relatively underexplored, setting: pairwise learning with adaptive sampling. To the best of our knowledge, our analysis is the first to derive explicit generalization bounds for this setting.

The remainder of the paper is organized as follows. We survey the related work on generalization analysis and non-uniform sampling in Section 2. We give a brief background on U-statistics and algorithmic stability analysis in Section 3. Our general result and its applications to Pairwise SGD and Pairwise SGDA are presented in Section 4. In Section 5, we turn our bounds into adaptive sampling algorithms and illustrate them in numerical experiments.

2. Related Work

Adaptive Sampling in Stochastic Optimization. Importance sampling for stochastic gradient descent was proposed in [14]. To compute the stochastic gradient, a training example $z_i$ is sampled with probability proportional to the gradient norm $\|\nabla \ell(w; z_i)\|$, where w are the model parameters and ℓ is the loss function. This prioritizes high-impact updates from the perspective of optimization: the authors proved that it can significantly reduce the variance of the stochastic gradient and accelerate convergence to the optimum. A related idea, loss-based sampling, proposed in [16], assigns sampling probabilities proportional to the loss evaluated on the training points, that is, $p_i \propto \ell(w; z_i)$, thereby focusing on hard-to-fit examples. The authors show faster convergence to the optimum. While these works do not consider pairwise learning, they are landmarks of adaptive sampling in stochastic optimization.
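To make the role of the correction factor concrete, the following Python sketch computes gradient-norm and loss-based sampling distributions and checks, by exact expectation rather than simulation, that reweighting a sampled gradient by $1/(n p_i)$ recovers the full-batch gradient, whereas the unweighted sampled gradient is generally biased. The toy pointwise logistic-regression setup and all names in it are illustrative assumptions, not code from [14] or [16].

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 50, 3
X = rng.normal(size=(n, d))
y = np.where(rng.normal(size=n) > 0, 1.0, -1.0)   # labels in {-1, +1}
w = rng.normal(size=d)                            # current model parameters

# Per-example logistic loss log(1 + exp(-y w.x)) and its gradient -y x / (1 + exp(y w.x))
margins = y * (X @ w)
losses = np.logaddexp(0.0, -margins)
grads = (-y / (1.0 + np.exp(margins)))[:, None] * X
full_grad = grads.mean(axis=0)                    # gradient of the uniform average

def expected_sampled_grad(p, corrected):
    """Exact expectation of the sampled gradient when example i is drawn with prob. p_i."""
    weights = 1.0 / (n * p) if corrected else np.ones(n)
    return (p * weights) @ grads

p_gradnorm = np.linalg.norm(grads, axis=1)
p_gradnorm /= p_gradnorm.sum()                    # sampling proportional to gradient norm
p_loss = losses / losses.sum()                    # sampling proportional to loss

for p in (p_gradnorm, p_loss):
    print(np.allclose(expected_sampled_grad(p, corrected=True), full_grad),   # True
          np.allclose(expected_sampled_grad(p, corrected=False), full_grad))  # typically False
```

The correction weight $1/(n p_i)$ exactly cancels the sampling probability, which is why it depends on the data through $p_i$; this is the term that complicates generalization analyses.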

Furthermore, there are variants of these ideas aimed at reducing the computational demand. These include sampling according to upper bounds on the gradient norm, which was shown to perform better than loss-based sampling [34]. The work in [18] proposes to sample the training points based on their relative distance to each other. A more recent data-dependent sampling approach, called group sampling, appears in [19] and has been applied to person re-identification (Re-ID).

Adaptive sampling is an umbrella term referring to sampling distributions that depend on the training sample. Above, we mentioned a few of the most prominent existing examples; the appropriate sampling distribution, however, is task-dependent. The works discussed, and most of the previous work on adaptive sampling in stochastic optimization, aim at accelerating convergence to the optimum. Therefore, they impose no explicit cost for data-dependent sampling; instead, they use a multiplicative correction factor to ensure an unbiased gradient. However, the goal of learning is generalization, which is different from reaching the global optimum on the training data. Data-dependent sampling must therefore come at a cost, to avoid over-reliance on a biased subset of points. Our forthcoming generalization bounds quantify this cost rigorously and provide guidance for algorithm design.

With the exception of [32] and our previous conference paper [31], results on the generalization of the resulting randomized algorithms are very scarce; advancing them, specifically for the pairwise learning setting, is our goal in this paper.

Generalization through Algorithmic Stability. Stability was popularized in the seminal work of [35] to formalize the intuition that algorithms whose output is resilient to changing one example in the input data will generalize. The stability framework subsequently motivated a line of analyses of randomized iterative algorithms, such as SGD [36] and SGDA [37,38]. While the stability framework in this previous work is well suited to SGD-type algorithms that use a uniform sampling scheme [36], this framework alone cannot handle arbitrary data-dependent sampling schemes.

Generalization through PAC–Bayes. The PAC–Bayes theory of generalization is another algorithm-dependent framework in statistical learning, the gist of which is to leverage a pre-specified prior distribution on the parameters of interest to obtain generalization bounds that hold uniformly for all posterior distributions [39,40]. Its complementarity with the algorithmic stability framework sparked ideas for combining them [41,42,43,44], some of which are also applicable to randomized learning algorithms such as SGD and SGLD [32,45,46,47]. While insightful, these works assume i.i.d. examples and cannot be applied to non-i.i.d. settings that arise in pairwise learning.

In non-i.i.d. settings, the work in [48] gave PAC–Bayes bounds using fractional covers, which allow handling the dependencies within the inputs. This gives rise to generalization bounds for pairwise learning, with predictors following a distribution induced by a prior distribution on the model’s parameters. However, with SGD-type methods in mind, which already have randomization built into the algorithm, the classic PAC–Bayes approach of placing a prior on a model’s parameters would be somewhat artificial. Indeed, considerable research effort has been spent on removing such randomization [49]. Another issue concerns the prior specification: recent research [50] reveals that placing sufficient prior mass on good predictors is a condition for meaningful PAC–Bayes guarantees, and such priors are difficult to set without strong prior knowledge. Instead, the construction proposed in [31,32] (albeit restricted to the i.i.d. setting) exploits the built-in stochasticity of modern gradient-based optimization algorithms directly, by interpreting it as a PAC–Bayes prior placed on a hyperparameter. We build further on this idea in this work.

3. Preliminaries

3.1. Pairwise Learning and U-Statistics

Let $\mathcal{D}$ be an unknown distribution on the sample space $\mathcal{Z}$. We denote by $\mathcal{W}$ the parameter space, and Φ will be a hyperparameter space. Given a training set $S = \{z_1, \ldots, z_n\}$ drawn i.i.d. from $\mathcal{D}$ and a hyperparameter $\phi \in \Phi$, a learning algorithm A returns a model parameterized by $A(S, \phi) \in \mathcal{W}$.

We are interested in pairwise learning problems and will use a pairwise loss function $\ell: \mathcal{W} \times \mathcal{Z} \times \mathcal{Z} \to \mathbb{R}$ to measure the mismatch between the predictions of the model on example pairs. The generalization error, or true risk, is defined as the expected loss of the learned predictor applied to an unseen pair of inputs drawn from $\mathcal{D}$, that is,

$$R(A(S,\phi)) = \mathbb{E}_{z, z' \sim \mathcal{D}}\big[\ell(A(S,\phi); z, z')\big].$$

Since $\mathcal{D}$ is unknown, we consider the empirical risk,

$$R_S(A(S,\phi)) = \frac{1}{n(n-1)} \sum_{i, j \in [n],\, i \neq j} \ell(A(S,\phi); z_i, z_j), \tag{2}$$

where $[n] := \{1, \ldots, n\}$. The generalization error is a random quantity as a function of the sample S; it does not account for the randomization used when selecting the data or feature index in the update rule of A at each iteration.

To take advantage of the built-in stochasticity of the type of algorithms we consider, we further define two distributions on the hyperparameter space Φ: a sample-independent distribution P and a sample-dependent distribution Q. In this stochastic or randomized learning setting, the expected risk and the expected empirical risk (both with respect to Q) are defined as

$$\mathbb{E}_{\phi \sim Q}\big[R(A(S,\phi))\big] \quad \text{and} \quad \mathbb{E}_{\phi \sim Q}\big[R_S(A(S,\phi))\big].$$

We refer to the difference between the expected risk and the expected empirical risk, $\mathbb{E}_{\phi \sim Q}\big[R(A(S,\phi)) - R_S(A(S,\phi))\big]$, as the generalization gap.

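The difficulty with the pairwise empirical loss (2) is that, even when S consists of i.i.d. instances, the pairs formed from S are dependent on each other. Rather, the empirical risk (2) is a second-order U-statistic. A powerful technique for handling U-statistics is their representation as an average of “sums-of-i.i.d.” blocks [28]. That is, for a symmetric kernel $h: \mathcal{Z} \times \mathcal{Z} \to \mathbb{R}$, we can represent the U-statistic $U_n = \frac{1}{n(n-1)} \sum_{i \neq j} h(z_i, z_j)$ as

$$U_n = \frac{1}{n!} \sum_{\pi} \frac{1}{\lfloor n/2 \rfloor} \sum_{i=1}^{\lfloor n/2 \rfloor} h\big(z_{\pi(2i-1)}, z_{\pi(2i)}\big),$$

where π ranges over all permutations of $\{1, \ldots, n\}$.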
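The permutation representation of a U-statistic can be checked numerically for small n. The Python sketch below compares the U-statistic with the average, over all n! permutations, of block averages of ⌊n/2⌋ disjoint pairs; because each block uses every point at most once, it is a sum of i.i.d. terms when the $z_i$ are i.i.d. The kernel $h(a, b) = |a - b|$ and the sample values are illustrative assumptions.

```python
import itertools
import math
import numpy as np

def u_statistic(z, h):
    """Second-order U-statistic: average of h over all ordered pairs i != j."""
    n = len(z)
    return sum(h(z[i], z[j]) for i in range(n) for j in range(n) if i != j) / (n * (n - 1))

def permutation_block_average(z, h):
    """Average over all permutations pi of (1/m) * sum_i h(z[pi(2i-1)], z[pi(2i)]), m = n // 2."""
    n, m = len(z), len(z) // 2
    total, count = 0.0, 0
    for pi in itertools.permutations(range(n)):
        # One block of m disjoint pairs: (pi[0], pi[1]), (pi[2], pi[3]), ...
        total += sum(h(z[pi[2 * i]], z[pi[2 * i + 1]]) for i in range(m)) / m
        count += 1
    assert count == math.factorial(n)
    return total / count

def h(a, b):
    """A symmetric kernel."""
    return abs(a - b)

rng = np.random.default_rng(0)
for n in (5, 6):                     # odd and even n; n! stays small
    z = rng.normal(size=n)
    print(n, math.isclose(u_statistic(z, h), permutation_block_average(z, h)))  # True
```

The identity holds for odd n as well, since under a uniformly random permutation every pair $(\pi(2i-1), \pi(2i))$ is uniformly distributed over ordered pairs of distinct indices.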

3.2. Connection with the PAC–Bayesian Framework

As described above, we consider two probability distributions on the hyperparameter space Φ to account for the stochasticity in stochastic optimization algorithms such as Pairwise SGD and Pairwise SGDA, where the hyperparameter ϕ is a sequence of pairs of indices that follows a discrete distribution. For instance, in Pairwise SGD, at every iteration t we have $\phi_t = (i_t, j_t)$, that is, a pair of independently sampled indices drawn from $\{1, \ldots, n\}$ with replacement (more details in Section 4.1). We define two distributions over Φ, namely the PAC–Bayes prior P, which needs to be specified before seeing the training data, and the PAC–Bayes posterior Q, which is allowed to depend on the training sample. This setting differs from the classic use of PAC–Bayes, which defines the two distributions directly on the space of trainable parameters. Our distributions on Φ indirectly induce distributions on the parameter estimates, without the need to know their parametric form. This setting of PAC–Bayes was first introduced by London [32] in combination with algorithmic stability and further improved in our previous work [31], both restricted to the i.i.d. pointwise setting.

3.3. Connection with the Algorithmic Stability Framework

A more recent framework for the generalization problem is algorithmic stability [35], which measures the sensitivity of a learning algorithm to small changes in the training data. Among the several notions of algorithmic stability, the one we use is uniform stability.

Definition 1

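(Uniform Stability). For $\gamma > 0$, we say an algorithm A is γ-uniformly stable if

$$\sup_{z, z' \in \mathcal{Z}} \big|\ell(A(S); z, z') - \ell(A(S'); z, z')\big| \le \gamma$$

for all training sets S and S′ that differ by at most a single example.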

The algorithmic stability framework is suitable for analyzing certain deterministic learning algorithms, or randomized algorithms with a pre-defined randomization. Here, in contrast, we are concerned with inherently stochastic algorithms where we wish to allow any data-dependent stochasticity, such as the variants of importance sampling and other recent practical methods reviewed in Section 2, e.g., [14,15,18,19,34]. Moreover, in principle our framework and results are applicable even if the sampling distribution is learned from the training data itself.

Sub-exponential Stability. A useful definition of stability that captures the stochastic nature of the algorithms we are interested in is the sub-exponential stability introduced by Zhou et al. [31]. Recall that ϕ is a random variable following a distribution defined on Φ. Therefore, the stability parameter is also a random variable, as a function of ϕ. We want to control the tail behavior of the stability parameter around a value that decays with the sample size n, and we define sub-exponential stability as follows.

Definition 2

(Sub-exponential stability). Fix any prior distribution on . We say that a stochastic algorithm is sub-exponentially -stable (with respect to ) if, given any fixed instance of , it is -uniformly stable and there exist such that for any , the following holds with probability of at least :

4. Main Results

In this section, we give generalization bounds for Pairwise SGD and Pairwise SGDA in pairwise learning. To this end, we first give a general result (Lemma 1) that connects the sub-exponential stability condition (Definition 2) with the generalization gap in pairwise learning. We then derive stability bounds showing that this condition holds for Pairwise SGD and Pairwise SGDA, in both the smooth convex and the non-smooth convex cases. Finally, we plug these stability bounds into Lemma 1 to derive the corresponding generalization bounds. We write $a \lesssim b$ if there exists a universal constant $c > 0$ such that $a \le c\, b$. The proof of Lemma 1 is given in Appendix A.

Lemma 1

(Generalization of randomized pairwise learning). Given distribution , , and M-bounded loss for a sub-exponentially stable algorithm A, , with probability of at least , the following holds uniformly for all absolutely continuous with respect to :

where is the KL divergence between and , .

A strength of Lemma 1 is that we only need to check the sub-exponential stability condition under the prior distribution P; Lemma 1 then automatically translates it into generalization bounds for any posterior distribution Q.

In the forthcoming applications, both Q and P are discrete distributions, so we have $\mathrm{KL}(Q\|P) = \sum_{\phi \in \Phi} Q(\phi) \log \frac{Q(\phi)}{P(\phi)}$. In particular, in the context of the applications to stochastic optimization in Sections 4.1 and 4.2, the prior is most naturally chosen as the discrete uniform distribution. Let P be the uniform distribution on Φ. Then the absolute continuity condition is satisfied, ensuring that $\mathrm{KL}(Q\|P) < \infty$ for all distributions Q over the set Φ. Furthermore, in this setting, we have $\mathrm{KL}(Q\|P) = \log|\Phi| - H(Q)$, where H denotes the Shannon entropy.
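The identity $\mathrm{KL}(Q\|P) = \log|\Phi| - H(Q)$ for a uniform prior is easy to check numerically. The Python sketch below assumes, for illustration only, that each step samples an ordered index pair from $\{1, \ldots, n\}^2$ with replacement, so that Φ consists of T-step sequences of such pairs, and takes Q to be a factorized (product) distribution with a random per-step component. It also shows that the KL of such a product distribution to the uniform prior is T times the per-step KL.

```python
import itertools
import numpy as np

def entropy(q):
    """Shannon entropy (in nats) of a discrete distribution."""
    q = q[q > 0]
    return float(-np.sum(q * np.log(q)))

def kl_to_uniform(q):
    """KL(Q || P) for P uniform on the |Phi| = q.size outcomes."""
    nz = q[q > 0]
    return float(np.sum(nz * np.log(nz * q.size)))

rng = np.random.default_rng(0)
n, T = 4, 2
pairs = list(itertools.product(range(n), repeat=2))   # per-step index pairs (illustrative choice)
q_step = rng.dirichlet(np.ones(len(pairs)))           # per-step sampling distribution

q_seq = q_step                                        # product distribution over T-step sequences
for _ in range(T - 1):
    q_seq = np.outer(q_seq, q_step).ravel()

print(np.isclose(kl_to_uniform(q_seq), np.log(q_seq.size) - entropy(q_seq)))  # True
print(np.isclose(kl_to_uniform(q_seq), T * kl_to_uniform(q_step)))           # True
```

The additivity over steps is why, for a factorized Q, the KL penalty grows linearly with the number of iterations unless each per-step distribution stays close to uniform.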

We introduce some classic assumptions that are frequently employed in the analysis of randomized algorithms. Let ‖·‖ denote the Euclidean norm. Let S and S′ be neighboring datasets (i.e., they differ in only one example, which we denote as the k-th example, $k \in \{1, \ldots, n\}$). For brevity, we write ℓ(w) for ℓ(w; z, z′), by which we mean a property that holds for all $z, z' \in \mathcal{Z}$.

Assumption 1.

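Let $L > 0$. We say ℓ is L-Lipschitz if for any $w, w' \in \mathcal{W}$ and $z, z' \in \mathcal{Z}$, we have $|\ell(w; z, z') - \ell(w'; z, z')| \le L \|w - w'\|$.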

Assumption 2 (Convexity).

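We say ℓ is convex if the following holds for all $w, w' \in \mathcal{W}$:

$$\ell(w) \ge \ell(w') + \langle \nabla \ell(w'), w - w' \rangle,$$

where $\langle \cdot, \cdot \rangle$ represents the inner product.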

Assumption 3.

Let $\alpha > 0$. We say a differentiable function ℓ is α-smooth if for any $w, w' \in \mathcal{W}$, $\|\nabla \ell(w) - \nabla \ell(w')\| \le \alpha \|w - w'\|$, where ∇ℓ represents the gradient of ℓ.

4.1. Stability and Generalization of Pairwise SGD

We now consider Pairwise SGD, which, as we will show, also satisfies the sub-exponential stability condition in both smooth and non-smooth cases.

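We denote by $w_1$ an initial point. At the t-th iteration of Pairwise SGD, a pair of sample indices $(i_t, j_t)$ is selected uniformly at random from the set of index pairs. This forms a sequence of index pairs $\phi = ((i_1, j_1), \ldots, (i_T, j_T))$. For step size $\eta_t > 0$, the model is updated by

$$w_{t+1} = w_t - \eta_t \nabla \ell(w_t; z_{i_t}, z_{j_t}),$$

where, in the non-smooth case, ∇ℓ denotes a subgradient of ℓ.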
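The Pairwise SGD update can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' implementation: the helper names, the default sampler (which draws $i_t \neq j_t$ uniformly, one possible choice of index set), and the noise-free pairwise least-squares example are assumptions. The `sampler` argument is where a non-uniform, data-dependent sampling distribution would be plugged in.

```python
import numpy as np

def uniform_pair_sampler(rng, n):
    """Draw (i, j) uniformly from ordered pairs with i != j (one possible index set)."""
    i = int(rng.integers(n))
    j = (i + int(rng.integers(1, n))) % n
    return i, j

def pairwise_sgd(grad, Z, w1, step_sizes, rng, sampler=uniform_pair_sampler):
    """Run w_{t+1} = w_t - eta_t * grad(w_t, z_{i_t}, z_{j_t}); return w_{T+1} and phi."""
    w = np.array(w1, dtype=float)
    phi = []                                  # the sampled index sequence (the hyperparameter)
    for eta in step_sizes:
        i, j = sampler(rng, len(Z))
        phi.append((i, j))
        w = w - eta * grad(w, Z[i], Z[j])
    return w, phi

def sq_pair_grad(w, z, z_prime):
    """Gradient of 0.5 * (w.(x - x') - (s - s'))^2 for examples z = (x, s)."""
    (x, s), (xp, sp) = z, z_prime
    d = x - xp
    return (w @ d - (s - sp)) * d

rng = np.random.default_rng(0)
w_true = np.array([1.0, -2.0])               # hypothetical ground truth
X = rng.normal(size=(200, 2))
Z = [(x, float(x @ w_true)) for x in X]
w_T, phi = pairwise_sgd(sq_pair_grad, Z, np.zeros(2), [0.02] * 2000, rng)
print(np.round(w_T, 3))                      # approximately [1, -2]
```

Returning `phi` alongside the iterate reflects the view taken here that the index sequence is a hyperparameter drawn from a sampling distribution.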

The following lemma shows that Pairwise SGD with uniform sampling applied to both smooth and non-smooth problems enjoys sub-exponential stability. The proof is given in Appendix B.1.

Lemma 2 (Sub-exponential stability of Pairwise SGD).

Let $\{w_t\}$ and $\{w_t'\}$ be two parameter sequences produced by Pairwise SGD with fixed step sizes and uniform sampling, while being trained on neighboring training samples S and S′. Suppose the loss is Lipschitz and convex (i.e., Assumptions 1 and 2 hold). Then, we have the following:

- (1) At the t-th iteration, we have sub-exponential stability (Definition 2) with

- (2) If in addition the loss is also smooth (Assumption 3 holds), then with step size , at the t-th iteration, we have sub-exponential stability (Definition 2) with
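Because Definition 2 conditions on a fixed instance of ϕ, the stability of Pairwise SGD can be probed empirically by running it on two neighboring samples S and S′ with the same index sequence and tracking $\|w_t - w_t'\|$. The Python sketch below does this for a pairwise logistic loss, which is Lipschitz, convex, and smooth; the data, the replaced index `k`, and the step size are illustrative assumptions, and the probe illustrates the mechanism behind stability arguments of this kind rather than reproducing the bounds of Lemma 2. With a smooth convex loss and a small enough step size, a gradient step on the same pair cannot increase the distance, so the gap can only grow at iterations whose pair involves the replaced example.

```python
import numpy as np

def logistic_pair_grad(w, z, z_prime):
    """Gradient of log(1 + exp(-y * w.(x - x'))), with y = +1 if s > s' and -1 otherwise."""
    (x, s), (xp, sp) = z, z_prime
    d = x - xp
    y = 1.0 if s > sp else -1.0
    return -y * d / (1.0 + np.exp(y * (w @ d)))

def sgd_path(grad, Z, w1, eta, phi):
    """Pairwise SGD along a fixed index sequence phi; returns all iterates w_1, ..., w_{T+1}."""
    w = np.array(w1, dtype=float)
    path = [w.copy()]
    for i, j in phi:
        w = w - eta * grad(w, Z[i], Z[j])
        path.append(w.copy())
    return np.array(path)

rng = np.random.default_rng(0)
n, T, eta = 100, 3000, 0.05
w_true = np.array([1.0, -2.0])                       # hypothetical scoring direction
X = rng.normal(size=(n, 2))
S = [(x, float(x @ w_true)) for x in X]

k = 7                                                # hypothetical index of the replaced example
x_new = rng.normal(size=2)
S_prime = list(S)
S_prime[k] = (x_new, float(x_new @ w_true))          # neighboring sample S'

first = rng.integers(n, size=T)                      # one fixed phi shared by both runs
phi = [(int(i), int((i + o) % n)) for i, o in zip(first, rng.integers(1, n, size=T))]

gap = np.linalg.norm(sgd_path(logistic_pair_grad, S, np.zeros(2), eta, phi)
                     - sgd_path(logistic_pair_grad, S_prime, np.zeros(2), eta, phi), axis=1)
touches_k = np.array([k in pair for pair in phi])    # steps whose pair uses example k
increments = np.diff(gap)
print(increments[~touches_k].max() <= 1e-12, touches_k.sum(), gap[-1])
```

Under uniform sampling, a given example is involved in roughly a 2/n fraction of the steps, which is the source of the 1/n factor that stability bounds of this type typically carry.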

Using Lemmas 1 and 2, we obtain the following generalization bound for Pairwise SGD with general sampling.

Theorem 1 (Generalization bounds for Pairwise SGD).

Assume ℓ is M-bounded, Lipschitz, and convex (cf. Assumptions 1 and 2). For any , Pairwise SGD with fixed step sizes satisfies the following generalization guarantees with probability of at least over S, , for all posterior sampling distributions on :

- (1) After T iterations, we have

- (2) If in addition the loss is also smooth (Assumption 3 holds), then with step size , we have

Remark 1.

Suppose , as has also been tacitly assumed in previous work [32] when quantifying the generalization convergence rate. Taking the choice of parameters suggested by [51], if and in the non-smooth case (part 1), then the above theorem implies bounds of the order . In the smooth case (part 2), based on an analysis of the trade-off between optimization and generalization, Lei et al. [33] suggested setting and to obtain Pairwise SGD iterates with good generalization performance. With these choices, our bounds in Theorem 1 are of order , which is not improvable in general.

Remark 2

(Implication of the assumption). Let denote the support of , where in pairwise learning.

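Since $P(\phi) = 1/|\Phi|$ for all ϕ, the KL divergence is

$$\mathrm{KL}(Q\|P) = \sum_{\phi:\, Q(\phi) > 0} Q(\phi) \log\big(|\Phi|\, Q(\phi)\big) = \log|\Phi| - H(Q).$$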

To ensure this is of order , we need ; hence,

To give more intuition, consider Q on a restricted support. This is very much a worst-case scenario, as it would imply completely discarding (rather than down-weighting) some of the training points. For such Q, the entropy is at most $\log|\mathrm{supp}(Q)|$, with equality when Q is uniform over its support, so $\mathrm{KL}(Q\|P) \ge \log\big(|\Phi| / |\mathrm{supp}(Q)|\big)$. In this case, having requires

To sum up, Q must satisfy the entropy lower bound (6), and to achieve that entropy on a restricted support, it must have a large enough support, i.e., at least a fraction of the entire Φ.
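The arithmetic behind Remark 2 can be made concrete. The Python sketch below computes the smallest support fraction $|\mathrm{supp}(Q)|/|\Phi| = e^{-b}$ compatible with a KL budget b, and the KL of a factorized Q that, at each of T steps, samples uniformly from only m of the $N = n^2$ index pairs. The values of n, T, m, and the budget are hypothetical, and the index set of $n^2$ pairs per step is an illustrative assumption; everything is computed in log-space because |Φ| is astronomically large.

```python
import math

def kl_uniform_on_subset(log_phi_size, log_support_size):
    """KL(Q || P) for P uniform on Phi and Q uniform on a subset of Phi."""
    return log_phi_size - log_support_size

def min_support_fraction(kl_budget):
    """Smallest |supp(Q)| / |Phi| for which KL(Q || P) <= kl_budget is possible."""
    return math.exp(-kl_budget)

n, T, m = 100, 1000, 9_000            # hypothetical sizes
N = n * n                             # index pairs available at each step
kl = kl_uniform_on_subset(T * math.log(N), T * math.log(m))   # = T * log(N / m)
print(round(kl, 2))                   # 105.36: dropping 10% of the pairs at every step
print(min_support_fraction(math.log(2)))   # ~0.5: a budget of log 2 nats allows discarding at most half of Phi
```

The first number shows how quickly the penalty accumulates: discarding only a tenth of the pairs at each step already costs T·log(10/9) nats.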

In Pairwise SGD with non-uniform data-dependent sampling, this result tells us that, to retain generalization rates comparable to the uniform baseline, Q cannot discard a large subset of the index sequences. This limits how aggressively one can compress or “distill” a dataset (as in core-set selection or dataset distillation) without paying a KL penalty that slows down the rate, at least as long as the prior is uniform.

Non-uniform sampling alone is insufficient for robust learning. While it may mitigate the effect of a small fraction of bad examples (e.g., out-of-distribution or mislabeled training examples), achieving robustness also requires modeling choices such as robust loss functions. In the next section we approach this via Pairwise SGDA, the type of optimization required in adversarially robust training.

4.2. Stability and Generalization of Pairwise SGDA

In this subsection, we discuss Pairwise SGDA for solving minimax problems in the convex-concave case. We slightly abuse notation and reuse it for the minimax case. We obtain a model by applying a learning algorithm A to the training set S and measure its performance with respect to a loss function . For any , we consider the risk defined as

We consider the following empirical risk as the approximation:

We consider Pairwise SGDA with a general sampling scheme, where the random index pairs follow a general distribution.

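We denote by $w_1$ and $v_1$ the initial points. Let $\nabla_w \ell$ and $\nabla_v \ell$ be the gradients with respect to w and v, respectively. Let S be a training dataset with n samples, and let the index pair $(i_t, j_t)$ be drawn uniformly at random at each iteration. At the t-th iteration, with step size sequence $\{\eta_t\}$, Pairwise SGDA updates the model as follows:

$$w_{t+1} = w_t - \eta_t \nabla_w \ell(w_t, v_t; z_{i_t}, z_{j_t}), \qquad v_{t+1} = v_t + \eta_t \nabla_v \ell(w_t, v_t; z_{i_t}, z_{j_t}).$$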
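A minimal Python sketch of the Pairwise SGDA update follows. It is an illustration under stated assumptions, not the authors' implementation: there is no projection step, the step size is constant, the index pair is drawn uniformly with $i_t \neq j_t$, and the toy loss $\ell(w, v; z, z') = \tfrac12\big(w^\top(x - x') - (s - s')\big)^2 + v^\top w - \tfrac{\mu}{2}\|v\|^2$ is a hypothetical choice that is convex in w and concave in v. Maximizing over v gives pairwise least squares plus the penalty $\|w\|^2/(2\mu)$, which the sketch uses to check progress; averaged iterates are returned, as is common for convex-concave problems.

```python
import numpy as np

def pairwise_sgda(grad_w, grad_v, Z, w1, v1, eta, T, rng):
    """Simultaneous descent on w and ascent on v; returns the averaged iterates."""
    n = len(Z)
    w, v = np.array(w1, dtype=float), np.array(v1, dtype=float)
    w_sum, v_sum = np.zeros_like(w), np.zeros_like(v)
    for _ in range(T):
        i = int(rng.integers(n))
        j = (i + int(rng.integers(1, n))) % n        # uniform over j != i
        gw = grad_w(w, v, Z[i], Z[j])
        gv = grad_v(w, v, Z[i], Z[j])
        w, v = w - eta * gw, v + eta * gv
        w_sum += w
        v_sum += v
    return w_sum / T, v_sum / T

mu = 10.0                                            # hypothetical regularization parameter

def grad_w(w, v, z, zp):
    d = z[0] - zp[0]
    return (w @ d - (z[1] - zp[1])) * d + v

def grad_v(w, v, z, zp):
    return w - mu * v

rng = np.random.default_rng(0)
w_true = np.array([1.0, -2.0])                       # hypothetical ground truth
X = rng.normal(size=(100, 2))
Z = [(x, float(x @ w_true)) for x in X]
w_avg, v_avg = pairwise_sgda(grad_w, grad_v, Z, np.zeros(2), np.zeros(2), eta=0.01, T=5000, rng=rng)

def primal(w):
    """max over v of the empirical objective: pairwise least squares + ||w||^2 / (2 mu)."""
    s = X @ w_true
    r = (X[:, None, :] - X[None, :, :]) @ w - (s[:, None] - s[None, :])
    off_diag = ~np.eye(len(X), dtype=bool)
    return 0.5 * np.mean(r[off_diag] ** 2) + (w @ w) / (2 * mu)

print(primal(np.zeros(2)), primal(w_avg))            # the second value should be much smaller
```

At the saddle point the ascent variable satisfies $v = w/\mu$, which the averaged iterates approximately reproduce.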

Before giving the results for Pairwise SGDA, we restate the assumptions we need, adapted to the new setting with two distinct parameter vectors and [38,52].

Assumption 4.

Let . We say a differentiable function ℓ is L-Lipschitz with respect to and if the following holds: For any , , , we have

Assumption 5.

Let . We say a differentiable function ℓ is α-smooth if the following inequality holds for any , , , and :

Assumption 6 (Convexity-Concavity).

We say ℓ is concave if −ℓ is convex. We say ℓ is convex-concave if $\ell(\cdot, v; z, z')$ is convex for every v and $\ell(w, \cdot\,; z, z')$ is concave for every w.

Now, we apply Lemma 1 to develop bounds for Pairwise SGDA in both smooth and non-smooth cases. In the following lemma, proved in Appendix B.2, we establish the sub-exponential stability of Pairwise SGDA.

Lemma 3 (Sub-exponential stability of Pairwise SGDA).

Let be two parameter sequences produced by Pairwise SGDA with fixed step sizes and uniform sampling while being trained on neighboring samples S and . Suppose the loss is Lipschitz and convex-concave (cf. Assumptions 4 and 6). Then, we have the following:

- (1) At the t-th iteration, we have sub-exponential stability (Definition 2) with

- (2) If in addition the loss is also smooth (cf. Assumption 5), then at the t-th iteration, sub-exponential stability (Definition 2) holds with

We combine the above lemma with Lemma 1 to obtain bounds for Pairwise SGDA with a general sampling distribution.

Theorem 2 (Generalization bounds for Pairwise SGDA).

Assume ℓ is M-bounded, Lipschitz, and convex-concave (cf. Assumptions 4 and 6). For any , Pairwise SGDA with fixed step sizes satisfies the following generalization guarantees with probability at least over draws of , for all posterior sampling distributions on :

- (1) After T iterations, we have

- (2) If in addition the loss is also smooth (cf. Assumption 5), we have

Let us assume again that . Since Theorem 2 deals with min-max optimization, which applies to minimizing an adversarially robust loss function, this assumption still leaves some flexibility to account for a few outliers while retaining the following rates. For part 1, if we choose and , this gives a rate of the order . For part 2, if we choose and , this gives bounds of the order .

5. Algorithmic Implications and Illustrative Experiments

Our theoretical guarantees in the previous section are given up to constant factors. This is common in theoretical analyses, as such results still convey useful information about how the bounds scale with quantities of interest, such as the sample size n. To further verify that our bounds are informative, in this section we show how one can convert them into learning algorithms by minimizing the terms on the r.h.s. of our bounds. We then illustrate the resulting algorithms in numerical experiments and give examples of extracting meaningful information from them. The goal of this section is to empirically corroborate our theoretical guarantees and demonstrate their potential use for algorithm design.

In line with our theory, we use uniform sampling as the PAC–Bayes prior, and we learn the posterior sampling distribution along with the model’s parameters by alternating minimization of our bounds. We choose Q of a factorized form, which corresponds to sampling from the training indices with replacement T times during the training trajectory. Minimizing the terms on the r.h.s. of the bounds in Theorem 1 yields an adaptive Pairwise SGD algorithm that we refer to as Pairwise SGD-Q, and likewise minimizing the r.h.s. of the bounds in Theorem 2 yields an adaptive Pairwise SGDA algorithm, Pairwise SGDA-Q. The pseudo-code of both algorithms is given in Algorithm 1 (with the options Pairwise SGD-Q or Pairwise SGDA-Q).

Algorithm 1. Pairwise SGD-Q/Pairwise SGDA-Q

Before showing our algorithms at work, we note that two built-in guardrails prevent sampling bias by design. The r.h.s. of all bounds that we minimize contains two key terms, each acting as a guardrail. (i) Since P is uniform sampling, $\mathrm{KL}(Q\|P) = \log|\Phi| - H(Q)$, so minimizing the KL term is equivalent to maximizing the entropy of Q, the sampling distribution that we learn; hence, Q will only deviate from uniformity for a good reason (e.g., when encountering misleading or mislabeled training pairs). (ii) Minimizing the expected empirical risk under Q (rather than the empirical risk itself) means that the correction term that usually appears in existing non-uniform sampling-based SGD-type algorithms simply cancels out, which ensures unbiased gradients by construction, without an external correction. Overall, this illustrates the advantage of learning algorithms obtained by minimizing generalization bounds: together, the two terms form an upper bound on the quantity we care about, i.e., the true risk.
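Guardrail (i) can be illustrated with a simplified q-step. Suppose, as a hypothetical simplification, that the bound is replaced by the trade-off $\sum_k q_k \ell_k + \lambda\,\mathrm{KL}(q\|u)$ between the Q-weighted empirical loss over N training pairs and the KL to the uniform distribution u, with an assumed weight λ > 0 (the actual bounds may combine these terms differently, e.g., inside a square root). For fixed per-pair losses, the minimizer over the probability simplex is the Gibbs distribution $q_k \propto e^{-\ell_k/\lambda}$, which down-weights high-loss pairs but never sets any weight to zero. The Python sketch below checks this numerically; it is not a transcription of Algorithm 1.

```python
import numpy as np

def kl_to_uniform(q):
    nz = q[q > 0]
    return float(np.sum(nz * np.log(nz * q.size)))

def objective(q, losses, lam):
    """Q-weighted empirical loss plus lam * KL(q || uniform); lam is a hypothetical weight."""
    return float(q @ losses) + lam * kl_to_uniform(q)

def gibbs_q(losses, lam):
    """Minimizer of `objective` over the simplex: q_k proportional to exp(-losses_k / lam)."""
    logits = -(losses - losses.min()) / lam         # shift for numerical stability
    q = np.exp(logits)
    return q / q.sum()

rng = np.random.default_rng(0)
N, lam = 20, 0.5
losses = rng.exponential(size=N)                    # stand-in per-pair losses
q_star = gibbs_q(losses, lam)
best = objective(q_star, losses, lam)

# Closed-form optimal value: -lam * log(mean_k exp(-losses_k / lam))
print(np.isclose(best, -lam * np.log(np.mean(np.exp(-losses / lam)))))   # True

# Uniform sampling and random alternatives are never better
others = [np.full(N, 1.0 / N)] + [rng.dirichlet(np.ones(N)) for _ in range(200)]
print(all(objective(q, losses, lam) >= best - 1e-12 for q in others))    # True
```

Larger values of λ keep q closer to uniform; smaller values let it concentrate on low-loss pairs.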

The forthcoming numerical experiments are meant to showcase how our adaptive sampling methods enhance robustness by learning to down-weight misleading or mislabeled training examples, thereby avoiding being misled by them. As a byproduct, these weights provide explanatory information about the data pairs.

Numerical Results in Pairwise Preference Learning

We illustrate the working of our bound-based pairwise learning algorithms on a toy problem involving pairwise ranking on synthetic 2D data. Pairwise ranking is the task of inferring relative preferences by comparing items in pairs. It has broad applicability, including information retrieval, recommendation systems, preference modeling [53], and positive-unlabeled learning [12]. In this section, we present experiments using a simple linear preference model to demonstrate how our theoretical findings manifest in practice. The aim of this section is not to compete with state-of-the-art empirical methods, but rather to provide insight into the behavior of the algorithms and the use of our bounds under controlled conditions.

We generate i.i.d. points from a 2D standard Gaussian and assign “preference scores” to each using a hidden linear function , where is fixed. We then sample 1000 pairs from these points and assign to each sampled pair a binary label indicating which of its two items is preferred.
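A minimal sketch of this data-generating process follows. The number of points `n`, the hidden weight vector `w_star`, and the convention of sampling pairs uniformly at random with distinct items are assumptions made for illustration (their values are not stated here); only the 2D standard Gaussian, the linear scores, and the 1000 labeled pairs follow the text.

```python
import numpy as np

def make_pairwise_data(n=200, num_pairs=1000, w_star=(1.0, 0.5), seed=0):
    """Sample n 2D Gaussian points, score them linearly, and label random pairs.

    n, w_star and the pair-sampling scheme are illustrative assumptions.
    Returns points X, scores, pair indices (i, j) and labels y, where
    y[k] = 1 if item i[k] is preferred to item j[k] and 0 otherwise.
    """
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, 2))           # n i.i.d. points from N(0, I_2)
    scores = X @ np.asarray(w_star)           # hidden linear preference scores
    i = rng.integers(0, n, size=num_pairs)    # first item of each pair
    j = rng.integers(0, n - 1, size=num_pairs)
    j[j >= i] += 1                            # second item, guaranteed j != i
    y = (scores[i] > scores[j]).astype(int)   # binary preference label
    return X, scores, i, j, y

X, scores, i, j, y = make_pairwise_data()
```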

We use the resulting labeled pairs, in the form of difference vectors x_i − x_j, as inputs to our Pairwise SGD-Q algorithm, which trains a linear model with the cross-entropy loss for 15 epochs, each consisting of as many iterations as there are pairs (i.e., 1000 iterations per epoch), with step size . We aim to learn a scoring function such that, for any two items, the model can say which one ranks higher. The model learns a weight vector w so that the score of an item x is wᵀx, and we want wᵀx_i > wᵀx_j if item i is preferred to item j. That is, the model projects all points onto the direction w and ranks them by how far they fall along that line.
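The training loop can be sketched as follows. This is not the authors' implementation: the update of the sampling distribution is an assumption. We take the Q-step to be the minimizer of the expected loss plus a KL penalty to the uniform distribution, KL(Q‖P)/β, whose closed form is the Gibbs distribution q_k ∝ exp(−β·loss_k); β (`beta`), the step size `eta`, and the data-generating values are hypothetical. Pairs are then drawn from q and used for plain SGD steps on the logistic (cross-entropy) loss of the difference vectors, with no importance correction.

```python
import numpy as np

def logistic_loss(margins):
    """Cross-entropy loss log(1 + exp(-m)) written in terms of the signed margin m."""
    return np.logaddexp(0.0, -margins)

def gibbs_sampling_distribution(losses, beta):
    """q_k proportional to exp(-beta * loss_k): higher-loss pairs are sampled less."""
    logits = -beta * losses
    q = np.exp(logits - logits.max())   # subtract max for numerical stability
    return q / q.sum()

def train_pairwise_sgd_q(D, s, epochs=15, eta=0.1, beta=1.0, seed=0):
    """D: (m, d) difference vectors x_i - x_j; s: (m,) labels in {-1, +1}.
    Returns the weights and the sampling distribution used in the last epoch."""
    rng = np.random.default_rng(seed)
    m, d = D.shape
    w = np.zeros(d)
    q = np.full(m, 1.0 / m)
    for _ in range(epochs):
        # Hypothetical Q-step (Gibbs form), recomputed once per epoch.
        q = gibbs_sampling_distribution(logistic_loss(s * (D @ w)), beta)
        # One epoch = m SGD steps on pairs drawn from q.
        for k in rng.choice(m, size=m, p=q):
            margin = s[k] * (D[k] @ w)
            # Gradient of log(1 + exp(-margin)) is -s_k * D_k * sigmoid(-margin).
            sigmoid_neg = np.exp(-np.logaddexp(0.0, margin))
            w = w + eta * sigmoid_neg * s[k] * D[k]
    return w, q

# Usage on synthetic data (200 items, hypothetical w_star).
rng = np.random.default_rng(1)
w_star = np.array([1.0, 0.5])
X = rng.standard_normal((200, 2))
i = rng.integers(0, 200, size=1000)
j = rng.integers(0, 199, size=1000)
j[j >= i] += 1
D = X[i] - X[j]
s = np.where(X[i] @ w_star > X[j] @ w_star, 1.0, -1.0)

w, q = train_pairwise_sgd_q(D, s)
train_accuracy = np.mean(np.sign(D @ w) == s)
cosine = w @ w_star / (np.linalg.norm(w) * np.linalg.norm(w_star))
```

Recomputing q once per epoch, rather than after every step, keeps the sketch cheap and mirrors the epoch-wise structure described in the text.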

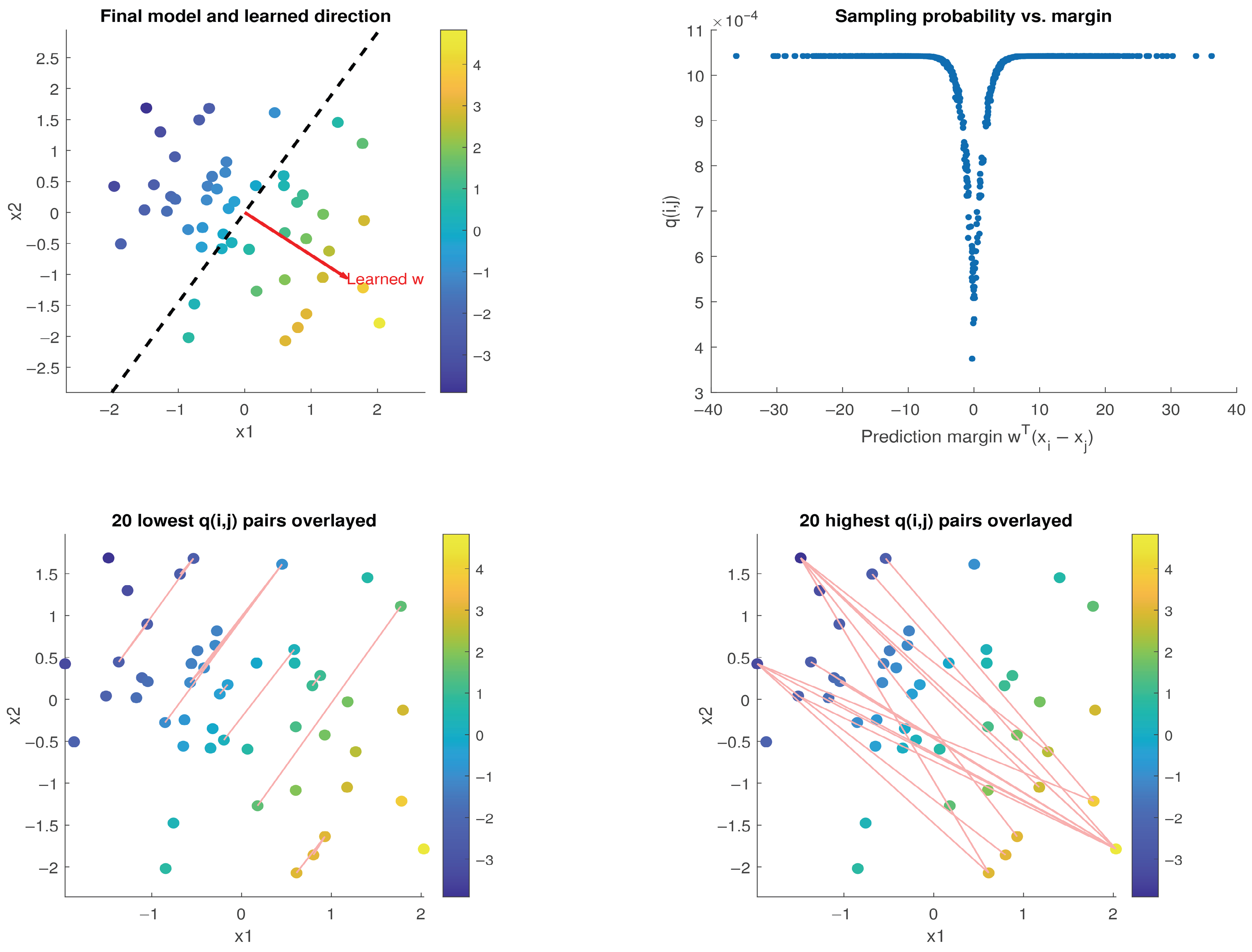

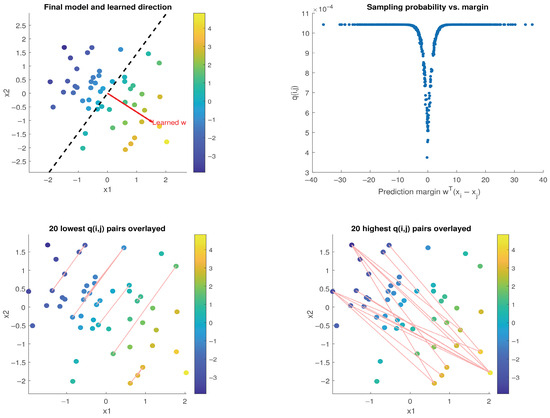

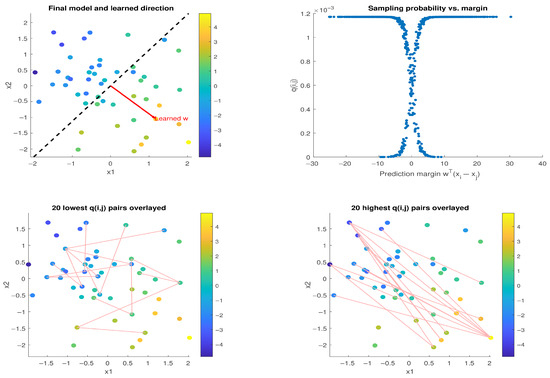

The results are plotted in Figure 1. The top-left panel shows the 2D data colored by their preference scores. A red arrow shows the ranking direction learned by our Pairwise SGD-Q, i.e., the direction the trained model uses to order points. Its associated “decision boundary” is shown by the dashed line. In this context, the decision boundary is the level set of the learned score that forms the dividing line (more generally, hyperplane) orthogonal to the learned ranking direction. It shows how the learned score splits the space into higher- and lower-scoring regions.

Figure 1.

Visualization of Pairwise SGD-Q on a synthetic pairwise ranking task with 2D Gaussian data. (Top-Left) Data points colored by true preference scores; the red arrow indicates the learned ranking direction, and the dashed line shows the decision boundary . (Top-Right) Pairwise margins vs. sampling probabilities ; high-confidence pairs (larger margins) are sampled more frequently. (Bottom-Left) The 20 least informative (lowest q) pairs—these are distant pairs having similar preference scores. (Bottom-Right) The 20 most informative (highest q) pairs.

The top-right figure is a scatter plot showing the relationship between the learned pairwise margins and the learned sampling probabilities (q-scores for each pair). Ambiguous pairs with small margins, i.e., those the model is less confident about, tend to have higher losses and thus get down-weighted by a lower sampling probability . Hence, more confident (larger-margin) pairs are sampled more often.

The bottom-left plot overlays an edge for each of the 20 lowest q-scored pairs. These are pairs whose items have nearly identical preference scores but different feature coordinates; indeed, they are the least helpful pairs for learning the direction in which preference changes. The 20 highest q-scored pairs are shown in the bottom-right plot; these are the most informative about the direction of change.
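The selection of the pairs displayed in the bottom panels can be sketched as below. It reuses a hypothetical Gibbs form for q (an assumption; the exact Q-update is defined elsewhere in the paper), under which q is a non-decreasing function of the signed margin. With the true direction `w_star` (hypothetical) standing in for a well-trained model, the 20 lowest-q pairs are then exactly the 20 pairs with the smallest score differences.

```python
import numpy as np

def lowest_and_highest_q_pairs(D, s, w, beta=1.0, k=20):
    """Return signed margins, Gibbs sampling probabilities, and the indices of the
    k lowest-q and k highest-q pairs (the Gibbs form of q is an assumption)."""
    margins = s * (D @ w)                          # signed pairwise margins
    logits = -beta * np.logaddexp(0.0, -margins)   # -beta * logistic loss
    q = np.exp(logits - logits.max())
    q /= q.sum()
    order = np.argsort(q)                          # ascending sampling probability
    return margins, q, order[:k], order[-k:]

rng = np.random.default_rng(2)
w_star = np.array([1.0, 0.5])
X = rng.standard_normal((200, 2))
i = rng.integers(0, 200, size=1000)
j = rng.integers(0, 199, size=1000)
j[j >= i] += 1
D = X[i] - X[j]
s = np.where(D @ w_star > 0, 1.0, -1.0)

margins, q, low, high = lowest_and_highest_q_pairs(D, s, w_star)
score_gap = np.abs(D @ w_star)   # |preference score difference| of each pair
```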

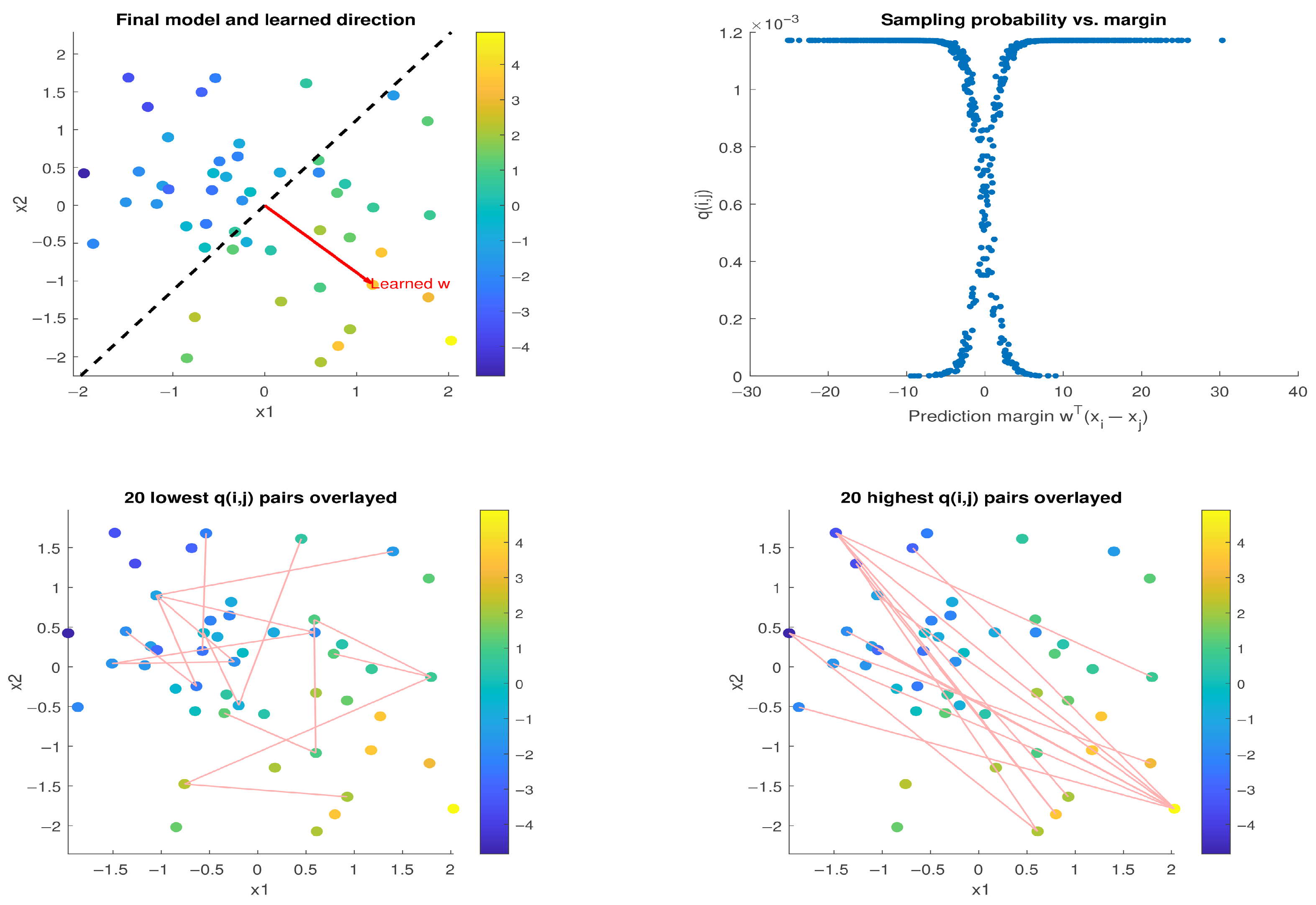

We repeated the experiment using a noisy preference model, where the observed scores are given by , with for . Figure 2 shows the results of training a model of the same form as before with our Pairwise SGD-Q. The top-left plot depicts the noisy data overlaid with the learned direction . The top-right plot shows the pairwise margins against the corresponding sampling probabilities . While q is still lowest for pairs with small margins, the noise now causes mislabeled or ambiguous pairs to receive even lower q values. This is evident in the bottom-left plot: the lowest q-scored pairs have similar preference scores but differing features, and, due to noise, they are no longer orthogonal to the learned direction. The bottom-right plot shows the highest q-scored pairs, which continue to reflect the most informative preference differences and are, on average, aligned with the learned direction.
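The noisy variant can be sketched by adding independent Gaussian noise to each item's score before labeling pairs. The noise level `sigma`, like `n` and `w_star`, is a hypothetical value (the one used in the experiments is not reproduced here). The sketch also measures how many labels disagree with the clean model; such flipped pairs concentrate among pairs whose clean scores are close, which is consistent with the down-weighting of ambiguous pairs described above.

```python
import numpy as np

def noisy_pairwise_labels(n=200, num_pairs=1000, w_star=(1.0, 0.5), sigma=0.5, seed=0):
    """Label pairs using noisy scores w_star^T x + eps, eps ~ N(0, sigma^2) per item."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, 2))
    clean = X @ np.asarray(w_star)                     # noise-free preference scores
    noisy = clean + sigma * rng.standard_normal(n)     # observed (noisy) scores
    i = rng.integers(0, n, size=num_pairs)
    j = rng.integers(0, n - 1, size=num_pairs)
    j[j >= i] += 1                                     # distinct items in each pair
    y_noisy = (noisy[i] > noisy[j]).astype(int)        # labels used for training
    y_clean = (clean[i] > clean[j]).astype(int)        # labels under the clean model
    clean_gap = np.abs(clean[i] - clean[j])
    return y_noisy, y_clean, clean_gap

y_noisy, y_clean, clean_gap = noisy_pairwise_labels()
flipped = y_noisy != y_clean
flip_rate = flipped.mean()
```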

Figure 2.

Same setup as Figure 1, but with additive Gaussian noise in the generating preference model. The top-right plot shows that the sampling probabilities now reflect both the size of the pairwise margin and the sensitivity to noise, assigning lower weights to mislabeled or ambiguous pairs. The bottom-left plot shows that, as before, Pairwise SGD-Q down-weights distant pairs with overly similar preference scores, although these pairs are no longer perpendicular to the ranking direction due to noise. The bottom-right plot shows that Pairwise SGD-Q prioritizes the pairs most aligned with the ranking direction.

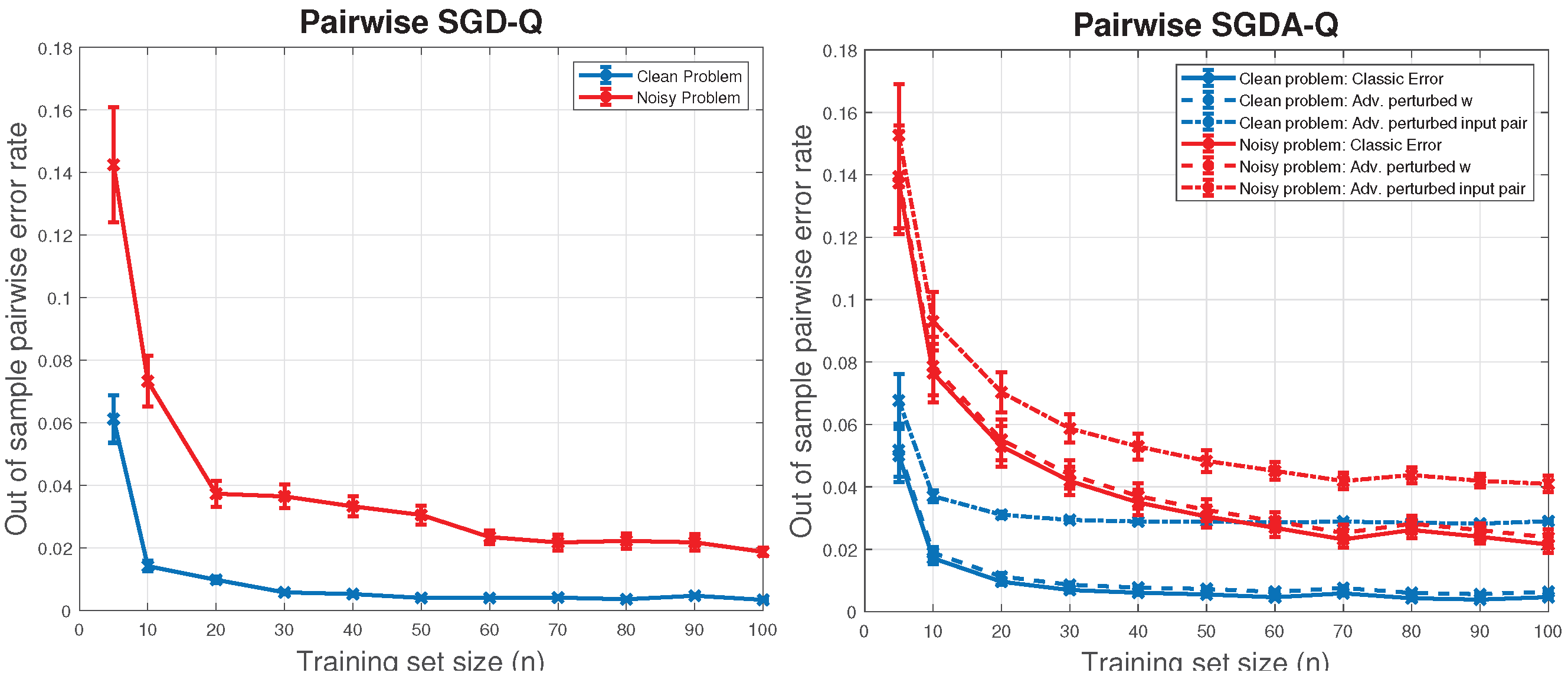

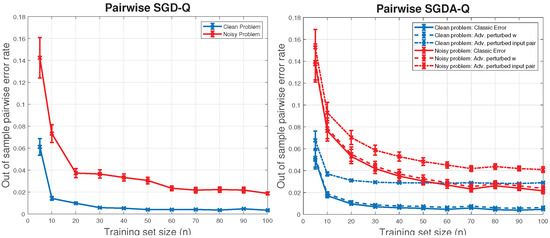

Next, we plot learning curves to see how the generalization performance of the pairwise ranking model trained with our Pairwise SGD-Q and Pairwise SGDA-Q algorithms varies with the number of i.i.d. items, in both clean and noisy settings, while keeping the number of training pairs constant. The Pairwise SGDA-Q experiments are an instance of adversarial training, which can model, for example, malicious users, bots, or strategic agents in applications such as recommendation systems, crowd-sourced ranking, sports, or election ranking.

We vary n and repeat all experiments on both the clean and the noisy preference-score generation models. This gives 44 experimental settings in total, and we perform 50 independent trials for each. The data generation process is the same as before. While the sample size n varies, the number of pairs sampled from these n points for training is fixed at 1000, and these pairs are labeled in the same way as before.

Training with Pairwise SGD-Q is performed as previously described, except that we now run it for 30 epochs. When training with Pairwise SGDA-Q, at each training epoch the algorithm first computes the pairwise losses after adversarial maximization over the weight vector, constrained to an -ball of radius around the current model , using 6 gradient ascent steps with step size . The resulting adversarially induced losses are used to compute the sampling distribution , from which pairs are drawn to iteratively update , for 30 epochs.
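The inner maximization can be sketched as projected gradient ascent. The norm of the ball is not recoverable from the text, so the sketch assumes an ℓ2 ball; the radius `radius`, the step size `eta`, and the choice to ascend the mean logistic loss over all pairs (rather than each pair separately) are likewise assumptions. Because the logistic loss is convex in the weights and the ball is convex, no projected ascent step can decrease the loss, which the check below confirms.

```python
import numpy as np

def mean_logistic_loss(w, D, s):
    """Mean of log(1 + exp(-s_k * w^T D_k)) over all pairs."""
    return float(np.mean(np.logaddexp(0.0, -s * (D @ w))))

def adversarial_weights(w, D, s, radius=0.5, steps=6, eta=0.1):
    """Approximately maximize the mean logistic loss over {w' : ||w' - w||_2 <= radius}
    by projected gradient ascent (l2 ball, radius and eta are assumptions)."""
    w_adv = w.copy()
    for _ in range(steps):
        margins = s * (D @ w_adv)
        # d/dw' of log(1 + exp(-m)) is -s * D * sigmoid(-m)
        sigmoid_neg = np.exp(-np.logaddexp(0.0, margins))
        grad = (-(s * sigmoid_neg)[:, None] * D).mean(axis=0)
        w_adv = w_adv + eta * grad                  # ascent step
        delta = w_adv - w
        norm = np.linalg.norm(delta)
        if norm > radius:                           # project back onto the l2 ball
            w_adv = w + delta * (radius / norm)
    return w_adv

rng = np.random.default_rng(3)
w = np.array([1.0, 0.5])          # stands in for the current model
D = rng.standard_normal((1000, 2))
s = np.where(D @ w > 0, 1.0, -1.0)
w_adv = adversarial_weights(w, D, s)
# Per-pair adversarially induced losses, which would then feed the sampling distribution.
adv_losses = np.logaddexp(0.0, -s * (D @ w_adv))
```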

For both algorithms, evaluation uses an independent test set of 500 unseen items drawn from the clean preference scoring model. For Pairwise SGD-Q, we compute the out-of-sample pairwise error. For Pairwise SGDA-Q, we compute three out-of-sample error metrics: (1) the standard pairwise error, (2) the error under adversarial perturbation of the model’s weights , and (3) the error under adversarial perturbation of the test pairwise inputs , all within the same radius and using the same Pairwise SGDA-Q ascent procedure.
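The standard out-of-sample pairwise error can be computed over all pairs of test items as in the sketch below. Evaluating on all pairs of the 500 test items, and the closed-form treatment of input perturbations, are assumptions of this sketch: for a linear scorer, the worst case over ℓ2 perturbations of norm at most r of a pair's difference vector lowers its signed margin by exactly r‖w‖₂, so the sketch uses that formula in place of the ascent procedure used in the experiments. The model `w_hat` is a hypothetical stand-in for a trained model.

```python
import numpy as np

def pairwise_error(w, X_test, scores_test, radius=0.0):
    """Fraction of test pairs (a < b) whose signed margin under the score X @ w is
    non-positive, after an l2 input perturbation of norm <= radius (closed form)."""
    a, b = np.triu_indices(len(X_test), k=1)         # all unordered test pairs
    pred = X_test @ w
    signed_margin = np.sign(scores_test[a] - scores_test[b]) * (pred[a] - pred[b])
    return float(np.mean(signed_margin - radius * np.linalg.norm(w) <= 0))

rng = np.random.default_rng(4)
w_star = np.array([1.0, 0.5])
X_test = rng.standard_normal((500, 2))     # 500 unseen items, as in the text
scores_test = X_test @ w_star              # clean preference scores
w_hat = np.array([1.0, 0.7])               # a hypothetical learned model
clean_err = pairwise_error(w_hat, X_test, scores_test)
robust_err = pairwise_error(w_hat, X_test, scores_test, radius=0.1)
```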

Figure 3 reports the resulting learning curves for both algorithms: the curves show averages and the error bars show standard errors across 50 independent runs. As expected, both natural noise and adversarial perturbations make the problem harder. However, all out-of-sample errors decrease as the sample size grows.
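The summary statistics behind each point of a learning curve can be computed as follows. Using the sample standard deviation with Bessel's correction (`ddof=1`) is an assumption; the text states only that averages and standard errors over 50 independent trials are reported.

```python
import numpy as np

def mean_and_standard_error(trial_errors):
    """Mean and standard error of the mean across independent trials."""
    e = np.asarray(trial_errors, dtype=float)
    return float(e.mean()), float(e.std(ddof=1) / np.sqrt(e.size))

mean, se = mean_and_standard_error([0.10, 0.20, 0.30])
```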

Figure 3.

Out-of-sample errors for Pairwise SGD-Q and Pairwise SGDA-Q as a function of the number of i.i.d. items n, while the number of pairs trained on remains constant at 1000. The curves represent averages, and the error bars are standard errors from 50 independent trials.

6. Conclusions

We obtained stability-based PAC–Bayes bounds for randomized pairwise learning under general sampling distributions. These bounds can be used to analyze the generalization of stochastic optimization algorithms, as we demonstrated for Pairwise SGD and Pairwise SGDA. Our generalization analysis of these methods suggests new stochastic optimizers in which non-uniform, data-dependent sampling distributions are updated during training. We believe this is a theoretically grounded step that connects two important ideas and may support future work on more complex or application-specific methods. The practical use of this idea is explored in a companion paper [22].

Limitations: Our analysis of Pairwise SGD and Pairwise SGDA is built on a set of classic assumptions regarding the loss function, with convexity being perhaps the most restrictive among them. Nonetheless, insights gained from the convex setting remain a valuable stepping stone to tackling more general, non-convex problems in future work. Indeed, a worthwhile avenue will be to obtain bounds and associated algorithms under relaxed assumptions. Furthermore, here we demonstrated numerical results with our bound-based algorithm under its intended conditions. It will also be interesting to explore experimentally to what extent such algorithms remain functional and potentially useful outside the theoretical conditions in which they were obtained.

Author Contributions

Conceptualization, formal analysis, writing—original draft, S.Z.; conceptualization, supervision, writing—review and editing, Y.L.; conceptualization, validation, supervision, writing—review and editing, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

The work of Sijia Zhou is funded by CSC and UoB scholarship. The work of Yunwen Lei is partially supported by the Research Grants Council of Hong Kong [Project No. 22303723]. Ata Kabán acknowledges past funding from EPSRC Fellowship grant EP/P004245/1.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Proof of Lemma 1

We follow the ideas in [31,54] to prove Lemma 1. We first introduce some useful lemmas. The following lemma characterizes sub-Gaussian and sub-exponential random variables. For , let denote the moment-generating function (MGF) of Z. We denote by the indicator function.

Lemma A1

(Vershynin [55]). Let X be a random variable with . We have the following equivalences for X:

- , for all .

- There exists such that, for all ,

We have the following equivalences for X:

- , for all .

- For all λ such that ,

The following lemma gives a change-of-measure inequality for the KL divergence.

Lemma A2

(Lemma 4.10 in Van Handel [56]). For any measurable function , we have
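For discrete distributions, the usual form of this change of measure (the Donsker–Varadhan, or Gibbs variational, inequality) states that E_Q[f] ≤ KL(Q‖P) + log E_P[e^f] for every Q absolutely continuous with respect to P, with equality when dQ/dP ∝ e^f. We state this form as our reading of the lemma; the sketch below only checks it numerically on a finite set with a uniform P, the setting relevant for sampling distributions. The function values `f` are random placeholders.

```python
import numpy as np

def kl(q, p):
    """KL(Q || P) for discrete distributions with p > 0 everywhere."""
    nz = q > 0
    return float(np.sum(q[nz] * np.log(q[nz] / p[nz])))

rng = np.random.default_rng(5)
N = 10
p = np.full(N, 1.0 / N)                  # uniform reference distribution P
f = rng.normal(size=N)                   # placeholder measurable function
log_mgf = float(np.log(p @ np.exp(f)))   # log E_P[e^f]

# Slack KL(Q||P) + log E_P[e^f] - E_Q[f] for many random Q: should be >= 0.
slacks = []
for _ in range(200):
    q = rng.dirichlet(np.ones(N))
    slacks.append(kl(q, p) + log_mgf - float(q @ f))

# Equality at the Gibbs distribution dQ/dP proportional to e^f.
q_gibbs = p * np.exp(f)
q_gibbs /= q_gibbs.sum()
gibbs_slack = kl(q_gibbs, p) + log_mgf - float(q_gibbs @ f)
```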

We denote the -norm of a random variable Z as , denote the set , and abbreviate as . For , is the set derived by replacing the k-th element of S with .

The following lemma gives moment bounds for a sum of weakly dependent, mean-zero random functions whose increments are bounded under a small change of their inputs.

Lemma A3

(Theorem 1 in Lei et al. [33]). Let be a set of independent random variables, each taking values in , and . Let be some functions that can be decomposed as . Suppose that, for and , the following hold for any :

- almost surely (a.s.).

- a.s.

- For any with we have a.s., and for any with and , we have a.s.

Then, we can decompose and as follows:

where , , , are four random variables satisfying . Furthermore, for any

and for any

Proof of Lemma 1.

Based on Lemma A2, if we set , then

To control the deviations of , we use Markov’s inequality. With a probability of , we have

Applying the above results to Equation (A1), with a probability of , we get

We can exchange and using Fubini’s theorem. Next, we will bound the generalization gap with respect to . Let be some decaying function of n. We denote by a subset with on which sub-exponential stability (Definition 2) holds and is the complement of . We first give results for any fixed . Given , it was shown in Lei et al. [33], , that

As shown in Lei et al. [33], satisfies all the conditions in Lemma A3, and therefore one can apply Lemma A3 to show the existence of four random variables , , , such that :

Using the first part of Lemma A1 with , we get

and using the second part of Lemma A1 with ,

According to Jensen’s inequality, we have

This implies

As sub-exponential stability (Definition 2) holds with when , the above inequality together with Equations (A3) and (A4) implies that, for all

we have

Next, we give results for any fixed . We define as , where is the indicator function. We have

Based on Equation (A.8) and Equation (A.9) in Zhou et al. [31], for , we have

where and

For any and , we have

Applying the above inequality to Equation (A9), if , , , we obtain

We choose

so that we have

Plugging this back into Equation (A10) yields the MGF of our truncated generalization gap, , which is a key quantity in PAC–Bayes analysis:

Applying the above results to Equation (A2), we have, with a probability of ,

Based on the above inequality and Equations (A7) and (A8), the following inequality holds uniformly for all with probability of at least :

Comparing the first and second terms on the r.h.s. of the above inequality, we see that the second term can be made negligible by choosing small enough, .

Therefore, our analysis shows

The proof is completed. □

Here, we discuss the existence of . In practice, we consider and to be sampling distributions. In these cases, and are discrete distributions on the same dataset. In particular, we are interested in the case with being the uniform distribution. Under these circumstances, the expectation is a finite sum over the dataset and therefore exists.

Appendix B. Proofs for Pairwise SGD and Pairwise SGDA with Adaptive Sampling

Appendix B.1. Pairwise Stochastic Gradient Descent

We will prove that the stability bounds of Pairwise SGD satisfy sub-exponential stability (Definition 2). Based on this, we derive generalization bounds for Pairwise SGD with smooth and non-smooth convex loss functions. To this end, we use the following lemma to bound sums of independent random variables [57].

Lemma A4 (Chernoff’s Bound).

Let be independent random variables taking values in . Let and . Then, for any with probability of at least , we have
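One standard way to obtain a high-probability bound of this type, under our assumption that Lemma A4 is the multiplicative Chernoff bound for independent {0,1}-valued variables, is to invert the upper-tail inequality. The constants in the exact version used in the proofs may differ from this sketch.

```latex
% Multiplicative Chernoff bound for X = \sum_{i=1}^{t} X_i, X_i \in \{0,1\} independent, \mu = \mathbb{E}[X] > 0:
\Pr\bigl(X \ge (1+\epsilon)\mu\bigr) \le \exp\Bigl(-\frac{\epsilon^{2}\mu}{2+\epsilon}\Bigr), \qquad \epsilon > 0.
% Set the right-hand side equal to \delta and write L = \log(1/\delta):
\mu\epsilon^{2} - L\epsilon - 2L = 0
\quad\Longrightarrow\quad
\epsilon\mu = \frac{L + \sqrt{L^{2} + 8\mu L}}{2} \le L + \sqrt{2\mu L}.
% Hence, with probability at least 1 - \delta,
X \le \mu + \log(1/\delta) + \sqrt{2\mu\log(1/\delta)}.
```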

We first present the stability bounds for non-smooth and convex cases.

Proof of Lemma 2, (1).

Without loss of generality, we assume S and differ by the last example. Based on Equation (F.2) in Lei et al. [51], we have

We set and use the inequality to get

It then follows that

By Lipschitz continuity, Pairwise SGD is -uniformly stable with

To bound with high probability, we set and note that . Applying Lemma A4 to the sum in Equation (A12), with probability at least , we get

Therefore, by the union bound, with probability at least , the following holds simultaneously for all :

For , this implies the following inequality with probability of at least :

Finally, from Equation (A13) we know that Pairwise SGD with the uniformly distributed hyperparameter satisfies sub-exponential stability (Definition 2) with

The proof is completed. □

Proof of Lemma 2, (2).

By an intermediate result in the proof of Lemma C.3 of Lei et al. [33], for all and , if the loss is L-Lipschitz, we have

From this inequality it follows that Pairwise SGD is -uniformly stable with

Let for any . It remains to show that the stability parameter of Pairwise SGD satisfies sub-exponential stability (Definition 2). Using Lemma A4 with and noting that , we get the following inequality with probability of at least (taking ):

By the union bound, with probability of at least , Equation (A15) holds for all . Therefore, with probability of at least , it gives

where we have used in the second inequality. Hence, sub-exponential stability (Definition 2) holds with

This completes the proof. □

Proof of Theorem 1.

With , it follows from Lemma 2, (1) and (2), that Pairwise SGD with both convex non-smooth and convex smooth loss functions satisfies sub-exponential stability (Definition 2). Applying the upper bound on to Lemma 1, the result follows. □

Appendix B.2. Pairwise Stochastic Gradient Descent Ascent

Next, we prove the generalization bounds for Pairwise SGDA with smooth and non-smooth convex-concave loss functions.

Lemma A5

(Lemma C.1 in Lei et al. [37]). Let ℓ be convex-concave.

- (1) If Assumption 4 holds, then

- (2) If Assumption 5 holds, then

Proof of Lemma 3, (1).

We assume S and differ by the last example for simplicity. Based on Lemma A5 (1), for , we have

When , we have

where in the second inequality, we use that, for any , we have . Combining Equations (A16) and (A17), this gives

We apply the above inequality recursively and follow the analysis of Equation (C.4) in Lei et al. [37]:

where we assume fixed step sizes. We set and use the inequality to derive

It then follows that

By L-Lipschitzness, we have

Therefore, we know that Pairwise SGDA is -uniformly stable with

For simplicity, let . Applying Lemma A4 to Equation (A18), with probability of at least , we have

With probability of at least , the following holds for all :

This implies the following inequality with probability at least :

This shows that Pairwise SGDA with uniform sampling distribution and the hyperparameter satisfies sub-exponential stability (Definition 2) with

The proof is completed. □

Proof of Lemma 3, (2).

Without loss of generality, we first assume S and differ by the last example. Based on Lemma A5 (2), if , we have

When , we consider Equation (A17). Combining these two cases, we get

We apply the above Equation (A19) recursively, following the proof of Theorem 2(d) in Lei et al. [37],

where we assume fixed step sizes and use in the last inequality. We set and use the inequality to derive

Based on L-Lipschitzness and the above inequality, for any two neighboring datasets we have

Therefore, we know that Pairwise SGDA is -uniformly stable with

For simplicity, let for any . Taking expectations on both sides of the above inequality, we derive

where . Applying Lemma A4 with , we get the following inequality with probability at least :

By the union bound, with probability at least , Equation (A21) holds for all . Therefore, with probability at least ,

where we have used in the second inequality and Equation (A20) in the last inequality. Therefore, sub-exponential stability (Definition 2) holds with and . The proof is completed. □

Based on the above lemma, we are now ready to prove the generalization bounds in Theorem 2 for Pairwise SGDA with smooth and non-smooth loss functions.

Proof of Theorem 2.

With , based on Lemma 3, (1) and (2), Pairwise SGDA with both convex-concave non-smooth as well as convex-concave smooth loss functions satisfies sub-exponential stability (Definition 2). Applying the upper bounds on to Lemma 1, we obtain the result. □

References

- Lei, G.; Shi, L. Pairwise ranking with Gaussian kernel. Adv. Comput. Math. 2024, 50, 70.

- Agarwal, S.; Niyogi, P. Generalization bounds for ranking algorithms via algorithmic stability. J. Mach. Learn. Res. 2009, 10, 441–474.

- Clémençon, S.; Lugosi, G.; Vayatis, N. Ranking and empirical minimization of U-statistics. In The Annals of Statistics; Institute of Mathematical Statistics: Beachwood, OH, USA, 2008; pp. 844–874.

- Cortes, C.; Mohri, M. AUC optimization vs. error rate minimization. Adv. Neural Inf. Process. Syst. 2004, 16, 313–320.

- Cao, Q.; Guo, Z.C.; Ying, Y. Generalization bounds for metric and similarity learning. Mach. Learn. 2016, 102, 115–132.

- Koestinger, M.; Hirzer, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Large scale metric learning from equivalence constraints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2288–2295.

- Xiong, F.; Gou, M.; Camps, O.; Sznaier, M. Person re-identification using kernel-based metric learning methods. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 1–16.

- Guillaumin, M.; Verbeek, J.; Schmid, C. Is that you? Metric learning approaches for face identification. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 498–505.

- Zheng, Z.; Zheng, L.; Yang, Y. A discriminatively learned cnn embedding for person reidentification. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2017, 14, 1–20.

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Deep metric learning for person re-identification. In Proceedings of the 2014 IEEE 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 34–39.

- Feng, B.; Liu, Z.; Huang, N.; Xiao, Z.; Zhang, H.; Mirzoyan, S.; Xu, H.; Hao, J.; Xu, Y.; Zhang, M.; et al. A bioactivity foundation model using pairwise meta-learning. Nat. Mach. Intell. 2024, 6, 962–974.

- Sellamanickam, S.; Garg, P.; Selvaraj, S.K. A pairwise ranking based approach to learning with positive and unlabeled examples. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management, New York, NY, USA, 24–28 October 2011; CIKM ’11. pp. 663–672.

- Wang, Z.; Liang, P.; Bai, R.; Liu, Y.; Zhao, J.; Yao, L.; Zhang, J.; Chu, F. Few-shot fault diagnosis for machinery using multi-scale perception multi-level feature fusion image quadrant entropy. Adv. Eng. Inform. 2025, 63, 102972.

- Zhao, P.; Zhang, T. Stochastic Optimization with Importance Sampling for Regularized Loss Minimization. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1–9.

- Allen-Zhu, Z.; Qu, Z.; Richtárik, P.; Yuan, Y. Even faster accelerated coordinate descent using non-uniform sampling. In Proceedings of the International Conference on Machine Learning, PMLR, New York, NY, USA, 19–24 June 2016; pp. 1110–1119.

- Katharopoulos, A.; Fleuret, F. Biased importance sampling for deep neural network training. arXiv 2017, arXiv:1706.00043.

- Johnson, T.B.; Guestrin, C. Training deep models faster with robust, approximate importance sampling. Adv. Neural Inf. Process. Syst. 2018, 31, 1–11.

- Wu, C.Y.; Manmatha, R.; Smola, A.J.; Krahenbuhl, P. Sampling matters in deep embedding learning. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2840–2848.

- Han, X.; Yu, X.; Li, G.; Zhao, J.; Pan, G.; Ye, Q.; Jiao, J.; Han, Z. Rethinking sampling strategies for unsupervised person re-identification. IEEE Trans. Image Process. 2022, 32, 29–42.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 1–9.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv 2017, arXiv:1706.06083.

- Zhou, S.; Lei, Y.; Kabán, A. Learning to Sample in Stochastic Optimization. In Proceedings of the 41st Conference on Uncertainty in Artificial Intelligence, Rio de Janeiro, Brazil, 21–25 July 2025.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

- Zhou, M.; Patel, V.M. Enhancing adversarial robustness for deep metric learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15325–15334.

- Wen, W.; Li, H.; Wu, R.; Wu, L.; Chen, H. Generalization analysis of adversarial pairwise learning. Neural Netw. 2025, 183, 106955.

- Liu, W.; Wang, Z.J.; Yao, B.; Yin, J. Geo-ALM: POI Recommendation by Fusing Geographical Information and Adversarial Learning Mechanism. Int. Jt. Conf. Artif. Intell. 2019, 7, 1807–1813.

- Zhang, L.; Duan, Q.; Zhang, D.; Jia, W.; Wang, X. AdvKin: Adversarial convolutional network for kinship verification. IEEE Trans. Cybern. 2020, 51, 5883–5896.

- De la Pena, V.; Giné, E. Decoupling: From Dependence to Independence; Springer Science & Business Media: New York, NY, USA, 2012.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the 1st International Conference on Learning Representations, ICLR 2013, Scottsdale, AZ, USA, 2–4 May 2013. Workshop Track Proceedings, 2013.

- Beznosikov, A.; Gorbunov, E.; Berard, H.; Loizou, N. Stochastic gradient descent-ascent: Unified theory and new efficient methods. In Proceedings of the International Conference on Artificial Intelligence and Statistics, PMLR, Valencia, Spain, 25–27 April 2023; pp. 172–235.

- Zhou, S.; Lei, Y.; Kabán, A. Toward Better PAC-Bayes Bounds for Uniformly Stable Algorithms. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36.

- London, B. A PAC-bayesian analysis of randomized learning with application to stochastic gradient descent. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 2931–2940.

- Lei, Y.; Ledent, A.; Kloft, M. Sharper Generalization Bounds for Pairwise Learning. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–10 December 2020; Volume 33, pp. 21236–21246.

- Katharopoulos, A.; Fleuret, F. Not all samples are created equal: Deep learning with importance sampling. In Proceedings of the International Conference on Machine Learning. PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 2525–2534.

- Bousquet, O.; Elisseeff, A. Stability and generalization. J. Mach. Learn. Res. 2002, 2, 499–526.

- Hardt, M.; Recht, B.; Singer, Y. Train faster, generalize better: Stability of stochastic gradient descent. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 1225–1234.

- Lei, Y.; Yang, Z.; Yang, T.; Ying, Y. Stability and Generalization of Stochastic Gradient Methods for Minimax Problems. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 6175–6186.

- Farnia, F.; Ozdaglar, A. Train simultaneously, generalize better: Stability of gradient-based minimax learners. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 3174–3185.

- Shawe-Taylor, J.; Williamson, R.C. A PAC analysis of a Bayesian estimator. In Proceedings of the Tenth Annual Conference on Computational Learning Theory, Nashville, TN, USA, 6–9 July 1997; pp. 2–9.

- McAllester, D.A. Some pac-bayesian theorems. Mach. Learn. 1999, 37, 355–363.

- London, B.; Huang, B.; Getoor, L. Stability and generalization in structured prediction. J. Mach. Learn. Res. 2016, 17, 7808–7859.

- Rivasplata, O.; Parrado-Hernández, E.; Shawe-Taylor, J.S.; Sun, S.; Szepesvári, C. PAC-Bayes bounds for stable algorithms with instance-dependent priors. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 9214–9224.

- Sun, S.; Yu, M.; Shawe-Taylor, J.; Mao, L. Stability-based PAC-Bayes analysis for multi-view learning algorithms. Inf. Fusion 2022, 86, 76–92.

- Oneto, L.; Donini, M.; Pontil, M.; Shawe-Taylor, J. Randomized learning and generalization of fair and private classifiers: From PAC-Bayes to stability and differential privacy. Neurocomputing 2020, 416, 231–243.

- Mou, W.; Wang, L.; Zhai, X.; Zheng, K. Generalization Bounds of SGLD for Non-convex Learning: Two Theoretical Viewpoints. In Proceedings of the Conference on Learning Theory, Stockholm, Sweden, 6–9 July 2018; pp. 605–638.

- Negrea, J.; Haghifam, M.; Dziugaite, G.K.; Khisti, A.; Roy, D.M. Information-theoretic generalization bounds for SGLD via data-dependent estimates. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11.

- Li, J.; Luo, X.; Qiao, M. On Generalization Error Bounds of Noisy Gradient Methods for Non-Convex Learning. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

- Ralaivola, L.; Szafranski, M.; Stempfel, G. Chromatic PAC-Bayes bounds for non-iid data: Applications to ranking and stationary β-mixing processes. J. Mach. Learn. Res. 2010, 11, 1927–1956.

- Viallard, P.; Germain, P.; Habrard, A.; Morvant, E. A General Framework for the Derandomization of PAC-Bayesian Bounds. arXiv 2021, arXiv:2102.08649.

- Picard-Weibel, A.; Clerico, E.; Moscoviz, R.; Guedj, B. How good is PAC-Bayes at explaining generalisation? arXiv 2025, arXiv:2503.08231.

- Lei, Y.; Liu, M.; Ying, Y. Generalization Guarantee of SGD for Pairwise Learning. Adv. Neural Inf. Process. Syst. 2021, 34, 21216–21228.

- Zhang, J.; Hong, M.; Wang, M.; Zhang, S. Generalization bounds for stochastic saddle point problems. In Proceedings of the International Conference on Artificial Intelligence and Statistics, PMLR, Virtual, 13–15 April 2021; pp. 568–576.

- Liu, T.Y. Learning to Rank for Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2011.

- Guedj, B.; Pujol, L. Still no free lunches: The price to pay for tighter PAC-Bayes bounds. Entropy 2021, 23, 1529.

- Vershynin, R. High-Dimensional Probability: An Introduction with Applications in Data Science; Cambridge University Press: Cambridge, UK, 2018; Volume 47.

- Van Handel, R. Probability in high dimension. In Lecture Notes; Princeton University: Princeton, NJ, USA, 2014.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).