1. Introduction

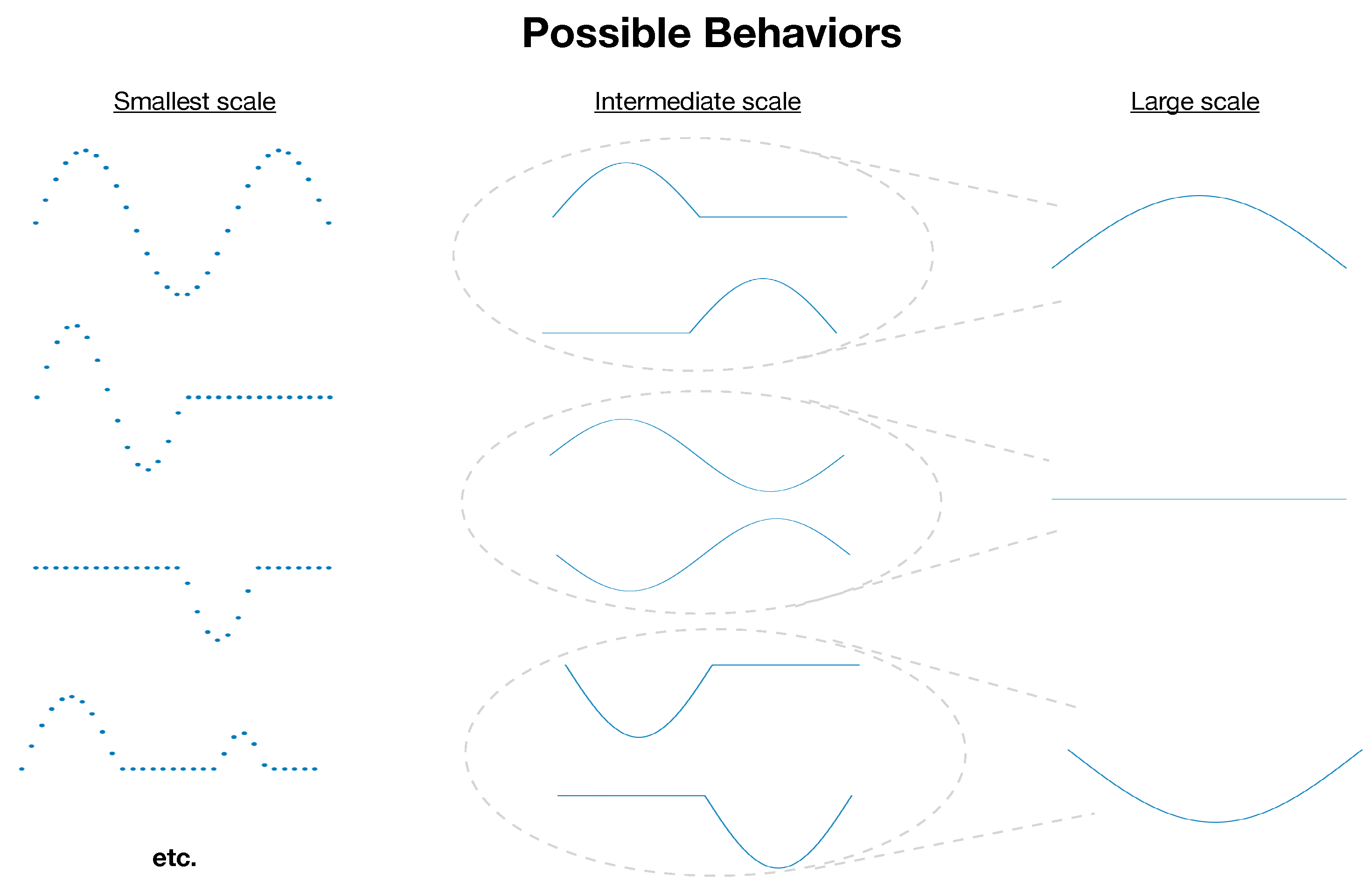

Defining complexity in general terms has been a persistent challenge in the study of complex systems [

1,

2,

3,

4]. A first-pass definition of a system’s complexity would be the information necessary to describe its state in full detail. However, this definition would assign the highest complexity to maximally disordered systems (e.g., an ideal gas where molecules move independently and unpredictably). Conversely, defining complexity as the degree of order would assign the maximum complexity to perfectly ordered systems (e.g., all the molecules moving in unison). Neither extreme captures what is typically meant by “complex”. Scale-dependent complexity—which has been formalized for general collections of random variables [

5,

6,

7] and time series in particular [

8,

9,

10,

11] and used in contexts such as chaos [

12,

13], biological signals [

14,

15,

16,

17,

18], traffic patterns [

19,

20], financial data [

21,

22,

23], Gaussian processes [

24], and fluid dynamics [

25,

26]—offers a promising path. Rather than attempt to quantify a system’s complexity with a single number, this approach recognizes that the information required to describe a system’s state—i.e., its complexity—is inherently dependent on the level of detail or scale at which that description is made (see

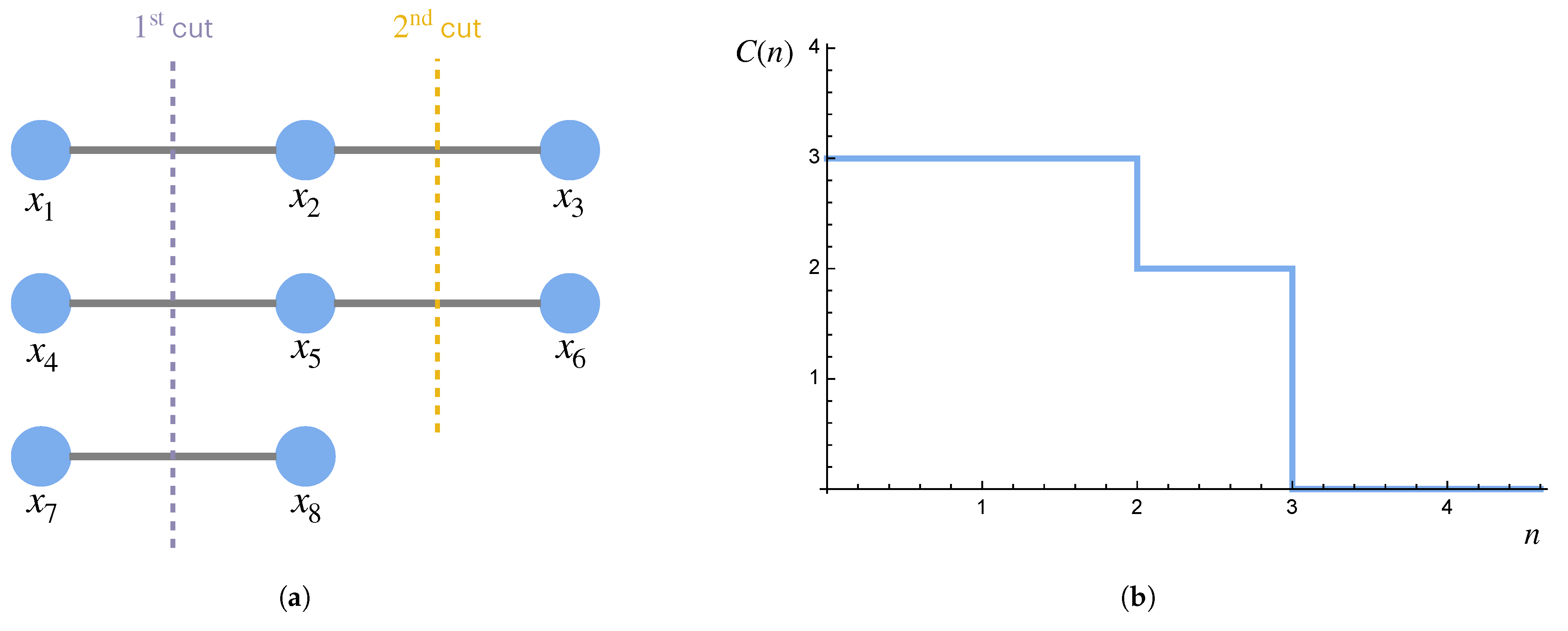

Figure 1). In this framework, complexity is not an intrinsic scalar quantity of a system but a

property of scale: It reflects how much structured variation remains after a system has been coarse-grained to a particular level of resolution. Systems typically viewed as “complex” exhibit complexity that varies across a range of scales [

27], potentially in a scale-free/fractal fashion [

28,

29].

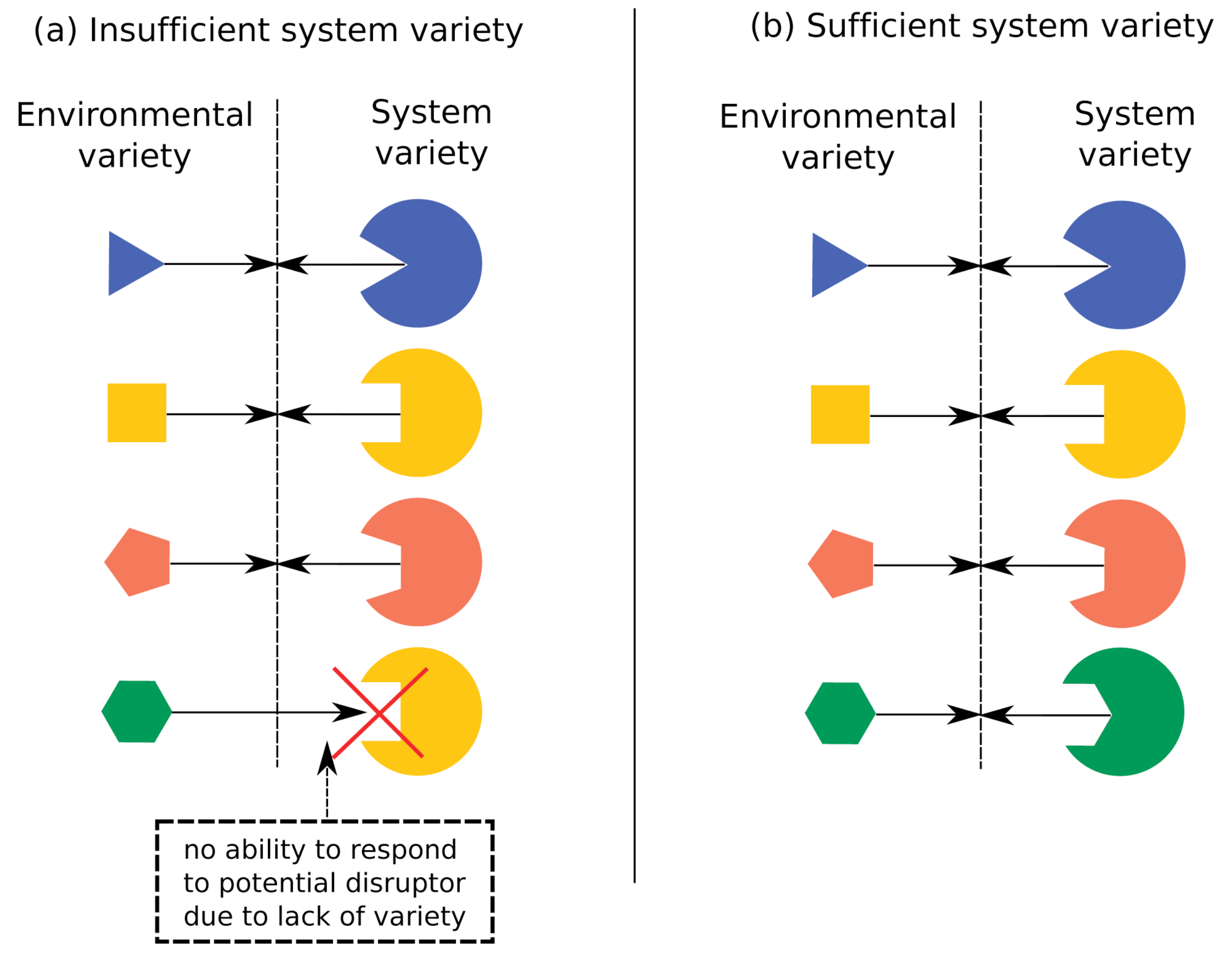

But in terms of real-world consequences, what does it mean if one system possesses more complexity than another? Ashby’s law of requisite variety [30] provides one possible answer: in order to be effective, the complexity of a system must equal or exceed that of its environment. Here, a system’s environment must be defined to include only the set of behaviors that requires a distinct response from the system (see Figure 2).

However, Ashby’s law does not account for the multi-scale nature of complexity. For instance, two individuals who lack the ability to cooperate may have enough small-scale complexity to independently move various objects but would lack sufficient complexity at the scale necessary to move a couch. Thus, while the two individuals would possess sufficient complexity (i.e., a sufficient variety of behaviors), it would not be at the right scale (i.e., at the level of description that includes only behaviors that involve coordination between the two individuals). This example is not in violation of Ashby’s law, as Ashby’s law merely provides a necessary rather than a sufficient condition for system efficacy. Nonetheless, this example and others (see below) motivate us to seek a stronger necessary condition for system efficacy that takes into account not only the complexity but also the scale of system behaviors. We thus propose a multi-scale law of requisite variety: In order to be effective, the complexity of a system must equal or exceed that of its environment at all scales. Our goal is to provide a definition of scale-dependent complexity (a class of complexity profiles) that satisfies this multi-scale version of the law of requisite variety. For a pedagogical introduction to complexity profiles and the multi-scale law of requisite variety, please see ref. [27].

For an existing formal definition of a complexity profile [7,32], it has been proven that a multi-scale law of requisite variety applies for systems and environments that are block-independent, i.e., systems/environments with components that can be partitioned into mutually independent blocks such that components within the same block have identical behavior [33]. However, the law of requisite variety does not apply to this complexity profile more generally. For instance, adding components to the system (without changing the existing components) can actually reduce this complexity profile at larger scales due to the possibility of negative interaction information for more than two variables [34]; thus, a system that is capable of effectively interacting with its environment could, nonetheless, end up with less complexity than its environment at larger scales. Given the desirability and usefulness of a complexity profile satisfying Ashby’s law at each scale (a property that has been implicitly used in many analyses, such as management [35,36,37], military defense [31,38,39], governance [40], multi-agent coordination [41,42,43], and evolutionary dynamics [44,45,46]), we seek a formal definition of the complexity profile that reflects this property. (We will show that for block-independent systems, the formalism introduced here can be reduced to the definition of complexity profiles discussed above.)

The one other constraint that we desire for a complexity profile is a sum rule, i.e., that the area under the complexity profile does not depend on interdependencies between components but rather only on the individual components’ behaviors. Such a constraint reflects the tradeoff between complexities at various scales: In order for a system to have complexity at larger scales, its components must be correlated, which constrains the fine-scale configurations of the system (and thus its smaller-scale complexity) [27]. Without a sum rule or some other similar constraint, the multi-scale law of requisite variety would be no more than many copies of the single-scale version of Ashby’s law (one for each scale), with no structure relating the various scales to each other.

In order to define a complexity profile for which the multi-scale law of requisite variety holds, we first have to define what it means for a multi-component system to effectively match its environment (Section 2). Then, in Section 3, we formally define what constitutes a complexity profile and what criteria must be satisfied for it to capture the multi-scale law of requisite variety and the tradeoff between complexities at various scales. In Section 4, we define a class of complexity profiles that satisfies such criteria and examine some of its properties.

Such a class does not provide a single complexity profile; rather, a complexity profile is assigned for each way of partitioning the system. Choosing a method of partitioning the system is analogous to choosing a coordinate system onto which the multi-scale complexity of the system can be projected (breaking the permutation symmetry corresponding to the relabeling of system components). The fact that the profile depends on the partitioning method reflects the fact that there is no single way to coarse-grain a system, although some coarse-graining choices are more useful/better reflect the system’s structure than others. However, the efficacy of a system in a particular environment is independent of the choice of the coordinate system; thus, regardless of which partitioning scheme is chosen, an effective system will have at least as much complexity as the environment at all scales. This formalism, therefore, gives an entire class of constraints that must be satisfied: As long as the system and its environment are partitioned/coarse-grained in the same way, the system’s complexity matching/exceeding its environment’s at all scales provides a necessary condition for system efficacy.

2. Generalizing Ashby’s Law to Multiple Components

Ashby’s law claims that to effectively regulate an environment, the system must have a degree of freedom or behavior for each distinct environmental behavior. In other words, there cannot be two environmental states for a given system state. It then follows (by the pigeon-hole principle) that the number of behaviors of the system must be greater than or equal to the number of behaviors of the environment.

More formally, let $X$ and $Y$ be random variables or collections of random variables, let $H(X)$ denote the Shannon entropy of $X$, which is the minimum average number of bits needed to describe the state of $X$, and let $H(Y \mid X)$ denote the expected value of the Shannon entropy of $Y$ given the state of $X$ [47]. Then, the requirement that each system state of $X$ correspond to no more than one environmental state of $Y$ can be written as $H(Y \mid X) = 0$, from which it follows that $H(X) \ge H(Y)$, since $H(Y) \le H(X, Y) = H(X) + H(Y \mid X) = H(X)$ (i.e., the complexity of the environment cannot exceed that of the system). It is important to note that in this formulation, the environment $Y$ is defined to be the set of states that require distinct behaviors of the system. Two environmental states that do not require different system behaviors should be represented by a single state of $Y$.
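As a minimal numerical sketch of this single-scale statement, the following Python snippet computes $H(X)$, $H(Y)$, and $H(Y \mid X)$ for a small joint distribution. The distribution, the state labels, and the helper names (`entropy`, `marginal`, `conditional_entropy_y_given_x`) are illustrative assumptions and not part of the formalism.

```python
from collections import Counter
from math import log2

def entropy(pmf):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)

def marginal(joint, axis):
    """Marginal pmf of one coordinate of a joint pmf over (x, y) pairs."""
    out = Counter()
    for pair, p in joint.items():
        out[pair[axis]] += p
    return dict(out)

def conditional_entropy_y_given_x(joint):
    """H(Y|X) computed with the chain rule H(Y|X) = H(X, Y) - H(X)."""
    return entropy(joint) - entropy(marginal(joint, 0))

# Hypothetical joint distribution: the system has four states, the environment
# has two, and each system state co-occurs with exactly one environmental state.
joint = {(0, "cold"): 0.25, (1, "cold"): 0.25, (2, "hot"): 0.25, (3, "hot"): 0.25}

h_x = entropy(marginal(joint, 0))
h_y = entropy(marginal(joint, 1))
h_y_given_x = conditional_entropy_y_given_x(joint)
print(h_x, h_y, h_y_given_x)
```

In this toy case $H(Y \mid X)$ vanishes and $H(X)$ exceeds $H(Y)$, as the inequality requires. Swapping the roles of system and environment would give a nonzero conditional entropy, since one environmental state would then correspond to two system states.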

In order to consider multi-scale behavior, let us describe the system $X$ as consisting of $N$ components such that $X = \{x_1, \dots, x_N\}$.

Definition 1. A system $X$ of size $|X| = N$ is defined as a set of $N$ random variables. These random variables are referred to as components of the system.

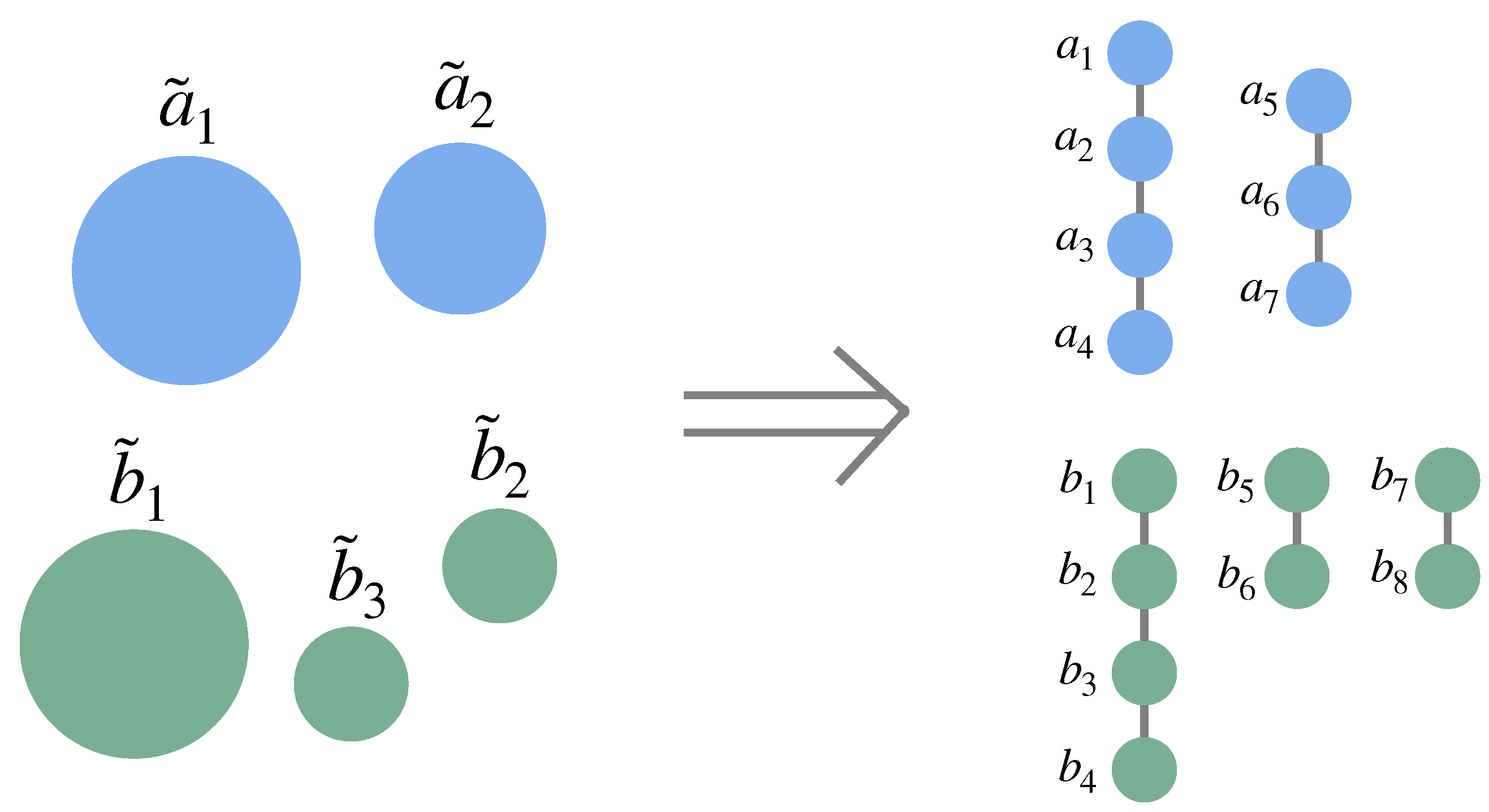

Remark 1. In this formulation, all the components of one or more systems are treated as the same size. While this condition may seem like a limitation, any system or systems can be described in this way to arbitrary precision: Components of different sizes can be accounted for by defining a new set of components of size equal to the greatest common factor of the sizes of the original components (irrational relative sizes, for which no greatest common factor exists, can be approximated to arbitrary precision by rational relative sizes). If the new components are all of size $l$, each original component of size $kl$ can then be replaced with $k$ new components for which the state of one of these variables completely determines the state of all the others, i.e., $H(x_i \mid x_j) = 0$ for any two such components $x_i$ and $x_j$ (see, e.g., Figure 3).

The assumption that the system $X$ must have at least one distinct response for each environmental state $Y$ (i.e., $H(Y \mid X) = 0$) is generalized as follows: An “environmental component” $y_i$ is defined for each system component $x_i$, such that each $y_i$ is a random variable representing the environmental states that require a distinct response from the system component $x_i$. Then, for the system to effectively interact with its environment, $H(y_i \mid x_i) = 0$ for each $i$, i.e., there cannot be two environmental component states for a given state in the corresponding system component. (Note that this condition is necessary but not sufficient for the system to effectively interact with its environment: just because the system components can choose a different response for each environmental condition does not guarantee that the responses are appropriate.) Letting $Y = \{y_1, \dots, y_N\}$, we see that $H(y_i \mid x_i) = 0$ for all $i$ implies $H(Y \mid X) = 0$ and, thus, is a stronger condition: Not only must the system match the environment overall, but this matching must be properly organized in a specific way. This formulation allows for constraints among the environmental components to induce constraints among the system components.

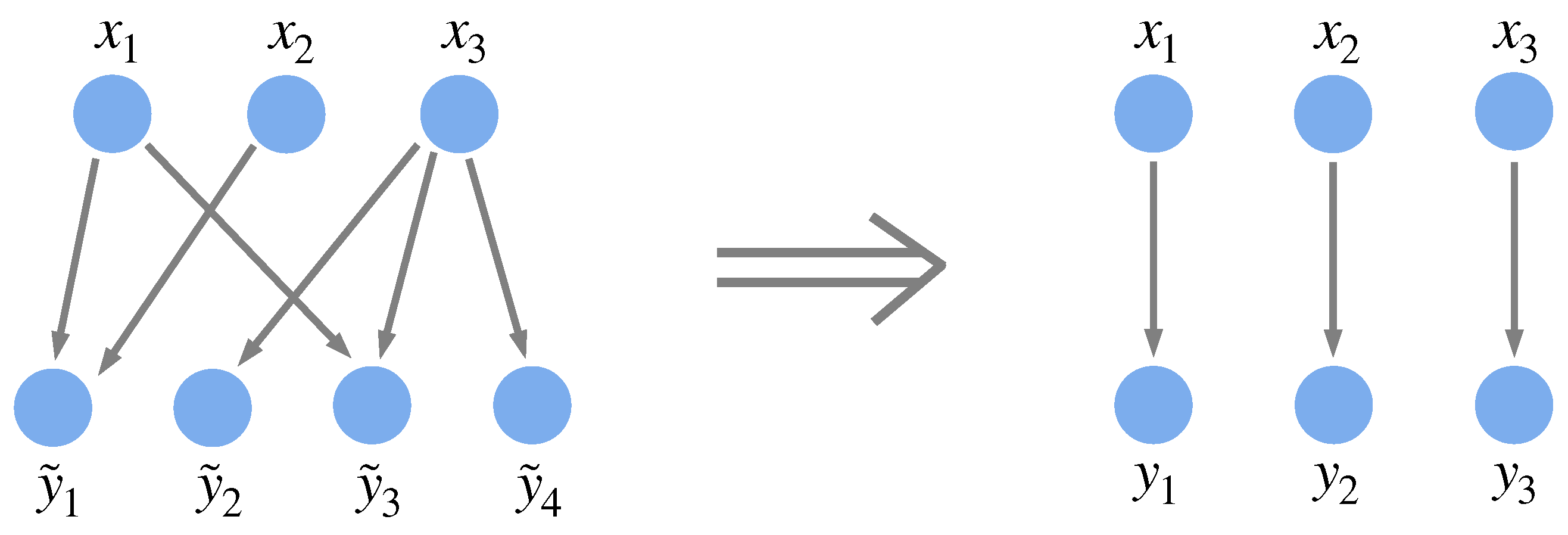

Note that each environmental component represents the set of behaviors that are required by its corresponding system component, which, as shown in Figure 4, can correspond to multiple physical parts of the environment. Depending on how the system is connected to the environment (or on which constraints arising from interactions between the system and its environment are to be examined), the environmental components may need to be defined differently. Although it may not seem so at first, any interaction between a system and its environment can be formulated as above: If we start with a more general formulation in which each system component $x_i$ interacts with a set of environmental components $Y_i$ (i.e., $H(Y_i \mid x_i) = 0$), which allows for each system component to interact with multiple environmental components and vice versa, we can redefine the environmental components such that each $x_i$ is associated with the single random variable $y_i = Y_i$ (see Figure 4 for an example). This redefinition may result in new entanglements among the environmental components.

Definition 2. An environment $(Y, f)$ for system $X$ is a system $Y$ together with a bijection $f: X \to Y$.

Definition 3. A system $X$ matches its environment $(Y, f)$ iff $H(f(x) \mid x) = 0$ for all $x \in X$.

Example 1. Consider a system of two thermostats, $x_1$ in room 1 and $x_2$ in room 2, that can each be either on or off. The environment can be described by two variables $y_1$ and $y_2$ that represent whether or not room 1 or room 2, respectively, should be heated (the bijection $f$ in Definitions 2 and 3 mapping $x_1$ to $y_1$ and $x_2$ to $y_2$). In order for the system to match the environment, it must be that $H(y_1 \mid x_1) = H(y_2 \mid x_2) = 0$. Thus, if the need for each room to be heated is independent of that of the other room, the thermostats must be able to operate independently of one another; likewise, if the two rooms’ needs for heat are correlated, the thermostats must also be correlated.
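The component-wise matching condition in the thermostat example can be checked numerically. The sketch below is illustrative only: it represents a joint distribution as a list of equally likely states `(x1, x2, y1, y2)`, and the helper names (`entropy`, `conditional_entropy`, `matches`) and both scenarios are assumptions made for the example.

```python
from collections import Counter
from itertools import product
from math import log2

def entropy(states, idx):
    """Joint Shannon entropy (bits) of components `idx`, with `states` equally likely."""
    counts = Counter(tuple(s[i] for i in idx) for s in states)
    total = len(states)
    return -sum(c / total * log2(c / total) for c in counts.values())

def conditional_entropy(states, target, given):
    """H(target | given) = H(target, given) - H(given)."""
    return entropy(states, [target, given]) - entropy(states, [given])

def matches(states, pairs, tol=1e-9):
    """True if H(y | x) = 0 for every (x, y) index pair of corresponding components."""
    return all(conditional_entropy(states, y, x) < tol for x, y in pairs)

# Each state is (x1, x2, y1, y2); the pairs encode the bijection x1 -> y1, x2 -> y2.
pairs = [(0, 2), (1, 3)]

# Hypothetical case 1: room needs are independent, each thermostat tracks its own room.
tracking = [(y1, y2, y1, y2) for y1, y2 in product((0, 1), repeat=2)]

# Hypothetical case 2: room needs are independent, but both thermostats follow room 1.
locked = [(y1, y1, y1, y2) for y1, y2 in product((0, 1), repeat=2)]

print(matches(tracking, pairs))
print(matches(locked, pairs))
```

In the second scenario the thermostats are perfectly correlated while the rooms are not, so the thermostat in room 2 cannot distinguish the two states of its room and the check fails.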

Example 2. Consider a system $X$ in which each $x_i$ represents some aspect of policy (e.g., educational policy) being applied in region $i$ of a given country (the regions could, for instance, be towns/cities). The environment $Y$ could be defined by the random variables $y_i$ (where $y_i = f(x_i)$), such that each $y_i$ corresponds to conditions in region $i$ that require a distinct policy in order for the region to be effectively governed. If the $y_i$ values vary independently of one another, while the $x_i$ values cannot, then the system will not be able to match its environment (e.g., an education policy that is determined entirely at the national level will not be able to effectively interact with locales if each locale has specific educational needs). Conversely, if there are correlations among the $y_i$ values that are lacking in the $x_i$ values, the system will also be unable to match its environment (e.g., it would be ineffective for each city to independently set its own policy with respect to international trade or with respect to regulating a national corporation that spans many cities).

Note that the possible states of a system or the probabilities assigned to these states cannot be defined without specifying the environment with which the system is interacting, for the same system may behave differently in different environments. (Alternatively, each individual environment need not be treated separately; if each individual environment is assigned a probability, this ensemble of possible individual environments can itself be treated as a single environment of the system.) In either case, this formalism concerning a system matching its environment is purely descriptive and does not require the specification of the mechanism by which the system and the environment are related.

Example 3. Returning to the example of the two thermostats, if the system (the thermostats) and the environment (the rooms) are connected so that the state of thermostat i depends directly on the state of room i, the thermostat states will have precisely as much correlation as the room states do. The thermostat states will be independent random variables if and only if the room states are.

With Definition 3, we have a characterization of Ashby’s law that takes into account the multi-scale structure of a system and its connection with its environment. The goal is then to understand how the properties of the environment constrain the corresponding properties of the system. If an environment has a certain property and it is known that the system matches the environment, what must be true about the system? For the single-scale case of Ashby’s law, the system must have at least as much information as the environment. The complexity profile, described below, generalizes this property to multiple scales. In particular, it allows us to formulate the multi-scale law of requisite variety: In order for a system to match its environment, it must have at least as much complexity as its environment at every scale.

3. Defining a Complexity Profile

The basic version of Ashby’s law states that for a system $X$ to match its environment $Y$, the overall complexity of $X$ must be greater than or equal to the overall complexity of $Y$. But, as argued in Section 2.3 of ref. [27], it does not make sense to speak of complexity as a single number; rather, the complexity of a system must depend on scale. Thus, we wish to generalize the notion of a complexity profile such that the complexity of a system and its environment can be compared at multiple scales.

Definition 4. A complexity profile $C_X(n)$ of a system $X$ assigns a particular amount of information to the system at each scale $n \in \mathbb{Z}^+$. For $n > |X|$, we define $C_X(n) = 0$. If we wish to consider each component of the system to be of size $l$, a continuous version of the complexity profile can also be defined (see Appendix B).

We wish for a complexity profile to have two additional properties: It should (1) manifest the multi-scale law of requisite variety and (2) obey the sum rule. Each property is defined below, with applications/examples given in Sections 2.5 and 2.4 of ref. [27], respectively.

Definition 5. A complexity profile manifests the multi-scale law of requisite variety if for any two systems $X$ and $Y$, $X$ matching $Y$ (per Definition 3) implies that $C_X(n) \ge C_Y(n)$ for all $n$.

Definition 6. A complexity profile obeys the sum rule if for any system $X$, $\sum_{n=1}^{|X|} C_X(n) = \sum_{x \in X} H(x)$.

The multi-scale law of requisite variety is important because it allows for the interpretation that a necessary (but not sufficient) condition for a system to effectively interact with its environment is that it has at least as much complexity as the environment at every scale. The sum rule is important because it captures the intuition that for a system composed of components with the same individual behaviors, there is a tradeoff among the complexities of the system at various scales (since complexity at larger scales requires constraints among the system’s smaller-scale degrees of freedom).

Note that examining measures of multi-scale complexity can never prove that a system matches its environment. Just as in the single-scale case, a system having more complexity than its environment by no means guarantees that every system state corresponds to a single environmental state (nor that the state adopted by the system will be appropriate). But examining multi-scale measures of information can prove the impossibility of compatibility. The goal, then, in formulating multi-scale measures is to create more instances in which the impossibility of compatibility can be shown. Using this multi-scale formalism, the system must now possess at least as much complexity as its environment at all scales, not just at least as much complexity as its environment overall. For instance, an army of ants may have more fine-grained complexity than its environment but will be able to perform certain tasks (e.g., moving large objects) only with larger-scale coordination between the ants.

4. A Class of Complexity Profiles

In Section 3, we have defined the term complexity profile and have described general properties that any complexity profile should have. We now describe a specific class of complexity profiles that satisfies these properties. This class of profiles is not the only such class and may not be the best one, but it serves as an instructive example and provides one useful way of characterizing multi-scale complexity.

One way to define a large-scale or coarse-grained description of a system is to allow only a subset of the components of the system to be described. (This coarse-graining scheme is analogous to the decimation approach for implementing the position–space renormalization group in physics.) As a first pass, one might divide the system into $n$ equivalent disjoint subsets and then define the information in the description of the system at scale $n$ to simply be the information in one of the subsets (see, e.g., Figure 5). However, given that the partition into $n$ equivalent subsets may not be possible (either due to heterogeneity in the components or because the system size is not divisible by $n$), this definition can be generalized by averaging over the information in each of the $n$ subsets.

Example 4. Consider a Markov chain $x_1, x_2, x_3, \dots$ (for finite Markov chains of size $N$, simply let $x_i$ be constant for $i > N$). A set of disjoint, coarse-grained descriptions of the Markov chain at scale $n$ could be

$$\{x_k, x_{k+n}, x_{k+2n}, \dots\}, \quad k = 1, \dots, n.$$

Thus, the information at scale $n$ of the Markov chain could be defined as

$$\frac{1}{n} \sum_{k=1}^{n} H(x_k, x_{k+n}, x_{k+2n}, \dots).$$

Note, however, that this sequence of descriptions is not nested and so cannot be used in its entirety in Definitions 8 and 9.

First, we must define how to successively partition the system. We only allow for nested sequences of partitions so that larger-scale descriptions of the system cannot contain information that smaller-scale descriptions lack. The way in which a system is partitioned defines a sequence of descriptions of the system; different partitioning schemes can be thought of as different nested ontologies with which to create these successively coarser descriptions. This formulation allows for a general framework for describing a system at multiple scales, with successively larger-scale descriptions of a system corresponding to nested subsets of the system that are decreasing in size.

4.1. Definition

We now formally define this class of complexity profiles. To do so, we first build up some notation for defining nested sequences of partitions:

Definition 7. Define $P = (P_1, P_2, P_3, \dots)$ to be a nested partition sequence of a set $X$ if each $P_n$ is a partition of $X$, $P_j$ is a refinement of $P_i$ whenever $i < j$, and $P_j$ is a strict refinement of $P_i$ whenever $i < j \le |X|$.

Note that in order for the strict refinement clause of this definition to be satisfied (i.e., for $P_j$ to have more parts than $P_i$ whenever $i < j \le |X|$), it must be that $P_n$ contains $n$ parts for $n \le |X|$ and $|X|$ parts for $n > |X|$, since a partition of $X$ cannot have more than $|X|$ parts.

Example 5. Let . An example of a nested partition sequence of X is .

Definition 8. Given a nested partition sequence $P$ of a system $X$, define $S_X^P(n) \equiv \sum_{Z \in P_n} H(Z)$ for $n \in \mathbb{Z}^+$.

Note that $S_X^P(n)$ is non-decreasing in $n$ and captures the total (potentially overlapping) information of the system parts, while $S_X^P(n)/n$ is the average amount of information necessary to describe one of the $n$ parts. Information that is $n$-fold redundant (i.e., is of scale $n$) can be counted up to $n$ times in $S_X^P(n)$; it is this fact that motivates the following definition of a complexity profile.

Definition 9. Given a nested partition sequence $P$ of a system $X$, the complexity profile is defined as $C_X^P(n) \equiv S_X^P(n) - S_X^P(n-1)$, with the convention that $S_X^P(0) = 0$.

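Remark 2. For $n > |X|$, $C_X^P(n) = 0$. For $n = 1$, $C_X^P(1) = H(X)$. And for $1 < n \le |X|$, $C_X^P(n) = H(A) + H(B) - H(A \cup B) = I(A; B)$, where $A$ and $B$ are the two subsets of $X$ that are elements of $P_n$ but not of $P_{n-1}$ and where $I$ denotes mutual information. Thus, this complexity profile is very computationally tractable.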

Example 6. Using the nested partition sequence given in Example 5 of , if are all unbiased bits, , and , , and are mutually independent, we have , , and for . Thus, , , and for .
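To make the computation concrete, the following Python sketch evaluates a complexity profile for a small system. It takes $S(n)$ to be the sum of the joint entropies of the parts of the $n$-th partition and $C(n) = S(n) - S(n-1)$ with $S(0) = 0$, which is our reading of Definitions 8 and 9. The four-bit system, the particular nested partition sequence, and the helper names are hypothetical choices made for illustration and are not claimed to be those of Examples 5 and 6.

```python
from collections import Counter
from itertools import product
from math import log2

def entropy(states, idx):
    """Joint Shannon entropy (bits) of components `idx`, with `states` equally likely."""
    counts = Counter(tuple(s[i] for i in idx) for s in states)
    total = len(states)
    return -sum(c / total * log2(c / total) for c in counts.values())

def partition_sum(states, partition):
    """S(n): sum of the joint entropies of the parts of one partition."""
    return sum(entropy(states, sorted(part)) for part in partition)

def complexity_profile(states, sequence):
    """C(n) = S(n) - S(n-1) with S(0) = 0, for n = 1..len(sequence)."""
    sums = [0.0] + [partition_sum(states, partition) for partition in sequence]
    return [sums[n] - sums[n - 1] for n in range(1, len(sums))]

# Hypothetical system of four unbiased bits (indices 0..3) in which components
# 0 and 1 are always equal and components 0, 2, and 3 are mutually independent.
states = [(a, a, b, c) for a, b, c in product((0, 1), repeat=3)]

# Hypothetical nested partition sequence: each partition refines the previous one
# by splitting exactly one part in two.
sequence = [
    [{0, 1, 2, 3}],
    [{0, 2}, {1, 3}],
    [{0}, {2}, {1, 3}],
    [{0}, {1}, {2}, {3}],
]

profile = complexity_profile(states, sequence)
print(profile)
```

With this choice, the first cut separates the two redundant components, so the one bit they share shows up as complexity at scale 2. A sequence whose first cut kept components 0 and 1 together would defer that shared bit to whichever later cut separates them, which illustrates how the profile depends on the partitioning scheme while its sum does not.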

Example 7. Consider a system X of N molecules, the velocities of which are independently drawn from a Maxwell–Boltzmann distribution for which the temperature T is, itself, a random variable. Consider a nested partitioning scheme in which at each step, the largest remaining part (or, in the case of a tie, one of the largest remaining parts) is divided as equally as possible in two. The resulting complexity profile will then have and for , since for , the size of the parts will be large enough so that T can be almost precisely determined from any single part. As n approaches N, a measurement of any single part will yield more and more uncertainty regarding the value of T, so will slowly decay from to 0. Such a complexity profile captures the fact that at the smallest scale, there is a lot of information related to the microscopic details of each molecule, but at a wide range of larger intermediate scales, the information present is much smaller and roughly constant, arising only from the common large-scale influence that the temperature has across the system.

4.2. The Multi-Scale Law of Requisite Variety and the Sum Rule

This complexity profile roughly captures the notion of redundant information and will satisfy the properties described in Definitions 5 and 6 (as proved below). It is dependent on the particular set of partitions used (a reflection of the fact that there are multiple ways to coarse-grain a system) and, thus, will not capture the redundancies present in an absolute sense, as the complexity profile described in refs. [7,32] does. But that complexity profile, while it does obey the sum rule, does not manifest the multi-scale law of requisite variety. Thus, while it characterizes the information structure present in a system, it does not allow us to compare a system to its environment in a mathematically rigorous way. The class of complexity profiles considered here allows this comparison by requiring that the system and the environment be partitioned in the same way, breaking permutation symmetry and accounting for the correspondence between system and environmental components.

Theorem 1. The multi-scale law of requisite variety. If a system $X$ matches its environment $(Y, f)$, then for all the nested partition sequences $P$ of $X$, $C_X^P(n) \ge C_Y^{f(P)}(n)$ at each scale $n$, where $f(P)$ is the corresponding nested partition sequence of $Y$ (see Definition 10 below).

Definition 10. Given a nested partition sequence $P$ of a set $X$ and a bijection $f: X \to Y$, define $f(P)$ to be the nested partition sequence of $Y$ such that $\forall n \in \mathbb{Z}^+$, $\forall y, y' \in Y$, $y$ and $y'$ belong to the same part of $f(P)_n$ iff $f^{-1}(y)$ and $f^{-1}(y')$ belong to the same part of $P_n$.

One of the advantages of having the complexity profile depend on the partitioning scheme is that Theorem 1 holds for all possible nested partition sequences of the system, assuming its environment is partitioned in the same way. In other words, regardless of how the partitions are used to define the scale, the system must have at least as much complexity as its environment at all scales, so long as the scale is defined in the same way for the system and its environment.
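The inequality in Theorem 1 can be illustrated on a toy system. In the sketch below, the environment components are deterministic functions of the corresponding system components, so the matching condition holds by construction. The system, the coarse-graining map, the partition sequence, and the index shift used to represent the bijection are all assumptions made for this example, and the profile is computed as successive differences of summed part entropies.

```python
from collections import Counter
from itertools import product
from math import log2

def entropy(states, idx):
    """Joint Shannon entropy (bits) of components `idx`, with `states` equally likely."""
    counts = Counter(tuple(s[i] for i in idx) for s in states)
    total = len(states)
    return -sum(c / total * log2(c / total) for c in counts.values())

def complexity_profile(states, sequence):
    """C(n) = S(n) - S(n-1), where S(n) sums the entropies of the parts of partition n."""
    sums = [0.0] + [
        sum(entropy(states, sorted(part)) for part in partition) for partition in sequence
    ]
    return [sums[n] - sums[n - 1] for n in range(1, len(sums))]

# Hypothetical system: three components x_i = 2*s + a_i that share one bit s and
# each carry one private bit a_i. Hypothetical environment: y_i = x_i // 2 = s,
# a function of x_i, so that H(y_i | x_i) = 0 for every i.
states = []
for s, a0, a1, a2 in product((0, 1), repeat=4):
    xs = (2 * s + a0, 2 * s + a1, 2 * s + a2)
    ys = tuple(x // 2 for x in xs)
    states.append(xs + ys)  # indices 0..2 hold X, indices 3..5 hold Y

# A nested partition sequence of X and its image under the bijection i -> i + 3.
p_x = [[{0, 1, 2}], [{0}, {1, 2}], [{0}, {1}, {2}]]
p_y = [[{i + 3 for i in part} for part in partition] for partition in p_x]

c_x = complexity_profile(states, p_x)
c_y = complexity_profile(states, p_y)
print(c_x, c_y)
```

Here the system exceeds the environment at the smallest scale, where its private bits contribute, and ties with it at scales 2 and 3, where only the shared bit contributes.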

Furthermore, not only must all possible complexity profiles of the system match the corresponding complexity profile of the environment but also all the possible complexity profiles of all the possible subsets of the system must match the corresponding complexity profile of the corresponding subset of the environment, as stated in the following corollary to Theorem 1. This is a powerful statement, since it implies that not only must the system have at least as much complexity as its environment at all scales but also subdivisions within the system must be aligned with the corresponding subdivisions within the environment (see Section 2.6 of ref. [27]).

Corollary 1. Subdivision matching. Suppose a system $X$ matches its environment $(Y, f)$. Then, for any subsets $X' \subseteq X$ and $Y' = f(X')$, $C_{X'}^P(n) \ge C_{Y'}^{f(P)}(n)$ at each scale $n$ for all the nested partition sequences $P$ of $X'$.

Proof. Since $X$ matches $Y$, $X'$ matches $Y'$. Therefore, Theorem 1 applies to $X'$ and $Y'$. □

We now state and prove the sum rule:

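Theorem 2. Sum rule. For any system $X$ and all the nested partition sequences $P$ of $X$, $\sum_{n=1}^{|X|} C_X^P(n) = \sum_{x \in X} H(x)$.

Proof. $\sum_{n=1}^{|X|} C_X^P(n) = \sum_{n=1}^{|X|} \left[ S_X^P(n) - S_X^P(n-1) \right] = S_X^P(|X|) - S_X^P(0) = \sum_{x \in X} H(x)$, where the last equality holds because $P_{|X|}$ is the partition of $X$ into its individual components. □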

Because the complexity at each scale measures the amount of additional information present when different parts of the system are considered separately, the sum of the complexity across all the scales will simply equal the total information present in the system when each component is considered independently of the rest. Thus, given fixed individual behaviors of the system components, there is a necessary tradeoff between complexity at larger and smaller scales, regardless of which partitioning scheme is used (i.e., regardless of how the scale is defined).
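The independence of the total from the partitioning scheme can be spot-checked numerically. The sketch below draws a hypothetical joint distribution and several random nested partition sequences and compares the summed profile with the sum of the individual component entropies. The sampling procedure, the seed, and the helper names are assumptions of this example, and the profile is computed as successive differences of summed part entropies.

```python
import random
from collections import Counter
from math import log2

def entropy(states, idx):
    """Joint Shannon entropy (bits) of components `idx`, with `states` equally likely."""
    counts = Counter(tuple(s[i] for i in idx) for s in states)
    total = len(states)
    return -sum(c / total * log2(c / total) for c in counts.values())

def complexity_profile(states, sequence):
    """C(n) = S(n) - S(n-1), where S(n) sums the entropies of the parts of partition n."""
    sums = [0.0] + [
        sum(entropy(states, sorted(part)) for part in partition) for partition in sequence
    ]
    return [sums[n] - sums[n - 1] for n in range(1, len(sums))]

def random_nested_sequence(n_components, rng):
    """Build a nested partition sequence by repeatedly splitting one random part in two."""
    sequence = [[set(range(n_components))]]
    while len(sequence[-1]) < n_components:
        parts = [set(p) for p in sequence[-1]]
        target = rng.choice([p for p in parts if len(p) > 1])
        members = sorted(target)
        rng.shuffle(members)
        cut = rng.randint(1, len(members) - 1)  # both halves are non-empty
        parts.remove(target)
        parts += [set(members[:cut]), set(members[cut:])]
        sequence.append(parts)
    return sequence

rng = random.Random(0)
n_components = 4
# Hypothetical joint distribution: the empirical distribution of 50 random states.
states = [tuple(rng.randrange(3) for _ in range(n_components)) for _ in range(50)]
total_individual = sum(entropy(states, [i]) for i in range(n_components))

gaps = []
for _ in range(20):
    sequence = random_nested_sequence(n_components, rng)
    profile = complexity_profile(states, sequence)
    gaps.append(abs(sum(profile) - total_individual))
print(max(gaps))
```

The largest gap should be at the level of floating-point rounding, since the sum telescopes to the entropy total of the final partition into single components.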

4.3. Choosing from Among the Partitioning Schemes

Because of the dependence on the partitioning scheme, Definition 9 defines a family of complexity profiles. That there is no single complexity profile for this definition can be thought of as a consequence of there being no single way to coarse-grain a system. In other words, implicit in any particular complexity profile of a system is a scheme for describing that system at multiple scales. While there is no such scheme that is “the correct scheme” in an absolute sense, for any particular purpose (and, often, for almost any conceivable purpose), some schemes are far better than others.

But before examining this question, we first consider a strong advantage of the multiplicity of complexity profiles: Theorem 1 applies to all of them. Thus, any complexity profile, regardless of the partitioning scheme, can potentially be used to show a multi-scale complexity mismatch between the system and environment. This is useful when one has information about the probability distributions of the system and environment separately, but not necessarily about the joint probability distribution of the system and environment together, such that one cannot directly determine whether the system matches the environment (since quantities such as $H(y \mid x)$ would be unknown for any given system component $x$ and environmental component $y$).

Assuming one knows which system components correspond to which environmental components, one can test for potential incompatibility between the system and environment by considering any nested partition sequence of any subset of the system and the corresponding subset of the environment, as per Theorem 1 and Corollary 1. Thus, a meaningful comparison of the system and environment can be made for a wide variety of complexity profiles, provided the definitions for the system and environment are consistent. In the likely case that the complexity profiles cannot be precisely calculated, this framework thus supports a wide variety of qualitative complexity profiles that one may wish to construct.

When the correspondence between system and environmental components is unknown, there are still ways in which to compare the system and the environment. For instance, if a system

X matches its environment

Y, then

for all functions

F that map complexity profiles onto

and are non-decreasing in

for all scales

n. Thus, finding even a single function

F for which Equation (

3) does not hold is enough to show that

X cannot possibly match

Y, regardless of how they may be connected. Other such constructions that are independent of the bijection between

X and

Y are also possible.

However, although any partitioning scheme can be used to show a mismatch between a system and its environment, not all partitioning schemes are equally good choices for gaining an understanding of the structure of the system. Each part of a partition represents an approximation of the system by describing only that subset of its components, so if the purpose of the complexity profile is to characterize the structure of the system, the partitions should be chosen accordingly. For instance, for a system $X = \{x_1, x_2, x_3, x_4\}$, where $x_1 = x_2$ and $x_3 = x_4$, partitioning the system into $\{x_1, x_2\}$ and $\{x_3, x_4\}$ does not make sense if the goal is to create a reasonably faithful two-component description of the four-component system.

As a heuristic, successive cuts in a nested partition sequence should cut through random variables with significant mutual information (i.e., significant redundancy), although, of course, taking a greedy algorithm (i.e., first maximizing complexity at scale 2 and then choosing the next partition to maximize complexity at scale 3, given the constraint that it has to be nested within the previous partition, and so on) may not always match the system’s structure. Nonetheless, this greedy algorithm does at least provide a consistent way to define complexity profiles across various systems such that complexity is decreasing with scale. (Formally, we can define this complexity profile using the nested partition sequence P that maximizes the complexity profile according to a “dictionary ordering”, i.e., that maximizes for any . However, just because P maximizes the complexity profile for X according to this (or any other) metric does not guarantee that for an environment of X, will maximize the complexity profile of Y according to the same metric.)
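The greedy heuristic described above can be sketched in a few lines of Python. This is a brute-force illustration under stated assumptions, not a definitive implementation: it enumerates every way of splitting every current part in two (which is only feasible for small systems), breaks ties by enumeration order, and uses the identity $C(n) = I(A; B)$ for the two parts $A$ and $B$ created at step $n$. The helper names and the four-component example system are hypothetical.

```python
from collections import Counter
from itertools import combinations, product
from math import log2

def entropy(states, idx):
    """Joint Shannon entropy (bits) of components `idx`, with `states` equally likely."""
    counts = Counter(tuple(s[i] for i in idx) for s in states)
    total = len(states)
    return -sum(c / total * log2(c / total) for c in counts.values())

def mutual_information(states, a, b):
    """I(A; B) = H(A) + H(B) - H(A u B) for disjoint index sets a and b."""
    return (entropy(states, sorted(a)) + entropy(states, sorted(b))
            - entropy(states, sorted(a | b)))

def bipartitions(part):
    """Yield each way to split `part` into two non-empty sets exactly once."""
    first, *rest = sorted(part)
    for r in range(len(rest)):
        for extra in combinations(rest, r):
            a = {first, *extra}
            yield a, set(part) - a

def greedy_sequence(states, n_components):
    """Nested partition sequence and profile from always taking the cut with largest I(A; B)."""
    sequence = [[set(range(n_components))]]
    profile = [entropy(states, range(n_components))]  # C(1) = H(X)
    while len(sequence[-1]) < n_components:
        best = None
        for part in sequence[-1]:
            for a, b in bipartitions(part):
                gain = mutual_information(states, a, b)
                if best is None or gain > best[0] + 1e-12:
                    best = (gain, part, a, b)
        gain, part, a, b = best
        sequence.append([p for p in sequence[-1] if p is not part] + [a, b])
        profile.append(gain)
    return sequence, profile

# Hypothetical system: components 0 and 1 are identical bits, components 2 and 3
# are identical bits, and the two pairs are independent of each other.
states = [(a, a, b, b) for a, b in product((0, 1), repeat=2)]
sequence, profile = greedy_sequence(states, 4)
print(sequence[1], profile)
```

For this system the first greedy cut separates each redundant pair, so each of the two resulting parts is a faithful description of the whole, whereas a cut that kept each pair together would have zero mutual information across it.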

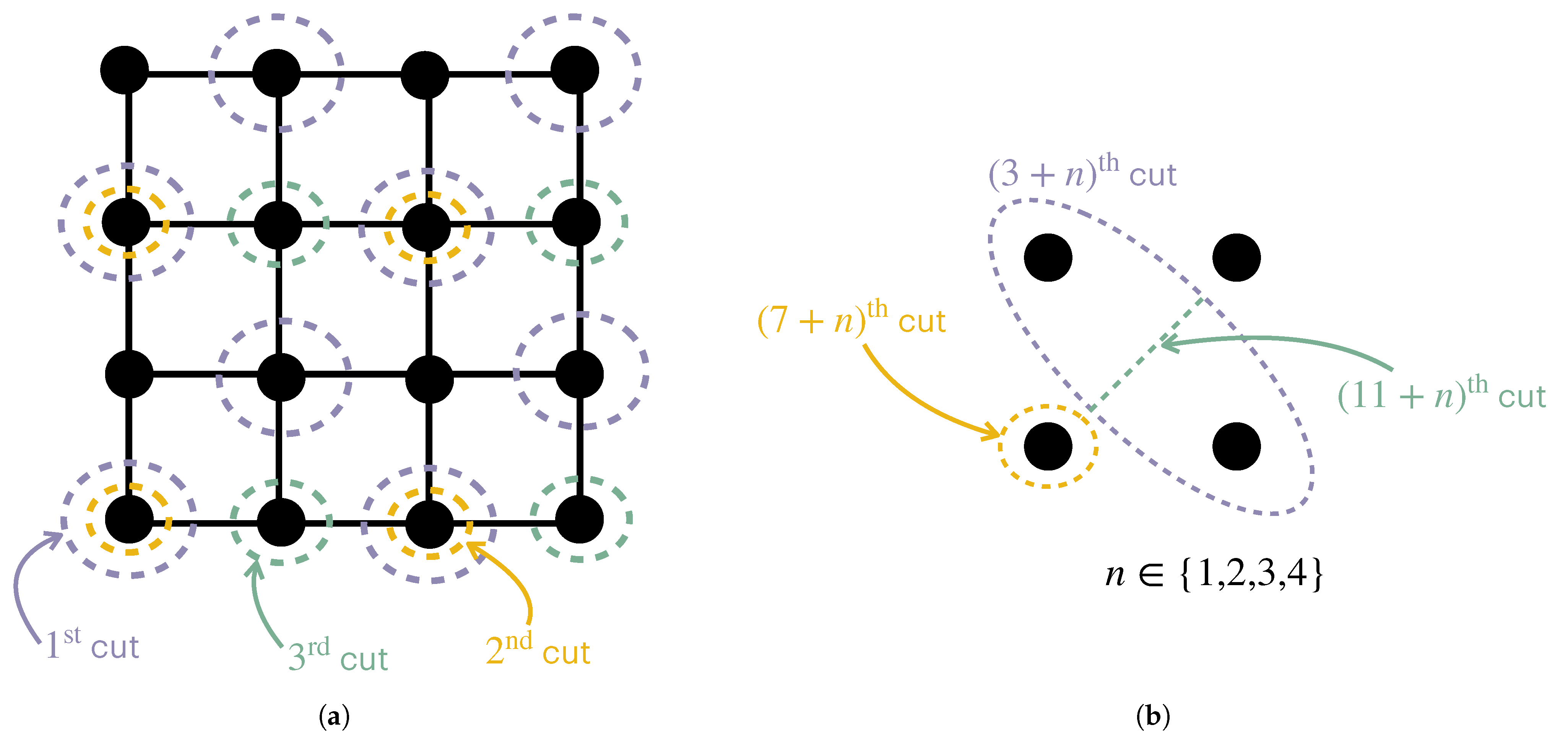

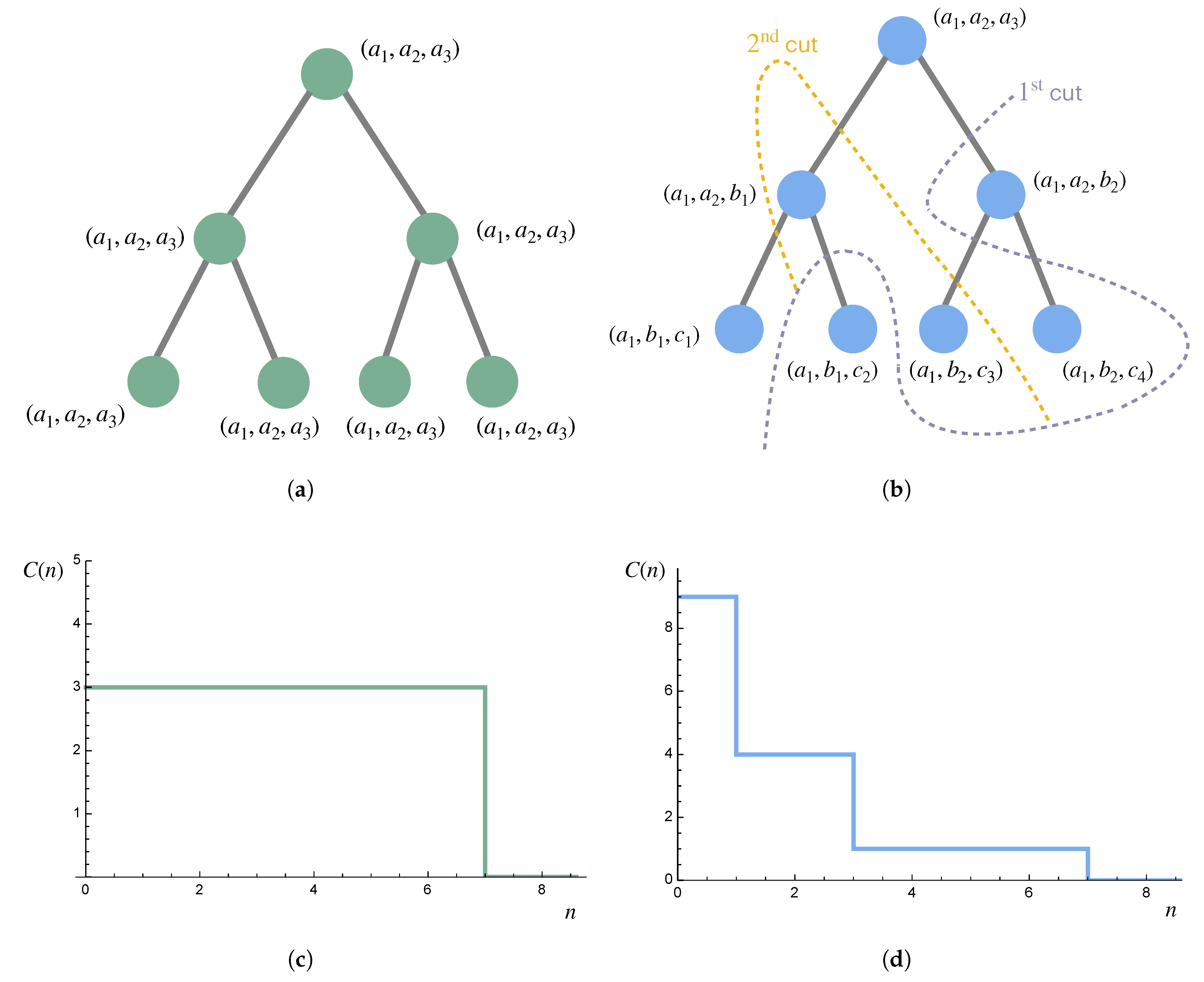

Example 8. Consider a system such that , , and , but otherwise, all the components are mutually independent (Figure 6). Then, intuitively, we expect that , , and for . A nested partition sequence that gives us this complexity profile is with , , and , and where it does not matter which subsequent partitions are used, since each part of contains mutually independent random variables.

Example 9. Consider a two-dimensional grid of random variables in which two variables have nonzero mutual information conditioning on the rest if and only if they are adjacent. One way to partition the grid that respects its structure is given in Figure 7.

Example 10. Consider a hierarchy consisting of seven individuals: a leader with two subordinates, each of whom has two subordinates, as depicted in Figure 8. The behavior of each individual is represented by three random variables, each with complexity $c$. Thus, if examined separately from the rest of the system, the complexity of each individual is $3c$. On the left, everyone completely follows the leader, resulting in a complexity of $3c$ up to scale 7 regardless of the partitioning scheme. On the right, some information is transmitted down the hierarchy, but lower levels are also given some autonomy, resulting in more complexity at smaller scales but less at larger scales (but with the same area under the curve, consistent with the sum rule).

4.4. Combining Subsystems

If a system can be divided into independent subsystems, the complexity profile of the system as a whole can be written as the sum of the complexity profiles of each of the independent subsystems. And if a system can be divided into m subsystems that behave identically, its complexity profile will equal that of any one of the subsystems except with the scale axis stretched by a factor of m. These properties are made precise below.

Theorem 3. The additivity of the complexity profiles of superimposed independent systems. Suppose two disjoint systems A and B are independent, i.e., the mutual information , and let . Consider any nested partition sequences of A and of B. Then, for all the nested partition sequences that restrict to on and on , In other words, the complexity profiles of independent subsystems add.

Proof. This result follows from restricting to on A and on B and the fact that for any subsets and , . □
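The entropy fact used in the proof of Theorem 3 can be checked numerically on a toy example. The following is a minimal sketch, assuming that the fact in question is the additivity of Shannon entropy across independent systems (the joint entropy of a subset of A together with a subset of B equals the sum of the two subset entropies). The distributions `joint_a` and `joint_b` and all function names are hypothetical and chosen only for illustration; the sketch does not compute a complexity profile.

```python
import itertools
import math
from collections import defaultdict


def entropy(joint, idx):
    """Shannon entropy (bits) of the marginal over the variable positions in idx."""
    marginal = defaultdict(float)
    for state, p in joint.items():
        marginal[tuple(state[i] for i in idx)] += p
    return -sum(p * math.log2(p) for p in marginal.values() if p > 0)


def subsets(n):
    """All subsets of range(n), each as a tuple of indices."""
    return itertools.chain.from_iterable(
        itertools.combinations(range(n), k) for k in range(n + 1)
    )


def max_additivity_gap(joint_a, joint_b):
    """Largest |H(A' u B') - H(A') - H(B')| over all subsets A' of A and B' of B,
    where the joint distribution of A and B is the product (independent) one."""
    n_a = len(next(iter(joint_a)))
    n_b = len(next(iter(joint_b)))
    # Independence assumption: the joint probability factorizes.
    joint_ab = {sa + sb: pa * pb
                for sa, pa in joint_a.items()
                for sb, pb in joint_b.items()}
    gap = 0.0
    for sub_a in subsets(n_a):
        for sub_b in subsets(n_b):
            shifted_b = tuple(n_a + j for j in sub_b)  # positions of B' in A u B
            lhs = entropy(joint_ab, sub_a + shifted_b)
            rhs = entropy(joint_a, sub_a) + entropy(joint_b, sub_b)
            gap = max(gap, abs(lhs - rhs))
    return gap


# Hypothetical toy systems: A has two correlated bits, B has two dependent bits.
joint_a = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
joint_b = {(0, 0): 0.5, (0, 1): 0.25, (1, 1): 0.25}

print(max_additivity_gap(joint_a, joint_b))
```

The printed gap should be at the level of floating-point round-off. Because the check runs over every pair of subsets, it covers whichever parts a nested partition sequence of the combined system might induce on A and on B.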

In order to formulate the second property, we first build up some notation in the following two definitions:

Definition 11. For a system X and a positive integer m, let , where the are disjoint systems for which there exist bijections such that , . In other words, contains m identical copies of X, such that the behavior of any one copy completely determines the behaviors of all the others.

Definition 12. Given a nested partition sequence P of a system X and a positive integer m, define the nested partition sequence of the system (with bijections ) as follows: For , . For , define such that it restricts to (see Definition 10) on each , where if and otherwise.

Theorem 4. The scale additivity of replicated systems. Let P be any nested partition sequence of a system X. Then, In other words, the effect of including m exact replicas of X is to stretch the scale axis of the complexity profile by a factor of m.

Proof. This result follows from Definitions 11 and 12. □
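The replication property in Definition 11 can also be illustrated numerically. The sketch below is only an illustration under stated assumptions: it builds m exact copies of a hypothetical two-bit system `joint_x` and checks that the joint entropy of any collection of replicated components equals the joint entropy of the distinct original components they copy. It also checks that the sum of single-component entropies grows by a factor of m while the total entropy is unchanged, which is what one would expect if the profile is stretched along the scale axis. All names are hypothetical, and no complexity profile is computed.

```python
import itertools
import math
from collections import defaultdict


def entropy(joint, idx):
    """Shannon entropy (bits) of the marginal over the variable positions in idx."""
    marginal = defaultdict(float)
    for state, p in joint.items():
        marginal[tuple(state[i] for i in idx)] += p
    return -sum(p * math.log2(p) for p in marginal.values() if p > 0)


def replicate(joint, m):
    """Joint distribution of m exact copies: copy k of component i sits at k*n + i."""
    return {state * m: p for state, p in joint.items()}


def max_replica_gap(joint, m):
    """Largest |H(S) - H(originals of S)| over all subsets S of the replicated system."""
    n = len(next(iter(joint)))
    replicated = replicate(joint, m)
    gap = 0.0
    for size in range(n * m + 1):
        for sub in itertools.combinations(range(n * m), size):
            # Map each replicated component back to the original it copies.
            originals = tuple(sorted({j % n for j in sub}))
            gap = max(gap, abs(entropy(replicated, sub) - entropy(joint, originals)))
    return gap


def sum_of_component_entropies(joint):
    """Sum of the entropies of the individual components."""
    n = len(next(iter(joint)))
    return sum(entropy(joint, (i,)) for i in range(n))


# Hypothetical toy system X: two correlated bits, replicated m = 3 times.
joint_x = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
m = 3
replicated_x = replicate(joint_x, m)

print(max_replica_gap(joint_x, m))
print(sum_of_component_entropies(joint_x), sum_of_component_entropies(replicated_x))
```

The first printed value should be at round-off level. The two sums differ by the factor m, while the total entropy of the replicated system equals that of X.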

Theorems 3 and 4 indicate that for any block-independent system, there exists a nested partition sequence that yields the same complexity profile as that given by the formalism in refs. [7, 32]. (The formalism in refs. [7, 32] is stated in ref. [32] to be the only such formalism that is a linear combination of entropies of subsets of the system, yields its results for block-independent systems, and is symmetric with respect to permutations of the components. The partition formalism in this paper does not contradict this statement, since any partitioning scheme will break this permutation symmetry for systems with greater than two components.)

5. Discussion

The motivation behind our analysis has been to construct a definition of a complexity profile for multi-component systems that obeys both the sum rule and a multi-scale version of the law of requisite variety. In order to do so, we first had to generalize the law of requisite variety to multi-component systems. We then created a formal definition for a complexity profile and defined two properties—the multi-scale law of requisite variety and the sum rule—that complexity profiles should satisfy. Finally, we constructed a class of examples of complexity profiles and proved that they satisfy these properties. We demonstrated their application to a few simple systems and showed how they behave when independent and dependent subsystems are combined.

This formalism is purely descriptive in that questions of causal influence and mechanism (i.e., what determines the states of each component) are not considered; rather, only the possible states of the system and its environment and correlations among these states are considered. (This approach is analogous to how statistical mechanics does not consider the Newtonian dynamics of individual gas molecules but rather only the probabilities of finding the gas in any given state.) By abstracting out notions of causality and mechanism, this approach allows for an understanding of the space of all the possible system behaviors and for the identification of systems that are doomed to failure, regardless of the mechanism. The way in which system and environmental components are mechanistically linked and the evolution and adaptability of complex systems over time are directions for future work.

More elegant profiles than those presented in Section 4 may exist. One could also imagine complexity profiles that take advantage of some known structure of the systems under consideration; for instance, for systems that can be embedded into , where d is far lower than the number of system components, Fourier methods could be explored. More broadly, the sum rule could be relaxed, allowing for other definitions of multi-scale complexity. Completely eliminating any tradeoff of complexity among scales would likely lead to under-constrained profiles; certainly, smaller-scale complexity must be reduced in order to create larger-scale structure. But one could imagine forms that this tradeoff may take other than . Even more broadly, other definitions of what it means for a system to match its environment could be considered, as long as some sort of multi-scale law of requisite variety is retained, so that the complexity profiles of multiple systems can be meaningfully compared.

With all that said, the profiles presented here are the first, to the best of our knowledge, to obey both a sum rule and a multi-scale law of requisite variety. At the very least, these formalisms provide a formal grounding that can be used to support conceptual claims that are made using complexity profiles. Our hope is that these formalisms will spur further development in our understanding of the general properties of multi-component systems.