A Fusion of Entropy-Enhanced Image Processing and Improved YOLOv8 for Smoke Recognition in Mine Fires

Abstract

1. Introduction

2. Algorithm Principles

- (1) Capture smoke video from mine monitoring cameras and split the video into individual frames.

- (2) Apply fusion entropy enhancement to the smoke frame images, structurally decoupling the suppression of low-entropy (background) regions from the enhancement of high-entropy (smoke) regions, and construct a smoke image dataset.

- (3) Extract smoke target feature information from the images using the improved YOLOv8 network model.

- (4) Recognize the smoke targets in the frame images.
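Step (2) relies on the contrast between low-entropy background regions and high-entropy smoke regions. The surviving text does not say how entropy is measured, so the following is only a minimal sketch that assumes Shannon entropy of the 256-bin gray-level histogram of a frame-difference image. The helper names `gray_entropy` and `difference_entropy` are hypothetical. It illustrates why a static background scores near zero while a region that changes between frames, as diffusing smoke does, scores high.

```python
import numpy as np

def gray_entropy(img: np.ndarray) -> float:
    """Shannon entropy (bits) of the 256-bin gray-level histogram of an 8-bit image."""
    hist = np.bincount(img.ravel(), minlength=256)
    p = hist[hist > 0] / img.size          # probabilities of the gray levels that occur
    return float(np.sum(p * np.log2(1.0 / p)))

def difference_entropy(prev: np.ndarray, curr: np.ndarray) -> float:
    """Entropy of the absolute difference between two consecutive 8-bit frames."""
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16)).astype(np.uint8)
    return gray_entropy(diff)

rng = np.random.default_rng(0)
background = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)

# Static background: consecutive frames are identical, so the difference is all zeros.
print(difference_entropy(background, background))  # prints 0.0

# Simulated smoke (assumption): a 32x32 patch changes unpredictably between frames.
smoky = background.copy()
smoky[16:48, 16:48] = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)
print(difference_entropy(background, smoky))       # clearly positive
```

Working on the difference image rather than on a single frame reflects the spatio-temporal view of the method: entropy arises from change over time, not from texture alone.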

3. Fire Smoke Fusion Entropy-Enhanced Image Methods

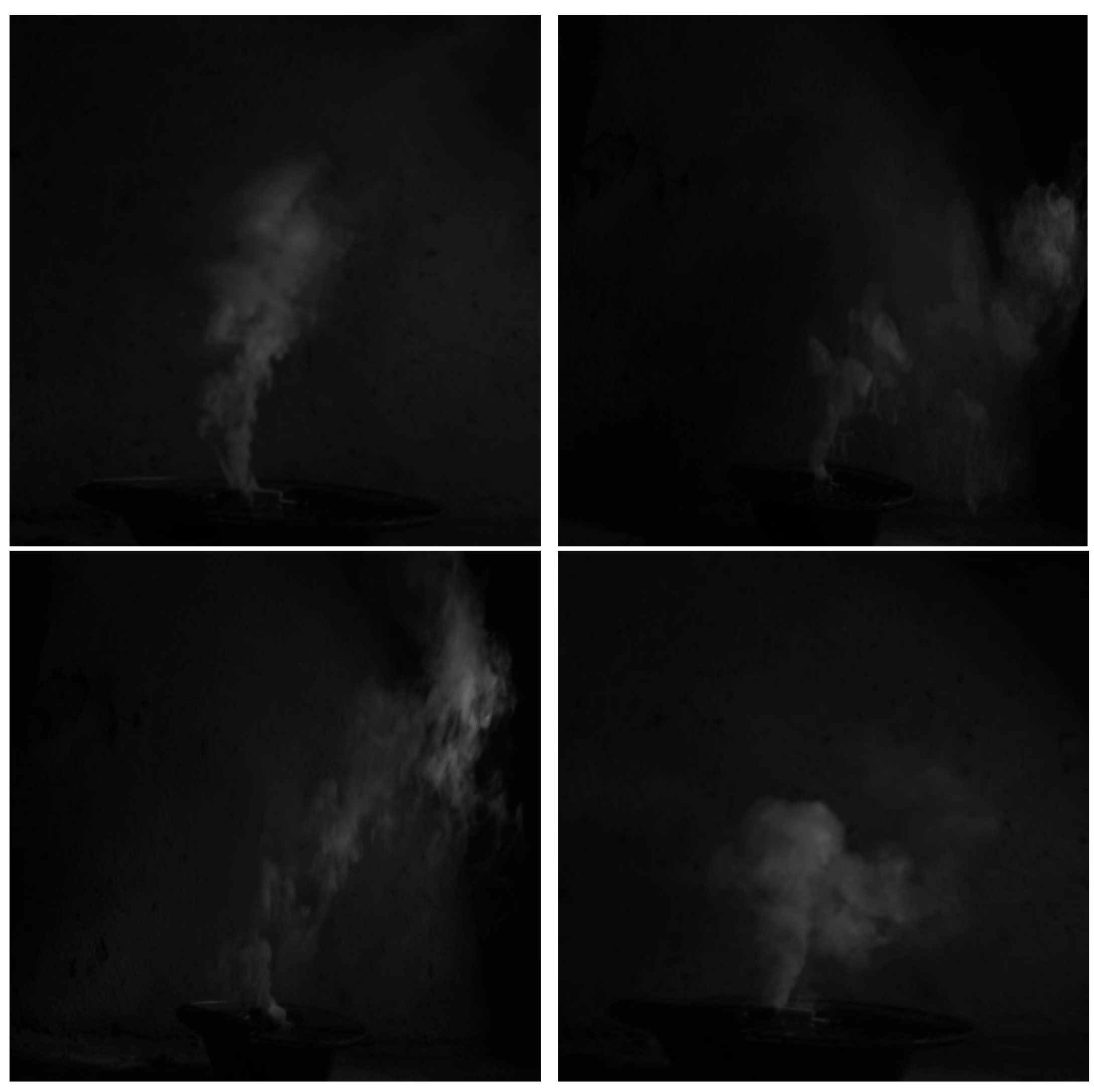

3.1. Characterization of Mine Fire Smoke Images

3.2. Principles of Fire Smoke Fusion Entropy-Enhanced Image Methods

3.3. Fusion Entropy-Enhanced Image Method—Frame M-Value Calculation and Algorithm Steps

- (1) Compute the pixel differences between each pair of adjacent frames among five consecutive frames extracted from the smoke video, and sum the resulting difference images; the calculation is given by Equation (2).

- (2) Compute the pixel differences between equally spaced frames separated by one intervening frame, and sum the resulting difference images; the calculation is given by Equation (3).

- (3) Compute the pixel differences between equally spaced frames separated by two intervening frames, and sum the resulting difference images; the calculation is given by Equation (4).

- (4) Compute the pixel differences between equally spaced frames separated by three intervening frames, and sum the resulting difference images; the calculation is given by Equation (5).

- (5) Add the adjacent-frame result of step (1) to the interval results of steps (2), (3), and (4), and perform a second summation with the middle frame. After restoration, this yields an image in which the low-entropy background noise is suppressed and the high-entropy smoke target is enhanced; the calculation is given by Equation (6).
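Equations (2) to (6) did not survive extraction. The steps can be sketched in NumPy under three stated assumptions: (i) differences are absolute pixel differences, (ii) the "restoration" step is clipping back to the 8-bit range [0, 255], and (iii) the frames are grayscale and of equal size. `fused_m_value` is a hypothetical name, and the code is not the authors' implementation.

```python
import numpy as np

def fused_m_value(frames):
    """Fuse five consecutive grayscale frames by summing multi-interval frame differences.

    Sketch only: absolute differences and final clipping are assumptions.
    """
    if len(frames) != 5:
        raise ValueError("expected exactly five consecutive frames")
    f = [np.asarray(fr, dtype=np.int32) for fr in frames]  # widen to avoid uint8 overflow
    n = len(f)
    diff_sum = np.zeros_like(f[0])
    # gap 1: adjacent frames, step (1), 4 differences
    # gap 2: one intervening frame, step (2), 3 differences
    # gap 3: two intervening frames, step (3), 2 differences
    # gap 4: three intervening frames, step (4), 1 difference
    for gap in range(1, n):
        for k in range(n - gap):
            diff_sum += np.abs(f[k + gap] - f[k])
    # Step (5): second summation with the middle (third) frame, then map back to 8 bits
    fused = f[n // 2] + diff_sum
    return np.clip(fused, 0, 255).astype(np.uint8)

# A pixel ramping 0, 10, 20, 30, 40 accumulates 40 + 60 + 60 + 40 = 200 of change,
# plus the middle-frame value 20.
ramp = [np.full((2, 2), 10 * i, dtype=np.uint8) for i in range(5)]
print(fused_m_value(ramp)[0, 0])  # 220
```

Under these assumptions, static pixels keep the middle frame's value while pixels that change across the window are brightened, so smoke stands out relative to the background. Any explicit background attenuation in the authors' Equation (6) is not modelled here.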

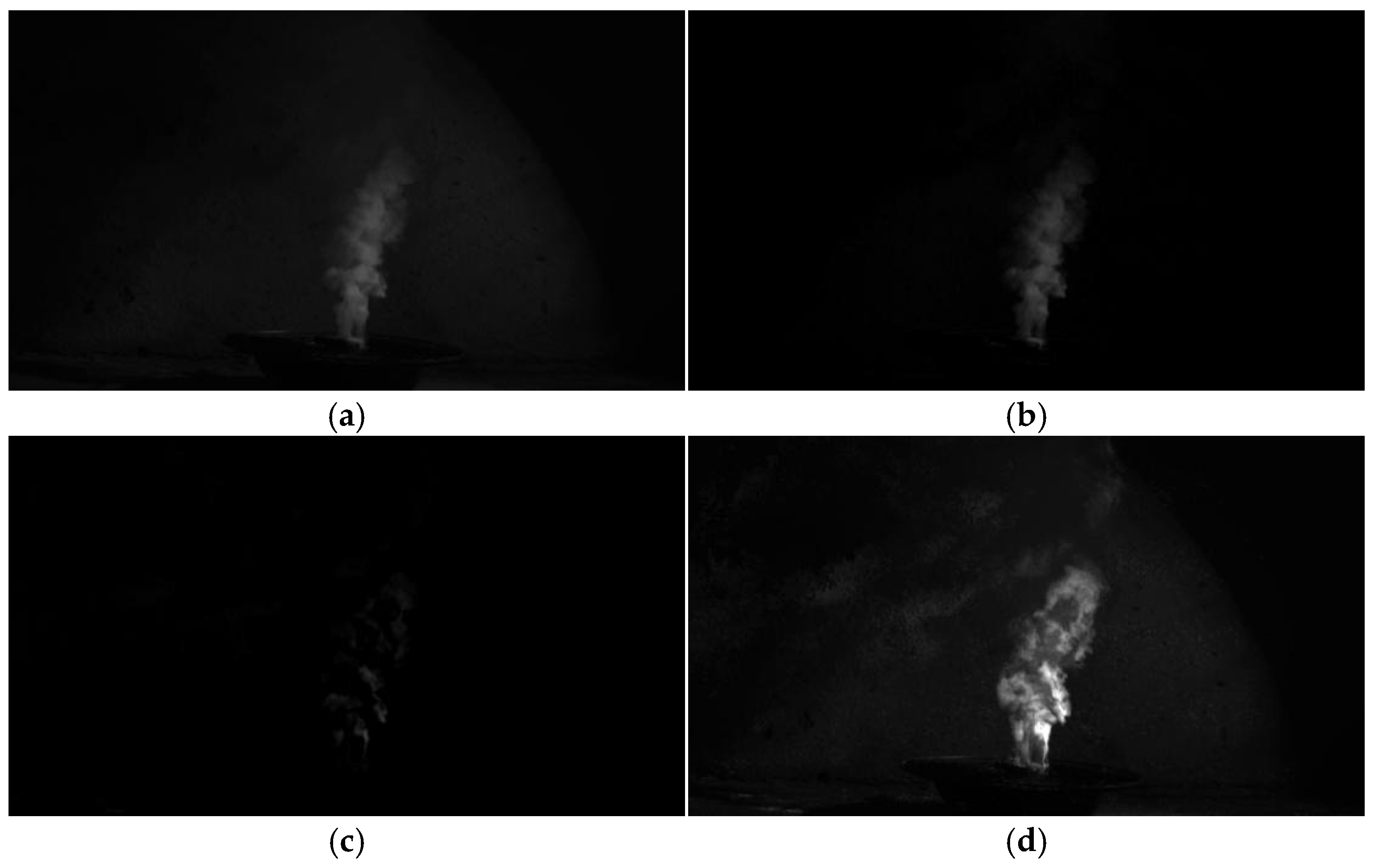

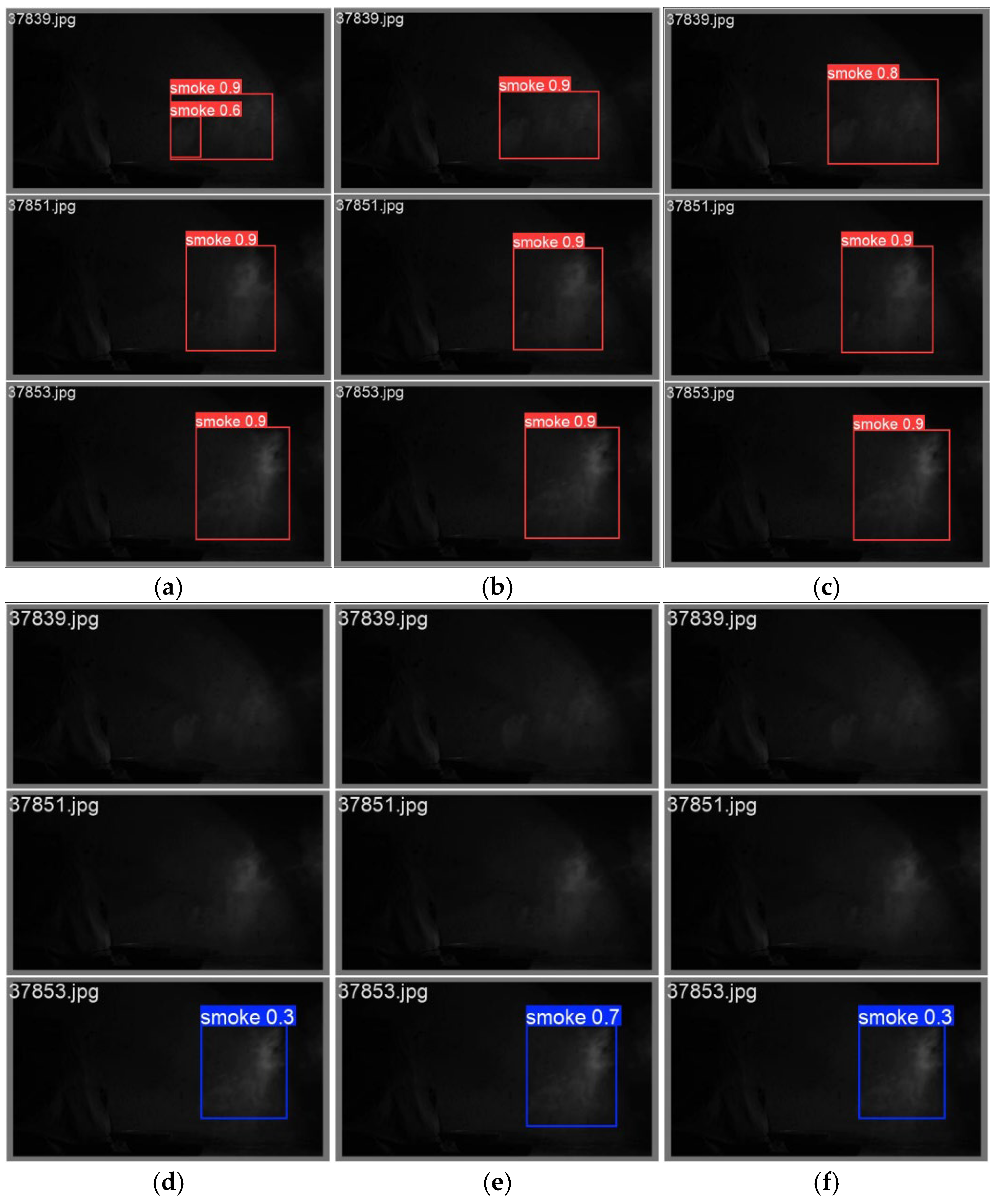

3.4. Analysis of Fusion Entropy-Enhanced Image Effect and Comparison Results with Existing Methods

4. Improved YOLOv8m-Based Smoke Image Recognition Algorithm

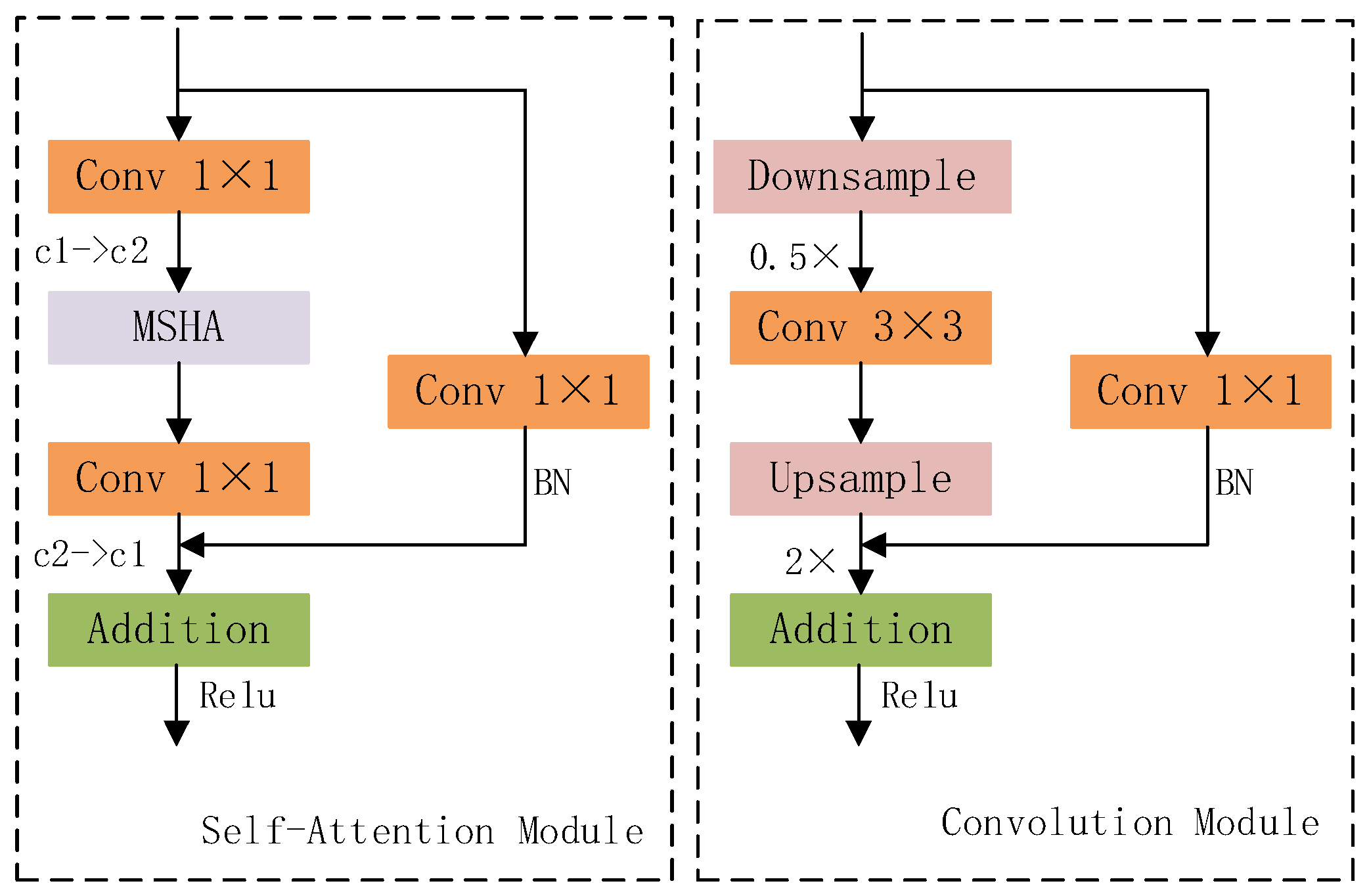

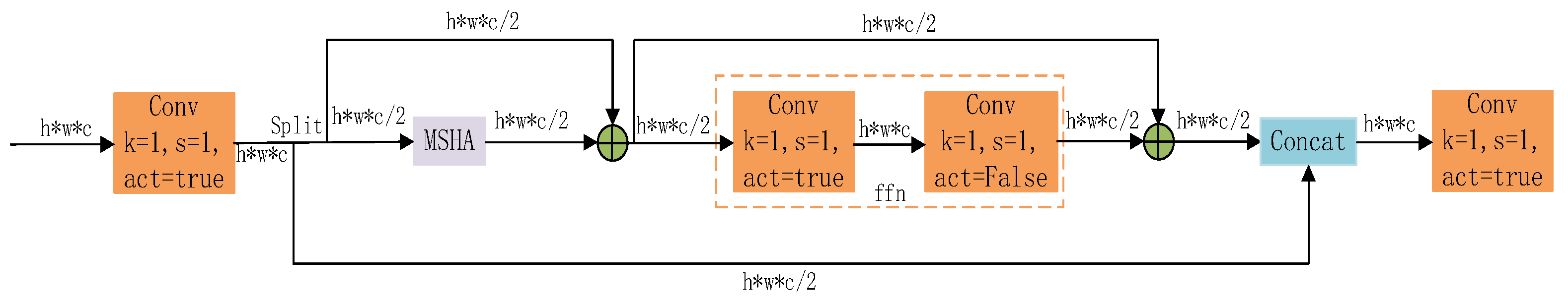

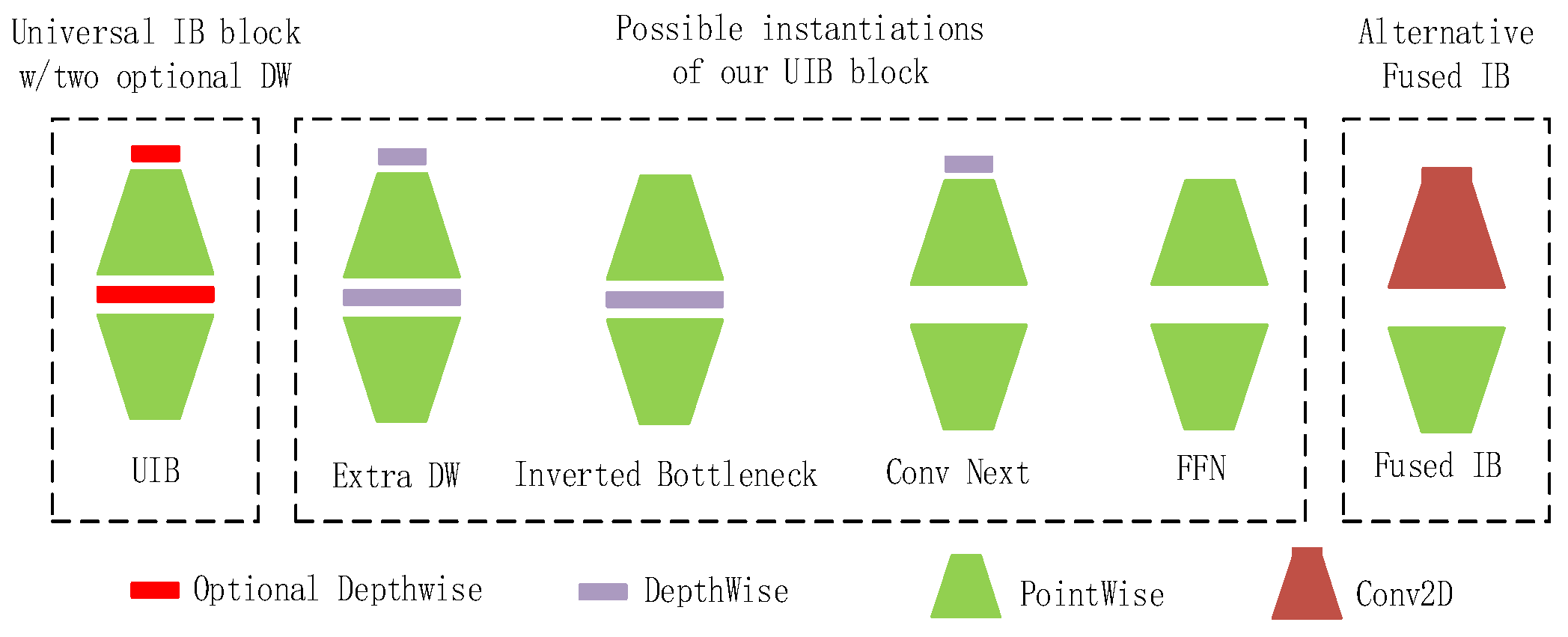

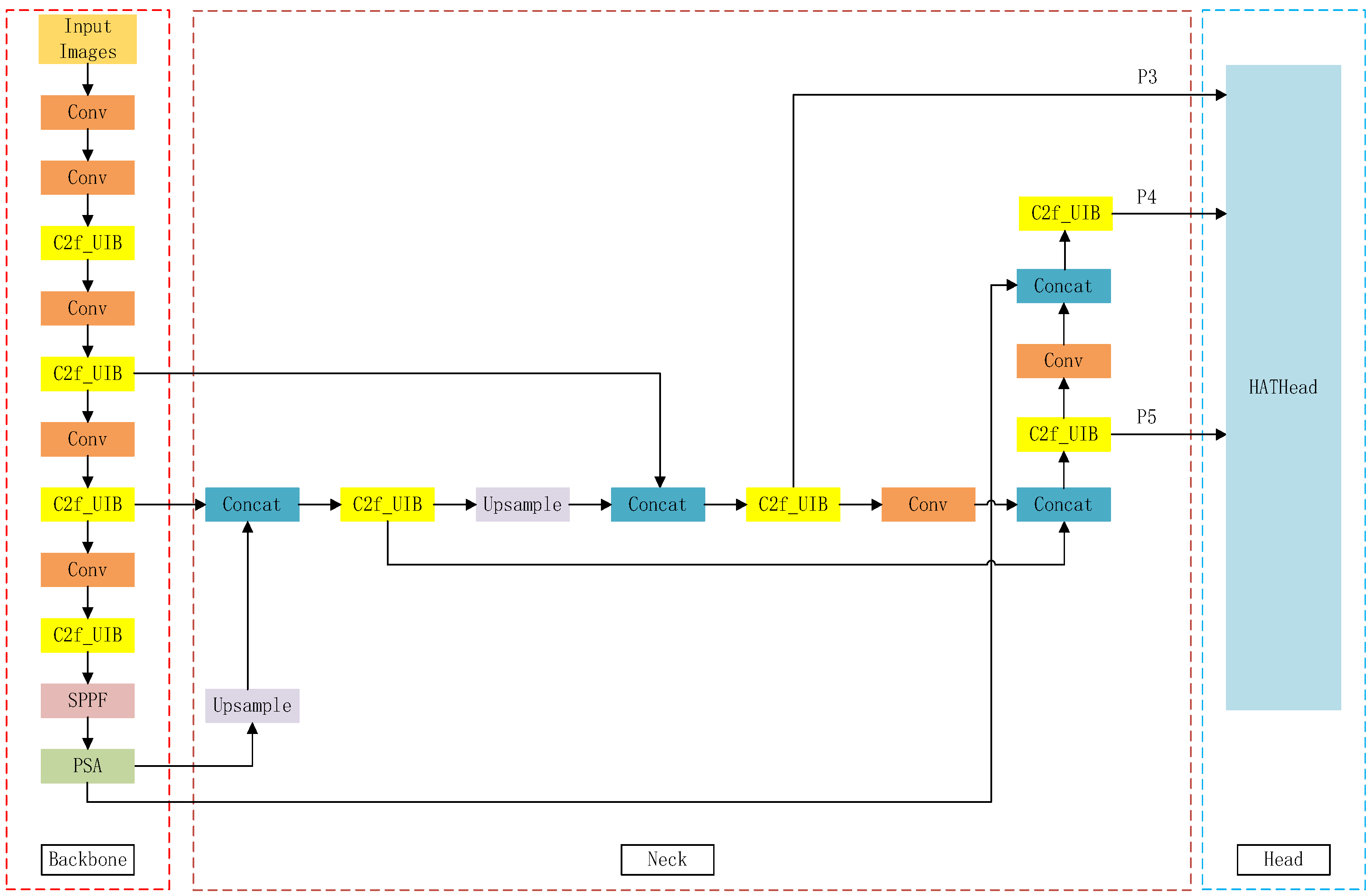

4.1. Algorithm Introduction and Improvement Mechanisms

4.2. Improved YOLOv8m Network Modeling Structure

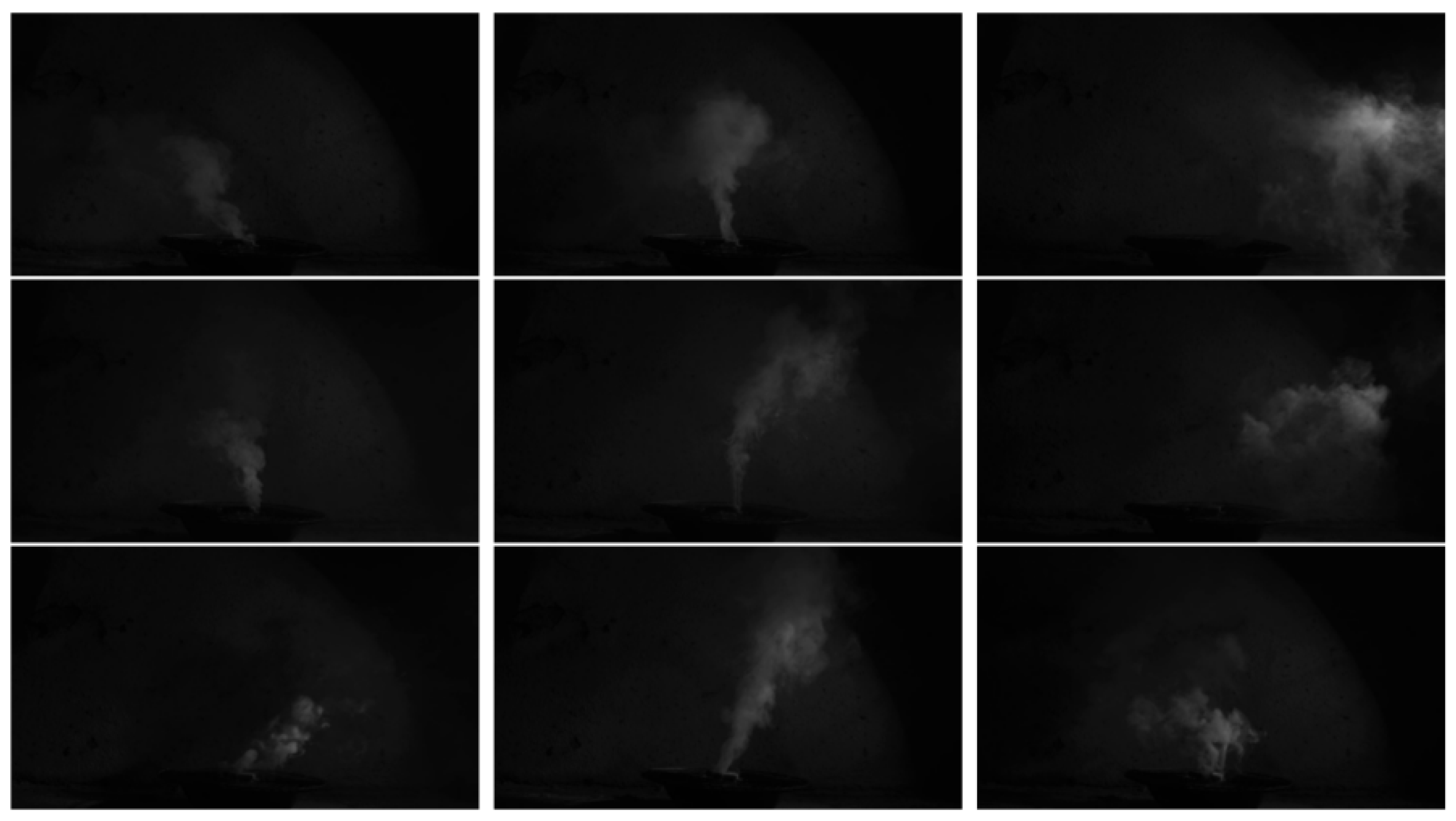

5. Construction of the MFSIDD Dataset

6. Test Results and Analysis

6.1. Algorithm Performance Evaluation Metrics

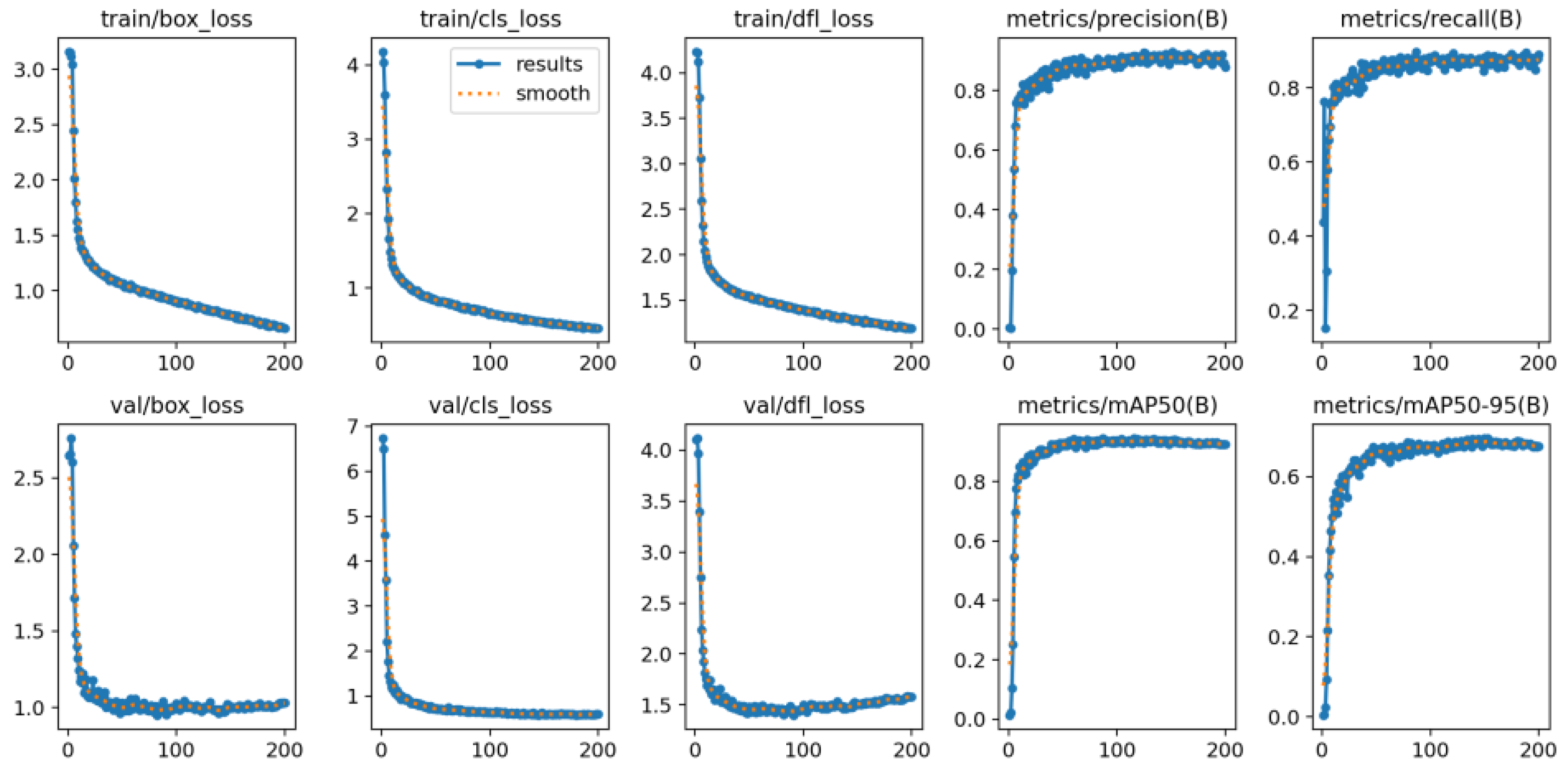

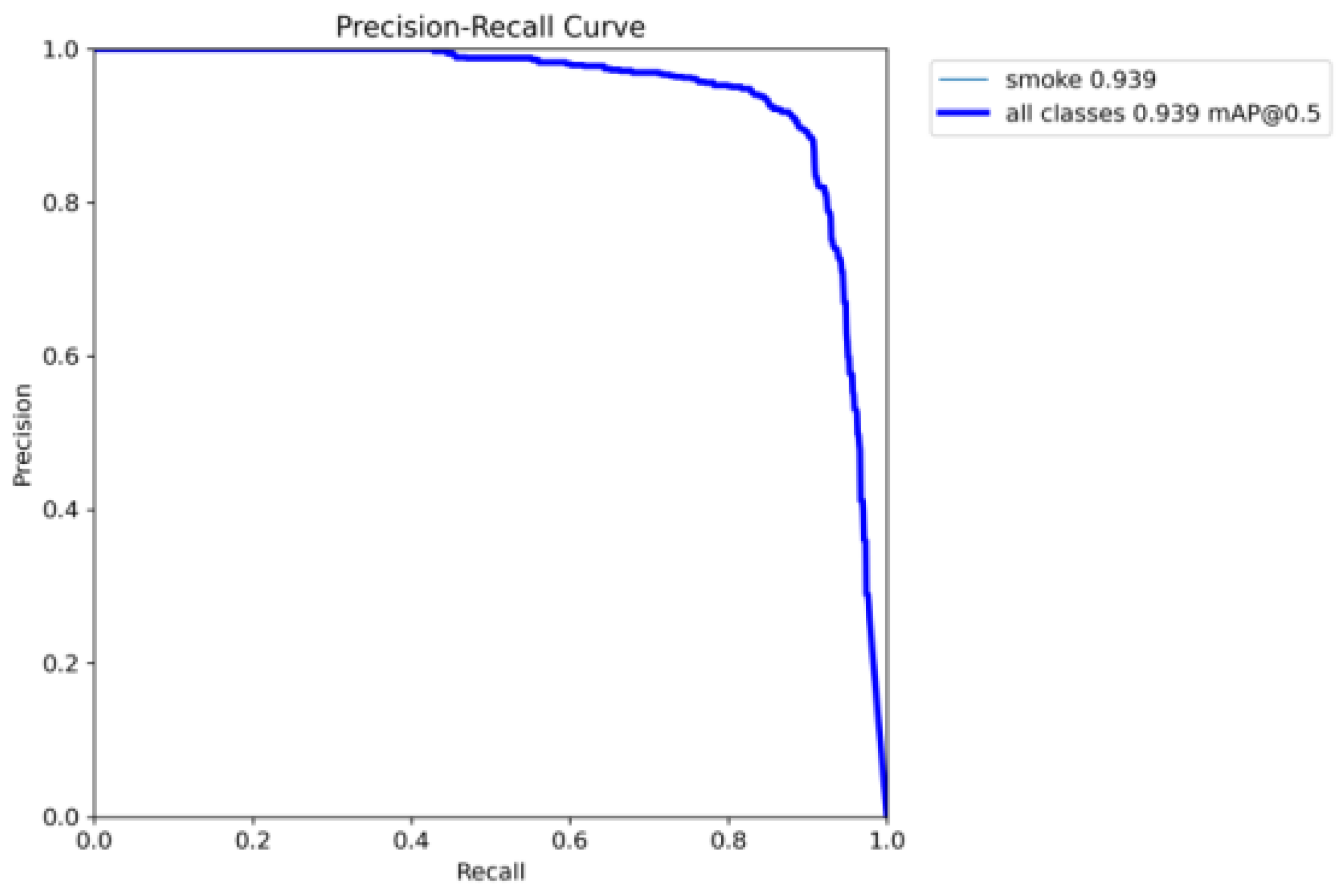

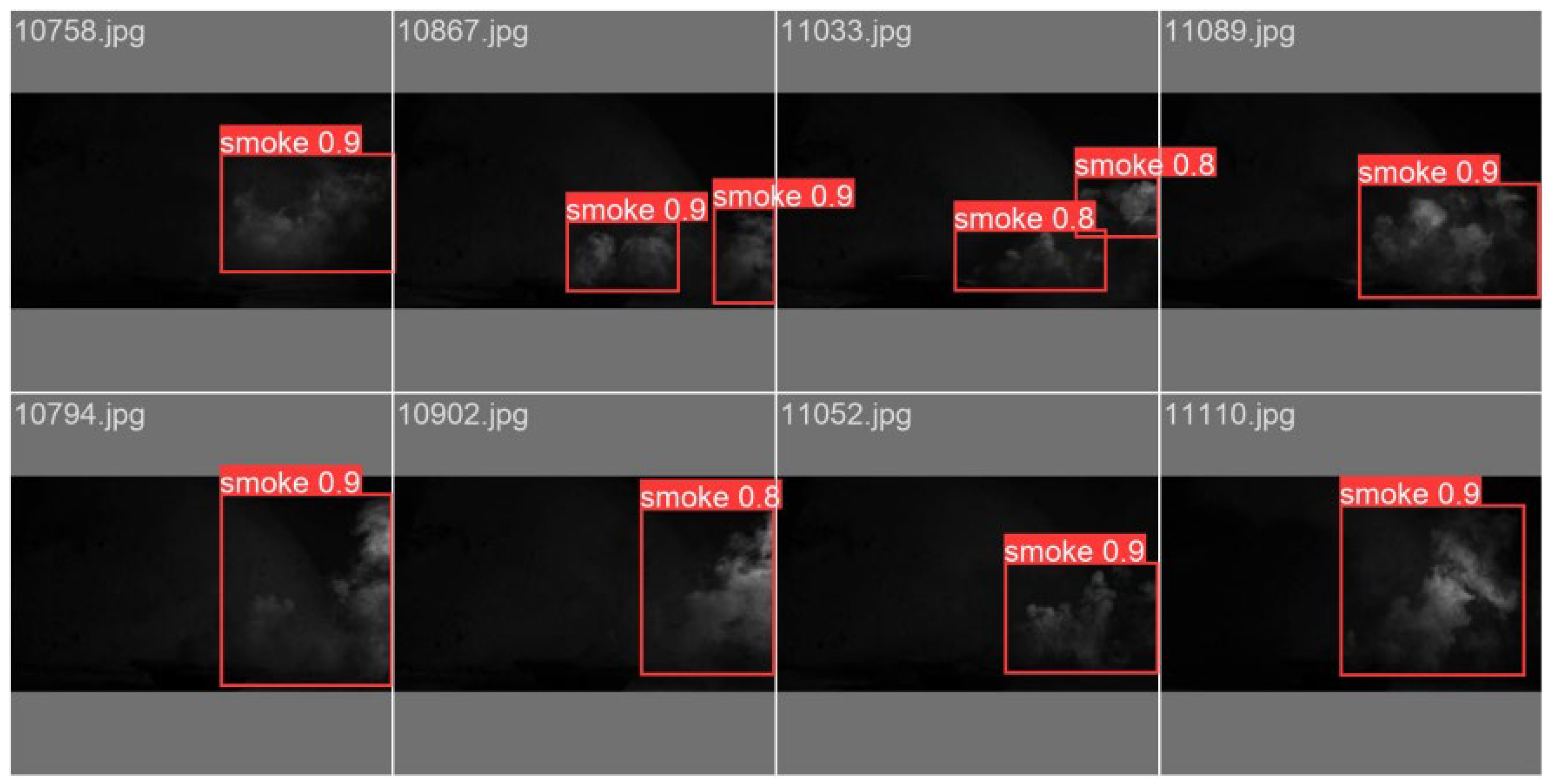
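The metric formulas of this section survive only as figures. The following minimal sketch assumes the standard definitions: P = TP/(TP+FP), R = TP/(TP+FN), AP is the area under the precision-recall curve, mAP (50) is evaluated at IoU 0.5, and mAP (50–95) is averaged over IoU thresholds 0.50:0.05:0.95. If smoke is the only class, mAP reduces to the AP of that class. AP here uses all-point interpolation, as in PASCAL VOC from 2010 on. Other toolkits, including the one used to train YOLOv8, may interpolate differently, so the results need not match to the last digit. All function names are hypothetical.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive and false-negative counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r

def average_precision(recall, precision):
    """All-point interpolated AP; recall must be sorted in increasing order."""
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]   # precision envelope (non-increasing)
    idx = np.where(mrec[1:] != mrec[:-1])[0]         # points where recall changes
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))

IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)  # thresholds averaged for mAP (50-95)

print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))        # 1/7, about 0.143
print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

A detection counts as a true positive at a given threshold when its IoU with an unmatched ground-truth box reaches that threshold. mAP (50–95) therefore repeats the AP computation once per entry of `IOU_THRESHOLDS` and averages the results.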

6.2. Analysis of Target Detection Results

6.3. Comparative Analysis of Ablation Experiments and Algorithms

7. Conclusions

- (1) Smoke diffusion changes the entropy of spatio-temporal information, whereas background interference remains entropy-invariant (or nearly so). Building on this separation of spatio-temporal entropy, an entropy enhancement method is proposed that fuses the differences between equally spaced frames of the smoke target images. The method effectively suppresses background noise while enhancing the detail clarity of the smoke target image.

- (2) The YOLOv8m-HPC model for recognizing smoke target images is proposed. The detection head of the target detection layer is replaced with the super-resolution detection head HATHead module, which incorporates an attention mechanism and improves the feature expression capability of target detection. In addition, the self-attention (PSA) module is introduced and the C2f module is replaced with the C2f_UIB module, which improves model performance without significantly increasing the computational cost. Together, these changes improve the model's ability to recognize both small and large smoke regions in images.

- (3) The experimental results show that the improved YOLOv8m-HPC model improves the recall and mean average precision of smoke recognition and increases the single-frame detection speed, reaching 25 fps, which satisfies the requirements of real-time detection and fast inference. Compared with YOLOv5m, it improves precision by 5.0, recall by 3.1, mAP (50) by 2.9, and mAP (50–95) by 6.1 percentage points. Compared with YOLOv8m, it improves recall, mAP (50), and mAP (50–95) by 2.3, 0.4, and 1.6 percentage points, respectively, and increases FPS by 47%, although precision decreases by 0.8 percentage points. Compared with YOLOv11n, its inference speed is lower (25 fps versus 31 fps, about 19% slower), but precision, recall, mAP (50), and mAP (50–95) improve by 11.7, 16.9, 13.5, and 18.5 percentage points, respectively. Among the compared baseline algorithms, the proposed YOLOv8m-HPC recognition method achieves the highest recall, mAP (50), and mAP (50–95).
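Item (2) credits part of the gain to the PSA self-attention module. The sketch below assumes that PSA follows the partial self-attention design introduced in YOLOv10. In that design the channels are split in two, self-attention is applied to one part only, and the two parts are concatenated again. This is why the cost stays modest: the attention branch only processes half of the channels. The projection matrices here are random placeholders. The feed-forward branch and the 1×1 convolutions of a full PSA block are omitted, and all names are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def partial_self_attention(x, wq, wk, wv, wo):
    """Simplified single-head partial self-attention on a (C, H, W) feature map.

    The first C/2 channels pass through unchanged; the second C/2 channels are
    treated as H*W tokens and refined by residual self-attention.
    """
    c, h, w = x.shape
    if c % 2:
        raise ValueError("channel count must be even")
    half = c // 2
    a, b = x[:half], x[half:]                        # channel split
    tokens = b.reshape(half, h * w).T                # (H*W, C/2): one token per position
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv  # (H*W, d) each
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]))    # (H*W, H*W) attention weights
    tokens = tokens + (attn @ v) @ wo                # residual update back to C/2 dims
    b_out = tokens.T.reshape(half, h, w)
    return np.concatenate([a, b_out], axis=0)

rng = np.random.default_rng(0)
C, H, W, d = 8, 4, 4, 4
x = rng.standard_normal((C, H, W))
wq, wk, wv = (rng.standard_normal((C // 2, d)) for _ in range(3))
wo = rng.standard_normal((d, C // 2))
y = partial_self_attention(x, wq, wk, wv, wo)
print(y.shape)  # (8, 4, 4)
```

The untouched half keeps a cheap identity path for local features. The attended half lets every spatial position draw on the whole feature map, which matters for diffuse, irregular smoke.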

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Evaluator | Background Subtraction Method | Frame Difference Method | Our Method |
|---|---|---|---|
| Expert 1 | 9 | 1 | 14 |
| Expert 2 | 8 | 2 | 15 |
| Expert 3 | 6 | 1 | 14 |
| Expert 4 | 8 | 1 | 13 |
| Expert 5 | 7 | 2 | 14 |
| Expert 6 | 11 | 3 | 15 |
| Expert 7 | 7 | 1 | 15 |
| Expert 8 | 7 | 1 | 14 |
| Expert 9 | 9 | 1 | 15 |
| Expert 10 | 6 | 2 | 14 |
| Expert 11 | 6 | 1 | 13 |
| Expert 12 | 8 | 1 | 12 |
| Expert 13 | 10 | 1 | 11 |
| Expert 14 | 10 | 2 | 15 |
| Expert 15 | 8 | 1 | 14 |
| Total judging score (Tgs) | 120 | 21 | 208 |
| Mean opinion score (MOS) | 40.0 | 7.0 | 69.3 |
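The totals in the subjective evaluation table above can be checked directly from the fifteen experts' scores. In every column the MOS row equals Tgs divided by 3. The surviving text does not explain how this divisor arises, so the snippet simply reads it off the table rather than deriving it.

```python
# Scores from the subjective evaluation table (15 experts), in column order:
# background subtraction, frame difference, proposed method.
scores = [
    (9, 1, 14), (8, 2, 15), (6, 1, 14), (8, 1, 13), (7, 2, 14),
    (11, 3, 15), (7, 1, 15), (7, 1, 14), (9, 1, 15), (6, 2, 14),
    (6, 1, 13), (8, 1, 12), (10, 1, 11), (10, 2, 15), (8, 1, 14),
]
tgs = [sum(col) for col in zip(*scores)]   # column totals (Tgs)
mos = [round(t / 3, 1) for t in tgs]       # divisor 3 read off the table, not derived
print(tgs)  # [120, 21, 208]
print(mos)  # [40.0, 7.0, 69.3]
```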

| No. | Algorithm | P/% | R/% | mAP (50)/% | mAP (50–95)/% | FPS/(f·s⁻¹) |
|---|---|---|---|---|---|---|
| 1 | YOLOv5m | 86.4 | 85.7 | 92.1 | 66.3 | 16 |
| 2 | YOLOv8m | 92.2 | 86.5 | 94.6 | 70.8 | 17 |
| 3 | YOLOv9m | 86.8 | 79.2 | 90.0 | 63.2 | 27 |
| 4 | YOLOv10n | 78.2 | 75.0 | 84.3 | 60.1 | 28 |
| 5 | YOLOv11n | 79.7 | 71.9 | 81.5 | 53.9 | 31 |
| 6 | YOLOv8m-PSA | 91.9 | 86.1 | 94.3 | 72.4 | 18 |
| 7 | YOLOv8m-PSA-C2f_UIB | 91.8 | 89.9 | 94.9 | 71.8 | 19 |
| 8 | YOLOv8m-PSA-HATHead | 87.0 | 90.0 | 94.4 | 71.7 | 17 |
| 9 | YOLOv8m-PSA-C2f_UIB-HATHead | 91.4 | 88.8 | 95.0 | 72.4 | 25 |
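The gains quoted in conclusion (3) can be reproduced from this table. Row 9 is taken to be the YOLOv8m-HPC model, since its values match those quoted for it. The accuracy gains are absolute differences in percentage points, while the FPS change is expressed relative to the baseline's FPS. With that convention, the speed gap to YOLOv11n is (25 − 31)/31, about −19.4%. A 24% figure results only if the 6 fps gap is divided by the proposed model's own 25 fps instead.

```python
# Values copied from the comparison table: P, R, mAP(50), mAP(50-95) in %, then FPS.
TABLE = {
    "YOLOv5m": (86.4, 85.7, 92.1, 66.3, 16),
    "YOLOv8m": (92.2, 86.5, 94.6, 70.8, 17),
    "YOLOv11n": (79.7, 71.9, 81.5, 53.9, 31),
    "YOLOv8m-PSA-C2f_UIB-HATHead": (91.4, 88.8, 95.0, 72.4, 25),
}
METRICS = ("P", "R", "mAP50", "mAP50-95")

def compare(model: str, baseline: str):
    """Accuracy gains in percentage points and FPS change in percent of the baseline."""
    ours, base = TABLE[model], TABLE[baseline]
    gains = {m: round(o - b, 1) for m, o, b in zip(METRICS, ours, base)}  # first 4 fields
    fps_change = round(100 * (ours[4] - base[4]) / base[4], 1)
    return gains, fps_change

for baseline in ("YOLOv5m", "YOLOv8m", "YOLOv11n"):
    print(baseline, compare("YOLOv8m-PSA-C2f_UIB-HATHead", baseline))
```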

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Liu, Y. A Fusion of Entropy-Enhanced Image Processing and Improved YOLOv8 for Smoke Recognition in Mine Fires. Entropy 2025, 27, 791. https://doi.org/10.3390/e27080791
