1. Introduction

Research in corpus linguistics has found that the informativity of a word predicts its degree of articulatory reduction above and beyond the word’s predictability given the current context [1,2,3,4,5,6,7,8,9,10,11]. These findings have been interpreted as evidence that speakers are sensitive to a word’s information content in deciding how to pronounce it, and that long-term memory representations of words accumulate information about their predictability, their pronunciation details, or both [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23]. The effects of informativity are remarkably consistent in that all studies that have found an effect of local predictability in a model that included informativity also found an effect of informativity, suggesting that whenever speakers are sensitive to the probability of a word given a particular context, this information also accumulates in memory in association with that word. Consequently, these effects have been argued to provide support for “rich memory” views of the mental lexicon, in which every episode of producing or perceiving a word leaves a trace [13,14,15,16,17,18,19,20,21,22,23].

However, the predictability of a word in a context is not independent of its average predictability across contexts, which is the inverse of informativity and is therefore perfectly correlated with it [24]. Furthermore, when a context is rare, the word’s predictability given that context is unreliable and can be improved by taking the word’s average predictability into account. To take an extreme example, suppose that the word *inched* occurs only once in the corpus. Then all words following it would have a probability of zero, except for the word that happened to occur after it (let us say *closer*), which would have a probability of 1. These estimates are highly unreliable, since they are based on a sample size of 1. By taking into account probabilities of words in other contexts, we might be able to estimate, for example, that *up* is a lot more probable than *higher* following *inched*. This is especially helpful for a bigram like this, where the words being predicted (*up* and *higher*) are frequent enough to occur often in other contexts where their predictabilities can be computed, and the present context (*inched*) is rare, so predictability given the current context is unreliable. If instead we have a rare word in a frequent context that co-occurs with it, e.g., *by Kapatsinski*, then informativity will not be of much help, as the predictability given the current context is reliable and informativity likely aligns with it. At the limit, if the target word only occurs in the current context, its informativity is its predictability times minus one.

In computational linguistics, this idea is behind the technique of back-off, in which the next word is predicted from a long preceding context, but if this context is absent from the training data or less frequent than a predetermined cutoff, the model backs off to a shorter context, as first proposed in [25]. Jaeger [26] has shown that the estimated effect of predictability on reduction increases when predictability estimates are improved by using back-off.
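The cutoff logic of back-off can be illustrated with a minimal sketch. This is not the scheme of [25] or the implementation used in [26]: it backs off from a bigram to a unigram estimate only, omits the discounting and renormalization that full back-off schemes use, and the function name, the toy token sequence, and the cutoff value are all hypothetical.

```python
from collections import Counter

def make_backoff_model(tokens, cutoff=1):
    """Return p(word, context): the bigram estimate if the context is frequent
    enough, otherwise the context-free (unigram) estimate."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    # How often each word serves as a preceding context.
    context_freq = Counter(tokens[:-1])
    total = len(tokens)

    def prob(word, context):
        if context_freq[context] > cutoff:
            # Context is frequent enough: trust the in-context estimate.
            return bigrams[(context, word)] / context_freq[context]
        # Context is too rare: ignore it entirely and back off.
        return unigrams[word] / total

    return prob

# Toy corpus (an assumption for illustration only).
tokens = "the cat inched closer and the dog moved up and the cat moved up".split()
p = make_backoff_model(tokens, cutoff=1)
print(p("closer", "inched"))  # context seen once, so the unigram estimate is used
print(p("up", "moved"))       # context seen twice, so the bigram estimate is used
```

The hard threshold is visible in the `if` statement: a context seen once contributes nothing, and a context seen twice is trusted completely.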

In inferential statistics, this observation is behind the idea of hierarchical/mixed-effects models, which make use of adaptive partial pooling of evidence [27]. Hierarchical regression is preferable to back-off because it does not use an arbitrary frequency cutoff above which only local context is used and below which it is not used at all. The cutoff falsely assumes that there is zero information (i.e., only noise) in contextual predictability when the context frequency is below the cutoff and that out-of-context probabilities cannot improve in-context probability when context frequency is above the cutoff (for empirical evidence that these assumptions are false, see [28,29]). Ideally, one would take into account both in-context and out-of-context probabilities at all times but optimally weigh them based on how reliable they are in each context. This is what adaptive partial pooling is designed to do. The computational linguistics approaches that have since replaced back-off n-gram models, such as recurrent networks and transformers, also implicitly make use of partial pooling. We return to this point in the Discussion.

In estimating some variable in some context, partial pooling relies on observations from that context to the extent that they are available, because they are more relevant than observations from other contexts. But the fewer such observations are available, the more the model’s estimate is based on observations from other contexts. It can be shown that the estimate resulting from partial pooling is generally more accurate than an estimate that does not take other contexts into account (for simulations in the context of estimating reduction probabilities, see [28]). In corpus data, context frequency is 1 for a large proportion of contexts. For example, if context is operationalized as the preceding or following word, as in [1], context frequency would be 1 for ~50% of contexts in any corpus, as shown in [30]. Therefore, partial pooling would yield substantial benefits in estimating predictability from naturalistic language input compared to relying completely on observed predictability in a given context. If speakers estimate predictability in context, it would therefore behoove them to rely on partial pooling to do so [17].

How does partial pooling work? In hierarchical regression [27], the partial pooling estimate for some characteristic ($x$) of a word ($w$) in a context ($cx$) would be estimated by Equation (1):

$$\hat{x}_{w|cx} \approx \frac{\dfrac{n_{cx}}{\sigma^2_{cx}}\, x_{w|cx} + \dfrac{1}{\sigma^2_{w}}\, \bar{x}_{w}}{\dfrac{n_{cx}}{\sigma^2_{cx}} + \dfrac{1}{\sigma^2_{w}}} \qquad (1)$$

Here, $n_{cx}$ is the number of observations (frequency) of the context $cx$, $\sigma^2_{cx}$ is the observed variance of the characteristic ($x$) across words in the context $cx$, and $\sigma^2_{w}$ is the variance of average $x$ across words ($x$ being averaged across all contexts in which each word occurs). Equation (1) says that the estimate based on the current context ($x_{w|cx}$, here predictability) is weighted more when the context is frequent ($n_{cx}$ is high), and words tend not to vary in $x$ within that context. Conversely, the estimate based on other contexts (informativity) is weighted more when words (within the relevant class) tend not to differ in ($x$). If $x$ is predictability of the word given context, the variance parameters are likely to be relatively unimportant because word predictability distributions within and across contexts are (approximately) scale-free with an exponent of ~1, i.e., Zipfian [30,31], so $\sigma^2_{cx}$ and $\sigma^2_{w}$ tend not to vary dramatically, while $n_{cx}$, which also follows a scale-free Zipfian distribution, varies enormously across contexts. Therefore, the main influence on the importance of observed local predictability ($x_{w|cx}$) vs. observed informativity ($\bar{x}_{w}$) in estimating local predictability ($\hat{x}_{w|cx}$) given a context should be the frequency of that context.
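As a rough numerical illustration of this weighting, the sketch below assumes the standard precision-weighted form of partial pooling from hierarchical regression, in which the in-context estimate is weighted by context frequency divided by within-context variance and the pooled estimate by the inverse of the between-word variance. The function name, argument names, and all numeric values are hypothetical.

```python
def partial_pooling_estimate(local_value, n_context, var_within,
                             pooled_value, var_between):
    """Precision-weighted average of an in-context estimate and a pooled one."""
    w_local = n_context / var_within   # grows with context frequency
    w_pooled = 1.0 / var_between       # fixed, independent of context frequency
    return (w_local * local_value + w_pooled * pooled_value) / (w_local + w_pooled)

# Hypothetical case: the in-context estimate is 1.0 and the pooled estimate is 0.1.
# Variances are held equal so that only context frequency changes the weighting.
for n in (1, 10, 1000):
    estimate = partial_pooling_estimate(1.0, n, 1.0, 0.1, 1.0)
    print(n, round(estimate, 3))
# 1 0.55
# 10 0.918
# 1000 0.999
```

With a context frequency of 1, the estimate sits halfway between the two sources; as the context becomes frequent, the estimate converges on the in-context value.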

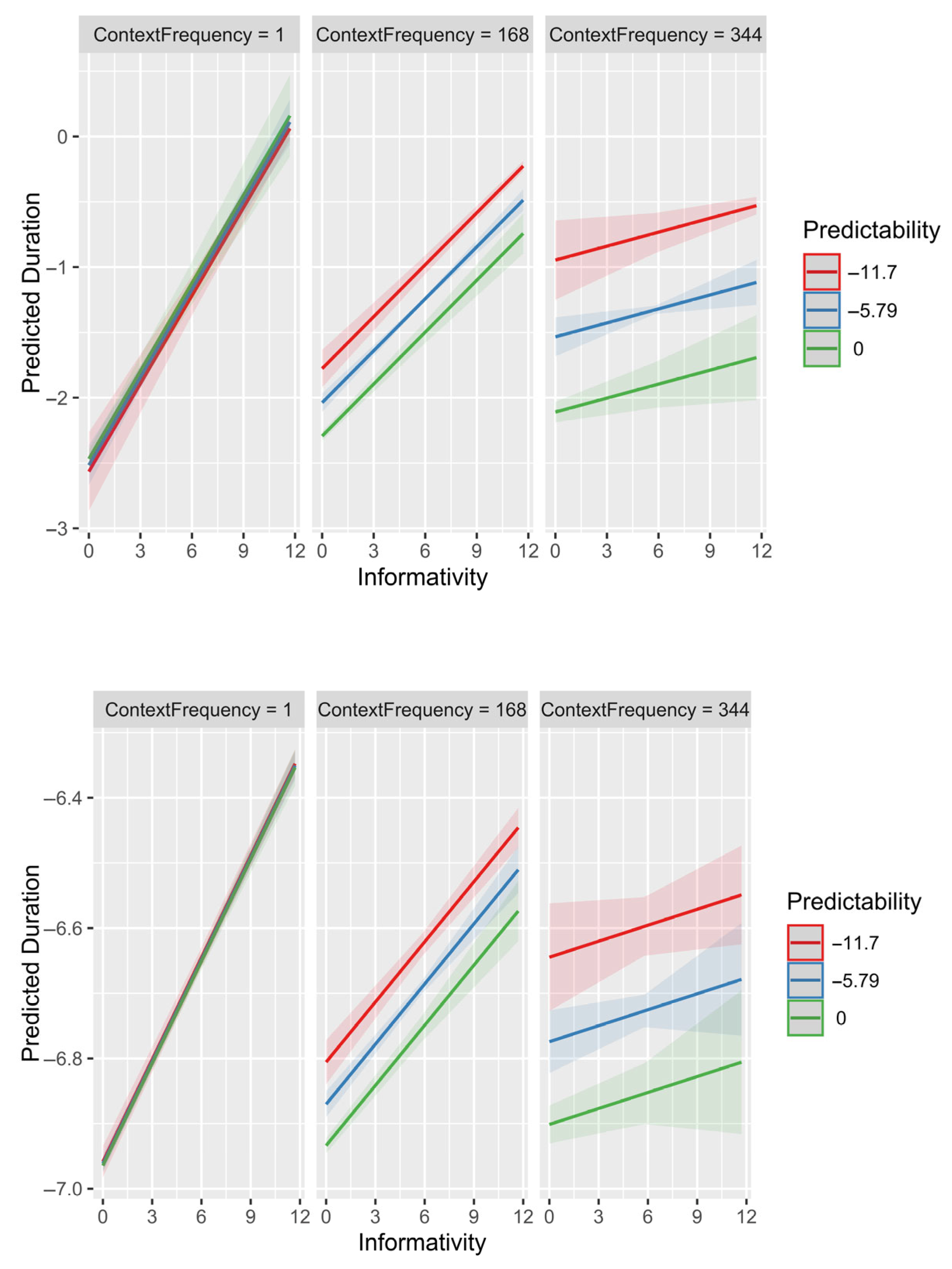

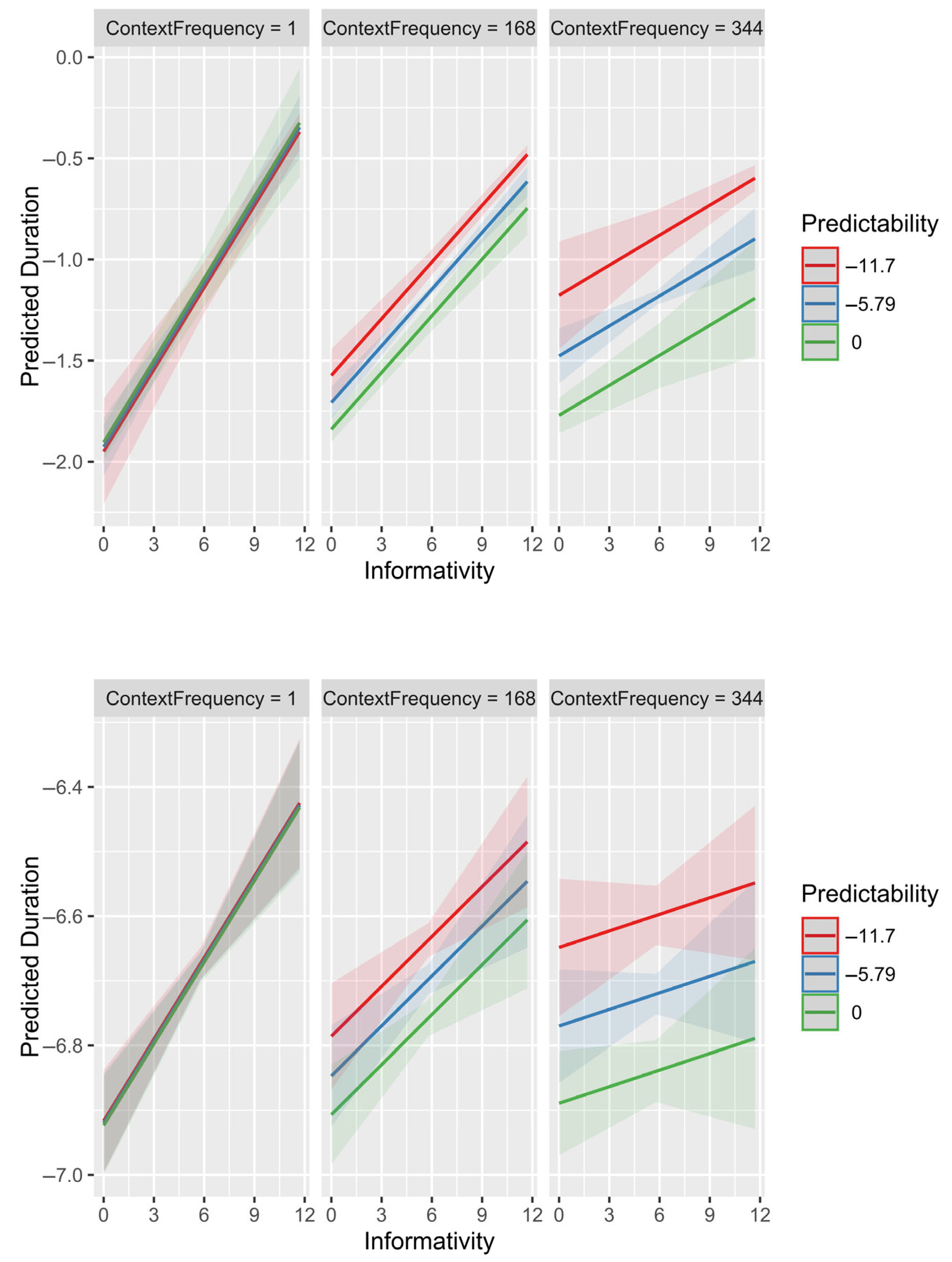

The present paper demonstrates that, as expected from the reasoning above, the apparent effect of informativity is larger in rare contexts than in frequent ones, while the effect of local, contextual predictability is smaller in rare contexts. This follows directly from the fact that contextual predictability estimates are less reliable in rare contexts and that informativity can help improve these estimates via partial pooling.

Previous simulation work on this question by Cohen Priva and Jaeger [24] has investigated whether the segmental informativity effect observed in [32] could be spurious, concluding that it is highly unlikely to be. However, this work did not try to simulate the effects of predictability and informativity on an observable dependent variable like duration, instead asking how correlated informativity is with predictability and vice versa as a function of sample size. This does not allow one to determine how large spurious effects of the two correlated variables on a dependent variable would be. Because the dependent variable and the sizes of the effects of informativity and predictability were not simulated, [24] also does not allow a researcher to use the simulation approach to infer whether an effect of informativity they have observed is attributable to predictability.

The present paper extends this simulation approach by modeling the dependent variable. In addition, it extends it by modeling lexical rather than segmental predictability and informativity. In [24], Cohen Priva and Jaeger mention conducting simulations of informativity and predictability at the lexical level in the discussion section but do not describe the results beyond stating that the “results of those additional studies were similar, except that the problems observed here were observed more strongly and even for larger lexical databases when words, instead of segments, were analyzed. This is presumably due to the overall much larger number of word types, compared to segment types. For example, spurious frequency effects were found when predicting word predictability or word informativity, even for lexical databases with 10,000,000 words” (p. 11). As noted in this quote, sampling noise is far less of a concern on the segmental level than on the lexical level, because even the rarest segments like [ð] or [ʒ] in English are very frequent relative to the average word. At the same time, most studies that have argued for an effect of informativity have been conducted at the lexical level [1,2,3,4,5,6,7,8,9,10,11]. Given that spurious effects are more likely at the lexical level, it is important to have a methodology to determine whether any particular lexical informativity effect might be a predictability effect in disguise.

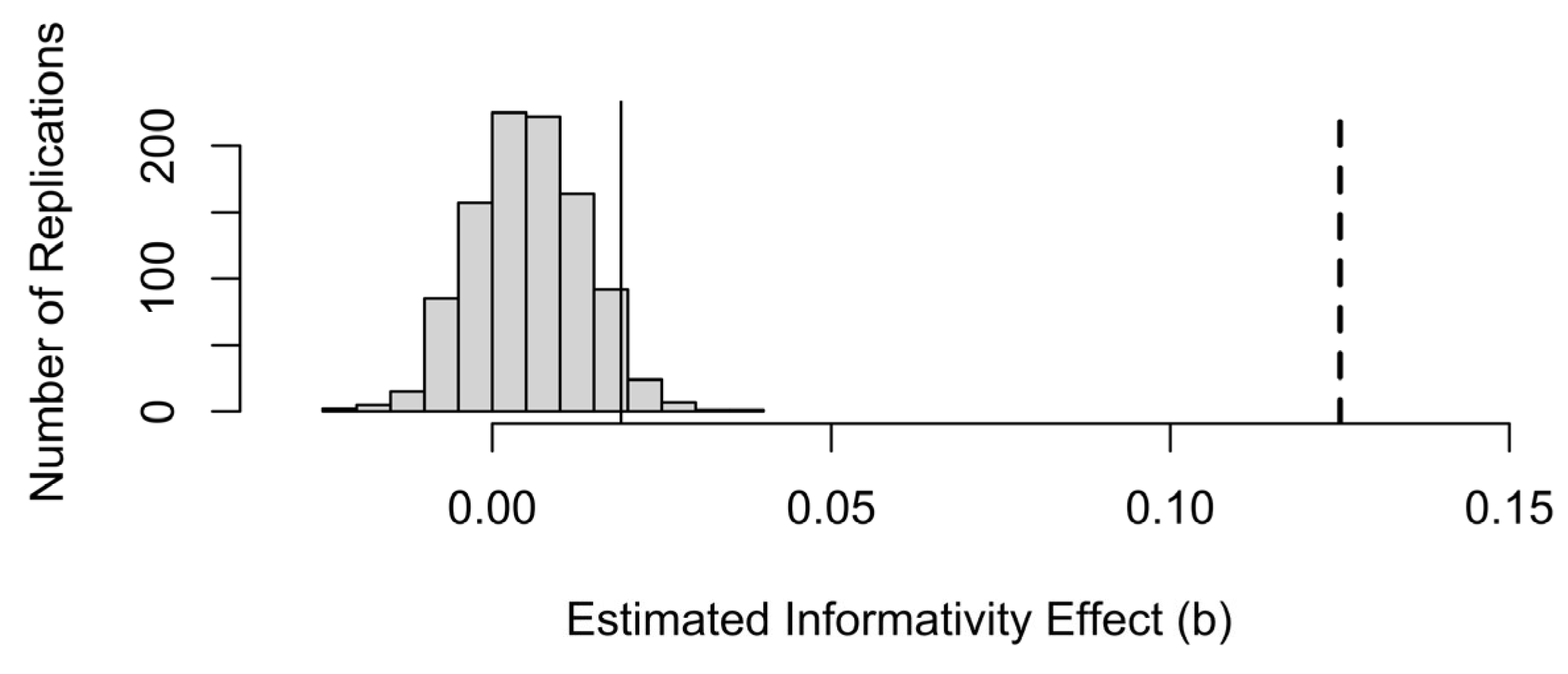

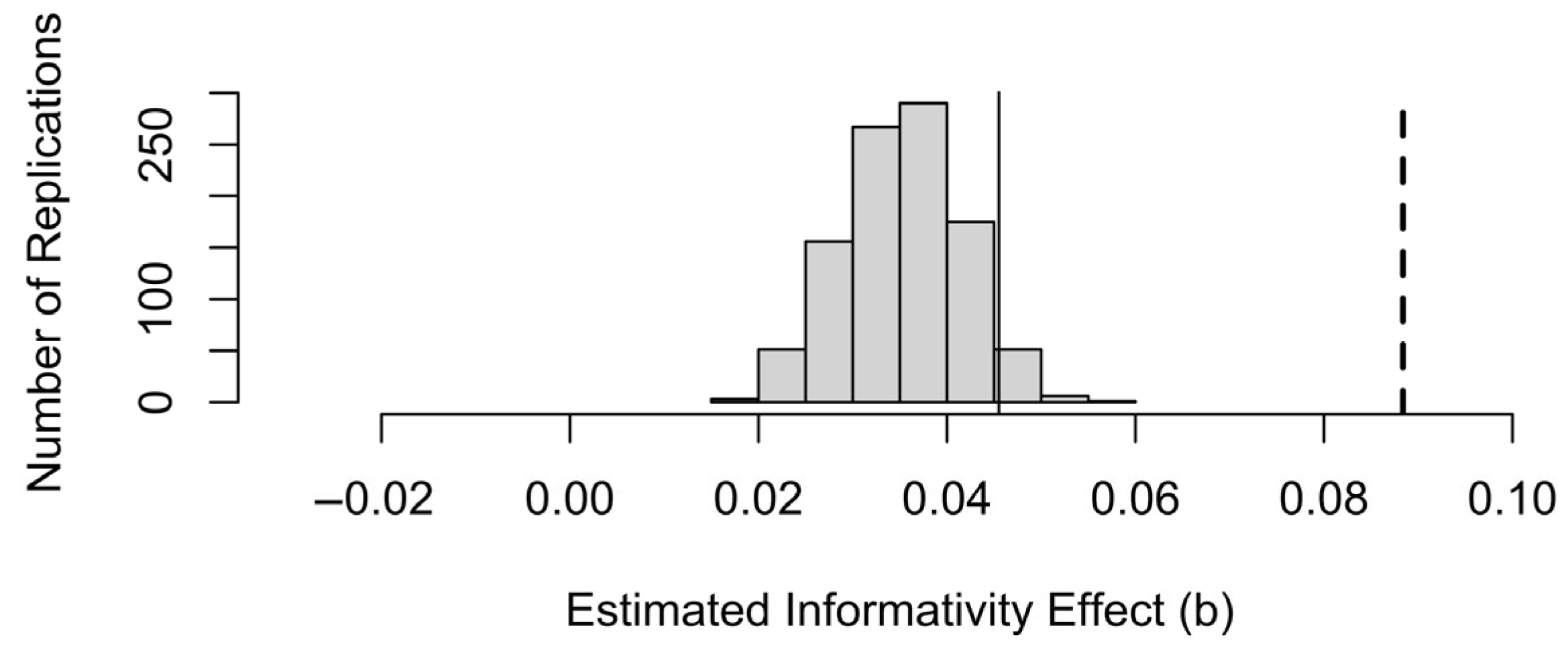

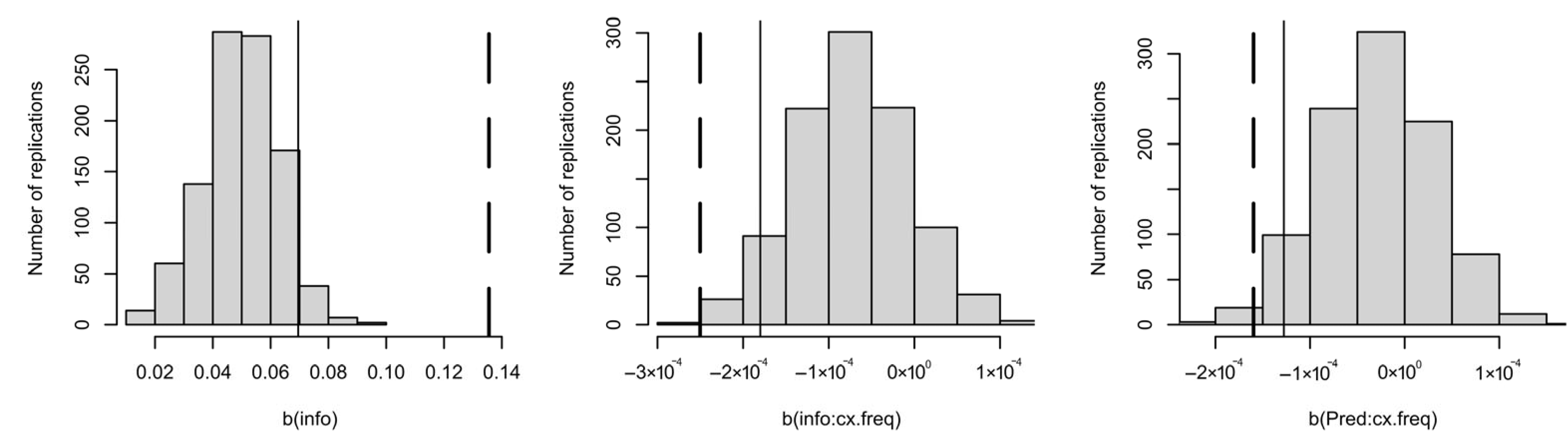

We show, by simulation, that a significant effect of informativity and its greater magnitude in rare contexts could sometimes be due to sampling noise in the predictability estimates. Furthermore, we provide a simple simulation approach to estimate how much of an observed effect of informativity is spurious, i.e., due to sampling noise. For our actual example, much of the effect of informativity is not due to sampling noise in predictability estimates. That is, although corpus data could show a significant effect of informativity in the same direction even if speakers showed no real sensitivity to informativity, our simulations show that the magnitude of that spurious effect would rarely be as large as the effect in the real data.

2. Materials and Methods

We begin by demonstrating that predictability and informativity behave as one would expect if (some of) the effect of informativity were due to partial pooling, i.e., informativity helping reduce noise in predictability estimates. Because there is more noise in smaller samples, this involves showing that these predictors interact with context frequency, such that the effect of predictability is stronger in frequent contexts and the effect of informativity is stronger in rare ones. Previous work in [26] has shown that the effect of predictability is stronger in frequent contexts but has not examined whether the effect of informativity interacts with context frequency.

The data we model come from the Switchboard Corpus [33], using the revised word-to-speech alignments from [34]. To ensure that the results generalize across the informativity and predictability continua, I selected two datasets that are opposites of each other in terms of informativity and predictability. One dataset (*n* = 5146) consists of the words that followed repetition disfluencies in [35], which are mostly nouns. These words are relatively high in informativity and low in predictability, as these factors predict the occurrence of a disfluency before a word [35]. The other dataset (*n* = 5187), helpfully provided by T. F. Jaeger, consists of determiners (*the*, *a*, *an*, *that*, *this*, *these*, *those*, *no*, *every*, *some*, *each*, *another*, *both*, *any*, *all*, *either*, *one*, *many*, *neither*, or *half*) in prepositional phrases, extracted from the Penn Treebank II Switchboard corpus. None of the determiners are adjacent to disfluencies or pauses. These words are relatively low in informativity and high in predictability. In both datasets, context was operationalized as the preceding word in the utterance, so predictability is $\log p(w_i \mid w_{i-1})$, i.e., forward bigram surprisal with a flipped sign, and informativity is surprisal averaged across contexts.
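These two predictors, together with context frequency, can be computed from raw bigram counts along the following lines. This is a sketch under stated assumptions rather than the paper's actual pipeline: the logarithm base (2) is an arbitrary choice, informativity is computed as the token-weighted mean of a word's surprisal over the contexts in which it occurs, and the function name and toy token sequence are hypothetical.

```python
import math
from collections import Counter, defaultdict

def bigram_measures(tokens):
    """Return (predictability, informativity, context_freq) from a token list."""
    bigrams = Counter(zip(tokens, tokens[1:]))
    context_freq = Counter(tokens[:-1])  # frequency of each preceding word

    # Predictability: log probability of the word given the preceding word.
    predictability = {
        (context, word): math.log2(count / context_freq[context])
        for (context, word), count in bigrams.items()
    }

    # Informativity: surprisal (negative predictability) averaged over all
    # tokens of the word, so frequent contexts contribute proportionally more.
    surprisal_sum = defaultdict(float)
    token_count = Counter()
    for (context, word), count in bigrams.items():
        surprisal_sum[word] += count * -predictability[(context, word)]
        token_count[word] += count
    informativity = {w: surprisal_sum[w] / token_count[w] for w in token_count}
    return predictability, informativity, context_freq

# Toy sequence (an assumption for illustration only).
predictability, informativity, context_freq = bigram_measures("a b a c a b".split())
print(predictability[("a", "b")], informativity["b"], context_freq["a"])
```

In this toy sequence, *b* occurs only after *a*, so its informativity equals its predictability in that context with the sign flipped.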

For the post-disfluency dataset, LSTM [36] probabilities are also available, as described in [35]. However, bigram surprisal is preferable to surprisal from neural models like [36] for the present purpose of comparing predictability and informativity because part of the power of neural models is that they implicitly implement adaptive partial pooling, relying largely on the specific local context when it is familiar and generalizing over similar contexts when the context is new [37]. Therefore, surprisal/predictability estimates from neural models are influenced by informativity through partial pooling to an unknown extent, since we do not have the equivalent of Equation (1) for neural models. Furthermore, the bigram operationalization makes it easier to determine which two contexts are “the same” for the purposes of calculating context frequency. That is, contexts that end in the same word are “the same context” for the present study.

Both datasets contain word tokens that are relatively controlled for other factors that influence durations. For the post-disfluency dataset, they are mostly nouns following high-frequency function words (prepositions or articles) [35,38], and surrounding words outside of the disfluency itself have little influence on their durations [38]. This set of words is also of theoretical interest because there is no previous data investigating whether the durations of words following a disfluency are influenced by predictability, and one might expect the interruption in the flow of speech constituting the disfluency to reset the context for speech planning so that predictability given the word preceding the interruption would not influence duration of the word following the interruption. Below, we show that this is not the case. The determiner dataset is controlled for local syntactic and prosodic context: all determiners are in prepositional phrases, and the dataset contains annotation for prosodic word boundaries. All tokens with adjacent boundaries were excluded to make the dataset maximally different from the other.

The two maximally different datasets are intended to illustrate that the choice of the dataset matters little, as nothing in the argument hinges on the choice of the dataset. The predictions follow simply from the mathematical relationship between predictability and informativity, which ensures that the interactions with context will arise as a side effect of the Law of Large Numbers. Thus, these interactions should always be observed given enough power. The simulations below make no assumptions about what the data distribution looks like and bootstrap it from whatever data the researcher is hoping to simulate. For example, this methodology has also been applied to word durations in a Polish corpus, based on guidance from the present author serving as a reviewer (see [11]). Furthermore, the interaction between predictability and context frequency was also seen in [26].

We fit a regression model, predicting word duration (log scaled to reduce skew, as is standard in the literature) from predictability, informativity and their interactions with context. We fit a simple least squares linear regression model (Table 1), using lm() in base R [39], and a mixed-effects lmer() model using the lme4 package [40], version 1.1-35.5, with random intercepts for target words whose durations we model. The random intercepts allow us to control for uncontrolled variables that vary across words along with informativity and thus might spuriously strengthen or weaken the informativity effect independently of its relationship with predictability. I also tried adding a random slope for predictability by word. This model did not converge in the post-disfluency dataset because 64% of the words in the sample occur only once and thus cannot be used to test the random slope. Therefore, the random-effects structure for that dataset consists only of the random intercept for word. The random-effects structure for the determiners model in Table 2 is maximal: it also includes a random slope for predictability and the correlation between the two.

*p* values for linear mixed-effects models were generated using Satterthwaite’s method for approximating degrees of freedom for *t* tests in mixed-effects models [41]. Note that, because the random-effects structure is more complex for determiners, there are more degrees of freedom in the post-disfluency model for the same *n*. Therefore, the same *t* value corresponds to a lower *p* value in the post-disfluency model compared to the determiner model. For the models with interactions in Table 3, the most complex random-effects structure that converged for both datasets included only random intercepts; thus, random slopes were not included. Marginal and conditional $R^2$ values for the mixed-effects models were calculated using the r.squaredGLMM() function in the MuMIn package [42].

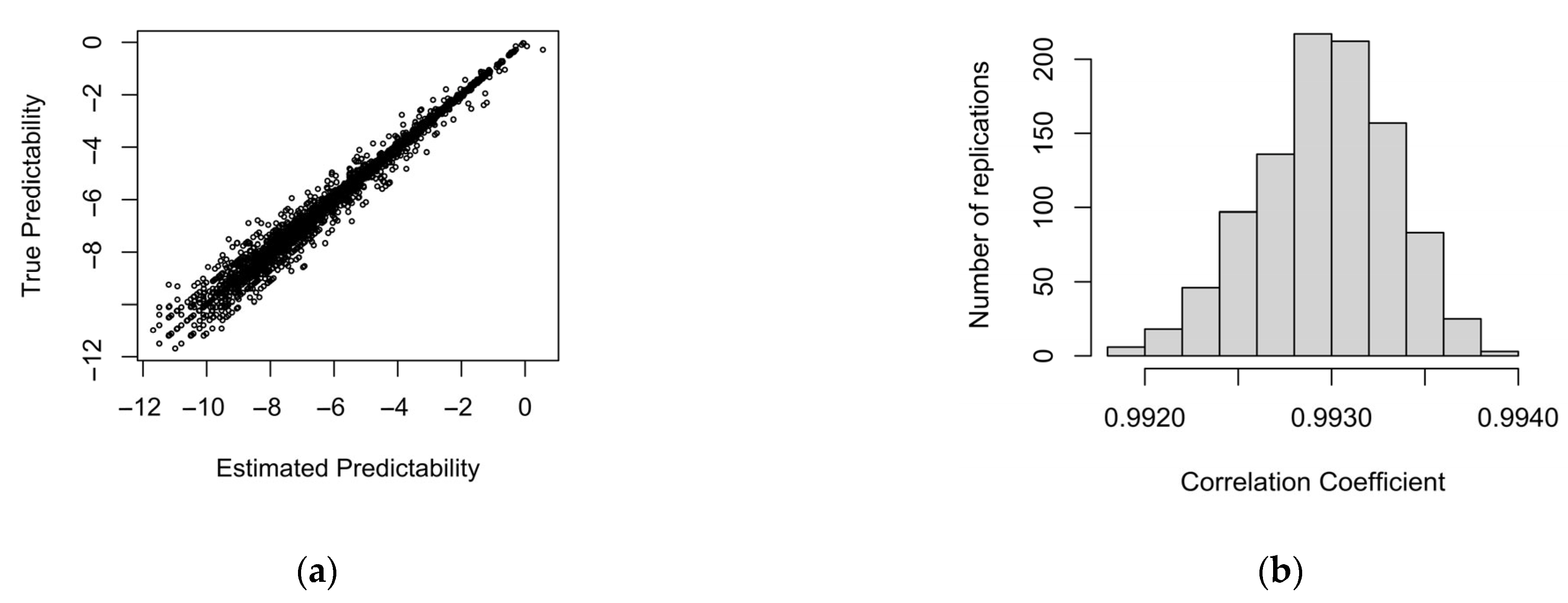

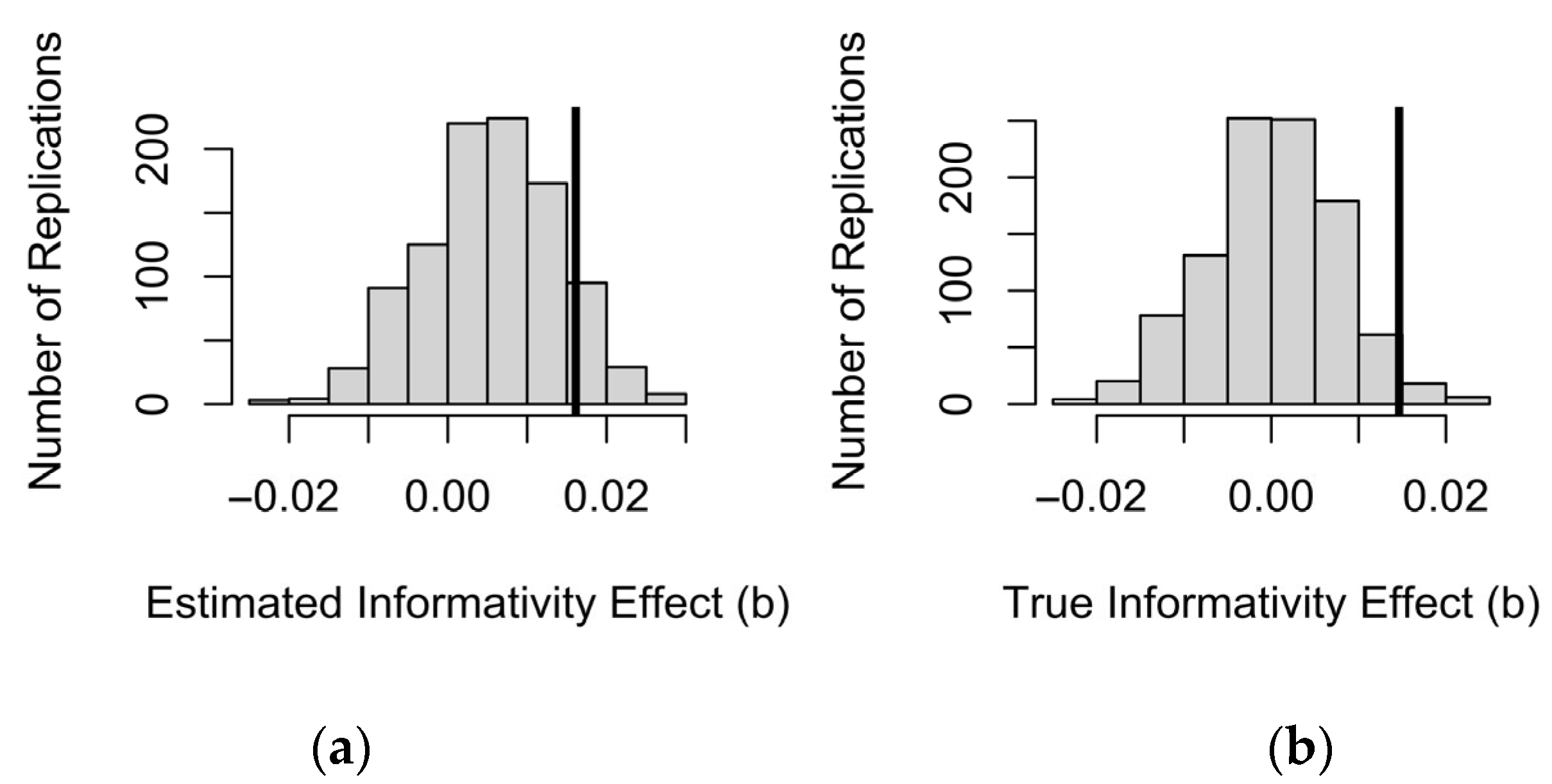

To simulate how much of the effect of informativity might be due to predictability, we repeatedly generate data samples in which predictability has the bivariate relationship with duration observed in the real data while informativity has no effect, i.e., the variance not accounted for by predictability is random observation-level noise (if the data are generated from a fixed-effects-only model) or observation-level noise plus the effects of lexical identity (estimated from the observed real data by the mixed-effects model with predictability as a fixed effect and word identity as a random intercept). The true magnitude of the predictability effect is constant across contexts, regardless of context frequency. We then fit the same model we fit to the real data, with predictability and informativity as predictors, with or without context frequency and interactions with it. We ask: (1) how often do we get a “false alarm” on informativity: how often do we observe a significant effect of informativity (at p < 0.05) in the same direction as in the real data, even though the data are generated by a process in which there is no true effect of informativity, and (2) how often is that effect at least as large as the effect in the real data.

Specifically, the probability of each word given each context, $p(w \mid cx)$, feeds into a binomial distribution with the generated context frequencies serving as the number of “trials” in each context. For each “trial”, i.e., token of the preceding word, a biased coin is tossed, which comes up heads with the probability given by $w$’s probability given the preceding word in the actual sample, $p(w \mid cx)$. I have also tried varying context frequencies across simulations by sampling context tokens from a multinomial distribution fit to the probabilities in the sample, but this does not appear to change the likelihood of observing a spurious effect of informativity compared to simply using the observed frequencies; thus, the simpler approach is taken here.

The binomial sampling process inevitably produces sampling noise. For example, if you toss a coin that has a 50% probability of coming up heads 5 times, the coin cannot come up heads exactly 2.5 times, and it will come up heads on all 5 tosses about 3% of the time. The effect of this sampling noise is what adding informativity to the regression model can alleviate. Furthermore, the fewer times you toss the coin, i.e., the lower the context frequency, the more variable the estimated probabilities will be across samples, given that the underlying probability of the coin coming up heads is kept the same. For example, if you toss the coin once, the coin will generate a probability of 0 or 1 in each sample regardless of the underlying probability. If you toss it 5000 times, the average will be close to the underlying probability. This is why we expect predictability effects to be stronger in frequent contexts and why we expect the spurious component of the informativity effect to be largest in rare contexts: in a rare context, estimated predictability is on average farther from the true predictability that influenced duration, and therefore more of the effect of true predictability remains to be captured by informativity.
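The coin-toss figures above follow from elementary properties of the binomial distribution, which the short sketch below computes directly. The function names are hypothetical; the formulas are the probability of all heads in independent tosses and the standard deviation of a sample proportion.

```python
import math

def prob_all_heads(p, n):
    """Probability that all n independent tosses come up heads."""
    return p ** n

def sd_of_estimated_probability(p, n):
    """Standard deviation of the observed proportion of heads in n tosses."""
    return math.sqrt(p * (1 - p) / n)

print(prob_all_heads(0.5, 5))  # 0.03125, i.e., about 3%

# The spread of the estimated probability shrinks as context frequency grows.
for n in (1, 5, 5000):
    print(n, round(sd_of_estimated_probability(0.5, n), 4))
# 1 0.5
# 5 0.2236
# 5000 0.0071
```

The standard deviation falls with the square root of the number of tosses, which is why estimates from contexts seen once or twice are so much noisier than estimates from contexts seen thousands of times.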

Predictability of a word given context times the coefficient for predictability in the model without informativity plus the random effect of a word generates the mean of a normal distribution of log duration for that word in that context, and the standard deviation of the residuals of the model serves as the standard deviation of the normal. The simulated duration of each word token is then generated by sampling from this distribution.
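The data-generating step described above might be sketched as follows. This is an illustrative approximation, not the code released on OSF: it omits a fixed intercept, generates one duration per simulated token, leaves out the subsequent recomputation of informativity and the model refitting, and every name and numeric value in it is hypothetical.

```python
import math
import random

def simulate_sample(pairs, beta, word_effects, resid_sd, rng):
    """Generate one simulated sample in which only predictability affects duration.

    pairs: list of (word, context, true_prob, context_freq) taken from a real sample.
    Returns one row per simulated token of each word in each context.
    """
    rows = []
    for word, context, true_prob, context_freq in pairs:
        # Binomial draw: one biased coin toss per token of the context.
        count = sum(rng.random() < true_prob for _ in range(context_freq))
        if count == 0:
            continue  # the word never followed this context in this sample
        # Noisy predictability estimate an analyst would compute from the sample.
        estimated_predictability = math.log(count / context_freq)
        # Duration is driven by the TRUE predictability plus the word's random
        # effect; informativity plays no role in generating it.
        mean_log_duration = beta * math.log(true_prob) + word_effects.get(word, 0.0)
        for _ in range(count):
            rows.append({
                "word": word,
                "context": context,
                "estimated_predictability": estimated_predictability,
                "log_duration": rng.gauss(mean_log_duration, resid_sd),
            })
    return rows

# Hypothetical inputs: the same true probability in a rare and a frequent context.
pairs = [("up", "inched", 0.5, 2), ("up", "moved", 0.5, 2000)]
sample = simulate_sample(pairs, beta=-0.05, word_effects={"up": -1.2},
                         resid_sd=0.3, rng=random.Random(1))
print(len(sample), "simulated tokens")
```

Repeating this step many times, recomputing informativity from the simulated counts, and refitting the regression to each simulated sample would then give the distribution of informativity coefficients expected when the true informativity effect is zero.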

The post-disfluency dataset, analysis and simulation code are available on OSF: https://osf.io/c8aws, accessed on 4 July 2025. I do not have permission to redistribute the determiner dataset.

4. Discussion

Informative words have been repeatedly observed to be longer than less informative words. Furthermore, the effect of informativity has been observed to hold even if predictability given the local context is included in the regression model of word duration [1,2,3,4,5,6,7,8,9,10,11]. The present paper pointed out that informativity could improve local predictability estimates because of sampling noise in the latter, especially in rare contexts. This means that one would expect some effect of informativity to come out in a regression model that includes predictability even if reduction were driven by predictability alone. The same reasoning predicts that informativity effects should be stronger in rare contexts where the context-specific predictability estimates are noisiest. We showed this to be the case in two datasets at the opposite ends of the predictability, informativity and duration continua.

We then examined by simulation whether an effect of informativity observed in a real dataset is small enough to have emerged from sampling noise, in a world where differences in word duration were entirely due to predictability in the local context. We have shown that there is bias in informativity coefficient estimates: a spurious effect of informativity does come out significant and in the expected direction (showing an effect in the opposite direction of the reductive effect of predictability) more often than the p value in a regression model that includes both predictability and informativity would lead us to expect. This is also true for the interactions between context frequency and either predictability or informativity. However, the effect of informativity is smaller than the one observed in the real data. Therefore, we can conclude that the effect of informativity in the real data is unlikely to be entirely attributable to sampling noise in predictability estimates.

We showed that predictability and informativity interact with context frequency as one would expect from the predictability estimates being less reliable when they are conditioned on rare contexts. The interaction of predictability and context frequency has previously been observed in [26], whereas the interaction of informativity with context frequency is a new finding. This finding is predicted by the idea of informativity effects being due to adaptive partial pooling of probabilities across contexts. Our simulations show that the interaction of predictability with context frequency observed in the real data might be attributable to this effect of the Law of Large Numbers (i.e., *p* > 0.05). However, some of the interaction of informativity with context frequency may not be attributable to there being more noise in predictability estimates for informativity to compensate for in rare contexts. So, overall, not all of the effect of informativity is attributable to predictability, and the effect of informativity may genuinely be stronger in rare contexts.

This result extends prior simulation work in [24] that argued that the segmental informativity effect observed in [32] (e.g., [t] is less informative and more likely to be deleted than [k] in English) could not be attributed to noise in local predictability estimates. Like [24], we observe that most of the effect of informativity in the real data is not due to noise in predictability estimates. However, this result is more surprising on the lexical level examined here than on the segmental level examined in [24], because (as pointed out there) segments are abundantly frequent and few in number, and therefore sampling noise in predictability is rather low at the segmental level. In contrast, word frequency distributions are Zipfian, with half the words occurring only once in the corpus, resulting in many contexts where estimating local predictability is virtually impossible. This concern was previously acknowledged in [24], which reported also running simulations at the lexical level, but this is the first time lexical simulation results are reported in the literature.

Unlike [24], which simulated how strongly true and estimated predictability and informativity values correlate depending on sample size, we took the approach of simulating the dependent variable, duration. This difference is likely because [24] focused on early studies that included only predictability or only informativity in their models, whereas we focus on the studies that included both predictors in the same model [1,2,3,4,5,6,7,8,9,10,11]. In the context of a model that includes both predictability and informativity, as in [1,2,3,4,5,6,7,8,9,10,11], a strong correlation between informativity and predictability is not a guarantee that an effect of predictability would often be falsely attributed to informativity. For example, in the post-disfluency dataset, informativity and predictability correlate at |*r*| > 0.91. Nonetheless, a true effect of predictability is seldom falsely attributed to informativity in its entirety. Predictability is almost always significant in our simulations: despite the strong correlation, the sample size is large enough to detect a real effect of predictability with informativity in the model.

The advantage of simulating duration is also that we can determine how large an effect of informativity is expected to be if it were entirely spurious, and predictability had the same bivariate relationship with duration as in the real data. This is important because the likelihood of observing a spurious informativity effect and its size depend strongly on the size of the predictability effect on the dependent variable. Furthermore, by simulating the dependent variable we are able to estimate whether all of the observed informativity effect is likely to be attributable to noise in the predictability estimates.

Of course, showing that an informativity effect cannot be explained away by local predictability or by random differences between words leaves open the possibility that other stable characteristics of words that do not change across contexts and correlate with informativity would explain it away. We do not consider these alternatives here because such alternative explanations are adequately addressed by standard multiple regression methods, as in [1,2,3,4,5,6,7,8,9,10,11]. However, the present simulations show that some of the effect of informativity in these previous studies could be due to noise in predictability estimates. This raises the possibility that the remainder of the informativity effect might still be explained away by other stable, context-independent but word-specific factors (e.g., [44]). The methodology proposed here can incorporate such additional predictors, by creating simulated data in which all predictors but informativity have the effects they do in the real data, fitting a model with all predictors, including informativity, and testing how often we obtain an informativity effect of the observed magnitude and direction. A central point of this paper is that this remains an important direction for future work: the collinearity between informativity and predictability means that the effect of informativity is on looser ground than it appears to be.

The simulations above assume that corpus frequencies and probabilities are unbiased estimators of the probabilities (or activations, in a neural network framework) that drive reductions. While this is the standard simplifying assumption of all corpus studies, it may not be justified (see, e.g., [45]). For example, words of zero frequency in a large corpus nonetheless vary in the probability of a speaker knowing each word, and these probability differences affect lexical processing variables like reaction time in lexical decision [46] and likely also affect production measures like duration. The present simulations assume that words of frequency zero in the corpus have a zero probability of occurrence, which is of course not true for many such words. This does not appear to be a dangerous assumption because we do not have duration measurements for such words in any case, so they would not be able to influence our estimate of the relationships between duration, predictability and informativity. However, the general possibility that the mismatch between predictability in a corpus and predictability in a speaker’s experience might distort corpus predictability estimates for rare items and in rare contexts beyond simple sampling noise means that we might be underestimating noise in predictability estimates in such situations. If so, we may need to be particularly cautious in arguing that informativity effects found with such materials cannot be due to predictability.

However, let us assume that some of an effect of informativity is genuine. How then should we interpret it? Here, the notion of adaptive partial pooling also provides a novel perspective. A genuine effect of informativity and its interaction with context frequency can be due to speakers themselves representing their linguistic experience in a way that results in adaptive partial pooling, as argued in [17,47]. Adaptive partial pooling is directly predicted by probabilistic models of the mind [17,47,48], which propose that the language learner engages in hierarchical inference, building rich structured representations and apportioning credit for the observations among them. However, it is also compatible with other “rich memory” models in which experienced observations are not discarded even if the learner generalizes over them. All such rich memory models can be understood as performing adaptive partial pooling at the time of production. For example, an exemplar/instance-based model stores specific production exemplars/instances tagged with contextual features [49,50]. It then produces a weighted average of the stored exemplars, with the weight reflecting overlap between the tags associated with each exemplar and the current context. The average is naturally dominated by exemplars that match the context exactly when such exemplars are numerous and falls back on more distant exemplars when nearby exemplars are few. Modern deep neural networks also appear to be rich memory systems that implement a kind of hierarchical inference when generating text, in that best performance is achieved when the network is more than big enough to memorize the entire training corpus [51,52]. Increasing the size of the network beyond the point at which it can memorize everything appears to benefit the network specifically when the context frequency distribution is Zipfian [53,54], by allowing it to retrieve memorized examples when the context is familiar and to fall back on generalizing over similar contexts when it is not. The existence of implicit partial pooling in such models may be why attempts to add explicit partial pooling to them to improve generalization to novel contexts [55] have led to limited improvements in performance.
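The way an exemplar model falls back on more distant exemplars can be illustrated with a toy sketch. This does not implement any specific model from [49,50]: the similarity measure (set overlap between tags), the exponential weighting, the `sharpness` parameter, and the duration values are all assumptions chosen for illustration.

```python
import math

def exemplar_production(exemplars, current_tags, sharpness=4.0):
    """Similarity-weighted average of stored exemplar values.

    exemplars: list of (value, tags), where tags is a set of contextual features.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for value, tags in exemplars:
        union = tags | current_tags
        # Proportion of shared tags (1.0 = identical context, 0.0 = no overlap).
        overlap = len(tags & current_tags) / len(union) if union else 1.0
        weight = math.exp(sharpness * overlap)
        weighted_sum += weight * value
        total_weight += weight
    return weighted_sum / total_weight

# Hypothetical memory: 50 exemplars from another context with value 200,
# plus either many or few exemplars from the current context with value 100.
other = [(200.0, {"prev=a"})] * 50
many = exemplar_production([(100.0, {"prev=the"})] * 50 + other, {"prev=the"})
few = exemplar_production([(100.0, {"prev=the"})] * 1 + other, {"prev=the"})
print(round(many, 1), round(few, 1))
```

With many matching exemplars the output stays close to the in-context value, and with a single matching exemplar it is pulled toward the values stored for other contexts, which is the same qualitative behavior as adaptive partial pooling.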

Within the usage-based phonology literature, informativity effects have been considered to be one of a number of effects that go by the name of “frequency in favorable contexts” (FFC). In general, the larger the proportion of a word’s tokens that occur in contexts that favor reduction, the more it will be reduced in other contexts [18,19,20,21,22,23]. Informative words can be seen as one type of low-FFC word, because (by definition of informativity) they tend to have low predictability given the contexts in which they occur. In this literature, informativity effects have been argued to be due to storage of phonetically detailed pronunciation tokens in the memory representations of words [18,19] and are therefore often thought to be categorically distinct from the online, in-the-moment reduction effects driven by predictability in the current context.

However, the distinction between informativity and local predictability may also be an artifact of how we traditionally operationalize the notion of “current context”. In reality, a word is never in exactly the same context twice. All contexts have a frequency of 1 if you look closely enough, and predictability of a word in a context always involves some generalization over contexts. That is, true predictability must always lie between predictability given the current context and average predictability across contexts (i.e., the inverse of informativity). The present paper treated contexts as being the same if they end in the same word. This is motivated by findings that the width of the context window does not appear to matter for predicting word duration (and other measures of word accessibility) from contextual predictability [35,56]. That is, language models with very narrow (one-word) and very broad windows generate upcoming word probabilities that are equally good at predicting word duration and fluency [35,56,57], suggesting that it is largely predictability given the preceding word that matters. However, [56] nonetheless shows that a large language model (with a short context window) outperforms an n-gram model in yielding predictability estimates predictive of word durations. It therefore seems likely that the notion of “current context” in our studies is too narrow and speakers actually generalize over the contexts that we considered distinct. For example, even if it is only the preceding word that matters, distributionally similar preceding words might behave alike, which a large language model would pick up on by using distributed semantics word representations (also known as embeddings) instead of the local codes of an n-gram model. Thus, another direction for future work is to try to determine what makes contexts alike in conditioning reduction.