Abstract

A significant advancement in Neural Network (NN) research is the integration of domain-specific knowledge through custom loss functions. This approach addresses a crucial challenge: how can models use physical or mathematical principles to improve predictions when data are sparse, noisy, or incomplete? Physics-Informed Neural Networks (PINNs) put this idea into practice by incorporating a forward model, such as a system of Partial Differential Equations (PDEs), as soft constraints that guide the network toward solutions consistent with established laws. Researchers have recently extended this framework to Bayesian NNs (BNNs), which allow for uncertainty quantification. But what happens when the governing equations of a system are not completely known? In this work, we introduce methods that automatically select PDEs from a parametric family using historical data. We then integrate these learned equations into three modeling approaches: PINNs, Bayesian-PINNs (B-PINNs), and Physics-Informed Bayesian Linear Regression (PI-BLR). We evaluate these frameworks on a real-world Multivariate Time Series (MTS) dataset related to electrical power energy management, comparing their accuracy in forecasting future states with and without PDE constraints. This research aims to bridge the gap between data-driven discovery and physics-guided learning, providing valuable insights for practical applications.

1. Introduction

NN algorithms are widely used across many fields and tasks, where they often outperform other methods. Consequently, a diverse range of architectures has been developed, for instance for spatial relationships in images and for sequential data such as MTS, alongside tree-based models that also demonstrate impressive performance. Most of these algorithms require rich datasets to learn the patterns and features needed for tasks such as regression, classification, or clustering. PINNs, first introduced in [1], improve on traditional approaches by incorporating known physics, specifically PDEs and boundary conditions, into the loss function as a regularizer, which yields more accurate results. A key benefit of merging data-driven learning with fundamental physical principles is robustness to small sample sizes and noisy data. This versatility allows PINNs to be applied in fields such as fluid dynamics, heat transfer, and structural analysis, where conventional numerical methods often face challenges.

Bararnia and Esmaeilpour [2] explored the application of PINNs to viscous and thermal boundary layer problems. They analyzed three benchmark scenarios, Blasius–Pohlhausen, Falkner–Skan, and natural convection, specifically investigating the impact of equation nonlinearity and unbounded boundary conditions on the network architecture. Their findings revealed that the Prandtl number plays a crucial role in determining the optimal number of neurons and layers required for accurate predictions with their TensorFlow implementation. Additionally, the trained models effectively predicted boundary layer thicknesses for previously unseen data. Hu et al. [3] presented an interpretable surrogate solver for computational solid mechanics based on PINNs. They addressed the challenges of incorporating imperfect and sparse data into current algorithms, as well as the complexities of high-dimensional solid mechanics problems, and explored the capabilities, limitations, and emerging opportunities for PINNs in this domain. Wang et al. [4] explored applications of PINNs in optical fiber communication, proposing five potential solutions for modeling in the time, frequency, and spatial domains. They modeled both forward and backward optical waveform propagation by solving the nonlinear Schrödinger equation. Although conventional numerical methods and PINNs produced similar accuracy, PINNs demonstrated lower computational complexity and reduced time requirements for the same optical fiber problems. Mohammad-Djafari et al. [5] examined the use of PINNs for solving inverse problems, particularly the identification of dynamic systems. They provided a detailed overview of PINNs and demonstrated their effectiveness through various one-, two-, and three-dimensional examples.

B-PINNs are an extension of PINNs that incorporate Bayesian inference to quantify uncertainty in predictions. They leverage the strengths of NNs, physics-based modeling, and Bayesian methods to effectively solve PDEs and other scientific problems. One of the key advantages of B-PINNs is their ability to provide measures of uncertainty, which are crucial for risk assessment and informed decision-making. Liang et al. [6] introduced a higher-order spatiotemporal physics-incorporated graph NN to tackle missing values in MTS. By integrating a dynamic Laplacian matrix and employing an inhomogeneous PDE, their approach effectively captured complex spatiotemporal relationships and enhanced explainability through normalizing flows. Experimental results demonstrated that this method outperformed traditional data-driven models, offering improved dynamic analysis and insights into the impact of missing data.

Since the introduction of PINNs, they have been applied to a wide range of problems across various fields. One of the notable advantages of PINNs is their speed and effectiveness when working with small, noisy datasets. However, a key question arises: how can we apply PINNs when we do not have PDEs readily available for our specific problems? While many physical laws governing phenomena in physics, chemistry, biology, engineering, and environmental science are well established, there are scenarios where no such laws or governing equations (e.g., PDEs) are known. In these cases, applying PINNs becomes challenging because PINNs rely on known physical laws to guide the learning process and achieve precise results with smaller sample sizes, fewer layers, fewer neurons, and fewer training epochs.

For example, consider sociological data, where no mathematical equations describe the percentages of different behaviors or trends in human populations. Similarly, in economics, finance, psychology, or social media analytics, the relationships between variables are often complex and not easily captured by predefined PDEs. In such scenarios, one might still want to use PINNs so that the network can make accurate forecasts in regions where few or no data are available.

However, if no PDEs are known or applicable to the data, using PINNs directly is impossible. This highlights the importance of investigating suitable methods for constructing PDEs from raw data. By identifying governing equations or relationships within the data, we can bridge the gap between data-driven methods and physics-informed approaches, enabling the application of PINNs even in domains where physical laws are not yet defined.

Nayek et al. [7] used spike-and-slab priors to construct the governing PDEs of motion in nonlinear systems, employing Bayesian Linear Regression (BLR) for variable selection and parameter estimation in these governing equations. Tang et al. [8] introduced an approach for solving nonlinear robust optimization problems that combines PINNs and PDE construction within an interval sequential linear programming framework. The method efficiently estimates the sensitivities of uncertain constraints using approximate partial derivatives, which are then used to build deterministic linear models. Tang et al. [9] presented a possibility-based solution framework, known as the equivalent deterministic method, to address interval uncertainty in design optimization. The approach constructed PDEs and leveraged PINNs to efficiently model the relationships between uncertain variables and the system's responses. Niven et al. [10] introduced a Bayesian maximum a posteriori framework for identifying dynamical systems from time series data. They provided a comprehensive method for estimating model coefficients, quantifying uncertainties, and selecting models, comparing joint maximum a posteriori and variational Bayesian approximation against the established Sparse Identification of Nonlinear Dynamics (SINDy) algorithm. The results highlighted the benefits of Bayesian inference for identifying complex dynamical systems and provided a robust metric for model selection.

In this paper, we investigate methods for constructing PDEs from raw datasets. The idea is to use a substantial amount of historical data to construct appropriate PDEs; more precisely, we define a large parametric family of PDEs and apply model-selection methods to choose among its members. Once constructed, these equations can be applied to new, short-term data to forecast future trends. In summary, we use large historical databases to construct a PDE model, which then constrains a much smaller NN trained on new data via the PINN approach. We explore several techniques, including SINDy, Least Absolute Shrinkage and Selection Operator (LASSO), Bayesian LASSO (B-LASSO), surrogate NNs, and Symbolic Regression (SR). Our focus is on constructing suitable PDEs for MTS analysis and applying PINNs, B-PINNs, and PI-BLR to a regression task. This approach is particularly applicable to raw data that lack additional information about their source or underlying dynamics. We then compare the results with and without the constructed PDEs to evaluate any improvement in prediction accuracy for the MTS.

Constructing PDEs is particularly important for MTS analysis. Real-world systems, such as climate patterns, financial markets, and industrial sensors, produce complex, interrelated data that evolve over time. Traditional models often struggle to capture both the hidden physical processes and the intricate relationships among variables. By learning PDEs directly from historical data with methods such as SINDy or surrogate NNs, we can uncover an approximate forward model governing the system. We then integrate these equations with new short-term data using PINNs to improve our predictions. This approach bridges the interpretability of physics-based models and the flexibility of Machine Learning (ML), enabling us to forecast multivariate systems even when the data are noisy or incomplete.

This work introduces a novel methodology for constructing PDEs from raw historical data and integrating them with NNs to enhance MTS forecasting on new data. The main innovation is a framework that combines data-driven PDE discovery with physics-informed ML, providing more accurate and interpretable models for complex systems. Furthermore, this paper aligns with the aims of the special issue on Bayesian Hierarchical Models with Applications by exploring and enhancing hierarchical Bayesian approaches within physics-informed ML. We focus on developing and interpreting models such as B-LASSO, PI-BLR, and B-PINNs. Our contributions involve integrating domain knowledge through hierarchical priors and providing structured uncertainty quantification, thereby illustrating the versatility and relevance of Bayesian hierarchical modeling in scientific ML, an overarching theme of this special issue. The remainder of this paper is organized as follows. In Section 2, we explain the basic assumptions underlying PDEs. Section 3 presents various methods for constructing PDEs from raw datasets. In Section 4, we give a brief overview of PINNs through the PINN algorithm used in this paper. Section 5 discusses Bayesian versions of PINNs together with a PI-BLR model. A real MTS dataset is analyzed in Section 6 using the methods presented in this paper. Finally, Section 7 discusses insights from the article.

2. Differential Equations

Ordinary Differential Equations (ODEs) and PDEs are mathematical models that involve unknown functions and their derivatives, which provide quantitative descriptions of a wide range of phenomena across various fields, including physics, engineering, biology, finance, and social sciences.

An example of a first-order ODE is $\frac{dy}{dt} = ky$, where $y$ is a function of the single independent variable $t$ and $k$ is a constant. This equation describes exponential growth ($k > 0$) or decay ($k < 0$).

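A well-known second-order PDE is the heat equation, $\frac{\partial u}{\partial t} = \alpha \frac{\partial^2 u}{\partial x^2}$, where $u(x, t)$ represents the temperature at spatial location $x$ and time $t$, with $x$ the spatial and $t$ the temporal independent variable, and $\alpha > 0$ is the thermal diffusivity [11].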
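To make concrete what such a PDE encodes, the sketch below integrates the heat equation $u_t = \alpha u_{xx}$ with the explicit forward-time, centred-space (FTCS) finite-difference scheme. The domain $[0, 1]$, the zero boundary values, the initial profile $u(x, 0) = \sin(\pi x)$ and $\alpha = 1$ are illustrative assumptions; the scheme is stable only when $r = \alpha \Delta t / \Delta x^2 \le 1/2$.

```python
import numpy as np

def heat_ftcs(alpha=1.0, nx=51, t_end=0.1, r=0.4):
    """Explicit FTCS solver for u_t = alpha * u_xx on [0, 1] with u(0, t) = u(1, t) = 0."""
    x = np.linspace(0.0, 1.0, nx)
    dx = x[1] - x[0]
    dt = r * dx**2 / alpha                  # time step implied by the stability ratio r
    n_steps = int(round(t_end / dt))
    dt = t_end / n_steps                    # adjust so the last step lands on t_end
    r = alpha * dt / dx**2
    if r > 0.5:
        raise ValueError("FTCS is unstable for r > 1/2")
    u = np.sin(np.pi * x)                   # illustrative initial temperature profile
    for _ in range(n_steps):
        # centred second difference approximates u_xx at the interior points
        u[1:-1] = u[1:-1] + r * (u[2:] - 2.0 * u[1:-1] + u[:-2])
        u[0] = u[-1] = 0.0                  # Dirichlet boundary conditions
    return x, u

x, u = heat_ftcs()
exact = np.exp(-np.pi**2 * 0.1) * np.sin(np.pi * x)   # analytic solution for this initial profile
print(np.max(np.abs(u - exact)))
```

Because the initial profile is a single Fourier mode, the exact solution simply decays as $e^{-\alpha \pi^2 t}$, which gives a direct check on the discretisation.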

Dynamic systems refer to phenomena that evolve over time according to specific rules or equations, as illustrated by the two examples above. These systems can be linear or nonlinear, deterministic or stochastic, and they are crucial for understanding complex behaviors in various applications, such as climate modeling, population dynamics, and fluid mechanics.

Peitz et al. [12] introduced a convolutional framework that streamlines distributed reinforcement learning control for dynamical systems governed by PDEs. This innovative approach simplifies high-dimensional control problems by transforming them into a multi-agent setup with identical, uncoupled agents, thereby reducing the model’s complexity. They demonstrated the effectiveness of their methods through various PDE examples, successfully achieving stabilization with low-dimensional deep deterministic policy gradient agents while using minimal computing resources.

3. Methods of PDE Construction

In this section, we describe several methods for constructing PDEs from raw datasets. Some of them, such as SINDy, LASSO, and SR, are well-established and share common principles; however, they do not succeed in every case study. Alternatively, surrogate NNs can generate PDEs through a different approach, but the NNs must perform well on an unseen test set so that their derivatives are reliable enough to build PDEs.

Because they make no assumptions about the data type, surrogate NNs can be applied to essentially any MTS dataset. As outlined in Appendix E and Appendix F, the process constructs equations directly from the data without imposing additional conditions. It begins by training an efficient NN on large volumes of historical data to achieve high predictive accuracy. Derivatives are then computed from the learned network, and equations are formulated that relate these derivatives to the variables most strongly correlated with them. These PDEs are subsequently used for short-term forecasting with PINNs. Because this method does not rely on specific assumptions about the data, it can be readily applied in domains such as finance, epidemiology, and sociology.
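The four steps above (fit a surrogate, differentiate it, screen correlated variables, regress) can be sketched on a toy record. This is a minimal illustration under stated assumptions, not the algorithm of Appendix E or F: a degree-8 polynomial stands in for the trained CNN/TCN surrogate, the variables `x` and `z`, the correlation threshold of 0.8 and the synthetic data are all hypothetical.

```python
import numpy as np

# Hypothetical historical record: a dynamic variable x(t) and an unrelated signal z(t).
t = np.linspace(0.0, 4.0, 400)
data = {"x": np.exp(-0.5 * t), "z": np.sin(3.0 * t)}

# Step 1: fit a smooth surrogate of x(t); a polynomial stands in for the CNN/TCN here.
surrogate = np.polynomial.Polynomial.fit(t, data["x"], deg=8)

# Step 2: differentiate the surrogate rather than the raw samples.
dx_dt = surrogate.deriv()(t)

# Step 3: keep only candidate variables strongly correlated with the derivative.
threshold = 0.8
selected = [name for name, v in data.items()
            if abs(np.corrcoef(dx_dt, v)[0, 1]) >= threshold]

# Step 4: least-squares fit of dx/dt on the selected variables gives a local equation.
A = np.column_stack([data[name] for name in selected])
coef, *_ = np.linalg.lstsq(A, dx_dt, rcond=None)
for name, c in zip(selected, coef):
    print(f"dx/dt = {c:+.4f} * {name}")
```

In this toy case the recovered equation is close to $dx/dt = -0.5x$, which generated the data; with real MTS the result is only a local approximation valid on the range covered by the historical record.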

In this study, we choose Convolutional Neural Network (CNN) and Temporal Convolutional Network (TCN) architectures for the surrogate NNs due to their accuracy in predictions. The constructed PDEs are local approximations and therefore are not considered true PDEs. A larger dataset can enhance the accuracy of these approximations, extending their validity to a broader range of conditions and potentially revealing finer-scale features.

However, there are no guarantees that the approximation converges to the true underlying PDE, if such a PDE exists. Even with a large training set, the approximation may still overlook important aspects of the system’s behavior or overfit to noise in the data. Thus, while a larger dataset is advantageous, it cannot transform a local approximation into a globally accurate or true PDE; it can only improve the local approximation.

3.1. Sparse Identification of Nonlinear Dynamics (SINDy)

SINDy is a powerful framework for uncovering governing equations from data, especially for dynamical systems. Its primary goal is to identify the underlying equations that dictate a system's behavior from observed data. This is particularly beneficial when the governing equations are not known beforehand, which makes SINDy a valuable asset in fields such as fluid dynamics, biology, and control systems. SINDy, introduced in [13], combines sparse regression with a library of candidate functions. This allows it to identify the essential terms in complex system dynamics, capturing the key features needed to represent the data accurately. Appendix A provides a detailed overview of the SINDy algorithm used to construct PDEs.
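The core of SINDy, sparse regression of estimated derivatives on a library of candidate functions, can be sketched in a few lines. The example below is a minimal sketch assuming a single variable, a polynomial library and sequentially thresholded least squares (STLSQ), one common sparse regressor for SINDy; it is not necessarily the variant in Appendix A. The threshold of 0.1 and the test system $dx/dt = -2x$ are illustrative choices.

```python
import numpy as np

def stlsq(theta, dxdt, threshold=0.1, n_iter=10):
    """Sequentially thresholded least squares: prune small coefficients, refit the rest."""
    xi = np.linalg.lstsq(theta, dxdt, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(xi) < threshold
        xi[small] = 0.0
        big = ~small
        if not big.any():
            break
        # refit using only the library terms that survived the threshold
        xi[big] = np.linalg.lstsq(theta[:, big], dxdt, rcond=None)[0]
    return xi

t = np.linspace(0.0, 2.0, 2001)
x = 3.0 * np.exp(-2.0 * t)                     # trajectory of dx/dt = -2x
dxdt = np.gradient(x, t, edge_order=2)         # finite-difference derivative estimate
library = np.column_stack([np.ones_like(x), x, x**2, x**3])
names = ["1", "x", "x^2", "x^3"]
xi = stlsq(library, dxdt)
print({n: round(float(c), 3) for n, c in zip(names, xi) if c != 0.0})
```

With noisy measurements the finite-difference step becomes the weak point, which is one motivation for the ensemble and weak-form variants discussed next.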

Fasel et al. [14] introduced an ensemble version of the SINDy algorithm, which improves the robustness of model discovery when working with noisy and limited data. They demonstrated its effectiveness in uncovering PDEs from datasets with significant measurement noise, achieving notable improvements in both accuracy and robustness compared to traditional SINDy methods. Schmid et al. [15] used the weak form of SINDy, an extension of the original algorithm, to discover governing equations from experimental data obtained through laser vibrometry. They applied SINDy to learn macroscale governing equations for beam-like specimens subjected to shear wave excitation, successfully identifying PDEs that describe the effective dynamics of the tested materials and providing valuable insights into their mechanical properties.

3.2. Least Absolute Shrinkage and Selection Operator (LASSO)

LASSO, introduced in [16], is a robust regression technique that is particularly effective for constructing PDEs from data, especially within the PINN framework. It is valued for performing variable selection and regularization simultaneously, which identifies the most relevant features while reducing the risk of overfitting. LASSO achieves this by adding to the loss function a penalty proportional to the sum of the absolute values of the coefficients (their L1 norm). This penalty drives some coefficients exactly to zero, promoting sparsity and thus reducing the number of terms retained in the final equation. Such sparsity is vital for complex systems governed by PDEs, as it helps pinpoint the most significant terms among a potentially large pool of candidate terms derived from the data.
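How the L1 penalty produces exact zeros can be seen in cyclic coordinate descent, where each coefficient update is a soft-thresholding step. The sketch below minimises $\frac{1}{2n}\lVert y - Xb\rVert^2 + \lambda \lVert b\rVert_1$; the solver, the penalty value $\lambda = 0.1$ and the synthetic data are illustrative assumptions rather than the procedure of Appendix B.

```python
import numpy as np

def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; returns exactly 0 when |rho| <= lam."""
    return np.sign(rho) * max(abs(rho) - lam, 0.0)

def lasso_cd(X, y, lam, n_sweeps=500, tol=1e-10):
    """Minimise (1/2n)||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X**2).sum(axis=0) / n
    resid = y.copy()                          # residual y - X b (b starts at zero)
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # correlation of column j with the partial residual that excludes b_j
            rho = X[:, j] @ resid / n + col_sq[j] * b[j]
            new = soft_threshold(rho, lam) / col_sq[j]
            resid -= X[:, j] * (new - b[j])
            max_change = max(max_change, abs(new - b[j]))
            b[j] = new
        if max_change < tol:
            break
    return b

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.1 * rng.standard_normal(200)
print(np.round(lasso_cd(X, y, lam=0.1), 3))
```

The irrelevant columns receive coefficients of exactly zero, while the retained ones are shrunk slightly toward zero by roughly $\lambda$, the usual LASSO bias.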

Zhan et al. [17] investigated the physics-informed identification of PDEs using LASSO regression, particularly in groundwater-related scenarios. Their work illustrated how LASSO can be combined with dimensional analysis and optimization techniques, leading to improved interpretability and precision of the resulting equations. Additionally, Ma et al. [18] examined a variant, sequentially thresholded least squares LASSO regression, within PINNs to tackle inverse PDE problems. Their findings highlighted the method's ability to improve parameter estimation accuracy in complex systems. Their experiments on standard inverse PDE problems demonstrated that the PINN integrated with LASSO significantly outperformed other approaches, achieving lower error rates even with smaller samples. Appendix B summarizes the LASSO algorithm.

3.3. Bayesian Least Absolute Shrinkage and Selection Operator (B-LASSO)

The Bayesian framework is particularly beneficial for dealing with limited data because it incorporates prior beliefs about the parameters. B-LASSO, explained in [19], is a statistical method that combines the principles of Bayesian inference with the LASSO regression technique, allowing us to leverage the advantages of both approaches. This method provides uncertainty quantification and is robust against overfitting, particularly in high-dimensional settings. It is useful for regression problems where the number of predictors is large relative to the number of observations or when there is multicollinearity among the predictors. In the Bayesian approach, we treat the model parameters, known as coefficients, as random variables and specify prior distributions for them. In addition to the coefficients, B-LASSO also estimates the hyperparameter $\lambda$ that controls the strength of the LASSO penalty, assigning a prior distribution to $\lambda$ as well.
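A common way to fit B-LASSO is the Gibbs sampler of Park and Casella (2008), in which the Laplace prior is written as a scale mixture of normals with latent variances $\tau_j^2$ and the squared penalty $\lambda^2$ receives a Gamma hyperprior. The sketch below follows that scheme; it assumes centred $y$ and standardised $X$, and the hyperparameters $r = \delta = 1$, the iteration counts and the synthetic data are illustrative choices, not necessarily those of Appendix C.

```python
import numpy as np

def bayesian_lasso_gibbs(X, y, n_iter=3000, burn_in=1000, r=1.0, delta=1.0, seed=0):
    """Gibbs sampler for the Bayesian LASSO with a Gamma(r, delta) hyperprior on lambda^2."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    XtX, Xty = X.T @ X, X.T @ y
    beta, sigma2, inv_tau2, lam2 = np.zeros(p), 1.0, np.ones(p), 1.0
    draws = []
    for it in range(n_iter):
        # beta | rest ~ N(A^{-1} X'y, sigma2 A^{-1}), with A = X'X + diag(1 / tau^2)
        A = XtX + np.diag(inv_tau2)
        L = np.linalg.cholesky(A)
        mean = np.linalg.solve(A, Xty)
        beta = mean + np.sqrt(sigma2) * np.linalg.solve(L.T, rng.standard_normal(p))
        # sigma2 | rest ~ Inverse-Gamma, drawn as scale / Gamma(shape, 1)
        resid = y - X @ beta
        shape = (n - 1) / 2 + p / 2
        scale = resid @ resid / 2 + beta @ (inv_tau2 * beta) / 2
        sigma2 = scale / rng.gamma(shape)
        # 1 / tau_j^2 | rest ~ Inverse-Gaussian(mean = sqrt(lam2 sigma2 / beta_j^2), shape = lam2)
        mu = np.sqrt(lam2 * sigma2 / np.maximum(beta**2, 1e-12))
        inv_tau2 = rng.wald(mu, lam2)
        # lambda^2 | rest ~ Gamma(p + r, rate = sum(tau^2) / 2 + delta)
        lam2 = rng.gamma(p + r, 1.0 / (np.sum(1.0 / inv_tau2) / 2 + delta))
        if it >= burn_in:
            draws.append(beta)
    return np.array(draws)

rng = np.random.default_rng(1)
X = rng.standard_normal((100, 5))
X = (X - X.mean(0)) / X.std(0)
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.5 * rng.standard_normal(100)
y = y - y.mean()
draws = bayesian_lasso_gibbs(X, y)
print(np.round(draws.mean(0), 2))                               # posterior means
print(np.round(np.percentile(draws, [2.5, 97.5], axis=0), 2))   # 95% credible intervals
```

Unlike the point estimate of classical LASSO, the retained draws give a credible interval for every coefficient, which is what makes B-LASSO useful for deciding how confidently a candidate term belongs in a constructed PDE.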

Many papers use B-LASSO for regression modeling. For example, Chen et al. [20] used B-LASSO for regression models and covariance matrices to introduce a partially confirmatory (theory-driven) factor analysis approach. They tackled the challenges of merging exploratory (data-driven) and confirmatory factor analysis by employing a hierarchical Bayesian framework and Markov Chain Monte Carlo (MCMC) estimation, showcasing the effectiveness of their method on both simulated and real datasets. In Appendix C, we explain the B-LASSO algorithm employed in this paper.

3.4. Symbolic Regression (SR)

SR is a sophisticated ML and artificial intelligence method for identifying mathematical expressions that best fit a given dataset. Unlike traditional regression techniques that rely on predefined models, SR seeks to discover both the model structure and its parameters directly from the data. It uses genetic programming, NNs, and other optimization algorithms to derive expressions that capture complex and nonlinear relationships. The process generates a variety of candidate functions and evaluates their fit to the data using optimization methods. This can reveal underlying physical laws or relationships that standard regression techniques might overlook, providing valuable insight into the system being studied. However, the search space of possible expressions can be vast, leading to high computational costs and long training times, especially for complex datasets. There is also a risk of overfitting, particularly if the search space is not well regularized or the dataset is small. Achieving optimal results often requires careful tuning of several hyperparameters. The algorithm we used is detailed in Appendix D.
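The generate-and-evaluate loop of SR, including the trade-off between fit and expression size, can be illustrated with an exhaustive search over a tiny grammar. This is a deliberately simplified stand-in: the primitive set, the limit of two terms and the complexity penalty are hypothetical, and practical SR tools replace the exhaustive enumeration with genetic programming or similar searches over a far larger expression space.

```python
import itertools
import numpy as np

# Hypothetical primitive set; real SR searches a much larger space of expression trees.
PRIMITIVES = {
    "x": lambda x: x,
    "x^2": lambda x: x**2,
    "x^3": lambda x: x**3,
    "sin(x)": np.sin,
    "cos(x)": np.cos,
    "exp(x)": np.exp,
}

def brute_force_sr(x, y, max_terms=2, complexity_penalty=1e-3):
    """Try every sum of up to max_terms primitives; fit coefficients by least squares."""
    best = None
    for k in range(1, max_terms + 1):
        for terms in itertools.combinations(PRIMITIVES, k):
            F = np.column_stack([PRIMITIVES[name](x) for name in terms])
            coef, *_ = np.linalg.lstsq(F, y, rcond=None)
            mse = np.mean((F @ coef - y) ** 2)
            score = mse + complexity_penalty * k     # parsimony: penalise extra terms
            if best is None or score < best[0]:
                best = (score, terms, coef)
    return best[1], best[2]

x = np.linspace(-3.0, 3.0, 200)
y = 2.5 * np.sin(x) + 0.5 * x**2
terms, coef = brute_force_sr(x, y)
print(" + ".join(f"{c:.3f}*{t}" for c, t in zip(coef, terms)))
```

Even this toy version shows why the search cost grows quickly: the number of candidate structures grows combinatorially with the primitive set and the allowed number of terms.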

SR has applications across various fields, including data science for uncovering relationships during exploratory data analysis, engineering for deriving mathematical models of complex systems, biology for understanding biological processes or gene interactions, and finance for modeling market behaviors and predicting trends. Changdar et al. [21] introduced a hybrid framework that merged ML with SR to analyze nonlinear wave propagation in arterial blood flow. They utilized a mathematical model along with PINNs to solve a fifth-order nonlinear equation, optimizing the solutions through Bayesian hyperparameter tuning. This approach led to highly accurate predictions, which were further refined using random forest algorithms. Additionally, they applied SR to discover interpretable mathematical expressions from the solutions generated by the PINNs.

3.5. Convolutional Neural Network (CNN)

CNNs are well known for their ability to handle spatial relationships in various types of data, including images, videos, and even speech. They are also effective for MTS data. Typically, one-dimensional convolution is used for MTS, but two-dimensional convolution can be applied when the data has two-dimensional features. One of the key advantages of CNNs is their ability to automatically learn patterns and features from raw data, which reduces the need for manual feature engineering. They achieve this by using filters that share parameters across different parts of the input, resulting in lower memory usage and improved performance. CNNs are capable of learning hierarchical representations, where lower layers capture simple features and deeper layers identify more complex patterns and abstractions. However, they usually require a large amount of training data to perform well, which can be a barrier in some applications. Additionally, CNNs are sensitive to small changes in the input data, such as noise, translation, or rotation, which can impact prediction accuracy. This sensitivity can lead to overfitting, especially when working with small datasets. To mitigate this issue, techniques like dropout, data augmentation, and regularization can be beneficial.
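The parameter sharing mentioned above is easiest to see in a one-dimensional convolution over an MTS, where the same filters slide along the time axis. The sketch below is a plain NumPy illustration with hypothetical shapes; like most deep-learning libraries, it computes a cross-correlation without flipping the kernel.

```python
import numpy as np

def conv1d(x, w, b):
    """'Valid' 1-D convolution over a multivariate series.

    x: (c_in, T) series, w: (c_out, c_in, k) filters, b: (c_out,) biases.
    """
    c_in, T = x.shape
    c_out, c_in_w, k = w.shape
    if c_in != c_in_w:
        raise ValueError("channel mismatch between input and filters")
    out = np.empty((c_out, T - k + 1))
    for t in range(T - k + 1):
        window = x[:, t:t + k]              # the same filters are reused at every time step
        out[:, t] = np.tensordot(w, window, axes=([1, 2], [0, 1])) + b
    return out

x = np.arange(10.0).reshape(2, 5)           # 2 variables, 5 time steps
w = np.ones((1, 2, 2))                      # one filter spanning both variables, width 2
print(conv1d(x, w, np.zeros(1)))            # -> [[12. 16. 20. 24.]]
```

The layer has `c_out * c_in * k + c_out` parameters regardless of the series length `T`, which is the source of the memory savings described above.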

Recent research has explored various versions and combinations of CNNs to enhance ML efficiency across different problems. For instance, Liu et al. [22] introduced an innovative approach for airfoil shape optimization that integrates CNNs to construct and compress airfoil features, PINNs for assessing aerodynamic performance, and deep reinforcement learning for identifying optimal solutions. This integrated methodology successfully reduces the design space and improves the lift–drag ratio while tackling the challenges of high-dimensional optimization and performance evaluation.

An important consideration when using surrogate NNs, such as CNNs and TCNs, to construct PDEs is that the network must perform well on unseen data. In other words, the surrogate NN should learn a function that closely approximates the true relationship between inputs and outputs, so that differentiating the network's output with respect to its inputs yields approximations of the true PDEs. To construct PDEs, it is therefore useful to include the dynamic variables as network inputs; after training, the network can be differentiated with respect to these inputs. Note that the final equations may not be true PDEs, since they are defined only on the domain covered by the dataset; they serve as local approximations of the true PDEs, and a more comprehensive training dataset generally improves these approximations. Appendix E details the convolutional surrogate NN algorithm designed to construct PDEs from data.
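Differentiating the surrogate with respect to its inputs only requires the chain rule through the network. The sketch below does this analytically for a one-hidden-layer tanh network and checks the result against central finite differences. The architecture, the input dimension `d = 3` (for example, time and two state variables) and the random weights, which stand in for a trained surrogate, are illustrative assumptions; in practice automatic differentiation would be used for CNN or TCN surrogates.

```python
import numpy as np

rng = np.random.default_rng(0)
d, H = 3, 16                                  # number of inputs, hidden width
W1, b1 = rng.standard_normal((H, d)), rng.standard_normal(H)
w2, b2 = rng.standard_normal(H), 0.1          # random weights stand in for a trained surrogate

def surrogate(z):
    """Scalar output u(z) of a one-hidden-layer tanh network."""
    return w2 @ np.tanh(W1 @ z + b1) + b2

def surrogate_grad(z):
    """Analytic gradient du/dz via the chain rule, using d tanh(a)/da = 1 - tanh(a)^2."""
    h = np.tanh(W1 @ z + b1)
    return W1.T @ (w2 * (1.0 - h**2))

z0 = np.array([0.5, -1.0, 0.2])
eps = 1e-5
fd = np.array([(surrogate(z0 + eps * e) - surrogate(z0 - eps * e)) / (2 * eps)
               for e in np.eye(d)])           # central differences as an independent check
print(surrogate_grad(z0), fd)
```

Evaluated over the training inputs, such gradients supply the derivative columns that are then related to the variables to form the local equations.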

3.6. Temporal Convolutional Network (TCN)

TCNs are an NN architecture designed specifically for sequential (trajectory) data and are powerful at handling temporal information. They use causal convolutions, so the output at any time step depends only on current and past inputs. This makes TCNs well suited to tasks such as MTS forecasting, speech synthesis, and other forms of temporal sequence analysis. Dilated convolutions let TCNs expand their receptive fields (exponentially with depth when the dilation doubles at each layer), capturing long-range dependencies without recurrent connections. This design also allows TCNs to process all time steps in parallel, giving faster training and more stable gradients than recurrent NNs. However, covering very long sequences with large kernel sizes or many dilated layers increases computational cost and memory usage, since larger kernels and deeper stacks add parameters and intermediate activations. The choice of kernel size, stride, and dilation strongly affects performance and should be based on the characteristics of the input data. Additionally, TCNs can overfit, especially on small datasets, which calls for regularization techniques to improve generalization.
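Causality and dilation can be checked directly on a single-channel example. The sketch below implements a causal dilated convolution with left padding only and verifies that a stack with kernel size $k$ and dilations $d_1, \dots, d_L$ has receptive field $1 + (k - 1)\sum_l d_l$. The kernel size 3, the dilations 1, 2, 4, 8 and the all-ones filters are illustrative choices, not the configuration of Appendix F.

```python
import numpy as np

def causal_dilated_conv1d(x, w, dilation):
    """y[t] = sum_i w[i] * x[t - i*dilation], treating values before t = 0 as zero."""
    k = len(w)
    pad = (k - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])   # left padding only: no future values leak in
    y = np.zeros(len(x))
    for i in range(k):
        start = pad - i * dilation            # xp[start + t] == x[t - i*dilation]
        y += w[i] * xp[start:start + len(x)]
    return y

def receptive_field(k, dilations):
    """Number of current and past inputs seen by a stack of causal dilated layers."""
    return 1 + (k - 1) * sum(dilations)

k, dilations = 3, [1, 2, 4, 8]
impulse = np.zeros(64)
impulse[0] = 1.0
out = impulse
for d in dilations:
    out = causal_dilated_conv1d(out, np.ones(k), d)   # all-ones filters expose the support
print(receptive_field(k, dilations), np.nonzero(out)[0].max() + 1)   # both are 31
```

Each layer still has only `k` weights whatever its dilation, so four layers cover 31 time steps with 12 weights; doubling the dilations is what makes the receptive field grow exponentially with depth.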

For instance, Perumal et al. [23] explored the use of TCNs for rapidly inferring thermal histories in metal additive manufacturing. They demonstrated that TCNs can effectively capture nonlinear relationships in the data while requiring less training time compared to other deep learning methods. The study highlighted the potential advantages of integrating TCNs with PINNs to enhance modeling efficiency in complex manufacturing contexts. We provide an overview of the algorithm used for the TCN model of this work in Appendix F.

4. Physics-Informed Neural Network (PINN)

PINNs, introduced in [1], are a powerful technique that integrates data-driven ML with fundamental physical principles at relatively low computational cost. Unlike traditional NNs, which are trained on data alone, PINNs incorporate known physical laws, expressed as PDEs and boundary conditions, into the loss function. This term acts as a regularizer, enabling PINNs to achieve higher accuracy, especially when data are limited or noisy. By leveraging the underlying physics, PINNs can generalize better and make predictions in regions where data are sparse or unavailable.
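The composite loss can be sketched for the first-order ODE $du/dt = ku$ from Section 2: a data misfit on a few observations plus $\lambda$ times the mean squared residual $du/dt - ku$ at unlabeled collocation points. The one-hidden-layer tanh network, $k = -1$, the observation window and the weight $\lambda$ are illustrative assumptions, and training (minimising this loss with a gradient-based optimiser, usually via automatic differentiation) is omitted; this is not the algorithm of Appendix G.

```python
import numpy as np

def net(t, W1, b1, w2, b2):
    """One-hidden-layer tanh network u(t) and its exact time derivative du/dt."""
    h = np.tanh(np.outer(t, W1) + b1)        # (N, H) hidden activations
    u = h @ w2 + b2
    du_dt = ((1.0 - h**2) * W1) @ w2         # chain rule through tanh
    return u, du_dt

def pinn_loss(params, t_data, y_data, t_col, k, lam):
    """Data misfit plus lam times the mean squared residual of du/dt - k*u."""
    u_data, _ = net(t_data, *params)
    data_loss = np.mean((u_data - y_data) ** 2)
    u_col, du_col = net(t_col, *params)
    physics_loss = np.mean((du_col - k * u_col) ** 2)
    return data_loss + lam * physics_loss, data_loss, physics_loss

rng = np.random.default_rng(0)
H = 8
params = (rng.standard_normal(H), rng.standard_normal(H), rng.standard_normal(H), 0.0)
t_data = np.linspace(0.0, 0.3, 5)            # sparse observations on a short window
y_data = np.exp(-1.0 * t_data)
t_col = np.linspace(0.0, 1.0, 50)            # collocation points need no labels
total, data_loss, physics_loss = pinn_loss(params, t_data, y_data, t_col, k=-1.0, lam=1.0)
print(total, data_loss, physics_loss)
```

Setting `lam=0` recovers an ordinary data-only loss; the physics term is what lets the collocation points beyond the observed window influence the fit.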

Since 2019, numerous research papers have explored PINNs with various NN architectures and applications. For instance, Hu et al. [3] discussed how PINNs effectively integrated imperfect and sparse data. They also addressed how PINNs tackled inverse problems and improved model generalizability while maintaining physical acceptability. This was particularly relevant for solving problems related to PDEs that governed the behavior of solid materials and structures. Additionally, their paper covered foundational concepts, applications in constitutive modeling, and the current capabilities and limitations of PINNs. Appendix G describes the structure of the PINNs applied in this article; omitting Steps 3 and 5 of the PINN algorithm turns it into a standard NN.

5. Bayesian Computations

Bayesian computational models are well known for their ability to measure the uncertainty of variables and perform effectively on noisy data. However, their high computational cost can be a significant drawback, making them less accessible for researchers with limited resources. In this section, we introduce a Bayesian model, called PI-BLR, and a B-PINN that we utilize in our work.

5.1. Physics-Informed Bayesian Linear Regression (PI-BLR)

BLR provides a robust framework for statistical modeling by integrating prior beliefs and accounting for uncertainty in predictions. In the model named PI-BLR, we incorporate PDEs as a component of the negative potential, as explained in Step 5 of the algorithm in Appendix H; the simplicity of a linear model makes the necessary derivatives easy to derive. This approach not only improves predictive performance but also supports more informed decision-making in fields such as finance and healthcare.

Fraza et al. [24] introduced a warped BLR framework and applied it to neuroimaging data from the UK Biobank. The paper emphasized the benefits of this approach, such as improved model fit and predictive performance for various variables, along with the capacity to model non-Normal data through likelihood warping. The method was less computationally intensive than Gaussian process regression, as it eliminated the need for cross-validation, making it well suited for large and sparse datasets. Gholipourshahraki et al. [25] applied BLR to prioritize biological pathways within the genome-wide association study framework. The advantages of BLR included its capacity to reveal shared genetic components across different phenotypes and to improve the detection of coordinated effects among multiple genes, while effectively handling diverse genomic features.

In this subsection, we integrate PDEs into the BLR model to introduce physical insights into the framework called PI-BLR. The algorithm is outlined in Appendix H. For a standard BLR model, simply omit Steps 3, 5, and 6.
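One way to see why a linear model pairs well with a PDE term: if the model is linear in its coefficients $\beta$ and the PDE residual is also linear in $\beta$, then a quadratic physics penalty keeps the potential quadratic and the posterior Gaussian, available in closed form. The sketch below makes exactly those assumptions, using cubic polynomial features, the hypothetical ODE $du/dt = ku$ with $k = -1$, and illustrative values of $\sigma^2$, $\tau^2$ and $\lambda$. It is not the algorithm of Appendix H, which may rely on sampling instead.

```python
import numpy as np

def pi_blr_posterior(Phi, y, D, c, sigma2=0.01, tau2=10.0, lam=0.0):
    """Gaussian posterior of beta for the potential
    U(beta) = ||y - Phi beta||^2 / (2 sigma2) + ||beta||^2 / (2 tau2) + (lam / 2) ||D beta - c||^2.
    U is quadratic, so exp(-U) is Gaussian with precision equal to the Hessian of U."""
    p = Phi.shape[1]
    precision = Phi.T @ Phi / sigma2 + np.eye(p) / tau2 + lam * D.T @ D
    cov = np.linalg.inv(precision)
    mean = cov @ (Phi.T @ y / sigma2 + lam * D.T @ c)
    return mean, cov

def poly_features(t, deg=3):
    return np.column_stack([t**j for j in range(deg + 1)])

def poly_features_dt(t, deg=3):
    # time derivative of each feature t^j
    return np.column_stack([j * t**(j - 1) if j > 0 else np.zeros_like(t) for j in range(deg + 1)])

rng = np.random.default_rng(0)
k = -1.0                                            # hypothetical ODE du/dt = k u
t_obs = np.linspace(0.0, 0.5, 8)                    # short, noisy observation window
y_obs = np.exp(k * t_obs) + 0.1 * rng.standard_normal(8)
Phi_obs = poly_features(t_obs)
t_col = np.linspace(0.0, 2.0, 40)                   # collocation points beyond the data
D = poly_features_dt(t_col) - k * poly_features(t_col)   # ODE residual is linear in beta
c = np.zeros(len(t_col))

m0, _ = pi_blr_posterior(Phi_obs, y_obs, D, c, lam=0.0)        # plain BLR
m1, cov1 = pi_blr_posterior(Phi_obs, y_obs, D, c, lam=100.0)   # physics-informed
print(np.linalg.norm(D @ m0), np.linalg.norm(D @ m1))
```

With `lam=0` the function reduces to standard BLR, mirroring the remark that omitting the PDE-related steps yields an ordinary BLR model; a larger `lam` cannot increase the ODE residual of the posterior mean, since it is the minimiser of a more heavily penalised objective.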

5.2. Bayesian Physics-Informed Neural Network (B-PINN)

As mentioned, Bayesian methods are particularly robust in the presence of noisy or sparse data. By treating NN weights and biases as random variables, these methods estimate their posterior distribution from the available data and physical constraints, enabling effective uncertainty quantification. Integrating Bayesian inference into PINNs naturally regularizes the model, helping to prevent overfitting and improve generalization. By providing posterior distributions, B-PINNs offer valuable insight into the uncertainty and reliability of predictions. However, Bayesian inference techniques, such as MCMC methods (e.g., Metropolis sampling), can be computationally intensive, particularly for high-dimensional problems.

Yang et al. [26] developed a B-PINN to address both forward and inverse nonlinear problems governed by PDEs and noisy data. Utilizing Hamiltonian Monte Carlo and variational inference, the model estimated the posterior distribution, enabling effective uncertainty quantification for predictions. Their approach not only addressed aleatoric uncertainty from noisy data but also achieved greater accuracy than standard PINNs in high-noise scenarios by mitigating overfitting. The posterior distribution was used to estimate the parameters of the surrogate model and the PDE. The PDE was incorporated into the prior to impose physical constraints during the training process.

There are numerous approaches to transforming a PINN into a B-PINN. Mohammad-Djafari [27] introduces a Bayesian physics-informed framework that enhances NN training by integrating domain expertise and uncertainty quantification. Unlike traditional PINNs, which impose physical laws as soft constraints, this approach formalizes physical equations and prior knowledge within a probabilistic framework. The method constructs a loss function that balances three key components: fitting the observed data, adhering to physical laws, and regularizing predictions based on prior estimates from a Bayesian perspective.

In this paper, we use a BNN and incorporate the constructed PDEs into the data loss function to form a type of B-PINN, which is explained in Appendix I. For the corresponding BNN, we exclude Step 5, which incorporates the PDEs. As described in the algorithm in Appendix I, the inputs and outputs of the network are represented as vectors, so the network can handle an arbitrary number of variables, although higher dimensionality raises the computational cost. For real-time multivariate system analysis, the NN (Bayesian or non-Bayesian) is first trained on historical data. Once trained, it can efficiently produce predictions on streaming data, which makes it suitable for systems with moderate dynamics. When the system is highly dynamic, fine-tuning a few parameters in the last one or two hidden layers can help the network adapt to the system’s evolving behavior.

6. A Real-World MTS Dataset

We utilize the household electric power consumption dataset from [28], which comprises 2,075,259 samples recorded at one-minute intervals over a period of nearly four years. The data span from 16 December 2006, at 17:24 to 26 November 2010, at 21:02. Each sample includes seven measured variables alongside the corresponding date and time. Although the dataset does not specify the exact location where the measurements were taken, it provides detailed insights into the energy consumption patterns of a single household.

We add a dynamic variable to the dataset: the elapsed time in hours, computed from the date and time columns and starting from zero at the first sample. To better understand the data, we calculate the correlation between each pair of variables. Two variables have a correlation of exactly one, which indicates that one is a linear function of the other. In other words, a simple linear regression recovers the exact value of one variable from the other.
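The two preprocessing steps above can be sketched in NumPy as follows. The sketch assumes that the date and time columns have already been combined into ISO-formatted timestamps. The function names, and the tolerance used to treat a correlation as "exactly one", are illustrative assumptions.

```python
import numpy as np


def elapsed_hours(timestamps):
    """Hours elapsed since the first timestamp, starting from zero."""
    ts = np.asarray(timestamps, dtype="datetime64[s]")
    return (ts - ts[0]) / np.timedelta64(1, "h")


def linearly_dependent_pairs(data, names, tol=1e-9):
    """Variable pairs whose |Pearson correlation| equals one up to `tol`."""
    corr = np.corrcoef(data, rowvar=False)
    return [
        (names[i], names[j])
        for i in range(len(names))
        for j in range(i + 1, len(names))
        if abs(abs(corr[i, j]) - 1.0) <= tol
    ]


ts = np.array(["2006-12-16T17:24", "2006-12-16T17:25", "2006-12-16T18:24"],
              dtype="datetime64[s]")
print(elapsed_hours(ts))  # [0.0, 1/60, 1.0] hours

rng = np.random.default_rng(1)
a = rng.normal(size=500)
data = np.column_stack([a, 2.0 * a + 1.0, rng.normal(size=500)])
print(linearly_dependent_pairs(data, ["a", "b", "c"]))
```

In the real data, the flagged pair is Global Intensity and Global Active Power, and one member of the pair is dropped.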

The dataset captures the following key electrical quantities, which we rename for easier handling. Input variables are denoted by X indices and outputs by Y indices. Because this is an MTS, some inputs and outputs are the same physical quantity; the outputs are obtained by applying an appropriate shift (lag), such as 30 time units. The variables are described below.

- Elapsed Time : The time that has passed since the beginning of the data collection period, measured in hours. This variable enables us to analyze trends and patterns in energy consumption over time, facilitating time-based analyses and comparisons.

- Global Active Power and : The total active power consumed by the household, averaged over each minute (in kilowatts).

- Global Reactive Power and : The total reactive power consumed by the household, averaged over each minute (in kilowatts).

- Voltage and : The minute-averaged voltage (in volts).

- Global Intensity: The minute-averaged current intensity (in amperes). This variable is a linear function of Global Active Power, so we omit it from the dataset.

- Sub-metering1 and : Energy consumption (in watt-hours) corresponding to the kitchen, primarily attributed to appliances such as a dishwasher, oven, and microwave.

- Sub-metering2 and : Energy consumption (in watt-hours) corresponding to the laundry room, including a washing machine, tumble-drier, refrigerator, and lighting.

- Sub-metering3 and : Energy consumption (in watt-hours) corresponding to an electric water heater and air conditioner.

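Notably, the dataset contains approximately missing values, represented by the absence of measurements between consecutive timestamps. We apply first-order (linear) spline interpolation to fill in the missing parts. Additionally, Ref. [28] explains that the active energy consumed every minute by appliances not covered by the three sub-metering systems can be calculated from the measured variables as

$$E_{\text{other}} = \frac{1000}{60}\,P_{\text{active}} - S_1 - S_2 - S_3,$$

where $P_{\text{active}}$ is the Global Active Power (in kilowatts), $S_1$, $S_2$, and $S_3$ are Sub-metering1, Sub-metering2, and Sub-metering3 (in watt-hours), and $E_{\text{other}}$ is in watt-hours.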

Normalization is recommended because it improves the learning process of ML methods. In this example, we first normalize the dataset and then apply the described method.
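The gap filling, the energy balance from Ref. [28], and the normalization can be sketched as follows. The text does not specify which normalization scheme is used, so min-max scaling is assumed here. The function names are hypothetical.

```python
import numpy as np


def fill_gaps_linear(t, x):
    """Fill NaN gaps by first-order (linear) spline interpolation in time t."""
    x = np.asarray(x, dtype=float).copy()
    missing = np.isnan(x)
    x[missing] = np.interp(t[missing], t[~missing], x[~missing])
    return x


def other_appliances_wh(active_kw, sub1, sub2, sub3):
    """Per-minute active energy (Wh) not measured by the three sub-meters."""
    return active_kw * 1000.0 / 60.0 - sub1 - sub2 - sub3


def min_max_scale(data):
    """Scale each column to [0, 1]; also return (min, max) for inverting later."""
    lo, hi = data.min(axis=0), data.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant columns
    return (data - lo) / span, (lo, hi)


t = np.arange(5.0)
print(fill_gaps_linear(t, [0.0, np.nan, np.nan, 3.0, 4.0]))  # [0. 1. 2. 3. 4.]
print(other_appliances_wh(1.2, 1.0, 2.0, 3.0))               # about 14 Wh
scaled, (lo, hi) = min_max_scale(np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]]))
```

In a forecasting setting, it is usually safer to compute the scaling constants on the training portion only and to reuse them for the validation and test data, so that no future information leaks into training.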

In the PDE construction phase, the most effective method is the surrogate NN with a TCN architecture. We employ two TCN models, which differ as follows. Model 1 is a standard TCN with three convolutional blocks, using 64 and 128 filters and a kernel size of 3. It includes residual connections and dropout layers to reduce overfitting, and it is trained with the Adam optimizer. Model 2 builds on this design by adding dilated convolutions, which capture long-range dependencies more effectively. With dilation rates of , it can analyze broader contexts without a significant increase in computational cost. Model 2 retains the residual connections and dropout for stability and is geared toward temporal dependencies, so it is better at recognizing patterns over extended periods. The CNN model is a one-dimensional CNN with three convolutional blocks. Each block includes ReLU activation, max pooling, and dropout layers, which capture temporal patterns while limiting overfitting. The CNN is trained with the Adam optimizer and the Mean Squared Error (MSE) loss. All networks are trained for 30 epochs.
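The effect of dilation can be made concrete. For kernel size m and dilation rates d_l, one output of a stack of causal convolutions sees 1 + (m − 1)·Σ d_l past time steps, and twice that many additional steps if each block contains two convolutions. The dilation rates in the sketch below are hypothetical, since the rates used in Model 2 are not reproduced in the text.

```python
def causal_receptive_field(kernel_size, dilations, convs_per_block=1):
    """Past time steps visible to the last output of stacked causal convolutions."""
    return 1 + convs_per_block * (kernel_size - 1) * sum(dilations)


# Kernel size 3 as in Models 1 and 2; the dilation rates are hypothetical.
print(causal_receptive_field(3, [1, 1, 1]))  # three undilated blocks: 7
print(causal_receptive_field(3, [1, 2, 4]))  # dilations 1, 2, 4: 15
```

The receptive field grows with the sum of the dilation rates, whereas the parameter count depends only on the number of layers and the kernel size. This is why dilation widens the temporal context at little extra cost.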

The surrogate-NN approach trains a network on a large historical dataset and then differentiates the trained model. The key requirement is prediction accuracy. If the network accurately predicts unseen data, it approximates the unknown function that maps the inputs to the outputs. Because the trained network is itself a differentiable function of its inputs, its derivatives can then serve as estimates of the derivatives of that unknown function.
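The networks in this work are differentiated with automatic differentiation (Appendix E). As a framework-independent illustration only, the sketch below approximates the same Jacobian for any black-box predictor using central finite differences. `predict` is a hypothetical stand-in for a trained surrogate applied to one flattened input window.

```python
import numpy as np


def surrogate_jacobian(predict, x, eps=1e-4):
    """Central-difference Jacobian J[j, k] = d predict(x)_j / d x_k for one sample."""
    x = np.asarray(x, dtype=float)
    y0 = np.asarray(predict(x))
    J = np.zeros((y0.size, x.size))
    for k in range(x.size):
        step = np.zeros_like(x)
        step[k] = eps
        J[:, k] = (np.asarray(predict(x + step)) - np.asarray(predict(x - step))) / (2 * eps)
    return J


def toy_predict(x):
    """Hypothetical surrogate with two inputs and two outputs."""
    return np.array([x[0] ** 2 + 3.0 * x[1], np.sin(x[1])])


J = surrogate_jacobian(toy_predict, [1.0, 0.5])
print(J)  # close to [[2, 3], [0, cos(0.5)]]
```

Automatic differentiation is exact and costs roughly one backward pass per output. Finite differences need two forward passes per input and are sensitive to `eps`, which is one reason gradient tapes are preferred for trained networks.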

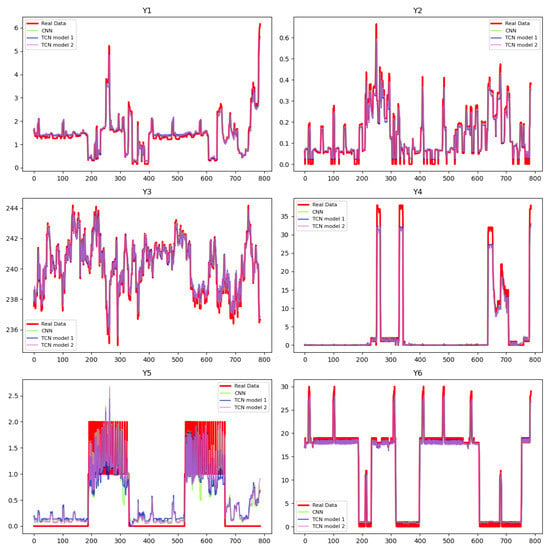

Table 1 displays the metrics of the trained networks, including MSE, Mean Absolute Error (MAE), and the coefficient of determination (R²), which are used to evaluate predictive performance. Figure 1 displays the corresponding predictions of the surrogate NNs. All three networks predict the variables well, except for . As explained earlier, these data relate to electrical appliances that are operated intermittently, which results in many zero values. To evaluate performance under challenging conditions, we deliberately keep the NN simple (three hidden layers, trained for 30 epochs). It performs well for most variables, but accurately predicting requires additional computational effort. Our subsequent results show that the PINN method outperforms traditional NNs in this setting, and increasing the network’s complexity could further enhance this advantage. For the traditional PDE construction methods, success depends heavily on the chosen dictionary and on various hyperparameters. Based on the evaluation criteria, SINDy, LASSO, B-LASSO, and SR struggle to capture the complex relationships between variables, and the PDEs they yield are less suitable for use in PINNs. In contrast, the PDEs generated from surrogate networks are better suited to PINN training.

Table 1.

Performance metrics in PDE construction phase for the state variable predictions.

Figure 1.

Predictions of the state variables in the PDE construction phase via surrogate NNs.

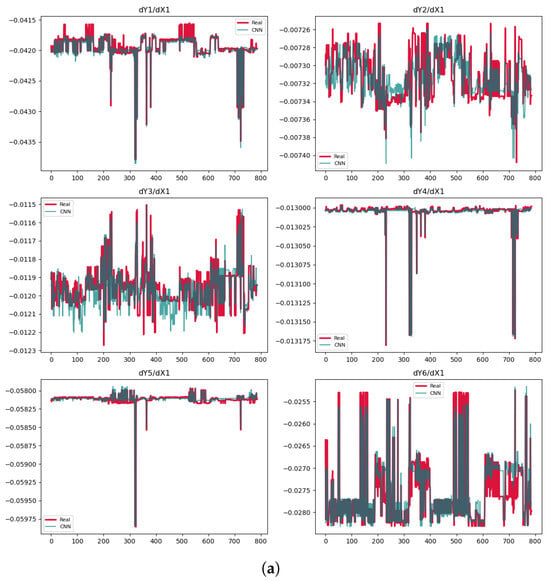

In the next step, we calculate the derivatives of the outputs with respect to all input variables in the training set. We then formulate each PDE by expressing the derivative with respect to the dynamic variable as a degree-3 polynomial of the other derivatives. Table 2 presents the metrics for the surrogate NNs and for all other PDE construction methods described. Because the relationships among variables in this example are highly complex, the traditional approaches (SINDy, LASSO, B-LASSO, and SR) cannot recover meaningful mathematical equations. They produce equations with high error rates, which makes them unsuitable for training NNs. Conversely, the surrogate models capture most of the variation between the derivatives and the variables. We therefore select the most accurate PDEs to train PINN, B-PINN, and PI-BLR, and we compare their results with those obtained without explicit PDEs. Bayesian networks excel in uncertainty analysis but are computationally intensive. PINNs therefore serve as a faster, practical alternative, albeit without uncertainty quantification. Since the other methods show very large errors in Table 2, Figure 2 plots the PDEs only for the surrogate NNs, separately for each network. The networks are plotted separately because each NN represents the outputs with a different mathematical function and therefore yields distinct derivatives. We prioritize PDEs by first identifying those with the lowest errors and the highest R² values in Table 1 and Table 2. We then shortlist those that perform best in the visualizations in Figure 2.

Table 2.

Performance metrics for PDE construction phase for derivatives. Values in bold indicate the corresponding PDEs that are utilized.

Figure 2.

Predictions of the derivatives. (a) CNN model. (b) TCN Model 1. (c) TCN Model 2.

In Table 2, the R² values are negative or near zero in some cases, indicating poor model performance. R² becomes negative when the model’s prediction errors (SSR) exceed the natural variability in the data (SST), which means the model fails to capture meaningful patterns. We use only the PDEs from the TCN models (Model 1 or Model 2), even though the CNN shows some high R² values. The reason is that the CNN’s prediction plots in Figure 2a display significant variability, which makes its derivatives unreliable. In contrast, the predictions for , , and in Model 1, as well as for in Model 2, are very accurate. In Model 1, the R² for is , largely because one part of the data lies far from the exact values.
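The metrics reported in Tables 1 to 3 follow standard definitions. This sketch uses R² = 1 − SSR/SST and shows that a prediction worse than the sample mean yields a negative value. The function name is illustrative.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """MSE, MAE, and R^2 = 1 - SSR/SST for one output variable."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y_true - y_pred
    ssr = np.sum(resid**2)                       # residual sum of squares
    sst = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
    return {"MSE": np.mean(resid**2), "MAE": np.mean(np.abs(resid)), "R2": 1.0 - ssr / sst}


y = [1.0, 2.0, 3.0, 4.0]
print(regression_metrics(y, [2.5] * 4)["R2"])             # predicting the mean: 0.0
print(regression_metrics(y, [4.0, 3.0, 2.0, 1.0])["R2"])  # worse than the mean: -3.0
```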

Since TCNs have a strong architecture for recognizing temporal relationships, we also apply a TCN in the prediction phase. The TCN layer extracts temporal features through dilated convolutions with dilation rates of , utilizing 64 filters and a kernel size of 2. The output layer then maps these features to 6 output variables using a dense layer.

We use the same architecture for both PINNs and standard NNs; the PINNs differ only in the PDEs they include. PINN training minimizes (A1), whereas the NNs minimize only the data loss. For TCN Model 1, we develop two distinct PINNs. One incorporates the PDE related to , while the other includes the PDEs of , and . We train the non-Bayesian networks for 50 epochs. For a better comparison, we also report results with early stopping and a maximum of 200 epochs; in practice, all early-stopped runs terminate in fewer than 50 epochs.

To compare sample sizes in the prediction phase, we select three different sizes for the training, validation, and test sets. The training set starts at the data point immediately following the last sample of the PDE construction phase, so the two phases use disjoint samples. We define small (2000 training, 500 validation, and 500 testing), medium (26,000 training, 4000 validation, and 500 testing), and large (91,656 training, 4324 validation, and 500 testing) sample sizes. The large samples comprise all data remaining after the PDE construction phase.
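The contiguous splits can be written down directly. The sketch assumes that the validation and test blocks follow the training block in time, which the text implies but does not state. `start` is a hypothetical index of the first sample after the PDE construction phase.

```python
def chronological_split(start, n_train, n_val, n_test):
    """Contiguous, non-overlapping (train, val, test) index ranges beginning at `start`."""
    train = range(start, start + n_train)
    val = range(train.stop, train.stop + n_val)
    test = range(val.stop, val.stop + n_test)
    return train, val, test


SIZES = {
    "small": (2000, 500, 500),
    "medium": (26_000, 4000, 500),
    "large": (91_656, 4324, 500),
}
start = 0  # hypothetical: first index after the PDE construction phase
for name, sizes in SIZES.items():
    train, val, test = chronological_split(start, *sizes)
    print(name, len(train), len(val), len(test), test.stop - start)
```

Keeping the blocks in time order, rather than shuffling, prevents the model from being validated or tested on samples that precede its training data.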

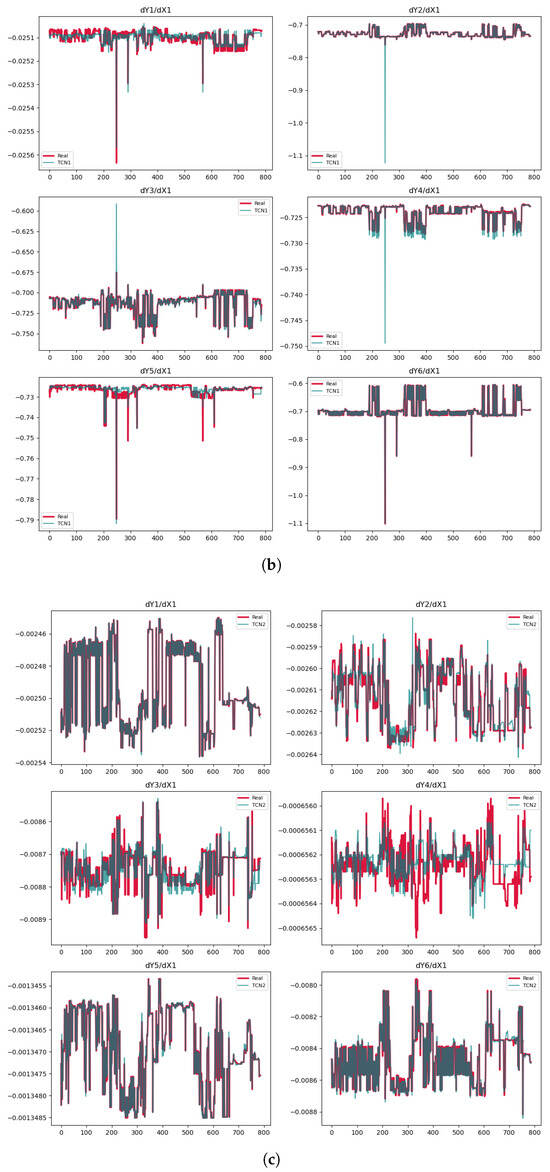

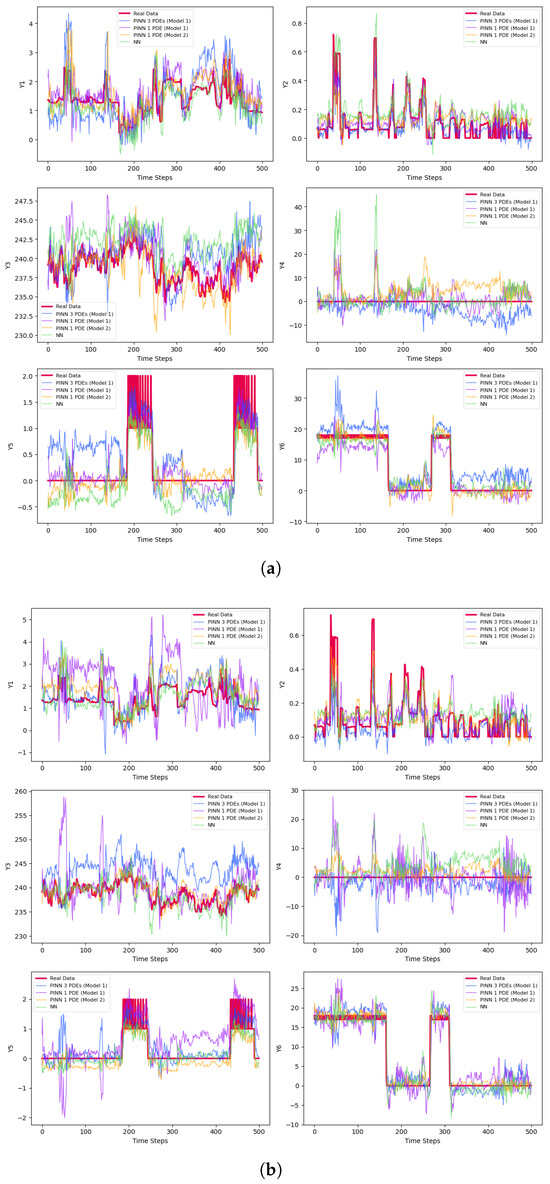

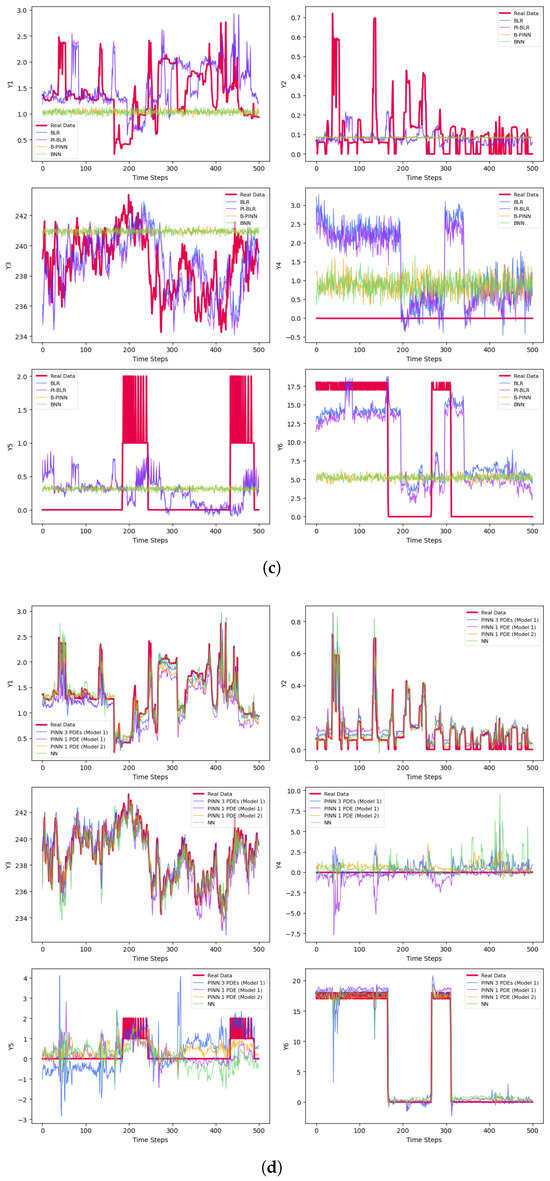

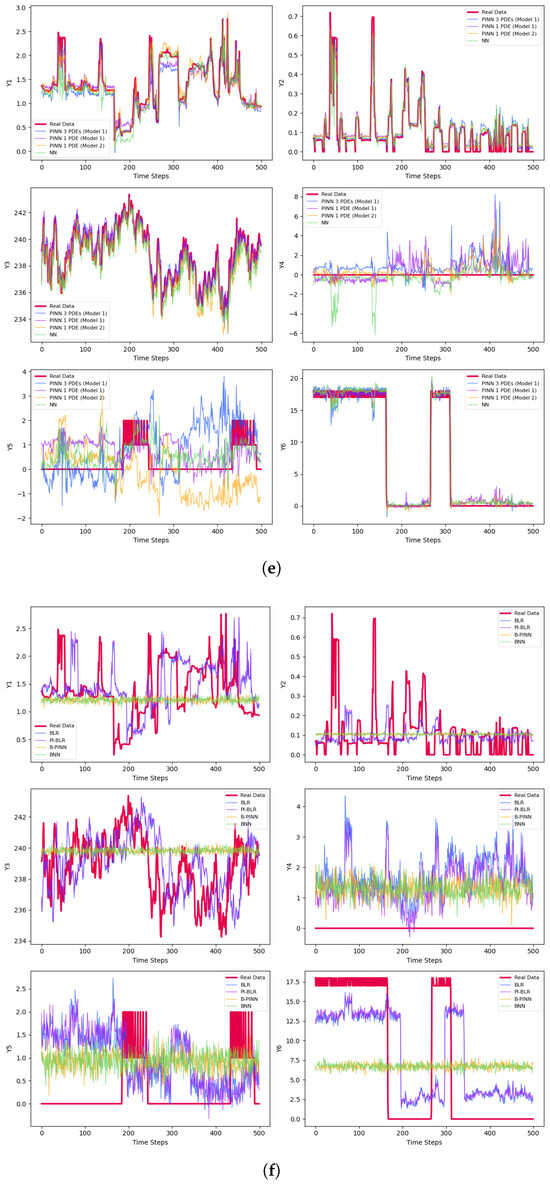

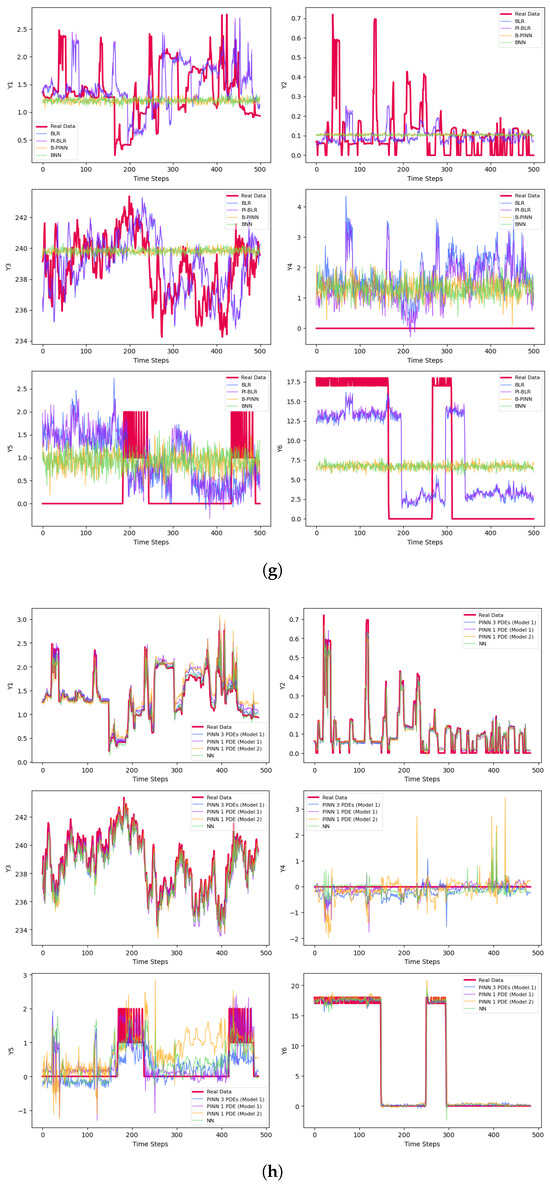

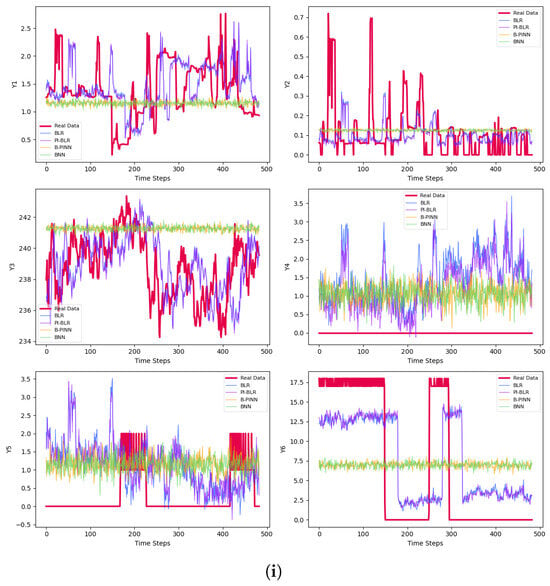

The final prediction results are presented in Table 3 and Figure 3. Figure 3a–c show results for small samples, Figure 3d–f for medium-sized samples, and Figure 3g–i for large samples. Within each set, Figure 3a,d,g display the test-set predictions after 50 epochs of training, Figure 3b,e,h show the results with early stopping, and Figure 3c,f,i show the prediction curves obtained with Bayesian methods.

Table 3.

Performance metrics of the algorithms in the prediction phase. Bold values show the best predictions for each sample size; starred values denote the top Bayesian predictions.

Figure 3.

Predictions of the state variables on the test sets. (a) Predictions of the small samples with 50 epochs. (b) Predictions of the small samples with early stopping. (c) Bayesian predictions of the small samples. (d) Predictions of the medium-size samples with 50 epochs. (e) Predictions of the medium-size samples with early stopping. (f) Bayesian predictions of the medium-size samples. (g) Predictions of the large samples with 50 epochs. (h) Predictions of the large samples with early stopping. (i) Bayesian predictions of the large samples.

We construct samples of varying sizes to compare results. Naturally, a larger training sample improves accuracy on the test samples because more information is fed into the model. However, larger samples are often impractical because of higher costs and longer computation times. Our main comparison is therefore between traditional NNs and PINNs, which incorporate physical information such as PDEs into the network. Adding physical information increases the time per epoch, so PINNs take longer to train for the same number of epochs. When PDEs are estimated from data, however, PINNs can learn effectively with fewer epochs than simple NNs and, depending on the application, often reach higher accuracy. In this study, we assume the PDEs are unknown and derive them from historical data, which preserves the advantage of PINNs over traditional NNs that do not use PDEs. Such equations are particularly useful when the relationships between variables are too complex to be captured by simple mathematical models. In our experiments, estimating PDEs from data generally yielded better results than ignoring this information altogether. The following steps summarize our approach to data preparation and PDE construction for physics-informed networks.

Data Analysis Algorithm:

- Check the correlations between variables and remove those with linear relationships.

- Use interpolation methods like first-order spline to fill in missing data gaps.

- Normalize the data to facilitate capturing complex relationships between variables.

- Divide the data into two portions: approximately for the PDE construction phase and for the prediction phase using data-driven PDEs. Additionally, split each segment into training, validation, and testing sets.

- Apply the PDE construction methods outlined in Section 3:

- Train the physics-informed network using of the remaining samples, along with the data-driven PDEs.

- Use the previous T samples to predict one point in the future. Then repeat the process, using the latest predicted values as inputs to generate subsequent points. Continue this recursive prediction until the desired number of future points is reached.

- Evaluate the predictions using metrics such as MSE.
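The recursive forecasting step above can be sketched for any one-step model. `one_step` is a hypothetical callable that stands in for a trained PINN or NN mapping the last T samples to the next one.

```python
import numpy as np


def recursive_forecast(one_step, history, horizon):
    """Roll a one-step model forward, feeding each prediction back as an input.

    one_step : callable mapping a (T, n_vars) window to the next (n_vars,) vector
    history  : (T, n_vars) array of the most recent observed samples
    horizon  : number of future points to generate
    """
    window = np.array(history, dtype=float)
    preds = []
    for _ in range(horizon):
        nxt = np.asarray(one_step(window), dtype=float)
        preds.append(nxt)
        window = np.vstack([window[1:], nxt])  # drop the oldest, append the newest
    return np.array(preds)


def extrapolate(window):
    """Hypothetical one-step model: linear extrapolation from the last two samples."""
    return window[-1] + (window[-1] - window[-2])


preds = recursive_forecast(extrapolate, [[0.0], [1.0], [2.0]], horizon=3)
print(preds.ravel())  # [3. 4. 5.]
```

Because each prediction becomes an input to the next step, errors can accumulate over the horizon. This is one reason accuracy is evaluated on held-out test windows.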

Generally, the non-Bayesian methods perform better and are faster by over . One reason is that Bayesian models are more complex and require significantly more samples and chains to train accurately. The limited training time and high computational cost likely contribute to their poor results, which is why we omit training the Bayesian networks that use one PDE from Models 1 and 2. Among the Bayesian methods, the highest R² is achieved by the B-PINN with the large sample size, while the visually closest fits are produced by BLR and PI-BLR, as shown in Figure 3i.

There are six variables to predict, , and three sample sizes (small, medium, and large), which gives a total of 18 prediction cases. All networks struggle to predict , consistently yielding an R² of zero across training sample sizes. For all other variables except , the PINNs produce the best predictions, with the highest R² values highlighted in bold in Table 3. The exception, , is best predicted by the simple NN. Among the remaining twelve cases, TCN Model 2 achieves the highest R² in nine, as shown in Table 3. This superiority mainly stems from Model 2’s dilated convolutions, which improve the network’s ability to capture long-term dependencies and learn more complex data patterns. TCN Model 2 uses only one PDE, which, according to Table 2 and Figure 2c, is highly accurate. In the remaining three cases (predicting with small samples, with large samples, and with large samples), TCN Model 1 with a single PDE performs best. TCN Model 1 is evaluated in two scenarios, using a single PDE and using three PDEs; the corresponding metrics are presented in Table 2 and the derivative plots in Figure 2b. In this example, TCN Model 1 with a single PDE outperforms the same model with three PDEs. Overall, PINNs achieve higher prediction accuracy than non-physics-based networks. Among the PINN models, TCN Model 2 performs best, with the top result in nine prediction cases, followed by TCN Model 1 with a single PDE.

PINNs are well known for injecting additional information into the training process. In this research, we have no predefined PDEs; instead, we construct them from historical data. Incorporating these PDEs improves the network’s performance: the computations in the PDE construction phase add useful information to the network, even though this information is itself derived from the available data.

7. Conclusions

This work demonstrates that physics-informed learning frameworks can be effectively adapted to systems with unknown governing equations by integrating data-derived PDEs. We show that surrogate TCNs with dilated convolutions can automatically construct meaningful PDEs from raw MTS data. This capability enables the application of PINNs even in domains where predefined physical laws are absent. PINNs achieved significant improvements in predictive accuracy for most variables. The Bayesian methods, by contrast, revealed a trade-off between computational efficiency and uncertainty quantification, and they require rich datasets, including sufficient sample sizes and numbers of chains. Interestingly, the constructed PDEs help improve the model’s efficiency by guiding predictions toward physically plausible solutions, even when they are derived only from historical data. These findings highlight the potential of hybrid data-physics approaches in fields such as economics and the social sciences, where explicit governing equations are often difficult to identify.

Author Contributions

The contributions are as follows: S.A.F.M.: Methodology, Conceptualization, Validation, Writing—original draft, Writing—review and editing. R.W.: Supervision, Methodology, Investigation, Writing—original draft, Writing—review and editing. A.M.-D.: Supervision, Methodology, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Innovative Research Group Project of the National Natural Science Foundation of China (Grant No. 51975253).

Data Availability Statement

The data supporting the findings in Section 6 are openly available at https://www.kaggle.com/datasets/uciml/electric-power-consumption-data-set, accessed on 22 June 2025.

Conflicts of Interest

Ali Mohammad-Djafari is employed by International Science Consulting and Training (ISCT) and Shanfeng Company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A. SINDy Algorithm

- Prepare the system data for inputs and for outputs. Here, n represents the number of samples, d denotes the number of input features, and p indicates the number of target variables. Note that the input contains both dynamic and state variables, while the output consists of the state variables of , excluding the dynamic variables. In some applications, there might be only one dynamic variable (e.g., elapsed time t), which leads to the relationship . For clarification, consider the following example: where is the only dynamic variable. When dealing with MTS, it is important to account for time steps when preparing the output variables. The output variable should be shifted by T samples from the input corresponding to the state variables, which is known as the lag operator in MTS contexts. This means that the first T samples of the state variables in must be removed to construct the outputs. A larger T can improve predictions but also requires more computation. To simplify notation, assume the number of samples in both and is equal to n.

- Calculate the necessary derivatives of the output variables with respect to the dynamic variables: where for is defined: where is the number of dynamic variables. In this definition, can be replaced by d to take the derivatives with respect to all inputs if desired. Numerical differentiation techniques may be needed to estimate the derivatives from the collected data.

- Create a library of candidate basis functions for the state variables , including polynomial terms, interactions between variables, trigonometric functions, and other relevant functions that may describe the dynamics of the system. This step is crucial because it lays the foundation for accurately identifying the equations that govern the system’s behavior. For more complex models, one might also include the derivatives of in the library, resulting in , where .

- Formulate the sparse regression problem , where is the coefficient vector to estimate, and ⊙ is the Hadamard product.

- Regularize the coefficient vector by adding a penalty term to the loss function. This prevents the coefficients from becoming excessively large and promotes sparsity by shrinking some coefficients to zero. Refine the coefficients by minimizing the penalized least-squares objective, where $\|\cdot\|_2$ and $\|\cdot\|_1$ are the $\ell_2$ and $\ell_1$ norms, respectively.

- Select the active terms from the regression results that have non-zero coefficients.

- Assemble the identified terms into a mathematical model, typically in the form of a PDE.

- Validate the constructed model against the original data and select the equations that accurately describe the data.
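SINDy’s sparse regression is commonly solved by sequentially thresholded least squares (STLSQ). STLSQ keeps only the active terms at each iteration, which matches the description at the end of Appendix B. The sketch below uses STLSQ in place of the $\ell_1$-penalized objective. The threshold, the cubic polynomial library, and the test system are illustrative assumptions.

```python
import numpy as np


def stlsq(theta, dxdt, threshold=0.1, n_iter=10):
    """Sequentially thresholded least squares for Theta @ Xi ~ dX/dt with sparse Xi.

    theta : (n, K) library of candidate functions evaluated at the samples
    dxdt  : (n, p) derivatives of the p outputs with respect to the dynamic variable
    """
    xi = np.linalg.lstsq(theta, dxdt, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(xi) < threshold
        xi[small] = 0.0  # prune inactive terms
        for j in range(xi.shape[1]):
            active = ~small[:, j]
            if active.any():  # refit only the active terms of output j
                xi[active, j] = np.linalg.lstsq(theta[:, active], dxdt[:, j], rcond=None)[0]
    return xi


# Illustrative system dx/dt = -2 x + 0.5 x^3 with a cubic polynomial library.
x = np.linspace(0.1, 2.0, 200)
theta = np.column_stack([np.ones_like(x), x, x**2, x**3])
dxdt = (-2.0 * x + 0.5 * x**3)[:, None]
xi = stlsq(theta, dxdt)
print(xi.ravel())  # active terms: x and x^3
```

With measured data, `dxdt` would come from numerical differentiation (e.g., `np.gradient`) or from a surrogate network, and the threshold would be tuned on validation data.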

Appendix B. LASSO Algorithm

- Proceed as in the SINDy Algorithm to define . In this algorithm, the derivatives are computed with respect to the other input features as well as the dynamic variables.

- Formulate the LASSO regression model as

- Choose a range of regularization-parameter values to test (e.g., , , 1, 10, 100).

- Implement k-fold cross-validation by splitting the data into k subsets. Use each subset as the validation set and the remaining subsets as the training set.

- Fit the LASSO model to the training set using the current regularization parameter.

- Calculate the MSE or MAE on the validation set and average it across all k folds for each candidate value.

- Using the optimal regularization parameter found from cross-validation, fit the LASSO model to the entire dataset X and Y to find . For simplicity, one can skip Steps 3 and 4 and use a predetermined regularization parameter.

- Formulate the constructed function as a PDE based on the non-zero coefficients.

- Validate the constructed PDE against a separate dataset to ensure its predictive capability.

SINDy and LASSO use the same criterion; the difference lies in their optimization algorithms. In SINDy, only the active terms (those whose coefficients c exceed a certain threshold in magnitude) are retained at each iteration. The algorithm used here is the one described above.
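The LASSO steps can be sketched in NumPy for completeness. The sketch combines cyclic coordinate descent for the objective (1/2n)‖y − Xc‖₂² + λ‖c‖₁ with k-fold selection of λ. The scaling of the objective, the use of contiguous folds, and the function names are assumptions; in practice, a library implementation would normally be used.

```python
import numpy as np


def lasso_cd(X, y, lam, n_iter=200):
    """Coordinate descent for min_c (1/2n)||y - Xc||^2 + lam * ||c||_1 (no intercept)."""
    n, d = X.shape
    c = np.zeros(d)
    col_sq = (X**2).sum(axis=0) / n
    for _ in range(n_iter):
        for k in range(d):
            r_k = y - X @ c + X[:, k] * c[k]  # partial residual without feature k
            rho = X[:, k] @ r_k / n
            c[k] = np.sign(rho) * max(abs(rho) - lam, 0.0) / col_sq[k]  # soft-threshold
    return c


def lasso_kfold(X, y, lams, k=5):
    """Choose lam by mean validation MSE over k contiguous folds, then refit on all data."""
    idx = np.arange(len(y))
    folds = np.array_split(idx, k)
    scores = []
    for lam in lams:
        mse = []
        for val in folds:
            train = np.setdiff1d(idx, val)
            c = lasso_cd(X[train], y[train], lam)
            mse.append(np.mean((y[val] - X[val] @ c) ** 2))
        scores.append(np.mean(mse))
    best = lams[int(np.argmin(scores))]
    return best, lasso_cd(X, y, best)


rng = np.random.default_rng(2)
X = rng.normal(size=(200, 5))
c_true = np.array([3.0, 0.0, 0.0, -2.0, 0.0])
y = X @ c_true + 0.01 * rng.normal(size=200)
best, c_hat = lasso_kfold(X, y, [1e-3, 1e-2, 1e-1, 1.0, 10.0])
print(best, np.round(c_hat, 3))
```

Large values of λ set every coefficient to zero, and small values approach ordinary least squares. Cross-validation picks the trade-off, and the non-zero entries of `c_hat` define the PDE terms.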

Appendix C. B-LASSO Algorithm

- Follow Steps 1 to 3 in the SINDy Algorithm to prepare the data and define the design matrix.

- Formulate the B-LASSO model as follows: where represents the regression coefficients of the model, denotes the positive real regularization parameters, and is the model noise factor. The hyperparameters , , , , and can be approximated from the data. The symbols , , , and represent the Normal, Laplace, Gamma, and Half-Cauchy distributions, respectively. For simplicity, can be the identity function.

- Estimate the posterior distribution using a sampling method such as MCMC. The posterior distribution of the parameters , , and given the data and is expressed as

- Approximate the coefficients using the posterior samples: where represents the ith sample of from the jth chain, N is the number of draws per chain, and M is the number of chains. The highest density interval (HDI) of the coefficients can be used to measure uncertainty at a specified credibility level, such as . The HDI is a Bayesian credible interval that contains the most probable values of the posterior distribution.

- Construct PDEs based on the non-zero coefficients in the form .

- Evaluate the constructed PDEs using unseen data and select the equations that demonstrate high accuracy.
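The coefficient averaging and the HDI described above can be sketched as follows. The sketch assumes that the MCMC draws are stored as an array of shape (M chains, N draws, K coefficients). The HDI routine returns the narrowest interval that contains the requested posterior mass. Treating a coefficient as non-zero when its HDI excludes zero is one possible selection rule, not necessarily the one used here.

```python
import numpy as np


def posterior_mean(samples):
    """Average over all chains and draws: samples has shape (M, N, K)."""
    return np.asarray(samples).mean(axis=(0, 1))


def hdi(draws, prob=0.95):
    """Narrowest interval that contains a fraction `prob` of the 1-D draws."""
    x = np.sort(np.asarray(draws).ravel())
    n_in = int(np.ceil(prob * len(x)))
    widths = x[n_in - 1:] - x[: len(x) - n_in + 1]
    i = int(np.argmin(widths))
    return x[i], x[i + n_in - 1]


rng = np.random.default_rng(4)
draws = rng.exponential(size=200_000)  # skewed posterior, e.g. for a scale parameter
print(hdi(draws))  # starts near 0, unlike the equal-tailed 2.5% to 97.5% interval
```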

Appendix D. SR Algorithm

- Prepare the relevant input features and target features .

- Compute the derivative matrix of the output variables with respect to the input variables.

- Utilize a SR package, such as gplearn (a Python library for SR based on genetic programming, for example, version ), to approximate the relationship between the derivatives and the target variables.

- Fit a symbolic regressor to the training data to approximate the relationship: The symbolic regressor searches for a mathematical expression that minimizes the error:

- Construct the best-performing equation from the symbolic regressor . For example, one PDE may have the form: where is the first target feature, is the first input feature, and represents all input features except the first one.

- Evaluate the constructed PDEs and select those with high accuracy.

Appendix E. CNN Algorithm

- Provide the input features and the target variables , where T is the number of time steps, known as lags and explained in Appendix A. For example, means that 10 previous time steps are used to predict the value at time .

- Apply one-dimensional convolutional layers with filters to the lth layer, where m is the kernel size. The output of the lth convolutional layer is where * denotes the convolution operation, is the output of the previous layer, is the bias term, and ReLU is the activation function.

- Use max-pooling to reduce the dimensionality of the feature maps:

- Flatten the output of the final convolutional layer and pass it through a fully connected layer to produce the predicted outputs : where and are the weights and biases of the last layer and · denotes matrix multiplication.

- Train the model using the MSE loss function. To obtain accurate derivatives, it is essential to have a well-trained network, as this ensures the reliability of the derivatives produced afterward.

- Compute the derivatives of the predicted outputs with respect to the input features using automatic differentiation:

- Organize the derivatives into a matrix , where each entry represents the derivative of the jth output variable with respect to the kth input feature at time step t for the ith sample:

- Perform a correlation analysis between the derivatives and the target variables to identify the most significant terms in the PDEs. Compute the correlation matrix to quantify the relationships.

- Select the derivatives with the highest correlation (e.g., correlation greater than ) to the target variables. These derivatives are considered candidate terms for the PDEs. For example, to construct a PDE for a specific output feature with respect to a specific input feature, such as , we select the most relevant derivatives and state variables associated with the desired derivative.

- Approximate the relationship between the significant derivatives and the target variables using a polynomial regression model. The resulting polynomial equation represents the constructed PDE: where represents polynomial features of degree q constructed from the significant derivatives and state variables.

- Evaluate the accuracy of the constructed PDEs and select those with the highest predictive performance.
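The correlation screening and polynomial regression steps above can be sketched in NumPy as follows. The correlation threshold of 0.5 is a placeholder, since the threshold used here is not reproduced in the text. The function names are hypothetical.

```python
import numpy as np
from itertools import combinations_with_replacement


def poly_features(Z, degree=3):
    """All monomials of the columns of Z up to `degree`, plus a constant column."""
    n, m = Z.shape
    cols = [np.ones(n)]
    for deg in range(1, degree + 1):
        for idx in combinations_with_replacement(range(m), deg):
            cols.append(np.prod(Z[:, list(idx)], axis=1))
    return np.column_stack(cols)


def fit_pde(target, candidates, min_corr=0.5, degree=3):
    """Keep candidates with |corr| > min_corr, then fit a degree-q polynomial by least squares."""
    corr = np.array([abs(np.corrcoef(target, candidates[:, k])[0, 1])
                     for k in range(candidates.shape[1])])
    keep = np.flatnonzero(corr > min_corr)
    Phi = poly_features(candidates[:, keep], degree)
    coef = np.linalg.lstsq(Phi, target, rcond=None)[0]
    return keep, coef, Phi @ coef


rng = np.random.default_rng(3)
a = rng.uniform(0.5, 1.5, 300)  # a relevant derivative or state variable
b = rng.normal(size=300)        # an irrelevant candidate
target = 2.0 * a**3 + a         # illustrative derivative to be explained
keep, coef, fitted = fit_pde(target, np.column_stack([a, b]))
print(keep, np.round(coef, 6))  # only column 0 kept; coefficients of 1, a, a^2, a^3
```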

Appendix F. TCN Algorithm

- Follow Step 1 of the CNN Algorithm.

- Apply a TCN to the input data. The TCN consists of multiple dilated convolutional layers with causal padding to preserve the temporal order of the data. The output of the lth dilated convolutional layer is given by where denotes the causal convolution operation with a dilation rate that increases exponentially with each layer. Causal padding ensures that the model does not use future information for predictions: for a kernel of size m and a dilation rate , the input is padded on the left side with a number of zeros equal to (m − 1) multiplied by the dilation rate.

- Add residual connections to improve gradient flow and stabilize training. For the lth layer, the residual connection is computed as where and are the residual weights and bias. The final output of the layer is

- Perform Steps 4 to 11 of the CNN Algorithm.
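The causal dilated convolution can be illustrated with a single-channel NumPy sketch. The input is left-padded with (m − 1)·d zeros, so each output depends only on current and past inputs. The sketch omits multiple filters, activations, and residual connections, and the function name is illustrative.

```python
import numpy as np


def causal_dilated_conv1d(x, w, b=0.0, dilation=1):
    """y[t] = b + sum_i w[i] * x[t - (m - 1 - i) * dilation], zero-padded on the left.

    x : (T,) single-channel input sequence
    w : (m,) kernel; w[-1] multiplies the current time step
    """
    m = len(w)
    pad = (m - 1) * dilation
    xp = np.concatenate([np.zeros(pad), np.asarray(x, dtype=float)])
    return np.array([
        b + sum(w[i] * xp[t + i * dilation] for i in range(m))
        for t in range(len(x))
    ])


x = np.arange(1.0, 9.0)
y = causal_dilated_conv1d(x, np.array([1.0, 1.0]), dilation=2)
print(y)  # y[t] = x[t - 2] + x[t]: [1, 2, 4, 6, 8, 10, 12, 14]
```

Changing future inputs leaves earlier outputs unchanged, which is the property that causal padding guarantees.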

Appendix G. PINN Algorithm

- Prepare a new dataset of inputs and outputs , similar to Step 1 in the SINDy Algorithm.

- Create an NN architecture whose numbers of inputs and outputs match the data.

- Define the physical loss function based on the constructed PDEs. For example, if a PDE is constructed as where is a non-linear function, , and , then the loss function is defined by the MSE: where is a vector of zeros and ones. The values of indicate whether the corresponding derivative equations are included in the loss function based on the PDE construction. If , then contributes to the loss function; conversely, indicates that no equation is defined for , and it is excluded from the loss.

- Determine the data loss function as the MSE between the network predictions and the observed outputs.

- Combine the physics loss and the data loss into a total loss function. Although MSE is used in this algorithm, other loss functions can also be applied.

- Use the Adam optimizer or any other suitable optimizer to minimize the total loss with respect to the network weights and biases.

- Train the model for a fixed number of epochs.

- Evaluate the trained model on the validation and test sets using the total loss function. If necessary, refine or tune the hyperparameters to achieve better results.
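The masked physics loss can be illustrated with a small NumPy sketch. To stay dependency-free it assumes a one-hidden-layer tanh network whose input Jacobian is derived by hand; a real PINN would obtain the derivatives by automatic differentiation and minimize the total loss with Adam. PDEs are assumed to have the form dY_j/dX_i = f_ji(X, Y), the mask is a (J, I) array of zeros and ones, and the weight `lam` on the physics term, the averaging over defined equations, the toy right-hand side, and all names are assumptions.

```python
import numpy as np


def mlp_forward(params, X):
    """One-hidden-layer tanh network: X (N, I) -> Y (N, J); also returns hidden activations."""
    W1, b1, W2, b2 = params
    A = np.tanh(X @ W1 + b1)  # (N, H)
    return A @ W2 + b2, A


def input_jacobian(params, A):
    """dY[n, j] / dX[n, i] = sum_h W2[h, j] * (1 - A[n, h]**2) * W1[i, h]."""
    W1, _, W2, _ = params
    return np.einsum("hj,nh,ih->nji", W2, 1.0 - A ** 2, W1)  # (N, J, I)


def pinn_losses(params, X, Y_obs, rhs, mask, lam=1.0):
    """Return (total, data, physics) losses.

    rhs(X, Y_pred) gives an (N, J, I) array with the right-hand side f_ji of each
    PDE dY_j/dX_i = f_ji(X, Y); mask (J, I) flags which equations are defined.
    """
    Y_pred, A = mlp_forward(params, X)
    residual = input_jacobian(params, A) - rhs(X, Y_pred)  # (N, J, I)
    n_equations = max(mask.sum(), 1)
    loss_phys = np.sum(mask * np.mean(residual ** 2, axis=0)) / n_equations
    loss_data = np.mean((Y_pred - Y_obs) ** 2)
    return loss_data + lam * loss_phys, loss_data, loss_phys


def toy_rhs(X, Y):
    """Hypothetical PDE family dY_j/dX_i = -Y_j, broadcast over all inputs i."""
    return np.broadcast_to(-Y[:, :, None], (X.shape[0], Y.shape[1], X.shape[1]))


rng = np.random.default_rng(0)
N, I, H, J = 32, 3, 8, 2
params = (rng.normal(size=(I, H)), rng.normal(size=H), rng.normal(size=(H, J)), rng.normal(size=J))
X = rng.normal(size=(N, I))
Y_obs = rng.normal(size=(N, J))
mask = np.zeros((J, I))
mask[0, 1] = 1.0  # only dY_0/dX_1 has a PDE in this toy setup
total, data, phys = pinn_losses(params, X, Y_obs, toy_rhs, mask)
```

Setting every mask entry to zero removes the physics term entirely, which gives the unconstrained NN baseline.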

Appendix H. PI-BLR Algorithm

- Prepare the input variables and the output targets, similar to the SINDy Algorithm.

- Define the priors and the Normal likelihood for the observed outputs, whose scales are controlled by fixed hyperparameters. An auxiliary term is included to improve sampling efficiency, the regression coefficients use a non-centered parameterization for stable sampling, and the scale parameters enforce sparsity through Gamma priors.

- Specify the desired PDEs as in Step 3 of the PINN Algorithm.

- Construct the posterior distribution of the model parameters given the input and output data.

- Set the PDEs to zero, that is, require each PDE residual to vanish. A PDE does not need to be defined for every output j and input i; for undefined pairs, the residual is taken to be zero. For simplicity, the defined PDEs can be indexed by a single counter. The derivatives in these residuals depend on the regression coefficients through the model established in Step 2, although this dependence is not explicit in the residual form itself. The PDE residuals act as soft constraints, scaled by a weight that plays the role of a precision; this is equivalent to assuming that the residuals follow a zero-mean Normal distribution. The corresponding logarithmic term is therefore a quadratic penalty on the residuals, equivalent to a Normal prior on the PDE residuals.

- Rewrite the posterior distribution to include this physics-based potential term, which penalizes deviations from the domain-specific PDEs.

- Find the maximum a posteriori (MAP) estimate of the parameters and use it to initialize the sampler.

- Sample from the full posterior using the No-U-Turn Sampler (NUTS), specifying appropriate values for the number of chains, the number of tuning steps, the number of returned samples, the target acceptance rate, and the maximum tree depth. Tuning steps, also known as burn-in, are the initial samples discarded from each chain before the desired number of samples is collected.

- Evaluate the model on an unseen dataset and adjust the hyperparameters as necessary.
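The effect of the physics potential on the MAP estimate (Step 7) can be seen in a deliberately simplified NumPy sketch. It is not the paper's PI-BLR: the hierarchical Gamma scales and non-centered coefficients are replaced by fixed scales `sigma` and `tau` and a plain Normal prior, so the log posterior is quadratic and the MAP has a closed form. The quadratic library [1, x, x²], the toy PDE dy/dx = 2 + x, and all names are hypothetical. Because the model is linear in its coefficients, the model derivative is also linear in them, which is why the PDE residual can be written as `D @ beta - c`.

```python
import numpy as np


def features(x):
    """Hypothetical polynomial library Phi(x) = [1, x, x^2] for a scalar input x."""
    return np.column_stack([np.ones_like(x), x, x ** 2])


def d_features_dx(x):
    """dPhi/dx = [0, 1, 2x]; the model derivative dPhi/dx @ beta is linear in beta."""
    return np.column_stack([np.zeros_like(x), np.ones_like(x), 2.0 * x])


def log_posterior(beta, Phi, y, D, c, sigma, tau, lam):
    """Unnormalized log posterior: Normal likelihood + Normal prior + physics potential."""
    log_lik = -0.5 * np.sum((y - Phi @ beta) ** 2) / sigma ** 2
    log_prior = -0.5 * np.sum(beta ** 2) / tau ** 2
    # PDE residual r = D @ beta - c; a Normal(0, 1/lam) prior on r gives -lam/2 * ||r||^2 + const.
    log_pde = -0.5 * lam * np.sum((D @ beta - c) ** 2)
    return log_lik + log_prior + log_pde


def map_estimate(Phi, y, D, c, sigma, tau, lam):
    """Closed-form maximizer of log_posterior (every term is quadratic in beta)."""
    A = Phi.T @ Phi / sigma ** 2 + np.eye(Phi.shape[1]) / tau ** 2 + lam * D.T @ D
    rhs = Phi.T @ y / sigma ** 2 + lam * D.T @ c
    return np.linalg.solve(A, rhs)


# Toy data from y = 1 + 2x + 0.5x^2 with noise; PDE dy/dx = 2 + x at collocation points.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 15)
y = 1.0 + 2.0 * x + 0.5 * x ** 2 + rng.normal(0.0, 0.3, x.size)
x_col = np.linspace(-1.0, 1.0, 50)
Phi, D, c = features(x), d_features_dx(x_col), 2.0 + x_col
beta_data = map_estimate(Phi, y, D, c, sigma=0.3, tau=10.0, lam=0.0)    # no physics term
beta_phys = map_estimate(Phi, y, D, c, sigma=0.3, tau=10.0, lam=100.0)  # with physics term
```

Increasing `lam` trades data fit for PDE consistency; in the full model this MAP point would only initialize NUTS rather than serve as the final estimate.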

Appendix I. B-PINN Algorithm

- Start by following Step 1 of the SINDy Algorithm to load the input and output datasets.

- Define the initial layer and the subsequent layers using a non-centered parameterization of the weights, where c is a small scalar that scales the raw (non-centered) weights and L is the total number of layers. Separate prior distributions are assumed for the weights and for the biases. The hidden layers use the hyperbolic tangent activation, which is omitted in the last layer for regression tasks.

- Assign a Half-Normal prior to the observational noise. Because the Half-Normal distribution is lighter-tailed than the Half-Cauchy, this prior penalizes large noise values and thereby improves identifiability in high-dimensional B-PINNs.

- Construct the likelihood function based on the network prediction.

- Formulate the PDEs as outlined in Steps 3 and 5 of the PI-BLR Algorithm.

- Define the hierarchical Bayesian model, either including the PDE term or omitting it to obtain a standard BNN.

- Use Automatic Differentiation Variational Inference (ADVI) to approximate initial points for the parameters. ADVI is a type of variational inference aimed at approximating the true posterior distribution by a simpler, parameterized distribution, achieved by minimizing the Kullback–Leibler divergence between the two distributions.

- Sample from the posterior distribution using NUTS, starting from the ADVI initial points and using the hyperparameters defined in Step 8 of the PI-BLR Algorithm.

- Evaluate the trained model to determine its reliability.
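A NumPy sketch of the B-PINN log joint density, under stated assumptions: both weights and biases are written as `c` times standard-Normal raw parameters, the hidden layers use tanh, the likelihood is Normal with a Half-Normal prior on the noise standard deviation, and an optional `pde_residual` callable supplies the physics term (omit it to recover a plain BNN). The values of `c` and `noise_scale` in the usage are illustrative only, not the paper's settings, and all names are hypothetical. ADVI and NUTS would additionally need the gradient of this density, which in practice comes from an automatic-differentiation or probabilistic-programming library.

```python
import numpy as np


def normal_logpdf(x, mean, sd):
    return -0.5 * np.log(2.0 * np.pi * sd ** 2) - (x - mean) ** 2 / (2.0 * sd ** 2)


def half_normal_logpdf(s, scale):
    """Log density of HalfNormal(scale) for s > 0: twice the Normal(0, scale) density."""
    return np.log(2.0) + normal_logpdf(s, 0.0, scale)


def init_raw(layer_sizes, rng):
    """Raw standard-Normal weights and biases for each layer (the non-centered parameters)."""
    return [(rng.standard_normal((m, n)), rng.standard_normal(n))
            for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]


def bnn_forward(raw, X, c):
    """Effective weights are c * raw; tanh on hidden layers, linear output layer for regression."""
    h = X
    for l, (z_w, z_b) in enumerate(raw):
        h = h @ (c * z_w) + c * z_b
        if l < len(raw) - 1:
            h = np.tanh(h)
    return h


def log_joint(raw, sigma, X, Y, c, noise_scale, pde_residual=None, lam=1.0):
    """Unnormalized log posterior; pass pde_residual=None to get a plain BNN."""
    if sigma <= 0.0:
        return -np.inf
    lp = sum(np.sum(normal_logpdf(z, 0.0, 1.0)) for layer in raw for z in layer)
    lp += half_normal_logpdf(sigma, noise_scale)
    lp += np.sum(normal_logpdf(Y, bnn_forward(raw, X, c), sigma))
    if pde_residual is not None:
        lp += -0.5 * lam * np.sum(pde_residual(raw, X) ** 2)  # Normal potential on residuals
    return lp


rng = np.random.default_rng(0)
raw = init_raw([2, 16, 16, 1], rng)
X = rng.uniform(-1.0, 1.0, (40, 2))
Y = np.sin(X[:, :1])
lp_bnn = log_joint(raw, 0.1, X, Y, c=0.5, noise_scale=1.0)
```

Sampling the raw standard-Normal parameters instead of the scaled weights is the point of the non-centered form: the sampler sees a well-conditioned geometry, and the scaling by `c` is applied deterministically inside the forward pass.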

References

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Bararnia, H.; Esmaeilpour, M. On the application of physics informed neural networks (PINN) to solve boundary layer thermal-fluid problems. Int. Commun. Heat Mass Transf. 2022, 132, 105890.

- Hu, H.; Qi, L.; Chao, X. Physics-informed neural networks (PINN) for computational solid mechanics: Numerical frameworks and applications. Thin-Walled Struct. 2024, 205, 112495.

- Wang, D.; Jiang, X.; Song, Y.; Fu, M.; Zhang, Z.; Chen, X.; Zhang, M. Applications of physics-informed neural network for optical fiber communications. IEEE Commun. Mag. 2022, 60, 32–37.

- Mohammad-Djafari, A.; Chu, N.; Niven, R.K. Physics informed neural networks for inverse problems and dynamical system identification. In Proceedings of the 24th Australasian Fluid Mechanics Conference, Canberra, Australia, 1–5 December 2024.

- Liang, G.; Tiwari, P.; Nowaczyk, S.; Byttner, S. Higher-order spatio-temporal physics-incorporated graph neural network for multivariate time series imputation. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, Boise, ID, USA, 21–25 October 2024; pp. 1356–1366.

- Nayek, R.; Fuentes, R.; Worden, K.; Cross, E.J. On spike-and-slab priors for Bayesian equation discovery of nonlinear dynamical systems via sparse linear regression. Mech. Syst. Signal Process. 2021, 161, 107986.

- Tang, J.; Fu, C.; Mi, C.; Liu, H. An interval sequential linear programming for nonlinear robust optimization problems. Appl. Math. Model. 2022, 107, 256–274.

- Tang, J.; Li, X.; Fu, C.; Liu, H.; Cao, L.; Mi, C.; Yu, J.; Yao, Q. A possibility-based solution framework for interval uncertainty-based design optimization. Appl. Math. Model. 2024, 125, 649–667.

- Niven, R.K.; Cordier, L.; Mohammad-Djafari, A.; Abel, M.; Quade, M. Dynamical system identification, model selection, and model uncertainty quantification by bayesian inference. Chaos Interdiscip. J. Nonlinear Sci. 2024, 34, 083140.

- Zwillinger, D.; Dobrushkin, V. Handbook of Differential Equations; Chapman and Hall/CRC: Boston, MA, USA, 2021.

- Peitz, S.; Stenner, J.; Chidananda, V.; Wallscheid, O.; Brunton, S.L.; Taira, K. Distributed control of partial differential equations using convolutional reinforcement learning. Phys. D Nonlinear Phenom. 2024, 461, 134096.

- Brunton, S.L.; Proctor, J.L.; Kutz, J.N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. USA 2016, 113, 3932–3937.

- Fasel, U.; Kutz, J.N.; Brunton, B.W.; Brunton, S.L. Ensemble-SINDy: Robust sparse model discovery in the low-data, high-noise limit, with active learning and control. Proc. R. Soc. A 2022, 478, 20210904.

- Schmid, A.C.; Doostan, A.; Pourahmadian, F. Ensemble WSINDy for data driven discovery of governing equations from laser-based full-field measurements. arXiv 2024, arXiv:2409.20510.

- Tibshirani, R. Regression shrinkage and selection via the LASSO. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996, 58, 267–288.

- Zhan, Y.; Guo, Z.; Yan, B.; Chen, K.; Chang, Z.; Babovic, V.; Zheng, C. Physics-informed identification of PDEs with LASSO regression, examples of groundwater-related equations. J. Hydrol. 2024, 638, 131504.

- Ma, M.; Fu, L.; Guo, X.; Zhai, Z. Incorporating LASSO regression to physics-informed neural network for inverse PDE problem. CMES-Comput. Model. Eng. Sci. 2024, 141, 385–399.

- Park, T.; Casella, G. The Bayesian LASSO. J. Am. Stat. Assoc. 2008, 103, 681–686.

- Chen, J.; Guo, Z.; Zhang, L.; Pan, J. A partially confirmatory approach to scale development with the Bayesian LASSO. Psychol. Methods 2021, 26, 210.

- Changdar, S.; Bhaumik, B.; Sadhukhan, N.; Pandey, S.; Mukhopadhyay, S.; De, S.; Bakalis, S. Integrating symbolic regression with physics-informed neural networks for simulating nonlinear wave dynamics in arterial blood flow. Phys. Fluids 2024, 36, 121924.

- Liu, Y.Y.; Shen, J.X.; Yang, P.P.; Yang, X.W. A CNN-PINN-DRL driven method for shape optimization of airfoils. Eng. Appl. Comput. Fluid Mech. 2025, 19, 2445144.

- Perumal, V.; Abueidda, D.; Koric, S.; Kontsos, A. Temporal convolutional networks for data-driven thermal modeling of directed energy deposition. J. Manuf. Process. 2023, 85, 405–416.

- Fraza, C.J.; Dinga, R.; Beckmann, C.F.; Marquand, A.F. Warped Bayesian linear regression for normative modelling of big data. NeuroImage 2021, 245, 118715.

- Gholipourshahraki, T.; Bai, Z.; Shrestha, M.; Hjelholt, A.; Hu, S.; Kjolby, M.; Rohde, P.D.; Sørensen, P. Evaluation of Bayesian linear regression models for gene set prioritization in complex diseases. PLoS Genet. 2024, 20, e1011463.

- Yang, L.; Meng, X.; Karniadakis, G.E. B-PINNs: Bayesian physics-informed neural networks for forward and inverse PDE problems with noisy data. J. Comput. Phys. 2021, 425, 109913.

- Mohammad-Djafari, A. Bayesian physics informed neural networks for linear inverse problems. arXiv 2025, arXiv:2502.13827.

- Repository, U.M.L. Household Electric Power Consumption. 2020. Available online: https://www.kaggle.com/datasets/uciml/electric-power-consumption-data-set (accessed on 3 March 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).