Abstract

Short informal texts are increasingly prevalent in modern communication and often contain fragmented grammar, personal opinions, and limited context. Traditional NLP tasks on such texts mostly target their subjective aspects, as in sentiment analysis and polarity classification. Learning objectivity from these texts is equally important and can benefit many real-world applications, including information filtering and content verification. Unfortunately, this problem remains largely unexplored. This paper proposes a novel task that aims to identify objectivity in short informal texts. Motivated by the observation that objective statements normally need complete syntactic structures to express and deliver knowledge, we leverage the viewpoint of subjects (U), the tense of predicates (V), and the viewpoint of objects (O) as critical factors for objectivity learning. On this basis, we propose a two-stage objectivity identification approach: (1) a UVO quantification module, implemented via a proposed triple-feature quantification procedure based on OpenIE and large language models (LLMs); and (2) an objectivity identification module that employs pre-trained base models such as BERT or RoBERTa, constrained by the quantified UVO. The experimental results demonstrate that our approach outperforms the base models in both objective-F1 and accuracy.

1. Introduction

The proliferation of online instant communication platforms, such as TikTok, has led to the emergence of large volumes of informal short texts [1]. These texts are often found in social media posts, instant messaging, and online comments, and are characterized by informal language and brevity [2]. Unlike well-organized formal documents, informal short texts often contain slang [3], abbreviations [4], emoticons [5], non-standard grammar [6], and ambiguous language [7], which can hinder accurate information extraction and comprehension [8]. For instance, during the COVID-19 pandemic, tweets like “COVID vaccines alter DNA” spread rapidly as pseudo-factual claims, exploiting informal syntax to evade detection by traditional fact-checking tools [9]. Consequently, it has become a considerable challenge for readers to identify, within such texts, the objective content that carries reliable messages and factual statements [10,11]. Subjective informal short texts are often laden with personal emotions, opinions, and abbreviations and may exhibit incomplete sentence structures, which makes it difficult to identify the relationships within a relational triple (i.e., subject, predicate, and object) under an uncertain context [12]. In contrast, objective informal short texts, although used in informal scenarios, are rooted in factual information and provide accurate, clear communication for expressing and conveying knowledge. Differentiating objective informal short texts from subjective ones is therefore arduous, especially in domains where misinformation or inappropriate content can spread rapidly [13].

Objectivity detection in informal texts intersects with, but critically diverges from, established NLP tasks such as sentiment analysis [14], stance detection [15], fact verification [16], and opinion mining [17]. Sentiment analysis aims to identify the polarity of texts as positive, negative, or neutral; stance detection classifies support for or opposition to a target; fact verification validates claims against external evidence; and opinion mining extracts subjective expressions from statements. Objectivity detection, by contrast, distinguishes factual claims from non-factual content by evaluating whether statements are grounded in verifiable facts, using syntactic markers (e.g., third-person subjects, definitive tenses) as internal indicators of factuality. Informal texts pose a unique challenge here because opinions and factual phrasing are intricately blended, so deeper structural analysis is needed to disentangle objective assertions from subjective framing. One of the primary challenges in identifying objectivity from informal short texts is their lexical sparsity: the limited number of words in each text hinders the application of traditional frequency-based or context-dependent models [18,19,20]. These models struggle to capture the subtle linguistic cues that indicate objectivity, particularly in informal short texts [21,22,23,24]. Thus, there is an urgent need to explore more effective strategies for objectivity identification in these texts, thereby unlocking their potential for automating information processing tasks such as fact-checking [25,26,27], information filtering [28,29], and content verification [30,31].

Traditional tasks built on relational triples primarily aim to structure information from texts for further analysis and knowledge representation, in support of information extraction [32], knowledge graph construction [33,34], semantic understanding [35], etc. This suggests that relational triples can support a nuanced approach to understanding brief and fragmented statements, especially informal short texts. Specifically, relational triples help deconstruct these fragmented statements into their core components, which allows for a finer-grained understanding of the underlying objectivity in a statement [36]. Moreover, the latent features of relational triples, such as viewpoints and tenses, are especially valuable because they reveal the essential elements needed to determine whether a statement is objective or subjective [37]. For example, first-person pronouns (e.g., “I believe”, “We think”) typically signal subjective opinions, while impersonal subjects (e.g., “Research shows”, “Statistics indicate”) suggest an objective stance. Furthermore, tense is not merely a grammatical marker; in pragmatics, it functions as a tool to frame information in terms of relevance and reliability. Therefore, effective feature representation for relational triples holds significant potential for identifying objectivity in informal short texts.

To explore the effectiveness of the triple features in identifying objectivity, this paper proposes a novel approach that integrates open information extraction (OpenIE) techniques coupled with LLMs aiming to extract these features from informal short texts. Specifically, we extract and quantify the viewpoint of subjects, the tense of predicates, and the viewpoint of objects (UVO) triple features from these texts to provide a structural foundation that can enhance the analysis and comprehension of the texts. This approach prevents the LLM from unintentionally altering the original text’s meaning, thereby preserving the integrity of the input while improving the model’s performance in identifying objective information. To leverage these features, we embed them into various fine-tunable pre-trained language models, such as RoBERTa and BERT, to verify the effectiveness of the features in detecting objective content within informal short texts.

In summary, this study proposes a novel method for detecting objectivity in informal short texts, which alleviates the inherent challenges posed by the informal and sparse nature of these texts. The contributions of this study are summarized as follows.

- This work formalizes objectivity detection as a distinct task tailored to the challenges of informal language. We also systematically analyze textual objectivity on platforms such as social media and instant messaging.

- We propose a rule-based UVO (subject–predicate–object) quantification framework, which integrates syntactic analysis and POS-tagging-based tense detection with large-language-model-augmented triple extraction.

- Our two-stage framework combines OpenIE, LLMs, and fine-tuned pre-trained encoders. We mitigate the hallucination risks inherent in LLMs by decoupling interpretable triple extraction, performed via rule-based methods and LLM voting, from neural classification, while preserving input integrity.

- We rigorously evaluate our method across three benchmark datasets and achieve up to 6.97% accuracy improvements over baseline models. The insights revealed in our work provide actionable guidelines for domain-specific adaptation of objectivity detection systems.

2. Related Works

2.1. Early Objectivity Identification

Objectivity identification can be treated as a derivative of subjectivity learning, which is also known as affective computing or sentiment analysis. Unlike subjectivity learning, the key to objectivity identification lies in accurately locating fact-based statements, which is crucial for applications with a low tolerance for errors. Early research on subjectivity learning primarily focused on lexical or syntax-based methods. In one of the foundational works on subjectivity classification [38], an annotation framework was proposed to classify sentences as subjective or objective with the assistance of multiple annotators. These efforts ensured consistency in text annotation and laid solid groundwork for future research. Building on this, Riloff and Wiebe [39] proposed a more systematic learning framework that automatically extracted subjective expressions from texts, which highlighted the complementary nature of objectivity identification.

As research advanced, objective identification moved beyond simple lexical features to incorporate broader contextual and grammatical information. Afterward, Wiebe et al. [40] introduced a hybrid approach that combined rule-based and statistical methods. This approach analyzed sentence structure, verb types, and noun specificity to more accurately distinguish objective statements from subjective ones, which provided valuable insights for identifying objectivity.

2.2. Objectivity Identification via Artificial Intelligence

With the development of deep learning and machine learning, subjectivity learning evolved into more complex supervised learning models, significantly improving objective identification. For example, Hu and Liu [41] employed a support vector machine (SVM) to classify product reviews as subjective or objective, which effectively vectorizes text features to distinguish opinions from factual descriptions via SVM. Liu et al. [42] used decision trees to build a hierarchical structure for subjective and objective classification, but this method struggled with capturing objective features in complex linguistic patterns. The neural networks, such as CNNs, RNNs, and LSTM also demonstrate excellent performance in objective classification by automatically learning and extracting features from texts. For example, Zhang et al. [43] highlighted the effectiveness of LSTM in capturing logical relationships within long texts, which is beneficial for objective identification in sentences. Additionally, Hu et al. [44] leveraged RNNs to capture contextual information and sequence modeling in text classification, which particularly emphasizes the advantages of RNN in handling sequential data.

Since the rise of Transformer architecture [45], the pre-trained language models, such as BERT [46], RoBERTa [47], T5 [48], BART [49], and especially the series of GPT [50,51,52,53,54], have opened new possibilities for objectivity identification. Qasim et al. [55] demonstrated that fine-tuned BERT models perform exceptionally well in sentiment analysis and other text classification tasks, showing potential for their application in subjectivity classification. These models excel at understanding textual context, which significantly enhances classification accuracy. Larger language models, including GPT-4 [54] and LLaMA [56], have also been applied to text classification tasks. Sun et al. [57] explored the effectiveness of large language models in text classification, showcasing their superiority in capturing context and understanding semantics.

2.3. Objectivity in Informal Short Texts

Despite the success of these advanced models, objectivity identification in short texts presents unique challenges because such texts increasingly incorporate informal and non-standard language [58]. Specifically, informal texts often lack sufficient contextual information and may contain ambiguous content, which obscures the traditional subject–predicate–object relationships that underpin many text classification models [59]. This has prompted researchers to explore new ways to address the challenges posed by “colloquialism”. Recent advances have leveraged pre-trained language models to handle informal texts, since they can contextualize sparse and unstructured text to infer the intent and factuality behind statements [60,61,62]. However, generative models struggle with short informal texts because they tend to “fill in the gaps” by completing incomplete statements, which may alter meanings and reduce precision in identifying objectivity. Thus, adopting LLMs for objectivity identification in short informal texts remains a critical challenge.

In summary, detecting objectivity in informal short texts remains a challenging task due to their informal nature [63,64] and lexical sparsity. Traditional feature engineering methods [65] have proven inadequate, and while deep learning and pre-trained models have improved performance, they still encounter significant limitations. Ongoing research continues to explore hybrid methods that combine advanced neural networks with syntactic and semantic extraction techniques to better capture the nuances of informal language and improve objectivity detection in short texts [66]. One possible solution for objectivity detection in short informal texts is to investigate the latent information implied in relational triples in light of the nature of objectivity in text. This study investigates the effectiveness of three latent features of relational triples for this task: the viewpoints of subjects, the tense of predicates, and the viewpoints of objects. The details of our methodology are presented in the following two sections.

3. Feature Extraction and Quantification

In this section, we describe the systematic procedure for characterizing objective statements at the sentence level. According to the event expression concept proposed by Zhang et al. [67], a sentence ($s$) can be represented by an event set that contains one or more events (i.e., $E = \{e_1, e_2, \ldots, e_m\}$), where each event is a triple consisting of a subject, a predicate, and an object ($e = (u, v, o)$). Thus, we select the subjects ($u$), predicates ($v$), and objects ($o$) of sentences, in the form of raw elements, to represent sentence-level documents. We subsequently apply the viewpoint of a subject, the tense of a predicate, and the viewpoint of an object as fundamental features to investigate the efficacy of these three parameters in identifying objective cues in short texts. Notably, while our framework processes emojis as text tokens, we acknowledge that visual elements like emojis or images in informal texts may carry additional contextual or tonal cues critical for objectivity determination. Future extensions of this work will explore multimodal integration (e.g., emoji semantic embeddings or image captioning) to address this limitation.

3.1. Implementation Overview

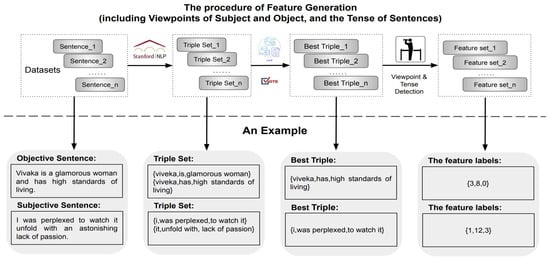

A complex sentence may include multiple triples, which poses a greater challenge in terms of analysis. Moreover, it is crucial to select the most suitable triple from the triple candidates to represent the sentence for accurate semantic analysis. To this end, we apply a sequence of advanced NLP techniques for the best triple selection and the triple-feature quantification, as shown in Figure 1.

Figure 1.

The procedure of UVO feature extraction (including viewpoints of subject and object and the tense of predicate in a short text) is demonstrated using an example.

Specifically, we first extract triples from a sentence using Stanford OpenIE. In the example shown in Figure 1, two triples, {vivaka, is, glamorous woman} and {vivaka, has, high standards of living}, are obtained from the complex objective sentence {’Vivaka is a glamorous woman and has high standards of living’}. Next, we leverage six LLMs, namely the open-source Llama 3 70B (https://ollama.com/library/llama3:70b, accessed on 26 April 2025), Mistral 7B (https://huggingface.co/mistralai/Mistral-7B-v0.1, accessed on 26 April 2025), WizardLM 2 7B (https://huggingface.co/dreamgen/WizardLM-2-7B, accessed on 26 April 2025), Phi-3 14B (https://huggingface.co/microsoft/Phi-3-medium-128k-instruct, accessed on 26 April 2025), Gemma 2 9B (https://huggingface.co/google/gemma-2-9b, accessed on 26 April 2025), and Zhipu AI (https://www.zhipuai.cn/, accessed on 26 April 2025), to select the most representative triple from the set of OpenIE-extracted candidates through a simple voting mechanism. During this selection, the LLMs prioritize triples where the predicate’s tense aligns with temporal cues identified in the sentence (e.g., flagging mismatches like “Back in the day, I go there daily” for reanalysis). The selected triple is the one that best captures the main structure and semantics of the original sentence. These models collectively span a diverse range of capabilities, from instruction tuning to general-purpose dialogue, thereby offering multi-perspective judgment for triple selection. The prompt used to select the best triple from the triple set is shown in Table 1; it takes the original sentence and the generated triple set as inputs, coupled with a goal and three constraints. Then, we identify the viewpoints of the subject and object and the tense of the predicate in the best triple using our rule-based methods. Finally, the viewpoints of the subject and object and the tense of the predicate are used to facilitate the identification of objective statements.
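The voting step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `select_best_triple`, the vote encoding (each LLM returns the index of its preferred candidate), the tie-breaking rule, and the fallback to the first candidate are all hypothetical and are not taken from the prompt in Table 1.

```python
from collections import Counter
from typing import Sequence, Tuple

Triple = Tuple[str, str, str]


def select_best_triple(candidates: Sequence[Triple], votes: Sequence[int]) -> Triple:
    """Majority vote over candidate indices; ties go to the lowest index (assumption)."""
    if not candidates:
        raise ValueError("no candidate triples to choose from")
    # Ignore malformed votes that do not point at an existing candidate.
    valid = [v for v in votes if 0 <= v < len(candidates)]
    if not valid:
        # Assumption: fall back to the first OpenIE triple when no vote is usable.
        return candidates[0]
    counts = Counter(valid)
    best_index = min(counts, key=lambda i: (-counts[i], i))
    return candidates[best_index]


candidates = [
    ("vivaka", "is", "glamorous woman"),
    ("vivaka", "has", "high standards of living"),
]
votes = [0, 0, 1, 0, 1, 0]  # hypothetical answers from the six LLMs
best = select_best_triple(candidates, votes)
```

A deterministic tie-break keeps the selection reproducible when the six models split evenly.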

Table 1.

The prompt used in LLMs for best triple selection.

3.2. The Detection of Viewpoints and Tense

To reveal the usability of the viewpoints of the subject and object and the tense of the predicate in discovering objective short informal texts, we design a sequence of rule-based methods to quantify these latent features.

To cover all possible subject- and object-related cases of sentences, we introduce a set, $P = \{0, 1, 2, 3, 4\}$, where first-person viewpoints, second-person viewpoints, third-person viewpoints, and the remaining cases using non-pronoun named entities (including slang terms like “doggo”) are represented as 1, 2, 3, and 4, respectively. Value 0 in set $P$ indicates that no subject or object is identified in the input sentence because of ellipsis. Sentence $s$ is expressed by the best triple $t = (u, v, o)$, where $u$ denotes a subject, $v$ represents a predicate, and $o$ is an object. Function $\mathrm{VP}(\cdot)$ returns the viewpoint of the subject, $p_u = \mathrm{VP}(u)$, and the viewpoint of the object, $p_o = \mathrm{VP}(o)$. Both $p_u$ and $p_o$ must belong to the value set $P$. More formally, we have

$$
\mathrm{VP}(x) =
\begin{cases}
1, & x \in W_1, \\
2, & x \in W_2, \\
3, & x \in W_3, \\
4, & x \text{ is a non-pronoun entity identified by } \mathrm{NER}(x), \\
0, & x = \varnothing,
\end{cases}
\qquad x \in \{u, o\},
$$

where $W_1$, $W_2$, and $W_3$ are manually collected word vocabularies that comprise all pronouns in the first-person, second-person, and third-person viewpoints, respectively. $\mathrm{NER}(\cdot)$ refers to an existing named entity recognition method. If no subject (or object) of sentence $s$ is discovered, then $p_u$ (or $p_o$) is tentatively set to 0. When sentence $s$ contains a subject (an object), the value of $p_u$ ($p_o$) is set to 1, 2, 3, or 4.
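As a rough illustration of the viewpoint rule just described, the sketch below maps a subject or object phrase to a value in {0, 1, 2, 3, 4}. The pronoun lists are our own small stand-ins for the manually collected vocabularies, and the named entity recognition step is replaced by a catch-all (any non-empty, non-pronoun phrase is labeled 4); both are assumptions made for brevity.

```python
from typing import Optional

# Hypothetical stand-ins for the manually collected pronoun vocabularies.
FIRST_PERSON = {"i", "me", "my", "mine", "myself", "we", "us", "our", "ours", "ourselves"}
SECOND_PERSON = {"you", "your", "yours", "yourself", "yourselves"}
THIRD_PERSON = {
    "he", "him", "his", "himself", "she", "her", "hers", "herself",
    "it", "its", "itself", "they", "them", "their", "theirs", "themselves",
}


def viewpoint(phrase: Optional[str]) -> int:
    """Quantify the viewpoint of a subject or object phrase."""
    if phrase is None or not phrase.strip():
        return 0  # ellipsis: no subject/object was extracted
    word = phrase.strip().lower()
    if word in FIRST_PERSON:
        return 1
    if word in SECOND_PERSON:
        return 2
    if word in THIRD_PERSON:
        return 3
    # Assumption: everything else is treated as a non-pronoun entity,
    # standing in for the NER check in the text.
    return 4


subject_view = viewpoint("vivaka")           # non-pronoun entity
object_view = viewpoint("glamorous woman")   # non-pronoun entity
```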

3.3. Tense Detection

To quantify the tense of the predicate in a sentence, we extend the three basic tenses (‘future’, ‘present’, and ‘past’) into twelve tenses using three aspect parameters: ‘Perfect’, ‘Continuous’, and ‘Perfect Continuous’. Each basic tense thus has a simple form plus three aspect variants. These twelve tenses cover all the sentence cases summarized in Table 2, together with the corresponding POS tagging schemes.

Table 2.

All twelve tenses accompanied with corresponding POS tagging schemes and labels.

Let $Q = \{0, 1, \ldots, 12\}$ denote the set of tense labels, which contains future perfect continuous tense (i.e., 1), future perfect tense (i.e., 2), future continuous tense (i.e., 3), future simple tense (i.e., 4), present perfect continuous tense (i.e., 5), present perfect tense (i.e., 6), present continuous tense (i.e., 7), present simple tense (i.e., 8), past perfect continuous tense (i.e., 9), past perfect tense (i.e., 10), past continuous tense (i.e., 11), past simple tense (i.e., 12), and undetected tense (i.e., 0). We devise function $\mathrm{TS}(\cdot)$, which returns the tense $q$ of the predicate $v$ of the best triple (i.e., $q = \mathrm{TS}(v)$). To address temporal cues expressed lexically (e.g., “back in the day”), we supplement the POS-based tense detection with a rule-based filter that maps common temporal adverbs/phrases (e.g., “yesterday”, “currently”) to their implied tenses using a predefined lexicon derived from the TempEval-3 corpus [68]. For instance, the phrase “back in the day” triggers a past-tense override regardless of verb form. Intuitively, the value of $q$ is an element of set $Q$. More specifically, we have

$$
q = \mathrm{TS}(v) =
\begin{cases}
i, & \text{if } \mathrm{POS}(v) \text{ matches the } i\text{-th scheme in Table 2},\ i \in \{1, \ldots, 12\}, \\
0, & \text{otherwise},
\end{cases}
$$

where $\mathrm{POS}(\cdot)$ is a rule-based POS tagging detector used to discriminate the tense of the predicate $v$, as demonstrated in Table 2. In more detail, we employ the CoreNLP POS tagger [69], which is part of the Stanford CoreNLP toolkit, to identify the part of speech of words. If predicate $v$ matches one of the schemes, its tense is returned according to the index of the matching scheme in the scheme list; otherwise, $q$ is set to 0, meaning that no tense is detected. To handle non-standard or dialectal verb forms (e.g., “ain’t”), we rely on lexical normalization within the POS tagging phase and apply fallback rules leveraging temporal adverbs (e.g., “yesterday”, “currently”) for tense inference. For slang predicates (e.g., “yeet”), the LLM-aided triple selection (Section 3.1) ensures contextual role inference even if POS tagging mislabels tense.
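To make the tense labeling concrete, here is a minimal sketch that maps a POS-tagged verb group to one of the labels 0 to 12 listed above. It assumes Penn Treebank tags as input (already produced by some tagger) and uses simplified matching rules of our own, since the exact schemes of Table 2 are not reproduced here. The override lexicon contains only the three phrases mentioned in the text, and keeping the aspect while overriding the time is likewise our assumption.

```python
from typing import List, Tuple

BE_FORMS = {"be", "been", "being", "am", "is", "are", "was", "were"}
HAVE_FORMS = {"have", "has", "had"}
FUTURE_MODALS = {"will", "shall", "'ll"}
# Only the phrases named in the text; a real lexicon would be much larger.
TEMPORAL_OVERRIDES = {"back in the day": "past", "yesterday": "past", "currently": "present"}
BASE = {"future": 0, "present": 4, "past": 8}  # label = BASE[time] + aspect offset


def detect_tense(tagged: List[Tuple[str, str]], sentence: str = "") -> int:
    """Return a tense label in 0..12 for a POS-tagged verb group (simplified rules)."""
    words = [w.lower() for w, _ in tagged]
    tags = [t for _, t in tagged]
    if any(t == "MD" and w in FUTURE_MODALS for w, t in zip(words, tags)):
        time = "future"
    else:
        finite = next((t for t in tags if t in {"VBD", "VBP", "VBZ"}), None)
        if finite is None:
            return 0  # undetected tense
        time = "past" if finite == "VBD" else "present"
    # Lexical temporal cues override the time implied by the verb form.
    lowered = sentence.lower()
    for phrase, implied in TEMPORAL_OVERRIDES.items():
        if phrase in lowered:
            time = implied
            break
    perfect = "VBN" in tags and any(w in HAVE_FORMS for w in words)
    continuous = "VBG" in tags and any(w in BE_FORMS for w in words)
    # Offsets: 1 perfect continuous, 2 perfect, 3 continuous, 4 simple.
    offset = 1 if perfect and continuous else 2 if perfect else 3 if continuous else 4
    return BASE[time] + offset


label = detect_tense([("go", "VBP")], "Back in the day, I go there daily")
```

In the last call the verb form alone would yield present simple, but the phrase "back in the day" switches the time to past.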

4. System Framework

To verify the effectiveness of the above features extracted from the data processing procedure (the viewpoints of subject and object, the tense of predicate) on objectivity identification, we deploy a local short-text learning system using text encoders aiming at objectivity detection. Specifically, the system framework embraces a text representation module, a triple-feature learning module, and a classification module.

4.1. Text Representation

Given a randomly selected sentence $s$, the true label $y$, and the corresponding generated triple features, including the viewpoint of the subject $p_u$, the tense of the predicate $q$, and the viewpoint of the object $p_o$, we initialize three pre-trained encoders, namely a subject encoder, a predicate encoder, and an object encoder, for feature representation. More specifically, the subject encoder first encodes $s$ as a sequential representation $H_u = [h_1, \ldots, h_n]$ ($n$ is the length of sentence $s$) and then acquires the global representation of the subject’s viewpoint, $g_u$, via an attention block; the predicate encoder and the object encoder work in the same way. Thus, we have

$$
H_k = \mathrm{Enc}_k(s), \qquad g_k = \mathrm{Attention}(H_k), \qquad k \in \{u, v, o\},
$$

where $\mathrm{Enc}_k$ is a specific text encoder (subject encoder $\mathrm{Enc}_u$, predicate encoder $\mathrm{Enc}_v$, or object encoder $\mathrm{Enc}_o$) used for obtaining the sequential representation of the text, $H_k$. It is worth noting that the three encoders are trainable through fine-tuning under two constraints: the triple features ($p_u$, $q$, or $p_o$) extracted by the UVO feature generation procedure and the original true label $y$ of text $s$.
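The step from a sequential representation to a single global vector can be illustrated with a small attention-pooling sketch. The text does not specify the internals of the attention block, so the dot-product scoring against a learned query vector used here is an assumption, and `attention_pool` and `query` are hypothetical names. Plain Python lists stand in for tensors to keep the example dependency-free.

```python
import math
from typing import List


def attention_pool(hidden: List[List[float]], query: List[float]) -> List[float]:
    """Collapse n token vectors into one vector using softmax attention weights."""
    # One relevance score per token: dot product with the (learned) query vector.
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden]
    # Numerically stable softmax over the token scores.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the token vectors gives the global representation.
    dim = len(hidden[0])
    return [sum(w * h[d] for w, h in zip(weights, hidden)) for d in range(dim)]


tokens = [[1.0, 0.0], [3.0, 2.0]]            # toy sequential representation (n = 2)
uniform = attention_pool(tokens, [0.0, 0.0])  # zero query: plain average of tokens
focused = attention_pool(tokens, [10.0, 0.0])  # query that strongly favors token 2
```

With a zero query every token receives the same weight, so the pooled vector is the mean; a trained query shifts the weight toward the tokens that matter for the feature being learned.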

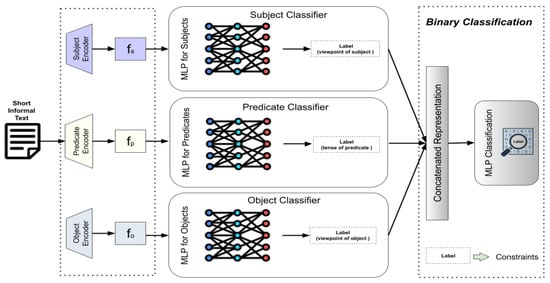

4.2. Triple-Feature Learning

The triple-feature learning module is designed to assist in identifying objective statements. We leverage the three features extracted in the feature generation procedure, including (1) the viewpoint of the subject $p_u$, (2) the tense of the predicate $q$, and (3) the viewpoint of the object $p_o$, to train three locally deployed MLPs. As demonstrated in Figure 2, one MLP is deployed to learn each of the three features. Moreover, the MLPs take the global representations of the text as input and utilize the value of each feature as the true label for parameter training. The loss function for learning a feature (i.e., the viewpoint of the subject $p_u$) is presented as follows:

$$
\mathcal{L}_{u} = \ell\big(\mathrm{MLP}_{u}(g_{u}),\ \mathrm{onehot}(p_{u})\big),
$$

where $g_u$ is the global representation calculated by feeding the sequential representation $H_u$ into the attention block, and $\ell(\cdot,\cdot)$ denotes the classification loss. It is noteworthy that the value of $p_u$ is transformed with one-hot encoding. Moreover, the MLPs’ losses for learning the predicate tense and the object’s viewpoint are denoted as $\mathcal{L}_{v}$ and $\mathcal{L}_{o}$.

Figure 2.

The system framework of objectivity identification. The labels used in three classifiers are generated from the procedure of UVO feature generation (including viewpoints of subject and object and the tense of predicate).

4.3. Binary Classification

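Once the forward inference of the three MLPs is completed, the representations of the last hidden layers of the MLPs are concatenated as the final representation of the input sentence. Formally, the final representation of sentence $s$ is $f = [z_u; z_v; z_o]$, where $z_u$, $z_v$, and $z_o$ are the last-hidden-layer representations of the subject, predicate, and object MLPs. Finally, we feed $f$ into another MLP to predict the true label of the sentence, $y$. Thus, the learning objective of the last MLP is denoted as the following loss function.

$$
\mathcal{L}_{y} = \ell\big(\mathrm{MLP}_{y}(f),\ y\big),
$$

where $f$ is the final representation of sentence $s$, which goes through an MLP to match the dimensionality of the true label for loss calculation, and $\ell(\cdot,\cdot)$ denotes the classification loss. Thus, the total loss of the entire model is as follows.

$$
\mathcal{L} = \mathcal{L}_{y} + \mathcal{L}_{u} + \mathcal{L}_{v} + \mathcal{L}_{o},
$$

where $\mathcal{L}_{u}$, $\mathcal{L}_{v}$, and $\mathcal{L}_{o}$ are the three feature-learning losses of Section 4.2.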
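The way the four learning signals combine can be sketched numerically. The example below assumes cross-entropy for every head and an unweighted sum of the four losses; both choices are assumptions for illustration, since the source does not spell out the loss type or any weighting. The function names and the toy logits are hypothetical.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy of softmax(logits) against a one-hot target class."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[target]


def total_loss(
    logits_y: Sequence[float], y: int,      # objectivity head (2 classes)
    logits_u: Sequence[float], p_u: int,    # subject viewpoint head (5 classes)
    logits_v: Sequence[float], q: int,      # predicate tense head (13 classes)
    logits_o: Sequence[float], p_o: int,    # object viewpoint head (5 classes)
) -> float:
    # Assumption: the four losses are simply added with equal weight.
    return (
        cross_entropy(logits_y, y)
        + cross_entropy(logits_u, p_u)
        + cross_entropy(logits_v, q)
        + cross_entropy(logits_o, p_o)
    )


# Untrained heads with all-zero logits predict uniformly over their classes.
loss = total_loss([0.0] * 2, 1, [0.0] * 5, 4, [0.0] * 13, 8, [0.0] * 5, 4)
```

For uniform predictions each term equals the logarithm of the number of classes, which gives a convenient sanity check for an implementation before training starts.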

5. Experimental Results

In this section, we conduct two groups of experiments by varying the three extracted features, namely, (1) viewpoints of subjects; (2) tenses of predicates; and (3) viewpoints of objects. The first group of experiments evaluates the effectiveness of the three-element variable set ($p_u$, $q$, $p_o$) in improving the performance of base models. In the second group, we evaluate the impact of each of these three parameters in distinguishing objective sentences by constructing three one-element variable sets (i.e., ($p_u$), ($q$), and ($p_o$)).

5.1. Experimental Configurations

Dataset: In this study, we adopt three existing datasets to conduct our experiments: (1) the movie review dataset, which encompasses 5000 movie review snippets from Rottentomatoes (www.rottentomatoes.com, accessed on 26 April 2025) as a subjective data collection and 5000 objective data items from the plot summaries available from the Internet Movie Database (www.imdb.com); (2) the SST dataset (https://nlp.stanford.edu/sentiment/, accessed on 26 April 2025), also known as the Stanford Sentiment Treebank dataset, which is labeled on a five-class scale. Specifically, SST consists of 11,855 single sentences extracted from movie reviews, including 1510 very negative, 3140 negative, 2242 neutral, 3111 positive, and 1852 very positive sentences; (3) the Twitter US Airline Review dataset from Kaggle, which consists of 2349 positive, 3073 neutral, and 9131 negative data points (https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment/data, accessed on 26 April 2025). To adapt the SST and Airline Review datasets to our task, we treat data points labeled as ’Neutral’ as proxies for objective data and all other data points as subjective data, while acknowledging that this approach may conflate non-polar opinions with factual objectivity [13]. For instance, subjective but neutral statements (e.g., “I think the event was average”) could be mislabeled. To assess label fidelity, we conducted a post hoc analysis on 500 randomly selected samples and found that 78% of the ’neutral’ labels aligned with true objectivity (e.g., factual statements like “The meeting starts at 3 PM”).
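The proxy labeling described above amounts to a simple mapping from sentiment labels to binary objectivity labels. The sketch below shows that mapping and computes the resulting share of objective items from the class counts quoted in the text; the function names and the label strings are hypothetical.

```python
from typing import Dict


def to_objectivity_label(sentiment: str) -> int:
    """Map a sentiment label to 1 (objective proxy) or 0 (subjective)."""
    return 1 if sentiment.strip().lower() == "neutral" else 0


def objective_share(label_counts: Dict[str, int]) -> float:
    """Fraction of a dataset that becomes 'objective' under the proxy mapping."""
    total = sum(label_counts.values())
    objective = sum(
        count for label, count in label_counts.items()
        if to_objectivity_label(label) == 1
    )
    return objective / total


# Class counts as reported in the dataset description.
sst_counts = {
    "very negative": 1510, "negative": 3140, "neutral": 2242,
    "positive": 3111, "very positive": 1852,
}
airline_counts = {"positive": 2349, "neutral": 3073, "negative": 9131}

sst_share = objective_share(sst_counts)
airline_share = objective_share(airline_counts)
```

Under this mapping roughly one fifth of each of the two datasets is objective, so the classes are imbalanced, which is one reason to report an F1 score for the objective class alongside accuracy.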

Baselines: We adopt five pre-trained language models as base models and combine each of them with our proposed method to verify its effectiveness.

- BERT, a bidirectional transformer-based model developed by Google, which is pre-trained using masked language modeling (MLM) and next sentence prediction (NSP) tasks. BERT can read an input sequence from two sides to capture deep contextual information, which allows it to be adaptable for our tasks.

- RoBERTa, developed by Facebook AI, is an optimized variant of BERT. It removes the next sentence prediction (NSP) task, uses dynamic masking, and is trained on much larger datasets for more epochs than BERT.

- BART is a transformer model, also developed by Facebook AI, that combines BERT’s bidirectional encoding with GPT’s autoregressive decoding. Its encoder–decoder architecture makes it versatile for both natural language comprehension and generation.

- T5, developed by Google, is a unified framework that can convert NLP tasks into a text-to-text problem. It uses a transformer-based encoder–decoder architecture to handle diverse tasks. Its text-to-text approach simplifies task design and has achieved strong performance across a variety of benchmarks.

- GPT-2, developed by OpenAI, is a large-scale transformer-based language model trained on a massive corpus of internet text in an unsupervised manner. Unlike encoder–decoder frameworks, GPT-2 uses a unidirectional decoder-only architecture, generating text by predicting the next word in a sequence.

Implementation Details: In our experiments, we set up fixed hyper-parameters, including the learning rate in Adam optimizer (i.e., ), dropout rate before the classification layer (i.e., ), and the number of epochs (i.e., 5 times) across all the datasets. In more detail, each dataset is divided as 80% for training and 20% for testing. We adopt various metrics among all the methods to evaluate the performance including training loss (Tr-Loss), training accuracy (Tr-Acc), testing loss (Te-Loss), testing accuracy (Te-Acc), and the F1 score for objective data (Obj-F1), as shown in Table 3 and Table 4. All the results from the tables are the average score of five epochs. Finally, the objective of this study is to evaluate the effectiveness of the extracted UVO features in identifying objectivity from informal texts by taking advantage of fine-tuning three kinds of pretrained language models.
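For reference, the split and the two headline metrics can be written out explicitly. This is a minimal sketch under our own assumptions: a shuffled 80/20 split with a fixed seed (the source does not state how the split is drawn), and label 1 denoting the objective class. The function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_80_20(items: Sequence, seed: int = 0) -> Tuple[List, List]:
    """Shuffle with a fixed seed, then cut into 80% training and 20% testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def objective_f1(y_true: Sequence[int], y_pred: Sequence[int], objective: int = 1) -> float:
    """F1 score computed for the objective class only (Obj-F1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == objective and p == objective for t, p in pairs)
    fp = sum(t != objective and p == objective for t, p in pairs)
    fn = sum(t == objective and p != objective for t, p in pairs)
    if tp == 0:
        return 0.0  # no correct objective prediction: precision/recall are 0 or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


train, test = split_80_20(range(10))
y_true = [1, 1, 0, 0, 1]
y_pred = [1, 0, 0, 1, 1]
acc = accuracy(y_true, y_pred)
obj_f1 = objective_f1(y_true, y_pred)
```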

Table 3.

The performance comparison between encoders-only baselines and the triple-feature-based encoders across the three datasets. The triple features, UVO, represent viewpoints of subjects, tenses of predicates, and viewpoints of objects, respectively. The best values in various metrics have been bolded.

Table 4.

The ablation study we conducted for investigating the role of each of the UVO features in affecting the performance of five pre-trained base models on objectivity detection across the three datasets. The best values in various metrics have been bolded.

5.2. The First Group Experiment

Table 3 presents the performance comparison between the UVO-added base models and the UVO-free baselines on the three datasets. As shown in the table, the UVO-added base models outperform the base models without UVO on every dataset in terms of all the metrics, which demonstrates the effectiveness of the triple features in identifying objective sentences. Specifically, we make the following observations from Table 3.

- Training Efficiency Improvement: On all datasets, the addition of UVO features consistently reduces the training loss and yields better training accuracy across all models, which suggests that these features help the models converge better and capture the structure of the data more effectively. In particular, the BERT + UVO model shows the largest reduction in training loss on the Movie dataset, a decrease of 36.69% compared with the base BERT model. Moreover, the BERT + UVO model shows the largest increase in training accuracy (6.65%) over the corresponding base model on the SST dataset. Notably, even for the GPT2 model, which generally shows higher training loss and lower accuracy than the other models, the addition of UVO features reduces the training loss across all datasets. For example, on the Movie dataset, the training loss decreased from 0.565 to 0.503, while training accuracy improved from 0.684 to 0.731, indicating better convergence due to the structural cues provided by UVO.

- Better Generalization in the Testing Phase: For all the pre-trained base models, the UVO features yield both lower testing loss and higher testing accuracy, which reflects the better generalization that the UVO triple features provide. This improvement indicates that the UVO-added base models are not overfitting to the training data but are learning meaningful patterns that transfer well to the test data. For example, the above-mentioned BERT + UVO model on the Movie dataset, in addition to training effectively, reduces the testing loss by 1.1% and raises the testing accuracy by 0.8%. Notably, the largest reduction in testing loss (16.18%) comes from the UVO-added BERT model on the SST dataset relative to BERT alone, and the same model also produces the largest testing accuracy improvement (4.03%) on that dataset. In addition, the GPT2 models exhibit consistent improvements in generalization: on the SST dataset, the testing loss decreases from 0.538 to 0.460 and the testing accuracy increases from 0.784 to 0.811 with UVO. A similar trend is observed on the Twitter dataset, where the testing accuracy rises from 0.808 to 0.811 and the testing loss is slightly reduced, demonstrating that GPT2 also benefits from UVO in learning transferable patterns.

- Efficient Objectivity Identification: The UVO features consistently improve the Obj-F1 score across all datasets and models, demonstrating that including the triple features helps every base model handle objective information better by focusing on the syntactic roles (viewpoints of subjects, tenses of predicates, and viewpoints of objects). For instance, the T5 + UVO model achieves an Obj-F1 of 0.956 on the Movie dataset and 0.926 on the Twitter dataset. Similarly, GPT2 + UVO improves over the baseline GPT2 in Obj-F1: from 0.727 to 0.735 (+0.8 percentage points) on Movie, from 0.873 to 0.896 (+2.3 percentage points) on SST, and from 0.880 to 0.894 (+1.4 percentage points) on Twitter, reinforcing the conclusion that UVO helps even relatively weaker baselines such as GPT2 capture objectivity better.
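When reading the before/after figures quoted in these observations, it helps to separate an absolute difference in a metric from a relative percentage change, since the two can differ considerably for the same pair of values. The following is a minimal sketch of that arithmetic using only the GPT2 and GPT2 + UVO values quoted above; the function names and the dictionary layout are illustrative assumptions and are not part of the paper's code.

```python
def absolute_change(before: float, after: float) -> float:
    """Difference between the two metric values (after - before)."""
    return after - before


def relative_change_pct(before: float, after: float) -> float:
    """Change expressed as a percentage of the 'before' value."""
    return (after - before) / before * 100.0


# GPT2 -> GPT2 + UVO values as quoted in the text (before, after).
# The dictionary keys are illustrative labels, not names from the paper.
gpt2_reported = {
    "Movie training loss": (0.565, 0.503),
    "Movie training accuracy": (0.684, 0.731),
    "SST testing loss": (0.538, 0.460),
    "SST testing accuracy": (0.784, 0.811),
    "Movie Obj-F1": (0.727, 0.735),
    "SST Obj-F1": (0.873, 0.896),
    "Twitter Obj-F1": (0.880, 0.894),
}

for name, (before, after) in gpt2_reported.items():
    print(
        f"{name}: {before:.3f} -> {after:.3f} | "
        f"absolute {absolute_change(before, after):+.3f} | "
        f"relative {relative_change_pct(before, after):+.2f}%"
    )
```

Reporting both quantities side by side makes it clear which kind of change a quoted percentage refers to.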

5.3. The Ablation Study

To further investigate the impact of each feature in the UVO triple, we conduct two ablation studies: one that enables a single UVO feature at a time and one that disables a single feature at a time. We first examine the effect of each single feature on the performance of the base models. As shown in Table 4, we evaluate each UVO feature on all pre-trained base models across the three datasets. We make the following observations from the table.

- On the Movie dataset, the viewpoint of subjects (U) improves performance more than the other two features, the tense of predicates (V) and the viewpoint of objects (O), on all five metrics and across all the pre-trained base models (e.g., BERT + U achieves the best performance: 0.095 training loss, 0.97 training accuracy, 0.102 testing loss, 0.968 testing accuracy, and 0.966 Obj-F1). This suggests that the viewpoint of objects and the tense of predicates are less influential than the subject’s perspective in movie reviews: they matter, but they are secondary to how the reviewer personally feels and expresses those feelings. Because the subject’s expression of opinion is central to movie reviews, the subject viewpoint lets the model focus on this subjective lens, which is key to improving generalization in objectivity detection.

- On the SST dataset, the tense of predicates (V) gives the base models the best relative performance on all five metrics compared with the viewpoints of subjects and objects. This means that, in the SST dataset, the tense of predicates is a major clue for determining whether the content is a personal opinion or a more neutral statement of fact. Moreover, because SST consists of shorter texts in which other contextual information is sparse, the verbs carry a large share of the signal that helps the model identify objectivity.

- On the Twitter dataset, the pre-trained base models yield relatively unstable performance compared with the results on the other two datasets. However, the viewpoint of subjects (U) still plays the most important role in distinguishing objective texts. Specifically, the differences among the fine-tuned pre-trained models on the Twitter dataset under the U, V, or O constraints reflect their architectural differences and varying pretraining objectives. Models like RoBERTa excel in short-text, informal settings like Twitter due to their fine-tuned contextual embeddings, whereas BART performs better at tasks involving sequence structure (e.g., tense or object viewpoint detection). Understanding these differences between models and datasets is therefore crucial for detecting objectivity in short informal text.

As in the first ablation study, Table 5 reports the effect of each pair of UVO features (implemented by disabling one UVO feature at a time), which reveals that the performance of the base models varies across datasets depending on which feature is disabled. The observations from the second ablation study are as follows.

Table 5.

Ablation study investigating how each pair of UVO features affects the performance of the five pre-trained base models on objectivity detection across the three datasets. The best value for each metric is shown in bold.

- In the Movie dataset, combinations of two features—especially U+V and U+O—tend to slightly improve performance compared to using a single constraint. For example, BERT with U+O achieves 0.098 Tr-Loss, 0.969 Tr-Acc, 0.118 Te-Loss, 0.964 Te-Acc, and 0.966 Obj-F1, nearly matching the best single-feature result (BERT+U). This suggests that while subject viewpoint (U) is dominant, integrating it with either tense (V) or object viewpoint (O) can reinforce semantic grounding. However, the marginal improvement indicates that most of the predictive strength still lies in the subject’s viewpoint, highlighting the expressive nature of movie reviews.

- For SST, the dual-feature combinations V+O and U+V perform slightly better than single features for some models. For instance, RoBERTa with V+O yields a Te-Acc of 0.824 and Obj-F1 of 0.903, higher than U or O alone. This reinforces the earlier observation that verb tense (V) carries the most semantic weight in the SST dataset, and when enhanced with object viewpoint (O), the contextual understanding is deepened. Since SST texts are short and less informative, having both predicate tense and object perspectives can aid in identifying subtle subjectivity markers.

- In Twitter, U+V and U+O combinations generally outperform other pairs or single features. For example, T5 with U+O achieves 0.821 Tr-Acc and 0.915 Obj-F1, higher than V+O. These results imply that in short and informal texts like tweets, integrating subject viewpoint (U) with an additional semantic constraint (V or O) enhances model robustness. However, due to noise and structural variation in tweets, improvements remain dataset- and model-dependent.
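The configurations covered by the two ablation studies (a single feature enabled, or a single feature disabled so that a pair remains) can be enumerated mechanically. The sketch below is a hypothetical illustration of that enumeration only: the function name `ablation_configs`, the `"base"` label, and the boolean-flag representation are assumptions, and how such flags would be consumed during training is not specified here.

```python
from itertools import combinations

# The three triple features: subject viewpoint, predicate tense, object viewpoint.
FEATURES = ("U", "V", "O")


def ablation_configs(features=FEATURES):
    """Map a configuration name to on/off flags for every feature.

    Hypothetical helper: enumerates all feature subsets, from the
    UVO-free baseline up to the full U+V+O setting.
    """
    configs = {}
    for size in range(len(features) + 1):
        for subset in combinations(features, size):
            name = "+".join(subset) if subset else "base"
            configs[name] = {feature: feature in subset for feature in features}
    return configs


if __name__ == "__main__":
    for name, flags in ablation_configs().items():
        enabled = [feature for feature, on in flags.items() if on]
        print(f"{name:6s} enabled features: {enabled}")
```

Under this representation, the single-feature settings correspond to the subsets of size one and the pairwise settings to the subsets of size two, with the baseline and the full UVO model at the two extremes.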

6. Conclusions

As key elements of sentence structure, the viewpoints of subjects, the tenses of predicates, and the viewpoints of objects can directly influence the tone and objectivity of a text. Inspired by this, we investigate the impact of these three features on objectivity detection in short informal text. To this end, we propose an LLM- and OpenIE-based triple feature extraction procedure to quantify each feature. We then construct a pre-trained encoder-based model for objectivity detection that uses the extracted triple features as constraints during model training. Our experimental results demonstrate the superiority of our method in identifying objectivity on datasets of short informal text. Moreover, our ablation studies on the impact of each of the three features show that each UVO feature has a different influence depending on the base model and dataset.

Although the proposed UVO quantification framework demonstrates strong performance in detecting objectivity in informal texts, its dependence on OpenIE and rule-based filtering may lead to unstable outputs when processing noisy or structurally complex sentences, which can affect the quality of downstream triple selection. Furthermore, our experiments are conducted primarily on social media and sentiment datasets, so the framework’s generalizability to highly specialized domains has not yet been evaluated. In the future, we will apply our approach to investigate the difference in objectivity between human-written and LLM-generated text.

Author Contributions

Conceptualization, C.Z. (Chaowei Zhang); Methodology, C.Z. (Chaowei Zhang); Software, C.Z. (Cheng Zhao); Validation, C.Z. (Cheng Zhao); Formal analysis, Z.Z.; Resources, Y.H.; Data curation, Z.Z.; Writing—original draft, Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This study is partially supported by the National Natural Science Foundation of China (62403412), and the Natural Science Foundation of the Higher Education Institutions of Jiangsu Province of China under grant 23KJB520040.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Laureate, C.D.P.; Buntine, W.; Linger, H. A systematic review of the use of topic models for short text social media analysis. Artif. Intell. Rev. 2023, 56, 14223–14255.

- Tommasel, A.; Godoy, D. Short-text feature construction and selection in social media data: A survey. Artif. Intell. Rev. 2018, 49, 301–338.

- Wuraola, I.; Dethlefs, N.; Marciniak, D. Understanding Slang with LLMs: Modelling Cross-Cultural Nuances through Paraphrasing. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 15525–15531.

- Liu, J.; Tian, X.; Tong, H.; Xie, C.; Ruan, T.; Cong, L.; Wu, B.; Wang, H. Enhancing Chinese abbreviation prediction with LLM generation and contrastive evaluation. Inf. Process. Manag. 2024, 61, 103768.

- Qiu, Z.; Qiu, K.; Lyu, H.; Xiong, W.; Luo, J. Semantics Preserving Emoji Recommendation with Large Language Models. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 7131–7140.

- Yadav, A.; Garg, T.; Klemen, M.; Ulcar, M.; Agarwal, B.; Robnik-Sikonja, M. From Translation to Generative LLMs: Classification of Code-Mixed Affective Tasks. IEEE Trans. Affect. Comput. 2025.

- Kamath, G.; Schuster, S.; Vajjala, S.; Reddy, S. Scope ambiguities in large language models. Trans. Assoc. Comput. Linguist. 2024, 12, 738–754.

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

- Sachdeva, B.; Rathee, H.; Tiwari, N. Role of social media in causing and mitigating disasters. Int. J. Resil. Fire Saf. Disasters 2021, 1, 52–58.

- Fersini, E.; Messina, E.; Pozzi, F.A. Expressive signals in social media languages to improve polarity detection. Inf. Process. Manag. 2016, 52, 20–35.

- Lawrie, D.; Mayfield, J.; Oard, D.W.; Yang, E.; Nair, S.; Galuščáková, P. HC3: A suite of test collections for CLIR evaluation over informal text. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 2880–2889.

- Alhussain, A.I.; Azmi, A.M. Automatic story generation: A survey of approaches. ACM Comput. Surv. 2021, 54, 1–38.

- Chenlo, J.M.; Losada, D.E. An empirical study of sentence features for subjectivity and polarity classification. Inf. Sci. 2014, 280, 275–288.

- Wankhade, M.; Rao, A.C.S.; Kulkarni, C. A survey on sentiment analysis methods, applications, and challenges. Artif. Intell. Rev. 2022, 55, 5731–5780.

- Küçük, D.; Can, F. Stance detection: A survey. ACM Comput. Surv. 2020, 53, 1–37.

- Bekoulis, G.; Papagiannopoulou, C.; Deligiannis, N. A review on fact extraction and verification. ACM Comput. Surv. 2021, 55, 1–35.

- Miao, Z.; Li, Y.; Wang, X.; Tan, W.C. Snippext: Semi-supervised opinion mining with augmented data. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 617–628.

- Biber, D. Variation Across Speech and Writing; Cambridge University Press: Cambridge, UK, 1991.

- Udochukwu, O.; He, Y. A rule-based approach to implicit emotion detection in text. In Proceedings of the Natural Language Processing and Information Systems: 20th International Conference on Applications of Natural Language to Information Systems, NLDB 2015, Passau, Germany, 17–19 June 2015; Proceedings 20. Springer: Berlin/Heidelberg, Germany, 2015; pp. 197–203.

- Abirami, M.; Uma, M.; Prakash, M. Sentiment analysis of informal text using a Rule based Model. J. Chem. Pharm. Sci. 2016, 9, 2854–2858.

- Androutsopoulos, J. From variation to heteroglossia in the study of computer-mediated discourse. In Digital Discourse: Language in the New Media; Oxford University Press: Oxford, UK, 2011; pp. 277–298.

- Hutto, C.; Gilbert, E. Vader: A parsimonious rule-based model for sentiment analysis of social media text. In Proceedings of the International AAAI Conference on Web and Social Media, Ann Arbor, MI, USA, 1–4 June 2014; Volume 8, pp. 216–225.

- Zhuang, W.; Zeng, Q.; Zhang, Y.; Liu, C.; Fan, W. What makes user-generated content more helpful on social media platforms? Insights from creator interactivity perspective. Inf. Process. Manag. 2023, 60, 103201.

- Lin, Y.; Wang, W.; Wang, Y. Hot Technology Analysis Method Based on Text Mining and Social Networks. In Proceedings of the 2023 4th International Conference on Machine Learning and Computer Application, Hangzhou, China, 27–29 October 2023; pp. 161–167.

- Guo, Z.; Schlichtkrull, M.; Vlachos, A. A survey on automated fact-checking. Trans. Assoc. Comput. Linguist. 2022, 10, 178–206.

- Soprano, M.; Roitero, K.; La Barbera, D.; Ceolin, D.; Spina, D.; Demartini, G.; Mizzaro, S. Cognitive Biases in Fact-Checking and Their Countermeasures: A Review. Inf. Process. Manag. 2024, 61, 103672.

- Augenstein, I.; Baldwin, T.; Cha, M.; Chakraborty, T.; Ciampaglia, G.L.; Corney, D.; DiResta, R.; Ferrara, E.; Hale, S.; Halevy, A.; et al. Factuality challenges in the era of large language models and opportunities for fact-checking. Nat. Mach. Intell. 2024, 6, 852–863.

- Liu, B.; Li, C.; Zhou, W.; Ji, F.; Duan, Y.; Chen, H. An attention-based deep relevance model for few-shot document filtering. ACM Trans. Inf. Syst. 2020, 39, 1–35.

- Liu, K.; Wang, Z.; Yang, Y.; Huang, C.; Niu, M.; Lu, Q. Hierarchical Diachronic Embedding of Knowledge Graph Combined with Fragmentary Information Filtering. In Proceedings of the International Conference on Artificial Neural Networks, Heraklion, Greece, 26–29 September 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 435–446.

- Fields, J.; Chovanec, K.; Madiraju, P. A survey of text classification with transformers: How wide? How large? How long? How accurate? How expensive? How safe? IEEE Access 2024, 12, 6518–6531.

- Taha, K.; Yoo, P.D.; Yeun, C.; Homouz, D.; Taha, A. A comprehensive survey of text classification techniques and their research applications: Observational and experimental insights. Comput. Sci. Rev. 2024, 54, 100664.

- Ren, F.; Zhang, L.; Zhao, X.; Yin, S.; Liu, S.; Li, B. A simple but effective bidirectional framework for relational triple extraction. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, Tempe, AZ, USA, 21–25 February 2022; pp. 824–832.

- Rosso, P.; Yang, D.; Cudré-Mauroux, P. Beyond triplets: Hyper-relational knowledge graph embedding for link prediction. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 1885–1896.

- Zhao, Y.; Zhou, H.; Zhang, A.; Xie, R.; Li, Q.; Zhuang, F. Connecting embeddings based on multiplex relational graph attention networks for knowledge graph entity typing. IEEE Trans. Knowl. Data Eng. 2022, 35, 4608–4620.

- Li, P.; Wang, X.; Liang, H.; Zhang, S.; Zhang, Y.; Jiang, Y.; Tang, Y. A fuzzy semantic representation and reasoning model for multiple associative predicates in knowledge graph. Inf. Sci. 2022, 599, 208–230.

- González, W.J. Semantics of science and theory of reference: An analysis of the role of language in basic science and applied science. In Language and Scientific Research; Springer: Berlin/Heidelberg, Germany, 2021; pp. 41–91.

- Rodrigo-Ginés, F.J.; Carrillo-de Albornoz, J.; Plaza, L. A systematic review on media bias detection: What is media bias, how it is expressed, and how to detect it. Expert Syst. Appl. 2024, 237, 121641.

- Wiebe, J.; Bruce, R.; O’Hara, T.P. Development and use of a gold-standard data set for subjectivity classifications. In Proceedings of the 37th Annual Meeting of the Association for Computational Linguistics, College Park, MD, USA, 20–26 June 1999; pp. 246–253.

- Riloff, E.; Wiebe, J. Learning extraction patterns for subjective expressions. In Proceedings of the 2003 Conference on Empirical Methods in Natural Language Processing, Sapporo, Japan, 11–12 July 2003; pp. 105–112.

- Wiebe, J.; Wilson, T.; Bruce, R.; Bell, M.; Martin, M. Learning subjective language. Comput. Linguist. 2004, 30, 277–308.

- Hu, M.; Liu, B. Mining and summarizing customer reviews. In Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 168–177.

- Liu, B.; Hu, M.; Cheng, J. Opinion observer: Analyzing and comparing opinions on the web. In Proceedings of the 14th International Conference on World Wide Web, Chiba, Japan, 10–14 May 2005; pp. 342–351.

- Zhang, X.; Zhao, J.; LeCun, Y. Character-level convolutional networks for text classification. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9.

- Hu, H.; Liao, M.; Zhang, C.; Jing, Y. Text classification based recurrent neural network. In Proceedings of the 2020 IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 12–14 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 652–655.

- Vaswani, A.; et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

- Devlin, J.; et al. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Liu, Y.; et al. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Lewis, M.; et al. Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv 2019, arXiv:1910.13461.

- Radford, A.; et al. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 27 May 2025).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Brown, T.B.; et al. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774.

- Qasim, R.; Bangyal, W.H.; Alqarni, M.A.; Ali Almazroi, A. A Fine-Tuned BERT-Based Transfer Learning Approach for Text Classification. J. Healthc. Eng. 2022, 2022, 3498123.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. Llama: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Sun, X.; Li, X.; Li, J.; Wu, F.; Guo, S.; Zhang, T.; Wang, G. Text classification via large language models. arXiv 2023, arXiv:2305.08377.

- Tang, L.; Shalyminov, I.; Wong, A.W.m.; Burnsky, J.; Vincent, J.W.; Yang, Y.; Singh, S.; Feng, S.; Song, H.; Su, H.; et al. Tofueval: Evaluating hallucinations of llms on topic-focused dialogue summarization. arXiv 2024, arXiv:2402.13249.

- Laurenzi, E.; Mathys, A.; Martin, A. An LLM-Aided Enterprise Knowledge Graph (EKG) Engineering Process. Proc. AAAI Symp. Ser. 2024, 3, 148–156.

- Zhang, K.; Choi, Y.; Song, Z.; He, T.; Wang, W.Y.; Li, L. Hire a linguist!: Learning endangered languages in LLMs with in-context linguistic descriptions. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 15654–15669.

- Guo, R.; Xu, W.; Ritter, A. Meta-Tuning LLMs to Leverage Lexical Knowledge for Generalizable Language Style Understanding. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 13708–13731.

- Smith, G.; Fleisig, E.; Bossi, M.; Rustagi, I.; Yin, X. Standard Language Ideology in AI-Generated Language. arXiv 2024, arXiv:2406.08726.

- Song, G.; Ye, Y.; Du, X.; Huang, X.; Bie, S. Short text classification: A survey. J. Multimed. 2014, 9, 635–643.

- Chen, M.; Jin, X.; Shen, D. Short text classification improved by learning multi-granularity topics. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011.

- Verdonck, T.; Baesens, B.; Óskarsdóttir, M.; van den Broucke, S. Special issue on feature engineering editorial. Mach. Learn. 2024, 113, 3917–3928.

- Nayak, T.; Majumder, N.; Goyal, P.; Poria, S. Deep neural approaches to relation triplets extraction: A comprehensive survey. Cogn. Comput. 2021, 13, 1215–1232.

- Zhang, C.; Gupta, A.; Kauten, C.; Deokar, A.V.; Qin, X. Detecting Fake News for Reducing Misinformation Risks Using Analytics Approaches. Eur. J. Oper. Res. 2019, 279, 1036–1052.

- UzZaman, N.; Llorens, H.; Derczynski, L.; Allen, J.; Verhagen, M.; Pustejovsky, J. SemEval-2013 Task 1: TempEval-3: Evaluating Time Expressions, Events, and Temporal Relations. In Proceedings of the Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013), Atlanta, GA, USA, 13–14 June 2013; Manandhar, S., Yuret, D., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 1–9.

- Manning, C.D.; Surdeanu, M.; Bauer, J.; Finkel, J.R.; Bethard, S.; McClosky, D. The Stanford CoreNLP natural language processing toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 23–24 June 2014; pp. 55–60.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).