Abstract

In this second part of the companion paper, the Carnot cycle is analyzed in order to investigate the similarities and differences between a framework related to thermodynamics and one related to information theory. The parametric Schrodinger equations are the starting point for the framing. In the thermodynamics frame, a new interpretation of the free energy in the isothermal expansion and a new interpretation of the entropy in the adiabatic phase are highlighted. The same Schrodinger equations are then applied in an information theory framework. Again, it is shown that a cycle can be constructed with a diagram that has the Lagrange parameter and the average codeword length as coordinates. In this case the adiabatic phase consists of a transcoding operation and the cycle as a whole shows a positive or negative balance of information. In conclusion, the Carnot cycle continues to be a source of knowledge of complex systems in which entropy plays a central role.

1. Foreword

In the first paper [1] with the same title, the Carnot cycle was analyzed starting from the partition function Z, a function that is shared by both thermodynamics (where, as underlined by Reiss [2], it is at the base of the “transformation theory”) and information theory (Jaynes [3] pointed out the link between the partition function and the capacity of a communication system). More precisely, in [1] the isothermal expansion of the Carnot cycle was analyzed in the light of the Kullback–Leibler distance, including the case in which a perturbation is introduced in the isothermal phase by an information input.

Everything arises from the observation that the two founding equations of thermodynamics, as reported by Schrodinger in [4] (a reprint of the first 1946 publication) and called in the following the parametric Schrodinger equations, or PSEs,

$$\langle f \rangle = -\frac{\partial \ln Z}{\partial \beta} \qquad (1)$$

$$S = k \ln Z + k \beta \langle f \rangle \qquad (2)$$

can also be interpreted with the formalism of information theory. In (1) and (2) S is the entropy, k is the Boltzmann constant, $\beta$ is the Lagrange multiplier, and Z is the partition function of the process under examination, defined as

$$Z = \sum_{i=1}^{A} e^{-\beta f_i} \qquad (3)$$

where A represents the “dimension of the considered ensemble of distinguishable groups labelling a random variable” [5]: in quantum mechanics or statistical mechanics (and hence in thermodynamics), this ensemble is the set of all possible energy eigenvalues (i.e., either of the harmonic oscillator or of the molecules of the gas considered); in information theory, the set (obviously much smaller) represents either the number of characters of the considered alphabet or the number of words of the considered vocabulary. In this context $f_i$ is a generic “quality” of the “distinguishable group”, such as the energy value in thermodynamics or the character duration or the length of a codeword in information theory, and the index “i” numbers the distinguishable groups. Finally, $\langle f \rangle$ is the expectation value of $f_i$.
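A small numerical sketch may help to make the role of the partition function concrete. It assumes the usual Boltzmann reading of the PSEs, with $Z = \sum_i e^{-\beta f_i}$ and probabilities $p_i = e^{-\beta f_i}/Z$; the function name `pse_quantities` and the sample values are illustrative assumptions, not part of the source.

```python
import math

def pse_quantities(f, beta, k=1.0):
    """Return (Z, p, mean_f, S) for qualities f_i and Lagrange multiplier beta.

    Assumes the Boltzmann form Z = sum_i exp(-beta * f_i).
    """
    weights = [math.exp(-beta * fi) for fi in f]
    Z = sum(weights)
    p = [w / Z for w in weights]
    mean_f = sum(pi * fi for pi, fi in zip(p, f))
    S = k * math.log(Z) + k * beta * mean_f
    return Z, p, mean_f, S

# Illustrative ensemble of A = 4 distinguishable groups (hypothetical values).
f = [0.0, 1.0, 2.0, 3.0]
beta = 0.7
Z, p, mean_f, S = pse_quantities(f, beta)

# Cross-check 1: S coincides with the Gibbs-Shannon form -k * sum p ln p (k = 1).
S_gibbs = -sum(pi * math.log(pi) for pi in p)

# Cross-check 2: <f> = -d ln Z / d beta, estimated by a central finite difference.
h = 1e-6
lnZ_plus = math.log(pse_quantities(f, beta + h)[0])
lnZ_minus = math.log(pse_quantities(f, beta - h)[0])
mean_f_fd = -(lnZ_plus - lnZ_minus) / (2 * h)
```

Both cross-checks agree to numerical precision, which is the sense in which the expectation value and the entropy are both generated by Z.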

Since its origin, information theory has had a relationship with thermodynamics [5]. Shannon himself, in formulating the 2nd theorem of his fundamental work [6], admits that: “The form of H will be recognized as that of entropy as defined in certain formulations of statistical mechanics”. It is Jaynes, however, in a couple of papers published in 1957 [7] and 1959 [3], who pointed out the existence of a deep relationship between thermodynamics and information theory, which share the concept of “partition function”: “…the basic mathematical identity of the two fields [thermodynamics and information theory] has had, thus far, very little influence on the development of either. There is an inevitable difference in detail, because the applications are so different; but we should at least develop a certain area of common language…we suggest that one way of doing this is to recognize that the partition function, for many decades the standard avenue through which calculations in statistical mechanics are “channelled” is equally fundamental to communication theory”.

However, in the paper of 1959, Jaynes pointed out some “singularities” of information theory that are not found in thermodynamics and therefore prevent the development of a simple parallelism. In particular, Jaynes notes that Shannon’s theorem 1, the theorem which defines the channel capacity, has no equivalent in thermodynamics. He says: “It is interesting that such a fundamental notion as channel capacity has not a thermodynamic analogy”. And he continues by underlining that “the absolute value of entropy has no meaning, only entropy differences can be measured in experiments. Consequently the condition that H is maximized, equivalent to the statements that the Helmholtz free energy function vanishes, corresponds to no condition which could be detected experimentally”.

The above singularities inhibit the extension to information theory of all the fundamental axioms of thermodynamics, including the Carnot theorem which, as a consequence, does not have, in our opinion, an exact parallel in information theory. Essentially, the Carnot theorem is a conservation theorem because it says that, during a reversible thermodynamic cycle aimed at extracting work by exchanging heat with two reservoirs at different temperatures, the entropy of the cycle is conserved. This was noted by Carnot himself who, in his monograph of 1824 (as translated and edited by E. Mendoza [8]), says: “The production of motion in steam-engine is always accompanied by a circumstance on which we should fix our attention. This circumstance is the re-establishing of equilibrium in the caloric; that is, its passage from a body in which the temperature is more or less elevated, to another in which it is lower”. If the term “caloric” can be interpreted as “entropy” (Carnot used two terms for heat, “chaleur” and “calorique”, and from the context we can deduce that with the second term he indicated a concept close to the current “entropy” [8]), we have here the first claim of the “entropy conservation” principle in a reversible Carnot cycle. He also observed that: “The production of motive power is then due in steam-engines not to an actual consumption of caloric, but to its transportation from a warm body to a cold body, that is, to its re-establishment of equilibrium”. It is worth noting that, in the same sentence, Carnot notes that the cycle is aimed at the production of motion. The absence of such production posed some problems, as pointed out by William Thomson (one of the first commentators on Carnot’s book) in 1848 [9]: “When “thermal agency” is thus spent in conducting heat through a solid, what becomes of the mechanical effect which it might produce? Nothing can be lost in the operations of nature: no energy can be destroyed. What effect then is produced in place of the mechanical effect which is lost? A perfect theory of heat imperatively demands an answer to this question: yet no answer can be given in the present state of science…”. The answer to Thomson’s observation came from the German scientist Rudolf Clausius (who introduced the term “entropy” in 1865 [10]), who in 1850 was already aware that heat is not only inter-convertible with mechanical work but in fact may “consist in a motion of the least parts of the bodies”, and with this latter conclusion he formulated the equivalence of heat and work.

The question posed in 1848 by William Thomson has similarities to the one that comes naturally when we use PSEs to “export” a Carnot cycle to information theory. In fact, by using PSEs it is possible to reproduce a Carnot cycle where entropy/information is conserved, but the question that arises spontaneously in this case is: “What is produced in this cycle since no mechanical work is produced?”.

In the following we will try to give an answer to this question. We will first propose a synthesis of the Carnot thermodynamic cycle, already developed in part I, and then we will try to develop above all the vision from the point of view of the theory of information.

2. The Thermodynamics Carnot Cycle in the Light of the “Parametric Schrodinger Equations”

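As already mentioned in the Introduction, in thermodynamics the variable $f_i$ is the energy of the molecules of the gas, $\varepsilon_i$, and the function $\langle f \rangle$ becomes the internal energy $U$. Hence, from (1) we have

$$U = \sum_{i=1}^{A} \varepsilon_i \frac{e^{-\varepsilon_i / kT}}{Z} = \sum_{i=1}^{A} \varepsilon_i p_i \qquad (4)$$

where we have made the Lagrange multiplier explicit as 1/kT, the inverse of the absolute temperature times the Boltzmann constant, and $p_i = e^{-\varepsilon_i / kT}/Z$ is the probability of finding the system in the distinguishable group of index “i”.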

M. Tribus [11] provides an important interpretation of the variation of internal energy (4) that allows us to better understand the meaning of expressions (1) and (2). Starting from relation (4), Tribus derives that

$$dU = \sum_{i} \varepsilon_i \, dp_i + \sum_{i} p_i \, d\varepsilon_i \qquad (5)$$

and he assigns to the first sum after the equals sign the meaning of “change of heat, dQ” and to the second sum the meaning of “negative change of work, −dW”, in order to obtain the classical relationship

$$dU = dQ - dW \qquad (6)$$

and comments: “A change of the type $d\varepsilon_i$ causes no change [in] uncertainty S, since $p_i$ are unchanged. A change of the type $dp_i$ is associated with a change in S”. Tribus accompanies these considerations with three illustrations [11] of the distributions of $p_i$ and $\varepsilon_i$ and of the product $p_i \varepsilon_i$.
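The distinction drawn by Tribus can be checked numerically. The sketch below is only an illustration on a hypothetical three-level system (the level energies, probabilities and increments are assumed values): shifting the levels at fixed probabilities changes the internal energy but not the uncertainty, whereas redistributing the probabilities at fixed levels changes both.

```python
import math

def uncertainty(p):
    """S = -sum_i p_i ln p_i, with k = 1."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def internal_energy(p, eps):
    """U = sum_i p_i * eps_i."""
    return sum(pi * ei for pi, ei in zip(p, eps))

# Hypothetical three-level system.
eps = [0.0, 1.0, 2.0]
p = [0.60, 0.30, 0.10]

# Work-like change: the levels shift, the probabilities stay fixed.
d_eps = [0.00, 0.01, 0.03]
eps_shifted = [e + d for e, d in zip(eps, d_eps)]
dU_work = internal_energy(p, eps_shifted) - internal_energy(p, eps)
dS_work = uncertainty(p) - uncertainty(p)  # probabilities untouched

# Heat-like change: the probabilities are redistributed, the levels stay fixed.
d_p = [-0.02, 0.01, 0.01]
p_shifted = [pi + d for pi, d in zip(p, d_p)]
dU_heat = internal_energy(p_shifted, eps) - internal_energy(p, eps)
dS_heat = uncertainty(p_shifted) - uncertainty(p)
```

In this example the work-like change leaves the uncertainty exactly unchanged, while the heat-like change increases it.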

It is interesting to note that in the case where the thermodynamic system considered is a “black body”, the distribution of is nothing other than the single-mode Planck’s law.

Thermodynamic potentials can also be derived from the PSEs. The most direct of these is the Helmholtz potential, or “free energy”, which is connected to the partition function (see [1] and the references contained therein) by the relation

$$F = -kT \ln Z \qquad (7)$$

or, by introducing the partition function expression (3),

$$F = -kT \ln \sum_{i=1}^{A} e^{-\varepsilon_i / kT} \qquad (8)$$

We thus obtain a new interpretation of the free energy F, a relevant thermodynamic potential in all thermodynamic cycles in which work is produced: apart from the factor −kT, it is simply the logarithm of the sum of the Boltzmann factors of all possible energy levels of the thermodynamic system under consideration.

By using (7) together with (2), (4) becomes

$$U = F + TS \qquad (9)$$

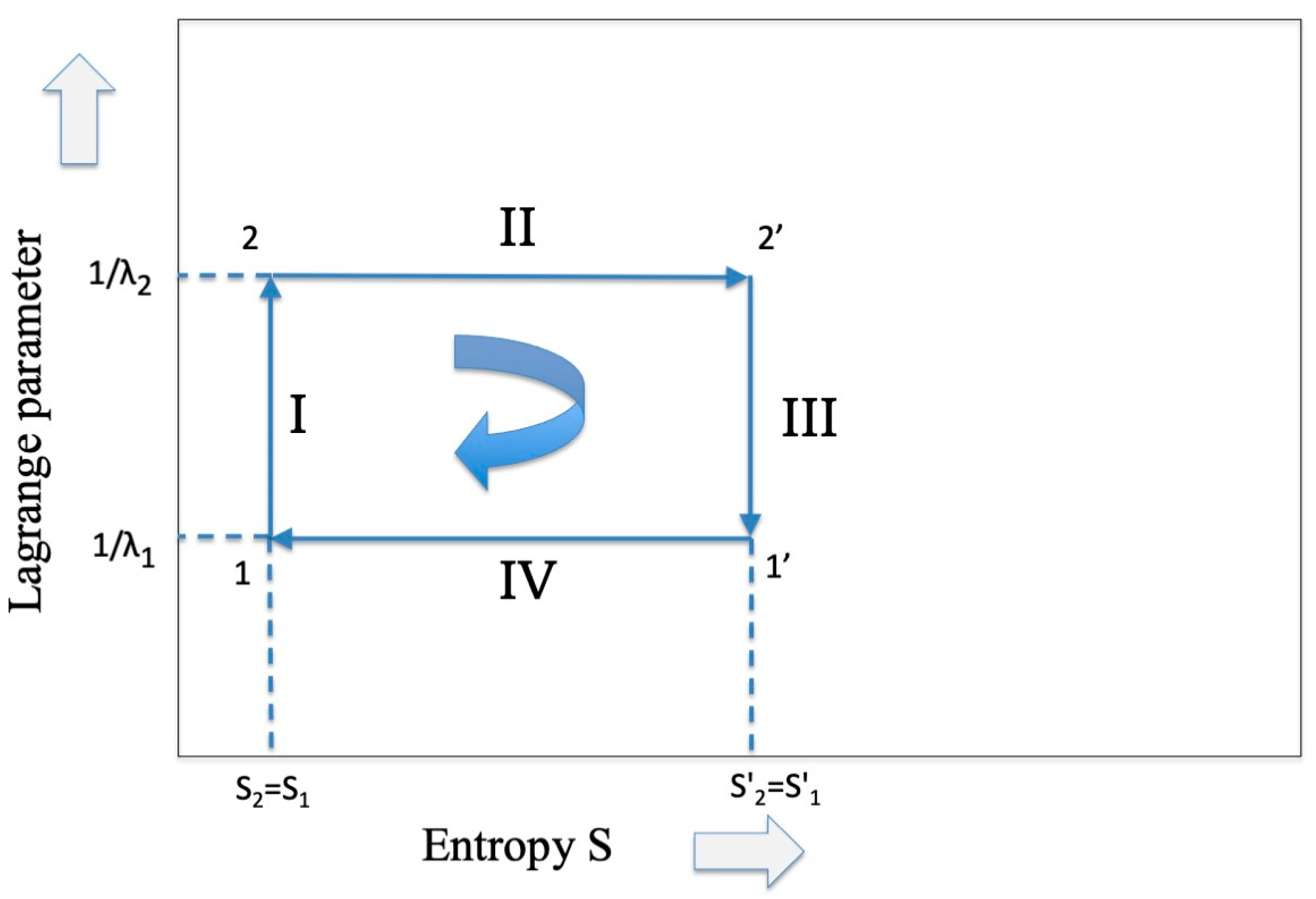

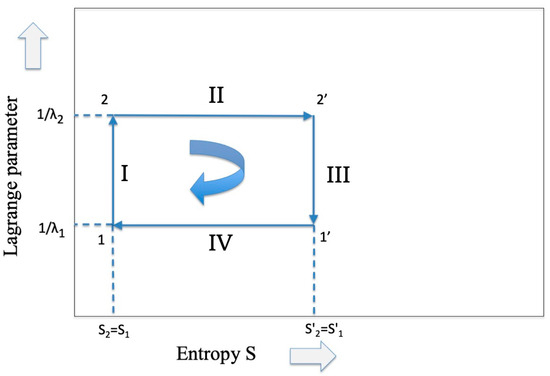

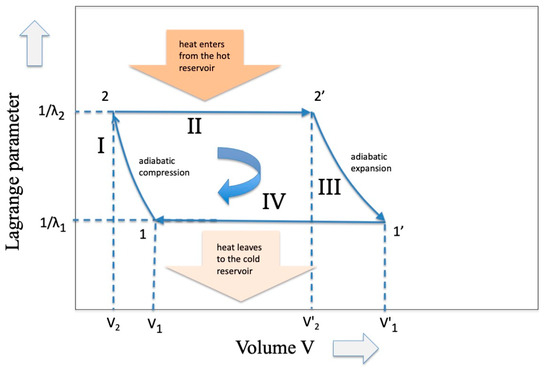

Starting from this relation, let us study the Carnot cycle in the ST representation (see reference [1], reported again for convenience as Figure 1, where the inverse of the Lagrange parameter has been adopted) for the isothermal (II and IV) and the adiabatic branches (III and I).

Figure 1. The representation of the Carnot cycle which is common to both thermodynamics and information theory.

As far as the isothermal expansion (from point 2 to 2′ in Figure 1) is concerned, we know [1] that the flux of energy coming from the high-temperature reservoir is converted only into free energy (work) while the internal energy remains constant. Hence, we have that

or, from (8),

an expression which evidences that the increase in entropy is a consequence of the sum (over the whole ensemble) of the energy differences between the end and the beginning of the expansion. Note that the ensemble dimension A remains constant.

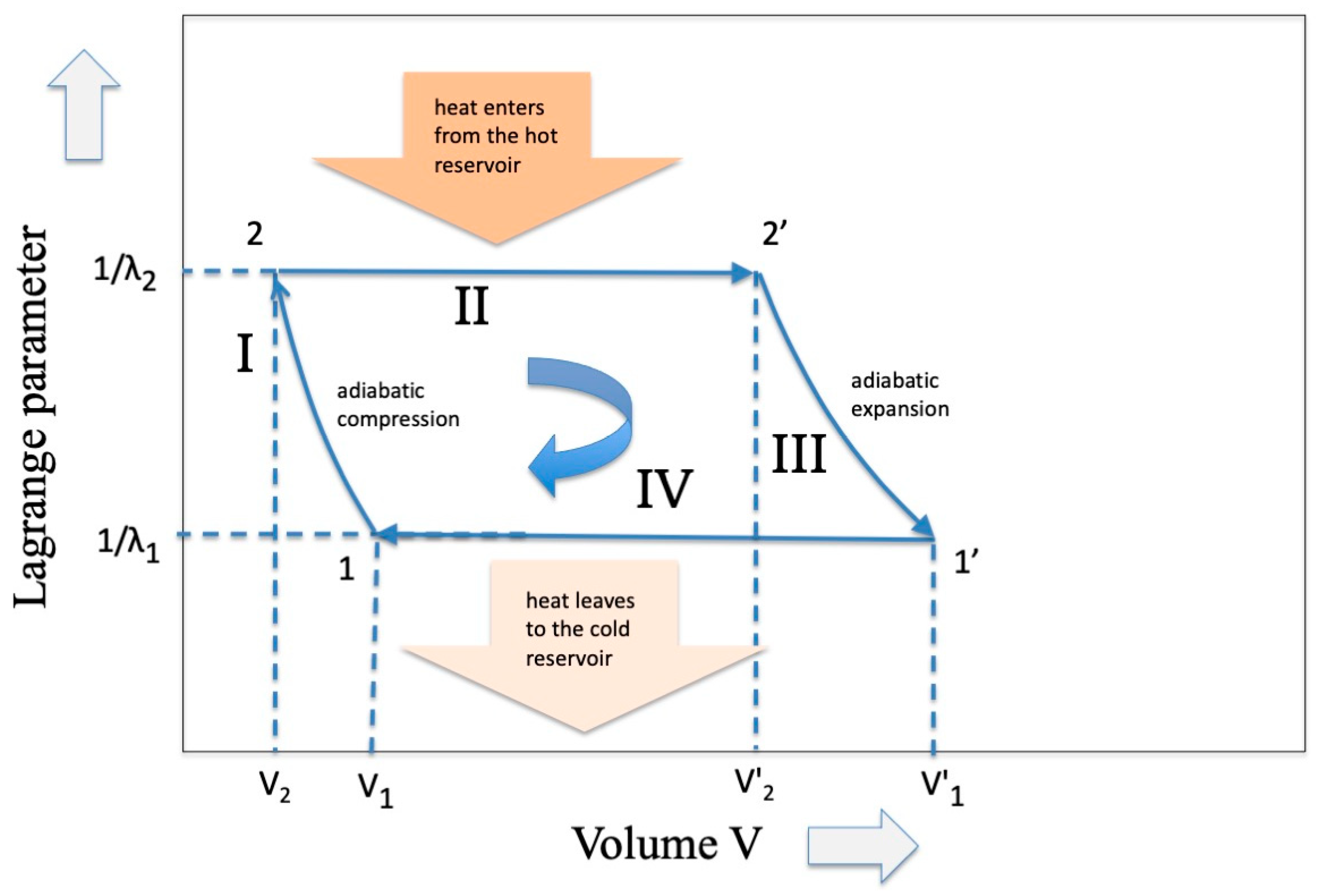

But why are these energy eigenvalues different? To find the answer we must refer to a “hidden” variable that does not appear in Equations (1) and (2), that is, the “volume V”, a variable that enters into the Carnot cycle when a gas is involved (see Figure 2). In the expansion phase a work W is delivered through the relation

where P is the pressure and R the gas constant. Hence, since

it is evident that the different energy eigenvalues of (11) are the direct consequence of the differences in the partition functions, whose ensemble changed because the volumes changed (and not the temperature, which remains constant at $T_2$).

Figure 2. The classic representation of the Carnot Cycle in the variables Temperature (inverse of the Lagrange parameter) and Volume of an ideal gas.

The isothermal phase II is replicated in reverse in the compression phase IV (from points 1′ to 1 in Figure 1) at the lower temperature $T_1$, and the two entropy changes sum to zero. Hence,

or, from (13),

a result that allows us to obtain the efficiency of the Carnot cycle

$$\eta_{C} = 1 - \frac{T_1}{T_2} \qquad (16)$$

As far as the adiabatic phase III (from points 2′ to 1′ in Figure 1) is concerned, we have that the entropy is conserved, thus

where we introduce the new ensemble dimension A′ because we are now at temperature T1.

That is, in the adiabatic phase we observe a complete change of the ensemble considered because both the temperature and the volume vary and therefore the size of the ensemble, the partition function, the set of energy eigenvalues, and the probability distribution change. In entropic terms, even if it is true that the final entropy remains equal in value to the initial entropy, the two values are obtained as a result of a different ensemble average.

In phase III an amount of work is delivered whose expression is

and by introducing the relations

and

we obtain

These terms represent the work given by the external work reservoir in the system (by the system on the external work reservoir in symmetric phase I, from points 1 to 2 in Figure 1). Since for a perfect gas the relation

can be applied, we observe that the constancy in entropy means that

or by considering the value of cv for the pure mono-atomic gas

and by integration we obtain the simple relationship

In other terms, during the adiabatic expansion, the increase in entropy due to the increase in volume during the piston expansion is compensated by an equal decrease in entropy due to the decrease in internal energy, that is, in temperature.

In summary, we have shown that the entropy conservation principle characteristic of the Carnot cycle can be interpreted in terms of a simple relationship between the volume of the gas and the temperature: during the two isothermal phases, the ratio between the involved final and initial volumes must be maintained; during the two adiabatic phases, the ratio between the involved initial and final temperatures leads to a direct relationship between the final and initial volumes.
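The volume and temperature relationships summarized above can be verified with a short numerical sketch. It assumes one mole of a monoatomic ideal gas (specific heat 3R/2, so that T·V^(2/3) is constant along a reversible adiabat); the temperatures, the volumes and the function names are illustrative choices, not values from the source.

```python
import math

R = 8.314462618   # molar gas constant, J/(mol K)
CV = 1.5 * R      # monoatomic ideal gas, one mole (assumption)

def entropy_change(T_a, V_a, T_b, V_b):
    """Ideal-gas entropy change from state a to state b (one mole)."""
    return CV * math.log(T_b / T_a) + R * math.log(V_b / V_a)

def adiabatic_volume(V_a, T_a, T_b):
    """Volume reached along a reversible adiabat, where T * V**(2/3) is constant."""
    return V_a * (T_a / T_b) ** 1.5

T2, T1 = 500.0, 300.0    # hot and cold reservoir temperatures (hypothetical)
V_2, V_2p = 1.0, 2.0     # volumes at points 2 and 2' (hypothetical)
V_1p = adiabatic_volume(V_2p, T2, T1)   # point 1'
V_1 = adiabatic_volume(V_2, T2, T1)     # point 1

dS_II = entropy_change(T2, V_2, T2, V_2p)     # isothermal expansion
dS_III = entropy_change(T2, V_2p, T1, V_1p)   # adiabatic expansion
dS_IV = entropy_change(T1, V_1p, T1, V_1)     # isothermal compression
dS_I = entropy_change(T1, V_1, T2, V_2)       # adiabatic compression

Q_in = T2 * dS_II        # heat absorbed at T2
Q_out = -T1 * dS_IV      # heat released at T1
efficiency = (Q_in - Q_out) / Q_in
```

With these values the two adiabatic entropy changes vanish, the two isothermal ones cancel, the volume ratios of the two isotherms coincide, and the efficiency equals 1 − T1/T2.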

Before moving on to the Carnot cycle treated according to information theory, we point out that positive and negative entropy contributions can also occur in out-of-equilibrium situations, as explicitly pointed out in the first part of this contribution [1], where the isothermal phase of the cycle is modified by “injections” of information coming from out-of-equilibrium situations. In [1] the use of the so-called “Jarzynski equality” [11] is cited in this regard, but positive and negative entropy contributions also occur in correspondence with fluctuations in microscopic systems, as demonstrated by Y. Hua and Z-Y. Guo in [12] in the case of a thermoelectric cycle. In general, the “entropy production fluctuation theorem” can be kept in mind whenever one is dealing with microscopic systems that are kept far from equilibrium. In its most general formulation (see, for example, G.E. Crooks [13]), this theorem states that, for systems driven out of equilibrium by some time-dependent work process,

$$\frac{P(+\sigma)}{P(-\sigma)} = e^{\tau \sigma}$$

where $+\sigma$ and $-\sigma$ refer respectively to the positive and negative entropy production rates at time $\tau$, and $P$ denotes the probability of observing them.

3. The Information Theory Carnot Cycle in the Light of the “Parametric Schrodinger Equations”

We have shown in the previous section how the systematic application of the two “Schrodinger equations” to the Carnot cycle allows the exploration of new aspects of the cycle itself. On the other hand, we have remarked in the Introduction that the Carnot cycle was the result of Carnot’s effort to understand the steam engine, a complex thermodynamic system invented in order to transform heat into work. The possibility of applying the same “Schrodinger equations” in the context of information theory raises an obvious question: to what “cycle” are they applied? Information theory was originated by Shannon in order to study practical communication systems, e.g., telegraph and telephone transmission. As shown in reference [2], Shannon’s first theorem (where he introduced the concept of capacity) was derived from Hartley’s studies on telegraph lines. Hence, we have to imagine a “communication cycle” where the information flux moves from a transmitter to a receiver and from the receiver back to the transmitter under different impairment conditions. Shannon demonstrated that the impairments can be summarized as the unavoidable noise affecting the communication channel.

When Carnot modeled the cycle that bears his name, he never posed the “practical” problem of what the two heat reservoirs exactly were. In the same way we can imagine that, during communication, there are two “reservoirs” that act on the communication system whose variable, as we have already seen, is the “word length”. These reservoirs can “leave” information (e.g., noise enters into the channel) or can “lead” information, in any case perturbing the transmission.

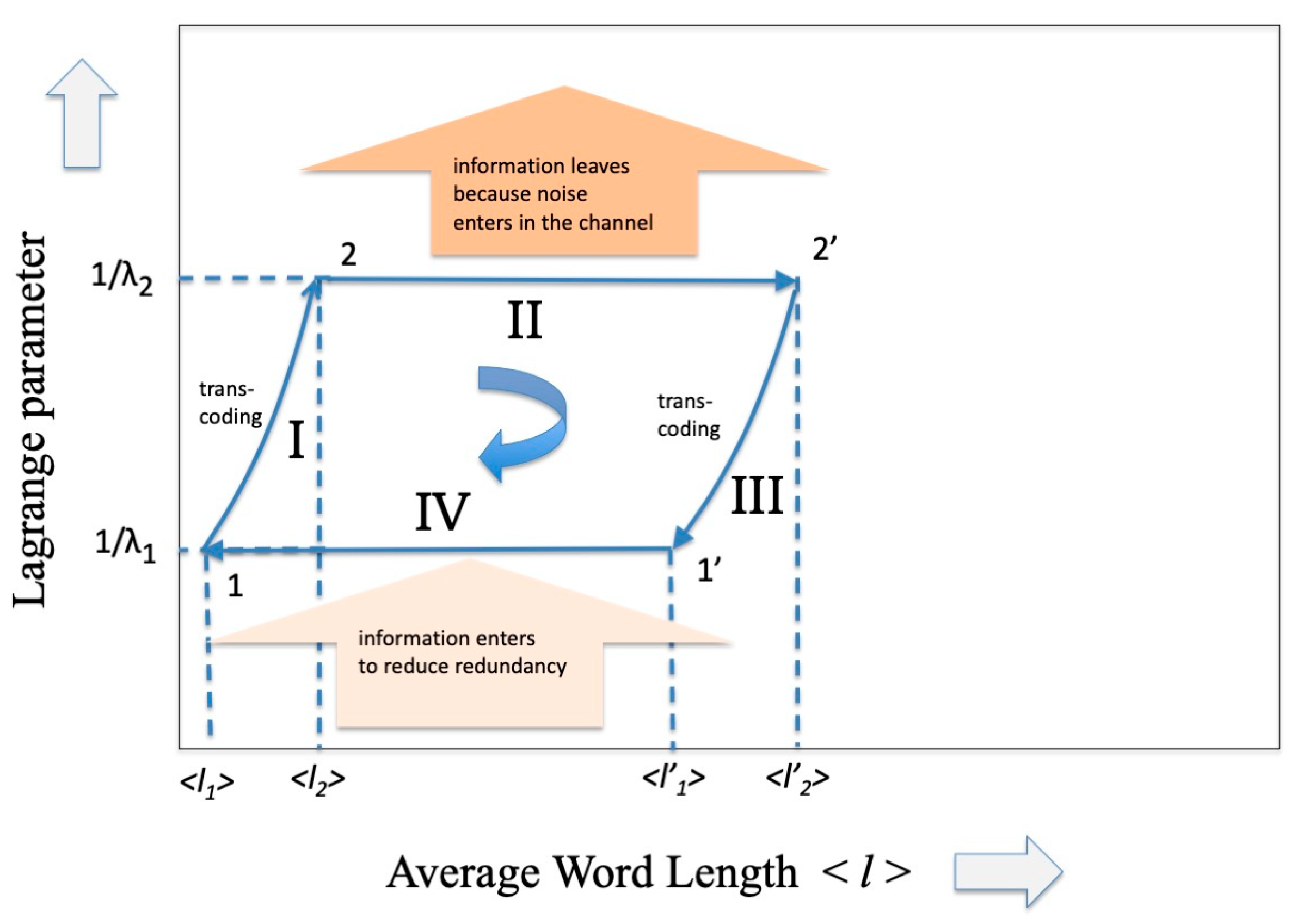

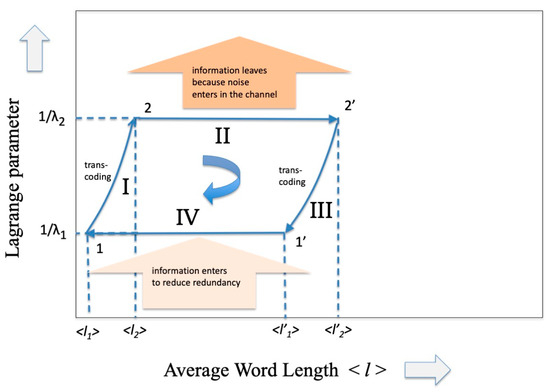

That is, we can imagine that, even in the scenario of information theory, there is a cycle as practical as that of the steam engine. The intent here is to communicate, that is, to “transport” information from a transmitter to a receiver, and vice versa, in the presence of a noise/information reservoir. The cycle closes by transporting the information in the opposite direction. Analogous to what happens in the cycle of the steam engine where two heat reservoirs at different temperatures are present, we could suppose that in this case the opposite transmission occurs at a different value of channel capacity, the parameter that the PSE sets equivalent to the inverse of the temperature. The cycle is illustrated in Figure 3 and will be analyzed below.

Figure 3. A representation of the Carnot Cycle in information theory that has as ordinate the inverse of the Lagrange parameter (related to the capacity of the communication channel) and as abscissa the average length of the codeword.

The cycle described above can be part of the category known in the literature as “information engines”. Among these “engines” one of the most interesting, in our opinion, is the one proposed on several occasions by T.S. Sundresh [14,15]. The author intends to “explore information transactions between different entities comprising a system, as a unified way of describing the efficiency of integrated working on a system” and examines this “engine” in “analogy to the Carnot cycle”. The author considers a very general system in which there are two “processes” and says: “Two processes A and B are said to be integrated with each other when they can successfully cooperate with each other in doing a global task T”, and he imagines that A and B process information and exchange information. The author then provides interesting examples of the global task T (of which we give only the title in the following for reasons of space): (a) two software modules working together in an integrated fashion to perform a computation; (b) organizational working in which information is transacted between various individuals or teams to work together on a given task; (c) scientific research environments; (d) human–machine interfaces; (e) computer-aided design. Then, introducing the methods of information theory and thermodynamics, the author draws a graph similar to that of Figure 1 with different meaning in the vertical coordinate.

We can therefore imagine that the cycle that will be described below occurs between two entities (in general, “processes” in the sense used by T.S. Sundresh) that behave both as receiver and transmitter, based solely on the need to complete the “task”.

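Before proceeding, let us introduce some clarifications in the PSEs that make them easier to manipulate. In information theory, the Boltzmann constant k equals 1 and a base-2 logarithm is adopted in order to measure the information in bits. Moreover, as recalled in the Introduction, the variable $\langle f \rangle$ is now the expectation value $\langle l \rangle$ of the codeword length considered. Hence, with the partition function written in base 2, $Z = \sum_i 2^{-\beta l_i}$, (1) and (2) become

$$\langle l \rangle = -\frac{\partial \log_2 Z}{\partial \beta} \qquad (26)$$

$$S = \log_2 Z + \beta \langle l \rangle \qquad (27)$$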

According to Jaynes [3], “the notion of word is meaningful only if there exists some rule by which a sequence of letters can be uniquely deciphered into words. If no such rule exists then effectively each letter is a word”. Applied to codewords, the above sentence means that, when we consider all the codewords of the same length $l$, the partition function becomes

Shannon’s first theorem defines “capacity” C as the eigenvalue of the above equation in the variable at the limit . Hence, for , the capacity of the channel becomes

In this way, a meaning is given to the Lagrange multiplier that becomes equal to the capacity of the channel (as mentioned by Jaynes: ”a given vocabulary may be regarded as defining a channel”).
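The identification of the Lagrange multiplier with the capacity can be illustrated numerically. The sketch below assumes the base-2 form of the partition function, $Z(\beta) = \sum_i 2^{-\beta l_i}$, and uses Shannon's noiseless-channel result that the capacity is the value of $\beta$ for which $Z(\beta) = 1$; the function names and the sample vocabularies are illustrative assumptions.

```python
import math

def partition_function(lengths, beta):
    """Z(beta) = sum_i 2**(-beta * l_i), with k = 1 and base-2 logarithms."""
    return sum(2.0 ** (-beta * l) for l in lengths)

def capacity(lengths, tol=1e-12):
    """Solve Z(beta) = 1 by bisection; Z is strictly decreasing in beta."""
    lo, hi = 0.0, 1.0
    while partition_function(lengths, hi) > 1.0:
        hi *= 2.0                      # enlarge the bracket until Z(hi) <= 1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if partition_function(lengths, mid) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# A = 4 words, all of length 1: the capacity is log2(4) = 2 bits per symbol.
c_equal = capacity([1, 1, 1, 1])

# Vocabulary with unequal word lengths (hypothetical values).
c_unequal = capacity([1, 2])
```

For equal word lengths the root reduces to the logarithm of the alphabet size divided by the word length; for the two-word vocabulary with lengths 1 and 2 it is the base-2 logarithm of the golden ratio.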

Having said this, let us start by asking ourselves: what happens when we inject noise (i.e., extract information) into the communication channel existing between the transmitter and the receiver? We know that in this case the error at the receiver inevitably increases because the receiver misinterprets the transmitter’s codewords. In order to restore correct transmission, it is necessary to use an appropriate coding technique (and Shannon’s theorems ensure that this is always possible) which increases the reliability of transmission (or, in information theory terms, reduces equivocation) at the cost of increasing redundancy.

In general, the efficiency of the code is defined as [16]

where H is the entropy of the source of the unit of symbols. The redundancy [17] is

and it is therefore observed how an increase in redundancy leads to an inevitable increase in the variable . A first example of redundancy comes from the coding technique itself. In fact, if we want to distinguish codewords, it is necessary to introduce some symbol between one word and another (for example, a space) and this causes redundancy. Jaynes [3] evaluates the expression of H in the case of a unique decodable code as

That for large becomes

with efficiency

which is certainly lower than the case where there was no space character inserted.

Before proceeding, let us try to clarify the consequences of a change of the variable (which is the parameter equivalent to the internal energy in the thermodynamics treatment). Since it is

we obtain

where the first sum after the equals sign is in the equivalent position of “change of heat, dQ” which in our case can be a variation of the character probability and therefore we will call it dP and the second term in the equivalent position of “negative change of work, −dW” which in our case can be a variation of the codeword length and therefore we will call it dK, thus

and, paraphrasing Tribus [17], we can say that “A change of the type dK causes no change [in] uncertainty S, since the probabilities are unchanged. A change of the type dP is associated with a change in S”.

Moreover, the distribution of will represent the equivalent of the single-mode Planck’s law with a representation labeled with capacity instead of temperature.

When a communication system is subjected to noise, there is a direct impact on the mutual information defined as

where A and B are respectively the transmitter and receiver alphabet and the entropy represents the equivocation

where the conditional probability is the probability to detect b when a is sent and the joint probability refers to the pair (a, b). According to Abramson [16], “the mutual information is equal to the average number of bits necessary to specify an input symbol before receiving an output symbol less the average number of bits necessary to specify an input symbol after receiving an output symbol” and, if the channel is not noiseless, “the equivocation will not, in general, be zero, and each output symbol will contain only H(A) − H(A/B) bits of information”. In order to restore the missing bits we therefore need codes that allow us to correct errors due to noise that occurs in the channel, and in general these codes increase the redundancy of the communication and therefore increase the entropy.
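As an illustration of how noise reduces the mutual information, the following sketch evaluates H(A) − H(A/B) for a binary symmetric channel. The choice of channel, the crossover probability and the function names are assumptions made only for the example.

```python
import math

def entropy_bits(dist):
    """Shannon entropy in bits of a probability distribution."""
    return -sum(p * math.log2(p) for p in dist if p > 0)

def mutual_information(p_a, channel):
    """I(A;B) = H(A) - H(A/B) in bits.

    p_a[i] is P(a_i); channel[i][j] is P(b_j | a_i).
    """
    n_a, n_b = len(p_a), len(channel[0])
    joint = [[p_a[i] * channel[i][j] for j in range(n_b)] for i in range(n_a)]
    p_b = [sum(joint[i][j] for i in range(n_a)) for j in range(n_b)]
    equivocation = 0.0
    for i in range(n_a):
        for j in range(n_b):
            if joint[i][j] > 0:
                # P(a_i | b_j) = P(a_i, b_j) / P(b_j)
                equivocation -= joint[i][j] * math.log2(joint[i][j] / p_b[j])
    return entropy_bits(p_a) - equivocation

def bsc(eps):
    """Binary symmetric channel with crossover probability eps."""
    return [[1 - eps, eps], [eps, 1 - eps]]

i_noiseless = mutual_information([0.5, 0.5], bsc(0.0))
i_noisy = mutual_information([0.5, 0.5], bsc(0.1))
```

With equiprobable inputs the noiseless channel delivers one bit per symbol, while a 10% crossover probability leaves roughly 0.53 bits; the difference is the equivocation that a code has to recover.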

For example, one of the first codes to be proposed [16] consists in repeating the message several times. However, repeating the message n times means considering the nth extension of the source, which reduces the transmitted information rate by a factor of n. To avoid this, it can be shown that it is sufficient to use only M characters of the nth extension in order to keep the information rate R, defined as [16,18]

$$R = \frac{\log M}{n} \qquad (40)$$

high enough. Together with Abramson [17] we can state that, since the nth extension of a source with r input symbols has a total of $r^n$ input symbols, if we use only M of these symbols, we may decrease the probability of error: “The trick is to decrease the probability of error without requiring M to be so small that the message rate logM/n becomes too small. Shannon’s second theorem tells us that the probability of error can be made arbitrarily small as long as M is less than $2^{nC}$ and for this M the message rate becomes equal to the channel capacity $C$”.
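The trade-off between error probability and message rate can be made concrete with the simplest case, the n-fold repetition code decoded by majority vote. The sketch assumes a binary symmetric channel with a hypothetical crossover probability of 0.1 and uses M = 2 codewords (all zeros and all ones); these choices and the function names are illustrative.

```python
import math

def repetition_error(n, eps):
    """Majority-vote error probability of an n-fold repetition code (n odd)
    over a binary symmetric channel with crossover probability eps."""
    return sum(math.comb(n, k) * eps ** k * (1 - eps) ** (n - k)
               for k in range((n + 1) // 2, n + 1))

def message_rate(M, n):
    """Message rate log2(M) / n in bits per channel use."""
    return math.log2(M) / n

eps = 0.1
# Each row: (n, message rate, probability of a decoding error).
table = [(n, message_rate(2, n), repetition_error(n, eps)) for n in (1, 3, 5, 7)]
```

The error probability falls from 0.1 at n = 1 to 0.028 at n = 3 and keeps decreasing, but the rate drops as 1/n, which is precisely the loss that the more refined choice of M codewords is meant to avoid.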

So many codes have been invented that use the most varied mathematical algebraic properties and allow us to approach the limit of transmission capacity by paying a relatively modest price in terms of redundancy. A fairly recent review (2007) of these codes is reported in the fundamental paper by D.J. Costello and G.D. Forney [19] and more specifically for optical communications in a work by E. Agrell et al. [20]. In general, it is difficult to estimate the increase in redundancy in the case of error-correcting codes, given the very large variety of these. An average measure of this can be obtained by estimating the size of the overhead that must be inserted to reach the Shannon limit. For example, in 100 Gb/s coherent optical communication systems the overhead (or equivalently the required to restore error-free transmission can be of the order of 20%. What can certainly be stated is that the introduction of these error-correcting codes perturbs the dP term in the expression (37) and therefore increases the entropy (27) as well as (see Figure 3 from points 2 to 2′, where the input of noise is represented as “leaving the information”).

In summary, in phase II in the presence of noise (or “leaving of information”), the communication system presents an increase in entropy necessary to send the message from the transmitter to the receiver with the minimum of misunderstanding or with the maximum mutual information. In general, it is difficult to evaluate the increase in entropy and because it depends on the specific code used to reduce the error in the system.

What happens for the adiabatic phase of the cycle, i.e., phase III? In a thermodynamic framework we know that in this phase the temperature of the system decreases, entropy must be conserved, and heat exchange must be zero. As already underlined in the Conclusions of part I of this contribution, the interpretation of the “adiabatic” part for a communication system is quite complex. In fact, while in the isothermal cycle there is a reasonable parallel between the increase in entropy in the thermodynamic system and the parallel increase in entropy in the communication system, for the adiabatic part this similarity ceases. The decrease in temperature of the thermodynamic cycle translates into an equivalent increase in the Lagrange parameter which, according to the interpretation given in Equation (29), means relating to a communication system with greater capacity. Since we have assumed up to now that a transmission takes place in the presence of noise based on an alphabet A, we must now assume that at the receiver there is some device that takes care of translating the code from A to a richer alphabet A′ while maintaining the same entropy value. In the cycle of Figure 1 we would have thus arrived at point 1′. To continue the cycle and to be able to “close” it, it is necessary at this point that the receiver transforms itself into a transmitter and transmits the message back to the previous transmitter (that now becomes a receiver) through a channel that, differently from phase II, reduces the entropy through an appropriate injection of information. Having arrived at point 1 it is easy to hypothesize at this point that there is another transcoder that brings the description of the message back to the original alphabet A, maintaining the same entropy (phase I), and so closing the cycle.

Let us follow the above hypothesis. From the analytical point of view, the change of the alphabet dimension leads to a change of the extreme of the partition function Z and of all the derived quantities. Hence, if we call this new alphabet A’, the entropy conservation means

where is the average codeword length after the transmission and is the average code length after the transcoding. If we make the reasonable working assumption that the error correction code used has restored almost all of the channel capacity and that transcoding occurs at full channel capacity, we can assume that both and in (41) are at the limit of 1 so that (41) becomes

thus

As we expected, the new codewords will have a shorter average length because they refer to a larger alphabet. The effect of phase III on the codeword length is shown in Figure 3 (from point 2′ to 1′).
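The shortening can be illustrated with a minimal sketch. It assumes, in line with the working hypothesis stated above, that both codes operate at full efficiency, so that the conserved entropy equals the average codeword length times the base-2 logarithm of the alphabet size; the function name and the sample alphabet sizes are hypothetical.

```python
import math

def transcoded_length(mean_len, A, A_prime):
    """Average codeword length after transcoding from alphabet size A to A_prime.

    Assumes both codes are fully efficient, so that the entropy
    mean_len * log2(A) is conserved by the transcoding.
    """
    entropy_bits = mean_len * math.log2(A)
    return entropy_bits / math.log2(A_prime)

# Hypothetical example: 12 binary symbols recoded into a 16-character alphabet.
l_after = transcoded_length(12.0, 2, 16)
```

Here twelve binary symbols become three symbols of the richer alphabet, while the entropy carried by the codeword stays at twelve bits.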

We are now at point 1′ of the cycle and, in order to further reduce the average length, it is necessary to inject information into the system. As reported in [1], this can be performed by means of any “decision process” (see Ortega and Brown [21]) or more generally by means of any process that makes a selection, such as the selection processes that occur during the development of living beings (see, for example, B-O Kuppers [22]). Without repeating here what has already been demonstrated using the Kullback–Leibler distance in ref. [1], we may assume that the average codeword length decreases accordingly and, from point 1 with a further reverse transcoding, starting point 2 is reached and the loop is closed (see Figure 3). It is easy to observe analogies between Figure 2 and Figure 3: they show the same trend as regards the evolution of the “constant Lagrange parameter” phase and a similar trend (although symmetric) as regards the adiabatic zone. These analogies derive from the choice of representations in the two variables volume V and average codeword length $\langle l \rangle$, variables that come naturally when considering the practical realizations of the PSEs.

In summary, we have shown that, by using the variable codeword length , it is possible to trace a Carnot cycle even in information theory where the two isothermal phases are represented by a leaving and ingress of information while the two adiabatic phases are represented by transcoding operations. However, as already mentioned, the “volume” variable of the thermodynamic cycle and the “average codeword length” variable of the cycle in information theory emerge naturally when using PSEs to represent a classical Carnot cycle (where classical means the original one applied to heat engines) and an information theoretic cycle.

4. Conclusions

As we have seen in the previous two sections, PSEs are a powerful tool for analyzing the Carnot cycle and allow us to observe details that would not otherwise be visible. Although there is no direct parallel between the Carnot cycle in thermodynamics and information theory, there are some interesting analogies. Both frameworks can be represented with a diagram with coordinates of entropy/inverse of Lagrange parameter. From this diagram one can derive for the thermodynamic cycle the temperature/volume diagram and for the information theory cycle the capacity/average codeword length diagram.

In the thermodynamics framework, the area circumscribed by the cycle represents the work produced. It is in fact

As far as the information theory framework is concerned, the same quantity is

that at the limit yields

Since A′ is a larger alphabet than A, this means that a negative entropy is produced; thus, information is gained by the Carnot information cycle. In other words, the transcoding operations make the information injected in the presence of a larger alphabet weigh more than the redundancy accumulated in the presence of the smaller alphabet. Obviously, the cycle can also be run in the opposite direction, thus producing noise.

Figure 3 and the previous observations constitute the answer to the question we started from: when the Carnot cycle is developed in the framework of information theory (a framework very far from the classical mechanical–thermal one), the conservation of entropy that characterizes it translates into a possible management of information, which can be lost or gained. The analysis of this result and of its consequences for complex systems that use information deserves further investigation.

Funding

This research received no external funding.

Data Availability Statement

Data is contained within the article.

Acknowledgments

I dedicate this work to my nephew Ettore.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following symbols are used in this manuscript:

| Symbol | Meaning |
|---|---|
| A, B | transmitter and receiver alphabet |
| a, b | alphabet characters |
| A, A′ | dimension of the considered ensemble/alphabet |
| C | channel capacity |
| | specific heat at constant volume |
| | energy of the gas molecules |
| | code efficiency |
| | unique decodable efficiency |
| F | Helmholtz potential or free energy |
| | expectation value of |
| H | entropy of the source |
| | average codeword length |
| | Lagrange multiplier |
| k | Boltzmann constant |
| M | character ensemble |
| P | gas pressure |
| | probability of finding the system in the group index “i” |
| dQ | change of heat |
| S | entropy |
| T | Kelvin temperature |
| U | internal energy |
| V | gas volume |
| dW | change of work |
| Z | partition function |
| | quality of the distinguishable group |

References

1. Martinelli, M. Entropy, Carnot cycle, and Information theory. Entropy 2019, 21, 3.

2. Reiss, H. Thermodynamic-like transformations in information theory. J. Stat. Phys. 1969, 1, 107.

3. Jaynes, E.T. Note on Unique Decipherability. Trans. IRE Inf. Theory 1959, 5, 98–102.

4. Schrodinger, E. Statistical Thermodynamics; Dover Publications Inc.: Garden City, NY, USA, 1989.

5. Martinelli, M. Photons, bits and Entropy: From Planck to Shannon at the roots of the Information Age. Entropy 2017, 19, 341.

6. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

7. Jaynes, E.T. Information theory and Statistical Mechanics. Phys. Rev. 1957, 106, 620.

8. Mendoza, E. Reflections on the Motive Power of Fire; Dover Publications: Mineola, NY, USA, 1988.

9. Smith, C.W. William Thomson and the creation of thermodynamics: 1840–1855. Arch. Hist. Exact Sci. 1977, 16, 231.

10. Clausius, R. Über verschiedene für die Anwendung bequeme Formen der Hauptgleichungen der mechanischen Wärmetheorie. Ann. Phys. Chemie 1865, CXXV, 353.

11. Sagawa, T.; Ueda, M. Generalized Jarzynski equality under nonequilibrium feedback control. Phys. Rev. Lett. 2010, 104, 090602.

12. Hua, Y.; Guo, Z.-Y. Maximum power and the corresponding efficiency for a Carnot-like thermoelectric cycle based on fluctuation theorem. Phys. Rev. E 2024, 109, 024130.

13. Crooks, G.E. Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E 1999, 60, 2721.

14. Sundresh, T.S. Information complexity, information matching and system integration. In Proceedings of the 1997 IEEE International Conference on Systems, Man, and Cybernetics, Orlando, FL, USA, 12–15 October 1997.

15. Sundresh, T.S. Information flow and processing in anticipatory systems. In Proceedings of the 2000 IEEE International Conference on Systems, Man and Cybernetics, Nashville, TN, USA, 8–11 October 2000.

16. Abramson, N. Information Theory and Coding; McGraw-Hill: New York, NY, USA, 1963.

17. Tribus, M. Information Theory as the Basis for Thermostatics and Thermodynamics. J. Appl. Mech. 1961, 28, 1–8.

18. Adamek, J. Foundations of Coding; Wiley: Hoboken, NJ, USA, 1991.

19. Costello, D.J.; Forney, G.D. Channel coding: The road to channel capacity. Proc. IEEE 2007, 95, 1150.

20. Agrell, E.; Alvarado, A.; Kschischang, F.R. Implications of information theory in optical fibre communications. Phil. Trans. R. Soc. A 2016, 374, 20140438.

21. Ortega, P.A.; Braun, D.A. Thermodynamics as a theory of decision making with information-processing costs. Proc. R. Soc. A 2013, 469, 20120683.

22. Kuppers, B.-O. Information and the Origin of Life; The MIT Press: Cambridge, MA, USA, 1990.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).