Abstract

This paper introduces a two-phase decision support system based on information theory and financial practices to assist investors in solving cardinality-constrained portfolio optimization problems. Firstly, the approach employs a stock-picking procedure based on an interactive multi-criteria decision-making method (the so-called TODIM method). More precisely, the best-performing assets from the investable universe are identified using three financial criteria. The first criterion is based on mutual information, and it is employed to capture the microstructure of the stock market. The second one is the momentum, and the third is the upside-to-downside beta ratio. To calculate the preference weights used in the chosen multi-criteria decision-making procedure, two methods are compared, namely equal and entropy weighting. In the second stage, this work considers a portfolio optimization model where the objective function is a modified version of the Sharpe ratio, consistent with the choices of a rational agent even when faced with negative risk premiums. Additionally, the portfolio design incorporates a set of bound, budget, and cardinality constraints, together with a set of risk budgeting restrictions. To solve the resulting non-smooth programming problem with non-convex constraints, this paper proposes a variant of the distance-based parameter adaptation for success-history-based differential evolution with double crossover (DISH-XX) algorithm equipped with a hybrid constraint-handling approach. Numerical experiments on the US and European stock markets over the past ten years are conducted, and the results show that the flexibility of the proposed portfolio model allows better control of losses, particularly during market downturns, thereby providing superior or at least comparable ex post performance with respect to several benchmark investment strategies.

1. Introduction

The portfolio selection process typically involves two stages. The first phase comprises the selection of the most promising stocks to be included in the optimization, while the second concerns the optimal wealth allocation between the portfolio constituents.

Ranking and selecting stocks from an investment basket is a challenge that has been addressed in the literature in several ways, such as traditional stock-picking techniques based on factor models [1,2] or novel approaches based on machine learning techniques [3,4]. When the stock-picking process involves many different and conflicting financial criteria, it can fall into the realm of multi-criteria decision-making (MCDM) problems. This ensemble of methods remarkably supports the portfolio selection practice since it provides a comprehensive range of techniques that tackle issues related to the stock-picking phase. In recent times, various multi-criteria decision-making methods have been employed to rank superior securities and construct optimal portfolios, such as the preference ranking organization method for enrichment evaluation [5,6], the technique for order of preference by similarity to ideal solution [7,8], and the multi-criteria optimization and compromise solution [9], to mention some of the most often used. For a comparison of the performance of several multi-criteria decision-making techniques, we refer the reader to [10,11]. To account for the decision-makers’ irrational exuberance when making decisions in the presence of risk and uncertainty, ref. [12] initiated the TODIM (the Portuguese acronym for interactive multi-criteria decision-making) method. Specifically, this approach incorporates prospect theory’s principles [13] to define the value function that ranks between criteria alternatives, considering investors’ behavioral characteristics. Recently, the TODIM method has gained increasing interest in the literature. In [14], the authors applied this procedure to rank 462 equities from the constituents of the S&P500 Index by adopting nine financial criteria. 
They considered several portfolio cardinalities and constructed equally weighted or ranking-based weighted portfolios, finding that investments built through this technique yielded better results in terms of the Sharpe ratio. Ref. [15] extended this framework by embedding the TODIM method in a multi-objective portfolio selection based on the mean–variance paradigm to optimize the portfolio constituents, finding promising results. However, their model was tested only on a limited number of assets from the Chinese stock market.

Although still highly influential, Markowitz’s pioneering mean–variance framework [16] faces numerous practical challenges when applied in real-world scenarios. Moreover, the need to meet the demands of practitioners and institutional investors, who are increasingly engaged in the portfolio design process, has resulted in considerable research aimed at developing alternative optimization approaches. In particular, the so-called risk parity framework has become a mainstream asset allocation approach, gaining widespread relevance in both industry and academia [17,18]. This strategy allocates wealth in such a way that the risk contribution per asset to the portfolio risk is equalized, focusing on managing the different sources of risk involved in the investment process and introducing the idea of risk diversification. However, the existence and uniqueness of a solution to the risk parity portfolio problem is guaranteed only in some particular cases [19,20]. Furthermore, optimizing a portfolio to reach risk parity compliance neglects the performance dimension of an investment, which represents a necessity for most categories of investors. Consequently, some authors have proposed asset allocation problems with different performance objectives while imposing the parity condition as a portfolio constraint [21,22]. It is worth noting that risk parity is a special case of the more general risk budgeting approach, where assets are given a predetermined weight, called a budget, as a percentage of the total portfolio risk [23,24].

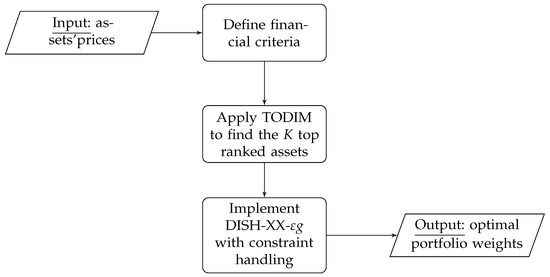

This paper presents a novel automated decision support system built upon two interconnected modules tailored to solving portfolio optimization models that maximize a financial performance measure subject to real-world constraints, particularly cardinality constraints. This knowledge-based structure is illustrated in Figure 1. The first module employs the TODIM procedure to rank stocks according to several financial criteria identified by the end user. With this step, we can bypass the explicit management of the cardinality constraint within the optimization process. In the second module, we determine the optimal portfolio weights using a version of the distance-based parameter adaptation for success-history-based differential evolution algorithm with double crossover (DISH-XX [25]). The synergy between these two modules can address the needs of various types of end users who wish to be directly involved in the design of the portfolio strategy. The system developed in this paper parallels the one proposed in [26], with the differences being (i) a more practical approach aimed at supporting practitioners, (ii) its application in a single- and not a multi-objective setting, and (iii) the consideration of information theory, and in particular entropy, in choosing the portfolio constituents.

Figure 1. Structure of the knowledge-based financial management system.

Furthermore, the capabilities of our automated financial management system are evaluated by examining two instances of a portfolio optimization problem, where the objective function to optimize is a modified version of the Sharpe ratio [27]. In this refined performance measure, when the portfolio excess return is negative, it is multiplied by the standard deviation instead of being divided by it. This version aligns with the risk–return preferences of a rational investor, even if the risk premiums are negative [28]. We consider the outlook of an institutional investor or a portfolio manager who seeks to operate in large equity markets by selecting a limited pool of stocks to form a portfolio with a suitable performance while maintaining risk diversification. To achieve this goal, we introduce the following real-world constraints. Firstly, a cardinality constraint limits the number of assets to a reasonable portfolio size. Then, a budget constraint ensures that we allocate all of the available capital, while box constraints prescribe lower and upper bounds on the fraction of capital invested in each asset. The resulting portfolio model is similar to the one analyzed in [29], where the authors empirically showed notable financial profitability across many ex post performance metrics. In the second instance, along with the previous restrictions, this work introduces the direct control of the portfolio risk in the optimization phase using a set of risk budgeting constraints. More precisely, a tolerance threshold allowing for minor upper and lower deviations from the parity is adopted, resulting in a formulation similar to the one developed in [30]. This approach relaxes the risk parity conditions and provides greater flexibility in determining the risk contributions of the portfolio constituents. The resulting models are mixed-integer optimization problems that belong to the family of cardinality-constrained portfolio optimization problems, which are NP-hard [31]. 
To tackle this class of optimization problems, a number of researchers in the fields of finance and computer science have turned to metaheuristics, given their demonstrated simplicity and effectiveness [32]. For instance, the beetle antennae search algorithm has attracted significant interest due to its computational efficiency and global convergence properties, rendering it suitable for solving portfolio optimization problems subject to real-world constraints such as transaction costs and cardinality limits [33]. Furthermore, the integration of artificial neural dynamics into portfolio optimization models has demonstrated substantial enhancements in computational efficiency and solution accuracy when compared to traditional methodologies [34,35]. The present work falls within this line of research, focusing on the differential evolution algorithm (DE [36]), a metaheuristic extensively used to solve single- and multi-objective asset allocation problems [37,38]. Specifically, it considers a recently developed augmented version of DE, called distance-based parameter adaptation for success-history-based differential evolution with double crossover (DISH-XX), which has shown very competitive results based on several benchmark functions from real-world engineering problems [25]. To the best of our knowledge, this paper is the first to apply this algorithm to portfolio optimization. Section 2.1 provides a detailed description of the progression from DE to DISH-XX. Since the original version of this solver is blind to non-bound constraints, it is equipped with an ensemble of constraint-handling techniques. Specifically, a repair mechanism is used to manage box and budget constraints, as in [39]. 
Subsequently, for risk budgeting constraints, the proposed constraint-handling approach accelerates the convergence process towards the feasible region by applying a gradient-based mutation [40] and uses the ε-constrained method [41] to transform the constrained optimization problem into an equivalent unconstrained one.

Note that selecting the alternative criteria for the preliminary stock-picking phase and establishing their relative preferences constitutes a crucial phase for the TODIM method. Specifically, this work considers a complementary set of three financial criteria, each providing a unique perspective. Firstly, it adopts a peripherality measure for the stocks based on mutual information, similar to the approach discussed in [42]. The mutual information dimension focuses on the microstructure of the stock market to capture the full spectrum of assets’ dependencies. Recently, many scholars have investigated the capabilities of mutual information and entropy as investors’ tools to make choices under uncertain conditions. Section 2.2 provides an overview of the most recent literature contributions in this field. Then, the second and third criteria are the momentum based on the most recent monthly returns and the upside-to-downside beta ratio, respectively. The former highlights the ability of stocks to generate value over time, and the latter assesses the responsiveness of a stock concerning market upswings and downswings. Furthermore, to calculate the preference weights associated with the three aforementioned criteria, this work exploits two approaches. The first consists of a static method where the three criteria have the same importance, without introducing any relative preference. Next, the second technique uses the joint entropic information carried by criteria and evaluates their contributions, dynamically adjusting the relative preferences during the investment period.

The main contributions of this paper are as follows.

- We develop a knowledge-based financial management system to solve cardinality-constrained portfolio optimization problems. This expert system is built upon two interconnected modules. On the one hand, a multi-criteria decision analysis technique called TODIM handles the cardinality constraint. On the other hand, the DISH-XX algorithm is extended with an ensemble of constraint-handling techniques and a gradient-based mutation.

- This study introduces two portfolio selection models where the objective function to maximize is a modified version of the Sharpe ratio under some real-world constraints. The first instance considers cardinality, box, and budget constraints. The second one introduces a set of risk budgeting constraints to provide explicit control of risk.

- When running the TODIM procedure for the preliminary ranking, we use three complementary financial criteria, namely the peripherality measure based on mutual information, the momentum measure, and the upside-to-downside beta ratio.

- To set up the relative preference weights of the three criteria, an equally weighted method and an entropy-based method are adopted.

- An extensive experimental analysis is conducted considering the two most significant indices of the American and European stock markets, namely the S&P 500 and the STOXX Europe 600.

- The empirical part validates the profitability of our investment strategy considering several ex post performance metrics and compares the two portfolio models described above against some alternatives that pre-select the stocks using the criteria individually, as well as the market benchmark.

The remainder of this paper is structured as follows. Section 2 presents some related works regarding the differential evolution algorithm and the use of information theory for portfolio optimization. Section 3 illustrates the two instances of the portfolio model. Section 4 and Section 5 describe the two modules that compose the developed decision support system. Section 6 presents the experimental analysis, discussing the data and investment setup and showing the ex post performance results. Finally, Section 7 concludes the paper, summarizing the main findings, illustrating potential research limitations, and suggesting some future research directions.

2. Related Works

2.1. From DE to DISH-XX

The differential evolution algorithm works according to the following steps. The algorithm starts by randomly sampling an initial population of candidate solutions. Then, it iteratively produces new trial vectors through mutation and crossover phases. If a new individual outperforms the original one, it survives and progresses to the next generation. This iterative process continues until it satisfies some stopping conditions, and the algorithm returns the best-found solution to the optimization problem. The original algorithm, developed in [36], includes three user-defined control parameters: the population size $NP$, the scaling factor $F$, and the crossover rate $CR$. Over recent decades, scholars have proposed several enhanced versions of this algorithm. These advancements typically involve using refined mutation schemes, introducing external archives to store the most promising solutions, and adaptively determining the parameters $NP$, $F$, and $CR$. For a comprehensive survey of the latest advancements in the field of differential evolution-based algorithms, see [43]. In [44], the authors introduced an influential variant that uses a control parameter adaptation strategy. Specifically, this version samples the parameters $F$ and $CR$ from a probability distribution and stores successful values in an external archive. An improved version of the latter, called success-history-based differential evolution, was proposed in [45]. Instead of sampling $F$ and $CR$ from gradually adapted probability distributions, the authors proposed to use historical archives ($M_F$ and $M_{CR}$) to store effective parameter values from recent generations. The algorithm then generates new $F$ and $CR$ parameters by sampling near these stored pairs. Next, the same authors introduced a linearly decreasing function that adaptively reduces the population size over the generations [46]. 
In [47], the authors proposed an update to the scaling factor and a crossover rate adaptation that exploits information from the Euclidean distance between the trial and the original individual. They called this new algorithm distance-based success history differential evolution (DISH), proving its superior performance over several versions of DE. The DISH-XX algorithm [25] used as a solver in this paper is a refined version of DISH that introduces a secondary crossover between the trial vector and one of the historically best-found solutions randomly selected from the archive.
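The basic loop described at the start of this subsection (random initialization, mutation, crossover, and greedy selection) can be sketched as follows. This is a minimal illustration of the classic DE/rand/1/bin scheme only, not of DISH-XX; the function name `differential_evolution`, the default parameter values, the fixed generation budget, and the clipping rule for bound violations are assumptions made for the example.

```python
import random

def differential_evolution(f, bounds, pop_size=20, F=0.5, CR=0.9,
                           generations=200, seed=0):
    """Minimize f over a box with the classic DE/rand/1/bin scheme."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial population sampled uniformly inside the box.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        next_pop, next_fit = list(pop), list(fit)
        for i in range(pop_size):
            # Mutation: base vector plus a scaled difference of two others.
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                      for d in range(dim)]
            # Binomial crossover; index j_rand always comes from the mutant.
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (d == j_rand or rng.random() < CR)
                     else pop[i][d] for d in range(dim)]
            # Clip to the box (an assumed, simple way to handle bounds).
            trial = [min(max(v, lo), hi) for v, (lo, hi) in zip(trial, bounds)]
            # Selection: the trial survives only if it is not worse.
            f_trial = f(trial)
            if f_trial <= fit[i]:
                next_pop[i], next_fit[i] = trial, f_trial
        pop, fit = next_pop, next_fit
    best = min(range(pop_size), key=lambda i: fit[i])
    return pop[best], fit[best]
```

The adaptive variants surveyed above replace the fixed `F`, `CR`, and `pop_size` arguments with values that change during the run.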

2.2. Information Theory in Portfolio Optimization

Metrics from information theory, especially entropy, have led to significant literature contributions in portfolio theory. For instance, several authors have used entropy as a proxy for portfolio risk, starting with the seminal paper [48]. To mention some recent works, ref. [49] proposed a return–entropy portfolio model and compared it with the classical Markowitz strategy. The work in [50] prescribes a setup for portfolio optimization where entropy and mutual information are used instead of variance and covariance as risk measurements. In this approach, the mutual information measures the statistical dependence between two random variables, and it is used as a more general approach to capture nonlinear relationships [51]. Along with using entropy as a risk measure, several contributions have used this metric to quantify portfolio diversification. In [52], the authors proposed a model that aims to maximize the entropy of the portfolio weight vector, extending the classical Markowitz framework by adding a control on diversification. In [53], this approach was broadened by suggesting a mean–variance–skewness–entropy multi-objective optimization model. More recently, in [54], the authors managed the mean, variance, and entropy objectives using a self-adapting parameter that adjusts to the market conditions.

In recent times, researchers in finance have considered markets as networks in which stocks correspond to nodes and the links are related to the correlations of returns. In [55], the authors used network theory to select stocks from the peripheral regions of the financial filtered networks, finding that they performed better than stocks belonging to the networks’ central zones. The work in [56] bridges the gap between the mean–variance and network theories. In particular, a negative relationship between optimal portfolio weights and the centrality of assets in the financial market network has been evidenced. In [57], the authors tested various dependence measures, such as the Pearson and Kendall correlations and lower tail dependence, to construct interconnected graphs and build optimal mean–variance portfolios. Moreover, a trend has emerged in the literature where, instead of using canonical correlation measures, researchers employ mutual information to capture nonlinear dependencies among stocks and describe the microstructure of the financial market. The foundational work in this area is the paper [58], where the authors constructed minimum spanning trees based on the mutual information between stocks in the Chinese stock market. By applying this methodology and combining it with the approach suggested in [55], some authors have considered a measure of asset centrality based on mutual information and have proposed various stock-picking techniques for portfolio construction [42]. This paper follows the latter approach to establish one of the three criteria employed within the multi-criteria decision-making module.
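As a rough illustration of how mutual information can quantify the dependence between two return series, the sketch below computes a plug-in estimate from equal-width histograms. The estimator, the function name `mutual_information`, and the default number of bins are assumptions made for illustration; the works cited above may rely on different estimators.

```python
import math
from collections import Counter

def mutual_information(x, y, bins=4):
    """Plug-in estimate of I(X;Y) in nats from equal-width histograms."""
    def discretize(values):
        lo, hi = min(values), max(values)
        width = (hi - lo) / bins or 1.0  # avoid zero width for constant series
        return [min(int((v - lo) / width), bins - 1) for v in values]

    bx, by = discretize(x), discretize(y)
    n = len(x)
    joint = Counter(zip(bx, by))
    px, py = Counter(bx), Counter(by)
    # Sum of p(a,b) * log(p(a,b) / (p(a) p(b))) over observed cells.
    return sum((c / n) * math.log(c * n / (px[a] * py[b]))
               for (a, b), c in joint.items())
```

The estimate is zero when the binned series are empirically independent and equals the entropy of the binned series when one series determines the other.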

3. Portfolio Models

3.1. Investment Strategy Setup

This paper considers a frictionless market that does not allow for short selling, and all investors act as price takers. The investable universe consists of $n$ risky assets, and a portfolio is denoted by the vector of weights $x = (x_1, \dots, x_n)^\top$, where $x_i$ represents the proportion of capital invested in asset $i$, with $i = 1, \dots, n$. $R_i$ indicates the random variable representing the rate of return of asset $i$, and $\mu_i$ is its expected value. Hence, the random variable $R_p(x) = \sum_{i=1}^{n} x_i R_i$ expresses the portfolio rate of return, while the expected rate of return of portfolio $x$ is defined as

$$\mu_p(x) = \sum_{i=1}^{n} x_i \mu_i \tag{1}$$

and its volatility is given by

$$\sigma_p(x) = \sqrt{\sum_{i=1}^{n} \sum_{j=1}^{n} x_i x_j \sigma_{ij}} \tag{2}$$

where $\sigma_{ij}$ is the covariance between assets $i$ and $j$, with $i, j = 1, \dots, n$, and the covariance matrix $\Sigma = (\sigma_{ij})$ is assumed to be positive definite. Since investors perceive large deviations from the portfolio mean value as damaging, Equation (2) represents the so-called portfolio risk.

Given this framework, a portfolio that provides the maximum return for a given level of risk or, equivalently, has the minimum risk for a given level of return is called efficient. This decision-making approach is widely known as mean–variance analysis, and the set of optimal mean–variance trade-offs in the risk–return space forms the efficient frontier [16]. In this setting, the so-called Sharpe ratio identifies the best investment among efficient portfolios. This performance measure is defined as

$$SR(x) = \frac{\mu_p(x) - r_f}{\sigma_p(x)} \tag{3}$$

and expresses the net compensation, with respect to a risk-free rate $r_f$, earned by the investor per unit of risk. However, the reliability of this performance measure decreases when the portfolio excess return is negative, since (in some cases) an investor would select a higher-risk portfolio using the Sharpe ratio. To overcome this issue, the proposed portfolio selection model considers the so-called modified Sharpe ratio [28], defined as

$$MSR(x) = \frac{\mu_p(x) - r_f}{\sigma_p(x)^{\operatorname{sign}(\mu_p(x) - r_f)}} \tag{4}$$

where $\operatorname{sign}(\mu_p(x) - r_f)$ is the sign function of $\mu_p(x) - r_f$. Observe that, if the portfolio excess return is non-negative, the modified Sharpe ratio is equal to the Sharpe ratio. Otherwise, it multiplies the portfolio excess return by the standard deviation. In this manner, even in periods of market downturn, portfolios with lower risk and a higher excess return will be preferred.
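To make the difference between the two performance measures concrete, the following sketch evaluates both on a given weight vector. It follows the verbal description above (divide the excess return by the volatility when it is non-negative, multiply otherwise); the function names and the plain-list representation of the expected returns and covariance matrix are assumptions made for the example.

```python
import math

def portfolio_stats(weights, mu, cov):
    """Expected return and volatility of a portfolio."""
    n = len(weights)
    ret = sum(w * m for w, m in zip(weights, mu))
    var = sum(weights[i] * weights[j] * cov[i][j]
              for i in range(n) for j in range(n))
    return ret, math.sqrt(var)

def sharpe_ratio(weights, mu, cov, rf=0.0):
    ret, vol = portfolio_stats(weights, mu, cov)
    return (ret - rf) / vol

def modified_sharpe_ratio(weights, mu, cov, rf=0.0):
    ret, vol = portfolio_stats(weights, mu, cov)
    excess = ret - rf
    # Non-negative excess return: identical to the Sharpe ratio.
    # Negative excess return: multiply by volatility, penalizing risk.
    return excess / vol if excess >= 0 else excess * vol
```

For two portfolios with the same negative excess return, the plain ratio scores the more volatile one higher, whereas the modified ratio scores the less volatile one higher, which is the behavior motivating the modification.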

3.2. First Proposed Model

This paper considers a portfolio model that is similar to the one inspected in [29]. The aim is to maximize the modified Sharpe ratio illustrated in Equation (4) subject to the following real-world constraints.

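- Budget. Since all available capital needs to be invested at each investment window, the following holds:

  $$\sum_{i=1}^{n} x_i = 1. \tag{5}$$

- Cardinality. The portfolio includes exactly $K$ assets, where $K \le n$. To model the inclusion or exclusion of the $i$th asset in the portfolio, a binary variable $\delta_i$ is introduced as

  $$\delta_i = \begin{cases} 1 & \text{if asset } i \text{ is included in the portfolio} \\ 0 & \text{otherwise} \end{cases}$$

  for $i = 1, \dots, n$, where $\delta = (\delta_1, \dots, \delta_n) \in \{0, 1\}^n$, and the cardinality constraint can be written as

  $$\sum_{i=1}^{n} \delta_i = K. \tag{6}$$

  Then, $\{x_i : \delta_i = 1\}$ denotes the set of active portfolio weights, which contains exactly $K$ elements.

- Box. A balanced portfolio should avoid extreme positions and foster diversification. Hence, maximum and minimum limits for portfolio weights are imposed, expressed by

  $$\delta_i l_i \le x_i \le \delta_i u_i, \quad i = 1, \dots, n, \tag{7}$$

  where $l_i$ and $u_i$ are the lower and upper bounds for the weight of the $i$th asset, respectively, with $l_i \ge 0$ to exclude short sales.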

The result is a mixed-integer optimization model that requires some ad hoc techniques to be practically handled. In this paper, instead of directly handling the cardinality constraint in the optimization process, as in [29,31], the TODIM procedure described in Section 4 is used to perform preliminary stock selection and bypass the cardinality issue. Then, a metaheuristic is employed to search for optimal solutions for the reduced portfolio allocation problem.
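The three constraints listed above can be checked on a candidate weight vector with a few lines of code. The sketch below is only a feasibility test, not the repair mechanism used in the paper; the function name `is_feasible`, the numerical tolerance, and the convention that an asset is active when its weight exceeds that tolerance are assumptions made for the example.

```python
def is_feasible(weights, K, lower, upper, tol=1e-9):
    """Check budget, cardinality, and box constraints for a weight vector."""
    # Assumed convention: an asset is active if its weight exceeds tol.
    active = [i for i, w in enumerate(weights) if w > tol]
    budget_ok = abs(sum(weights) - 1.0) <= tol
    cardinality_ok = len(active) == K
    # Box bounds apply to active assets only; inactive ones must be zero.
    box_ok = all(lower[i] - tol <= weights[i] <= upper[i] + tol
                 for i in active)
    no_short = all(w >= -tol for w in weights)
    return budget_ok and cardinality_ok and box_ok and no_short
```

For instance, with four assets, `K = 3`, and bounds of 0.05 and 0.6 on every asset, the vector `[0.5, 0.3, 0.2, 0.0]` passes the check, while `[0.7, 0.2, 0.1, 0.0]` violates the upper bound.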

3.3. Risk Budgeting Approach

To control the degree of risk-based diversification between the portfolio constituents, the risk budgeting portfolio [23] is introduced. This approach allocates the risk according to the profile described by the vector $b = (b_1, \dots, b_n)$, with $b_i \ge 0$, $i = 1, \dots, n$, and $\sum_{i=1}^{n} b_i = 1$, such that

$$RC_i(x) = b_i \, \sigma_p(x), \quad i = 1, \dots, n, \tag{8}$$

where $RC_i(x)$ denotes the risk contribution of the $i$-th stock to the portfolio risk.

Notice that the risk budgeting approach represents a relaxation of the more restrictive risk parity conditions, since the risk parity portfolio occurs when $b_i = 1/n$ for all $i$. Appendix A.1 illustrates in detail the basics of the risk parity framework.
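A short numerical sketch may help to fix ideas about risk contributions. It assumes the usual Euler decomposition of volatility, in which the contribution of an asset is its weight times its marginal risk, so that the contributions add up to the portfolio volatility; the function name `risk_contributions` and the plain-list inputs are assumptions made for the example.

```python
import math

def risk_contributions(weights, cov):
    """Return per-asset risk contributions and the portfolio volatility.

    Assumes the Euler decomposition: RC_i = w_i * (cov @ w)_i / volatility.
    """
    n = len(weights)
    marginal = [sum(cov[i][j] * weights[j] for j in range(n))
                for i in range(n)]
    vol = math.sqrt(sum(weights[i] * marginal[i] for i in range(n)))
    return [weights[i] * marginal[i] / vol for i in range(n)], vol
```

With two uncorrelated assets whose volatilities are 0.1 and 0.2, weights proportional to the inverse volatilities, that is `[2/3, 1/3]`, give equal contributions, which is the risk parity case.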

3.4. Proposed Risk Budgeting Formulation for the Second Portfolio Model

Inspired by the risk budgeting setup, this paper designs an investment strategy in which the deviations from risk parity are fixed by the investor’s risk profile according to the following set of inequalities:

where . Note that, for , Equation (9) reduces to the risk parity condition, while increasing values of these parameters introduce portfolios with increasing deviations of the risk contributions from the parity condition and thus greater risk concentrations. It can be proven that any optimization problem that involves the use of Equation (9) is non-convex. More details regarding the non-convexity of the risk budgeting formulation are given in Appendix A.2.

Summing up, the second instance of the portfolio model that optimizes the MSR measure of Equation (4) considers budget, bound, and cardinality constraints as specified in Equations (5)–(7), while introducing the direct control of the portfolio risk according to the set of constraints outlined in Equation (9).

4. Multi-Criteria Decision Analysis Module

To address the cardinality constraint (6) and develop a portfolio model involving only real variables, the proposed approach selects assets with higher rankings based on a set of criteria, using the TODIM method.

4.1. TODIM Generalities

The TODIM method facilitates decision-making by evaluating the importance of each criterion according to the subjective preferences of each investor. It consists of the following sequential steps.

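- Constructing the multi-criteria decision-making matrix between criteria and alternatives. Given $m$ alternatives $A_1, \dots, A_m$ and $s$ criteria $C_1, \dots, C_s$, the decision matrix is expressed as

  $$A = (a_{ij})_{m \times s} = \begin{pmatrix} a_{11} & \cdots & a_{1s} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{ms} \end{pmatrix} \tag{10}$$

  where $a_{ij}$ is the performance evaluation of $A_i$ under criterion $C_j$.

- Determining the criteria weights. In this step, the criteria weighting vector $\omega = (\omega_1, \dots, \omega_s)$, which satisfies $\omega_j \ge 0$ and $\sum_{j=1}^{s} \omega_j = 1$, needs to be determined. This vector defines the relative preference degree of the procedure toward the $s$ criteria. Two weighting schemes are analyzed in this paper. The first assigns the same weight to each criterion to avoid any prior preference for a specific criterion in the TODIM structure. The second one utilizes the entropy weight method [59]. The contribution of the alternative $A_i$ to the criterion $C_j$ is calculated as

  $$p_{ij} = \frac{a_{ij}}{\sum_{i=1}^{m} a_{ij}}.$$

  Next, the entropy value for the $j$th criterion is given by

  $$E_j = -\frac{1}{\ln m} \sum_{i=1}^{m} p_{ij} \ln p_{ij},$$

  where $E_j$ denotes the total contribution of all alternatives to criterion $C_j$. If $p_{ij} = 0$, it follows that $p_{ij} \ln p_{ij} = 0$. After obtaining the entropy values, the entropy weight is

  $$\omega_j = \frac{1 - E_j}{\sum_{k=1}^{s} (1 - E_k)}.$$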

- Binning and normalizing criteria matrix. The third step transforms the raw criteria matrix $A$ into a matrix of binned scores by assigning each element to one of 10 bins. Specifically, if a criterion is considered a benefit, a value of 10 is assigned to the alternatives in the top 10% for that criterion. Conversely, if the criterion is a cost, a value of 10 is assigned to the alternatives in the bottom 10%. Then, to make the scores comparable, a normalization procedure is used to obtain the normalized values.

- Computing alternative comparisons. Through the normalized scores, the alternatives can be compared based on their overall scores across the criteria. For criterion , the criteria score of alternative against alternative is defined as in [60]where is the objective weight of criterion ; are the two risk parameters of the value function in the domain of gains and losses; and is the loss aversion coefficient in the loss domain.After calculating the dominance degree with respect to criterion between any two alternatives and using Equation (13), the final comparison score concerning each criterion is

- Determining the final ranking between alternatives. In the last step, the rank of each alternative is obtained asThe procedure then concludes with the normalization of the final ranks. These range between 0 and 1, with the most preferred alternative having a value of 1 and the least preferred having a value of 0.
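The entropy weighting step of the procedure above can be sketched in a few lines. The code assumes the common form of the entropy weight method, in which the entropy of each criterion is normalized by the logarithm of the number of alternatives and the weights are proportional to one minus the entropy; it also assumes non-negative scores with a positive sum per criterion. The function name `entropy_weights` and the fallback to equal weights when no criterion discriminates between alternatives are choices made for the example.

```python
import math

def entropy_weights(matrix):
    """Entropy weights for a decision matrix.

    matrix[i][j] is the (assumed non-negative) score of alternative i
    under criterion j; each column is assumed to have a positive sum.
    """
    m, s = len(matrix), len(matrix[0])
    divergences = []
    for j in range(s):
        column = [row[j] for row in matrix]
        total = sum(column)
        probs = [a / total for a in column]
        # Terms with p = 0 contribute nothing, by the usual convention.
        entropy = -sum(p * math.log(p) for p in probs if p > 0) / math.log(m)
        divergences.append(max(0.0, 1.0 - entropy))
    norm = sum(divergences)
    if norm <= 1e-12:
        # Assumed fallback: no criterion discriminates, use equal weights.
        return [1.0 / s] * s
    return [d / norm for d in divergences]
```

A criterion on which all alternatives score the same has maximal entropy and receives a weight close to zero, so the weights concentrate on the criteria that actually separate the alternatives.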

4.2. Application of TODIM to Investable Universe

The previously described multi-criteria decision-making method is applied to the assets that are part of the investable universe, representing the alternatives , using three criteria based on the financial performance of stocks, which will be described in the experimental section. Given this procedure, the cardinality constraint can be tackled by picking the K assets with higher rankings, and, with this procedure, we can express the portfolio optimization problem without the auxiliary vector . To avoid any confusion, the notation of this paper uses a vector of K components instead of to express weights, and we write the two inspected models as follows:

and, for the risk budgeting model,

where for and for , with being the covariance matrix of the K assets selected by TODIM, , and denotes the jth column of the identity matrix.

5. Optimization Module

This section introduces the developed version of the DISH-XX algorithm specifically designed to solve Problems (16) and (17).

5.1. DISH-XX Algorithm

The following steps outline the core components of the DISH-XX algorithm as presented in [25] for the problem of optimizing a generic function .

- Initialization. At iteration , the algorithm commences with the initialization of a random population consisting of solutions. During this step, additional parameters are configured: the final population size (), the maximum number of objective function evaluations (), and two parameters utilized in the mutation operator ( and ). Moreover, two external archives are introduced: the first, denoted as A, stores solutions that have been improved by the corresponding trial vectors; the second, , contains the most promising solutions. Based on the prescriptions given in [25], two historical memory arrays of size H, and , are defined component-wise asandwhich will be used to define the values for the scaling factor F and the crossover rate .

- Mutation. For each generation , the mutation operator used in DISH-XX is the current-to--w/1 strategy. Let be the ratio between the current number of objective function evaluations and . The mutation vector for each individual p is then generated as follows:where is one of the best solutions in the archive , with ; is randomly selected from the current population and from . It is worth noting that . The scaling factor is generated from a Cauchy distribution with location parameter randomly selected from the historical memory array and a scale parameter value of . If the generated value is non-positive, it is drawn again, and, if it is greater than 1, it is set to 1. In addition, to bound its value in the exploration phase, we set whenever and . The weighted scaling factor depends on and as follows:This mutation strategy combines a greedy approach in the first difference and an exploratory factor in the second difference.

- Double Crossover. The DISH-XX algorithm employs a double crossover mechanism. The first crossover is the standard binomial crossover as in [36], which combines the mutation vector with the target vector to produce a temporary trial vector . This process is based on the crossover rate value , which is randomly generated using a normal distribution with a mean value , randomly selected from the memory array , and a standard deviation value of . The value is then bounded between 0 and 1, with values outside this range truncated to the nearest bound. Similarly to the scaling factor, the crossover rate depends on as follows: The second crossover involves the archive of historically best-found solutions , enhancing the diversity and exploration capabilities of the algorithm. Using the same value of the first crossover, the trial vector is generated component-wise as follows: where is a uniformly distributed random number, is a randomly chosen index in , and is a solution randomly selected from .

- Selection. The selection process in DISH-XX is based on the comparison of the trial vector and the target vector . The objective function values of both vectors are evaluated, and the one with the better fitness value is selected for the next generation. This ensures that the population evolves toward better solutions over time.

- Adaptation of Control Parameters. DISH-XX incorporates adaptive mechanisms for control parameters, such as the scaling factor and the crossover rate. These parameters are adjusted based on the success history of previous generations, allowing the algorithm to dynamically adapt to the problem landscape and enhance its performance. After each generation, one cell in both memory arrays is updated. DISH-XX uses an index k to track which cell will be updated. The index is initialized to 1, so, after the first generation, the first memory cell is updated. The index is incremented by one after each update, and, when it exceeds the value of H, it resets to 1. There is one exception to this update process: the last cell in both arrays is never updated and retains a value of 0.9 for both control parameters. Let and be arrays storing successful and , respectively. A pair is considered successful if it generates a trial vector that outperforms the target vector . The size of and is a random number between 0 (indicating that no trial vector is better than the target) and (indicating that all trial vectors are better than their targets). Consequently, the value stored in the kth cell of the memory arrays after a given generation is and , where is the weighted Lehmer mean of the corresponding control parameter and and is defined as for . The weights are computed from the Euclidean distance between the trial vector and the individual . This weighting scheme encourages exploitation while aiming to prevent the premature convergence of the algorithm to local optima.

- Decrease in the Population Size. The population size is dynamically reduced during the execution of the algorithm to allocate more time for exploitation in the later stages of optimization. Specifically, at the end of each generation, the population size is updated using the following formula:

- Population and Archive Management. The archive of historically best-found solutions is maintained throughout the optimization process. The archive is periodically updated with the best solutions available, ensuring that it remains relevant and effective. The population and the archive A adjust their sizes in response to changes in (25) by removing the worst-ranking individuals.

- Termination. The algorithm iterates through the above steps until a termination criterion is met. Common termination criteria include reaching a maximum number of generations, achieving a satisfactory fitness level, or observing no significant improvement over a predefined number of iterations.
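The success-history update and the population size reduction described in the steps above can be illustrated with a minimal Python sketch. It is not the reference implementation of [25]: the function names are hypothetical, the memory update rule (overwrite the cell with the weighted Lehmer mean, skip the last cell, leave everything unchanged when no trial succeeds) is an assumption, and the linear reduction rule is the one commonly used in success-history-based differential evolution, assumed here to correspond to Equation (25).

```python
import numpy as np

def distance_weights(trials, targets):
    """Normalized Euclidean distances between successful trials and their targets."""
    diff = np.asarray(trials, dtype=float) - np.asarray(targets, dtype=float)
    dist = np.linalg.norm(diff, axis=1)
    return dist / dist.sum()

def weighted_lehmer_mean(values, weights):
    """Weighted Lehmer mean: sum(w * s^2) / sum(w * s)."""
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return float(np.sum(weights * values**2) / np.sum(weights * values))

def update_memory(memory, k, successes, weights):
    """Write cell k (0-based) and return the next index to update.

    Assumptions: nothing changes when no trial succeeded, and the last
    cell is skipped so that it keeps its fixed value.
    """
    if len(successes) == 0:
        return k
    if k != len(memory) - 1:
        memory[k] = weighted_lehmer_mean(successes, weights)
    return (k + 1) % len(memory)

def reduced_population_size(np_init, np_final, fes, max_fes):
    """Linear population size reduction driven by the used evaluation budget (assumed rule)."""
    return int(round(np_init - (fes / max_fes) * (np_init - np_final)))
```

In this sketch, larger moves in the search space receive larger weights, so the memory cells are pulled toward the control parameter values that produced the most distant successful trials.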

5.2. Dealing with Budget and Box Constraints

In the construction phase of portfolio models, admissible solutions have to satisfy budget and buy-in threshold constraints. However, DISH-XX is blind to these constraints. To overcome this issue, the algorithm is equipped with a hybrid constraint-handling procedure. At first, to guarantee feasibility with respect to the bound constraints (7), this study introduces the following random combination [61]:

where and . Then, it uses the repair transformations developed in [39] to also satisfy the budget constraint (5). The assumptions needed to apply this method are the following:

- ;

- ;

- .

Then, for each , the candidate solution is adjusted component-wise

for all . As proven in [39], solutions transformed through Equation (27) fulfill, at the same time, budget and box constraints.
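To give an intuition of how a repair operator can enforce budget and box constraints at the same time, the following Python sketch uses a simple proportional-slack rule. It is a stand-in chosen for illustration and is not the transformation of [39] or Equation (27): the function name `repair_budget_box` is hypothetical, the bounds are first enforced by clipping rather than by the random combination of [61], and the sketch assumes that the lower bounds sum to at most 1 and the upper bounds to at least 1.

```python
import numpy as np

def repair_budget_box(x, lower, upper):
    """Return weights that sum to 1 and respect lower <= x <= upper.

    Illustrative rule (not the one in [39]): clip to the box, then spread the
    budget gap over the assets in proportion to their remaining slack.
    """
    x = np.asarray(x, dtype=float)
    lower = np.broadcast_to(np.asarray(lower, dtype=float), x.shape)
    upper = np.broadcast_to(np.asarray(upper, dtype=float), x.shape)
    if not (lower.sum() <= 1.0 <= upper.sum()):
        raise ValueError("bounds are incompatible with the budget constraint")
    x = np.clip(x, lower, upper)
    gap = 1.0 - x.sum()
    # Slack toward the upper bounds if weight must be added, toward the lower ones otherwise.
    room = upper - x if gap > 0 else x - lower
    if room.sum() > 0:
        x = x + gap * room / room.sum()
    return x
```

Because the gap never exceeds the total slack under the stated assumption, a single proportional step keeps every component inside its bounds while restoring the budget.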

5.3. Dealing with Risk Budgeting Constraints

Dealing with the risk budgeting constraints in Problem (17) requires the definition of a proper constraint violation function. Given a candidate solution , this quantity is defined as

where represents the constraint violation for the jth inequality constraint, with .

Then, the ε-constrained method proposed in [41] transforms the constrained optimization model into an unconstrained one. More specifically, let be two candidate solutions with objective function values and constraint violations and , respectively. Then, the ε-comparison of the two solutions is defined as

and

It is worth noting that, if both compared solutions are feasible or slightly infeasible (as determined by the value of ε in the first parts of Equations (29) and (30)), or if they have the same sum of constraint violations, they are compared using the values of the objective function. Conversely, if at least one solution violates the constraints beyond the ε-level, they are compared using the sum of their constraint violations. Note that, if ε = ∞, the ε-level comparison uses only the objective function values as the comparison criterion. If ε = 0, then the ε-level comparison is equivalent to a lexicographic ordering in which the minimization of the sum of the constraint violations precedes the minimization of the objective function.
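The comparison rule described above can be sketched in a few lines of Python. The sketch assumes a minimization problem (for a ratio to be maximized, one would pass its negative) and inequality constraints written in the form g_j(x) ≤ 0; the function names are hypothetical.

```python
import numpy as np

def constraint_violation(g_values):
    """Sum of the violations of inequality constraints written as g_j(x) <= 0."""
    return float(np.sum(np.maximum(0.0, np.asarray(g_values, dtype=float))))

def eps_better(f1, phi1, f2, phi2, eps):
    """True if solution 1 strictly precedes solution 2 in the eps-level order."""
    if (phi1 <= eps and phi2 <= eps) or phi1 == phi2:
        return f1 < f2      # both within the eps-level, or equal violation: use the objective
    return phi1 < phi2      # otherwise: the smaller violation wins
```

With `eps = float("inf")` the rule reduces to a pure comparison of objective values, while with `eps = 0.0` the violation is compared first and the objective only breaks ties among equally violating or feasible solutions.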

5.3.1. Controlling the ε-Level

This study uses the following scheme based on [41,62] to control the ε parameter:

where the initial ε-level is equal to the mean constraint violation of the best half of the initial population, . The level is then updated until the iteration counter t reaches a maximum . After this, the ε-level is set to 0. To maintain the stability and efficiency of the algorithm, is set equal to 5 and is given by

with representing the maximum number of iterations corresponding to .
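A minimal Python sketch of such a control scheme is given below. It assumes the polynomial decay commonly adopted with the ε-constrained method, in which the level shrinks from its initial value to zero as the iteration counter approaches the truncation iteration. The names `eps_level`, `t_c`, and `cp` are hypothetical, the value 5 reported in the text is assumed to be the decay exponent, and the "best half" of the population is assumed to be ranked by constraint violation.

```python
import numpy as np

def initial_eps(violations):
    """Mean violation of the best half of the initial population (ranked by violation)."""
    v = np.sort(np.asarray(violations, dtype=float))
    half = max(1, len(v) // 2)
    return float(v[:half].mean())

def eps_level(t, eps0, t_c, cp=5.0):
    """Assumed schedule: eps0 * (1 - t / t_c)**cp before t_c, and 0 afterwards."""
    if t >= t_c:
        return 0.0
    return eps0 * (1.0 - t / t_c) ** cp
```

A large exponent makes the tolerance drop quickly in the early iterations, so slightly infeasible solutions are accepted only during the initial exploration phase.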

5.3.2. Gradient-Based Mutation

The gradient-based mutation is an operator that was first developed in [41], following the seminal work presented in [40]. The main idea of the method is to utilize the gradient information of the constraints to repair the infeasible candidate solutions, moving them toward the feasible region.

Given a candidate solution , the vector of the values of the inequality constraint functions is , and denotes the vector of the constraint violations. Next, the aim is to solve the following system of linear equations:

where the values of the increments are the variables and is the gradient matrix of ,

The Moore–Penrose pseudoinverse gives an approximate solution as follows:

Thus, the new mutated solution can be written as

This repair operation is executed with a probability at every K iterations and is repeated for a maximum of times while the point is not feasible. In the numerical experiments, and . Notice that only non-zero elements of are repaired using this mutation.
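The repair step can be sketched in Python as follows. The example assumes inequality constraints of the form g_j(x) ≤ 0, approximates the gradient matrix by forward finite differences, and restricts the linear system to the violated constraints. The function names and the default values of `max_repairs` and `h` are hypothetical and do not reproduce the settings used in the numerical experiments.

```python
import numpy as np

def numerical_jacobian(g, x, h=1e-6):
    """Forward-difference approximation of the gradient matrix of g at x."""
    g0 = np.asarray(g(x), dtype=float)
    jac = np.zeros((g0.size, x.size))
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        jac[:, i] = (np.asarray(g(x + step), dtype=float) - g0) / h
    return jac

def gradient_repair(g, x, max_repairs=3, tol=1e-10):
    """Move x toward the feasible region using the pseudoinverse of the gradient matrix."""
    x = np.asarray(x, dtype=float).copy()
    for _ in range(max_repairs):
        violation = np.maximum(0.0, np.asarray(g(x), dtype=float))
        active = violation > tol            # only violated constraints are repaired
        if not active.any():
            break
        jac = numerical_jacobian(g, x)[active]
        x = x - np.linalg.pinv(jac) @ violation[active]
    return x
```

For a single violated linear constraint, one repair step projects the point onto the boundary of the feasible half-space; for non-linear constraints, the step is only a first-order approximation, which is why it may need to be repeated.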

5.4. The Proposed DISH-XX-εg Algorithm

In summary, the developed solver involves the following steps. When individuals are subject to mutation and crossover, the repair operator defined by Equations (26) and (27) manages budget and bound constraints (5) and (7) simultaneously. The risk budgeting inequality constraints, when incorporated into portfolio design, are addressed using the ε-constrained method, with the comparison (29) and the update rule (31) for the parameter ε. Then, the gradient-based mutation operator defined by Equations (32) and (33) leverages the gradient information of the risk budgeting constraints to accelerate the convergence of solutions toward the feasible region, as suggested in [40]. Finally, a pair is considered successful if it generates a trial vector that outperforms the target vector in terms of the comparison (29). The same order relation is also used to update the population and the archives. The resulting enhanced DISH-XX algorithm with gradient-based mutation, denoted DISH-XX-εg, is illustrated in Appendix B for Problem (17). The termination criterion employed is the maximum number of objective function evaluations.

The parameter setup of the DISH-XX-εg algorithm is based on the recommendations of [25,62]. The maximum number of objective function evaluations depends on the problem dimension according to the following formula:

Similarly, the initial population size is and the final population size is . For the mutation parameters, and . The external archive A and the archive of the historical best solutions are initialized as empty. The historical memory size H is set to 5. In addition to the budget on the number of objective function evaluations, and to avoid unnecessary computational costs in financial applications, the algorithm terminates early if the objective function value of the best solution, , does not show a significant improvement over 10 consecutive iterations, indicating convergence.

6. Experimental Analysis

This section provides a detailed description of the numerical analysis aimed at evaluating the flexibility and effectiveness of the proposed automated expert system in managing the two instances of the modified Sharpe ratio-based portfolio model.

6.1. Data Set Description and Experimental Setup

The empirical analysis conducted in this work focuses on the American and European stock markets. Specifically, for the former, the daily closing prices of the constituents of the S&P 500 index for the period from 31 December 2014 to 31 October 2024 are considered. The latter case study refers to the securities listed in the STOXX Europe 600 index for the same period. Assets presenting missing data within the observation window have been discarded. As a result, the American dataset comprises 470 stocks, while the European investment basket includes 535 stocks.

The two case studies consider a rolling window investment plan with monthly portfolio rebalancing, with an out-of-sample window consisting of 94 months, covering the period from 31 January 2017 to 31 October 2024. For each month in this window, a historical approach based on the last two years of daily observations is adopted to calculate the expected rates of return and the covariance matrix. For each month of the investment phase, the DISH-XX-εg solver is used to find the optimal wealth allocation in terms of portfolio weights. Appendix C illustrates the solving capabilities of the proposed algorithm. Table 1 recaps the data set structure and the experimental setup.

Table 1.

Summary of the considered data sets and experimental design.

Regarding the portfolio designs, the risk-free rate of return in the objective function (4) is set to zero, as in [63], and the buy-in thresholds and are equal to and , respectively. The cardinality parameter K is expressed as a fraction of the number of assets in a given data set, i.e., , and is set equal to , , and . Furthermore, in the risk budgeting model, in Equation (9), considering symmetrical ranges of deviation from the risk parity level. Three entries for the parameter are studied, namely , , and , where a higher value indicates more flexibility in the management of risk budgets. Note that this paper does not compare the introduced risk budgeting approach with the classical risk parity portfolio model; however, the choice of represents a scenario with minimal deviations from the parity, which indirectly relates the results to it. Finally, regarding the practical implementation of the TODIM method, this study follows the suggestions in [60] by setting the parameters and the attenuation factor in Equation (13).

6.2. Criteria Used for the Screening of Assets

The preliminary stock-picking phase considers three complementary criteria to implement in the TODIM procedure. The first focuses on the microstructure of the stock market to capture the full spectrum of assets’ dependencies based on mutual information (MI). The so-called momentum measure (MOM) is the second criterion, which exploits the ability of individual stocks to generate value over time. The third metric consists of the upside-to-downside beta ratio (U/D ratio), which assesses the responsiveness of a stock with respect to upward and downward market movements. In the following, a description of the methodology employed to define and compute these three measures is provided.

6.2.1. Eigenvector Centrality Measure Based on Mutual Information

The definition of the first criterion needs some preliminary notions about the Shannon entropy measure. Given a continuous random variable X with probability density function , its entropy is defined as

Similarly, if one considers two continuous random variables X and Y, their joint entropy is given by

where is the joint probability density function of X and Y. The mutual information between two random variables captures the mutual dependence between them. For continuous variables, it is expressed as

and it is zero if and only if they are independent.

Then, the dissimilarity between the rates of return of two stocks, namely and , with and , is quantified by the so-called normalized distance metric, defined as

Notice that this distance ranges from 0 (perfect dependence) to 1 (independence), making it particularly useful in building networks. The last two years of daily observations are employed for the estimation of the distance , and, following the approach described in [42], we consider a minimum spanning tree (MST), defined as a connected subgraph that spans all nodes of a graph with the minimum total edge weight and no cycles. To construct the MST based on (35), Prim’s algorithm is used. This well-known method starts from an arbitrary node and, at each step, adds the shortest edge connecting a node already in the tree to a node not yet included, until all nodes are spanned.

Once the MST is constructed, to identify key nodes within the network, we exploit eigenvector centrality, a measure that assigns an importance score to each node based on its connections. This centrality measure is obtained by computing the Perron eigenvector of the adjacency matrix of the MST, which corresponds to the principal eigenvalue. A high score of centrality characterizes influential stocks that are important nodes in their respective clusters, facilitating the transfer of information. However, because of their importance in the dynamics of the market, these stocks are more susceptible to market volatility. In contrast, nodes with low centrality scores are located on the periphery of the network, making them less susceptible to market risk and thus representing effective candidates for portfolio selection [55].
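The network construction described above can be sketched in Python under the assumption that a symmetric matrix of pairwise distances is already available (its estimation from mutual information is not reproduced here). The function names are hypothetical; the MST is built with a dense version of Prim's algorithm, and the centrality scores are taken from the eigenvector associated with the largest eigenvalue of the MST adjacency matrix.

```python
import numpy as np

def prim_mst(dist):
    """Adjacency matrix of the minimum spanning tree of a dense distance matrix."""
    dist = np.asarray(dist, dtype=float)
    n = dist.shape[0]
    in_tree = np.zeros(n, dtype=bool)
    in_tree[0] = True                    # start from an arbitrary node
    best = dist[0].copy()                # cheapest known edge from the tree to each node
    parent = np.zeros(n, dtype=int)      # tree endpoint of that cheapest edge
    adj = np.zeros((n, n))
    for _ in range(n - 1):
        j = int(np.argmin(np.where(in_tree, np.inf, best)))
        adj[j, parent[j]] = adj[parent[j], j] = 1.0
        in_tree[j] = True
        closer = dist[j] < best
        best = np.where(closer, dist[j], best)
        parent = np.where(closer, j, parent)
    return adj

def eigenvector_centrality(adj):
    """Normalized Perron eigenvector of a symmetric adjacency matrix."""
    _, eigvecs = np.linalg.eigh(adj)     # eigenvalues are returned in ascending order
    v = np.abs(eigvecs[:, -1])
    return v / v.sum()
```

Sorting the assets by increasing score then places the peripheral stocks, which the text identifies as the preferred candidates, at the top of the ranking.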

6.2.2. Momentum Measure

Let be the random variable expressing the stochastic rate of return of stock i for a given period. The momentum of a stock is typically defined as the observed rate of return over a specified observation window that consists of N periods that begins in and ends in :

where are N consecutive observations of . Stocks with higher momentum values are preferred. The momentum of a stock is calculated considering the last two years of monthly observations.
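As a small illustration, the momentum over the observation window can be computed from the N periodic returns as their compounded rate of return. This is one common convention and is an assumption of the sketch; the function name is hypothetical.

```python
import numpy as np

def momentum(returns):
    """Compounded rate of return over the window (assumed convention)."""
    return float(np.prod(1.0 + np.asarray(returns, dtype=float)) - 1.0)
```

For instance, a gain of 10% followed by a loss of 10% yields a slightly negative momentum, since the loss applies to a larger capital base.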

6.2.3. Upside-to-Downside Beta Ratio

In financial analysis, the downside beta () measures the sensitivity of an asset to market returns when they are below a certain threshold. This beta component is particularly useful in evaluating the risk of an asset in adverse market conditions, and allocating a percentage of the portfolio to stocks with low downside betas provides protection against market downturns [64]. Conversely, the upside beta () refers to periods when the market returns are higher than a threshold and reflects the potential gain capability of an asset during favorable market conditions. By considering this measure, investors can identify growth opportunities in order to construct portfolios that capitalize on market upswings. Let be the random variable that expresses the benchmark rate of return, where the benchmark is the equally weighted portfolio constructed on the considered market [65]. Then, and can be defined as in [66]:

and

where is the target threshold for the benchmark rate of return. Together, the downside beta and upside beta can be combined by introducing the so-called upside-to-downside beta ratio:

The larger the ratio, the more effectively an asset increases the returns during market upswings, without significantly amplifying the losses during downturns. To compute and , this study considers the last two years of daily observations, and the threshold is set to zero.
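The following Python sketch illustrates one way to estimate the two betas and their ratio from historical observations. It uses the conditional covariance-to-variance form, computed on the sub-samples in which the benchmark return is above or below the threshold. This is an assumption of the sketch, since the exact definition adopted from [66] may differ (for example, it may rely on partial moments), and the function names are hypothetical.

```python
import numpy as np

def conditional_beta(asset, bench, mask):
    """Covariance-to-variance ratio restricted to the observations selected by mask."""
    a, b = asset[mask], bench[mask]
    return float(np.cov(a, b)[0, 1] / np.var(b, ddof=1))

def upside_downside_ratio(asset, bench, threshold=0.0):
    """Upside beta divided by downside beta (assumed conditional estimator)."""
    asset = np.asarray(asset, dtype=float)
    bench = np.asarray(bench, dtype=float)
    beta_up = conditional_beta(asset, bench, bench > threshold)
    beta_down = conditional_beta(asset, bench, bench < threshold)
    return beta_up / beta_down
```

An asset that doubles the benchmark return in rising markets and halves it in falling markets has, under this estimator, an upside beta of 2, a downside beta of 0.5, and a ratio of 4.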

6.3. Ex Post Performance Metrics

The experimental analysis of this paper has a twofold objective. On the one hand, it investigates whether the inclusion of the risk budgeting constraints improves the control of portfolio risk, by comparing the two proposed asset allocation models. On the other hand, the aim is to analyze the strengths and differences in the two weighting schemes described in Section 4.1 for the TODIM procedure, namely the equal weighting and entropy weighting methods. Several ex post metrics are used to evaluate the financial performance of the compared portfolio strategies, and they are divided into two groups, namely risk measures and performance measures. Let be the realized portfolio rate of return of a given strategy at the end of month t, with (in our case, ). Given initial capital , the wealth at the end of investing period t is , for , where is its amount in the previous month.

Based on this quantity, the capacity of an investment strategy to avoid high losses is assessed through the drawdown risk measure, given by

where is the maximum amount of wealth reached by the strategy by the end of month t. Then, two ex post risk measures linked to drawdowns are considered, namely the maximum drawdown,

and the Ulcer index,

In particular, the latter evaluates the depth and the duration of drawdowns in wealth over the out-of-sample period [67]. Note that a smaller value for the three metrics indicates better control of the drawdown risk.

Regarding the performance metrics, to evaluate the attractiveness of the proposed investment strategies, the so-called compound annual growth rate (CAGR, for short) is introduced, and it is defined as follows:

Moreover, we introduce the out-of-sample monthly rate of return and standard deviation, and ,

and calculate the ex post Sharpe ratio, which is defined as the reward compensation per unit of risk, where the standard deviation is used to quantify the risk:

with being the risk-free rate of return, which we set equal to zero. The second considered performance metric is the Sortino–Satchell ratio [68], which is based on the idea that investors are only concerned about the downside part of the risk, the so-called (negative) semi-standard deviation:

where , and denotes the indicator function of A. Thus, the Sortino–Satchell ratio is as follows:

The third employed risk-adjusted performance measure is the Omega ratio [69], a practical tool to establish whether an investment is more likely to be profitable than loss-making. This quantity is calculated as the ratio between out-of-sample profits and losses:
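The ex post metrics of this section can be computed from a series of monthly portfolio returns as in the Python sketch below. The formulas follow widely used textbook conventions, which are assumptions here: drawdowns are expressed as non-negative fractions of the running peak, the Ulcer index is the root mean square of the drawdowns, the semi-standard deviation uses returns below the risk-free rate, and the Omega ratio uses a zero threshold. The function names are hypothetical.

```python
import numpy as np

def wealth_path(returns, w0=1.0):
    """Wealth at the end of each month, compounding from the initial capital w0."""
    return w0 * np.cumprod(1.0 + np.asarray(returns, dtype=float))

def drawdowns(wealth):
    """Relative distance of wealth from its running maximum (non-negative)."""
    wealth = np.asarray(wealth, dtype=float)
    peak = np.maximum.accumulate(wealth)
    return (peak - wealth) / peak

def max_drawdown(wealth):
    return float(drawdowns(wealth).max())

def ulcer_index(wealth):
    return float(np.sqrt(np.mean(drawdowns(wealth) ** 2)))

def cagr(wealth, w0=1.0, periods_per_year=12):
    years = len(wealth) / periods_per_year
    return float((wealth[-1] / w0) ** (1.0 / years) - 1.0)

def sharpe_ratio(returns, rf=0.0):
    r = np.asarray(returns, dtype=float)
    return float((r.mean() - rf) / r.std(ddof=1))

def sortino_ratio(returns, rf=0.0):
    r = np.asarray(returns, dtype=float)
    downside = np.sqrt(np.mean(np.minimum(r - rf, 0.0) ** 2))
    return float((r.mean() - rf) / downside)

def omega_ratio(returns, threshold=0.0):
    r = np.asarray(returns, dtype=float)
    gains = np.maximum(r - threshold, 0.0).sum()
    losses = np.maximum(threshold - r, 0.0).sum()
    return float(gains / losses)
```

All functions take plain sequences of monthly returns or wealth levels, so the same series produced by the rolling-window backtest can be passed to each of them.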

6.4. Compared Strategies and Benchmark Portfolios

This section introduces the compared investment strategies according to the following notation, which depends on the cardinality size K and the risk parity deviation .

- ModSharpe-Equi-TODIMK: the portfolio model (16) that maximizes the modified Sharpe ratio with cardinality K and using the equal weighting method.

- ModSharpe-Entr-TODIMK: the portfolio model (16) that maximizes the modified Sharpe ratio with cardinality K and using the entropy weighting method.

- ModSharpe-RB-Equi-TODIMK,ν: the proposed risk budgeting portfolio model (17) with cardinality K, risk parity deviation , and adopting the equal weighting method.

- ModSharpe-RB-Entr-TODIMK,ν: the proposed risk budgeting portfolio model (17) with cardinality K, risk parity deviation , and adopting the entropy weighting method.

Moreover, the following two benchmark strategies are considered.

- BenchEW: the equally weighted portfolio constructed using all assets in the investable universe.

- BenchMI,K: an equally weighted strategy that adopts a preliminary stock-picking technique only based on the mutual information criterion for each of the three choices for K.

6.5. Discussion of the Ex Post Investment Results

Table 2 presents the ex post results of the proposed automated decision support system for the two problem instances, compared with the benchmark strategies introduced in Section 6.4, using the US data set. Observe that, for , the models employing the entropy weighting method in the TODIM procedure generally display higher risk-adjusted performance ratios. Among these strategies, those based on risk budgeting perform better than their counterparts that do not incorporate risk control. Conversely, better results in terms of risk measures are obtained when employing the equally weighted method in TODIM. It is worth noting that the three risk budgeting models display better control of portfolio volatility and limited drawdowns. This evidence suggests that adapting the criteria weights according to market signals is beneficial for performance, albeit at the cost of higher volatility and greater loss exposure during market downswings. Lastly, note that the mutual information benchmark excels in terms of risk control and also demonstrates competitive risk-adjusted performance. Furthermore, expanding the number of portfolio constituents enhances the ex post results across many of the examined models. For the case , portfolio models with risk budgeting restrictions that employ equal criteria weighting display significantly better results than in the previous case. In detail, these three models show higher ex post risk-adjusted performance ratios, diminished ex post standard deviations, and lower maximum drawdowns and Ulcer index values. Strategies that use the entropy weighting method yield analogous outcomes to the aforementioned case. For the cardinality , there is solid evidence of improvement for the entropic-based investments. More precisely, the ModSharpe-Entr-TODIM15% strategy shows very high risk-adjusted performance and good results in terms of risk measures, being the only tested model that is capable of outperforming the equally weighted benchmark. 
In order to assess whether the differences in performance are statistically significant, a robustness check on these results is conducted. The idea is to test the hypothesis that the out-of-sample Sharpe ratios of the compared strategies are equal. To do this, this work considers the approach introduced in [70], which is based on a circular block bootstrap approach with 5000 bootstrap resamples and automatic optimal block length determination. Due to their size, the tables reporting these comparisons are included in Appendix D, where Table A1 depicts the US case study. The results in terms of the p-values for tests in which the null hypothesis is that the Sharpe ratio difference between two compared models is zero are displayed. Specifically, in instances where the null hypothesis is rejected (the observed p-value is lower than ), the alternative hypothesis that is considered is consistent with the observed difference between the two Sharpe ratios. A ‘+’ sign is used to denote a positive difference, indicating that the model in the rows outperforms the one in the columns, while a ‘-’ is used to denote a negative difference, indicating that the model in the columns outperforms the one in the rows. Moreover, the false discovery rate approach proposed in [71] is used to determine the proportions of over-, equal, and under-performing methods, in terms of the Sharpe ratio, between all compared ones. To perform these analyses, the R package PeerPerformance [72] (https://CRAN.R-project.org/package=PeerPerformance, accessed on 17 April 2025) has been used. This table points out that the ModSharpe-Entr-TODIM15% strategy is statistically superior to the majority of the other analyzed strategies in terms of the Sharpe ratio. The other portfolio models that, according to the adopted method, are more likely to be the over-performing ones are the equally weighted benchmark and MSR-RB-Entr-TODIM15%,ν. In all other cases, the test for differences in the Sharpe ratios does not show statistically significant results.

Table 2.

Ex post results for the two Sharpe ratio-based optimization strategies in the US data set, compared with the benchmark strategies. The first two columns report the value of the fraction of assets comprising the portfolio and the name of the portfolio strategy, respectively. For simplicity of notation, this table omits the dependence from the parameter K of the considered strategies. The other columns show the results of the ex post metrics presented in Section 6.3.

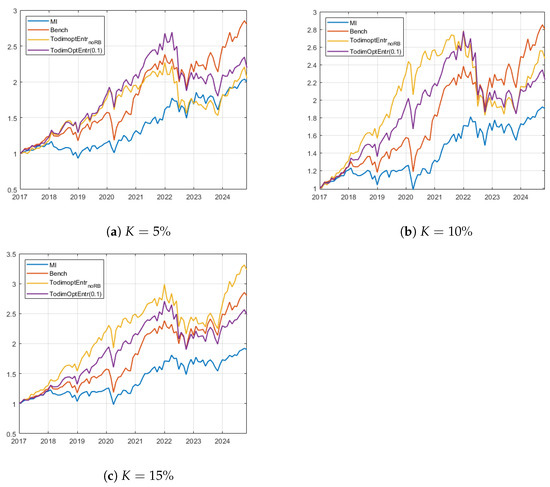

Given these considerations, Figure 2 displays the equity lines of the strategies MSR-Entr-TODIMK and MSR-RB-Entr-TODIMK,0.1% during the investment phase for the US case study, for the three values of K. At first, we observe that the equally weighted US market benchmark shows competitive results, almost increasing the initial wealth allocation threefold. In particular, this strategy exhibits elevated performance spikes after the COVID-19 outbreak and during the last two years. Panels (a) and (b) display the results for the and cases. Notice that the proposed portfolio models are subject to a marked drawdown phase during the first quarter of 2022 after being the best-performing investment plans during the first five years of allocation. This issue is due to the high volatility of the markets at this time, caused by the aftermath of the COVID-19 pandemic and the international tension brought about by the Russian invasion of Ukraine. Conversely, this drawdown is much less pronounced in the two benchmarks due to the higher diversification of the EW benchmark and a more loss-mitigating stock selection made by the mutual information-based model. Moreover, after this downturn, the two portfolios that maximize the modified Sharpe ratio struggle to regain ground, while the EW benchmark shows positive momentum until the conclusion of the investment period. In the cardinality case, the proposed portfolio strategies more efficiently curtail losses during market downturns. This assertion is further substantiated by Table 2, which shows that the two strategies under consideration display lower maximum drawdowns and Ulcer index values within this cardinality configuration. To conclude, despite its underperformance in terms of produced wealth, the mutual information-based benchmark strategy demonstrates superior loss and volatility control in all analyzed cases.

Figure 2.

Wealth evolution of the best ex post models (MSR-Entr-TODIMK and MSR-RB-Entr-TODIMK,0.1) and the benchmarks for the US case study. Panel (a) shows results for , while panels (b) and (c) display and , respectively.

The results for the EU case study are displayed in Table 3. The European market findings deviate considerably from the American market case. Firstly, strategies based on the entropic weighting method to determine the criteria preferences demonstrate markedly inferior results compared to their counterparts that utilize the equal weighting method. In contrast to the observations made in the US case study, adapting the relative preferences on the three criteria based on market information results in a deterioration in both the risk-adjusted performance ratios and risk control measures.

Table 3.

Ex post results for the two Sharpe ratio-based optimization strategies in the EU data set, compared with the benchmark strategies. The first two columns report the value of the fraction of assets comprising the portfolio and the portfolio strategy, respectively. For simplicity of notation, this table omits the dependence from the parameter K of the considered strategies. The other columns show the results of the ex post metrics presented in Section 6.3.

To assess these considerations in terms of out-of-sample Sharpe ratios, statistical tests on the differences are performed, as seen in Table A1. The test results indicate that MSR-Entr-TODIM5% exhibits the least efficacy, as evidenced by its significantly lower Sharpe ratio in comparison to numerous alternative strategies. In addition, as commented previously, strategies that adopt entropic weights demonstrate marked discrepancies (in negative terms) in comparison to their counterparts that employ the equally weighted method.

The mutual information-based benchmark strategies yield highly competitive outcomes regarding financial performance and show a good capacity to control the ex post standard deviation and drawdown measures. Among all the analyzed allocation plans, the MSR-Equi-TODIMK configurations without risk budgeting constraints are the most successful in every respect, producing results aligned with those of the MI-based and the equally weighted benchmarks.

Regarding the statistical tests on the Sharpe ratio differences, the results in Table A1 confirm these insights. Observe that the MI-based benchmarks are the ones with the greatest over-performance, together with the BenchEW strategy. Moreover, the MSR-Equi-TODIM5% configurations are characterized by high values, meaning that they are likely to be in the group of the best-performing strategies. Finally, it is worth noting that (i) there are no significant differences in the group of entropic portfolio strategies and (ii) despite being the strategy with the highest value of detected, BenchMI,5% shows statistically significant differences only against a few of the alternatives in the pairwise comparisons. In this data set, incorporating the risk budgeting constraints within the model during the portfolio construction phase does not enhance the financial performance or the portfolio loss control. In the cases of and , the most suitable value of the parameter appears to be , while, when the cardinality is , the most favorable outcomes are obtained with .

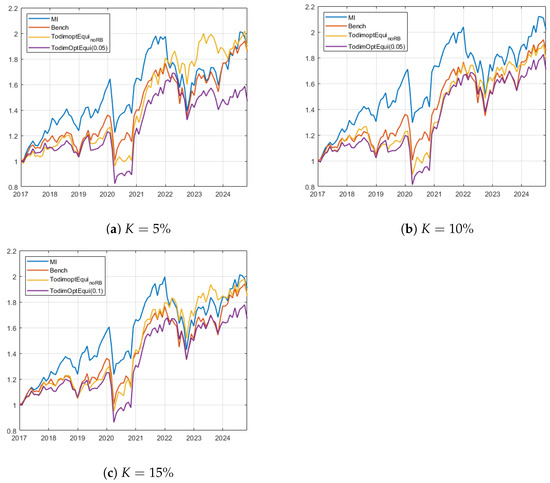

Figure 3 shows the equity lines of the best-performing strategies and the benchmarks for the EU case study. Panels (a) and (b) illustrate the strategies MSR-Equi-TODIMK and MSR-RB-Equi-TODIMK,0.05 for the cardinalities and . Panel (c) shows the evolution of MSR-Equi-TODIMK and MSR-RB-Equi-TODIMK,0.1 for . In this case study, it is evident that the mutual information-based benchmark emerges as the optimal investment strategy, particularly in the context of . In the scenario, the MSR-Equi-TODIM5% portfolio demonstrates the highest equity line at the end of the investment period, despite a turbulent phase during the year 2023. Conversely, the risk budgeting portfolio struggles to recover after periods of market decline, as observed in the post-pandemic era and during the final two years of the investment period. In the setting, the modified Sharpe-based portfolios prove ineffective in surpassing the MI-based benchmark, yielding outcomes comparable to those of the equally weighted European market benchmark. In the last case (), the MSR-Equi-TODIM15% strategy demonstrates results aligned with the two benchmarks, while the risk budgeting model shows marginally diminished performance in terms of wealth generation capabilities.

Figure 3.

Wealth evolution of the best ex post strategies and the benchmarks for the EU case study. Panel (a) shows the cardinality K = 5% and the strategies MSR-Equi-TODIM5% and MSR-RB-Equi-TODIM5%,0.05. Panel (b) displays the results for the strategies MSR-Equi-TODIM10% and MSR-RB-Equi-TODIM10%,0.05. Finally, panel (c) shows the evolution of MSR-Equi-TODIM15% and MSR-RB-Equi-TODIM15%,0.1.

7. Conclusions

This study proposes a novel knowledge-based system built upon two interconnected modules, grounded in information theory and financial practices, to assist investors in their financial decision-making. In this context, two instances of a constrained portfolio selection model where the objective function to optimize is a modified version of the Sharpe ratio have been addressed. The first one considers several standard constraints encompassing cardinality, budget, and bound limitations. The second introduces a relaxed risk parity constraint to explicitly control the portfolio volatility in the construction phase. Moreover, a stock-picking procedure that uses a multi-criteria decision-making method named TODIM, which has gained widespread popularity over the years, deals with the cardinality requirement. This technique exploits information from a complementary set of three financial criteria: the mutual information-based peripherality measure, momentum, and the upside-to-downside beta ratio. Finally, a version of the recently proposed distance-based success history differential evolution with double crossover (DISH-XX) algorithm, equipped with an ensemble of constraint-handling techniques, solves the proposed portfolio selection models.

The following summarizes the main experimental findings of this paper. Firstly, the proposed automated decision support system has proven capable of supporting the investment choices of an end user whose preferences are outlined by the two portfolio models analyzed in this paper. Implementing the TODIM module to screen stocks based on three complementary criteria improves the adaptability to the two market scenarios considered. The mutual information-based stock-picking strategy achieves excellent results, especially in a resilient market like the European one, efficiently capturing non-linear relationships between stocks and the market microstructure. This allows potential investors to contain losses during market downswings. Additionally, incorporating criteria such as momentum and the upside-to-downside beta ratio enhances the model’s adaptability to market phases and leverages potential upswings in a thriving market like the American one. Moreover, regarding the optimization module, the solving capabilities of the proposed evolutionary algorithm have been analyzed, demonstrating the convergence of the provided solutions toward the feasible region and the efficient exploration of the search space.

In the subsequent phase of the experimental analysis, this study assessed the financial performance of the proposed investment models, implementing an investment plan with monthly rebalancing from January 2017 to October 2024. Specifically, we compared our strategies against an equally weighted benchmark in both the American and European markets, as well as a mutual information-based stock-picking strategy. The results varied significantly between the two case studies. In the American market, considering a portfolio with of the investable universe resulted in an enhancement in the risk-adjusted performance. In particular, the model without risk budgeting constraints is the only one that can outperform the equally weighted benchmark. In the European case study, the proposed strategy mimics more efficiently the behavior of the equally weighted benchmark and achieves results comparable to those of the mutual information-based benchmark, which is the best-performing portfolio model in the EU case.

A possible limitation of this research is the limited number of features adopted in the stock selection phase. Indeed, it would be beneficial to incorporate additional types of information as criteria, such as technical indicators or fundamental analysis metrics extracted from firms’ balance sheets. To extend the analysis to sustainability (especially relevant in the European context), non-financial disclosure information could also be included as a discriminating criterion. Another limitation lies in the choice of the weighting methods, since only two techniques were compared.

This paper lays the groundwork for several possible future research directions. The first possibility is to consider additional techniques that account not only for agent preferences but also for the predictive capacity of individual criteria over time. Moreover, the flexibility of the proposed knowledge-based financial management system can be tested by considering alternative portfolio models that maximize different objective functions, thereby outlining various investment profiles. To further enhance the practical relevance of the model, an additional constraint to control transaction costs during the investment phase can be introduced. Finally, a further possible extension involves applying different metaheuristics to identify the most suitable algorithm for our cardinality-constrained portfolio optimization models.

Author Contributions

Conceptualization: M.K., R.P. and F.P.; methodology: M.K., R.P. and F.P.; software: M.K.; performance analysis: M.K., R.P. and F.P.; validation: M.K., R.P. and F.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data sets presented in this article are not readily available because there are technical limitations imposed by the data provider.

Acknowledgments

The authors are affiliated with the GNAMPA-INdAM research group.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Risk Parity and Risk Budgeting

Appendix A.1. Details of Risk Parity

The modern risk parity asset allocation framework has recently gained traction in both academia and industry. In this setting, wealth is assigned in such a way that the individual assets’ contributions to portfolio risk are equalized. As shown in [17], this goal can be achieved through the Euler decomposition of the portfolio risk measure, under the condition that the risk measure must be a homogeneous function of degree one. It is readily shown that the portfolio standard deviation meets this requirement. Moreover, this quantity can be decomposed as follows:

Given the marginal risk contribution of asset i, it follows that denotes its risk contribution. The corresponding relative risk contribution is defined as

The risk parity framework seeks to compute the portfolio that equalizes all risk contributions, satisfying the condition
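For readability, the relations described in this subsection can be written compactly as follows. The notation is an assumption made here for illustration and may differ from the symbols used in the main text: $x \in \mathbb{R}^n$ is the vector of portfolio weights, $\Sigma$ is the covariance matrix of the asset returns, $\sigma_p(x)$ is the portfolio standard deviation, and $RC_i$ and $RRC_i$ are the risk contribution and the relative risk contribution of asset $i$.

```latex
\begin{align*}
\sigma_p(x) &= \sqrt{x^\top \Sigma x}
             = \sum_{i=1}^{n} x_i \,\frac{\partial \sigma_p(x)}{\partial x_i},
  \qquad
  \frac{\partial \sigma_p(x)}{\partial x_i} = \frac{(\Sigma x)_i}{\sigma_p(x)}, \\
RC_i(x) &= x_i \,\frac{\partial \sigma_p(x)}{\partial x_i}
         = \frac{x_i\,(\Sigma x)_i}{\sigma_p(x)}, \\
RRC_i(x) &= \frac{RC_i(x)}{\sigma_p(x)}
          = \frac{x_i\,(\Sigma x)_i}{x^\top \Sigma x},
  \qquad \sum_{i=1}^{n} RRC_i(x) = 1, \\
RRC_i(x) &= \frac{1}{n} \quad \text{for all } i = 1,\dots,n
  \quad \text{(risk parity)}.
\end{align*}
```

The first line is the Euler decomposition, which holds because $\sigma_p$ is homogeneous of degree one in $x$. The last line states that every asset contributes the same share of the total portfolio risk.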

Appendix A.2. Non-Convexity of the Proposed Risk Budgeting Formulation

Following [30], can be recast using the standard matrix notation as

where captures the individual risk contribution of asset i. The symmetric matrices are composed of the superposition of row i and column i from the original covariance matrix multiplied by one half, with all other elements in the matrix equal to zero, according to the following formula:

where denotes the ith column of the identity matrix. Notice that the set of inequalities introduced in Equation (9) can be rewritten as

and, with some algebra, the following set of conditions follows:

Since is symmetric, the difference is still a symmetric matrix. Then, the generic difference is the following, with :

This results in the matrix

Inspecting the matrices reveals that they are indefinite, each having both positive and negative elements on the diagonal [73]. Thus, any optimization problem that involves the use of is non-convex. Similar conclusions can be inferred for the matrix obtained from the difference .
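The argument above can be checked numerically. The following is a minimal sketch under stated assumptions, not the authors' implementation: the covariance matrix is simulated, the names `risk_matrix` and `is_indefinite` are hypothetical, and the constraint is assumed to take the form of an upper bound `budget` on the relative risk contribution of asset i, which leads to a quadratic form in the difference between the asset-specific matrix and `budget` times the covariance matrix. The exact form of Equation (9) is not reproduced here.

```python
import numpy as np


def risk_matrix(cov: np.ndarray, i: int) -> np.ndarray:
    """Symmetric matrix holding one half of row i and column i of cov, zeros elsewhere."""
    n = cov.shape[0]
    e_i = np.zeros((n, 1))
    e_i[i, 0] = 1.0                      # i-th column of the identity matrix
    row_i = cov[[i], :]                  # row i of cov, shape (1, n)
    return 0.5 * (e_i @ row_i + row_i.T @ e_i.T)


def is_indefinite(mat: np.ndarray, tol: float = 1e-12) -> bool:
    """True if the symmetric matrix has both negative and positive eigenvalues."""
    eigs = np.linalg.eigvalsh(mat)       # eigenvalues in ascending order
    return bool(eigs[0] < -tol and eigs[-1] > tol)


# Toy data (assumption): simulated returns of four assets.
rng = np.random.default_rng(0)
returns = rng.normal(size=(60, 4))
cov = np.cov(returns, rowvar=False)
x = np.full(4, 0.25)                     # equally weighted portfolio
budget = 0.3                             # hypothetical risk budget in (0, 1)

A = risk_matrix(cov, 1)
# The quadratic form x' A x equals x_i * (cov x)_i, the unnormalized risk contribution.
print(np.isclose(x @ A @ x, x[1] * (cov @ x)[1]))
# The difference matrix has diagonal entries of both signs, hence it is indefinite.
print(np.diag(A - budget * cov))
print(is_indefinite(A - budget * cov))
```

For any budget strictly between zero and one, the diagonal entry in position i of the difference matrix is positive while the remaining diagonal entries are negative, so the quadratic form takes both signs and the corresponding constraint set is not convex in general.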

Appendix B. Pseudocode of DISH-XX-εg

The pseudocode of the proposed DISH-XX-εg is given in Algorithm A1 for the solution of Problem (17).

Algorithm A1. DISH-XX-εg

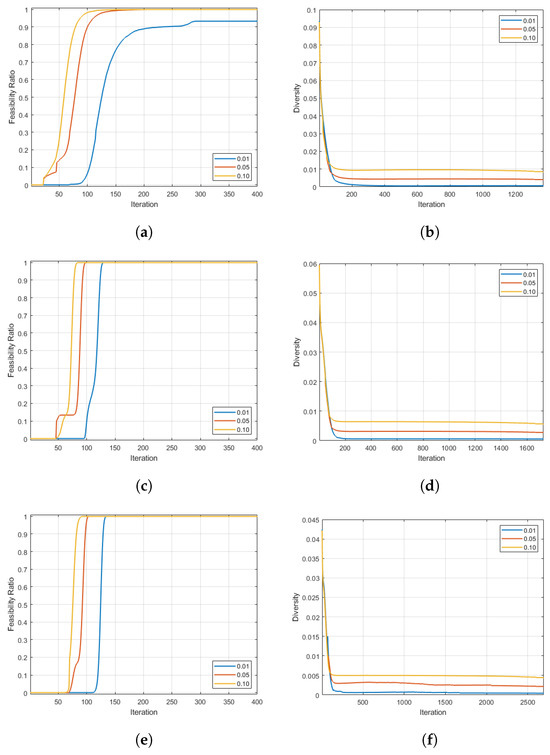

Appendix C. Assessment of the Algorithm’s Efficiency

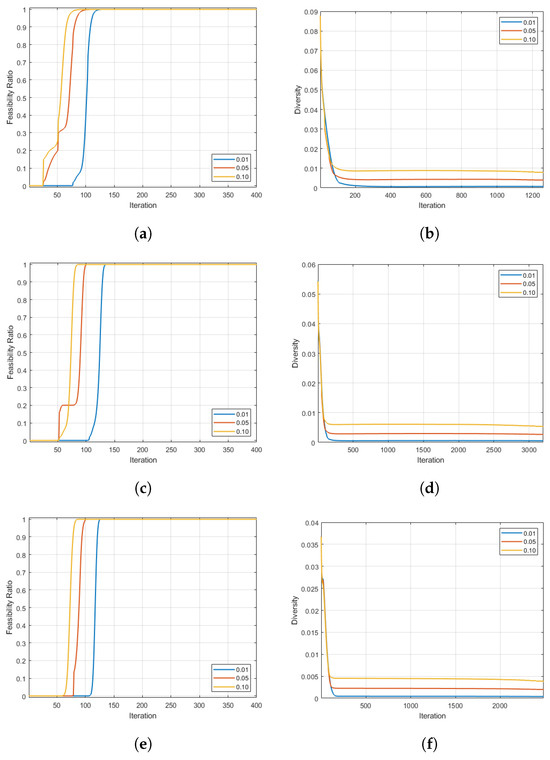

The aim of this section is to evaluate whether the proposed algorithm is capable of finding feasible solutions to the risk budgeting portfolio optimization problem. To do this, a date from the 94 available in the ex post window is randomly selected and the nine portfolio configurations presented in Section 6.4 are considered. Then, to demonstrate the algorithm’s search capabilities, 30 different random initial portfolios that already satisfy the cardinality constraint are sampled and optimized. In this process, two features are considered: feasibility and diversity. The former is quantified by the so-called feasibility ratio, which is the number of feasible solutions relative to the population size at a given iteration; the latter is evaluated by the population diversity [74], which is defined as

where t is the generation index, is the ith component of the j-th candidate solution, and is the mean position of the population in coordinate i at generation t. Note that can be interpreted as the average Euclidean distance between a candidate solution and the barycenter of the population of candidate solutions at time t.
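The two monitoring quantities can be sketched in a few lines. This is an illustrative sketch, not the authors' code: the function names are hypothetical, a candidate is treated as feasible when its total constraint violation does not exceed a tolerance `tol` (an assumption), and the diversity follows the interpretation given in the text, namely the average Euclidean distance from the population barycenter. The exact formula in [74] may differ, for example by using a root-mean-square distance.

```python
import numpy as np


def feasibility_ratio(violations: np.ndarray, tol: float = 0.0) -> float:
    """Share of candidates whose total constraint violation is at most tol."""
    return float(np.mean(violations <= tol))


def population_diversity(pop: np.ndarray) -> float:
    """Average Euclidean distance of the candidates (rows of pop) from their barycenter."""
    barycenter = pop.mean(axis=0)                    # mean position per coordinate
    distances = np.linalg.norm(pop - barycenter, axis=1)
    return float(distances.mean())


# Toy population of four candidates in two dimensions (assumption).
pop = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]])
violations = np.array([0.0, 0.0, 0.3, 0.0])          # one infeasible candidate

print(feasibility_ratio(violations))                 # 0.75
print(population_diversity(pop))                     # sqrt(2), about 1.4142
```

Tracking both values at every generation gives curves of the kind discussed in this appendix: the feasibility ratio should rise toward one, while the diversity should shrink as the population concentrates around a solution.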

Figure A1 illustrates the evolution of the average values of the feasibility ratio and population diversity over the generations in the US case study. From panels (a), (c), and (e), observe that the algorithm reaches a feasibility ratio of the population after approximately 400 iterations in all but one case (the figures plot only the initial 400 iterations to improve the readability of the graphs). The diversity plots (b), (d), and (f) show that, in the final stages, the population narrows down to a very small portion of the search space, which supports the algorithm’s capability to converge toward near-optimal solutions of the optimization problem.

Figure A1.

Average results for 30 random initial portfolio configurations of the feasibility ratio and the diversity measure for the US case study by varying the deviation parameter in {0.01, 0.05, 0.10}. Plots (a,b) show the results for K = 5%; plots (c,d) show the results for K = 10%. Finally, results for K = 15% are displayed in charts (e,f).

Figure A2 illustrates the same plots for the EU case study, showing similar findings. The results for other random dates from the out-of-sample window are very close to the ones presented in Figure A1 and Figure A2 and thus are omitted.

Figure A2.

Average results for 30 random initial portfolio configurations of the feasibility ratio and the diversity measure for the EU case study by varying the deviation parameter in {0.01, 0.05, 0.10}. Plots (a,b) show the results for K = 5%; plots (c,d) show the results for K = 10%. Finally, results for K = 15% are displayed in charts (e,f).

Appendix D. Statistical Significance of Differences Among the Sharpe Ratios of Portfolios

This section analyzes the statistical significance of the differences among the Sharpe ratios of the compared portfolio strategies, according to the Sharpe ratio robustness test introduced in [70], for each pair of competing portfolios. Table A1 reports the results for the US case study, while Table A2 shows the corresponding results for the European stock market.

Table A1.

Assessment of the statistical significance of differences among the Sharpe ratios of the competing portfolios according to the Sharpe ratio robustness test for the US data set [70]. The differences are calculated by comparing the model in the rows against the one in the columns. The p-values are reported, and values equal to or lower than are highlighted in bold. The alternative hypothesis is consistent with the sign of the observed difference (‘+’ denotes a positive difference, ‘−’ a negative one). In the bottom part of the table, the proportions of over- (), equal (), and under-performing () strategies, in terms of the Sharpe ratio, are computed following the approach of [71].

| | BenchEW | BenchMI,5% | BenchMI,10% | BenchMI,15% | Equi-NoRB5% | Equi-NoRB10% | Equi-NoRB15% | Entr-NoRB5% | Entr-NoRB10% | Entr-NoRB15% |

| BenchMI,5% | 0.4094 | |||||||||

| BenchMI,10% | 0.2394 | 0.9758 | ||||||||

| BenchMI,15% | 0.0665 | 0.6023 | 0.3479 | |||||||

| Equi-NoRB5% | 0.1915 | 0.6093 | 0.5634 | 0.7518 | ||||||

| Equi-NoRB10% | 0.3769 | 0.9258 | 0.9178 | 0.8843 | 0.3474 | |||||

| Equi-NoRB15% | 0.6828 | 0.6943 | 0.6488 | 0.4919 | 0.1680 | 0.3219 | ||||

| Entr-NoRB5% | 0.3074 | 0.8718 | 0.8818 | 0.9383 | 0.5559 | 0.9123 | 0.4594 | |||

| Entr-NoRB10% | 0.7013 | 0.7608 | 0.7198 | 0.5759 | 0.1880 | 0.4649 | 0.9903 | 0.2569 | ||

| Entr-NoRB15% | 0.5504 | 0.2644 | 0.1585 | 0.0995 | 0.1680 | |||||

| Equi-RB5%,0.01 | 0.1910 | 0.6478 | 0.5794 | 0.8238 | 0.7603 | 0.5949 | 0.2639 | 0.7608 | 0.3404 | |

| Equi-RB5%,0.05 | 0.1910 | 0.6483 | 0.5749 | 0.8208 | 0.7583 | 0.5859 | 0.2564 | 0.7588 | 0.3354 | |

| Equi-RB5%,0.10 | 0.1935 | 0.6448 | 0.5784 | 0.8223 | 0.7433 | 0.5934 | 0.2539 | 0.7553 | 0.3334 | |

| Equi-RB10%,0.01 | 0.3634 | 0.9818 | 0.9683 | 0.7818 | 0.2964 | 0.8713 | 0.4799 | 0.8388 | 0.5664 | 0.0575 |

| Equi-RB10%,0.05 | 0.3579 | 0.9743 | 0.9583 | 0.7973 | 0.3074 | 0.8853 | 0.4674 | 0.8548 | 0.5544 | 0.0580 |

| Equi-RB10%,0.10 | 0.3719 | 0.9858 | 0.9868 | 0.7763 | 0.2819 | 0.8513 | 0.4899 | 0.8323 | 0.5754 | 0.0600 |

| Equi-RB15%,0.01 | 0.2514 | 0.9793 | 0.9633 | 0.6853 | 0.3414 | 0.8348 | 0.5019 | 0.7893 | 0.6128 | |

| Equi-RB15%,0.05 | 0.2514 | 0.9848 | 0.9663 | 0.6838 | 0.3394 | 0.8338 | 0.4974 | 0.7888 | 0.6103 | |

| Equi-RB15%,0.10 | 0.2584 | 0.9778 | 0.9573 | 0.6828 | 0.3349 | 0.8283 | 0.5029 | 0.7838 | 0.6168 | |

| Entr-RB5%,0.01 | 0.4014 | 0.9773 | 0.9788 | 0.7748 | 0.3944 | 0.8633 | 0.6063 | 0.6648 | 0.5584 | |

| Entr-RB5%,0.05 | 0.3964 | 0.9848 | 0.9853 | 0.7828 | 0.4034 | 0.8763 | 0.5959 | 0.6838 | 0.5469 | |

| Entr-RB5%,0.10 | 0.4114 | 0.9678 | 0.9628 | 0.7648 | 0.3819 | 0.8503 | 0.6168 | 0.6368 | 0.5694 | |