Improved EEG-Based Emotion Classification via Stockwell Entropy and CSP Integration

Abstract

1. Introduction

2. Related Work

3. Dataset and Methods

3.1. Dataset

3.2. Preprocessing

3.3. Methods

3.3.1. Stockwell Transform

3.3.2. Stockwell Transform Entropy

3.3.3. Common Spatial Pattern

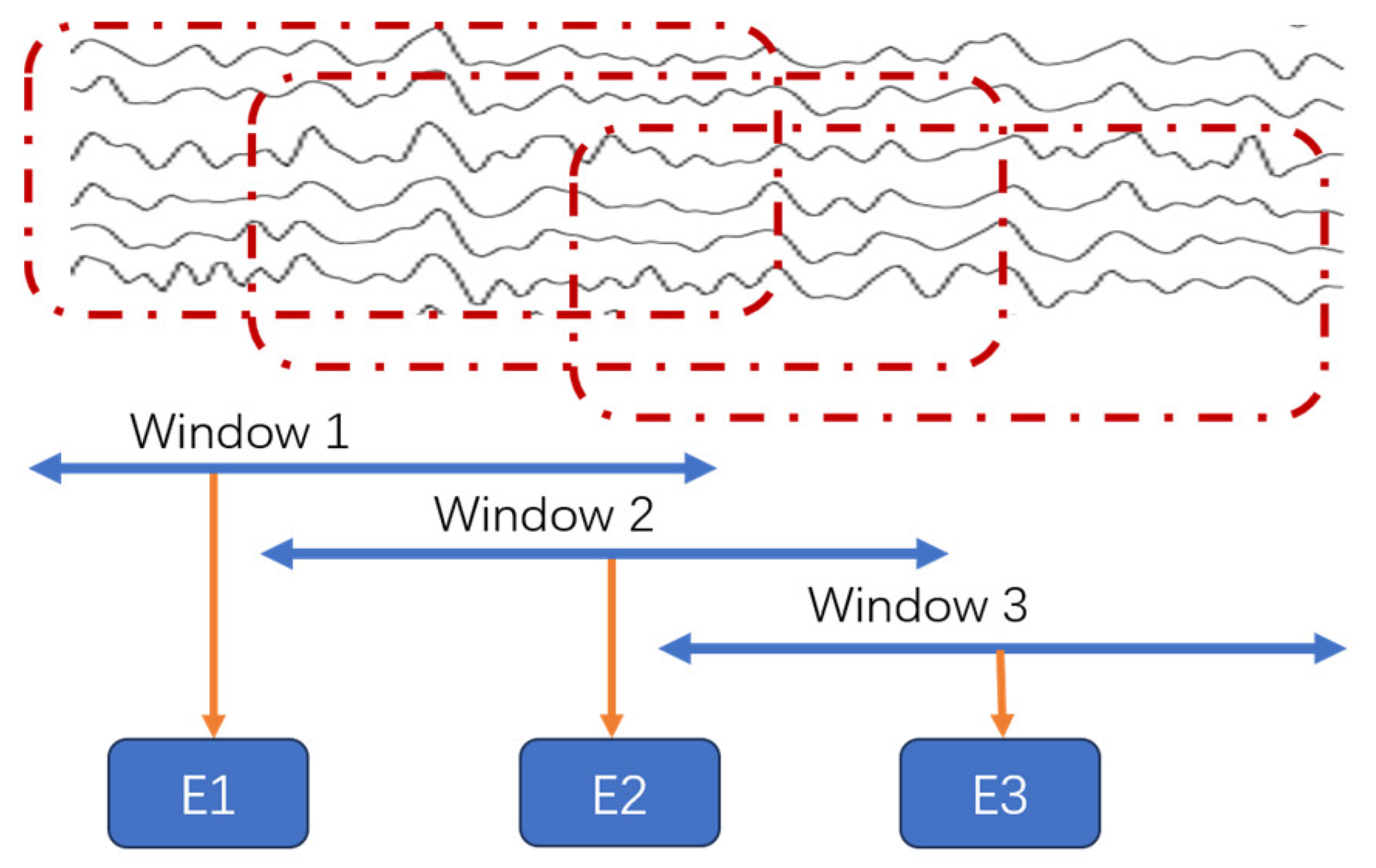

3.3.4. Feature Extraction Process Combining Stockwell Entropy and CSP

3.4. Classification

4. Results

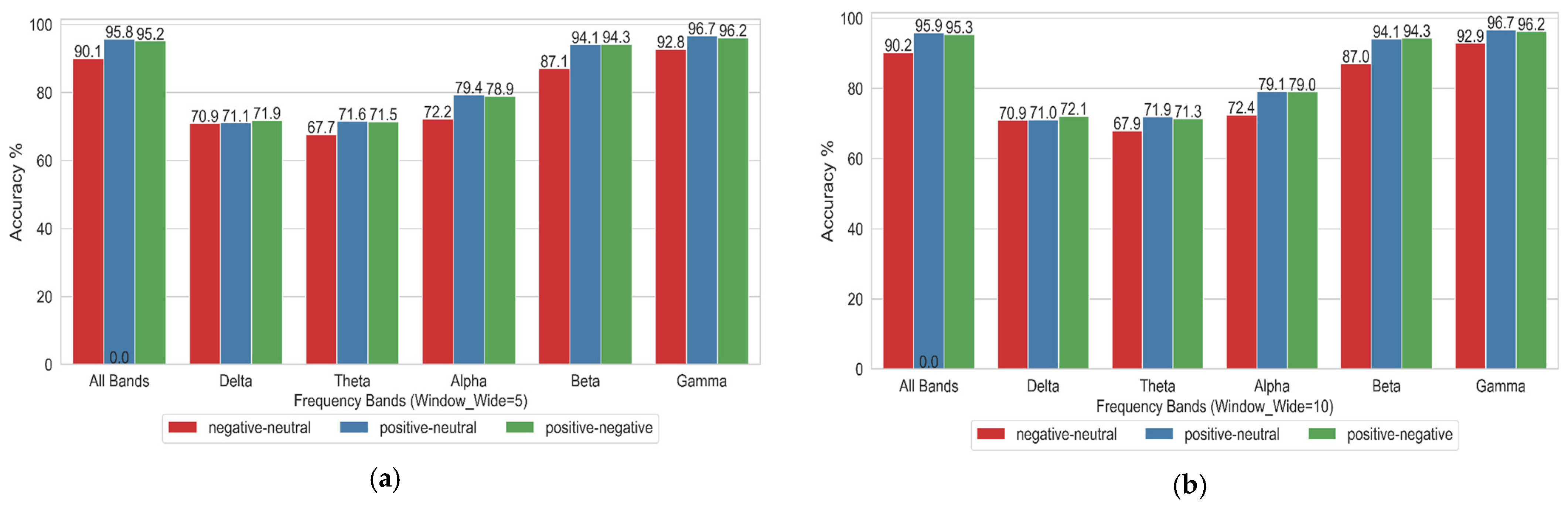

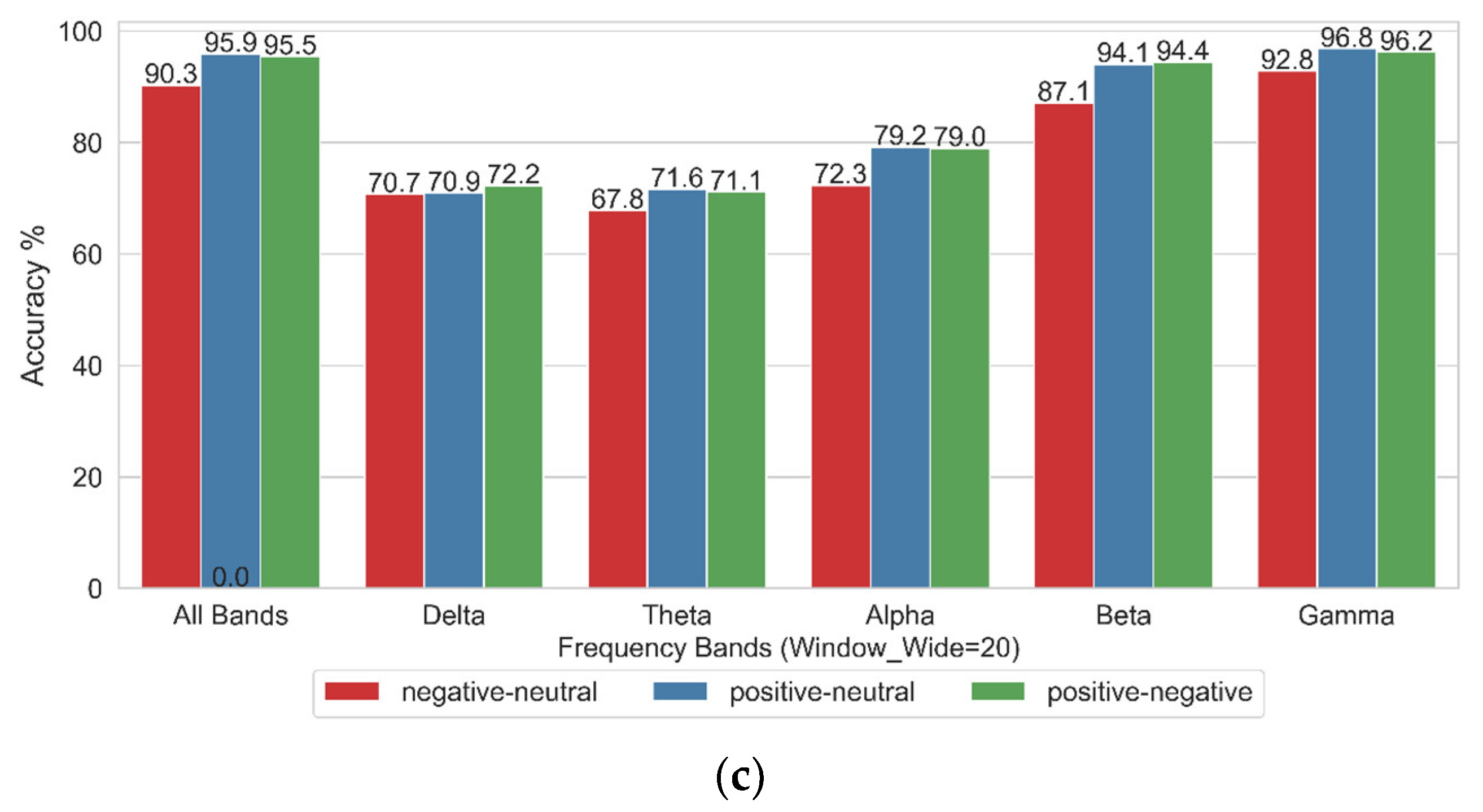

4.1. Impact of Different Frequency Bands on Emotional State Binary Classification

4.2. Influence of Gamma Frequency Band on the Classification of Four Emotional Combinations

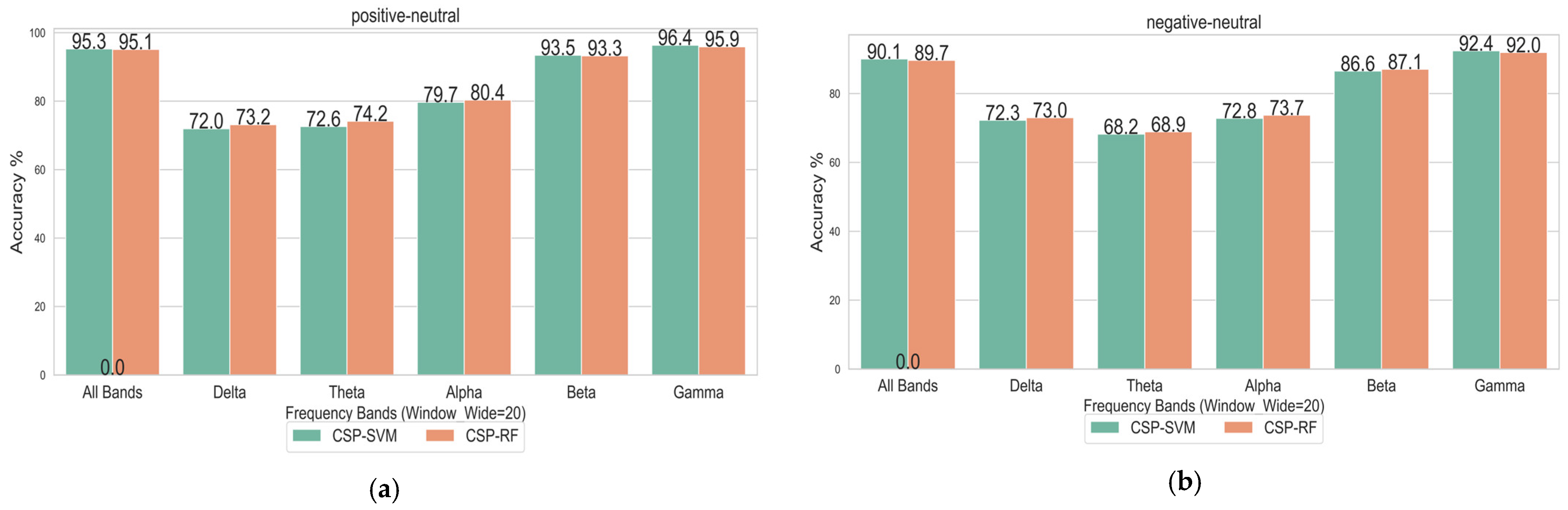

4.3. Influence of Different Classification Methods on Emotional State Recognition

5. Discussion

5.1. Experimental Conclusions

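- Classification accuracy was highest in the Gamma frequency band: mean accuracy ranged from 92.8% to 96.8% across the three binary tasks and three window widths, exceeding every other individual band as well as the full-band ("All") condition.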

- Increasing the sliding window width from W_5% to W_20% had a minimal impact on classification accuracy (for any given band and task, mean accuracy changed by at most 0.4 percentage points), indicating that the Stockwell entropy–CSP algorithm performs stably in EEG-based emotion recognition.

- Accuracy was generally high for all three binary classification tasks (Positive vs. Neutral, Negative vs. Neutral, and Positive vs. Negative). Among these tasks, Positive vs. Neutral and Positive vs. Negative achieved the highest recognition rates.

- Although the CSP algorithm is more suitable for binary classification tasks, it also demonstrated strong performance in the three-class task (Positive vs. Negative vs. Neutral).
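To make concrete why CSP is naturally a two-class method, the following is a minimal NumPy sketch of the textbook binary CSP formulation. It is an illustration under stated assumptions, not the authors' implementation: the function names (`mean_covariance`, `csp_filters`, `csp_features`), the trace normalisation, and the log-variance features are choices made here for the example.

```python
import numpy as np


def mean_covariance(trials):
    """Average trace-normalised spatial covariance.

    trials: array of shape (n_trials, n_channels, n_samples).
    """
    covs = []
    for x in trials:
        x = x - x.mean(axis=1, keepdims=True)  # remove per-channel mean
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Spatial filters that contrast the variance of class A against class B.

    Returns an array of shape (2 * n_pairs, n_channels). The first n_pairs
    rows give the lowest class-A variance, the last n_pairs rows the highest.
    """
    cov_a = mean_covariance(trials_a)
    cov_b = mean_covariance(trials_b)
    # Whiten the composite covariance so that P (cov_a + cov_b) P^T = I.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitener = (evecs / np.sqrt(evals)).T
    # In whitened space the two class covariances share eigenvectors,
    # with eigenvalues lam (class A) and 1 - lam (class B).
    lam, rot = np.linalg.eigh(whitener @ cov_a @ whitener.T)
    filters = rot.T @ whitener  # rows ordered by ascending lam
    keep = np.r_[0:n_pairs, len(lam) - n_pairs:len(lam)]
    return filters[keep]


def csp_features(trial, filters):
    """Log of the normalised variance of each spatially filtered signal."""
    var = (filters @ trial).var(axis=1)
    return np.log(var / var.sum())
```

Because the filters come from a joint diagonalisation of exactly two covariance matrices, extending the idea to three classes requires an additional scheme such as one-vs-rest or pairwise decomposition; which scheme was used for the three-class result is not stated in this section.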

5.2. Analysis of the Reasons Behind the Experimental Results

5.2.1. Influence of Signal Frequency and Amplitude on Stockwell Entropy

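- The entropy values of high-frequency signals are relatively stable, while those of low-frequency signals fluctuate significantly: the reported Std lies between 9.00 and 16.62 in the Delta band (1–3 Hz) but never exceeds 2.51 in the Gamma band (31–45 Hz).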

- Both increases and decreases in amplitude change the entropy values of high-frequency signals, and the change is approximately linear. The entropy values of high-frequency signals can therefore be used to detect amplitude changes.

- As the frequency increases, the values obtained under the different window widths converge toward small values: at 7 Hz the reported Std ranges from 3.04 to 11.93 across the three windows, whereas at 41–45 Hz it stays below 1 for every window. The choice of window width therefore has little impact on the Stockwell entropy values of high-frequency signals, which suggests that Stockwell entropy is a stable feature for classification and recognition.
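The observations above concern an entropy computed on the Stockwell (S) transform of the signal. As a rough illustration, the sketch below implements the standard FFT-based discrete S-transform and one possible entropy on top of it. The entropy definition is an assumption made for this example (Shannon entropy of the normalised time-frequency energy inside a band); the exact definition and sliding-window scheme used in the paper are not given in this section. The function names and the 200 Hz sampling rate are likewise illustrative.

```python
import numpy as np


def stockwell_transform(x):
    """Discrete S-transform of a real 1-D signal.

    Returns a complex array of shape (N // 2 + 1, N): one row per
    non-negative frequency index, one column per time sample.
    """
    x = np.asarray(x, dtype=float)
    n_samples = x.size
    spectrum = np.fft.fft(x)
    # Signed integer frequency offsets: 0, 1, ..., -2, -1.
    offsets = np.fft.fftfreq(n_samples) * n_samples
    s = np.empty((n_samples // 2 + 1, n_samples), dtype=complex)
    s[0] = x.mean()  # the zero-frequency voice is the signal mean
    for n in range(1, n_samples // 2 + 1):
        # Frequency-domain Gaussian whose width grows with the voice index n.
        gauss = np.exp(-2.0 * np.pi**2 * offsets**2 / n**2)
        s[n] = np.fft.ifft(np.roll(spectrum, -n) * gauss)
    return s


def stockwell_entropy(x, fs, band):
    """Shannon entropy (in nats) of normalised S-transform energy in `band`.

    ASSUMPTION: this entropy definition is chosen for illustration only.
    band: (low_hz, high_hz), inclusive.
    """
    s = stockwell_transform(x)
    freqs = np.arange(s.shape[0]) * fs / len(x)
    low, high = band
    energy = np.abs(s[(freqs >= low) & (freqs <= high)]) ** 2
    p = energy / energy.sum()
    p = p[p > 0]  # treat 0 * log(0) as 0
    return float(-(p * np.log(p)).sum())


fs = 200  # Hz, illustrative sampling rate
rng = np.random.default_rng(0)
segment = rng.standard_normal(2 * fs)  # stand-in for one EEG channel segment
print(stockwell_entropy(segment, fs, band=(31, 45)))
```

Because the energy is normalised before the entropy is taken, a uniform gain applied to the whole segment leaves this particular measure unchanged; it responds only to changes in how energy is distributed over time and frequency.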

5.2.2. Influence of the CSP Algorithm on Emotional State Classification

5.3. Deficiencies and Prospects

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Bands | W_5%, Neu vs. Neg | W_5%, Pos vs. Neg | W_5%, Pos vs. Neu | W_10%, Neu vs. Neg | W_10%, Pos vs. Neg | W_10%, Pos vs. Neu | W_20%, Neu vs. Neg | W_20%, Pos vs. Neg | W_20%, Pos vs. Neu |
|---|---|---|---|---|---|---|---|---|---|
| All | 90.1 ± 1.1 | 95.2 ± 0.9 | 95.8 ± 0.8 | 90.2 ± 1.2 | 95.3 ± 0.9 | 95.9 ± 0.8 | 90.3 ± 1.2 | 95.5 ± 0.8 | 95.9 ± 0.7 |
| Delta | 70.9 ± 1.6 | 71.9 ± 1.8 | 71.1 ± 2.0 | 70.9 ± 1.8 | 72.1 ± 1.7 | 71.0 ± 1.9 | 70.7 ± 1.7 | 72.2 ± 1.6 | 70.9 ± 2.1 |
| Theta | 67.7 ± 1.9 | 71.5 ± 1.7 | 71.6 ± 1.8 | 67.9 ± 1.7 | 71.3 ± 1.6 | 71.9 ± 1.7 | 67.8 ± 2.0 | 71.1 ± 1.9 | 71.6 ± 1.7 |
| Alpha | 72.2 ± 2.0 | 78.9 ± 1.5 | 79.4 ± 1.6 | 72.4 ± 2.2 | 79.0 ± 1.6 | 79.1 ± 1.5 | 72.3 ± 2.3 | 79.0 ± 1.8 | 79.2 ± 1.5 |
| Beta | 87.1 ± 1.3 | 94.3 ± 1.1 | 94.1 ± 1.0 | 87.0 ± 1.3 | 94.3 ± 1.1 | 94.1 ± 1.0 | 87.1 ± 1.3 | 94.4 ± 0.9 | 94.1 ± 1.0 |
| Gamma | 92.8 ± 1.2 | 96.2 ± 0.9 | 96.7 ± 0.8 | 92.9 ± 1.3 | 96.2 ± 0.8 | 96.7 ± 0.7 | 92.8 ± 1.3 | 96.2 ± 0.8 | 96.8 ± 0.8 |
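The two headline conclusions of Section 5.1 (Gamma is the best band, and window width has little effect) can be checked directly against the accuracy table above. The short script below is only a worked check: the numbers are the mean accuracies copied from that table, while the dictionary layout and helper names are introduced here for illustration.

```python
# Mean accuracies (%) copied from the accuracy table above.
# Tuple order within each window: Neu vs. Neg, Pos vs. Neg, Pos vs. Neu.
ACCURACY = {
    "All":   {"W_5%": (90.1, 95.2, 95.8), "W_10%": (90.2, 95.3, 95.9), "W_20%": (90.3, 95.5, 95.9)},
    "Delta": {"W_5%": (70.9, 71.9, 71.1), "W_10%": (70.9, 72.1, 71.0), "W_20%": (70.7, 72.2, 70.9)},
    "Theta": {"W_5%": (67.7, 71.5, 71.6), "W_10%": (67.9, 71.3, 71.9), "W_20%": (67.8, 71.1, 71.6)},
    "Alpha": {"W_5%": (72.2, 78.9, 79.4), "W_10%": (72.4, 79.0, 79.1), "W_20%": (72.3, 79.0, 79.2)},
    "Beta":  {"W_5%": (87.1, 94.3, 94.1), "W_10%": (87.0, 94.3, 94.1), "W_20%": (87.1, 94.4, 94.1)},
    "Gamma": {"W_5%": (92.8, 96.2, 96.7), "W_10%": (92.9, 96.2, 96.7), "W_20%": (92.8, 96.2, 96.8)},
}
WINDOWS = ("W_5%", "W_10%", "W_20%")


def window_spread(band):
    """Largest max-min gap across window widths, taken over the three tasks."""
    per_window = [ACCURACY[band][w] for w in WINDOWS]
    return max(max(task) - min(task) for task in zip(*per_window))


def best_band(window, task_index):
    """Band with the highest mean accuracy for one window and one task."""
    return max(ACCURACY, key=lambda band: ACCURACY[band][window][task_index])


max_spread = max(window_spread(band) for band in ACCURACY)
winners = {best_band(w, t) for w in WINDOWS for t in range(3)}

print(f"largest window-width effect: {max_spread:.1f} percentage points")  # 0.4
print("best band in every column:", winners)  # {'Gamma'}
```

The check uses only the mean values and ignores the reported ± terms, so it says nothing about whether the differences between bands are statistically significant.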

| Bands | Frequency (Hz) | Window = 5 Std | Window = 10 Std | Window = 20 Std |
|---|---|---|---|---|
| Delta | 1 | 16.62 | 15.43 | 13.66 |
| Delta | 3 | 14.66 | 12.29 | 9.00 |
| Theta | 4 | 13.82 | 11.09 | 7.26 |
| Theta | 7 | 11.93 | 8.17 | 3.04 |
| Alpha | 8 | 11.37 | 7.33 | 1.88 |
| Alpha | 12 | 9.39 | 4.40 | 0.76 |
| Beta | 13 | 8.94 | 3.75 | 0.59 |
| Beta | 30 | 2.82 | 0.36 | 0.00 |
| Gamma | 31 | 2.51 | 0.61 | 0.24 |
| Gamma | 36 | 1.03 | 0.84 | 0.29 |
| Gamma | 41 | 0.23 | 0.22 | 0.21 |
| Gamma | 42 | 0.43 | 0.41 | 0.33 |
| Gamma | 43 | 0.62 | 0.55 | 0.32 |
| Gamma | 44 | 0.79 | 0.63 | 0.17 |
| Gamma | 45 | 0.93 | 0.64 | 0.04 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, Y.; Chen, J. Improved EEG-Based Emotion Classification via Stockwell Entropy and CSP Integration. Entropy 2025, 27, 457. https://doi.org/10.3390/e27050457