Abstract

Spiking neural networks (SNNs) perform well in various domains. However, SNNs trained under differential privacy (DP) protocols add uniform noise to the gradient parameters, which can degrade the trade-off between model utility and personal privacy. This work therefore proposes the adaptive differential private SNN (ADPSNN). It dynamically adjusts the privacy budget based on the correlation between the output spikes and the labels, and adds noise to the gradient parameters according to that budget. The ADPSNN is tested on four datasets with two spiking neuron models, leaky integrate-and-fire (LIF) and integrate-and-fire (IF). Experimental results show that the LIF neuron model provides superior utility on the MNIST (accuracy 99.56%) and Fashion-MNIST (accuracy 92.26%) datasets, while the IF neuron model performs well on the CIFAR10 (accuracy 90.67%) and CIFAR100 (accuracy 66.10%) datasets. Compared to existing methods, ADPSNN improves accuracy by 0.09% to 3.1%. The ADPSNN has many potential applications, such as image classification, health care, and intelligent driving.

1. Introduction

In recent years, machine learning has gained significant attention in various fields, such as image classification [1,2], traffic signal control [3,4], and speech recognition [5]. Artificial neural networks (ANNs) are a crucial branch of machine learning and have shown significant performance improvements [6,7]. Spiking neural networks (SNNs) [8,9], the third generation of ANNs, operate in an event-driven manner. Information between layers is transmitted through binary spikes using biological neuron models. SNNs offer advantages such as low power consumption, high robustness, and fast inference speed. They also provide good biological interpretability and hold significant potential for application in neuromorphic hardware [10]. The training datasets for SNNs may contain sensitive personal information such as passwords and genomic data. However, due to the limitations of current privacy protection mechanisms, it is difficult for SNNs to achieve an optimal balance between performance and privacy. To resist attacks without compromising performance, the development of robust privacy algorithms is crucial.

Differential privacy (DP) [11,12] is an effective privacy protection mechanism. It provides personalized privacy protection against potential attackers, even for the scenarios where attackers have access to all other records except the target record. DP provides various specific implementation solutions tailored to different privacy protection requirements and analysis tasks. The approach of [13] explores learning prior knowledge from images generated by random processes and transferring this prior knowledge to differential data to improve the privacy-utility trade-off of DP-SGD. The method of [14] can ensure both strict differential privacy and balanced computational accuracy of the model.

The membership inference risk of SNNs was shown to increase significantly when an input dropout strategy is introduced, exposing privacy vulnerabilities comparable to those of ANNs [15]. To address this issue, the DPSNN framework was proposed in [16], providing strong privacy guarantees for SNN models while maintaining high accuracy. In [17], the construction of low-power SNNs from pre-trained ANNs was explored, avoiding the exposure of sensitive information in datasets. Further, an Encrypted-SNN was proposed in [18] to address the privacy challenge during the conversion from ANN to SNN. The impact of quantization and surrogate gradient selection on SNN privacy protection was revealed in [19]. By integrating SNN models, the reliability of decision-making was maintained and the uncertainty quantification capability of SNNs was enhanced in [20]. A federated learning framework combining SNN and DP was proposed in [21], which reduced inference attack accuracy to 43% while maintaining low power consumption. A new approach for protecting data privacy while preserving data utility was provided in [22]. Additionally, the robustness of SNNs to corrupted inputs was demonstrated in [23] using noise-induced stochastic resonance and dynamic synapses. A neuromorphic vision-based bullying detection method using spatiotemporal spike signals was proposed in [24] to balance privacy protection and bullying recognition. Privacy issues in SNN-based variational autoencoders (VAEs) were first explored in [25], providing a privacy-preserving solution for image generation and reconstruction tasks.

However, current privacy-preserving neural network models based on DP still have limitations. One of the main challenges is the imbalance between privacy protection and model utility. Traditional DP methods add a fixed amount of noise, which can result in a poor trade-off between privacy and utility. Too much noise decreases the accuracy and utility of the model, whereas too little noise may not provide sufficient privacy protection. Therefore, an adaptive differential private SNN (ADPSNN) method is proposed, which balances model utility and training data privacy and improves the model’s accuracy. In addition, we analyze the impact of leaky integrate-and-fire (LIF) [26] and integrate-and-fire (IF) [27] neurons on the applicability of ADPSNN, so that the most suitable neuron model can be selected for different tasks and datasets. The three contributions of this work are summarized below.

- The proposed ADPSNN can adaptively adjust the amount of Gaussian noise added to the gradient. Specifically, it adds more noise to gradients that are weakly correlated with the model output to protect model privacy. Meanwhile, less noise is added to gradients that are strongly correlated with the model output to maintain model performance. This provides a more accurate method for protecting the training data privacy in SNNs;

- The performance impact of IF and LIF neurons on ADPSNN is analyzed. On the CIFAR10 and CIFAR100 datasets, the accuracy with IF neurons is 1.25% and 0.7% higher than with LIF neurons, respectively. This analysis helps select the most suitable neuron model for different tasks and datasets, thus enhancing the applicability of the approach;

- Our method has been extensively tested on four datasets to validate the feasibility of ADPSNN. It effectively balances privacy protection and the utility of sensitive data in SNNs. In terms of model accuracy, our approach improves on existing methods by 0.09% to 3.1%. Therefore, our method may be used in privacy-preserving scenarios such as image classification, healthcare, and intelligent driving.

2. Preliminaries and Background

In this section, SNN along with the LIF and IF neuron models are introduced in Section 2.1. The DP, global sensitivity, and Gaussian noise mechanisms are introduced in Section 2.2.

2.1. Spiking Neural Network

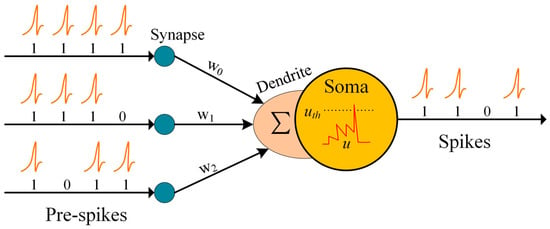

In an SNN, neurons play a fundamental role in transmitting information through discrete spiking events. A neuron consists of three parts, i.e., dendrite, soma, and axon, as shown in Figure 1. Neurons are connected by synapses. When a neuron receives input from other neurons through its synapses, the input is weighted by the strength of each synapse. This weighted input is then transmitted to the dendrites of the neuron. The soma maintains and updates the membrane potential based on the magnitude of the weighted presynaptic input received from the dendrites. The membrane potential represents the electrical charge across the neuronal membrane and reflects the neuron’s state. As the soma accumulates and processes the incoming inputs, the membrane potential gradually changes. Once the membrane potential crosses the neuron's threshold, a spike is generated. This spike can be seen as a discrete electrical signal.

Figure 1. Computational model of SNN.

The LIF model is a widely used neuron model for SNNs that gives a simple description of the behavior of a biological neuron. Its dynamics are specified by the time step, the membrane time constant, the membrane potential, and the output spike. The input to a neuron is the sum of the output spikes of the presynaptic neurons, each weighted by the synaptic weight between that presynaptic neuron and the current neuron. The model also has a reset potential, to which the membrane potential returns after a spike, and a firing threshold. In the continuous-time domain of the LIF model, the Dirac function can serve as a representation of the spiking signal. This function exhibits a peak of infinite height at the firing time, representing the firing event of the neuron. The neuron’s input can be interpreted as a current that is integrated into the membrane potential. When the membrane potential surpasses the threshold, the neuron produces a spike and transmits it to the neurons connected to it. After that, the membrane potential is reset to its initial value in preparation for the next input.

The IF model is another classical description of the neuronal dynamics of SNNs. The cell membrane is treated as a capacitor, and the dynamics of the IF neuron are governed by a differential equation. A neuron fires and returns to its resting potential when the membrane potential exceeds a threshold. The equation relates the rate of change of each neuron’s membrane potential to the resting potential, the input received by the neuron, the response coefficient of the neuron to that input, and the time constant of the neuron’s membrane potential.
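The difference between the two neuron models can be illustrated with a minimal discrete-time sketch. The update rules below are a commonly used discretization and are an assumption here, not necessarily the exact equations of this work; the function name `simulate_neuron` and its default parameter values are hypothetical.

```python
def simulate_neuron(inputs, tau=2.0, v_th=1.0, v_reset=0.0, leaky=True):
    """Simulate one spiking neuron over discrete time steps.

    inputs : weighted presynaptic input at each time step
    tau    : membrane time constant (only used by the leaky model)
    v_th   : firing threshold
    v_reset: potential the membrane returns to after a spike
    Returns (spikes, potentials after each step).
    """
    v = v_reset
    spikes, trace = [], []
    for x in inputs:
        if leaky:
            # LIF (assumed form): integrate input while decaying toward v_reset
            v = v + (x - (v - v_reset)) / tau
        else:
            # IF (assumed form): pure integration, no leak
            v = v + x
        fired = v >= v_th
        spikes.append(1 if fired else 0)
        if fired:
            # Hard reset after emitting a spike
            v = v_reset
        trace.append(v)
    return spikes, trace


if __name__ == "__main__":
    constant_input = [0.5] * 4
    print("IF :", simulate_neuron(constant_input, leaky=False)[0])
    print("LIF:", simulate_neuron(constant_input, tau=2.0, leaky=True)[0])
```

With a constant input of 0.5 and a threshold of 1.0, the IF neuron in this sketch fires on every second step, while the LIF neuron with `tau=2.0` settles toward 0.5 and never reaches the threshold, which shows how the leak changes the firing behavior for the same input.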

A convolutional SNN can use a network architecture that includes convolutional, pooling, and fully connected layers, akin to convolutional neural networks. However, its input differs significantly: it is either spike event data captured by dynamic vision sensors or spike inputs obtained by Bernoulli sampling of standard image datasets. Unlike traditional neural networks, which output continuous values via activation functions, a convolutional SNN classifies based on the spike signals in the output layer. The timing and frequency of spikes serve as the basis for representing pattern classes. This spike encoding allows convolutional SNNs to handle temporal information, which benefits event-driven tasks. The convolutional SNN thus provides a versatile framework for using spike signals in classification, for encoding and processing temporal data, and for handling dynamic event-driven data. This study benchmarks the performance of SNNs against conventional approaches in static image recognition, a domain that was extensively explored in prior research before the emergence of SNNs [28].
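The Bernoulli sampling of static images and the spike-based readout described above can be sketched as follows. This is an illustrative sketch under stated assumptions: pixel intensities are assumed to be scaled to [0, 1], the readout is a simple spike count (rate decoding), and the names `bernoulli_encode` and `rate_decode` are hypothetical.

```python
import random


def bernoulli_encode(pixels, time_steps, rng):
    """Turn pixel intensities in [0, 1] into a binary spike train.

    At every time step, each pixel emits a spike with probability
    equal to its intensity.
    """
    return [[1 if rng.random() < p else 0 for p in pixels]
            for _ in range(time_steps)]


def rate_decode(output_spikes):
    """Pick the class whose output neuron fired most often.

    output_spikes is a list over time steps, each a list with one
    binary value per output neuron.
    """
    counts = [sum(column) for column in zip(*output_spikes)]
    return counts.index(max(counts)), counts


if __name__ == "__main__":
    rng = random.Random(0)
    train = bernoulli_encode([0.0, 1.0, 0.5], time_steps=8, rng=rng)
    print(train)
    print(rate_decode([[0, 1, 0], [0, 1, 1], [1, 1, 0]]))
```

A pixel with intensity 0 never spikes and a pixel with intensity 1 spikes at every step, so brighter pixels translate into higher firing rates over the simulation window.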

2.2. Differential Privacy

DP aims to protect data confidentiality by introducing noise or altering the original dataset. This obfuscation hampers the ability of adversaries to ascertain the presence of specific entries. Even in scenarios where an attacker has comprehensive access to all but one particular record, DP ensures that the existence of that singular record within the database remains indiscernible. The definition of DP provides a well-calibrated trade-off between personal privacy and data utility, offering a measurable degree of privacy assurance. It mandates that any modification to the dataset should exert a negligible effect on the outcomes of analyses that are not specific to any one individual. Consequently, DP upholds the privacy of individuals while maintaining the integrity and precision of the overall dataset.

Definition 1 (ε-Differential Privacy [11]).

Given a pair of adjacent datasets $D$ and $D'$, a randomized algorithm $\mathcal{M}: \mathcal{D} \to \mathcal{R}$ satisfies $\varepsilon$-DP if and only if, for any subset of outputs $S \subseteq \mathcal{R}$,

$$\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S],$$

where $\Pr[\cdot]$ represents probability. This condition bounds the ratio of the probabilities that a particular output subset $S$ occurs when the algorithm is executed on the adjacent datasets $D$ and $D'$: the ratio does not exceed $e^{\varepsilon}$. The privacy budget $\varepsilon$ is a crucial factor influencing the strength of privacy protection. It represents the level of privacy protection offered by the algorithm $\mathcal{M}$. A smaller privacy budget ensures stronger privacy guarantees, meaning that the output of the algorithm does not change significantly when any single record is removed from the database. The privacy budget is typically chosen to be small, but it must be non-zero. In theory, a zero privacy budget would provide the highest level of protection for sensitive data during training. In this scenario, however, the model would produce identically distributed, indistinguishable outcomes for all adjacent dataset pairs, stripping the output of any meaningful information about the data. A zero privacy budget therefore destroys the balance between model utility and the protection of data privacy. $\varepsilon$-differential privacy offers a precise definition of privacy protection, and $(\varepsilon, \delta)$-differential privacy is a more lenient variant of it. The parameter $\delta > 0$ quantifies the probability of a privacy breach even under stringent DP conditions. Informally, $(\varepsilon, \delta)$-differential privacy means that a randomized method attains $\varepsilon$-differential privacy with high probability, where that probability is governed by $\delta$. The definition of $(\varepsilon, \delta)$-differential privacy is

$$\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta,$$

which is useful where $\varepsilon$-differential privacy is excessively stringent. The privacy analysis here builds on the Gaussian Differential Privacy (GDP) framework proposed by Dong et al. [29]. In GDP, a mechanism is deemed to satisfy $\mu$-GDP if distinguishing its outputs on any two adjacent datasets is at least as hard as distinguishing the standard normal distribution $\mathcal{N}(0, 1)$ from the Gaussian distribution $\mathcal{N}(\mu, 1)$, which has a mean of $\mu$ and a standard deviation of one.

Gaussian noise is widely used in DP. It is injected by the DP mechanism to preserve the privacy of sensitive data. Gaussian noise takes values over the real numbers, so it generates continuous random values that match the characteristics of real data well. By adjusting the standard deviation of the Gaussian noise through the global sensitivity and the privacy budget, the size of the noise can be flexibly controlled to balance privacy protection and data utility. The noise is generated independently on each occasion, uninfluenced by prior noise or data inputs, which keeps the mechanism reliable and consistent. Global sensitivity is a critical metric in determining the noise level required for DP. It is the maximum variation of a function over all pairs of adjacent datasets. Within the Gaussian mechanism, the global sensitivity determines the scale of the noise to be added. As a rule of thumb, a larger global sensitivity requires more noise.

Definition 2 (Global sensitivity [11]). The global sensitivity depends on the specific function $f$. For a query function $f$, it is obtained as the maximum difference of the function over adjacent datasets, which is defined as

$$\Delta f = \max_{D, D'} \lVert f(D) - f(D') \rVert,$$

where $D$ and $D'$ are any two neighboring databases, and $\lVert f(D) - f(D') \rVert$ is the distance between $f(D)$ and $f(D')$. By determining the global sensitivity, combined with privacy parameters such as $\varepsilon$, an appropriate standard deviation of Gaussian noise can be chosen to achieve the desired level of privacy protection.
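As a small worked example of global sensitivity, the sketch below estimates the largest change of a clipped-mean query when a single record is replaced. The query, the clipping bound, and the helper names `clipped_mean` and `empirical_sensitivity` are assumptions chosen for illustration; the search only covers neighbors of one given dataset, so in general it yields a lower bound on the global sensitivity.

```python
def clipped_mean(data, clip):
    """Mean of the records after clipping each one to [0, clip]."""
    return sum(min(max(x, 0.0), clip) for x in data) / len(data)


def empirical_sensitivity(query, data, candidates):
    """Largest |query(D) - query(D')| over neighbors D' of D.

    A neighbor D' is obtained by replacing exactly one record of D
    with one of the candidate values.
    """
    base = query(data)
    worst = 0.0
    for i in range(len(data)):
        for c in candidates:
            neighbor = data[:i] + [c] + data[i + 1:]
            worst = max(worst, abs(base - query(neighbor)))
    return worst


if __name__ == "__main__":
    data = [0.0, 1.0, 0.5, 1.0]
    sens = empirical_sensitivity(lambda d: clipped_mean(d, 1.0), data, [0.0, 1.0])
    print(sens)  # equals clip / len(data) for this dataset
```

For a mean over n records clipped to [0, C], replacing one record can move the result by at most C/n, which is why clipping is a common way to bound sensitivity before adding noise.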

Definition 3 (Gaussian mechanism [11,29]). The Gaussian mechanism is a common privacy protection method that adds random noise to the data to protect sensitive information. For any $\delta \in (0, 1)$, there exists a $\sigma$ such that the mechanism $\mathcal{M}$ satisfies $(\varepsilon, \delta)$-DP. The mechanism is

$$\mathcal{M}(D) = f(D) + \mathcal{N}(0, \sigma^{2}),$$

where $\sigma$ is the standard deviation of the Gaussian noise and determines the amount of noise added to the data. The privacy budget is negatively correlated with the noise level, that is, a lower privacy budget means a larger noise scale. The relaxation $\delta$ is the probability that the strict requirement of data privacy protection is violated. For a $\mu$-GDP mechanism, it is given by [29]

$$\delta(\varepsilon) = \Phi\!\left(-\frac{\varepsilon}{\mu} + \frac{\mu}{2}\right) - e^{\varepsilon}\, \Phi\!\left(-\frac{\varepsilon}{\mu} - \frac{\mu}{2}\right),$$

where $\Phi$ is the cumulative distribution function of the standard normal distribution.
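The relationship between the privacy budget, the relaxation, and the noise scale can be made concrete with a short sketch. It uses two standard results that are assumed here rather than taken from this work: the classical calibration of the Gaussian mechanism, which is valid for a privacy budget between 0 and 1, and the conversion from a GDP parameter to the relaxation given by Dong et al. [29]. All function names are hypothetical.

```python
import math
import random


def gaussian_sigma(sensitivity, epsilon, delta):
    """Classical noise calibration for (epsilon, delta)-DP, 0 < epsilon < 1."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def gaussian_mechanism(value, sigma, rng):
    """Release a scalar query result with additive Gaussian noise."""
    return value + rng.gauss(0.0, sigma)


def std_normal_cdf(x):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gdp_delta(epsilon, mu):
    """Relaxation delta(epsilon) implied by a mu-GDP guarantee."""
    return (std_normal_cdf(-epsilon / mu + mu / 2.0)
            - math.exp(epsilon) * std_normal_cdf(-epsilon / mu - mu / 2.0))


if __name__ == "__main__":
    rng = random.Random(42)
    for eps in (0.25, 0.5, 0.9):
        sigma = gaussian_sigma(sensitivity=1.0, epsilon=eps, delta=1e-5)
        noisy = gaussian_mechanism(10.0, sigma, rng)
        print(f"eps={eps}: sigma={sigma:.3f}, noisy output={noisy:.3f}")
    print("delta for mu=1, eps=1:", gdp_delta(1.0, 1.0))
```

Halving the privacy budget doubles the noise standard deviation under the classical calibration, which is the negative correlation between budget and noise level described above.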

3. ADPSNN

A novel privacy-preserving paradigm for SNNs based on correlation analysis is given in this section. The proposed method safeguards the privacy of the training dataset while maintaining the model’s effectiveness by injecting noise into the gradient. More precisely, the proposed mechanism combines the Gaussian mechanism with a correlation analysis method and adjusts the noise adaptively in response to the relationship between the output spikes and the corresponding labels.

3.1. The ResNetSNN Architecture

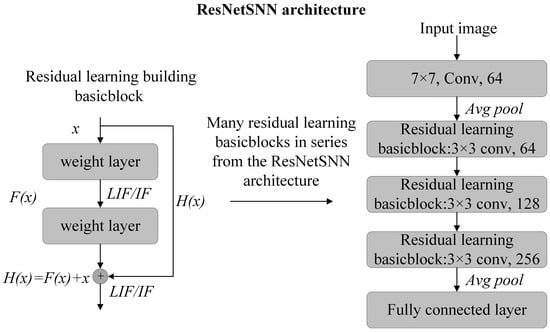

In [30], a ResNet model with LIF neurons is selected for the classification task. Our work combines ResNet with LIF and IF spiking neurons to construct a ResNetSNN model. The detailed structure of the ResNetSNN model is depicted in Figure 2. The ResNetSNN architecture is built from a fundamental building block known as the residual block, which implements residual connections. This structure can improve the model’s performance by mitigating gradient explosion and vanishing in SNNs. Initially, the input data is processed by a convolutional layer for feature extraction and then normalized by a batch normalization layer, which accelerates training and improves the model’s generalization. Following this, the data pass through a spiking activation (LIF or IF), which adds non-linearity to the model. Finally, the residual connection is completed by adding the output of a skip connection to the processed data. Here, the input data is represented by $x$, the residual function by $F(x)$, and the original output by $H(x)$, so the residual connection is realized as $H(x) = F(x) + x$. Instead of learning the original output directly, the network learns the residual function. This enables the network to use the information in the input data more effectively. This work uses a ResNetSNN18 architecture for the MNIST [31] and Fashion-MNIST [32] datasets and a ResNetSNN19 architecture for the CIFAR10 and CIFAR100 datasets.

Figure 2. The architecture of the ResNetSNN model.

3.2. Gradient Perturbation for SNN

In this research, a distinctive ADPSNN mechanism is devised to ensure privacy for SNN. This mechanism modulates the quantity of Gaussian noise infused into the gradient, thereby not only successfully protecting the privacy of the data in the training set, but also preserving the operational efficacy of the model.

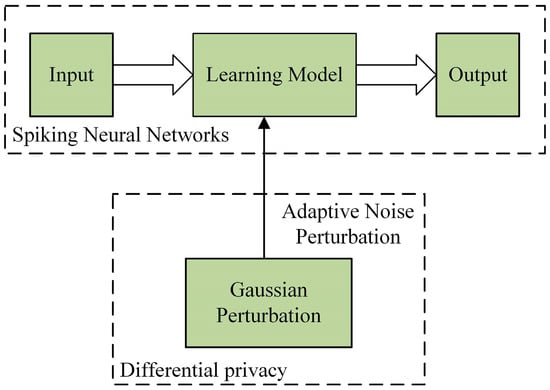

The ADPSNN mechanism combines the advantages of SNN and DP, as shown in Figure 3, improving the performance of the SNN while protecting individual privacy. The mechanism consists of three main components: input, learning model, and output. In the input phase, the data are pre-processed and fed into the learning model, which is built on the ResNetSNN. The network consists of multiple spiking neurons, each receiving input from other neurons and computing its output. Noise is injected adaptively into the gradient parameters as they are propagated, thereby preserving privacy. DP is realized through the Gaussian mechanism, which protects data privacy by adding Gaussian noise; the noise is added adaptively to balance privacy protection against network performance.

Figure 3. Diagram of the overall ADPSNN mechanism.

In each training step, a set L of training samples is selected at random from the dataset D. The model parameters are updated on each mini-batch over a fixed number of steps, starting from an initial point. Each update is determined by the regularization parameter, the learning rate at the current step, and the gradient over the sampled data.

To provide a learning mechanism for SNNs that meets the requirements of differential privacy, noise is incorporated into the gradient update procedure. At each DP step, a random Gaussian noise vector is added to the gradient before the parameters are updated.
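The noisy gradient update can be sketched as a single step of differentially private SGD with a fixed noise scale. This is a generic illustration under stated assumptions, not the exact update rule of this work: per-sample gradient clipping, L2 regularization as weight decay, and the names `clip_gradient` and `dp_sgd_step` are all assumed for the example.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale a gradient vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(theta, per_sample_grads, lr, weight_decay, max_norm, sigma, rng):
    """One noisy update on a mini-batch (assumed DP-SGD form).

    Each per-sample gradient is clipped, the clipped gradients are summed,
    Gaussian noise with standard deviation sigma * max_norm is added to every
    coordinate, and the noisy mean drives a regularized gradient step.
    """
    n = len(per_sample_grads)
    clipped = [clip_gradient(g, max_norm) for g in per_sample_grads]
    new_theta = []
    for k, w in enumerate(theta):
        grad_sum = sum(g[k] for g in clipped)
        noisy_mean = (grad_sum + rng.gauss(0.0, sigma * max_norm)) / n
        new_theta.append(w - lr * (noisy_mean + weight_decay * w))
    return new_theta


if __name__ == "__main__":
    rng = random.Random(0)
    theta = [1.0, -1.0]
    grads = [[3.0, 4.0], [0.0, 0.0]]
    print(dp_sgd_step(theta, grads, lr=0.1, weight_decay=0.0,
                      max_norm=1.0, sigma=1.0, rng=rng))
```

Clipping bounds the contribution of any single sample, so the sensitivity of the summed gradient is at most `max_norm`, and the noise standard deviation is expressed as a multiple of that bound.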

Introducing a fixed amount of noise into the gradient can affect the learning outcome of the model, potentially upsetting the balance between model utility and the privacy protection of the training data. The ADPSNN mechanism addresses this by introducing noise adaptively, depending on the correlation between the model output spikes and the labels. First, the correlation between the output spikes and the labels is computed for each data item. It is determined by the network's prediction for that item, its true label, the total number of items in dataset D, and the averages of the predictions and of the true labels over all data.

Each correlation value is then normalized to a bounded range using the maximum and minimum correlation values. Next, an adaptive Gaussian noise strategy is applied through a relevance ratio: gradients with a lower correlation to the model output receive more noise, while gradients with a higher correlation receive less noise. The privacy budget is then calculated accordingly.

Finally, Gaussian noise is added to the gradient for protection.
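The adaptive strategy can be illustrated with a minimal sketch. It rests on several assumptions that are not confirmed by the text: the correlation is taken to be the Pearson correlation between a sample's output spike rates and its one-hot label, the normalization is min-max scaling to [0, 1], and the mapping from normalized correlation to noise scale is a hypothetical linear interpolation between `sigma_min` and `sigma_max`.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # correlation is undefined for constant input; treat as none
    return cov / math.sqrt(vx * vy)


def min_max_normalize(values):
    """Rescale values to [0, 1] using their minimum and maximum."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def adaptive_sigmas(correlations, sigma_min, sigma_max):
    """Assumed mapping: lower correlation gives a larger noise scale."""
    return [sigma_max - r * (sigma_max - sigma_min)
            for r in min_max_normalize(correlations)]


if __name__ == "__main__":
    label = [1.0, 0.0, 0.0]
    spike_rates = [[0.9, 0.1, 0.0],   # output agrees strongly with the label
                   [0.3, 0.4, 0.3],   # output disagrees with the label
                   [0.5, 0.3, 0.2]]   # output agrees moderately
    corrs = [pearson(r, label) for r in spike_rates]
    print(adaptive_sigmas(corrs, sigma_min=0.5, sigma_max=1.5))
```

In this sketch the sample whose output matches its label best receives the smallest noise scale and the worst-matching sample receives the largest, which mirrors the rule that weakly correlated gradients get more noise.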

Theorem 1.

Suppose the DP can run via Equation (9) for T batches , where the batches are disjoint. If for all and , -differential privacy is satisfied by using its additional Gaussian noise.

Proof.

Let and be two neighboring batches. Let the parameters on and be denoted by and , which can be calculated by

and the inequality can be calculated by

where , , . Because and are two neighboring batches, only one piece of data is different, therefore . In addition, , and . This leads to the inequality . □

To achieve DP protection, the gradient can be inputted to adaptive noise while computing , which is calculated by

where the perturbation of the gradient is . Then the inequality can be calculated by

where , .

4. Experiments and Results

4.1. Datasets

The CIFAR100, CIFAR10, MNIST, and Fashion-MNIST datasets are widely used for image classification tasks. CIFAR100 contains 100 categories covering a wide variety of objects, animals, plants, vehicles, and scenes, making it a diverse dataset. Each category has 600 images of 32 × 32 pixels, 500 for training and 100 for testing. CIFAR10 is a small dataset containing 60,000 color images in total, divided into a training set of 50,000 images and a test set of 10,000 images. The MNIST dataset comprises 70,000 grayscale images of 28 × 28 pixels; 60,000 are used to train the model and 10,000 to test it. The images show handwritten digits from 0 to 9, with approximately 7000 samples per digit. Fashion-MNIST contains images of clothing and accessories for testing and validating image classification algorithms. It has the same number of categories, total amount of data, and sample dimensions as MNIST. The hardware, software, and hyperparameter settings are summarized in Table 1 to ensure reproducibility.

Table 1. Hardware, software, and hyperparameters information.

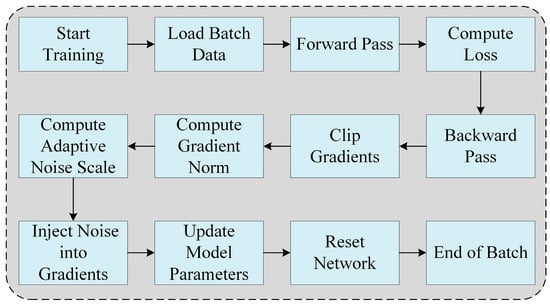

In the training process, adaptive noise is injected into the gradients as shown in Figure 4. After computing the gradients during the backward pass, the gradient norm is calculated. Based on the correlation between the model’s output and the labels, as well as the differential privacy parameters, an adaptive noise scale is dynamically determined. This noise scale is then used to generate Gaussian noise, which is added to the gradients. The noisy gradients are applied to update the model parameters, ensuring both differential privacy and improved robustness. Finally, the network is reset to prepare for the next batch or timestep.

Figure 4. Adaptive noise injection gradient flowchart during training process.

4.2. Experiments on Differentially Private Algorithms

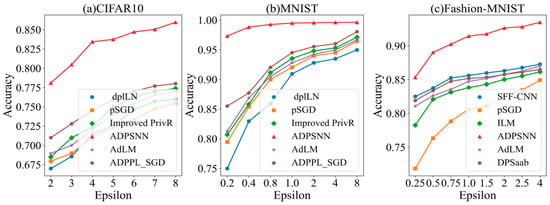

Figure 5 illustrates that the ADPSNN achieves higher accuracy than other algorithms under different privacy budgets on the CIFAR10, MNIST, and Fashion-MNIST datasets. On the CIFAR10 dataset, the ADPSNN surpasses the ADPPL_SGD [33] optimization method by 7.95% in accuracy, attaining a peak accuracy of 85.97%, and also outperforms the DP-SGD algorithm with identical Laplace noise (dpILN), pSGD [34], Improved PrivR [35], and AdLM [36]. On the MNIST dataset, the accuracy is likewise higher than that of the other algorithms. When the privacy budget is two, the ADPSNN’s accuracy advantage over ADPPL_SGD is largest, at 11.80%, and the margin narrows as the privacy budget grows. With the privacy budget set to eight, the test accuracy reaches 99.63%, exceeding ADPPL_SGD by 1.59%. On the Fashion-MNIST dataset, the performance is better than the SFF-CNN [37], pSGD, ILM [36], AdLM, and DPSaab [37] methods, and accuracy improves gradually as the privacy budget increases. When the privacy budget is two, the ADPSNN outperforms the best competing algorithm, SFF-CNN [37], by 6.33%, achieving an accuracy of 92.64%. Overall, Figure 5 shows that the ADPSNN maintains its utility and high accuracy, outperforming alternative algorithms under identical privacy-preserving constraints.

Figure 5. The test accuracy of the ADPSNN in comparison to competing models.

4.3. Experiments on Different Privacy Levels

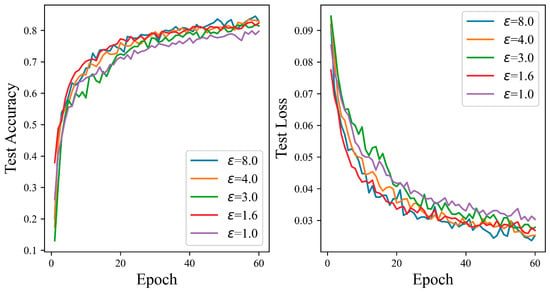

Figure 6 shows that the accuracy of the proposed mechanism increases with the privacy budget on the CIFAR10 dataset. When the privacy budget is one, the test accuracy is generally lower than at other values. As the number of iterations increases, accuracy rises across all privacy budgets. After 60 iterations at privacy budgets of 1.6, 3.0, and 4.0, the test accuracies are 77.58%, 82.21%, and 82.40%, respectively. When the privacy budget is eight, the ADPSNN attains its highest accuracy of 83.95%, an improvement of 4.08% over the accuracy observed at the lowest budget of one.

Figure 6. The test accuracy of the ADPSNN for different epsilons on the CIFAR10 dataset.

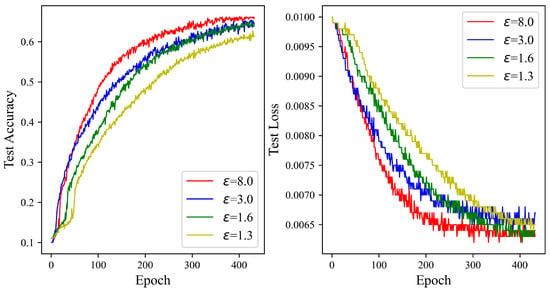

Figure 7 illustrates a general upward trend in the test accuracy curve in tandem with increases in the privacy budget. A gradual climb in accuracy is witnessed when the privacy budget is set to 1.3, cresting at 62.67%. The zenith of accuracy is recorded at 65.42% with a privacy budget of 8.0, marking a 2.75% enhancement over the budget of 1.3. At privacy budgets of 1.6 and 3.0, the accuracies attained are 64.92% and 65.22%, respectively. The experimental results underscore the ADPSNN mechanism’s capacity to maintain robust performance on the complex CIFAR100 dataset while affording a substantial degree of data privacy protection.

Figure 7. The test accuracy of the ADPSNN for different epsilons on the CIFAR100 dataset.

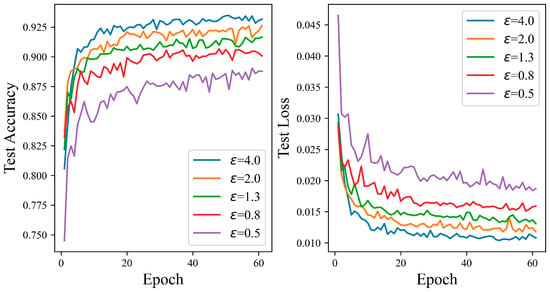

Figure 8 demonstrates that the test accuracy curve of the proposed mechanism generally rises as the privacy budget increases. When the privacy budget is 0.5, the SNN has the lowest test accuracy. However, it still achieves a high accuracy of 98.91%, indicating that the SNN maintains good performance even when larger gradient perturbations are injected. When the privacy budget rises to 0.8, the accuracy is 0.44% higher than at a privacy budget of 0.5 and remains relatively stable. Finally, when the privacy budget reaches four, the test accuracy peaks at 99.60%. Overall, Figure 8 shows that the ADPSNN mechanism can maintain relatively high performance on the MNIST dataset while protecting sensitive information.

Figure 8. The test accuracy of the ADPSNN for different epsilons on the MNIST dataset.

Figure 9 shows that the test accuracy curves of the proposed mechanism all rise in tandem with the increase in the privacy budget, and this rising trend is obvious. When the privacy budget is 0.5, the test accuracy curve of SNN is the lowest as a whole, but it still achieves a high accuracy of 89.03%. When the privacy budget is 0.8, 1.3, 2.0, and 4.0, the accuracy reaches 90.66%, 91.67%, 92.64%, and 93.48%, respectively. When the privacy budget is four, the performance is 4.45% higher than that of 0.5. With the increase in the number of iterations, the test accuracy curve remains relatively stable. The findings of the experiment confirm the high effectiveness of ADPSNN, and the ADPSNN can maintain good performance while protecting sensitive information.

Figure 9. The test accuracy of the ADPSNN for different epsilons on the Fashion-MNIST dataset.

4.4. Experiments on Different SNN Algorithms

Table 2 shows that the ADPSNN outperforms other SNNs. On the MNIST dataset, the test accuracy of the LIF model reaches 99.56%, which is 0.93% higher than DPSNN [16], 0.09% higher than the Encrypted-SNN method in [18], 0.56% higher than the method of [38], and 0.34% higher than the approach of [19]. The training accuracy of the IF model reaches 99.47%, which is 0.09% lower than that of the LIF model; the time step is eight in the training process. The ADPSNN can therefore protect against sensitive information leakage while maintaining good performance. On the MNIST dataset, the LIF model performs better than the IF model.

Table 2. Performance of SNN on MNIST dataset.

Table 3 indicates that, on the CIFAR10 dataset with a time step of eight, the accuracy of the IF neuron model is 90.67% and the accuracy of the LIF neuron model is 89.42%. This is close to the roughly 90.00% achieved by training algorithms without privacy protection. With the IF and LIF neuron models, the accuracy is 1.37% and 0.12% higher than the PrivateSNN training method in [17], and 2.57% and 1.32% higher than the Encrypted-SNN method [18], respectively. Compared with the methods of [15,19], the proposed method improved the performance of LIF neurons by 10.43% and 1.22%, respectively. Table 3 shows that the ADPSNN can strike a balance between privacy and performance on the CIFAR10 dataset, and that the IF neuron model performs better than the LIF neuron model.

Table 3. Performance comparisons of different models on CIFAR10 datasets.

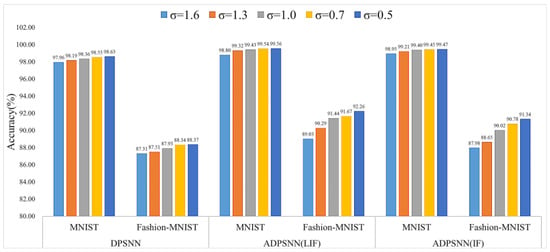

Figure 10 illustrates that the ADPSNN performs better than the DPSNN privacy protection method with both the LIF and IF neuron models. On both the MNIST and Fashion-MNIST datasets, the accuracy increases as the noise scale is reduced. The increase is slightly slower on MNIST than on Fashion-MNIST, which is consistent with MNIST being a much simpler dataset. When the noise scale is 1.6, the accuracy of the proposed ADPSNN mechanism on the MNIST dataset is 98.80% using the IF neuron model. Using the LIF neuron model, the accuracy is 98.95%, which is higher than the 97.96% achieved by the DPSNN method. Figure 10 also reveals that, at noise scales of 1.3, 1.0, 0.7, and 0.5, the LIF model consistently outperforms the IF model on both the MNIST and Fashion-MNIST datasets within the ADPSNN framework. This suggests that the LIF model is more effective than the IF neuron model on these datasets.

Figure 10.

Performance comparisons of different studies on MNIST and Fashion-MNIST datasets with different noise scales.
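The dependence of accuracy on the noise scale can be made concrete with a minimal sketch of a clipped, noised gradient average in the style of DP-SGD [34]. It is an illustration only, not the ADPSNN implementation: the function names, the clipping norm of 1.0, the synthetic gradients, and the convention that the noise standard deviation equals the noise scale times the clipping norm are all assumptions.

```python
import numpy as np

def noisy_gradient(per_sample_grads, clip_norm, noise_scale, rng):
    """Clip each per-sample gradient, sum, add Gaussian noise, and average."""
    grads = np.asarray(per_sample_grads, dtype=float)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    # Scale down any gradient whose L2 norm exceeds clip_norm.
    clipped = grads * np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    # Noise standard deviation is noise_scale * clip_norm (assumed convention).
    noise = rng.normal(0.0, noise_scale * clip_norm, size=grads.shape[1])
    return (clipped.sum(axis=0) + noise) / grads.shape[0]

def mean_error(noise_scale, trials=200, seed=0):
    """Average distance between the noisy and noise-free gradient estimates."""
    rng = np.random.default_rng(seed)
    grads = rng.normal(size=(64, 10))  # synthetic per-sample gradients (hypothetical)
    clean = noisy_gradient(grads, 1.0, 0.0, rng)
    errors = [np.linalg.norm(noisy_gradient(grads, 1.0, noise_scale, rng) - clean)
              for _ in range(trials)]
    return float(np.mean(errors))

if __name__ == "__main__":
    # Noise scales taken from the Figure 10 discussion.
    for sigma in (1.6, 1.3, 1.0, 0.7, 0.5):
        print(f"noise scale {sigma}: mean error {mean_error(sigma):.4f}")
```

In this sketch the gradient error shrinks in proportion to the noise scale, which is one plausible reason why the accuracy bars rise as the noise scale is reduced.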

Table 4 shows the effectiveness of the proposed ADPSNN mechanism on the CIFAR100 dataset. The comparison is based on the training methods described in [10,17,18,46]. The results indicate that the performance of the ADPSNN is stable on the CIFAR100 dataset: the ADPSNN achieves 66.1% with the IF neuron model and 65.4% with the LIF neuron model. ADPSNN (LIF) and ADPSNN (IF) are 2.4% and 3.1% higher than the Encrypted-SNN method [18], 3.1% and 3.8% higher than the PrivateSNN method [17], and 1.5% and 2.2% higher than the method of [15], respectively. This means that the ADPSNN can maintain high performance while protecting sensitive information on the CIFAR100 dataset.

Table 4.

Performance comparisons of different models on the CIFAR100 dataset.

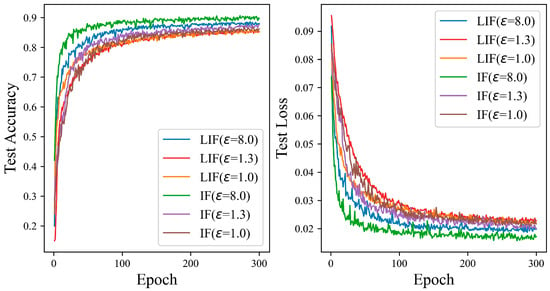

4.5. Experiments on the LIF and IF Models

Figure 11 compares the ADPSNN trained and tested with the IF and LIF neuron models on the CIFAR10 dataset; overall, the IF neuron model performs better. When the privacy budget is 1.0, the LIF neuron model initially performs better, with its test accuracy exceeding that of the IF neuron model over the earlier iterations. However, this trend reverses around the 95th iteration, where the test accuracy curve of the IF neuron model begins to exceed that of the LIF neuron model. At the higher privacy budgets of 1.3 and 8.0, the test accuracy of the IF neuron model is markedly higher than that of the LIF neuron model, with gaps of 1.99% and 2.04%, respectively. These experimental results support the feasibility of the proposed mechanism. In particular, on the more complex CIFAR10 dataset, the IF neuron model outperforms the LIF neuron model, and both neuron models maintain high test accuracy while providing privacy protection.

Figure 11.

The test accuracy of the ADPSNN using LIF and IF neurons for different epsilons on the CIFAR10 dataset.

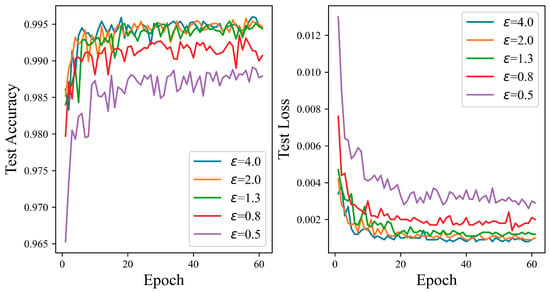

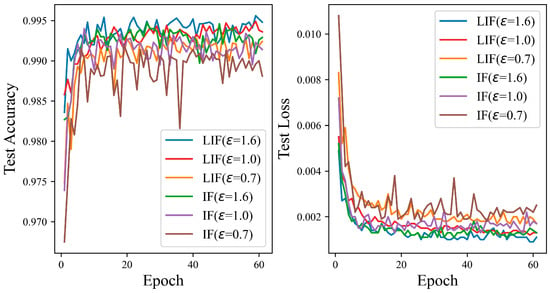

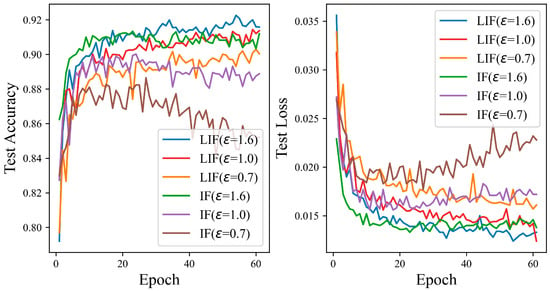

Figure 12 shows that the test accuracy of the LIF neuron model is better than that of the IF neuron model on the MNIST dataset. Overall, the test curve of the IF neuron model is relatively smoother, with smaller fluctuations than that of the LIF neuron model. However, when the privacy budget is 0.7 or 1.0, the test and loss curves of the IF model fluctuate noticeably, because more Gaussian noise is added at these smaller budgets, which degrades the model's performance. At privacy budgets of 0.7, 1.0, and 1.6, with the iteration count held at 60, the gap in test accuracy between the IF and LIF models is 0.43%, 0.22%, and 0.19%, respectively. The test accuracy of the LIF neuron model reaches 99.56%.

Figure 12.

The test accuracy of the ADPSNN using LIF and IF neurons for different epsilons on the MNIST dataset.

Figure 13 indicates that the LIF neuron model performs better than the IF neuron model on the Fashion-MNIST dataset. As the privacy budget increases, the test accuracy curve of the LIF neuron model generally shifts upward. In contrast, at privacy budgets of 0.7 and 1.0, the test accuracy curve of the IF neuron model declines as the number of iterations increases, and the decline is more pronounced at 0.7 than at 1.0. Specifically, at privacy budgets of 0.7, 1.0, and 1.6, the ADPSNN mechanism with the LIF model reaches test accuracies of 90.27%, 91.44%, and 92.26%, respectively, while the IF neuron model achieves 88.65%, 90.02%, and 91.34%. These results suggest that the ADPSNN mechanism balances model utility and privacy protection well, particularly on the Fashion-MNIST dataset.

Figure 13.

The test accuracy of the ADPSNN using LIF and IF neurons for different epsilons on the Fashion-MNIST dataset.
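To make the distinction between the two neuron models compared in this section concrete, the following minimal sketch simulates a single IF neuron and a single LIF neuron under a common discrete-time formulation with a hard reset. The threshold, reset potential, time constant, and input value are illustrative assumptions and are not taken from the experiments; only the eight time steps mirror the setting reported above.

```python
def simulate(inputs, v_threshold=1.0, v_reset=0.0, tau=None):
    """Simulate one spiking neuron; tau=None gives IF, a float tau gives LIF."""
    v = v_reset
    spikes = []
    for x in inputs:
        if tau is None:
            # IF: the membrane potential integrates the input without any leak.
            v = v + x
        else:
            # LIF: the potential also decays toward v_reset with time constant tau.
            v = v + (x - (v - v_reset)) / tau
        fired = v >= v_threshold
        spikes.append(int(fired))
        if fired:
            v = v_reset  # hard reset after a spike
    return spikes

inputs = [0.6] * 8                       # constant input over eight time steps
if_spikes = simulate(inputs)             # integrate-and-fire
lif_spikes = simulate(inputs, tau=2.0)   # leaky integrate-and-fire

if __name__ == "__main__":
    print("IF :", if_spikes)
    print("LIF:", lif_spikes)
```

With these assumed values the IF neuron accumulates the input and fires on every second step, while the leak keeps the LIF potential below the threshold. The two models can therefore produce quite different spike trains from the same input, which may contribute to the dataset-dependent differences reported above.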

5. Conclusions

To address the difficulty of balancing data privacy protection and high performance in SNN models, this work proposes an adaptive DP learning mechanism for SNN models, namely ADPSNN. ADPSNN not only introduces Gaussian noise to meet the requirements of DP, but also dynamically adjusts the noise intensity added to the gradients based on correlation. Its strength lies in analyzing the correlation between the spike sequence output by the model and the true labels, thereby achieving precise control over noise allocation. For gradients that are weakly correlated with the model output, ADPSNN applies higher levels of noise to blur sensitive information and strengthen data privacy protection. Conversely, for strongly correlated gradients, the amount of noise is moderately reduced to avoid unnecessary damage to model performance. In addition, this work examines the performance of the LIF and IF neuron models under the ADPSNN mechanism and analyzes their performance and DP effect on complex classification tasks. Experimental results demonstrate that ADPSNN can maintain high model performance while ensuring data privacy, achieving a balance between the two and providing strong support for the application of SNNs in privacy-sensitive scenarios.
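The allocation principle summarized above can be illustrated with a minimal sketch. It is not the ADPSNN algorithm itself: the relevance scores, the proportional budget split, the small floor that prevents a zero budget, and the use of the classical Gaussian-mechanism calibration (usually stated for privacy budgets below one) are all assumptions chosen for illustration.

```python
import numpy as np

def allocate_budgets(relevance, total_epsilon, floor=0.05):
    """Split total_epsilon across gradient groups in proportion to relevance."""
    r = np.abs(np.asarray(relevance, dtype=float)) + floor  # floor avoids a zero budget
    return total_epsilon * r / r.sum()

def gaussian_sigma(epsilon, delta, sensitivity):
    """Classical Gaussian-mechanism noise standard deviation (assumed calibration)."""
    return sensitivity * np.sqrt(2.0 * np.log(1.25 / delta)) / epsilon

def add_adaptive_noise(grads, relevance, total_epsilon, delta, clip_norm, rng):
    """Clip each gradient group and add noise scaled by its own budget."""
    budgets = allocate_budgets(relevance, total_epsilon)
    noisy = []
    for g, eps in zip(grads, budgets):
        g = np.asarray(g, dtype=float)
        # Clipping bounds the L2 norm, and hence the sensitivity, by clip_norm.
        g = g * min(1.0, clip_norm / max(np.linalg.norm(g), 1e-12))
        sigma = gaussian_sigma(eps, delta, clip_norm)
        noisy.append(g + rng.normal(0.0, sigma, size=g.shape))
    return noisy, budgets

# Hypothetical relevance scores for three gradient groups (strong to weak).
relevance = [0.9, 0.5, 0.1]
budgets = allocate_budgets(relevance, total_epsilon=1.0)
sigmas = gaussian_sigma(budgets, delta=1e-5, sensitivity=1.0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    grads = [rng.normal(size=4) for _ in relevance]
    noisy, _ = add_adaptive_noise(grads, relevance, 1.0, 1e-5, 1.0, rng)
    for r, eps, s in zip(relevance, budgets, sigmas):
        print(f"relevance {r:.1f}: budget {eps:.3f}, noise std {s:.2f}")
```

In this sketch the per-group budgets sum to the total budget, and the weakly correlated group receives the smallest budget and therefore the largest noise, matching the behavior described above.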

In the future, the development of the ADPSNN mechanism will focus on three key areas. Firstly, the gradient adaptation strategy will be refined and advanced algorithms will be implemented to improve learning convergence and to deepen the understanding of SNN mechanisms. Secondly, fine-grained noise injection techniques will be developed to better balance privacy and performance. Lastly, the applications of ADPSNN will be extended to key areas such as healthcare, finance, and intelligent transportation, contributing to the development of SNNs and differential privacy-preserving techniques by combining expertise in signal processing and cryptography. For example, in healthcare, ADPSNN can be applied to secure medical image analysis and patient data processing. In finance, it can enhance fraud detection systems while protecting transaction privacy. In intelligent transportation, it can enable real-time, privacy-protected traffic monitoring and autonomous driving systems.

Author Contributions

Conceptualization, methodology, software, validation, X.L., Q.F. and J.L.; formal analysis, investigation, data curation, X.L., Y.L., S.Q. and X.O.; writing—original draft preparation, X.L.; writing—review and editing, J.L. and Q.F.; visualization, Y.L.; supervision, J.L.; funding acquisition, J.L., Q.F. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Guangxi Science and Technology Projects under Grant GuiKeAD24010047, the Guangxi Natural Science Foundation under Grants 2022GXNSFFA035028 and 2025GXNSFBA069292, the Guangxi New Engineering and Technical Disciplines Research and Practice Projects under Grant XGK2022005, the Basic Ability Enhancement Program for Young and Middle-aged Teachers of Guangxi under Grant 2024KY0074, a grant (No. BCIC-24-Z7) from Guangxi Key Laboratory of Brain-inspired Computing and Intelligent Chips, and the Innovation Project of Guangxi Graduate Education under Grant YCSW2024174.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are included within the article and are cited at relevant places within the text as references.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Liu, Y.; Nie, F.; Gao, Q.; Gao, X.; Han, J.; Shao, L. Flexible Unsupervised Feature Extraction for Image Classification. Neural Netw. 2019, 115, 65–71.

2. Li, Y.; Guo, Z.; Wang, L.; Xu, L. TBC-MI: Suppressing Noise Labels by Maximizing Cleaning Samples for Robust Image Classification. Inf. Process. Manag. 2024, 61, 103801.

3. Liu, J.; Qin, S.; Su, M.; Luo, Y.; Zhang, S.; Wang, Y.; Yang, S. Traffic Signal Control Using Reinforcement Learning Based on the Teacher-student Framework. Expert Syst. Appl. 2023, 228, 120458.

4. Liu, J.; Qin, S.; Su, M.; Luo, Y.; Wang, Y.; Yang, S. Multiple Intersections Traffic Signal Control Based on Cooperative Multi-agent Reinforcement Learning. Inf. Sci. 2023, 647, 119484.

5. Song, Y.; Guo, L.; Man, M.; Wu, Y. The Spiking Neural Network Based on FMRI for Speech Recognition. Pattern Recognit. 2024, 155, 110672.

6. Liu, J.; Qin, S.; Luo, Y.; Wang, Y.; Yang, S. Intelligent Traffic Light Control by Exploring Strategies in an Optimised Space of Deep Q-Learning. IEEE Trans. Veh. Technol. 2022, 71, 5960–5970.

7. Liu, J.; Sun, T.; Luo, Y.; Yang, S.; Cao, Y.; Zhai, J. Echo State Network Optimization using Binary Grey Wolf Algorithm. Neurocomputing 2020, 385, 310–318.

8. Fu, Q.; Dong, H. An Ensemble Unsupervised Spiking Neural Network for Objective Recognition. Neurocomputing 2021, 419, 47–58.

9. Xian, R.; Xiong, X.; Peng, H.; Wang, J.; de Arellano Marrero, A.R.; Yang, Q. Feature Fusion Method Based on Spiking Neural Convolutional Network for Edge Detection. Pattern Recognit. 2024, 147, 110112–110120.

10. Hwang, S.; Kung, J. One-Spike SNN: Single-Spike Phase Coding with Base Manipulation for ANN-to-SNN Conversion Loss Minimization. IEEE Trans. Emerg. Top. Comput. 2024, 13, 162–172.

11. Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. In Theory of Cryptography, Proceedings of the Third Theory of Cryptography Conference, TCC 2006, New York, NY, USA, 4–7 March 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 265–284.

12. Ribero, M.; Henderson, J.; Williamson, S.; Vikalo, H. Federating Recommendations Using Differentially Private Prototypes. Pattern Recognit. 2022, 129, 108746.

13. Tang, X.; Panda, A.; Sehwag, V.; Mittal, P. Differentially Private Image Classification by Learning Priors from Random Processes. In NIPS’23, Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Curran Associates Inc.: Red Hook, NY, USA, 2024; pp. 1–23.

14. Wang, Y.; Nedić, A.N. Differentially-private Distributed Algorithms for Aggregative Games with Guaranteed Convergence. IEEE Trans. Autom. Control. 2024, 69, 5168–5183.

15. Guan, J.; Sharma, A.; Tian, C.; Lahlou, S. On the Privacy Risks of Spiking Neural Networks: A Membership Inference Analysis. arXiv 2025, arXiv:2502.13191.

16. Wang, J.; Zhao, D.; Shen, G.; Zhang, Q.; Zeng, Y. DPSNN: A Differentially Private Spiking Neural Network with Temporal Enhanced Pooling. arXiv 2022, arXiv:2205.12718.

17. Kim, Y.; Panda, P. PrivateSNN: Privacy-Preserving Spiking Neural Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; pp. 1192–1200.

18. Luo, X.; Fu, Q.; Qin, S.; Wang, K. Encrypted-SNN: A Privacy-Preserving Method for Converting Artificial Neural Networks to Spiking Neural Networks. In Neural Information Processing, Proceedings of the 30th International Conference, ICONIP 2023, Changsha, China, 20–23 November 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 519–530.

19. Moshruba, A.; Snyder, S.; Poursiami, H.; Parsa, M. On the Privacy-Preserving Properties of Spiking Neural Networks with Unique Surrogate Gradients and Quantization Levels. arXiv 2025, arXiv:2502.18623.

20. Chen, J.; Park, S.; Simeone, O. Agreeing to Stop: Reliable Latency-Adaptive Decision Making via Ensembles of Spiking Neural Networks. Entropy 2024, 26, 126.

21. Han, B.; Fu, Q.; Zhang, X. Towards Privacy-Preserving Federated Neuromorphic Learning via Spiking Neuron Models. Electronics 2023, 12, 3984.

22. Lopuhaä-Zwakenberg, M.; Goseling, J. Mechanisms for Robust Local Differential Privacy. Entropy 2024, 26, 233.

23. Garipova, Y.; Yonekura, S.; Kuniyoshi, Y. Noise and Dynamical Synapses as Optimization Tools for Spiking Neural Networks. Entropy 2025, 27, 219.

24. Zhang, J.; Yang, T.; Jiang, C.; Liu, J.; Zhang, H. Chaotic loss-based spiking neural network for privacy-preserving bullying detection in public places. Appl. Soft Comput. 2025, 169, 112643.

25. Yadav, S.; Pundhir, A.; Goyal, T.; Raman, B.; Kumar, S. Differentially Private Spiking Variational Autoencoder. In Pattern Recognition, Proceedings of the 27th International Conference, ICPR 2024, Kolkata, India, 1–5 December 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 96–112.

26. Eshraghian, J.K.; Ward, M.; Neftci, E.O.; Wang, X.; Lenz, G.; Dwivedi, G.; Bennamoun, M.; Jeong, D.S.; Lu, W. Training Spiking Neural Networks Using Lessons from Deep Learning. Proc. IEEE 2023, 111, 1016–1054.

27. Fang, W.; Chen, Y.; Ding, J.; Yu, Z.; Masquelier, T.; Chen, D.; Huang, L.; Zhou, H.; Li, G.; Tian, Y. SpikingJelly: An Open-source Machine Learning Infrastructure Platform for Spike-based intelligence. Sci. Adv. 2023, 9, eadi1480–eadi1497.

28. Roy, K.; Jaiswal, A.; Panda, P. Towards Spike-based Machine Intelligence with Neuromorphic Computing. Nature 2019, 575, 607–617.

29. Dong, J.; Roth, A.; Su, W.J. Gaussian Differential Privacy. J. R. Stat. Soc. Series B. Stat. Methodol. 2022, 84, 3–37.

30. Kim, Y.; Li, Y.; Park, H.; Venkatesha, Y.; Hambitzer, A.; Panda, P. Exploring Temporal Information Dynamics in Spiking Neural Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 8308–8316.

31. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

32. Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747.

33. Gong, M.; Pan, K.; Xie, Y.; Qin, A.K.; Tang, Z. Preserving Differential Privacy in Deep Neural Networks with Relevance-based Adaptive Noise Imposition. Neural Netw. 2020, 125, 131–141.

34. Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

35. Gong, M.; Pan, K.; Xie, Y. Differential Privacy Preservation in Regression Analysis Based on Relevance. Knowl.-Based Syst. 2019, 173, 140–149.

36. Phan, N.H.; Wu, X.; Dou, D. Preserving Differential Privacy in Convolutional Deep Belief Networks. Mach. Learn. 2017, 106, 1681–1704.

37. Li, D.; Wang, J.; Li, Q.; Hu, Y.; Li, X. A Privacy Preservation Framework for Feedforward-Designed Convolutional Neural Networks. Neural Netw. 2022, 155, 14–27.

38. Saranirad, V.; Dora, S.; McGinnity, T.M.; Coyle, D. CDNA-SNN: A New Spiking Neural Network for Pattern Classification Using Neuronal Assemblies. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 2274–2287.

39. Diehl, P.U.; Cook, M. Unsupervised Learning of Digit Recognition using Spike-Timing-Dependent Plasticity. Front. Comput. Neurosci. 2015, 9, 99.

40. Mostafa, H.; Pedroni, B.U.; Sheik, S.; Cauwenberghs, G. Fast Classification Using Sparsely Active Spiking Networks. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4.

41. Srinivasan, G.; Roy, K. ReStoCNet: Residual Stochastic Binary Convolutional Spiking Neural Network for Memory-Efficient Neuromorphic Computing. Front. Neurosci. 2019, 13, 189.

42. Zhang, W.; Li, P. Temporal Spike Sequence Learning via Backpropagation for Deep Spiking Neural Networks. In NIPS’20, Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020; pp. 12022–12033.

43. Rueckauer, B.; Liu, S.C. Conversion of Analog to Spiking Neural Networks using Sparse Temporal Coding. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

44. Chen, T.; Wang, L.; Li, J.; Duan, S.; Huang, T. Improving Spiking Neural Network with Frequency Adaptation for Image Classification. IEEE Trans. Cogn. Dev. Syst. 2023, 16, 864–876.

45. Han, B.; Roy, K. Deep Spiking Neural Network: Energy Efficiency Through Time based Coding. In Computer Vision—ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 388–404.

46. Sengupta, A.; Ye, Y.; Wang, R.; Liu, C.; Roy, K. Going Deeper in Spiking Neural Networks: VGG and Residual Architectures. Front. Neurosci. 2019, 13, 95.

47. Rathi, N.; Srinivasan, G.; Panda, P.; Roy, K. Enabling Deep Spiking Neural Networks with Hybrid Conversion and Spike Timing Dependent Backpropagation. arXiv 2020, arXiv:2005.01807.

48. Lee, C.; Sarwar, S.S.; Panda, P.; Srinivasan, G.; Roy, K. Enabling Spike-Based Backpropagation for Training Deep Neural Network Architectures. Front. Neurosci. 2020, 14, 119.

49. Wu, Y.; Deng, L.; Li, G.; Zhu, J.; Xie, Y.; Shi, L. Direct Training for Spiking Neural Networks: Faster, Larger, Better. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 1311–1318.

50. Zhang, Y.; Wang, Z.; Zhu, J.; Yang, Y.; Rao, M.; Song, W.; Zhuo, Y.; Zhang, X.; Cui, M.; Shen, L.; et al. Brain-inspired Computing with Memristors: Challenges in Devices, Circuits, and Systems. Appl. Phys. Rev. 2020, 7, 011308.

51. Rathi, N.; Roy, K. DIET-SNN: Direct Input Encoding with Leakage and Threshold Optimization in Deep Spiking Neural Networks. arXiv 2020, arXiv:2008.03658.

52. Wang, Y.; Liu, H.; Zhang, M.; Luo, X.; Qu, H. A Universal ANN-to-SNN Framework for Achieving High Accuracy and Low Latency Deep Spiking Neural Networks. Neural Netw. 2024, 174, 106244.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).