Abstract

Recurrence resonance (RR), in which external noise is utilized to enhance the behaviour of hidden attractors in a system, is a phenomenon often observed in biological systems and is expected to adjust between chaos and order to increase computational power. It is known that connections of neurons that are relatively dense make it possible to achieve RR and can be measured by global mutual information. Here, we used a Boltzmann machine to investigate how the manifestation of RR changes when the connection pattern between neurons is changed. When the connection strength pattern between neurons forms a partially sparse cluster structure revealing Boolean algebra or Quantum logic, an increase in mutual information and the formation of a maximum value are observed not only in the entire network but also in the subsystems of the network, making recurrence resonance detectable. It is also found that in a clustered connection distribution, the state time series of a single neuron shows noise. In proteinoid microspheres, clusters of amino acid compounds, the time series of localized potential changes emit pulses like neurons and transmit and receive information. Indeed, it is found that these also exhibit noise, and the results here also suggest RR.

1. Introduction

In many engineering systems, noise disrupts the signals sent and received, making it impossible to maintain a correct input/output environment, so it is essential to reduce noise from the computational environment as much as possible. In contrast, living systems live in environments full of noise, and it is almost impossible to avoid noise. Therefore, many living systems, on the other hand, make good use of noise to improve various computational capabilities [1,2].

A well-known phenomenon that actively uses noise is stochastic resonance [3,4]. It is known that paddle-fishes use noise to enhance the weak electrical signals emitted by planktonic prey to detect them, and this is a typical example of stochastic resonance [5]. A similar example to stochastic resonance is recurrence resonance [6,7]. This does not enhance signals from the outside, but rather enhances the behaviour of attractors hidden within the systems, and strengthens the system’s potential through noise.

Notably, a method has been proposed that makes it easy to find recurrence resonance by increasing the noise level in a specific noise region, where the global mutual information increases, reaches a maximum value, and then decreases; the importance of recurrence resonance has been further increasing [7]. In this case, it is said that recurrence resonance easily appears if the connections between neurons are dense.

Recurrence resonance could be related to various dynamical systems that utilize internal noise. First, in neural networks that are the basis for deep learning, recurrent neurons [8,9] are equivalent to a ’heat bath’ in reservoir computing [10,11,12], and it is known that their role of stirring up inputs with noise is important. They play a role in moving between attractors generated internally by internal noise, and in that sense, are closely related to recurrence resonance.

Second, it has become clear that the distribution of avalanche firings in neurons follows a power law, and in recent years, discussions of self-organized criticality (SOC) [13,14,15] and edge of chaos [16,17,18] have rekindled in the experimental field of neuroscience [19,20,21]. These are understood as critical phenomena in the phase transition from order to chaos, and it is thought that the balance between the attractor of structure formation and noise (chaos) leads to efficient computation and a power law. However, SOC and edge of chaos require tuning to the critical value, and the generality of tuning even in SOC has not been clarified.

Another scenario for SOC and edge of chaos merits consideration. It involves a system in which order and chaos (noise) are well adjusted by using a synchronous random update of the system elements [2]. When this system is implemented with elementary cellular automata (ECA) [22,23], various power law behaviors can be realized without tuning, and in terms of computational power, while there is a strict trade-off between computational universality and computational efficiency in synchronous ECA, this is broken in asynchronous ECA, and it is known that both computational universality and efficiency are realized [2]. It has also been found that when such an asynchronously adjusted ECA is implemented in a neural network reservoir, learning performance is improved compared to using an ECA (class III) that behaves chaotically in the reservoir [24].

Furthermore, instead of providing asynchronous updating, by implementing an input–output relation which leads to quantum logic in a cellular automaton (quantum automaton) [25], it is easy to obtain a time evolution pattern that features both chaos and order. It has been found in recent years that a power law can also be realized by noise [26].

When considering the relationship between recurrence resonance, SOC, and quantum automata, the following problems have emerged. First, while recurrence resonance is judged by global mutual information, it is impossible to observe all neurons in the brain. Therefore, it is possible that recurrence resonance occurring as a whole can also be observed in parts, depending on the connection state of neurons. Mutual information has a small value in simple chaos or simple order, and its value is large only in complex patterns that have both characteristics. In other words, when recurrence resonance is observed in parts, it is thought that the parts behave like SOC. Second, therefore, in a system in which recurrence resonance can be judged not only in the whole network but also in the partial network, critical phenomenon-like behavior and even power law behavior may be realized. This can be judged by whether noise is found in the behavior time series of a single neuron in the neural network. Third, a network that realizes the first and second behaviors could have a partially sparse distribution, like a quantum logical automaton, where the connection state of neurons brings about quantum logic. The purpose of this study is to measure and evaluate the above in a Boltzmann machine [27,28].

The key point is the following. It was previously reported that RR can be easily realized if the connectivity is dense to some extent. In this paper, we propose the concept of local observability of RR and show that such observability depends on the distribution of connections among elements, giving rise to quantum logic.

In addition to neural networks, clusters of proteinoid microspheres are sparsely connected systems that emit signals like neural pulses and are regarded as one of various hopeful candidates for the system of the origin of life [29,30,31,32]. These are not freely connected to distant elements like neurons, but are clusters that depend on spatial structure. A cluster structure that has sparse parts while connecting neighbours in a spatially dependent manner can be considered a system that can be approximated to a Boltzmann machine that depends on spatial structure. Therefore, we inserted electrodes into part of the proteinoid microsphere cluster, observed the time series of the potential signal, and verified whether noise was observed by FFT. If this is possible, it would be a strong concrete example that supports our view.

2. Materials and Methods

2.1. Boltzmann Machine and Data Analysis

Recurrence resonance is here estimated in a neural network, Boltzmann machine [7]. A one-dimensional Boltzmann machine is expressed as

which involves a recurrent neuron and where

The symbol represents the weight in the connection, where and . Crucially, W is the weight strength, r is the noise strength, and N represents the system size. The are random numbers, drawn from a normal distribution with mean 0 and standard deviation 1. The time development of a one-dimensional network is expressed as

To consider a network dependent on spatial structure, we also estimate a two-dimensional Boltzmann machine expressed as

where

For a two-dimensional network, represents the size of the system. The time development of a two-dimensional network is expressed as

The metric entropy and mutual information are calculated for a time series of neurons to evaluate the recurrence resonance. The metric entropy is defined by

where X is a binary sequence. Mutual information is defined by

where both X and Y are binary operations. If a binary sequence is defined for an array of all neurons such as

for a one-dimensional network and

for a two-dimensional network, the entropy and mutual information are called global entropy and global mutual information. If a binary sequence is defined to have a small part of neurons, we add the term “local”, and they are called local entropy and local mutual information. In global mutual information, Y is defined as

for a one-dimensional network and as

A binary sequence of Y for local mutual information is also defined for a small part of a network.

We estimate the behavior of a neuron in terms of the power spectrum. Given a time series of one neuron, , the power spectrum of a time series is defined as follows. First, the discrete Fourier transform for a time series is obtained by

where is the frequency corresponding to the period, . The power is obtained as the squared absolute value of the discrete Fourier transform such that

2.2. Logical Structure of Neuron Connections

In this paper, we investigate the effect of the distribution of weight of connection, which reveals Boolean and/or quantum logic in terms of lattice theory [33]. A lattice is a kind of algebraic structure, which is a partially ordered set closed with respect to binary operations, join, and meet. Using a rough set lattice technique, the binary relation can be interpreted as a lattice structure [34,35].

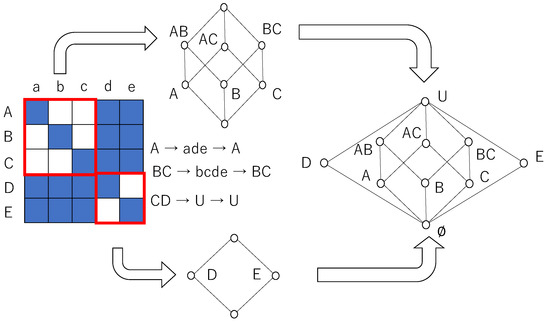

Figure 1 shows how a binary relation is interpreted as a lattice. A binary relation is set for a distribution of the weight of connection or joint probabilities of states of neurons. Define sequences of neurons and such that with . A binary relation I⊆× is obtained dependent on whether exceeds a threshold value or not, and is expressed as

In Figure 1, if , the corresponding cell is painted blue; otherwise, it is blank.

Figure 1.

A lattice obtained from a binary relation by using a closure operation. If a relation is diagonal relation, then its corresponding lattice is a -Boolean lattice consisting of elements. A binary relation (left) consists of - and -diagonal sub-relations surrounded by related cells. As each -sub-relation reveals a -Boolean sub-algebra, a rough set lattice results in a disjoint union of - and -Boolean sub-algebras in which the least and greatest elements are common to both sub-algebras.

The closure operation is defined by the following. For any subset X of , closure operation is expressed as

where represents ∈I. For any subset of , Y, is expressed as

It is straightforwardly verified that a collection of fixed points of closure operations is a rough set lattice such that

As each element of a rough set lattice is a set, the order relation between elements is the inclusion relation.

As shown in Figure 1, a diagonal relation for which all diagonal elements are in the relation and any other elements are not in the relation reveals a Boolean algebra, and a relation for which there are some diagonal sub-relations surrounded by related cells (i.e., ) reveals a non-distributive orthomodular lattice that corresponds to quantum logic. For instance, , , and . Thus, is not a fixed point of closure operation. A Hasse diagram, finally obtained from a given binary relation, is expressed as a disjoint union of - and -Boolean algebras whose greatest and least elements are common [36].

2.3. Proteinoid Microsphere Experiments

Local points from clusters of proteinoid microspheres emit a pulse-like signal in a way that is similar to neurons.

2.3.1. Preparation of Proteinoid Microspheres

The proteinoid synthesis used analytical grade L-glutamic acid (L-Glu, CAS: 56-86-0) and L-phenylalanine (L-Phe, CAS: 63-91-2) from Sigma Aldrich Ltd., Gillingham, UK (purity ≥ 98%). Proteinoid solutions were prepared using either deionized water or KNO3 (0.065 M) ionic solution. To synthesize L-Glu:L-Phe proteinoids, we mixed 2.5 g each of L-glutamic acid and L-phenylalanine. We then heated the mixture to its melting point in a reflux apparatus, with continuous agitation, until a homogeneous slurry formed. The molten mixture was subsequently cooled to 80 °C, diluted with deionized water, and stirred for 3 h. We isolated the resulting precipitate via vacuum filtration. Then, we obtained the final purified proteinoid powder through lyophilization [37].

2.3.2. Bioelectric Signal Measurement Setup

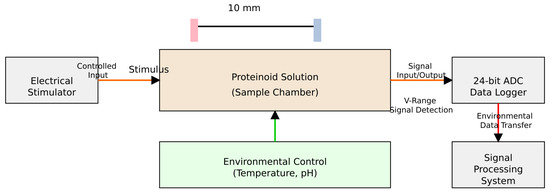

The apparatus for measuring bioelectric signals from the proteinoid system used dual iridium–platinum electrodes (0.1 mm diameter). They were positioned 10 mm apart (Figure 2). We recorded bioelectric potential differences using an ADC-24 PicoLog system. We chose this system for its high-precision voltage measurement. The setup had an electrical stimulator for precise sample excitation. It also had a system to control the environment and maintain experimental conditions. This data acquisition system enabled real-time monitoring and analysis of bioelectric signals. It detected changes in proteinoid microsphere behaviour with precision under controlled stimulation.

Figure 2.

Experimental configuration for measuring bioelectric signals in the proteinoid micropsheres. The apparatus has a pair of iridium–platinum electrodes (0.1 mm diameter). They are 10 mm apart for detecting potential differences. A high-precision ADC-24 PicoLog data logging system performs signal acquisition. The setup has three key components: a precision electrical stimulator for controlled excitation; an environmental monitoring system for stable conditions; and a signal processing unit for real-time analysis and visualization.

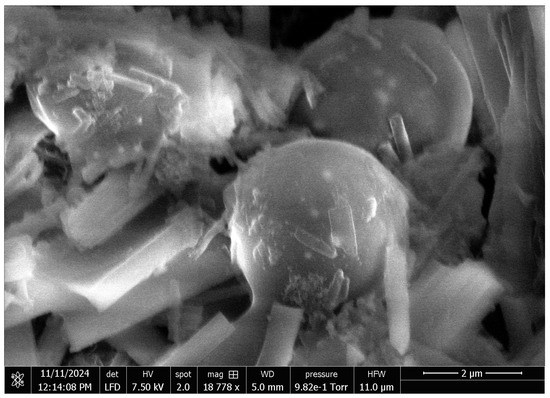

2.3.3. Morphological Characterization of Proteinoid Microspheres

We examined the proteinoids with an FEI Quanta 650 SEM. See Figure 3. The micrograph shows spherical structures, which are 2 to 3 μm in diameter. They have a smooth surface. The microspheres tend to cluster, but they stay intact. The image was made with a low field detector (LFD). It used a 7.50 kV voltage, a 5.0 mm working distance, and a 9.82 × 10−1 Torr chamber pressure. The samples were imaged without metallic coating under low vacuum (HFW: 11.0 μm). This enabled observation of the microspheres at 18,778× magnification in their native, non-conductive state, which provided a clear view of their surface and spatial organization.

Figure 3.

A scanning electron microscopy (SEM) image of uncoated proteinoid microspheres (L-Glu:L-Phe). It shows their spherical shape and distinct surface features. The image was acquired using a low field detector (LFD) at 7.50 kV accelerating voltage, with a working distance (WD) of 5.0 mm and chamber pressure of 9.82 × 10−1 Torr. Imaging the non-conductive sample without a metallic coat was possible by operating under low vacuum (HFW: 11.0 μm). The scale bar represents 2 μm at 18,778× magnification.

3. Results

3.1. One Dimensional Network

First, we describe the behavior of a Boltzmann machine with five neurons arranged in a row. We set various connections between neurons.

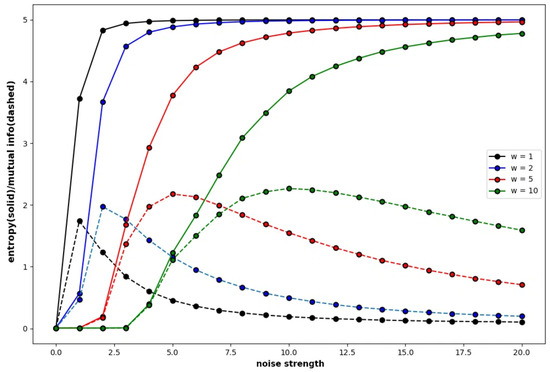

Figure 4 shows the global entropy and global mutual information of a specific Boltzmann machine that consists of five neurons plotted against the noise strength, where the global entropy shows a monotonic increasing function and the other curve of shows the global mutual information. The weight of the connection is determined subject to a normal distribution, which is called the normal condition. The color of the curves show the weight strength. The probability of a binary sequence is obtained by the frequency of the binary sequence in the time development of 10,000 steps, where .

Figure 4.

Four pairs of global entropy and global mutual information plotted against noise strength for the Boltzmann machine consisting of five neurons, under the normal condition. The weight of the connection between neurons is determined by the normal condition, where , , , , , , , , , , , , , , , , , , , , , , , , and . The color of the curves represents the difference of the weight strength multiplied by the weight of connection.

It is clear that the global mutual information increases in the low range of the noise strength. The range in which the global mutual information increases suggests some explicit behaviour based on attractors in the neural network under the normal condition, which indicates recurrence resonance. As each neuron mutually interacts with each other under the normal condition in which each neuron is basically connected to all neurons in the network, the effect of the external noise is weakened by the interaction and is prevailed slowly. Therefore, the global entropy slowly increases as the weight strength increases—. The global mutual information also slowly increases in the smaller range of the noise strength for the high weight strength. It is easy to see that the global mutual information gradually increases and reaches the maximal point till the global entropy reaches about the value of 3.6 independent of the connection strength. The global entropy reaches 3.6 for the noise strength of 1.3 if the weight strength is 1, and it reaches 3.6 for the noise strength of 10.0 if the weight strength is 10. This implies that the recurrence resonance is explicit if the weight strength is high.

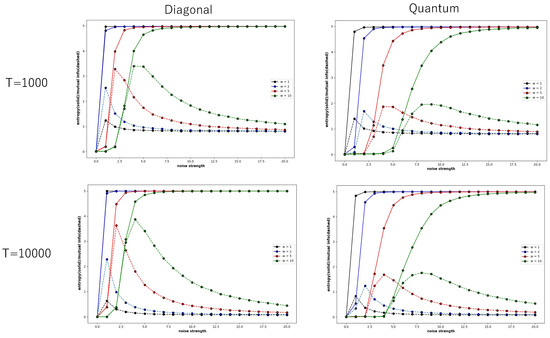

Figure 5 also shows global entropy and global mutual information pairs of a specific Boltzmann machine which consists of five neurons, plotted against the noise strength, where the weight of the connection is determined under the diagonal distribution (left) and under the quantum distribution (right)—these are called the diagonal condition and Quantum condition, respectively. The diagonal distribution of the connection implies that the weight of a connection such as is stochastically determined in the range between 0.7 and 1.0, and any other weight such as is stochastically determined in the range of 0.0 and 0.05. The quantum distribution of the connection implies that weight of a connection such as is stochastically determined in the range between 0.7 and 1.0, each of , , , , , , , , , , , and is stochastically determined in the range between 0.2 and 0.3, and any other weight is stochastically determined in the range of 0.0 and 0.05. Global entropy and global mutual information pairs were obtained from a time series of 1000 steps (above) and 10,000 steps (below).

Figure 5.

Four pairs of the global entropy and global mutual information plotted against noise strength for the Boltzmann machine consisting of five neurons, under the diagonal (left) and quantum conditions (right). The global entropy and mutual information were calculated from a time series of 1000 steps (above) and of 10,000 steps. The color of a pair of curves represents the weight strength, where black, blue, red, and green curves represent , , , and , respectively.

The global entropy under the diagonal condition rapidly increases even for the small noise strength, as the connection between neurons is so weak that each neuron behaves independently of other neurons; the effect of external noise is not weakened due to the interactions between neurons. While the global entropy increases until it reaches about 4.0, the global mutual information also increases until it reaches the maximal point. This tendency is found for both cases in which the probability of binary sequence was obtained in time series of 1000 steps and 10,000 steps. Although the range showing the increase in global mutual information is very narrow, the maximal value of global mutual information under diagonal conditions is very high. This shows that the spatial and temporal patterns under the diagonal condition are very complex between order and chaos.

Compared to other conditions, in the diagonal condition, the maximum value of global mutual information increases rapidly as the weight strength increases. In the normal condition in Figure 4 and the quantum condition in Figure 5, the maximum value of global mutual information hardly changes when the weight strength is changed from 1 to 2, to 5 and then 10. However, in the diagonal condition, when the weight strength is changed from 1 to 10, the maximum value of global mutual information changes about three times. In the diagonal condition, there is no interaction with other neurons, but as the weight strength increases, the autocatalytic effect becomes stronger, which is thought to enhance the tendency of recurrence resonance.

Compared to the behaviour of the Boltzmann machine under the diagonal condition, the global entropy and global mutual information under the quantum condition slowly increase, since the interaction among neurons can contribute to weakening the effect of external noise. The global mutual information increases and reaches the maximal point until the global entropy increases and reaches the value of 3.5, whether the probability was obtained in a time series of 1000 steps or 10,000 steps. The maximal value of the global mutual information is rather small compared to the diagonal condition.

If the probabilities of binary sequences are obtained in a time series of 1000 steps, the global mutual information converges not to 0.0 but to 1.0, regardless of whether the connection of neurons is subject to the diagonal or quantum condition. However, if the probability is obtained in a time series of 10,000 steps, it converges to about 0.0. This implies that if the time development proceeds for long enough time, then explicit complex patterns disappear because of the effect of external noise, and that recurrence resonance appears in the transient stage before the behavior of neural nets converges to a noisy steady state.

Figure 6 also shows global entropy and global mutual information against the noise strength of a Boltzmann machine consisting of seven neurons, where for . The probability of the binary sequence was obtained from a time series calculated over 10,000 time steps. It is easy to see that the patterns of the weight of the connection reveal Boolean algebra and quantum logic. As mentioned in the above section, the diagonal relation reveals Boolean logic. As the pattern of weight in the diagonal condition leads to the diagonal relation by the relation to which only the weight exceeding 0.7 belongs, the weight pattern in diagonal condition reveals Boolean algebra. The pattern of the weight in the quantum condition leads to a relation in which 3 × 3 and 4 × 4 diagonal sub-relations are surrounded by related cells, if the weight exceeding 0.2 is determined as an element of the relation; otherwise, the weight is determined as no relation. This pattern leads to quantum logic, which is expressed as a disjoint union of - and -Boolean algebras whose greatest and least elements are common. We also calculated global entropy and mutual information for the weight pattern, which revealed a disjoint union of two - and one -Boolean algebras under the quantum condition. However, they are omitted because the tendency of the entropy and mutual information is almost same as that in the graph with the quantum condition in Figure 6.

Figure 6.

Four pairs of global entropy and global mutual information plotted against noise strength for a Boltzmann machine consisting of seven neurons under the normal, diagonal, and quantum conditions. The square consisting of 7 × 7 circles (above left) represents a set of weights of the connections between neurons, where dark brown circles arranged along the diagonal line represent the weight stochastically determined between 0.7 and 1.0, orange circles represent the weight stochastically determined between 0.2 and 0.3, and white circles represent the weight stochastically determined between 0.0 and 0.05. Pale orange circles represent the weight stochastically determined and subject to a normal distribution. The color of a pair of curves represents the weight strength, where black, blue, red, and green curves represent , , , and , respectively.

The trends of global entropy and global mutual information do not change fundamentally even when the number of neurons is increased. In the normal condition and quantum condition, the increase in global entropy and global mutual information in response to noise strength is gentle, and the maximum value of global mutual information hardly changes with changes in connection strength. In contrast, in the diagonal condition, both global entropy and global mutual information rise rapidly in response to an increase in noise strength, and as the connection strength increases, the maximum value of global mutual information also increases accordingly. This is thought to be because, as mentioned above, the interaction between neurons is weak, and the autocatalytic effect due to the connection strength is accentuated by the increase in connection strength.

Although the number of calculation steps is 10,000, because the number of neurons is increasing, the state does not converge to a sufficiently stable steady state. Therefore, the entropy and mutual information curves are not stable in the normal condition, and the mutual information converges to 1.0 instead of 0.0 in the diagonal condition and quantum condition.

3.2. Local Estimation in Two-Dimensional Network

It was reported that recurrence resonance could be observed not only in Boltzmann machines but also in various networks such as Hopfield [38] networks if the connections between neurons are dense [7]. This is certainly true. However, the way in which it manifests itself clearly differs depending on the pattern of connections between neurons. As we have seen so far, when the connections are dense as in the normal condition, the interactions between neurons effectively adjust the external noise, emphasizing the behaviour of the attractor of the network itself; while showing recurrence resonance and at the same time mitigating the effects of the noise, the system slowly reaches a steady state. In contrast, in the diagonal condition, recurrence resonance is also shown, but the effects of noise appear directly and do not seem to be significantly mitigated. In the quantum condition, the connection distribution seems to satisfy both characteristics, and recurrence resonance is shown while the effects of noise are also mitigated. Here, there is a struggle between the order latent inside the network and the noise (chaos) from the outside, and it is an interesting problem how the two are mediated in spacetime.

First, we consider a 3 × 3 neural network to consider the information-theoretic relationship between the whole and the part. The condition of connection between neurons is the normal condition. Global entropy and mutual information classify the nine-bit states by looking at all nine neurons and calculating the probability. In other words, the states are 000000000, 000000001, …, 111111111, and the probability is calculated for these states. In contrast, local entropy and mutual information are calculated from only three neurons. In this case, the states are 000, 001, 010, 011, 100, 101, 110, and 111, and the number of states is only . Also, local entropy and mutual information are calculated only for three neurons, , , and , rather than calculating the probability for every three bits for all neurons.

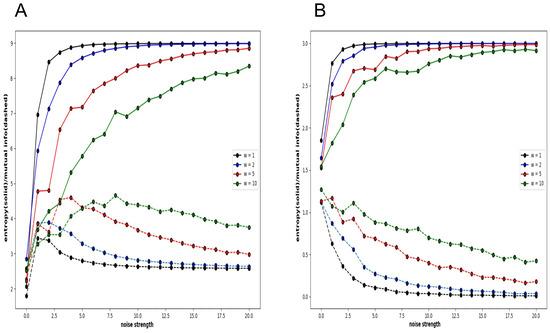

Figure 7A shows the global entropy and mutual information plotted against noise strength for a 3 × 3 neural network. As shown in the graphs of Figure 4, Figure 5 and Figure 6, the different colours represent different connection strengths. The probability is calculated over a time evolution of 50,000 steps. Because of the large number of neurons, as the connection strength increases, the interaction weakens the noise effect, and the time to fall into a noisy steady state becomes long enough, and the curve becomes more uneven. However, the important point is that in the low-noise strength region, there is a clear increase in mutual information and the local maximum. In other words, the global mutual information shows the existence of recurrence resonance. However, the local mutual information does not show the existence of recurrence resonance (Figure 7B). It only decreases monotonically with increasing noise intensity. There is no region where the value increases, which means that no complex pattern appears in any noise region.

Figure 7.

Four pairs of global and local entropy and mutual information plotted against noise strength for Boltzmann machine consisting of 3 × 3 neurons under normal condition. (A). Global entropy and global mutual information. (B). Local entropy and local mutual information.

Although a whole system realizes recurrence resonance by mitigating noise, such a tendency is not observed when only a part of the system is examined. The behaviour of the Boltzmann machine in the normal condition means that the conflict between chaos and order is not observed locally. Mutual information reduces the value of both chaotic patterns and regular patterns such as periodic oscillations; so, locally, there is either chaos or order, and neither of which are mixed in a complex pattern.

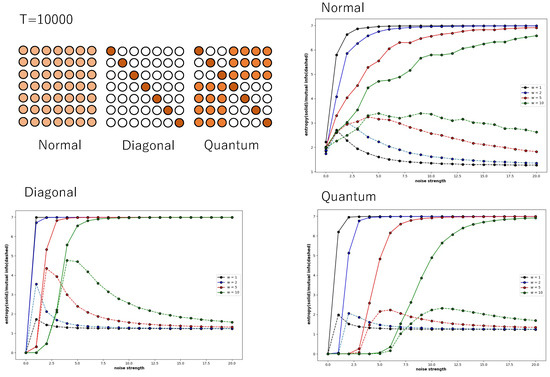

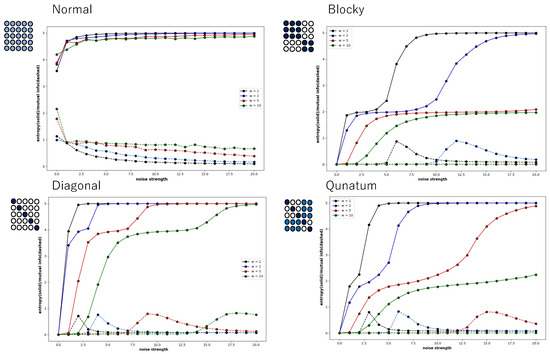

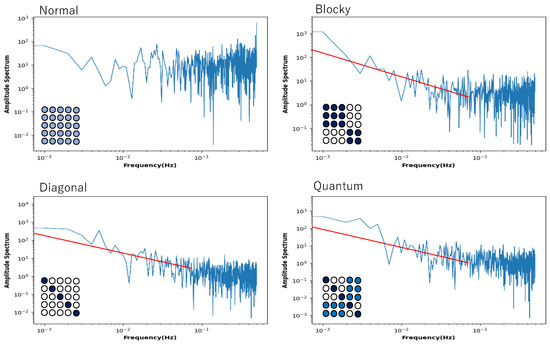

However, Figure 8 shows that this is not a general tendency. It shows the behaviour of local entropy and local mutual information in a Boltzmann machine network consisting of 25 neurons arranged two-dimensionally in a 5 × 5 grid. The horizontal axis is the noise strength, and the colour of the curve indicates the weight strength, as in Figure 4, Figure 5, Figure 6 and Figure 7. Also, all the monotonically increasing curves are local entropy, and the other pairs that do not are mutual information, as shown in Figure 4, Figure 5, Figure 6 and Figure 7. The probability is calculated from the frequency counted over 10,000 steps, with the state defined as .

Figure 8.

Four pairs of local entropy and local mutual information plotted against noise strength for Boltzmann machine consisting of 5 × 5 neurons, under normal, blocky, diagonal, and quantum conditions. Square consisting of 5 × 5 circles represents set of neurons suggesting weight of connections (see text). Colour of pair of curves represents weight strength, where black, blue, red, and green curves indicate , , , and , respectively.

The 5 × 5 circle distribution shown in each graph in Figure 8 does not directly show the weight distribution of the connections. The circles represent neurons, and in the normal condition, the connection weight between all neurons is determined by probabilities that follow a normal distribution. In the blocky, diagonal, and quantum conditions, the connection weight between dark blue neurons is determined between 0.7 and 1.0, and between white neurons, the connection weight is determined between 0.0 and 0.05. In the quantum condition, the connection weight between light blue neurons is determined between 0.2 and 0.3. The difference is clear when comparing the normal condition with the other conditions—blocky, diagonal, and quantum. In all conditions except for the normal condition, an increase in value and a maximum value are observed in the local mutual information at low noise strength. In other words, under these conditions, recurrence resonance is clearly observed, and a complex pattern that has both chaos and order appears locally. This is thought to be due to the fact that the coupling has sparse parts, which is different from the normal condition.

3.3. 1/f Noise in Boolean and Quantum Condition

In the previous section, it was shown that a mixed state of chaos and order can be observed even in the spatial part, depending on the connection state of the neuron. This indicates a phase-transitional critical state in the phase transition from order to chaos. So, can a phase-transitional critical state be found in a single neuron? To evaluate this, a single neuron was selected from the network, and its time series of states was analyzed by Fourier transformation. The time series was calculated from 50 series with random initial values, and the power spectrum was averaged.

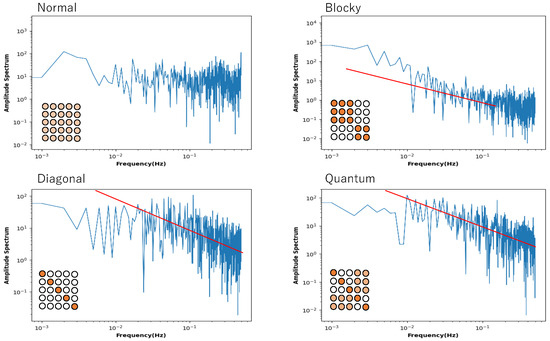

Figure 9 shows the power spectrum plotted against frequency in a log–log plot. The network of this Boltzmann machine is composed of five neurons, and the 5 × 5 square of circles shows the weights of the neuronal connections. The light orange circle in the normal condition indicates that all weights follow a normal distribution. In the blocky, diagonal, and quantum conditions, the dark orange circles show weights between 0.7 and 1.0, the white circles show weights between 0.0 and 0.05, and the light orange circles show weights between 0.2 and 0.3. The power spectrum in the normal condition clearly shows white noise, and no critical behaviour is observed. In the blocky condition, the slope is a little bit larger than 1.0, but there is a tendency toward a power law distribution. Note that the blocky condition also reveals Boolean algebra with respect to rough set lattice transformation. In the diagonal and quantum conditions, there is clear 1/f noise in the high-frequency components. This means that the phase transition critical phenomenon is observed in these connection patterns.

Figure 9.

Power spectrum for a time series of the state of a neuron plotted against frequency in a log–log plot. The neuron was chosen from a Boltzmann machine network consisting of 5 neurons. The square consisting of 25 circles represents the distribution of weights of the connection of neurons. The red line in the diagonal and quantum conditions represents a slope of −1.0.

Recall the 5 × 5 = 25 network (Figure 8), which also shows recurrence resonance in local mutual information. Here, we again selected one neuron, analyzed its time series by fast Fourier transformation with different initial values, and plotted the power spectrum averaged over the time series of 50 trials against frequency, and displayed the graph in a log–log plot (Figure 10). Here, again, the tendency of the power spectrum depending on the neuron’s connection type is almost the same as in Figure 9. In the normal condition, it shows white noise, and in the blocky condition, it shows a tendency toward a power law distribution with a slope of −1.0. In the diagonal and quantum conditions, it shows what is called an exact noise with a slope of −1.0. However, unlike the case of the 5-network, it shows noise for low-frequency components.

Figure 10.

Power spectrum for a time series of the state of a neuron plotted against frequency in a log–log plot. The neuron was chosen from a Boltzmann machine network consisting of 5 × 5 = 25 neurons. The square consisting of 25 circles represents the distribution of neurons, revealing the connectivity between neurons by color. The red line in the diagonal and quantum conditions represents a slope of −1.0.

In the quantum condition and diagonal conditions, the behavior is extremely similar, but since fluctuations are shown for low-frequency components, we can say that there is a clear critical behavior. The distribution pattern of the connection strength between neurons clearly plays a role, and it is thought that the distribution in which there are areas of strong connection and areas of sparse connection is important. In the quantum condition, the connection strength of the non-diagonal sub-relation area (corresponding to the area of strong connection in the blocky condition) is set between 0.2 and 0.3, but it is possible that a difference from the diagonal condition was not observed because this value is relatively small. In the quantum condition, the transition between the neurons arranged diagonally and the area of strong connection in the blocky condition is cut off, so when the transition between these areas is allowed, the frequent transition between chaos and order is further enhanced, and noise may be observed not only in low-frequency components but also in all frequency components. In any case, the increase in local mutual information observed at low noise intensity and the existence of a maximum value not only show the criticality of chaos and order in the subspace within the system, but also in the temporal changes of a single neuron.

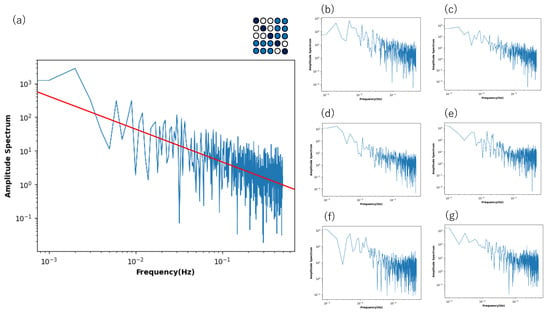

Although Figure 8 shows that only the low-frequency components show the power law, such a property is influenced by the connection strength in the non-diagonal block part. Figure 11 shows the power spectrum for a time series of the state of a neuron plotted against the frequency, where the connection strength in the block part changes in various ways. If the connection strength is set between 0.25 and 0.65 (Figure 11a), the power law is noticeable in the high- and low-frequency components.

Figure 11.

Power spectrum for a time series of the state of a neuron plotted against frequency in a log–log plot. The neuron was chosen from a Boltzmann machine network consisting of 5 × 5 = 25 neurons under the quantum condition, where the connection strength of the non-diagonal sub-relation area was differently given between 0.25 and 0.65 (a), 0.2 and 0.7 (b), 0.35 and 0.65 (c), 0.45 and 0.65 (d), 0.1 and 0.2 (e), 0.08 and 0.18 (f), and 0.09 and 0.19 (g). Other connection strength values were defined as in Figure 8. The red line in (a) represents a slope of −1.0.

So, are these connection strengths (and so on) determined arbitrarily? In excess Bayesian estimation and inverse Bayesian estimation [39], which apply Bayesian inference locally to the connection probability distribution, it is known that the connection probability distribution becomes a distribution similar to the quantum condition. In that case, the connection probability, which corresponds to the connection strength, is determined autonomously by Bayesian and inverse Bayesian inference. It is highly likely that such a mechanism is involved in 1/f noise, and further investigation will be required.

Local recurrence resonance observability (LRRO), for which RR can be detected only from locally parts of a neural network, could be a condition that the assemblage has a blank area and shows a cluster-like structure. Conversely, if such a material situation is allowed, LRRO can be observed regardless of the form of interaction or the form of the dynamical system, and it is possible that the characteristics of the critical phenomenon such as 1/f fluctuations associated with it can be found. Forms such as Boltzmann machines are usually considered to be advanced dynamical systems that implement majority voting, such as highly organized neuronal systems. If the critical phenomenon originates from a dynamical system, it would be misguided to compare the Boltzmann machines with the proteinoid microsphere. However, the LRRO found here and the critical phenomenon caused by it may be a characteristic that depends on the characteristics of the execution environment that executes computation and the distribution pattern of the element connections, rather than the characteristics of the dynamical system. Therefore, although the connection between the two is conceptual, we decided to investigate the membrane potential time series of proteinoid microspheres with cluster-like connections and focus our experiments on whether or not the 1/f fluctuations seen in LRRO can be observed.

3.4. 1/f Noise in Proteinoid Microsphere

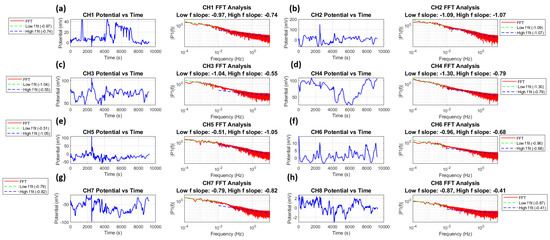

As mentioned before, the proteinoid microsphere is generated by polymerization of aminoacids, and constitutes a cluster structure of microspheres. A membrane potential maintains a pulse-like signal as well as a spike emitted from a neuron [40]. We here show that a time series of membrane potential reveals a typical noise, as shown in Figure 12.

Figure 12.

Time series recordings and spectral analysis of Glu-Phe proteinoid potential activity across eight channels (CH1-CH8). (a) CH1 recordings show potential fluctuations (±40 mV) with dual-regime FFT analysis revealing slopes of and . (b) CH2 displays larger amplitude oscillations (±100 mV) with consistent FFT slopes of and , indicating robust pink noise characteristics across frequencies. (c) CH3 exhibits elevated baseline potentials (60–100 mV) with distinct scaling regimes of and . (d) CH4 shows sustained high-amplitude activity (40–100 mV) with FFT slopes of and . (e) CH5 demonstrates balanced oscillations (±50 mV) with contrasting dynamics of and . (f) CH6 displays lower amplitude fluctuations (±10 mV) with FFT slopes of and . (g) CH7 shows negative-biased potentials (−40 to −100 mV) with similar scaling in both regimes and . (h) CH8 exhibits minimal fluctuations (±2 mV) with shallow slopes of and . Left panels show spontaneous potential fluctuations over 10,000 s. Right panels show Fast Fourier Transform analyses in log–log scale, revealing distinct power law behaviour () in low- ( Hz) and high- ( Hz) frequency regimes. The analysis reveals complex multi-scale dynamics, with CH2 showing the most consistent pink noise characteristics across both frequency regimes (), while other channels display varying degrees of scale-dependent behaviour.

Figure 12 shows a time series analysis and FFT for all eight channels. Each channel shows typical fluctuations in membrane potential () and their power spectral density . The power spectra follow a power law relationship:

where (f) represents frequency, (β) is the scaling exponent, and (C) is a constant. Taking the logarithm of both sides yields the following:

The power spectra analysis shows scaling exponents from −0.43 to −1.07. This confirms -like noise in all channels. The membrane potential time series can be characterized by its autocorrelation function:

where () is the time lag and () denotes time averaging. In our analysis, we define two distinct frequency regimes: the low-frequency regime ( Hz, corresponding to timescales longer than 100 s) and the high-frequency regime ( Hz, corresponding to timescales shorter than 100 s). The power spectrum scaling behaviour is characterized by for low frequencies and for high frequencies, where ranges from −1.30 to −0.51 and ranges from −1.07 to −0.41 across different channels. This division reveals different scaling behaviours at different temporal scales, with the low-frequency regime generally capturing long-term system dynamics and the high-frequency regime reflecting faster fluctuations. The Wiener–Khinchin theorem [41] links the power spectral density to the autocorrelation function.

The amplitudes across channels vary by two orders of magnitude. They range from about 2 mV in channel 8 to 200 mV in channel 2. This shows a significant unevenness in the microsphere distribution and the relevant electrical activity.

The root mean square fluctuation (F()) of the voltage signal over a time window () scales as follows:

where () is related to the power spectral exponent () by (). The analysis of bioelectric signals from proteinoid microspheres reveals complex dynamics with distinct behaviour in low- and high-frequency regimes, as summarized in Table 1. Several channels show strong long-range correlations in the low-frequency regime, particularly channels 2, 3, and 4 (). The theoretical scaling exponents () range from 0.705 to 1.15, with the low-frequency regime generally showing higher values (0.755–1.15) compared to the high-frequency regime (0.705–1.035). Notably, the directly fitted scaling exponents () show systematically lower values than the theoretical predictions, ranging from 0.32 to 0.90, suggesting a more complex relationship between temporal correlations at different scales. The high-frequency regime shows more consistent scaling behaviour across channels, particularly evident in channels 1, 2, 4, 5, and 7 (). This dual-regime analysis reveals that the temporal organization of these bioelectric patterns combines both slow, strongly correlated fluctuations and faster, more varied dynamics, resembling the multi-scale characteristics observed in biological membrane potentials.

Table 1.

Scaling exponent analysis of bioelectric signals from proteinoid microspheres across two frequency regimes. Power spectrum exponents (, ) indicate temporal correlations in different frequency ranges. Theoretical scaling exponent () is calculated from relation , while is obtained from direct fitting of root mean square fluctuation.

4. Discussion

Since Boltzmann machines are originally free to connect between neurons, a space-dependent expression such as a two-dimensional form has no specific meaning. However, in this paper, we consider not only neurons but also proteinoid microspheres to be primitive neurons that emit pulse-like waves, and we think that a phenomenon like recurrence resonance may be hidden in the cluster of proteinoid microspheres. This is why we assumed a space-dependent network that strongly connects between neighboring neurons and a two-dimensional form such as a 5x5 network. Here, we will discuss the local behavior of recurrence resonance and its significance, including the relationship with proteinoid microspheres.

In complex dynamical systems, even if a system starts from random initial values, there are attractors that are not excited from there. A typical example is the Garden of Eden in the Game of Life, which cannot be approached from other states [42]. How can we access such hidden attractors? One of the most hopeful possibilities is recurrence resonance (RR). RR exposes hidden attractors by using external fluctuations. However, if the noise is too large, the behavior of the attractor is drowned out by the noise, so the attractor appears only in a specific low-noise region. Naturally, a certain state of equilibrium between the chaos caused by noise and the order caused by the attractor will emerge here. This is thought to be exactly the critical state discussed in SOC and edge of chaos.

In this paper, we performed numerical calculations based on the expectation that RR and critical states are related. First, we evaluated how the appearance of RR is related to the connection strength pattern of neurons. RR is said to appear if there is a connection between neurons, but it was predicted that there is a difference in its behavior. If the connection strength is massive and follows a normal distribution, such complex behavior will not appear. This is because it is the local loss of information that creates the complex structure. RR can be evaluated by the increase in global mutual information and the existence of a maximum point against the noise strength, so we first calculated global mutual information against the noise strength for small networks consisting of five or seven neurons, where global mutual information can be calculated. The results showed that the maximum value was large and the noise region where global mutual information increased was wide in those regions with a connection strength pattern that indicated Boolean algebra (diagonal condition) or quantum logic (quantum condition) with a connection strength defect.

Secondly, the value of mutual information is low for both random and simple periodic oscillations (order), and is high for complex patterns that are critical states between chaos and order. So, how is this behavior realized as a complex pattern? If there is a local defect in the connection strength, will a complex pattern also appear locally? Therefore, we prepared 5 × 5 neural networks that strengthen the connection strength between nearby neurons depending on the space, and changed the connection strength to normal, diagonal, and quantum conditions to evaluate whether RR appears in local space. This was calculated and evaluated by local mutual information, which calculates mutual information only for a part of the network. No increase in local mutual information was observed in the normal distribution, but the increase and maximum value are shown in the diagonal and quantum conditions, and RR could be clearly recognized even locally.

In the case of the normal condition, the mutual information only decreases monotonically, which means that the pattern found in the initial value becomes a random pattern influenced by noise, and the value decreases, or a clear vibration pattern appears, and then the value decreases. In other words, no critical behavior with a mixture of chaos and noise was found locally. In contrast, the phenomenon found in the diagonal and quantum conditions, where the mutual information increases locally and reaches a maximum value, means that a critical phenomenon in which chaos and order are balanced appears locally. In other words, this means that critical behavior appears locally.

Therefore, we selected neurons from the network that were numerically calculated, analyzed the time series with FFT, and investigated whether the so-called noise appears. noise was clear in the diagonal and quantum conditions, where RR was found in the local mutual information. This noise was found in the high-frequency components of the one-dimensional array, and in the two-dimensional 5 × 5 array, it was found in the low-frequency components. This is because when there is a local defect in the connections of neurons, the flow of information is adjusted, the noise is suppressed at various periods, and the distribution is not such that there is an average value in the periods, resulting in the appearance of noise.

Is it possible that such RR can be observed in other than real neural networks? Proteinoid microspheres, which are materials associated with the origin of life, are known to emit pulse-waves like neurons when electrodes are inserted into their aggregations and their membrane potential is measured. Since the aggregations form a three-dimensional structure with gaps in the form of clusters, the two-dimensionally arranged Boltzmann machines that we have taken up can be considered to approximate this. Here, we measured the time series of potential activity in eight channels in a cluster of Glu-Phe proteinoid microspheres and analyzed the time series by FFT. Finally, we obtained the typical noise in which the power showed a power law distribution with respect to frequency. Because the environment of Glu-Phe proteinoid microspheres was exposed to noise in solution, this was thought to suggest RR. Whether the noise shown by Glu-Phe proteinoid microspheres truly shows RR or not will be clear if we change the noise strength and measure the local mutual information against it. In that case, measuring with eight channels would be sufficient to compute local mutual information.

In the future, we will need to give more thought to mechanisms that create critical behaviour through wandering between attractors and enhance computational power, rather than simply using noise to summon hidden attractors.

5. Conclusions

Originally, RR was calculated by global mutual information (GMI), which calculates the probability from the arrays of the states of all elements, and was defined by the increase in GMI under a specific region of noise strength. However, in a system with a large system size, this cannot be calculated in principle. Here, we found a case where RR can be found by local mutual information (LMI), which calculates the probability from only a part of a neural network. This is local RR observability (LRRO), which depends on the connection state of the neurons and appears in a connection distribution with a certain degree of lack of connection. In other words, LRRO does not exist in normal conditions, but LRRO can be found in diagonal conditions, blocky conditions, and quantum logic conditions. Furthermore, many of the cases where LRRO was found show characteristics of critical phenomena such as , and strict fluctuations can be found, especially in quantum conditions. Since LRRO is a partial increase in the local MI in a local neuron, it suggests that a mixed state of chaos and order is realized locally. MI shows high values in complex patterns that are not simply random or simply periodic oscillations. Therefore, an increase in MI should at least be a necessary condition for complex critical phenomena. In other words, there is no doubt that some LRROs exhibit critical phenomena. We therefore investigated the time series of a single neuron under the coupling conditions that show LRRO, namely, diagonal, blocky, and quantum logic conditions, and evaluated whether fluctuations, a characteristic of critical phenomena, could be observed. As a result, we were able to find fluctuations in these cases, and in particular, we found that strict fluctuations— fluctuations with an exponent extremely close to 1.0—could be found under the quantum logic condition. This may serve as a different scenario for self-organized critical phenomena and edge of chaos. It was believed that the space of possible dynamical systems originally assumed was mostly either order or chaos, and that only a very small number of dynamical systems in between exhibited critical phenomena. From this, the reason why only a small number of dynamical systems are often found in biological systems was attributed to strict natural selection. However, it may not be the dynamical system itself, such as the mode of interaction, that causes criticality, but rather that criticality appears in more fundamental situations, such as the conditions and environment that realize the dynamical system. In quantum logic automata, various transition rules for time evolution are prepared, while in the execution environment that realizes them, deterministic ones and ones that allow ambiguity are prepared. The former is an execution environment that leads to classical logic, and the latter is an execution environment that leads to quantum logic. It was found that criticality is rarely observed in a classical logic environment, but in a quantum logic environment, fluctuations are observed in many cases regardless of the transition rules, and criticality is observed.

The criticality shown by the LRRO we found is also dependent on the bond distribution pattern, which strengthens the scenario of criticality depending on the computation environment. In other words, the reason for the criticality is not due to severe competition for survival, but is due to the distribution of materials that are originally widely present, and it may be something that can be found very easily. The so-called 1/f fluctuation was observed from the local time series of the proteinoid microspheres. Although it is not strictly 1/f, even in the case of the quantum logical condition of the Boltzmann machine, the exponent is different between the high-frequency component and the low-frequency component, so it is possible that 1/f fluctuation was observed in a broad sense. This makes it possible to think that even if there is a difference in the dynamical system between Boltzmann machines and proteinoid microspheres, there is a common condition for both to behave like a critical phenomenon, from which it can be inferred that this is the binding structure of the elements.

Author Contributions

Y.P.G. produced the ground design of the research. Y.H. wrote the code and computed all results concerning Boltzmann machines. P.M. conducted the experiments of proteinoiod microspheres and analyzed the results. A.A. checked the connection between Boltzmann machines and proteinoid microspheres. While Y.P.G. mainly wrote the text, except for the part on proteinoid micripsheres, all members contributed to writing the manuscript. The protenoids part was mainly written by P.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by JSPS 22K12231 and 23K00237, and also by Waseda University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Conrad, M. Adaptability, The Significance of Variability from Molecule to Ecosystem; Plenum Press: New York, NY, USA, 1983. [Google Scholar]

- Gunji, Y.P.; Uragami, D. Computational power of asynchronously tuned automata enhancing the unfolded edge of chaos. Entropy 2021, 23, 1376. [Google Scholar] [CrossRef]

- McNamara, B.; Wiesenfeld, K. Theory of stochastic resonance. Phys. Rev. A 1989, 39, 4854. [Google Scholar] [CrossRef] [PubMed]

- Gammaitoni, L.; Hänggi, P.; Jung, P.; Marchesoni, F. Stochastic resonance. Rev. Mod. Phys. 1998, 70, 223. [Google Scholar] [CrossRef]

- Russell, D.F.; Wilkens, L.A.; Moss, F. Use of behavioural stochastic resonance by paddle fish for feeding. Nature 1999, 402, 291–294. [Google Scholar] [CrossRef]

- Krauss, P.; Prebeck, K.; Schilling, A.; Metzner, C. “Recurrence resonance” in three-neuron motifs. Front. Comput. Neurosci. 2019, 13, 64. [Google Scholar] [CrossRef]

- Metzner, C.; Schilling, A.; Maier, A.; Krauss, P. Recurrence resonance-noise-enhanced dynamics in recurrent neural networks. Front. Complex Syst. 2024, 2, 1479417. [Google Scholar] [CrossRef]

- Middleton, F.A.; Strick, P.L. Basal ganglia and cerebellar loops: Motor and cognitive circuits. Brain Res. Rev. 2000, 31, 236–250. [Google Scholar] [CrossRef] [PubMed]

- Song, S.; Sjöström, P.J.; Reigl, M.; Nelson, S.; Chklovskii, D.B. Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol. 2005, 3, e68. [Google Scholar]

- Schrauwen, B.; Verstraeten, D.; Van Campenhout, J. An overview of reservoir computing: Theory, applications and implementations. In Proceedings of the 15th European Symposium on Artificial Neural Networks, Bruges, Belgium, 25–27 April 2007; pp. 471–482. [Google Scholar]

- Verstraeten, D.; Schrauwen, B.; d’Haene, M.; Stroobandt, D. An experimental unification of reservoir computing methods. Neural Netw. 2007, 20, 391–403. [Google Scholar] [CrossRef] [PubMed]

- Lukoševičius, M.; Jaeger, H. Reservoir computing approaches to recurrent neural network training. Comput. Sci. Rev. 2009, 3, 127–149. [Google Scholar] [CrossRef]

- Bak, P.; Tang, C. Earthquakes as a self-organized critical phenomenon. J. Geol. Res. 1989, 94, 635–637. [Google Scholar] [CrossRef]

- Bak, P.; Sneppen, K. Punctuated equilibrium and criticality in a simple model of evolution. Phys. Rev. Lett. 1993, 71, 4083–4086. [Google Scholar] [CrossRef] [PubMed]

- Sneppen, K.; Bak, P.; Flybjerg, H.; Jensen, M. Evolution as a self-organized critical phenomenon. Proc. Nat. Acad. Sci. USA 1995, 92, 5209–5213. [Google Scholar] [CrossRef] [PubMed]

- Kauffman, S.; Johnsen, S. Coevolution to the edge of chaos: Coupled fitness landscape, poised states, and coevolutionary avalanches. J. Theor. Biol. 1991, 149, 467–505. [Google Scholar] [CrossRef]

- Kauffman, S. A World Beyond Physics. The Emergence & Evolution of Life; Oxford University Press: Oxford, UK, 2019. [Google Scholar]

- Langton, C. Computation at the edge of chaos: Phase transitions and emergent computation. Phys. D 1990, 42, 12–37. [Google Scholar] [CrossRef]

- Santos, M.; Szezech, J.; Borges, F.; Iarosz, K.; Caldos, K.; Batista, A.; Viana, R.; Kurths, J. Chimera-like states in a neuronal network model of cat brain. Chaos Solitons Fractals 2017, 101, 86–91. [Google Scholar] [CrossRef]

- Lendner, J.; Helfrich, R.; Mander, B.; Lin, J.; Walker, M.; Larsson, P.; Knight, R. An electrophysiological marker of arousal level in humans. eLife 2020, 9, e55092. [Google Scholar] [CrossRef] [PubMed]

- Plentz, D.; Ribeiro, T.; Miller, S.; Kells, P.; Vakili, A.; Capek, E. Self-organized criticality in the brain. Front. Phys 2021, 9, 365. [Google Scholar]

- Wolfram, S. Statistical mechanics of cellular automata. Rev. Mod. Phys. 1983, 55, 601–644. [Google Scholar] [CrossRef]

- Wolfram, S. A New Kind of Science; Wolfram Media: Champaign, IL, USA, 2002. [Google Scholar]

- Uragami, D.; Gunji, Y. Universal criticality in reservoir computing using asynchronous cellular automata. Complex Syst. 2022, 31, 104–121. [Google Scholar]

- Gunji, Y.P.; Ohzawa, Y.; Tokuyama, Y.; Eto, K. Quantum logic automata generalizing the edge of chaos in complex systems. Front. Complex Syst. 2024, 2, 1347930. [Google Scholar] [CrossRef]

- Tokuyama, Y.; Ohzawa, Y.; Gunji, Y.P. Quantum logic automata generate class IV-like patterns and 1/f noise. BioSystems 2024, 246, 105339. [Google Scholar] [CrossRef] [PubMed]

- Ackley, D.H.; Hinton, G.E.; Sejnowski, T.J. A learning algorithm for Boltzmann machines. Cogn. Sci. 1985, 9, 147–169. [Google Scholar]

- Salakhutdinov, R.; Hinton, G. Deep boltzmann machines. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Clearwater, FL, USA, 16–18 April 2009; pp. 448–455. [Google Scholar]

- Fox, S.W.; Jungck, J.R.; Nakashima, T. From proteinoid microsphere to contemporary cell: Formation of internucleotide and peptide bonds by proteinoid particles. Orig. Life 1974, 5, 227–237. [Google Scholar] [CrossRef]

- Kokufuta, E.; Sakai, H.; Harada, K. Factors controlling the size of proteinoid microspheres. BioSystems 1983, 16, 175–181. [Google Scholar] [CrossRef] [PubMed]

- Nakashima, T. Metabolism of proteinoid microspheres. In Organic Geo- and Cosmochemistry; Springer: Berlin/Heidelberg, Germany, 2005; pp. 57–81. [Google Scholar]

- Mougkogiannis, P.; Adamatzky, A. Logical gates in ensembles of protenoid microsphere. PLoS ONE 2023, 18, e0289433. [Google Scholar] [CrossRef]

- Davey, B. Introduction to Lattices and Order; Cambridge University Press: Cambridge, UK, 2002. [Google Scholar]

- Yao, Y. Concept lattices in rough set theory. In Proceedings of the IEEE Annual Meeting of the Fuzzy Information, 2004. Processing NAFIPS’04, Banff, AB, Canada, 27–30 June 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 2, pp. 796–801. [Google Scholar]

- Gunji, Y.P.; Haruna, T. A non-boolean lattice derived by double indiscernibility. In Transactions on Rough Sets XII; Springer: Berlin/Heidelberg, Germany, 2010; pp. 211–225. [Google Scholar]

- Gunji, Y.P.; Nakamura, K. Psychological origin of quantum logic: An orthomodular lattice derived from natural-born intelligence without hilbert space. BioSystems 2022, 215, 104649. [Google Scholar] [CrossRef] [PubMed]

- Mougkogiannis, P.; Phillips, N.; Adamatzky, A. Transfer functions of proteinoid microspheres. BioSystems 2023, 227, 104892. [Google Scholar] [CrossRef] [PubMed]

- Hopfield, J.J. Hopfield network. Scholarpedia 2007, 2, 1977. [Google Scholar] [CrossRef]

- Gunji, Y.P.; Shinohara, S.; Haruna, T.; Basios, V. Inverse Bayesian inference as a key of consciousness featuring a macroscopic quantum logical structure. BioSystems 2017, 152, 44–65. [Google Scholar] [CrossRef] [PubMed]

- Mougkogiannis, P.; Nikolaidou, A.; Adamatzky, A. Kombucha–Chlorella–Proteinoid Biosynthetic Classifiers of Audio Signals. Nano Sel. 2024, e202400080. [Google Scholar] [CrossRef]

- Jin, R.B.; Shimizu, R. Extended Wiener–Khinchin theorem for quantum spectral analysis. Optica 2018, 5, 93–98. [Google Scholar] [CrossRef]

- Poundstone, W. The Recursive Universe: Cosmic Complexity and the Limits of Scientific Knowledge; Courier Corporation: North Chelmsford, MA, USA, 2013. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).