1. Introduction

With the widespread application of generative artificial intelligence, a large number of images are generated, transmitted, stored, and used in various aspects of society. For the sake of information security, image encryption methods are widely used in the storage and transmission of images. However, due to the unique characteristics of images, such as high redundancy and strong pixel correlation, traditional encryption methods are not suitable for image encryption. Chaotic systems are widely used in image encryption due to their many advantages, such as pseudo-randomness, ergodicity, and extreme sensitivity to control parameters and initial values [1,2,3,4].

Many studies have established image encryption methods based on chaotic systems and combined them with other techniques, for example, encryption algorithms that combine chaotic systems and neural networks [5,6,7,8,9,10], encryption methods that combine quantum computing and chaotic systems [11,12], and methods that combine chaotic systems and compressive sensing [13,14,15].

Among such combinations, DNA manipulation has been widely applied. Owing to its unique properties, DNA manipulation encrypts images at a new level, breaks the strong correlation between adjacent pixels, and is widely used in image diffusion [16,17,18,19]. Eight-base DNA manipulation is a notable recent improvement that adds four artificial bases, expanding the diversity and randomness of DNA manipulation [20,21].

Image diffusion and permutation are the two basic operations of image encryption. Permutation changes the positions of pixels according to certain rules, while diffusion changes the values of pixels using chaotic sequences or the image's own information. Zhongyun Hua et al. proposed a method of diffusion across different color planes, expanding the range of diffusion [22]. DNA manipulation is an important diffusion method that recombines image pixels according to certain DNA rules to obtain base sequences and then performs operations on those sequences, generally including “addition”, “XOR”, and “transcription”. When the bases are restored to pixels, the encrypted images exhibit higher information entropy. In recent years, there have also been methods that combine diffusion and permutation operations [23,24,25,26], reducing the number of encryption steps and improving encryption efficiency.

In addition, the idea of dividing a problem into subproblems is widely used in image encryption. There are two branches of chaos-based image encryption: image encryption based on stream ciphers and image encryption based on block ciphers [27,28]. Splitting images by channel is the most fundamental and intuitive method [29,30,31]. Hua, Z. et al. proposed a method for processing images using Latin orthogonalization [32]. Zhang, D. et al. proposed a method of splitting images into Latin cubes, which increases the dimension of encryption and improves its randomness [33]. W. Feng et al. analyzed existing methods and improved the encryption method based on the Feistel network [34]. Y. Wang et al. proposed dividing the image into n equal parts, encrypting them separately, and finally recombining them into the encrypted image, which exploits the multi-threading capability of modern devices and greatly shortens the execution time of the algorithm [35].

In 1994, Adleman first used DNA molecules to solve the Hamiltonian path problem, verifying the feasibility of using biomolecules as a computational medium and laying the foundation for subsequent DNA information processing technologies. In image encryption, DNA manipulation refers to recoding an image as a sequence of nucleotides and then encrypting it by operating on the base sequence, ultimately achieving better diffusion. In the 2010s, DNA manipulation and chaotic systems were combined in image encryption, giving rise to a large number of encryption methods. At that time, however, base operations were still few and simple, and good results relied mainly on the randomness of the chaotic systems. In recent years, scholars have proposed many innovations on this foundation. U.K. Gera et al. introduced algebraic operations into base operations [36], increasing their diversity. Yu, Jinwei et al. introduced the triploid mutation method into DNA manipulation and significantly reduced correlation [37]. Fan et al. introduced eight-base DNA manipulation into image encryption, increasing the breadth and depth of DNA manipulation [19].

These methods have their own advantages, but they share a common drawback of limited encryption dimensions: most of them use only one encoding and decoding method, and cannot exploit the different encoding methods or the greater randomness generated by combining them. To make full use of the characteristics of the various encoding levels and the advantages of mixing different encoding methods, this article proposes a method called PD5H. PD5H improves the sensitivity, robustness, and resistance to differential attacks by converting the image into a bitstream, cutting it into segments of different lengths, encoding them, and applying different encryption methods.

The main contributions of PD5H proposed in this article are as follows: (1) On the basis of the original five-dimensional chaotic system, the coupling between dimensions is enhanced, which strengthens the chaotic characteristics of the system. (2) A new diffusion method based on stream encryption is proposed, which combines bit-level and base-level diffusion operations and simultaneously uses four-base and eight-base DNA diffusion operations. By combining multiple encryption operations, an encryption method with high key sensitivity and good performance is obtained. (3) Experiments show that the proposed PD5H achieves good results in color image encryption and can effectively resist various types of attacks. The key innovation of this work is an encryption algorithm based on a hyperchaotic system with bit-stream encryption as its core.

The main structure of this article is as follows:

Section 1 mainly analyzes the current status of DNA encryption methods based on chaotic systems and lists the advanced directions and innovative points of current research.

Section 2 briefly introduces the chaotic system referenced and modified in this article and the four-base DNA and eight-base DNA operations.

Section 3 elaborates on the specific methods and processes of encryption in detail.

Section 4 tests the resistance of the encryption method to various attacks and compares the results with those of other advanced methods.

Section 5 provides a brief summary of the work we have conducted.

2. Preliminaries

2.1. Hyperchaotic System

In 2024, Saleh Mobayen et al. proposed a five-dimensional hyperchaotic system, whose algebraic expression is as follows [38]:

In the above equation, x, y, z, w, and u are state variables, and the system parameters are a, b, c, d, e, k, r, g, m, and n. Numerical simulations were conducted in MATLAB with the initial conditions (x, y, z, w, u) = (0, 0, 0, 1, 0), the system parameters a = −10, b = 1, c = 3, k = 1, d = 9.8, e = 45, r = −10, g = 5, m = 1, and n = 2/3, a time step of 0.01 s, and a time length of 100 s. The corresponding Lyapunov exponents are LE1 = 1.2068, LE2 = 0.002, LE3 = −0.0032, LE4 = −5.2070, and LE5 = −13.9665. Two of them are positive, indicating that System (1) is hyperchaotic, and the sum of the five exponents is negative, indicating that the system is dissipative.

2.2. Four-Base DNA Operation and Eight-Base DNA Operation

Traditional encryption methods, such as the Data Encryption Standard (DES) and the Advanced Encryption Standard (AES), cannot effectively eliminate the local correlation and statistical features of images. DNA operations, however, can eliminate the statistical features of images and reduce their local correlation through complementary and algebraic operations.

The DNA operation in image encryption is a cryptographic method inspired by molecular biology. Its core lies in mapping image data to a symbol system composed of simulated DNA bases and performing operations based on specific rules to achieve deep confusion and diffusion. Ignoring the choice of encoding method, traditional four-base DNA operations admit only eight unary operations that satisfy the complementarity rule, so the variation space is small and easy to brute-force. Under the same conditions, eight-base DNA operations admit as many as 384 operations that satisfy the complementarity rule, greatly expanding the diversity of encryption methods.

However, the eight-base encoding takes a long time and occupies a large space, so we propose a joint diffusion method that combines a four-base DNA operation and an eight-base DNA operation, which can ensure that the encryption effect is not affected while reducing the encoding and decoding time.

2.2.1. Four-Base DNA Operation

There are 4! = 24 ways to encode two bits as DNA bases, but only eight of them comply with the principle of base complementarity. The encoding methods that meet this requirement are listed in Table 1.
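The counts quoted in this section can be checked by brute force. The following is a minimal sketch, assuming that "complying with base complementarity" means that paired bases receive bitwise-complementary binary codes; the labels used for the four artificial bases are placeholders chosen for illustration and are not taken from the paper.

```python
from itertools import permutations

def count_complementary_encodings(pairs, bits):
    """Count code assignments in which paired bases get bitwise-complementary codes.

    pairs: list of (base, complement) tuples covering every base exactly once.
    bits:  number of bits per base (2 for four bases, 3 for eight bases).
    Returns (total_assignments, valid_assignments).
    """
    bases = [b for pair in pairs for b in pair]
    mask = (1 << bits) - 1  # e.g. 0b11 or 0b111
    total = valid = 0
    for codes in permutations(range(1 << bits), len(bases)):
        total += 1
        code_of = dict(zip(bases, codes))
        # A pair is complementary when XOR of the two codes is all ones.
        if all(code_of[x] ^ code_of[y] == mask for x, y in pairs):
            valid += 1
    return total, valid

four_base = [("A", "T"), ("C", "G")]
# Placeholder labels for the artificial bases (assumption for illustration).
eight_base = four_base + [("P", "Z"), ("B", "S")]

print(count_complementary_encodings(four_base, 2))
print(count_complementary_encodings(eight_base, 3))
```

Under this assumption the enumeration reproduces 8 valid encodings out of 24 for four bases and 384 for eight bases, which agrees with the closed form k!·2^k for k complementary pairs.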

Using different encoding methods for the same image results in different base sequences. Complementary or algebraic operations are often used for encryption; several common algebraic operations are listed in Table 2.
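As an illustration of four-base encoding and base-level XOR, the sketch below assumes the common mapping 00→A, 01→C, 10→G, 11→T and defines base XOR as the XOR of the underlying two-bit codes. Whether this mapping coincides with rule 1 of Table 1, and whether the derived XOR matches Table 2, are assumptions.

```python
BASES = "ACGT"  # assumed mapping: index in this string = 2-bit code (A=00, C=01, G=10, T=11)

def encode_byte(value):
    """Encode one 8-bit value as four bases, most significant bit pair first."""
    return "".join(BASES[(value >> shift) & 0b11] for shift in (6, 4, 2, 0))

def decode_bases(seq):
    """Convert a base string back into the integer it encodes."""
    value = 0
    for base in seq:
        value = (value << 2) | BASES.index(base)
    return value

def dna_xor(seq_a, seq_b):
    """Base-wise XOR, defined here through the XOR of the 2-bit codes."""
    return "".join(BASES[BASES.index(a) ^ BASES.index(b)] for a, b in zip(seq_a, seq_b))

pixel, key = 0b00011011, 0b11100100
cipher = dna_xor(encode_byte(pixel), encode_byte(key))
# XOR is its own inverse, so applying the same key bases again restores the pixel.
restored = decode_bases(dna_xor(cipher, encode_byte(key)))
print(encode_byte(pixel), cipher, restored == pixel)
```

With this definition, base XOR is equivalent to bitwise XOR on the decoded values, so the benefit of the DNA layer comes from varying the encoding rule and mixing operations rather than from the XOR itself.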

2.2.2. Eight-Base DNA Operation

On the basis of the four-base operation, four new artificial bases were added, which are also paired with each other. Unlike four-base operations, eight-base operations require the use of three bits for encoding, which is very friendly for three-channel images. Each channel can be recombined bit by bit, breaking certain correlations, and there will be no remaining bits generated during processing, allowing for more symmetric encryption.

Some (not all) of the coding rules that meet the complementarity requirement are listed in Table 3.

Eight-base DNA manipulation greatly increases the diversity of DNA manipulation, expanding both the coding and the computational methods. Because each of the eight bases is encoded with three bits, it is well suited to three-channel color images and can be applied to a wide range of encryption methods.

3. The Improved Color Image Encryption Approach

3.1. Improved Five-Dimensional Chaotic System

To improve the performance of System (1), we propose the improved system given by Equation (2):

In the above equation, x, y, z, w, and u are state variables. Numerical simulations were conducted in MATLAB with the initial conditions (x, y, z, w, u) = (1, 0, 1, 0, −3.5), the system parameters a = −10, b = 1, c = 3, d = 9.8, e = 45, f = 4, k = 1, r = −10, m = 1, n = 2/3, t = 2, and h = 0.7, a time step of 0.01 s, and a time length of 3000 s.

The coupling selection between high-dimensional chaotic systems is an important topic. Excessive coupling can lead to system synchronization, while insufficient coupling can lead to system decoupling, resulting in a decrease in the chaos of chaotic systems. Analyzing the information flow of the original chaotic system, it can be found that there is no direct information flow between and, which may lead to the chaotic behavior of the chaotic system being too simple and even cause the chaotic system to degenerate into a normal system. Therefore, we have appropriately enhanced the information flow of and , enhancing the chaotic behavior of the system.

3.1.1. Attractor Phase Diagram

The attractor phase diagram of the system is shown in Figure 1.

Compared to the Lorenz-like phase diagram of the original system, the improved system exhibits more complex chaotic behavior and better performance.

3.1.2. Lyapunov Exponent

The Lyapunov dimension, also known as the Kaplan–Yorke dimension, estimates the fractal dimension of the attractor of a dynamical system from its Lyapunov exponent spectrum. The core idea is based on the Kaplan–Yorke conjecture, which links the Lyapunov exponents to a geometric dimension in order to describe the complex structure of chaotic attractors.

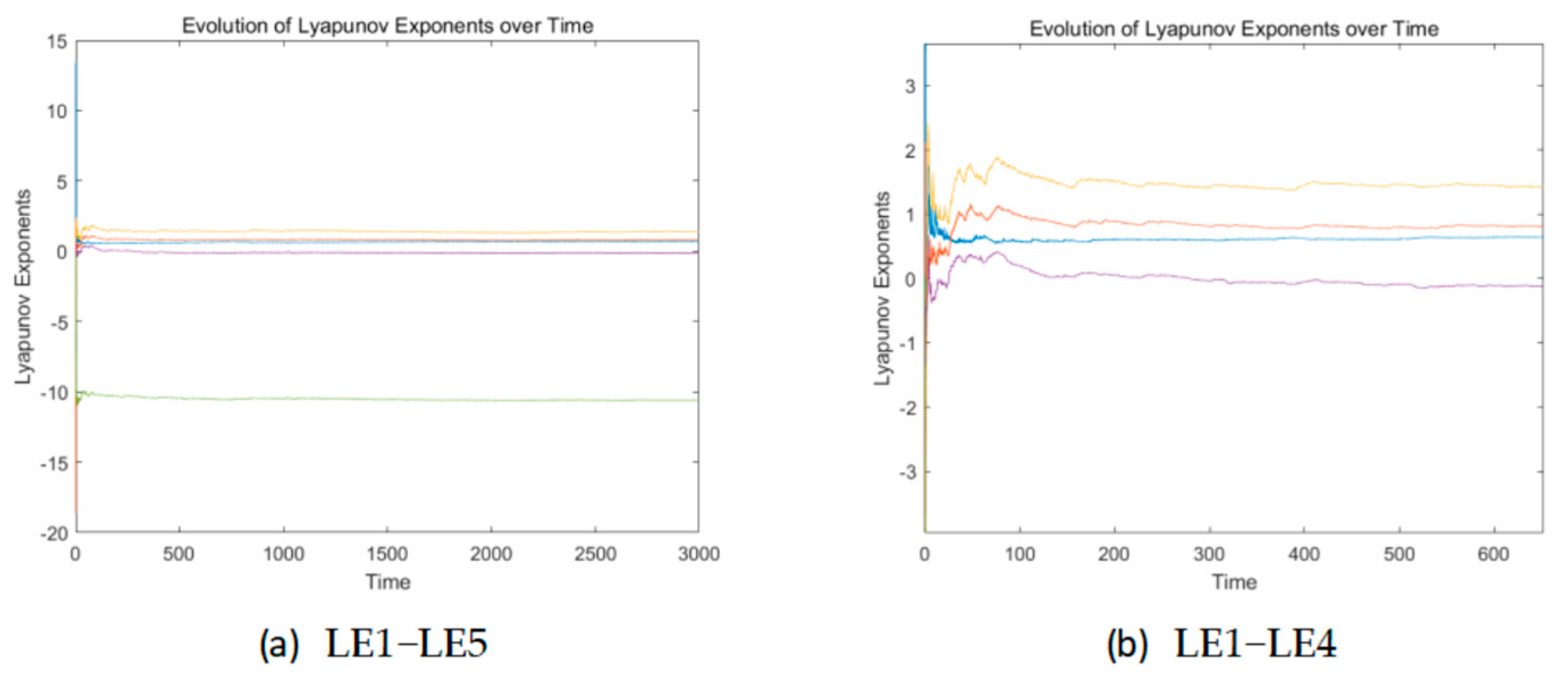

The corresponding Lyapunov exponents are as follows: LE1 = 1.4002, LE2 = 0.8084, LE3 = 0.6665, LE4 = −0.1335, and LE5 = −10.5936. The system has three positive Lyapunov exponents, and the largest Lyapunov exponent has increased by 16% compared to the original system, a significant improvement. The Lyapunov dimension of the improved system is as follows:

The Lyapunov dimension of the original system is as follows:

Compared to the original system, the Lyapunov dimension has increased by about 0.55. This indicates that trajectories of the improved system diverge along more directions, so the improved system has better performance.
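For readers who want to recompute a Lyapunov dimension from an exponent spectrum, the standard Kaplan–Yorke formula is D = j + (LE1 + … + LEj)/|LE(j+1)|, where j is the largest index for which the partial sum of the descending-sorted exponents is non-negative. The sketch below applies that formula to the exponents listed for the improved system; it is a cross-check of the textbook definition, not a reproduction of the authors' MATLAB computation.

```python
def kaplan_yorke_dimension(exponents):
    """Kaplan-Yorke (Lyapunov) dimension of a Lyapunov exponent spectrum."""
    les = sorted(exponents, reverse=True)
    partial = 0.0
    for j, le in enumerate(les):
        # Stop at the first exponent that would make the partial sum negative.
        if partial + le < 0:
            return j + partial / abs(le)
        partial += le
    # The partial sum never turns negative: the dimension equals the phase-space dimension.
    return float(len(les))

improved = [1.4002, 0.8084, 0.6665, -0.1335, -10.5936]
print(round(kaplan_yorke_dimension(improved), 4))
```

For this spectrum the first four exponents sum to 2.7416, so the formula evaluates to 4 + 2.7416/10.5936.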

Figure 2 shows the time-varying Lyapunov exponent spectrum of the system, which can visually demonstrate the process of Lyapunov exponent tending to stability. The lines from top to bottom in (a) are LE1 to LE5, and the lines from top to bottom in (b) are LE1 to LE4.

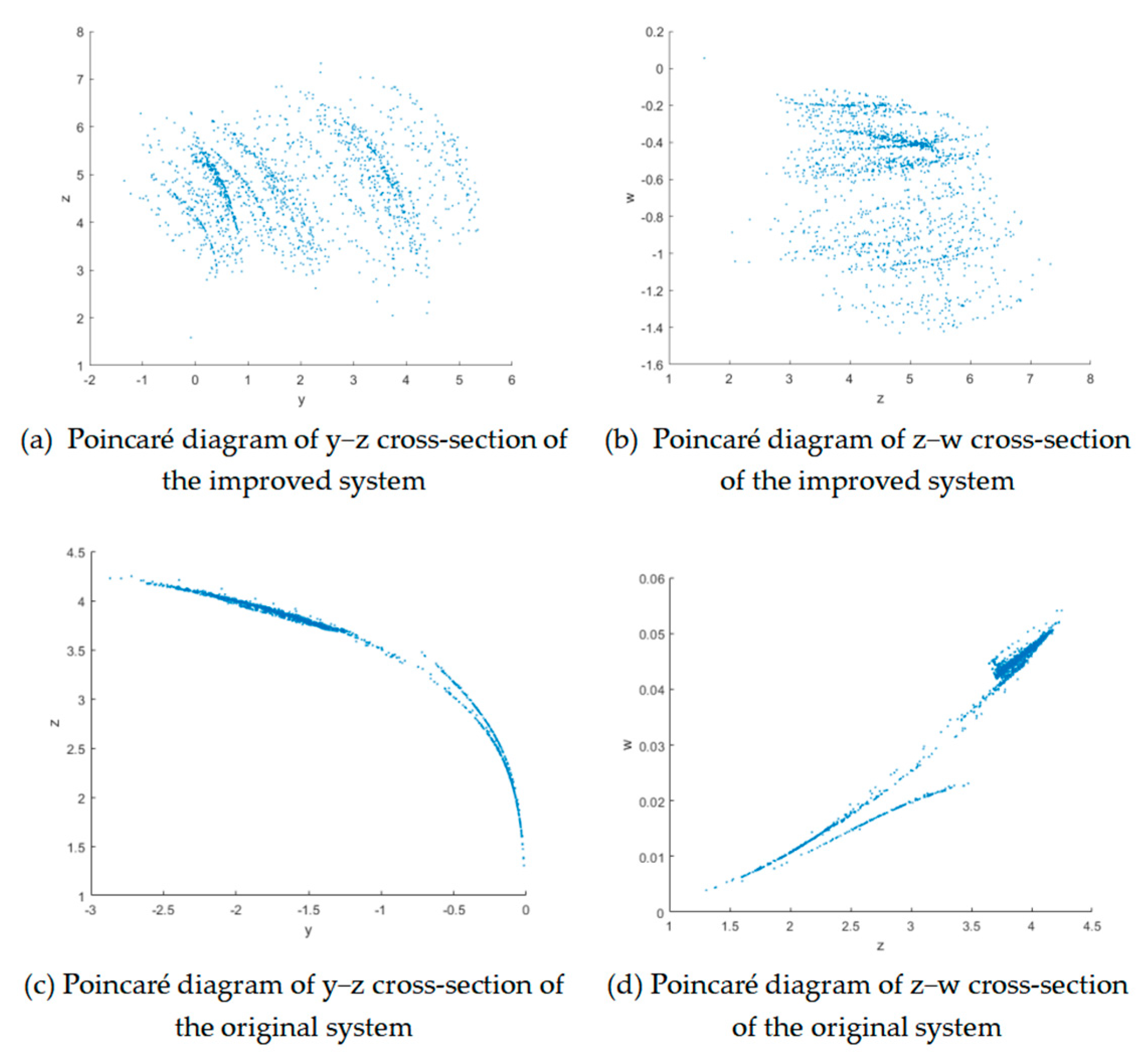

3.1.3. Poincaré Map

The Poincaré map simplifies the analysis of long-term dynamical behavior by selecting a hyperplane section in phase space and recording the points at which the trajectory of the dynamical system crosses that section in one direction. It transforms a continuous-time dynamical system into a discrete map.

It can be seen from Figure 3 that the two Poincaré diagrams of the original system are clearly bounded and clustered, indicating that the motion of the original system is close to quasi-periodic, with no obvious chaos. The Poincaré diagram of the improved chaotic system has a complex fractal structure; therefore, the improved chaotic system is more chaotic.

3.1.4. NIST Test

The NIST test suite is a statistical test suite developed by the National Institute of Standards and Technology in the United States to evaluate the quality of random number generators. It includes 15 core tests, such as the frequency test and the runs test, which detect defects in encryption algorithms or random number generators by analyzing the randomness of binary sequences. It is an important standard for the security certification of cryptographic products. NIST SP800-22 is a widely accepted suite for verifying the randomness of chaotic sequences [39]. To verify the effectiveness of our improvement, we present the NIST test results for both the original chaotic system and the improved chaotic system. The results are shown in Table 7.

The items marked with “*” in the table represent multiple test results, therefore they are expressed in the form of scores as “number of passed items/total number of tested items”.

From the table, it can be seen that our improved system performs better than the original chaotic system. Although both systems pass all of the NIST tests, the p-values of the improved system are more uniform and higher. In particular, in the “w” and “u” dimensions, the “Rank” and “Cumulative Sums Test” values of the original system are significantly lower than in the other dimensions, which suggests that these two dimensions have certain randomness defects. Our improved system mitigates this problem to a certain extent.
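To make the meaning of a NIST p-value concrete, the sketch below implements the simplest test of the suite, the frequency (monobit) test, as defined in NIST SP 800-22: the bits are mapped to ±1, summed to S, and the p-value is erfc(|S|/√(2n)). A sequence passes at the usual significance level when the p-value is at least 0.01. How the chaotic sequences are binarized before testing is not specified here, so the input is simply assumed to be a list of bits.

```python
import math

def monobit_p_value(bits):
    """Frequency (monobit) test p-value from NIST SP 800-22."""
    n = len(bits)
    # Map 1 -> +1 and 0 -> -1, then sum.
    s = sum(1 if b else -1 for b in bits)
    return math.erfc(abs(s) / math.sqrt(2.0 * n))

# Ten-bit illustration: six ones and four zeros give S = 2.
example = [int(c) for c in "1011010101"]
p = monobit_p_value(example)
print(p, p >= 0.01)
```

A heavily biased sequence, such as one consisting only of ones, yields a p-value far below 0.01 and fails the test.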

3.2. Generation of Hyperchaotic Sequences

Encrypting multiple different images with the same key is susceptible to known-plaintext and chosen-plaintext attacks. A mainstream solution is to generate the key from the information of the image itself, so that each image has its own unique key. This enhances the resistance to known-plaintext and chosen-plaintext attacks: even if the attacker already knows the correspondence between some plaintexts and ciphertexts, as well as the encryption method, they cannot quickly crack the ciphertext. We use this approach to generate the initial values of the chaotic system. Specifically, the initial values are generated as follows:

- Step 1:

The 3D image is flattened into a one-dimensional sequence. The SHA-256 hash of this sequence is computed, resulting in a 256-bit digest. This digest is then split into four contiguous 64-bit blocks and a 64-bit constant constituting the key set ;

- Step 2:

An intermediate variable

is introduced. For each

member

(where

i = 1, 2, 3, 4), the following two operations are performed sequentially:

where

denotes the cyclic left shift

by 48 bits. This operation is equivalent to

, where

and

are logical shifts, and

is the bitwise OR operation. Similarly, rotr (C, 48) denotes the cyclic right shift

by 16 bits, equivalent to

.

- Step 3:

The four 64-bit

is concatenated into a single 256-bit block, which is then interpreted as a sequence of 32 bytes (8-bit unsigned integers):

A new key sequence

is generated from

to enhance diffusion. For each member of

,

(

j = 1, 3, 5, …, 31) obtains its value using the following formula:

- Step 4:

Record the initial value as

and calculate the initial value as follows:

- Step 5:

Input the control parameters a, b, c, d, e, f, g, k, r, m, n, t, and h with initial values into System (2) to generate a hyperchaotic matrix, as has a size of .
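The first two steps above rely on two generic building blocks: hashing the flattened image with SHA-256 and splitting the digest into four 64-bit words, and rotating a 64-bit word cyclically. The sketch below shows only these building blocks. The big-endian byte order, the row-major flattening, and the helper names are assumptions, and the mixing formulas of Steps 2 to 4 are not reproduced.

```python
import hashlib
import numpy as np

MASK64 = (1 << 64) - 1

def rotl64(x, n):
    """Cyclic left shift of a 64-bit word: (x << n) | (x >> (64 - n))."""
    n %= 64
    return ((x << n) | (x >> (64 - n))) & MASK64

def rotr64(x, n):
    """Cyclic right shift of a 64-bit word."""
    n %= 64
    return ((x >> n) | (x << (64 - n))) & MASK64

def sha256_key_words(image):
    """Hash the flattened image and split the 256-bit digest into four 64-bit words."""
    digest = hashlib.sha256(np.ascontiguousarray(image).tobytes()).digest()
    # Assumption: each 8-byte block is read as a big-endian unsigned integer.
    return [int.from_bytes(digest[i:i + 8], "big") for i in range(0, 32, 8)]

img = np.zeros((4, 4, 3), dtype=np.uint8)
words = sha256_key_words(img)
print([hex(w) for w in words])
# On 64-bit words, a left rotation by 48 equals a right rotation by 16.
print(rotl64(words[0], 48) == rotr64(words[0], 16))
```

Because the digest depends on every pixel, changing a single pixel value produces a different set of key words, which is what ties the key to the plaintext image.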

The key can be divided into SHA256 and a custom key part , so we will discuss it in two parts.

Every change in the SHA part will result in a corresponding change in (scope of matters 1–32), which in turn will cause a change in two . Under pessimistic estimates, it will cause at least one initial value to change, while under optimistic estimates, it will cause at most two initial values to change. In summary, the expected value is 1.625.

Changing one bit of the custom key will cause four to change. In a pessimistic situation, it will cause three initial values to change. In an optimistic situation, it will cause four initial values to change. So key design is effective and can be used to spread small changes in the key.

3.3. Padding

To prepare the image for the subsequent intra-block encryption, padding is performed once. During padding, parity check bits are introduced so that the padded pixels can be removed cleanly during decryption. Padding is applied to the columns first and then to the rows, as follows:

- Step 1:

If the number of columns is even, skip this step and proceed to Step 2. If the number of columns is odd, duplicate the last column and append it to the image.

- Step 2:

Perform a parity check on the image. Use the last bit of the last column of the image as the parity check bit. This step ensures the number of 1s in the Least Significant Bits (LSBs) of every row is even, effectively using the LSB of the last pixel in each row as a parity bit.

- Step 3:

If the number of rows is even, end. If the number of rows is odd, duplicate the last row of pixels as a new row of pixels.

In this way, a new image is obtained, whose rows meet the parity check requirements and the number of rows and columns is even, as and .
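The three padding steps can be sketched with NumPy as follows. This is one possible reading of the description, assuming an H × W × 3 uint8 image, a parity bit computed independently for each row of each channel, and that the parity bit overwrites the least significant bit of the last pixel in the row; the function name is hypothetical.

```python
import numpy as np

def pad_with_parity(img):
    """Pad an H x W x C uint8 image to even size and set a per-row LSB parity bit."""
    out = img.copy()
    # Step 1: duplicate the last column if the column count is odd.
    if out.shape[1] % 2 == 1:
        out = np.concatenate([out, out[:, -1:, :]], axis=1)
    # Step 2: choose the LSB of the last pixel in each row so that the
    # number of 1s among the row's LSBs is even (assumed per channel).
    parity = (out[:, :-1, :] & 1).sum(axis=1) % 2
    out[:, -1, :] = (out[:, -1, :] & 0xFE) | parity.astype(out.dtype)
    # Step 3: duplicate the last row if the row count is odd.
    if out.shape[0] % 2 == 1:
        out = np.concatenate([out, out[-1:, :, :]], axis=0)
    return out

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(5, 5, 3), dtype=np.uint8)
padded = pad_with_parity(image)
print(padded.shape)
```

Since a duplicated row inherits the parity of the row it copies, every row of the padded image satisfies the parity condition.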

3.4. Ascending Sort

Take a hyperchaotic matrix with a size of 3 × (

h ×

w) from

and interleave the matrix into a one-dimensional sequence, as

. Then, adjust the values of the hyperchaotic sequence

to integers within 0 to 1000 using Equation (9).

Sort

in ascending order, record the original index of each element during the sorting process, and record the index of the sorting result as

. Let the filled image be

, interleave

into a one-dimensional integer sequence, denoted as

, and then perform the following operation on all members

of

:

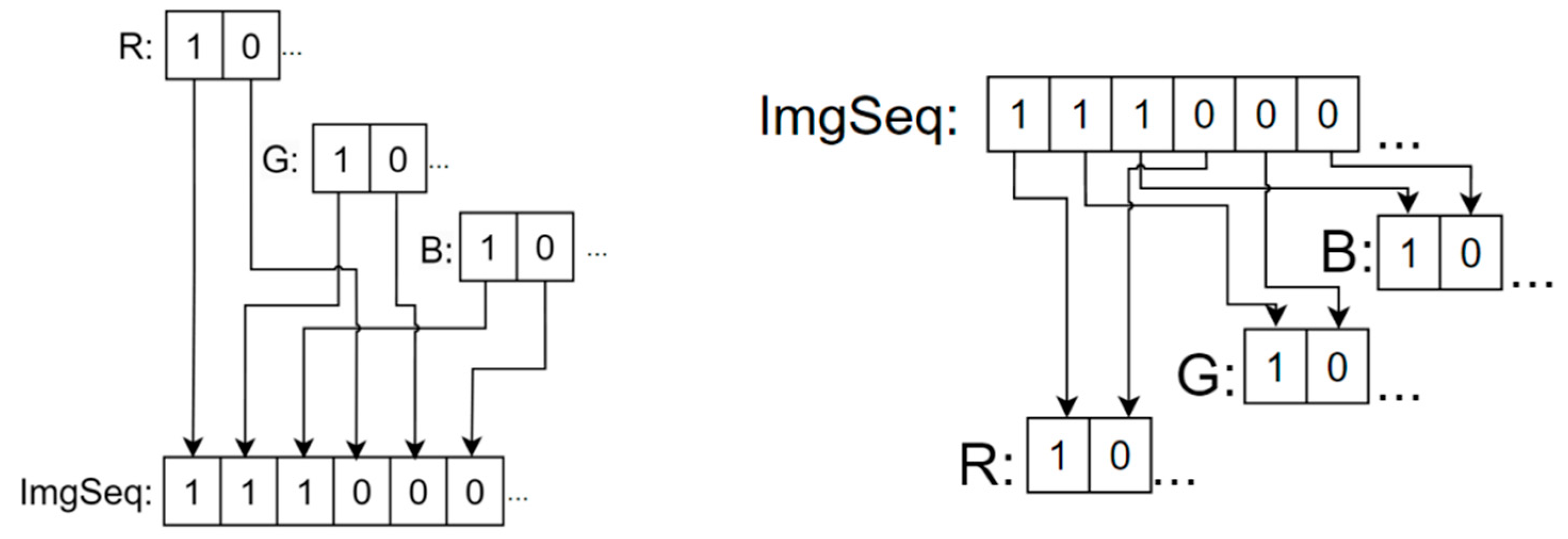

The “interleave” operation is shown in Figure 4 (left: “interleave”; right: “deinterleave”).

Then, convert back into a 3D image.
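The sort-based permutation described in this subsection can be sketched as follows. The integer keys stand in for the output of Equation (9) and are drawn here from a seeded NumPy generator purely for illustration; in the actual scheme they would come from the hyperchaotic sequence. A stable sort is used so that ties among the keys are resolved deterministically, which is an implementation choice rather than something the text specifies.

```python
import numpy as np

def permute_by_sorted_keys(pixels, keys):
    """Scramble a 1D pixel sequence using the ascending sort order of the keys."""
    order = np.argsort(keys, kind="stable")  # original index of each sorted element
    return pixels[order], order

def inverse_permute(scrambled, order):
    """Undo the permutation given the recorded sort indices."""
    restored = np.empty_like(scrambled)
    restored[order] = scrambled
    return restored

rng = np.random.default_rng(1)
pixels = rng.integers(0, 256, size=48, dtype=np.uint8)   # interleaved image, flattened
keys = rng.integers(0, 1001, size=pixels.size)           # stand-in for Equation (9) output
scrambled, order = permute_by_sorted_keys(pixels, keys)
print(np.array_equal(inverse_permute(scrambled, order), pixels))
```

Decryption regenerates the same key sequence from the same initial values, recomputes the sort order, and applies the inverse permutation.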

3.5. Intra-Block Diffusion

Adopting a divide and conquer strategy, the image is segmented into 2 × 2 pixel blocks, and dynamic address mapping is used as the basis for intra-block encryption. Intra-block diffusion is then performed on each pixel block.

Using the following method, first expand the pixel block, mark them as

–

in order, mark the current pixel as

, and then confirm the previous pixel

in the following way:

- Step 1:

Determine two mapping addresses as follows:

- Step 2:

Mark each bit

of

and perform the following operation:

- Step 3:

Exchange the first three bits of and the last three bits of . This operation strengthens the diffusion and prevents the case in which both mapping addresses point to bits with value 0, which would reduce the effectiveness of the XOR operation.

The index

is iterated from one to four, and for each pixel

in the block. Step 1, Step 2, and Step 3 are repeated and exchanged to complete the encryption within the entire block.

Figure 5 is a schematic diagram of the intra-block diffusion.

3.6. Joint Diffusion

Convert the remaining chaotic matrix into a one-dimensional sequence, denoted as

, using Equation (14) to convert it into an eight-bit unsigned integer, denoted as

with a length of

, and then perform the following operation on all members

of

, which

is a member of

:

Set the two flag bits, and to 1, pointing to the first bit of and , respectively. The length of is denoted as . And set the result array to . Next, proceed with the following steps:

- Step 1:

If the size of is greater than or equal to , then end the entire algorithm. If the difference between and is not greater than 4, record the difference as , execute , and then immediately end the algorithm. If it is greater, then take and (hereinafter referred to as ) to be the same as .

- Step 2:

Convert

as a binary number to an integer

and execute Case[X] and let

- Case [0]:

XOR

with

, as

where

refers to the bitwise XOR operation.

- Case [1]:

Take

and convert it into DNA base

according to rule 1 in

Table 1, take

and convert it into a DNA base according to rule 1 in

Table 1, denoted as

. Let

where

denotes the base XOR operation, performed according to the rules specified in

Table 2, and

represents the function that converts bases back into their binary bit sequences according to rule 1 in

Table 1.

- Case [2]:

Take

and convert it into an eight-base DNA base according to rule 1 in

Table 3, denoted as

. Take

and convert it into an eight-base DNA base according to rule 1 in

Table 3, denoted as

. Let

where

refers to base XOR operation, which is performed according to the method specified in

Table 3, and

represents the function that converts bases back into their binary bit sequences according to rule 1 in

Table 3.

- Case [3]:

Take , denoted as , and perform a new classification encryption according to the following method. For ease of expression, the form of ‘’ is used to distinguish, and execute Equation (15).

Then, execute .

- Casem [0]:

Take

, denoted as

, invert

bit by bit, denoted as

, and let

- Casem [1]:

Take

, denoted as integer

, and let

- Casem [2]:

Take

, denoted as integer

, and let

- Casem [3]:

Take , and take , and let

where

refers to the bitwise XOR operation.

Repeat Step 1 and Step 2 until the exceeds the .

After executing all processes, a one-dimensional bit sequence with a size equal to will be obtained. For each case analysis, encrypting 1 bit of on average requires 2.05 bits, with a maximum of 3 required. However, for the stability of the encryption algorithm, a sequence with three times the length of is used to ensure complete processing of all .

This process is the core of the encryption scheme: it encrypts segments of different lengths through a variable window, so a change at any position in the sequence leads to a completely different encryption result. In addition, owing to the randomness of the hyperchaotic system and its extreme sensitivity to initial values, combined with the different keys generated by SHA-256 for each image, even a slight change leads to a completely different encryption result.

Due to the slow speed of streaming encryption, a multi-threaded approach can be adopted to improve the encryption speed. Divide and into equal parts to perform sets of encryptions simultaneously. After actual testing, under the experimental conditions of this paper, the encryption speed is the fastest when .

3.7. Image Recombination

The one-dimensional bit sequence obtained by joint diffusion is recombined into a three-channel color image by bit deinterleaving to obtain the final encrypted image.

Figure 4 shows a schematic diagram of bit deinterleaving.

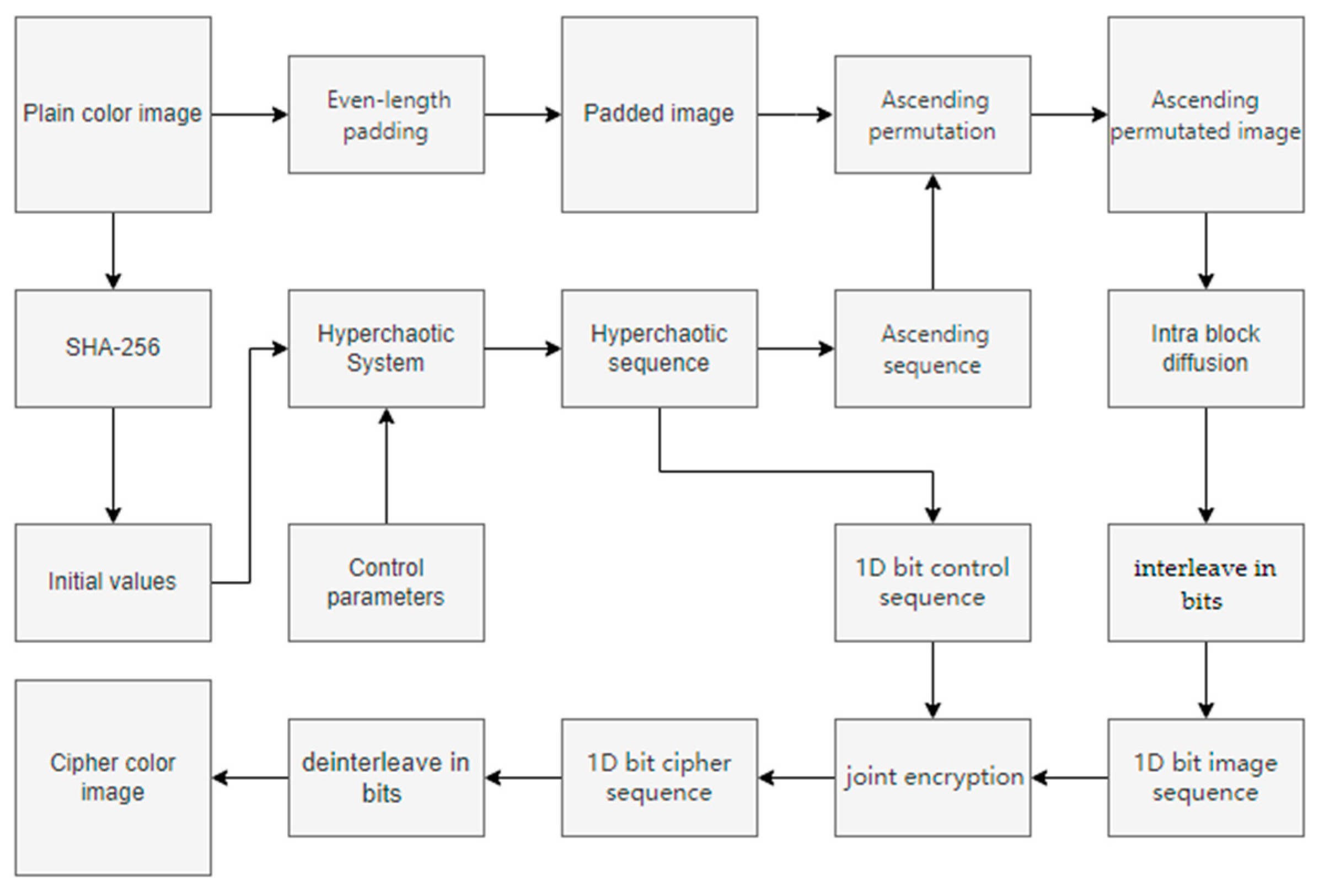

3.8. Encryption Method Framework of the Proposed PD5H

- Step 1:

Key generation: Generate initial values using SHA-256 hash values and custom constants, and combine them with control parameters to construct a chaotic system.

- Step 2:

Padding: Fill the image to an even number of rows and columns, making it easier for subsequent block encryption.

- Step 3:

Ascending Sort: Unfold the pixels into a one-dimensional sequence and scramble them in ascending order using a chaotic sequence.

- Step 4:

Intra-block diffusion: Divide the image into pixel blocks and use dynamic address mapping for intra-block encryption.

- Step 5:

Joint diffusion: Convert the image and chaotic sequence into one-dimensional bit sequences, and perform stream diffusion by combining four-base and eight-base DNA operations.

- Step 6:

Image Recombination: Recombine the encrypted bit sequence into a three-dimensional color image.

The schematic diagram of the encryption process is shown in Figure 6.

4. Experimental Results

4.1. Experimental Setup

The performance of the proposed PD5H was evaluated through a series of experiments and compared against other image encryption methods. The number of iterations for generating the hyperchaotic sequence was set to

, ensuring the sequence length is sufficient for encryption, where

and

represent the height and width of the original image, respectively. The test image sizes used in our experiments are listed in Table 8.

All experiments were conducted using MATLAB R2023a (Mathworks, Natick, MA, USA) on a PC with a 64-bit Windows 11 operating system (Microsoft, Redmond, WA, USA), a 2.40 GHz R9-7940HX CPU, and 32 GB RAM.

4.2. Key Analysis

The cryptographic key is a critical component for the security of any encryption method. To be considered secure, a key must exhibit two essential characteristics: a sufficiently large key space to prevent brute-force attacks and high key sensitivity to ensure that similar keys produce vastly different ciphertexts.

4.2.1. Key Space Analysis

A sufficiently large key space can effectively resist brute-force attacks. Research has shown that a key space larger than can effectively resist brute-force attacks. The key in this article consists of two parts: the SHA-256 value of the image and a custom constant . The length of the key is , which should have a large key space of , but as we consider the issue of equivalent keys, the key space has decreased to. It is still much larger than , so it can effectively resist brute-force attacks. Meanwhile, our key is composed of two different parts, greatly enhancing the algorithm’s resistance to brute-force cryptanalysis.

4.2.2. Key Sensitivity Analysis

Key sensitivity is a fundamental security criterion for encryption algorithms, measuring the effect of minute key alterations on the corresponding ciphertext. In a key-sensitive system, even a single-bit change in the key should produce a completely different ciphertext when applied to the same plaintext, thereby preventing statistical analysis or key deduction based on similar encryption outcomes.

The key used in this article is divided into two parts, and there are significant differences between them. Therefore, we divided the testing into two experiments.

This article uses SHA-256 to generate the key corresponding to each image, so a different sensitivity test is adopted for this part of the key, which is to use a new key,

that is one bit different from the original key,

for the cipher image, which is as follows:

The ciphertext encrypted with the original key is then decrypted using this slightly modified

to evaluate the sensitivity of the decryption process to minute key alterations, as shown in Figure 7.

NPCR (Number of Pixels Change Rate) is a quantitative measure used to evaluate the sensitivity of an encryption algorithm. It represents the proportion of changed pixels to the total number of pixels after encryption, under a minimal change in the key or plaintext. The calculation is given by Equation (24):

where

is the equal relationship between the pixels at the positions of two images (

i,

j), where 0 represents the same, and 1 represents different.

UACI (Unified Average Changing Intensity) measures the average intensity difference between corresponding pixels in two encrypted images. It serves as a standard benchmark for evaluating the effectiveness of an encryption algorithm, with a widely referenced ideal value of approximately 33.4%. The calculation is given by Equation (25):

where

X is the pixel value at position (

i,

j) in image 1.
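Both metrics are straightforward to compute. The sketch below follows the standard definitions for 8-bit images: NPCR is the percentage of positions at which the two images differ, and UACI is the mean absolute difference normalized by 255 and expressed as a percentage. The function names are illustrative and are not taken from the paper.

```python
import numpy as np

def npcr(c1, c2):
    """Number of Pixels Change Rate, in percent."""
    c1, c2 = np.asarray(c1), np.asarray(c2)
    return 100.0 * float(np.mean(c1 != c2))

def uaci(c1, c2):
    """Unified Average Changing Intensity for 8-bit images, in percent."""
    a = np.asarray(c1, dtype=np.float64)
    b = np.asarray(c2, dtype=np.float64)
    return 100.0 * float(np.mean(np.abs(a - b) / 255.0))

rng = np.random.default_rng(2)
x = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
y = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
print(npcr(x, y), uaci(x, y))
```

For two independent, uniformly random 8-bit images, the expected values are about 99.61% for NPCR and 33.46% for UACI, which is where the commonly quoted ideal values come from.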

We change the custom constant C by one bit and then construct a new key to record different encryption results. The results are shown in rows 3 and 4 of

Table 9.

Taking into account the key sensitivity of both parts, we can draw the following conclusion: even when only a single bit of the key is altered, the decrypted image becomes entirely unrecognizable. The NPCR value approaches the ideal benchmark of 99.6%, while the UACI, though slightly below its ideal value, remains sufficiently high. These results demonstrate that the PD5H algorithm exhibits strong key sensitivity. From the attacker’s perspective, the actual performance is lower than the highest value, but it can still resist most attacks.

4.3. Statistical Analysis

In the field of image encryption, statistical analysis refers to the use of mathematical tools to evaluate the statistical properties of encrypted images in order to determine whether they can effectively conceal the statistical patterns of the original image. A robust encryption method must demonstrate strong resistance against statistical analysis, including attacks based on histogram analysis, information entropy analysis, and correlation analysis.

4.3.1. Histogram Analysis

Histogram analysis characterizes an image by counting the frequency of each pixel value, thereby revealing its statistical features. Attackers often use histogram analysis to infer encryption methods or deduce key information. An effectively encrypted image should exhibit a fairly uniform histogram to better conceal the features of the original image.

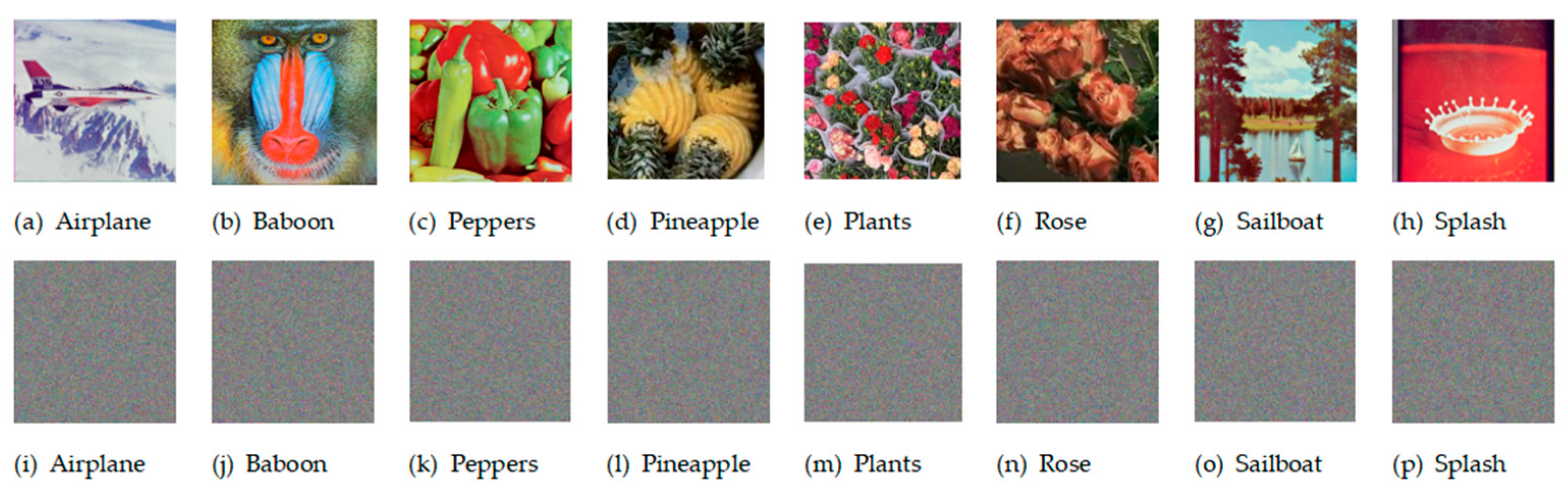

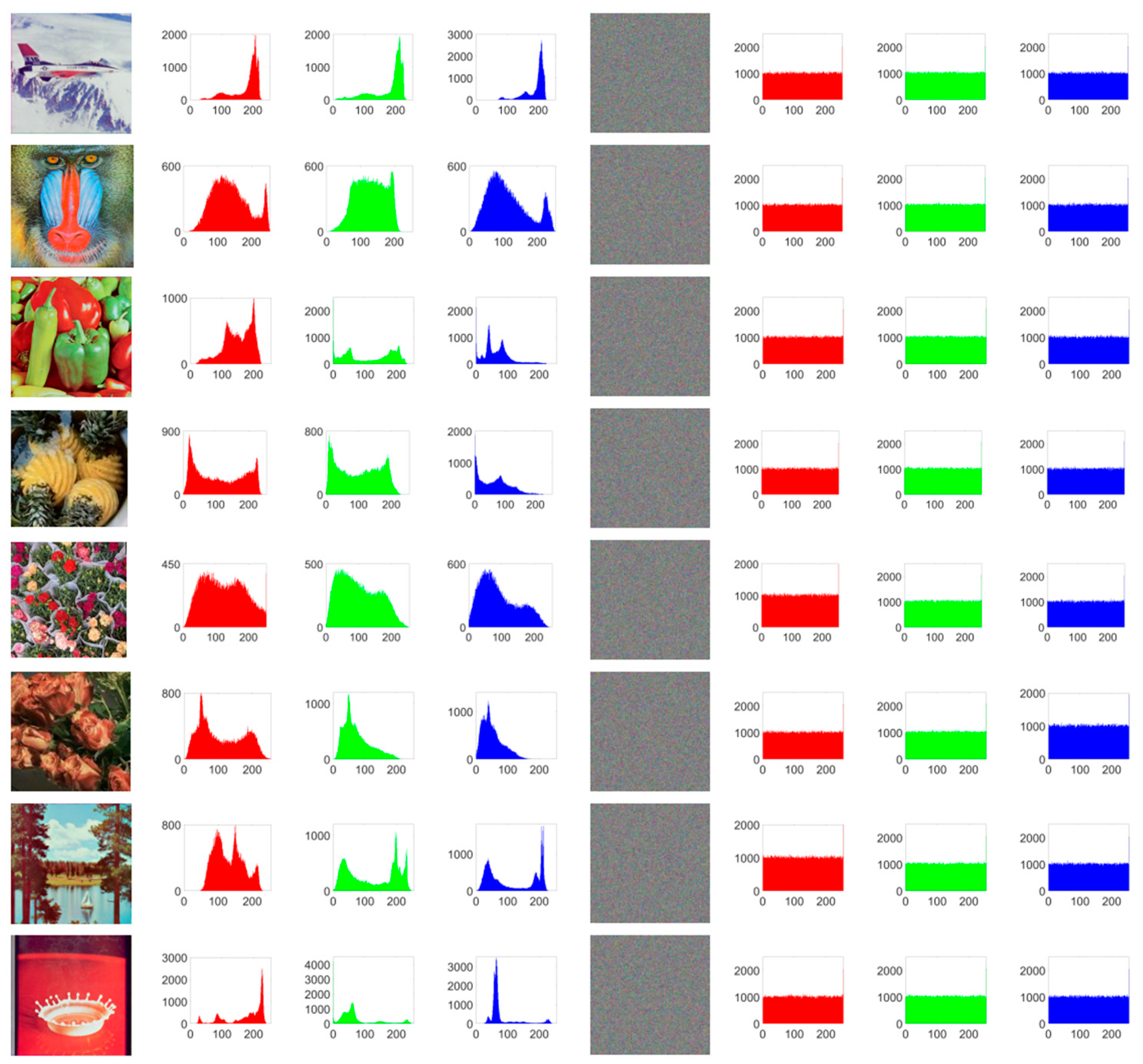

Figure 8 shows the original image, the encrypted image, and their respective histograms.

As can be observed from Figure 8, the histograms of different original images exhibit distinct and strong features, whereas the histograms of each channel in the encrypted image are nearly uniform, making it difficult for attackers to extract meaningful information from them. It can be seen that PD5H can effectively resist histogram attacks.

4.3.2. Information Entropy

In 1948, American scientist Claude E. Shannon introduced the concept of information entropy as a mathematical measure of uncertainty in information. This metric has since been extensively adopted in image processing. For an encrypted image, a desirable information entropy value should be close to eight, reflecting a high degree of randomness resembling high-frequency noise.

For a channel $C$ with $L$ gray levels, let $p(c_i)$ denote the probability that a pixel takes the gray value $c_i$. The information entropy $EI(C)$ of the channel can then be calculated by Equation (26):

$$EI(C) = -\sum_{i=0}^{L-1} p(c_i)\,\log_2 p(c_i) \tag{26}$$
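The entropy computation can be sketched in a few lines. The example below is a minimal illustration that assumes the channel is available as a flat list of integer gray values; the function name `channel_entropy` is hypothetical. It applies the standard Shannon formula, so a channel in which all 256 gray values are equally frequent yields 8 bits and a constant channel yields 0 bits.

```python
import math
from collections import Counter


def channel_entropy(pixels):
    """Shannon entropy in bits of one channel given as a flat list of gray values."""
    pixels = list(pixels)
    total = len(pixels)
    entropy = 0.0
    for count in Counter(pixels).values():
        p = count / total              # empirical probability of this gray value
        entropy -= p * math.log2(p)    # gray values that never occur contribute nothing
    return entropy


# Synthetic data only: every gray value equally frequent, and a constant channel.
uniform_channel = list(range(256)) * 4
constant_channel = [128] * 1024

print(channel_entropy(uniform_channel))   # upper bound for 256 gray levels
print(channel_entropy(constant_channel))  # no uncertainty at all
```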

Table 9 presents a comparison of information entropy between original and encrypted images using PD5H and several other encryption methods.

As shown in the table, the information entropy of original images typically ranges between 6.5 and 7.5. Among these, the image “Plants” exhibits relatively high entropy owing to its rich detail. In contrast, the encrypted images consistently achieve information entropy values above 7.999 in each channel, indicating that the cipher images approximate the statistical characteristics of high-frequency noise and are difficult to distinguish from random data.

When compared with five other encryption methods, PD5H achieved the highest information entropy in a total of six test cases, demonstrating its superior encryption performance.

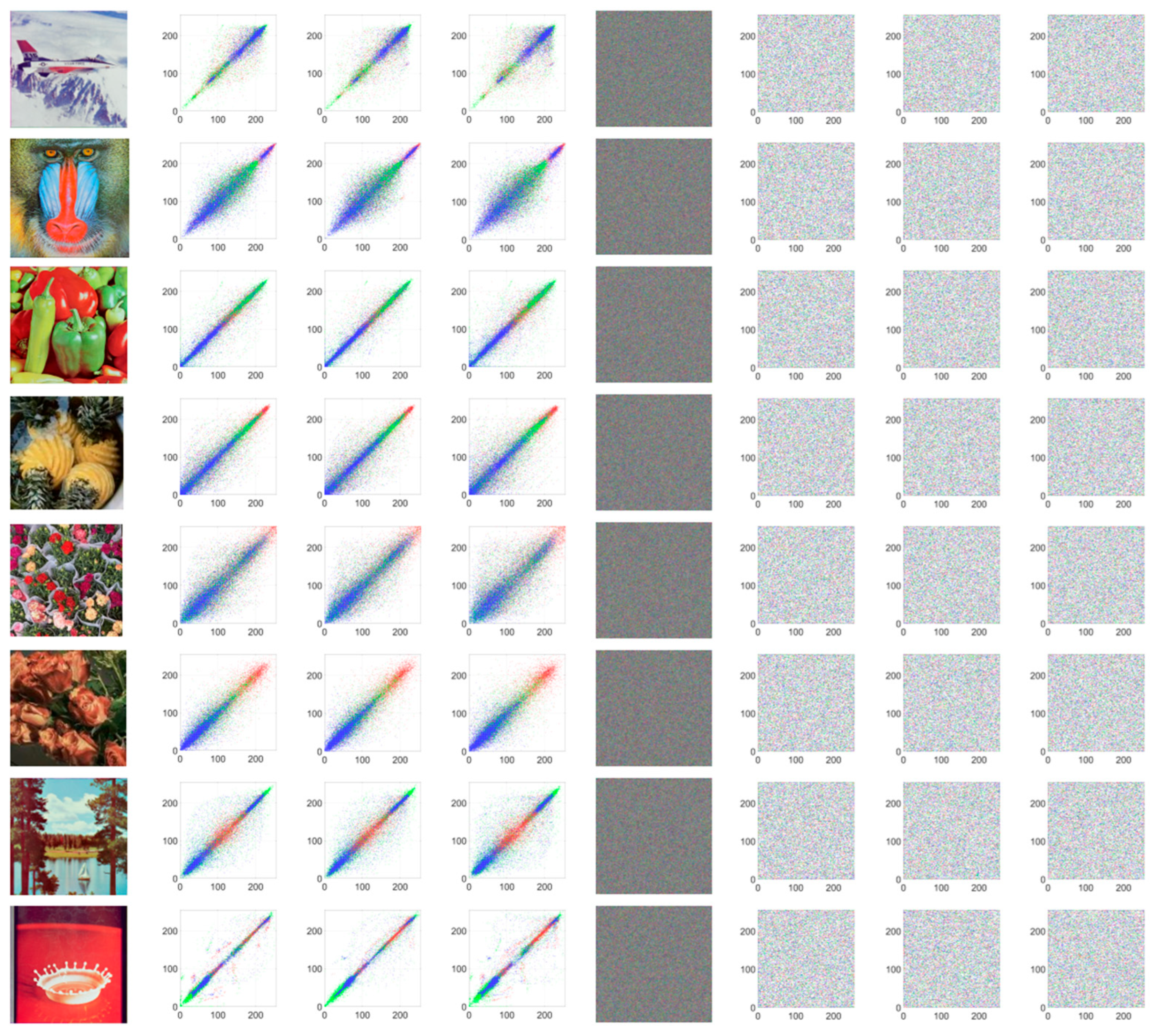

4.3.3. Correlation Analysis

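Correlation analysis evaluates the degree of association between adjacent pixels in an image. To measure the correlation between pixels in an image in various directions, the correlation coefficient $\gamma$ is defined by Equation (27):

$$
\begin{aligned}
\gamma &= \frac{\operatorname{cov}(x, y)}{\sigma_x\,\sigma_y}, \\
E(x) &= \frac{1}{N}\sum_{i=1}^{N} x_i, \\
\sigma_x &= \sqrt{\frac{1}{N}\sum_{i=1}^{N}\bigl(x_i - E(x)\bigr)^2}, \\
\operatorname{cov}(x, y) &= \frac{1}{N}\sum_{i=1}^{N}\bigl(x_i - E(x)\bigr)\bigl(y_i - E(y)\bigr)
\end{aligned}
\tag{27}
$$

In this equation, $x$ and $y$ are the gray levels of two adjacent pixels in the auxiliary image channel; $N$ is the total number of pixel pairs; and $E(x)$, $\sigma_x$, and $\operatorname{cov}(x, y)$ represent the expected value of $x$, the standard deviation of $x$, and the covariance of $x$ and $y$.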

In natural images, pixels typically exhibit strong correlations due to structural and semantic continuity. Effective encryption, however, should significantly reduce these correlations, making the encrypted image resemble random noise.
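The adjacent-pixel correlation can be illustrated with the following minimal sketch. It assumes that a channel is stored as a list of rows and that every adjacent pair in the chosen direction is used; the sampling strategy of the original experiments is not stated here, so this is an assumption, and the function names are hypothetical. The smooth gradient stands in for a natural image and the random channel stands in for a cipher image.

```python
import math
import random


def adjacent_pairs(channel, direction="horizontal"):
    """Collect gray levels (x, y) of all adjacent pixel pairs in a 2-D channel."""
    offsets = {"horizontal": (0, 1), "vertical": (1, 0), "diagonal": (1, 1)}
    dr, dc = offsets[direction]
    rows, cols = len(channel), len(channel[0])
    xs, ys = [], []
    for r in range(rows - dr):
        for c in range(cols - dc):
            xs.append(channel[r][c])
            ys.append(channel[r + dr][c + dc])
    return xs, ys


def correlation(xs, ys):
    """Correlation coefficient: covariance divided by both standard deviations."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs) / n)
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys) / n)
    if std_x == 0 or std_y == 0:
        return 0.0  # undefined for a constant channel; reported as 0 in this sketch
    return cov / (std_x * std_y)


rng = random.Random(1)
gradient = [[r + c for c in range(64)] for r in range(64)]             # smooth "natural" stand-in
noise = [[rng.randrange(256) for _ in range(64)] for _ in range(64)]   # cipher-like stand-in

for name, channel in (("gradient", gradient), ("noise", noise)):
    for direction in ("horizontal", "vertical", "diagonal"):
        gamma = correlation(*adjacent_pairs(channel, direction))
        print(f"{name:8s} {direction:10s} {gamma:+.4f}")
```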

Table 10 displays the correlation coefficients for each channel of both the original and encrypted images.

Bold items indicate the highest information entropy among the compared encryption methods for the same image and channel.

The original image shows high correlation, with pixel values clustering closely along a diagonal line in a scatter plot. In contrast, the encrypted image exhibits a scattered and disordered distribution, indicating drastically reduced inter-pixel dependency. The correlation coefficients across different channels in the encrypted image are all below 0.005, demonstrating the absence of significant linear relationships within or across color dimensions.

A total of 45 correlation measurements, covering all three channels and multiple directional relationships across five test images, were analyzed. Among these, PD5H achieved the lowest correlation values in 16 cases, outperforming the best results from other encryption methods in 14 comparisons. These results, shown in Figure 9 and Table 11, confirm that PD5H effectively disrupts pixel correlations and enhances security against statistical attacks.

In Table 11, bold items indicate the lowest correlation among the compared encryption methods for the same image and channel.

4.4. Differential Attack Analysis

Differential cryptanalysis is a common attack method in which an adversary encrypts two plaintext images with minimal differences and compares the resulting ciphertexts to deduce information about the encryption algorithm or the key. An encryption scheme is considered vulnerable if small changes in the input image lead to only minor changes in the ciphertext. Conversely, a secure encryption method should produce significantly different ciphertexts even when the plaintexts differ by only one pixel.

To evaluate resistance against differential attacks, a one-bit change was introduced into a single pixel of the original image. The modified image was then encrypted, and the resulting ciphertext was compared with the ciphertext of the unmodified original. The NPCR and UACI values were calculated and are presented in Table 12 and Table 13.

The results show that even after only a one-bit alteration in the plaintext, the NPCR and UACI values of the ciphertext are close to their ideal theoretical values. This indicates that the encryption method produces drastically different outputs for minimally different inputs, demonstrating strong resistance against differential cryptanalysis.
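For reference, the two metrics can be computed as in the following minimal sketch, which uses the conventional definitions of NPCR (percentage of positions whose values differ) and UACI (mean absolute intensity difference relative to the maximum gray level). It assumes that the two cipher images are given as flat lists of 8-bit values; the function name `npcr_uaci` is hypothetical. Two independent random sequences stand in for the two ciphertexts, since for 8-bit data such sequences yield values near the commonly cited ideals of about 99.61% and 33.46%.

```python
import random


def npcr_uaci(cipher_a, cipher_b, max_level=255):
    """Return (NPCR, UACI) in percent for two equally sized flat cipher images."""
    if not cipher_a or len(cipher_a) != len(cipher_b):
        raise ValueError("cipher images must be non-empty and of equal size")
    n = len(cipher_a)
    changed = sum(1 for a, b in zip(cipher_a, cipher_b) if a != b)
    total_difference = sum(abs(a - b) for a, b in zip(cipher_a, cipher_b))
    npcr = 100.0 * changed / n
    uaci = 100.0 * total_difference / (max_level * n)
    return npcr, uaci


# Synthetic stand-ins for the two ciphertexts; no real encryption is performed here.
rng = random.Random(0)
cipher_1 = [rng.randrange(256) for _ in range(256 * 256)]
cipher_2 = [rng.randrange(256) for _ in range(256 * 256)]

npcr, uaci = npcr_uaci(cipher_1, cipher_2)
print(f"NPCR = {npcr:.4f}%, UACI = {uaci:.4f}%")
```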

4.5. Robustness Analysis

During the storage and transmission of digital images, data corruption and noise interference are inevitable challenges. A robust encryption algorithm should, therefore, maintain both security and decipherability under such adverse conditions, demonstrating resistance to both noise contamination and data loss. This capability ensures the encrypted content remains usable even after common transmission errors or storage defects.

Data storage and transmission are susceptible to media damage, which may result in noise. We introduce different proportions of salt-and-pepper noise into encrypted images and observe the effect on decryption. The decrypted images with 1%, 2.5%, 5%, and 10% salt-and-pepper noise added are shown in Figure 10.

As can be seen, the visible noise in the decrypted image increases with the proportion of noise added, but the subject of the image remains recognizable. Therefore, PD5H has a strong ability to resist noise.
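The noise-injection step of such an experiment can be sketched as follows. This is a minimal illustration that assumes the cipher image is a flat list of 8-bit values and that exactly the stated proportion of pixels is replaced by 0 or 255 with equal probability; the function name `add_salt_and_pepper` and the seeding are assumptions. Decryption with PD5H is not reproduced here.

```python
import random


def add_salt_and_pepper(pixels, density, seed=None):
    """Replace a fraction `density` of the pixels with 0 (pepper) or 255 (salt)."""
    rng = random.Random(seed)
    noisy = list(pixels)
    n_corrupt = round(density * len(noisy))
    for index in rng.sample(range(len(noisy)), n_corrupt):
        noisy[index] = rng.choice((0, 255))
    return noisy


# Synthetic stand-in for a cipher image; mid-gray makes every corrupted pixel visible.
cipher = [128] * 10_000

for density in (0.01, 0.025, 0.05, 0.10):
    noisy = add_salt_and_pepper(cipher, density, seed=42)
    corrupted = sum(1 for a, b in zip(cipher, noisy) if a != b)
    print(f"density {density:.3f}: {corrupted} corrupted pixels")
```

The noisy list would then be passed to the decryption routine and the result compared visually with the original image.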

Figure 11 shows images decrypted from encrypted images with data losses of 6.25%, 12.5%, 25%, and 50%. To investigate how the position of a cutting attack affects the robustness of the encryption algorithm, we also cut 25% of the image at different positions and restored it. The results are shown in Figure 12. Even when 50% of the encrypted image is lost, the restored image retains some practical value and its main content can still be distinguished. Different cutting positions do not cause significant changes in the quality of the decrypted images. PD5H therefore has a high ability to resist cutting attacks.
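A data-loss experiment of this kind can be simulated as in the sketch below. It assumes that the lost region is a rectangle whose pixels are set to zero, which is a common convention but is not stated in the text above; the function name `crop_attack` and the chosen positions are hypothetical.

```python
def crop_attack(channel, top, left, height, width):
    """Return a copy of a 2-D channel with a rectangular block set to zero."""
    damaged = [row[:] for row in channel]
    for r in range(top, min(top + height, len(damaged))):
        for c in range(left, min(left + width, len(damaged[0]))):
            damaged[r][c] = 0
    return damaged


# Synthetic stand-in for one 64 x 64 cipher channel.
cipher = [[200] * 64 for _ in range(64)]

# A 32 x 32 block is 25% of the channel; three example positions.
positions = {"top-left": (0, 0), "center": (16, 16), "bottom-right": (32, 32)}
for name, (top, left) in positions.items():
    damaged = crop_attack(cipher, top, left, 32, 32)
    lost = sum(row.count(0) for row in damaged)
    print(f"{name}: {100 * lost / (64 * 64):.2f}% of pixels lost")
```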

4.6. Time Analysis

The encryption time measures the efficiency of the algorithm and affects the feasibility of real-time applications. The decryption time directly determines the recovery speed of data availability. The two together reflect the performance and practicality of encryption schemes in practical deployment, and are key indicators for measuring their comprehensive value.

Table 14 reports the average time PD5H takes to encrypt and decrypt images of different sizes, with each measurement averaged over 64 runs.

Because the stream-cipher design cannot be accelerated using matrix operations, multithreading, or similar optimizations, the overall encryption speed is slow, but it remains within an acceptable range.
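The averaging procedure can be sketched as follows. Since the PD5H implementation is not reproduced here, a trivial byte-wise XOR function (`toy_stream_cipher`, a hypothetical stand-in) takes its place; the measured times therefore say nothing about PD5H itself and only illustrate how a 64-run average per image size might be obtained.

```python
import time


def average_runtime(func, data, runs=64):
    """Mean wall-clock time in seconds of func(data) over `runs` calls."""
    start = time.perf_counter()
    for _ in range(runs):
        func(data)
    return (time.perf_counter() - start) / runs


def toy_stream_cipher(data, key_byte=0x5A):
    """Hypothetical stand-in for PD5H: XOR every byte with a fixed value."""
    return bytes(b ^ key_byte for b in data)


for side in (32, 64, 128):
    image = bytes(side * side * 3)  # blank RGB image with side x side pixels
    seconds = average_runtime(toy_stream_cipher, image)
    print(f"{side}x{side}: {seconds * 1000:.3f} ms per run")
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short durations.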

5. Conclusions

This study presents an improved five-dimensional chaotic system characterized by a greater number of positive Lyapunov exponents, a higher maximum Lyapunov exponent, and an increased Lyapunov exponent dimension. These improvements indicate that the chaotic performance of the improved system is significantly better than that of the original system.

A novel image encryption scheme is proposed based on this system, incorporating several innovative features: a new padding method for encrypted images, a block-based encryption method that utilizes the intrinsic information of pixel blocks, and a joint diffusion mechanism that operates on a bit-level basis by integrating four-base and eight-base DNA operations after integer shaping of the image into a bitstream.

PD5H has a large key space, extremely low image correlation, a uniform ciphertext pixel distribution, an excellent ciphertext entropy value (>7.999), and strong resistance to differential and statistical attacks. It also demonstrates strong resistance to data loss. However, this encryption scheme has certain limitations: the encryption time is relatively long, and key sensitivity remains slightly inadequate.

Due to the unique characteristics of stream encryption, PD5H has inherent advantages in terms of software and hardware equivalence and can be applied to platforms with low computing power. PD5H may also be applied in the field of communication, where it can serve as a reference for the transmission and encryption of various data carriers, such as images, video, and audio. In this way, encryption algorithms need not be limited to mathematical research but can be applied in communication, storage, and other fields.