1. Introduction

Learning algorithms pervade modern science. Machine learning improves neural networks through gradient descent. Evolution improves organisms through natural selection. Bayesian inference improves beliefs through probability updates. Despite decades of research in each field, the ultimate relations between these approaches remain unclear.

This article shows that a single force–metric–bias (FMB) law captures the essential structure of algorithmic learning and natural selection. Improvement arises from three components: force, typically expressed by the performance gradient; metric, typically expressed by inverse curvature; and bias, which includes momentum and other changes in the frame of reference. This structure emerges naturally from the Price equation, a simple notational description for the partitioning of change into components [1,2,3].

Consider how the following two connections arise naturally within this framework. First, the primary equation in evolutionary biology [4], $\Delta\bar{\mathbf{z}} = \mathbf{G}\boldsymbol{\beta}$, and Newton’s method [5] in optimization, $\Delta\boldsymbol{\theta} = -\mathbf{H}^{-1}\nabla U$, are mathematically analogous. Both describe one step of change by multiplying a gradient-like force, $\boldsymbol{\beta}$ or $\nabla U$, by evolution’s covariance matrix, $\mathbf{G}$, or Newton’s inverse Hessian, $-\mathbf{H}^{-1}$, each serving the same metric role of rescaling geometry by inverse curvature.

Second, machine learning algorithms are used to improve the performance of neural networks or other methods of prediction. The progression between a few common algorithms illustrates the FMB decomposition. Stochastic gradient descent [6] uses force, $\mathbf{f}$. Polyak [7] adds a momentum bias, $\mathbf{f} + \mathbf{b}$. Adam [8] includes adaptive metric scaling, $\mathbf{M}$. Adam’s full structure, $\mathbf{M}\mathbf{f} + \mathbf{b}$, is the same as evolution’s primary equation and Newton optimization, with an additional momentum bias term that often improves performance.
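The progression from force alone, to force plus momentum, to force plus momentum with adaptive rescaling can be sketched in a few lines. The example below is only an illustration under stated assumptions: the toy quadratic performance function `U`, the matrix `A`, the step sizes, and the function names are all hypothetical, and the gradient is exact rather than sampled, so `run_sgd` is the deterministic analog of stochastic gradient descent. Updates ascend `U`, following the maximization convention of this article.

```python
import numpy as np

A = np.diag([1.0, 10.0])  # hypothetical curvature of a toy problem


def U(theta):
    """Toy performance function to maximize, with its peak at theta = 0."""
    return -0.5 * theta @ A @ theta


def grad_U(theta):
    """Force: gradient of performance with respect to the parameters."""
    return -A @ theta


def run_sgd(theta, steps=300, lr=0.05):
    for _ in range(steps):
        theta = theta + lr * grad_U(theta)  # force only
    return theta


def run_polyak(theta, steps=300, lr=0.05, mu=0.9):
    velocity = np.zeros_like(theta)
    for _ in range(steps):
        # The retained velocity acts as a momentum bias added to the force.
        velocity = mu * velocity + lr * grad_U(theta)
        theta = theta + velocity
    return theta


def run_adam(theta, steps=300, lr=0.05, b1=0.9, b2=0.999, eps=1e-8):
    m1 = np.zeros_like(theta)  # running mean of gradients (momentum)
    v = np.zeros_like(theta)   # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_U(theta)
        m1 = b1 * m1 + (1 - b1) * g
        v = b2 * v + (1 - b2) * g**2
        m_hat = m1 / (1 - b1**t)  # correct the startup bias of the averages
        v_hat = v / (1 - b2**t)
        # Division by sqrt(v_hat) rescales each coordinate (a diagonal metric).
        theta = theta + lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta


start = np.array([1.0, 1.0])
results = {
    "sgd": run_sgd(start.copy()),
    "polyak": run_polyak(start.copy()),
    "adam": run_adam(start.copy()),
}
for name, theta in results.items():
    print(name, theta, U(theta))
```

All three runs improve performance relative to the starting point; they differ in which of the force, bias, and metric components they use.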

These connections reflect a deeper geometric principle. Many learning algorithms face the same fundamental challenge: maximize improvement in performance minus a cost paid for distance moved in the parameter space. Here, we must account for two aspects of geometry. First, there may be a curved relation between parameters and performance. Second, constraints on movement in the parameter space induce a metric that alters how distance is measured. For example, a lack of genetic variation in a particular direction constrains movement in that direction.

The optimal solution is the product of the force and the inverse curvature metric. Different fields have discovered this same result within specific contexts. Here, we see it in its full simplicity and generality, providing a reason for the recurring role of Fisher information as a curvature metric in probability contexts and inverse Hessian metrics in local geometry contexts.

The geometric structure of learning has been partially recognized in prior work. Fisher [9] and Rao [10] established that statistical parameter spaces can have intrinsic curvature. Amari [11] used this insight to develop natural gradient methods.

In evolutionary biology, Shahshahani [12] applied differential geometry to the dynamics of natural selection, introducing metric concepts to evolutionary theory. Newton’s method uses curvature to improve stepwise updates. Machine learning algorithms are often designed to estimate local curvature in an efficient way.

These insights about geometry, force, momentum, and bias remained confined to their domains. The full simplicity and universality of algorithmic learning have not been expressed in a clear and formal way. This article demonstrates the underlying unity, revealing the simple mathematical law that governs learning processes.

2. The Force–Metric–Bias Law

2.1. Statement of the FMB Law

I first state the FMB law. The following subsection derives the law and clarifies the notation.

This law is not an empirical hypothesis but rather a universal mathematical structure that underlies learning or selection. The law is

$$\Delta\bar{\boldsymbol{\theta}} = \mathbf{M}\mathbf{f} + \mathbf{b} + \boldsymbol{\xi}. \tag{1}$$

Here, $\bar{\boldsymbol{\theta}}$ denotes a vector of $n$ mean parameter values that is updated by learning, optimization, or natural selection. The law also applies to updates of a single parameter vector, $\boldsymbol{\theta}$, instead of mean values. Here, parameters are values of any sort. In biology, we call them traits.

The matrix $\mathbf{M}$ describes a metric, which typically expresses the inverse curvature of the parameter space and the rescaling of distances. The nature of the metric varies in different algorithms, as discussed below. Throughout this article, metric matrices that properly rescale distances are positive definite. When a matrix is not positive definite, algorithms typically modify it or use alternative metrics to ensure valid updates.

The force vector $\mathbf{f}$ often includes the gradient of the performance function $U$ with respect to the parameters $\boldsymbol{\theta}$. In general, the force vector describes processes that push toward increased performance or constrain such an increase.

The bias vector, $\mathbf{b}$, includes processes such as parameter momentum or change in frame of reference. These processes alter parameters in addition to the standard directly acting forces imposed by performance or constraint. The standard form of bias is

$$\mathbf{b} = \mathbf{B}\mathbf{f}_b + \mathbf{b}_0,$$

in which $\mathbf{B}$ describes a bias metric, $\mathbf{f}_b$ is the slope of performance with respect to biased parameter changes, and $\mathbf{b}_0$ is the bias that is independent of performance. Most algorithms follow this pattern for bias, modifying specific terms according to particular learning goals.

The noise vector, $\boldsymbol{\xi}$, has a mean of zero. Commonly, we partition the noise into a metric term and a simple noise-generating process. For example, many algorithms use some variant of

$$\boldsymbol{\xi} = \mathbf{D}\boldsymbol{\eta},$$

in which $\mathbf{D}$ is a metric that reshapes the noise, and $\boldsymbol{\eta}$ is a basic noise process such as a Gaussian with a mean of zero and a standard deviation of one.

The following derivation of the FMB law reveals further key distinctions between directly acting forces, $\mathbf{f}$, and bias, $\mathbf{b}$.

2.2. Derivation from the Price Equation

The generality of the FMB law arises from simple notational descriptions of change. This subsection describes the key steps. Note that, at first glance, the definition of terms in the FMB law may not seem to match many common learning algorithms, such as stochastic gradient descent. Later sections make the connections.

A subsequent section shows that the same simple approach also leads to common methods and measures that frequently arise in learning algorithms, such as Fisher information, Kullback–Leibler divergence, and information geometry. This article ties these pieces together.

(1) We begin with the Price equation, a universal expression for change. A probability vector $\mathbf{q}$ of length $m$ sums to one. Each $q_i$ weights an alternative parameter vector, $\boldsymbol{\theta}_i$, with each parameter vector of length $n$. The symbol $\boldsymbol{\theta}$ without subscript denotes the $m$-vector of alternative $\boldsymbol{\theta}_i$, each parameter vector associated with a probability $q_i$.

To begin, assume that we have only one parameter, $n = 1$, with $m$ variant values. Later, I show that the same approach works for $n > 1$, with notation extended for vectors and matrices.

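An update to the mean parameter value over the $m$ variants is $\Delta\bar{\theta} = \mathbf{q}'\cdot\boldsymbol{\theta}' - \mathbf{q}\cdot\boldsymbol{\theta}$, in which the dots denote inner products, and $\Delta\boldsymbol{\theta} = \boldsymbol{\theta}' - \boldsymbol{\theta}$ is the difference between an updated primed value and the original value. Rearranging yields the Price equation [3]

$$\Delta\bar{\theta} = \Delta\mathbf{q}\cdot\boldsymbol{\theta} + \mathbf{q}'\cdot\Delta\boldsymbol{\theta}. \tag{3}$$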

This equation is simply the definition of change in mean value, rearranged into a chain rule analog for finite differences rather than infinitesimal derivatives. The first term is the change in frequencies holding the parameters constant. The second term is the change in parameter values holding frequencies at their fixed updated values.
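Because the partition is an identity, it can be checked numerically for arbitrary frequencies and values. The following minimal sketch draws random inputs (the variable names and the random draws are illustrative assumptions, not part of the original article) and confirms that the frequency-change term and the value-change term sum to the total change in the mean. It also confirms the equivalent covariance and expectation forms, using relative growth defined as updated frequency divided by initial frequency.

```python
import numpy as np

rng = np.random.default_rng(0)
m = 5                                      # number of variant types
q = rng.dirichlet(np.ones(m))              # initial frequencies, sum to one
q_new = rng.dirichlet(np.ones(m))          # updated frequencies, sum to one
theta = rng.normal(size=m)                 # parameter value of each type
theta_new = theta + rng.normal(scale=0.1, size=m)  # updated values

total = q_new @ theta_new - q @ theta      # change in the mean value
freq_term = (q_new - q) @ theta            # frequencies change, values held fixed
value_term = q_new @ (theta_new - theta)   # values change, updated frequencies fixed

# Equivalent forms using relative growth w = q'/q, which has mean one.
w = q_new / q
cov_term = q @ (w * theta) - (q @ w) * (q @ theta)  # Cov(w, theta)
exp_term = q @ (w * (theta_new - theta))            # E(w * delta theta)

print(total, freq_term + value_term, cov_term + exp_term)
```

The three printed numbers agree to floating-point precision, whatever the random inputs.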

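(2) Define $w_i$ as the relative growth of the $i$th type, $w_i = q_i'/q_i$, such that

$$q_i' = q_i w_i, \qquad \Delta q_i = q_i\,(w_i - 1). \tag{4}$$

In biology, $w_i$ is called the relative fitness of the $i$th type, describing how survival and reproduction alter the frequencies of the types, with $\bar{w} = \mathbf{q}\cdot\mathbf{w} = 1$.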

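By the standard definition of covariance, and because $\bar{w} = 1$, $\Delta\mathbf{q}\cdot\boldsymbol{\theta} = \sum_i q_i (w_i - 1)\theta_i = \mathrm{Cov}(w, \theta)$, and by the standard definition of expectation, $\mathbf{q}'\cdot\Delta\boldsymbol{\theta} = \sum_i q_i w_i \Delta\theta_i = \mathrm{E}(w\,\Delta\theta)$. With these definitions, the Price equation can be rewritten as [2]

$$\Delta\bar{\theta} = \mathrm{Cov}(w, \theta) + \mathrm{E}(w\,\Delta\theta).$$

These forms of the Price equation are simply notational descriptions for change [3]. We have not assumed anything about the nature of the values or how they change. We have assumed that $w_i = q_i'/q_i$ always describes actual frequency changes.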

If the performance function, $U$, subsumes all of the forces that act on frequency change in a particular time period, then $w_i$ is the performance of the $i$th type, $U_i$, normalized to $\bar{U} = 1$ for notational simplicity and without loss of generality,

$$w_i = \frac{U_i}{\bar{U}} = U_i,$$

in which fitness and relative performance are equivalent descriptions of actual change.

In some cases, the forces acting on frequency change are composed of several distinct processes. One component may arise from a performance function, $U$. Another component may act as a constraining force that prevents frequency changes from following the forces imposed by $U$. Then frequency change is no longer aligned with the optimal direction for improving performance, and $w$ is not equivalent to the relative value of the performance function, $U$. Nonetheless, $w$ is the actual relative performance in the context of the Price equation’s notational conventions.

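(3) To derive the first term of the FMB law in Equation (1), write the standard least-squares regression of fitness on parameter values as

$$w - \bar{w} = f\,(\theta - \bar{\theta}) + \epsilon,$$

in which $f$ is the regression coefficient of $w$ on $\theta$, and $\epsilon$ is the error uncorrelated with $\theta$. Using this regression in the first Price covariance term yields

$$\Delta_f\bar{\theta} = \mathrm{Cov}(w, \theta) = \mathrm{Var}(\theta)\,f,$$

in which $\Delta_f$ denotes the partial change caused by the force, $f$, imposed by relative performance, $w$. In the multivariate case, with $n > 1$ parameters, this same term expands to

$$\Delta_f\bar{\boldsymbol{\theta}} = \mathbf{M}\mathbf{f}, \tag{6}$$

in which $\mathbf{M}$ is the covariance matrix of the parameters, defined by $M_{jk} = \mathrm{Cov}(\theta_j, \theta_k)$, and $\mathbf{f}$ is the vector of partial regression coefficients for fitness, $w$, with respect to each of the $n$ parameters.

Here, the force, $\mathbf{f}$, changes average parameter values only through changes in frequency.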
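The claim that the first Price term equals a covariance matrix multiplied by a vector of partial regression coefficients can be checked numerically. The sketch below is an illustration under assumed inputs: the population size, the random trait values, and the particular fitness function are hypothetical. It estimates the regression coefficients by frequency-weighted least squares and compares the product of covariance matrix and coefficients with the change in the mean caused by frequency change alone.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 200, 3                              # m types, n parameters per type
q = rng.dirichlet(np.ones(m))              # initial frequencies
theta = rng.normal(size=(m, n))            # one parameter vector per type

# Hypothetical fitness: any positive values work once normalized to mean one.
w = np.exp(theta @ np.array([0.3, -0.2, 0.1]) + 0.1 * rng.normal(size=m))
w = w / (q @ w)

theta_bar = q @ theta
dev = theta - theta_bar
M = dev.T @ (q[:, None] * dev)             # frequency-weighted covariance matrix

# Weighted least squares of w on centered parameters, with an intercept.
sq = np.sqrt(q)
X = np.column_stack([np.ones(m), dev])
coef, *_ = np.linalg.lstsq(sq[:, None] * X, sq * w, rcond=None)
f = coef[1:]                               # partial regression coefficients

q_new = q * w                              # updated frequencies
delta_theta_bar = (q_new - q) @ theta      # first Price term

print(M @ f)
print(delta_theta_bar)
```

The two printed vectors match even though the assumed fitness function is nonlinear, because the regression residuals are uncorrelated with the parameters by construction.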

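(4) Bias directly changes a parameter value. For a parameter influenced by bias, $\Delta\theta \neq 0$. To derive the bias term of the FMB law, write the regression of fitness on the changes in parameters

$$w - \bar{w} = f_b\,\big(\Delta\theta - \mathrm{E}(\Delta\theta)\big) + \epsilon.$$

Then the second Price term yields

$$\Delta_b\bar{\theta} = \mathrm{E}(w\,\Delta\theta) = \mathrm{Cov}(w, \Delta\theta) + \mathrm{E}(\Delta\theta) = B f_b + b_0,$$

in which $B = \mathrm{Var}(\Delta\theta)$ and $b_0 = \mathrm{E}(\Delta\theta)$. For $n > 1$, the extended notation is

$$\Delta_b\bar{\boldsymbol{\theta}} = \mathbf{B}\mathbf{f}_b + \mathbf{b}_0,$$

in which $\mathbf{B}$ is the covariance matrix of $\Delta\boldsymbol{\theta}$, and $\mathbf{f}_b$ is the vector of partial regression coefficients for $w$ on $\Delta\boldsymbol{\theta}$. The symbol $\Delta_b$ denotes the partial change caused by bias.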

(5) We add a noise term, $\boldsymbol{\xi}$, to complete the FMB law. In the infinitesimal limit, the law has the standard form of a stochastic differential equation. The first two components, $\mathbf{M}\mathbf{f}$ and $\mathbf{b}$, define the deterministic drift change, and the third component, $\boldsymbol{\xi}$, defines the stochastic diffusion change [13].

(6) As the parameter distributions concentrate near their mean values, the variances and covariances become small. In the limit, we have updates to a single parameter vector, $\boldsymbol{\theta}$, and the regressions in the $\mathbf{f}$ and $\mathbf{b}$ terms converge to gradients. This limit recovers the common usage of gradients in learning algorithms that update single parameter vectors rather than updating mean parameter vectors over distributions. In this limit, the metrics provided by the covariance matrices are replaced by other aspects of geometric curvature, as discussed in the following subsections.

At this point, the FMB law is simply a notational partition of change into specific parts. The value arises from the insight and unity this notation brings to the diverse and seemingly unconnected applications that arise in different studies of learning and natural selection.

2.3. Metrics, Sufficiency, and Single-Value Updates

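The Price equation’s force, bias, and noise terms in the FMB law of Equation (1) can be expanded to

$$\Delta\bar{\boldsymbol{\theta}} = \mathbf{M}\mathbf{f} + \left(\mathbf{B}\mathbf{f}_b + \mathbf{b}_0\right) + \mathbf{D}\boldsymbol{\eta}. \tag{8}$$

For the change in the location of the parameter vector, $\Delta\bar{\boldsymbol{\theta}}$, the metrics $\mathbf{M}$, $\mathbf{B}$, and $\mathbf{D}$, the regression-based gradients, $\mathbf{f}$ and $\mathbf{f}_b$, and the additional bias component $\mathbf{b}_0$ are sufficient statistics to reconstruct the update. Additional information about frequencies, $\mathbf{q}$, does not alter the change in the location of the parameter vector.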

The sufficiency of the terms in Equation (8) to describe the change in the location of the parameter vector is important. It means that the FMB law, although initially derived from the Price equation’s population frequencies, also accurately describes changes to a single parameter vector.

The update depends only on the sufficient statistics, which are the metric matrices, the force vectors, and the intrinsic bias. In other words, we can invoke the common geometry that unifies updates to the mean vector, based on underlying frequencies of different parameter vectors, or updates to a single vector, based on alternative calculations of the sufficient statistics.

The Price equation’s metric terms are covariance matrices. However, a covariance matrix is just a metric matrix. In a population interpretation, we call the matrix a covariance. In a geometric interpretation, we call the matrix a metric. Mathematically, they are equivalent.

Similarly, population regressions enter only as slopes that can equivalently be analyzed geometrically. Intrinsic bias can also arise from either a population or a purely geometric interpretation.

Natural selection and some learning algorithms build on population notions of frequency and mean locations. Many other learning algorithms build on single-value updates of metrics, gradients, and geometry. Both interpretations follow from the Price equation’s FMB law. The difference arises in whether we assume that changing population frequencies set the metrics and slopes, or we assume that other attributes of a system set the geometry.

This conceptual shift allows the FMB law to unify disparate fields. As we will see, the metric in natural selection is the empirically observed covariance matrix of parameters. In Newton’s method for optimization, the metric is the analytically calculated inverse Hessian matrix. The FMB law reveals that these are different choices for the metric in different contexts, all within the same underlying mathematical structure.

In the following sections, I first continue to emphasize the Price equation’s population-based perspective. Later, I switch emphasis to single-value updates based purely on a geometric perspective. The two perspectives are different views of the same underlying FMB law.

2.4. A Spectrum of Methods: From Local to Population

In practice, the variety of algorithms forms a spectrum of information-gathering strategies. The spectrum runs across the spatial and temporal scope of the information they use to define the curvature metric, $\mathbf{M}$, the force, $\mathbf{f}$, and the bias, $\mathbf{b}$. Here, spatial scope describes a population of parameter vectors considered at a point in time, whereas temporal scope describes a sequence of parameter vectors over time.

Two extremes define the spectral extent. On one side, the purely local methods obtain information for both metric and force from a single parameter vector. For example, Newton’s method calculates the force vector as the first derivative of performance and the curvature metric as the inverse Hessian matrix, the second derivative of performance. Both derivatives are calculated with respect to a single parameter vector.
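As a small illustration of the purely local extreme, the sketch below applies one Newton step to a concave quadratic performance function. The matrix `A`, the vector `c`, and the starting point are hypothetical choices. Under the maximization convention used in this article, the metric is taken here as the negative inverse Hessian, which is positive definite for a concave function; that sign handling is an assumption of this sketch.

```python
import numpy as np

A = np.array([[3.0, 1.0], [1.0, 2.0]])     # hypothetical positive definite matrix
c = np.array([1.0, -1.0])


def U(theta):
    """Concave quadratic performance function to maximize."""
    return c @ theta - 0.5 * theta @ A @ theta


theta = np.array([2.0, 2.0])               # current single parameter vector
force = c - A @ theta                      # first derivative of U at theta
hessian = -A                               # second derivative of U
metric = -np.linalg.inv(hessian)           # inverse curvature, positive definite

theta_new = theta + metric @ force         # one Newton step: metric times force
optimum = np.linalg.solve(A, c)            # exact maximizer, for comparison

print(theta_new, optimum)
```

For a quadratic function the local curvature is exact everywhere, so a single step of metric times force lands on the maximum.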

On the other side, purely population-based methods use a full spatial scope to define both a covariance metric and a regression-based force. In this case, curvature and force are averaged over a distribution of alternative parameter vectors. Here, I briefly mention a few classic examples to illustrate how various algorithms fall along this spectrum.

Amari’s natural gradient is a hybrid method. It combines a purely local force, the gradient at a point, with a metric of extended spatial scope, the Fisher information metric of a distribution over alternative parameter vectors [11,14].

Stochastic gradient descent samples a batch of local gradients. The average of the several precise local force vectors estimates the force for a population sample. In effect, the statistical sampling transforms the local gradient descent method into a quasi-population method [6,15,16,17].

Optimization methods like Adam substitute temporal scope for spatial scope. As the optimizer traverses the parameter space over time, it generates a historical sequence of parameter vectors. This sequence provides a population of parameter vectors over which the method combines the local gradients to estimate a momentum-like statistic that augments the local force and to build a diagonal metric that captures aspects of the spatially extended curvature metric [8].

Later sections will develop these analyses in detail, showing how the variety of algorithms arises from particular information-gathering strategies and ways of calculating the components of the FMB law.

2.5. Performance and Cost Functions: Sign Convention

I set U as a performance function that provides increasing benefits as it rises in magnitude. The choice of a target function to maximize arose from the Price equation’s biological convention of fitness as a beneficial attribute.

By contrast, many studies in numerical optimization and other fields take $U$ as a cost function to be minimized. In this article, I adopt the maximization of $U$ as the primary goal. Results for minimizing a cost, $C$, follow by substituting $-C$ for $U$. If this substitution is used to minimize cost, then the Hessian calculation for local curvature becomes the curvature of the cost function $C$, which inverts the sign of the Hessian used in maximization.

There is no difference except that one has to pay attention to the directions of change and the appropriate signs appended to terms.

3. Natural Selection, Metrics, and Curvature

This section links the metric and force terms to natural selection, a topic that has a well-developed theoretical foundation. The connection illustrates how the familiar concepts in biology associate with the more abstract geometric concepts of the FMB law. In this case, metric and force arise from the spatial extent notion of populations, the basis of the Price equation, and the initial path to the general FMB geometry.

From Equation (6), an update caused solely by the first Price term for frequency change is

$$\Delta_f\bar{\boldsymbol{\theta}} = \mathbf{M}\mathbf{f}.$$

In biological studies of natural selection, this result is often called the Lande equation [4]. In that case, $\bar{\boldsymbol{\theta}}$ is a vector of $n$ mean trait values, $\mathbf{M}$ is the covariance matrix of the trait values, and $\mathbf{f}$ is the vector of partial regression coefficients of fitness, $w$, on trait values, $\boldsymbol{\theta}$. The Introduction wrote the right side of this equation for biology as $\mathbf{G}\boldsymbol{\beta}$ to distinguish the biological terms. But now we use our standard notation, $\mathbf{M}\mathbf{f}$.

This classic equation of natural selection matches the primary update process used by most learning algorithms. The slope of performance (fitness) relative to the parameters (traits) creates the primary driving force for updates, $\mathbf{f}$. The covariance matrix, $\mathbf{M}$, defines the update metric. Other algorithms vary in the spatial and temporal extent used to calculate force and metric, the methods for the particular calculations, and supplemental bias and stochasticity components.

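A metric changes the length and direction of the update path, $\Delta_f\bar{\boldsymbol{\theta}}$, by modulating the forces acting in each direction of the parameter space. The expected gain in performance is the force, $\mathbf{f}$, multiplied by the displacement, $\Delta_f\bar{\boldsymbol{\theta}}$, yielding

$$\mathrm{E}\left(\Delta_f\bar{w}\right) = \mathbf{f}^\top \Delta_f\bar{\boldsymbol{\theta}} = \mathbf{f}^\top\mathbf{M}\mathbf{f} = \|\mathbf{f}\|^2_{\mathbf{M}}. \tag{9}$$

A metric alters the sum of squares for a vector, changing its Euclidean length, with the requirement on $\mathbf{M}$ that the resulting length be a nonnegative real value. We can drop the expectation when $\mathbf{f}$ is calculated from an explicit performance function.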

A metric has a natural interpretation in terms of inverse curvature. For example, if $\mathbf{M}$ is a covariance matrix for $\boldsymbol{\theta}$, then, along any direction, a small value implies that there is little variation in the values of the parameters in that direction and, therefore, relatively little opportunity to shift the mean of the parameters.

A probability distribution with a small variance is narrow and highly curved, linking large curvature to small variation. Thus, inverse curvature describes variance. The movement in a particular direction becomes the force in that direction multiplied by the variance or inverse curvature in that direction. High variance and a straight surface augment the force. Low variance and a curved surface deter the force. Many publications consider the geometry of evolutionary dynamics [12,18,19].

In biology, natural evolutionary processes set the covariance matrix as the update metric. In learning and optimization algorithms, one chooses the metric by assumption or by particular calculations from the data and the update dynamics. The way in which the metric is chosen defines a primary distinction between algorithms.

4. Geometry, Information, and Work

Learning algorithms provide iterative improvement, a particular type of dynamics. This section reviews general properties of learning, which set a foundation for understanding the variety of algorithms and their unification [20,21].

We will see that the Price equation’s notation for frequency change naturally gives rise to Fisher information, Kullback–Leibler divergence, information geometry, and d’Alembert’s principle. The simple derivations reveal deep connections between learning dynamics and physical principles.

The Price equation and the consequent classic results follow from the intrinsic geometry of the purely population-based case. The insights from this spatially extended scope of populations provide the foundation to understand how the local geometric analysis of many learning algorithms fits within the broad FMB law.

4.1. Price Equation Foundation

The general expressions for learning updates arise from the Price equation, from which we see that the metric and force terms follow immediately from the basic notational description for the change in frequency.

Many learning algorithms do not have an intrinsic notion of frequency. Earlier, I showed how those algorithms fit into this scheme by considering the FMB terms as sufficient quantities for updates.

In this section, I continue to focus on frequency changes. Frequency here simply means a vector of positive weights with a conserved total value. We normalize the total to be one, which links to notions of probability, frequency, and average values. Several classic measures and methods of learning follow.

Most of the particular results in this section are widely known. Once again, the advantage here is that these aspects emerge simply and naturally as the outcome of our basic Price equation notation, without the need to invoke particular assumptions or interpretations.

4.2. Discrete Fisher–Rao Length

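A discrete generalization of the Fisher–Rao length follows immediately from Equation (9), which gave the partial increase in mean fitness, $\Delta_f\bar{w}$, as $\|\mathbf{f}\|^2_{\mathbf{M}}$. We can express that same quantity purely in terms of frequencies by the first Price term from Equation (3). To do so, we use fitness as the trait of interest, $\boldsymbol{\theta} \equiv \mathbf{w}$, with $w_i = q_i'/q_i$ from Equation (4), yielding [20]

$$\Delta_f\bar{w} = \Delta\mathbf{q}\cdot\mathbf{w} = \sum_i \frac{(\Delta q_i)^2}{q_i} = \left\|\frac{\Delta\mathbf{q}}{\sqrt{\mathbf{q}}}\right\|^2 = \mathcal{F}. \tag{10}$$

The notation $\|\cdot\|$ denotes the vector norm, which is the Euclidean length of the vector, and $\mathcal{F}$ denotes the discrete generalization of the squared Fisher–Rao step length that arises from the Fisher information metric [10].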

The value of $\mathcal{F}$ measures the divergence between probability distributions for the discrete jump $\mathbf{q} \to \mathbf{q}'$. Equivalently, $V_w$, the variance in fitness, describes the same value.
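The equivalence among the partial gain in mean fitness, the squared length of the frequency change normalized by the square root of the initial frequencies, and the variance in fitness holds for any pair of frequency vectors. The following minimal sketch checks this with random distributions; the variable names and the random draws are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
q = rng.dirichlet(np.ones(6))              # initial frequencies
q_new = rng.dirichlet(np.ones(6))          # updated frequencies
dq = q_new - q
w = q_new / q                              # relative fitness, mean one under q

gain = dq @ w                              # first Price term with fitness as the trait
fisher_rao = np.sum(dq**2 / q)             # sum of squared changes over initial frequency
norm_form = np.linalg.norm(dq / np.sqrt(q)) ** 2
var_w = q @ (w - 1.0) ** 2                 # variance in fitness

print(gain, fisher_rao, norm_form, var_w)
```

All four quantities are the same number, up to floating-point error.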

4.3. Kullback–Leibler Divergence

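The Fisher–Rao length measures the separation between probability distributions. The Kullback–Leibler (KL) divergence provides another common way to measure that separation [22]. If we write $w_i = e^{m_i}$, so that the discrete update is $q_i' = q_i e^{m_i}$, then we can think of the discrete change as a continuous path arising from the solution of an infinitesimal process that grows at a nondimensional rate proportional to $m_i$, which in biology is the Malthusian parameter. Thus

$$m_i = \log w_i = \log\frac{q_i'}{q_i}, \tag{11}$$

and using $\mathbf{m}$ instead of $\mathbf{w}$ in the Price equation, the first Price term for the partial change of mean log fitness caused directly by $\Delta\mathbf{q}$ becomes

$$\Delta_f\bar{m} = \Delta\mathbf{q}\cdot\mathbf{m} = \sum_i \left(q_i' - q_i\right)\log\frac{q_i'}{q_i} = \mathcal{J}, \tag{12}$$

which is known as the Jeffreys divergence [23], a symmetric form of the KL divergence of information theory

$$\mathcal{J} = \mathcal{D}\left(\mathbf{q}'\,\|\,\mathbf{q}\right) + \mathcal{D}\left(\mathbf{q}\,\|\,\mathbf{q}'\right).$$

For infinitesimal changes $\Delta\mathbf{q} \to \mathrm{d}\mathbf{q}$, we get

$$m_i = \log\left(1 + \frac{\Delta q_i}{q_i}\right) \to \frac{\mathrm{d}q_i}{q_i}, \tag{14}$$

so that using $m_i = \mathrm{d}q_i/q_i$ yields

$$\mathcal{J} \to \sum_i \frac{(\mathrm{d}q_i)^2}{q_i} = \left\|\frac{\mathrm{d}\mathbf{q}}{\sqrt{\mathbf{q}}}\right\|^2 = \mathcal{F},$$

showing that, for continuous infinitesimal changes $\mathrm{d}\mathbf{q}$, the Jeffreys divergence for discrete changes based on $\Delta\mathbf{q}$ converges to the squared Fisher–Rao step length, $\mathcal{F}$.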
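The convergence of the Jeffreys divergence to the squared Fisher–Rao step length can be seen numerically by shrinking a frequency change. In the sketch below, the starting distribution, the direction of change, and the helper function names are hypothetical choices made for illustration.

```python
import numpy as np


def kl(p, r):
    """Kullback-Leibler divergence of p relative to r."""
    return np.sum(p * np.log(p / r))


def jeffreys(q, q_new):
    """Frequency change times log relative growth, summed over types."""
    return np.sum((q_new - q) * np.log(q_new / q))


def fisher_rao_sq(q, q_new):
    """Discrete squared Fisher-Rao step length."""
    return np.sum((q_new - q) ** 2 / q)


q = np.array([0.5, 0.3, 0.2])
direction = np.array([1.0, -0.5, -0.5])    # sums to zero, so totals are conserved

ratios = []
for eps in (1e-1, 1e-2, 1e-3):
    q_new = q + eps * direction
    ratios.append(jeffreys(q, q_new) / fisher_rao_sq(q, q_new))

q_step = q + 0.1 * direction
symmetric_kl = kl(q_step, q) + kl(q, q_step)
print(ratios, jeffreys(q, q_step), symmetric_kl)
```

The ratio approaches one as the step shrinks, and the Jeffreys divergence matches the sum of the two directed KL divergences at any step size.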

4.4. Information Geometry

Information geometry analyzes the distance between probability distributions on a manifold typically defined by the Fisher information metric. Simple intuition about information geometry follows if we transform to square-root coordinates for frequencies [14].

Let $r_i = \sqrt{q_i}$, which leads to $\mathbf{r}\cdot\mathbf{r} = \sum_i q_i = 1$, creating unitary coordinates such that all changes in $\mathbf{r}$ lie on the surface of a sphere with a radius of one. In the new coordinates, the value of the squared Fisher–Rao step length in Equation (10) for the infinitesimal limit becomes $\mathcal{F} = 4\,\|\mathrm{d}\mathbf{r}\|^2$. This surface manifold for dynamics illustrates the widespread use of information geometry when studying how probability distributions change.
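A short numerical check illustrates the square-root coordinates. The sketch below uses a hypothetical distribution and a small hypothetical change. It confirms that the square-root coordinates lie on the unit sphere and that four times the squared Euclidean step in those coordinates approximates the sum of squared frequency changes divided by the initial frequencies.

```python
import numpy as np

q = np.array([0.5, 0.3, 0.2])                  # initial frequencies
dq = 1e-4 * np.array([1.0, -0.5, -0.5])        # small change that sums to zero

r = np.sqrt(q)                                 # square-root coordinates
r_new = np.sqrt(q + dq)

radius_sq = r @ r                              # equals the total probability
fisher_rao = np.sum(dq**2 / q)                 # squared step in frequency coordinates
sphere_form = 4.0 * np.sum((r_new - r) ** 2)   # squared step on the sphere, times four

print(radius_sq, r_new @ r_new, fisher_rao, sphere_form)
```

The agreement between the last two values is approximate for a finite step and tightens as the step shrinks.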

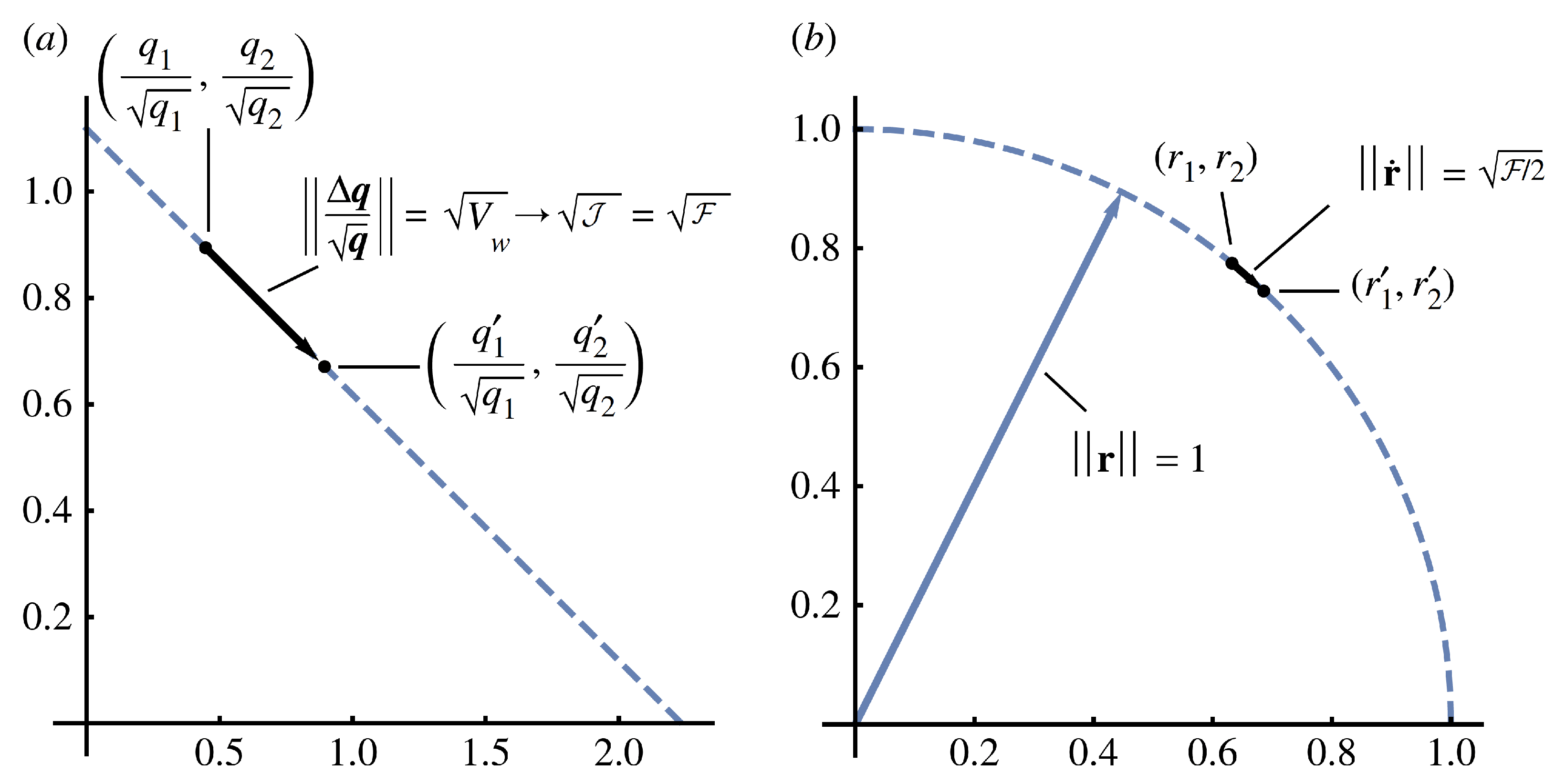

Figure 1 shows aspects of the geometry.

4.5. Bayesian Updating

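The distinction between Bayesian prior and posterior distributions is another way to describe the separation between probability distributions. Following Bayesian tradition, denote $L(D \mid \boldsymbol{\theta}_i)$ as the likelihood of observing the data, $D$, given parameter values, $\boldsymbol{\theta}_i$. To interpret the likelihood as a performance measure equivalent to relative fitness, $w$, the average value of the force must be one to satisfy the conservation of total probability. Thus, define

$$w_i = \frac{L(D \mid \boldsymbol{\theta}_i)}{\bar{L}}, \qquad \bar{L} = \sum_i q_i\, L(D \mid \boldsymbol{\theta}_i).$$

We can now write the classic expression for the Bayesian updating of a prior, $\mathbf{q}$, driven by the performance associated with new data, $D$, to yield the posterior, $\mathbf{q}'$, as $q_i' = q_i w_i$, or [24]

$$q_i' = \frac{q_i\, L(D \mid \boldsymbol{\theta}_i)}{\bar{L}}.$$

By recognizing $w_i = L(D \mid \boldsymbol{\theta}_i)/\bar{L}$, we can use all of the general results derived from the Price equation. For example, the Malthusian parameter of Equation (11) relates to the log-likelihood as

$$m_i = \log w_i = \log L(D \mid \boldsymbol{\theta}_i) - \log\bar{L}.$$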

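We can then relate the changes in probability distributions described by the Jeffreys divergence (Equation (12)) and the squared Fisher–Rao update length to the log-likelihood,

$$\mathcal{J} = \Delta\mathbf{q}\cdot\mathbf{m} = \sum_i \Delta q_i \log L(D \mid \boldsymbol{\theta}_i) \to \mathcal{F},$$

in which the constant $\log\bar{L}$ drops out because $\sum_i \Delta q_i = 0$.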

Figure 1. Geometry of change by direct forces, $\Delta_f$. (a) Divergence between the initial population with probabilities, $\mathbf{q}$, and the altered population with probabilities, $\mathbf{q}'$. For discrete changes, the probabilities are normalized by the square root of the probabilities in the initial set. The distance can equivalently be described by the various expressions shown, in which $V_w$ is the variance in fitness from population biology, $\mathcal{J}$ is the Jeffreys divergence from information theory, and $\mathcal{F}$ is the squared Fisher–Rao step length. The symbol “→” denotes the limit for small changes. (b) When changes are small, the same geometry and distances can be described more elegantly in unitary square root coordinates, $\mathbf{r} = \sqrt{\mathbf{q}}$. From Frank [20].
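The Bayesian update of Section 4.5 can be sketched as a frequency update driven by normalized likelihood. The example below is a minimal illustration: the coin-flip data, the three candidate parameter values, and the prior are hypothetical. It treats the likelihood divided by its prior-weighted average as relative fitness, multiplies the prior by that fitness to obtain the posterior, and checks that the frequency change times the log relative fitness equals the frequency change times the log-likelihood.

```python
import numpy as np

theta = np.array([0.2, 0.5, 0.8])          # candidate values for a coin's heads probability
prior = np.array([0.3, 0.4, 0.3])          # initial frequencies (prior)
heads, tails = 7, 3                        # hypothetical observed data

lik = theta**heads * (1.0 - theta) ** tails    # likelihood of the data for each candidate
w = lik / (prior @ lik)                        # normalized so the prior-weighted mean is one
posterior = prior * w                          # updated frequencies

m_par = np.log(w)                              # log relative fitness
dq = posterior - prior
jeffreys = dq @ m_par
jeffreys_loglik = dq @ np.log(lik)             # the normalizing constant drops out

print(posterior, jeffreys, jeffreys_loglik)
```

The posterior sums to one without any extra normalization step, because the mean of the relative fitness under the prior is one.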


4.6. d’Alembert’s Principle

We can think of the causes that separate probability distributions during an update as forces. Multiplying force and displacement yields a notion of work. Because we conserve the total weights as normalized probabilities, many learning updates require that virtual work vanishes for allowable displacements, yielding d’Alembert’s principle [25].

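From the definition for $m_i$ in Equation (11), and for the infinitesimal limit in Equation (14), we have $m_i = \mathrm{d}q_i/q_i$. By the chain rule for differentiation, we can write a Price equation expression

$$\mathrm{d}\bar{m} = \mathrm{d}\mathbf{q}\cdot\mathbf{m} + \mathbf{q}\cdot\mathrm{d}\mathbf{m}.$$

Using $m_i = \mathrm{d}q_i/q_i$ from Equation (14), noting that $\bar{m} = \mathbf{q}\cdot\mathbf{m} = \sum_i \mathrm{d}q_i = 0$ so that $\mathrm{d}\bar{m} = 0$, and rearranging yields

$$\sum_i \left(m_i + q_i\,\frac{\mathrm{d}m_i}{\mathrm{d}q_i}\right)\mathrm{d}q_i = 0, \tag{19}$$

in which $\mathrm{d}\mathbf{q}$ is a small virtual displacement consistent with all constraints. This expression is a nondimensional form of d’Alembert’s principle, in which the virtual work of the directly acting force for an update, $m_i$, and displacement, $\mathrm{d}q_i$, is balanced by the virtual work of the inertial force, $q_i\,\mathrm{d}m_i/\mathrm{d}q_i$, and displacement, yielding $\mathcal{F} - \mathcal{F} = 0$.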

In one dimension, we recover an analog of the familiar Newtonian form, $F = ma$, or $F - ma = 0$, showing that the force, $F$, has an equal and opposite inertial force, $-ma$, for mass $m$ and acceleration, $a$. For multiple dimensions, we can rewrite Equation (19) in canonical coordinates and obtain a simple Hamiltonian expression [25].

The conservation of total probability often leads to a balance of direct and inertial components, expressed by the Price equation. For example, when we analyze normalized likelihoods such that the average value is one, $\bar{w} = \mathbf{q}\cdot\mathbf{w} = 1$, we have a conserved form of the Price equation for normalized likelihood

$$\Delta\bar{w} = \Delta\mathbf{q}\cdot\mathbf{w} + \mathbf{q}'\cdot\Delta\mathbf{w} = 0,$$

in which the first term is the gain in performance for the direct force of the data in the likelihood, and the second term is a balancing inertial decay imposed by the rescaling of relative likelihood in each update. Notions of direct and inertial forces and total virtual work provide insight into certain types of learning updates, as shown in later examples.

5. Alternative Perspectives of Dynamics

The preceding sections described the universal geometry of change revealed by the Price equation’s notation. Fundamental concepts emerged naturally, including the Fisher–Rao length, information geometry, and Bayesian updating. In this context, the Price equation is a purely descriptive approach that reveals abstract, universal mathematical properties of learning updates.

However, in practice, learning dynamics are more than descriptions of change. Algorithms infer causes or deduce outcomes. This section links the Price equation’s description to inductive and deductive perspectives.

By making these alternative perspectives explicit, we see why the same mathematical object, such as the Fisher information metric, arises as a simple notational consequence in one context, an empirical observation in another context, and a chosen design principle for optimal performance in a third context [26,27].

To clarify these perspectives, we begin with the fact that any dynamic process has three key components: an initial state, a rule for change, and a final state. Typically, we know or assume two of the components and infer the third. The following subsections consider the alternative perspectives in detail, connecting each back to the abstract Price equation foundation.

This section’s alternative perspectives on dynamics add a complementary axis to the local-to-population spectrum of methods introduced earlier. I develop this complementary axis at the population scale, providing the most general conceptual frame. That population context prepares the ground for later discussion of particular algorithms, many of which blend local geometry with broader spatial or temporal scope.

5.1. Descriptive, Inductive, and Deductive Perspectives

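In our Price formulation, the partial change associated with force, $\Delta_f\bar{\boldsymbol{\theta}} = \Delta\mathbf{q}\cdot\boldsymbol{\theta}$, describes the initial state as the frequencies, $\mathbf{q}$, the rule for change as the fitnesses, $\mathbf{w}$, and the updated state as $\mathbf{q}'$.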

(1) The Price formulation is a purely descriptive and exact expression because it tautologically defines the rule for change from the other two pieces, $w_i = q_i'/q_i$. Consequently, results that follow directly from the Price equation provide the general, abstract basis for understanding intrinsic principles and geometry [3].

(2) In biology, actual frequencies change, $\mathbf{q} \to \mathbf{q}'$. Those changes are driven by an interaction between the current state, $\mathbf{q}$, and unknown natural forces. A biological system has, in effect, direct access to the data for initial and updated states but not for the hidden rules of change.

Natural selection implicitly runs an inductive process [28,29]. It infers aspects of the hidden rules for change, designing systems that use that inferred information to improve future performance. In general, a system may use data on the initial and updated states inductively to infer something about the hidden rules of change.

(3) Most mathematical theories and most learning algorithms run deductively. They start with the initial state and the rule for change and then deduce the updated state. For example, we might have $\mathbf{q}$ and the performance function, $\mathbf{w}$, from which we calculate $\mathbf{q}'$.

The updated state is an intrinsic calculation or outcome of the system process. More commonly, in machine learning, the process acts on a single parameter vector, $\boldsymbol{\theta}$, rather than on a population of alternative parameter vectors. Given $\boldsymbol{\theta}$ and a performance function, the algorithm calculates an updated vector, $\boldsymbol{\theta}'$.

In summary, dynamics has three components: initial state, rule for change, and final state. Descriptive systems define the rule from the other two, $w_i = q_i'/q_i$. Inductive systems start with the initial and final state and infer the rule, $(\mathbf{q}, \mathbf{q}') \to \mathbf{w}$. Deductive systems start with the initial state and the rule and deduce the final state, $(\mathbf{q}, \mathbf{w}) \to \mathbf{q}'$ for populations or $(\boldsymbol{\theta}, U) \to \boldsymbol{\theta}'$ for single-vector updates.
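The three perspectives can be illustrated with a toy population. The following is a minimal sketch, assuming three types with made-up frequencies and fitness values; the variable names are illustrative and not taken from the article.

```python
# Toy population of three types; all numbers are hypothetical.
q = [0.5, 0.3, 0.2]            # initial frequencies
raw_fitness = [1.0, 2.0, 3.0]  # unnormalized performance of each type

# Deductive: start with the initial state and the rule, deduce the final state.
mean_fitness = sum(qi * ri for qi, ri in zip(q, raw_fitness))
w = [ri / mean_fitness for ri in raw_fitness]   # relative fitness, mean 1 under q
q_new = [qi * wi for qi, wi in zip(q, w)]

# Descriptive: given initial and final states, define the rule tautologically.
w_described = [qn / qi for qn, qi in zip(q_new, q)]

# Inductive: from the two states alone, infer which types the hidden rule favors.
excess = [qn / qi - 1.0 for qn, qi in zip(q_new, q)]
favored = [i for i, a in enumerate(excess) if a > 0]

print(q_new, w_described, favored)
```

The same three arrays appear in each case. The perspectives differ only in which two are treated as given and which one is derived.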

5.2. Fisher Information in the Three Perspectives

This subsection shows how to interpret the squared Fisher–Rao step, $\mathbf{f}^{\top}\mathbf{M}\mathbf{f}$, in each of the three perspectives. In the pure Price equation, it follows simply and universally from tautological notation. In both the inductive and deductive cases, it is the optimal step in the sense that it maximizes the increase in performance relative to alternative steps of the same length.

5.2.1. Descriptive Perspective

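In the abstract mathematical perspective, use Equation (4) to define the focal trait as the average excess in fitness

$$a_i = w_i - 1 = \frac{\Delta q_i}{q_i}.$$

Then the partial change in fitness from the first Price term, from Equation (10), is

$$\Delta\mathbf{q}\cdot\mathbf{a} = \sum_i \frac{(\Delta q_i)^2}{q_i}.$$

By analogy with Equation (9), this quantity can also be written as

$$\mathbf{f}^{\top}\mathbf{M}\mathbf{f},$$

interpreted here purely in frequency space such that the force is $\mathbf{f} = \mathbf{a}$, and the metric is $\mathbf{M} = \mathrm{diag}(\mathbf{q})$, a matrix with entries $q_i$ along the diagonal.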

The matrix $\mathbf{M}^{-1}$ with entries $1/q_i$ along the diagonal is the Fisher information metric for the probability distribution $\mathbf{q}$, also called the Shahshahani Riemannian metric in certain applications [12].

Here, I use the Fisher metric in its geometric sense as the Shahshahani metric, a full-rank, diagonal matrix that defines the curvature of frequency space [12,14]. In this geometric context, the inverse given here provides the length metric for the space of $\mathbf{a}$ values. In classic statistical estimation theory, the Fisher matrix has a different interpretation that reduces its dimension and leads to a different inverse form [10].

In this pure Price case, the values of $\mathbf{f}$ and $\mathbf{M}$ arise directly from notation, without any additional concepts or assumptions derived from information or particular aspects of geometry.

Thus, the Fisher metric may arise so often in widely different applications because of its fundamental basis in simple definitions rather than in the more complex interpretations commonly discussed. Those extended interpretations are useful in particular contexts, as in the following paragraphs.

The point here concerns the genesis and understanding of fundamental quantities rather than their potential applications. For this pure Price case, $\mathbf{M}$ arises purely from tautological notation and is related to the inverse Fisher metric.
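The descriptive identities can be checked numerically. The sketch below assumes a hypothetical three-type population and the convention that mean fitness is one, so that the average excess is the frequency change divided by the initial frequency. It compares the first Price term, the squared Fisher–Rao length in frequency space, the force–metric form with a diagonal metric of frequencies, and the variance in fitness.

```python
# Hypothetical frequencies before and after one step of change.
q = [0.5, 0.3, 0.2]
q_new = [0.4, 0.35, 0.25]

dq = [qn - qi for qn, qi in zip(q_new, q)]
a = [d / qi for d, qi in zip(dq, q)]   # average excess, a_i = w_i - 1

# First Price term applied to the average excess: sum of dq_i * a_i.
price_term = sum(d * ai for d, ai in zip(dq, a))

# Squared Fisher-Rao length: dq^T diag(1/q) dq.
fisher_rao_sq = sum(d * d / qi for d, qi in zip(dq, q))

# Force-metric form: a^T diag(q) a, with force a and metric diag(q).
force_metric = sum(qi * ai * ai for qi, ai in zip(q, a))

# Variance in relative fitness, w_i = q'_i / q_i, which has mean 1 under q.
w = [qn / qi for qn, qi in zip(q_new, q)]
var_w = sum(qi * (wi - 1.0) ** 2 for qi, wi in zip(q, w))

print(price_term, fisher_rao_sq, force_metric, var_w)
```

All four quantities agree because each is a rearrangement of the same definitions, which is the sense in which the metric arises from notation alone.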

5.2.2. Inductive Perspective

In inductive applications, we begin with or observe $\Delta\mathbf{q}$. From those data, we may inductively estimate $\mathbf{f}$, the slope of $w$ with respect to trait values, $\boldsymbol{\theta}$. The given frequency changes also inductively improve the system’s internal estimate for parameters that perform well by weighting more heavily the high-performance parameter values.

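In general, from Equation (6), the update is $\Delta\bar{\boldsymbol{\theta}} = \mathbf{M}\mathbf{f}$, and the system’s partial improvement in fitness (performance) caused by frequency change is $\mathbf{f}^{\top}\mathbf{M}\mathbf{f}$, which is the squared Fisher–Rao length. Here, $\mathbf{M}$ is $\boldsymbol{\theta}$’s covariance matrix, and $\mathbf{f}$ is the vector of partial regression coefficients of $w$ with respect to $\boldsymbol{\theta}$ or, in the infinitesimal limit, the inferred gradient of $w$ with respect to $\boldsymbol{\theta}$.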

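The covariance matrix $\mathbf{M}$ in parameter space, $\boldsymbol{\theta}$, is related to the Fisher metric $\mathbf{F}$ in probability space, $\mathbf{q}$, by

$$\mathbf{M} = \mathbf{R}^{\top}\mathbf{F}^{-1}\mathbf{R},$$

in which $\mathbf{F}^{-1}$ has entries $q_i$ along the diagonal, and $\mathbf{R}$ is the matrix of parameter deviations from their mean values, with row $i$ given by $\boldsymbol{\theta}_i - \bar{\boldsymbol{\theta}}$. This expression reveals the relation of the Fisher metric geometry for probabilities to the covariance metric geometry for parameters.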

The Fisher–Rao update is optimal in the sense that, at each step, it maximizes the first-order performance gain minus a penalty proportional to the geometric length of the update. In particular, among all possible frequency changes, $\Delta\mathbf{q}$, that produce the same Fisher–Rao update length, the actual frequency changes for the given trait covariance matrix, $\mathbf{M}$, lead to the greatest improvement in performance.

Equivalently, among all possible frequency changes, $\Delta\mathbf{q}$, that produce the same improvement in performance, the actual frequency changes for the given trait covariance matrix, $\mathbf{M}$, lead to the shortest Fisher–Rao length. In biology, these optimality results are trait-based analogs of Fisher’s Fundamental Theorem for genetics [30,31,32,33].

Of course, forces other than intrinsic performance can alter frequencies. The more we know about those other forces, such as environmental shifts or directional mutation in biology, the more accurately we can account for the consequences of those forces and improve inductive inference about the causal relation between parameters and performance [34,35,36].

5.2.3. Deductive Perspective: Frequencies

In deductive studies of frequency, we use $\mathbf{q}$ and $\mathbf{w}$ to calculate $\mathbf{q}'$. Mathematically, there is nothing new here because $q_i' = q_i w_i$. However, this perspective differs because we take the $w_i$ as given and deduce $\mathbf{q}'$. In other words, $\mathbf{w}$ is an identified driving force, whereas in the inductive case, $\Delta\mathbf{q}$ is an observation about frequencies that leads to inference about the variety of forces that have acted to change frequency. However, because the mathematics is the same, we end up with the same covariance and other expressions for change.

5.2.4. Deductive Perspective: Parameters

In deductive studies of parameters, we use $\boldsymbol{\theta}$ and $\mathbf{f}$ to deduce system updates to parameter values. This approach becomes interesting when, instead of being constrained by the given frequencies, $\mathbf{q}$, that set the covariance matrix of $\boldsymbol{\theta}$ values as the metric, we instead choose $\mathbf{M}$ to achieve a better increase in performance.

Suppose, for example, that for $\mathbf{f}$ we use the gradient of performance (fitness) with respect to the parameters. If the gradient provides the best opportunity for improvement in a particular direction of the parameter space, but there is no parameter variation in that direction among the given $\boldsymbol{\theta}$ values, then the associated covariance of $\boldsymbol{\theta}$ and metric $\mathbf{M}$ prevents the potential gain offered by the gradient.

In a deductive application, we may instead choose $\mathbf{M}$ to take advantage of the potential increase provided by the gradient. As before, the parameter update is $\Delta\boldsymbol{\theta} = \mathbf{M}\mathbf{f}$, and the gain in performance is $\Delta U = \mathbf{f}^{\top}\mathbf{M}\mathbf{f}$. In this case, we use a local gradient so the steps are accurate to first order, we drop the bar over $\boldsymbol{\theta}$ because we no longer have an underlying distribution, $\mathbf{q}$, and we use $U$ for performance.

An optimal update typically occurs when the metric is $\mathbf{M} = \mathbf{F}^{-1}$, in which $\mathbf{F}$ is the Fisher information matrix in $\boldsymbol{\theta}$ coordinates. That step is optimal in the sense that it provides the greatest increase in performance among all alternative updates with the same Fisher–Rao path length. For an optimal update, the squared Fisher–Rao length equals the gain in performance.

However, we require an arbitrary assumption to calculate the Fisher matrix. That matrix, and the associated optimality, depend on the positive weights assigned to alternative parameter vectors, which we usually express as probabilities. But, in this case, we are considering an update to a given vector, $\boldsymbol{\theta}$, without any underlying variants associated with probabilities, $\mathbf{q}$. So we must create a notion of alternative parameter values with varying weights.

We are free to choose those probability weights, $q(\boldsymbol{\theta})$. A natural choice is to use Boltzmann probabilities of the performance function,

$$q(\boldsymbol{\theta}) \propto e^{bU(\boldsymbol{\theta})}, \tag{22}$$

in which $b$ is a constant value, the maximum of $U$ is not infinite, and $U$ is twice differentiable. Here, $b$ adjusts how quickly the log probabilities rise in proportion to performance, $U$.

For the parameter vector $\boldsymbol{\theta}$, the $i$th row and $j$th column entries of the Fisher matrix are

$$F_{ij} = -\mathrm{E}\!\left[\frac{\partial^2 \log q(\boldsymbol{\theta})}{\partial\theta_i\,\partial\theta_j}\right].$$

The Boltzmann expression in Equation (22) links the log-probabilities used in the Fisher matrix to the performance function, yielding

$$\mathbf{F} = -b\,\mathrm{E}[\mathbf{H}].$$

Thus, the Boltzmann choice for probability weights means that the Fisher matrix is, up to the constant $b$, the negative expected value of $\mathbf{H}$, the Hessian matrix of second derivatives of $U$ with respect to $\boldsymbol{\theta}$. The expectation is over the Boltzmann probabilities for each Hessian evaluated at a particular $\boldsymbol{\theta}$. Thus, when we choose our metric as $\mathbf{F}^{-1}$, the inverse Fisher matrix, we are choosing a particular metric of inverse curvature.

In the inductive case, $\mathbf{M}$ arises from the given frequencies for alternative parameter vectors. For this deductive case, we allow $\mathbf{M}$ to vary and ask what matrix maximizes the gain in performance minus the cost for the Fisher–Rao path length. The next subsection shows that $\mathbf{F}^{-1}$ is optimal in this context and, in general, that inverse curvature is often a good metric [11].
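The link between Boltzmann weights and curvature can be checked numerically in one dimension. The sketch below assumes a hypothetical performance function with a single peak and a constant b = 2, neither of which comes from the article. It computes the Fisher information in two ways: as the negative expected second derivative of the log weights, and as the expected squared first derivative of the log weights.

```python
import math

b = 2.0  # assumed Boltzmann constant

def U(t):
    # Hypothetical performance function with a single maximum at t = 0.
    return -0.25 * t ** 4 - 0.5 * t ** 2

def dU(t):
    return -t ** 3 - t

def d2U(t):
    return -3.0 * t ** 2 - 1.0

# Boltzmann probabilities on a fine grid; the weights are negligible at the edges.
step = 0.001
grid = [-6.0 + step * i for i in range(12001)]
weights = [math.exp(b * U(t)) for t in grid]
total = sum(weights)
probs = [wt / total for wt in weights]

# Fisher information as the negative expected Hessian of log q = b U + constant.
fisher_from_hessian = -b * sum(p * d2U(t) for p, t in zip(probs, grid))

# Fisher information as the expected squared slope of log q.
fisher_from_score = sum(p * (b * dU(t)) ** 2 for p, t in zip(probs, grid))

print(fisher_from_hessian, fisher_from_score)
```

The two values agree to numerical precision, and the first form shows directly that the Fisher quantity is a probability-weighted average of local curvature rather than the curvature at a single point.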

5.3. Why Inverse Curvature Is a Good Metric

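Consider first the case in which the only information we have is the local gradient, $\mathbf{f} = \nabla U$, and the local Hessian curvature, $\mathbf{H}$, near a particular point, $\boldsymbol{\theta}$, the local end of the spatial extent spectrum. Then, a Taylor series expansion of performance at a nearby point up to second order is

$$U(\boldsymbol{\theta} + \Delta\boldsymbol{\theta}) \approx U(\boldsymbol{\theta}) + \mathbf{f}^{\top}\Delta\boldsymbol{\theta} + \tfrac{1}{2}\,\Delta\boldsymbol{\theta}^{\top}\mathbf{H}\,\Delta\boldsymbol{\theta},$$

in which $\mathbf{H}$ is the Hessian matrix of second derivatives. If we consider a region of the performance surface in which the Hessian is negative definite, then $-\mathbf{H}$ is a positive definite metric that describes local curvature. We can then write the gain in performance for a step $\Delta\boldsymbol{\theta}$ as

$$\Delta U \approx \mathbf{f}^{\top}\Delta\boldsymbol{\theta} - \tfrac{1}{2}\,\Delta\boldsymbol{\theta}^{\top}(-\mathbf{H})\,\Delta\boldsymbol{\theta}. \tag{24}$$

What step $\Delta\boldsymbol{\theta}$ maximizes the total performance gain up to second order? Equivalently, what step maximizes the first-order gain, $\mathbf{f}^{\top}\Delta\boldsymbol{\theta}$, for a fixed quadratic cost, $\tfrac{1}{2}\Delta\boldsymbol{\theta}^{\top}(-\mathbf{H})\Delta\boldsymbol{\theta}$? Lagrangian maximization of the gain subject to the fixed cost yields the optimal direction for an update,

$$\Delta\boldsymbol{\theta} = -\mathbf{H}^{-1}\mathbf{f}, \tag{25}$$

which in this context is the classic Newton optimization method. For maximization, the positive definite metric is $\mathbf{M} = -\mathbf{H}^{-1}$, in which $\mathbf{H}$ is the negative-definite Hessian matrix of the performance function $U$. For minimization of a cost function, the sign of $\mathbf{H}$ reverses, so that the metric becomes $\mathbf{M} = \mathbf{H}^{-1}$, and the force $\mathbf{f}$ also flips its sign. Same idea, different signs [5].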

In this case, the metric scales each component of the step inversely to the local curvature, pushing far in straight directions and contracting where the surface bends sharply. That inverse-curvature rescaling gains the most in first-order performance change for a given quadratic cost.
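A small numerical sketch can illustrate the inverse-curvature argument. It assumes a hypothetical concave quadratic performance function in two dimensions, for which the second-order expansion is exact. The code takes one step with the inverse of the negative Hessian as the metric, then compares its first-order gain against a plain gradient step rescaled to pay the same quadratic cost.

```python
# Hypothetical concave quadratic: U(theta) = c . theta - 0.5 * theta^T A theta,
# so the Hessian is H = -A and the positive definite curvature is A.
A = [[4.0, 1.0], [1.0, 1.0]]
c = [1.0, 2.0]

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def grad_U(theta):
    return [c[k] - dot(A[k], theta) for k in range(2)]

def solve2(mat, v):
    # Solve the 2 x 2 linear system mat * x = v by Cramer's rule.
    det = mat[0][0] * mat[1][1] - mat[0][1] * mat[1][0]
    return [(mat[1][1] * v[0] - mat[0][1] * v[1]) / det,
            (mat[0][0] * v[1] - mat[1][0] * v[0]) / det]

def quadratic_cost(step):
    return 0.5 * dot(step, [dot(row, step) for row in A])

theta = [0.0, 0.0]
f = grad_U(theta)                       # force: local gradient

newton_step = solve2(A, f)              # (-H)^{-1} f
theta_newton = [t + d for t, d in zip(theta, newton_step)]

# Gradient-direction step rescaled to the same quadratic cost as the Newton step.
scale = (quadratic_cost(newton_step) / quadratic_cost(f)) ** 0.5
gradient_step = [scale * fk for fk in f]

print(dot(f, newton_step), dot(f, gradient_step), grad_U(theta_newton))
```

For this quadratic surface the inverse-curvature step lands on the optimum, where the gradient vanishes, and it yields a larger first-order gain than the equal-cost gradient step.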

Considering that the optimal metric for a local step arises from the inverse Hessian, why use the more complex Fisher metric? One reason is that local optimality requires the Hessian to be negative definite for the maximization of performance or, equivalently, positive definite for the minimization of cost. Another reason is that a local calculation ignores the overall shape of the optimization surface. Therefore, a locally optimal step is not necessarily best with regard to broader goals of optimization.

By contrast, the Fisher metric is essentially an average of the best local curvature metrics over a region of the optimization surface, weighting each location on the surface in proportion to a specified probability. This approach, known as the natural gradient, typically points in a better direction with regard to global optimization [11].

In terms of our local-to-population spectrum of methods, the natural gradient combines the spatial population extent for the calculation of the curvature metric with the local extent for the analysis of the force gradient.

For the Boltzmann distribution, the probability weighting of a location rises with the performance associated with that location, emphasizing strongly those regions of the optimization surface associated with the highest performance. Thus, a Fisher step typically points in a better direction with regard to global optimization.

The Fisher metric also corrects common problems with local Hessians. For example, Hessians are not always proper positive metrics, whereas the Fisher matrix is a proper metric. In addition, local Hessians can change under coordinate transformation, whereas the Fisher metric is coordinate invariant. As the region over which the Fisher metric is defined converges to a local region, the Fisher metric converges to the local Hessian.

The optimality of the Fisher metric follows the same sort of Lagrangian maximization as for the local Hessian [14]. In particular, we maximize the same gain, $\mathbf{f}^{\top}\Delta\boldsymbol{\theta}$, but this time subject to a fixed KL divergence, $\mathcal{D}_{\mathrm{KL}}$, between the probability weightings for variant parameter values, taken initially as $q_{\boldsymbol{\theta}}$ and after a parameter update as $q_{\boldsymbol{\theta}+\Delta\boldsymbol{\theta}}$. Here, $q$ is a general distribution that can take any consistent form. By the Taylor series, the KL divergence to second order is

$$\mathcal{D}_{\mathrm{KL}} \approx \tfrac{1}{2}\,\Delta\boldsymbol{\theta}^{\top}\mathbf{F}\,\Delta\boldsymbol{\theta}$$

for Fisher matrix $\mathbf{F}$. We can use the right side in place of the quadratic term in Equation (24). Then the same Lagrangian approach yields the optimal update in the context of the Taylor series approximations as

$$\Delta\boldsymbol{\theta} = \mathbf{F}^{-1}\mathbf{f},$$

which has the same form as the classic Newton update in Equation (25) but with $\mathbf{F}^{-1}$, the inverse Fisher metric, in place of the positive definite form of the inverse Hessian. In the limit of small changes, twice the KL divergence becomes the squared Fisher–Rao path length, $\Delta\boldsymbol{\theta}^{\top}\mathbf{F}\,\Delta\boldsymbol{\theta}$. Thus, the optimality again becomes the maximum performance gain relative to a fixed Fisher–Rao length.
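The small-change limit can be checked directly for a discrete distribution, in which the Fisher metric has entries equal to the inverse of each frequency. The sketch below uses hypothetical frequencies and a hypothetical direction of change, and computes the ratio of twice the KL divergence to the squared Fisher–Rao length for a large and a small step.

```python
import math

q = [0.5, 0.3, 0.2]              # hypothetical initial frequencies
direction = [1.0, -0.5, -0.5]    # direction of change; entries sum to zero

def kl_divergence(p_new, p_old):
    return sum(pn * math.log(pn / po) for pn, po in zip(p_new, p_old))

def fisher_rao_sq(dq, p):
    # Squared length under the diagonal Fisher metric with entries 1 / p_i.
    return sum(d * d / pi for d, pi in zip(dq, p))

def ratio(eps):
    dq = [eps * d for d in direction]
    q_new = [qi + d for qi, d in zip(q, dq)]
    return 2.0 * kl_divergence(q_new, q) / fisher_rao_sq(dq, q)

ratio_large = ratio(0.1)
ratio_small = ratio(0.001)
print(ratio_large, ratio_small)
```

The ratio approaches one as the step shrinks, which is why a constraint on KL divergence and a constraint on Fisher–Rao length lead to the same optimal direction for small updates.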

In practice, the Fisher metric does not always provide the best update step. That metric is based on a particular assumption about global probability weightings for alternative parameter vectors, whereas one might be more interested in the local geometry near a particular point in the parameter space. In some cases, a local estimate of the inverse curvature provides a better update or may be cheaper to calculate.

The variety of learning algorithms trades benefits gained for particular geometries against costs paid for specific calculations. However, inverse curvature remains a common theme across many algorithms.

6. The Variety of Algorithms

The following sections step through some common algorithms. The details show how each fits into the FMB scheme, how the various algorithms relate to each other, and why certain quantities, such as Fisher information, recur in seemingly different learning scenarios.

The key distinctions between algorithms arise from how each gathers information about components of the FMB law. The alternatives span the spectrum from local information taken at the current point in parameter space to spatially extended averaging over a population of alternative parameter vectors, and from current values to temporally extended averaging of past or anticipated future locations. This section provides a brief overview.

First, population and Bayesian methods represent the fully extended spatial scope end of the spectrum. These methods focus on changes in frequencies, $\Delta\mathbf{q}$. In biology, frequencies change between ancestor and descendant populations. In Bayesian methods, frequencies change between prior and posterior distributions. The frequencies act as relative weights for alternative parameter vectors.

A particular algorithm can, of course, choose to modify how components of an update are calculated. However, populations set the foundation for analysis. Changes in parameter mean values, $\Delta\bar{\boldsymbol{\theta}}$, summarize updates. The metric $\mathbf{M}$ typically arises from the covariance of alternative parameter values. Some methods use variational optimization and analogies with free energy, which links learning to various physical principles of dynamics [37,38].

Second, many algorithms update a single parameter vector. These methods fill in the other end of the spectrum and its middle ground. The purely local strategy, such as Newton’s method, uses a metric and force gradient analyzed at a single point. Hybrid strategies choose metrics that incorporate a broader spatial scope, such as trust regions [39,40] or the natural gradient [11].

Third, all search methods trade off exploiting the directly available information in their spatial domain against exploring more widely to avoid getting stuck in local optima. Noise provides the simplest exploration method, often expanding the spatial scope of analysis. A broader scope can sometimes push the search beyond a local plateau to find the nearest advantageous gradient to climb.

Finally, modern optimizers often include temporal scope. Extensions to stochastic gradient descent, such as Adam [8] and Nesterov [41,42], explicitly use the history of updates to calculate a bias term, $\mathbf{b}$. In these cases, bias explicitly applies physical notions of momentum, in which past movement of parameter values can push future updates beyond local traps on the performance surface.

Overall, common optimizers combine different spatial and temporal extents of gradient forces, inverse curvature metrics, bias, and algorithmic tricks that compensate for missing information, difficult calculations, and challenging search over complex performance surfaces. We see that the seemingly different algorithms all build on the same underlying principles revealed by the Price equation’s FMB law.

I start with spatially extended population methods, which match most closely to the standard interpretation of the Price equation.

7. Population-Based Methods

In machine learning, one often improves performance by directly calculating the gradient of a performance surface. An updated parameter vector follows by moving along the gradient’s direction of improved performance.

However, in many applications, one does not have a smooth, differentiable function that accurately maps parameters to performance. Without the ability to calculate the gradient, one has to test each parameter combination in its environment to measure its performance. Such methods are called black-box optimization algorithms.

Covariance matrix algorithms (CMAs) often provide a good black-box optimization method [43]. These evolution strategy (ES) methods proceed by analogy with biological evolution. One starts with a target parameter vector and then samples the performance values for a set of different parameter combinations around the target. That set forms a population from which one can calculate an updated target parameter by an empirically calculated covariance matrix and performance gradient, as in Equation (6).

These methods have three challenges: choosing sample points around the current target, estimating the performance gradient, and calculating the new target from the covariance matrix and performance gradient. I briefly summarize how the popular CMA-ES method handles these three challenges [44].

First, CMA-ES draws sample points from a multivariate Gaussian distribution. The mean is the current target parameter combination. The algorithm updates the covariance matrix to match its estimate of the performance surface curvature. The covariance is reduced along directions with high curvature and enhanced along directions with low curvature.

The size of the parameter changes in any direction increases with the variance in that direction. Thus, the algorithm tends to explore more widely over straighter (low curvature) regions of the performance surface and to move more slowly in curved directions.

In biology, covariance decreases over time in directions of strong selection because better-performing variants increase rapidly and reduce variation. That decline in variation degrades the ability of the system to continue moving in the same beneficial direction because the distance moved in a direction is the selection gradient multiplied by the variance. Eventually, mutation or other processes may restore the variance, but it can take a while to restore variance after a bout of strong selection.

CMA-ES avoids that potential collapse in evolutionary response by algorithmically maintaining sufficient variance to provide the system with good potential to search the performance landscape. In essence, the algorithm attempts to choose the inverse curvature metric, $\mathbf{M}$, to maximize the gain in performance, in which $\mathbf{M}$ is the covariance matrix.

The algorithm chooses $\mathbf{M}$ by modifying the inverse Fisher metric of its Gaussian sampling distribution, steadily blending in the weighted variation of the best-performing samples to estimate its covariance matrix for updates.

The estimated covariance matrix typically converges to an approximation for the local inverse curvature metric. For performance maximization, this metric is given by $-\mathbf{H}^{-1}$ for the local Hessian, $\mathbf{H}$, in which the negative sign ensures that the metric is positive definite. The Gaussian sampling process introduces stochasticity that corresponds to $\boldsymbol{\xi}$ in the FMB law.

For the second challenge, CMA-ES approximates a modified performance gradient by sampling candidate solutions, ranking them by fitness, and then averaging the parameter differences between the best candidates and the current mean. The average puts greater weight on the higher fitness candidates. By contrast, biological processes implicitly calculate the partial regression of fitness on parameters.

For the third challenge, the direction in which CMA-ES updates the target parameter vector is approximately the product of its internal estimates for the covariance matrix and modified performance gradient. That approximation follows biology’s updating by $\mathbf{M}\mathbf{f}$ given in Equation (6), which is the universal combination of force scaled by metric.

The same $\mathbf{M}\mathbf{f}$ structure occurs in other evolution strategy (ES) learning algorithms, which include natural evolution strategies [45], large-scale parallel ES [46], and separable low-rank CMA-ES [47]. The next section moves to the local end of the spectrum, showing that the $\mathbf{M}\mathbf{f}$ structure remains when the population collapses to a single vector.
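The sample, rank, and average cycle described above can be sketched in a few lines. This is a deliberately simplified evolution strategy and not CMA-ES itself: it keeps a fixed isotropic sampling variance and omits the covariance adaptation that defines CMA-ES. The performance function, population sizes, log-rank weights, and all names are assumptions chosen for illustration.

```python
import math
import random

def performance(theta):
    # Hypothetical black-box performance with its optimum at (1, -2).
    x, y = theta
    return -((x - 1.0) ** 2 + 4.0 * (y + 2.0) ** 2)

def es_step(mean, sigma, rng, pop_size=40, n_best=10):
    # Sample a population around the current target parameter vector.
    samples = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
               for _ in range(pop_size)]
    # Rank by performance and keep the best candidates.
    best = sorted(samples, key=performance, reverse=True)[:n_best]
    # Decreasing positive weights put more emphasis on higher-ranked candidates.
    raw = [math.log(n_best + 0.5) - math.log(rank + 1) for rank in range(n_best)]
    total = sum(raw)
    weights = [r / total for r in raw]
    # New target: weighted average of the best candidates.
    return [sum(wt * s[k] for wt, s in zip(weights, best))
            for k in range(len(mean))]

rng = random.Random(0)
mean = [4.0, 3.0]
for _ in range(80):
    mean = es_step(mean, 0.3, rng)

distance = math.sqrt((mean[0] - 1.0) ** 2 + (mean[1] + 2.0) ** 2)
print(mean, distance)
```

The weighted shift of the mean toward the best samples plays the role of the metric times the force, with the sampling variance setting how far the target can move in each direction per step.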

8. Single-Vector Updates

In population methods, we track a weighted set of alternative parameter vectors over a spatially extended region of the parameter space. We often summarize learning updates by changes in the population mean, $\Delta\bar{\boldsymbol{\theta}}$. Here, I use the partial component, $\mathbf{M}\mathbf{f}$, of the FMB law to focus on methods that use only the force and metric terms.

Many common algorithms update a single local parameter vector, $\boldsymbol{\theta}$, without tracking any variant parameter values. This section summarizes a few classic single-vector update methods. The next section adds noise to enhance exploration of complex optimization surfaces, as in the commonly used stochastic gradient descent algorithm. The following section adds bias, including a momentum term that often occurs in some common machine learning algorithms, such as Adam.

For the $\mathbf{M}\mathbf{f}$ component of the FMB law, the following algorithms differ mainly in the way that they choose $\mathbf{M}$. In some cases, $\mathbf{M}$ arises from the same local extent as the gradient force calculation. In other cases, an algorithm expands the temporal or spatial extent to obtain a broader calculation of the curvature metric or uses a mirror geometry to design specific curvature attributes into the method.

8.1. Gradient Descent: Constant Euclidean Metric

The update is

$$\Delta\boldsymbol{\theta} = \eta\,\mathbf{f},$$

in which $\eta$ is the step size, and $\mathbf{f} = \nabla U$ is the gradient of the performance function with respect to the parameters, evaluated at the current parameter vector, a purely local method. The implicit metric is $\mathbf{M} = \eta\mathbf{I}$, in which the identity matrix, $\mathbf{I}$, is the Euclidean metric with no curvature. This simple method typically traces a path along the performance surface from the current location to the nearest local optimum.
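As a brief sketch of the constant Euclidean metric, the following code climbs a hypothetical quadratic performance surface whose curvature differs tenfold between its two directions. The surface, step size, and iteration count are assumptions. Because the metric is the same in every direction, one step size must serve both the sharply curved and the nearly straight direction.

```python
def grad_U(theta):
    # Gradient of the hypothetical surface U = -(5 x^2 + 0.5 y^2).
    x, y = theta
    return [-10.0 * x, -1.0 * y]

eta = 0.05            # step size; the implicit metric is eta times the identity
theta = [1.0, 1.0]

for _ in range(50):
    f = grad_U(theta)                                   # force: local gradient
    theta = [t + eta * fk for t, fk in zip(theta, f)]   # update by eta * f

print(theta)
```

After the same number of steps, the sharply curved direction has essentially converged while the straighter direction still lies well short of the optimum, which is the imbalance that an inverse-curvature metric is meant to correct.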

8.2. Newton’s Method: Exact Local Curvature

In Equation (25), we noted that using the positive definite inverse Hessian for the metric, $\mathbf{M}$, yields Newton’s method

$$\Delta\boldsymbol{\theta} = \mathbf{M}\mathbf{f},$$

in which $\mathbf{M} = -\mathbf{H}^{-1}$ if we are maximizing performance, and $\mathbf{M} = \mathbf{H}^{-1}$ with $\mathbf{f}$ reversed in sign if we are minimizing cost.

The Hessian is the matrix of second derivatives of the performance function with respect to the parameters at the current single point in the parameter space, a purely local measure of curvature. We could also include a step size multiplier, $\eta$, for any of these algorithms. However, we drop that step size term to reduce notational complexity.

The inverse Hessian metric typically improves local optimization by orienting the direction of updates into an improved gain in performance relative to a cost paid for the squared Fisher–Rao path length movement.

On the downside, the second derivatives may not exist everywhere, the Hessian may be computationally expensive to calculate, the information about second derivatives may be lacking, and this method tends to become trapped at the nearest local optimum.

In theory, using the inverse Fisher metric in place of the inverse Hessian often provides a better update [11]. The Fisher metric measures the curvature of the performance function with respect to the parameters when averaged over a spatially extended region of the parameter space.

Section 5.3, Why Inverse Curvature Is a Good Metric, discussed the many theoretical benefits of Fisher information in this context [11,14]. For example, the global Fisher metric often reduces the tendency to be trapped at a local optimum when updating the parameters. However, in practice, one often uses local estimates of curvature to avoid the extra assumptions and complex calculations required to estimate the Fisher matrix.

8.3. Quasi-Newton: Temporal Extension

These methods replace the computationally costly exact Hessian calculation with simpler updates that accumulate curvature information over time. In terms of our FMB analysis, $\mathbf{M}$ is iteratively updated to approximate the positive definite inverse Hessian, so that the temporally extended estimation of the metric becomes part of the algorithm [5].

The Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm and its variants are widely used [48]. After each step, the method compares how the gradient changed to how the parameters moved. That comparison provides information about curvature.

From that curvature information, the algorithm keeps a running update of its estimate for the inverse Hessian matrix. Thus, the method estimates the curvature metric by using only its calculation of first derivatives and parameter updates, without ever directly calculating a second derivative or inverting a matrix.
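The running estimate can be sketched with the standard BFGS formula for updating an inverse Hessian approximation, written here for the minimization of a cost function, which corresponds to the negative of performance in the text. The quadratic cost, the starting point, and the step size are hypothetical. The update uses only the parameter change and the gradient change, and the result maps the observed gradient change back to the observed parameter change.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(mat, v):
    return [dot(row, v) for row in mat]

def matmul(left, right):
    cols = list(zip(*right))
    return [[dot(row, col) for col in cols] for row in left]

def bfgs_inverse_update(B, s, y):
    """Update inverse-Hessian estimate B from parameter change s and gradient change y."""
    n = len(s)
    rho = 1.0 / dot(y, s)
    left = [[(1.0 if r == c else 0.0) - rho * s[r] * y[c] for c in range(n)]
            for r in range(n)]
    right = [[(1.0 if r == c else 0.0) - rho * y[r] * s[c] for c in range(n)]
             for r in range(n)]
    core = matmul(matmul(left, B), right)
    return [[core[r][c] + rho * s[r] * s[c] for c in range(n)] for r in range(n)]

# Hypothetical quadratic cost 0.5 * theta^T A theta with gradient A theta.
A = [[4.0, 1.0], [1.0, 1.0]]

def grad_cost(theta):
    return matvec(A, theta)

B = [[1.0, 0.0], [0.0, 1.0]]          # initial metric estimate: identity
theta_old = [1.0, 1.0]
g_old = grad_cost(theta_old)
theta_new = [t - 0.1 * d for t, d in zip(theta_old, matvec(B, g_old))]
g_new = grad_cost(theta_new)

s = [a - b for a, b in zip(theta_new, theta_old)]   # how the parameters moved
y = [a - b for a, b in zip(g_new, g_old)]           # how the gradient changed
B = bfgs_inverse_update(B, s, y)

print(B, matvec(B, y), s)
```

No second derivative is computed and no matrix is inverted. The curvature information enters only through the pair of observed changes.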

8.4. Trust Regions: Spatial Extension

Newton’s local calculation of the curvature via the inverse Hessian is fragile. The local Hessian may not exist, it may change significantly over space, or it may not be invertible to provide the inverse curvature. Trust region methods may solve some of these problems by defining a local region of analysis [39,40].

One type of trust region method spatially extends the calculation of the curvature metric in a way that matches the FMB law’s notion of a population. By combining a local force gradient with a metric that is a positive definite average of the curvature over the region, the hybrid method follows Amari’s natural gradient approach [11]. The spatial extension of the metric calculation often improves updates by providing more information about the shape of the local performance landscape.

8.5. Mirror Descent: Transformational Extent

The previous examples in this section match the $\mathbf{M}\mathbf{f}$ force–metric pattern in a simple and direct way. By contrast, the mirror descent algorithm has a more complicated geometry that does not obviously map directly to our $\mathbf{M}\mathbf{f}$ pattern [49,50]. However, the following derivations show that this algorithm does, in fact, use the same basic force–metric approach, but with a more involved method to obtain the curvature metric.

In this case, the challenge is that the curvature either cannot be calculated or is computationally too expensive to calculate. So one calculates curvature in an alternative mirror geometry obtained by transformation of the target geometry, in which the curvature has a simpler or more tractable form. Instead of improving the metric by spatial or temporal extent, one uses a transformational extent to a geometry that can be chosen for its anticipated benefits to algorithmic learning.

For example, in a Newton update, the inverse Hessian is taken with respect to the performance function, $U$. In mirror descent, one changes the geometry by choosing a strictly convex potential function, $\psi$, that has a positive definite Hessian, $\nabla^2\psi$, over the search domain. The inverse, $[\nabla^2\psi]^{-1}$, provides a consistent positive definite metric for the update, repairing any fragility of the Hessian of $U$.

Assume throughout this subsection that we are maximizing performance despite the word descent in the common name for this approach.

Allowing for variable step size, $\eta$, the generalization of the Newton update for maximization becomes

$$\Delta\boldsymbol{\theta} = \eta\,[\nabla^2\psi(\boldsymbol{\theta})]^{-1}\,\nabla U(\boldsymbol{\theta}), \tag{27}$$

in which $\psi$ is the strictly convex potential function that defines the mirror geometry. This update is a first-order approximation of the mirror descent method [49,50]. In effect, we are making a Newton-like step in an alternative mirror geometry with metric $[\nabla^2\psi]^{-1}$, then mapping that step back to our original geometry for $\boldsymbol{\theta}$.

This approach allows one to control the metric and to compensate for the geometry of the performance surface that does not have a simple or proper curvature. Because the change in mirror space is determined by the metric $\mathbf{M} = \eta\,[\nabla^2\psi]^{-1}$, we end up with our simple force–metric expression from the FMB law.

The optimal update rule in Equation (27) arises as the first-order approximation for the solution of the following optimization problem, which balances the performance gain in the target geometry against a distance penalty in the mirror geometry. The problem is

$$\boldsymbol{\theta}' = \arg\max_{\tilde{\boldsymbol{\theta}}}\left[\nabla U(\boldsymbol{\theta})^{\top}(\tilde{\boldsymbol{\theta}} - \boldsymbol{\theta}) - \frac{1}{\eta}\,D_{\psi}(\tilde{\boldsymbol{\theta}}\,\|\,\boldsymbol{\theta})\right]. \tag{28}$$

The new update vector $\boldsymbol{\theta}'$ maximizes the two bracketed terms. The first term is the first-order approximation for the total performance increase. The second term is the Bregman divergence, $D_{\psi}$, between new and old parameter vectors measured in the mirror geometry defined by transformation, $\psi$. That divergence, defined below, is an easily calculated distance moved in the mirror geometry. Thus, we are maximizing the performance gain in the target geometry minus the distance moved in the mirror geometry scaled by $1/\eta$.

In some cases, the mirror transformation depends on local information that changes in each time step, denoted $\psi_t$ to emphasize the time dependence. Here, for notational simplicity, we drop the $t$ subscript but allow such dependence.

In this update equation, the first right-hand term is the local slope of the performance gain relative to the parameter change, weighted by the amount of parameter change, yielding the total performance increase. In the second term, the Bregman divergence is

$$D_{\psi}(\tilde{\boldsymbol{\theta}}\,\|\,\boldsymbol{\theta}) = \psi(\tilde{\boldsymbol{\theta}}) - \psi(\boldsymbol{\theta}) - \nabla\psi(\boldsymbol{\theta})^{\top}(\tilde{\boldsymbol{\theta}} - \boldsymbol{\theta}).$$

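We can obtain the first-order approximation for the optimal update given in Equation (27) by the following. Start by differentiating the terms in the brackets on the right-hand side of Equation (28), evaluating at $\tilde{\boldsymbol{\theta}} = \boldsymbol{\theta}'$, and setting to zero, which yields

$$\nabla\psi(\boldsymbol{\theta}') = \nabla\psi(\boldsymbol{\theta}) + \eta\,\nabla U(\boldsymbol{\theta}), \tag{29}$$

in which $\psi$ is the mirror potential and $\eta$ is the step size. Noting that $\boldsymbol{\theta}' = \boldsymbol{\theta} + \Delta\boldsymbol{\theta}$, a first-order Taylor expansion of $\nabla\psi(\boldsymbol{\theta}')$ around $\boldsymbol{\theta}$ is

$$\nabla\psi(\boldsymbol{\theta} + \Delta\boldsymbol{\theta}) = \nabla\psi(\boldsymbol{\theta}) + \nabla^2\psi(\boldsymbol{\theta})\,\Delta\boldsymbol{\theta} + O(\|\Delta\boldsymbol{\theta}\|^2).$$

Dropping the second-order error and substituting into Equation (29) gives Equation (27) to first order in $\Delta\boldsymbol{\theta}$, noting that $\nabla^2\psi$ is positive definite and therefore invertible. Thus, even in this relatively complex case, the force–metric law is nearly exact for small updates. This method is purely local in the same sense as Newton’s algorithm, but uses a transformational extent to improve the analysis of the curvature metric.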
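The first-order agreement can be checked with a concrete mirror geometry. The sketch below assumes the negative entropy potential, for which the gradient of the potential is the elementwise logarithm and the inverse Hessian is a diagonal matrix of the parameter values. That potential, the performance function, and the step sizes are illustrative choices and are not specified in the text. The exact mirror update then multiplies each parameter by an exponential factor, and the first-order force–metric step scales the gradient by the parameter values.

```python
import math

TARGET = [2.0, 0.5]   # hypothetical optimum of the performance function

def grad_U(theta):
    # Gradient of U = -0.5 * sum((theta - TARGET)^2).
    return [tg - t for t, tg in zip(theta, TARGET)]

def mirror_exact(theta, eta):
    # Solves log(theta') = log(theta) + eta * grad U, the stationarity condition
    # for the negative entropy potential.
    g = grad_U(theta)
    return [t * math.exp(eta * gk) for t, gk in zip(theta, g)]

def mirror_first_order(theta, eta):
    # Force-metric step: eta * diag(theta) * grad U, using the inverse Hessian
    # of the negative entropy potential as the metric.
    g = grad_U(theta)
    return [t + eta * t * gk for t, gk in zip(theta, g)]

def gap(theta, eta):
    exact = mirror_exact(theta, eta)
    approx = mirror_first_order(theta, eta)
    return max(abs(a - b) for a, b in zip(exact, approx))

theta = [1.0, 1.0]
gap_large = gap(theta, 0.1)
gap_small = gap(theta, 0.01)
print(gap_large, gap_small)
```

Shrinking the step size tenfold shrinks the gap roughly a hundredfold, consistent with an error that is second order in the size of the update.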

9. Stochastic Exploration

All gradient algorithms suffer from the tendency to become stuck at a local optimum. This section briefly summarizes two methods that use noise to escape local optima and explore the performance surface more broadly.

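These methods match the FMB law, $\Delta\boldsymbol{\theta} = \mathbf{M}\mathbf{f} + \mathbf{b} + \boldsymbol{\xi}$, with no bias, $\mathbf{b} = \mathbf{0}$, and noise entering via the stochastic term, $\boldsymbol{\xi}$. The deterministic component of these methods, $\mathbf{M}\mathbf{f}$, is a single-value update driven by the gradient force, lacking a population.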

During a search trajectory, when the magnitude of this deterministic gradient component is greater than the magnitude of the noise, the deterministic single-value update process dominates. When the gradient flattens or the step size weighting for the gradient shrinks, the noise dominates the updates.

In the noise-dominated regime, the temporal trajectory samples a population of parameter locations around the path that the deterministic trajectory would have traced. That temporal wandering changes the search from single-value updates to a quasi-Bayesian population-based method, in which the population distribution is shaped by the covariance of the noise process [16, 51].

Technically speaking, as the gradient-to-noise ratio declines, the temporal stochastic sampling effectively becomes an ergodic spatially extended population. Thus, these hybrid methods exploit the simplicity and efficiency of single-value updates when the gradient is strongly informative and exploit the broader exploratory benefits of populations when the step-size weighted gradient is relatively weak.

9.1. Stochastic Langevin Search

This algorithm combines a deterministic gradient step and a noise fluctuation [51]. A simple stochastic differential equation describes the process as follows:

The parameter vector update,

, equals the gradient of the performance surface,

, with respect to the parameters,

, plus a Brownian motion vector,

, weighted by the square root of the diffusion coefficients in the matrix,

, that determines the scale of noise.

In practice, one typically updates by discrete steps. For example, at time t, the update may be

here,

adjusts the step size.

The metric

scales motion in each direction, typically obtained by estimating the positive definite inverse curvature, such as the inverse of the negative Hessian matrix. The vector

is Gaussian noise with mean zero and covariance given by the identity matrix. The overall noise,

, has a covariance matrix of

. Alternative discrete-step approximations for Equation (30) may be used to improve accuracy or efficiency.

This update matches our FMB standard for learning algorithms, the gradient force multiplied by the inverse curvature metric. The noise term adds exploratory fluctuations in proportion to the inverse curvature metric, .

When the gradient is relatively large compared to the noise, the deterministic force dominates. When the slope is flat, noise dominates. The weighting of noise by inverse curvature means that fluctuations explore more widely in directions with low gradient and small curvature. Such directions are the most likely to be associated with trend reversals, providing an opportunity to escape a region in which the gradient pushes in the wrong direction.
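The discrete Langevin step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the article's exact update: the function name `langevin_step`, the step size `eps`, the factor `sqrt(2 * eps)` on the noise, and the toy quadratic performance surface are all choices made here for the example. The noise is shaped by a Cholesky factor of the metric, so its covariance is proportional to the inverse curvature.

```python
import numpy as np

def langevin_step(theta, grad, M, eps, z):
    """One discrete step: metric-scaled gradient plus metric-shaped noise.

    Assumed form: theta + eps * M @ grad + sqrt(2 * eps) * L @ z,
    with L @ L.T == M, so the noise covariance is 2 * eps * M
    when z is standard Gaussian.
    """
    L = np.linalg.cholesky(M)              # matrix square root of the metric
    drift = eps * M @ grad                 # deterministic force-metric part
    noise = np.sqrt(2.0 * eps) * L @ z     # stochastic exploration part
    return theta + drift + noise

# Toy concave performance surface F(theta) = -0.5 * theta^T A theta.
A = np.array([[4.0, 0.0], [0.0, 0.25]])    # negative Hessian (curvature)
M = np.linalg.inv(A)                       # inverse-curvature metric
grad_F = lambda th: -A @ th

rng = np.random.default_rng(0)
theta = np.array([1.0, 1.0])
eps = 0.05
path = [theta]
for _ in range(5000):
    theta = langevin_step(theta, grad_F(theta), M, eps, rng.standard_normal(2))
    path.append(theta)
path = np.array(path)

# After a burn-in, the trajectory wanders more widely along the
# low-curvature direction (second coordinate) than the high-curvature one.
spread = path[1000:].std(axis=0)
```

In this toy case the temporal trajectory behaves like a sampled population whose spread follows the inverse curvature, wider in the flat direction and narrower in the steep direction.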

The relative dominance of the deterministic gradient component compared with the noise component can be adjusted by changing

, the step-size weighting of the gradient term. As the

-weighted deterministic component declines, the method increasingly shifts from single-value updates to population-based updates. Here, dominance by temporal noise creates a similar effect to a spatially extended population [51], as described in the introduction to this section.

In theory, methods such as stochastic Langevin search provide an excellent balance between exploiting gradient information and exploring by noise. However, the algorithm can be very costly computationally for high dimensions and large data sets. Each step uses all of the data to calculate the gradient with respect to the high-dimensional parameter vector. The size of the inverse curvature matrix is the square of the parameter vector length, which can be very large. Stochastic gradient descent provides similar benefits with lower computational cost.

9.2. Stochastic Gradient Descent

In data-based machine learning methods, stochastic sampling of the data creates exploratory noise, which often improves the learning algorithm’s search efficacy. To explain, I briefly review the steps used by common gradient algorithms in machine learning.

The data have N input–output observations. The model takes an input and predicts the output. The parameter vector influences the model’s predictions.

For each observed input, the model makes a prediction that is compared with the observed output. A function transforms the divergence between the model prediction and the observed output into a performance value. One typically averages the performance value over multiple observations. The gradient vector is the derivative of the average performance with respect to the parameter vector.

In machine learning, the average performance is typically called the loss for that batch of data. In this article, we often use the negative loss as the positive performance value. Climbing the performance scale means descending the loss scale.

If one uses all N observations to calculate the gradient and then updates the parameters using that gradient, the update method is often called gradient descent.

This deterministic gradient descent method will often become trapped in a local minimum, failing to find parameters that produce better performance.

Instead of using all of the data to calculate the gradient, one can repeat the process using many small random samples of the data. Data mini-batches lose gradient precision but gain exploratory noise [6, 15, 16, 17].

The stochasticity from random sampling can help to escape local optima and find better parameter combinations [52]. Thus, the method is often called stochastic gradient descent. In this process, a parameter update is

in which

is a chosen step size weighting, and the hat over the gradient,

, denotes an estimated value from the sample batch of data.

The estimated gradient is implicitly the true gradient plus sampling noise. Thus, in theory,

in which

is the true gradient, and

is the sampling noise of the gradient estimate. The variance of the sampling noise scales inversely with the batch sample size. Choosing a good batch size plays an important role in the optimization process [53, 54].

The deterministic component of parameter updates dominates when the noise is small relative to the gradient signal. The stochastic component dominates when the noise is large relative to the gradient signal. Larger batch sizes reduce the scale of noise relative to the gradient signal.
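The relation between batch size and gradient noise can be checked numerically. The sketch below assumes a simple linear model with performance defined as the negative mean squared error. The data, the function names `perf_gradient` and `noise_variance`, and the batch sizes are hypothetical choices for illustration. It compares mini-batch gradient estimates with the full-data gradient and measures how the noise variance changes with batch size.

```python
import numpy as np

rng = np.random.default_rng(1)
N, d = 2000, 3
X = rng.standard_normal((N, d))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.5 * rng.standard_normal(N)
theta = np.zeros(d)

def perf_gradient(theta, Xb, yb):
    """Gradient of performance (negative mean squared error) over a batch."""
    residual = yb - Xb @ theta
    return 2.0 * Xb.T @ residual / len(yb)

true_grad = perf_gradient(theta, X, y)      # gradient from all N observations

def noise_variance(batch_size, draws=2000):
    """Mean squared deviation of mini-batch gradients from the full gradient."""
    sq_dev = 0.0
    for _ in range(draws):
        idx = rng.integers(0, N, size=batch_size)   # sample with replacement
        est = perf_gradient(theta, X[idx], y[idx])  # estimated gradient
        sq_dev += np.sum((est - true_grad) ** 2)    # squared sampling noise
    return sq_dev / draws

var_small = noise_variance(10)
var_large = noise_variance(100)

# If noise variance scales inversely with batch size, this ratio is near 10.
ratio = var_small / var_large
```

Sampling with replacement is used here so that the inverse scaling with batch size holds without a finite-population correction.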

As noted in the prior subsection, noisy exploration provides the greatest benefit when both the gradient and the curvature are small. In that case, noise provides the opportunity to jump across a near-zero or mildly disadvantageous gradient to a new base from which the gradient leads to improving performance. The more a region curves in a disadvantageous direction, the more noise one needs to jump across it.

As the batch size declines, the method increasingly shifts from deterministic single-value updates to stochastically sampled population-based updates. Here, dominance by temporal noise creates a similar effect to a spatially extended population [16, 55], as described in the introduction to this section.

Many algorithms build on stochastic gradient descent by adding inverse-curvature metric scaling and bias. The next section provides examples.

10. Bias in Modern Optimization

Several widely used machine learning methods include a bias term. The bias alters parameters in addition to the direct force of the performance gradient.

For example, a moving average of past parameter changes describes the update momentum. That momentum includes temporal information about the shape of the performance surface that goes beyond the information in the local gradient and curvature.

This section provides examples of bias in various machine learning algorithms. Before turning to those examples, I briefly review the role of bias within the Price equation and the FMB law.

10.1. Brief Review of Bias

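The Price equation’s full FMB law from Equation (1) is

$$\Delta\boldsymbol{\theta} = \mathbf{M}\mathbf{f} + \mathbf{b} + \boldsymbol{\xi}.$$

The prior examples focused on the $\mathbf{M}\mathbf{f}$ direct force and $\boldsymbol{\xi}$ noise terms. This section considers the bias term of Equation (2), repeated here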

Bias describes deterministic changes in parameter values,

, that are not caused by the directly acting forces,

. Here, we take

as the regression or gradient of performance with respect to the parameters.

Denote these bias changes as . Then describes the bias that is uncorrelated with the performance. The matrix is the covariance of the bias vector. The vector is the regression of performance on the bias vector, which captures correlations between performance and bias.

For single-value updates to the location vector, we use . The components of bias lose their statistical meaning. Instead, is a metric for the space of bias vectors. The slope is the gradient of performance with respect to the bias vector. The term adds further bias.

To focus on bias in the FMB law, this section drops noise terms. In effect, assume very large batch sizes for single-value updates or very large population sizes for population mean updates. The following examples decompose particular update algorithms into their FMB components.

10.2. Prior Bias: Parameter Regularization

Consider the single-value parameter update

The metric is

. The force is

. The bias terms are

and

.

The gradient imposes a force that pushes parameters to improve performance. The bias term imposes a static force that pulls all parameters toward a prior value, in this case, the origin.

Those parameters that only weakly improve performance end up close to the prior. In practice, one often prunes the parameter vector by dropping all parameters that end up near the prior, a process called regularization [15].
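The balance between gradient force and prior bias can be illustrated with a small sketch. The update form used here, a gradient step with metric `eps * I` plus a bias `-lam * theta` that pulls toward the origin, is an assumption for illustration, as are the names `regularized_step`, `eps`, `lam`, and the toy quadratic performance surface. One parameter strongly affects performance and the other only weakly.

```python
import numpy as np

def regularized_step(theta, grad, eps, lam):
    """Force-metric term plus a bias that pulls toward a prior at the origin."""
    force_metric = eps * grad      # metric eps * I applied to the gradient force
    bias = -lam * theta            # static pull toward zero (assumed form)
    return theta + force_metric + bias

# Toy performance F(theta) = -0.5 * sum(k * (theta - target)**2).
target = np.array([2.0, 2.0])
k = np.array([1.0, 0.01])          # second parameter only weakly affects F
grad_F = lambda th: -k * (th - target)

theta = np.zeros(2)
eps, lam = 0.1, 0.01
for _ in range(20000):
    theta = regularized_step(theta, grad_F(theta), eps, lam)

# At equilibrium the force and bias balance:
# eps * k * (target - theta) = lam * theta.
fixed_point = eps * k * target / (eps * k + lam)
```

In this example the strongly selected parameter settles near its performance optimum, while the weakly selected parameter stays close to the prior at zero, which is the pattern that pruning exploits.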

10.3. Momentum Bias: Polyak

Abbreviate the gradient at time t as

. Then we can calculate the exponential moving average of the gradient as

A simple parameter update that includes history is [7]

On the right side, the first term is a standard gradient term for the update,

, with a constant metric

. The second term is the bias caused by the momentum from past updates,

. In this case, the bias is not associated with performance,

.

The idea is that a strong historical tendency to move in a particular direction provides information about the performance surface that supplements the information in the local gradient at the current time. Thus, the algorithm uses the momentum from past updates to push the current update in the direction that has been favored in the past.

Roughly speaking, the method uses temporal extent to gain information about the shape of the performance surface curvature rather than using the spatial extent of populations. However, in this case, the curvature information is used to bias the update rather than to calculate the metric that rescales the local gradient.
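A momentum update of this kind can be sketched by splitting one step into a gradient term and a bias term. The particular split below is an assumption made for illustration: the step equals `eps` times the exponential moving average of the gradient, written as a constant metric `eps * (1 - beta)` times the current gradient plus a bias `eps * beta` times the prior average. The names `polyak_step`, `eps`, `beta`, and the toy ridge-shaped surface are hypothetical.

```python
import numpy as np

def polyak_step(theta, m_prev, grad, eps, beta):
    """Split the step eps * m_new into a gradient term and a momentum bias."""
    m_new = beta * m_prev + (1.0 - beta) * grad     # moving average of gradients
    force_metric = eps * (1.0 - beta) * grad        # constant metric times force
    bias = eps * beta * m_prev                      # momentum from past updates
    return theta + force_metric + bias, m_new

# Toy performance F(theta) = -0.5 * sum(k * theta**2), a narrow ridge
# with one steep and one shallow direction.
k = np.array([10.0, 0.1])
grad_F = lambda th: -k * th

theta, m = np.array([1.0, 1.0]), np.zeros(2)
eps, beta = 0.15, 0.9
for _ in range(300):
    theta, m = polyak_step(theta, m, grad_F(theta), eps, beta)
```

The bias term depends only on past gradients, not on the current local slope, which is the sense in which it carries temporal information about the surface.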

10.4. Momentum Bias and Metric: Adam

The widely used Adam algorithm adds metric scaling to the basic momentum update [8]. The metric arises from the exponential moving average of the squared gradient

The match to FMB follows by

for small constant c in

. The update follows as

Note in the third line that the bracketed quantity on the right is the Polyak momentum update from the prior subsection. Thus, Adam is proportional to the Polyak update weighted by the metric

.

Here, the metric is based on the exponential moving average of the squared gradient, . Instead of inverse curvature, this metric is an inverse combination of the magnitude of the gradient and the noise in the gradient estimate when using small random batch samples of the data. Thus, the metric reduces step size in directions that have some combination of a large slope or high noise.

In summary, the metric , derived from the history of squared gradients, creates an adaptive learning rate tuned to the geometry of each direction, and the momentum bias provides a temporally smoothed, directed force. Overall, the temporal extent provides an efficient method to estimate spatial aspects of geometry.
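The decomposition of an Adam-style step into a metric and a momentum update can be sketched as follows. This is a simplified illustration and not the published algorithm in full: the bias-correction factors of Adam are omitted, the update is written as ascent on performance, and the names `adam_step`, `eps`, `beta1`, `beta2`, and `c` are assumptions chosen here for the example.

```python
import numpy as np

def adam_step(theta, m_prev, v_prev, grad,
              eps=0.01, beta1=0.9, beta2=0.999, c=1e-8):
    """Adam-style step written as metric * (gradient term + momentum bias).

    Simplification: the bias-correction factors of the published
    algorithm are omitted.
    """
    m_new = beta1 * m_prev + (1.0 - beta1) * grad        # average gradient
    v_new = beta2 * v_prev + (1.0 - beta2) * grad ** 2   # average squared gradient
    metric = eps / (np.sqrt(v_new) + c)                  # diagonal adaptive metric
    momentum = (1.0 - beta1) * grad + beta1 * m_prev     # same value as m_new
    return theta + metric * momentum, m_new, v_new

# One step from a zero history with a steep and a shallow gradient direction.
grad = np.array([-10.0, -0.1])
theta0 = np.array([1.0, 1.0])
theta1, m1, v1 = adam_step(theta0, np.zeros(2), np.zeros(2), grad)

# The metric rescales both directions to a similar step magnitude,
# even though the raw gradients differ by a factor of 100.
first_step = theta1 - theta0
```

In this sketch the two coordinates move by nearly the same amount on the first step, showing how the squared-gradient metric reduces the step in directions with a large slope.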

11. Gaussian Processes and Kalman Filters

This section returns to population-based algorithms in relation to force and metric. I first introduce Gaussian processes, a population-based Bayesian method that weights alternative functions by how well they describe data rather than weighting alternative parameter vectors. The Bayesian weighting of alternatives provides a natural measure of uncertainty [56].