Non-Uniform Entropy-Constrained L∞ Quantization for Sparse and Irregular Sources

Abstract

1. Introduction

- We propose an iterative scheme for entropy-constrained scalar L∞-oriented non-uniform quantizers that is applicable to sparse (discontinuous) input distributions.

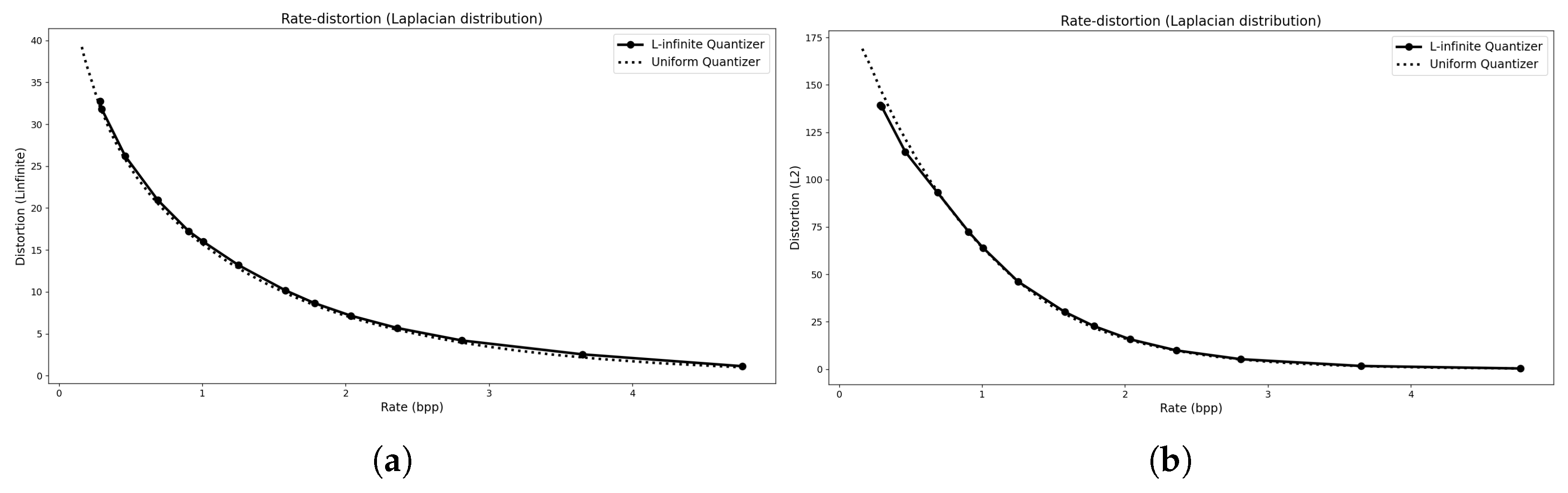

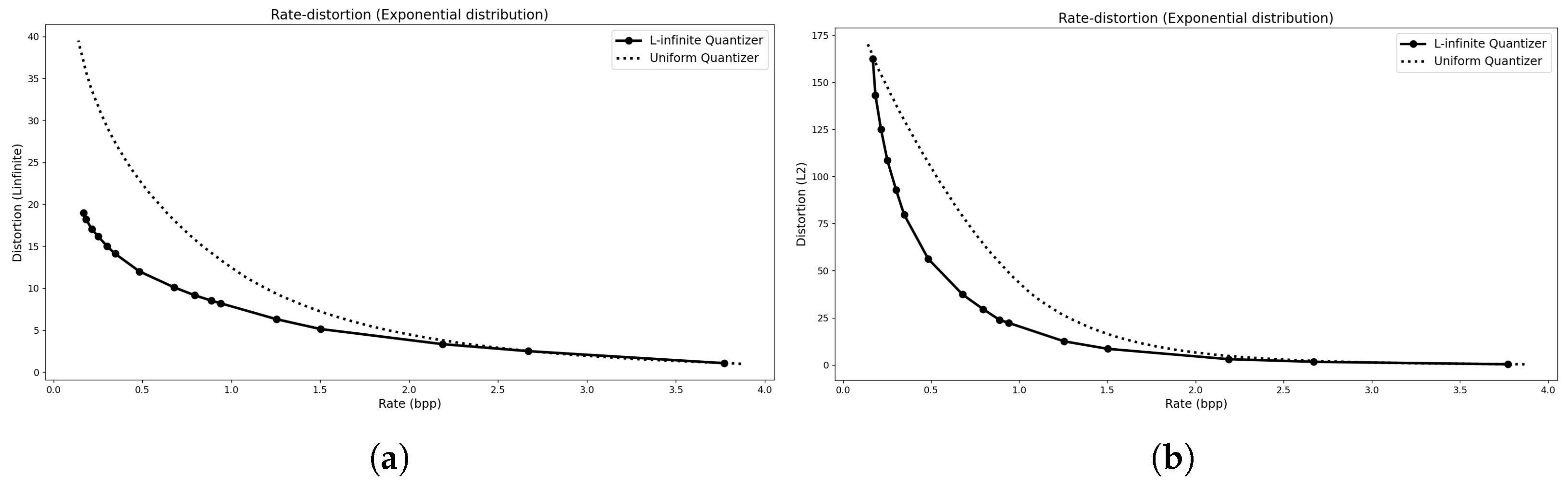

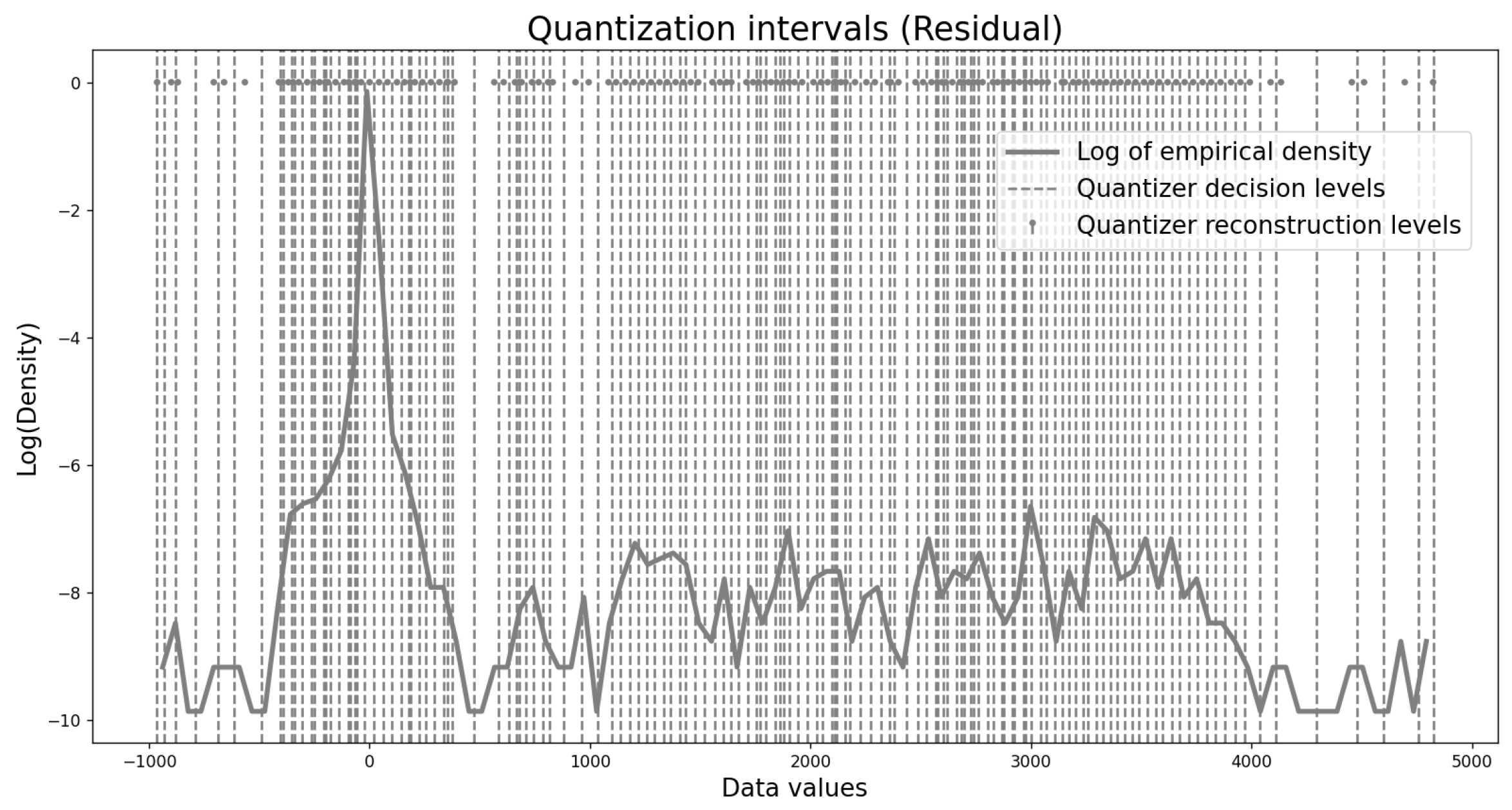

- We demonstrate that the algorithm converges to uniform designs for smooth symmetric sources commonly used to model residuals and yields non-uniform quantizers for sparse or irregular distributions.

- We embed the proposed quantization scheme into a residual-based near-lossless depth video codec and show that it consistently outperforms state-of-the-art methods such as JPEG-LS and CALIC.
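The contrast between uniform and non-uniform designs under an L∞ bound can be illustrated with a minimal sketch. It is not the iterative algorithm proposed in this paper: the non-uniform quantizer below is built with a simple greedy interval cover, swapped in purely for illustration, and the source support, the flat probability mass function, and the names `uniform_quantize`, `greedy_cover`, and `output_entropy` are all hypothetical. The uniform quantizer uses the standard near-lossless bin width of 2δ + 1 for integer inputs.

```python
import math
from collections import defaultdict


def uniform_quantize(x, delta):
    """Uniform near-lossless quantizer for integers (bin width 2*delta + 1)."""
    step = 2 * delta + 1
    sign = 1 if x >= 0 else -1
    return sign * ((abs(x) + delta) // step) * step


def greedy_cover(support, delta):
    """Greedy interval cover of a sparse support (illustrative assumption,
    not the paper's design): each bin starts at the smallest uncovered
    value and absorbs every value within 2*delta of it."""
    values = sorted(support)
    mapping = {}
    i = 0
    while i < len(values):
        lo = values[i]
        j = i
        while j + 1 < len(values) and values[j + 1] - lo <= 2 * delta:
            j += 1
        hi = values[j]
        recon = (lo + hi) // 2  # midpoint keeps the error within delta
        for v in values[i:j + 1]:
            mapping[v] = recon
        i = j + 1
    return mapping


def output_entropy(pmf, quantize):
    """Entropy (bits/sample) of the quantizer output for a given input pmf."""
    out = defaultdict(float)
    for x, p in pmf.items():
        out[quantize(x)] += p
    return -sum(p * math.log2(p) for p in out.values() if p > 0)


# Hypothetical sparse source: three clusters separated by empty gaps.
support = [0, 1, 2, 10, 11, 12, 13, 14, 40, 41]
pmf = {x: 1 / len(support) for x in support}
delta = 2

nonuniform = greedy_cover(support, delta)
h_uniform = output_entropy(pmf, lambda x: uniform_quantize(x, delta))
h_nonuniform = output_entropy(pmf, nonuniform.__getitem__)
max_err_uniform = max(abs(x - uniform_quantize(x, delta)) for x in support)
max_err_nonuniform = max(abs(x - nonuniform[x]) for x in support)

print(f"uniform:     H = {h_uniform:.3f} bits, max error = {max_err_uniform}")
print(f"non-uniform: H = {h_nonuniform:.3f} bits, max error = {max_err_nonuniform}")
```

On this toy support both quantizers respect the same maximum error δ, but the uniform grid splits the middle cluster across two bins while the adapted partition covers it with one, which lowers the output entropy. An entropy-constrained design would additionally weigh bin probabilities, which this sketch does not attempt.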

2. Related Work

2.1. Optimal Quantizer Design for L2 and L∞ Distortion

2.2. Near-Lossless L∞-Oriented Compression Schemes

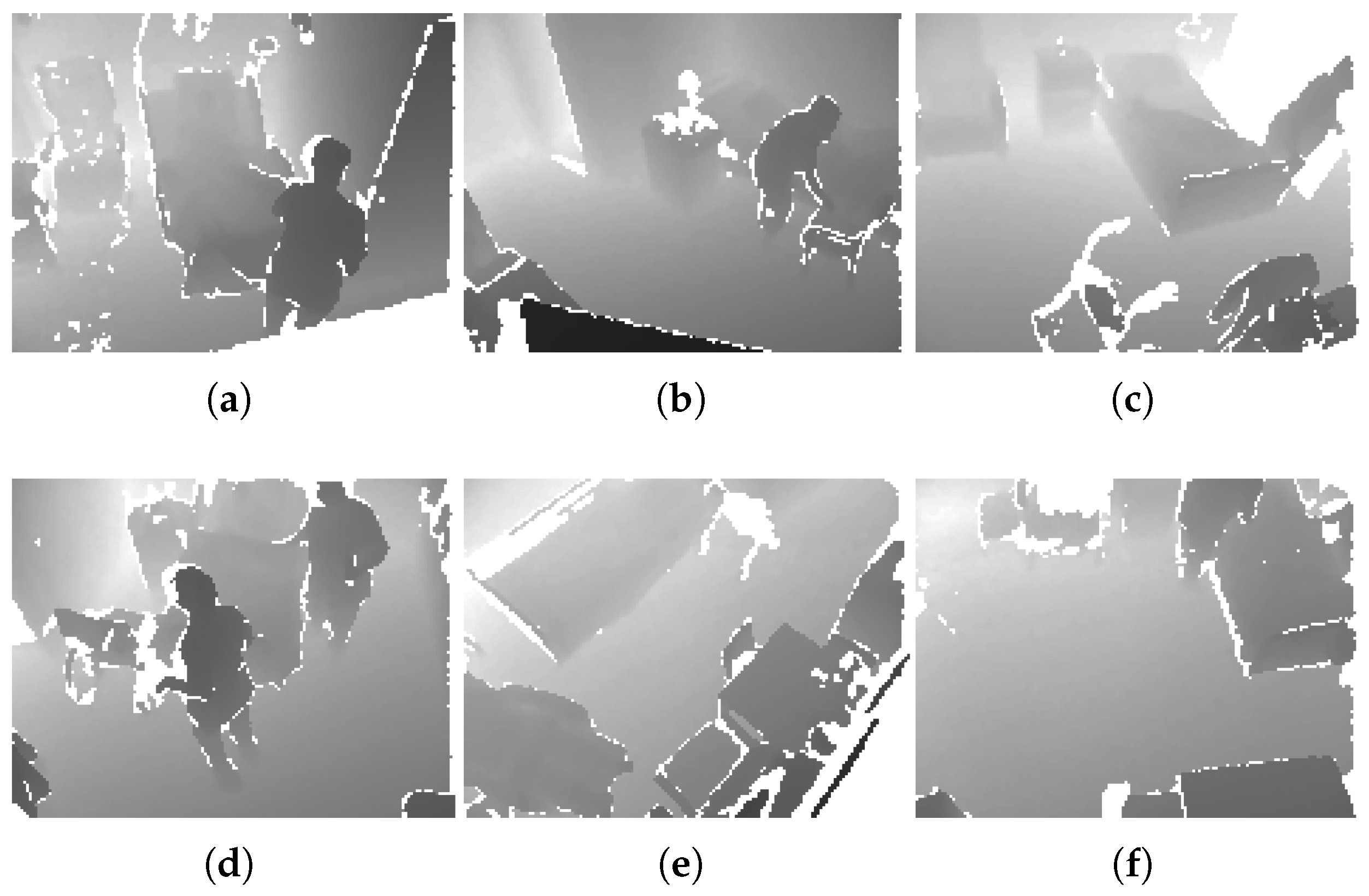

2.3. Near-Lossless Compression of Depth Maps

3. Materials and Methods

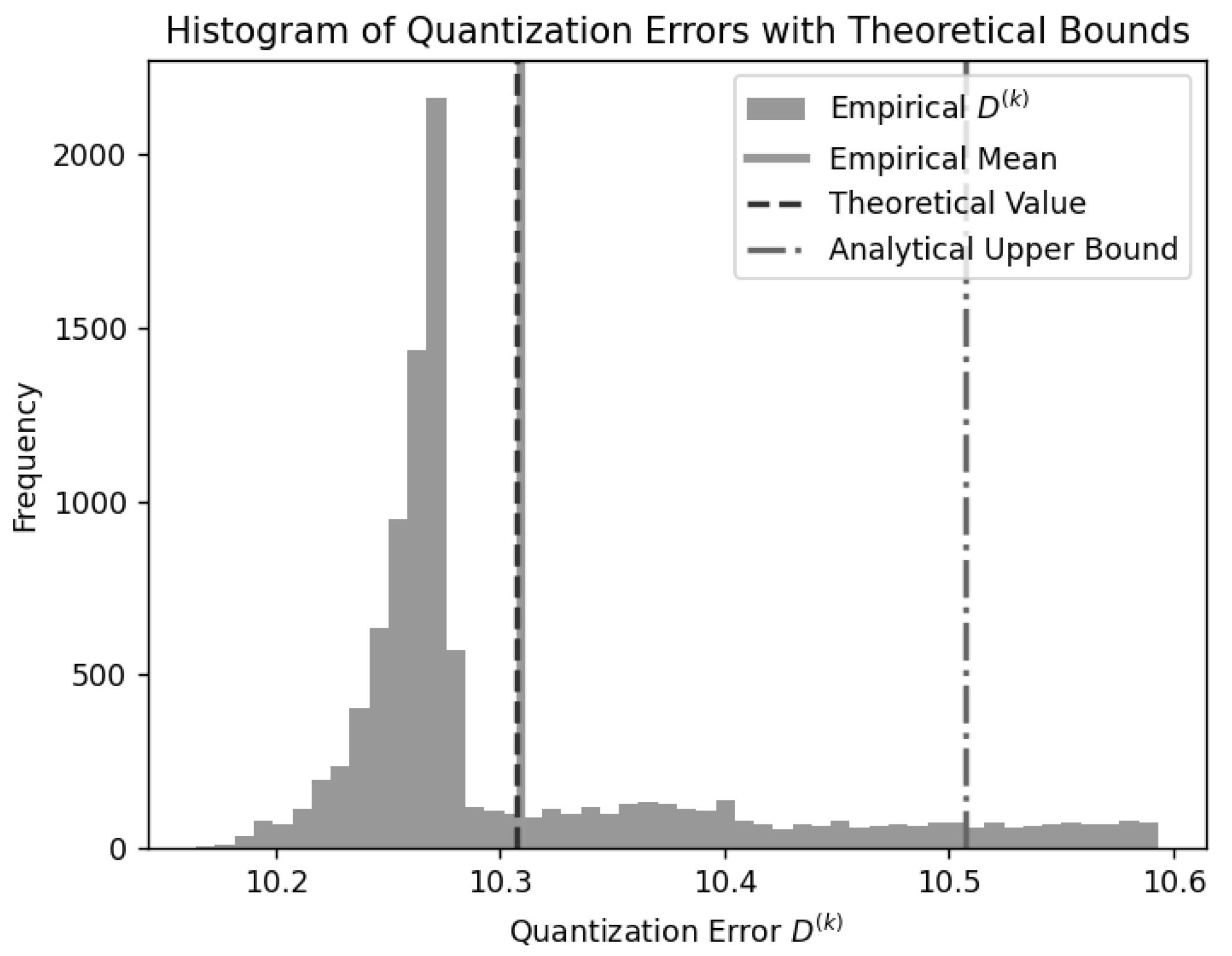

3.1. A Differentiable Approximation of the Quantization Error

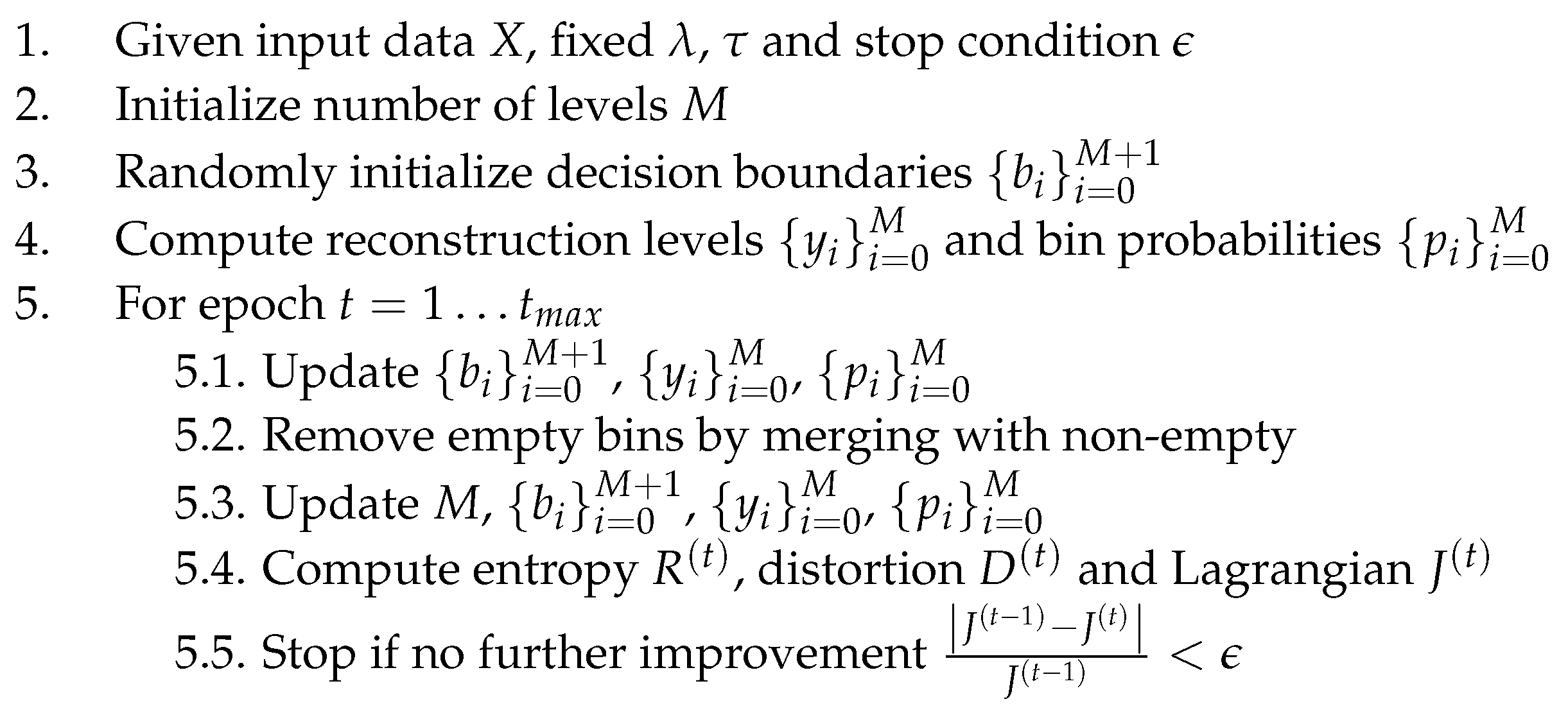

3.2. An Iterative Quantizer Design Algorithm

4. Experimental Results

4.1. Continuous and Discrete Parametric Distributions

4.2. Sparse Source Distributions

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Proof and Derivation Details


| Laplacian | | | | | TSGD | | | | | Exponential | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.29 | 32.72 | 99 | 4 | 35.1 | 0.11 | 41.00 | 91 | 3 | 45.9 | 0.17 | 19.00 | 49 | 3 | 19.0 |
| 0.30 | 31.84 | 99 | 5 | 34.3 | 0.12 | 40.00 | 91 | 3 | 43.9 | 0.18 | 18.19 | 49 | 3 | 18.2 |
| 0.46 | 26.19 | 99 | 6 | 27.8 | 0.14 | 39.00 | 91 | 3 | 42.9 | 0.22 | 17.04 | 49 | 4 | 17.1 |
| 0.69 | 20.97 | 99 | 7 | 21.2 | 0.34 | 28.00 | 91 | 5 | 30.6 | 0.25 | 16.17 | 49 | 4 | 15.9 |
| 0.91 | 17.24 | 99 | 9 | 17.1 | 0.56 | 22.00 | 91 | 6 | 23.5 | 0.30 | 15.00 | 49 | 4 | 14.7 |
| 1.01 | 15.98 | 99 | 8 | 15.5 | 0.70 | 19.00 | 91 | 7 | 20.4 | 0.35 | 14.11 | 49 | 4 | 13.6 |
| 1.25 | 13.19 | 99 | 10 | 12.2 | 1.35 | 11.00 | 91 | 10 | 11.2 | 0.48 | 12.01 | 49 | 5 | 11.2 |
| 1.58 | 10.20 | 99 | 12 | 9.0 | 1.44 | 10.00 | 91 | 10 | 10.2 | 0.68 | 10.11 | 49 | 6 | 8.9 |
| 1.78 | 8.64 | 99 | 15 | 7.3 | 1.57 | 9.00 | 91 | 10 | 9.2 | 0.79 | 9.16 | 49 | 7 | 7.8 |
| 2.04 | 7.16 | 99 | 18 | 5.7 | 1.87 | 7.00 | 91 | 14 | 7.1 | 0.89 | 8.54 | 49 | 7 | 7.0 |
| 2.36 | 5.69 | 99 | 22 | 4.1 | 2.36 | 5.00 | 91 | 21 | 4.1 | 0.94 | 8.21 | 49 | 7 | 6.6 |
| 2.81 | 4.21 | 99 | 31 | 2.4 | 3.08 | 3.00 | 91 | 34 | 2.0 | 1.25 | 6.31 | 49 | 10 | 4.7 |
| 3.65 | 2.55 | 99 | 50 | 0.8 | 4.68 | 1.00 | 91 | 86 | 1.0 | 1.50 | 5.14 | 49 | 12 | 3.5 |
| 4.77 | 1.14 | 99 | 88 | 0.5 | | | | | | 2.19 | 3.34 | 49 | 20 | 1.6 |
| | | | | | | | | | | 2.67 | 2.51 | 49 | 25 | 0.8 |
| | | | | | | | | | | 3.77 | 1.07 | 49 | 46 | 0.5 |

| Data | | Codec w/Non-Unif. Quant. | Codec w/pw-Unif. Quant. | Codec w/Unif. Quant. |
|---|---|---|---|---|
| S1 | 1 | 2.819 | 2.835 | 3.685 |
| | 10 | 2.287 | 2.334 | 2.639 |
| | 20 | 1.965 | 2.062 | 2.216 |
| | 30 | 1.610 | 1.665 | 1.769 |
| S2 | 1 | 2.335 | 2.386 | 2.968 |
| | 10 | 1.792 | 1.794 | 2.043 |
| | 20 | 1.505 | 1.632 | 1.807 |
| | 30 | 1.278 | 1.370 | 1.491 |
| S3 | 1 | 2.084 | 2.093 | 2.608 |
| | 10 | 1.611 | 1.634 | 1.818 |
| | 20 | 1.265 | 1.419 | 1.538 |
| | 30 | 1.053 | 1.092 | 1.152 |
| S4 | 1 | 2.584 | 2.616 | 3.311 |
| | 10 | 2.018 | 2.023 | 2.280 |
| | 20 | 1.608 | 1.796 | 1.963 |
| | 30 | 1.318 | 1.257 | 1.343 |
| S5 | 1 | 2.614 | 2.635 | 3.248 |
| | 10 | 1.989 | 2.023 | 2.241 |
| | 20 | 1.648 | 1.688 | 1.801 |
| | 30 | 1.316 | 1.374 | 1.446 |
| S6 | 1 | 2.663 | 2.688 | 3.330 |
| | 10 | 2.039 | 2.093 | 2.322 |
| | 20 | 1.707 | 1.800 | 1.937 |
| | 30 | 1.422 | 1.408 | 1.506 |

| Data | | JPEG-LS Rate | JPEG-LS PSNR | CALIC Rate | CALIC PSNR | Codec w/Non-Unif. Rate | Codec w/Non-Unif. PSNR | Codec w/pw-Unif. Rate | Codec w/pw-Unif. PSNR | Codec w/Unif. Rate | Codec w/Unif. PSNR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 1 | 7.119 | 99.99 | 4.712 | 100.27 | 3.395 | 120.43 | 3.471 | 119.62 | 4.135 | 119.62 |
| | 10 | 4.826 | 65.05 | 3.142 | 65.94 | 2.833 | 61.49 | 2.877 | 82.20 | 3.088 | 82.20 |
| | 20 | 3.990 | 53.80 | 2.593 | 54.93 | 2.497 | 57.26 | 2.591 | 70.43 | 2.666 | 70.43 |
| | 30 | 3.459 | 47.26 | 3.332 | 48.42 | 2.128 | 49.10 | 2.182 | 61.55 | 2.219 | 61.55 |
| S2 | 1 | 6.302 | 99.97 | 4.611 | 100.16 | 2.875 | 120.47 | 2.975 | 119.11 | 3.389 | 119.11 |
| | 10 | 3.994 | 65.02 | 3.089 | 65.84 | 2.300 | 71.42 | 2.297 | 84.77 | 2.464 | 84.77 |
| | 20 | 3.215 | 53.13 | 2.529 | 54.44 | 2.001 | 53.13 | 2.120 | 73.89 | 2.228 | 73.89 |
| | 30 | 2.774 | 46.76 | 2.240 | 48.00 | 1.764 | 47.16 | 1.849 | 62.85 | 1.913 | 62.85 |
| S3 | 1 | 5.540 | 100.11 | 3.919 | 101.19 | 2.291 | 122.33 | 2.356 | 120.55 | 2.709 | 120.55 |
| | 10 | 3.653 | 65.41 | 2.564 | 66.73 | 1.794 | 59.94 | 1.815 | 84.47 | 1.920 | 84.47 |
| | 20 | 2.800 | 53.80 | 2.091 | 56.00 | 1.435 | 51.55 | 1.587 | 73.82 | 1.640 | 73.82 |
| | 30 | 2.301 | 47.19 | 1.855 | 49.12 | 1.212 | 49.85 | 1.248 | 61.80 | 1.254 | 61.80 |
| S4 | 1 | 6.320 | 99.96 | 4.192 | 100.05 | 2.975 | 119.91 | 3.070 | 119.17 | 3.587 | 119.17 |
| | 10 | 3.978 | 65.00 | 2.791 | 65.50 | 2.377 | 64.82 | 2.381 | 82.71 | 2.556 | 82.71 |
| | 20 | 3.300 | 53.65 | 2.270 | 54.29 | 1.954 | 55.34 | 2.140 | 68.75 | 2.238 | 68.75 |
| | 30 | 2.876 | 47.05 | 2.026 | 47.79 | 1.653 | 49.20 | 1.588 | 58.93 | 1.618 | 58.93 |
| S5 | 1 | 6.722 | 100.05 | 4.265 | 100.79 | 2.782 | 120.57 | 2.860 | 119.30 | 3.298 | 119.30 |
| | 10 | 4.655 | 65.38 | 2.872 | 66.06 | 2.130 | 64.61 | 2.160 | 86.31 | 2.291 | 86.31 |
| | 20 | 3.892 | 53.78 | 2.364 | 55.00 | 1.775 | 57.87 | 1.811 | 71.12 | 1.851 | 71.12 |
| | 30 | 3.360 | 47.08 | 2.111 | 48.59 | 1.430 | 52.23 | 1.484 | 61.45 | 1.496 | 61.45 |
| S6 | 1 | 5.841 | 100.05 | 3.932 | 100.33 | 3.308 | 118.97 | 3.425 | 117.52 | 3.832 | 117.52 |
| | 10 | 3.764 | 65.30 | 2.592 | 65.94 | 2.631 | 60.59 | 2.687 | 82.59 | 2.825 | 82.59 |
| | 20 | 2.978 | 53.57 | 2.089 | 54.37 | 2.281 | 56.26 | 2.374 | 68.23 | 2.439 | 68.23 |
| | 30 | 2.500 | 46.91 | 1.860 | 48.09 | 1.983 | 49.08 | 1.967 | 59.78 | 2.008 | 59.78 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alecu, A.-A.; Tahouri, M.A.; Munteanu, A.; Păvăloiu, B. Non-Uniform Entropy-Constrained L∞ Quantization for Sparse and Irregular Sources. Entropy 2025, 27, 1126. https://doi.org/10.3390/e27111126