1. Introduction

Accurate vehicle trajectory prediction is a cornerstone of the Internet of Vehicles (IoV), enabling key applications such as intelligent route planning, traffic flow optimization, and autonomous driving [1]. By leveraging GPS data, trajectory prediction aims to forecast future vehicle movements in complex and dynamic traffic conditions. Nevertheless, the effectiveness of conventional models is often constrained by challenges inherent in real-world GPS data and its deployment, including temporally correlated (colored) noise, long-term dependencies across time horizons, and the stringent requirements of real-time online prediction. These limitations underscore the need for more robust and adaptable modeling frameworks.

The IoV integrates vehicles, infrastructure, and cloud services, and trajectory prediction within it must address the variability and uncertainty of driving behaviors [2]. Among these challenges, colored noise, whose samples are correlated over time rather than independent (e.g., pink or brown noise), makes pattern recognition more difficult. Additionally, GPS data are inherently sequential and reflect long-term dependencies arising from road structure, traffic flow, and driver intent. Meeting the real-time requirements of IoV further necessitates models that are both efficient and resilient to fluctuating data quality.

Classical tracking methods, such as the Kalman filter [3], assume white Gaussian noise and rely on recursive estimation. These assumptions break down in the presence of temporally correlated colored noise: the correlation causes estimation bias to accumulate across time steps, which may eventually lead to divergence of the state estimates. When measurement errors are correlated rather than independent, the filter repeatedly reinforces the same erroneous information instead of averaging it out. In recent years, deep learning approaches such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) [4,5,6] have achieved success in modeling sequential dependencies. However, these deterministic models typically operate with fixed architectures and parameter sets, which limits their adaptability to dynamic and noisy environments. Moreover, they are prone to instability in multi-step prediction, where small errors propagate and amplify across horizons, degrading overall robustness. To overcome these shortcomings, several works have explored more flexible sequence models with attention mechanisms, such as the Ada-STGMAT network [7], which adaptively handles spatiotemporal data in smart city environments.
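To make the averaging argument concrete, the sketch below models colored noise as a first-order autoregressive process, AR(1), with lag-k autocorrelation phi**k. This is an illustrative assumption (pink noise is not exactly AR(1)), and the functions are hypothetical helpers rather than part of AttSCNs. The sketch computes how much the variance of a simple average of n measurements shrinks: for white noise (phi = 0) it falls as 1/n, whereas for strongly correlated noise, 100 measurements carry roughly the information of only a few independent ones.

```python
def variance_of_mean(n: int, phi: float, sigma2: float = 1.0) -> float:
    """Variance of the sample mean of n samples from a stationary AR(1) process.

    The noise e_k = phi * e_{k-1} + w_k is assumed to have marginal variance
    sigma2 and autocorrelation phi**lag; phi = 0 recovers white noise.
    """
    # Var(mean) = (sigma2 / n) * [1 + 2 * sum_{k=1}^{n-1} (1 - k/n) * phi**k]
    correlation_sum = sum((1.0 - k / n) * phi ** k for k in range(1, n))
    return sigma2 / n * (1.0 + 2.0 * correlation_sum)


def effective_sample_size(n: int, phi: float) -> float:
    """Number of independent samples giving the same variance of the mean."""
    return 1.0 / variance_of_mean(n, phi, sigma2=1.0)


if __name__ == "__main__":
    n = 100
    for phi in (0.0, 0.5, 0.95):
        print(f"phi={phi:4.2f}  Var(mean)={variance_of_mean(n, phi):.4f}  "
              f"N_eff={effective_sample_size(n, phi):.1f}")
```

With phi = 0.95, the effective sample size of 100 correlated measurements is only about 3, which is why a filter that treats such errors as independent becomes overconfident.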

Stochastic configuration networks (SCNs) [8] provide an alternative solution: they construct neural networks incrementally through a supervisory mechanism that adaptively regulates the assignment of random parameters. This design guarantees the universal approximation property and has shown competitive generalization, while eliminating the need for backpropagation, since the output weights are computed by least squares. Although SCNs were originally developed for general-purpose learning tasks, their fast convergence and incremental learning strategy make them well suited to scenarios involving nonstationary and noisy data.

To address these limitations, we propose a probabilistic trajectory prediction framework that combines deterministic attention with stochastic learning via two ingredients: (1) a Bayesian linear head on top of SCNs features, which yields closed-form Gaussian predictive distributions and captures aleatoric uncertainty, and (2) an ensemble of independently constructed SCNs configurations, each refitted with the Bayesian head, whose variability quantifies epistemic uncertainty. We combine these with a trainable attention encoder for context-aware feature extraction. The SCNs architecture is incrementally constructed under a supervisory criterion and then fixed; randomness in the constructive phase is used solely for ensemble-based uncertainty estimation and is not treated as posterior sampling over SCNs parameters. The resulting attention-guided SCNs (AttSCNs) framework provides calibrated prediction intervals and context-sensitive processing for IoV trajectories while remaining lightweight at inference.

The structure of this paper is as follows:

Section 2 introduces the aims and key contributions of this work.

Section 3 presents a concise review of traditional and deep learning-based tracking methods, highlighting their strengths and limitations.

Section 4 describes the architecture of the proposed AttSCNs framework, including details of the SCNs construction process and attention mechanism.

Section 5 outlines the experimental setup and evaluation indicators and explains the Bayesian hyperparameter optimization strategy.

Section 6 reports extensive experimental results and comparative analyses against recent baselines.

Finally, Sections 7 and 8 conclude this paper and outline future directions.

3. Related Work

Accurate long-term trajectory prediction is pivotal for intelligent transportation systems, as it underpins effective traffic flow management, enhances road safety, and facilitates optimal resource allocation [9]. Moreover, it serves as a cornerstone for the decision-making and path-planning modules of autonomous vehicles. However, in urban environments, GPS signals are frequently corrupted by complex colored noise with temporal correlations and non-uniform variance, which severely impairs the reliability of conventional prediction algorithms. These challenges highlight the pressing need for adaptive and robust modeling approaches that can effectively mitigate noise effects and maintain stable performance over extended forecasting horizons.

In the domain of GPS trajectory prediction, traditional methods primarily stem from tracking theory. The Kalman filter, introduced by Rudolf Emil Kalman, has long served as the cornerstone of such approaches [3]. It operates on Bayesian estimation principles, recursively computing state estimates that are optimal under linear-Gaussian assumptions through prediction and update steps. These steps rely heavily on predefined motion and observation models, which describe the system dynamics and the measurement relations, respectively. Kalman filters built on different motion models, including the Constant Velocity (CV) [10], Constant Acceleration (CA) [11], Singer [12], and Current Statistical [13] models, describe target maneuvering through specific assumptions about the stochastic nature of acceleration. For instance, the CV model treats acceleration as zero-mean white Gaussian noise, the Singer model describes it as an exponentially correlated stochastic process, and the Current Statistical model adopts a Rayleigh distribution. Yet the effectiveness of these filters depends heavily on the validity of such noise assumptions, which rarely hold under the complex dynamics of urban transportation systems.
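The prediction and update steps described above can be sketched for the CV model as follows. This is a minimal NumPy illustration under stated assumptions: a single spatial axis with state [position, velocity], the continuous white-noise-acceleration discretization of the process noise, and hypothetical noise settings. It is not the filter configuration used in our experiments.

```python
import numpy as np


def cv_model(T: float, q: float):
    """Constant Velocity model for a 1D state [position, velocity].

    Acceleration is treated as zero-mean white noise of intensity q,
    discretized over the sampling period T.
    """
    F = np.array([[1.0, T],
                  [0.0, 1.0]])
    Q = q * np.array([[T**3 / 3.0, T**2 / 2.0],
                      [T**2 / 2.0, T]])
    return F, Q


def kf_step(x, P, z, F, Q, H, R):
    """One Kalman filter cycle: predict with the motion model, then update."""
    # Prediction step: propagate the state and its covariance
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    # Update step: correct the prediction with the measurement z
    S = H @ P_pred @ H.T + R               # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)    # Kalman gain
    x_new = x_pred + K @ (z - H @ x_pred)
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new


if __name__ == "__main__":
    F, Q = cv_model(T=1.0, q=0.01)
    H = np.array([[1.0, 0.0]])   # only position is measured
    R = np.array([[1.0]])        # assumed measurement noise variance
    x, P = np.zeros(2), 100.0 * np.eye(2)
    for k in range(1, 51):
        z = np.array([2.0 * k])  # noise-free positions of a target moving at 2 m/s
        x, P = kf_step(x, P, z, F, Q, H, R)
    print("estimated velocity:", round(float(x[1]), 3))
```

Swapping `cv_model` for the transition and noise matrices of the CA, Singer, or Current Statistical models changes only the assumed acceleration dynamics; the predict/update cycle itself stays the same.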

Measurement modeling further complicates Kalman-based tracking. Within the IoV, GPS sensors are widely used for position and velocity acquisition. However, their signals are frequently contaminated by colored noise, particularly pink noise [14], which violates the white Gaussian noise assumption inherent in traditional Kalman filters. Such a mismatch degrades estimation accuracy and can even cause filter divergence. Researchers have proposed adaptive Kalman filters to mitigate model uncertainty, for example by adjusting dynamic noise parameters or estimating the process noise covariance online, but the fundamental limitations of model-based estimation frameworks still constrain their effectiveness [15].

Ultimately, traditional methods exhibit significant shortcomings in addressing long-term prediction tasks. Rooted in process models with limited rank and rigid assumptions, these approaches struggle to capture the intricate temporal dependencies embedded in real-world GPS data. Consequently, their predictions often diverge over extended horizons, thereby reinforcing the need to shift toward data-driven alternatives that can better model complex spatiotemporal dynamics.

Deep neural networks (DNNs) represent a widely adopted data-driven approach that circumvents rigid model assumptions by learning complex input–output mappings from data. When trained on large-scale IoV datasets, DNNs have shown potential in predicting dynamic target attributes such as position, velocity, and acceleration. Notably, Bai et al. [16] proposed a recurrent auto-regressive neural framework for GPS/INS fusion. Similar advancements include the work of Aissa [17], who extended Kalman filters with neural architectures for robot trajectory tracking, and Markos [18], who applied Bayesian deep learning to unsupervised GPS trajectory segmentation.

Deep networks such as CNNs, LSTMs, and Transformers have further demonstrated their efficacy in capturing spatiotemporal dependencies. For example, Nawaz [19] combined CNNs and LSTMs for transportation mode classification using GPS and weather data. LSTM and GRU models effectively capture long-term temporal patterns [20,21,22], while hybrid models such as CNN-LSTM [23] extract both spatial and temporal features. The Transformer model [24,25] and its derivatives, such as Informer [26] and Autoformer [27], use self-attention mechanisms for long-range sequence modeling.

Additionally, probabilistic generative models such as Planar Flow-based VAEs (PFVAE) offer a promising avenue for learning complex data distributions [28]. Variational Bayesian En-Decoder models [29] have also demonstrated success in traffic flow prediction by explicitly modeling uncertainty and dynamic latent structures.

Despite these advances, deep learning methods face persistent challenges in GPS trajectory prediction. First, the learning capacity of supervised networks is hindered by the nonstationary colored noise embedded in GPS signals, which often leads to increasingly deep and complex models that require substantial data, training time, and computational resources to converge. Probabilistic neural architectures, in contrast, can generalize better by explicitly modeling data uncertainty. Second, effective long-term prediction requires capturing extended temporal dependencies, a task that benefits from attention mechanisms [30] capable of highlighting relevant sequence features over time.

To address these limitations, our study proposes an attention-guided stochastic configuration network (AttSCNs) framework that integrates attention mechanisms with adaptive SCNs. SCNs are a class of randomized neural networks that incrementally construct their structure by dynamically configuring hidden nodes based on the residual error and supervisory criteria. This allows SCNs to flexibly adapt to complex, nonlinear, and multi-modal distributions, making them particularly suitable for modeling colored noise in GPS signals.

SCNs have demonstrated strong applicability in diverse fields, including finance [31], healthcare [32], structural reliability analysis [33], and traffic forecasting [34]. For instance, Y. Lin [34] applied SCNs to long-term traffic prediction in smart cities, showcasing their robustness and accuracy. In structural engineering, Li et al. [33] leveraged SCNs to predict structural reliability under high-dimensional nonlinear conditions. These applications underscore the efficacy of SCNs in capturing intricate temporal and statistical relationships.

Recent developments in SCNs methodologies have further expanded their capabilities. Wang and Felicetti [35,36] introduced Stochastic Configuration Machines (SCMs) and implemented FPGA-based architectures to accelerate SCNs computation for real-time systems. Yan [37] combined Variational Mode Decomposition with SCNs for hydropower vibration prediction, and Li et al. [38] developed an online self-learning SCNs model for real-time stream classification in industrial contexts. Moreover, variants such as robust SCNs (RSCNs) [39], chaotic sparrow search algorithm SCNs (CSSA-SCNs) [40], and parallel SCNs (PSCNs) [41] have emerged to improve robustness, parameter tuning, and scalability.

Drawing inspiration from CSSA-SCNs and PSCNs, our proposed AttSCNs model incorporates Bayesian optimization to fine-tune key hyperparameters, which improves computational efficiency and prediction robustness. By combining attention-driven feature extraction with the incremental learning capability of SCNs, AttSCNs are designed to handle long sequences, temporally correlated noise, and complex data dynamics. Consequently, AttSCNs offer an efficient solution for trajectory prediction in IoV systems, where conventional neural and filter-based models struggle.

4. Materials and Methods

4.1. Architecture of AttSCNs

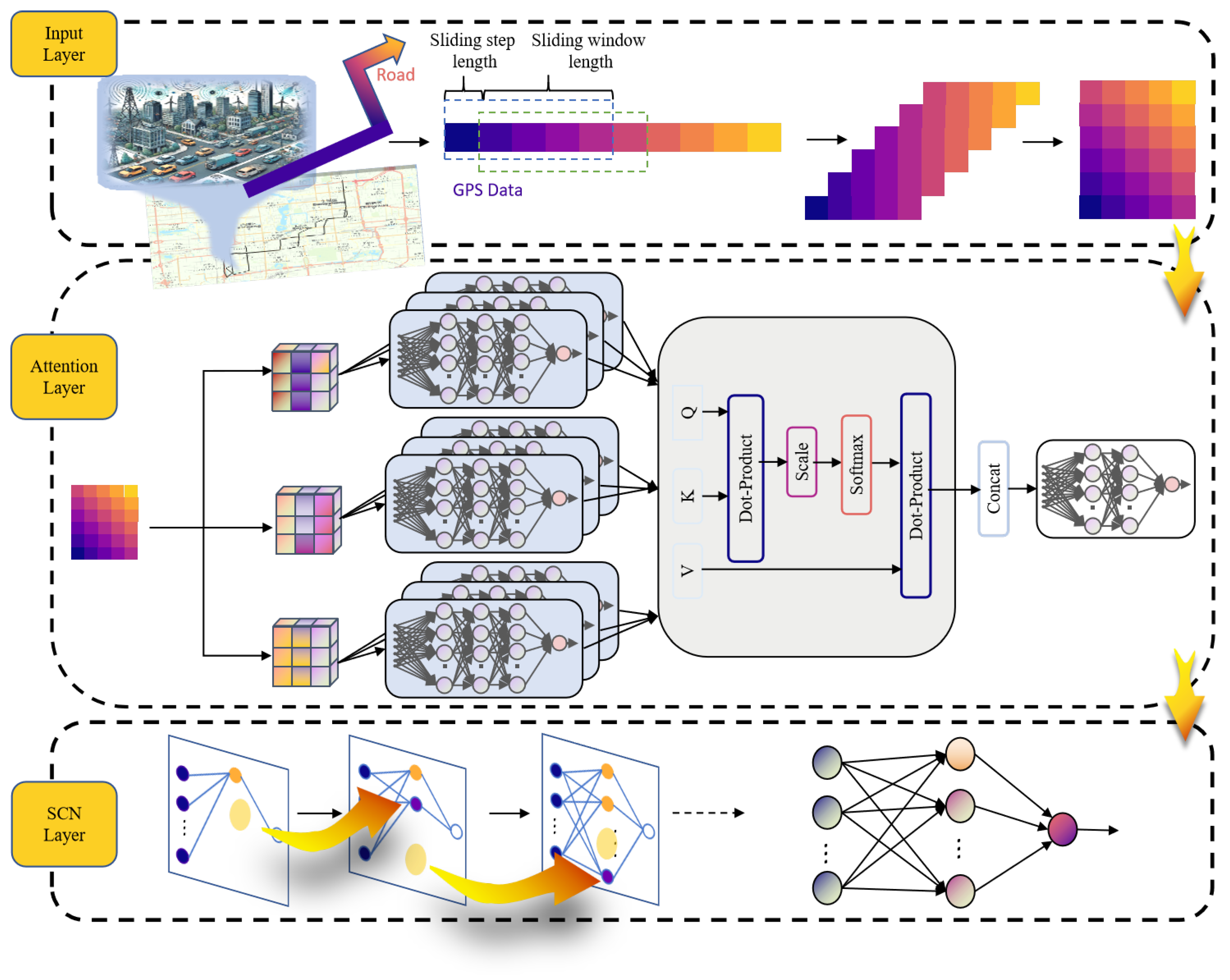

The architecture of the proposed attention-guided stochastic configuration network (AttSCNs) model is illustrated in Figure 1. In this framework, GPS trajectories are first segmented into overlapping subsequences through a sliding-window strategy to generate input samples. The input layer receives raw multivariate time series data, which are often high-dimensional and contaminated by noise. An attention encoder then extracts salient temporal features by projecting the inputs into queries (Q), keys (K), and values (V), computing scaled dot-product attention, and aggregating the outputs of multiple attention heads. This process enables the model to focus on time-relevant segments while suppressing irrelevant patterns, thereby enhancing the feature representation. Explicit probabilistic treatment is obtained by placing a Bayesian linear head on top of the features produced by the ensemble of stochastic SCNs configurations.

The refined features are subsequently fed into the SCNs layer, which incrementally configures hidden nodes under a supervisory mechanism. Unlike conventional neural networks that rely on gradient-based optimization, SCNs add hidden nodes one at a time, drawing random input weights and biases and accepting only those that satisfy a predefined supervisory inequality; the output weights are then computed in closed form. This approach enhances robustness and significantly reduces computational cost, particularly under the colored-noise conditions common in GPS data. Although the SCNs architecture is fixed once construction stops, the incremental construction process enables the network to flexibly approximate complex nonlinear dynamics.

To ensure convergence and prevent overfitting, AttSCNs employ three stopping criteria during SCNs construction: (1) the residual error norm falls below a predefined threshold $\varepsilon$; (2) the number of hidden nodes reaches a maximum value $L_{\max}$; or (3) the number of consecutive failed node configurations exceeds a preset limit. Together, these conditions keep the model compact and reliable.

The AttSCNs model comprises three main modules: an input layer for raw data ingestion, an attention encoder for extracting dynamic temporal features, and a stochastic configuration layer for nonlinear modeling. This hybrid structure combines the strengths of attention and SCNs, enabling AttSCNs to handle long sequences, colored noise, and dynamic patterns with high robustness and accuracy.

4.2. Bayesian Linear Head for Probabilistic Treatment

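The stochastic configuration networks (SCNs) generate an ensemble of hidden features $\mathbf{H} \in \mathbb{R}^{N \times L}$ through randomized node construction, where $N$ is the number of training samples and $L$ the number of hidden nodes. To enable explicit probabilistic modeling, we place a Bayesian linear head on top of $\mathbf{H}$, treating the output weights $\boldsymbol{\beta} \in \mathbb{R}^{L \times Q}$ as random variables with a Gaussian prior:

$$p(\boldsymbol{\beta}) = \mathcal{N}\left(\mathbf{0}, \alpha^{-1}\mathbf{I}\right), \tag{1}$$

where $\mathbf{I}$ is the identity matrix and $\alpha > 0$ controls the prior's precision. Given the observed targets $\mathbf{Y} \in \mathbb{R}^{N \times Q}$, the likelihood is modeled as follows:

$$p(\mathbf{Y} \mid \mathbf{H}, \boldsymbol{\beta}) = \mathcal{N}\left(\mathbf{H}\boldsymbol{\beta}, \sigma^{2}\mathbf{I}\right), \tag{2}$$

with $\sigma^{2}$ as the observation noise variance. The posterior distribution of $\boldsymbol{\beta}$ then follows from Bayes' theorem:

$$p(\boldsymbol{\beta} \mid \mathbf{H}, \mathbf{Y}) \propto p(\mathbf{Y} \mid \mathbf{H}, \boldsymbol{\beta})\, p(\boldsymbol{\beta}).$$

Substituting Equations (1) and (2), the log-posterior becomes

$$\log p(\boldsymbol{\beta} \mid \mathbf{H}, \mathbf{Y}) = -\frac{1}{2\sigma^{2}}\left\|\mathbf{Y} - \mathbf{H}\boldsymbol{\beta}\right\|_{F}^{2} - \frac{\alpha}{2}\left\|\boldsymbol{\beta}\right\|_{F}^{2} + \text{const},$$

which is maximized by the MAP estimator, equivalent to ridge regression with $\lambda = \alpha\sigma^{2}$:

$$\hat{\boldsymbol{\beta}} = \left(\mathbf{H}^{\top}\mathbf{H} + \lambda\mathbf{I}\right)^{-1}\mathbf{H}^{\top}\mathbf{Y}.$$

The predictive distribution for a new input $\mathbf{x}_{*}$ with feature vector $\mathbf{h}_{*} \in \mathbb{R}^{L}$ is

$$p(\mathbf{y}_{*} \mid \mathbf{x}_{*}, \mathbf{H}, \mathbf{Y}) = \mathcal{N}\left(\hat{\boldsymbol{\beta}}^{\top}\mathbf{h}_{*},\; \left(\sigma^{2} + \mathbf{h}_{*}^{\top}\boldsymbol{\Sigma}\,\mathbf{h}_{*}\right)\mathbf{I}_{Q}\right),$$

where $\boldsymbol{\Sigma} = \left(\sigma^{-2}\mathbf{H}^{\top}\mathbf{H} + \alpha\mathbf{I}\right)^{-1}$ is the posterior covariance. This Bayesian treatment provides uncertainty quantification by propagating both feature randomness (from SCNs' stochastic configurations) and weight uncertainty (from the linear head).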
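The closed-form Bayesian linear head can be sketched in a few lines of NumPy. In this minimal sketch, `alpha` is the prior precision and `sigma2` the observation noise variance; both are treated as given (how they are tuned is not shown), and the random `tanh` features merely stand in for the SCNs hidden features. The function names are hypothetical. The posterior mean coincides with the ridge solution for lambda = alpha * sigma2, and all output columns share one posterior covariance, which yields an isotropic predictive variance across outputs.

```python
import numpy as np


def fit_bayesian_head(H, Y, alpha, sigma2):
    """Closed-form posterior of the output weights beta.

    Prior beta ~ N(0, alpha^{-1} I); likelihood Y ~ N(H beta, sigma2 I).
    Returns the posterior mean (equal to ridge regression with
    lambda = alpha * sigma2) and the posterior covariance Sigma, which is
    shared by all output columns.
    """
    L = H.shape[1]
    Sigma = np.linalg.inv(H.T @ H / sigma2 + alpha * np.eye(L))
    beta_hat = Sigma @ H.T @ Y / sigma2
    return beta_hat, Sigma


def predict_bayesian_head(h_star, beta_hat, Sigma, sigma2):
    """Gaussian predictive mean (n_new, Q) and isotropic variance (n_new,)."""
    mean = h_star @ beta_hat
    # sigma2 + h^T Sigma h for every row h of h_star
    var = sigma2 + np.einsum("ij,jk,ik->i", h_star, Sigma, h_star)
    return mean, var


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H = np.tanh(rng.normal(size=(200, 30)))    # stand-in for SCNs hidden features
    Y = H @ rng.normal(size=(30, 2)) + 0.1 * rng.normal(size=(200, 2))
    beta_hat, Sigma = fit_bayesian_head(H, Y, alpha=1.0, sigma2=0.01)
    mean, var = predict_bayesian_head(H[:5], beta_hat, Sigma, sigma2=0.01)
    print(mean.shape, var.shape)
```

Because the predictive variance is at least `sigma2`, the noise floor is always retained; the extra term grows for inputs whose features are poorly covered by the training data.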

4.3. SCNs with Attention Encoder

The fundamental idea of the attention mechanism is to compute a weight for each element of the input sequence (the attention weights) that determines which parts are most important for the current task [42]. These weights are used to scale the input data, enabling the model to focus on key information. The attention weights form a matrix across the time dimension in which each row sums to 1. By executing multiple scaled dot-product attention computations in parallel, the model can extract several complementary feature representations from the data and attend to different features, further enhancing its feature extraction capability.

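Given the input sequence $\mathbf{X} \in \mathbb{R}^{t \times d}$, where $t$ is the window length and $d$ the feature dimension, let $\mathbf{Q}$, $\mathbf{K}$, and $\mathbf{V}$ denote the queries, keys, and values, and let $h$ denote the number of heads. Performing $h$ linear transformations on $\mathbf{Q}$, $\mathbf{K}$, and $\mathbf{V}$ separately, the $i$-th head's output $\mathrm{head}_i$, $i = 1, \ldots, h$, is obtained as follows:

$$\mathrm{head}_i = \mathrm{softmax}\left(\frac{\mathbf{Q}\mathbf{W}_i^{Q}\left(\mathbf{K}\mathbf{W}_i^{K}\right)^{\top}}{\sqrt{d_k}}\right)\mathbf{V}\mathbf{W}_i^{V},$$

where $d_k$ is the per-head key dimension. The head outputs are then concatenated and projected as $\mathrm{MultiHead}(\mathbf{Q}, \mathbf{K}, \mathbf{V}) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,\mathbf{W}^{O}$.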
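The multi-head computation can be sketched in NumPy for the self-attention case, where queries, keys, and values are all projections of the same window X. Under our assumptions, random matrices stand in for trained projection weights, and the window size, feature dimension, and head count are hypothetical. The sketch also checks the property stated earlier that every row of an attention-weight matrix sums to 1.

```python
import numpy as np


def softmax(scores, axis=-1):
    scores = scores - scores.max(axis=axis, keepdims=True)  # numerical stability
    exp = np.exp(scores)
    return exp / exp.sum(axis=axis, keepdims=True)


def multi_head_self_attention(X, W_q, W_k, W_v, W_o):
    """Multi-head scaled dot-product self-attention over a (t, d) window.

    W_q, W_k, W_v: lists of h projection matrices of shape (d, d_k);
    W_o: output projection of shape (h * d_k, d_out).
    Returns the attended features and the per-head attention weights.
    """
    heads, weights = [], []
    for Wq, Wk, Wv in zip(W_q, W_k, W_v):
        Q, K, V = X @ Wq, X @ Wk, X @ Wv
        d_k = K.shape[-1]
        A = softmax(Q @ K.T / np.sqrt(d_k))  # (t, t); each row sums to 1
        heads.append(A @ V)
        weights.append(A)
    return np.concatenate(heads, axis=-1) @ W_o, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t, d, h, d_k = 50, 4, 2, 8               # hypothetical 50-step window, 4 features
    X = rng.normal(size=(t, d))
    W_q = [rng.normal(size=(d, d_k)) for _ in range(h)]
    W_k = [rng.normal(size=(d, d_k)) for _ in range(h)]
    W_v = [rng.normal(size=(d, d_k)) for _ in range(h)]
    W_o = rng.normal(size=(h * d_k, d))
    Z, A = multi_head_self_attention(X, W_q, W_k, W_v, W_o)
    print(Z.shape, A[0].sum(axis=1)[:3])
```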

As a randomized learning technique for neural networks, SCNs assign the input weights and biases of hidden nodes at random under a supervisory mechanism that adapts the sampling range, constructing the network incrementally. Beginning with a basic structure, hidden nodes are added one at a time, and each candidate node is accepted only if it satisfies a probabilistic supervisory inequality.

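Configuration of hidden layer nodes: a candidate node $g_L$ with parameters $(\mathbf{w}_L, b_L)$ produces the response

$$g_L(\mathbf{x}) = \phi\left(\mathbf{w}_L^{\top}\mathbf{x} + b_L\right),$$

where $\phi$ is the activation function, and $\mathbf{g}_L = [g_L(\mathbf{x}_1), \ldots, g_L(\mathbf{x}_N)]^{\top}$ collects its responses on the training samples. For $L-1$ accepted nodes, the network output is

$$f_{L-1}(\mathbf{x}) = \sum_{j=1}^{L-1}\boldsymbol{\beta}_j\, g_j(\mathbf{x}),$$

with residual

$$\mathbf{e}_{L-1} = f - f_{L-1} = \left[\mathbf{e}_{L-1,1}, \ldots, \mathbf{e}_{L-1,Q}\right].$$

A candidate is accepted when the supervisory score

$$\xi_{L,q} = \frac{\left(\mathbf{e}_{L-1,q}^{\top}\mathbf{g}_L\right)^{2}}{\mathbf{g}_L^{\top}\mathbf{g}_L} - \left(1 - r - \mu_L\right)\mathbf{e}_{L-1,q}^{\top}\mathbf{e}_{L-1,q}$$

is non-negative for all outputs $q = 1, \ldots, Q$, with $0 < r < 1$ and a nonnegative sequence $\mu_L \le 1 - r$ satisfying $\lim_{L \to \infty}\mu_L = 0$. Summing over $q$ yields $\xi_L = \sum_{q=1}^{Q}\xi_{L,q}$, and the admissible candidate with the largest $\xi_L$ is selected.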

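Output weights and maximum a posteriori (MAP) view. Let $\mathbf{H}$ stack all accepted hidden responses. Under a Gaussian prior $\boldsymbol{\beta} \sim \mathcal{N}(\mathbf{0}, \alpha^{-1}\mathbf{I})$ and noise $\boldsymbol{\varepsilon} \sim \mathcal{N}(\mathbf{0}, \sigma^{2}\mathbf{I})$, with $\lambda = \alpha\sigma^{2}$, the MAP estimator equals ridge regression:

$$\hat{\boldsymbol{\beta}} = \left(\mathbf{H}^{\top}\mathbf{H} + \lambda\mathbf{I}\right)^{-1}\mathbf{H}^{\top}\mathbf{Y}.$$

The deterministic SCNs prediction uses the mean $\hat{\mathbf{y}} = \hat{\boldsymbol{\beta}}^{\top}\mathbf{h}(\mathbf{x})$, while our probabilistic AttSCNs further propagate posterior uncertainty, as described in Section 4.2.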
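The constructive loop can be sketched as follows. This is a simplified NumPy illustration, not the exact AttSCNs implementation, and rests on several assumptions: sigmoid activations; the standard SCN supervisory inequality of [8]; the common choice mu_L = (1 - r) / (L + 1); hypothetical values for the r schedule, the sampling scales `lambdas`, and the number of candidates `T_max`; and a global ridge solve for the output weights after each accepted node. The three stopping criteria map to `tol`, `L_max`, and the case in which no candidate passes.

```python
import numpy as np


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def build_scn(X, Y, L_max=50, tol=1e-2, T_max=100, r_seq=(0.9, 0.99, 0.999),
              lambdas=(0.5, 1, 5, 10, 30), ridge=1e-6, seed=0):
    """Incrementally construct an SCN (simplified sketch).

    X: (N, d) inputs; Y: (N, Q) targets. Returns (W, b, beta).
    """
    rng = np.random.default_rng(seed)
    N, d = X.shape
    W, b = np.zeros((d, 0)), np.zeros(0)
    H, beta = np.zeros((N, 0)), np.zeros((0, Y.shape[1]))
    E = Y.copy()                                   # current residual
    while H.shape[1] < L_max and np.linalg.norm(E) > tol:   # criteria (1), (2)
        L = H.shape[1] + 1
        best = None
        for r in r_seq:                            # relax r if no candidate passes
            mu = (1.0 - r) / (L + 1)
            for lam in lambdas:                    # widen the sampling range
                w = rng.uniform(-lam, lam, size=(d, T_max))
                bb = rng.uniform(-lam, lam, size=T_max)
                G = _sigmoid(X @ w + bb)           # (N, T_max) candidate responses
                # xi_{L,q} for every candidate (columns) and output q (rows)
                proj = (E.T @ G) ** 2 / np.sum(G * G, axis=0)
                xi = proj - (1.0 - r - mu) * np.sum(E * E, axis=0)[:, None]
                ok = np.all(xi >= 0, axis=0)
                if ok.any():
                    j = int(np.argmax(np.where(ok, xi.sum(axis=0), -np.inf)))
                    best = (w[:, j], bb[j], G[:, j])
                    break
            if best is not None:
                break
        if best is None:
            break                                  # criterion (3): no admissible node
        W = np.column_stack([W, best[0]])
        b = np.append(b, best[1])
        H = np.column_stack([H, best[2]])
        # Output weights via the ridge form of the MAP estimator
        beta = np.linalg.solve(H.T @ H + ridge * np.eye(H.shape[1]), H.T @ Y)
        E = Y - H @ beta
    return W, b, beta


def scn_predict(X, W, b, beta):
    return _sigmoid(X @ W + b) @ beta


if __name__ == "__main__":
    X = np.linspace(0.0, 1.0, 200)[:, None]
    Y = np.sin(2 * np.pi * X)
    W, b, beta = build_scn(X, Y)
    rmse = np.sqrt(np.mean((scn_predict(X, W, b, beta) - Y) ** 2))
    print(f"hidden nodes: {W.shape[1]}, training RMSE: {rmse:.4f}")
```

Relaxing r and widening the sampling range only when no candidate passes is what lets the supervisory mechanism adapt the random assignment instead of fixing its scale in advance.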

4.4. Stochastic Configuration Networks from a Variational Perspective

The stochastic configuration process in SCNs can be interpreted through the lens of variational inference (VI), where the random weights and biases play a role analogous to latent variables in a probabilistic model. Unlike traditional VI, which explicitly defines a variational posterior over model parameters and optimizes it via the evidence lower bound (ELBO), SCNs induce randomness through stochastic sampling under supervisory constraints, effectively approximating an implicit variational distribution over network configurations.

In VI, latent variables (e.g., weights $\mathbf{w}$) are treated as random quantities with a learned approximate posterior $q_{\phi}(\mathbf{w})$. In SCNs, the hidden layer parameters $\{\mathbf{w}_j, b_j\}$ are randomly sampled from a distribution (e.g., uniform or Gaussian) but are constrained by the supervisory mechanism, ensuring that each configuration contributes meaningfully to the ensemble. This process can be viewed as follows:

$$(\mathbf{w}_j, b_j) \sim q_{\mathrm{SC}}(\mathbf{w}, b),$$

where $q_{\mathrm{SC}}$ is an implicit posterior induced by the supervisory criteria. Unlike VI, which explicitly parameterizes $q_{\phi}$ (e.g., as a Gaussian), SCNs do not learn a parametric posterior but instead rely on Monte Carlo sampling from an implicit distribution.

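The supervisory mechanism in SCNs ensures that each accepted random node sufficiently reduces the residual error, analogous to how VI optimizes the ELBO to approximate the true posterior. The key difference is that SCNs do not perform gradient-based optimization over the latent space but instead enforce constraints on the stochastic weights:

$$\frac{\left(\mathbf{e}_{L-1,q}^{\top}\mathbf{g}_L\right)^{2}}{\mathbf{g}_L^{\top}\mathbf{g}_L} \ge \left(1 - r - \mu_L\right)\left\|\mathbf{e}_{L-1,q}\right\|^{2}, \quad q = 1, \ldots, Q,$$

where $\mathbf{e}_{L-1,q}$ is the $q$-th residual column, $\mathbf{g}_L$ is the candidate node's response vector, and $r \in (0, 1)$, together with the vanishing sequence $\mu_L$, controls the contribution of each random configuration. This resembles a constraint-based variational objective, in which randomness is regulated rather than explicitly optimized.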

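While VI quantifies uncertainty through the spread of the learned posterior $q_{\phi}(\mathbf{w})$, SCNs estimate uncertainty empirically via ensemble variance:

$$\sigma_{\mathrm{epi}}^{2}(\mathbf{x}) = \frac{1}{M}\sum_{m=1}^{M}\left(\hat{y}_m(\mathbf{x}) - \bar{y}(\mathbf{x})\right)^{2}, \qquad \bar{y}(\mathbf{x}) = \frac{1}{M}\sum_{m=1}^{M}\hat{y}_m(\mathbf{x}),$$

where $\hat{y}_m(\mathbf{x})$ is the prediction from the $m$-th stochastic configuration. This ensemble-based uncertainty is computationally efficient but lacks the theoretical guarantees of an ELBO-optimized posterior in VI.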
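A minimal sketch of how the M member predictions can be combined, assuming each member (one SCNs configuration with its own Bayesian head) returns a Gaussian mean and variance per output. By the law of total variance, the variance of the equally weighted mixture equals the average member variance (aleatoric part) plus the spread of the member means (epistemic part), which matches the "aleatoric + epistemic" decomposition used in Section 6.3. The function name and array layout are our assumptions.

```python
import numpy as np


def combine_ensemble(means, variances):
    """Combine M Gaussian predictions, one per SCNs configuration.

    means, variances: arrays of shape (M, n) holding each member's predictive
    mean and aleatoric variance. Returns the ensemble mean, the aleatoric part,
    the epistemic part (spread of member means), and the total variance.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    mean = means.mean(axis=0)
    aleatoric = variances.mean(axis=0)
    epistemic = means.var(axis=0)        # population variance (1/M normalization)
    return mean, aleatoric, epistemic, aleatoric + epistemic


if __name__ == "__main__":
    mean, ale, epi, total = combine_ensemble([[1.0, 2.0], [3.0, 4.0]],
                                             [[0.5, 0.5], [0.5, 1.5]])
    print(mean, ale, epi, total)
```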

The proposed AttSCNs differ from traditional VI in three key respects. First, SCNs do not learn a posterior but sample from an implicit distribution. Second, they do not employ gradient-based ELBO optimization; supervisory constraints replace backpropagation through latent variables. Finally, SCNs avoid the memory cost of maintaining a full posterior over weights. This positions SCNs as a sampling-based alternative to VI that trades theoretical elegance for computational efficiency.

6. Experimental Results

6.1. Beijing Vehicle GPS Trajectory Dataset

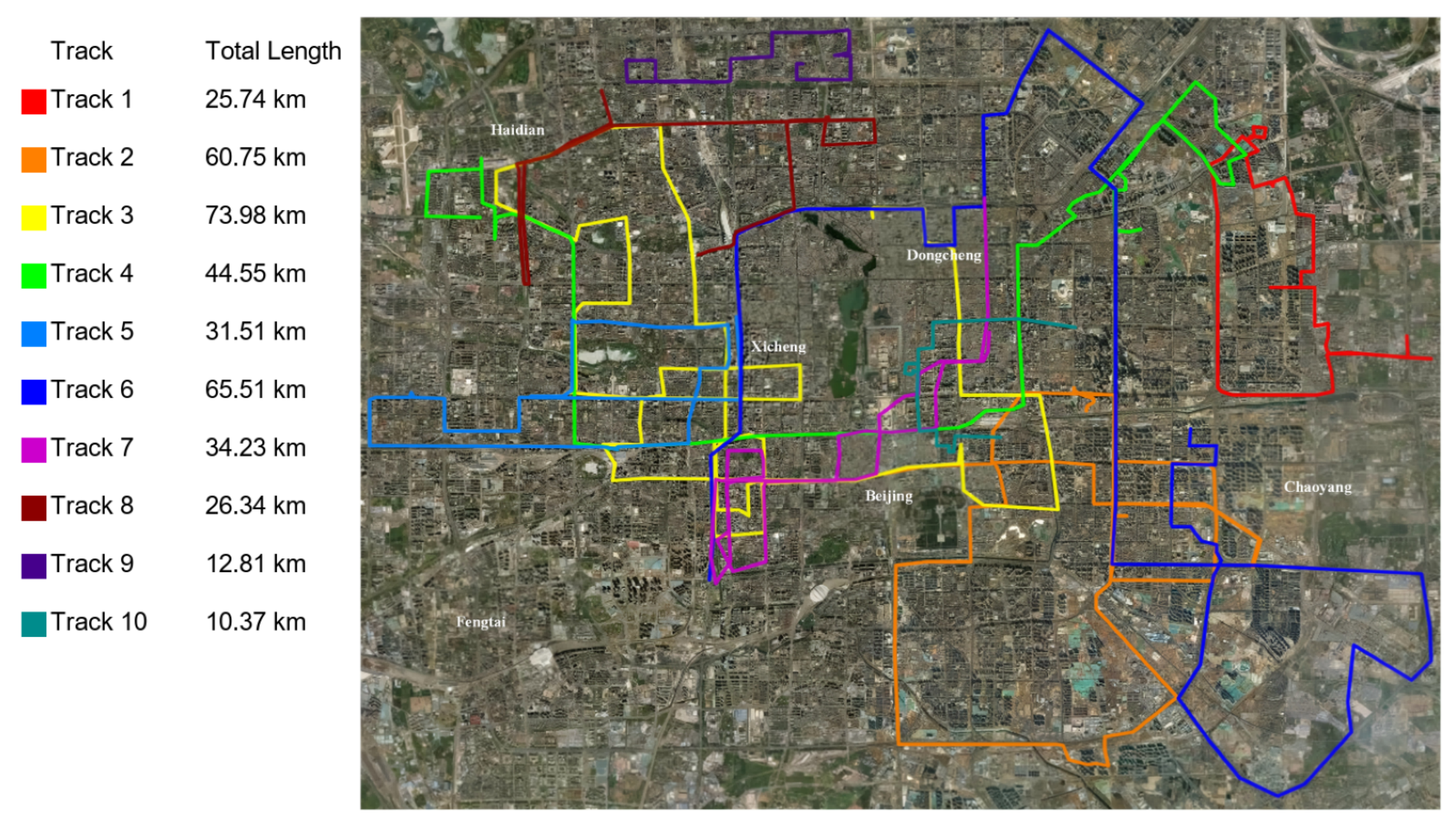

We used the Beijing vehicle GPS trajectory dataset released by Microsoft Research as the T-Drive trajectory data sample (https://www.microsoft.com/en-us/research/publication/t-drive-trajectory-data-sample/ accessed on 30 July 2025), which is a subset of the T-Drive trajectory dataset. This dataset comprises one-week trajectories of 10,357 taxis, totaling about 15 million data points and covering a total distance of approximately 9 million kilometers. Sampling intervals vary across trajectory groups, and the dataset contains no missing data points.

From this dataset, we selected one representative group of trajectories containing 13,987 GPS coordinates for training and evaluation; 80% of the data formed the training set and the remaining 20% the test set.

Figure 2 shows ten example trajectories from this dataset.

6.2. Comparison with the State of the Art

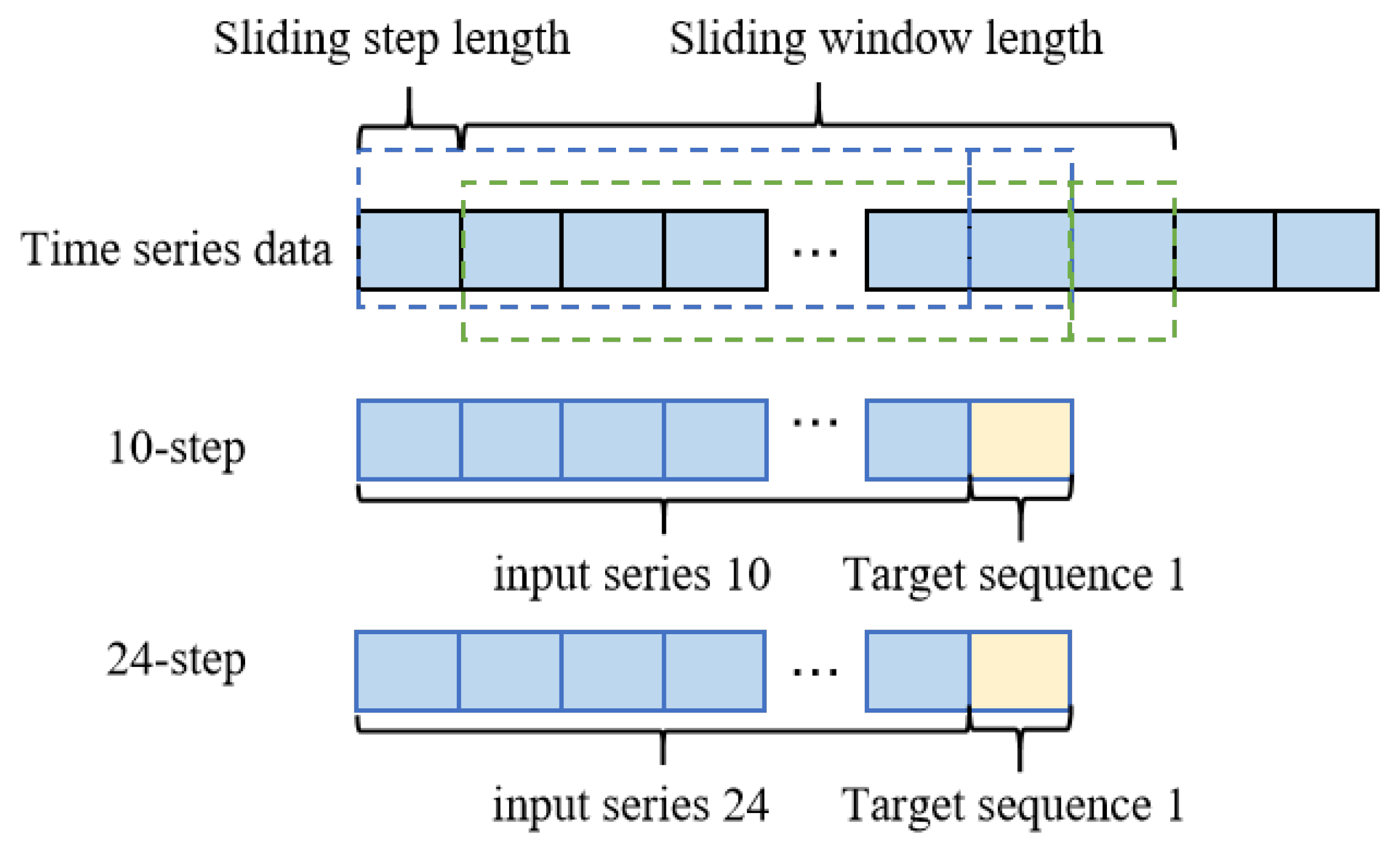

As shown in Figure 3, suppose that the data $\mathbf{X} = \{x_1, x_2, \ldots, x_m\}$ form a time series of length $m$. The input length $t$ of the prediction model is 50, and the prediction length $k$ is 10 or 24. The data are divided into windows of length $t + k$ for supervised learning, with a sliding step $s$ of 1. After the first sliding-window operation, the first sequence $\mathbf{X}_1$ is obtained as follows:

$$\mathbf{X}_1 = \{x_1, x_2, \ldots, x_{t+k}\}.$$

The second sequence $\mathbf{X}_2$ is

$$\mathbf{X}_2 = \{x_2, x_3, \ldots, x_{t+k+1}\}.$$

The first $t$ data points in each window form the model input, and the last $k$ data points are used as the target series. Figure 3 illustrates this with an input length $t$ of 50 and prediction lengths $k$ of 10 or 24.
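The windowing procedure can be sketched as follows, assuming a NumPy array with time along the first axis (for example, latitude and longitude columns). The function name is hypothetical. With t = 50, k = 10, and s = 1, a series of length m yields m - 60 + 1 windows. The function expects the series to contain at least t + k points.

```python
import numpy as np


def sliding_windows(series, t, k, s=1):
    """Split a (m,) or (m, d) series into (input, target) pairs.

    Each window has length t + k: the first t steps form the input and the
    last k steps the target. Consecutive windows are shifted by s steps.
    """
    series = np.asarray(series)
    starts = range(0, len(series) - (t + k) + 1, s)
    inputs = np.stack([series[i:i + t] for i in starts])
    targets = np.stack([series[i + t:i + t + k] for i in starts])
    return inputs, targets


if __name__ == "__main__":
    series = np.arange(100.0)
    X, Y = sliding_windows(series, t=50, k=10)
    print(X.shape, Y.shape, X[1][:3], Y[0][:3])
```

To avoid leakage, such a function would be applied separately to each trajectory after the trajectory-level train/test split described below, so that no window spans both sets.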

We compared the proposed AttSCNs model with models from the following three groups:

Group 1: The classical Kalman filter with a variety of process models, namely CA [11], CV [10], Singer [12], and Current Statistical [13]. These methods serve as historical baselines that show the gap with modern approaches; the main comparison focuses on the Group 2 and Group 3 models, which better highlight the contributions of AttSCNs.

Group 2: Deep learning networks, namely GRU [20], LSTM [21], CNN-LSTM [23], Transformer [24], Informer [26], Autoformer [27], and PFVAE [28].

Group 3: The base SCNs [8].

GRU, LSTM, CNN-LSTM, Transformer, Informer, Autoformer, PFVAE, SCNs, and AttSCNs were evaluated for predictive accuracy in a sliding-window framework with a standardized input length of 50 steps and output lengths of 10 or 24 steps. Before the sliding-window operation, the trajectories were split into training and testing sets at the trajectory level, so that no overlapping windows appear across the two sets, thereby avoiding data leakage.

For baseline models, the initial default settings were a learning rate of 0.0001, 100 training epochs, and a batch size of 32, which resulted in suboptimal performance. We therefore performed hyperparameter tuning: for the Transformer-based models (Transformer, Informer, and Autoformer), we conducted a grid search on the validation set over learning rates (0.0005–0.005), batch sizes (64–128), and dropout rates (0.1–0.3). The final tuned configuration adopted for fair comparison was a learning rate of 0.001, 300 epochs, and a batch size of 100, as reported in Table 3. Although extending training to 1000 epochs might further improve results, the computational time was prohibitive, so we selected these settings as a trade-off between accuracy and efficiency. This procedure was intended to ensure that the baseline models were fairly optimized and not disadvantaged by under-tuning.

Two cases are included:

Case 1: The proposed AttSCNs model predicts the next 10 steps of the Beijing vehicle GPS trajectory data, providing an assessment of short-horizon trends.

Case 2: The proposed AttSCNs model predicts the next 24 steps. This longer horizon requires the model to capture longer-term dependencies in the time series, which makes accurate and reliable prediction more challenging.

6.3. Uncertainty and Calibration

We evaluate probabilistic performance using the metrics defined in Section 5.2. Table 1 reports results for 10-step predictions.

As detailed in Sections 4.2 and 4.4, AttSCNs yield explicit Gaussian predictive distributions by placing a Bayesian linear head on SCNs features, with a closed-form predictive mean and an isotropic multi-output covariance (aleatoric component). Epistemic uncertainty is captured by re-drawing the stochastic SCNs configurations $M$ times and re-fitting the Bayesian head; the total predictive uncertainty is the sum of the aleatoric and epistemic parts. Hence, the metrics in Table 1 assess the calibration and sharpness of full predictive distributions, not just point errors.

AttSCNs achieve the lowest NLL and CRPS and the narrowest 90% intervals while maintaining near-nominal coverage (PICP close to the 0.90 target), indicating well-calibrated yet sharp predictive densities. Relative to PFVAE, AttSCNs reduce CRPS by 40.6% (2.62 vs. 4.41) and MPIW by 40.5% (15.29 vs. 25.70), while also improving NLL by 5.7% (4.11 vs. 4.36). Compared with SCNs equipped with a ridge head, AttSCNs reduce CRPS and MPIW by about 41.8% and improve NLL by 6.4%, demonstrating that the Bayesian linear head, together with configuration ensembling, yields materially better-calibrated uncertainty than deterministic regression on the same features. By contrast, some deterministic sequence models show much wider intervals at lower coverage (e.g., the Transformer; see Table 1), evidencing miscalibration.
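For a Gaussian predictive distribution, all four metrics have simple closed forms. The sketch below assumes the standard definitions (Section 5.2 gives the definitions used in the experiments, which may differ in details such as averaging over outputs or horizons): NLL of a univariate Gaussian, the closed-form Gaussian CRPS, and the coverage (PICP) and mean width (MPIW) of central intervals mu ± z·sigma, with z ≈ 1.645 for 90% intervals. The function names are hypothetical.

```python
import math


def _pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)   # standard normal pdf


def _cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))          # standard normal cdf


def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of y under N(mu, sigma^2)."""
    return 0.5 * math.log(2.0 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2.0 * sigma ** 2)


def gaussian_crps(y, mu, sigma):
    """Closed-form CRPS of N(mu, sigma^2) evaluated at observation y."""
    z = (y - mu) / sigma
    return sigma * (z * (2.0 * _cdf(z) - 1.0) + 2.0 * _pdf(z) - 1.0 / math.sqrt(math.pi))


def picp_mpiw(ys, mus, sigmas, z_crit=1.6448536269514722):
    """Coverage (PICP) and mean width (MPIW) of intervals mu +/- z_crit * sigma.

    The default z_crit gives central 90% intervals for a Gaussian.
    """
    inside, widths = 0, []
    for y, mu, s in zip(ys, mus, sigmas):
        lo, hi = mu - z_crit * s, mu + z_crit * s
        inside += int(lo <= y <= hi)
        widths.append(hi - lo)
    return inside / len(ys), sum(widths) / len(widths)
```

Lower NLL and CRPS reward both accuracy and honest spread; PICP near 0.90 checks calibration, while a smaller MPIW at that coverage indicates sharper intervals.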

To further test the robustness of these conclusions, we performed formal statistical testing and effect-size analysis on the results in Table 1. For each baseline versus AttSCNs, paired differences were formed and evaluated with a two-sided paired permutation test (10,000 sign flips), with p-values adjusted across metrics and models using the Holm–Bonferroni procedure. We also report Cliff's $\delta$ with 95% BCa bootstrap confidence intervals, as well as paired Cohen's $d$ and paired $t$-test statistics. The results show that AttSCNs achieve statistically significant improvements over all baselines in NLL and CRPS after Holm adjustment, with at least moderate and often large effect sizes, and consistently narrower MPIW than all baselines, also significant after adjustment. At the same time, differences in PICP relative to SCNs and PFVAE are not significant. Overall, these findings confirm that AttSCNs deliver sharper predictive intervals with sound calibration in a statistically significant manner.
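The core of this testing procedure can be sketched in NumPy. This is an illustration of the named techniques, not our analysis code: the pairing unit (for example, per-window or per-run metric values) is an assumption, and the BCa intervals, Cohen's d, and t-tests are omitted. The sign-flip test exploits the fact that, under the null hypothesis, each paired difference is equally likely to be positive or negative; Holm's step-down adjustment multiplies the i-th smallest p-value by (m - i + 1) and enforces monotonicity.

```python
import numpy as np


def paired_sign_flip_test(a, b, n_perm=10_000, seed=0):
    """Two-sided paired permutation test on the mean of a - b (random sign flips)."""
    d = np.asarray(a, float) - np.asarray(b, float)
    rng = np.random.default_rng(seed)
    observed = abs(d.mean())
    signs = rng.choice([-1.0, 1.0], size=(n_perm, d.size))
    null = np.abs((signs * d).mean(axis=1))
    # The +1 terms count the observed statistic itself, keeping p > 0
    return (np.sum(null >= observed) + 1) / (n_perm + 1)


def holm_adjust(pvals):
    """Holm–Bonferroni step-down adjusted p-values, in the input order."""
    p = np.asarray(pvals, float)
    m = p.size
    adjusted = np.empty(m)
    running = 0.0
    for rank, idx in enumerate(np.argsort(p)):
        running = max(running, (m - rank) * p[idx])   # enforce monotonicity
        adjusted[idx] = min(1.0, running)
    return adjusted


def cliffs_delta(x, y):
    """Cliff's delta: P(X > Y) - P(X < Y) over all cross pairs."""
    x = np.asarray(x, float)[:, None]
    y = np.asarray(y, float)[None, :]
    return float(np.mean(x > y) - np.mean(x < y))
```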

6.4. Case 1: 10-Step Predictions

Table 4 presents the comprehensive results of the 10-step predictions for each model, compared under noisy conditions. Performance was evaluated using RMSE, MAE, SMAPE, and the coefficient of determination $R^2$. To enhance reliability, all results are reported as mean ± standard deviation across five independent runs, thereby capturing performance variability. This evaluation elucidates the capabilities and limitations of AttSCNs across modeling scenarios and provides a benchmark against both traditional and modern machine learning techniques.

Table 4 shows that our proposed method has the lowest RMSE, MAE, and SMAPE, as well as the highest $R^2$. Specifically, our approach achieves an RMSE of 8.45, an MAE of 4.64, an SMAPE of 0.15, and an $R^2$ of 0.99981.

Table 4 further indicates that traditional estimation methods rely heavily on parameter settings, which often require extensive expertise and are difficult to optimize. In contrast, our AttSCNs model outperforms the other 12 models evaluated in this study, reducing RMSE by 98.61%, 96.88%, 97.20%, 96.52%, 58.70%, 52.53%, 65.50%, 84.80%, 87.26%, 82.03%, 35.10%, and 36.51% and MAE by 99.06%, 97.95%, 98.13%, 97.79%, 70.91%, 68.48%, 78.70%, 89.37%, 91.05%, 87.32%, 55.21%, and 38.05% relative to the respective comparison models. Additionally, AttSCNs reduce SMAPE by 98.65%, 97.97%, 98.15%, 97.84%, 67.39%, 64.29%, 76.56%, 97.90%, 98.42%, 97.60%, 57.14%, and 42.31%, respectively. The relative reduction is calculated as follows:

$$\text{Reduction} = \frac{M_{\text{baseline}} - M_{\text{AttSCNs}}}{M_{\text{baseline}}} \times 100\%,$$

where $M$ denotes the RMSE, MAE, or SMAPE of the corresponding model.
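As a quick check of the reduction formula, the sketch below applies it to the SCNs and AttSCNs values reported for the 10-step case (RMSE 13.31 vs. 8.45, MAE 7.49 vs. 4.64, SMAPE 0.26 vs. 0.15); it reproduces the last entries of the three lists above. The helper name is hypothetical.

```python
def relative_reduction(baseline: float, proposed: float) -> float:
    """Percentage reduction of an error metric relative to a baseline model."""
    return (baseline - proposed) / baseline * 100.0


# SCNs vs. AttSCNs, 10-step results reported in this section
for name, scns, att in [("RMSE", 13.31, 8.45), ("MAE", 7.49, 4.64), ("SMAPE", 0.26, 0.15)]:
    print(f"{name}: {relative_reduction(scns, att):.2f}%")
# RMSE: 36.51%
# MAE: 38.05%
# SMAPE: 42.31%
```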

In the 10-step prediction scenario, the Group 1 models, namely CA [11], CV [10], Singer [12], and Current Statistical [13], performed notably poorly, with $R^2$ values that were very low or even negative. This indicates a significant discrepancy between the predicted trajectories and the reference values, suggesting that the predictions tend to diverge. A likely reason for this divergence is that these classical models have process models that are not full-rank and possess unstable structures. As a result, they can make reliable predictions only within a short horizon before requiring observational data for correction. For 10-step forecasts, these models must propagate the state forward ten times without measurement correction, making them prone to divergence.
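The effect of propagating a classical model without measurement correction can be illustrated by iterating only the Kalman prediction step, P ← F P Fᵀ + Q, for the CV model. This sketch, with a hypothetical initial covariance and noise intensity on a single axis, shows the predicted uncertainty growing with every step of a 10- or 24-step forecast. It does not reproduce the divergence in Figure 4; it only shows that uncertainty grows during open-loop propagation.

```python
import numpy as np


def open_loop_covariance_traces(F, Q, P0, steps):
    """Trace of the state covariance when the model is propagated without updates.

    Implements P_{k+1} = F P_k F^T + Q, i.e. repeated Kalman prediction steps
    with no measurement correction, as in a multi-step-ahead forecast.
    """
    P, traces = P0.copy(), []
    for _ in range(steps):
        P = F @ P @ F.T + Q
        traces.append(float(np.trace(P)))
    return traces


if __name__ == "__main__":
    T, q = 1.0, 0.01
    F = np.array([[1.0, T], [0.0, 1.0]])                       # CV model, one axis
    Q = q * np.array([[T**3 / 3, T**2 / 2], [T**2 / 2, T]])
    P0 = np.diag([1.0, 0.1])                                   # hypothetical initial uncertainty
    for k, tr in enumerate(open_loop_covariance_traces(F, Q, P0, 24), start=1):
        if k in (1, 10, 24):
            print(f"step {k:2d}: trace(P) = {tr:.2f}")
```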

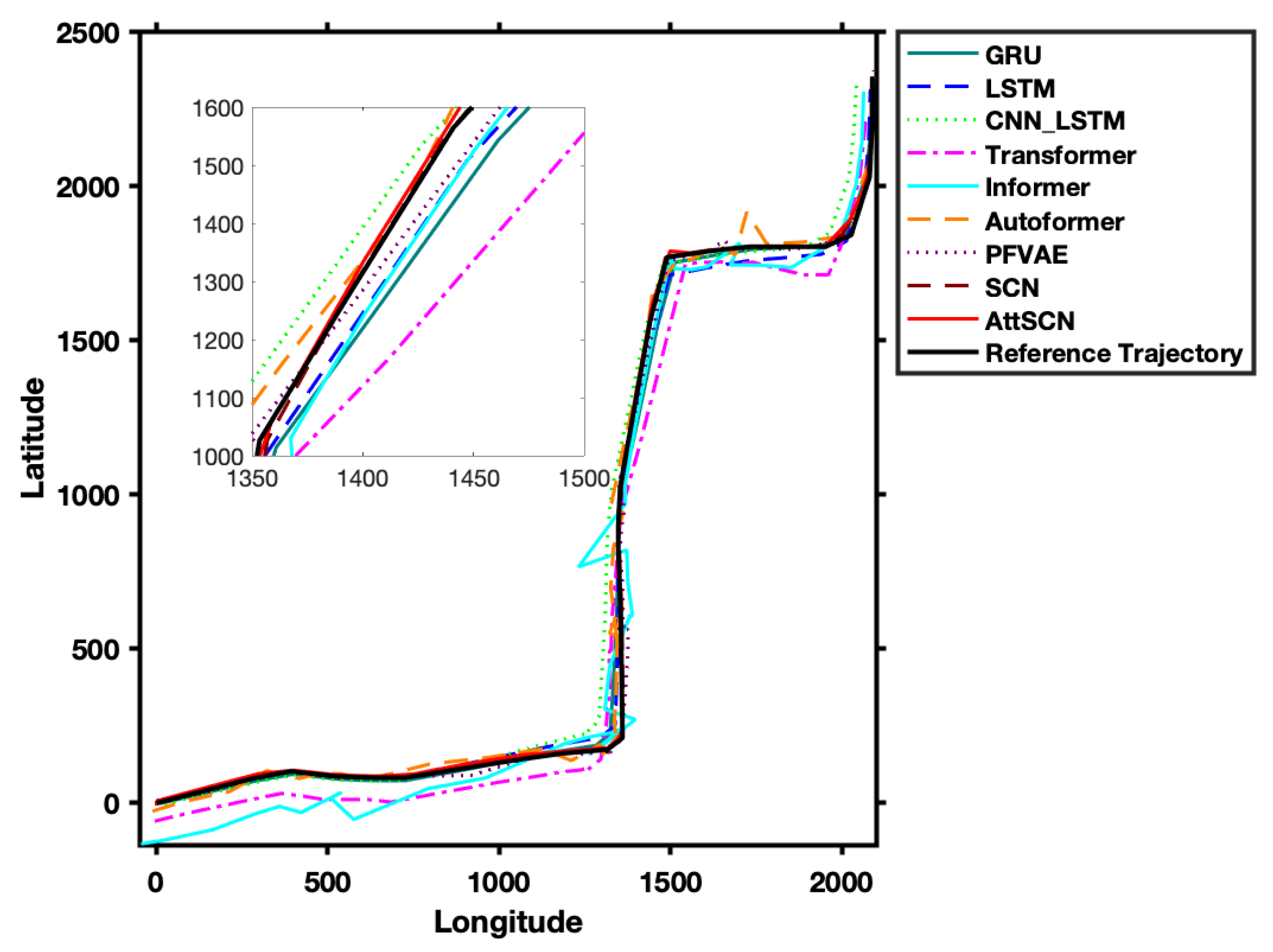

Figure 4 presents the predicted trajectories of the Group 1 models. The predictions of the classical estimation models deviate markedly from the reference values, leading to substantial errors. We also found that these classical estimation methods are sensitive to parameter selection, yielding considerably different predictions with different parameters; in particular, the CV model with parameters T = 1, R = 1600, and Q = 200 shows a divergent trend. Among the classical models, the CV model performs the worst, mainly because of its constant-velocity assumption: when the target dynamics violate this assumption, estimation and prediction deteriorate significantly. In contrast, the Singer and Current Statistical models perform better, as they assume more complex target dynamics that align more closely with actual motion. Overall, however, predictions based on classical estimation methods remain inadequate given the complex dynamics of target motion.

Compared to classical estimation models, the deep models employed in Groups 2 and 3, such as Transformer [24], Informer [26], Autoformer [27], PFVAE [28] (Planar Flow VAE), and SCNs [8], demonstrate significant advantages (shown in Figure 5). This improvement can be attributed to their ability to capture complex patterns and dynamics in the data, which classical models struggle with. Notably, the proposed AttSCNs model performs exceptionally well. For instance, compared with the baseline SCNs, AttSCNs reduced RMSE from 13.31 to 8.45, lowered MAE from 7.49 to 4.64, and decreased SMAPE from 0.26 to 0.15, while improving R² from 0.99937 to 0.99981. These quantitative improvements provide clear evidence of the effectiveness of AttSCNs in achieving more stable and accurate trajectory predictions. This success can be attributed to the attention mechanism, which focuses on the most relevant parts of the input sequence and thereby captures the temporal dependencies and variations in the target’s motion.
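For reference, the four accuracy metrics used throughout this section can be sketched as follows. SMAPE has several variants in the literature. This sketch assumes the fractional form mean(2|y − ŷ| / (|y| + |ŷ|)), which is consistent with values such as 0.15 being reported rather than percentages. It also assumes the metrics are computed over a flattened 1-D array of coordinates.

```python
import numpy as np

def rmse(y, y_hat):
    """Root mean squared error."""
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))

def mae(y, y_hat):
    """Mean absolute error."""
    return float(np.mean(np.abs(y - y_hat)))

def smape(y, y_hat, eps=1e-12):
    """Symmetric MAPE as a fraction (assumed variant: 2|e| / (|y| + |y_hat|))."""
    return float(np.mean(2.0 * np.abs(y - y_hat) / (np.abs(y) + np.abs(y_hat) + eps)))

def r2(y, y_hat):
    """Coefficient of determination; negative when worse than predicting the mean."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return float(1.0 - ss_res / ss_tot)

y = np.array([1.0, 2.0, 3.0, 4.0])
print(r2(y, y[::-1]))  # -3.0: reversed predictions are worse than the mean
```

The last line shows why diverging classical filters can produce R² values below zero: R² compares the residual error with the variance of the reference signal. It therefore becomes negative as soon as the prediction error exceeds what a constant mean predictor would incur.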

However, Informer performs relatively poorly. Its design, though optimized for efficiency and long sequences, may be less effective in capturing the specific dynamics of target motion. The model may overlook critical features necessary for accurate predictions, resulting in suboptimal results compared to other deep learning approaches.

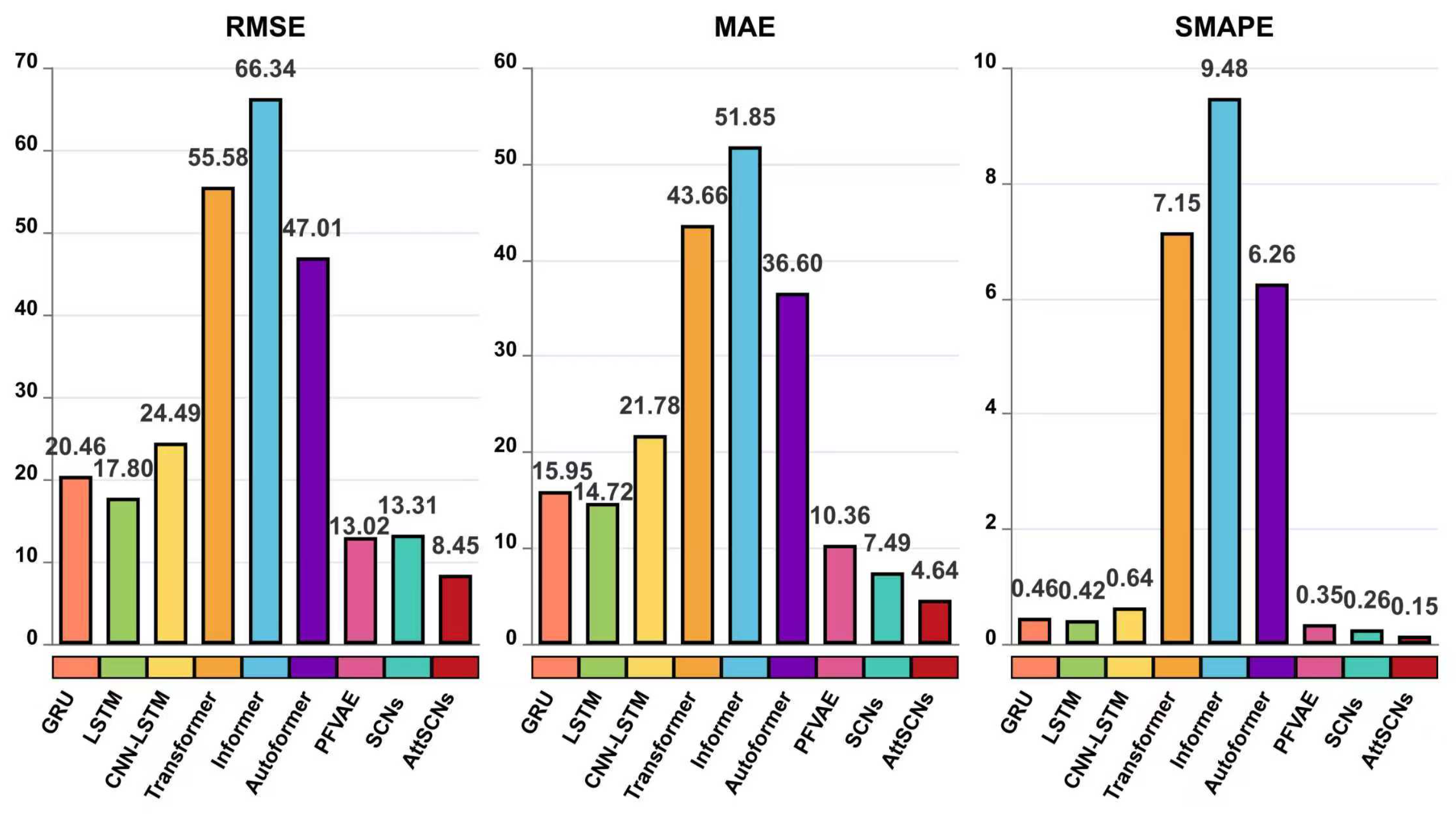

From Figure 6, it is evident that the proposed AttSCNs achieve the lowest RMSE, MAE, and SMAPE, indicating superior predictive accuracy compared with the other models evaluated. In contrast, the Informer model exhibits the largest errors (consistent with Figure 5), which may be attributed to several factors. First, the Informer model might not effectively capture the temporal patterns and dependencies inherent in the dataset. Second, its architecture might be poorly suited to the specific characteristics and noise present in the data. Third, the parameter tuning or optimization strategy used for Informer might not have been as rigorous as that used for AttSCNs. Overall, although Informer is effective in many contexts, its higher error rates suggest that it may not be the best fit for this dataset and prediction task.

6.5. Case 2: 24-Step Predictions

Similarly, Table 5 shows that the performance of the Group 1 models (CA [11], CV [10], Singer [12], and Current Statistical [13]) was even poorer, with R² values close to zero or even negative. As previously analyzed, classical estimation methods based on motion models and Kalman filters are unsuitable for long-term trajectory prediction. For the modern deep learning models (Groups 2 and 3), both the mean performance and the standard deviation are reported to ensure a fair comparison with AttSCNs. Compared with the GRU [20], LSTM [21], CNN-LSTM [23], Transformer [24], Informer [26], Autoformer [27], PFVAE [28], and SCNs [8] models, the proposed AttSCNs model improved RMSE by 53.88%, 37.96%, 47.34%, 79.10%, 82.62%, 84.53%, 36.81%, and 22.67% and MAE by 62.94%, 58.73%, 63.80%, 85.05%, 87.31%, 88.00%, 59.09%, and 41.67%, respectively. Moreover, SMAPE improved by 69.23%, 68.63%, 70.91%, 97.91%, 98.75%, 98.01%, 74.19%, and 61.90%, respectively. Furthermore, AttSCNs achieve the highest R², indicating the closest fit between the predicted and observed values.
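The improvement percentages above read as relative reductions of each error metric with respect to a baseline. The sketch below assumes the formula 100 × (e_baseline − e_AttSCNs) / e_baseline and applies it to the 10-step values quoted for SCNs and AttSCNs in Case 1. It does not use the Table 5 values, which are not reproduced in the text. The function name is hypothetical.

```python
def relative_improvement(baseline, proposed):
    """Percentage reduction of an error metric relative to a baseline (assumed formula)."""
    return 100.0 * (baseline - proposed) / baseline

# 10-step values quoted in Case 1 for SCNs (baseline) and AttSCNs
print(round(relative_improvement(13.31, 8.45), 2))  # RMSE
print(round(relative_improvement(7.49, 4.64), 2))   # MAE
print(round(relative_improvement(0.26, 0.15), 2))   # SMAPE
```

The formula applies only to lower-is-better metrics. A higher-is-better metric such as R² would need the sign reversed.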

We observe that the trends in Figure 7 closely resemble those in Figure 6. The proposed AttSCNs model again achieves the lowest RMSE, MAE, and SMAPE. In contrast, the performance of the Transformer, Informer, and Autoformer models declines significantly, with notable increases in all three metrics. These “former” series models struggle to capture the dynamics of maneuvering targets accurately, possibly for several reasons. First, their architectures may be poorly suited to the complex, nonlinear temporal dependencies required for long-term prediction of dynamic systems. Second, they may lack mechanisms to handle varying velocities or abrupt changes in direction, which are common in maneuvering targets. Finally, they may lack robustness against the increased uncertainty and noise that typically accompany longer prediction horizons, leading to larger prediction errors.

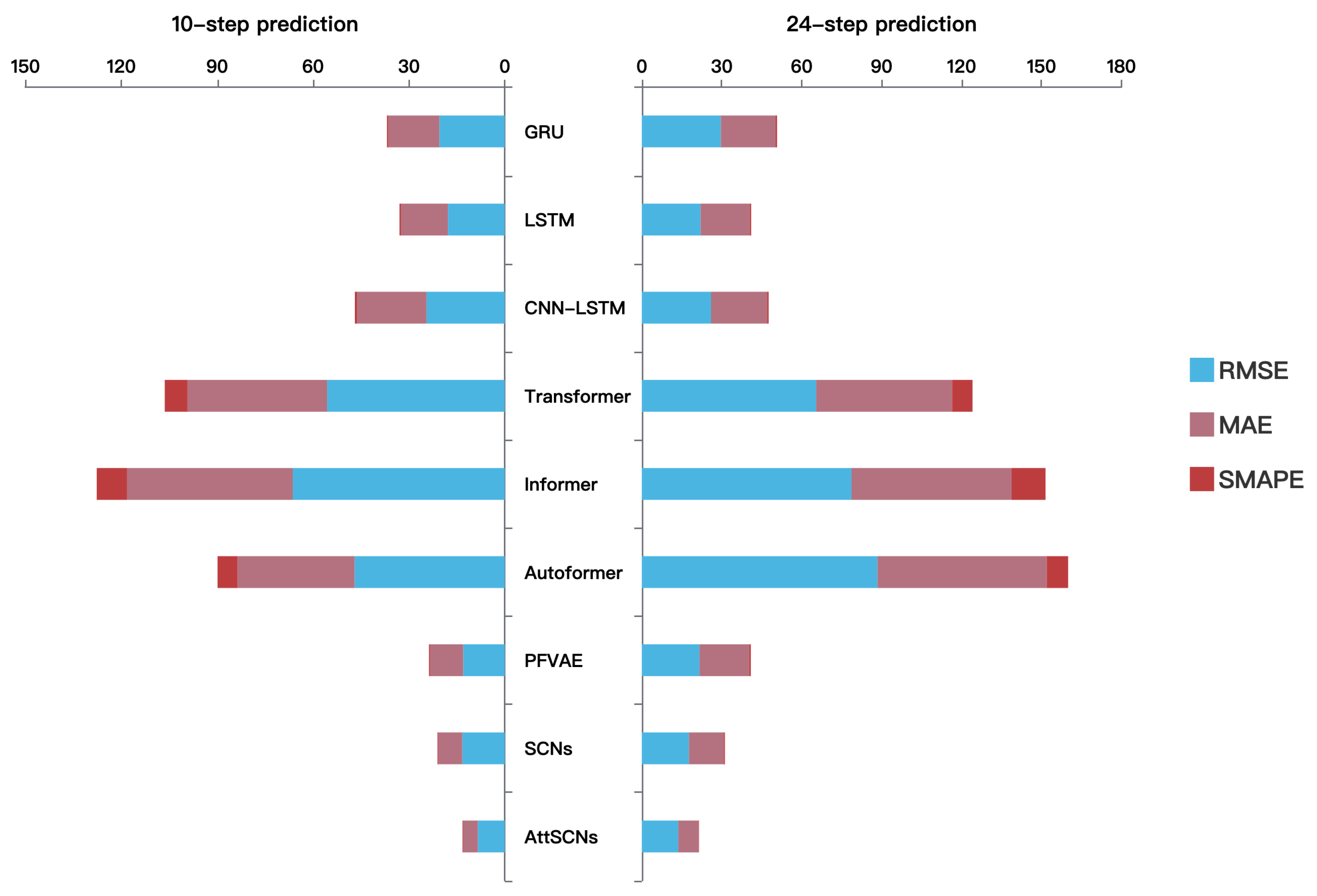

In Figure 8, we present a stacked chart of RMSE, MAE, and SMAPE for two prediction lengths: 10-step and 24-step predictions. Tables emphasize exact values, whereas this visualization highlights relative performance trends across multiple error metrics. It thereby complements the numerical results in Table 4 and Table 5 and enables an intuitive side-by-side comparison of the models. SMAPE is relatively large for the Informer series and smaller for the other models. Moreover, the proposed AttSCNs model achieves consistently lower values across all three error metrics and both horizons, which makes its advantage in both short-term and long-term forecasting readily apparent. These results indicate that the proposed model offers excellent error control and provides an effective method for long-term time series forecasting.

The violin plot in Figure 9 shows the density and distribution of errors for the two prediction horizons. A t-test is used to assess statistical differences between groups, with significance denoted by * for p < 0.05, ** for p < 0.01, *** for p < 0.001, and ns for no significant difference. RMSE was selected as the error metric in this visualization because it penalizes larger errors more heavily and is therefore a sensitive indicator of performance variability across repeated runs. MAE and SMAPE are also valuable measures and are fully reported in Table 4 and Table 5, but RMSE provides the clearest visualization of distributional differences across models. The plot reveals that the proposed AttSCNs model exhibits a narrower range of error fluctuations, a more concentrated error distribution, and greater stability than the baselines. Moreover, predictive performance for the 24-step horizon is generally inferior to that for the 10-step horizon, as evidenced by higher average errors and more dispersed distributions. Notably, the Informer series demonstrates robustness: its error distribution for 24-step prediction closely resembles that of the 10-step case, reflecting the effectiveness of its sparse attention mechanism in capturing long-term dependencies.
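The text does not state whether the t-test assumes equal variances. The sketch below therefore assumes Welch's unequal-variance t-test, which is a common default when comparing per-seed RMSE values of two models. It computes the t statistic and Welch–Satterthwaite degrees of freedom with the standard library and maps a p-value to the marker convention used in Figure 9. The function names are hypothetical.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)   # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

def significance_marker(p):
    """Map a p-value to the markers used in the violin plot."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return "ns"

print(significance_marker(0.004))  # **
```

The two-sided p-value itself comes from the t distribution with df degrees of freedom. In SciPy, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs this same Welch test and returns the statistic and p-value directly.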

6.6. Ablation Study

Table 6 reports the ablation results of AttSCNs, from which the contribution of each component can be explicitly observed. The SCNs-only variant achieves considerably lower errors than the attention-only model, confirming that SCNs provide the essential robustness for handling noisy GPS trajectories. When attention is integrated with SCNs (AttSCNs with default parameters), performance is further improved, demonstrating the benefit of attention in capturing long-term temporal dependencies.

To explicitly evaluate the contribution of Bayesian optimization, we compared the performance of AttSCNs before and after optimization. Without optimization, the AttSCNs model already outperforms conventional baselines; however, after Bayesian optimization, both prediction accuracy and robustness improved significantly, demonstrating the effectiveness of the hyperparameter search. This process systematically identified parameter values that achieved the best trade-off between predictive accuracy and model complexity. The direct comparison confirms that Bayesian optimization yields tangible performance gains, thereby justifying its inclusion in the trajectory prediction pipeline.
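The paper does not specify the surrogate model or acquisition function used for Bayesian optimization. With that caveat, the sketch below uses a Gaussian-process surrogate with a squared-exponential kernel and the expected-improvement (EI) acquisition to tune a single integer hyperparameter over a discrete grid. The objective `toy_loss` and the "maximum hidden nodes" grid are hypothetical stand-ins for the uncertainty-aware validation objective and for hyperparameters such as the constructive limits. In practice, each evaluation would build and validate an AttSCNs model.

```python
import math
import numpy as np

def rbf(a, b, length_scale):
    """Squared-exponential kernel (unit signal variance) between 1-D arrays."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_new, length_scale, jitter=1e-4):
    """GP posterior mean and std at x_new given standardized observations."""
    K = rbf(x_obs, x_obs, length_scale) + jitter * np.eye(len(x_obs))
    K_s = rbf(x_obs, x_new, length_scale)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    v = np.linalg.solve(L, K_s)
    mean = K_s.T @ alpha
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)

def expected_improvement(mean, std, best, xi=0.01):
    """EI acquisition for minimizing a validation loss."""
    imp = best - mean - xi
    z = imp / std
    cdf = 0.5 * (1.0 + np.array([math.erf(v / math.sqrt(2.0)) for v in z]))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return imp * cdf + std * pdf

def bayes_opt(objective, candidates, n_init=3, n_iter=10, length_scale=30.0, seed=0):
    """Sequentially pick untried candidates that maximize EI; return the best one."""
    rng = np.random.default_rng(seed)
    tried = [float(c) for c in rng.choice(candidates, size=n_init, replace=False)]
    losses = [objective(c) for c in tried]
    for _ in range(n_iter):
        pool = np.array([c for c in candidates if float(c) not in tried], dtype=float)
        if pool.size == 0:
            break
        y = np.asarray(losses)
        y_std = (y - y.mean()) / (y.std() + 1e-12)   # match the unit-variance GP prior
        mean, std = gp_posterior(np.asarray(tried), y_std, pool, length_scale)
        nxt = float(pool[np.argmax(expected_improvement(mean, std, y_std.min()))])
        tried.append(nxt)
        losses.append(objective(nxt))
    best = int(np.argmin(losses))
    return tried[best], losses[best]

def toy_loss(n):
    # Hypothetical validation loss, minimized at n = 120 (illustrative only)
    return 8.0 + (n - 120.0) ** 2 / 1000.0

best_n, best_loss = bayes_opt(toy_loss, list(range(10, 201, 10)))
print(best_n, round(best_loss, 3))
```

Each iteration fits the surrogate to all evaluations so far and queries the point where the expected reduction in validation loss is largest. This is why Bayesian optimization usually needs far fewer model trainings than an exhaustive grid search.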

Overall, the ablation results indicate that SCNs constitute the backbone of the architecture, attention offers complementary gains, and Bayesian optimization provides additional refinement for optimal performance.

6.7. Discussion on the Real-Time Performance

To highlight the real-time advantages of the proposed AttSCNs model, this study emphasizes the significance of parameter count, which directly affects computational efficiency and memory consumption. The AttSCNs model contains only 126,252 parameters, a number close to that of GRU (121,400) and LSTM (161,600) and slightly smaller than that of CNN-LSTM (168,000). In contrast, Transformer, Informer, and Autoformer require 8,652,800, 8,652,800, and 3,293,696 parameters, respectively, which are one to two orders of magnitude higher than those of the other models. Despite the similarity in scale to GRU, LSTM, and CNN-LSTM, AttSCNs consistently achieve superior prediction accuracy.

Parameter count provides an intuitive measure of efficiency, but real-time capability is better characterized by computational complexity and inference latency. To this end, we analyzed multiply–accumulate operations (MACs) and estimated latency on representative edge hardware, including an ARM Cortex-A76 CPU (2.8 GHz) and an NVIDIA Jetson Xavier NX GPU (21 TOPS). The comparative results are summarized in Table 7.

As shown in Table 7, AttSCNs incur the lowest computational burden, requiring only ∼ MACs per step, and achieve an estimated inference latency of 0.8–1.2 ms on CPU and less than 0.1 ms on GPU. These results demonstrate that AttSCNs not only have a small parameter count but also satisfy real-time latency requirements, making them well suited for vehicle trajectory prediction on edge devices.
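The text does not derive the MAC counts and latency estimates. One common back-of-the-envelope approach counts MACs layer by layer and divides by an assumed sustained throughput of the target hardware (peak MAC rate times a utilization factor). The sketch below follows that approach as an assumption rather than the authors' procedure. Layer shapes, the utilization factor, and the function names are hypothetical, and softmax and normalization costs are ignored.

```python
def dense_macs(in_features, out_features):
    """MACs for one dense (fully connected) layer applied to a single vector."""
    return in_features * out_features

def self_attention_macs(seq_len, width):
    """MACs for single-head scaled dot-product self-attention (softmax not counted)."""
    projections = 3 * seq_len * width * width   # Q, K, V projections
    scores = seq_len * seq_len * width          # Q K^T
    weighted_sum = seq_len * seq_len * width    # softmax(Q K^T) V
    output = seq_len * width * width            # output projection
    return projections + scores + weighted_sum + output

def latency_ms(macs, peak_macs_per_s, utilization=0.1):
    """Rough latency: MACs divided by sustained throughput (peak x utilization)."""
    return 1e3 * macs / (peak_macs_per_s * utilization)

macs = self_attention_macs(seq_len=24, width=64) + dense_macs(64, 2)
print(macs, round(latency_ms(macs, peak_macs_per_s=1e10), 4))
```

The quadratic seq_len terms explain why full self-attention becomes expensive for long inputs. A compact attention encoder followed by a single feedforward readout keeps the per-step cost small.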

The superior prediction accuracy, combined with lightweight computational cost, ensures that AttSCNs can efficiently process high-throughput data streams under stringent latency constraints. The compact parameter scale leads to lower memory consumption and faster execution, thereby facilitating rapid model updates and stable real-time operation. Such efficiency is especially critical in Internet of Vehicles (IoV) applications, where timely processing of sequential data directly impacts navigation safety and decision-making.

Another distinctive strength of AttSCNs lies in the integration of attention mechanisms with stochastic configuration networks. This hybrid design enhances robustness against colored noise and improves the model’s ability to capture long-term dependencies in GPS signals. Compared with rigid architectures such as GRU and LSTM, AttSCNs can dynamically adapt to complex spatiotemporal patterns, ensuring both accuracy and resilience in noisy environments.

In summary, AttSCNs achieve a favorable balance of accuracy, efficiency, and robustness. Their reduced complexity and real-time responsiveness underscore their practical value for IoV systems, where rapid and reliable trajectory prediction is essential.

7. Limitations and Future Work

This study employs trajectory data from a single city and domain, which may limit the generalizability of the findings; performance could vary under different traffic conditions, road network configurations, or GPS data quality. From a modeling perspective, the current Bayesian linear head adopts an isotropic multi-output covariance assumption for computational tractability. While this simplification proves effective, it may not fully capture the correlation structure of prediction errors across different dimensions or time horizons. Although our uncertainty evaluation incorporates multiple metrics (NLL, CRPS, PICP, and MPIW), it does not yet include comprehensive diagnostic tools such as reliability diagrams, expected calibration error (ECE), or probability integral transform (PIT) analysis. Additionally, while we report means and standard deviations across multiple random seeds, some experiments have relatively small sample sizes; more robust statistical inference (e.g., effect size estimation via bootstrap or permutation testing) would further strengthen the validity of our conclusions.
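When the predictive distribution is Gaussian, as it is for the Bayesian linear head, the uncertainty metrics named above have standard closed forms. The sketch below assumes independent scalar predictions N(μ, σ²) per target value and a central 95% interval for PICP and MPIW. The interval level and the aggregation across dimensions and horizons used in the paper are not stated here, and the function names are hypothetical.

```python
import math
import numpy as np

def gaussian_nll(y, mu, sigma):
    """Mean negative log-likelihood of y under N(mu, sigma^2)."""
    return float(np.mean(0.5 * np.log(2 * math.pi * sigma**2) + (y - mu) ** 2 / (2 * sigma**2)))

def gaussian_crps(y, mu, sigma):
    """Mean closed-form CRPS of a Gaussian predictive distribution."""
    z = (y - mu) / sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2 * math.pi)
    return float(np.mean(sigma * (z * (2 * cdf - 1) + 2 * pdf - 1 / math.sqrt(math.pi))))

def picp_mpiw(y, mu, sigma, z_crit=1.959964):
    """Coverage (PICP) and mean width (MPIW) of central Gaussian intervals (~95%)."""
    lower, upper = mu - z_crit * sigma, mu + z_crit * sigma
    picp = float(np.mean((y >= lower) & (y <= upper)))
    mpiw = float(np.mean(upper - lower))
    return picp, mpiw

y = np.array([0.0, 0.0, 5.0])
mu, sigma = np.zeros(3), np.ones(3)
print(gaussian_nll(y, mu, sigma), gaussian_crps(y, mu, sigma), picp_mpiw(y, mu, sigma))
```

PICP and MPIW should be read together, because wide intervals trivially raise coverage. NLL and CRPS reward both sharpness and calibration in a single score.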

Future work will focus on two key directions: (i) conducting uncertainty decomposition studies using normalizing-flow techniques to better model multi-modal dynamics and non-Gaussian noise patterns and (ii) developing Bayesian-inspired neural architecture search methods to jointly optimize SCNs’ constructive policies and attention mechanisms (e.g., through evolutionary or Monte Carlo strategies), aiming to improve both accuracy and calibration metrics (CRPS and NLL). Our long-term objective is to develop a robust prediction system that maintains well-calibrated uncertainty estimates under domain shifts—particularly crucial for safety-critical applications in urban canyons and adverse weather conditions—while remaining computationally efficient for edge device deployment.

8. Conclusions

This paper presents AttSCNs, a novel hybrid framework for trajectory prediction that combines a trainable attention encoder with stochastic configuration networks (SCNs) and a Bayesian linear output layer. The attention mechanism learns context-aware temporal features, while SCNs are incrementally constructed under a supervisory criterion to provide lightweight, noise-resistant representations. The Bayesian linear head generates closed-form Gaussian predictive distributions, enabling principled quantification of aleatoric uncertainty. To model epistemic uncertainty, we employ an ensemble of independently constructed SCN configurations, each equipped with a refitted Bayesian head; the variance across ensemble predictions is combined with the analytic aleatoric uncertainty to produce calibrated prediction intervals. Notably, Bayesian optimization is used exclusively for hyperparameter tuning (e.g., constructive limits, attention width, and ensemble size) against an uncertainty-aware validation objective; it does not perform posterior inference or directly generate uncertainty estimates.
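The combination of a closed-form Bayesian head with ensemble spread can be sketched as follows. The sketch assumes a conjugate Gaussian prior N(0, α⁻¹I) on the output weights and a known noise precision β. It also assumes equally weighted ensemble members whose predictive moments are merged by the law of total variance: the average member variance plus the variance of the member means. It uses the textbook predictive variance 1/β + φᵀSφ. How the paper splits these terms into aleatoric and epistemic parts, and how β is estimated, are not specified here. The random tanh features are a hypothetical stand-in for hidden-node outputs and are not built with the SCN supervisory mechanism. The values α = 0.01 and β = 25 are illustrative.

```python
import numpy as np

def bayesian_linear_head(Phi, t, alpha=1e-2, beta=25.0):
    """Posterior N(m, S) over output weights for t = Phi w + noise (precision beta)."""
    A = alpha * np.eye(Phi.shape[1]) + beta * Phi.T @ Phi   # posterior precision
    S = np.linalg.inv(A)
    m = beta * S @ Phi.T @ t
    return m, S

def predictive(phi, m, S, beta):
    """Closed-form Gaussian predictive mean and variance for feature rows phi."""
    mean = phi @ m
    var = 1.0 / beta + np.sum((phi @ S) * phi, axis=1)
    return mean, var

def combine_ensemble(means, variances):
    """Mixture moments: mean member variance plus variance of member means."""
    means, variances = np.asarray(means), np.asarray(variances)
    return means.mean(axis=0), variances.mean(axis=0) + means.var(axis=0)

rng = np.random.default_rng(0)
x = np.linspace(-1, 1, 200)[:, None]
t = np.sin(2 * x[:, 0]) + 0.2 * rng.standard_normal(200)   # synthetic toy target
means, variances = [], []
for _ in range(5):                                          # 5 ensemble members
    W, b = rng.standard_normal((1, 30)), rng.standard_normal(30)
    Phi = np.tanh(x @ W + b)        # hypothetical stand-in for hidden-node outputs
    m, S = bayesian_linear_head(Phi, t, beta=25.0)
    mu_k, var_k = predictive(Phi, m, S, beta=25.0)
    means.append(mu_k)
    variances.append(var_k)
mu, var = combine_ensemble(means, variances)
print(mu[:3], var[:3])
```

Because each member refits only its linear head in closed form, adding ensemble members costs one small matrix inversion each. This fits the lightweight-inference argument made in the conclusions.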

AttSCNs demonstrate significant practical advantages: once constructed, the architecture remains fixed and requires minimal computational resources during inference, making it particularly suitable for real-time applications in the Internet of Vehicles (IoV) domain. Extensive evaluation on real-world GPS datasets shows that AttSCNs consistently outperform competitive baselines in prediction accuracy while delivering well-calibrated uncertainty estimates. These capabilities enable important downstream applications including risk-aware filtering, adaptive horizon control, and safety-margin decision making.