Abstract

In this paper, we unite concepts from Husserlian phenomenology, the active inference framework in theoretical biology, and category theory in mathematics to develop a comprehensive framework for understanding social action premised on shared goals. We begin with an overview of Husserlian phenomenology, focusing on aspects of inner time-consciousness, namely, retention, primal impression, and protention. We then review active inference as a formal approach to modeling agent behavior based on variational (approximate Bayesian) inference. Expanding upon Husserl’s model of time consciousness, we consider collective goal-directed behavior, emphasizing shared protentions among agents and their connection to the shared generative models of active inference. This integrated framework aims to formalize shared goals in terms of shared protentions, and thereby shed light on the emergence of group intentionality. Building on this foundation, we incorporate mathematical tools from category theory, in particular, sheaf and topos theory, to furnish a mathematical image of individual and group interactions within a stochastic environment. Specifically, we employ morphisms between polynomial representations of individual agent models, allowing agents to predict not only their own behavior but also that of other agents and the environment’s responses. Sheaf and topos theory facilitate the construction of coherent agent worldviews and provide a way of representing consensus or shared understanding. We explore the emergence of shared protentions, bridging the phenomenology of temporal structure, multi-agent active inference systems, and category theory. Shared protentions are highlighted as pivotal for coordination and achieving common objectives. We conclude by acknowledging the intricacies stemming from stochastic systems and uncertainties in realizing shared goals.

1. Introduction

This paper proposes to understand collective action driven by shared goals by formalizing core concepts from phenomenological philosophy—notably Husserl’s phenomenological descriptions of the consciousness of inner time—using mathematical tools from category theory under the active inference approach to theoretical biology. This project falls under the rubric of computational phenomenology [1] and pursues initial work [2,3] that proposed an active inference version of (core aspects of) Husserl’s phenomenology. Our specific contribution in this paper will be to extend the core aspects of Husserl’s description of time consciousness to group action and to propose a formalization of this extension.

Specifically, we unpack the notion of shared goals in a social group by appealing to the construct of protention (or real-time, implicit anticipation) in Husserlian phenomenology. We propose that individual-scale protentions can be communicated (explicitly or implicitly) to other members of a social group, and we argue that, when properly augmented with tools from category theory, the active inference framework allows us to model the resulting shared protentions formally in terms of a shared generative model. To account for multiple agents in a shared environment, we extend our model to represent the interaction of agents having different perspectives on the social world, enabling us to model agents that predict behavior (both their own and that of their companions) as well as the environment’s response to their actions. We utilize sheaf-theoretic and topos-theoretic tools from category theory to construct coherent representations of the world from the perspectives of multiple agents, with a focus on creating “internal universes” (topoi) that represent the beliefs, perceptions, and predictions of each agent. In this setting, shared protentions are an emergent property, and possibly a necessary property, of any collective scale of self-organization, i.e., self-organization where elements or members of an ensemble co-organize themselves.

This paper weaves together elements that might seem relevant to fairly disparate readerships, namely, Husserlian phenomenology, active inference modeling, and category theory. We therefore clarify our intended audience. It is composed, in part, of phenomenologists who are interested in using contemporary mathematical approaches to generate formal models of the kinds of dynamic lived experiences that are captured by phenomenological descriptions. This segment of our readership will likely intersect with proponents of the project to naturalize phenomenology [4,5]. Our target audience also comprises active inference modelers who have taken an interest in consciousness and phenomenological description.

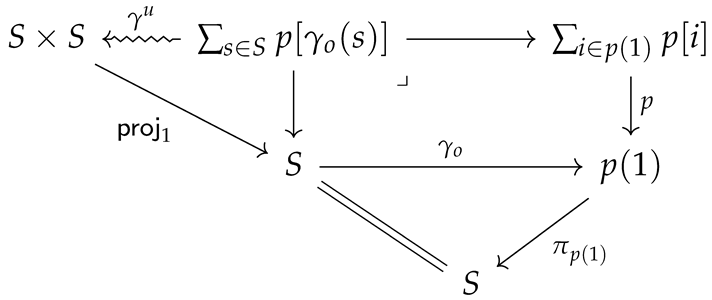

We begin by reviewing aspects of Husserlian temporal phenomenology, with a particular focus on the notions of primal impression, retention, and protention. These provide us with a conceptual foundation to think scientifically about the phenomenology of the emergence of shared goals in a community of interacting agents. We cast these shared goals in terms of the protentional (or future-oriented) aspects of immediate phenomenological experience, in particular, what we call “shared protentional goals”. We then formalize this neo-Husserlian construct with active inference, which allows for the representation and analysis of one’s own and others’ generative models and of their interactions with the environment. Using tools from category theory, namely, polynomial morphisms and hom polynomials, we design agent architectures that implement a form of recursive cognition and prediction of other agents’ actions and environmental responses. Finally, we propose a method for gluing together the internal universes of multiple agents using topoi from category theory, allowing for a more robust representation and analysis of individual and group interactions within a stochastic environment. We use these tools to construct what we call a “consensus topos”, which represents the understanding of the world that is shared among the agents. This consensus topos may be considered the mathematical object representing the external world, providing a unified framework for analyzing social action based on shared goals. Our integrative approach provides some key first steps towards a computational phenomenology of collective action under a shared goal, which may help us naturalize group intentionality more generally and better understand the complex dynamics of social action.
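The idea of gluing local viewpoints into a shared one can be illustrated, in a deliberately simplified and discrete form, by the gluing condition for local sections. In the sketch below, each agent holds an assignment of values to the variables in its own view (its local section); a global, consensus section exists only if every pair of agents agrees on the variables they share. This is a minimal sketch under our own assumptions (finite variable sets, exact agreement on overlaps, hypothetical helper names `restrict` and `glue` and made-up example variables); it illustrates the sheaf gluing condition only and is not the topos-theoretic construction of the consensus topos itself.

```python
from itertools import combinations
from typing import Dict, Optional, Set

# A local section: the values an agent assigns to the variables in its own view.
Section = Dict[str, str]


def restrict(section: Section, variables: Set[str]) -> Section:
    """Restriction map: keep only the variables in `variables`."""
    return {v: section[v] for v in variables if v in section}


def glue(sections: Dict[str, Section]) -> Optional[Section]:
    """Return the glued global section if all pairwise overlaps agree, else None."""
    for sa, sb in combinations(sections.values(), 2):
        overlap = set(sa) & set(sb)
        if restrict(sa, overlap) != restrict(sb, overlap):
            return None  # gluing condition fails: no consensus section exists
    glued: Section = {}
    for s in sections.values():
        glued.update(s)  # overlaps agree, so update order does not matter
    return glued


agents = {
    "alice": {"goal": "harbor", "weather": "calm"},
    "bob": {"goal": "harbor", "route": "north"},
}
print(glue(agents))  # agents agree on "goal", so a global section exists

agents["bob"]["goal"] = "island"
print(glue(agents))  # None: the views disagree on their overlap
```

When the local views agree on their overlap, the glued section contains every variable any agent can see; a single disagreement on a shared variable is enough to block consensus.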

3. An Overview of Active Inference

Here, we provide a brief overview of active inference, oriented towards application in the setting of shared protentional goals (for a more complete overview of active inference, see [20,21,22], and for its application to modeling phenomenological experience, also known as “computational phenomenology”, see [23]).

Active inference is a mathematical account of the behavior of cognitive agents, modeling the action–perception loop in terms of variational (approximate Bayesian) inference. A generative model is defined, encompassing beliefs about the relationship between (unobservable) causes s, whose temporal transitions depend upon action u, and (observable) effects o, formalized as [24]

$$
P(o_{1:T}, s_{1:T}, u) = P(u)\,P(s_1)\prod_{\tau=1}^{T} P(o_\tau \mid s_\tau)\prod_{\tau=1}^{T-1} P(s_{\tau+1} \mid s_\tau, u)
\tag{1}
$$

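This probabilistic model contains a Markov blanket in virtue of certain conditional independencies, implicit in the above factorization, that individuate the observer from the observed (e.g., the agent from their environment). Agents can then be read as minimizing a variational free energy functional of (approximate Bayesian) beliefs Q(s) over unobservable causes or states s, given by

$$
\begin{aligned}
F[Q, o] &= \mathbb{E}_{Q(s)}\big[\ln Q(s) - \ln P(o, s)\big] \\
&= \underbrace{D_{\mathrm{KL}}\big[Q(s)\,\|\,P(s)\big]}_{\text{complexity}} - \underbrace{\mathbb{E}_{Q(s)}\big[\ln P(o \mid s)\big]}_{\text{accuracy}} \\
&= D_{\mathrm{KL}}\big[Q(s)\,\|\,P(s \mid o)\big] - \ln P(o) \;\geq\; -\ln P(o)
\end{aligned}
\tag{2}
$$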

Agents interact with the environment, updating their beliefs to minimize variational free energy. The variational free energy provides an upper bound on the negative log evidence (surprisal) of observations under the generative model, where the evidence is also known as the marginal likelihood. Minimizing it can be understood as optimizing Bayesian beliefs to provide a simple but accurate account of the sensorium (i.e., minimizing complexity while maximizing accuracy). This is sometimes referred to as self-evidencing [25].
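The complexity-minus-accuracy reading of variational free energy, and the fact that it bounds surprisal from above, can be checked numerically for a single observation. This is a minimal sketch assuming two discrete hidden states and made-up probabilities; the function name `free_energy` and all numbers are illustrative. Any approximate posterior gives a free energy at or above the surprisal, and the exact Bayesian posterior attains the bound.

```python
import math


def free_energy(q, prior, lik):
    """Variational free energy F = complexity - accuracy for a single observation.

    q     -- approximate posterior Q(s) over discrete hidden states
    prior -- prior P(s)
    lik   -- P(o | s) evaluated at the observation actually received
    """
    complexity = sum(qs * math.log(qs / ps) for qs, ps in zip(q, prior) if qs > 0)
    accuracy = sum(qs * math.log(ls) for qs, ls in zip(q, lik) if qs > 0)
    return complexity - accuracy


prior = [0.5, 0.5]
likelihood_o = [0.9, 0.2]  # hypothetical P(o | s) for the observed o

evidence = sum(p * l for p, l in zip(prior, likelihood_o))          # P(o)
posterior = [p * l / evidence for p, l in zip(prior, likelihood_o)]  # exact Bayes

surprisal = -math.log(evidence)
f_flat = free_energy([0.5, 0.5], prior, likelihood_o)   # an arbitrary Q(s)
f_exact = free_energy(posterior, prior, likelihood_o)   # Q(s) = P(s | o)
print(f_flat, f_exact, surprisal)  # f_flat > f_exact; f_exact matches surprisal up to rounding
```

Belief updating in this picture is the movement of Q(s) from an arbitrary guess toward the posterior, which drives F down toward the surprisal.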

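Priors over action are based on the free energy expected following an action, such that the most likely action an agent commits to can be expressed as a softmax function σ of expected free energy, where $\tilde{P}(o)$ denotes the agent’s prior preferences over outcomes and Q(s | u) and Q(o | u) are the states and outcomes predicted under action u:

$$
\begin{aligned}
P(u) &= \sigma\big(-\gamma\, G(u)\big) \\
G(u) &= -\underbrace{\mathbb{E}_{Q(o \mid u)}\big[\ln \tilde{P}(o)\big]}_{\text{expected value}} - \underbrace{\mathbb{E}_{Q(o \mid u)}\Big[D_{\mathrm{KL}}\big[Q(s \mid o, u)\,\|\,Q(s \mid u)\big]\Big]}_{\text{epistemic value}} \\
&= \underbrace{D_{\mathrm{KL}}\big[Q(o \mid u)\,\|\,\tilde{P}(o)\big]}_{\text{risk}} + \underbrace{\mathbb{E}_{Q(s \mid u)}\Big[\mathrm{H}\big[P(o \mid s)\big]\Big]}_{\text{ambiguity}}
\end{aligned}
\tag{3}
$$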

Here, G(u) is the expected free energy for policy u and γ is a precision parameter influencing the stochasticity of action selection. Effectively, actions are selected based on their expected value, which is the expected log likelihood of preferred observations, and their epistemic value, which represents the expected information gain. The expected free energy can also be rearranged in terms of risk and ambiguity, namely, the divergence between anticipated and preferred outcomes and the imprecision of outcomes, given their causes. A comparison of Equations (2) and (3) shows that risk is analogous to expected complexity, while ambiguity can be associated with expected inaccuracy. In summary, agents engage with their world by updating beliefs about the hidden or latent states that cause observations while, at the same time, acting to solicit observations that minimize expected free energy, namely, minimizing risk and ambiguity.
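The risk-plus-ambiguity form of expected free energy and softmax action selection can be sketched for a one-step choice between two actions. This is a minimal sketch under our own assumptions: two hidden states and two outcomes, a made-up likelihood `A` (with state 1 deliberately ambiguous), hypothetical predicted state distributions for each action, log prior preferences over outcomes, and a precision `gamma` standing in for the precision parameter. It is an illustration of the decomposition, not a full multi-step policy evaluation.

```python
import math


def softmax(x):
    m = max(x)
    e = [math.exp(v - m) for v in x]
    z = sum(e)
    return [v / z for v in e]


def entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def expected_free_energy(qs_u, A, log_pref):
    """G(u) = risk + ambiguity for the predicted state distribution Q(s|u).

    qs_u     -- Q(s|u), predicted hidden-state distribution under action u
    A        -- likelihood, A[o][s] = P(o|s)
    log_pref -- log prior preferences over outcomes
    """
    n_o, n_s = len(A), len(qs_u)
    qo_u = [sum(A[o][s] * qs_u[s] for s in range(n_s)) for o in range(n_o)]  # Q(o|u)
    risk = sum(q * (math.log(q) - lp) for q, lp in zip(qo_u, log_pref) if q > 0)
    ambiguity = sum(qs_u[s] * entropy([A[o][s] for o in range(n_o)]) for s in range(n_s))
    return risk + ambiguity


A = [[0.9, 0.5],   # P(o=0 | s)
     [0.1, 0.5]]   # P(o=1 | s); state 1 is ambiguous
log_pref = [math.log(0.8), math.log(0.2)]   # the agent prefers outcome 0
qs_under_action = {"stay": [0.9, 0.1], "move": [0.2, 0.8]}

gamma = 4.0
G = [expected_free_energy(qs, A, log_pref) for qs in qs_under_action.values()]
p_action = softmax([-gamma * g for g in G])
print(dict(zip(qs_under_action, p_action)))
```

With these numbers, "stay" both keeps predicted outcomes closer to the preferred ones (lower risk) and keeps the agent out of the ambiguous state (lower ambiguity), so it receives most of the probability; lowering `gamma` makes the choice more stochastic.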

It is important to note that the active inference framework is not a metaphysical approach or framework but rather a physics modeling framework. It only assumes the rest of contemporary physics as a background (classical, statistical, and quantum mechanics) and answers the question: given this background, what does it mean for something to be reliably re-identifiable by an external observer as the thing that it is? It makes no metaphysical claims per se (see [26,27,28]).

4. Active Inference and Time Consciousness

We have previously [1] framed Husserl’s descriptions of time consciousness in terms of (Bayesian) belief updating, while further work proposed a mathematical reconstruction of the core notions of Husserl’s phenomenology of time consciousness (retention, primal impression, protention, and the constitution or disclosure of objects in the flow of time consciousness) using active inference [2]. See also [29] for related work. Active inference foregrounds the manner in which previous experience updates an agent’s (Bayesian) beliefs and thereby underwrites behaviors and expectations, leading to a better understanding of the world.

Descriptions of temporal thickness from Husserl’s phenomenology are highly compatible with generative modeling in active inference agents. To connect the phenomenological ideas reviewed above with active inference, consider again how active inference agents update their beliefs about the world based on the temporal flow structure. In active inference, agents continuously update their posterior beliefs by integrating new observations with their existing beliefs. This belief updating involves striking a balance between maintaining the agent’s current beliefs and learning from new information. Retention and primal impression relate to the fact that, in active inference, all past knowledge contributes to shaping beliefs about the present state of the world. In short, active inference bridges prior experiences with current expectations. In this setting, retentions are formalized as the encoding of new information about the world under a generative model. Primal impressions are formalized as the new data that agents sample over time, which force an agent to update its beliefs about the world, leading to a better understanding of the environment and allowing for more effective information- and preference-seeking behavior. Thus, the consciousness of inner time can be modeled as active inference, where prior beliefs (sedimented retentions) are intermingled with ongoing sensory information (primal impression) and contextualized by an unfolding, implicit, and future-oriented anticipation of what will be sensed next (protention).
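The interplay of retention, primal impression, and protention described above can be given a hedged, illustrative reading as standard forward (Bayesian) filtering over a discrete hidden Markov model. In this sketch, the running belief stands in for sedimented retention, each incoming observation for primal impression, and the one-step prediction of the next hidden state for protention. The two-state model, the made-up likelihood `A` and transition `B` matrices, and the helper names `predict` and `update` are assumptions for illustration, not a reproduction of the formal model in the cited work.

```python
def normalize(p):
    z = sum(p)
    return [x / z for x in p]


def predict(B, belief):
    """Protention: anticipate the next hidden state, Q(s') = sum_s B[s'][s] Q(s)."""
    n = len(belief)
    return [sum(B[s_next][s] * belief[s] for s in range(n)) for s_next in range(n)]


def update(A, prior, o):
    """Fold the new observation (primal impression) into the retained prior."""
    return normalize([A[o][s] * prior[s] for s in range(len(prior))])


A = [[0.8, 0.3],   # P(o=0 | s)
     [0.2, 0.7]]   # P(o=1 | s)
B = [[0.9, 0.2],   # P(s_next=0 | s)
     [0.1, 0.8]]   # P(s_next=1 | s)

belief = [0.5, 0.5]  # initial prior before any experience
for o in [0, 0, 1, 1, 1]:
    prior = predict(B, belief)    # what was anticipated for this moment
    belief = update(A, prior, o)  # retained past combined with the new impression
    print(o, [round(b, 3) for b in belief])
print("protention:", [round(p, 3) for p in predict(B, belief)])
```

Each step carries forward everything retained so far, so a single surprising observation shifts the belief only partially, while a run of consistent observations reshapes both the current belief and the anticipated next state.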

4.1. Mapping Husserlian Phenomenology to Active Inference Models

As illustrated in Table 1 and previously explored in [1], active inference maps comprehensively onto Husserlian phenomenology. Observations represent the hyletic data, namely, sensory information that sets perceptual boundaries but is not directly perceived. The hidden states correspond to perceptual experiences, namely, what is inferred from the sensory input. The likelihood and prior transition matrices are associated with the idea of sedimented knowledge: they represent the background understanding and expectations that scaffold perceptual encounters. The preference matrix evinces a similarity to Husserl’s notions of fulfillment or frustration; it represents the expected results or preferred observations. The initial distributions represent the prior beliefs of the agent that are shaped by previous experiences. In particular, the habit matrix resonates with Husserlian notions of horizon and trail set. Collectively, these aspects of the generative model entail prior expectations and the possible courses of action.

Table 1.

Parameters used in the general model under the active inference framework and their phenomenological mapping.
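One way to make the mapping in Table 1 concrete is to annotate the components of a discrete generative model with their suggested phenomenological correlates. In this sketch, the letters A to E follow a common naming convention in discrete active inference and are an assumption here, as is storing the preferences C as a normalized distribution rather than as log-preferences; the class name `GenerativeModel` and its `validate` method are hypothetical. Observations (hyletic data) and hidden states (perceptual experiences) appear implicitly as the row and column indices of the matrices.

```python
from dataclasses import dataclass
from typing import List

Matrix = List[List[float]]


@dataclass
class GenerativeModel:
    """Discrete generative model; comments give the correlate suggested in Table 1.

    Rows of A index observations (hyletic data); columns of A and B index
    hidden states (perceptual experiences). The mapping is a reading, not an identity.
    """
    A: Matrix        # likelihood P(o|s): sedimented knowledge linking data to experience
    B: Matrix        # transitions P(s'|s): sedimented knowledge of how experience unfolds
    C: List[float]   # preferred outcomes: fulfillment or frustration
    D: List[float]   # initial state prior: beliefs shaped by previous experience
    E: List[float]   # habits (prior over policies): horizon and trail set

    def validate(self, tol: float = 1e-9) -> None:
        """Raise ValueError unless every distribution in the model sums to one."""
        for name, mat in (("A", self.A), ("B", self.B)):
            for col in zip(*mat):  # each column conditions on one hidden state
                if abs(sum(col) - 1.0) > tol:
                    raise ValueError(f"{name} has a column that does not sum to 1")
        for name, vec in (("C", self.C), ("D", self.D), ("E", self.E)):
            if abs(sum(vec) - 1.0) > tol:
                raise ValueError(f"{name} does not sum to 1")


model = GenerativeModel(
    A=[[0.8, 0.3], [0.2, 0.7]],
    B=[[0.9, 0.2], [0.1, 0.8]],
    C=[0.8, 0.2],
    D=[0.5, 0.5],
    E=[0.6, 0.4],
)
model.validate()  # passes silently for well-formed distributions
```

Grouping the components in one structure reflects the point made above: it is the model as a whole, not any single matrix, that entails the agent’s prior expectations and possible courses of action.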

4.2. Intersubjectivity and Intentionality

There is a body of related work on shared intentionality, intersubjectivity, and joint/shared attention that is relevant in this context (see, e.g., [30,31,32,33]). Joint attention is defined as the state in which two or more individuals focus on the same object or event. It involves mutual understanding and the coordination of attention, underpinned by cognitive processes that enable individuals to recognize and follow each other’s gaze or attentional focus, thus establishing a shared point of interest. Shared intentionality is the capacity to share psychological states, including intentions, beliefs, and goals, with others in order to coordinate.

These concepts have been developed in a Bayesian framework, which is quite germane to our own approach. Intersubjectivity is here seen as a consequence of agents conforming to the dependence structures harnessed in the same (or similar) generative models, and of the capacity of the agents to consider events and their sequentiality in a similar way and coordinate around them. For instance, ref. [34] has argued that shared intentionality depends on agents having a shared representation of the joint goal and its context. This is a key aspect of intersubjectivity, as the agents maintain beliefs about a shared goal that they are trying to accomplish together. By observing each other’s actions, agents continuously update their beliefs about the joint goal, which acts as a context for those actions. This involves inferring the other’s intentions and goals from their movements. Through this kind of interactive inference, the agents align their representations and behaviors over time. This models the intersubjective alignment and “coupling” of representations that enable successful joint action in humans.
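The step of inferring a partner’s goal from their movements can be sketched as sequential Bayesian goal inference. This is a toy illustration under our own assumptions, not the interactive inference model of [34]: a partner moves along a line in unit steps toward one of two hypothetical goals, is assumed to be noisily goal-directed with a made-up rationality parameter `beta`, and the observer updates a posterior over goals after each observed step. The names `GOALS`, `step_likelihood`, and `infer_goal` are hypothetical.

```python
import math

# Hypothetical setup: a partner moves on a line toward one of two goals.
GOALS = {"left": -5.0, "right": 5.0}


def step_likelihood(step, position, goal, beta=2.0):
    """P(step | goal) for steps of -1 or +1, favoring steps that approach the goal."""
    toward = 1.0 if goal > position else -1.0
    # Softmax over the two possible steps; step * toward is +1 when approaching.
    return math.exp(beta * step * toward) / (math.exp(beta) + math.exp(-beta))


def infer_goal(steps, start=0.0, prior=None):
    """Sequentially update beliefs about the partner's goal from its observed steps."""
    belief = dict(prior or {g: 1 / len(GOALS) for g in GOALS})
    position = start
    for step in steps:
        belief = {g: belief[g] * step_likelihood(step, position, x) for g, x in GOALS.items()}
        z = sum(belief.values())
        belief = {g: b / z for g, b in belief.items()}
        position += step
    return belief


print(infer_goal([+1, +1, -1, +1]))  # mostly rightward steps favor the "right" goal
```

In a genuinely interactive setting, both agents would run such inferences about each other and act on the results, which is what allows their representations of the joint goal to converge.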

In a different study, ref. [35] investigated ant colony foraging behavior using an active inference model. The study modeled ant behavior in a T-maze paradigm, simulating how ants discover food sources and communicate these locations to the colony through pheromone trails. This behavior is formalized via active inference, emphasizing the ants’ ability to make predictions and inferences about their environment based on sensory inputs and prior knowledge. This study also illustrates how shared representations and goals (e.g., locating food sources) facilitate coordinated actions within the ant colony. The ants’ behavior, driven by hierarchical Bayesian inference, showcases a form of temporal integration where actions are predictive of the future, based on the anticipated positions of resources and the inferred intentions of other ants.

We draw partly from this work to justify our claim that the structure of temporality enables coordination, but we take a significant step beyond merely sharing similar predictive models. We suggest that agents do not just align their predictions of the future, but rather, also integrate the predictive models of others into their own. This integration reflects a deeper level of intersubjectivity, where the contents that are protended include anticipatory representations of other agents’ future states of mind. Such integration is akin to a form of theory of mind, where understanding and predicting the mental states of others, including their intentions and future actions, are essential for complex social interactions. It also entails that the distinction between self and other becomes blurrier as we move up a predictive hierarchy, which may help explain the co-constitutive nature of the self.

4.3. An Active Inference Approach to Shared Protentions

Active inference often involves agents making inferences about each other’s mental states by attributing cues to underlying causes [36]. The emergence of communication and language in collective or federated inference provides a concrete example of how these cues become standardized across agents [37]. Individual agents leverage their beliefs to discern patterns of behavior- and belief-updating in others. This entails a state of mutual predictability that can be seen as a communal or group-level reduction in (joint) free energy [36,38]. When agents are predictable to each other, they can anticipate each other’s actions in a complementary fashion, in a way that manifests as generalized synchrony [39]. This is similar to how language emerges in federated inference as a tool for minimizing free energy across agents in a shared econiche [40].

As agents in a group exhibit similar behaviors, they generate observable cues in the environment (e.g., an elephant path through a park) that guide other agents towards the same generative models and nudge agents towards the same behavior. This has been discussed in terms of “deontic value”, which scores the degree to which an agent’s observation of a behavior will cause that agent to engage in that behavior [40]. Alignment can thus be achieved by agents that share similar enough goals and exist in similar enough environments, thereby reinforcing patterns of behavior and epistemic foraging for new information [36,40]. Individual agents perceive the world, link observable “deontic cues” to the latent states and policies that cause them, and observe others, using these cues to engage in situationally appropriate ways with the world. These deontic cues might range from basic road markings to intricate semiotics or symbols such as language [41].
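One simple way to read deontic value in code is to let observations of others’ behavior shape an agent’s habitual prior over its own policies. This sketch assumes, following the usual discrete-time formulation, that a habit prior E enters policy selection as P(u) = softmax(ln E − γG), and further assumes (our own illustrative choice, not the formulation of [40]) that each observed instance of a behavior increments a Dirichlet pseudo-count for it. The behaviors, expected free energies, and the function name `policy_posterior` are hypothetical.

```python
import math


def softmax(x):
    m = max(x)
    e = [math.exp(v - m) for v in x]
    z = sum(e)
    return [v / z for v in e]


def policy_posterior(counts, G, gamma=1.0):
    """P(u) = softmax(ln E - gamma * G), with habit prior E learned from counts."""
    total = sum(counts)
    ln_E = [math.log(c / total) for c in counts]
    return softmax([le - gamma * g for le, g in zip(ln_E, G)])


behaviors = ["paved path", "elephant path"]
G = [1.0, 1.1]       # own expected free energy: the paved path is slightly better
counts = [1.0, 1.0]  # Dirichlet pseudo-counts over behaviors (flat habit prior)
print(policy_posterior(counts, G))

for observed in ["elephant path"] * 10:  # deontic cues: others taking the shortcut
    counts[behaviors.index(observed)] += 1.0
print(policy_posterior(counts, G))
```

Before any cues are observed, the agent slightly prefers the paved path on the basis of its own expected free energy; after repeatedly seeing others take the shortcut, the learned habit prior outweighs that small difference and the agent is more likely to follow the elephant path.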

Consider, for example, someone wearing a white lab coat. You and the person wearing the lab coat share some similarities that lead you to believe that the coat means the same thing to them as it does to you. You know that lab coats are generally worn for scientific or medical purposes (sedimented retentions scaffolded by the cognitive niche). The person wearing the lab coat is standing in a street near a hospital building. From all these cues, without ever having worn a lab coat yourself or being a doctor, you can make a reasonably confident guess that this individual is a doctor.
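The lab coat example can be worked through as a small Bayesian calculation in which each cue multiplies the odds that the person is a doctor. This is a minimal sketch assuming that the cues are conditionally independent given the hidden cause (a naive Bayes simplification), with made-up base rates and likelihoods; the function name `posterior_doctor` is hypothetical. The likelihoods play the role of the sedimented knowledge, scaffolded by the niche, that links cues to their causes.

```python
def posterior_doctor(prior, cues):
    """Naive-Bayes combination of cues: P(doctor | cues).

    cues -- list of (P(cue | doctor), P(cue | not doctor)) pairs, assumed
            conditionally independent given the hidden cause.
    """
    odds = prior / (1 - prior)
    for p_given_doc, p_given_not in cues:
        odds *= p_given_doc / p_given_not  # each cue multiplies the odds
    return odds / (1 + odds)


prior = 0.01                # hypothetical base rate of doctors among passers-by
lab_coat = (0.6, 0.02)      # P(lab coat | doctor), P(lab coat | not doctor)
near_hospital = (0.7, 0.1)  # P(near hospital | doctor), P(near hospital | not doctor)

print(round(posterior_doctor(prior, [lab_coat]), 3))
print(round(posterior_doctor(prior, [lab_coat, near_hospital]), 3))
```

With these illustrative numbers, the lab coat alone raises the probability from 1% to roughly 23%, and the additional context of the hospital raises it to roughly 68%: neither cue is decisive on its own, but together they support a fairly confident guess.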

Similarly, gathering interoceptive cues to infer one’s own internal states can enable individuals to make sense of other people’s behavior and to infer the internal states of others [42,43]. Within a multi-agent system, the environment is complex, encompassing both abiotic and social niches. The abiotic niche comprises the physical and inanimate components of the environment, whereas the social niche comprises the interactions and observations resulting from the activities of other agents. This differentiation underscores that the environment is not shaped exclusively by agents but by an intricate, self-sustaining interaction between living and non-living things. It suggests that agents not only acquire knowledge about their environment to understand and navigate it efficiently, but also acquire knowledge about others and, implicitly, about themselves in tandem.

These ideas frame the core question that will concern us presently: how should we model such shared protentions? To model shared protentions effectively, category theory becomes a key epistemological resource. Its mathematical precision and structural generality provide a framework for describing the cohesive behavior of agents with regard to shared objectives or future perspectives. It allows us to express the fundamental relationships among the characteristics of agents’ interactions, their goals and protentions, and the organization of their environmental resources. This theoretical framework allows for a formal and scalable understanding of shared protentions, highlighting the interconnections and relational dynamics between individuals in a complex setting.

6. Closing Remarks

This paper integrates Husserlian phenomenology, active inference in theoretical biology, and category theory. We have formalized collective action and shared goals using mathematical tools of increasing generality. We anchored this formalism in phenomenology by delving into Husserl’s phenomenology of inner time-consciousness, emphasizing retention, primal impression, and protention. We then proposed that these concepts could be connected in the formation of shared goals in social groups. With a short overview of active inference, we cast the action–perception loop of cognitive agents as variational inference, furnishing a construct isomorphic to time consciousness. Building on this, we reviewed the relationship between Husserl’s time consciousness and active inference established in a previous paper, showing how past experiences and expectations influence present behavior and understanding. We then used category theory to model shared protentions among active inference agents, drawing on existing concepts such as polynomial functors and sheaves, which were needed to account for the complexity of shared protentions. Our paper achieves a conceptual and mathematical image of the interconnection among agents that enables them to coordinate in large groups across spatiotemporal scales. It dissolves the boundaries between externalist and internalist perspectives by demonstrating the intrinsic connections of perceptions extended in time. This formalization elucidates how agents co-construct their world and interconnect through this process, offering a novel approach to understanding collective action and shared goals.
As is the case with most work in computational neuroscience and psychology, this paper is only one step in a broader, multi-step, iterative process in which we transition from abstract theory- and model-building (as in this paper) to experimental validation. The proposal in this paper represents a point of departure for future work on the phenomenology of shared intentionality; indeed, it is formulated quite broadly and does not yet provide a specific testable model. Developing such a model, one that makes testable hypotheses, is left for future work.

Author Contributions

Conceptualization, M.A., R.J.P., D.A.F. and M.J.D.R.; Formal analysis, T.S.C.S.; Writing—original draft, M.A.; Writing—review & editing, M.A., T.S.C.S., D.A.F., K.F. and M.J.D.R.; Supervision, M.J.D.R. All authors have read and agreed to the published version of the manuscript.

Funding

We wish to acknowledge the Wellcome center grant 088130/Z/09/Z for funding this manuscript in open access.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Albarracin, M.; Pitliya, R.J.; Ramstead, M.J.; Yoshimi, J. Mapping Husserlian phenomenology onto active inference. arXiv 2022, arXiv:2208.09058.

2. Yoshimi, J. The Formalism. In Husserlian Phenomenology; Springer: Cham, Switzerland, 2016; pp. 11–33.

3. Yoshimi, J. Husserlian Phenomenology: A Unifying Interpretation; Springer: Cham, Switzerland, 2016.

4. Ramstead, M.J. Naturalizing what? Varieties of naturalism and transcendental phenomenology. Phenomenol. Cogn. Sci. 2015, 14, 929–971.

5. Petitot, J. Naturalizing Phenomenology: Issues in Contemporary Phenomenology and Cognitive Science; Stanford University Press: Redwood City, CA, USA, 1999.

6. Husserl, E. The Phenomenology of Internal Time-Consciousness; Indiana University Press: Bloomington, IN, USA, 2019.

7. Andersen, H.K.; Grush, R. A brief history of time-consciousness: Historical precursors to James and Husserl. J. Hist. Philos. 2009, 47, 277–307.

8. Poleshchuk, I. From Husserl to Levinas: The role of hyletic data, affection, sensation and the other in temporality. Problemos 2009, 76, 112–133.

9. Husserl, E. The Phenomenology of Intersubjectivity; Springer: Berlin/Heidelberg, Germany, 1973.

10. Sokolowski, R. The Formation of Husserl’s Concept of Constitution; Springer Science & Business Media: New York, NY, USA, 2013; Volume 18.

11. Hoerl, C. Husserl, the absolute flow, and temporal experience. Philos. Phenomenol. Res. 2013, 86, 376–411.

12. Bergson, H. Matière et Mémoire; République des Lettres: Paris, France, 2020.

13. James, W. Principles of Psychology 2007; Cosimo: New York, NY, USA, 2007.

14. Laroche, J.; Berardi, A.M.; Brangier, E. Embodiment of intersubjective time: Relational dynamics as attractors in the temporal coordination of interpersonal behaviors and experiences. Front. Psychol. 2014, 5, 1180.

15. Husserl, E. Cartesian Meditations: An Introduction to Phenomenology; Springer Science & Business Media: New York, NY, USA, 2013.

16. Husserl, E. The Crisis of European Sciences and Transcendental Phenomenology: An Introduction to Phenomenological Philosophy; Northwestern University Press: Evanston, IL, USA, 1936.

17. Heinämaa, S. Self–A phenomenological account: Temporality, finitude and intersubjectivity. In Empathy, Intersubjectivity, and the Social World: The Continued Relevance of Phenomenology; De Gruyter: Berlin, Germany, 2022.

18. Rodemeyer, L.M. Intersubjective Temporality; Springer: Cham, Switzerland, 2006; pp. 181–198.

19. Benford, S.; Giannachi, G. Temporal trajectories in shared interactive narratives. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 73–82.

20. Smith, R.; Friston, K.J.; Whyte, C.J. A step-by-step tutorial on active inference and its application to empirical data. J. Math. Psychol. 2022, 107, 102632.

21. Parr, T.; Pezzulo, G.; Friston, K.J. Active Inference: The Free Energy Principle in Mind, Brain, and Behavior; MIT Press: Cambridge, MA, USA, 2022.

22. Friston, K.; Da Costa, L.; Sajid, N.; Heins, C.; Ueltzhöffer, K.; Pavliotis, G.A.; Parr, T. The free energy principle made simpler but not too simple. Phys. Rep. 2023, 1024, 1–29.

23. Ramstead, M.J.; Seth, A.K.; Hesp, C.; Sandved-Smith, L.; Mago, J.; Lifshitz, M.; Pagnoni, G.; Smith, R.; Dumas, G.; Lutz, A.; et al. From generative models to generative passages: A computational approach to (neuro) phenomenology. Rev. Philos. Psychol. 2022, 13, 829–857.

24. Çatal, O.; Nauta, J.; Verbelen, T.; Simoens, P.; Dhoedt, B. Bayesian policy selection using active inference. arXiv 2019, arXiv:1904.08149.

25. Hohwy, J. The self-evidencing brain. Noûs 2016, 50, 259–285.

26. Ramstead, M.J.; Sakthivadivel, D.A.; Heins, C.; Koudahl, M.; Millidge, B.; Da Costa, L.; Klein, B.; Friston, K.J. On Bayesian Mechanics: A Physics of and by Beliefs. arXiv 2022, arXiv:2205.11543.

27. Ramstead, M.J.; Albarracin, M.; Kiefer, A.; Klein, B.; Fields, C.; Friston, K.; Safron, A. The inner screen model of consciousness: Applying the free energy principle directly to the study of conscious experience. PsyArXiv 2023.

28. Ramstead, M.J.; Sakthivadivel, D.A.; Friston, K.J. On the map-territory fallacy fallacy. arXiv 2022, arXiv:2208.06924.

29. Bogotá, J.D.; Djebbara, Z. Time-consciousness in computational phenomenology: A temporal analysis of active inference. Neurosci. Conscious. 2023, 2023, niad004.

30. Tomasello, M.; Carpenter, M. Shared intentionality. Dev. Sci. 2007, 10, 121–125.

31. Tomasello, M. Joint attention as social cognition. In Joint Attention; Psychology Press: New York, NY, USA, 2014; pp. 103–130.

32. Siposova, B.; Carpenter, M. A new look at joint attention and common knowledge. Cognition 2019, 189, 260–274.

33. Vincini, S. Taking the mystery away from shared intentionality: The straightforward view and its empirical implications. Front. Psychol. 2023, 14, 1068404.

34. Maisto, D.; Donnarumma, F.; Pezzulo, G. Interactive inference: A multi-agent model of cooperative joint actions. arXiv 2023, arXiv:2210.13113.

35. Friedman, D.A.; Tschantz, A.; Ramstead, M.J.; Friston, K.; Constant, A. Active inferants: An active inference framework for ant colony behavior. Front. Behav. Neurosci. 2021, 15, 647732.

36. Veissière, S.P.; Constant, A.; Ramstead, M.J.; Friston, K.J.; Kirmayer, L.J. Thinking through other minds: A variational approach to cognition and culture. Behav. Brain Sci. 2020, 43, e90.

37. Friston, K.; Parr, T.; Heins, C.; Constant, A.; Friedman, D.; Isomura, T.; Fields, C.; Verbelen, T.; Ramstead, M.; Clippinger, J.; et al. Federated inference and belief sharing. Neurosci. Biobehav. Rev. 2024, 156, 105500.

38. Ramstead, M.J.; Hesp, C.; Tschantz, A.; Smith, R.; Constant, A.; Friston, K. Neural and phenotypic representation under the free-energy principle. Neurosci. Biobehav. Rev. 2021, 120, 109–122.

39. Gallagher, S.; Allen, M. Active inference, enactivism and the hermeneutics of social cognition. Synthese 2018, 195, 2627–2648.

40. Constant, A.; Ramstead, M.J.; Veissière, S.P.; Friston, K. Regimes of expectations: An active inference model of social conformity and human decision making. Front. Psychol. 2019, 10, 679.

41. Constant, A.; Ramstead, M.J.; Veissiere, S.P.; Campbell, J.O.; Friston, K.J. A variational approach to niche construction. J. R. Soc. Interface 2018, 15, 20170685.

42. Ondobaka, S.; Kilner, J.; Friston, K. The role of interoceptive inference in theory of mind. Brain Cogn. 2017, 112, 64–68.

43. Seth, A. Being You: A New Science of Consciousness; Penguin: London, UK, 2021.

44. St Clere Smithe, T. Polynomial Life: The Structure of Adaptive Systems. In Proceedings of the Electronic Proceedings in Theoretical Computer Science, Cambridge, UK, 12–16 July 2022; Volume 372, pp. 133–148.

45. Myers, D.J. Categorical Systems Theory (Draft). 2022. Available online: http://davidjaz.com/Papers/DynamicalBook.pdf (accessed on 20 December 2023).

46. Spivak, D.I.; Niu, N. Polynomial Functors: A General Theory of Interaction; (In press). Topos Institute: Berkeley, CA, USA, 2021.

47. Robinson, M. Sheaves Are the Canonical Data Structure for Sensor Integration. Inf. Fusion 2017, 36, 208–224.

48. Hansen, J.; Ghrist, R. Opinion Dynamics on Discourse Sheaves. arXiv 2020, arXiv:2005.12798.

49. Hansen, J. Laplacians of Cellular Sheaves: Theory and Applications. Ph.D. Thesis, University of Pennsylvania, Philadelphia, PA, USA, 2020.

50. Abramsky, S.; Carù, G. Non-Locality, Contextuality and Valuation Algebras: A General Theory of Disagreement. Philos. Trans. R. Soc. Math. Phys. Eng. Sci. 2019, 377, 20190036.

51. Hansen, J.; Ghrist, R. Learning Sheaf Laplacians from Smooth Signals. In Proceedings of the (ICASSP 2019) 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019.

52. Shulman, M. Homotopy Type Theory: The Logic of Space. In Proceedings of the New Spaces for Mathematics and Physics, Paris, France, 18–23 September 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).