Abstract

Mobile robot olfaction of toxic and hazardous odor sources is of great significance in anti-terrorism, disaster prevention, and control scenarios. Aiming at the problems of low search efficiency and easily falling into a local optimum of the current odor source localization strategies, the paper proposes the adaptive space-aware Infotaxis II algorithm. To improve the tracking efficiency of robots, a new reward function is designed by considering the space information and emphasizing the exploration behavior of robots. Considering the enhancement in exploratory behavior, an adaptive navigation-updated mechanism is proposed to adjust the movement range of robots in real time through information entropy to avoid an excessive exploration behavior during the search process, which may lead the robot to fall into a local optimum. Subsequently, an improved adaptive cosine salp swarm algorithm is applied to confirm the optimal information adaptive parameter. Comparative simulation experiments between ASAInfotaxis II and the classical search strategies are carried out in 2D and 3D scenarios regarding the search efficiency and search behavior, which show that ASAInfotaxis II is competent to improve the search efficiency to a larger extent and achieves a better balance between exploration and exploitation behaviors.

1. Introduction

Odor source localization (OSL) is important for anti-terrorist attacks and disaster emergency response, such as the leakage of toxic flammable and explosive gases [1], nuclear accidents [2], volcanic eruptions [3], as well as multiple scenarios. The occurrence of these events has a considerable impact on human safety, atmospheric environment, and many other aspects. The release of these odor sources may pose a threat to human health, or perhaps provide clues to the location of a resource. The ability to identify and quantify these sources, to monitor source emissions, or to respond quickly to an incident is critical.

Early odor source localization is usually processed using static sensor networks and weather station networks [4,5], through which the early detection of places of interest or strategically important sites can be performed. However, static sensing network methods have significantly long sampling times, limited measurement ranges, and relatively high installation and maintenance costs. Therefore, with the rapid development of small sensors and smart mobile search devices [6], which are competent to overcome the problems of maintenance, power supply, network design, and cost of large static sensor networks [7], searching for release sources using smart mobile search devices is gradually becoming a mainstream approach to source localization. At present, mobile robot olfaction is mainly divided into three types of situations: reactive strategies, heuristic strategies, and cognitive strategies.

Reactive strategies are the earliest situations in the field of OSL performed by mobile robots, and draw inspiration from the behavior of organisms searching for food, mates, or other predators, e.g., moths [8] and Escherichia coli [9], for the purpose of autonomously tracking chemical plumes. Reactive strategies are generally gradient-based, e.g., zigzag–silkworm [10] and spiral–surge [11], in which the robot moves repeatedly along the chemical concentration gradient, constantly steering so that the robot moves to the side with a higher chemical concentration. Reactive strategies are suitable for diffusion-dominated airflow environments and are usually used for micro-vehicles or ground robots to search for odor sources in indoor environments without a strong airflow [12]. After that, some researchers have performed OSL by some heuristic strategies, which are generally designed for multiple robots and consider OSL as a mathematical optimization problem. During the optimization process, different optimization algorithms can be chosen to update the objective function, such as whale optimization algorithm [13], particle swarm optimization [14], and reinforcement learning methods [15]. However, most real-world scenarios are dominated by turbulence, and the fluctuations generated by turbulence make the search process more complicated. The instantaneous concentration of odors presents a curved and intermittent structure, at which time there is no more accurate concentration gradient, and thus the robot is prone to falling into a local optimum during the OSL process, leading to the failure of reactive search strategies as well as heuristic strategies. Cognitive search strategies based on probabilistic inference are built on the foundation of Bayesian theory, which provides a statistically rigorous approach to deal with uncertainty in the inference process [16]. A variety of stochastic factors, such as sensor errors and intermittent turbulence, can be taken into account while incorporating the available observational data, which provide stronger robustness under the condition of sparse cues. Cassandra et al. [17] investigated the uncertainty of mobile robot navigation through discrete Bayesian models. Later, Vergassola et al. [18] introduced information entropy based on the Bayesian model and proposed Infotaxis. Infotaxis has now been successfully applied to many OSL tasks and demonstrated a fast and stable search capability [19]. Ristic et al. [20] implemented autonomous search for release sources in unknown environments using Rao-Blackwellised particle filters with entropy-decreasing motion control in a Bayesian framework. Eggels et al. [21] implemented Infotaxis in three dimensions. Ruddick et al. [22] evaluated the improved performance of Infotaxis under different environmental conditions, which showed that Infotaxis had an excellent OSL performance at high wind speeds.

In recent years, new cognitive search strategies have been proposed. Hutchinson et al. [23] designed a reward function based on maximum entropy sampling, known as the Entrotaxis, but the function performs poorly in two dimensions, which may be caused by the strong gradient near the source in the model, leading to biased decisions based on local random samples. Song et al. [24] proposed a reward function that combines entropy and potential energy, which explores more information through entropy and uses the potential energy to enhance the chasing behavior as uncertainty recedes, effectively solving the problem of the classic Infotaxis being more likely to fall into local self-trapping. Rahbar et al. [25] designed an enhanced navigation strategy that allowed the robot to maintain a balance between exploration and exploitation behaviors during the search process and used the Metropolis–Hasting method [26] for the estimation of the source location parameters, which reduces the cost associated with the probabilistic inference computation. Ji et al. [27] proposed the MEGI-taxis, which reconstructs the posterior probability density function (PDF) using a Gaussian mixture model (GMM) and guides the searcher by using the maximum effective Gaussian distribution (MEGI), performing more accurately and quickly than other cognitive strategies in turbulent environments. Park et al. [28] combined Infotaxis with the GMM to propose GMM-Infotaxis, where the GMM appropriately clustered all possible source locations to promote more exploitation behaviors, which contributed to a better trade-off between exploration and exploitation. Zhao et al. [29] designed a passive evasion mechanism for the problem of source searching in randomly obstructed environments by marking forbidden zones and allowing the searcher to be forced out of the cluttered location to improve the performance of the cognitive strategy in obstructed environments. To address the real-life problem of obstacles such as buildings obstructing the search path and interfering with the flow of air sources, An et al. [30] introduced rapidly exploring random trees (RRTs) as a local path planner for source search to generate efficient paths in a continuous domain filled with obstacles, and RRT-Infotaxis can autonomously find a balance between exploration and exploitation.

Although the above literature improved Infotaxis to a certain extent, it still suffers from the problems of a lower search efficiency and the failure to obtain a better balance between exploration and exploitation behaviors, which leads the robot to fall into a local optimum during the search process. In this regard, this paper proposes the adaptive space-aware Infotaxis II (ASAInfotaxis II). Aiming at the problem of the low efficiency of the current cognitive strategies, a reward function focusing on the exploration behavior is designed. From the idea of balancing the exploration and exploitation behaviors, an adaptive navigation-updated mechanism is proposed, which adjusts the moving range of robots in each step in real time through information entropy to prevent the robot from falling into the local optimum in the process. For different application scenarios, the adaptive cosine salp swarm algorithm (ACSSA) is used to find the optimal information adaptive parameter to obtain the optimal search path.

The remainder of this paper is organized as follows. Section 2 describes the mathematical models for the plume model, gas sensing model, and robotic cognitive search strategies. Section 3 provides the conceptual solution of ASAInfotaxis II, including the design of a reward function, the adaptive navigation-updated mechanism, and the adaptive cosine salp swarm algorithm. Section 4 conducts numerical simulation experiments to compare the differences in search performance and search behavior between ASAInfotaxis II and several classical cognitive search strategies. In the last section, the conclusions and future work are presented.

2. Materials

2.1. Isometric Plume Model

The airflow in a macroscopic scene is almost always in a turbulent state. After being subjected to turbulence, the plume is twisted into various kinds of jagged fragment-like morphologies, and the position of the plume changes constantly with the wind speed and direction, which make it difficult to express the motion state of the plume. In this paper, an isotropic plume model, which is commonly used in turbulent environments, was used to characterize the random distribution and sparseness of plumes. In this model, detectable “particles” are emitted by the odor source at a rate of , and the emitted particles have a finite lifetime , are transported by a mean wind , and propagate with isotropous effective diffusivity D [31,32]. Assuming that the wind is blowing in the negative direction along the x-axis, the average stationary concentration field produced by the odor source located at satisfies the following advection–diffusion equation [33]:

Then, in 2D, the analytical solution of the concentration field is:

where and denotes the zero-order corrected Bessel function. Similarly, the analytical solution of the concentration field in 3D is:

According to Smoluchowski’s expression [34], a spherical object of linear size moving into the media undergoes a series of collisions at the rate . Therefore, the model takes the capture plume cues as particle collisions and describes the spatial distribution of the cues by particle collision probability. The cue capture rate of the robot at position for the odor source located at is [18]:

Similarly, the cue capture rate at position for the odor source in 3D is [18]:

2.2. Gas Sensing Model

When the sensor measures chemical substances diffused in a fluid, the instantaneous concentration gradient detected fluctuates greatly and is prone to sudden changes. In this paper, a binary detection sensor was utilized to process the sampled concentration, and the robot was considered to have captured the plume information when the detected value of the sensor was greater than a set threshold , at which time the sensing result was “1”; otherwise, the sensing result was “0”. Therefore, the robot’s cue capture rate at position is approximated as a Poisson process. The probability that the robot captures a cue at position for times during the time interval is [24]:

2.3. Cognitive Search Strategy

Cognitive strategies divide the entire search area into a grid, and the robot continuously updates the PDF of the odor source location through real-time measurements from sensors. The darker the grid color, the higher the probability that the odor source is in that grid. In the initial stage of the algorithm, the whole environment map is unknown, and the probability that each grid is an odor source is equal, and the probability exhibits a uniform distribution. After each measurement, the PDF is updated according to the plume model, and the robot moves to the next target point based on the reward function. As the entropy of the PDF becomes smaller and smaller, the robot gradually approaches the odor source. The entire cognitive search process is often formulated as partially observable Markov decision processes (POMDPs) [35,36]. POMDPs consist of three main elements: an information state, a reward function, and a set of admissible actions [37]. When the PDF acts as the information state, the current knowledge about the odor source is completely specified by the PDF. The reward function maps each acceptable action as a non-negative real number that represents a measure of the expected knowledge gain, and the optimal strategy represents the action with the highest reward function. The set of allowed actions represents the set of optional positions for next step of the robot.

2.3.1. Information State

As the search proceeds, the robot obtains a series of detection sequences [], which can be obtained from the trajectory by Bayesian inference with the PDF of the source location . The trajectory is defined as:

where denotes the total number of robot captures cues along the trajectory , corresponds to the moment of cue capture, denotes that no plume cue was captured, and denotes that a plume cue was captured.

Based on this, the of the odor source after the robot undertakes trajectory is:

2.3.2. Reward Function

Assuming that the search region is Ω, the information entropy is calculated as:

The robot arrives at position at time , and its collected information is in , at which point the information entropy is . The decrease in information entropy when the robot moves to a neighboring position or remains stationary is [18]:

where and represents the probability that the robot captures the plume cue at the kth detection. indicates the probability that the odor source is located at . The reward function consists of two terms. The first term on the right is the exploitation term, which refers to the use of already acquired knowledge to travel to the most probable source location, and the second term on the right is the exploration term, which refers to the gathering of more information and obtaining a more reliable estimate of the odor source.

In addition, new reward functions for improving exploration and exploitation equilibrium are proposed:

The Infotaxis II [19] reward function is defined as:

The Entrotaxis [23] reward function is defined as:

The Sinfotaxis [38] reward function is defined as:

where is the threshold value that considers the distance to find the odor source.

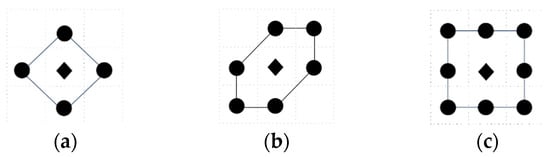

2.3.3. A Set of Admissible Actions

In 2D, a set of admissible actions is available in 4-direction, 6-direction, as well as 8-direction sets, as shown in Figure 1. The black “◆” indicates the current location of the robot, and the black “●” indicates the optional target point for the next move. It should be noted that, for the majority of cognitive strategies, the evaluation of the next goal point also includes the current location of the robot; as Entrotaxis is based on the maximum entropy strategy, the current location of the robot is not considered.

Figure 1.

Schematic diagram of admissible action sets in a 2D space: (a) 4-direction; (b) 6-direction; and (c) 8-direction sets.

In 3D, a set of admissible actions is available in 6-direction, 14-direction, and 26-direction sets. The left, main, and top views of their pathway units are shown in Figure 2. The pathway units can be imagined as within a positive 3D space, and the robot is located at the center of the positive 3D space. Similarly, the black “◆” indicates the current location of the robot, and the black “●” indicates the optional target point for the next move.

Figure 2.

Schematic diagram of admissible action sets in a 3D space: (a) 6-direction set, left view; (b) 6-direction set, main view; (c) 6-direction set, top view; (d) 14-direction set, left view; (e) 14-direction set, main view; (f) 14-direction set, top view; (g) 26-direction set, left view; (h) 26-direction set, main view; and (i) 26-direction set, top view.

3. Adaptive Space-Aware Infotaxis II Search Scheme

3.1. Construction of Space-Aware Infotaxis II

A very important component of cognitive search strategies is the design of reward functions, which determines how the robot chooses the next moving direction under the current PDF and largely determines the efficiency of OSL. Classical cognitive search strategies introduce information entropy to measure the uncertainty of the environment, which is calculated as shown in Formula (9). Information entropy contains only the mastery of information about the current environment, but does not contain a measure of potential source positions relative to the position of the robot. To improve the exploitation behavior, the distance information metric was introduced to quantify the distance between the robot and all the potential source positions [39]. The distance information metric is updated according to:

where is the PDF in the current state, is all possible source positions, denotes the current position of the robot, and denotes the Manhattan distance between the robot and the potential position (other distance calculations can be used as well, e.g., Euclidean distance and Chebyshev distance). Combining information entropy and distance information as a new reward measure to characterize the uncertainty of the environment, denoted as , the calculation method is as follows:

Therefore, the space-aware Infotaxis (SAI) [39] reward function is:

Suppose the robot arrives at position at time , and its collected information is in . The first term on the right side of Formula (16) indicates that the robot found the location of the odor source using the current information, which means that becomes a function and the information entropy becomes 0 at the next moment, but this term is meaningful only when the robot finds the source location at the next moment, and the remaining moments have a small value of . The second term on the right side is an exploratory term, which means that the robot did not find the source at , where denotes the probability that the robot makes detections at position in a time interval, given by the Poisson distribution. In this regard, the first term is discarded, and a new reward function is established, which enhances the exploration behavior. The new reward function is:

3.2. Adaptive Navigation-Updated Mechanism

To compensate for the performance loss caused by discarding the exploitation term in the reward function, and to better achieve the balance between exploration and exploitation behaviors, this paper proposes an information adaptive navigation-updated mechanism, where the robot relies on the information obtained at each moment to determine the moveable range of the next moment. The adaptive change in movement is set as follows:

where denotes the navigation correction factor, is the information entropy of the current location of the robot, is the maximum value of the information entropy of the environment, denotes the information adaptive parameter, and is the initial range of movement according to:

where is the robot’s moving speed and is the time for the robot to move one grid. In the case that the initial state is unknown to the whole environment, the unknown situation in each place in the environment is the same; so, the initial distribution is set to a uniform distribution, and the information entropy is maximal. The initial PDF is set to:

where is the area of the search region; then, (X, Y) obeys a uniform distribution on , denoted as . Meanwhile, the maximum value of information entropy is found:

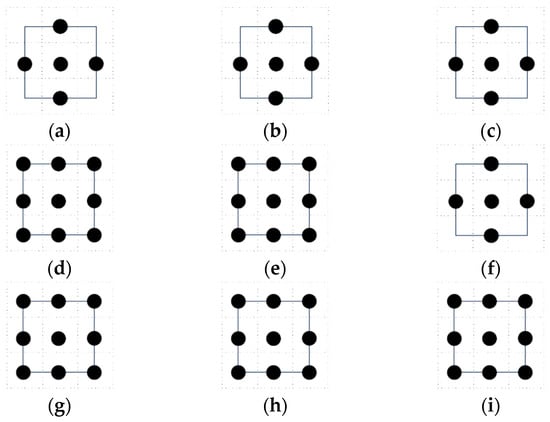

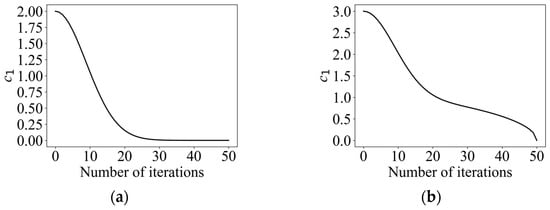

In the process of robot searching, the uncertainty of the environment decreases, the overall information entropy shows a decreasing trend, and gradually increases. The movable range of the robot should be gradually reduced with the searching; so, was introduced. To better adapt to the changes in the environment, the information adaptive parameter was introduced, and its variation range was set to [0, 1.5]. Taking the maximum value of information entropy as an example, the changes in the navigation correction factor with information entropy under different information adaptive parameter are shown in Figure 3. Six values of −0.5, 0.5, 1.1, 1.5, 1.9, and 2.2 were selected for . When the value of is positive, with the reduction in information entropy, gradually decreases, which is in line with the expected design, and the exploration behavior in the early stage is enhanced, and the exploitation behavior in the later stage is enhanced. When the value of is negative, the trend of the change in is just the opposite, which is not in line with the original intention of the design. Therefore, should be selected as a positive number. However, it should be noted that, if a too large value of is selected, there will be a situation where the search range is particularly large at the beginning, which may exceed the search range and miss important information about the odor plume; and at a later stage, there can be a situation where the search range is too small, which will result in an excessive exploitation behavior of the robot, which limits the confirmation of the odor source and leads the robot to fall into a local optimum. In this regard, [0, 1.5] was selected as the interval of variation of .

Figure 3.

Variation curves of the navigation correction factor under different information adaptive parameters.

3.3. Finding Optimal Information Adaptive Parameters Based on ACSSA

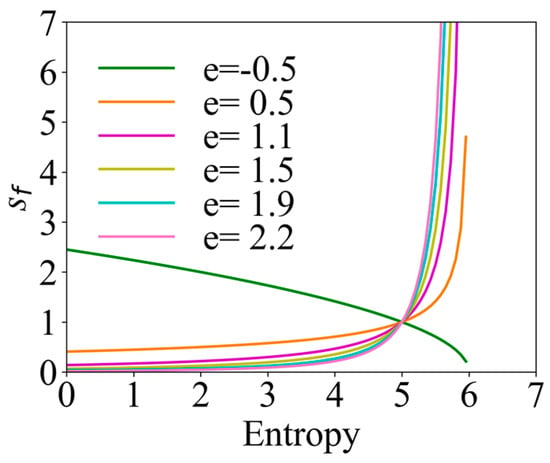

3.3.1. Multi-Peak Optimization Problem

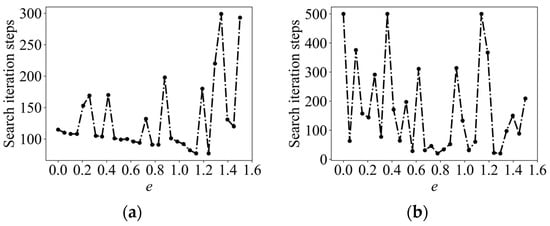

The information adaptive parameter was finely divided in the range of [0, 1.5] by enumeration and the corresponding number of iteration steps was calculated to find out the relatively shortest search path, called enumerating space-aware Infotaxis II (ESAInfotaxis II). Taking the 4-direction set in 2D and the 6-direction set in 3D as an example, the change curve of the number of iterative steps with is shown in Figure 4. The change in the number of iterative steps does not conform to the law of linear change, whether in 2D or 3D, and the optimal path-solving problem has multiple local optimal values.

Figure 4.

Multi-peak optimization problem: 2D parameters: , , , , , , , and odor source location (1, 6.4); 3D parameters: , , , , , , , , and odor source location (2, 2, 5). (a) The 4-direction set in 2D; and (b) 6-direction set in 3D.

To solve the problem of the enumeration method not being able to find the optimal accurately, a swarm intelligence algorithm is proposed to be used for the solution of the optimal problem. As it can be seen in Figure 4, the optimal search path-solving problem has multiple local optimal values and only one global optimal value, which is a multi-peak optimization problem. Therefore, a swarm intelligence algorithm that has a strong global search ability and does not easily fall into the local optimum was considered.

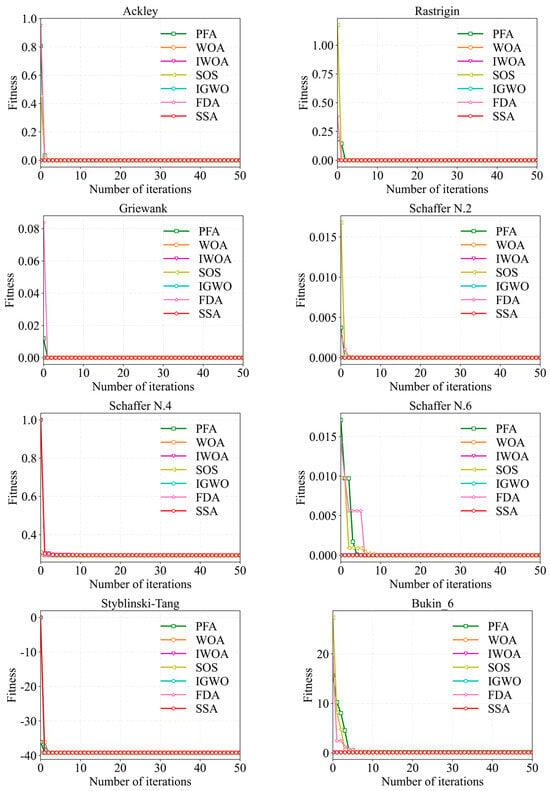

The comparison process of 20 swarm intelligence algorithms under multi-peak test functions can be found in Appendix A. The specific information of multi-peak test functions is shown in Table A1, and the parameters of 20 swarm intelligence algorithms are shown in Table A2. It can be seen in Table A3 and Figure A1 that the salp swarm algorithm (SSA) shows significant competitiveness and stability on the multi-peak test functions with respect to both mean, std as well as time. SSA always maintains a fast convergence speed as well as a high convergence accuracy compared to other algorithms. In this regard, SSA was finally used in this paper for solving the optimal problem.

3.3.2. Adaptive Cosine Salp Swarm Algorithm

SSA simulates the foraging and navigational behaviors of salps in the ocean. Salps behave as a chain when searching for food; the closest salp to the food serves as the leader, called the head of the chain, and the rest of the salps serve as the followers. Each of the followers approaches toward the previous salp, and this chain of salps searches for the food source in the search space. Since the number of variables in this paper is only one parameter of the information adaptive parameter, the leader position of the SSA is updated as follows:

where denotes the location of the leader; denotes the location of the food source, and the optimal solution is assigned to based on the a fitness function; are the upper and lower bounds of the search space, respectively; is the main parameter for balancing the global exploration and the local exploitation, which is determined by Equation (23); and are random numbers generated in the interval [0, 1].

where and denote the current iteration number and the maximum iteration number, respectively. The change curve is shown in Figure 5a. Since solving the optimal path is a multi-peak problem, the algorithm is required to have a strong ability to jump out of the local optimum. Therefore, the cosine control factor was introduced in this paper, which makes the range of the convergence factor become larger, while the decreasing trend becomes slower, and maintains a relatively high global exploration ability at the early stage. , the improved convergence factor, is calculated as shown in Equation (24), and its change curve is shown in Figure 5b.

Figure 5.

(a) Convergence factor curve for a conventional scheme. (b) Cosine convergence factor variation curve.

The follower position update utilizes Newton’s laws of motion as follows:

where and denotes the position of the ith salp follower. Ultimately, the chain of salps can be modeled by Formula (22) and Formula (25).

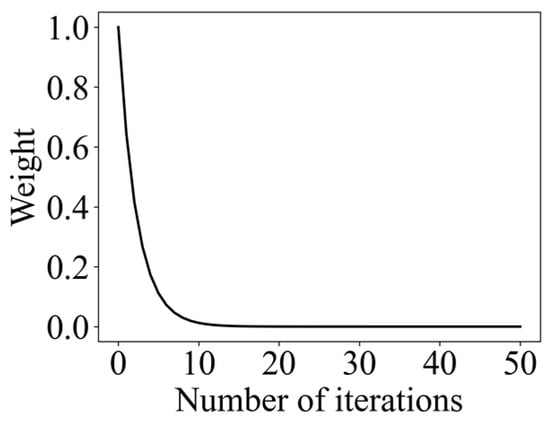

Moreover, an adaptive weight was utilized to control the leader’s search range, as shown in Formula (26), and its change curve is shown in Figure 6. As it can be seen in Figure 6, making the leader search range larger at the beginning helps to enhance the exploration behavior, which improves the convergence speed of the algorithm. As the search proceeds, the leader search range gradually becomes smaller. In the late iteration, the weight is lower, which makes the leader carry out a locally accurate search in the vicinity of the optimal solution, which improves the ability to find the optimal solution. After adding adaptive weights, the position of the leader is updated as expressed in Formula (27).

Figure 6.

Adaptive weight change curve.

Ultimately, we combined the improved reward function SAInfotaxis II and adaptive navigation-updated mechanism with the improved ACSSA to adaptively find the optimal information adaptive parameter , so as to find the shortest search path in a specific scenario, which is called adaptive space-aware Infotaxis II (ASAInfotaxis II).

4. Simulations and Discussion

The section analyzes and compares the performance of ASAInfotaxis II with the classical Infotaxis, Infotaxis II, Entrotaxis, Sinfotaxis, and space-aware Infotaxis for OSL using sparse cues through numerical simulation experiments in 2D as well as 3D to verify the feasibility and effectiveness of ASAInfotaxis II. The experimental simulation environment was Windows 11 64-bit, PyCharm2020, Intel Core i7-12700H processor, and 16 GB RAM. In this paper, the algorithms were evaluated according to the following aspects:

- (1)

- The number of search iteration steps: The sum of steps moved by the robot to complete the search task. One of the moving steps refers to the whole process of the robot staying at the original position, updating the PDF, making a moving decision, and moving to the next target point. The number of iterative steps is the basic index to measure the efficiency of the search methods.

- (2)

- The time to find the source: Since ESAInfotaxis II and ASAInfotaxis II are not fixed-step searches, the evaluation metric of the search time was added to further judge the search efficiency of the algorithms.

- (3)

- Information collection rate: The change in information entropy with the number of search steps in the source search process. The change in information entropy reflects the collection of environmental information in the robot search process in real time.

- (4)

- PDFs of the arrival times: The variation in PDFs with the search time; arrival time pdfs can respond to the ability to find the source of robots.

The robot terminates the search when any of the following conditions are met:

- (1)

- Search iteration steps of the robot reach 500, but the odor source is not found.

- (2)

- The robot is considered to have found the odor source if its distance from the source is within the specified range .

4.1. Simulations for Two-Dimensional Scenarios

4.1.1. Two-Dimensional Simulation Scenario

Since the odor information in a natural scene is sparse and discontinuous, this paper tried to fit the actual application scene as much as possible to achieve OSL in a sparse environment. As it can be seen in the Beaufort scale, the wind speed below 0.2 m/s can be regarded as “no wind (calm)”; so, this paper set the wind speed to 1 m/s to achieve the conditions of sparse odor cues. The search radius of the robot was = 0.1 m, which is in line with the general size of ground robots in real scenarios. The simulated spatial extent was set to 9 m × 8 m, and the gird size was set to 0.1 m × 0.1 m due to the small size of the 2D scene. The effective diffusion coefficient of the plume was , the particle lifetime was , and the odor source emitted at a rate of at the location of (8, 7.4). The robot moved at a speed of 0.1 m/s, and its sensors detected at every 1 s. The initial position of the robot was arbitrarily specified. The sensor detection threshold was , above which the sensing result was “1”. When the position of the robot and the odor source was within the range of , the robot was considered to have found the odor source.

4.1.2. Two-Dimensional Simulation Results

The results of the mean iterative steps after 50 simulations of randomly selecting the initial position of the robot with different movement strategies in 2D scenarios, as set in Section 4.1.1, are shown in Table 1. As it can be seen in Table 1, ASAInfotaxis II, proposed in this paper, shows a very significant improvement in search efficiency compared to the classical cognitive search strategies. The improvement in the search efficiency of SAInfotaxis II using the improved reward function alone is unstable, and the mean iterative steps in the 6-direction set exceed those of the classical strategies, which may be due to the strong exploration behavior that makes the robot fall easily into a local optimal state and “passes by” the odor source. The adaptive navigation-updated mechanism compensates for this by changing the movement range of the robot in real time through information entropy, which becomes relatively small in the later stages of the search, thus making the robot less likely to fall into a local optimum in the source confirmation stage and achieving a balance between the exploration and exploitation behaviors. The search efficiency of ESAInfotaxis II using the enumeration method is also significantly improved, but the comparison with ASAInfotaxis II shows that the optimal cannot be found precisely using the enumeration method, and the search efficiency is further improved by solving with ACSSA.

Table 1.

Comparison of the mean search iteration steps of different algorithms in 2D scenarios.

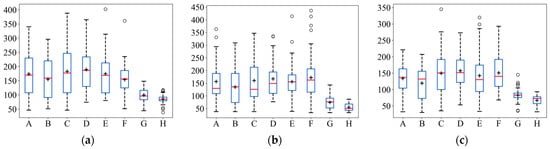

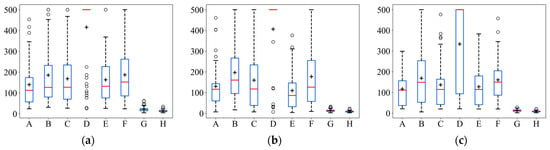

In order to more intuitively represent the numerical distribution of all algorithms, box line plots were used to represent the distribution of each algorithm result in terms of the iterative steps searched under the different initial positions of the robot. The distribution of the search results for the eight strategies is shown in Figure 7. In Figure 7, it can be seen that ASAInfotaxis II shows remarkable stability and excellent search performance under all admissible action sets, and its mean, upper edge, upper quartile, lower edge, lower quartile, and median are consistently lower than those of the other cognitive strategies under the same admissible action set.

Figure 7.

Box line plots of ASAInfotaxis II with the other cognitive search strategies for various admissible action sets in 2D scenarios, where A = Infotaxis, B = Infotaxis II, C = Entrotaxis, D = Sinfotaxis, E = Space-aware Infotaxis, F = SAInfotaxis II, G = ESAInfotaxis II, and H = ASAInfotaxis II. (a) The 4-direction; (b) 6-direction; and (c) 8-direction sets. The black “+” indicates the mean of the data, the red line indicates the median of the data, and the black “○” indicates outliers.

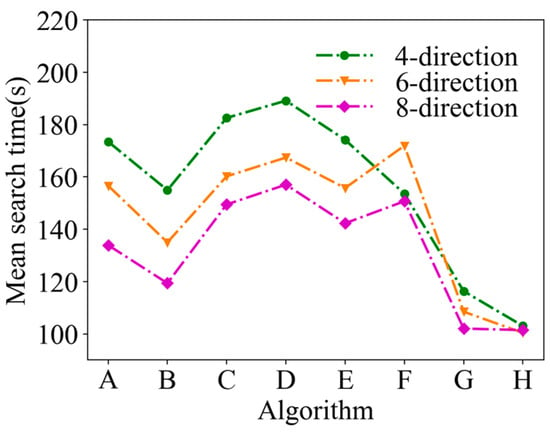

A comparison of the mean search time after 50 simulations under the same conditions is shown in Figure 8. In Figure 8, it can be seen that the mean search time of ASAInfotaxis II remains the lowest under any admissible action set, which indicates a significant improvement in the search efficiency of ASAInfotaxis II.

Figure 8.

Mean search time in a 2D scene, where A = Infotaxis, B = Infotaxis II, C = Entrotaxis, D = Sinfotaxis, E = Space-aware Infotaxis, F = SAInfotaxis II, G = ESAInfotaxis II, and H = ASAInfotaxis II.

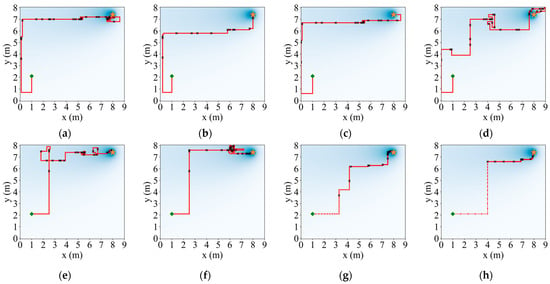

Taking the 4-direction admissible action set as an example, the initial position (1, 2.1) of the robot was randomly selected to make a comparison of the search paths, as shown in Figure 9. In Figure 9, it can be seen that Infotaxis, Sinfotaxis, and space-aware Infotaxis fall into a local optimum during the process. When the new improved SAInfotaxis II reward function is used alone, the robot may fall into a local optimum at a later stage of the search due to an enhanced exploratory behavior, as shown in Figure 9f, but when SAInfotaxis II is combined with the adaptive navigation-updated mechanism, the narrowing of the movement range at a later stage neutralizes well the over-exploration behavior caused by the reward function, and the final search efficiency and search behavior are considerably improved after the optimization by ACSSA, as shown in Figure 9h.

Figure 9.

Comparison results of the search paths under a 4-direction admissible action set. (a) Infotaxis: 209 steps; (b) Infotaxis II: 167 steps; (c) Entrotaxis: 187 steps; (d) Sinfotaxis: 267 steps; (e) Space-aware Infotaxis: 195 steps; (f) SAInfotaxis II: 199 steps; (g) ESAInfotaxis II: 118 steps; and (h) ASAInfotaxis II_ 96 steps. The orange star indicates the true source location, the green square indicates the initial robot position, the red line indicates the trajectory of the robot, red dots indicate zero measurements, and black crosses indicate non-zero measurements.

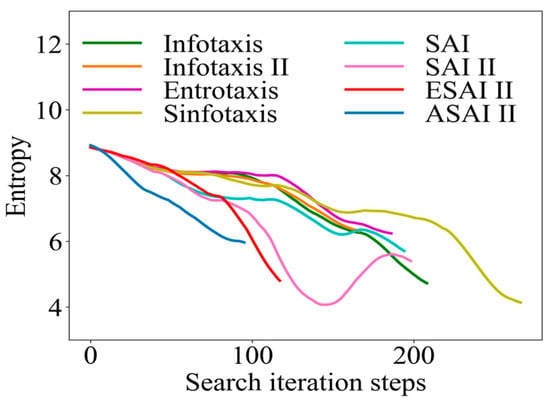

Similarly, taking the 4-direction admissible action set as an example, the initial position of the robot (1, 2.1) was selected to obtain the information collection rate curve, as shown in Figure 10. As mentioned in Section 3.1, when the robot locates the odor source, the information entropy becomes 0. Therefore, we do not show the moment when the final information entropy becomes 0, but focused on analyzing the change in information entropy in the search process. The degree of inclination of the curve reflects the information collection rate of the algorithms. As it can be seen in Figure 10, with the improved SAInfotaxis II reward function alone, the exploratory behavior of the robot is significantly improved compared to several other classical strategies, but the information entropy of such a scheme appears to have rebounded in the later stages of the search, which indicates that the robot is trapped in a local optimum. After the introduction of the adaptive navigation-updated mechanism, this shortcoming is compensated well. Finally, after the optimization by ACSSA, the exploration behavior of ASAInfotaxis II always maintains a significant advantage, and the exploration and exploitation behaviors always maintain a relatively good balance in the whole search process.

Figure 10.

Comparison of the information-gathering rate curves in a 2D scene, where SAInfotaxis II = SAI II, ESAInfotaxis II = ESAI II, and ASAInfotaxis II = ASAI II.

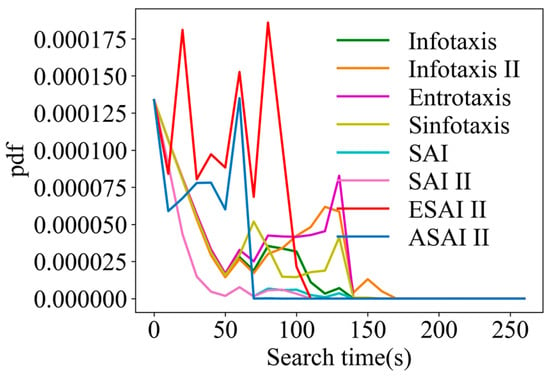

In a 2D scene, a randomly selected fixed point (7, 4) is used to make a curve comparison of the arrival time pdf, as shown in Figure 11. In Figure 11, it can be seen that the several types of classical cognitive search strategies have a poor convergence ability with very slowly decaying tails, especially Infotaxis II, which is due to the fact that only the exploration term is preserved, leading to too much greed. Although only the exploration term is preserved in the novel SAInfotaxis II reward function, distance information is introduced, which effectively enhances the exploitation behavior, and thus this strategy decays much faster. After combining the adaptive navigation-updated mechanism as well as ACSSA on top of SAInfotaxis II, ASAInfotaxis II has a much shorter arrival time with only a relatively short time to converge, indicating that the robot is relatively better at source finding.

Figure 11.

Comparison of the arrival time pdfs in a 2D scene, from the point (7, 4) for ASAInfotaxis II and several cognitive strategies, where SAInfotaxis II = SAI II, ESAInfotaxis II = ESAI II, and ASAInfotaxis II = ASAI II.

4.2. Simulations for Three-Dimensional Scenarios

4.2.1. Three-Dimensional Simulation Scenario

Considering a realistic scene as well as a sparse environment setting, the wind speed was 1 m/s, and the robot search radius was = 0.2 m, which is in line with the general size of the UAV under actual circumstances. The simulated spatial range was set to 18 m × 18 m × 18 m, and the gird size was set to 1 m × 1 m × 1 m because the search range in 3D is larger than that of the scene in 2D. The effective diffusion coefficient of the plume was , the particle lifetime was , and the odor source emitted at a rate of at the location of (2, 2, 5). The robot moved at a speed of 1 m/s, and its sensors detected at every 1 s. The initial position of the robot was arbitrarily specified. The sensor detection threshold was , above which the sensing result was “1”. When the position of the robot and the odor source were within the range of , the robot was considered to have found the odor source.

4.2.2. Three-Dimensional Simulation Results

The results of the mean iterative steps after 50 simulations of randomly selecting the initial position of the robot with different admissible action sets in 3D, as set in Section 4.2.1, are shown in Table 2. In Table 2, ASAInfotaxis II, proposed in this paper, shows a very significant improvement in search efficiency compared to the classical strategies. Similarly, as in the 2D scene, the improvement in search efficiency using SAInfotaxis II alone is erratic, and its mean iterative steps exceed that of the classical strategies under various admissible action sets, which is well compensated for by the adaptive navigational-updating mechanism that achieves a balance between exploration and exploitation behaviors. The search efficiency of ESAInfotaxis II using the enumeration approach is also improved significantly, but the comparison with ASAInfotaxis II shows that the optimal cannot be found accurately by using the enumeration approach, and ACSSA for solving leads to a further improvement in the search efficiency.

Table 2.

Comparison of the mean search iteration steps of the different algorithms in 3D scenarios.

Similarly, in order to visually represent the numerical distribution of the search iterative steps for all algorithms in 3D scenarios, box line plots were made, as shown in Figure 12. In Figure 12, it can be seen that ASAInfotaxis II shows the same remarkable stability and excellent search performance under all the admissible action sets, more so than in 2D scenarios. Its mean, upper edge, upper quartile, lower edge, lower quartile, and median consistently outperform those of the other cognitive search strategies.

Figure 12.

Box line plots of ASAInfotaxis II with the other cognitive search strategies for various admissible action sets in 3D scenarios, where A = Infotaxis, B = Infotaxis II, C = Entrotaxis, D = Sinfotaxis, E = Space-aware Infotaxis, F = SAInfotaxis II, G = ESAInfotaxis II, and H = ASAInfotaxis II. (a) The 6-direction set; (b) 14-direction set; and (c) 26-direction set. The black “+” indicates the mean of the data, the red line indicates the median of the data, and the black “○” indicates outliers.

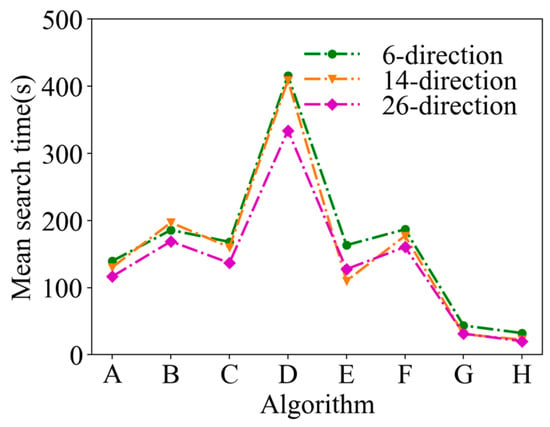

A comparison of the mean search time after 50 simulations under the same conditions is shown in Figure 13. In Figure 13, it can be seen that the mean search time of ASAInfotaxis II remains the lowest under any admissible action set, and the improvement in search efficiency is more pronounced than that in 2D scenarios.

Figure 13.

Mean search time in 3D scenarios, where A = Infotaxis, B = Infotaxis II, C = Entrotaxis, D = Sinfotaxis, E = Space-aware Infotaxis, F = SAInfotaxis II, G = ESAInfotaxis II, and H = ASAInfotaxis II.

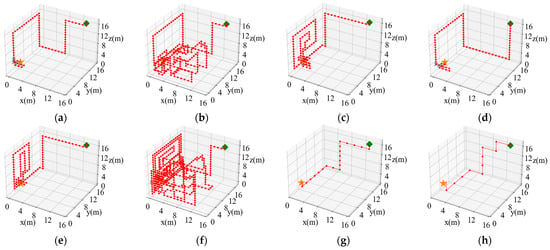

Taking the 6-direction admissible action set as an example, the initial position of the robot (14, 14, 17) was randomly selected to make a comparison of the search paths, as shown in Figure 14. In Figure 14, it can be seen that the classical cognitive search strategies all fall into a local optimum during the process. When using the newly improved SAInfotaxis II reward function alone, the robot also falls into a local optimum due to an enhanced exploration behavior, as shown in Figure 14f, but when SAInfotaxis II is combined with the adaptive navigation-updated mechanism and through the ACSSA optimization search, the search efficiency as well as behavior are considerably improved, as shown in Figure 14h.

Figure 14.

Comparison results of the search paths under the 6-direction admissible action set. (a) Infotaxis: 77 steps; (b) Infotaxis II: 283 steps; (c) Entrotaxis: 129 steps; (d) Sinfotaxis: 81 steps; (e) Space-aware Infotaxis: 113 steps; (f) SAInfotaxis II: 500 steps; (g) ESAInfotaxis II: 21 steps; and (h) ASAInfotaxis II: 12 steps. The orange star indicates the true source location, the green square indicates the initial robot position, the red line indicates the trajectory of the robot, red dots indicate zero measurements, and black crosses indicate non-zero measurements.

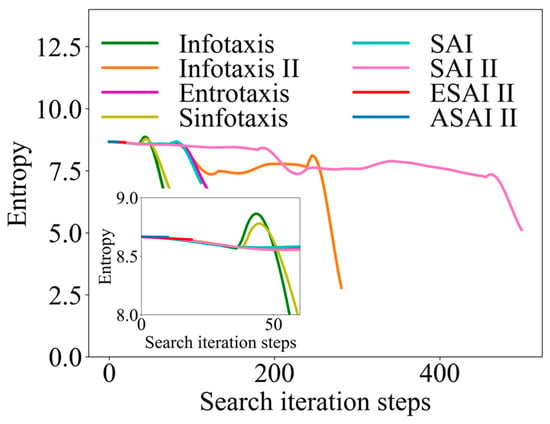

Similarly, taking the 6-direction admissible action set as an example, the initial position of the robot (14, 14, 17) was randomly selected to obtain the information collection rate curve, as shown in Figure 15. Similarly, the moment when the final information entropy becomes 0 was not represented in this paper, focusing on analyzing the change in information entropy during the search process. In Figure 15, it can be seen that, in the classical strategies, the information entropy almost always appears rebounded, which indicates that the robot may fall into a local optimum in the search. However, ASAInfotaxis II avoids this well, and ASAInfotaxis II achieves OSL with only a very small entropy drop, which indicates that this scheme can achieve OSL in the case of not having much information about the environment.

Figure 15.

Comparison of information-gathering rate curves in 3D scenarios, where SAInfotaxis II = SAI II, ESAInfotaxis II = ESAI II, and ASAInfotaxis II = ASAI II.

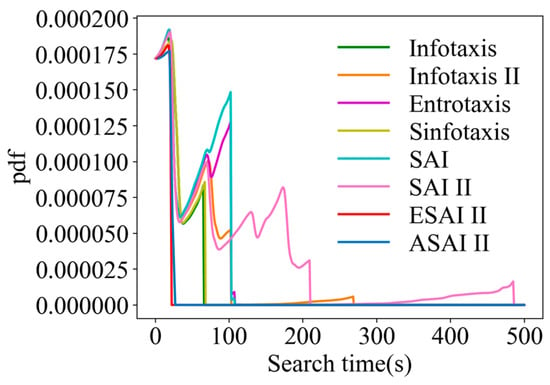

A comparison of the arrival time pdfs is shown in Figure 16 for a randomly selected fixed point (6, 14, 5) in 3D scenarios. From Figure 16, it can be seen that SAInfotaxis II as well as Infotaxis II converge very slowly due to over-exploration. In contrast, the performance of ASAInfotaxis II is improved after the inclusion of the adaptive navigation-updated mechanism, which balances the exploration and exploitation behaviors of the robot relatively well.

Figure 16.

Comparison of the arrival time pdfs in 3D scenarios, from the point (6, 14, 5) for ASAInfotaxis II and several cognitive strategies, where SAInfotaxis II = SAI II, ESAInfotaxis II = ESAI II, and ASAInfotaxis II = ASAI II.

5. Conclusions

Aiming at the problems of the low search efficiency of the current OSL strategies and the imbalance between exploration and exploitation behaviors that cause the robot to easily fall into local optima, ASAInfotaxis II is proposed. ASAInfotaxis II introduces three strategies based on Infotaxis. First, a new reward function is designed, which takes into account distance information and emphasizes the exploration behavior of the robot. Second, the movement range of the robot is adjusted in real time through an adaptive navigation-updated mechanism to avoid the robot falling into a local optimum. Third, ACSSA is used to confirm the optimal information adaptive parameters. Comparative simulation experiments between ASAInfotaxis II and the classical search strategies were conducted in 2D and 3D scenarios with respect to search efficiency and search behavior. The experimental results show that ASAInfotaxis II can quickly and accurately locate the odor source, and the number of iterative search steps in 2D scenarios is reduced by more than 44.96% compared to the classical cognitive strategies, and the number of iterative search steps in 3D scenarios is reduced by more than 90.17% compared to the classical cognitive strategies. The mean search time is also significantly reduced. ASAInfotaxis II demonstrates a good searching ability as well as stability, especially in 3D scenarios, which shows excellent performance and obvious competitiveness. In addition, the information collection rate of ASAInfotaxis II is maintained at a faster rate, implying that the robot has a significant advantage in the collection of environmental information as well as the process of converging to the source of the odor at a later stage. The implementation results show that ASAInfotaxis II can accomplish the efficient localization of odor sources in complex environments, such as rich in local information and with sparse cues.

In future work, we will optimize the search model to make fuller use of the prior information for further narrowing down the possible source locations, thus saving computational costs and speeding up the convergence of the algorithm. It is also planned to extend the OSL problem to more complex scenarios, such as near streets or around buildings.

Author Contributions

Conceptualization, S.L.; methodology, S.L.; validation, S.L., Y.Z. and S.F.; writing—original draft preparation, S.L.; writing—review and editing, S.L. and Y.Z.; funding acquisition, S.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Shijiazhuang Science and Technology Cooperation Special Project, grant number SJZZXB23004. The APC was funded by SJZZXB23004.

Data Availability Statement

The dataset generated from the numerical simulation experiments in this paper can be shared by emailing 202121902015@stu.hebut.edu.cn.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

In this appendix, we provide specific information about 8 multi-peak test functions selected from CEC (International Conference on Evolutionary Computation), as shown in Table A1.

Table A1.

Description of the 8 classic benchmarking functions.

Table A1.

Description of the 8 classic benchmarking functions.

| Function | Dim | Range | Optimum Value | |

|---|---|---|---|---|

| Ackley | 1 | [−32, 32] | 0 | |

| Rastrigin | 1 | [−5.12, 5.12] | 0 | |

| Griewank | 1 | [−600, 600] | 0 | |

| Schaffer N.2 | 2 | [−100, 100] | 0 | |

| Schaffer N.4 | 2 | [−100, 100] | 0.292579 | |

| Schaffer N.6 | 2 | [−100, 100] | 0 | |

| Styblinski–Tang | 1 | [−5, 5] | −39.16599 | |

| Bukin_6 | 2 | 0 |

A comparison of 20 swarm intelligence algorithms was carried out, and the parameters in each algorithm were set for the original document, and the specific parameter settings are shown in Table A2.

The test results for 20 swarm intelligence algorithms running independently for 30 times on 8 benchmark test functions are shown in Table A3. It should be noted that, in this paper, the mean results of Schaffer N.4 are accurate to 0.000001; the mean results of Styblinski–Tang are accurate to 0.00001; and the test results are accurate to 0.0001 in the rest of the cases. In Table A3, PFA, WOA, IWOA, SOS, SSA, FDA, and IGWO show significant competitiveness under all the tested functions, and the performances of both mean and std have strong advantages in finding the global optimum as well as jumping out of the local optimum point, contrasting with the other algorithms. The WOA, IWOA, SOS, and IGWO algorithms were solved to the theoretical optimum in the Ackley, Rastrigin, Griewank, Schaffer N.2, and Schaffer N.6 function tests.

In terms of computational costs, MBO has the best performance. In addition, the PSO, SSA, MVO, MBO, and AOA algorithms also have a relatively short running time, while the PFA, FDA, and DA algorithms require large computations with relatively a high time cost, especially the PFA and FDA algorithms, whose computational cost reaches about one minute, although the PFA and FDA algorithms perform better in terms of global searching and circumventing local optimums, but this comes at the cost of a large amount of time.

Table A2.

Parameter settings of each algorithm.

Table A2.

Parameter settings of each algorithm.

| Algorithm | Specific Parameter Settings |

|---|---|

| PSO | |

| PFA | |

| WOA | |

| IWOA | |

| HHO | |

| SOS | |

| SCA | |

| SSA | |

| MVO | |

| SPBO | |

| MBO | |

| JSO | |

| IGWO | |

| FDA | |

| DA | |

| ALO | |

| AOA | |

| CS | |

| GOA | |

| GA |

It is clear from the above analysis that the PFA, WOA, IWOA, SOS, SSA, IGWO, as well as FDA algorithms show significant advantages in solving the mean and std; so, we next analyzed the convergence speed as well as the convergence ability of the above algorithms only. The convergence curves of the above algorithms on the 8 benchmark functions are shown in Figure A1. In Figure A1, it can be seen that the convergence speed and the convergence accuracy of SOS, PFA, and FDA generally perform poorly. Moreover, although WOA and IWOA also have significant advantages in convergence speed, their convergence accuracy is lower than that of SSA on the Schaffer N.4 and Styblinski–Tang.

Figure A1.

Convergence curves of the swarm intelligence algorithms.

Table A3.

Test results of the swarm intelligence algorithms on the benchmark test functions..

Table A3.

Test results of the swarm intelligence algorithms on the benchmark test functions..

| Function | Criteria | PSO | PFA | WOA | IWOA | HHO | SOS | SCA | SSA | MVO | SPBO |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ackley | Mean | 0.0089 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0006 | 0.0575 |

| Std | 0.0139 | 0.0 | 0.0 | 0.0 | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0007 | 0.2132 | |

| Time | 2.5075 | 87.3500 | 2.7731 | 6.2540 | 3.0480 | 5.2511 | 2.8093 | 2.5220 | 1.9123 | 4.3905 | |

| Rastrigin | Mean | 0.0013 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.2839 |

| Std | 0.0029 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6607 | |

| Time | 2.3012 | 78.0795 | 2.3197 | 5.7256 | 2.7999 | 3.6491 | 2.4768 | 2.3112 | 1.6072 | 3.8947 | |

| Griewank | Mean | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0001 |

| Std | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0005 | |

| Time | 2.1831 | 77.9928 | 2.4811 | 5.7041 | 2.8670 | 4.4761 | 2.3947 | 2.3123 | 1.2743 | 3.9660 | |

| Schaffer N.2 | Mean | 0.0 | 0.0 | 0.0 | 0.0 | 0.0010 | 0.0 | 0.0 | 0.0 | 0.0002 | 0.0067 |

| Std | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0016 | 0.0 | 0.0 | 0.0 | 0.0012 | 0.0082 | |

| Time | 2.2509 | 80.7760 | 2.6066 | 5.8712 | 2.7963 | 4.7656 | 2.5900 | 2.1123 | 1.7282 | 4.1343 | |

| Schaffer N.4 | Mean | 0.292761 | 0.292591 | 0.292658 | 0.292587 | 0.293011 | 0.292580 | 0.292655 | 0.292619 | 0.292586 | 0.294534 |

| Std | 0.0002 | 0.0 | 0.0001 | 0.0 | 0.0007 | 0.0 | 0.0001 | 0.0001 | 0.0 | 0.0020 | |

| Time | 2.4200 | 81.782 | 2.5146 | 5.8164 | 2.8567 | 4.8856 | 2.6282 | 2.2947 | 1.7542 | 4.1134 | |

| Schaffer N.6 | Mean | 0.0084 | 0.0056 | 0.0 | 0.0 | 0.0071 | 0.0 | 0.0053 | 0.0 | 0.0094 | 0.0113 |

| Std | 0.0029 | 0.0047 | 0.0 | 0.0 | 0.0043 | 0.0 | 0.0047 | 0.0 | 0.0017 | 0.0059 | |

| Time | 2.2076 | 82.7493 | 2.4682 | 5.5752 | 2.6915 | 4.3382 | 2.5646 | 2.3579 | 1.7895 | 4.1644 | |

| Styblinski–Tang | Mean | −39.16609 | −39.16617 | −39.16617 | −39.16617 | −39.16616 | −39.16617 | −39.16601 | −39.16617 | −38.69494 | −39.13254 |

| Std | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0002 | 0.0 | 2.5376 | 0.1102 | |

| Time | 2.0835 | 72.2040 | 2.0767 | 5.3305 | 2.8530 | 4.1391 | 2.3961 | 2.1449 | 1.5155 | 3.9183 | |

| Bukin_6 | Mean | 4.5934 | 0.0955 | 0.1 | 0.1 | 1.3550 | 0.1038 | 0.6841 | 0.1 | 1.0947 | 5.7670 |

| Std | 2.7638 | 0.0330 | 0.0 | 0.0 | 1.3874 | 0.0183 | 0.7055 | 0.0 | 0.4910 | 7.2578 | |

| Time | 0.8791 | 23.3484 | 0.9719 | 2.3828 | 1.1133 | 1.8759 | 1.0460 | 0.9330 | 0.7453 | 1.6423 | |

| Function | Criteria | MBO | JSO | IGWO | FDA | DA | ALO | AOA | CS | GOA | GA |

| Ackley | Mean | 0.0040 | 0.0032 | 0.0 | 0.0 | 0.0011 | 0.0 | 1.5654 | 0.3197 | 0.0002 | 0.0001 |

| Std | 0.0055 | 0.0029 | 0.0 | 0.0 | 0.0024 | 0.0 | 1.4184 | 0.4081 | 0.0008 | 0.0001 | |

| Time | 1.6513 | 2.6549 | 7.2145 | 63.1317 | 38.0546 | 20.7061 | 2.6846 | 4.9786 | 13.4924 | 4.2537 | |

| Rastrigin | Mean | 0.1693 | 0.0007 | 0.0 | 0.0 | 0.0 | 0.0 | 1.1856 | 0.4213 | 0.0995 | 0.0466 |

| Std | 0.3699 | 0.0009 | 0.0 | 0.0 | 0.0001 | 0.0 | 1.2042 | 0.4773 | 0.2985 | 0.1838 | |

| Time | 1.3953 | 2.1311 | 6.4451 | 59.1630 | 36.9516 | 14.9701 | 1.9045 | 4.2763 | 12.2868 | 3.6602 | |

| Griewank | Mean | 0.0306 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0269 | 0.1537 | 0.0 | 0.0 |

| Std | 0.0571 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0453 | 0.2506 | 0.0 | 0.0 | |

| Time | 1.3753 | 2.5003 | 7.0041 | 56.6412 | 36.1668 | 19.6636 | 2.3902 | 0.6494 | 12.5253 | 3.7303 | |

| Schaffer N.2 | Mean | 0.0150 | 0.0004 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0095 | 0.0014 | 0.0045 | 0.0007 |

| Std | 0.0201 | 0.0007 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0095 | 0.0017 | 0.0067 | 0.0013 | |

| Time | 1.4359 | 2.6264 | 7.0888 | 56.5816 | 36.7568 | 20.3590 | 2.5359 | 3.9743 | 12.9016 | 3.8950 | |

| Schaffer N.4 | Mean | 0.303105 | 0.292909 | 0.292587 | 0.292579 | 0.292676 | 0.292665 | 0.297561 | 0.293003 | 0.294915 | 0.293242 |

| Std | 0.0118 | 0.0003 | 0.0 | 0.0 | 0.0002 | 0.0002 | 0.0040 | 0.0006 | 0.0018 | 0.0007 | |

| Time | 1.4997 | 2.5283 | 7.1459 | 58.4871 | 36.2815 | 20.2342 | 2.5560 | 4.4635 | 13.2616 | 4.1806 | |

| Schaffer N.6 | Mean | 0.0418 | 0.0087 | 0.0 | 0.0020 | 0.0042 | 0.0071 | 0.0202 | 0.0097 | 0.0100 | 0.0088 |

| Std | 0.0396 | 0.0018 | 0.0 | 0.0036 | 0.0048 | 0.0043 | 0.0131 | 0.0003 | 0.0056 | 0.0025 | |

| Time | 1.4807 | 2.6265 | 6.9701 | 61.2268 | 35.1733 | 20.7181 | 2.6076 | 4.5070 | 12.6626 | 4.0441 | |

| Styblinski–Tang | Mean | −38.22368 | −39.16615 | −39.16617 | −39.16617 | −39.16614 | −39.16617 | −37.98762 | −39.12722 | −39.16617 | −39.16617 |

| Std | 3.5263 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.2548 | 0.0699 | 0.0 | 0.0 | |

| Time | 1.3130 | 2.4119 | 6.5984 | 54.7924 | 35.2751 | 19.3258 | 2.3063 | 3.9453 | 12.0077 | 3.4342 | |

| Bukin_6 | Mean | 1.7159 | 2.3654 | 0.1 | 0.05 | 2.1321 | 0.2266 | 33.6252 | 6.2734 | 0.2261 | 0.2630 |

| Std | 1.1010 | 1.0687 | 0.0 | 0.0001 | 1.5829 | 0.1322 | 20.1480 | 5.3777 | 0.2068 | 0.1325 | |

| Time | 0.5719 | 0.9972 | 2.8712 | 21.7811 | 13.2006 | 9.9783 | 0.9815 | 1.7210 | 5.7485 | 1.5428 |

References

- Khalil, Y.F. A probabilistic visual-flowcharting-based model for consequence assessment of fire and explosion events involving leaks of flammable gases. J. Loss Prev. Process Ind. 2017, 50, 190–204. [Google Scholar] [CrossRef]

- Evangeliou, N.; Hamburger, T.; Cozic, A.; Balkanski, Y.; Stohl, A. Inverse modeling of the Chernobyl source term using atmospheric concentration and deposition measurements. Atmos. Chem. Phys. 2017, 17, 8805–8824. [Google Scholar] [CrossRef]

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper Optimisation Algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47. [Google Scholar] [CrossRef]

- Hutchinson, M.; Oh, H.; Chen, W.-H. A review of source term estimation methods for atmospheric dispersion events using static or mobile sensors. Inf. Fusion 2017, 36, 130–148. [Google Scholar] [CrossRef]

- Hutchinson, M.; Liu, C.; Chen, W.-H. Information based mobile sensor planning for source term estimation of a non-continuous atmospheric release. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018. [Google Scholar]

- Ishida, H.; Wada, Y.; Matsukura, H. Chemical Sensing in Robotic Applications: A Review. IEEE Sens. J. 2012, 12, 3163–3173. [Google Scholar] [CrossRef]

- Hutchinson, M.; Liu, C.; Chen, W.H. Source term estimation of a hazardous airborne release using an unmanned aerial vehicle. J. Field Robot. 2019, 36, 797–817. [Google Scholar] [CrossRef]

- Shigaki, S.; Sakurai, T.; Ando, N.; Kurabayashi, D.; Kanzaki, R. Time-Varying Moth-Inspired Algorithm for Chemical Plume Tracing in Turbulent Environment. IEEE Robot. Autom. Lett. 2018, 3, 76–83. [Google Scholar] [CrossRef]

- Cremer, J.; Honda, T.; Tang, Y.; Wong-Ng, J.; Vergassola, M.; Hwa, T. Chemotaxis as a navigation strategy to boost range expansion. Nature 2019, 575, 658–663. [Google Scholar] [CrossRef] [PubMed]

- Ma, D.; Mao, W.; Tan, W.; Gao, J.; Zhang, Z.; Xie, Y. Emission source tracing based on bionic algorithm mobile sensors with artificial olfactory system. Robotica 2022, 40, 976–996. [Google Scholar] [CrossRef]

- Terutsuki, D.; Uchida, T.; Fukui, C.; Sukekawa, Y.; Okamoto, Y.; Kanzaki, R. Electroantennography-based Bio-hybrid Odor-detecting Drone using Silkmoth Antennae for Odor Source Localization. JoVE-J. Vis. Exp. 2021, 174, e62895. [Google Scholar] [CrossRef]

- Terutsuki, D.; Uchida, T.; Fukui, C.; Sukekawa, Y.; Okamoto, Y.; Kanzaki, R. Real-time odor concentration and direction recognition for efficient odor source localization using a small bio-hybrid drone. Sens. Actuators B Chem. 2021, 339, 129770. [Google Scholar] [CrossRef]

- Jiang, M.; Liao, Y.; Guo, X.; Cai, H.; Jiang, W.; Yang, Z.; Li, F.; Liu, F. A comparative experimental study of two multi-robot olfaction methods: Towards locating time-varying indoor pollutant sources. Build. Environ. 2022, 207, 108560. [Google Scholar] [CrossRef]

- Gunawardena, N.; Leang, K.K.; Pardyjak, E. Particle swarm optimization for source localization in realistic complex urban environments. Atmos. Environ. 2021, 262, 118636. [Google Scholar] [CrossRef]

- Chen, X.; Fu, C.; Huang, J. A Deep Q-Network for robotic odor/gas source localization: Modeling, measurement and comparative study. Measurement 2021, 183, 109725. [Google Scholar] [CrossRef]

- Oladyshkin, S.; Mohammadi, F.; Kroeker, I.; Nowak, W. Bayesian3 Active Learning for the Gaussian Process Emulator Using Information Theory. Entropy 2020, 22, 890. [Google Scholar] [CrossRef] [PubMed]

- Cassandra, A.R.; Kaelbling, L.P.; Kurien, J.A. Acting under uncertainty: Discrete Bayesian models for mobile-robot navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems IROS ’96, Osaka, Japan, 4–8 November 1996. [Google Scholar]

- Vergassola, M.; Villermaux, E.; Shraiman, B.I. ‘Infotaxis’ as a strategy for searching without gradients. Nature 2007, 445, 406–409. [Google Scholar] [CrossRef] [PubMed]

- Ristic, B.; Skvortsov, A.; Gunatilaka, A. A study of cognitive strategies for an autonomous search. Inf. Fusion 2016, 28, 1–9. [Google Scholar] [CrossRef]

- Ristic, B.; Skvortsov, A.; Walker, A. Autonomous Search for a Diffusive Source in an Unknown Structured Environment. Entropy 2014, 16, 789–813. [Google Scholar] [CrossRef]

- Eggels, A.W.; Kunnen, R.P.J.; Koren, B.; Tijsseling, A.S. Infotaxis in a turbulent 3D channel flow. J. Comput. Appl. Math. 2017, 310, 44–58. [Google Scholar] [CrossRef]

- Ruddick, J.; Marjovi, A.; Rahbar, F.; Martinoli, A. Design and Performance Evaluation of an Infotaxis-Based Three-Dimensional Algorithm for Odor Source Localization. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018. [Google Scholar]

- Hutchinson, M.; Oh, H.; Chen, W.-H. Entrotaxis as a strategy for autonomous search and source reconstruction in turbulent conditions. Inf. Fusion 2018, 42, 179–189. [Google Scholar] [CrossRef]

- Song, C.; He, Y.; Lei, X. Autonomous Searching for a Diffusive Source Based on Minimizing the Combination of Entropy and Potential Energy. Sensors 2019, 19, 2465. [Google Scholar] [CrossRef] [PubMed]

- Rahbar, F.; Marjovi, A.; Martinoli, A. Design and Performance Evaluation of an Algorithm Based on Source Term Estimation for Odor Source Localization. Sensors 2019, 19, 656. [Google Scholar] [CrossRef] [PubMed]

- Jiang, S.W.; Harlim, J. Parameter Estimation with Data-Driven Nonparametric Likelihood Functions. Entropy 2019, 21, 559. [Google Scholar] [CrossRef] [PubMed]

- Ji, Y.; Wang, Y.; Chen, B.; Zhao, Y.; Zhu, Z. A strategy for autonomous source searching using the Gaussian Mixture Model to fit the estimate of the source location. In Proceedings of the 2021 IEEE 17th International Conference on Automation Science and Engineering (CASE), Lyon, France, 23–27 August 2021. [Google Scholar]

- Park, M.; An, S.; Seo, J.; Oh, H. Autonomous Source Search for UAVs Using Gaussian Mixture Model-Based Infotaxis: Algorithm and Flight Experiments. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 4238–4254. [Google Scholar] [CrossRef]

- Zhao, Y.; Chen, B.; Zhu, Z.; Chen, F.; Wang, Y.; Ji, Y. Searching the diffusive source in an unknown obstructed environment by cognitive strategies with forbidden areas. Build. Environ. 2020, 186, 107349. [Google Scholar] [CrossRef]

- An, S.; Park, M.; Oh, H. Receding-horizon RRT-Infotaxis for autonomous source search in urban environments. Aerosp. Sci. Technol. 2022, 120, 107276. [Google Scholar] [CrossRef]

- Shraiman, B.I.; Siggia, E.D. Scalar turbulence. Nature 2000, 405, 639–646. [Google Scholar] [CrossRef] [PubMed]

- Falkovich, G.; Gawedzki, K.; Vergassola, M. Particles and fields in fluid turbulence. Rev. Mod. Phys. 2001, 73, 913–975. [Google Scholar] [CrossRef]

- Heinonen, R.A.; Biferale, L.; Celani, A.; Vergassola, M. Optimal policies for Bayesian olfactory search in turbulent flows. Phys. Rev. E 2023, 107, 055105. [Google Scholar] [CrossRef]

- Smoluchowski, M.V. Versuch einer mathematischen theorie der koagulationskinetic kolloider Lösungen. Z. Phys. Chem. 1917, 92, 129–168. [Google Scholar]

- Ristic, B.; Angley, D.; Moran, B.; Palmer, J.L. Autonomous Multi-Robot Search for a Hazardous Source in a Turbulent Environment. Sensors 2017, 17, 918. [Google Scholar] [CrossRef]

- Song, C.; He, Y.; Ristic, B.; Lei, X. Collaborative infotaxis: Searching for a signal-emitting source based on particle filter and Gaussian fitting. Robot. Auton. Syst. 2020, 125, 103414. [Google Scholar] [CrossRef]

- Loisy, A.; Heinonen, R.A. Deep reinforcement learning for the olfactory search POMDP: A quantitative benchmark. Eur. Phys. J. E 2023, 46, 17. [Google Scholar] [CrossRef]

- Fan, S.; Hao, D.; Sun, X.; Sultan, Y.M.; Li, Z.; Xia, K. A Study of Modified Infotaxis Algorithms in 2D and 3D Turbulent Environments. Comput. Intell. Neurosci. 2020, 2020, 4159241. [Google Scholar] [CrossRef]

- Loisy, A.; Eloy, C. Searching for a source without gradients: How good is infotaxis and how to beat it. Proc. R. Soc. A-Math. Phys. Eng. Sci. 2022, 478, 20220118. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).