Deep Learning for 3D Reconstruction, Augmentation, and Registration: A Review Paper

Abstract

1. Introduction

1.1. Our Previous Work

1.2. Research Methodology

2. 3D Data Representations

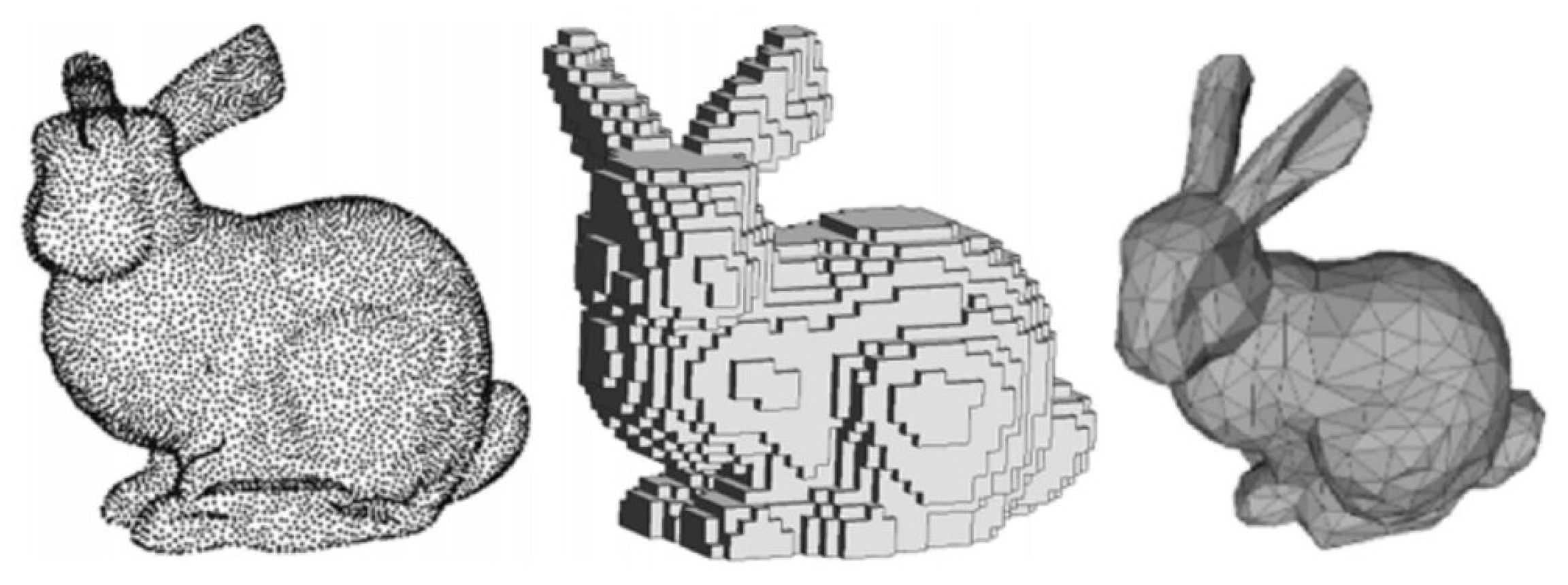

2.1. Point Clouds

2.2. Voxels

2.3. Meshes

3. 3D Benchmark Datasets

3.1. ModelNet

3.2. PASCAL3D+

3.3. ShapeNet

3.4. ObjectNet3D

3.5. ScanNet

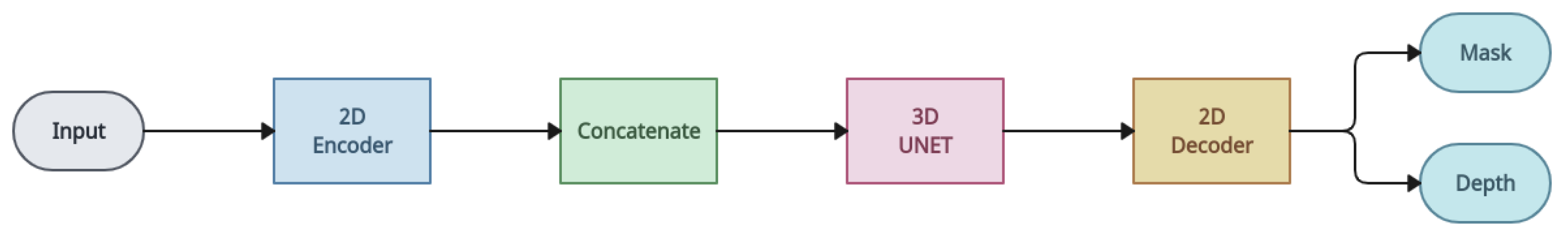

4. Object Reconstruction

4.1. Procedural-Based Approaches

4.2. Deep-Learning-Based Approaches

4.3. Single-View Reconstruction

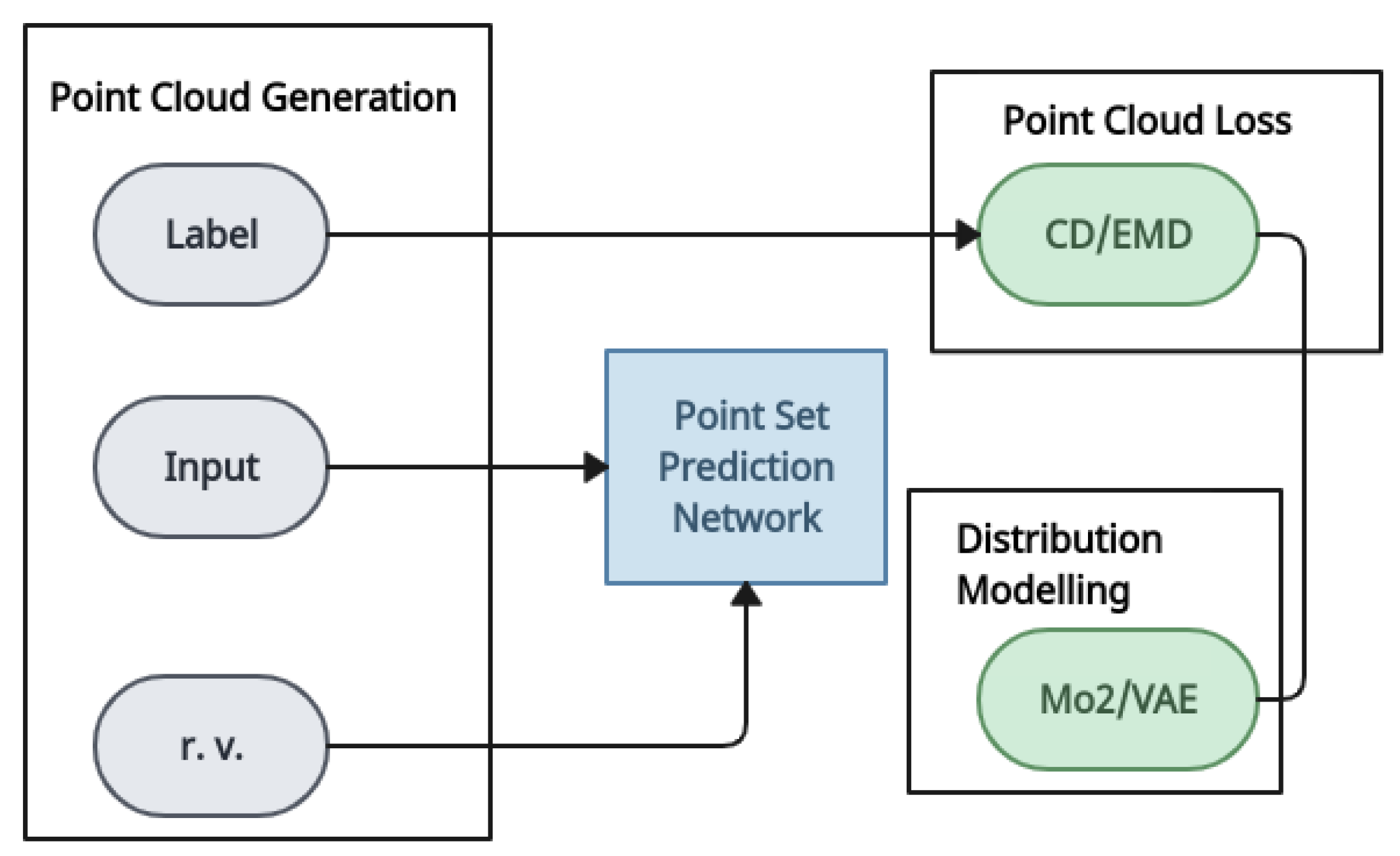

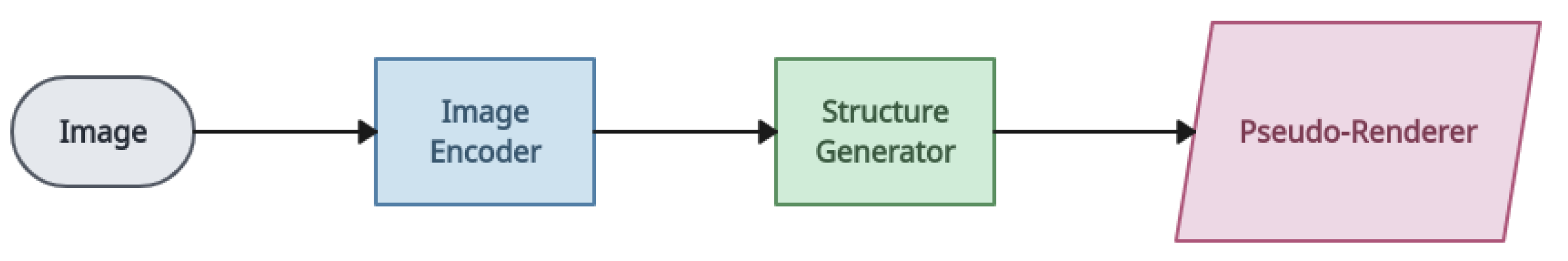

4.3.1. Point Cloud Representation

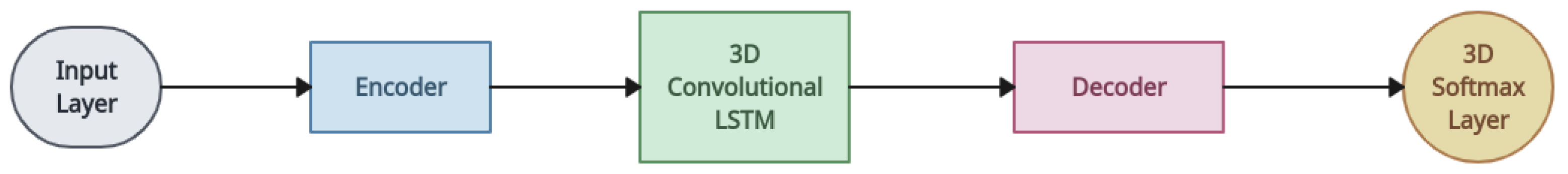

4.3.2. Voxel Representation

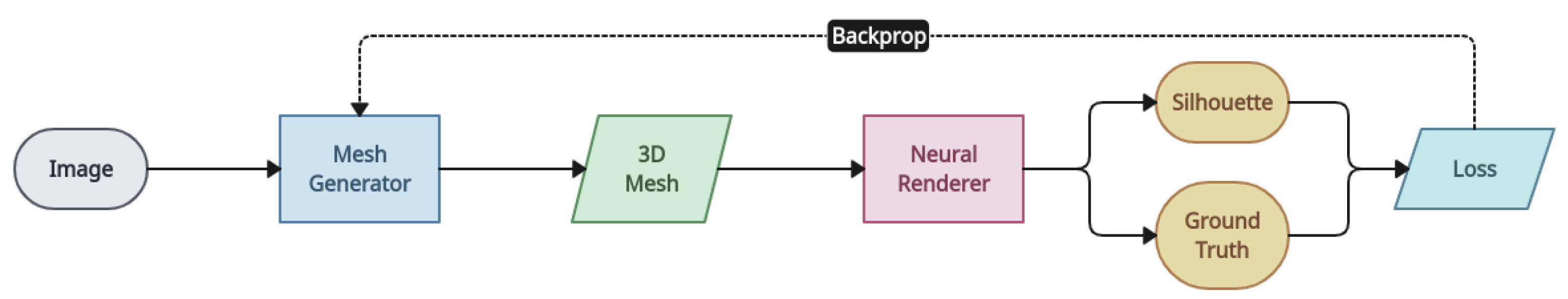

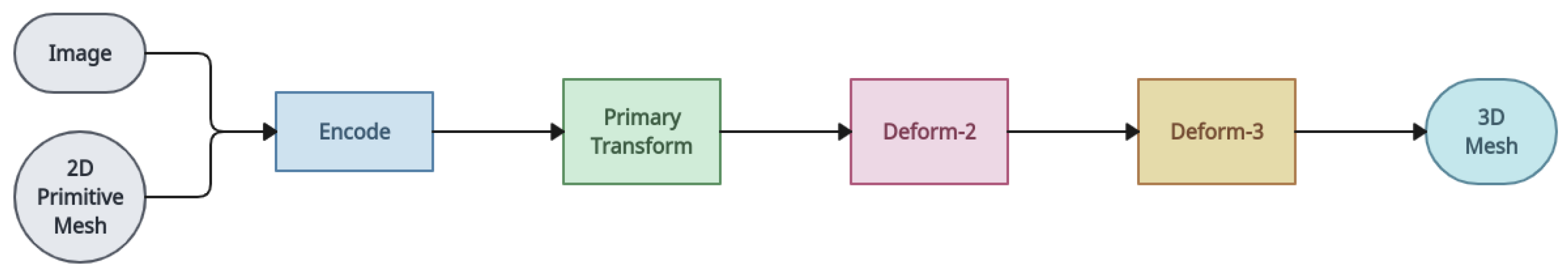

4.3.3. Mesh Representation

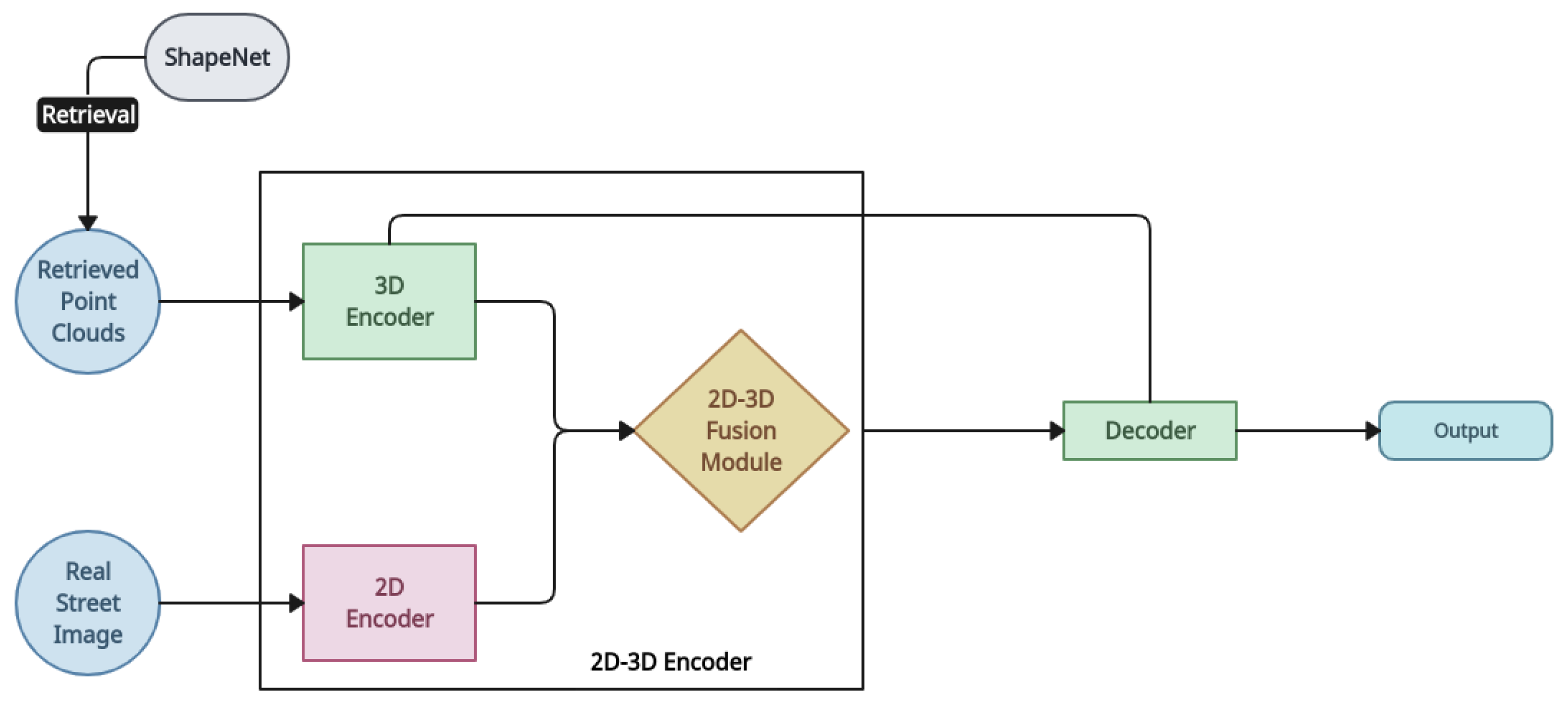

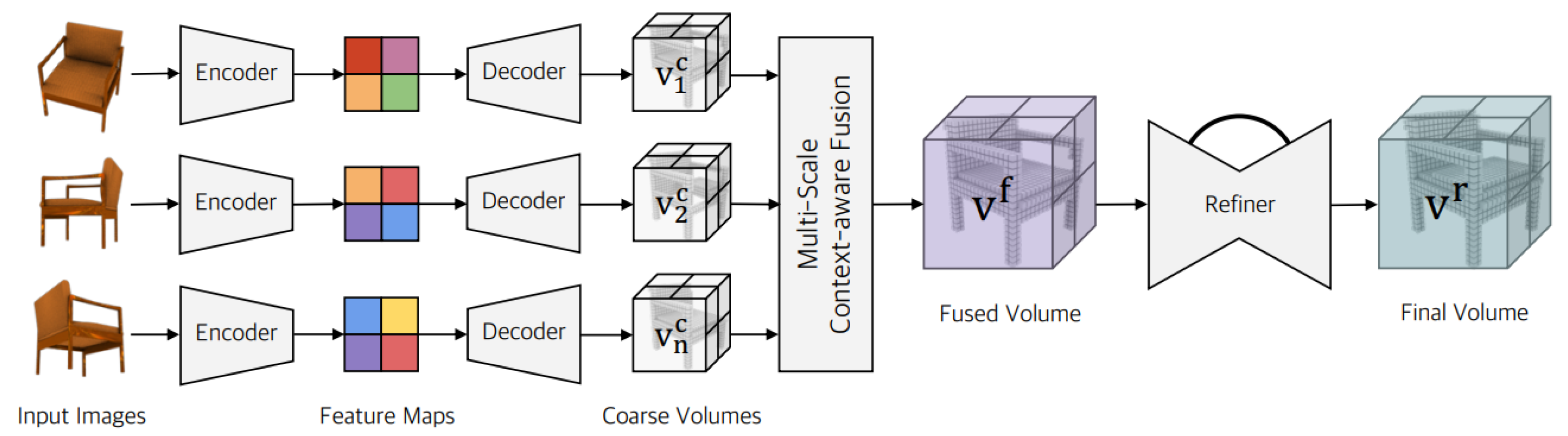

4.4. Multiple-View Reconstruction

4.4.1. Point Cloud Representation

4.4.2. Voxel Representation

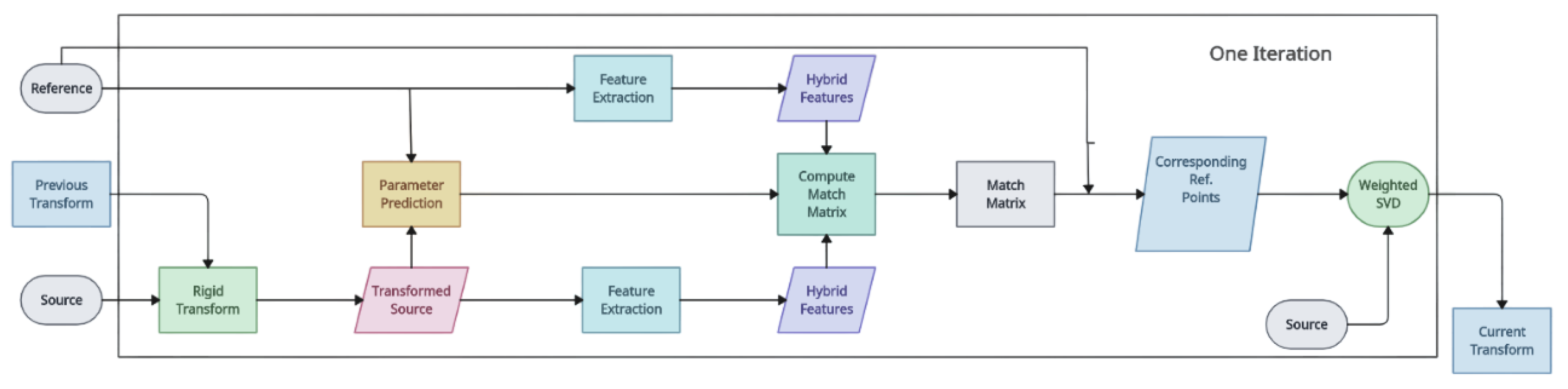

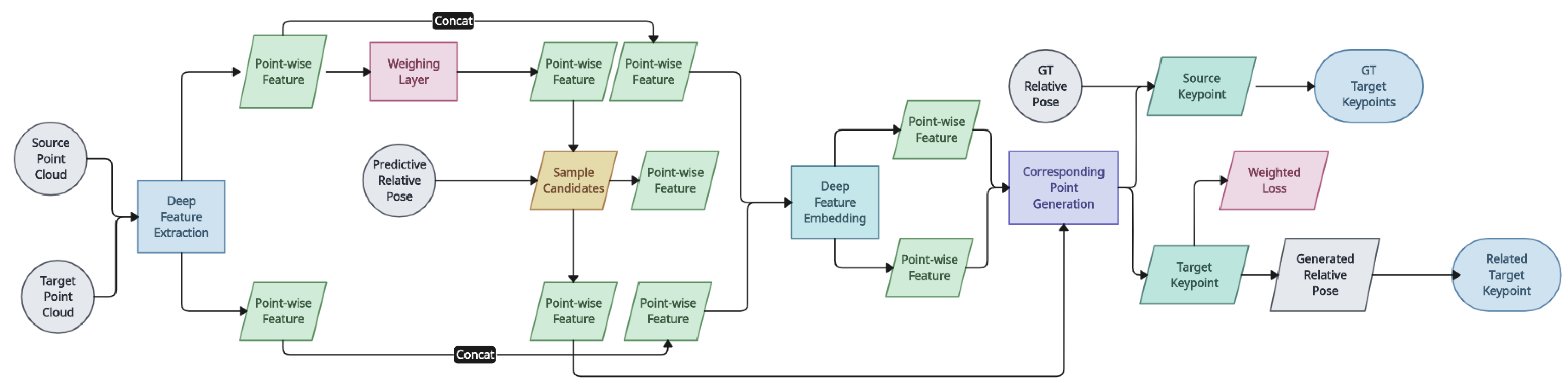

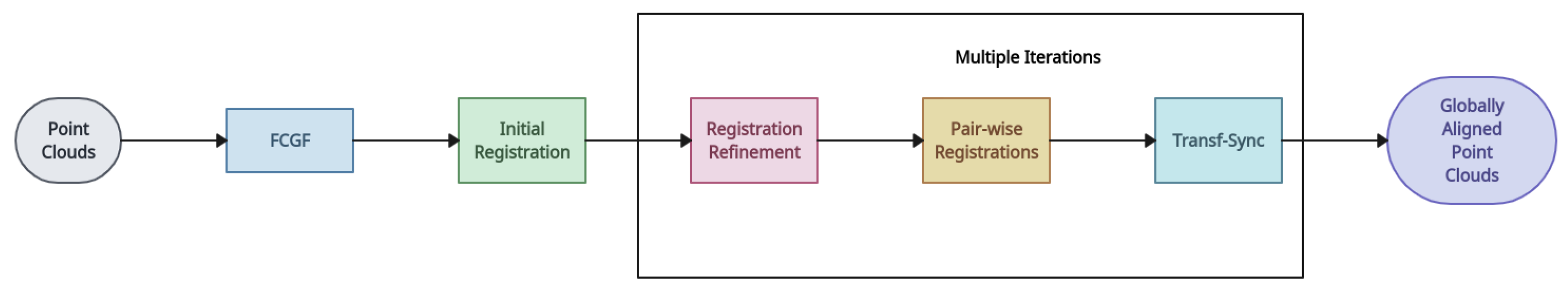

5. Registration

5.1. Traditional Methods

5.2. Learning-Based Methods

6. Augmentation

6.1. Denoising

6.2. Upsampling

6.3. Downsampling

7. Point Cloud Completion

| Model | Advantages | Limitations |

|---|---|---|

| PCN [205] | Acquires knowledge of a projection from the space of incomplete observations to the space of fully formed shapes. | Requires training data to be prepared in partial shapes since it expects a test input that is identical to the training data. |

| USCN [207] | Does not require explicit correspondence between example complete shape models and incomplete point sets. | Training GANs can be difficult due to common errors such as mode collapse. |

| MSN [209] | Uses EMD as a better metric for measuring completion quality. | Frequently disregards the spatial correlation between points. |

| PF-Net [214] | Accepts a partial point cloud as input and only outputs the portion of the point cloud that is missing. | Model’s intricate design results in a comparatively large number of parameters. |

| GRNet [211] | Uses 3D grids as intermediary representations to maintain unordered point clouds. | Difficult to maintain an organised structure for points in small patches due to the discontinuous character of the point cloud. |

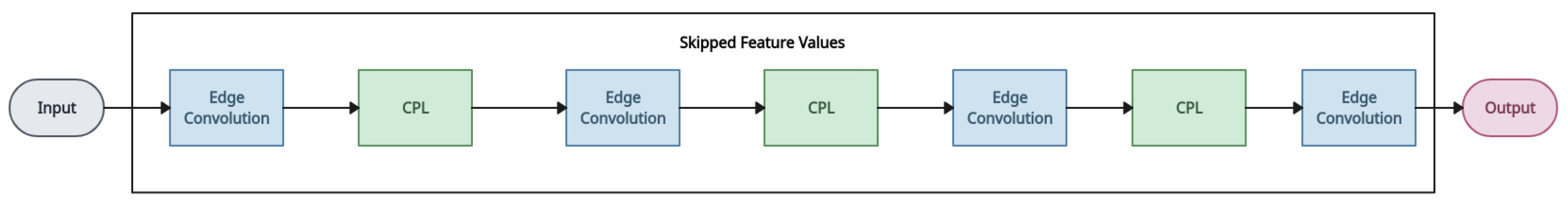

| SnowflakeNet [190] | Focuses specifically on the process of decoding incomplete point clouds. | Fine-grained details are lost easily during pooling operations in the encoding phase. |
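The MSN row in the table above names EMD (earth mover's distance) as a completion-quality metric. As a rough illustration only, and not the implementation used by MSN or any other listed model, the sketch below computes the exact EMD between two equal-sized point sets by brute-force search over all one-to-one matchings. The function name `earth_movers_distance` and the toy point sets `target` and `clumped` are hypothetical, introduced here for illustration. Brute force is feasible only for a handful of points; practical pipelines rely on faster exact or approximate solvers.

```python
from itertools import permutations
from math import dist


def earth_movers_distance(a, b):
    """Exact EMD between two equal-sized point sets.

    Returns the mean point-to-point distance under the cheapest
    one-to-one matching. Brute force over all permutations, so this
    is an illustrative sketch for tiny inputs only.
    """
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-sized point sets")
    if not a:
        return 0.0
    # Try every assignment of points in `a` to points in `b`
    # and keep the one with the smallest total transport cost.
    best_total = min(
        sum(dist(p, b[j]) for p, j in zip(a, perm))
        for perm in permutations(range(len(b)))
    )
    return best_total / len(a)


# Hypothetical toy data: four corners of a unit square in the z = 0 plane.
target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
# A degenerate "completion" that piles its points onto two corners.
clumped = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (1.0, 1.0, 0.0)]

print(earth_movers_distance(target, target))   # 0.0
print(earth_movers_distance(clumped, target))  # 0.5
```

Because every predicted point must be matched to a distinct target point, the clumped prediction is penalised for leaving two corners uncovered, which is the kind of uneven point distribution a one-to-one matching metric is sensitive to.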

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | Three-dimensional |
| 2D | Two-dimensional |
| LiDAR | Light detection and ranging |
| RGB-D | Red, green, blue plus depth |
| CAD | Computer-aided design |
| MLP | Multilayer perceptron |
| CNN | Convolutional neural network |
| FCGFs | Fully convolutional geometric features |
| GPU | Graphics processing unit |
| RAM | Random access memory |

References

- Vinodkumar, P.K.; Karabulut, D.; Avots, E.; Ozcinar, C.; Anbarjafari, G. A Survey on Deep Learning Based Segmentation, Detection and Classification for 3D Point Clouds. Entropy 2023, 25, 635.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A dataset for semantic scene understanding of LiDAR sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9297–9307.

- Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3D semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1534–1543.

- Qi, C.R.; Chen, X.; Litany, O.; Guibas, L.J. ImVoteNet: Boosting 3D Object Detection in Point Clouds with Image Votes. arXiv 2020, arXiv:2001.10692.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud. arXiv 2018, arXiv:1812.04244.

- Hanocka, R.; Hertz, A.; Fish, N.; Giryes, R.; Fleishman, S.; Cohen-Or, D. MeshCNN: A network with an edge. ACM Trans. Graph. (TOG) 2019, 38, 1–12.

- Wang, S.; Zhu, J.; Zhang, R. Meta-RangeSeg: LiDAR Sequence Semantic Segmentation Using Multiple Feature Aggregation. arXiv 2022, arXiv:2202.13377.

- Fan, H.; Su, H.; Guibas, L.J. A Point Set Generation Network for 3D Object Reconstruction from a Single Image. arXiv 2016, arXiv:1612.00603.

- Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An Information-Rich 3D Model Repository. arXiv 2015, arXiv:1512.03012.

- Choy, C.B.; Xu, D.; Gwak, J.; Chen, K.; Savarese, S. 3D-R2N2: A Unified Approach for Single and Multi-view 3D Object Reconstruction. In Proceedings of the Computer Vision–ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 628–644.

- Lin, C.H.; Kong, C.; Lucey, S. Learning Efficient Point Cloud Generation for Dense 3D Object Reconstruction. arXiv 2017, arXiv:1706.07036.

- Zhang, Y.; Liu, Z.; Liu, T.; Peng, B.; Li, X. RealPoint3D: An Efficient Generation Network for 3D Object Reconstruction From a Single Image. IEEE Access 2019, 7, 57539–57549.

- Xiang, Y.; Kim, W.; Chen, W.; Ji, J.; Choy, C.; Su, H.; Mottaghi, R.; Guibas, L.; Savarese, S. ObjectNet3D: A large scale database for 3D object recognition. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 160–176.

- Navaneet, K.L.; Mathew, A.; Kashyap, S.; Hung, W.C.; Jampani, V.; Babu, R.V. From Image Collections to Point Clouds with Self-supervised Shape and Pose Networks. arXiv 2020, arXiv:2005.01939.

- Sun, X.; Wu, J.; Zhang, X.; Zhang, Z.; Zhang, C.; Xue, T.; Tenenbaum, J.B.; Freeman, W.T. Pix3D: Dataset and methods for single-image 3D shape modeling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2974–2983.

- Bautista, M.A.; Talbott, W.; Zhai, S.; Srivastava, N.; Susskind, J.M. On the generalization of learning-based 3D reconstruction. arXiv 2020, arXiv:2006.15427.

- Rezende, D.J.; Eslami, S.M.A.; Mohamed, S.; Battaglia, P.; Jaderberg, M.; Heess, N. Unsupervised Learning of 3D Structure from Images. arXiv 2016, arXiv:1607.00662.

- LeCun, Y. The MNIST Database of Handwritten Digits. 1998. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 12 November 2023).

- Zhang, X.; Zhang, Z.; Zhang, C.; Tenenbaum, J.B.; Freeman, W.T.; Wu, J. Learning to Reconstruct Shapes from Unseen Classes. arXiv 2018, arXiv:1812.11166.

- Wu, J.; Wang, Y.; Xue, T.; Sun, X.; Freeman, W.T.; Tenenbaum, J.B. MarrNet: 3D Shape Reconstruction via 2.5D Sketches. arXiv 2017, arXiv:1711.03129.

- Xiang, Y.; Mottaghi, R.; Savarese, S. Beyond PASCAL: A Benchmark for 3D Object Detection in the Wild. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Steamboat Springs, CO, USA, 24–26 March 2014.

- Yan, X.; Yang, J.; Yumer, E.; Guo, Y.; Lee, H. Perspective Transformer Nets: Learning Single-View 3D Object Reconstruction without 3D Supervision. arXiv 2016, arXiv:1612.00814.

- Zhu, R.; Galoogahi, H.K.; Wang, C.; Lucey, S. Rethinking Reprojection: Closing the Loop for Pose-Aware Shape Reconstruction from a Single Image. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 57–65.

- Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. SUN database: Large-scale scene recognition from abbey to zoo. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3485–3492.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Wu, J.; Zhang, C.; Xue, T.; Freeman, W.T.; Tenenbaum, J.B. Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling. arXiv 2016, arXiv:1610.07584.

- Wu, Z.; Song, S.; Khosla, A.; Tang, X.; Xiao, J. 3D ShapeNets for 2.5D Object Recognition and Next-Best-View Prediction. arXiv 2014, arXiv:1406.5670.

- Lim, J.J.; Pirsiavash, H.; Torralba, A. Parsing IKEA objects: Fine pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2992–2999.

- Xie, H.; Yao, H.; Zhang, S.; Zhou, S.; Sun, W. Pix2Vox++: Multi-scale Context-aware 3D Object Reconstruction from Single and Multiple Images. Int. J. Comput. Vis. 2020, 128, 2919–2935.

- Gwak, J.; Choy, C.B.; Garg, A.; Chandraker, M.; Savarese, S. Weakly supervised 3D Reconstruction with Adversarial Constraint. arXiv 2017, arXiv:1705.10904.

- Banani, M.E.; Corso, J.J.; Fouhey, D.F. Novel Object Viewpoint Estimation through Reconstruction Alignment. arXiv 2020, arXiv:2006.03586.

- Turk, G.; Levoy, M. Zippered polygon meshes from range images. In Proceedings of the 21st Annual Conference on Computer Graphics and Interactive Techniques, Orlando, FL, USA, 24–29 July 1994; pp. 311–318.

- Hoang, L.; Lee, S.H.; Kwon, O.H.; Kwon, K.R. A Deep Learning Method for 3D Object Classification Using the Wave Kernel Signature and A Center Point of the 3D-Triangle Mesh. Electronics 2019, 8, 1196.

- Kato, H.; Ushiku, Y.; Harada, T. Neural 3D Mesh Renderer. arXiv 2017, arXiv:1711.07566.

- Pan, J.; Li, J.Y.; Han, X.; Jia, K. Residual MeshNet: Learning to Deform Meshes for Single-View 3D Reconstruction. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 719–727.

- Wang, N.; Zhang, Y.; Li, Z.; Fu, Y.; Liu, W.; Jiang, Y.G. Pixel2Mesh: Generating 3D mesh models from single RGB images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 52–67.

- Popov, S.; Bauszat, P.; Ferrari, V. CoReNet: Coherent 3D scene reconstruction from a single RGB image. arXiv 2020, arXiv:2004.12989.

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5828–5839.

- Shilane, P.; Min, P.; Kazhdan, M.; Funkhouser, T. The Princeton shape benchmark. In Proceedings of the Shape Modeling Applications, Genova, Italy, 7–9 June 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 167–178.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Henn, A.; Gröger, G.; Stroh, V.; Plümer, L. Model driven reconstruction of roofs from sparse LIDAR point clouds. ISPRS J. Photogramm. Remote Sens. 2013, 76, 17–29.

- Buyukdemircioglu, M.; Kocaman, S.; Kada, M. Deep learning for 3D building reconstruction: A review. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 359–366.

- Tran, H.; Khoshelham, K. Procedural reconstruction of 3D indoor models from lidar data using reversible jump Markov Chain Monte Carlo. Remote Sens. 2020, 12, 838.

- Mura, C.; Mattausch, O.; Pajarola, R. Piecewise-planar reconstruction of multi-room interiors with arbitrary wall arrangements. In Proceedings of the Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2016; Volume 35, pp. 179–188.

- Oesau, S.; Lafarge, F.; Alliez, P. Indoor scene reconstruction using feature sensitive primitive extraction and graph-cut. ISPRS J. Photogramm. Remote Sens. 2014, 90, 68–82.

- Khoshelham, K.; Díaz-Vilariño, L. 3D modelling of interior spaces: Learning the language of indoor architecture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 321–326.

- Tran, H.; Khoshelham, K.; Kealy, A.; Díaz-Vilariño, L. Shape grammar approach to 3D modeling of indoor environments using point clouds. J. Comput. Civ. Eng. 2019, 33, 04018055.

- Wonka, P.; Wimmer, M.; Sillion, F.; Ribarsky, W. Instant architecture. ACM Trans. Graph. (TOG) 2003, 22, 669–677.

- Becker, S. Generation and application of rules for quality dependent façade reconstruction. ISPRS J. Photogramm. Remote Sens. 2009, 64, 640–653.

- Dick, A.R.; Torr, P.H.; Cipolla, R. Modelling and interpretation of architecture from several images. Int. J. Comput. Vis. 2004, 60, 111–134.

- Becker, S.; Peter, M.; Fritsch, D. Grammar-supported 3D indoor reconstruction from point clouds for “as-built” BIM. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 17–24.

- Döllner, J. Geospatial artificial intelligence: Potentials of machine learning for 3D point clouds and geospatial digital twins. PFG-J. Photogramm. Remote Sens. Geoinf. Sci. 2020, 88, 15–24.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Hoeser, T.; Kuenzer, C. Object detection and image segmentation with deep learning on earth observation data: A review-Part I: Evolution and recent trends. Remote Sens. 2020, 12, 1667.

- Rottensteiner, F.; Sohn, G.; Jung, J.; Gerke, M.; Baillard, C.; Benitez, S.; Breitkopf, U. The ISPRS benchmark on urban object classification and 3D building reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 293–298.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Liu, C.; Kong, D.; Wang, S.; Wang, Z.; Li, J.; Yin, B. Deep3D reconstruction: Methods, data, and challenges. Front. Inf. Technol. Electron. Eng. 2021, 22, 652–672.

- Bhat, S.F.; Alhashim, I.; Wonka, P. AdaBins: Depth estimation using adaptive bins. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4009–4018.

- Kasieczka, G.; Nachman, B.; Shih, D.; Amram, O.; Andreassen, A.; Benkendorfer, K.; Bortolato, B.; Brooijmans, G.; Canelli, F.; Collins, J.H.; et al. The LHC Olympics 2020: A community challenge for anomaly detection in high energy physics. Rep. Prog. Phys. 2021, 84, 124201.

- Yu, X.; Rao, Y.; Wang, Z.; Liu, Z.; Lu, J.; Zhou, J. PoinTr: Diverse point cloud completion with geometry-aware transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12498–12507.

- Peng, S.; Niemeyer, M.; Mescheder, L.; Pollefeys, M.; Geiger, A. Convolutional occupancy networks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part III 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 523–540.

- Kato, H.; Beker, D.; Morariu, M.; Ando, T.; Matsuoka, T.; Kehl, W.; Gaidon, A. Differentiable rendering: A survey. arXiv 2020, arXiv:2006.12057.

- Fu, K.; Peng, J.; He, Q.; Zhang, H. Single image 3D object reconstruction based on deep learning: A review. Multimed. Tools Appl. 2021, 80, 463–498.

- Zhang, Y.; Huo, K.; Liu, Z.; Zang, Y.; Liu, Y.; Li, X.; Zhang, Q.; Wang, C. PGNet: A Part-based Generative Network for 3D object reconstruction. Knowl.-Based Syst. 2020, 194, 105574.

- Lu, Q.; Xiao, M.; Lu, Y.; Yuan, X.; Yu, Y. Attention-based dense point cloud reconstruction from a single image. IEEE Access 2019, 7, 137420–137431.

- Yuniarti, A.; Suciati, N. A review of deep learning techniques for 3D reconstruction of 2D images. In Proceedings of the 2019 12th International Conference on Information & Communication Technology and System (ICTS), Surabaya, Indonesia, 18 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 327–331.

- Monnier, T.; Fisher, M.; Efros, A.A.; Aubry, M. Share with thy neighbors: Single-view reconstruction by cross-instance consistency. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 285–303.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Hu, T.; Wang, L.; Xu, X.; Liu, S.; Jia, J. Self-supervised 3D mesh reconstruction from single images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6002–6011.

- Joung, S.; Kim, S.; Kim, M.; Kim, I.J.; Sohn, K. Learning canonical 3D object representation for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1035–1045.

- Niemeyer, M.; Mescheder, L.; Oechsle, M.; Geiger, A. Differentiable volumetric rendering: Learning implicit 3D representations without 3D supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3504–3515.

- Biundini, I.Z.; Pinto, M.F.; Melo, A.G.; Marcato, A.L.; Honório, L.M.; Aguiar, M.J. A framework for coverage path planning optimization based on point cloud for structural inspection. Sensors 2021, 21, 570.

- Chibane, J.; Alldieck, T.; Pons-Moll, G. Implicit functions in feature space for 3D shape reconstruction and completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6970–6981.

- Collins, J.; Goel, S.; Deng, K.; Luthra, A.; Xu, L.; Gundogdu, E.; Zhang, X.; Vicente, T.F.Y.; Dideriksen, T.; Arora, H.; et al. ABO: Dataset and benchmarks for real-world 3D object understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21126–21136.

- Sahu, C.K.; Young, C.; Rai, R. Artificial intelligence (AI) in augmented reality (AR)-assisted manufacturing applications: A review. Int. J. Prod. Res. 2021, 59, 4903–4959.

- Mescheder, L.; Oechsle, M.; Niemeyer, M.; Nowozin, S.; Geiger, A. Occupancy networks: Learning 3D reconstruction in function space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4460–4470.

- Liu, R.; Wu, R.; Van Hoorick, B.; Tokmakov, P.; Zakharov, S.; Vondrick, C. Zero-1-to-3: Zero-shot one image to 3D object. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 9298–9309.

- Xu, D.; Jiang, Y.; Wang, P.; Fan, Z.; Shi, H.; Wang, Z. SinNeRF: Training neural radiance fields on complex scenes from a single image. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 736–753.

- Kanazawa, A.; Tulsiani, S.; Efros, A.A.; Malik, J. Learning category-specific mesh reconstruction from image collections. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 371–386.

- Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised learning of depth and ego-motion from video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1851–1858.

- Yu, A.; Ye, V.; Tancik, M.; Kanazawa, A. pixelNeRF: Neural radiance fields from one or few images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4578–4587.

- Sitzmann, V.; Zollhöfer, M.; Wetzstein, G. Scene representation networks: Continuous 3D-structure-aware neural scene representations. Adv. Neural Inf. Process. Syst. 2019, 32.

- Enebuse, I.; Foo, M.; Ibrahim, B.S.K.K.; Ahmed, H.; Supmak, F.; Eyobu, O.S. A comparative review of hand-eye calibration techniques for vision guided robots. IEEE Access 2021, 9, 113143–113155.

- Tatarchenko, M.; Richter, S.R.; Ranftl, R.; Li, Z.; Koltun, V.; Brox, T. What do single-view 3D reconstruction networks learn? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3405–3414.

- Sünderhauf, N.; Brock, O.; Scheirer, W.; Hadsell, R.; Fox, D.; Leitner, J.; Upcroft, B.; Abbeel, P.; Burgard, W.; Milford, M.; et al. The limits and potentials of deep learning for robotics. Int. J. Robot. Res. 2018, 37, 405–420.

- Han, X.F.; Laga, H.; Bennamoun, M. Image-based 3D object reconstruction: State-of-the-art and trends in the deep learning era. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1578–1604.

- Varol, G.; Ceylan, D.; Russell, B.; Yang, J.; Yumer, E.; Laptev, I.; Schmid, C. BodyNet: Volumetric inference of 3D human body shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 20–36.

- Najibi, M.; Ji, J.; Zhou, Y.; Qi, C.R.; Yan, X.; Ettinger, S.; Anguelov, D. Motion inspired unsupervised perception and prediction in autonomous driving. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 424–443.

- Xu, Q.; Wang, W.; Ceylan, D.; Mech, R.; Neumann, U. DISN: Deep implicit surface network for high-quality single-view 3D reconstruction. Adv. Neural Inf. Process. Syst. 2019, 32.

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

- Zhu, J.Y.; Zhang, Z.; Zhang, C.; Wu, J.; Torralba, A.; Tenenbaum, J.; Freeman, B. Visual object networks: Image generation with disentangled 3D representations. Adv. Neural Inf. Process. Syst. 2018, 31.

- Gadelha, M.; Maji, S.; Wang, R. 3D shape induction from 2D views of multiple objects. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 402–411.

- Chan, E.R.; Monteiro, M.; Kellnhofer, P.; Wu, J.; Wetzstein, G. pi-GAN: Periodic implicit generative adversarial networks for 3D-aware image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5799–5809.

- Park, J.J.; Florence, P.; Straub, J.; Newcombe, R.; Lovegrove, S. DeepSDF: Learning continuous signed distance functions for shape representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 165–174.

- Gao, J.; Shen, T.; Wang, Z.; Chen, W.; Yin, K.; Li, D.; Litany, O.; Gojcic, Z.; Fidler, S. GET3D: A generative model of high quality 3D textured shapes learned from images. Adv. Neural Inf. Process. Syst. 2022, 35, 31841–31854.

- Mittal, P.; Cheng, Y.C.; Singh, M.; Tulsiani, S. AutoSDF: Shape priors for 3D completion, reconstruction and generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 306–315.

- Li, X.; Liu, S.; Kim, K.; De Mello, S.; Jampani, V.; Yang, M.H.; Kautz, J. Self-supervised single-view 3D reconstruction via semantic consistency. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIV 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 677–693.

- de Melo, C.M.; Torralba, A.; Guibas, L.; DiCarlo, J.; Chellappa, R.; Hodgins, J. Next-generation deep learning based on simulators and synthetic data. Trends Cogn. Sci. 2022, 26.

- Loper, M.M.; Black, M.J. OpenDR: An approximate differentiable renderer. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part VII 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 154–169.

- Ravi, N.; Reizenstein, J.; Novotny, D.; Gordon, T.; Lo, W.Y.; Johnson, J.; Gkioxari, G. Accelerating 3D deep learning with PyTorch3D. arXiv 2020, arXiv:2007.08501.

- Michel, O.; Bar-On, R.; Liu, R.; Benaim, S.; Hanocka, R. Text2Mesh: Text-driven neural stylization for meshes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13492–13502.

- Fahim, G.; Amin, K.; Zarif, S. Single-View 3D reconstruction: A Survey of deep learning methods. Comput. Graph. 2021, 94, 164–190.

- Tang, J.; Han, X.; Pan, J.; Jia, K.; Tong, X. A skeleton-bridged deep learning approach for generating meshes of complex topologies from single RGB images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4541–4550.

- Xu, Q.; Nie, Z.; Xu, H.; Zhou, H.; Attar, H.R.; Li, N.; Xie, F.; Liu, X.J. SuperMeshing: A new deep learning architecture for increasing the mesh density of physical fields in metal forming numerical simulation. J. Appl. Mech. 2022, 89, 011002. [Google Scholar] [CrossRef]

- Dahnert, M.; Hou, J.; Nießner, M.; Dai, A. Panoptic 3d scene reconstruction from a single rgb image. Adv. Neural Inf. Process. Syst. 2021, 34, 8282–8293. [Google Scholar]

- Liu, F.; Liu, X. Voxel-based 3d detection and reconstruction of multiple objects from a single image. Adv. Neural Inf. Process. Syst. 2021, 34, 2413–2426. [Google Scholar]

- Pan, J.; Han, X.; Chen, W.; Tang, J.; Jia, K. Deep mesh reconstruction from single rgb images via topology modification networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9964–9973. [Google Scholar]

- Mustikovela, S.K.; De Mello, S.; Prakash, A.; Iqbal, U.; Liu, S.; Nguyen-Phuoc, T.; Rother, C.; Kautz, J. Self-supervised object detection via generative image synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 8609–8618. [Google Scholar]

- Huang, Z.; Jampani, V.; Thai, A.; Li, Y.; Stojanov, S.; Rehg, J.M. ShapeClipper: Scalable 3D Shape Learning from Single-View Images via Geometric and CLIP-based Consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12912–12922. [Google Scholar]

- Kar, A.; Häne, C.; Malik, J. Learning a multi-view stereo machine. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar] [CrossRef]

- Yang, G.; Cui, Y.; Belongie, S.; Hariharan, B. Learning single-view 3d reconstruction with limited pose supervision. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 86–101. [Google Scholar]

- Huang, Z.; Stojanov, S.; Thai, A.; Jampani, V.; Rehg, J.M. Planes vs. chairs: Category-guided 3d shape learning without any 3d cues. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 727–744. [Google Scholar]

- Jiao, L.; Huang, Z.; Liu, X.; Yang, Y.; Ma, M.; Zhao, J.; You, C.; Hou, B.; Yang, S.; Liu, F.; et al. Brain-inspired Remote Sensing Interpretation: A Comprehensive Survey. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, Volume 16, 2992–3033. [Google Scholar] [CrossRef]

- Yang, Z.; Ren, Z.; Bautista, M.A.; Zhang, Z.; Shan, Q.; Huang, Q. FvOR: Robust joint shape and pose optimization for few-view object reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2497–2507. [Google Scholar]

- Bechtold, J.; Tatarchenko, M.; Fischer, V.; Brox, T. Fostering generalization in single-view 3d reconstruction by learning a hierarchy of local and global shape priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15880–15889. [Google Scholar]

- Thai, A.; Stojanov, S.; Upadhya, V.; Rehg, J.M. 3d reconstruction of novel object shapes from single images. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 85–95. [Google Scholar]

- Yang, X.; Lin, G.; Zhou, L. Single-View 3D Mesh Reconstruction for Seen and Unseen Categories. IEEE Trans. Image Process. 2023, 32, 3746–3758. [Google Scholar] [CrossRef] [PubMed]

- Anciukevicius, T.; Fox-Roberts, P.; Rosten, E.; Henderson, P. Unsupervised Causal Generative Understanding of Images. Adv. Neural Inf. Process. Syst. 2022, 35, 37037–37054. [Google Scholar]

- Fan, H.; Su, H.; Guibas, L.J. A point set generation network for 3d object reconstruction from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 605–613. [Google Scholar]

- Niemeyer, M.; Geiger, A. Giraffe: Representing scenes as compositional generative neural feature fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11453–11464. [Google Scholar]

- Or-El, R.; Luo, X.; Shan, M.; Shechtman, E.; Park, J.J.; Kemelmacher-Shlizerman, I. Stylesdf: High-resolution 3d-consistent image and geometry generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13503–13513. [Google Scholar]

- Xie, H.; Yao, H.; Sun, X.; Zhou, S.; Zhang, S. Pix2vox: Context-aware 3d reconstruction from single and multi-view images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2690–2698. [Google Scholar]

- Melas-Kyriazi, L.; Laina, I.; Rupprecht, C.; Vedaldi, A. RealFusion: 360° reconstruction of any object from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 8446–8455. [Google Scholar]

- Xiang, P.; Wen, X.; Liu, Y.S.; Cao, Y.P.; Wan, P.; Zheng, W.; Han, Z. Snowflake point deconvolution for point cloud completion and generation with skip-transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6320–6338. [Google Scholar] [CrossRef]

- Boulch, A.; Marlet, R. Poco: Point convolution for surface reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6302–6314. [Google Scholar]

- Wen, X.; Zhou, J.; Liu, Y.S.; Su, H.; Dong, Z.; Han, Z. 3D shape reconstruction from 2D images with disentangled attribute flow. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3803–3813. [Google Scholar]

- Wang, D.; Cui, X.; Chen, X.; Zou, Z.; Shi, T.; Salcudean, S.; Wang, Z.J.; Ward, R. Multi-view 3d reconstruction with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 5722–5731. [Google Scholar]

- Kirillov, A.; Wu, Y.; He, K.; Girshick, R. Pointrend: Image segmentation as rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9799–9808. [Google Scholar]

- Chen, Z.; Zhang, H. Learning implicit fields for generative shape modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5939–5948. [Google Scholar]

- Wen, C.; Zhang, Y.; Li, Z.; Fu, Y. Pixel2mesh++: Multi-view 3d mesh generation via deformation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1042–1051. [Google Scholar]

- Jiang, Y.; Ji, D.; Han, Z.; Zwicker, M. Sdfdiff: Differentiable rendering of signed distance fields for 3d shape optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1251–1261. [Google Scholar]

- Wu, J.; Zhang, C.; Zhang, X.; Zhang, Z.; Freeman, W.T.; Tenenbaum, J.B. Learning shape priors for single-view 3d completion and reconstruction. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 646–662. [Google Scholar]

- Ma, W.C.; Yang, A.J.; Wang, S.; Urtasun, R.; Torralba, A. Virtual correspondence: Humans as a cue for extreme-view geometry. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15924–15934. [Google Scholar]

- Goodwin, W.; Vaze, S.; Havoutis, I.; Posner, I. Zero-shot category-level object pose estimation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 516–532. [Google Scholar]

- Myronenko, A.; Song, X. Point Set Registration: Coherent Point Drift. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2262–2275. [Google Scholar] [CrossRef] [PubMed]

- Iglesias, J.P.; Olsson, C.; Kahl, F. Global Optimality for Point Set Registration Using Semidefinite Programming. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8284–8292. [Google Scholar] [CrossRef]

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 573–580. [Google Scholar]

- Yew, Z.J.; Lee, G.H. RPM-Net: Robust Point Matching using Learned Features. arXiv 2020, arXiv:2003.13479. [Google Scholar] [CrossRef]

- Lu, W.; Wan, G.; Zhou, Y.; Fu, X.; Yuan, P.; Song, S. DeepICP: An End-to-End Deep Neural Network for 3D Point Cloud Registration. arXiv 2019, arXiv:1905.04153. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–24 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3354–3361. [Google Scholar]

- Lu, W.; Zhou, Y.; Wan, G.; Hou, S.; Song, S. L3-net: Towards learning based lidar localization for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6389–6398. [Google Scholar]

- Gojcic, Z.; Zhou, C.; Wegner, J.D.; Wieser, A. The Perfect Match: 3D Point Cloud Matching with Smoothed Densities. arXiv 2018, arXiv:1811.06879. [Google Scholar] [CrossRef]

- Zeng, A.; Song, S.; Nießner, M.; Fisher, M.; Xiao, J.; Funkhouser, T. 3dmatch: Learning local geometric descriptors from rgb-d reconstructions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1802–1811. [Google Scholar]

- Gojcic, Z.; Zhou, C.; Wegner, J.D.; Guibas, L.J.; Birdal, T. Learning multiview 3D point cloud registration. arXiv 2020, arXiv:2001.05119. [Google Scholar] [CrossRef]

- Choi, S.; Zhou, Q.Y.; Koltun, V. Robust reconstruction of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5556–5565. [Google Scholar]

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79. [Google Scholar] [CrossRef]

- Sotiras, A.; Davatzikos, C.; Paragios, N. Deformable medical image registration: A survey. IEEE Trans. Med. Imaging 2013, 32, 1153–1190. [Google Scholar] [CrossRef]

- Yang, J.; Li, H.; Campbell, D.; Jia, Y. Go-ICP: A globally optimal solution to 3D ICP point-set registration. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 2241–2254. [Google Scholar] [CrossRef]

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690. [Google Scholar]

- Brynte, L.; Larsson, V.; Iglesias, J.P.; Olsson, C.; Kahl, F. On the tightness of semidefinite relaxations for rotation estimation. J. Math. Imaging Vis. 2022, 64, 57–67. [Google Scholar] [CrossRef]

- Yang, H.; Carlone, L. Certifiably optimal outlier-robust geometric perception: Semidefinite relaxations and scalable global optimization. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2816–2834. [Google Scholar] [CrossRef] [PubMed]

- Huang, S.; Gojcic, Z.; Usvyatsov, M.; Wieser, A.; Schindler, K. Predator: Registration of 3d point clouds with low overlap. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4267–4276. [Google Scholar]

- Yew, Z.J.; Lee, G.H. Regtr: End-to-end point cloud correspondences with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6677–6686. [Google Scholar]

- Bai, X.; Luo, Z.; Zhou, L.; Chen, H.; Li, L.; Hu, Z.; Fu, H.; Tai, C.L. Pointdsc: Robust point cloud registration using deep spatial consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15859–15869. [Google Scholar]

- Fu, K.; Liu, S.; Luo, X.; Wang, M. Robust point cloud registration framework based on deep graph matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8893–8902. [Google Scholar]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. arXiv 2017, arXiv:1706.02413. [Google Scholar] [CrossRef]

- Ren, S.; Chen, X.; Cai, H.; Wang, Y.; Liang, H.; Li, H. Color point cloud registration algorithm based on hue. Appl. Sci. 2021, 11, 5431. [Google Scholar] [CrossRef]

- Yao, W.; Chu, T.; Tang, W.; Wang, J.; Cao, X.; Zhao, F.; Li, K.; Geng, G.; Zhou, M. SPPD: A Novel Reassembly Method for 3D Terracotta Warrior Fragments Based on Fracture Surface Information. ISPRS Int. J. Geo-Inf. 2021, 10, 525. [Google Scholar] [CrossRef]

- Liu, J.; Liang, Y.; Xu, D.; Gong, X.; Hyyppä, J. A ubiquitous positioning solution of integrating GNSS with LiDAR odometry and 3D map for autonomous driving in urban environments. J. Geod. 2023, 97, 39. [Google Scholar] [CrossRef]

- Du, G.; Wang, K.; Lian, S.; Zhao, K. Vision-based robotic grasping from object localization, object pose estimation to grasp estimation for parallel grippers: A review. Artif. Intell. Rev. 2021, 54, 1677–1734. [Google Scholar] [CrossRef]

- Choy, C.; Park, J.; Koltun, V. Fully convolutional geometric features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8958–8966. [Google Scholar]

- Lee, J.; Kim, S.; Cho, M.; Park, J. Deep hough voting for robust global registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 15994–16003. [Google Scholar]

- Lu, F.; Chen, G.; Liu, Y.; Zhang, L.; Qu, S.; Liu, S.; Gu, R. Hregnet: A hierarchical network for large-scale outdoor lidar point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 16014–16023. [Google Scholar]

- Sarode, V.; Dhagat, A.; Srivatsan, R.A.; Zevallos, N.; Lucey, S.; Choset, H. MaskNet: A Fully-Convolutional Network to Estimate Inlier Points. arXiv 2020, arXiv:2010.09185. [Google Scholar] [CrossRef]

- Pistilli, F.; Fracastoro, G.; Valsesia, D.; Magli, E. Learning Graph-Convolutional Representations for Point Cloud Denoising. arXiv 2020, arXiv:2007.02578. [Google Scholar] [CrossRef]

- Luo, S.; Hu, W. Differentiable Manifold Reconstruction for Point Cloud Denoising. arXiv 2020, arXiv:2007.13551. [Google Scholar] [CrossRef]

- Yu, L.; Li, X.; Fu, C.; Cohen-Or, D.; Heng, P. PU-Net: Point Cloud Upsampling Network. arXiv 2018, arXiv:1801.06761. [Google Scholar] [CrossRef]

- Wang, Y.; Wu, S.; Huang, H.; Cohen-Or, D.; Sorkine-Hornung, O. Patch-based Progressive 3D Point Set Upsampling. arXiv 2018, arXiv:1811.11286. [Google Scholar] [CrossRef]

- Nezhadarya, E.; Taghavi, E.; Liu, B.; Luo, J. Adaptive Hierarchical Down-Sampling for Point Cloud Classification. arXiv 2019, arXiv:1904.08506. [Google Scholar] [CrossRef]

- Lang, I.; Manor, A.; Avidan, S. SampleNet: Differentiable Point Cloud Sampling. arXiv 2019, arXiv:1912.03663. [Google Scholar] [CrossRef]

- Zaman, A.; Yangyu, F.; Ayub, M.S.; Irfan, M.; Guoyun, L.; Shiya, L. CMDGAT: Knowledge extraction and retention based continual graph attention network for point cloud registration. Expert Syst. Appl. 2023, 214, 119098. [Google Scholar] [CrossRef]

- Zhang, Z.; Li, T.; Tang, X.; Lei, X.; Peng, Y. Introducing Improved Transformer to Land Cover Classification Using Multispectral LiDAR Point Clouds. Remote Sens. 2022, 14, 3808. [Google Scholar] [CrossRef]

- Huang, X.; Li, S.; Zuo, Y.; Fang, Y.; Zhang, J.; Zhao, X. Unsupervised point cloud registration by learning unified gaussian mixture models. IEEE Robot. Autom. Lett. 2022, 7, 7028–7035. [Google Scholar] [CrossRef]

- Zhao, Y.; Fan, L. Review on Deep Learning Algorithms and Benchmark Datasets for Pairwise Global Point Cloud Registration. Remote Sens. 2023, 15, 2060. [Google Scholar] [CrossRef]

- Shi, C.; Chen, X.; Huang, K.; Xiao, J.; Lu, H.; Stachniss, C. Keypoint matching for point cloud registration using multiplex dynamic graph attention networks. IEEE Robot. Autom. Lett. 2021, 6, 8221–8228. [Google Scholar] [CrossRef]

- Wu, Y.; Zhang, Y.; Fan, X.; Gong, M.; Miao, Q.; Ma, W. Inenet: Inliers estimation network with similarity learning for partial overlapping registration. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 1413–1426. [Google Scholar] [CrossRef]

- Wu, Y.; Zhang, Y.; Ma, W.; Gong, M.; Fan, X.; Zhang, M.; Qin, A.; Miao, Q. RORNet: Partial-to-partial registration network with reliable overlapping representations. IEEE Trans. Neural Netw. Learn. Syst. 2023. [Google Scholar] [CrossRef] [PubMed]

- Chen, C.; Wu, Y.; Dai, Q.; Zhou, H.Y.; Xu, M.; Yang, S.; Han, X.; Yu, Y. A survey on graph neural networks and graph transformers in computer vision: A task-oriented perspective. arXiv 2022, arXiv:2209.13232. [Google Scholar]

- Simonovsky, M.; Komodakis, N. Dynamic edge-conditioned filters in convolutional neural networks on graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3693–3702. [Google Scholar]

- Mou, C.; Zhang, J.; Wu, Z. Dynamic attentive graph learning for image restoration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4328–4337. [Google Scholar]

- Luo, S.; Hu, W. Score-based point cloud denoising. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4583–4592. [Google Scholar]

- Chen, H.; Wei, Z.; Li, X.; Xu, Y.; Wei, M.; Wang, J. Repcd-net: Feature-aware recurrent point cloud denoising network. Int. J. Comput. Vis. 2022, 130, 615–629. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. (TOG) 2019, 38, 1–12. [Google Scholar] [CrossRef]

- Chen, H.; Luo, S.; Gao, X.; Hu, W. Unsupervised learning of geometric sampling invariant representations for 3d point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 893–903. [Google Scholar]

- Zhou, L.; Sun, G.; Li, Y.; Li, W.; Su, Z. Point cloud denoising review: From classical to deep learning-based approaches. Graph. Model. 2022, 121, 101140. [Google Scholar] [CrossRef]

- Liu, W.; Sun, J.; Li, W.; Hu, T.; Wang, P. Deep learning on point clouds and its application: A survey. Sensors 2019, 19, 4188. [Google Scholar] [CrossRef]

- Yin, T.; Zhou, X.; Krähenbühl, P. Multimodal virtual point 3d detection. Adv. Neural Inf. Process. Syst. 2021, 34, 16494–16507. [Google Scholar]

- Xu, Q.; Zhou, Y.; Wang, W.; Qi, C.R.; Anguelov, D. Spg: Unsupervised domain adaptation for 3d object detection via semantic point generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 15446–15456. [Google Scholar]

- Xiang, P.; Wen, X.; Liu, Y.S.; Cao, Y.P.; Wan, P.; Zheng, W.; Han, Z. Snowflakenet: Point cloud completion by snowflake point deconvolution with skip-transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 5499–5509. [Google Scholar]

- Li, R.; Li, X.; Fu, C.W.; Cohen-Or, D.; Heng, P.A. Pu-gan: A point cloud upsampling adversarial network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7203–7212. [Google Scholar]

- Wang, X.; Ang, M.H., Jr.; Lee, G.H. Cascaded refinement network for point cloud completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 790–799. [Google Scholar]

- Lang, I.; Manor, A.; Avidan, S. Samplenet: Differentiable point cloud sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7578–7588. [Google Scholar]

- Chen, C.; Chen, Z.; Zhang, J.; Tao, D. Sasa: Semantics-augmented set abstraction for point-based 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 22 February–1 March 2022; Volume 36, pp. 221–229. [Google Scholar]

- Cui, B.; Tao, W.; Zhao, H. High-precision 3D reconstruction for small-to-medium-sized objects utilizing line-structured light scanning: A review. Remote Sens. 2021, 13, 4457. [Google Scholar] [CrossRef]

- Liu, K.; Gao, Z.; Lin, F.; Chen, B.M. Fg-net: A fast and accurate framework for large-scale lidar point cloud understanding. IEEE Trans. Cybern. 2022, 53, 553–564. [Google Scholar] [CrossRef]

- Liu, K.; Gao, Z.; Lin, F.; Chen, B.M. Fg-net: Fast large-scale lidar point clouds understanding network leveraging correlated feature mining and geometric-aware modelling. arXiv 2020, arXiv:2012.09439. [Google Scholar]

- Wang, Y.; Yan, C.; Feng, Y.; Du, S.; Dai, Q.; Gao, Y. Storm: Structure-based overlap matching for partial point cloud registration. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1135–1149. [Google Scholar] [CrossRef] [PubMed]

- Yang, L.; Shrestha, R.; Li, W.; Liu, S.; Zhang, G.; Cui, Z.; Tan, P. Scenesqueezer: Learning to compress scene for camera relocalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8259–8268. [Google Scholar]

- Wang, T.; Yuan, L.; Chen, Y.; Feng, J.; Yan, S. Pnp-detr: Towards efficient visual analysis with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4661–4670. [Google Scholar]

- Zhu, M.; Ghaffari, M.; Peng, H. Correspondence-free point cloud registration with SO(3)-equivariant implicit shape representations. In Proceedings of the Conference on Robot Learning, Auckland, New Zealand, 14–18 December 2022; pp. 1412–1422. [Google Scholar]

- Wang, H.; Pang, J.; Lodhi, M.A.; Tian, Y.; Tian, D. Festa: Flow estimation via spatial-temporal attention for scene point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14173–14182. [Google Scholar]

- Lv, C.; Lin, W.; Zhao, B. Approximate intrinsic voxel structure for point cloud simplification. IEEE Trans. Image Process. 2021, 30, 7241–7255. [Google Scholar] [CrossRef] [PubMed]

- Yang, P.; Snoek, C.G.; Asano, Y.M. Self-Ordering Point Clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 15813–15822. [Google Scholar]

- Yuan, W.; Khot, T.; Held, D.; Mertz, C.; Hebert, M. Pcn: Point completion network. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 728–737. [Google Scholar]

- Zamanakos, G.; Tsochatzidis, L.; Amanatiadis, A.; Pratikakis, I. A comprehensive survey of LIDAR-based 3D object detection methods with deep learning for autonomous driving. Comput. Graph. 2021, 99, 153–181. [Google Scholar] [CrossRef]

- Chen, X.; Chen, B.; Mitra, N.J. Unpaired point cloud completion on real scans using adversarial training. arXiv 2019, arXiv:1904.00069. [Google Scholar]

- Achituve, I.; Maron, H.; Chechik, G. Self-supervised learning for domain adaptation on point clouds. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 123–133. [Google Scholar]

- Liu, M.; Sheng, L.; Yang, S.; Shao, J.; Hu, S.M. Morphing and sampling network for dense point cloud completion. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11596–11603. [Google Scholar]

- Zhou, L.; Du, Y.; Wu, J. 3d shape generation and completion through point-voxel diffusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 5826–5835. [Google Scholar]

- Xie, H.; Yao, H.; Zhou, S.; Mao, J.; Zhang, S.; Sun, W. Grnet: Gridding residual network for dense point cloud completion. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 365–381. [Google Scholar]

- Pan, L.; Chen, X.; Cai, Z.; Zhang, J.; Zhao, H.; Yi, S.; Liu, Z. Variational relational point completion network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8524–8533. [Google Scholar]

- Zhang, J.; Chen, X.; Cai, Z.; Pan, L.; Zhao, H.; Yi, S.; Yeo, C.K.; Dai, B.; Loy, C.C. Unsupervised 3d shape completion through gan inversion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1768–1777. [Google Scholar]

- Huang, Z.; Yu, Y.; Xu, J.; Ni, F.; Le, X. Pf-net: Point fractal network for 3d point cloud completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7662–7670. [Google Scholar]

- Fei, B.; Yang, W.; Chen, W.M.; Li, Z.; Li, Y.; Ma, T.; Hu, X.; Ma, L. Comprehensive review of deep learning-based 3d point cloud completion processing and analysis. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22862–22883. [Google Scholar] [CrossRef]

- Yan, X.; Lin, L.; Mitra, N.J.; Lischinski, D.; Cohen-Or, D.; Huang, H. Shapeformer: Transformer-based shape completion via sparse representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6239–6249. [Google Scholar]

- Zhou, H.; Cao, Y.; Chu, W.; Zhu, J.; Lu, T.; Tai, Y.; Wang, C. Seedformer: Patch seeds based point cloud completion with upsample transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 416–432. [Google Scholar]

| Model | Dataset | Data Representation |

|---|---|---|

| PointOutNet [9] | ShapeNet [10], 3D-R2N2 [11] | Point Cloud |

| Pseudo-renderer [12] | ShapeNet [10] | Point Cloud |

| RealPoint3D [13] | ShapeNet [10], ObjectNet3D [14] | Point Cloud |

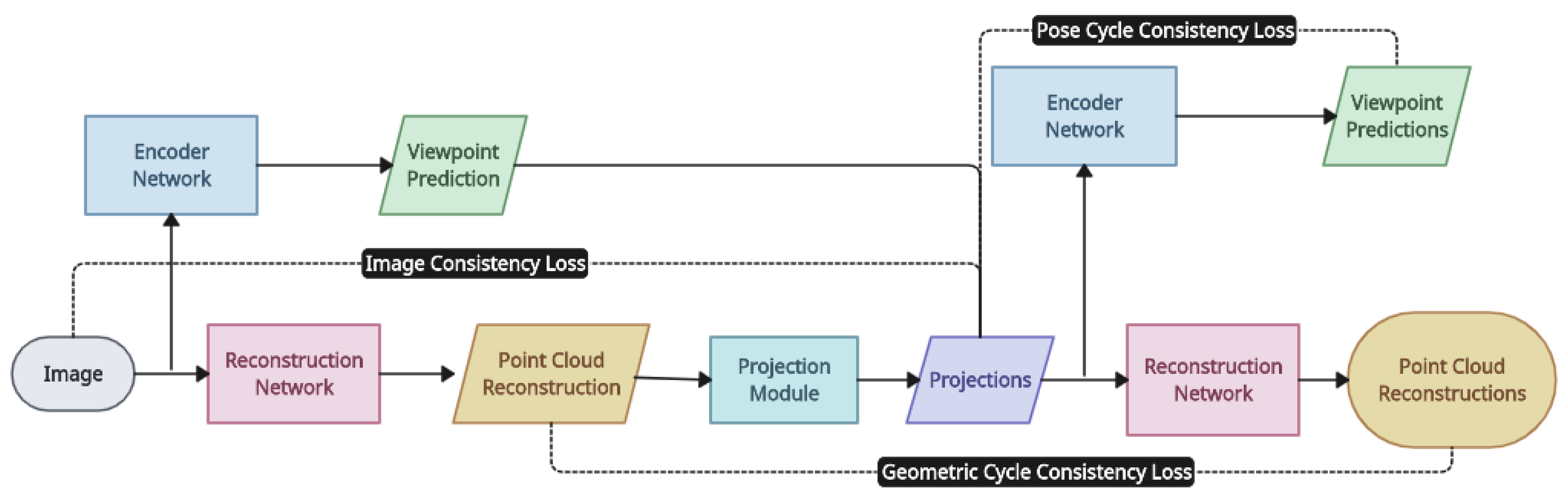

| Cycle-consistency-based approach [15] | ShapeNet [10], Pix3D [16] | Point Cloud |

| 3D34D [17] | ShapeNet [10] | Point Cloud |

| Unsupervised learning of 3D structure [18] | ShapeNet [10], MNIST3D [19] | Point Cloud |

| Models | Dataset | Data Representation |

|---|---|---|

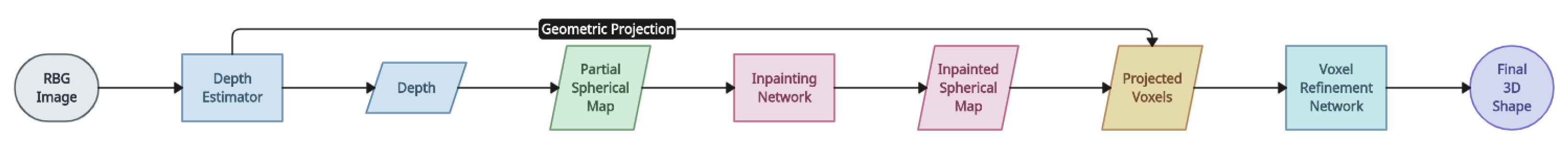

| GenRe [20] | ShapeNet [10], Pix3D [16] | Voxels |

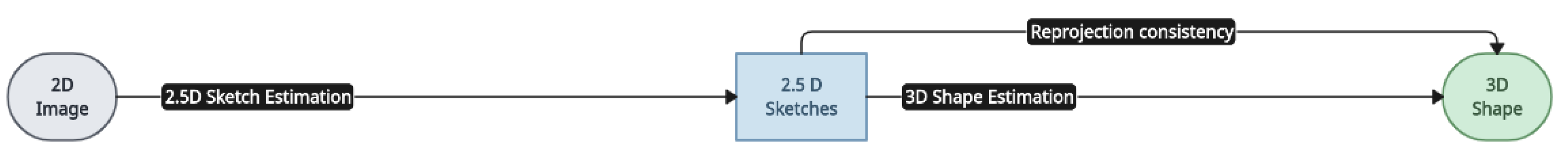

| MarrNet [21] | ShapeNet [10], PASCAL3D+ [22] | Voxels |

| Perspective Transformer Nets [23] | ShapeNet [10] | Voxels |

| Rethinking reprojection [24] | ShapeNet [10], PASCAL3D+ [22], SUN [25], MS COCO [26] | Voxels |

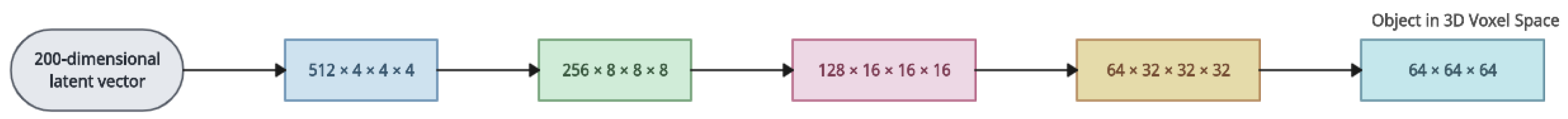

| 3D-GAN [27] | ModelNet [28], IKEA [29] | Voxels |

| Pix2Vox++ [30] | ShapeNet [10], Pix3D [16], Things3D [30] | Voxels |

| 3D-R2N2 [11] | ShapeNet [10], PASCAL3D+ [22], MVS CAD 3D [11] | Voxels |

| Weak recon [31] | ShapeNet [10], ObjectNet3D [14] | Voxels |

| Relative viewpoint estimation [32] | ShapeNet [10], Pix3D [16], Things3D [30] | Voxels |

| Datasets | Number of Frames | Number of Labels | Object Type | 5 Common Classes |

|---|---|---|---|---|

| ModelNet [28] | 151,128 | 660 | 3D CAD Scans | Bed, Chair, Desk, Sofa, Table |

| PASCAL3D+ [22] | 30,899 | 12 | 3D CAD Scans | Boat, Bus, Car, Chair, Sofa |

| ShapeNet [10] | 220,000 | 3135 | Scans of Artefacts, Plants, People | Table, Car, Chair, Sofa, Rifle |

| ObjectNet3D [14] | 90,127 | 100 | Scans of Artefacts, Vehicles | Bed, Car, Door, Fan, Key |

| ScanNet [39] | 2,492,518 | 1513 | Scans of Bedrooms, Kitchens, Offices | Bed, Chair, Door, Desk, Floor |

| Nr. | Model | Dataset | Data Representation |

|---|---|---|---|

| 1 | PointOutNet [9] | ShapeNet [10], 3D-R2N2 [11] | Point Cloud |

| 2 | Pseudo-renderer [12] | ShapeNet [10] | Point Cloud |

| 3 | RealPoint3D [13] | ShapeNet [10], ObjectNet3D [14] | Point Cloud |

| 4 | Cycle-consistency-based approach [15] | ShapeNet [10], Pix3D [16] | Point Cloud |

| 5 | GenRe [20] | ShapeNet [10], Pix3D [16] | Voxels |

| 6 | MarrNet [21] | ShapeNet [10], PASCAL3D+ [22] | Voxels |

| 7 | Perspective Transformer Nets [23] | ShapeNet [10] | Voxels |

| 8 | Rethinking reprojection [24] | ShapeNet [10], PASCAL3D+ [22], SUN [25], MS COCO [26] | Voxels |

| 9 | 3D-GAN [27] | ModelNet [28], IKEA [29] | Voxels |

| 10 | Neural renderer [35] | ShapeNet [10] | Meshes |

| 11 | Residual MeshNet [36] | ShapeNet [10] | Meshes |

| 12 | Pixel2Mesh [37] | ShapeNet [10] | Meshes |

| 13 | CoReNet [38] | ShapeNet [10] | Meshes |

| Model | Advantages | Limitations |

|---|---|---|

| PointOutNet [9] | Introduces the chamfer distance loss, which is invariant to the permutation of points and is adopted by many other models as a regulariser. | Uses less memory, but the generated point clouds lack connectivity information and therefore need extensive postprocessing. |

| Pseudo-renderer [12] | Uses 2D supervision in addition to 3D supervision, obtaining multiple projection images of the generated 3D shape from various viewpoints for optimisation. | Predicts denser, more accurate point clouds but is limited by the number of points that point-cloud-based representations can accommodate. |

| RealPoint3D [13] | Attempts to recreate 3D models from natural photographs with complicated backgrounds. | Needs encoders to extract both the 2D features of the input image and the 3D features of the input point cloud data. |

| Cycle-consistency-based approach [15] | Uses a differentiable renderer to infer a 3D shape without using ground truth 3D annotation. | Cycle consistency produces deformed body structures or out-of-view images if it is unaware of the prior distribution of the 3D features, which interferes with the training process. |

| GenRe [20] | Can rebuild 3D objects at resolutions of up to 128 × 128 × 128, with more detailed reconstruction outcomes. | Reaches higher resolutions at the expense of slow training or lossy 2D projections, as well as small training batches. |

| MarrNet [21] | Avoids modelling object appearance variations within the original image by generating 2.5D sketches from it. | Relies on 3D supervision, which is only available for restricted classes or in a synthetic setting. |

| Perspective Transformer Nets [23] | Learns 3D volumetric representations from 2D observations based on principles of projective geometry. | Struggles to produce images that are consistent across several views as the underlying 3D scene structure cannot be utilised. |

| Rethinking reprojection [24] | Decoupling shape and pose lowers the number of free parameters in the network, increasing efficiency. | Assumes that the scene or object to be registered is either non-deformable or generally static. |

| 3D-GAN [27] | Generative component aims to map a latent space to a distribution of intricate 3D shapes. | GAN training is notoriously unreliable. |

| Neural renderer [35] | Objects are trained in canonical pose. | This mesh renderer modifies geometry and colour in response to a target image. |

| Residual MeshNet [36] | Reconstructs 3D meshes using MLPs in a cascaded hierarchical fashion. | Produces the mesh automatically during the finite element method (FEM) computation process, but this neither saves time nor increases computing productivity. |

| Pixel2Mesh [37] | Extracts perceptual features from the input image and gradually deforms an ellipsoid in order to obtain the output geometry. | As in real-world scenarios, the training data for 3D shape reconstruction do not include several perspectives of the target object or scene. |

| CoReNet [38] | Reconstructs the shape and semantic class of many objects directly in a 3D volumetric grid using a single RGB image. | Training on synthetic representations restricts their practicality in real-world situations. |
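
The permutation invariance attributed to the chamfer distance in the PointOutNet row can be illustrated with a minimal NumPy sketch. It assumes one common variant (mean of squared nearest-neighbour distances in both directions); the exact normalisation used by a given model may differ, and the function name `chamfer_distance` is chosen here for illustration only.

```python
import numpy as np


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric chamfer distance between point sets a (N, 3) and b (M, 3).

    Assumed variant: mean squared nearest-neighbour distance, summed over
    both directions. Other papers use sums or unsquared distances.
    """
    # Pairwise squared Euclidean distances, shape (N, M).
    diff = a[:, None, :] - b[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # Nearest neighbour in the other set, averaged over each set.
    a_to_b = d2.min(axis=1).mean()
    b_to_a = d2.min(axis=0).mean()
    return float(a_to_b + b_to_a)


# Reordering the predicted points leaves the loss unchanged.
rng = np.random.default_rng(0)
pred = rng.random((128, 3))
target = rng.random((96, 3))
shuffled = pred[rng.permutation(len(pred))]
assert np.isclose(chamfer_distance(pred, target),
                  chamfer_distance(shuffled, target))
```

The dense (N, M) distance matrix keeps the sketch short but grows quadratically with the number of points; practical implementations typically use a spatial index or a GPU kernel instead.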

| Nr. | Model | Dataset | Data Representation |

|---|---|---|---|

| 1 | 3D34D [17] | ShapeNet [10] | Point Cloud |

| 2 | Unsupervised learning of 3D structure [18] | ShapeNet [10], MNIST3D [19] | Point Cloud |

| 3 | Pix2Vox++ [30] | ShapeNet [10], Pix3D [16], Things3D [30] | Voxels |

| 4 | 3D-R2N2 [11] | ShapeNet [10], PASCAL3D+ [22], MVS CAD 3D [11] | Voxels |

| 5 | Weak recon [31] | ShapeNet [10], ObjectNet3D [14] | Voxels |

| 6 | Relative viewpoint estimation [32] | ShapeNet [10], Pix3D [16], Things3D [30] | Voxels |

| Model | Advantages | Limitations |

|---|---|---|

| 3D34D [17] | Obtains a more expressive intermediate shape representation by locally assigning features and 3D points. | Performs well on synthetic objects rendered with a clean background, but not on real photos, novel categories, or more intricate object geometries. |

| Unsupervised learning of 3D structure [18] | Optimises 3D representations to provide realistic 2D images from all randomly sampled views. | Only basic and coarse shapes can be reconstructed. |

| Pix2Vox++ [30] | Generates a coarse volume for each input image. | Because of memory limitations, the model’s cubic complexity in space results in coarse discretisations. |

| 3D-R2N2 [11] | Converts RGB image partial inputs into a latent vector, which is then used to predict the complete volumetric shape using previously learned priors. | Only works with coarse 64 × 64 × 64 grids. |

| Weak recon [31] | Proposes lower-cost 2D supervision as an alternative to costly 3D CAD annotation. | Reconstructions are hampered by the weakly supervised setting. |

| Relative viewpoint estimation [32] | Predicts a transformation that optimally matches the bottleneck features of two input images during testing. | Can only predict pose for instances of a single object and does not extend to the category level. |
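
The cubic space complexity noted for voxel-based models can be made concrete with a short arithmetic sketch. It assumes a dense grid with one 32-bit float per voxel, which is an illustrative assumption; real networks also store multi-channel feature volumes, so actual memory use is considerably higher.

```python
def voxel_grid_bytes(resolution: int, bytes_per_voxel: int = 4) -> int:
    """Memory of one dense resolution^3 grid (assumes float32 occupancy)."""
    return resolution ** 3 * bytes_per_voxel


for r in (32, 64, 128, 256):
    mib = voxel_grid_bytes(r) / 2 ** 20
    print(f"{r}^3 grid: {mib:g} MiB")
# 32^3 grid: 0.125 MiB
# 64^3 grid: 1 MiB
# 128^3 grid: 8 MiB
# 256^3 grid: 64 MiB
```

Each doubling of the resolution multiplies the footprint by eight, which is why dense voxel methods tend to stay at coarse discretisations.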

| Nr. | Model | Dataset | Data Representation |

|---|---|---|---|

| 1 | CPD [136] | Stanford Bunny [33] | Meshes |

| 2 | PSR-SDP [137] | TUM RGB-D [138] | Point Cloud |

| 3 | RPM-Net [139] | ModelNet [28] | Meshes |

| 4 | DeepICP [140] | KITTI [141], SouthBay [142] | Point Cloud, Voxels |

| 5 | 3DSmoothNet [143] | 3DMatch [144] | Point Cloud, Voxels |

| 6 | 3D multi-view registration [145] | 3DMatch [144], Redwood [146], ScanNet [39] | Point Cloud |

| Model | Advantages | Limitations |

|---|---|---|

| CPD [136] | Considers the alignment as a probability density estimation problem, where one point cloud set represents the Gaussian mixture model centroids, and the other represents the data points. | While GMM-based methods might increase resilience against outliers and bad initialisations, local search remains the foundation of the optimisation. |

| PSR-SDP [137] | Allows for verifying the global optimality of a local minimiser in a significantly faster manner. | Provides poor estimates even in the presence of a single outlier because it assumes that all measurements are inliers. |

| RPM-Net [139] | Able to solve the partial visibility of the point cloud and obtain a soft assignment of point correspondences. | Computational efficacy increases as the number of points in the point clouds increases. |

| DeepICP [140] | By creating a connection using the point cloud’s learned attributes, this study improved the conventional ICP algorithm using the neural network technique. | Takes a lot of computing effort to combine deep learning with ICP directly. |

| 3DSmoothNet [143] | First learned, universal matching method that allows transferring trained models between modalities. | 290 times slower than the FCGF [162] model. |

| 3D multi-view registration [145] | First end-to-end algorithm for joint learning of both stages of the registration problem. | Requires a large amount of training data. |
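The probabilistic view attributed to CPD [136] in the table above, in which one point set supplies the GMM centroids and the other supplies the data points, can be illustrated with the soft-assignment (posterior) step. The following is a minimal sketch rather than the authors' implementation. It assumes an isotropic variance shared by all components and an optional uniform outlier component. The function name `cpd_responsibilities` and the parameters `sigma2` and `outlier_weight` are hypothetical names chosen for illustration.

```python
import math


def cpd_responsibilities(data_points, centroids, sigma2, outlier_weight=0.0):
    """Return P[m][n], the posterior that centroid m generated data point n.

    Assumptions (illustrative only): equal mixing weights, one isotropic
    variance sigma2 for every Gaussian, and a uniform outlier component
    whose weight is outlier_weight (0 <= outlier_weight < 1).
    """
    n_points, n_centroids = len(data_points), len(centroids)
    dim = len(data_points[0])
    # Constant added to the denominator by the uniform outlier component.
    # It is zero when outlier_weight is zero.
    outlier_term = (
        (2.0 * math.pi * sigma2) ** (dim / 2.0)
        * outlier_weight / (1.0 - outlier_weight)
        * n_centroids / n_points
    )
    posteriors = [[0.0] * n_points for _ in range(n_centroids)]
    for n, x in enumerate(data_points):
        # Unnormalised Gaussian affinity between x and every centroid.
        kernels = [
            math.exp(-math.dist(x, y) ** 2 / (2.0 * sigma2)) for y in centroids
        ]
        denom = sum(kernels) + outlier_term
        for m, k in enumerate(kernels):
            # Guard against a fully underflowed column.
            posteriors[m][n] = k / denom if denom > 0.0 else 0.0
    return posteriors


# Usage: two identical, well-separated point sets give near one-hot columns.
points = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
P = cpd_responsibilities(points, points, sigma2=1.0)
```

Because every centroid contributes to every column of `P`, the assignment is soft. The limitation noted in the table still applies: the posteriors are recomputed around the current alignment, so the optimisation remains a local search.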

| Nr. | Model | Dataset | Data Representation |

|---|---|---|---|

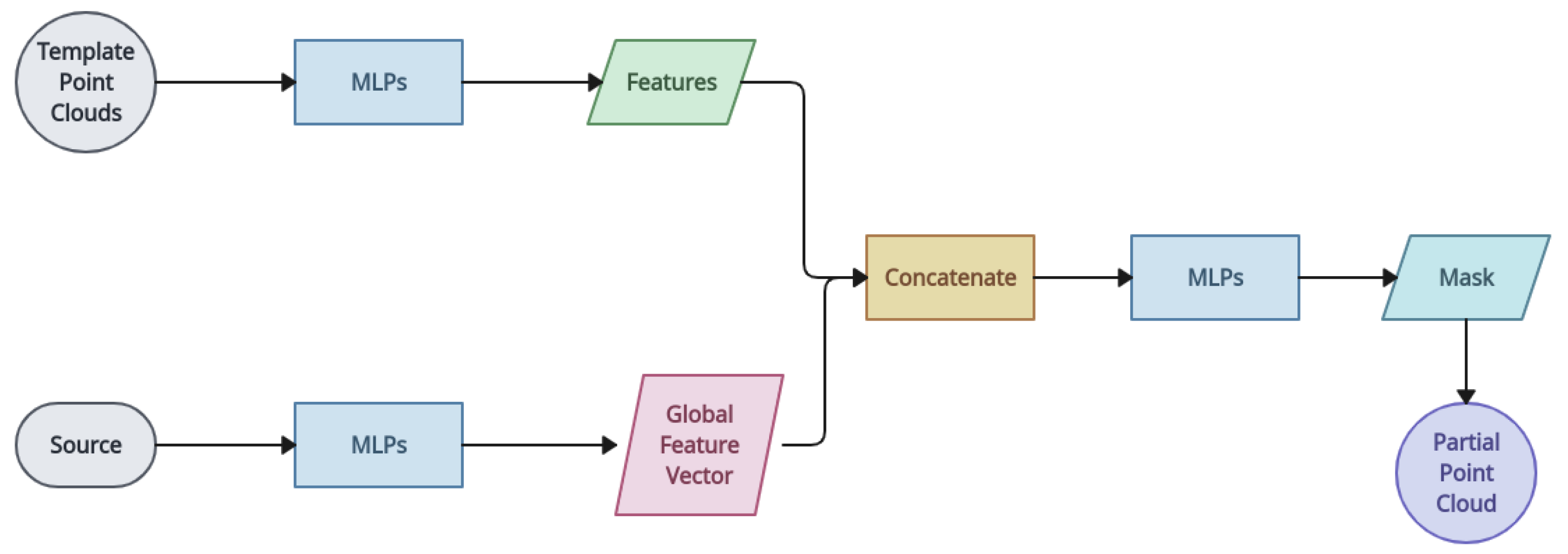

| 1 | MaskNet [165] | S3DIS [3], 3DMatch [144], ModelNet [28] | Point Cloud |

| 2 | GPDNet [166] | ShapeNet [10] | Point Cloud |

| 3 | DMR [167] | ModelNet [28] | Point Cloud |

| 4 | PU-Net [168] | ModelNet [28], ShapeNet [10] | Point Cloud |

| 5 | MPU [169] | ModelNet [28], MNIST-CP [19] | Point Cloud |

| 6 | CP-Net [170] | ModelNet [28] | Point Cloud |

| 7 | SampleNet [171] | ModelNet [28], ShapeNet [10] | Point Cloud |

| Model | Advantages | Limitations |

|---|---|---|

| MaskNet [165] | Rejects noise even in partial point clouds at a relatively low computational cost. | Requires the input of both a partial and complete point cloud. |

| GPDNet [166] | Deals with the permutation-invariance problem and builds hierarchies of local or non-local features to effectively address the denoising problem. | The point clouds’ geometric characteristics are often oversmoothed. |

| DMR [167] | Patch manifold reconstruction (PMR) upsampling technique is straightforward and efficient. | Downsampling step invariably results in detail loss, especially at low noise levels, and could also oversmooth by removing some useful information. |

| PU-Net [168] | Both reconstruction loss and repulsion loss are jointly utilised to improve the quality of the output. | Only learns spatial relationships at a single level of multi-step point cloud decoding via self-attention. |

| MPU [169] | Trained end-to-end on high-resolution point clouds and emphasises a certain level of detail by altering the spatial span of the receptive field in various steps. | Cannot be used for completion tasks and is restricted to upsampling sparse locations. |

| CP-Net [170] | Final representations typically retain crucial points that take up a significant number of channels. | Potential loss of information due to the down-sampling process. |

| SampleNet [171] | Sampling procedure for the representative point cloud classification problem becomes differentiable, allowing for end-to-end optimisation. | Fails to attain a satisfactory equilibrium between maintaining geometric features and uniform density. |
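To make the idea of a repulsion loss concrete (the table above notes that PU-Net [168] combines it with a reconstruction loss), the sketch below computes a simplified repulsion-style penalty over a small point set. It is an illustration under stated assumptions, not the exact formulation or code of PU-Net: the function name `repulsion_penalty`, the neighbourhood size `k`, the bandwidth `h`, and the specific form of the penalty, -r * exp(-r^2 / h^2), are chosen here for illustration.

```python
import math


def repulsion_penalty(points, k=2, h=0.07):
    """Simplified repulsion-style penalty (illustrative assumption).

    For each point, the distances r to its k nearest neighbours contribute
    -r * exp(-r^2 / h^2). Lower values mean neighbours are spread out
    rather than collapsed onto each other.
    """
    total = 0.0
    for i, p in enumerate(points):
        # Distances from p to every other point, nearest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for r in dists[:k]:
            total += -r * math.exp(-(r * r) / (h * h))
    return total


# Usage: a tightly clustered set is penalised more than an evenly spaced one.
clustered = [(0.001 * i, 0.0, 0.0) for i in range(5)]
spread = [(0.05 * i, 0.0, 0.0) for i in range(5)]
print(repulsion_penalty(clustered), repulsion_penalty(spread))
```

Minimising such a term alone would only push points apart, which is why it is paired with a reconstruction term that keeps the upsampled points on the underlying surface.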

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vinodkumar, P.K.; Karabulut, D.; Avots, E.; Ozcinar, C.; Anbarjafari, G. Deep Learning for 3D Reconstruction, Augmentation, and Registration: A Review Paper. Entropy 2024, 26, 235. https://doi.org/10.3390/e26030235
