Abstract

We explore formal similarities and mathematical transformation formulas between general trace-form entropies and the Gini index, originally used in quantifying income and wealth inequalities. We utilize the notion of gintropy, introduced in our earlier works as a certain property of the Lorenz curve drawn in the map of the tail-integrated cumulative population and wealth fractions. In particular, we rediscover Tsallis’ q-entropy formula related to the Pareto distribution. As a novel result, we express the traditional entropy in terms of gintropy and reconstruct further non-additive formulas. A dynamical model calculation of the evolution of the Gini index is also presented.

1. Motivation

This paper responds to a call by the journal to accompany various contributions in honor of Constantino Tsallis’ 80th birthday. Professor Tsallis initiated the field of non-extensive statistical mechanics with his seminal paper in 1988 [1] and has kept this field flourishing with his continuous activity since then. One of his recent books on non-extensive statistical mechanics [2] has the subtitle “Approaching a Complex World”. This subtitle characterizes the range of research fields, beyond physics, where non-additive entropy formulas can be applied [3,4,5,6,7]. Adding a physicist’s approach to the mathematical predecessor formulas, such as Rényi entropy [8], and further generalizations of the Boltzmannian log-formula proliferating in the field of informatics and mathematics [9,10,11,12], his work is acknowledged to date in a wide and strengthening community of researchers dealing with complexity [13,14,15,16,17,18,19,20].

Over the years, newcomers and opponents of non-extensive thermodynamics have often argued that a relation between entropy and probability other than the classical Boltzmann–Gibbs–Shannon formula deserves general use only once it moves beyond being a mere formal alternative, that is, when it must be applied. Therefore, there is an ongoing challenge to find real-world data and applications that can only be described by a non-Boltzmannian entropy formula. Such cases are found with increasing frequency in complex systems. An interesting approach is presented in [21]: it shows how to analyze nuclear production data to reveal non-extensive thermodynamics. (Our earlier calculations of fluctuations and deviations from an exponential kinetic energy distribution due to the finiteness of a heat bath, presented in several publications, should not be cited here, because the Editors at MDPI consider self-citations, even one sixth of the total, to be biased and unnecessary.)

The Tsallis and Rényi entropy formulas are monotonic functions of one another; therefore, their respective canonical equilibrium distribution functions coincide, apart from constant factors related to the partition sum. Since the Rényi entropy is defined as

$$ S_R = \frac{1}{1-q} \ln \sum_i p_i^{\,q} \tag{1} $$

and the Tsallis entropy as

$$ S_T = \frac{1}{1-q} \sum_i \left( p_i^{\,q} - p_i \right) \tag{2} $$

one obtains, in the canonical approach to the physical energy distribution,

In this formula, the physical energy levels appear together with two Lagrange multipliers. The first is related to the partition function and the second to the absolute temperature (via the average value of the energy). The prefactor in Equation (3) is independent of the energy level; therefore, the functional forms of the canonical PDFs coincide, reconstructing the Pareto or Lomax distribution [22,23,24,25].

In a microcanonical approach, all trace-form entropies are maximal at the distribution uniform in x, provided that the non-trivial function in the formula satisfies the general properties of non-negativity and convexity. Constraining the expectation value of the base variable, $x$, whose probability density function, $\rho(x)$, we intend to study, leads to an entropy depending on the constrained value, say $S(E)$ for an energy (E) distribution. These functions, of course, vary from one entropy formula to another. The properties of entropy formulas also differ: while the Rényi entropy is additive for the factorization of probabilities and the Tsallis q-entropy is not, the q-entropy is formally an expectation value and the Rényi entropy is not.

In this paper, we first briefly review the Gini index and the Lorenz curve, spanning a map of the tail-cumulative fractions of a population and the wealth owned by this population. We furthermore review the definition and basic properties of gintropy, defined as the difference between the above two cumulatives. Following this, we introduce some gintropy formulas that are formal counterparts of well-known and widely used entropies. Finally, we explore the transformations from one view (entropic) to the other (gintropic) and present a dynamical model calculation of the evolution of the Gini index based on a master equation.

2. About Gintropy

In our search for additional motivation for the use of non-Boltzmannian entropy formulas, we encounter the Gini index [26,27,28], classically used in income and wealth data analyses. It measures the expectation value of the absolute difference, $|x - y|$, normalized by that of the sum, $x + y$, when taking both variables from the same distribution. It delivers values between zero and one (100%):

$$ G = \frac{\langle |x - y| \rangle}{\langle x + y \rangle} = \frac{\int_0^\infty dx \int_0^\infty dy \, |x - y| \, \rho(x) \rho(y)}{\int_0^\infty dx \int_0^\infty dy \, (x + y) \, \rho(x) \rho(y)} \tag{4} $$

Here, $\rho(x)$ is the underlying PDF. This formula can be transformed into several alternate forms, as has been shown in Ref. [29] in detail. We have also found that a function defined by tail-cumulative functions, gintropy, has properties very similar to those of an entropy–probability trace formula function.
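The definition of the Gini index as a normalized mean absolute difference can be tried out directly on a finite sample. The following is a minimal sketch, not taken from the original work: the function name `gini_mean_difference` and the exponential test sample are illustrative assumptions, and the sample average over all ordered pairs stands in for the expectation values.

```python
import random


def gini_mean_difference(sample):
    """Gini index as <|x - y|> / <x + y>, with x and y drawn from the sample."""
    xs = sorted(sample)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total <= 0:
        raise ValueError("need a non-empty, non-negative sample with positive sum")
    # For ascending data, the sum of |x_i - x_j| over all ordered pairs
    # equals 2 * sum_i (2*i - n + 1) * x_i with the 0-based index i.
    abs_diff_sum = 2.0 * sum((2 * i - n + 1) * x for i, x in enumerate(xs))
    # The sum of (x_i + x_j) over all ordered pairs equals 2 * n * total.
    return abs_diff_sum / (2.0 * n * total)


if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed, illustrative only
    incomes = [rng.expovariate(1.0) for _ in range(20_000)]
    print(gini_mean_difference([1.0, 1.0, 1.0]))  # equal shares: no inequality
    print(gini_mean_difference(incomes))          # exponential PDF: close to 1/2
```

Sorting first avoids the quadratic double loop over pairs; for an exponential PDF the estimate should approach the known value of 1/2 as the sample grows.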

Two basic tail-cumulative functions constitute the definition and usefulness of gintropy. The first is the cumulative population,

$$ \bar{C}(x) = \int_x^\infty \rho(y) \, dy \tag{5} $$

and the second is the cumulative wealth normalized by its average value (also called the scaled and (from below) truncated expectation value),

$$ \bar{F}(x) = \frac{1}{\langle x \rangle} \int_x^\infty y \, \rho(y) \, dy \tag{6} $$

We note here that the notions “population” and “wealth” are used in a general sense: any type of real random variable x associated with a well-defined PDF, $\rho(x)$, has a tail-cumulative fraction $\bar{C}(x)$ (cf. Equation (5)) and a scaled fraction of the occurrence of the basic variable, $\bar{F}(x)$, defined in Equation (6). For example, x may denote the number of citations that an individual author receives and the distribution of this number in the analyzed population. Then, $\bar{C}(x)$ is the fraction of papers cited x times or more, and $\bar{F}(x)$ is the fraction of citations received for these relative to all citations [30]. The above definitions and the following analysis of gintropy can be used for any PDF defined on non-negative variables and having a finite expectation value.

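The Lorenz map [31] plots the essence of a PDF on the $\bar{C}$–$\bar{F}$ plane, where $\bar{C}$ is the tail-cumulative population and $\bar{F}$ the tail-cumulative wealth fraction. Since always $\bar{F} \ge \bar{C}$, following from the positivity of the PDF, $\rho(x) \ge 0$, the Lorenz curve always runs on this map above or on the diagonal. At $x \to \infty$, both quantities are vanishing, $\bar{C} = \bar{F} = 0$, because the integration range shrinks to zero, and they also coincide at $x = 0$, following from their normalized definitions: $\bar{C}(0) = \bar{F}(0) = 1$. The Gini index can be described as the ratio of the area between the Lorenz curve and the diagonal to the area of the whole upper triangle (which is 1/2). The quantity of gintropy, introduced by us in an earlier work [29], is the difference

$$ \sigma = \bar{F} - \bar{C} \tag{7} $$

This is a function of the fiducial variable x, and it vanishes identically only for those PDFs that allow only a single value for x. The gintropy is non-negative and it shows a definite sign of curvature. On the Lorenz map, it is best viewed and expressed as a function of $\bar{C}$. The connection between these two variables, derived from Equation (5), is given by $d\bar{C} = -\rho(x) \, dx$. Likewise, $d\bar{F} = -(x / \langle x \rangle) \, \rho(x) \, dx$ follows from the definition in Equation (6). Then, it is easy to establish that it has a maximum exactly at the average case, $x = \langle x \rangle$:

$$ \frac{d\sigma}{d\bar{C}} = \frac{x}{\langle x \rangle} - 1 = 0 \tag{8} $$

The second derivative of gintropy in the Lorenz map is always negative:

$$ \frac{d^2\sigma}{d\bar{C}^2} = -\frac{1}{\langle x \rangle \, \rho(x)} < 0 \tag{9} $$

As a consequence, the gintropy, $\sigma(\bar{C})$, has a single maximum (between two maxima, there would be a region with an opposite-sign second derivative for a continuous function). This maximum can be expressed as a function of the average value:

$$ \sigma_{\max} = \sigma(\langle x \rangle) = \bar{F}(\langle x \rangle) - \bar{C}(\langle x \rangle) = \int_{\langle x \rangle}^\infty \left( \frac{x}{\langle x \rangle} - 1 \right) \rho(x) \, dx \tag{10} $$

Finally, the Gini index itself is twice the area under the gintropy:

$$ G = 2 \int_0^1 \sigma(\bar{C}) \, d\bar{C} \tag{11} $$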

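The construction described in this section (tail-cumulative population, tail-cumulative wealth, their difference, and twice the area under it) can be illustrated on a finite sample. This is a hedged sketch under our own assumptions: the helper names `lorenz_gintropy` and `gini_from_gintropy` are hypothetical, the sample is treated as an empirical PDF, and the area is evaluated with the trapezoid rule.

```python
def lorenz_gintropy(sample):
    """Return lists (C, F, sigma): tail-cumulative population, wealth, gintropy."""
    xs = sorted(sample, reverse=True)  # richest first, i.e. the tail comes first
    n = len(xs)
    total = float(sum(xs))
    if n == 0 or total <= 0.0:
        raise ValueError("need a non-empty, non-negative sample with positive sum")
    C, F, running = [0.0], [0.0], 0.0
    for k, x in enumerate(xs, start=1):
        running += x
        C.append(k / n)            # fraction of the population in the top k
        F.append(running / total)  # fraction of the wealth owned by the top k
    sigma = [f - c for f, c in zip(F, C)]
    return C, F, sigma


def gini_from_gintropy(C, sigma):
    """Twice the trapezoid-rule area under sigma as a function of C."""
    area = sum(0.5 * (sigma[i] + sigma[i - 1]) * (C[i] - C[i - 1])
               for i in range(1, len(C)))
    return 2.0 * area


if __name__ == "__main__":
    C, F, sigma = lorenz_gintropy([1, 2, 3, 4, 10])
    print(max(sigma))                    # largest gintropy on this sample
    print(gini_from_gintropy(C, sigma))  # Gini index of this sample
```

On such a sample, the gintropy vanishes at both ends of the Lorenz map and stays non-negative in between, in line with the properties listed above.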
3. Entropy from Gintropy

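It is important to consider a few simple cases for gintropy. First of all, a PDF allowing only a single value, such as $\rho(x) = \delta(x - x_0)$, leads to vanishing gintropy. Then, $\sigma = 0$ for all $\bar{C}$. This case is degenerate; the second derivative is also zero across the whole interval and there is no definite maximum. A few examples have been discussed in Ref. [29]. Here, we use the Tsallis–Pareto distribution, which also includes the Boltzmann–Gibbs exponential as a limiting case. The tail-cumulative function is given as a two-parameter set with a power-law tail and the proper normalization:

$$ \bar{C}(x) = (1 + a x)^{-b} \tag{12} $$

Here, a and b are positive. It follows a PDF,

$$ \rho(x) = a b \, (1 + a x)^{-b-1} \tag{13} $$

an expectation value of $\langle x \rangle = 1 / (a (b - 1))$, which is finite for $b > 1$, and finally a gintropy formula:

$$ \sigma(\bar{C}) = b \left( \bar{C}^{\,1 - 1/b} - \bar{C} \right) \tag{14} $$

Related to the more popular form, one uses $q = 1 - 1/b$ as a parameter and arrives at the q-gintropy formula:

$$ \sigma(\bar{C}) = \frac{\bar{C}^{\,q} - \bar{C}}{1 - q} \tag{15} $$

The $q \to 1$ limit of this formula is the Boltzmann–Gibbs–Shannon relation:

$$ \sigma(\bar{C}) = -\bar{C} \ln \bar{C} \tag{16} $$

The Gini index in the Tsallis–Pareto case is easily obtained as being

$$ G = \frac{b}{2b - 1} = \frac{1}{1 + q} \tag{17} $$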

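The Tsallis–Pareto results of this section can be cross-checked numerically. The sketch below assumes a tail-cumulative function of the form $(1 + a x)^{-b}$ with $b > 1$ and the identification $q = 1 - 1/b$; it integrates the quantile function over the cumulative population on a midpoint grid and compares the outcome with the q-gintropy form $(\bar{C}^q - \bar{C})/(1 - q)$. All function names and the parameter values $a = 2$, $b = 3$ are illustrative choices, not taken from the original work.

```python
def tsallis_pareto_quantile(c, a, b):
    """Value x at which the assumed tail-cumulative (1 + a*x)**(-b) equals c."""
    return (c ** (-1.0 / b) - 1.0) / a


def numeric_gintropy(a, b, n=100_000):
    """Gintropy on a grid in C, built from F(C) = (1/<x>) * integral_0^C x(C') dC'."""
    h = 1.0 / n
    xq = [tsallis_pareto_quantile((i + 0.5) * h, a, b) for i in range(n)]
    mean = sum(xq) * h  # <x> as the integral of the quantile function over C
    C, sigma, wealth = [], [], 0.0
    for i, x in enumerate(xq):
        wealth += x * h
        c = (i + 1) * h
        C.append(c)
        sigma.append(wealth / mean - c)  # sigma = F - C
    return C, sigma, mean


def q_gintropy(c, q):
    """Closed q-gintropy form (C**q - C) / (1 - q), valid for q != 1."""
    return (c ** q - c) / (1.0 - q)


if __name__ == "__main__":
    a, b = 2.0, 3.0
    q = 1.0 - 1.0 / b
    C, sigma, mean = numeric_gintropy(a, b)
    gini = 2.0 * sum(sigma) / len(sigma)
    worst = max(abs(s - q_gintropy(c, q)) for c, s in zip(C, sigma))
    print(mean, 1.0 / (a * (b - 1.0)))  # numerical vs analytic average
    print(gini, 1.0 / (1.0 + q))        # numerical vs analytic Gini index
    print(worst)                        # largest deviation from the q-form
```

Working in the variable $\bar{C}$ keeps the integration range finite, which avoids truncating the power-law tail in x.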
The formal analogy between the expressions of gintropy in terms of the tail-cumulative data population on the one hand and the entropy density in terms of the PDF on the other hand is obvious (cf. Equation (15)). Moreover, the general form of trace entropy is given as

$$ S = \int_0^\infty s(\rho(x)) \, dx \tag{18} $$

while the Gini index is obtained according to our previous discussion above as

$$ G = 2 \int_0^1 \sigma(\bar{C}) \, d\bar{C} = 2 \int_0^\infty \sigma(\bar{C}(x)) \, \rho(x) \, dx \tag{19} $$

Here, we utilize the fact that

$$ \int_0^1 f(\bar{C}) \, d\bar{C} = \int_0^\infty f(\bar{C}(x)) \, \rho(x) \, dx \tag{20} $$

for an arbitrary integrand, $f$.

Despite the intriguing analogies, we do not have a quantity that would be equivalent to the total entropy in social and econophysics. On the other hand, the nontrivial identification, $s(\rho) = 2 \rho \, \sigma(\bar{C})$, would make the Gini index equal to the entropy, $G = S$. Since $\rho$ is the negative derivative of the cumulative function $\bar{C}$, the above correspondence is a complex differential equation for $\bar{C}(x)$. It may therefore be valid only for a single PDF, $\rho(x)$, namely the solution of the above implicit differential equation. In conclusion, gintropy cannot be replaced by entropy for a general PDF.

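Let us review, briefly, how to obtain the general trace-form entropy once the gintropy, $\sigma(\bar{C})$, is known. To begin with, one uses a general function, $s(\rho)$, in the definition of entropy with the required non-negativity and convexity properties. Due to its relation to the fiducial PDF, $\rho(x)$, and using Equation (18), we obtain

$$ S = \int_0^1 \frac{s(\rho)}{\rho} \, d\bar{C} \tag{21} $$

with the short-hand notation

$$ \rho = -\frac{1}{\langle x \rangle \, \sigma''(\bar{C})}, \qquad \sigma''(\bar{C}) = \frac{d^2\sigma}{d\bar{C}^2} \tag{22} $$

In particular, the Boltzmann entropy becomes

$$ S_B = -\int_0^\infty \rho \ln \rho \, dx = \int_0^1 \ln\left( -\langle x \rangle \, \sigma''(\bar{C}) \right) d\bar{C} \tag{23} $$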

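As a plausibility check of expressing the Boltzmann entropy through gintropy, the sketch below treats the exponential PDF with mean $\langle x \rangle$. It assumes the relation $\rho = -1/(\langle x \rangle \sigma'')$, which follows from the tail-cumulative definitions, so that $S_B = \int_0^1 \ln(-\langle x \rangle \sigma'') \, d\bar{C}$, and it uses the gintropy $\sigma = -\bar{C} \ln \bar{C}$ of the exponential case. The function names, the finite-difference second derivative, and the grid size are our illustrative choices; the expected closed-form value is $1 + \ln \langle x \rangle$.

```python
import math


def exponential_gintropy(c):
    """Gintropy of the exponential PDF as a function of the cumulative population."""
    return -c * math.log(c) if c > 0.0 else 0.0


def boltzmann_entropy_from_gintropy(sigma, mean, n=2000):
    """Midpoint-rule integral of ln(-<x> * sigma'') over C in (0, 1)."""
    h = 1.0 / n
    d = h / 4.0  # finite-difference step, small enough to stay inside (0, 1)
    total = 0.0
    for i in range(n):
        c = (i + 0.5) * h
        # Central second difference approximating d^2 sigma / dC^2 (negative here).
        second = (sigma(c + d) - 2.0 * sigma(c) + sigma(c - d)) / (d * d)
        total += math.log(-mean * second) * h
    return total


if __name__ == "__main__":
    mean = 2.0
    print(boltzmann_entropy_from_gintropy(exponential_gintropy, mean))
    print(1.0 + math.log(mean))  # closed form for the exponential PDF
```

The integrand has only a logarithmic singularity at $\bar{C} \to 0$, so a plain midpoint rule is sufficient for this illustration.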
4. Dynamics of the Gini Index

After the introduction of gintropy, the authors of [32] provided several examples for different socioeconomic systems and compared the inequality measure G for their wealth distribution. Here, we supplement this static picture with a dynamic one. We demonstrate, using the example of the local growth and global reset (LGGR) model [33,34], that the Gini index mostly increases monotonically (apart from a short overshoot period, probably of numerical origin) as the wealth distribution tends towards the stationary Tsallis–Pareto distribution. This behavior of the Gini index is not yet proven for the general case, in contrast to the entropy, cf. [32].

As in [32], the society members may have discrete units of wealth. We assume that these members of the society acquire another unit of wealth at a rate that is linear in their actual wealth value (the rich-get-richer effect). We also incorporate a constant reset rate as in [32].

The evolution equation for the probability density function of the wealth distribution in the LGGR model is applied here to a binned wealth representation. In this case, the evolution equation, denoting the time derivative with an overdot, reads

$$ \dot{P}_k = \mu_{k-1} P_{k-1} - (\mu_k + \gamma_k) P_k \tag{24} $$

where $P_k(t)$ is the actual fraction of people in the wealth slot around k. In other words, one becomes richer with a state-dependent rate, $\mu_k$, while there is a reset mechanism to zero wealth with the rate $\gamma_k$. The reset represents not only a ruin probability rate, but also any type of exit of people, receiving the income k, from the studied population (e.g., resorting to pensions or the decay of hadrons containing energy k). The boundary condition at $k = 0$ ensures that $\sum_k P_k = 1$ remains constant in time. This requirement results in

$$ \dot{P}_0 = \langle \gamma \rangle - (\mu_0 + \gamma_0) P_0 \tag{25} $$

with $\langle \gamma \rangle = \sum_k \gamma_k P_k$.

We solve Equation (24) as a time recursion problem, with the linear $\mu_k$ and the constant $\gamma_k$ parameter functions. In the numerical simulation, we discretize the possible values of k and use them as an integer index. Starting from a theoretical society where everybody has zero wealth, $P_k(0)$ is represented by a Kronecker delta, $\delta_{k,0}$, delivering a vanishing Gini index, $G = 0$. Moreover, the whole Lorenz curve shrinks in this case to the diagonal and correspondingly the gintropy vanishes everywhere as a function of either k or $\bar{C}$.

The growth rate $\mu_k$, which is linear in k, is a common choice when dealing with the distribution of network hubs’ connection numbers and is called a preferential rate [35,36,37,38]. Obviously, the linear assumption is the mathematically simplest among all possible models. Nevertheless, further assumptions, such as a quadratic one, can also be made. The linear preference in the growth rate, utilized in the present discussion, together with a constant reset rate, has the Tsallis–Pareto distribution as the stationary PDF in the LGGR model.
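A growth-and-reset master equation of the kind described above can be sketched as a simple time recursion. The code below is an illustration under our own assumptions, not the authors' simulation: it uses an explicit Euler step, a growth rate `mu[k] = k + 1`, a constant reset rate `gamma = 3`, 200 wealth bins, and a growth rate set to zero in the last bin so that the total probability is conserved on the finite grid. The Gini index is evaluated from the tail-cumulative fractions of the discrete distribution.

```python
def lggr_step(P, mu, gamma, dt):
    """One explicit Euler step: growth k -> k+1 at rate mu[k], reset to k = 0."""
    new = P[:]
    reset_flux = gamma * sum(P)  # total inflow into k = 0 for a constant reset rate
    new[0] += dt * (reset_flux - (mu[0] + gamma) * P[0])
    for k in range(1, len(P)):
        new[k] += dt * (mu[k - 1] * P[k - 1] - (mu[k] + gamma) * P[k])
    return new


def gini_discrete(P):
    """Gini index of a distribution P[k] over k = 0, 1, ..., via the Lorenz map."""
    mean = sum(k * p for k, p in enumerate(P))
    if mean <= 0.0:
        return 0.0  # everybody has zero wealth: no inequality
    C = F = sigma_prev = area = 0.0
    for k in range(len(P) - 1, -1, -1):  # accumulate from the rich tail downwards
        C += P[k]
        F += k * P[k] / mean
        sigma = F - C
        area += 0.5 * (sigma + sigma_prev) * P[k]
        sigma_prev = sigma
    return 2.0 * area


def simulate(K=200, gamma=3.0, dt=0.002, steps=2500, every=250):
    """Evolve from P_k = delta_{k,0}; return the final P and sampled (t, Gini) pairs."""
    mu = [float(k + 1) for k in range(K)]  # assumed linear growth rate
    mu[-1] = 0.0                           # closed upper boundary of the grid
    P = [0.0] * K
    P[0] = 1.0
    history = [(0.0, gini_discrete(P))]
    for n in range(1, steps + 1):
        P = lggr_step(P, mu, gamma, dt)
        if n % every == 0:
            history.append((n * dt, gini_discrete(P)))
    return P, history


if __name__ == "__main__":
    P, history = simulate()
    for t, g in history:
        print(f"t = {t:4.2f}   G = {g:.4f}")
```

The time step is chosen so that `dt * (mu[k] + gamma)` stays below one in every bin, which keeps the Euler update non-negative; other rates, grid sizes, or integrators may behave differently in detail.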

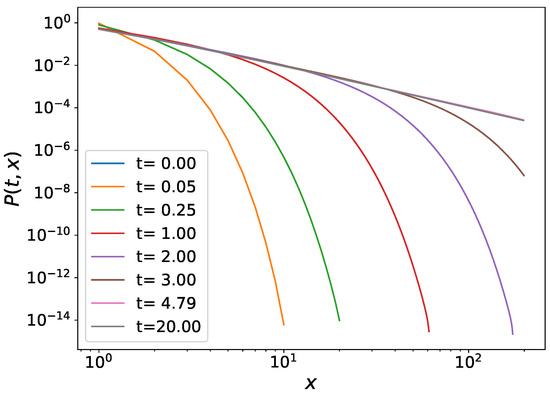

We also observe in our numerical simulations that the Tsallis–Pareto power-law tailed wealth distribution develops, as was already anticipated in Ref. [32], cf. Figure 1. Furthermore, in Ref. [39], analytical expressions were given for the evolution of a general distribution for the cases with constant rates and for the presently discussed case of a linear growth rate with a constant reset rate.

Figure 1. The time evolution of the wealth distribution starting from a society in which everybody has zero wealth.

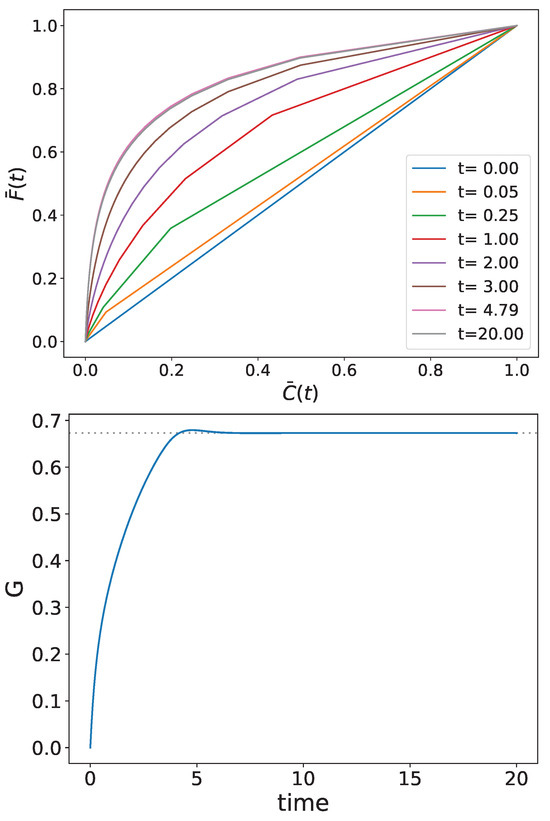

We follow the time evolution of the Lorenz curve, $\bar{F}$ vs. $\bar{C}$, as well as the time-dependent Gini index. The results of the numerical calculation are shown in the upper and lower panels of Figure 2, respectively.

Figure 2. Time evolution of the Lorenz curve (upper panel) and the Gini index (lower panel). The steady dotted line in the lower panel corresponds to the final stationary Gini index.

As can be observed, the wealth inequality grows in this theoretical example until it reaches its stationary value. The apparent slight overshoot at mid-time may be a numerical consequence of the time discretization. Recent, as yet unpublished, analytical calculations of the time evolution of the Gini index in the particular case studied numerically in the present paper indicate that $G(t)$ would monotonically increase from zero to its stationary value. These somewhat laborious calculations will be published in a separate paper. On the other hand, since the Gini index is not an entropy underlying the second law of thermodynamics, the issue of the monotonicity of the Gini index’s evolution in the general case calls for further investigation.

5. Summary

In summary, the quantity of gintropy, the difference between two tail-cumulative integrals of any PDF defined on non-negative values, features a formal dependence on the cumulative data population fraction having the form of various entropy formulas in terms of the original PDF [32]. In this paper, the particular form of Tsallis entropy was discussed in some detail.

The Gini index, used in economic studies to describe income and wealth inequality in societies, is an integral of the gintropy as a function of the cumulative data population fraction. However, the correspondence between the Gini index and the total entropy does not hold in general, but only for a special PDF, given the trace entropy formula specification. Even without this correspondence, the gintropic view of known entropy formulas can be obtained by expressing the PDF with the help of the gintropy’s second derivative with respect to the cumulative data population fraction and the average value of the base variable.

Time evolution in the particular but widespread case of a linear growth rate paired with a uniform reset rate was obtained numerically to demonstrate the evolution of the Gini index in time. A slight overshoot beyond its stationary value has been observed, so the Gini index does not appear to behave similarly to entropy in this particular case. However, to obtain a final conclusion, the scaling with the finite index space size should be studied.

Author Contributions

The concept of gintropy was developed by T.S.B. and further developed by T.S.B. and A.T.; the numerical calculations were performed by A.J.; the original manuscript was first produced by T.S.B.; validation and formula control were performed by A.T. and A.J. All authors have read and agreed to the published version of the manuscript.

Funding

T.S.B. was partially funded by UEFISCDI, Romania, grant Nr PN-III-P4-ID-PCE-2020-0647.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

One of the authors, T.S.B., thanks Tsallis for his friendship and his guiding collegiality during the last several decades, after they first met at a Sigma Phi conference in Crete, Greece, around 2005.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Tsallis, C. Possible generalization of Boltzmann–Gibbs statistics. J. Stat. Phys. 1988, 52, 479.

2. Tsallis, C. Introduction to Nonextensive Statistical Mechanics: Approaching a Complex World; Springer Science+Business Media, LLC.: New York, NY, USA, 2009.

3. Tsallis, C. Nonadditive entropy: The concept and its use. EPJ A 2009, 40, 257.

4. Tsekouras, G.A.; Tsallis, C. Generalized entropy arising from a distribution of q indices. Phys. Rev. E 2005, 71, 046144.

5. Tsallis, C.; Gell-Mann, M.; Sato, Y. Asymptotically scale-invariant occupancy of phase space makes the entropy Sq extensive. Proc. Nat. Acad. Sci. USA 2005, 102, 15377.

6. Abe, S. Axioms and uniqueness theorem for Tsallis entropy. Phys. Lett. A 2000, 271, 74.

7. Abe, S.; Rajagopal, A.K. Revisiting Disorder and Tsallis Statistics. Science 2003, 300, 249.

8. Rényi, A. On the measures of information and entropy. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 20 June–30 July 1961; pp. 547–561.

9. Shannon, C.E. The Mathematical Theory of Communication; University of Illinois Press: Urbana, IL, USA, 1949.

10. Zapirov, R.G. Novie Meri i Metodi v Teorii Informacii; Kazan State Technological University: Kazan, Russia, 2005.

11. Havrda, J.; Charvát, F. Quantification method of classification processes. Concept of structural α-entropy. Kybernetika 1967, 3, 30.

12. Nielsen, F.; Nock, R. A closed-form expression for the Sharma–Mittal entropy of exponential families. J. Phys. A 2012, 45, 032003.

13. Biro, T.S.; Purcsel, G. Nonextensive Boltzmann Equation and Hadronization. Phys. Rev. Lett. 2005, 95, 162302.

14. Thurner, S.; Hanel, R.; Corominas-Murtra, B. The three faces of entropy for complex systems: information, thermodynamics and maxent principle. Phys. Rev. E 2017, 96, 032124.

15. Pham, T.M.; Kondor, I.; Hanel, R.; Thurner, S. The effect of social balance on social fragmentation. J. R. Soc. Interface 2020, 17, 20200752.

16. Beck, C.; Cohen, E.D.G. Superstatistics. Physica A 2003, 322, 267–275.

17. Wilk, G.; Wlodarczyk, Z. Power law tails in elementary and heavy ion collisions: A story of fluctuations and nonextensivity? EPJ A 2009, 40, 299–312.

18. Wilk, G.; Wlodarczyk, Z. Consequences of temperature fluctuations in observables measured in high-energy collisions. EPJ A 2012, 48, 161.

19. Deppman, A. Systematic analysis of pT-distributions in p+p collisions. EPJ A 2013, 49, 17.

20. Deppman, A.; Megias, E.; Menezes, D.P. Fractal Structures of Yang-Mills Fields and Non-Extensive Statistics: Applications to High Energy Physics. Physics 2020, 2, 455–480.

21. Tawfik, A.N. Axiomatic nonextensive statistics at NICA energies. EPJ A 2016, 52, 253.

22. Pareto, V. Manual of Political Economy: A Critical and Variorum Edition; Number 9780199607952; Montesano, A., Zanni, A., Bruni, L., Chipman, J.S., McLure, M., Eds.; Oxford University Press: Oxford, UK, 2014; 664p. Available online: https://ideas.repec.org/b/oxp/obooks/9780199607952.html (accessed on 1 January 2024).

23. Lomax, K.S. Business failures. Another example of the analysis of failure data. J. Am. Stat. Assoc. 1954, 49, 847.

24. Newman, M.E.J. Power laws, Pareto distributions and Zipf’s law. Contemp. Phys. 2005, 46, 323.

25. Rootzén, H.; Tajvidi, N. Multivariate generalized Pareto distributions. Bernoulli 2006, 12, 917.

26. Gini, C. Variabilità e Mutabilità. Contributo allo Studio delle Distribuzioni e delle Relazioni Statistiche; C. Cuppini: Bologna, Italy, 1912.

27. Gini, C. On the Measure of Concentration with Special Reference to Income and Statistics; General Series No 208; Colorado College Publication: Denver, CO, USA, 1936; Volume 37.

28. Dorfman, R. A Formula for the Gini Coefficient. Rev. Econ. Stat. 1979, 61, 146.

29. Biró, T.S.; Néda, Z. Gintropy: A Gini Index Based Generalization of Entropy. Entropy 2020, 22, 879.

30. Biró, T.S.; Telcs, A.; Józsa, M.; Néda, Z. Gintropic scaling of scientometric indexes. Physica A 2023, 618, 128717.

31. Lorenz, M.O. Methods of measuring the concentration of wealth. Publ. Am. Stat. Assoc. 1905, 9, 209.

32. Biró, T.S.; Néda, Z.; Telcs, A. Entropic Divergence and Entropy Related to Nonlinear Master Equations. Entropy 2019, 21, 993.

33. Biró, T.S.; Néda, Z. Unidirectional random growth with resetting. Physica A 2018, 449, 335.

34. Biró, T.S.; Néda, Z. Thermodynamical Aspects of the LGGR Approach for Hadron Energy Spectra. Symmetry 2022, 14, 1807.

35. Irwin, J.O. The Generalized Waring Distribution Applied to Accident Theory. J. R. Stat. Soc. A 1968, 131, 205–225.

36. Thurner, S.; Kyriakopoulos, F.; Tsallis, C. Unified model for network dynamics exhibiting nonextensive statistics. Phys. Rev. E 2007, 76, 036111.

37. Krapivsky, P.L.; Redner, S.; Leyvraz, F. Connectivity of Growing Random Networks. Phys. Rev. Lett. 2000, 85, 4629–4632.

38. Krapivsky, P.L.; Rodgers, G.J.; Redner, S. Degree Distributions of Growing Networks. Phys. Rev. Lett. 2001, 86, 5401–5404.

39. Biró, T.S.; Csillag, L.; Néda, Z. Transient dynamics in the random growth and reset model. Entropy 2021, 23, 306.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).