FBANet: Transfer Learning for Depression Recognition Using a Feature-Enhanced Bi-Level Attention Network

Abstract

1. Introduction

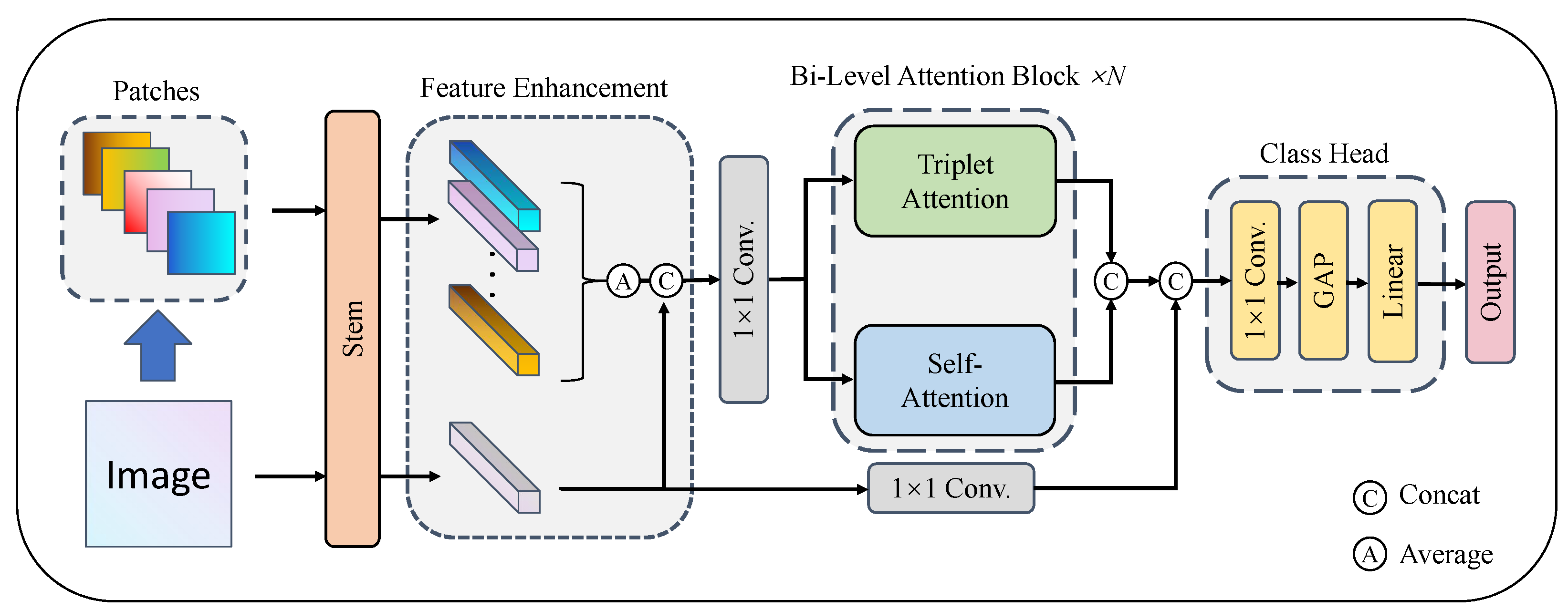

- A deep learning-based, one-stage depression recognition method for House-Tree-Person (HTP) sketches, FBANet, is proposed for the first time. FBANet comprises three key modules: the Feature Enhancement module, which strengthens the network’s ability to capture features; the Bi-Level Attention module, which captures both contextual and spatial information; and the Classification Head module, which produces the classification result. After simple preprocessing, images are fed directly into FBANet to obtain high-accuracy recognition results, which makes it a promising auxiliary diagnostic method for depression.

- Because the HTP sketch dataset is small, transfer learning is used to improve the model’s accuracy and reduce the risk of overfitting. Specifically, the model is pre-trained on a large-scale sketch dataset and then fine-tuned on the HTP sketch dataset. In the experiments, the proposed model outperforms the compared baselines.
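The pre-train-then-fine-tune workflow in the second contribution can be illustrated with a minimal, framework-free sketch. It shows only the weight hand-off between the two stages: backbone parameters are copied from the pre-trained model, while the classification head is re-initialised for the two HTP classes (depression and non-depression). The parameter names (`backbone.weight`, `head.weight`, `head.bias`), the feature width, and the number of pre-training classes are hypothetical and are not taken from the paper.

```python
import random


def init_model(num_classes, feat_dim=4, seed=0):
    """Build a toy parameter dict: one 'backbone' matrix plus a linear 'head'."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

    return {
        "backbone.weight": matrix(feat_dim, feat_dim),
        "head.weight": matrix(num_classes, feat_dim),
        "head.bias": [0.0] * num_classes,
    }


def transfer_weights(pretrained, num_target_classes, seed=1):
    """Copy every non-head parameter; keep a freshly initialised head."""
    feat_dim = len(pretrained["backbone.weight"])
    target = init_model(num_target_classes, feat_dim=feat_dim, seed=seed)
    for name, value in pretrained.items():
        if not name.startswith("head."):
            target[name] = [row[:] for row in value]  # copy, do not alias
    return target


# Hypothetical: 10 sketch categories in the pre-training stage.
pretrained = init_model(num_classes=10, seed=0)
# Two target classes for the HTP task: non-depression and depression.
finetune_init = transfer_weights(pretrained, num_target_classes=2)

print(len(pretrained["head.weight"]), len(finetune_init["head.weight"]))
```

In a real framework the same idea is usually expressed by loading a checkpoint while skipping the head parameters, then training all layers (or only the head) on the small target dataset.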

2. Related Work

2.1. Traditional Depression Diagnosis

2.2. Computer Diagnosis of Depression Based on Physiological Signal

2.3. Analysis of Depression Recognition Based on HTP Sketch

2.4. Image Classification Models and Attention Mechanisms

2.5. Transfer Learning

3. Methodology

3.1. Feature Enhancement

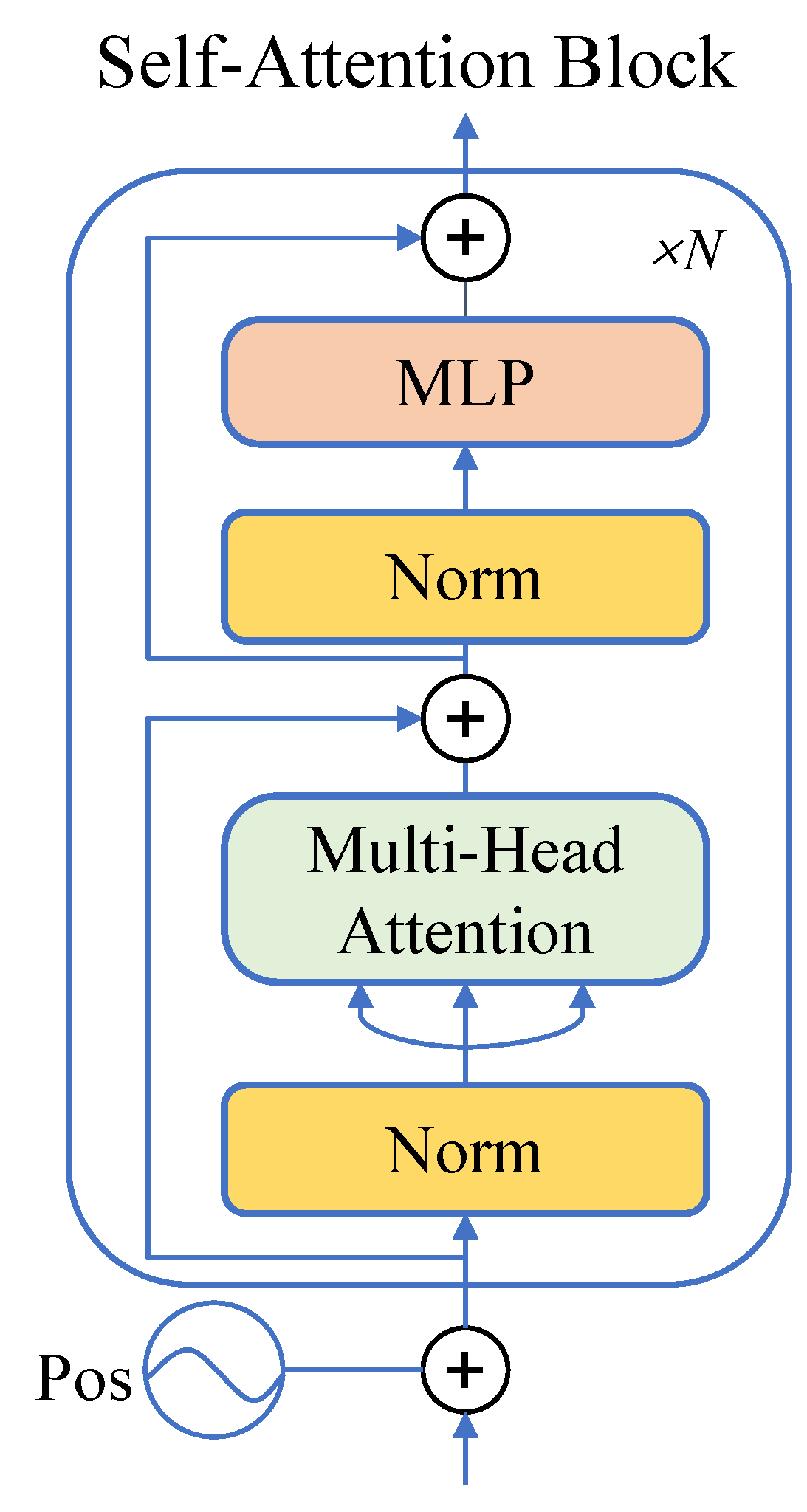

3.2. Self-Attention

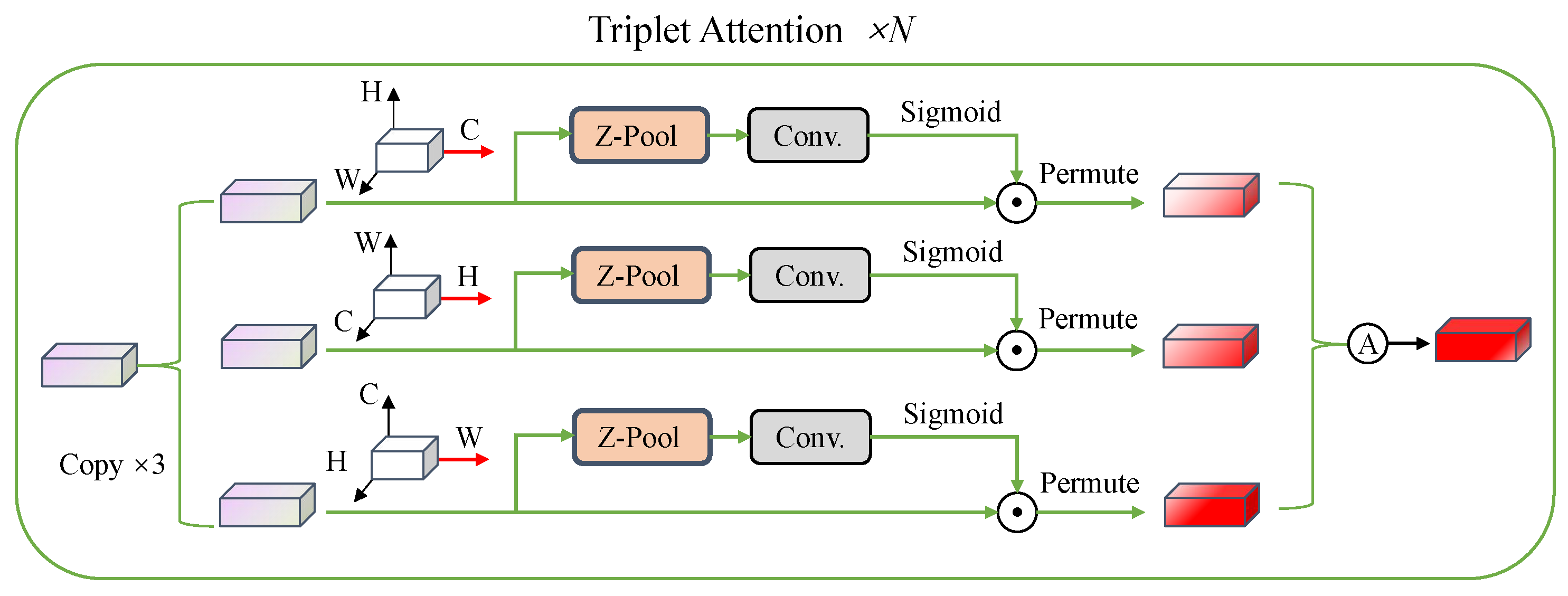

3.3. Triplet Attention

3.4. Bi-Level Attention Fusion and Classification Head

4. Experiments

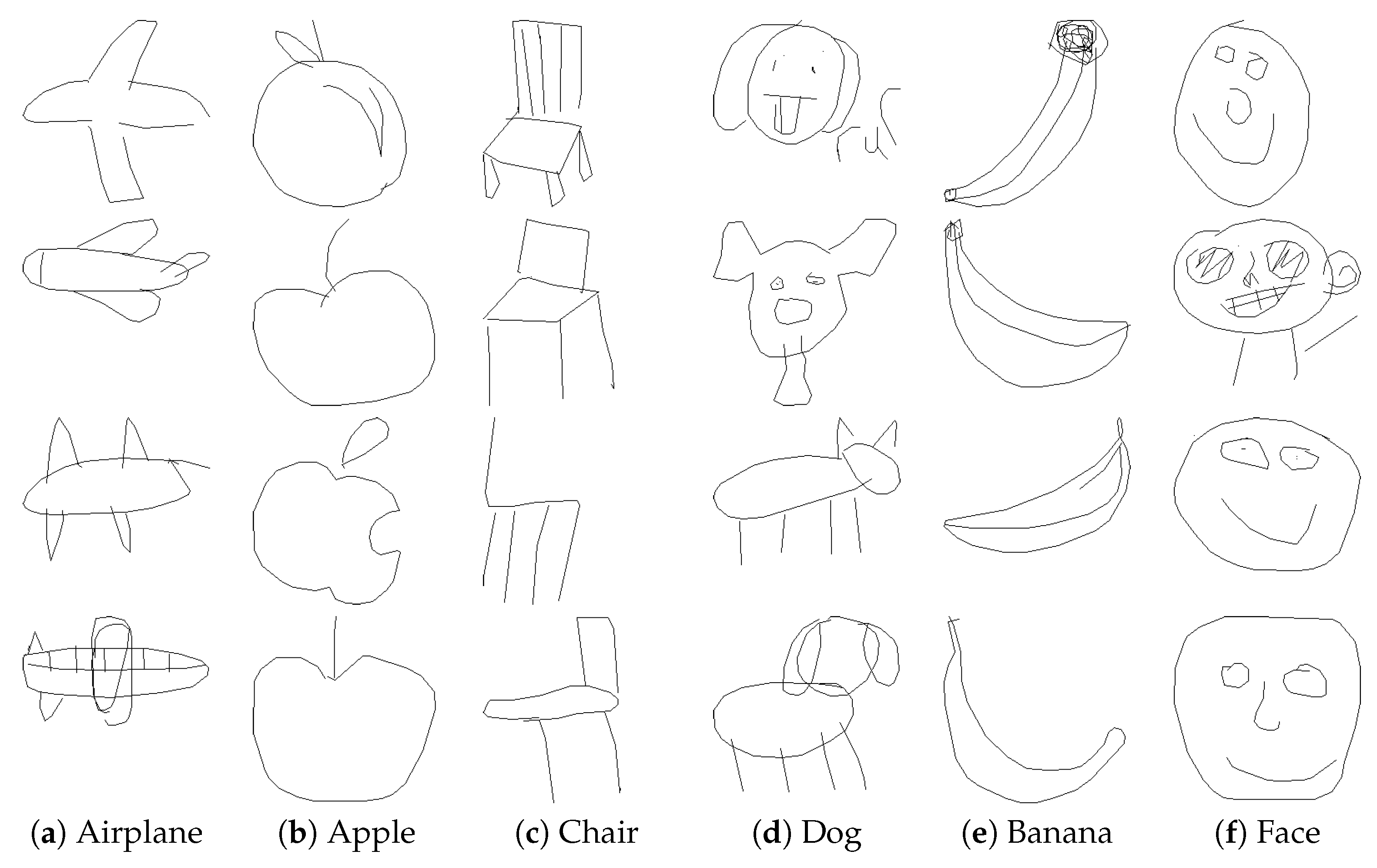

4.1. Datasets and Settings

4.1.1. Datasets

4.1.2. Implementation Details

4.2. Metrics

4.3. Pre-Training

4.4. Fine-Tuning

4.5. Ablation Study

4.6. Limitations

- The FBANet models recognize the non-depression category less accurately than the depression category. As shown in Figure 6, the classification accuracy for the two categories is almost equal only in confusion matrices (c) and (f); the remaining confusion matrices show noticeably higher accuracy for depression. Future research will therefore focus on improving accuracy on the non-depression category.

- The FBANet models have a large number of parameters and high computational complexity, as Table 4 shows when FBA-Small-5 is compared with ResNet50, Inceptionv3, and EfficientNetb5, and FBA-Base-5 with ViT, Hybrid ViT, and Swin. Future research will therefore explore lightweight models for depression classification.
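The per-category accuracy discussed in the first limitation is the per-class recall read off a confusion matrix. The sketch below shows that computation; the counts are hypothetical and are not the values from Figure 6.

```python
def per_class_recall(confusion, labels):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    recalls = {}
    for i, label in enumerate(labels):
        row_total = sum(confusion[i])
        recalls[label] = confusion[i][i] / row_total if row_total else 0.0
    return recalls


labels = ["non-depression", "depression"]

# Hypothetical counts for illustration only (NOT taken from Figure 6).
confusion = [
    [40, 10],  # true non-depression: 40 correct, 10 predicted as depression
    [2, 48],   # true depression: 2 predicted as non-depression, 48 correct
]

recalls = per_class_recall(confusion, labels)
gap = recalls["depression"] - recalls["non-depression"]

for label in labels:
    print(f"{label}: {recalls[label]:.2%}")
print(f"gap: {gap:.2%}")
```

A positive gap corresponds to the pattern described above, where depression is recognized more reliably than non-depression.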

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Arbanas, G. Diagnostic and Statistical Manual of Mental Disorders (DSM-5). Alcohol. Psychiatry Res. 2015, 51, 61–64.

- Zimmerman, M.; Coryell, W.H. The Inventory to Diagnose Depression (IDD): A self-report scale to diagnose major depressive disorder. J. Consult. Clin. Psychol. 1987, 55, 55–59.

- WHO. Depressive Disorder (Depression). 2023. Available online: https://www.who.int/news-room/fact-sheets/detail/depression (accessed on 2 July 2023).

- Depression and Other Common Mental Disorders: Global Health Estimates; World Health Organization (WHO): Geneva, Switzerland, 2017.

- Hamilton, M. A rating scale for depression. J. Neurol. Neurosurg. Psychiatry 1960, 23, 56–62.

- Zung, W.W.K. A self-rating depression scale. Arch. Gen. Psychiatry 1965, 12, 63–70.

- Buck, J.N. The H-T-P test. J. Clin. Psychol. 1948, 4, 151–159.

- Burns, R.C. Kinetic House-Tree-Person Drawings: K-H-T-P: An Interpretative Manual; Brunner/Mazel: Levittown, PA, USA, 1987.

- Oster, G.D. Using Drawings in Assessment and Therapy; Routledge: New York, NY, USA, 2004.

- Kong, X.; Yao, Y.; Wang, C.; Wang, Y.; Teng, J.; Qi, X. Automatic Identification of Depression Using Facial Images with Deep Convolutional Neural Network. Med. Sci. Monit. Int. Med. J. Exp. Clin. Res. 2022, 28, e936409.

- Khan, W.; Crockett, K.A.; O’Shea, J.D.; Hussain, A.J.; Khan, B. Deception in the eyes of deceiver: A computer vision and machine learning based automated deception detection. Expert Syst. Appl. 2020, 169, 114341.

- Wang, B.; Kang, Y.; Huo, D.; Feng, G.; Zhang, J.; Li, J. EEG diagnosis of depression based on multi-channel data fusion and clipping augmentation and convolutional neural network. Front. Physiol. 2022, 13, 1029298.

- Zang, X.; Li, B.; Zhao, L.; Yan, D.; Yang, L. End-to-End Depression Recognition Based on a One-Dimensional Convolution Neural Network Model Using Two-Lead ECG Signal. J. Med. Biol. Eng. 2022, 42, 225–233.

- Lu, X.; Shi, D.; Liu, Y.; Yuan, J. Speech depression recognition based on attentional residual network. Front. Biosci. 2021, 26, 1746–1759.

- Cortes, C.; Vapnik, V.N. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Pan, T.; Zhao, X.; Liu, B.; Liu, W. Automated Drawing Psychoanalysis via House-Tree-Person Test. In Proceedings of the 2022 IEEE 34th International Conference on Tools with Artificial Intelligence (ICTAI), Macao, China, 31 October–2 November 2022; pp. 1120–1125.

- Yang, G.; Zhao, L.; Sheng, L. Association of Synthetic House-Tree-Person Drawing Test and Depression in Cancer Patients. BioMed Res. Int. 2019, 2019, 1478634.

- Yu, Y.; Ming, C.Y.; Yue, M.; Li, J.; Ling, L. House-Tree-Person drawing therapy as an intervention for prisoners’ prerelease anxiety. Soc. Behav. Personal. 2016, 44, 987–1004.

- Polatajko, H.J.; Kaiserman, E. House-Tree-Person Projective Technique: A Validation of its Use in Occupational Therapy. Can. J. Occup. Ther. 1986, 53, 197–207.

- Zhang, J.; Yu, Y.; Barra, V.; Ruan, X.; Chen, Y.; Cai, B. Feasibility study on using house-tree-person drawings for automatic analysis of depression. Comput. Methods Biomech. Biomed. Eng. 2023, 1–12.

- Beck, A.T.; Rush, A.J.; Shaw, B.F.; Emery, G.D. Kognitive Therapie der Depression; Beltz: Weinheim, Germany, 2010.

- Derogatis, L.R.; Lipman, R.S.; Covi, L. SCL-90: An outpatient psychiatric rating scale—Preliminary report. Psychopharmacol. Bull. 1973, 9, 13–28.

- Hamilton, M. The assessment of anxiety states by rating. Br. J. Med. Psychol. 1959, 32, 50–55.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2012, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zhou, X.; Jin, K.; Shang, Y.; Guo, G. Visually Interpretable Representation Learning for Depression Recognition from Facial Images. IEEE Trans. Affect. Comput. 2020, 11, 542–552.

- Deng, X.; Fan, X.; Lv, X.; Sun, K. SparNet: A Convolutional Neural Network for EEG Space-Frequency Feature Learning and Depression Discrimination. Front. Neuroinform. 2022, 16, 914823.

- Zhang, F.; Wang, M.; Qin, J.; Zhao, Y.; Sun, X.; Wen, W. Depression Recognition Based on Electrocardiogram. In Proceedings of the 2023 8th International Conference on Computer and Communication Systems (ICCCS), Guangzhou, China, 21–23 April 2023; pp. 1–5.

- Fisher, J.P.; Zera, T.; Paton, J.F. Chapter 10—Respiratory–cardiovascular interactions. In Respiratory Neurobiology; Chen, R., Guyenet, P.G., Eds.; Handbook of Clinical Neurology; Elsevier: Amsterdam, The Netherlands, 2022; Volume 188, pp. 279–308.

- Cover, T.M.; Hart, P.E. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Quinlan, J.R. Induction of Decision Trees. Mach. Learn. 1986, 1, 81–106.

- Davis, S.; Mermelstein, P. Comparison of Parametric Representations for Monosyllabic Word Recognition in Continuously Spoken Sentences. IEEE Trans. Acoust. Speech Signal Process. 1980, 28, 357–366.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Sardari, S.; Nakisa, B.; Rastgoo, M.N.; Eklund, P.W. Audio based depression detection using Convolutional Autoencoder. Expert Syst. Appl. 2021, 189, 116076.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Kroenke, K.; Spitzer, R.L.; Williams, J.B. The PHQ-9: Validity of a brief depression severity measure. J. Gen. Intern. Med. 2001, 16, 606–613.

- Li, C.Y.; Chen, T.J.; Helfrich, C.A.; Pan, A.W. The Development of a Scoring System for the Kinetic House-Tree-Person Drawing Test. Hong Kong J. Occup. Ther. 2011, 21, 72–79.

- Hu, X.; Chen, H.; Liu, J.; Yang, C.; Chen, J. Application of the HTP test in junior students from earthquake-stricken area. Chin. Med. Guid. 2015, 12, 79–82.

- Yan, H.; Yu, H.; Chen, J. Application of the House-tree-person Test in the Depressive State Investigation. Chin. J. Clin. Psychol. 2014, 22, 842–848.

- Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- LeCun, Y.; Boser, B.E.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.E.; Jackel, L.D. Handwritten Digit Recognition with a Back-Propagation Network. Adv. Neural Inf. Process. Syst. 1989, 2, 396–404.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.E.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.G.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 2011–2023.

- Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to Attend: Convolutional Triplet Attention Module. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 3138–3147.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. Proc. IEEE 2019, 109, 43–76.

- Donahue, J.; Jia, Y.; Vinyals, O.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. arXiv 2013, arXiv:1310.1531.

- Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Learning and Transferring Mid-level Image Representations Using Convolutional Neural Networks. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1717–1724.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.M.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Shehada, D.; Turky, A.M.; Khan, W.; Khan, B.; Hussain, A.J. A Lightweight Facial Emotion Recognition System Using Partial Transfer Learning for Visually Impaired People. IEEE Access 2023, 11, 36961–36969.

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.C.; Mirza, M.; Hamner, B.; Cukierski, W.J.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. Neural Netw. Off. J. Int. Neural Netw. Soc. 2013, 64, 59–63.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.M.; Ambadar, Z.; Matthews, I. The Extended Cohn-Kanade Dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.J.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

- Apostolopoulos, I.D.; Bessiana, T. COVID-19: Automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020, 43, 635–640.

- Xu, P.; Joshi, C.K.; Bresson, X. Multigraph Transformer for Free-Hand Sketch Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2019, 33, 5150–5161.

- Google. Quick, Draw! 2016. Available online: https://quickdraw.withgoogle.com/data (accessed on 7 July 2023).

- Buslaev, A.V.; Parinov, A.; Khvedchenya, E.; Iglovikov, V.I.; Kalinin, A.A. Albumentations: Fast and flexible image augmentations. Information 2020, 11, 125.

- Dementyiev, V.E.; Andriyanov, N.A.; Vasilyiev, K.K. Use of Images Augmentation and Implementation of Doubly Stochastic Models for Improving Accuracy of Recognition Algorithms Based on Convolutional Neural Networks. In Proceedings of the 2020 Systems of Signal Synchronization, Generating and Processing in Telecommunications (SYNCHROINFO), Svetlogorsk, Russia, 1–3 July 2020; pp. 1–4.

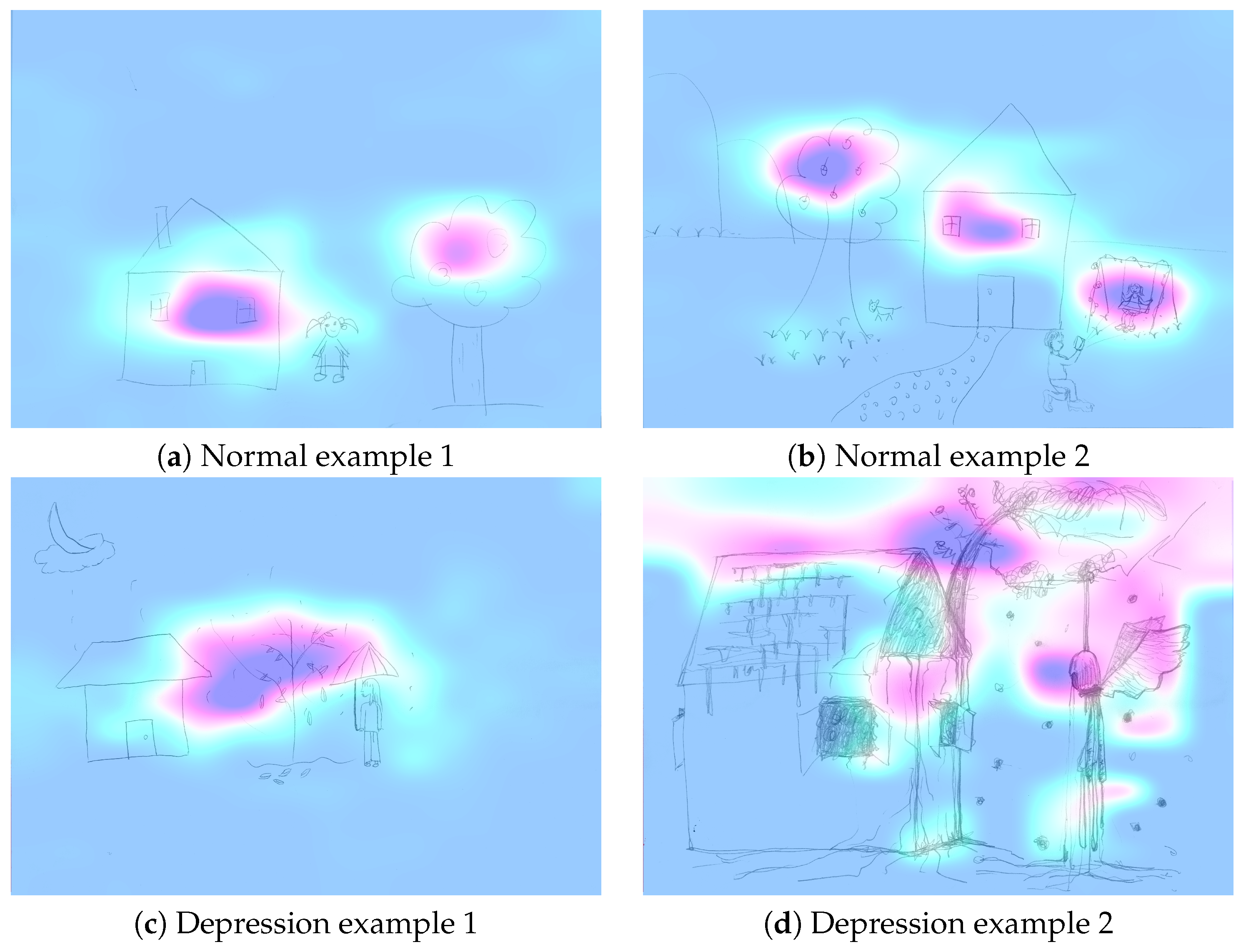

- Selvaraju, R.R.; Das, A.; Vedantam, R.; Cogswell, M.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2016, 128, 336–359.

| Kind | Name | Description | Examples | Scale of Scores |
|---|---|---|---|---|
| Self-Rating Scale | Self-Rating Depression Scale (SDS) [6] | The SDS contains 20 statements, each with 4 different degrees of answer, 0 (rarely), 1 (sometimes), 2 (often), 3 (almost always), corresponding to a score of 1, 2, 3, and 4. | 1. I feel sad or depressed. 2. I feel a loss of interest or fun. 3. I feel anxious or scared. | 0–52: Normal. 53–62: Mild depression. 63–72: Moderate depression. 73–80: Severe depression. |
| Self-Rating Scale | Beck Depression Inventory (BDI) [22] | The BDI contains 21 statements, each with 4 different degrees of answer, which are 0 (none or very few), 1 (sometimes), 2 (quite a lot), 3 (extremely severe), corresponding to a score of 0, 1, 2, and 3. | 1. Lose interest. 2. Feeling lonely. 3. Feel disappointed. | 0–13: Normal. 14–19: Mild depression. 20–28: Moderate depression. 29–63: Severe depression. |
| Self-Rating Scale | Symptom Checklist-90 (SCL-90) [23] | The SCL-90 contains 90 statements, and there are 13 statements that measure depression. Each statement has 5 different degrees of answer: 1 (never), 2 (very mild), 3 (moderate), 4 (quite a lot), and 5 (severe), corresponding to a score of 1, 2, 3, 4, and 5. | 1. Feel your energy levels drop and your activities slow down. 2. Wanting to end your life. 3. You feel lonely. | 13–26: Mild depression. 39–65: Severe depression. |
| Clinical Scale | Hamilton Depression Rating Scale (HAMD) [5] | The original HAMD contains 21 items with 3–5 descriptions for each item, and subjects are required to choose the answer that best fits their situation. | “Depressed mood”: 0. Not present (none); 1. Tell only when asked (lightly). 2. Describe spontaneously in the interview (moderate). 3. The emotion can be expressed without words in the expression, posture, voice, or the desire to cry (severe). 4. The patient’s spontaneous verbal and non-verbal expressions (expressions, movements) almost exclusively reflect this emotion (extremely severe). | 0–7: Normal. 8–17: May have depression. 18–24: Depression. >24: Severe depression. |
| Clinical Scale | Hamilton Anxiety Rating Scale (HAMA) [24] | The HAMA contains 14 statements, each with 5 different levels of answers, which are 0 (no symptoms), 1 (mild), 2 (moderate), 3 (severe), 4 (extremely severe), corresponding to scores of 0, 1, 2, 3, and 4. | 1. Insomnia. 2. Memory or attention disorders. 3. Depression. | 0–7: Normal. 8–14: Mild anxiety symptoms. 15–21: Moderate anxiety symptoms. ≥22: Severe anxiety symptoms. |
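The "Scale of Scores" column is a simple threshold lookup. As a small sketch, the SDS bands from the table above can be encoded as follows. The function name `sds_severity` is hypothetical, and the function takes the total score exactly as the table states its ranges, without assuming how that total was derived from the item scores.

```python
# Upper bound (inclusive) of each SDS band, as listed in the table above.
SDS_BANDS = [
    (52, "Normal"),
    (62, "Mild depression"),
    (72, "Moderate depression"),
    (80, "Severe depression"),
]


def sds_severity(score):
    """Map a total SDS score to the severity band given in the table."""
    if not 0 <= score <= 80:
        raise ValueError(f"SDS score out of the tabulated range 0-80: {score}")
    for upper, label in SDS_BANDS:
        if score <= upper:
            return label


for s in (45, 53, 70, 80):
    print(s, sds_severity(s))
```

The same pattern applies to the other scales in the table by swapping in their bands, although the SCL-90 row as listed leaves the range 27–38 unassigned.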

| Model | Layers | Patches | Params (M) | FLOPs (G) |
|---|---|---|---|---|
| FBA-Small-5 | 6 | 5 | 58.97 | 9.16 |
| FBA-Base-5 | 12 | 5 | 101.50 | 17.52 |
| FBA-Large-5 | 18 | 5 | 144.03 | 25.87 |
| FBA-Small-9 | 6 | 9 | 58.97 | 9.16 |
| FBA-Base-9 | 12 | 9 | 101.50 | 17.52 |
| FBA-Large-9 | 18 | 9 | 144.03 | 25.87 |
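In the table above, parameters and FLOPs depend only on the number of layers, not on the number of patches, and they grow by the same amount from Small to Base as from Base to Large. The following sketch works out the implied per-layer cost from the listed figures. Treating the growth as exactly linear in the layer count is an assumption made here for illustration; the variable names are hypothetical.

```python
# Figures copied from the table above (identical for the 5- and 9-patch variants).
variants = {
    "FBA-Small": {"layers": 6, "params_m": 58.97, "flops_g": 9.16},
    "FBA-Base": {"layers": 12, "params_m": 101.50, "flops_g": 17.52},
    "FBA-Large": {"layers": 18, "params_m": 144.03, "flops_g": 25.87},
}


def per_layer_cost(small, large, key):
    """Slope of `key` with respect to layer count between two variants."""
    return (large[key] - small[key]) / (large["layers"] - small["layers"])


small, large = variants["FBA-Small"], variants["FBA-Large"]
params_per_layer = per_layer_cost(small, large, "params_m")
flops_per_layer = per_layer_cost(small, large, "flops_g")

# Cost that remains when the layer-dependent part is subtracted (linear assumption).
fixed_params = small["params_m"] - small["layers"] * params_per_layer

print(f"params per layer: {params_per_layer:.2f} M")
print(f"FLOPs per layer:  {flops_per_layer:.2f} G")
print(f"layer-independent params: {fixed_params:.2f} M")
```

Under that linear assumption, each additional layer costs about 7.09 M parameters and about 1.39 GFLOPs, and roughly 16.44 M parameters do not depend on the layer count.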

| Model | Accuracy (%), Validation | Accuracy (%), Test | F1 Score (%), Validation | F1 Score (%), Test | Precision (%), Validation | Precision (%), Test | Recall (%), Validation | Recall (%), Test | FLOPs (G) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | 69.33 | 69.53 | 69.11 | 69.29 | 70.12 | 70.22 | 69.33 | 69.53 | 4.21 | 24.21 |
| Inceptionv3 | 69.08 | 68.92 | 68.90 | 68.73 | 69.27 | 69.05 | 69.08 | 68.92 | 2.85 | 25.82 |
| MobileNetv3 | 70.39 | 70.64 | 70.25 | 70.58 | 70.48 | 70.83 | 70.38 | 70.64 | 227.52 | 4.64 |
| EfficientNetb5 | 69.93 | 69.69 | 69.75 | 69.53 | 70.05 | 69.77 | 69.93 | 69.70 | 2.33 | 29.05 |
| ViT | 67.94 | 67.90 | 67.82 | 67.74 | 68.21 | 68.10 | 67.94 | 67.90 | 16.86 | 86.06 |
| Hybrid ViT | 71.78 | 72.04 | 71.73 | 71.95 | 72.30 | 72.48 | 71.78 | 72.04 | 16.91 | 98.16 |
| Swin | 56.75 | 56.77 | 56.29 | 56.28 | 56.65 | 56.64 | 56.75 | 56.77 | 15.51 | 87.1 |
| FBA-Small-5 | 70.81 | 70.53 | 70.68 | 70.42 | 71.27 | 71.03 | 70.81 | 70.53 | 9.16 | 58.97 |
| FBA-Small-9 | 73.43 | 73.35 | 73.34 | 73.24 | 73.73 | 73.56 | 73.44 | 73.35 | 9.16 | 58.97 |
| FBA-Base-5 | 73.93 | 73.81 | 73.91 | 73.79 | 74.21 | 74.09 | 73.93 | 73.81 | 17.52 | 101.50 |
| FBA-Base-9 | 74.01 | 73.83 | 73.98 | 73.79 | 74.27 | 74.11 | 74.01 | 73.83 | 17.52 | 101.50 |
| FBA-Large-5 | 73.01 | 73.21 | 72.96 | 73.15 | 73.23 | 73.42 | 73.01 | 73.21 | 25.87 | 144.03 |
| FBA-Large-9 | 73.79 | 73.75 | 73.76 | 73.75 | 74.01 | 74.10 | 73.79 | 73.75 | 25.87 | 144.03 |

| Model | Accuracy (%) | | F1 Score (%) | | Precision (%) | | Recall (%) | | FLOPs (G) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | 86.56 | 92.26 | 64.97 | 82.52 | 72.28 | 92.86 | 88.67 | 100 | 4.21 | 24.21 |
| Inceptionv3 | 82.97 | 86.69 | 53.19 | 65.22 | 64.18 | 93.33 | 61.73 | 73.44 | 2.85 | 25.82 |
| MobileNetv3 | 85.88 | 92.26 | 62.10 | 80.31 | 65.50 | 80.95 | 65.73 | 81.25 | 227.52 | 4.64 |
| EfficientNetb5 | 85.26 | 91.95 | 62.15 | 69.92 | 64.36 | 78.38 | 67.92 | 90.63 | 2.33 | 29.05 |
| ViT | 82.17 | 84.21 | 89.66 | 90.50 | 83.33 | 87.73 | 99.92 | 100 | 16.86 | 86.06 |
| Hybrid ViT | 88.11 | 92.26 | 92.75 | 95.06 | 91.50 | 94.66 | 98.53 | 99.61 | 16.91 | 98.16 |
| Swin | 80.56 | 81.42 | 89.19 | 89.66 | 80.54 | 81.25 | 99.92 | 100 | 15.51 | 87.1 |
| Pan et al. [17] | 85.55 | 93.33 | - | - | - | - | - | - | - | - |
| Zhang et al. [21] | 91.33 | 95.00 | 91.30 | 95.65 | 95.12 | 97.06 | 87.84 | 94.29 | - | - |
| FBA-Small-5 | 96.72 | 97.21 | 97.95 | 99.23 | 99.27 | 100 | 98.84 | 100 | 9.16 | 58.97 |
| FBA-Small-9 | 94.49 | 98.45 | 96.54 | 99.03 | 97.98 | 99.61 | 99.92 | 100 | 9.16 | 58.97 |
| FBA-Base-5 | 96.72 | 98.45 | 97.97 | 99.04 | 99.31 | 100 | 99.23 | 100 | 17.52 | 101.50 |
| FBA-Base-9 | 97.09 | 99.07 | 98.19 | 99.42 | 99.11 | 100 | 98.77 | 100 | 17.52 | 101.50 |
| FBA-Large-5 | 97.71 | 99.07 | 98.56 | 99.42 | 99.30 | 100 | 99.54 | 100 | 25.87 | 144.03 |
| FBA-Large-9 | 97.13 | 98.76 | 98.12 | 99.22 | 99.08 | 100 | 98.85 | 100 | 25.87 | 144.03 |
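The cost comparison named in the limitations (FBA-Small-5 against the CNN baselines, FBA-Base-5 against the Transformer baselines) can be made concrete as ratios of the FLOPs and parameter columns in the table above. This is a small illustrative sketch; the dictionary and function names are hypothetical, and the numbers are copied from the table.

```python
# (FLOPs in G, params in M), copied from the table above.
COST = {
    "ResNet50": (4.21, 24.21),
    "Inceptionv3": (2.85, 25.82),
    "EfficientNetb5": (2.33, 29.05),
    "ViT": (16.86, 86.06),
    "Hybrid ViT": (16.91, 98.16),
    "Swin": (15.51, 87.1),
    "FBA-Small-5": (9.16, 58.97),
    "FBA-Base-5": (17.52, 101.50),
}

# Pairings follow the comparison described in the limitations.
PAIRINGS = {
    "FBA-Small-5": ["ResNet50", "Inceptionv3", "EfficientNetb5"],
    "FBA-Base-5": ["ViT", "Hybrid ViT", "Swin"],
}


def cost_ratio(model, baseline):
    """Return (FLOPs ratio, params ratio) of `model` relative to `baseline`."""
    flops, params = COST[model]
    base_flops, base_params = COST[baseline]
    return flops / base_flops, params / base_params


for model, baselines in PAIRINGS.items():
    for baseline in baselines:
        flops_r, params_r = cost_ratio(model, baseline)
        print(f"{model} vs {baseline}: {flops_r:.2f}x FLOPs, {params_r:.2f}x params")
```

For example, FBA-Small-5 needs about 2.18 times the FLOPs and 2.44 times the parameters of ResNet50, while FBA-Base-5 is about 1.04 times and 1.18 times ViT on the same two measures.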

| Model | Modules enabled (Feature Enhance, Triplet Attention, Self-Attention) | Accuracy (%) | FLOPs (G) | Params (M) |
|---|---|---|---|---|
| FBANet | ✓ ✓ | 72.34 | 17.36 | 100.72 |
| FBANet | ✓ ✓ | 71.84 | 0.72 | 15.71 |
| FBANet | ✓ ✓ | 71.54 | 17.37 | 100.91 |
| FBANet | ✓ ✓ ✓ | 73.81 | 17.52 | 101.50 |

| Model | Modules enabled (Feature Enhance, Triplet Attention, Self-Attention) | Average Accuracy (%) | FLOPs (G) | Params (M) |
|---|---|---|---|---|
| FBANet | ✓ ✓ | 89.35 | 17.36 | 100.72 |
| FBANet | ✓ ✓ | 87.55 | 0.72 | 15.71 |
| FBANet | ✓ ✓ | 93.81 | 17.37 | 100.91 |
| FBANet | ✓ ✓ ✓ | 96.72 | 17.52 | 101.50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, H.; Zhang, J.; Huang, Y.; Cai, B. FBANet: Transfer Learning for Depression Recognition Using a Feature-Enhanced Bi-Level Attention Network. Entropy 2023, 25, 1350. https://doi.org/10.3390/e25091350