Person Re-Identification Method Based on Dual Descriptor Feature Enhancement

Abstract

1. Introduction

- Proposes a Dual Descriptor Feature Enhancement (DDFE) network that extracts features from two perspectives of a person image and integrates them, yielding more discriminative features and higher recognition accuracy;

- Designs targeted training strategies for the DDFE network, including the CurricularFace loss, the DropPath operation, and the Integration Training Module (ITM);

- Conducts extensive experiments on the Market1501 and MSMT17 datasets, demonstrating state-of-the-art performance in person re-identification.
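To make the CurricularFace loss named in the second contribution more concrete, the following is a minimal sketch of its logit modulation for a single training sample, written from the formulation in the CurricularFace reference listed below. The function name `curricular_logits` and the default `margin` and `scale` values are illustrative assumptions, not the settings used for DDFE, and the sketch does not show how the loss is attached to the two sub-networks.

```python
import math


def curricular_logits(cosines, label, t, margin=0.5, scale=64.0):
    """Scaled logits for one sample under CurricularFace-style modulation.

    cosines: cosine similarity between the normalized feature and each
             normalized class weight (one value per identity).
    label:   index of the ground-truth identity.
    t:       curriculum parameter; the original method updates it during
             training as a moving average of positive cosines. Here it is
             simply passed in (assumption made to keep the sketch short).
    """
    # Positive logit: add an angular margin to the ground-truth angle.
    cos_y = max(-1.0, min(1.0, cosines[label]))
    positive = math.cos(math.acos(cos_y) + margin)
    # Note: practical implementations also guard the case theta + margin > pi;
    # that guard is omitted here.

    logits = []
    for j, cos_j in enumerate(cosines):
        if j == label:
            logits.append(scale * positive)
        elif cos_j > positive:
            # Hard negative: re-weight by (t + cos_j), so hard samples
            # gain emphasis as t grows over the course of training.
            logits.append(scale * cos_j * (t + cos_j))
        else:
            # Easy negative: left unchanged.
            logits.append(scale * cos_j)
    return logits


if __name__ == "__main__":
    # Three identities; the sample belongs to identity 0.
    print(curricular_logits([0.8, 0.9, 0.1], label=0, t=0.5, scale=1.0))
```

In this toy call the second identity is a hard negative (its cosine exceeds the margin-penalized positive cosine), so its logit is re-weighted, while the third identity is an easy negative and passes through unchanged. The resulting logits would then be fed to a standard softmax cross-entropy.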

2. Related Work

2.1. Person Re-Identification

2.2. Face Recognition Loss Function

3. Methodology

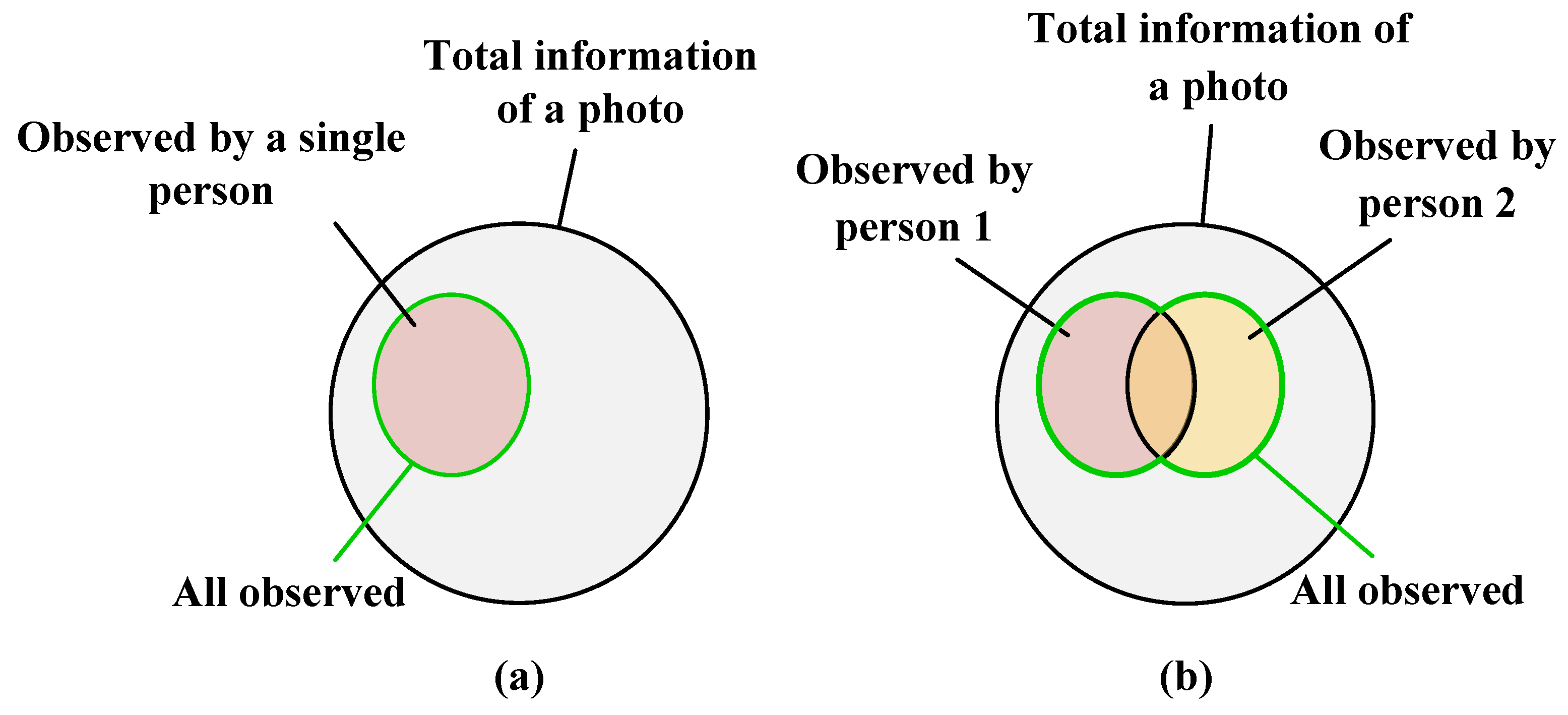

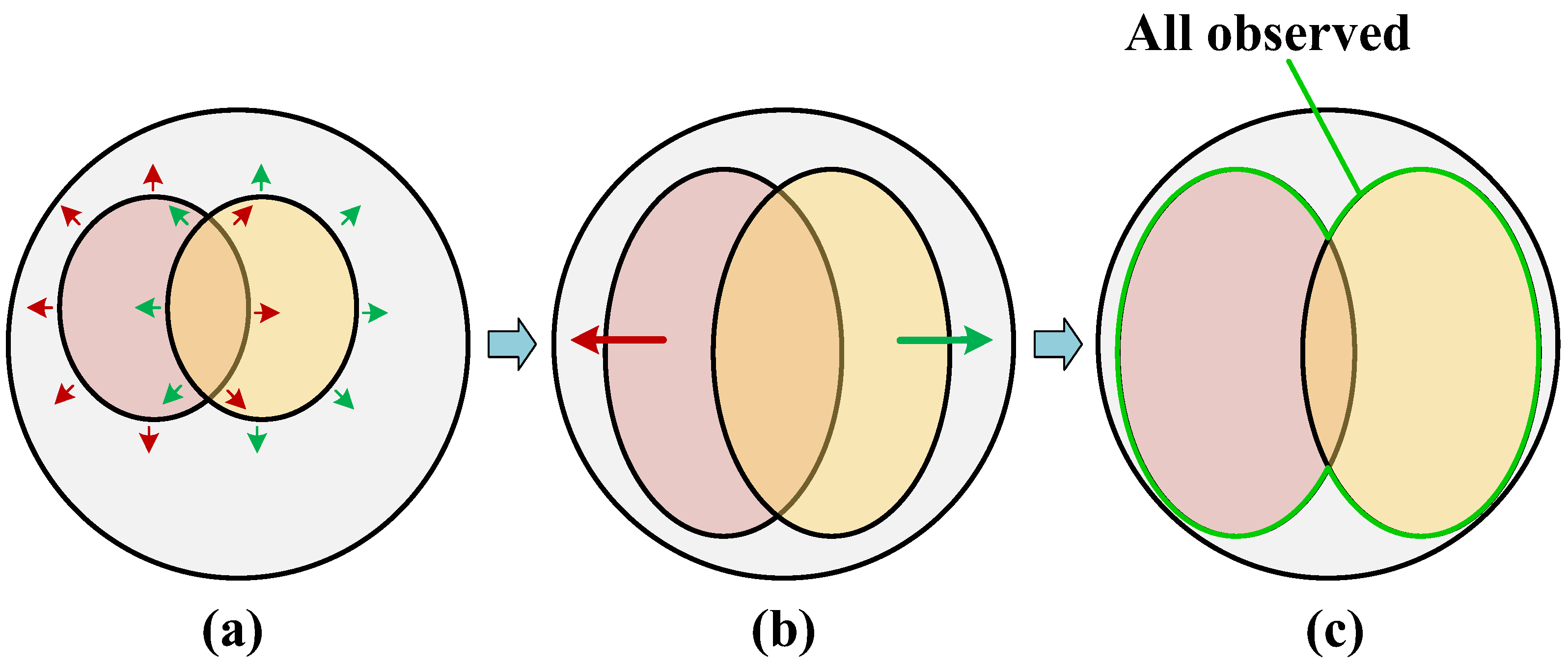

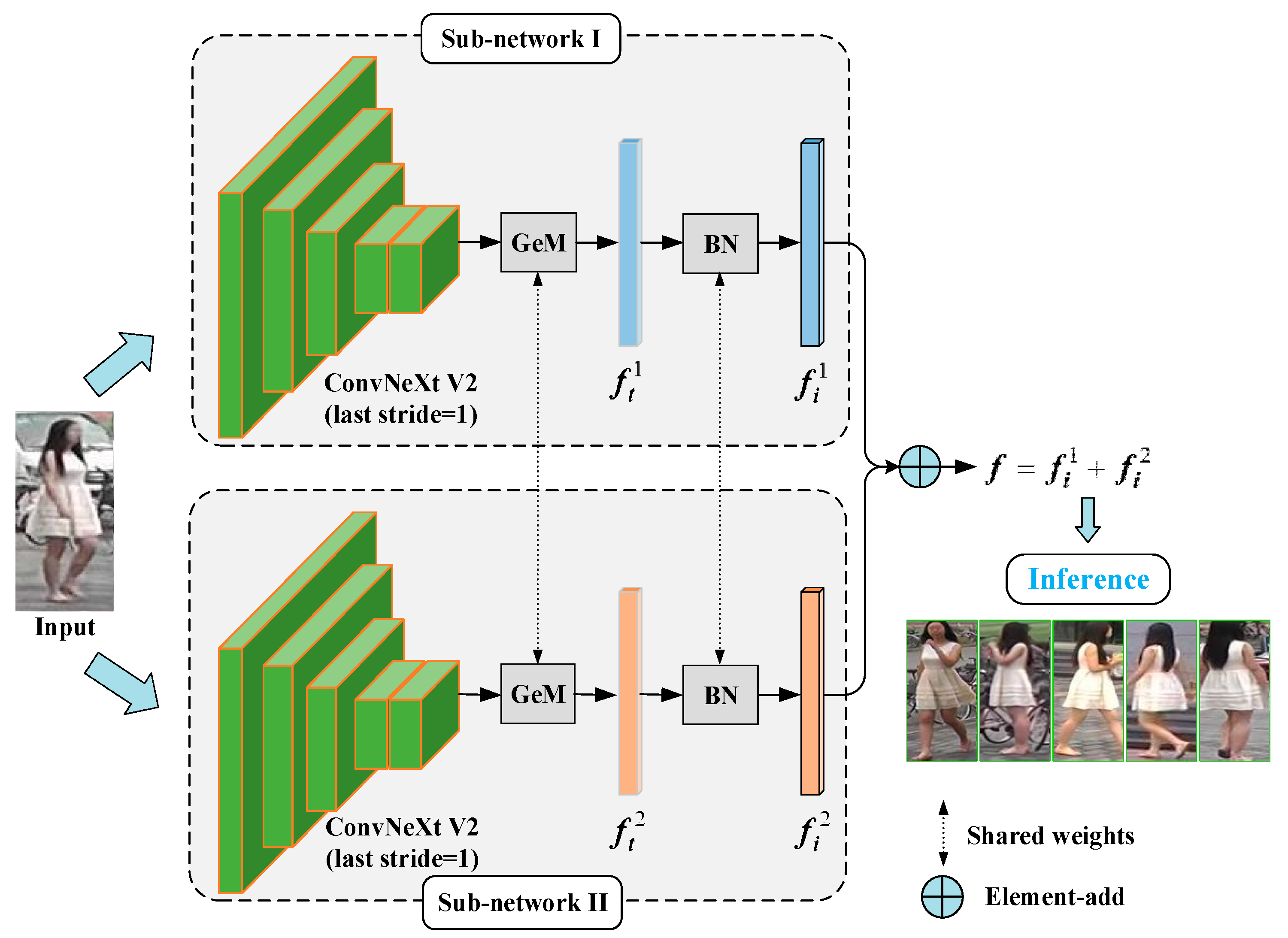

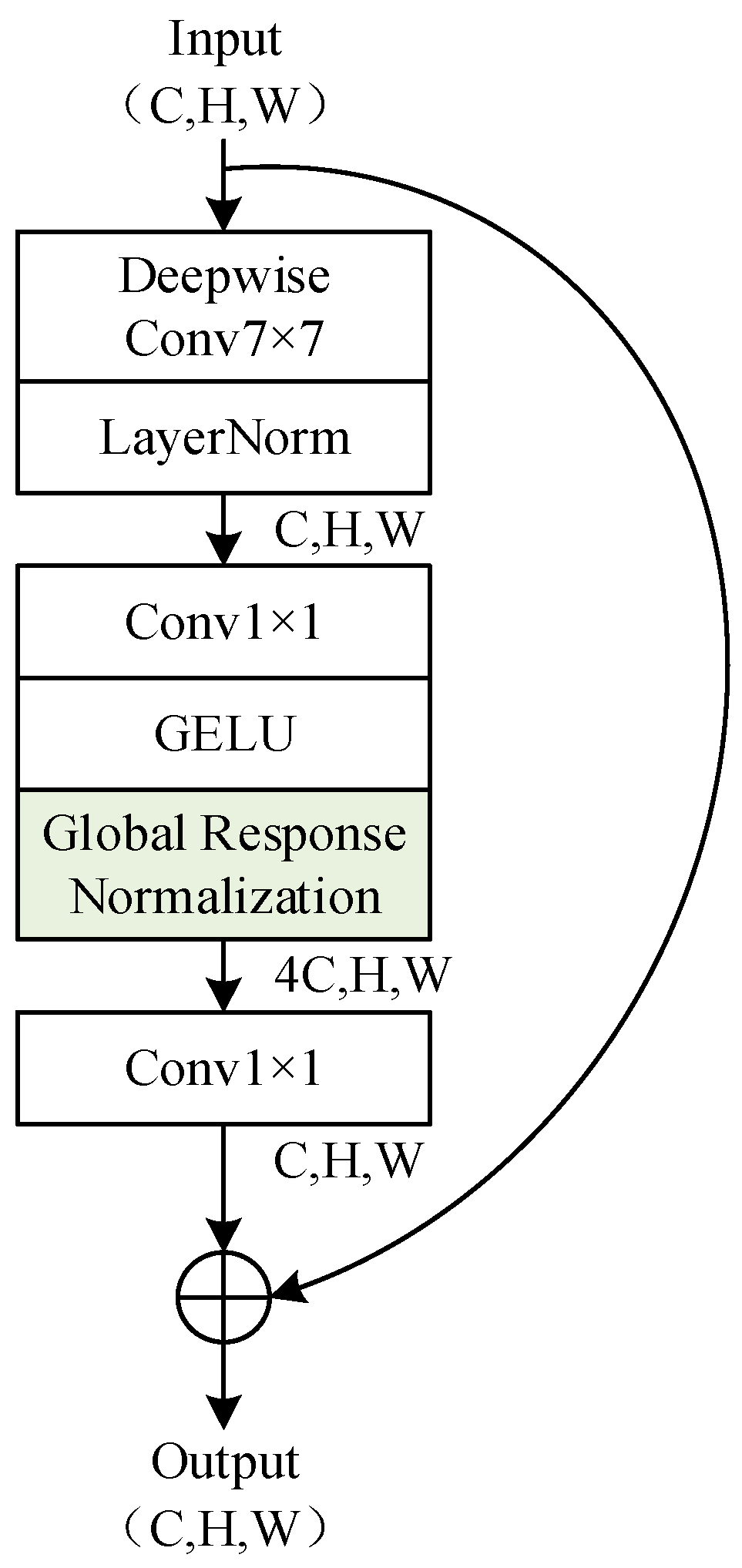

3.1. Dual Descriptor Feature Enhancement (DDFE) Network

3.2. Training of the DDFE Network

3.2.1. Overview

3.2.2. DropPath

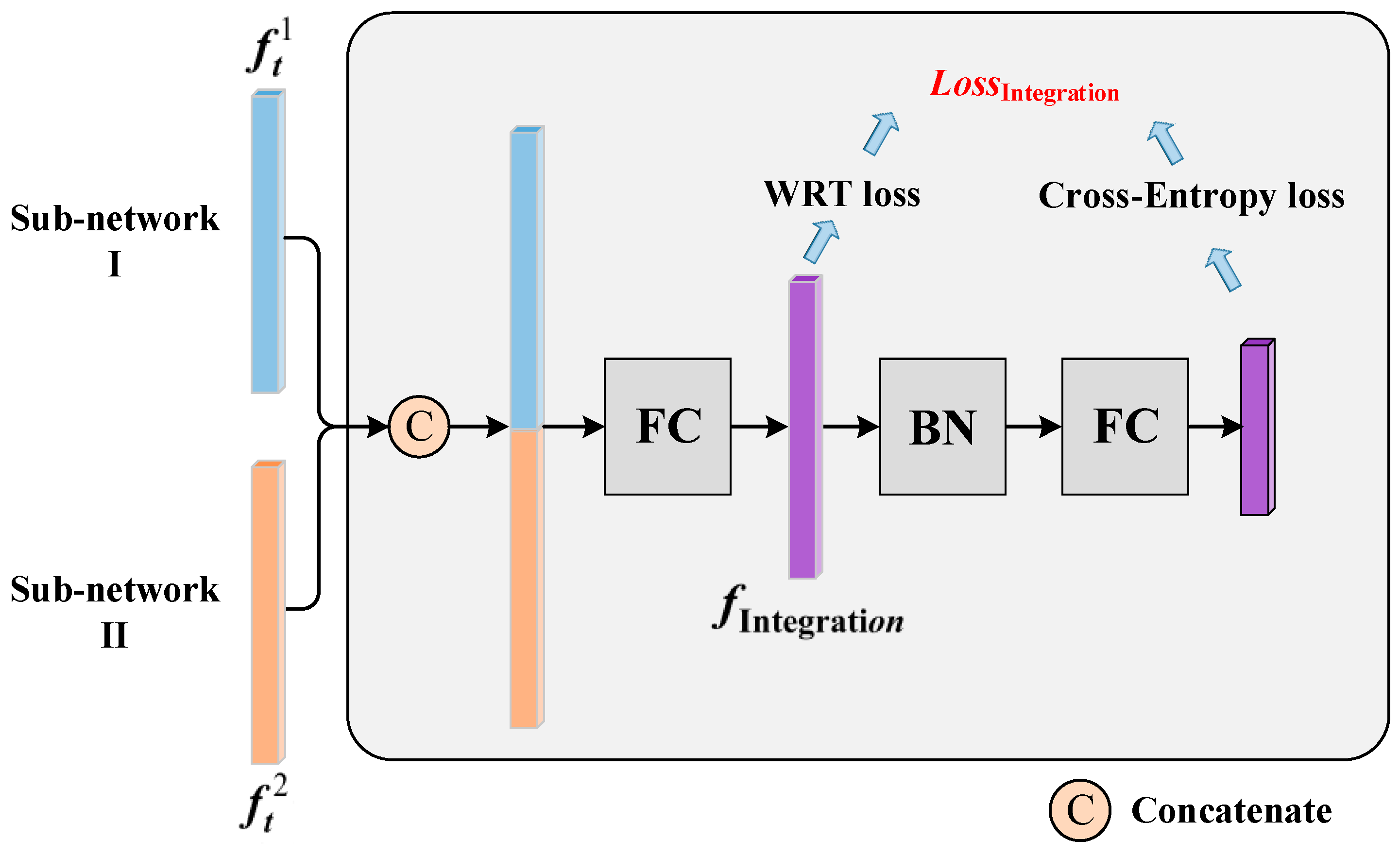

3.2.3. Integration Training Module (ITM)

3.2.4. CurricularFace Loss

3.2.5. Total Loss

4. Experiments and Analysis

4.1. Datasets and Evaluation Metric

4.2. Experimental Settings

4.3. Comparison with Existing Methods

4.4. Ablation Study

4.4.1. Performance Comparison: Sub-Network Descriptors vs. Integrated Feature

4.4.2. Component Ablation Experiments of the Training Strategy

4.4.3. Generalizability Analysis

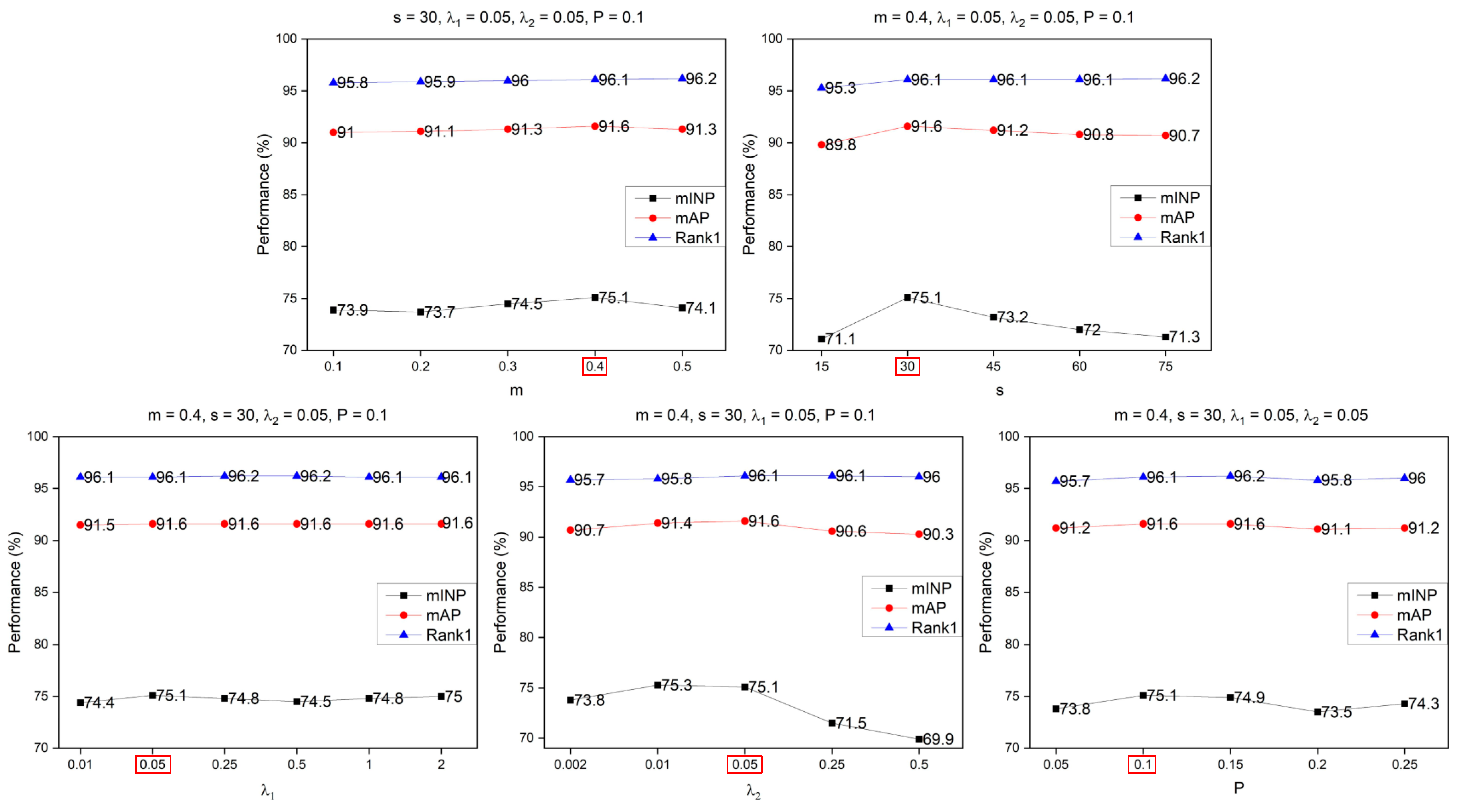

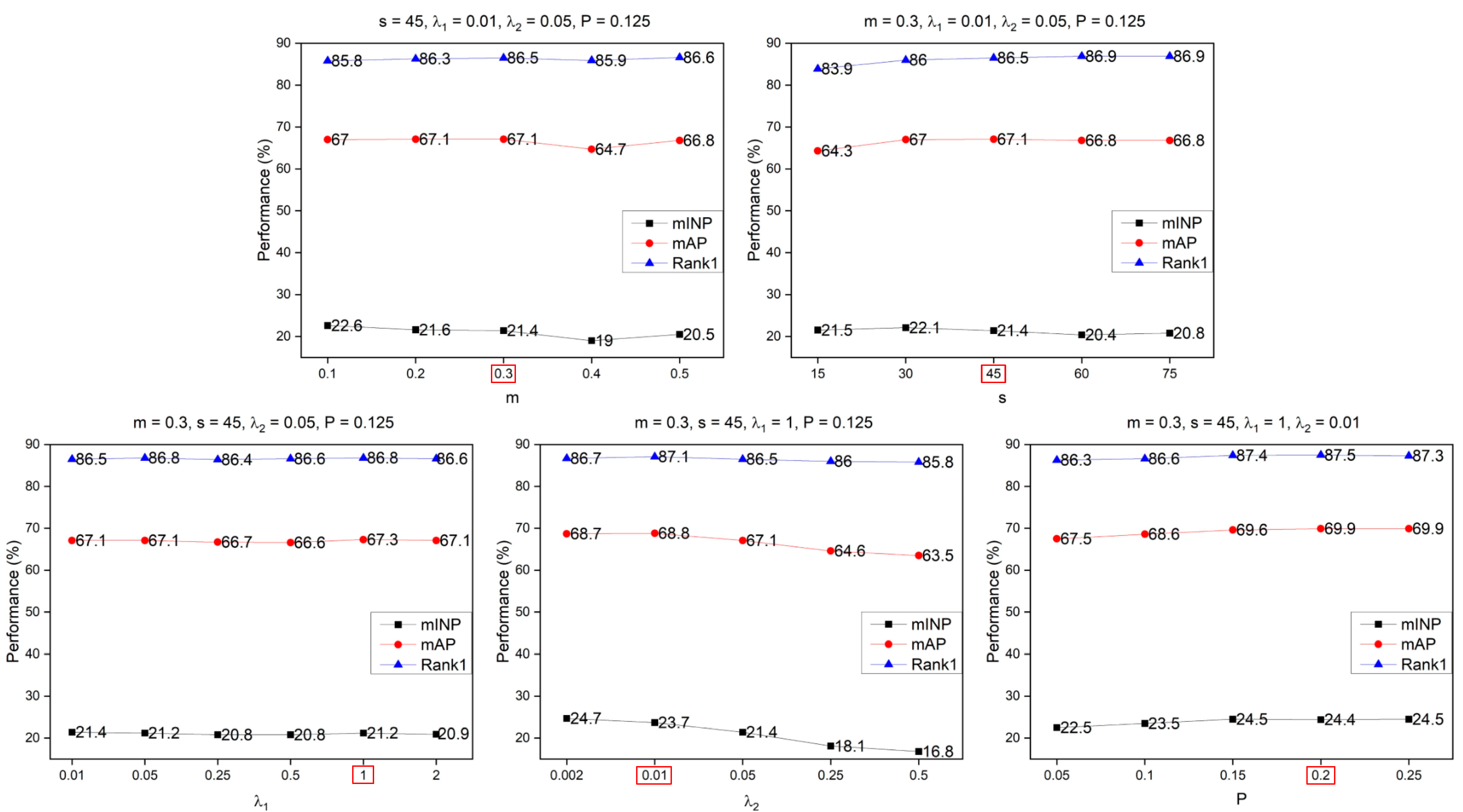

4.4.4. Parameter Analysis

4.5. Visualization Analysis

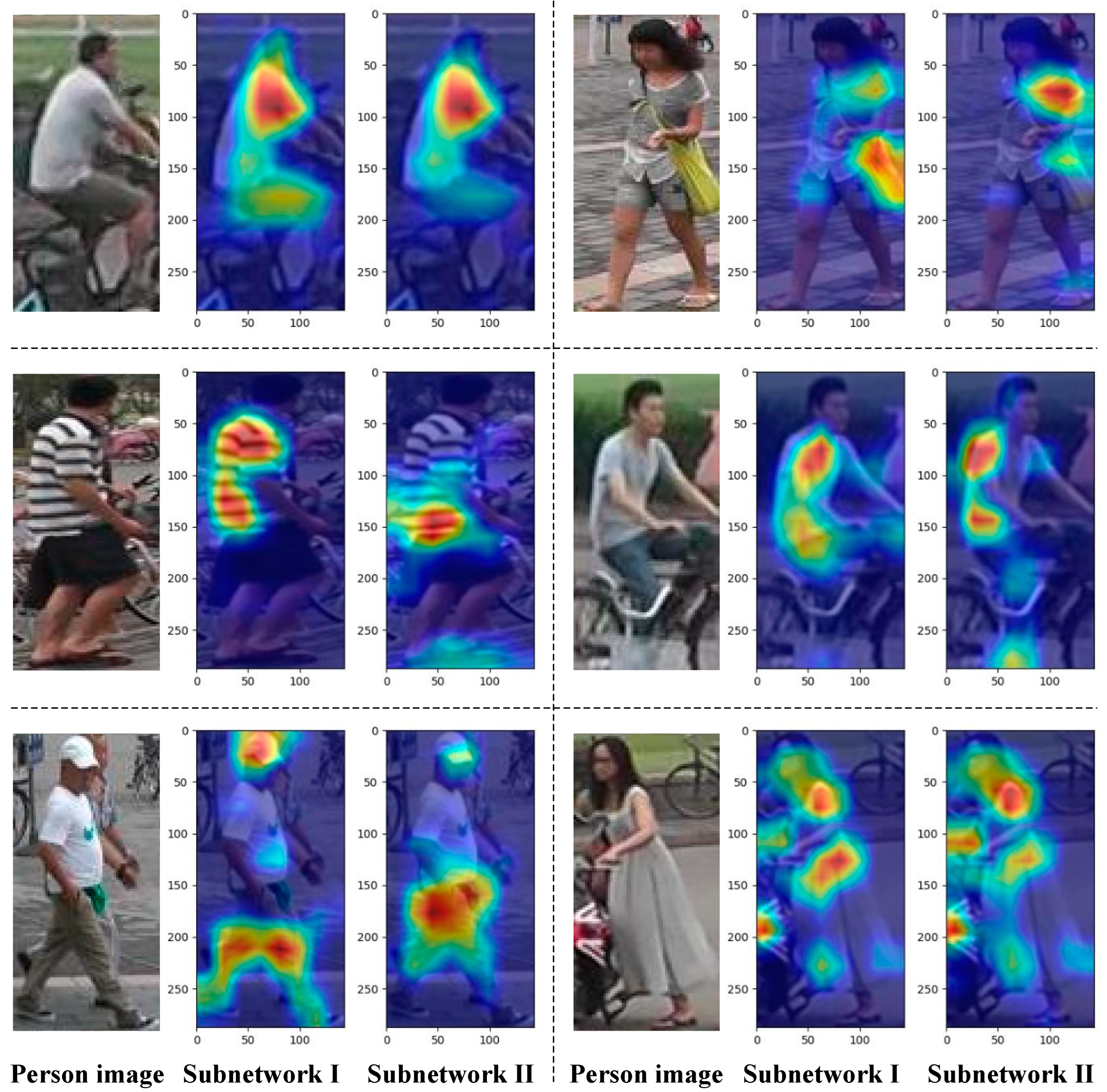

4.5.1. Attention Heatmap Analysis of Each Sub-Network

4.5.2. Visualization and Analysis of the Training Strategy

4.5.3. Results Output

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Hu, M.; Zeng, K.; Wang, Y.; Guo, Y. Threshold-based hierarchical clustering for person re-identification. Entropy 2021, 23, 522.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- Zhang, Z.; Zhang, H.; Liu, S. Person re-identification using heterogeneous local graph attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12136–12145.

- Zhang, G.; Zhang, P.; Qi, J.; Lu, H. Hat: Hierarchical aggregation transformers for person re-identification. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 17 October 2021; pp. 516–525.

- He, S.; Luo, H.; Wang, P.; Wang, F.; Li, H.; Jiang, W. Transreid: Transformer-based object re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 15013–15022.

- Lee, H.; Eum, S.; Kwon, H. Negative Samples are at Large: Leveraging Hard-Distance Elastic Loss for Re-identification. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin, Germany, 2022. Part XXIV. pp. 604–620.

- Wieczorek, M.; Rychalska, B.; Dąbrowski, J. On the unreasonable effectiveness of centroids in image retrieval. In Proceedings of the Neural Information Processing: 28th International Conference, ICONIP 2021, Sanur, Bali, Indonesia, 8–12 December 2021; Springer: Berlin, Germany, 2021. Part IV 28. pp. 212–223.

- Yan, C.; Pang, G.; Bai, X.; Liu, C.; Ning, X.; Gu, L.; Zhou, J. Beyond triplet loss: Person re-identification with fine-grained difference-aware pairwise loss. IEEE Trans. Multimed. 2021, 24, 1665–1677.

- Huang, Y.; Wang, Y.; Tai, Y.; Liu, X.; Shen, P.; Li, S.; Huang, F. Curricularface: Adaptive curriculum learning loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5901–5910.

- Larsson, G.; Maire, M.; Shakhnarovich, G. Fractalnet: Ultra-deep neural networks without residuals. arXiv 2016, arXiv:1605.07648.

- Zheng, L.; Zhang, H.; Sun, S.; Chandraker, M.; Yang, Y.; Tian, Q. Person re-identification in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1367–1376.

- Wu, L.; Shen, C.; van den Hengel, A. Personnet: Person re-identification with deep convolutional neural networks. arXiv 2016, arXiv:1601.07255.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- Zhang, X.; Luo, H.; Fan, X.; Xiang, W.; Sun, Y.; Xiao, Q.; Sun, J. Alignedreid: Surpassing human-level performance in person re-identification. arXiv 2017, arXiv:1711.08184.

- Lai, S.; Chai, Z.; Wei, X. Transformer meets part model: Adaptive part division for person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 4150–4157.

- Li, W.; Zhu, X.; Gong, S. Harmonious attention network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2285–2294.

- Rao, Y.; Chen, G.; Lu, J.; Zhou, J. Counterfactual attention learning for fine-grained visual categorization and re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 1025–1034.

- Su, C.; Li, J.; Zhang, S.; Xing, J.; Gao, W.; Tian, Q. Pose-driven deep convolutional model for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3960–3969.

- Ding, C.; Wang, K.; Wang, P.; Tao, D. Multi-task learning with coarse priors for robust part-aware person re-identification. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1474–1488.

- Li, H.; Wu, G.; Zheng, W.-S. Combined depth space based architecture search for person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6729–6738.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. Sphereface: Deep hypersphere embedding for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 212–220.

- Wang, H.; Wang, Y.; Zhou, Z.; Ji, X.; Gong, D.; Zhou, J.; Liu, W. Cosface: Large margin cosine loss for deep face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5265–5274.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.

- Wang, X.; Zhang, S.; Wang, S.; Fu, T.; Shi, H.; Mei, T. Mis-classified vector guided softmax loss for face recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12241–12248.

- Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S.; Xie, S. ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders. arXiv 2023, arXiv:2301.00808.

- Radenović, F.; Tolias, G.; Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1655–1668.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer gan to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 79–88.

- Zhang, A.; Gao, Y.; Niu, Y.; Liu, W.; Zhou, Y. Coarse-to-fine person re-identification with auxiliary-domain classification and second-order information bottleneck. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 598–607.

- Zhang, W.; Ding, Q.; Hu, J.; Ma, Y.; Lu, M. Pixel-wise Graph Attention Networks for Person Re-identification. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 17 October 2021; pp. 5231–5238.

- Zhu, H.; Ke, W.; Li, D.; Liu, J.; Tian, L.; Shan, Y. Dual cross-attention learning for fine-grained visual categorization and object re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4692–4702.

- Wang, P.; Zhao, Z.; Su, F.; Meng, H. LTReID: Factorizable Feature Generation with Independent Components for Long-Tailed Person Re-Identification. IEEE Trans. Multimed. 2022.

- Ci, Y.; Wang, Y.; Chen, M.; Tang, S.; Bai, L.; Zhu, F.; Ouyang, W. UniHCP: A Unified Model for Human-Centric Perceptions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 17840–17852.

- Li, S.; Sun, L.; Li, Q. CLIP-ReID: Exploiting Vision-Language Model for Image Re-Identification without Concrete Text Labels. Proc. AAAI Conf. Artif. Intell. 2023, 37, 1405–1413.

- Li, W.; Zou, C.; Wang, M.; Xu, F.; Zhao, J.; Zheng, R.; Chu, W. DC-Former: Diverse and Compact Transformer for Person Re-Identification. arXiv 2023, arXiv:2302.14335.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Houlsby, N. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Li, S.; Wang, Z.; Liu, Z.; Tan, C.; Lin, H.; Wu, D.; Li, S.Z. Efficient multi-order gated aggregation network. arXiv 2022, arXiv:2211.03295.

- Rao, Y.; Zhao, W.; Tang, Y.; Zhou, J.; Lim, S.N.; Lu, J. Hornet: Efficient high-order spatial interactions with recursive gated convolutions. Adv. Neural Inf. Process. Syst. 2022, 35, 10353–10366.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Dataset | Number of Cameras | Training Set: Number of Persons | Training Set: Number of Images | Test Set: Number of Persons | Test Set: Number of Images |
|---|---|---|---|---|---|
| Market1501 | 6 | 751 | 12,936 | 750 | 19,732 |
| MSMT17 | 15 | 1040 | 32,621 | 3010 | 82,161 |

| Methods | Venue | Backbone during the Inference Stage (Params) | Training Cost | Market1501 mAP (%) | Market1501 Rank1 (%) | MSMT17 mAP (%) | MSMT17 Rank1 (%) |
|---|---|---|---|---|---|---|---|
| CF-ReID [29] | CVPR2021 | ResNet50 (25.5 M) | - | 87.7 | 94.8 | - | - |
| PGANet-152 [30] | ACM MM2021 | ResNet152 (60.2 M) | - | 89.3 | 95.4 | - | - |
| CAL [17] | ICCV2021 | ResNet101 (44.6 M) | - | 89.5 | 95.5 | 64 | 84.2 |
| TransReID [5] | ICCV2021 | ViT-Base (86 M) | Nvidia Tesla V100 | 88.9 | 95.2 | 67.4 | 85.3 |
| TMP [15] | ICCV2021 | ResNet101 (44.6 M) | 4 × Nvidia Tesla V100 | 90.3 | 96 | 62.7 | 82.9 |
| DCAL [31] | CVPR2022 | ViT-Base (86 M) | Nvidia Tesla V100 | 87.5 | 94.7 | 64 | 83.1 |
| LTReID [32] | TMM2022 | ResNet50 (25.5 M) | 4 × GeForce GTX 1080 Ti | 89 | 95.9 | 58.6 | 81 |
| UniHCP [33] | CVPR2023 | ViT-Base (86 M) | - | 90.3 | - | 67.3 | - |
| CLIP-ReID [34] | AAAI2023 | ViT-Base (86 M) | - | 90.5 | 95.4 | 75.8 | 89.7 |
| DC-Former [35] | AAAI2023 | ViT-Base (86 M) | 4 × Nvidia Tesla V100 | 90.4 | 96 | 69.8 | 86.2 |
| DDFE | Ours | ConvNeXt v2 Tiny × 2 (57.2 M) | Nvidia Titan V + Nvidia GeForce RTX 2080 | 91.6 | 96.1 | 69.9 | 87.5 |

| Feature | mAP | Rank1 | mINP |
|---|---|---|---|
| (sub-network 1) | 91.3 | 96.1 | 73.9 |
| (sub-network 2) | 91.4 | 96.2 | 73.9 |
| (integrated feature) | 91.6 | 96.1 | 75.1 |
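For readers less familiar with the three retrieval metrics reported in these tables, the following is a minimal sketch of how mAP, Rank1, and mINP are commonly computed from ranked gallery lists. It assumes each query's gallery has already been sorted by ascending distance and reduced to a list of booleans (True where the gallery image shares the query's identity). The function names are hypothetical, and the sketch omits the same-camera filtering that the standard Market1501 and MSMT17 protocols apply, so it is not the evaluation code used for the reported numbers.

```python
def rank_metrics(ranked_matches):
    """Return (AP, Rank1 hit, INP) for one query.

    ranked_matches: list of bools over the gallery, best match first.
    """
    # 1-based rank positions of all correct matches.
    positions = [i + 1 for i, hit in enumerate(ranked_matches) if hit]
    if not positions:
        raise ValueError("query has no true match in the gallery")

    # Average precision: mean of precision values at each correct match.
    ap = sum((k + 1) / pos for k, pos in enumerate(positions)) / len(positions)
    # Rank1: is the top-ranked gallery image a correct match?
    rank1 = 1.0 if ranked_matches[0] else 0.0
    # Inverse negative penalty: number of correct matches divided by the
    # rank of the hardest (last-retrieved) correct match.
    inp = len(positions) / positions[-1]
    return ap, rank1, inp


def evaluate(all_queries):
    """Average the per-query metrics and return (mAP, Rank1, mINP) in percent."""
    per_query = [rank_metrics(q) for q in all_queries]
    n = len(per_query)
    return tuple(100.0 * sum(m[i] for m in per_query) / n for i in range(3))


if __name__ == "__main__":
    queries = [
        [True, False, True, False],  # correct matches at ranks 1 and 3
        [False, True, True],         # correct matches at ranks 2 and 3
    ]
    m_ap, rank1, m_inp = evaluate(queries)
    print(f"mAP={m_ap:.2f} Rank1={rank1:.2f} mINP={m_inp:.2f}")
```

Because INP depends only on where the hardest correct match lands, mINP can change noticeably between features even when mAP and Rank1 barely move.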

| Number | DropPath | ITM | CurricularFace | mAP | Rank1 | mINP |
|---|---|---|---|---|---|---|
| 1 | - | - | - | 89.3 | 95.2 | 69.1 |
| 2 | √ | - | - | 89.7 | 95.7 | 69.7 |
| 3 | √ | √ | - | 90.4 | 96 | 71.3 |
| 4 | √ | √ | √ | 91.6 | 96.1 | 75.1 |

| Feature Extractor | Year | Parameters | Configuration | mAP | Rank1 | mINP |
|---|---|---|---|---|---|---|
| ResNet-50 [36] | 2015 | 25.5 M | Single Network | 86.5 | 94.3 | 60.6 |
| | | | Dual Network | 89.2 | 95.7 | 67.8 |
| MogaNet-T [38] | 2022 | 5.5 M | Single Network | 85.9 | 93.8 | 61.6 |
| | | | Dual Network | 86.8 | 94.4 | 62.4 |
| HorNet-T (GF) [39] | 2022 | 23 M | Single Network | 86.9 | 94.4 | 63.3 |
| | | | Dual Network | 88.8 | 95.6 | 66.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, R.; Wang, R.; Zhang, W.; Wu, A.; Sun, Y.; Bi, Y. Person Re-Identification Method Based on Dual Descriptor Feature Enhancement. Entropy 2023, 25, 1154. https://doi.org/10.3390/e25081154
