Probing Intrinsic Neural Timescales in EEG with an Information-Theory Inspired Approach: Permutation Entropy Time Delay Estimation (PE-TD)

Abstract

1. Introduction

2. Materials and Methods

2.1. Estimation of EEG Time Scales through Permutation Entropy—PE-TD

PE-TD

2.2. ACW-0

2.3. Simulations

- First, we generated a set of “stationary” integrate-and-fire (IAF) time series of equal length (40,000 time points);

- Then, we obtained a nonstationary IAF signal by concatenating the previously synthesized stationary signals;

- Finally, by comparing the time scale measured in the synthetic nonstationary signal with the average of the time scales estimated on the stationary segments, one can assess the resilience of the tested measure to nonstationarity.
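The three steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulation used in the study: AR(1) noise stands in for the IAF series, the time scale is taken as the first zero crossing of the autocorrelation function, and the names `ar1_segment` and `acw0` as well as the `phis` values are hypothetical.

```python
import numpy as np

def ar1_segment(phi, n, rng):
    """Stationary AR(1) segment (hypothetical stand-in for one IAF series)."""
    x = np.zeros(n)
    noise = rng.standard_normal(n)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + noise[t]
    return x

def acw0(x):
    """Lag (in samples) of the first zero crossing of the autocorrelation function."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = x.size
    spec = np.fft.rfft(x, 2 * n)              # zero-pad to avoid circular wrap-around
    acf = np.fft.irfft(spec * np.conj(spec))[:n]
    acf = acf / acf[0]
    crossings = np.flatnonzero(acf <= 0)
    return int(crossings[0]) if crossings.size else n

rng = np.random.default_rng(0)
phis = [0.5, 0.9, 0.98]                       # assumed: one time constant per segment

# Step 1: stationary segments of equal length (40,000 time points each).
segments = [ar1_segment(phi, 40_000, rng) for phi in phis]

# Step 2: nonstationary signal obtained by concatenation.
nonstationary = np.concatenate(segments)

# Step 3: compare the estimate on the concatenated signal with the segment average.
mean_stationary = float(np.mean([acw0(s) for s in segments]))
concatenated = acw0(nonstationary)
deviation = abs(concatenated - mean_stationary)
print(mean_stationary, concatenated, deviation)
```

A small `deviation` would indicate that the tested measure is resilient to this kind of nonstationarity; swapping `acw0` for another estimator allows the same comparison for that estimator.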

2.4. Experimental Data (EEG)

2.5. Pre-Processing

2.6. Statistics

3. Results

3.1. Simulations

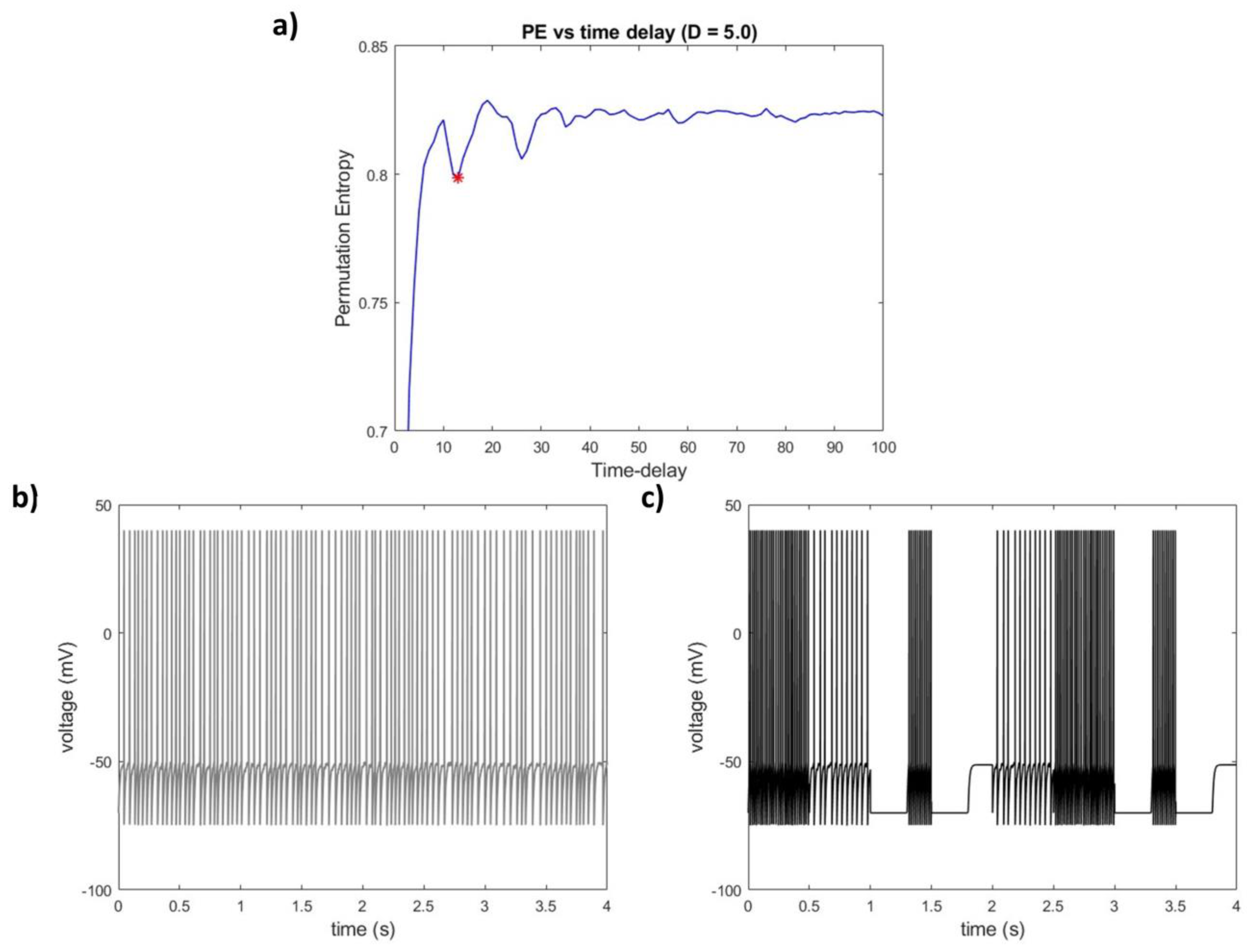

3.1.1. The Effect of Nonstationarity on the Accuracy of PE-TD

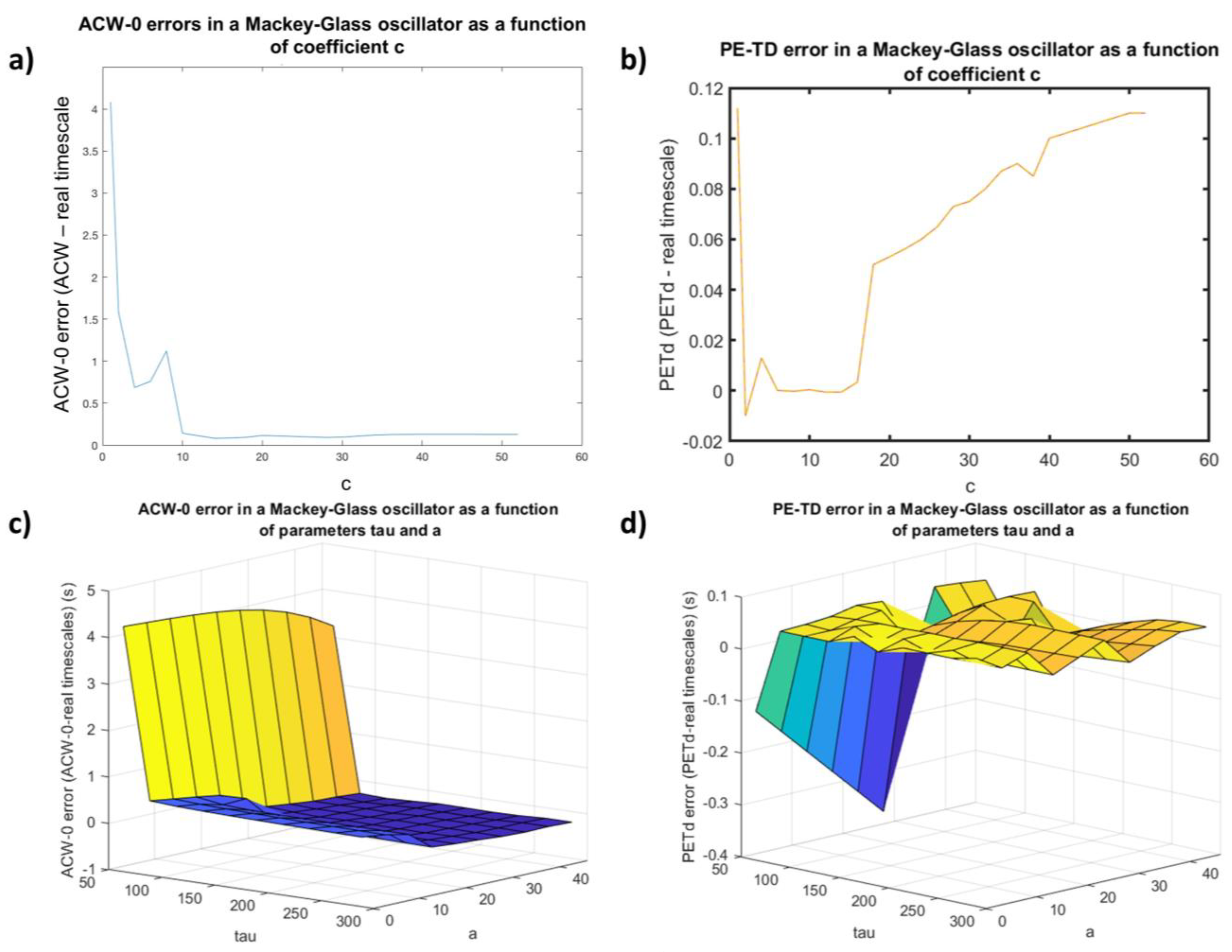

3.1.2. PE-TD Behavior as a Function of Parametrization Choice in a Non-Linear Delay System

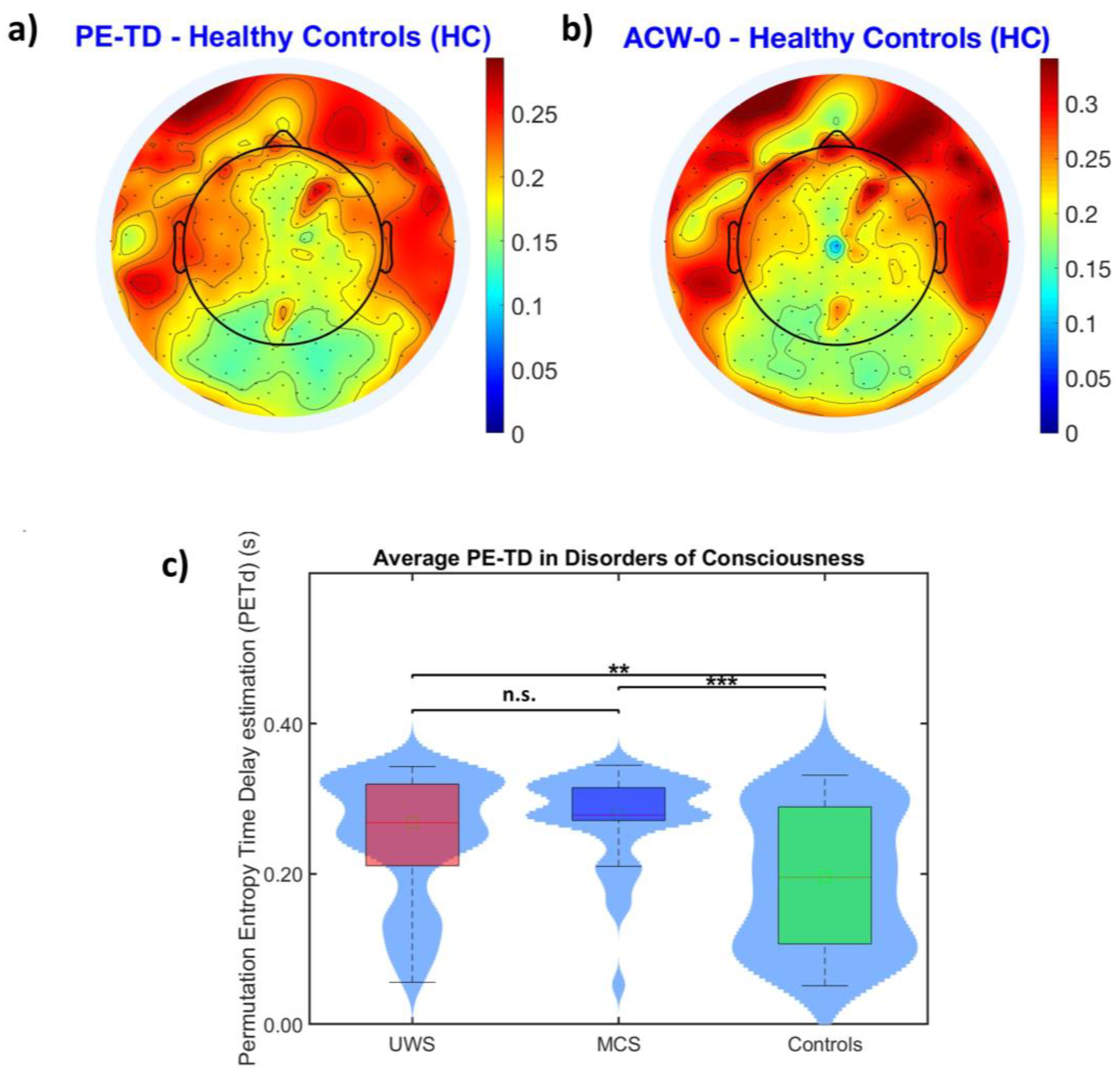

3.2. PE-TD in EEG and DoC

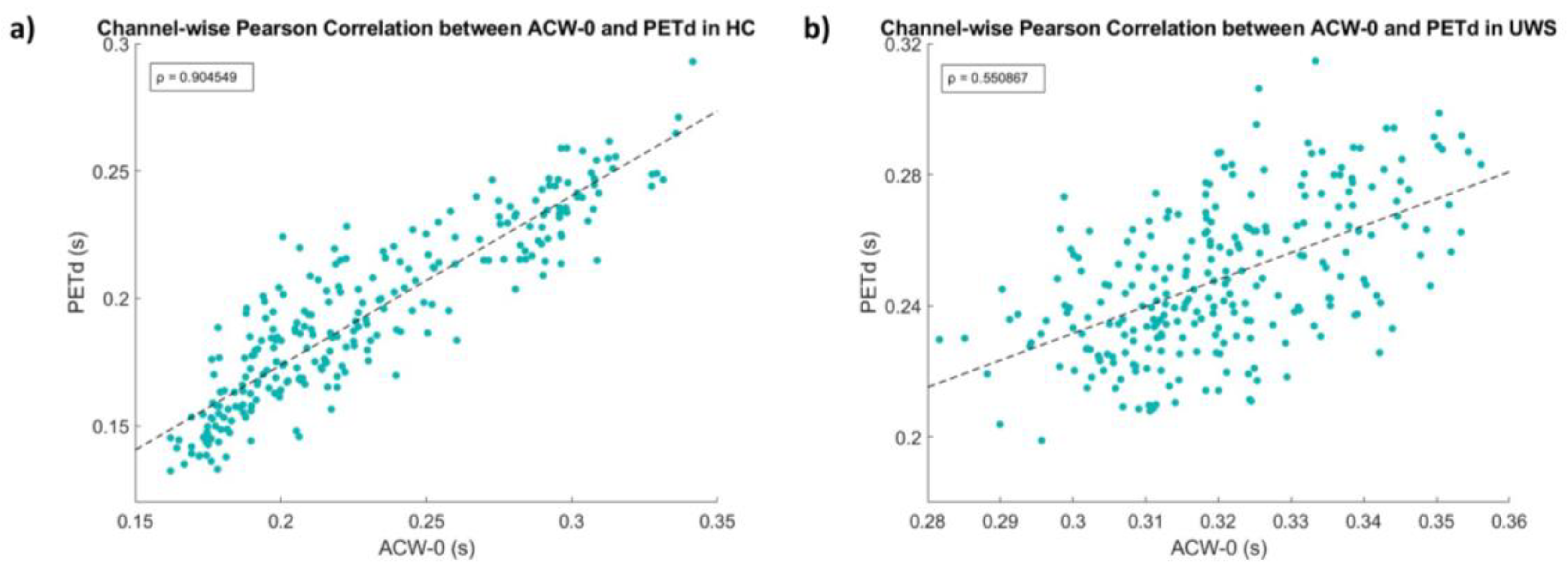

3.2.1. PE-TD Values Converge with INTs Probed with ACW-0

3.2.2. Increased Distance between INTs Obtained with PE-TD and ACW-0 during Loss of Consciousness

4. Discussion

Limitations

- Thus far, we have provided evidence for the use of ordinal quantifiers (PE) to estimate neural time scales. However, a recent study [33] showed that ordinal statistics still leave room for improvement, e.g., by using weighted permutation entropy to account for amplitude. In this study, which to our knowledge is the first neuroscientific example of the use of ordinal quantifiers for time-delay estimation, we proceeded with PE because it is better understood than its more recent variants.

- PE-TD, in its first implementation, takes only the absolute minimum of the PE vs. time delay graph as its estimated INT. We adopted this heuristic because of the novelty of the approach in neuroscience and to allow a straightforward comparison with the ACW. However, we do not suggest that the absolute minimum is always the relevant time scale of an EEG signal, as multiple time scales are to be expected, even in the same brain population: this is already implied by the way INTs are extracted through ACW, which has multiple versions [8] that are believed to capture different neural time scales. Pragmatically, the high correlation between PE-TD and ACW-0 in our healthy subjects suggests that the absolute minimum is an appropriate choice, at least for the preliminary use of PE-TD; however, future studies should investigate other local minima and their relation to neural/behavioral events.

- Along with replicating the abnormally prolonged average INT values in DoCs obtained through ACW [14,24], we observe a similar lack of a significant difference between the UWS and MCS diagnostic groups, as already shown in [24]. Given the current clinical challenge posed by covert consciousness [58,59], we argue that the inability of average INT values to distinguish between different states of consciousness, even with PE-TD, is not an intrinsic weakness of this approach but rather a symptom of the discrepancy between behavioral responsiveness and consciousness itself [26]. We therefore encourage further studies to refine the study of INTs and to improve their potential as a diagnostic/prognostic marker of conscious states.
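To make the "absolute minimum of the PE vs. time delay graph" concrete, the following sketch computes normalized Bandt-Pompe permutation entropy over a range of embedding delays, reports the delay of the global minimum, and also lists other sufficiently deep local minima. It is an illustration only: the embedding order, the delay range, the `min_depth` threshold, and the test signal (noise with feedback at a lag of 25 samples) are all assumptions, and the function names are hypothetical.

```python
import math
import numpy as np

def permutation_entropy(x, order=4, delay=1):
    """Normalized Bandt-Pompe permutation entropy for one embedding delay."""
    x = np.asarray(x, dtype=float)
    n_vectors = x.size - (order - 1) * delay
    if n_vectors <= 0:
        raise ValueError("series too short for this order and delay")
    # Rows are delay vectors (x[t], x[t+delay], ..., x[t+(order-1)*delay]).
    embedded = np.stack(
        [x[i * delay : i * delay + n_vectors] for i in range(order)], axis=1
    )
    patterns = np.argsort(embedded, axis=1, kind="stable")
    _, counts = np.unique(patterns, axis=0, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log(p)).sum() / math.log(math.factorial(order)))

def deep_local_minima(curve, min_depth=0.002):
    """Indices of interior local minima lying at least min_depth below the median."""
    c = np.asarray(curve)
    is_min = (c[1:-1] < c[:-2]) & (c[1:-1] < c[2:])
    is_deep = c[1:-1] < np.median(c) - min_depth
    return np.flatnonzero(is_min & is_deep) + 1

# Assumed test signal: x[t] = 0.7 * x[t - 25] + noise, i.e. a known delay of 25 samples.
rng = np.random.default_rng(1)
true_delay, n = 25, 50_000
x = rng.standard_normal(n)
for t in range(true_delay, n):
    x[t] += 0.7 * x[t - true_delay]

delays = np.arange(1, 61)
curve = np.array([permutation_entropy(x, order=4, delay=d) for d in delays])

best_delay = int(delays[np.argmin(curve)])          # absolute minimum
candidates = delays[deep_local_minima(curve)]       # other candidate time scales
print(best_delay, candidates)
```

In this toy signal the secondary minimum sits at twice the feedback lag; in EEG, additional minima might instead reflect distinct time scales, which is why they are returned separately rather than discarded.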

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Otto, A.; Just, W.; Radons, G. Nonlinear Dynamics of Delay Systems: An Overview. Philos. Trans. R. Soc. A 2019, 377, 20180389.

- Erneux, T. Applied Delay Differential Equations; Surveys and Tutorials in the Applied Mathematical Sciences; Springer: New York, NY, USA, 2009; ISBN 978-0-387-74371-4.

- Golesorkhi, M.; Gomez-Pilar, J.; Zilio, F.; Berberian, N.; Wolff, A.; Yagoub, M.C.E.; Northoff, G. The Brain and Its Time: Intrinsic Neural Timescales Are Key for Input Processing. Commun. Biol. 2021, 4, 970.

- Hasson, U.; Chen, J.; Honey, C.J. Hierarchical Process Memory: Memory as an Integral Component of Information Processing. Trends Cogn. Sci. 2015, 19, 304–313.

- Northoff, G.; Klar, P.; Bein, M.; Safron, A. As without, so within: How the Brain’s Temporo-Spatial Alignment to the Environment Shapes Consciousness. Interface Focus 2023, 13, 20220076.

- Simoncelli, E.P.; Olshausen, B.A. Natural Image Statistics and Neural Representation. Annu. Rev. Neurosci. 2001, 24, 1193–1216.

- Sterling, P.; Laughlin, S. Principles of Neural Design; The MIT Press: Cambridge, MA, USA, 2015; ISBN 978-0-262-02870-7.

- Wolff, A.; Berberian, N.; Golesorkhi, M.; Gomez-Pilar, J.; Zilio, F.; Northoff, G. Intrinsic Neural Timescales: Temporal Integration and Segregation. Trends Cogn. Sci. 2022, 26, 159–173.

- Honey, C.J.; Thesen, T.; Donner, T.H.; Silbert, L.J.; Carlson, C.E.; Devinsky, O.; Doyle, W.K.; Rubin, N.; Heeger, D.J.; Hasson, U. Slow Cortical Dynamics and the Accumulation of Information over Long Timescales. Neuron 2012, 76, 423–434.

- Park, K.I. Fundamentals of Probability and Stochastic Processes with Applications to Communications, 1st ed.; Springer International Publishing Imprint; Springer: Cham, Switzerland, 2018; ISBN 978-3-319-68075-0.

- Golesorkhi, M.; Gomez-Pilar, J.; Tumati, S.; Fraser, M.; Northoff, G. Temporal Hierarchy of Intrinsic Neural Timescales Converges with Spatial Core-Periphery Organization. Commun. Biol. 2021, 4, 277.

- Smith, D.; Wolff, A.; Wolman, A.; Ignaszewski, J.; Northoff, G. Temporal Continuity of Self: Long Autocorrelation Windows Mediate Self-Specificity. NeuroImage 2022, 257, 119305.

- Huang, Z.; Liu, X.; Mashour, G.A.; Hudetz, A.G. Timescales of Intrinsic BOLD Signal Dynamics and Functional Connectivity in Pharmacologic and Neuropathologic States of Unconsciousness. J. Neurosci. 2018, 38, 2304–2317.

- Zilio, F.; Gomez-Pilar, J.; Cao, S.; Zhang, J.; Zang, D.; Qi, Z.; Tan, J.; Hiromi, T.; Wu, X.; Fogel, S.; et al. Are Intrinsic Neural Timescales Related to Sensory Processing? Evidence from Abnormal Behavioral States. NeuroImage 2021, 226, 117579.

- Bandt, C.; Pompe, B. Permutation Entropy: A Natural Complexity Measure for Time Series. Phys. Rev. Lett. 2002, 88, 174102.

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Rosso, O.A.; Craig, H.; Moscato, P. Shakespeare and Other English Renaissance Authors as Characterized by Information Theory Complexity Quantifiers. Phys. A Stat. Mech. Its Appl. 2009, 388, 916–926.

- West, B.J.; Geneston, E.L.; Grigolini, P. Maximizing Information Exchange between Complex Networks. Phys. Rep. 2008, 468, 1–99.

- Soriano, M.C.; Zunino, L.; Rosso, O.A.; Fischer, I.; Mirasso, C.R. Time Scales of a Chaotic Semiconductor Laser With Optical Feedback Under the Lens of a Permutation Information Analysis. IEEE J. Quantum Electron. 2011, 47, 252–261.

- Wu, J.-G.; Wu, Z.-M.; Xia, G.-Q.; Feng, G.-Y. Evolution of Time Delay Signature of Chaos Generated in a Mutually Delay-Coupled Semiconductor Lasers System. Opt. Express 2012, 20, 1741.

- Zunino, L.; Soriano, M.C.; Fischer, I.; Rosso, O.A.; Mirasso, C.R. Permutation-Information-Theory Approach to Unveil Delay Dynamics from Time-Series Analysis. Phys. Rev. E 2010, 82, 046212.

- Kolvoort, I.R.; Wainio-Theberge, S.; Wolff, A.; Northoff, G. Temporal Integration as “Common Currency” of Brain and Self—Scale-free Activity in Resting-state EEG Correlates with Temporal Delay Effects on Self-relatedness. Hum. Brain Mapp. 2020, 41, 4355–4374.

- Sancristóbal, B.; Ferri, F.; Longtin, A.; Perrucci, M.G.; Romani, G.L.; Northoff, G. Slow Resting State Fluctuations Enhance Neuronal and Behavioral Responses to Looming Sounds. Brain Topogr. 2022, 35, 121–141.

- Buccellato, A.; Zang, D.; Zilio, F.; Gomez-Pilar, J.; Wang, Z.; Qi, Z.; Zheng, R.; Xu, Z.; Wu, X.; Bisiacchi, P.; et al. Disrupted Relationship between Intrinsic Neural Timescales and Alpha Peak Frequency during Unconscious States—A High-Density EEG Study. NeuroImage 2023, 265, 119802.

- Giacino, J.T. Disorders of Consciousness: Differential Diagnosis and Neuropathologic Features. Semin. Neurol. 1997, 17, 105–111.

- Hermann, B.; Sangaré, A.; Munoz-Musat, E.; Salah, A.B.; Perez, P.; Valente, M.; Faugeras, F.; Axelrod, V.; Demeret, S.; Marois, C.; et al. Importance, Limits and Caveats of the Use of “Disorders of Consciousness” to Theorize Consciousness. Neurosci. Conscious. 2021, 2021, niab048.

- Northoff, G.; Huang, Z. How Do the Brain’s Time and Space Mediate Consciousness and Its Different Dimensions? Temporo-Spatial Theory of Consciousness (TTC). Neurosci. Biobehav. Rev. 2017, 80, 630–645.

- Northoff, G.; Zilio, F. Temporo-Spatial Theory of Consciousness (TTC)—Bridging the Gap of Neuronal Activity and Phenomenal States. Behav. Brain Res. 2022, 424, 113788.

- Galadí, J.A.; Silva Pereira, S.; Sanz Perl, Y.; Kringelbach, M.L.; Gayte, I.; Laufs, H.; Tagliazucchi, E.; Langa, J.A.; Deco, G. Capturing the Non-Stationarity of Whole-Brain Dynamics Underlying Human Brain States. NeuroImage 2021, 244, 118551.

- Kaplan, A.Y.; Fingelkurts, A.A.; Fingelkurts, A.A.; Borisov, S.V.; Darkhovsky, B.S. Nonstationary Nature of the Brain Activity as Revealed by EEG/MEG: Methodological, Practical and Conceptual Challenges. Signal Process. 2005, 85, 2190–2212.

- Casali, A.G.; Gosseries, O.; Rosanova, M.; Boly, M.; Sarasso, S.; Casali, K.R.; Casarotto, S.; Bruno, M.-A.; Laureys, S.; Tononi, G.; et al. A Theoretically Based Index of Consciousness Independent of Sensory Processing and Behavior. Sci. Transl. Med. 2013, 5, 198ra105.

- Tononi, G. Consciousness and Complexity. Science 1998, 282, 1846–1851.

- Soriano, M.C.; Zunino, L. Time-Delay Identification Using Multiscale Ordinal Quantifiers. Entropy 2021, 23, 969.

- Unakafova, V.; Keller, K. Efficiently Measuring Complexity on the Basis of Real-World Data. Entropy 2013, 15, 4392–4415.

- Huang, X.; Shang, H.L.; Pitt, D. Permutation Entropy and Its Variants for Measuring Temporal Dependence. Aust. N. Z. J. Stat. 2022, 64, 442–477.

- Mikosch, T.; Stărică, C. Nonstationarities in Financial Time Series, the Long-Range Dependence, and the IGARCH Effects. Rev. Econ. Stat. 2004, 86, 378–390.

- Burkitt, A.N. A Review of the Integrate-and-Fire Neuron Model: I. Homogeneous Synaptic Input. Biol. Cybern. 2006, 95, 1–19.

- Gerstner, W.; Kistler, W.M. Spiking Neuron Models: Single Neurons, Populations, Plasticity, 1st ed.; Cambridge University Press: Cambridge, UK, 2002; ISBN 978-0-521-81384-6.

- Salinas, E.; Sejnowski, T.J. Integrate-and-Fire Neurons Driven by Correlated Stochastic Input. Neural Comput. 2002, 14, 2111–2155.

- Mackey, M.C.; Glass, L. Oscillation and Chaos in Physiological Control Systems. Science 1977, 197, 287–289.

- Bélair, J.; Glass, L.; An Der Heiden, U.; Milton, J. Dynamical Disease: Identification, Temporal Aspects and Treatment Strategies of Human Illness. Chaos 1995, 5, 1–7.

- Giacino, J.T.; Kalmar, K.; Whyte, J. The JFK Coma Recovery Scale-Revised: Measurement Characteristics and Diagnostic Utility. Arch. Phys. Med. Rehabil. 2004, 85, 2020–2029.

- Teasdale, G.; Jennett, B. Assessment of Coma and Impaired Consciousness. Lancet 1974, 304, 81–84.

- Delorme, A.; Makeig, S. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Fisher, R.A. Statistical Methods for Research Workers. In Breakthroughs in Statistics; Kotz, S., Johnson, N.L., Eds.; Springer Series in Statistics; Springer: New York, NY, USA, 1992; pp. 66–70. ISBN 978-0-387-94039-7.

- Walter, N.; Hinterberger, T. Determining States of Consciousness in the Electroencephalogram Based on Spectral, Complexity, and Criticality Features. Neurosci. Conscious. 2022, 2022, niac008.

- Barlow, H.B. Possible Principles Underlying the Transformations of Sensory Messages. In Sensory Communication; Rosenblith, W.A., Ed.; The MIT Press: Cambridge, MA, USA, 2012; pp. 216–234. ISBN 978-0-262-51842-0.

- Northoff, G.; Wainio-Theberge, S.; Evers, K. Is Temporo-Spatial Dynamics the “Common Currency” of Brain and Mind? In Quest of “Spatiotemporal Neuroscience”. Phys. Life Rev. 2020, 33, 34–54.

- Kreuzer, M.; Kochs, E.F.; Schneider, G.; Jordan, D. Non-Stationarity of EEG during Wakefulness and Anaesthesia: Advantages of EEG Permutation Entropy Monitoring. J. Clin. Monit. Comput. 2014, 28, 573–580.

- Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control, 5th ed.; Wiley Series in Probability and Statistics; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2016; ISBN 978-1-118-67502-1.

- Koch, C.; Massimini, M.; Boly, M.; Tononi, G. Neural Correlates of Consciousness: Progress and Problems. Nat. Rev. Neurosci. 2016, 17, 307–321.

- Mashour, G.; Roelfsema, P.; Changeux, J.P.; Dehaene, S. Conscious Processing and the Global Neuronal Workspace Hypothesis. Neuron 2020, 105, 776–798.

- Hudetz, A.G.; Liu, X.; Pillay, S. Dynamic Repertoire of Intrinsic Brain States Is Reduced in Propofol-Induced Unconsciousness. Brain Connect. 2015, 5, 10–22.

- Northoff, G.; Lamme, V. Neural Signs and Mechanisms of Consciousness: Is There a Potential Convergence of Theories of Consciousness in Sight? Neurosci. Biobehav. Rev. 2020, 118, 568–587.

- Zhang, J.; Huang, Z.; Chen, Y.; Zhang, J.; Ghinda, D.; Nikolova, Y.; Wu, J.; Xu, J.; Bai, W.; Mao, Y.; et al. Breakdown in the Temporal and Spatial Organization of Spontaneous Brain Activity during General Anesthesia. Hum. Brain Mapp. 2018, 39, 2035–2046.

- Carhart-Harris, R.L. The Entropic Brain—Revisited. Neuropharmacology 2018, 142, 167–178.

- Petelczyc, M.; Czechowski, Z. Effect of Nonlinearity and Persistence on Multiscale Irreversibility, Non-Stationarity, and Complexity of Time Series—Case of Data Generated by the Modified Langevin Model. Chaos Interdiscip. J. Nonlinear Sci. 2023, 33, 053107.

- Bayne, T.; Hohwy, J.; Owen, A.M. Reforming the Taxonomy in Disorders of Consciousness. Ann. Neurol. 2017, 82, 866–872.

- Owen, A.M.; Coleman, M.R.; Boly, M.; Davis, M.H.; Laureys, S.; Pickard, J.D. Detecting Awareness in the Vegetative State. Science 2006, 313, 1402.

| Parameter | Value |
|---|---|
| Vrest | −70 mV |
| Vreset | −75 mV |
| Vth | −50 mV |
| Sampling rate | 10 kHz |
| Resistance | 10 MΩ |
| Decay time constant | 10 ms |
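Assuming the table lists the parameters of the integrate-and-fire model used for the simulated series, a leaky integrate-and-fire neuron with these values can be sketched as below. The forward-Euler update, the constant input currents, and the function name `simulate_lif` are illustrative assumptions and are not taken from the study.

```python
import numpy as np

# Values from the parameter table, converted to SI units.
V_REST, V_RESET, V_TH = -70e-3, -75e-3, -50e-3   # volts
FS = 10_000.0                                     # sampling rate, Hz
R_M = 10e6                                        # resistance, ohms
TAU_M = 10e-3                                     # decay time constant, seconds

def simulate_lif(current):
    """Forward-Euler leaky integrate-and-fire; `current` holds one value (amperes) per sample."""
    dt = 1.0 / FS
    v = np.empty(len(current))
    spikes = []
    v_now = V_REST
    for i, i_in in enumerate(current):
        # tau * dV/dt = -(V - V_rest) + R * I
        v_now += dt / TAU_M * (-(v_now - V_REST) + R_M * i_in)
        if v_now >= V_TH:            # threshold crossing: record spike, then reset
            spikes.append(i)
            v_now = V_RESET
        v[i] = v_now
    return v, np.array(spikes, dtype=int)

n_samples = int(FS)                                   # one second of simulated time
# Assumed inputs: R * I must exceed V_th - V_rest = 20 mV, i.e. I > 2 nA, to fire.
v_sub, spikes_sub = simulate_lif(np.full(n_samples, 1.5e-9))
v_supra, spikes_supra = simulate_lif(np.full(n_samples, 2.5e-9))
print(spikes_sub.size, spikes_supra.size)
```

With these values the membrane settles at Vrest + R·I, so a constant 1.5 nA input stays below threshold while 2.5 nA produces regular firing; a noisy input current would be needed to obtain irregular series.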


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Buccellato, A.; Çatal, Y.; Bisiacchi, P.; Zang, D.; Zilio, F.; Wang, Z.; Qi, Z.; Zheng, R.; Xu, Z.; Wu, X.; Del Felice, A.; Mao, Y.; Northoff, G. Probing Intrinsic Neural Timescales in EEG with an Information-Theory Inspired Approach: Permutation Entropy Time Delay Estimation (PE-TD). Entropy 2023, 25, 1086. https://doi.org/10.3390/e25071086