EnRDeA U-Net Deep Learning of Semantic Segmentation on Intricate Noise Roads

Abstract

1. Introduction

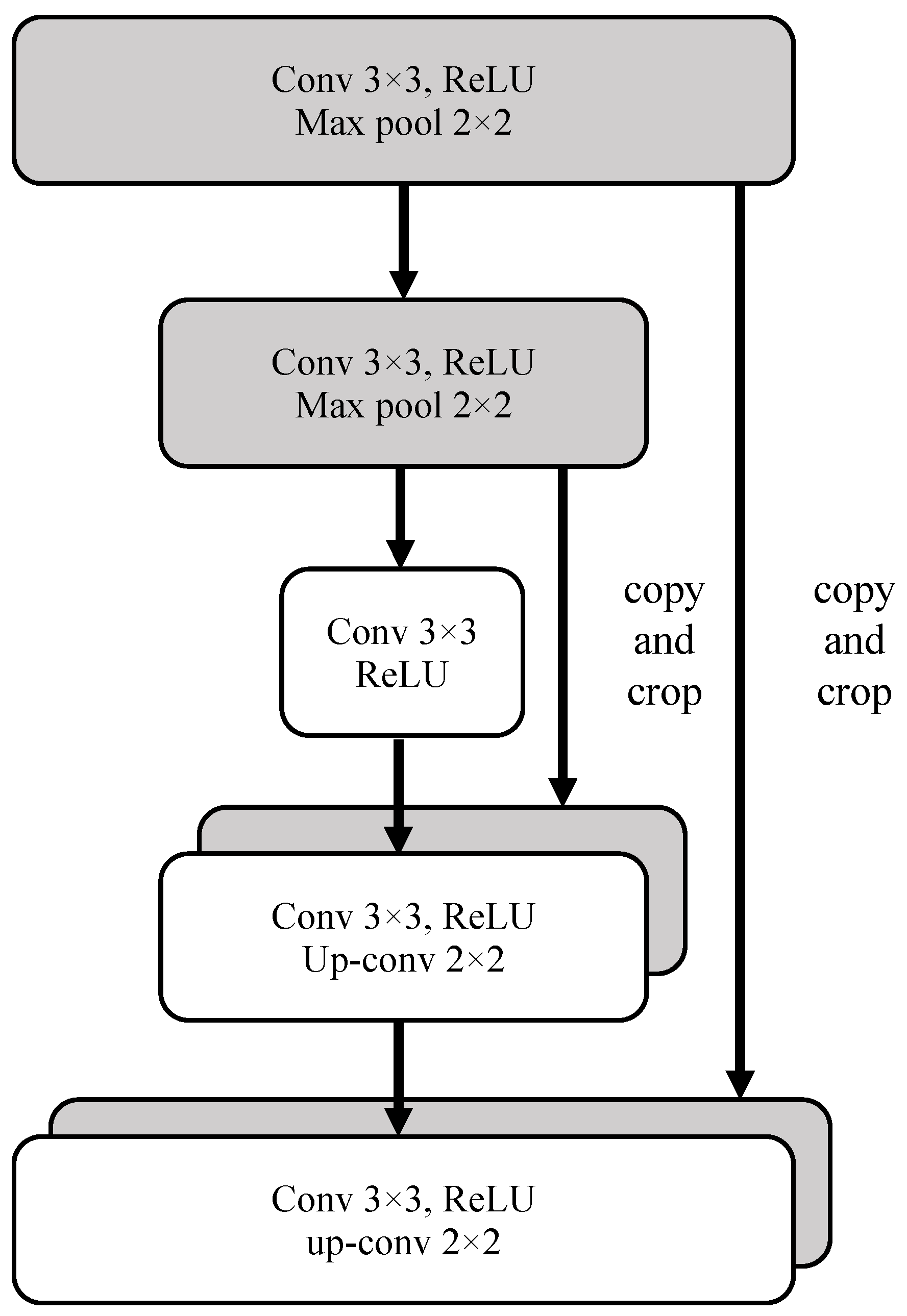

2. EnRDeA U-Net Framework

2.1. Embedded Residual U-Net with Attention Module

2.2. Encoder and Decoder

2.2.1. Encoder

2.2.2. Decoder

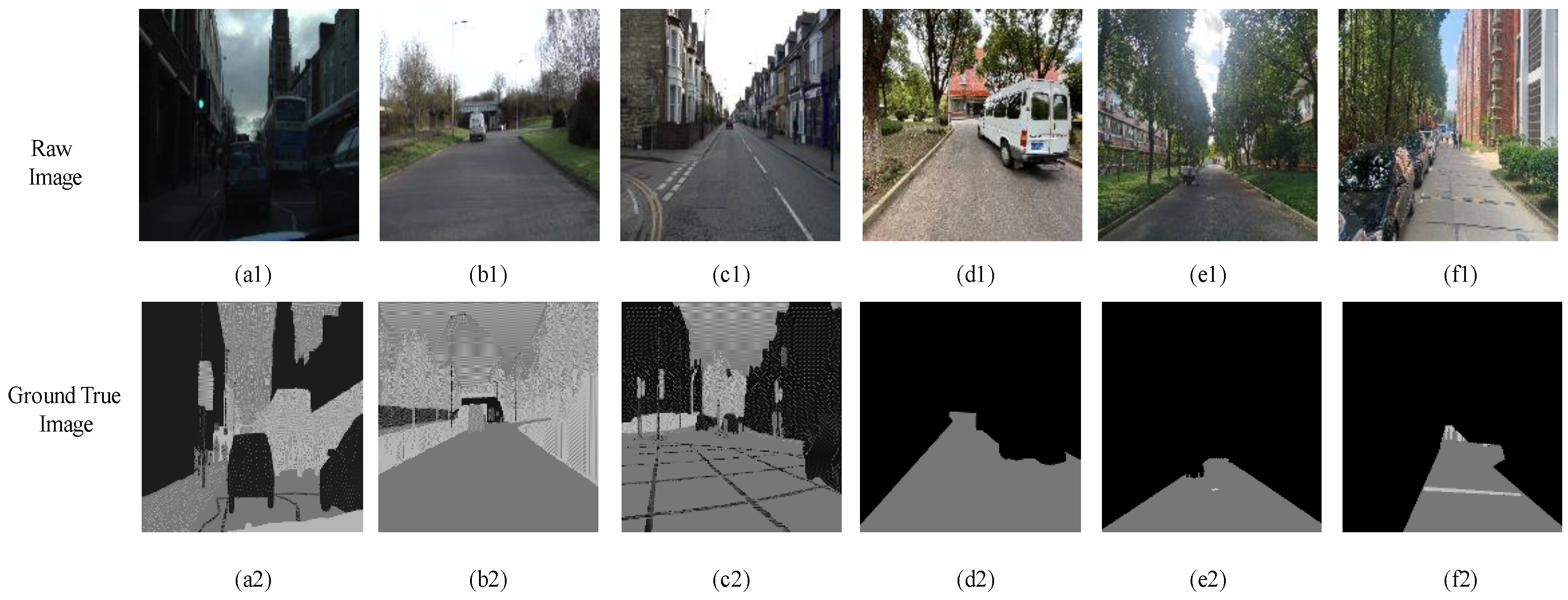

3. Dataset Analysis

3.1. Intricate Road Characteristics

3.2. Three Categories of Validated Datasets

- In fully cloudy conditions, pixels along the edge between road and grass had lower grayscale values than in full-sunshine conditions. This made the status of the distant road ambiguous and caused segmentation to fail there.

- In full-sunshine conditions, road pixels showed, on average, a higher grayscale contrast with non-road areas, particularly where the road ran beneath large trees and the sunlight was partially shadowed into spots. In such cases, grayscale values in sunlit areas were clearly higher than in shadowed regions. Intense sunlight also blurred the texture of the road surface, and the distant road appeared as bright, high-intensity regions; both effects make road segmentation harder.

- Many complex symbols and markings on the road surface increased the difficulty of road segmentation. For example, long-term intense sunshine had caused many cracks in the road. Traffic markings such as white arrows and lines, zebra crossings, black manhole covers, and speed bumps posed further challenges.
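The grayscale observations in the list above can be checked on a labelled image by comparing mean grayscale values per region. The following is a minimal sketch, not the authors' analysis code: the function names, the tiny sample image, and the mask labels (0 = non-road, 1 = sunlit road, 2 = shadowed road) are all hypothetical, and the grayscale conversion assumes the common ITU-R BT.601 luma weights.

```python
def to_gray(rgb):
    """Convert one 8-bit RGB pixel to grayscale (assumed BT.601 luma weights)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def mean_gray(image, mask, label):
    """Mean grayscale over all pixels whose mask value equals `label`."""
    values = [
        to_gray(pixel)
        for image_row, mask_row in zip(image, mask)
        for pixel, m in zip(image_row, mask_row)
        if m == label
    ]
    return sum(values) / len(values) if values else float("nan")


# Hypothetical 2x3 image: columns are sunlit road, shadowed road, grass.
image = [
    [(200, 200, 200), (80, 80, 80), (40, 120, 40)],
    [(210, 210, 210), (70, 70, 70), (50, 130, 50)],
]
# Hypothetical labels: 0 = non-road, 1 = sunlit road, 2 = shadowed road.
mask = [
    [1, 2, 0],
    [1, 2, 0],
]

sunlit = mean_gray(image, mask, 1)
shadowed = mean_gray(image, mask, 2)
print(f"sunlit road: {sunlit:.1f}, shadowed road: {shadowed:.1f}")
```

A large gap between the two means within the same road class is one simple indicator of the sunlight and shadow pattern described for roads beneath trees.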

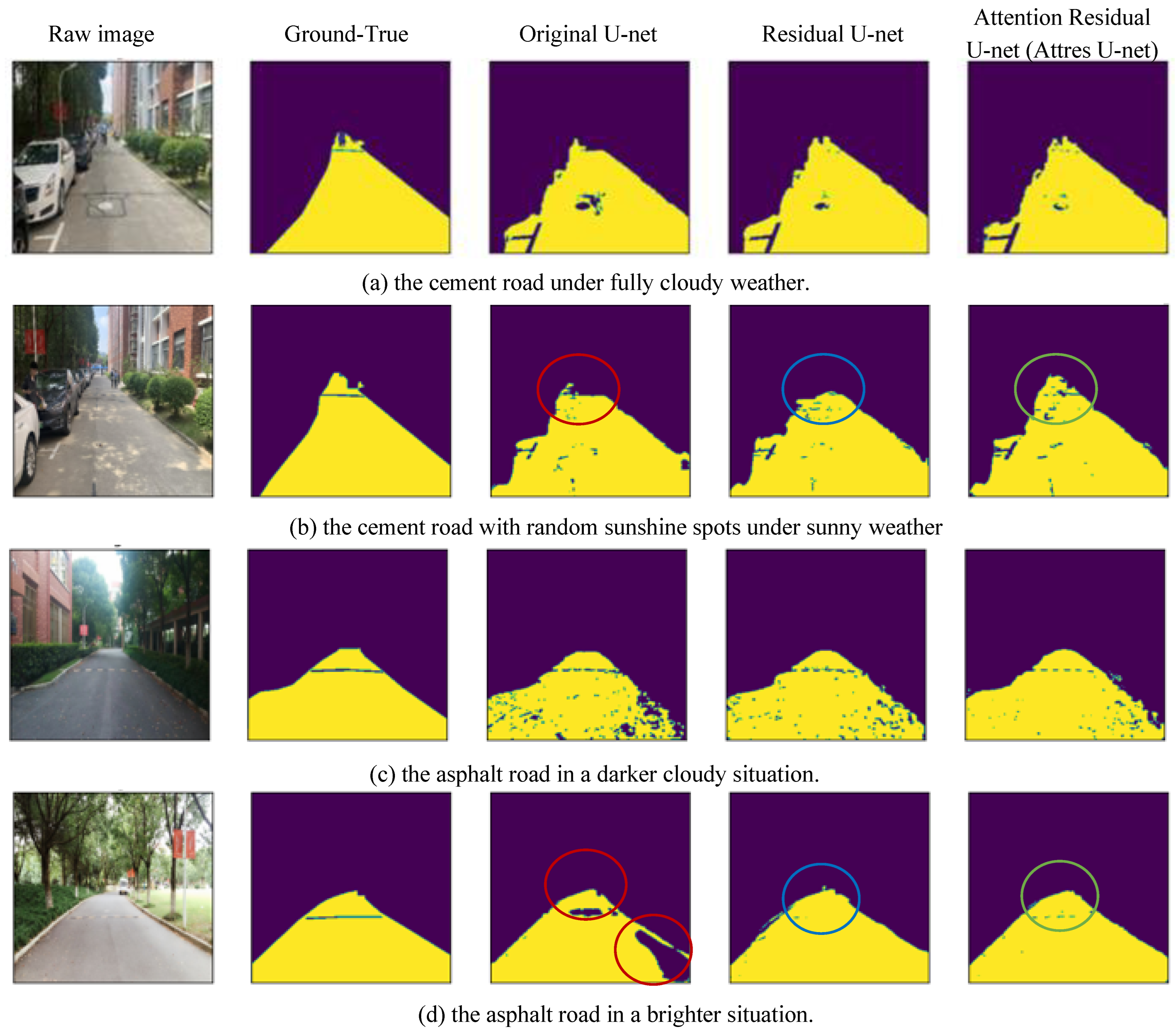

3.3. U-Net Extensions Comparison

4. Experimental Setup and Results

4.1. Training Dataset and Data Enhancement

4.2. Segmentation Results and Analysis

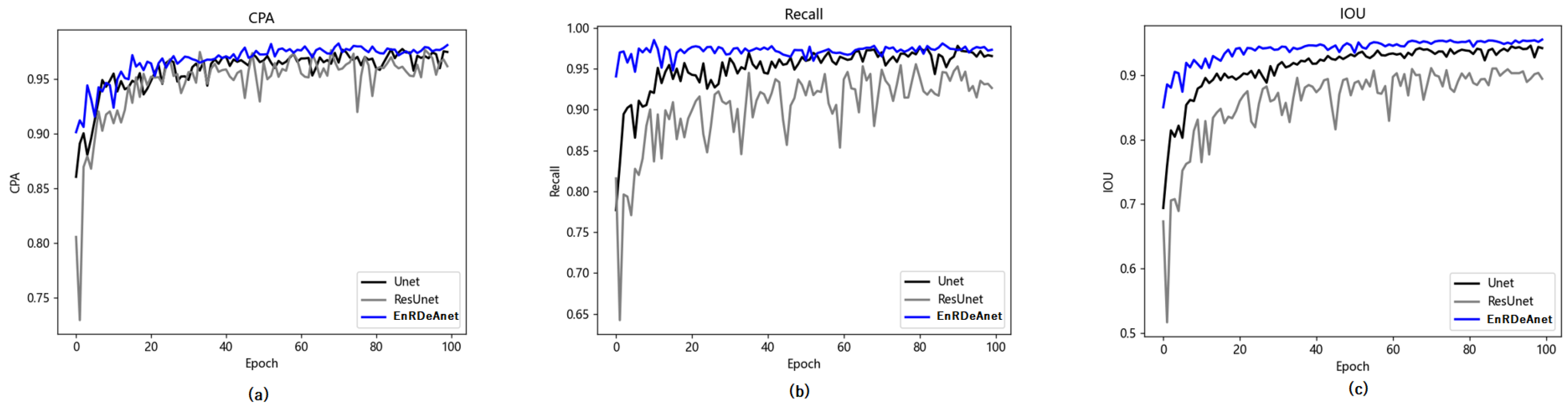

4.3. Index Evaluation

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Net Versions | U-Net | Residual U-Net | Attention U-Net | EnRDeA U-Net |
|---|---|---|---|---|
| Net Structure | Encoder-Decoder | Encoder with Residual Block | Decoder with Attention Gate | Pairwise Encoder/Decoder with Residual Block and Attention Gate |
| Number of Parameters | 34.53 M | 48.53 M | 34.88 M | 52.02 M |
| Characteristic Performance | Original Method, Lower Training Cost | Better Segmentation Efficiency, Higher Training Cost | Noise Reduction, Lower Training Cost | Superior Segmentation Efficiency, Fair Training Cost |
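The two building blocks named in the table, the residual block and the attention gate, can be illustrated at the level of a single pixel, where a 1x1 convolution reduces to a matrix-vector product. The sketch below is an assumption-laden illustration rather than the EnRDeA U-Net implementation: it uses the generic residual form y = x + F(x) and the additive attention gate in the style of Attention U-Net, omits biases and normalization, and assumes the skip feature `x` and gating signal `g` are already at the same resolution. All function and parameter names are hypothetical.

```python
import math


def matvec(weights, vec):
    """Apply a 1x1 convolution at one pixel: a plain matrix-vector product."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def relu(vec):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in vec]


def sigmoid(x):
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def residual_unit(x, w1, w2):
    """y = x + W2 * relu(W1 * x): identity shortcut plus a learned branch."""
    branch = matvec(w2, relu(matvec(w1, x)))
    return [xi + bi for xi, bi in zip(x, branch)]


def attention_gate(x, g, w_x, w_g, psi):
    """Additive gate: alpha = sigmoid(psi . relu(W_x x + W_g g)); output is alpha * x."""
    summed = [a + b for a, b in zip(matvec(w_x, x), matvec(w_g, g))]
    hidden = relu(summed)
    alpha = sigmoid(sum(p * h for p, h in zip(psi, hidden)))
    return alpha, [alpha * xi for xi in x]


# Hypothetical two-channel features and weights, chosen only for illustration.
identity = [[1.0, 0.0], [0.0, 1.0]]
half = [[0.5, 0.0], [0.0, 0.5]]

res_out = residual_unit([1.0, -2.0], identity, half)
alpha, gated = attention_gate([1.0, 2.0], [0.5, 0.5], identity, identity, [0.0, 0.0])
print(res_out, alpha, gated)
```

The shortcut in `residual_unit` lets the input pass through unchanged when the branch contributes nothing, while the scalar `alpha` in `attention_gate` scales the skip feature down where the gating signal marks a region as less relevant.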

| U-Net Model Name | PA | CPA | MIoU |
|---|---|---|---|
| U-Net | 95.45% | 94.56% | 89.56% |
| Residual U-Net | 96.04% | 95.14% | 90.60% |
| EnRDeA U-Net | 96.68% | 95.48% | 91.77% |
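Metrics of this kind are usually derived from a confusion matrix over pixel labels. The sketch below shows one common set of definitions and is not taken from the paper: it assumes PA is the fraction of correctly labelled pixels, CPA for a class is the fraction of that class's ground-truth pixels labelled correctly, and MIoU is the mean over classes of intersection over union. The exact definitions behind the table, and which class the single CPA figure refers to, are not stated in this section. All function names and the sample labels are hypothetical.

```python
def confusion_matrix(truth, pred, num_classes):
    """cm[t][p] counts pixels with ground-truth class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, pred):
        cm[t][p] += 1
    return cm


def pixel_accuracy(cm):
    """PA: correctly labelled pixels divided by all pixels."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total if total else float("nan")


def class_pixel_accuracy(cm):
    """CPA per class (assumed definition): correct pixels / ground-truth pixels."""
    return [
        cm[i][i] / sum(cm[i]) if sum(cm[i]) else float("nan")
        for i in range(len(cm))
    ]


def mean_iou(cm):
    """MIoU: mean over classes of TP / (TP + FP + FN), skipping absent classes."""
    n = len(cm)
    ious = []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp
        fp = sum(cm[j][i] for j in range(n)) - tp
        if tp + fp + fn:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious) if ious else float("nan")


# Hypothetical flattened labels: 0 = non-road, 1 = road.
truth = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
pred = [0, 0, 0, 1, 1, 1, 1, 1, 1, 0]

cm = confusion_matrix(truth, pred, num_classes=2)
pa = pixel_accuracy(cm)
cpa = class_pixel_accuracy(cm)
miou = mean_iou(cm)
print(f"PA={pa:.4f}, CPA={[round(c, 4) for c in cpa]}, MIoU={miou:.4f}")
```

Because IoU penalizes both false positives and false negatives for each class, MIoU is typically lower than PA on the same predictions, which matches the ordering of the columns in the table.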

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, X.; Kuan, T.-W.; Tseng, S.-P.; Chen, Y.; Chen, S.; Wang, J.-F.; Gu, Y.; Chen, T. EnRDeA U-Net Deep Learning of Semantic Segmentation on Intricate Noise Roads. Entropy 2023, 25, 1085. https://doi.org/10.3390/e25071085
