Abstract

A new trend in deep learning, represented by Mutual Information Neural Estimation (MINE) and Information Noise Contrastive Estimation (InfoNCE), is emerging. In this trend, similarity functions and Estimated Mutual Information (EMI) are used as learning and objective functions. Coincidentally, EMI is essentially the same as Semantic Mutual Information (SeMI) proposed by the author 30 years ago. This paper first reviews the evolutionary histories of semantic information measures and learning functions. Then, it briefly introduces the author’s semantic information G theory with the rate-fidelity function R(G) (G denotes SeMI, and R(G) extends R(D)) and its applications to multi-label learning, maximum Mutual Information (MI) classification, and mixture models. Then it discusses how we should understand the relationship between SeMI and Shannon’s MI, two generalized entropies (fuzzy entropy and coverage entropy), Autoencoders, Gibbs distributions, and partition functions from the perspective of the R(G) function or the G theory. An important conclusion is that mixture models and Restricted Boltzmann Machines converge because SeMI is maximized, and Shannon’s MI is minimized, making information efficiency G/R close to 1. A potential opportunity is to simplify deep learning by using Gaussian channel mixture models for pre-training deep neural networks’ latent layers without considering gradients. It also discusses how the SeMI measure is used as the reward function (reflecting purposiveness) for reinforcement learning. The G theory helps interpret deep learning but is far from enough. Combining semantic information theory and deep learning will accelerate their development.

1. Introduction

Information-Theoretic Learning (ITL) has been used for a long time. The primary method is to use the likelihood function as the learning function, bring it into the cross-entropy, and then use the minimum cross-entropy or the minimum Kullback–Leibler (KL) divergence criterion to optimize model parameters. However, a new trend, represented by Mutual Information Neural Estimation (MINE) [1] and Information Noise Contrastive Estimation (InfoNCE) [2], is emerging in the field of deep learning. In this trend, researchers use similarity functions to construct parameterized mutual information (we call it Estimated Mutual Information (EMI)) that approximates Shannon’s Mutual Information (ShMI) [3], and then optimize the Deep Neural Network (DNN) by maximizing EMI. The Abbreviations section lists all abbreviations with their full forms.

In January 2018, Belghazi et al. [1] published “MINE: Mutual Information Neural Estimation”, which is the first article that uses the similarity function to construct EMI. In July 2018, Oord et al. [2] published “Representation Learning with Contrastive Predictive Coding”, proposing Information Noise Contrastive Estimation (InfoNCE). This paper explicitly proposes that the similarity function is proportional to P(x|yj)/P(x) for expressing EMI. MINE and InfoNCE have achieved notable success and encouraged others. In 2019, Hjelm et al. [4,5] proposed Deep InfoMax (DIM) based on MINE. They combine DIM and InfoNCE (see Equation (5) in [4]) to achieve better results (they believe that InfoNCE was put forward independently of MINE). In 2020, Chen et al. proposed SimCLR [6], He et al. proposed MoCo [7], and Grill et al. presented BYOL [8], all of which show strong learning ability. All of them use similarity functions to construct the EMI or a loss function similar to that used for InfoNCE.

On the other hand, in response to the call from Weaver in 1949 [9], many researchers have been studying semantic information theory. The trend of researching semantic communication and semantic information theory is also gradually growing. One reason is the demand of the next-generation Internet for semantic communication [10,11]. We need to use similarity or semantics instead of the Shannon channel to make probability predictions and compress data. Another reason is that semantic information theory has also made significant progress in machine learning. In particular, the author (of this paper) proposed a simple method of obtaining truth or similarity functions from sampling distributions in 2017 [12] and provided a group of channel matching algorithms for multi-label learning, the maximum mutual information classification of unseen instances, and mixture models [12,13].

Coincidentally, the EMI measure is essentially the same as the semantic information measure proposed by the author 30 years ago. In 1990, the author proposed using truth (or similarity) functions to construct semantic and estimated information measures [14]. In 1993, he published the monograph “A Generalized Information Theory” (in Chinese) [15]. In 1999, he improved this theory and introduced it in an English journal [16]. Since 2017, the author has adopted the P-T probability framework with model parameters for this theory’s applications to machine learning [12,13,17].

The semantic information theory introduced in [14,15] is called the semantic information G theory or the G theory for short (G means generalization). The semantic information measure is called the G measure. In the G theory, the information rate-distortion function R(D) is extended to the R(G) function. In R(G), R is the lower limit of ShMI for a given G, and G is the upper limit of SeMI for a given R. The R(G) function can reveal the relationship between SeMI and ShMI for understanding deep learning. Its applications to machine learning can be found in [12,13,18]. The main difference between semantic information and Shannon’s information is that the former involves truth and falsehood, as discussed by Floridi [19]. According to Tarski’s semantic conception of truth [20] and Davidson’s truth-conditional semantics [21], the truth function of a hypothesis or label determines its semantic meaning (See Appendix A for details).

To measure the semantic information conveyed by a label yj (a constant) about an instance x (a variable), we need the (fuzzy) truth function (where x makes yj true). Generally, x and yj belong to different domains. If x and yj belong to the same domain, yj becomes an estimate, i.e., yj = x̂j = “x is about xj”. In this case, semantic information becomes estimated information. For example, a GPS pointer means an estimate yj = x̂j (see Section 3.1 for details); it conveys estimated information. Since it may be wrong, the information is also semantic information. Therefore, we can say that estimated information is a special case of semantic information; this paper also regards estimated information as semantic information and the learning method of using EMI as the semantic ITL method.

Although the method of using estimated information in deep learning is very successful, there are still many problems. Firstly, some essential functions and entropy or information measures have no unified names, such as the function m(x, y) = P(x, y)/[P(x)P(y)] and the generalized entropies related to EMI. It is particularly worth mentioning that EMI, as the protagonist, does not have its own name. It is often called the lower bound of mutual information. On the other hand, the same name may represent very different functions. For example, the semantic similarity [22] may be expressed with exp[−d(x, y)] between 0 and 1 or with −log P(c) [23] between 0 and ∞. To exchange ideas conveniently, we should unify the names of various functions and entropy and information measures.

Secondly, there are some essential questions that the researchers of deep learning cannot answer. The questions include:

- How are EMI and ShMI related in supervised, semi-supervised, and unsupervised learning?

- Is similarity probability? If it is, why is it not normalized? If it is not, why can we bring it into Bayes’ formula (see Equation (9))?

- Can we get similarity functions, distortion functions, truth functions, or membership functions directly from samples or sampling distributions?

However, the G theory can answer these questions clearly.

Thirdly, due to the unclear understanding of the relationship between EMI and ShMI, many deep learning researchers [4,24] believe that maximizing ShMI is a good objective; since EMI is the lower bound of ShMI, we can maximize ShMI by maximizing EMI. However, Tschannen et al. [25] think that maximizing ShMI is not enough or not good in some cases; there are other reasons for the recent success of deep learning. The author thinks that their questioning deserves attention. From the G theory perspective, EMI is similar to the utility, and ShMI is like the cost; our purpose is to maximize EMI. In some cases, both need maximization; while in other cases, to improve communication efficiency, we need to reduce ShMI.

Tishby et al. [26,27] use the information bottleneck to interpret deep learning. According to their theory, for a neural network with the structure X → T → Y, we need to maximize I(Y; T) − βI(X; T) [26] or minimize I(X; T) − βI(Y; T), where β is a Lagrange multiplier that controls the trade-off between maximizing I(Y; T) and minimizing I(X; T). This interpretation involves the rate-distortion function R(D) and is very inspiring. However, in this theory, EMI (or parameterized mutual information) and ShMI are not well distinguished, ignoring that making them close to each other is an important task. Therefore, this paper (Section 5.2) will provide a competitive interpretation using the R(G) function.

Despite various problems in deep learning, the Deep Neural Network (DNN) has a strong feature-extraction ability; combined with the EMI measure, it provides many convincing applications. In contrast, applying the G theory to machine learning is relatively simple without combining DNNs. From the practical viewpoint, the G theory’s applications lack persuasiveness. Information theories (including the G theory) are also insufficient to explain many ingenious methods in deep learning.

Now is the time to merge the two trends and let them learn from each other!

There are many generalized entropies. However, the generalized entropies related to the G measure keep the structure of the Shannon entropy. Only the function on the right of the log is changed. In addition to the cross-entropy method for classical ITL [28,29], researchers have also proposed other generalized entropies, such as Rényi Entropy [30,31], Tsallis Entropy [32,33], Correntropy [34], matrix-based Entropy [35], and related methods for generalized ITL [35]. Figure 1 shows the distinctions and relations between classical, generalized, and semantic ITL.

Figure 1.

The distinctions and relations between four types of learning [31,34].

The main difference between Semantic ITL and Non-ITL is that the latter uses truth, correctness, or accuracy as the evaluation criterion, whereas the former uses truthlikeness (i.e., the successful approximation to truth) [36] or its logarithm as the evaluation criterion. Truth is independent of the prior probability distribution P(x) of instance x, whereas truthlikeness is related to P(x). Two criteria should have different uses. The main difference between Semantic ITL and generalized ITL in Figure 1 is that the former only generalizes Shannon’s entropy and information with truth functions.

Although many methods use parameters or SoftMax functions to construct mutual information, they cannot be regarded as semantic ITL methods if they do not use similarity or truth functions. Of course, these entropy and information measures also have their application values, but they are not what this article is about.

In addition to the semantic information measures mentioned above, there are other semantic information measures, such as those proposed by Floridi [37] and Zhong [38]. However, the author has not found their applications to machine learning.

This paper mainly aims at:

- Reviewing the evolutionary histories of semantic information measures and learning functions;

- Clarifying the relationship between SeMI and ShMI;

- Promoting the integration and development of the G theory and deep learning.

The contents of the following sections include:

- Reviewing the evolution of semantic information measures;

- Reviewing the evolution of learning functions;

- Introducing the G theory and its applications to machine learning;

- Discussing some questions related to SeMI and ShMI maximization and deep learning;

- Discussing some new methods worth exploring and the limitation of the G theory;

- Conclusions with opportunities and challenges.

2. The Evolution of Semantic Information Measures

2.1. Using the Truth or Similarity Function to Approximate to Shannon’s Mutual Information

The ShMI formula is:

$$I(X; Y) = \sum_j \sum_i P(x_i, y_j) \log \frac{P(x_i|y_j)}{P(x_i)},$$

where xi is an instance, yj is a label, and X and Y are two random variables.
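As a quick numerical illustration of ShMI, the following minimal sketch computes it from a joint distribution table. The function name `shannon_mi` and the two toy distributions are hypothetical choices made for this example, not taken from the paper.

```python
import math

def shannon_mi(joint):
    """ShMI in bits, where joint[i][j] = P(x_i, y_j)."""
    px = [sum(row) for row in joint]          # marginal P(x_i)
    py = [sum(col) for col in zip(*joint)]    # marginal P(y_j)
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:
                # P(x_i|y_j)/P(x_i) equals P(x_i, y_j)/[P(x_i)P(y_j)]
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

# Hypothetical toy channels: independent variables and a noiseless binary channel.
independent = [[0.25, 0.25], [0.25, 0.25]]
noiseless = [[0.5, 0.0], [0.0, 0.5]]
mi_independent = shannon_mi(independent)
mi_noiseless = shannon_mi(noiseless)
```

Independent variables convey no information, whereas the noiseless binary channel conveys one bit per symbol.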

To illustrate EMI, let us look at the core part of ShMI:

where x is a variable, and yj is a constant. Deep learning researchers [1,2] found that we could use parameters to construct similarity functions and then use similarity functions to construct EMI that approximates ShMI. The main characteristic of similarity functions is:

$$S(x, y_j) \propto \frac{P(x|y_j)}{P(x)} = \frac{P(y_j|x)}{P(y_j)} = \frac{P(x, y_j)}{P(x)P(y_j)} = m(x, y_j).$$

The above formula also defines m(x, yj), an important function for which the author has not found an established name. We call m(x, yj) the relatedness function. We can also construct the distortion function d(x, yj) with parameters and use d(x, yj) to express the similarity function so that

$$S(x, y_j) = \exp[-d(x, y_j)].$$

Then we can construct estimated information:

where k denotes the sequence number of the k-th example in a sample {(xk, yk) | k = 1, 2, …, N}. EMI is expressed as:

On the other hand, information-theoretic researchers generally use sampling distribution P(x, y) to average information. Estimated information and EMI are expressed as

Note that P(xl) is added in the partition function. Nevertheless, Equations (4) and (6) are equivalent. Compared with the likelihood function P(x|θj) (where θj represents yj and related parameters), the similarity function is independent of the source P(x). After the source and the channel change, the similarity function is still proper as a predictive model. We can use the similarity function and the new source P’(x) to make a new probability prediction or produce a likelihood function:

Equations (5) and (6) fit small samples, whereas Equations (7) and (8) fit large samples. In addition, the latter can indicate the change in the source and helps clarify the relationship between SeMI and ShMI.
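The claim that the sample-based and distribution-based forms are equivalent can be checked numerically. The sketch below assumes, based on the surrounding description, that estimated information takes the form log[S(x, y)/partition], where the partition function averages S over the sample in one form and over P(x) in the other. The Gaussian-shaped `similarity` function and the toy sample are hypothetical.

```python
import math
from collections import Counter

def similarity(x, y, sigma=1.0):
    # Hypothetical similarity exp[-d(x, y)] with a squared-distance distortion.
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))

# Hypothetical sample of (x_k, y_k) examples; x and y share one domain here.
sample = [(0, 0), (1, 0), (1, 1), (2, 2), (2, 2), (3, 2), (0, 1), (3, 3)]
n = len(sample)

# Small-sample form: average over the examples themselves.
emi_from_sample = 0.0
for x_k, y_k in sample:
    partition = sum(similarity(x_l, y_k) for x_l, _ in sample) / n
    emi_from_sample += math.log(similarity(x_k, y_k) / partition) / n

# Large-sample form: average with the sampling distribution P(x, y),
# with P(x_l) appearing inside the partition function.
joint_counts = Counter(sample)
x_counts = Counter(x for x, _ in sample)
emi_from_distribution = 0.0
for (x, y), count in joint_counts.items():
    partition = sum((c / n) * similarity(x_l, y) for x_l, c in x_counts.items())
    emi_from_distribution += (count / n) * math.log(similarity(x, y) / partition)
```

Under these assumptions, both forms give the same value when P(x) and P(x, y) are the empirical frequencies of the sample.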

2.2. Historical Events Related to Semantic Information Measures

The author lists major historical events related to semantic information measures to the best of his knowledge.

In 1935, Popper put forward in the book “The Logic of Scientific Discovery” [39] (p. 96) that the smaller the logical probability of a scientific hypothesis, the greater the amount of (semantic) information if it can stand the test. We can say that Popper is the earliest researcher of semantic information [19]. Later, he proposed a logical probability axiom system. He emphasized that two kinds of probabilities, statistical and logical, exist at the same time [39] (pp. 252–258). However, he did not establish a probability system that includes both.

In 1948, Shannon [3] published his famous paper: A mathematical theory of communication. Shannon’s information theory provides a powerful guiding ideology for optimizing communication codes. However, Shannon only uses statistical probability based on the frequency interpretation. In the above paper, he also proposed the information rate-fidelity function. It was later renamed as the information rate-distortion function, i.e., R(D) (D is the upper limit of average distortion, and R is the Minimum Mutual Information (MinMI)).

In 1949, Shannon and Weaver [9] jointly published a book: “The Mathematical Theory of Communication”, which contains Shannon’s famous article and Weaver’s article: “Recent Contributions to The Mathematical Theory of Communication”. In Weaver’s article, he put forward the three levels of communication:

“Level A. How accurately can the symbols of communication be transmitted?”

“Level B. How precisely do the transmitted symbols convey the desired meaning?”

“Level C. How effectively does the received meaning affect conduct in the desired way?”

Level A only involves Shannon information; Level B is related to both Shannon and semantic information; Level C is also associated with information values.

In the last century, many people rejected the study of semantic information. Their main reason was that Shannon said [9] (p. 3):

“Frequently, the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem.”

Shannon only limited the application scope of his theory without opposing the study of semantic information. How could he agree with Weaver to jointly publish the book if he objected? In the last ten years, with the developments of artificial intelligence and the Internet, the requirement for semantic communication has become increasingly urgent, and more and more scholars have begun to study semantic communication [10,11], including semantic information theory.

In 1951, Kullback and Leibler [40] proposed Kullback–Leibler (KL) divergence, also known as KL information or relative entropy:

$$I_{KL} = \sum_i P(x_i) \log \frac{P(x_i)}{Q(x_i)}.$$

KL information can be regarded as a special case of ShMI as Y = yj, whereas ShMI can also be seen as the KL information between two distributions: P(x, y) and P(x)P(y).
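The two views of ShMI described above can be compared numerically. This is a minimal sketch with a hypothetical 2×2 joint distribution; the helper name `kl_information` is an assumption of this example.

```python
import math

def kl_information(p, q):
    """KL information in bits between two distributions on the same support."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical joint distribution, joint[i][j] = P(x_i, y_j).
joint = [[0.3, 0.1], [0.1, 0.5]]
px = [sum(row) for row in joint]
py = [sum(col) for col in zip(*joint)]

# View 1: ShMI as the KL information between P(x, y) and P(x)P(y).
flat_joint = [joint[i][j] for i in range(2) for j in range(2)]
flat_product = [px[i] * py[j] for i in range(2) for j in range(2)]
mi_as_kl = kl_information(flat_joint, flat_product)

# View 2: ShMI as the average over y_j of the KL information
# between P(x|y_j) and P(x).
mi_as_average = sum(
    py[j] * kl_information([joint[i][j] / py[j] for i in range(2)], px)
    for j in range(2)
)
```

Both computations return the same quantity, which is why KL information with Y = yj can be read as the per-label component of ShMI.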

Since Shannon’s information measure cannot measure semantic information, in 1952, Carnap and Bar-Hillel [41] proposed a semantic information formula:

$$I_{CB} = \log(1/m_p),$$

where mp is a proposition’s logical probability. This formula partly reflects Popper’s idea that the smaller the logical probability, the greater the amount of semantic information. However, ICB does not indicate whether the hypothesis can stand the test. Therefore, this formula is not practical. In addition, the logical probability mp is independent of the prior probability distribution of the instance, which is also unreasonable.

In 1957, Shepard [42] proposed using distance to express similarity: S(x, y) = exp[−d(x, y)].

In 1959, Shannon [43] provided the solution for a binary memoryless source’s R(D) function. He also deduced the parametric solution of R(D) of a general memoryless source:

where dij = d(xi, yj), s ≤ 0, and Zi is the partition function. In the author’s opinion, exp(sdij) is a truth function, and this MinMI can be regarded as SeMI [18].
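For readers who want to see a parametric R(D) point computed, the sketch below uses the standard Blahut–Arimoto style alternating iteration. This is a technique swapped in for illustration, not necessarily the derivation in [43]. For a fixed s ≤ 0 it alternates between the channel P(yj|xi) = P(yj)exp(s·dij)/Zi and the destination distribution P(yj). The function name, iteration count, and the binary example are assumptions.

```python
import math

def rate_distortion_point(px, d, s, iterations=200):
    """Return (D, R) in nats for slope parameter s <= 0.

    px[i] = P(x_i); d[i][j] = d(x_i, y_j).
    """
    n_x, n_y = len(px), len(d[0])
    py = [1.0 / n_y] * n_y  # initial guess for P(y_j)
    for _ in range(iterations):
        # Partition function Z_i = sum_j P(y_j) exp(s * d_ij)
        z = [sum(py[j] * math.exp(s * d[i][j]) for j in range(n_y))
             for i in range(n_x)]
        # Channel P(y_j|x_i) = P(y_j) exp(s * d_ij) / Z_i
        channel = [[py[j] * math.exp(s * d[i][j]) / z[i] for j in range(n_y)]
                   for i in range(n_x)]
        # Destination P(y_j) = sum_i P(x_i) P(y_j|x_i)
        py = [sum(px[i] * channel[i][j] for i in range(n_x))
              for j in range(n_y)]
    distortion = sum(px[i] * channel[i][j] * d[i][j]
                     for i in range(n_x) for j in range(n_y))
    rate = sum(px[i] * channel[i][j] * math.log(channel[i][j] / py[j])
               for i in range(n_x) for j in range(n_y) if channel[i][j] > 0)
    return distortion, rate

# Binary equiprobable source with Hamming distortion (a textbook case).
hamming = [[0.0, 1.0], [1.0, 0.0]]
distortion, rate = rate_distortion_point([0.5, 0.5], hamming, s=-2.0)
```

For this binary case the result can be compared with the known closed form R(D) = ln 2 − Hb(D) in nats, where Hb is the binary entropy function.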

In 1967, Theil [44] put forward the generalized KL information formula:

where there are three functions. We may regard R(x) as the prediction of P(x) and Q(x) as the prior distribution. Hence, ITheil means predictive information.

In 1965, Zadeh [45] initiated the fuzzy sets theory. The membership grade of an instance xi in a fuzzy set Aj is denoted as MAj(xi). The author of this paper explained in 1993 [15] that the membership function MAj(x) is also the truth function of the proposition function yj = yj(x) = “x is in Aj”. If we assume that there is a typical xj (that is, Plato’s idea, which may not be in Aj) that makes yj true, i.e., MAj(xj) = 1, then the membership function MAj(x) is the similarity function between x and xj.

In 1972, De Luca and Termini [46] defined fuzzy entropy with a membership function. We call it DT fuzzy entropy.

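In 1974, Akaike [47] brought model parameters into the KL information formula and proved that the maximum likelihood estimation is equivalent to the minimum KL information estimation. Although he did not explicitly use the term “cross-entropy”, the log-likelihood he uses is equal to the negative cross-entropy multiplied by the sample size N:

$$\log \prod_i P(x_i|\theta)^{N P(x_i)} = N \sum_i P(x_i) \log P(x_i|\theta) = -N H(X|\theta),$$

where P(xi) is the sampling distribution and H(X|θ) denotes the cross-entropy.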
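The relationship between the log-likelihood and the cross-entropy can be verified on a small sample. In this sketch the sample and the parameterized model `model` are hypothetical, and natural logarithms are assumed.

```python
import math
from collections import Counter

# Hypothetical sample and hypothetical model P(x|theta).
sample = ["a", "b", "a", "c", "a", "b", "a", "a"]
model = {"a": 0.5, "b": 0.3, "c": 0.2}

n = len(sample)
log_likelihood = sum(math.log(model[x]) for x in sample)

# Cross-entropy between the sampling distribution P(x) and the model.
freq = Counter(sample)
cross_entropy = -sum((c / n) * math.log(model[x]) for x, c in freq.items())

# The maximum likelihood model equals the sampling distribution itself,
# which is also the model with the minimum KL information.
mle_model = {x: c / n for x, c in freq.items()}
log_likelihood_mle = sum(math.log(mle_model[x]) for x in sample)
```

Here `log_likelihood` equals `-n * cross_entropy`, and no model in this family of discrete distributions exceeds the log-likelihood of `mle_model`.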

In 1981, Thomas [48] used an example to explain that we may bring a membership function into Bayes’ formula to make a probability prediction (see Equation (31)). According to Dubois and Prade’s paper [49], other people almost simultaneously mentioned such a formula.

In 1983, Donsker and Varadhan [50] put forward that KL information can be expressed as:

$$I_{DV} = \sup_T \left\{ \sum_i P(x_i) T(x_i) - \log \sum_i Q(x_i) \exp[T(x_i)] \right\}.$$

IDV was later called the Donsker–Varadhan representation in [1]. We also call it DV-KL information. They proposed this formula perhaps because they were inspired by the information rate-distortion function or the Gibbs (or Boltzmann–Gibbs) distribution. To understand this formula, we replace P(x) with P(x|yj) and Q(x) with P(x). Then the KL information becomes:

$$I(X; y_j) = \sup_T \left\{ \sum_i P(x_i|y_j) T(x_i) - \log \sum_i P(x_i) \exp[T(x_i)] \right\}.$$

To express KL information, we only need to find T(xi) so that exp[T(xi)]∝P(yj|x). DV-KL information was later used for MINE [1]. However, exponential or negative-exponential functions are generally symmetrical, while P(yj|x) is usually asymmetrical. Thus, it is not easy to make two information quantities equal.
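A small check of the Donsker–Varadhan representation may help here. The sketch assumes the standard form of the bound, E_P[T] − log E_Q[exp T], with hypothetical distributions standing in for P(x|yj) and P(x). It shows that the bound is attained when exp[T(x)] is proportional to P(x|yj)/P(x), which is in turn proportional to P(yj|x), and that other choices of T fall below the KL information.

```python
import math

def kl_information(p, q):
    """KL information in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def dv_objective(p, q, t):
    """Donsker-Varadhan objective: E_p[T] - log E_q[exp(T)]."""
    expected_t = sum(pi * ti for pi, ti in zip(p, t))
    log_partition = math.log(sum(qi * math.exp(ti) for qi, ti in zip(q, t)))
    return expected_t - log_partition

posterior = [0.7, 0.2, 0.1]  # hypothetical P(x|y_j)
prior = [0.2, 0.3, 0.5]      # hypothetical P(x)

kl = kl_information(posterior, prior)
optimal_t = [math.log(p / q) for p, q in zip(posterior, prior)]
shifted_t = [t + 3.0 for t in optimal_t]  # exp(T) only needs to be proportional
other_t = [1.0, 0.0, -1.0]                # an arbitrary non-optimal T

dv_optimal = dv_objective(posterior, prior, optimal_t)
dv_shifted = dv_objective(posterior, prior, shifted_t)
dv_other = dv_objective(posterior, prior, other_t)
```

Adding a constant to T leaves the objective unchanged, which reflects the fact that exp[T(x)] only needs to be proportional to the ratio, not equal to it.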

In 1983, Wang [51] proposed the statistical interpretation of the membership function by the Random Set Falling Shadow theory. In terms of machine learning, we can use a sample in which each example contains a label and some similar instances, that is, S = {(xt1, xt2, …; yt) | t = 1, 2, …, N}. The probability that xi appears in examples with label yj is then the membership grade MAj(xi). The author later [17] proved that the membership function MAj(x) is a truth function and can be obtained from a regular sample, where an example only includes one instance.

In 1986, Aczel and Forte [52] proposed a generalized entropy, which is now called cross-entropy. They proved an inequality:

with lnx ≤ x − 1. They explain that this formula also reflects Shannon’s lossless coding theorem, which means the Shannon entropy is the lower limit of the average codeword length.

In 1986, Zadeh defined the probability of fuzzy events [53], which is equal to the average of a membership function. This paper will show that this probability is the logical probability and the partition function we often use.

In 1990, the author [14] proposed using both the truth function and the logical probability to express semantic information (quantity). This measure can indicate whether a hypothesis can stand the test and overcome the shortcoming of Carnap–Bar-Hillel’s semantic information measure. The author later called this measure the semantic information G measure to distinguish it from other semantic information measures. The formula is:

$$I_{Lu}(x_i; y_j) = \log \frac{Q(A_j|x_i)}{Q(A_j)},$$

where Aj is a fuzzy set, yj = “x belongs to Aj” is a hypothesis, and Q(Aj|x) is the membership function of Aj and the (fuzzy) truth function of yj. Q(Aj) is the logical probability of yj, which Zadeh [53] called the probability of fuzzy events. If Q(Aj|x) = 1 always holds, the above semantic information formula becomes Carnap–Bar-Hillel’s semantic information formula.
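To make the behaviour of the G measure concrete, the sketch below assumes the form log[Q(Aj|x)/Q(Aj)] described above, with the logical probability Q(Aj) computed as the average of the truth function over P(x). The prior and truth values are hypothetical.

```python
import math

def logical_probability(prior, truth):
    """Q(A_j) = sum_i P(x_i) Q(A_j|x_i): the average of the truth function."""
    return sum(p * t for p, t in zip(prior, truth))

def semantic_information(prior, truth, i):
    """log2[Q(A_j|x_i) / Q(A_j)] in bits; -inf when the truth value is 0."""
    if truth[i] == 0:
        return float("-inf")
    return math.log2(truth[i] / logical_probability(prior, truth))

# Hypothetical prior P(x) and fuzzy truth function of a hypothesis y_j.
prior = [0.25, 0.25, 0.25, 0.25]
truth = [1.0, 0.5, 0.1, 0.1]

q_aj = logical_probability(prior, truth)
info_when_true = semantic_information(prior, truth, 0)        # truth value 1
info_when_nearly_false = semantic_information(prior, truth, 2)  # truth value 0.1
carnap_bar_hillel = math.log2(1.0 / q_aj)
```

When the hypothesis is fully true for the instance, the value coincides with the Carnap–Bar-Hillel quantity. When the truth value falls below the logical probability, the information becomes negative, which is how the measure reflects a hypothesis failing the test.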

In fact, the author first wanted to measure the information of color perceptions. He had earlier proposed the decoding model of color vision [54,55]. This model has three pairs of opponent colors: red-green, blue-yellow, and green-magenta, whereas, in the famous zone model of color vision, there are only two pairs: red-green and yellow-blue. To defend the decoding model by showing that higher discrimination can convey more information, he tried measuring the information of color perceptions. For this reason, he regarded a color perception as the estimate of a color, i.e., yj = x̂j, and employed the Gaussian function as the discrimination or similarity function. Later, he found that we could also measure natural language information by replacing the similarity function with the truth function. Since statistical probability is used for averaging, this information measure can ensure that wrong hypotheses or estimates will reduce semantic information.

Averaging ILu(x; yj), we obtain generalized Kullback–Leibler (KL) information (if we use P(x|Aj)), as proposed by Theil, and semantic KL information (if we use Q(Aj|x)), namely:

The above Equation adopts Bayes’ formula with the membership function (see Section 4.1 for details). At that time, the author did not know of Thomas and others’ studies. If Q(Aj|x) is expressed as exp[−d(x, yj)], semantic KL information becomes DV-KL information:

Nevertheless, the author only later learned of Theil’s formula and recently learned of DV-KL information. However, not all KL information or semantic KL information can be expressed as DV-KL information with exponential or negative exponential functions. For example, the truth function of yj = “Elderly” is asymmetric and can be described by a Logistic function rather than an exponential function. Therefore, DV-KL information is only a particular case of semantic KL information.
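The “Elderly” example can be sketched numerically. The code assumes that semantic KL information is the average of log[Q(Aj|x)/Q(Aj)] under P(x|yj), as the text describes. The ages, distributions, and Logistic parameters are hypothetical.

```python
import math

def semantic_kl(posterior, prior, truth):
    """Average of log2[truth / logical probability] under P(x|y_j), in bits."""
    logical_prob = sum(p * t for p, t in zip(prior, truth))
    return sum(post * math.log2(t / logical_prob)
               for post, t in zip(posterior, truth) if post > 0)

def kl_information(posterior, prior):
    return sum(post * math.log2(post / p)
               for post, p in zip(posterior, prior) if post > 0)

ages = [20, 40, 60, 80]
prior = [0.30, 0.30, 0.25, 0.15]      # hypothetical P(x)
posterior = [0.02, 0.08, 0.40, 0.50]  # hypothetical P(x|y_j), y_j = "Elderly"

# An asymmetric Logistic truth function with hypothetical parameters.
logistic_truth = [1.0 / (1.0 + math.exp(-(a - 60) / 5.0)) for a in ages]

# A truth function proportional to P(x|y_j)/P(x), scaled to a maximum of 1.
ratio = [post / p for post, p in zip(posterior, prior)]
matched_truth = [r / max(ratio) for r in ratio]

kl = kl_information(posterior, prior)
g_logistic = semantic_kl(posterior, prior, logistic_truth)
g_matched = semantic_kl(posterior, prior, matched_truth)
```

Under these assumptions the semantic KL information never exceeds the KL information, and the two coincide when the truth function is proportional to P(x|yj)/P(x).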

In 1993, the author [15] extended Shannon’s information rate-distortion function R(D) by replacing d(x, y) with ILu(x; y), to obtain the information rate-fidelity function R(G), which means minimum ShMI for given SeMI. The R(G) function reveals the matching relationship between ShMI and SeMI. Meanwhile, he defined the truth value matrix as the generalized channel and proposed the idea of mutual matching of two channels. He also studied how to compress image data according to the visual discrimination of colors and the R(G) function [15,16].

In 2017, the author proposed the P-T probability framework [12] by replacing Q with T and Aj with θj. In 2020, he discussed how the P-T probability framework is related to Popper’s theory [17]. The θ represents not only a fuzzy set but also a set of model parameters. With the P-T probability framework, we can conveniently use the G measure for machine learning. The relationship between SeMI and several generalized entropies is:

where three generalized entropies are:

H(X|Yθ) can be called the prediction entropy. It is also a cross-entropy. About the other two generalized entropies, the author suggests that we call H(Yθ|X) the fuzzy entropy and H(Yθ) the coverage entropy (see Section 5.4 for details).

Compared with the DT fuzzy entropy, H(Yθ|X) as the fuzzy entropy is more general. The reason is that if n labels become two complementary labels, y1 and y0, and P(y1|x) and P(y0|x) equal two membership functions T(θ1|x) and T(θ0|x), fuzzy entropy H(Yθ|X) degenerates into the DT fuzzy entropy.

More fuzzy entropies and fuzzy information measures defined by others can be found in [56]. The author thinks that some semantic information measures constructed with the DT fuzzy entropy are inappropriate (see the analysis of Equation (1) in [13]). The main reason is that they cannot reflect whether a hypothesis can stand the test.

In 2021, the author extended the information rate-distortion function and the maximum entropy method by replacing distortion function d(x, yj) with −logT(θj|x) and the average distortion with H(Yθ|X) [18]. In this way, the constraint is more flexible.

In recent years, deep learning researchers have expressed similarity functions with feature vectors and brought similarity functions into semantic or estimated information measures. Their efforts have made semantic information measures more abundant and more practical. See the next section for details.

3. The Evolution of Learning Functions

3.1. From Likelihood Functions to Similarity and Truth Functions

This section reviews machine learning with different learning functions and criteria for readers to understand why we choose the similarity (or truth) function and the estimated (or semantic) information measure.

The task of machine learning is to use samples or sampling distributions to optimize learning functions and then use learning functions to make probability predictions or classifications. There are many criteria for optimizing learning functions, such as maximum accuracy, maximum likelihood, Maximum Mutual Information (MaxMI), maximum EMI, minimum mean distortion, Regularized Least Squares (RLS), minimum KL divergence, and minimum cross-entropy criteria. Some criteria are equivalent, such as minimum cross-entropy and maximum EMI criteria. In addition, some criteria are similar or compatible, such as the RLS and the maximum EMI criteria.

Different learning functions approximate the following different probability distributions:

- P(x) is the prior distribution of instance x, representing the source. We use Pθ(x) to approximate to it.

- P(y) is the prior distribution of label y, representing the destination. We use Pθ(yj) to approximate to it.

- P(x|yj) is the posterior distribution of x. We use the likelihood function P(x|θj) = P(x|yj, θ) to approximate to it.

- P(yj|x) is the transition probability function [13]. What approximates to it is P(θj|x). Fisher proposed it and called it the inverse probability [57,58]. We call it the inverse probability function. The Logistic function is often used in this way.

- P(y|xi) is the posterior distribution of y. Since P(y|xi) = P(y)P(xi|y)/P(xi), Bayesian Inference uses P(θ)P(x|y, θ)/Pθ(x) (Bayesian posterior) [57] to approximate to it.

- P(x, y) is the joint probability distribution. We use P(x, y|θ) to approximate to it.

- m(x, yj) = P(x, yj)/[P(x)P(yj)] is the relatedness function. What approximates to it is mθ(x, yj). We call mθ(x, yj) the truthlikeness function, which changes between 0 and ∞.

- m(x, yj)/max[m(x, yj)] = P(yj|x)/max[P(yj|x)] is the relative relatedness function. We use the truth function T(θj|x) or the similarity function S(x, yj) to approximate to it.
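The last two items in the list can be illustrated with a small joint distribution. This sketch computes the relatedness function m(x, yj) and the relative relatedness function, and checks that normalizing m by its maximum gives the same result as normalizing the transition probability P(yj|x) by its maximum. The joint table is hypothetical.

```python
# Hypothetical joint distribution, joint[i][j] = P(x_i, y_j).
joint = [
    [0.20, 0.05],
    [0.15, 0.10],
    [0.05, 0.45],
]
n_x, n_y = len(joint), len(joint[0])
px = [sum(row) for row in joint]
py = [sum(col) for col in zip(*joint)]

# Relatedness function m(x_i, y_j) = P(x_i, y_j) / [P(x_i) P(y_j)].
relatedness = [[joint[i][j] / (px[i] * py[j]) for j in range(n_y)]
               for i in range(n_x)]
# Transition probability function P(y_j|x_i).
transition = [[joint[i][j] / px[i] for j in range(n_y)] for i in range(n_x)]

def column_max(matrix, j):
    return max(row[j] for row in matrix)

# Relative relatedness: each label's function is scaled so its maximum is 1.
truth_from_m = [[relatedness[i][j] / column_max(relatedness, j)
                 for j in range(n_y)] for i in range(n_x)]
truth_from_transition = [[transition[i][j] / column_max(transition, j)
                          for j in range(n_y)] for i in range(n_x)]
```

Because P(yj) is a constant for each label, dividing by the maximum removes it, so both routes lead to the same function with values between 0 and 1.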

The author thinks that learning functions have evolved from likelihood functions to truthlikeness, truth, and similarity functions. Meanwhile, the optimization criterion has also evolved from the maximum likelihood criterion to the maximum semantic (or estimated) information criterion. In the following, we investigate the reasons for the evolution of learning functions.

We cannot use a sampling distribution P(x|yj) to make a probability prediction because it may be unsmooth or even intermittent. For this reason, Fisher [57] proposed using the smooth likelihood function P(x|θj) with parameters to approximate to P(x|yj) with the maximum likelihood criterion. Then we can use P(x|θj) to make a probability prediction.

The main shortcoming of the maximum likelihood method is that we have to retrain P(x|θj) after P(x) is changed. In addition, P(x|yj) is often irregular and difficult to approximate with a function. Therefore, the inverse probability function P(θj|x) is used. With this function, when P(x) becomes P’(x), we can obtain a new likelihood function P’(x|θj) by using Bayes’ formula. In addition, we can also use P(θj|x) for classification with the maximum accuracy criterion.

The Logistic function is often used as the inverse probability function. For example, when y has only two possible values, y1 and y0, we can use a pair of Logistic functions with parameters to approximate to P(y1|x) and P(y0|x). However, this method also has two disadvantages:

- When the number of labels is n > 2, it is difficult to construct a set of inverse probability functions because P(θj|x) should be normalized for every xi:

  $$\sum_j P(\theta_j|x_i) = 1.$$

  The above formula’s restriction makes applying the inverse probability function to multi-label learning difficult. An expedient method is Binary Relevance [59], which collects positive and negative examples for each label, and converts a multi-label learning task into n single-label learning tasks. However, preparing n samples for n labels is uneconomical, especially for image and text learning. In addition, the results differ depending on whether a group of inverse probability functions is optimized separately or together.

- Although P(yj|xi; i = j) indicates the accuracy for binary communication, when n > 2, P(yj|xi; i = j) may not mean it, especially for semantic communication. For example, x represents an age, y denotes one of the three labels: y0 = “Non-adult”, y1 = “Adult”, and y2 = “Youth”. If y2 is rarely used, both P(y2) and P(y2|x) are tiny. However, the accuracy of using y2 for x = 20 should be 1.

P(x, y|θ) is also often used as a learning function, such as in the RBM [60] and many information-theoretic learning tasks [29]. Like P(x|θj), P(x, y|θ) is not suitable for irregular or changeable P(x). The Bayesian posterior is also unsuitable.

Therefore, we need a learning function that is proportional to P(yj|x) and independent of P(x) and P(y). The truth function and the similarity function are such functions.

We use GPS as an example to illustrate why we use the truth (or similarity) function T(θj|x), instead of P(x|θj) or P(x, y|θ), as the learning function. A GPS pointer indicates an estimate yj = j, whereas the real position of x may be a little different. In this case, the similarity between x and j is the truth value of yj when x occurs. This explains why the similarity is often called the semantic similarity [22].

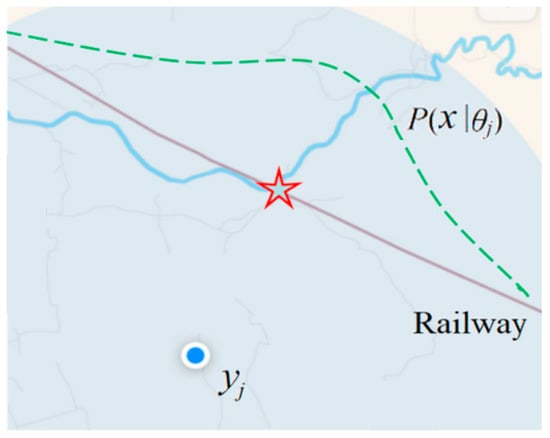

Figure 2 shows a real screenshot, where the dashed line, the red star, and letters are added. The brown line represents the railway; the GPS device is in a train on the railway. Therefore, we may assume that P(x) is uniformly distributed over the railway. The yj does not point at the railway because it is slightly inaccurate.

Figure 2.

Illustrating a GPS device’s positioning with a deviation. We predict the probability distribution of x according to yj and the prior knowledge P(x). The red star represents the most probable position.

The semantic meaning of the GPS pointer can be expressed with

$$T(\theta_j|x) = S(x, x_j) = \exp\left[-\frac{(x - x_j)^2}{2\sigma^2}\right],$$

where xj is the position pointed to by yj, and σ is the Root Mean Square (RMS). For simplicity, we assume x is one-dimensional in the above equation. According to Equation (9), we can predict that the star represents the most probable position.

However, if we use P(x|θj) or P(x, y|θj) as the learning function and the predictive model, after P(x) is changed, this function cannot make use of the new P(x), and hence the learning is not transferable. In addition, using the truth function, we can learn the system deviation and the precision of a GPS device from a sample [13]. However, using P(x|θj) or P(x, y|θj), we cannot do this because the deviation and precision are independent of P(x) and P(y). Consider a car with a GPS map on a road, where P(x) and P(x|θj) may be more complex and changeable. However, T(θj|x) can be an invariant Gaussian function.
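The transfer argument above can be illustrated with a small sketch. It assumes the semantic Bayes prediction P(x|θj) = P(x)T(θj|x)/T(θj), with T(θj) = ∑i P(xi)T(θj|xi), as used in the G theory. The grid of positions, the pointer reading x_j = 10, σ = 2, and the two priors are hypothetical values chosen only for illustration.

```python
import math

def truth_gaussian(x, x_j, sigma):
    """Truth (similarity) function T(theta_j|x) = exp[-(x - x_j)^2 / (2 sigma^2)]."""
    return math.exp(-((x - x_j) ** 2) / (2.0 * sigma ** 2))

def predict(prior, x_j, sigma):
    """Semantic Bayes prediction P(x|theta_j) = P(x) T(theta_j|x) / T(theta_j)."""
    weighted = {x: p * truth_gaussian(x, x_j, sigma) for x, p in prior.items()}
    t_theta = sum(weighted.values())  # logical probability T(theta_j)
    return {x: w / t_theta for x, w in weighted.items()}

# Hypothetical pointer reading and precision (not taken from Figure 2).
x_j, sigma = 10.0, 2.0

# Prior A: the device may be anywhere on positions 0..20 (uniform).
prior_a = {float(x): 1.0 / 21 for x in range(21)}
# Prior B: the device is known to be on a "railway" covering positions 12..20 only.
prior_b = {float(x): 1.0 / 9 for x in range(12, 21)}

# The same truth function is reused with both priors; nothing is retrained.
post_a = predict(prior_a, x_j, sigma)
post_b = predict(prior_b, x_j, sigma)

best_a = max(post_a, key=post_a.get)  # most probable position under prior A
best_b = max(post_b, key=post_b.get)  # most probable position under prior B
print(best_a, best_b)
```

Under prior B the most probable position is the railway point nearest to the pointer, which mirrors the role of the red star in Figure 2.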

The above example explains why we use similarity functions as learning functions. Section 4.2 proves that the similarity function approximates to m(x, yj)/max[m(x, yj)].

3.2. The Definitions of Semantic Similarity

“Similarity” in this paper means semantic similarity [22]. For example, people of ages 17 and 18, snow and water, or message and information are similar. However, it does not include superficial similarities, such as between snow and salt or between “message” and “massage”.

Semantic similarity can be between two sensory, geometric, biological, linguistic, etc., objects. The similarity is often defined by distance. Distance also reflects distortion or error. Following Shepard [42], Ashby and Perrin [61] further discussed the probability prediction related to similarity, distance, and perception. The similarity between images or biological features is usually expressed with the dot product or cosine of two feature vectors [2,4,6].

There are many studies on the similarity between words, sentences, and texts [22]. Some researchers use semantic structure to define the similarity between two words. For example, in 1995, Resnik [23] used the structure of WordNet to define the similarity between any two words. Later, others proposed some improved forms [62]. Some researchers use statistical data to define similarity. For example, in Latent Semantic Analysis [63], researchers use vectors from statistics to express similarity, whereas other researchers use Pointwise Mutual Information (PMI) [64], i.e., I(xi; yj) (see Equation (2)), as a similarity measure. Since PMI varies between −∞ and ∞, an improved PMI similarity between 0 and 1 was proposed [65]. We can also see that semantic similarity is defined with semantic distance. More semantic similarity and semantic distance measures can be found in [66]. Some researchers believe semantic similarity includes relatedness, while others believe the two are different [67]. For example, hamburgers and hot dogs are similar, while hamburgers and French fries are related. However, the author believes that because the relatedness of two objects reflects the similarity between their positions in space or time, it is practical and reasonable to think that the related two objects are similar.

The truth function T(θj|x) proposed by the author can also be understood as the similarity function (between x and xj). We can define it with distance or distortion and optimize it with a sampling distribution. The author uses T(θj|x) to approximate to m(x, yj)/max[m(x, yj)]. When the sample is large enough, they are equal.

The similarity function S(x, y) can be directly used as a learning function to generate the posterior distribution of x. We can use such a transformation: exp[kS(x, y)], as a new similarity function or truth function [2,6] (where k is a parameter that can enlarge or reduce the similarity range). For those similarity measures suitable to be put in exp( ), such as the dot product or the cosine of two vectors, it is better to understand them as fidelity measures and denote them as fd(xi, yj). Assuming that the maximum possible fidelity is fdmax, we may define the distortion function: d(x, yj) = k[fdmax − fd(x, yj)], and then use exp[−d(x, yj)] as the similarity function. Nevertheless, with or without this conversion, the value of the SoftMax function or the truthlikeness function is unchanged.
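The invariance claimed in the last sentence can be checked numerically. The sketch below converts fidelities into distortions with d = k[fdmax − fd] and compares the SoftMax of k·fd with the SoftMax of −d. The fidelity values, k = 5, and fdmax = 1 are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable SoftMax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

k = 5.0                              # scale parameter (hypothetical)
fd_max = 1.0                         # maximum possible fidelity, e.g., for a cosine
fidelity = [0.9, 0.2, -0.4, 0.7]     # hypothetical cosine similarities fd(x, y_j)

# Distortion function d(x, y_j) = k [fd_max - fd(x, y_j)].
distortion = [k * (fd_max - f) for f in fidelity]

# Truth (similarity) values exp[-d(x, y_j)] lie in (0, 1].
truth_values = [math.exp(-d) for d in distortion]

# SoftMax with fidelities versus SoftMax with negative distortions.
p_from_fidelity = softmax([k * f for f in fidelity])
p_from_distortion = softmax([-d for d in distortion])
print(p_from_fidelity)
print(p_from_distortion)
```

The two distributions agree because the constant k·fdmax cancels between the numerator and the denominator of the SoftMax function.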

The study of semantic similarity reminds us that we should use similarity functions to measure semantic information (including sensory information). In machine learning, it is important to use parameters to construct similarity functions and then use samples or sampling distributions to optimize the parameters.

3.3. Similarity Functions and Semantic Information Measures Used for Deep Learning

In 1985, Ackley, Hinton, and Sejnowski proposed the Boltzmann machine [68], and later they proposed the Restricted Boltzmann Machine (RBM) [60]. The SoftMax function is used to generate the Gibbs distribution. In 2006, Hinton et al. used the RBM and Backpropagation to optimize the AutoEncoder [69] and the Deep Belief Net (DBN) [70], achieving great success. However, the RBM is a classical ITL method, not a semantic ITL method, because the SoftMax function approximates to P(x, y) rather than a truthlikeness function with a similarity function. Nevertheless, the use of the SoftMax function foreshadowed the later use of similarity functions.

In 2010, Gutmann and Hyvärinen [71] proposed noise contrast learning and obtained good results. They used non-normalized functions and partition functions. From the author’s viewpoint, if we want to get P(θj|x) for a given P(x|yj), we need P(x) because P(yj|x)/P(yj) = P(x|yj)/P(x). Without P(x), we can create a counterexample by noise and get P(x). Then we can optimize P(θj|x). Like Binary Relevance, this method converts a multi-label learning task into n single-label learning tasks.

In 2016, Sohn [72] creatively proposed distance metric learning with the SoftMax function. Although it is not explicitly stated that the learning function is proportional to m(x, y), the distance is independent of P(x) and P(y).

In 2017, the author concluded [12] that when T(θj|x) ∝ P(x|yj)/P(x) = m(x, y) or T(θj|x) ∝ P(yj|x), SeMI reaches its maximum and is equal to ShMI. T(θj|x) is the longitudinal normalization of parameterized m(x, y). When the sample is large enough, we can obtain the optimized truth function from the sampling distribution:

$$T^*(\theta_j|x) = \frac{m(x, y_j)}{\max_x m(x, y_j)} = \frac{P(y_j|x)}{\max_x P(y_j|x)}.$$

In addition, the author defined the semantic channel (previously called the generalized channel [15]) with a set of truth functions: T(θj|x), j = 1, 2, … In [12,13], he developed a group of Channels Matching algorithms for solving the multi-label classification, the MaxMI classification of unseen instances, and mixture models. He also proved the convergence of mixed models [73] and derived the new Bayesian confirmation and causal confirmation measures [74,75]. However, the author has not provided the semantic ITL method’s applications to neural networks.

In January 2018, Belghazi et al. [1] proposed MINE, which showed promising results. The EMI for MINE is the generalization of DV-KL information, in which the learning function is:

Although Tw(x, yj) is not negative, it can be understood as a fidelity function.

In July 2018, Oord et al. [2] presented InfoNCE and explicitly pointed out that the learning function is proportional to m(x, y) = P(x|yj)/P(x). The expression in their paper is:

$$f_k(x_{t+k}, c_t) \propto \frac{p(x_{t+k}|c_t)}{p(x_{t+k})},$$

where ct is the feature vector obtained from previous data, xt+k is the predictive vector, and fk(xt+k, ct) is a similarity function (between predicted xt+k and real xt+k) used to construct EMI. The n pairs of Logistic functions in Noise Contrast Learning become n SoftMax functions, which can be directly used for multi-label learning. However, fk(xt+k, ct) is not limited to exponential or negative exponential functions, unlike the learning function in MINE. Therefore, the author believes that it is more flexible to use a function such as a membership function as the similarity function.

The estimated information measures for MINE and InfoNCE are almost the same as the author’s semantic information G measure. Their characteristics are:

- A function proportional to m(x, yj) is used as the learning function (denoted as S(x, yj)); its maximum is generally 1, and its average is the partition function Zj.

- The semantic or estimated information between x and yj is log[S(x, yj)/Zj].

- The statistical probability distribution P(x, y) is used for the average.

- The semantic or estimated mutual information can be expressed as the coverage entropy minus the fuzzy entropy, and the fuzzy entropy is equal to the average distortion.

4. The Semantic Information G Theory and Its Applications to Machine Learning

4.1. The P-T Probability Framework and the Semantic Information G Measure

In the P-T probability framework, the logical probability is denoted by T, and the statistical probability (including subjective probability, such as likelihood) is still represented by P. We define this framework as follows.

Let X be a random variable and denote an instance. It takes a value x ∈ U = {x1, x2, …}. Let Y be a random variable representing a label or hypothesis. It takes a value y ∈ V = {y1, y2, …}. A set of transition probability functions P(yj|x) (j = 1, 2, …) represents a Shannon channel, whereas a set of truth functions T(yj|x) (j = 1, 2, …) denotes a semantic channel.

Let elements in U that make yj true form a fuzzy subset θj (i.e., yj = “x is in θj”). Then the membership function (denoted as T(θj|x)) of x in θj is the truth function T(yj|x) of proposition function yj(x). That is, T(θj|x) = T(yj|x). The logical probability of yj is the probability of a fuzzy event defined by Zadeh [45] as:

$$T(\theta_j) = \sum_i P(x_i)\,T(\theta_j|x_i). \tag{30}$$

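When yj is true, the predicted probability of x is

$$P(x|\theta_j) = \frac{P(x)\,T(\theta_j|x)}{T(\theta_j)}, \quad T(\theta_j) = \sum_i P(x_i)\,T(\theta_j|x_i), \tag{31}$$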

where θj can also be regarded as the model parameter so that P(x|θj) is a likelihood function.

For estimation, U = V and yj = j = “x is about xj.” Then T(θj|x) can be understood as the confusion probability or similarity between x and xj. For given P(x) and P(x|θj), supposing the maximum of T(θj|x) is 1, we can derive [13]:

$$T(\theta_j|x) = \frac{P(x|\theta_j)/P(x)}{\max_x\left[P(x|\theta_j)/P(x)\right]}. \tag{32}$$

The main difference between logical and statistical probabilities is that statistical probability is horizontally normalized (the sum is 1), while logical probability is longitudinally normalized (the maximum is 1). Generally, the maximum of each T(θj|x) is 1 (for different x), and T(θ1) + T(θ2) + … > 1. In contrast, P(y0|x) + P(y1|x) + … = 1 for every x, and P(y1) + P(y2) + … = 1.

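Semantic information (quantity) conveyed by (true) yj about xi is:

$$I(x_i; \theta_j) = \log\frac{P(x_i|\theta_j)}{P(x_i)} = \log\frac{T(\theta_j|x_i)}{T(\theta_j)}. \tag{33}$$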

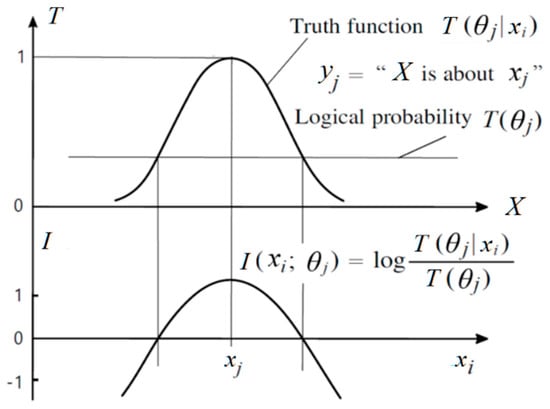

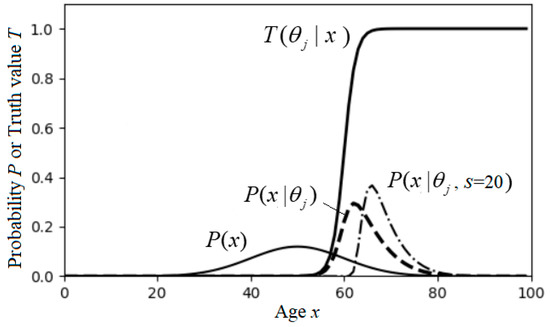

This Semantic information measure, illustrated in Figure 3, can indicate whether a hypothesis can stand the test.

Figure 3.

The semantic information conveyed by yj about xi decreases with the deviation or distortion increasing. The larger the deviation is, the less information there is.

Averaging I(x; θj) for different x, we obtain semantic KL information I(X; θj) (see Equation (19)). Averaging I(X; θj) for different y, we get SeMI I(X; Yθ) (See Equation (21)).
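The measures in this subsection can be traced with a short numeric sketch. It assumes the semantic information takes the form log[T(θj|xi)/T(θj)], in line with the characteristics listed at the end of Section 3.3, and averages it over P(x|yj) to obtain the semantic KL information for one label. The prior, the truth values, and the sampling distribution are hypothetical.

```python
import math

def semantic_info(truth_value, logical_prob):
    """I(x_i; theta_j) = log2[T(theta_j|x_i) / T(theta_j)] in bits."""
    return math.log2(truth_value / logical_prob)

# Hypothetical prior over four instances, ordered by growing deviation from x_j.
p_x = [0.2, 0.3, 0.3, 0.2]
# Hypothetical truth function T(theta_j|x_i): it falls as the deviation grows.
truth_j = [1.0, 0.6, 0.2, 0.05]

# Logical probability T(theta_j) = sum_i P(x_i) T(theta_j|x_i).
t_theta_j = sum(p * t for p, t in zip(p_x, truth_j))

# Semantic information for every instance: positive near x_j, negative far away.
info_j = [semantic_info(t, t_theta_j) for t in truth_j]

# Hypothetical sampling distribution P(x|y_j) for the same label.
p_x_given_yj = [0.5, 0.35, 0.1, 0.05]

# Semantic KL information I(X; theta_j): the average of I(x; theta_j) over P(x|y_j).
semantic_kl = sum(p * i for p, i in zip(p_x_given_yj, info_j))
# Shannon's KL information for the same label, which bounds it from above.
shannon_kl = sum(p * math.log2(p / q) for p, q in zip(p_x_given_yj, p_x))
print(info_j, semantic_kl, shannon_kl)
```

Averaging the semantic KL information over P(yj) for all labels would then give SeMI. The gap between the two printed averages is the relative entropy between P(x|yj) and the prediction P(x|θj), so it cannot be negative.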

4.2. Optimizing Truth Functions and Making Probability Predictions

A set of truth functions, T(θj|x) (j = 1, 2, …), constitutes a semantic channel, just as a set of transition probability functions, P(yj|x) (j = 1, 2, …), forms a Shannon channel. When the semantic channel matches the Shannon channel, that is, T(θj|x)∝P(yj|x)∝P(x|yj)/P(x), or P(x|θj) = P(x|yj), the semantic KL information and SeMI reach their maxima. If the sample is large enough, we have:

$$T^*(\theta_j|x) = \frac{m(x, y_j)}{mm_j} = \frac{P(y_j|x)}{\max_x P(y_j|x)}, \tag{34}$$

where mmj is the maximum of function m(x, yj) (for different x). The author has proved in [17] that the above formula is compatible with Wang’s Random Set Falling Shadow theory [51]. We can think that T(θj|x) in Equation (34) comes from Random Point Falling Shadow.
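As a check on this matching condition, the sketch below builds truth functions from a sampling distribution by dividing the relatedness function m(x, yj) by its maximum mmj, and then verifies that the semantic Bayes prediction P(x)T(θj|x)/T(θj) reproduces P(x|yj). The joint distribution is hypothetical, and the prediction formula is the one assumed throughout the G theory.

```python
# Hypothetical sampling distribution P(x, y): rows are x1..x3, columns are y1, y2.
joint = [[0.30, 0.05],
         [0.15, 0.15],
         [0.05, 0.30]]

n_x, n_y = len(joint), len(joint[0])
p_x = [sum(row) for row in joint]
p_y = [sum(joint[i][j] for i in range(n_x)) for j in range(n_y)]

# Relatedness function m(x, y) = P(x, y) / [P(x) P(y)].
m = [[joint[i][j] / (p_x[i] * p_y[j]) for j in range(n_y)] for i in range(n_x)]
# mm_j is the maximum of m(x, y_j) over x.
mm = [max(m[i][j] for i in range(n_x)) for j in range(n_y)]

# Longitudinal normalization: T*(theta_j|x) = m(x, y_j) / mm_j, so each maximum is 1.
truth = [[m[i][j] / mm[j] for j in range(n_y)] for i in range(n_x)]

# Logical probabilities T(theta_j) = sum_i P(x_i) T(theta_j|x_i).
t_theta = [sum(p_x[i] * truth[i][j] for i in range(n_x)) for j in range(n_y)]

# Semantic Bayes prediction P(x|theta_j) versus the sampling distribution P(x|y_j).
predicted = [[p_x[i] * truth[i][j] / t_theta[j] for j in range(n_y)] for i in range(n_x)]
sampled = [[joint[i][j] / p_y[j] for j in range(n_y)] for i in range(n_x)]
print(t_theta, sum(t_theta))
```

In this example the logical probabilities sum to more than 1, whereas P(y1) + P(y2) = 1, which illustrates the difference between longitudinal and horizontal normalization.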

We call m(x, yj) the relatedness function, which varies between 0 and ∞. Note that relatedness functions are symmetric, whereas truth functions or membership functions are generally asymmetric, i.e., T(θxi|yj) ≠ T(θj|xi) (θxi means xi and related parameters). The reason is that mmi = max[m(xi, y)] is not necessarily equal to mmj = max[m(x, yj)]. If we replace mmj and mmi with the maximum in matrix m(x, y), the truth function is also symmetrical, like the distortion function. In that case, the maximum of a truth function may be less than 1, so it is not convenient to use a negative exponential function to express a similarity function. Nevertheless, the similarity function S(x, j) between different instances should be symmetrical, i.e., S(xj, i) = S(xi, j). The truth function expressed as exp[−d(x, y)] should also be symmetrical if d(x, y) is symmetrical.

The author thinks that m(x, yj) or m(x, y) is an essential function. From the perspective of calculation, there exists P(x, y) before m(x, y); but from a philosophical standpoint, there exists m(x, y) before P(x, y). Therefore, we use mθ(x, y) to approximate to m(x, y) and call mθ(x, y) the truthlikeness function.

If the sample is not large enough, we can use the semantic KL information formula to optimize the truth function:

Using m(x, y) for probability predictions is simpler than using Bayes’ formula because

$$P(x|y_j) = P(x)\,m(x, y_j). \tag{36}$$

Using mθ(x, y) is similar. Unfortunately, it is difficult for the human brain to remember truthlikeness functions. Nevertheless, it is easier for the human brain to remember truth functions. Therefore, we need T(yj|x) or S(x, yj). With the truth or similarity function, we can also make probability predictions when P(x) is changed (see Equation (31)).

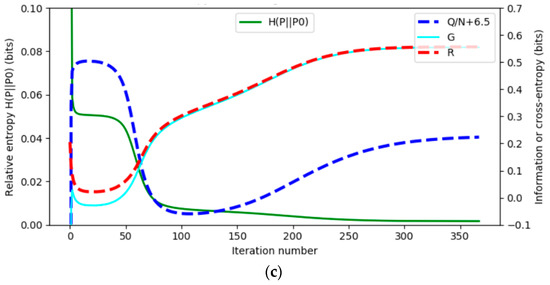

4.3. The Information Rate-Fidelity Function R(G)

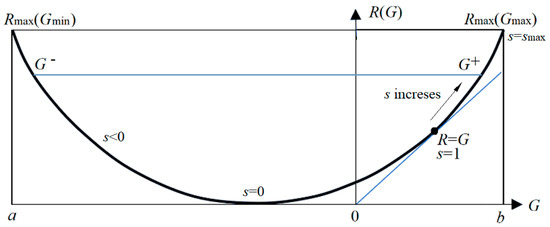

To extend the information rate-distortion function R(D), we replace the distortion function d(x, yj) with semantic information I(x; θj) and replace the upper limit D of average distortion with the lower limit G of SeMI. Then R(D) becomes the information rate-fidelity function R(G) [13,18] (see Figure 4). Finally, following the deduction for R(D), we obtain the R(G) function with parameter s:

Figure 4.

The information rate-fidelity function R(G) for binary communication. Any R(G) function is a bowl-like function. There is a point at which R(G) = G (s = 1). For given R, two inverse functions exist: G-(R) and G+(R).

To get the Shannon channel that matches the constraints, we need iterations for the MinMI. That is to repeat the following two formulas:

In the R(G) function, s = dR/dG is positive on the right side, whereas s in the R(D) function is always negative. When s = 1, R equals G, meaning that the semantic channel matches the Shannon channel. G/R indicates information efficiency. We can apply the R(G) function to image compression according to visual discrimination [16], semantic compression [18], and the convergence proofs for the MaxMI classification of unseen instances [13] and mixture models [73]. In addition, the author proves that the MaxMI test is equivalent to the maximum likelihood ratio test [76]. More discussions about the R(G) function can be found in [13,18].
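The MinMI iteration can be sketched in the style of the Blahut-Arimoto algorithm for R(D). The sketch assumes that the two repeated formulas take the form P(yj|xi) = P(yj)m_ij^s / ∑k P(yk)m_ik^s and P(yj) = ∑i P(xi)P(yj|xi), with m_ij = T(θj|xi)/T(θj). This form is an assumption obtained by replacing −d(x, yj) with I(x; θj) in the R(D) deduction; it is not copied from the formulas themselves. The joint distribution used to build matched truth functions is hypothetical.

```python
import math

def minmi_iteration(p_x, truth, s, n_iter=200):
    """Return (R, G) in bits after iterating the assumed MinMI formulas."""
    n_x, n_y = len(truth), len(truth[0])
    t_theta = [sum(p_x[i] * truth[i][j] for i in range(n_x)) for j in range(n_y)]
    # m_ij = T(theta_j|x_i) / T(theta_j); log m_ij is the semantic information.
    m = [[truth[i][j] / t_theta[j] for j in range(n_y)] for i in range(n_x)]
    p_y = [1.0 / n_y] * n_y  # start from a uniform P(y)
    for _ in range(n_iter):
        chan = []
        for i in range(n_x):
            weights = [p_y[j] * m[i][j] ** s for j in range(n_y)]
            z_i = sum(weights)  # partition function for x_i
            chan.append([w / z_i for w in weights])
        p_y = [sum(p_x[i] * chan[i][j] for i in range(n_x)) for j in range(n_y)]
    r = g = 0.0
    for i in range(n_x):
        for j in range(n_y):
            p = p_x[i] * chan[i][j]
            if p > 0.0:
                r += p * math.log2(chan[i][j] / p_y[j])  # Shannon's MI
                g += p * math.log2(m[i][j])              # semantic MI
    return r, g

# Hypothetical joint P(x, y); the truth functions are matched to its Shannon channel.
joint = [[0.40, 0.05], [0.10, 0.10], [0.10, 0.25]]
p_x = [sum(row) for row in joint]
p_y_true = [sum(row[j] for row in joint) for j in range(2)]
m_true = [[joint[i][j] / (p_x[i] * p_y_true[j]) for j in range(2)] for i in range(3)]
mm = [max(m_true[i][j] for i in range(3)) for j in range(2)]
truth = [[m_true[i][j] / mm[j] for j in range(2)] for i in range(3)]

r1, g1 = minmi_iteration(p_x, truth, s=1.0)
r4, g4 = minmi_iteration(p_x, truth, s=4.0)
print(r1, g1, g1 / r1)
print(r4, g4, g4 / r4)
```

With matched truth functions and s = 1, the iteration settles on the original Shannon channel, so R and G coincide and G/R is 1. For other values of s, R stays at or above G.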

4.4. Channels Matching Algorithms for Machine Learning

4.4.1. For Multi-Label Learning

We consider multi-label learning, which is supervised learning. We can obtain the sampling distribution P(x, y) from a sample. If the sample is large enough, we let T(θj|x) = P(yj|x)/max[P(yj|x)], j = 1, 2, …; otherwise, we can use the semantic KL information formula to optimize T(θj|x) (see Equation (35)).

For multi-label classifications, we can use the following classifier:

Binary Relevance [59] is unnecessary. See [13] for details.

4.4.2. For the MaxMI Classification of Unseen Instances

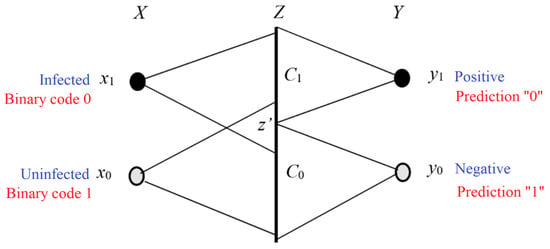

This classification belongs to semi-supervised learning. Figure 5 illustrates a simple example showing a medical test or signal detection. In Figure 5, Z is a random variable taking a value z∈C. The probability distributions P(x) and P(z|x) are given. The task is to find the classifier y = h(z) that maximizes ShMI between X and Y.

Figure 5.

Illustrating the medical test and the signal detection. We choose yj according to z ∈ Cj. The task is to find the dividing point z’ that results in MaxMI between X and Y.

The following algorithm is not limited to binary classifications. Let Cj be a subset of C and yj = f(z|z ∈ Cj); hence S = {C1, C2, …} is a partition of C. Our task is to find the optimized S, which is

First, we initiate a partition. Then we do the following iterations.

Matching I: Let the semantic channel match the Shannon channel and set the reward function. First, for a given S, we obtain the Shannon channel:

Then we obtain the semantic channel T(y|x) from the Shannon channel and T(θj) (or mθ(x, y) = m(x, y)). Then we have I(xi; θj). For given z, we have conditional information as the reward function:

Matching II: Let the Shannon channel match the semantic channel by the classifier:

Repeat Matching I and Matching II until S does not change. Then, the convergent S is S* we seek. The author has explained the convergence with the R(G) function (see Section 3.3 in [13]).
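The two matching steps can be sketched for a binary medical-test setting like Figure 5. The sketch assumes that the Shannon channel for a partition is P(yj|x) = ∑ P(z|x) over z ∈ Cj, that the matched semantic information is I(xi; θj) = log[P(yj|xi)/P(yj)], and that the reward for labeling z with yj is ∑i P(xi|z)I(xi; θj). The prior, the two Gaussian likelihoods, and the initial partition are hypothetical and are not the data of Figure 6.

```python
import math

def normalized_gauss(zs, mu, sigma):
    """Discrete P(z|x) on the grid zs, shaped like a Gaussian."""
    w = [math.exp(-((z - mu) ** 2) / (2.0 * sigma ** 2)) for z in zs]
    total = sum(w)
    return [v / total for v in w]

def shannon_channel(labels, p_z_given_x, n_y):
    """P(y_j|x_i) = sum of P(z|x_i) over all z labeled with y_j."""
    chan = [[0.0] * n_y for _ in p_z_given_x]
    for i, row in enumerate(p_z_given_x):
        for k, p in enumerate(row):
            chan[i][labels[k]] += p
    return chan

def mutual_information(p_x, chan):
    n_y = len(chan[0])
    p_y = [sum(p_x[i] * chan[i][j] for i in range(len(p_x))) for j in range(n_y)]
    mi = sum(p_x[i] * chan[i][j] * math.log2(chan[i][j] / p_y[j])
             for i in range(len(p_x)) for j in range(n_y) if chan[i][j] > 0.0)
    return mi, p_y

def channels_matching(p_x, p_z_given_x, labels, n_y=2, max_iter=50):
    n_x, history = len(p_x), []
    for _ in range(max_iter):
        # Matching I: Shannon channel for the current partition, then the reward.
        chan = shannon_channel(labels, p_z_given_x, n_y)
        mi, p_y = mutual_information(p_x, chan)
        history.append(mi)
        info = [[math.log2(chan[i][j] / p_y[j]) if chan[i][j] > 0.0 else -math.inf
                 for j in range(n_y)] for i in range(n_x)]
        # Matching II: relabel every z with the label of maximum expected reward.
        new_labels = []
        for k in range(len(labels)):
            joint_xz = [p_x[i] * p_z_given_x[i][k] for i in range(n_x)]
            p_z = sum(joint_xz)
            reward = [sum(joint_xz[i] / p_z * info[i][j] for i in range(n_x))
                      for j in range(n_y)]
            new_labels.append(max(range(n_y), key=lambda j: reward[j]))
        if new_labels == labels:
            break  # the partition no longer changes
        labels = new_labels
    return labels, history

zs = list(range(61))                                   # hypothetical test readings
p_x = [0.8, 0.2]                                       # x0 = healthy, x1 = ill
p_z_given_x = [normalized_gauss(zs, 20.0, 8.0), normalized_gauss(zs, 40.0, 8.0)]
initial_labels = [0 if z < 5 else 1 for z in zs]       # a deliberately bad partition

final_labels, mi_history = channels_matching(p_x, p_z_given_x, initial_labels)
print(mi_history)
```

The printed sequence is the mutual information of each visited partition, so it shows how quickly the iteration leaves the bad initial partition without using gradients.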

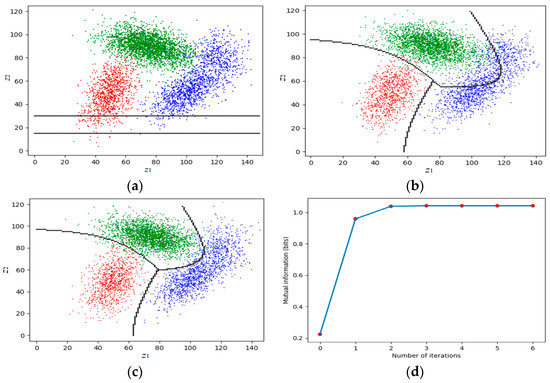

Figure 6 shows an example. The detailed data can be found in Section 4.2 of [13]. The two lines in Figure 6a represent the initial partition. Figure 6d shows that the convergence is very fast.

Figure 6.

The MaxMI classification with a very bad initial partition. The convergence is very fast and stable without considering gradients. (a) The very bad initial partition. (b) The partition after the first iteration. (c) The partition after the second iteration. (d) The mutual information changes with iterations.

4.4.3. Explaining and Improving the EM Algorithm for Mixture Models

We know that P(x) = ∑j P(yj)P(x|yj). For a given sampling distribution P(x), we use the mixture model Pθ(x) = ∑j P(yj)P(x|θj) to approximate to P(x), making relative entropy H(P‖Pθ) close to 0. After setting the initial P(x|θj) and P(yj), j = 1, 2, …, we do the following iterations, each of which includes two matching steps (for details, see [13,73]):

Matching 1: Let the Shannon channel P(y|x) match the semantic channel by repeating the following two formulas n times:

Of all the above steps, only the first step that changes θj increases or decreases R; other steps only reduce R.

Matching 2: Let the semantic channel match the Shannon channel to maximize G by letting

Repeat the iterations until neither θ nor H(P‖Pθ) can be improved.

For the convergence proof, we can deduce [13]:

This formula can also be used to explain pre-training in deep learning.

Since Matching 2 maximizes G, and Matching 1 minimizes R and H(P+1(y)‖P(y)), H(P‖Pθ) can approach 0.

The above algorithm can be called the EnM algorithm, in which we repeat Equation (44) n or fewer times (such as n ≤ 3) for P+1(y) ≈ P(y). The EnM algorithm can perform better than the EM algorithm in most cases. Moreover, the convergence proof can help us avoid blind improvements.
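A minimal sketch of the EnM iteration for a one-dimensional Gaussian mixture follows. It assumes that Matching 1 repeats P(yj|x) = P(yj)P(x|θj)/Pθ(x) and P+1(yj) = ∑i P(xi)P(yj|xi), and that Matching 2 sets P(x|θj) to the Gaussian with the mean and variance of P(x)P(yj|x)/P+1(yj). The sampling distribution is built on an integer grid from the parameters quoted for Figure 7; the grid range, the number of outer iterations, and the underflow floor TINY are choices made only for this sketch.

```python
import math

TINY = 1e-300  # floor that guards against division by zero after underflow

def gauss_on_grid(xs, mu, sigma):
    """Discrete P(x|theta_j) on the grid xs, shaped like a Gaussian."""
    w = [math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) for x in xs]
    total = sum(w)
    return [v / total for v in w]

def mixture(p_y, comps):
    return [max(sum(p_y[j] * comps[j][i] for j in range(len(p_y))), TINY)
            for i in range(len(comps[0]))]

def relative_entropy(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def enm(p_x, xs, mus, sigmas, p_y, n_inner=3, n_outer=50):
    """n_inner = 1 gives the usual EM step; n_inner = 3 gives E3M."""
    mus, sigmas, p_y = list(mus), list(sigmas), list(p_y)
    n_y, n_x = len(p_y), len(xs)
    comps = [gauss_on_grid(xs, mus[j], sigmas[j]) for j in range(n_y)]
    history = [relative_entropy(p_x, mixture(p_y, comps))]
    for _ in range(n_outer):
        # Matching 1: let the Shannon channel P(y|x) match the semantic channel.
        for _ in range(n_inner):
            mix = mixture(p_y, comps)
            post = [[p_y[j] * comps[j][i] / mix[i] for j in range(n_y)]
                    for i in range(n_x)]
            p_y = [sum(p_x[i] * post[i][j] for i in range(n_x)) for j in range(n_y)]
        # Matching 2: refit every Gaussian to P(x) P(y_j|x) / P+1(y_j).
        for j in range(n_y):
            w = [p_x[i] * post[i][j] / p_y[j] for i in range(n_x)]
            mus[j] = sum(wi * x for wi, x in zip(w, xs))
            sigmas[j] = math.sqrt(sum(wi * (x - mus[j]) ** 2 for wi, x in zip(w, xs)))
        comps = [gauss_on_grid(xs, mus[j], sigmas[j]) for j in range(n_y)]
        history.append(relative_entropy(p_x, mixture(p_y, comps)))
    return mus, sigmas, p_y, history

xs = list(range(30, 201))
true_comps = [gauss_on_grid(xs, 100.0, 10.0), gauss_on_grid(xs, 125.0, 10.0)]
p_x = [0.7 * a + 0.3 * b for a, b in zip(true_comps[0], true_comps[1])]

mus_fit, sigmas_fit, p_y_fit, kl_history = enm(
    p_x, xs, mus=[80.0, 95.0], sigmas=[5.0, 5.0], p_y=[0.5, 0.5])
print(kl_history[0], kl_history[-1])
```

Calling `enm` with `n_inner=1` and with `n_inner=3` on the same initialization gives one way to compare EM and E3M. The iteration counts reported for Figure 7 come from the original experiments, not from this sketch.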

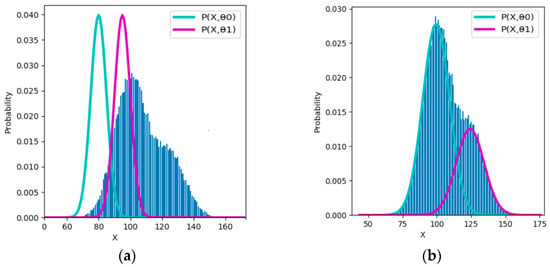

Figure 7 shows an example of Gaussian mixture models for comparing the EM and E3M algorithms. The real model parameters are (µ1, µ2, σ1, σ2, P(y1)) = (100, 125, 10, 10, 0.7).

Figure 7.

Comparing EM and E3M algorithms with an example that is hard to converge. The EM algorithm needs about 340 iterations, whereas the E3M algorithm needs about 240 iterations. In the convergent process, complete data log-likelihood Q is not monotonically increasing. H(P||Pθ) decreases with R − G. (a) Initial components with (µ1, µ2) = (80, 95). (b) Globally convergent two components. (c) Q, R, G, and H(P||Pθ) change with iterations (initialization: (µ1, µ2, σ1, σ2, P(y1)) = (80, 95, 5, 5, 0.5)).

This example reveals that the EM algorithm can converge only because semantic or predictive mutual information G and ShMI R approach each other, not because the complete data’s log-likelihood Q continuously increases. The detailed discussion can be found in Section 3.3 of [73].

Note that how many iterations are needed mainly depends on the initial parameters. For example, suppose we use initialization (µ1, µ2, σ1, σ2, P(y1)) = (105, 120, 5, 5, 0.5) according to the fair competition principle [73]. In that case, the EM algorithm needs about four iterations, whereas the E3M algorithm needs about three iterations, on average. Ref. [73] provides an initialization map, which can tell if a pair of initial means (µ1, µ2) is good.

Refs. [13,73] provide more examples for testing the EnM algorithm.

5. Discussion 1: Clarifying Some Questions

5.1. Is Mutual Information Maximization a Good Objective?

Some researchers [3,24] actually maximize EMI, but they explain that we need to maximize ShMI. Since EMI is the lower limit of ShMI, we can reach maximum ShMI by maximizing EMI. However, Tschannen et al. doubt that ShMI maximization is a good objective [25]. The author thinks their doubt deserves attention.

From the perspective of the G theory, it is incorrect to say that EMI is the lower limit of ShMI because EMI changes with ShMI when we change the Shannon channel. If we optimize model parameters to maximize EMI, the Shannon channel and ShMI do not change. Generally, SeMI or EMI maximization is our purpose. In some cases, we need to maximize ShMI only because ShMI is the upper limit of SeMI. In the following, we discuss information maximization in different circumstances.

For supervised learning, such as multi-label learning (X—>Y), in the learning stage, the sampling distribution P(x, y) constrains ShMI, which is the upper limit of SeMI. This upper limit cannot be increased. However, in the classification stage, we classify x according to truthlikeness functions mθ(x, yj), j = 1, 2, … to maximize ShMI and SeMI simultaneously. According to the R(G) function, the classification is to make s—>∞, so that P(yj|x) = 1 or 0 (see Equation (38)), and both R and G reach the highest point on the right side of the R(G) curve (see Figure 4). When s increases from 1, information efficiency G/R decreases. Sometimes, we have to balance between maximizing G and maximizing G/R.

For semi-supervised learning, such as the MaxMI classification of unseen instances (denoted as X—>Z—>Y, see Figure 5), SeMI is a reward function, and ShMI is its upper limit. We increase SeMI by maximizing ShMI, and, finally, we maximize both.

The following section discusses the maximization or minimization of R and G in deep learning.

Why is ShMI alone not suitable as an objective function in semantic communication? This is because it has nothing to do with right or wrong. For example, we classify people into four classes with labels: y1 = “children”, y2 = “youth”, y3 = “adult”, and y4 = “elderly”, according to their ages x. ShMI reaches its maximum when the classification makes P(y1) = P(y2) = P(y3) = P(y4) = 1/4. However, this is not our purpose. In addition, even if we exchange the labels of two age groups {children} and {elderly}, ShMI does not change. However, if labels are misused, the SeMI will be greatly reduced or negative. Therefore, it is problematic to maximize ShMI alone. However, SeMI maximization can ensure less distortion and more predictive information and is equivalent to likelihood maximization and compatible with the RLS criterion. In addition, ShMI maximization is not a good objective because using Shannon’s posterior entropy H(Y|X) as the loss function is improper (see Section 5.4).
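The label-exchange argument can be checked numerically. The sketch below uses four age groups with equal priors, a Shannon channel that mostly selects the correct label, and fuzzy truth functions that stay fixed when the labels for children and elderly are exchanged. All numbers are hypothetical; SeMI is computed as the average of log[T(θj|xi)/T(θj)] over P(x, y).

```python
import math

def shmi_and_semi(p_x, chan, truth):
    """Return (ShMI, SeMI) in bits for Shannon channel P(y|x) and truth functions."""
    n_x, n_y = len(chan), len(chan[0])
    p_y = [sum(p_x[i] * chan[i][j] for i in range(n_x)) for j in range(n_y)]
    t_theta = [sum(p_x[i] * truth[i][j] for i in range(n_x)) for j in range(n_y)]
    shmi = semi = 0.0
    for i in range(n_x):
        for j in range(n_y):
            p = p_x[i] * chan[i][j]
            if p > 0.0:
                shmi += p * math.log2(chan[i][j] / p_y[j])
                semi += p * math.log2(truth[i][j] / t_theta[j])
    return shmi, semi

# Four age groups x1..x4 (children, youth, adult, elderly) with equal priors.
p_x = [0.25, 0.25, 0.25, 0.25]

# Correct usage: label y_j is selected for group x_j with probability 0.85.
chan_correct = [[0.85 if i == j else 0.05 for j in range(4)] for i in range(4)]
# Misuse: children are labeled as the elderly used to be, and vice versa.
chan_swapped = [chan_correct[3], chan_correct[1], chan_correct[2], chan_correct[0]]

# The meaning of the labels does not change: T(theta_j|x_i) is 1 on the right group.
truth = [[1.0 if i == j else 0.1 for j in range(4)] for i in range(4)]

shmi_correct, semi_correct = shmi_and_semi(p_x, chan_correct, truth)
shmi_swapped, semi_swapped = shmi_and_semi(p_x, chan_swapped, truth)
print(shmi_correct, semi_correct)
print(shmi_swapped, semi_swapped)
```

ShMI is identical in both cases because it depends only on the statistical dependence between X and Y, whereas SeMI turns negative once two labels are misused.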

5.2. Interpreting DNNs: The R(G) Function vs. the Information Bottleneck

We take the AutoEncoder as an example to discuss the changes in ShMI and SeMI in self-supervised learning. The AutoEncoder has a structure X → Y → X̂, where X is an input image, Y is a low-dimensional representation of X, and X̂ is the estimate of X. The successful learning method [69] is that first, we pre-train the network parameters of a multi-layer RBM from X to Y, similar to solving a mixed model, to minimize the relative entropy H(P‖Pθ). Then we decode Y into X̂ and fine-tune the network parameters between X and X̂ to minimize the loss with the RLS criterion, like maximizing EMI I(X; θ).

From the perspective of the G theory, pre-training is to solve the mixed model so that R = G between X and Y, and the relative entropy is close to 0. Fine-tuning is to maximize R and G. In terms of the R(G) function (see Figure 4), pre-training is to find the point where R = G and s = 1. Fine-tuning increases R and G so that s = smax by reducing the distortion between X and X̂.

Can we increase s to improve I(X; X̂)? Theoretically, it is feasible. With T(θj|x) = exp[−sd(x, yj)] (s > 0), increasing s is to narrow the coverage of the truth function (or the membership function of fuzzy set θj). If both x and xj are elements in the fuzzy set θj, the average distortion between them will also be reduced. Whether increasing s is enough remains to be tested.

According to the information bottleneck theory [27], to optimize a DNN with structure X → T1 → … → Tm → Y, such as a DBN, we need to minimize I(Ti-1; Ti) − βI(Y; Ti-1|Ti) (i = 1, 2, …, m), like solving an R(D) function. This idea is very inspiring. However, the author believes that every latent layer (Ti) needs its SeMI maximization and ShMI minimization. The reasons are:

- DNNs often need pre-training and fine-tuning. In the pre-training stage, the RBM is used for every latent layer.

- The RBM is like the EM algorithm for mixture models [77,78]. The author has proved (see Equation (46)) that we need to maximize SeMI and minimize ShMI to make mixture models converge.

On the other hand, the information bottleneck theory maximizes ShMI between X and X̂ or Y and Ŷ. In contrast, the G theory suggests maximizing SeMI between X and X̂ or Y and Ŷ.

5.3. Understanding Gibbs Distributions, Partition Functions, MinMI Matching, and RBMs

In statistical mechanics, the Gibbs distribution can be expressed as a distribution over different phase lattices or states:

It can also be expressed as a distribution over different energy levels:

We can understand energy as distortion and exp[−ei/(kT)] as the truth function. For machine learning, to predict the posterior probability of xi, we have:

which is similar to Equation (48).

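Logical probability T(θj) is the partition function Z. If we put T(θj|x) = m(x, yj)/mmj into T(θj), there is

$$T(\theta_j) = \sum_i P(x_i)\,\frac{m(x_i, y_j)}{mm_j} = \frac{1}{mm_j}\sum_i P(x_i|y_j) = \frac{1}{mm_j}. \tag{50}$$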

From Equations (49) and (50), we derived P(x|θj) = P(x|yj). We can see that the summation for T(θj) is only to get T(θj) = 1/mmj so that 1/mmj in the numerator and the denominator of the Gibbs distribution are eliminated simultaneously. Then the distribution P(x|θj) approximates to P(x)m(x, yj) = P(x|yj). It does not matter how big mmj is.

Some researchers use the SoftMax function to express learning function P(x, y|θj) and the probability prediction, such as P(x, y|θj) = exp[−d(x, yj)] and

This expression is very similar to that of mθ(x, yj) in the semantic information method, but they are different. In the semantic information method,

in which there are P(x) and P(xk).

Given P(x) and semantic channel T(y|x), we can let P(yj|x) = kT(yj|x) for MinMI matching and then obtain P(yj) = kT(yj), k = 1/∑j T(yj). Hence,

Note that if T(y|x) also needs optimization, we can only use the MinMI iteration (see Section 6.2). If we use the above formula, T(y|x) cannot be further optimized.

In addition, for a given m(x, y), P(x) and P(y) are related; we cannot simply let P(x, y) = P(x)m(x, y)P(y) because new P(y) may not be normalized, that is,

Nevertheless, we can fix one of P(x) and P(y) to get another. For example, given P(x) and m(x, y), we first obtain T(yj|x) = m(x, yj)/mmj, then use Equation (53) to get P(y).

Equation (54) can help us further understand the RBM. An RBM with structure V → H [60] contains a set of parameters: θ = {ai, wij, bj|i = 1, 2, …, n; j = 1, 2, …, m}. Parameters ai, wij, and bj are associated with P(vi), mθ(vi, hj), and P(hj), respectively. Optimizing {wij} (weights) improves mθ(v, h) and maximizes SeMI, and optimizing {bj} improves P(h) and minimizes ShMI. Alternate optimization makes SeMI and ShMI close to each other.

5.4. Understanding Fuzzy Entropy, Coverage Entropy, Distortion, and Loss

We assume that θ is a crisp set to see the properties of coverage entropy H(Yθ). We take age x and the related classification label y as an example to explain the coverage entropy.

Suppose the domain U of x is divided into four crisp subsets with boundaries: 15, 30, and 55. The labels are y1 = “Children”, y2 = “Youth”, y3 = “Middle-aged people”, and y4 = “Elderly”. The four subsets constitute a partition of U. We divide U again into two subsets according to whether x ≥ 18 and add labels y5 = “Adult” and y6 = “Non-adult”. Then six subsets constitute the coverage of U.

The author has proved [16] that the coverage entropy H(Yθ) represents the MinMI of decoding y to generate P(x) for given P(y). The constraint condition is that P(x|yj) = 0 for x ∉ θj. Some researchers call this MinMI the complexity distortion [79]. A simple proof method is to make use of the R(D) function. We define the distortion function:

Then we obtain

If the above subsets are fuzzy, and the constraint condition is P(x|yj) ≤ P(x|θj) for T(θj|x) < 1, then the minimum ShMI equals the coverage entropy minus the fuzzy entropy, that is, R = I(X; Y) = H(Yθ) − H(Yθ|X) [18].

Shannon’s distortion function also ascertains a semantic information quantity. Given the source P(x) and the distortion function d(x, y), we can get the truth function T(θj|x) = exp[−d(x, yj)] and the logical probability T(θj) = Zj. Then letting P(yj|x) = kT(θj|x), we can get P(yj|x) = exp[−d(x, yj)]/∑jT(θj). Furthermore, we have I(X; Y) = I(X; Yθ) = H(Yθ) − d̄, where d̄ is the average distortion. This means that the minimum ShMI for a given distortion limit can be expressed as SeMI, and the SeMI decreases with the average distortion increasing.

Many researchers also use Shannon’s posterior entropy H(Y|X) as the average distortion (or loss) or −logP(y|xi) as the distortion function. This usage shows a larger distortion when P(y|xi) is much less than 1. However, from the perspective of semantic communication, a smaller P(y|xi) does not mean larger distortion. For example, with ages, when the above six labels are used on some occasions, y6 = “Non-adult” is rarely used, so P(y6|x < 18) is very small, and hence −logP(y6|x < 18) is very large. However, there is no distortion because T(θ6|x < 18) = 1. Therefore, using H(Y|X) to express distortion or loss is often unreasonable. In addition, it is easy to understand that for a given xi, the distortion of a label has nothing to do with the frequency with which the label is selected, whereas P(y|xi) is related to P(y).

Using DKL to represent distortion or loss [27] has a similar problem, whereas using semantic KL information to represent negative loss is appropriate.

Since SeMI or EMI can be written as the coverage entropy minus the average distortion, it can be used as a negative loss function.

5.5. Evaluating Learning Methods: The Information Criterion or the Accuracy Criterion?

In the author’s opinion, there is an extraordinary phenomenon in the field of machine learning: researchers use the maximum likelihood, minimum cross-entropy, or maximum EMI criterion to optimize model parameters, but in the end, they prove that their methods are good because they have high classification accuracies for open data sets. Given this, why do they not use the maximum accuracy or minimum distortion criterion to optimize model parameters? Of course, one may explain that the maximum accuracy criterion cannot guarantee the robustness of the classifier; a classifier may be ideal for one source P(x) but not suitable for another. However, can the robustness of classifiers be fairly tested with open data sets?

The author believes that using information criteria (including the maximum likelihood criterion) is not only for improving robustness but also for reducing the underreporting of small probability events or increasing the detection rate of small probability events. The reason is that the information loss due to failing to report smaller probability events is greater in general; the economic loss is also greater in general, such as in medical tests, X-ray image recognitions, weather forecasts, earthquake predictions, etc.

How do we resolve the inconsistency between learning and evaluation criteria? Using information criteria to evaluate various methods is best, but it is not feasible in practice. Therefore, the author suggests that in cases where overall accuracies are not high, we also check the accuracy of small probability events in addition to the overall accuracy. Then we use the vector composed of two accuracies to evaluate different learning methods.
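The suggested two-component evaluation can be sketched as follows. This is only an illustration under stated assumptions: a class counts as a "small probability event" when its relative frequency among the true labels is below a hypothetical threshold `rare_threshold`, and the accuracy of small probability events is read as the fraction of rare-class samples that are predicted correctly. The function name and the toy data are hypothetical.

```python
from collections import Counter

def accuracy_pair(y_true, y_pred, rare_threshold=0.1):
    """Return (overall accuracy, accuracy on rare-class samples, rare classes)."""
    n = len(y_true)
    counts = Counter(y_true)
    # Classes whose relative frequency falls below the (assumed) threshold.
    rare = {c for c, k in counts.items() if k / n < rare_threshold}
    overall = sum(t == p for t, p in zip(y_true, y_pred)) / n
    rare_pairs = [(t, p) for t, p in zip(y_true, y_pred) if t in rare]
    if rare_pairs:
        rare_acc = sum(t == p for t, p in rare_pairs) / len(rare_pairs)
    else:
        rare_acc = float("nan")  # no rare class present
    return overall, rare_acc, rare

# Hypothetical test: 95 negatives, 5 positives, and a classifier
# that always answers "neg".
y_true = ["neg"] * 95 + ["pos"] * 5
y_pred = ["neg"] * 100
overall, rare_acc, rare = accuracy_pair(y_true, y_pred)
print(overall, rare_acc, rare)
```

In this toy case the overall accuracy looks high while the second component exposes that every small probability event is missed, which is the situation the vector of two accuracies is meant to reveal.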

6. Discussion 2: Exploring New Methods for Machine Learning

6.1. Optimizing Gaussian Semantic Channels with Shannon’s Channels

It is difficult to train the truth function expressed as SoftMax functions with samples or sampling distributions, where Gradient Descent is usually used. Suppose we can convert SoftMax function learning into Gaussian function learning without considering gradients. In that case, the learning will be as simple as the EM algorithm of the Gaussian mixture models.

Suppose that P(yj|x) is proportional to a Gaussian function. Then, P(yj|x)/∑kP(yj|xk) is a Gaussian distribution. We can assume P(x) = 1/|U| (|U| is the number of elements in U) and then use P(yj|x) to optimize T(θj|x) by maximizing semantic KL information:

Obviously, I(X; θj) reaches its maximum as T(θj|x)∝P(yj|x), which means that we can use the expectation μj and the standard deviation σj of P(yj|x) as those of T(θj|x).

In addition, if we only know P(x|yj) and P(x), we can replace P(yj|x) with m(x, yj) = P(x|yj)/P(x), and then optimize the Gaussian truth function. However, this method requires that no x makes P(x) = 0. For this reason, we need to replace P(x) with an uninterrupted distribution close to P(x).

The above method of optimizing Gaussian truth functions does not need Gradient Descent.
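A minimal sketch of this gradient-free fitting is given below. It assumes a one-dimensional grid for x, P(x) = 1/|U|, and the semantic KL information in the form I(X; θj) = ∑i P(xi|yj) log[T(θj|xi)/T(θj)] with P(x|yj) = P(yj|x)/∑kP(yj|xk). The function names and the example channel are hypothetical.

```python
import math

def fit_gaussian_truth(xs, p_y_given_x):
    """Moment-match a Gaussian truth function to P(y_j | x) on the grid xs."""
    total = sum(p_y_given_x)
    w = [p / total for p in p_y_given_x]  # P(y_j|x) / sum_k P(y_j|x_k)
    mu = sum(wi * x for wi, x in zip(w, xs))
    sigma = math.sqrt(sum(wi * (x - mu) ** 2 for wi, x in zip(w, xs)))
    # Gaussian truth function with maximum 1 (no normalizing constant).
    truth = [math.exp(-(x - mu) ** 2 / (2 * sigma ** 2)) for x in xs]
    return mu, sigma, truth, w

def semantic_kl(w, truth):
    """I(X; theta_j) in bits, assuming P(x) = 1/|U|."""
    t_theta = sum(truth) / len(truth)  # T(theta_j) under the uniform prior
    return sum(wi * math.log2(t / t_theta) for wi, t in zip(w, truth) if wi > 0)

# Hypothetical channel: P(y_j|x) proportional to a Gaussian centered at 40.
xs = list(range(101))
p_yj_given_x = [0.6 * math.exp(-(x - 40) ** 2 / (2 * 8 ** 2)) for x in xs]
mu, sigma, truth, w = fit_gaussian_truth(xs, p_yj_given_x)

# A truth function with the same width but a shifted center, for comparison.
shifted = [math.exp(-(x - 45) ** 2 / (2 * sigma ** 2)) for x in xs]
print(mu, sigma, semantic_kl(w, truth), semantic_kl(w, shifted))
```

The fitted parameters are obtained by two weighted sums only, and the matched truth function yields more semantic KL information than the shifted one, in line with the claim that the maximum is reached when T(θj|x)∝P(yj|x).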

6.2. The Gaussian Channel Mixture Model and the Channel Mixture Model Machine

So far, only the mixture model that consists of likelihood functions, such as Gaussian likelihood functions, has been used. However, we can also use Gaussian truth functions to construct the Gaussian Channel Mixture Model (GCMM).

We can also use the EnM algorithm for the GCMM. Matching 1 and Matching 2 become:

Matching 1: Let the Shannon channel match the semantic channel by using P(x|θj) = P(x)T(θj|x)/T(θj) and repeating Equation (44) n times.

Matching 2: Let the semantic channel match the Shannon channel by letting:

which means we can use the expectation and the standard deviation of P(yj|x) or P(x)T(θj|x)/Pθ(x) as those of T(θj+1|x).

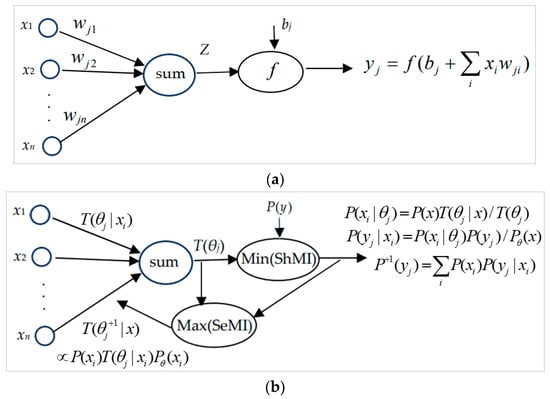

We can set up a neural network working as a channel mixture model and call it the Channel Mixture Model Machine (CMMM). Figure 8 includes a typical neuron and a neuron in the CMMM. In Figure 8b, the truth value T(θj|xi) is used as weight wji. The input may be xi, a vector x, or a distribution P(x), for which we use different methods to obtain the same T(θj).

Figure 8. Comparing a typical neuron and a neuron in a CMMM. (a) A typical neuron in neural networks. (b) A neuron in the CMMM and its optimization.

As shown in Figure 8a, f is an activation function in a typical neuron. If bj = P(yj) − T(θj) ≤ 0 and f is a ReLU function, the two neurons will be equivalent.

We only need to repeatedly assign values to optimize the model parameters in the CMMM. The initial truth values or weights may be between 0 and a positive number c because replacing T(θj|x) with cT(θj|x) does not change P(x|θj).
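The scale invariance mentioned above follows directly from the semantic Bayes formula P(x|θj) = P(x)T(θj|x)/T(θj), because the factor c appears in both the numerator and T(θj). A small numerical check, with hypothetical values for P(x), T(θj|x), and c:

```python
def semantic_bayes(p_x, truth):
    """P(x | theta_j) = P(x) T(theta_j | x) / T(theta_j)."""
    t_theta = sum(p * t for p, t in zip(p_x, truth))  # logical probability
    return [p * t / t_theta for p, t in zip(p_x, truth)]

# Hypothetical prior and truth values (weights) for one neuron.
p_x = [0.1, 0.2, 0.4, 0.3]
truth = [0.05, 0.5, 1.0, 0.3]
c = 3.7  # any positive constant

base = semantic_bayes(p_x, truth)
scaled = semantic_bayes(p_x, [c * t for t in truth])
print(base)
print(scaled)  # same distribution up to rounding
```

This is why the initial weights do not need to be confined to [0, 1]: only the shape of T(θj|x) over x matters for the resulting P(x|θj).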

The author’s preliminary experiments show that a CMMM works as expected. The convergence is very fast. Optimizing weights only needs 3–4 iterations. We need more experiments to test whether we can use CMMMs or Gaussian CMMMs (weight distributions are limited to Gaussian functions) to replace RBMs.

6.3. Calculating the Similarity between any Two Words with Sampling Distributions

Considering the similarity between two words, x and y, we can get the similarity from a sampling distribution:

where max[m(x, y)] is the maximum of all m(x, y). The similarity obtained in this way is symmetrical. This similarity can be regarded as relative truthlikeness. The advantages of this method are:

- It is simple without needing the semantic structure such as that in WordNet;

- This similarity is similar to the improved PMI similarity [65] which varies between 0 and 1;

- This similarity function is suitable for probability predictions.

The disadvantage of this method is that the semantic meaning of the similarity function may be unclear. We can limit x and y in different and smaller domains and get asymmetrical truth functions (see Equation (34)) that can better indicate semantic meanings.
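As an illustration only, the sketch below computes such a similarity from a table of co-occurrence counts. The displayed formula is not reproduced here, so the code assumes S(x, y) = m(x, y)/max[m(x, y)] with m(x, y) = P(x, y)/[P(x)P(y)], which is consistent with the description of max[m(x, y)] above. The word list and the counts are hypothetical, and every word is assumed to occur at least once.

```python
def similarity_matrix(joint_counts):
    """S(x, y) = m(x, y) / max m, with m(x, y) = P(x, y) / (P(x) P(y)).

    joint_counts is a square table of co-occurrence counts over one vocabulary.
    """
    n = len(joint_counts)
    total = sum(sum(row) for row in joint_counts)
    p = [[v / total for v in row] for row in joint_counts]  # sampling distribution
    p_x = [sum(p[i]) for i in range(n)]                       # row marginals
    p_y = [sum(p[i][j] for i in range(n)) for j in range(n)]  # column marginals
    m = [[p[i][j] / (p_x[i] * p_y[j]) for j in range(n)] for i in range(n)]
    m_max = max(max(row) for row in m)
    return [[v / m_max for v in row] for row in m]

# Hypothetical symmetric co-occurrence counts for three words.
words = ["cat", "dog", "car"]
counts = [[10, 8, 1],
          [8, 12, 2],
          [1, 2, 20]]
S = similarity_matrix(counts)
for word, row in zip(words, S):
    print(word, [round(v, 3) for v in row])
```

With symmetric counts the resulting matrix is symmetric and its largest entry is 1, so the values can be read as relative truthlikeness between 0 and 1.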

Some semantic similarity measures, such as Resnik’s similarity measure [23], which is based on the structure of WordNet, reflect the philosophical thought that a hypothesis with less logical probability can carry more information content. They are similar to truthlikeness mθ(x, y) and suitable for classification, whereas S(x, yj) defined above is suitable for probability predictions. We can convert S(x, yj) with P(x) into the truthlikeness function for classification.

Latent Semantic Analysis (LSA) has been successfully used in natural language processing and the Transformer. How to combine the semantic information method with LSA to get better results is worth exploring.

6.4. Purposive Information and the Information Value as Reward Functions for Reinforcement Learning

The G measure can be used to measure purposive information (about how the result accords with the purpose) [80]. For example, a passenger and a driver in a car get information from a GPS map about how far they are from their destination. For the passenger, it is purposive information, while for the driver, it is the feedback information for control. If we train the machine to drive, the purposive information can be used as a reward function for reinforcement learning.

Generally, control systems use error as the feedback signal, but Wiener proposes using entropy to indicate the uncertainty of control results. Sometimes we need both accuracy and precision. The G measure meets this purpose.

Researchers have used Shannon information theory for constraint control and reinforcement learning [81,82]. ShMI (or DKL) represents necessary control complexity. Given distortion D, the smaller the mutual information R, the higher the control efficiency. When we use the G theory for constraint control and reinforcement learning, the SeMI measure can be used as the reward function, and G/R represents the control efficiency. We explain reinforcement learning as being like driving a car to a destination. We need to consider:

- Choosing an action a to reach the destination;

- Learning the system state’s change from P(x) to P(x|a);

- Setting the reward function, which is a function of the goal, P(x), and P(x|a).

Some problems that reinforcement learning needs to resolve are:

- How to get P(x|a) or P(x|a, h) (h means the history)?

- How to choose an action a according to the system state and the reward function?

- How to achieve the goal economically, that is, to balance the reward maximization and the control-efficiency maximization?

In the following, we suppose that P(x|a) is already known; only the second and third problems need to be resolved.

Sometimes, the goal is not a point but a fuzzy range for the control of uncertain events. For example, a country, whose average death age is 50, sets a goal: yj = “The death ages of the population had better not be less than 60 years old”. Suppose that the control method is to improve medical conditions. In this case, Shannon information represents the control cost, and the semantic information indicates the control effect. Although we can raise the average death age to 80 at a higher cost, it is uneconomical in terms of control efficiency. There is enough purposive information when the average death age reaches about 62. The author in [80] discusses this example.

Assume that the goal is expressed as the logistic function T(θj|x) = 1/[1 + exp(−0.8(x − 60))] (see Figure 9). The prior distribution P(x) is normal (μ = 50 and σ = 10), and the control result P(x|aj) of a medical condition aj is also normal. The purposive information or the reward function of aj is:

Figure 9. Illustrating population death age control for measuring purposive information. P(x|aj) approximates to P(x|θj) = P(x|θj, s = 1) for information efficiency G/R = 1. G and R are close to their maxima as P(x|aj) approximates to P(x|θj, s = 20).

We can let P(x|aj) approximate to P(x|θj) by changing the μj and σj of P(x|aj) to get the minimum KL information I(X; aj) so that information efficiency I(X; θj|aj)/I(X; aj) is close to 1. In addition, we can increase s in the following formula to increase both SeMI and ShMI:

The information efficiency will decrease with s increasing from 1. So, we need the trade-off between information efficiency maximization and purposive information maximization.

The author, in [80], provides the computing results for this example. We assume Y = yj, then SeMI equals I(X; θj|aj), and ShMI equals I(X; aj). The results indicate that the highest information efficiency G/R is 0.95 when P(x|aj) approximates to P(x|θj). When s increases from 1 to 20, the purposive information G increases from 2.08 bits to 3.13 bits, but the information efficiency G/R decreases from 0.95 to 0.8. To balance G and G/R, we should select s between 5 and 15.
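The trade-off in this example can be explored with the sketch below. It is not the computation reported in [80]. It assumes a discretized age axis, the purposive information in the form I(X; θj|aj) = ∑i P(xi|aj) log[T(θj|xi)/T(θj)], ShMI as the KL information between P(x|aj) and P(x), and the family P(x|θj, s) ∝ P(x)T(θj|x)^s as the target distribution. The grid, the function names, and the normal control result used at the end are hypothetical, and the printed values are not expected to reproduce the figures quoted above.

```python
import math

def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def normalize(weights):
    total = sum(weights)
    return [v / total for v in weights]

XS = [0.5 * k for k in range(241)]                         # ages 0..120, half-year steps
P_X = normalize([normal_pdf(x, 50, 10) for x in XS])       # prior P(x)
TRUTH = [1 / (1 + math.exp(-0.8 * (x - 60))) for x in XS]  # goal T(theta_j|x)
T_THETA = sum(p * t for p, t in zip(P_X, TRUTH))           # logical probability

def semi_and_shmi(p_x_given_a):
    """Return (G, R) in bits for a control result P(x|a_j) on the grid."""
    g = sum(q * math.log2(t / T_THETA) for q, t in zip(p_x_given_a, TRUTH) if q > 0)
    r = sum(q * math.log2(q / p) for q, p in zip(p_x_given_a, P_X) if q > 0)
    return g, r

def target(s):
    """Assumed target family P(x|theta_j, s), proportional to P(x) T(theta_j|x)^s."""
    return normalize([p * t ** s for p, t in zip(P_X, TRUTH)])

g1, r1 = semi_and_shmi(target(1))
g20, r20 = semi_and_shmi(target(20))
print("s = 1 :", g1, r1, g1 / r1)
print("s = 20:", g20, r20, g20 / r20)

# A normal control result with hypothetical parameters mu_j = 65, sigma_j = 5.
g_n, r_n = semi_and_shmi(normalize([normal_pdf(x, 65, 5) for x in XS]))
print("normal:", g_n, r_n, g_n / r_n)
```

When the control result equals P(x|θj, s = 1), G and R coincide, so the efficiency is 1. For any other P(x|aj), the difference R − G is the KL divergence between P(x|aj) and P(x|θj), which is non-negative. This is why raising s buys purposive information only at a lower efficiency.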

If there are multiple goals, we must optimize P(aj) = P(yj), j = 1, 2, … according to Equation (38).

Reinforcement learning is also related to information value.

Cover and Thomas have employed the information-theoretic method to optimize the portfolio [83]. The author has used semantic information to optimize the portfolio [84]. The author obtained the value-added entropy formula and the information value formula (see Appendix B for details):

Hv(R, q|θj) is the value-added entropy, where q is the vector of portfolio ratios, Ri(q) is the return of the portfolio with q when the i-th price vector xi appears, and R is the return vector. V(X; θj) is the predictive information value, where X is a random variable taking x as its value, q* is the optimized vector of portfolio ratios according to the prior distribution P(x), and q** is that according to prediction P(x|θj). V(X; θj) can be used as a reward function to optimize probability predictions and decisions. However, to calculate the actual information value (in the learning stage), we need to replace P(x|θj) with P(x|yj) in Equation (63).

From the value-added entropy formula, we can derive some useful formulas. For example, if an investment will result in two possible results, yield rate r1 < 0 with probability P1 and yield rate r2 > 0 with probability P2, the optimized investment ratio is

q* = E/|r1r2|,

where E is the expected yield rate. If r1 = −1, we have q* = P2 − P1/r2, which is the famous Kelly formula. For the above formula, we assume that the risk-free rate r0 = 0; otherwise, there is

q* = E0R0/|r10r20|,

where E0 = E − r0, r10 = r1 − r0, r20 = r2 − r0.
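The two closed-form ratios can be checked against a direct maximization of the expected logarithmic growth. The sketch below assumes E = P1r1 + P2r2, that the uninvested fraction 1 − q earns the risk-free rate r0, and that R0 = 1 + r0. The last assumption is not stated in this section. The function names and the numbers are hypothetical.

```python
import math

def optimal_ratio(p1, r1, p2, r2, r0=0.0):
    """Closed form q* = E0 * R0 / |r10 * r20|; equals E / |r1 * r2| when r0 = 0."""
    e0 = p1 * r1 + p2 * r2 - r0  # expected excess yield rate E0 = E - r0
    r10, r20 = r1 - r0, r2 - r0
    gross_r0 = 1.0 + r0          # assumption: R0 = 1 + r0
    return e0 * gross_r0 / abs(r10 * r20)

def expected_log_growth(q, p1, r1, p2, r2, r0=0.0):
    """Expected log wealth when a fraction q is invested and 1 - q earns r0."""
    w1 = 1.0 + r0 + q * (r1 - r0)
    w2 = 1.0 + r0 + q * (r2 - r0)
    return p1 * math.log(w1) + p2 * math.log(w2)

# Hypothetical investment: lose 50% with probability 0.4, gain 100% with 0.6.
p1, r1, p2, r2 = 0.4, -0.5, 0.6, 1.0
q_closed = optimal_ratio(p1, r1, p2, r2)

# Brute-force search over q in [0, 1.5] as an independent check.
grid = [k / 1000 for k in range(1501)]
q_grid = max(grid, key=lambda q: expected_log_growth(q, p1, r1, p2, r2))
print(q_closed, q_grid)

# Kelly special case r1 = -1: q* = P2 - P1 / r2.
print(optimal_ratio(0.4, -1.0, 0.6, 2.0), 0.6 - 0.4 / 2.0)
```

Both formulas follow from setting the derivative of the expected log growth with respect to q to zero, so the grid search and the closed form should agree up to the grid resolution.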

However, V(X; θj) is only the information value in particular cases. Information values in other situations need to be further explored.

6.5. The Limitations of the Semantic Information G Theory

Based on the G theory, we seem to be able to apply the channels matching algorithms (for multi-label learning, MMI classifications, and mixture models) to DNNs for various learning tasks. However, DNNs include not only statistical reasoning but also logical reasoning. The latter is realized by setting biases and activation functions. For example, the ReLU function is the fuzzy logic operation, which the author uses in establishing the decoding model of color vision [54,55], whereas the semantic information-theoretic method now only involves the statistical part. It helps explain deep learning, but it is far from enough to guide one in building efficient DNNs.

DNNs have shown strong vitality in many aspects, such as feature extraction, self-supervised learning, and reinforcement learning. However, the G theory is not sufficient as a complete semantic information theory. Instead, it should be combined with feature extraction and fuzzy reasoning for further developments to keep pace with deep learning.

7. Conclusions