A Review of Partial Information Decomposition in Algorithmic Fairness and Explainability

Abstract

1. Introduction

1.1. Scenario 1: Quantifying Non-Exempt Disparity [8,29]

1.2. Scenario 2: Explaining Contributions [31]

1.3. Scenario 3: Formalizing Tradeoffs in Distributed Environments [34]

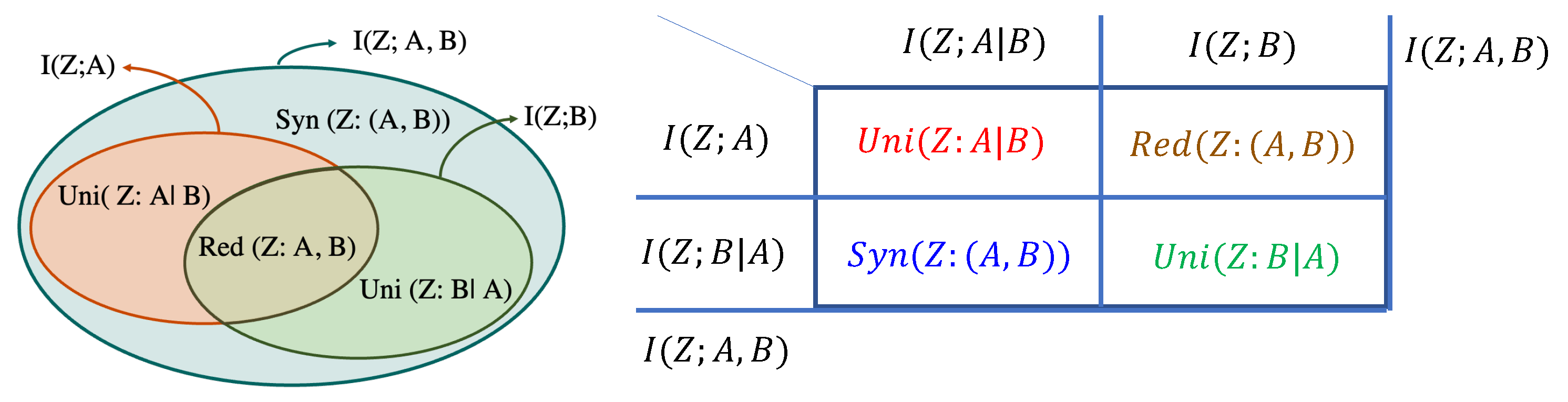

2. Background on Partial Information Decomposition

3. Quantifying Non-Exempt Disparity

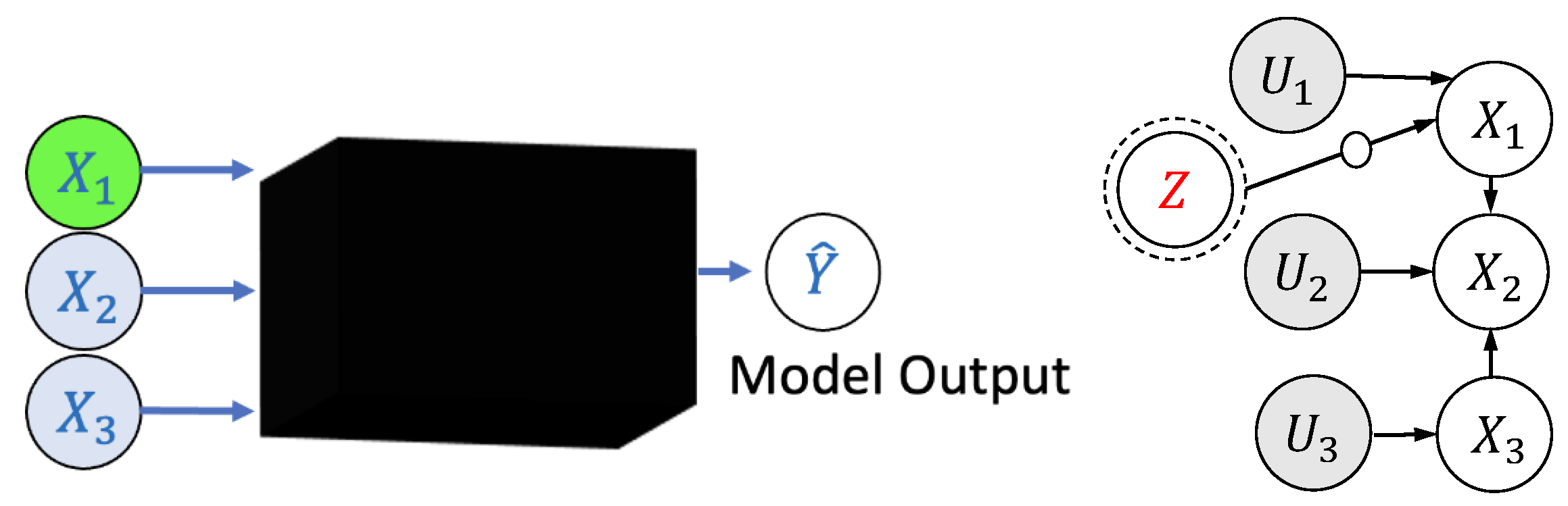

3.1. Preliminaries

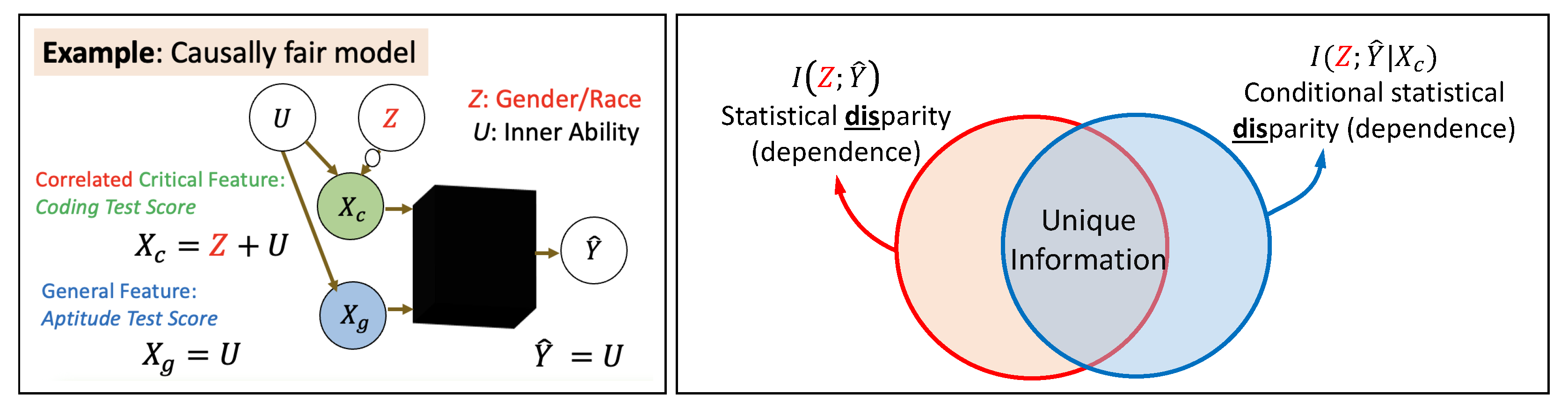

3.2. Quantifying Non-Exempt Disparity

3.3. Demystifying Unique Information as a Measure of Non-Exempt Disparity

- if the model is causally fair.

- if all features are non-critical, i.e., and .

- For a fixed set of features X and a fixed model , a should be non-increasing if a feature is removed from and added to .

- if all features are critical, i.e., and .

4. Explaining Contributions

4.1. Preliminaries

4.2. Information-Theoretic Measures

4.3. Notable Related Works Bridging Fairness, Explainability, and Information Theory

5. Formalizing Tradeoffs in Distributed Environments

5.1. Preliminaries

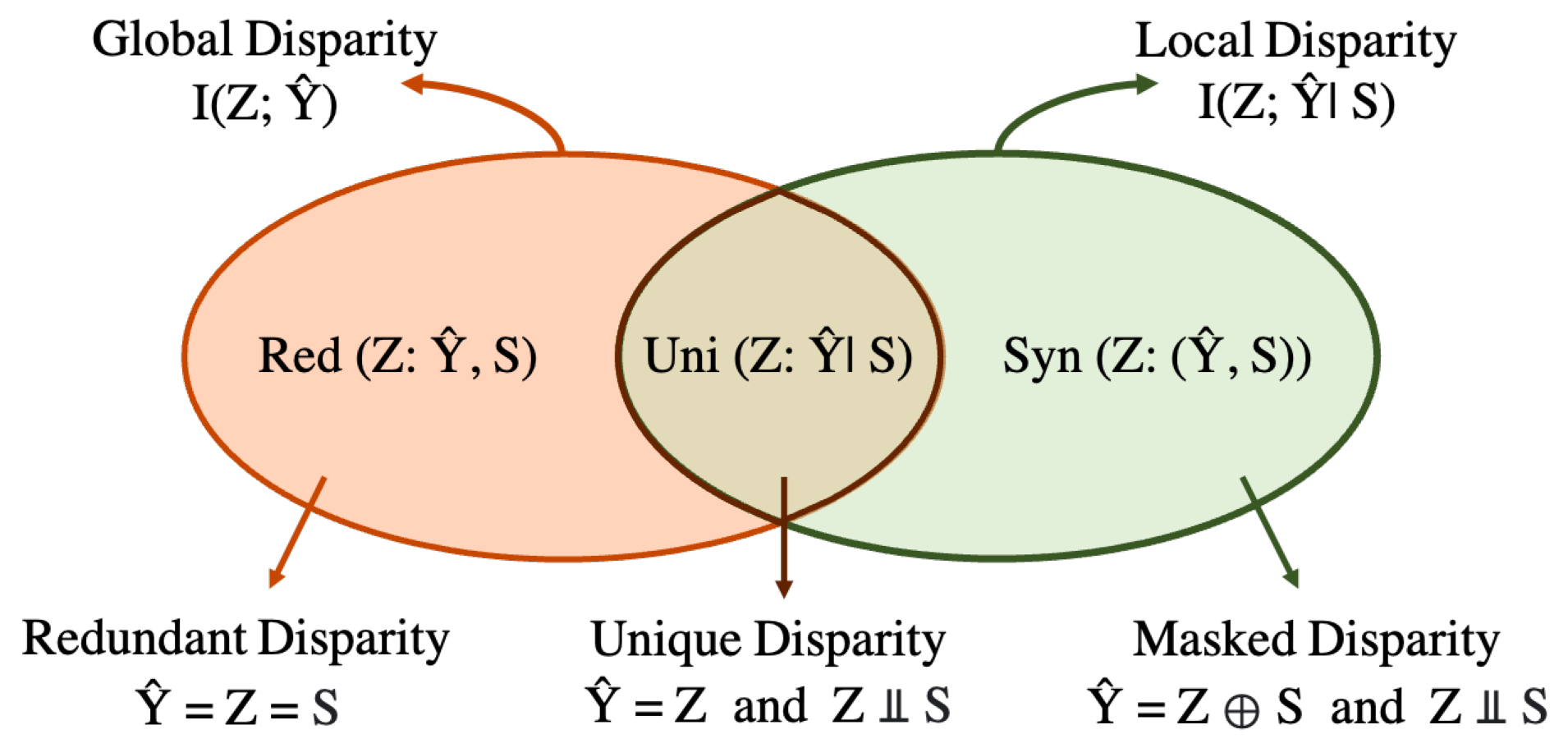

5.2. Partial Information Decomposition of Disparity in FL

5.3. Fundamental Limits and Tradeoffs between Local and Global Fairness

6. Discussion

6.1. Estimation of PID Measures

6.2. Summary of Contributions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Dwork, C.; Hardt, M.; Pitassi, T.; Reingold, O.; Zemel, R. Fairness through awareness. In Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, Cambridge, MA, USA, 8–10 January 2012; ACM: New York, NY, USA, 2012; pp. 214–226.

2. Datta, A.; Fredrikson, M.; Ko, G.; Mardziel, P.; Sen, S. Use privacy in data-driven systems: Theory and experiments with machine learnt programs. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; ACM: New York, NY, USA, 2017; pp. 1193–1210.

3. Kamiran, F.; Žliobaitė, I.; Calders, T. Quantifying explainable discrimination and removing illegal discrimination in automated decision making. Knowl. Inf. Syst. 2013, 35, 613–644.

4. Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A Survey on Bias and Fairness in Machine Learning. ACM Comput. Surv. 2021, 54, 1–35.

5. Varshney, K.R. Trustworthy machine learning and artificial intelligence. XRDS Crossroads ACM Mag. Stud. 2019, 25, 26–29.

6. Barocas, S.; Hardt, M.; Narayanan, A. Fairness and Machine Learning: Limitations and Opportunities. 2019. Available online: http://www.fairmlbook.org (accessed on 1 February 2023).

7. Pessach, D.; Shmueli, E. A Review on Fairness in Machine Learning. ACM Comput. Surv. 2022, 55, 1–44.

8. Dutta, S.; Venkatesh, P.; Mardziel, P.; Datta, A.; Grover, P. Fairness under feature exemptions: Counterfactual and observational measures. IEEE Trans. Inf. Theory 2021, 67, 6675–6710.

9. Calmon, F.; Wei, D.; Vinzamuri, B.; Ramamurthy, K.N.; Varshney, K.R. Optimized pre-processing for discrimination prevention. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 3992–4001.

10. Dutta, S.; Wei, D.; Yueksel, H.; Chen, P.Y.; Liu, S.; Varshney, K. Is There a Trade-Off between Fairness and Accuracy? A Perspective Using Mismatched Hypothesis Testing. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Daumé, H., III, Singh, A., Eds.; Proceedings of Machine Learning Research (PMLR). Volume 119, pp. 2803–2813.

11. Varshney, K.R. Trustworthy Machine Learning; Kush R. Varshney: Chappaqua, NY, USA, 2021.

12. Wang, H.; Hsu, H.; Diaz, M.; Calmon, F.P. To Split or not to Split: The Impact of Disparate Treatment in Classification. IEEE Trans. Inf. Theory 2021, 67, 6733–6757.

13. Alghamdi, W.; Hsu, H.; Jeong, H.; Wang, H.; Michalak, P.W.; Asoodeh, S.; Calmon, F.P. Beyond adult and compas: Fairness in multi-class prediction. arXiv 2022, arXiv:2206.07801.

14. Datta, A.; Sen, S.; Zick, Y. Algorithmic transparency via quantitative input influence: Theory and experiments with learning systems. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2016; pp. 598–617.

15. Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.

16. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

17. Koh, P.W.; Liang, P. Understanding black-box predictions via influence functions. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 1885–1894.

18. Molnar, C. Interpretable Machine Learning. 2019. Available online: https://christophm.github.io/interpretable-ml-book/ (accessed on 5 February 2023).

19. Verma, S.; Boonsanong, V.; Hoang, M.; Hines, K.E.; Dickerson, J.P.; Shah, C. Counterfactual Explanations and Algorithmic Recourses for Machine Learning: A Review. arXiv 2020, arXiv:2010.10596.

20. Bertschinger, N.; Rauh, J.; Olbrich, E.; Jost, J.; Ay, N. Quantifying unique information. Entropy 2014, 16, 2161–2183.

21. Banerjee, P.K.; Olbrich, E.; Jost, J.; Rauh, J. Unique informations and deficiencies. In Proceedings of the 2018 56th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 2–5 October 2018; pp. 32–38.

22. Williams, P.L.; Beer, R.D. Nonnegative decomposition of multivariate information. arXiv 2010, arXiv:1004.2515.

23. Venkatesh, P.; Schamberg, G. Partial information decomposition via deficiency for multivariate gaussians. In Proceedings of the 2022 IEEE International Symposium on Information Theory (ISIT), Espoo, Finland, 26 June–1 July 2022; pp. 2892–2897.

24. Gurushankar, K.; Venkatesh, P.; Grover, P. Extracting Unique Information Through Markov Relations. In Proceedings of the 2022 58th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 27–30 September 2022; pp. 1–6.

25. Liao, J.; Sankar, L.; Kosut, O.; Calmon, F.P. Robustness of maximal α-leakage to side information. In Proceedings of the 2019 IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 642–646.

26. Kamishima, T.; Akaho, S.; Asoh, H.; Sakuma, J. Fairness-aware classifier with prejudice remover regularizer. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Bristol, UK, 24–28 September 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 35–50.

27. Cho, J.; Hwang, G.; Suh, C. A fair classifier using mutual information. In Proceedings of the 2020 IEEE International Symposium on Information Theory (ISIT), Los Angeles, CA, USA, 21–26 June 2020; pp. 2521–2526.

28. Ghassami, A.; Khodadadian, S.; Kiyavash, N. Fairness in supervised learning: An information theoretic approach. In Proceedings of the 2018 IEEE International Symposium on Information Theory (ISIT), Vail, CO, USA, 17–22 June 2018; pp. 176–180.

29. Dutta, S.; Venkatesh, P.; Mardziel, P.; Datta, A.; Grover, P. An Information-Theoretic Quantification of Discrimination with Exempt Features. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

30. Grover, S.S. The business necessity defense in disparate impact discrimination cases. Ga. Law Rev. 1995, 30, 387.

31. Dutta, S.; Venkatesh, P.; Grover, P. Quantifying Feature Contributions to Overall Disparity Using Information Theory. arXiv 2022, arXiv:2206.08454.

32. It’s Time for an Honest Conversation about Graduate Admissions. 2020. Available online: https://news.ets.org/stories/its-time-for-an-honest-conversation-about-graduate-admissions/ (accessed on 1 February 2023).

33. The Problem with the GRE. 2016. Available online: https://www.theatlantic.com/education/archive/2016/03/the-problem-with-the-gre/471633/ (accessed on 10 February 2023).

34. Hamman, F.; Dutta, S. Demystifying Local and Global Fairness Trade-Offs in Federated Learning Using Information Theory. In Review. Available online: https://github.com/FaisalHamman/Fairness-Trade-offs-in-Federated-Learning (accessed on 1 February 2023).

35. Galhotra, S.; Shanmugam, K.; Sattigeri, P.; Varshney, K.R. Fair Data Integration. arXiv 2020, arXiv:2006.06053.

36. Khodadadian, S.; Nafea, M.; Ghassami, A.; Kiyavash, N. Information Theoretic Measures for Fairness-aware Feature Selection. arXiv 2021, arXiv:2106.00772.

37. Galhotra, S.; Shanmugam, K.; Sattigeri, P.; Varshney, K.R. Causal feature selection for algorithmic fairness. In Proceedings of the 2022 International Conference on Management of Data, Philadelphia, PA, USA, 12–17 June 2022; pp. 276–285.

38. Harutyunyan, H.; Achille, A.; Paolini, G.; Majumder, O.; Ravichandran, A.; Bhotika, R.; Soatto, S. Estimating informativeness of samples with smooth unique information. In Proceedings of the ICLR 2021, Virtual Event, Austria, 3–7 May 2021.

39. Griffith, V.; Koch, C. Quantifying synergistic mutual information. In Guided Self-Organization: Inception; Springer: Berlin/Heidelberg, Germany, 2014; pp. 159–190.

40. Banerjee, P.K.; Rauh, J.; Montufar, G. Computing the Unique Information. In Proceedings of the 2018 IEEE International Symposium on Information Theory (ISIT), Vail, CO, USA, 17–22 June 2018.

41. James, R.G.; Ellison, C.J.; Crutchfield, J.P. dit: A Python package for discrete information theory. J. Open Source Softw. 2018, 3, 738.

42. Zhang, J.; Bareinboim, E. Fairness in decision-making—The causal explanation formula. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

43. Corbett-Davies, S.; Pierson, E.; Feller, A.; Goel, S.; Huq, A. Algorithmic Decision Making and the Cost of Fairness. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’17), Halifax, NS, Canada, 13–17 August 2017; ACM: New York, NY, USA, 2017; pp. 797–806.

44. Nabi, R.; Shpitser, I. Fair inference on outcomes. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

45. Chiappa, S. Path-specific counterfactual fairness. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27–28 January 2019; Volume 33, pp. 7801–7808.

46. Xu, R.; Cui, P.; Kuang, K.; Li, B.; Zhou, L.; Shen, Z.; Cui, W. Algorithmic Decision Making with Conditional Fairness. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, CA, USA, 6–10 July 2020; pp. 2125–2135.

47. Salimi, B.; Rodriguez, L.; Howe, B.; Suciu, D. Interventional fairness: Causal database repair for algorithmic fairness. In Proceedings of the 2019 International Conference on Management of Data, Amsterdam, The Netherlands, 30 June–5 July 2019; pp. 793–810.

48. Kusner, M.J.; Loftus, J.; Russell, C.; Silva, R. Counterfactual fairness. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4066–4076.

49. Kilbertus, N.; Carulla, M.R.; Parascandolo, G.; Hardt, M.; Janzing, D.; Schölkopf, B. Avoiding discrimination through causal reasoning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 656–666.

50. Peters, J.; Janzing, D.; Schölkopf, B. Elements of Causal Inference: Foundations and Learning Algorithms; MIT Press: Cambridge, MA, USA, 2017.

51. Bellamy, R.K.; Dey, K.; Hind, M.; Hoffman, S.C.; Houde, S.; Kannan, K.; Lohia, P.; Martino, J.; Mehta, S.; Mojsilović, A.; et al. AI Fairness 360: An extensible toolkit for detecting and mitigating algorithmic bias. IBM J. Res. Dev. 2019, 63, 4:1–4:15.

52. Arya, V.; Bellamy, R.K.; Chen, P.Y.; Dhurandhar, A.; Hind, M.; Hoffman, S.C.; Houde, S.; Liao, Q.V.; Luss, R.; Mojsilović, A.; et al. AI Explainability 360 Toolkit. In Proceedings of the 3rd ACM India Joint International Conference on Data Science & Management of Data (8th ACM IKDD CODS & 26th COMAD), Bangalore, India, 2–4 January 2021; pp. 376–379.

53. Bakker, M.A.; Noriega-Campero, A.; Tu, D.P.; Sattigeri, P.; Varshney, K.R.; Pentland, A. On fairness in budget-constrained decision making. In Proceedings of the KDD Workshop of Explainable Artificial Intelligence, Egan, MN, USA, 4 August 2019.

54. Yang, Q.; Liu, Y.; Cheng, Y.; Kang, Y.; Chen, T.; Yu, H. Federated Learning; Synthesis Lectures on Artificial Intelligence and Machine Learning, #43; Morgan & Claypool: San Rafael, CA, USA, 2020.

55. Du, W.; Xu, D.; Wu, X.; Tong, H. Fairness-aware agnostic federated learning. In Proceedings of the 2021 SIAM International Conference on Data Mining (SDM), SIAM, Virtual Event, 29 April–1 May 2021; pp. 181–189.

56. Abay, A.; Zhou, Y.; Baracaldo, N.; Rajamoni, S.; Chuba, E.; Ludwig, H. Mitigating bias in federated learning. arXiv 2020, arXiv:2012.02447.

57. Ezzeldin, Y.H.; Yan, S.; He, C.; Ferrara, E.; Avestimehr, S. Fairfed: Enabling group fairness in federated learning. arXiv 2021, arXiv:2110.00857.

58. Cui, S.; Pan, W.; Liang, J.; Zhang, C.; Wang, F. Addressing algorithmic disparity and performance inconsistency in federated learning. Adv. Neural Inf. Process. Syst. 2021, 34, 26091–26102.

59. Griffith, V.; Chong, E.K.; James, R.G.; Ellison, C.J.; Crutchfield, J.P. Intersection information based on common randomness. Entropy 2014, 16, 1985–2000.

60. Kolchinsky, A. A Novel Approach to the Partial Information Decomposition. Entropy 2022, 24, 403.

61. Harder, M.; Salge, C.; Polani, D. Bivariate measure of redundant information. Phys. Rev. E 2013, 87, 012130.

62. Ince, R.A.A. Measuring Multivariate Redundant Information with Pointwise Common Change in Surprisal. Entropy 2017, 19, 318.

63. James, R.G.; Emenheiser, J.; Crutchfield, J.P. Unique information via dependency constraints. J. Phys. A Math. Theor. 2018, 52, 014002.

64. Finn, C.; Lizier, J.T. Pointwise Partial Information Decomposition Using the Specificity and Ambiguity Lattices. Entropy 2018, 20, 297.

65. Pál, D.; Póczos, B.; Szepesvári, C. Estimation of Rényi entropy and mutual information based on generalized nearest-neighbor graphs. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–9 December 2010; pp. 1849–1857.

66. Mukherjee, S.; Asnani, H.; Kannan, S. CCMI: Classifier based conditional mutual information estimation. In Proceedings of the Uncertainty in Artificial Intelligence (PMLR), Virtual, 3–6 August 2020; pp. 1083–1093.

67. Liao, J.; Huang, C.; Kairouz, P.; Sankar, L. Learning Generative Adversarial RePresentations (GAP) under Fairness and Censoring Constraints. arXiv 2019, arXiv:1910.00411.

68. Pakman, A.; Nejatbakhsh, A.; Gilboa, D.; Makkeh, A.; Mazzucato, L.; Wibral, M.; Schneidman, E. Estimating the unique information of continuous variables. Adv. Neural Inf. Process. Syst. 2021, 34, 20295–20307.

69. Kleinman, M.; Achille, A.; Soatto, S.; Kao, J.C. Redundant Information Neural Estimation. Entropy 2021, 23, 922.

70. Tokui, S.; Sato, I. Disentanglement analysis with partial information decomposition. arXiv 2021, arXiv:2108.13753.

| Measure | Discussion |
|---|---|
| Statistical Parity | |
| Equalized Odds | |
| Conditional Statistical Parity | |
| Unique Information | |
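As a rough illustration of the first three measures, the sketch below computes them on a toy discrete distribution. It assumes the usual information-theoretic readings: statistical parity as $I(Z;\hat{Y})=0$, equalized odds as $I(Z;\hat{Y}\mid Y)=0$, and conditional statistical parity as $I(Z;\hat{Y}\mid X_c)=0$. The symbols $Z$ (sensitive attribute), $Y$ (true label), $\hat{Y}$ (model output), and $X_c$ (critical features), the helper names, and the toy population are all assumptions made for this example. Unique information is not computed here, since it generally requires an optimization over distributions.

```python
from collections import defaultdict
from math import log2


def marginal(pmf, idx):
    """Sum a joint pmf {outcome_tuple: prob} down to the coordinates in idx."""
    out = defaultdict(float)
    for outcome, p in pmf.items():
        out[tuple(outcome[i] for i in idx)] += p
    return out


def cond_mutual_info(pmf, a, b, c=()):
    """Return I(A; B | C) in bits. a, b, c are tuples of coordinate indices."""
    p_abc = marginal(pmf, a + b + c)
    p_ac = marginal(pmf, a + c)
    p_bc = marginal(pmf, b + c)
    p_c = marginal(pmf, c)  # with c == (), this is {(): 1.0}
    na, nb = len(a), len(b)
    total = 0.0
    for key, p in p_abc.items():
        if p <= 0.0:
            continue
        av, bv, cv = key[:na], key[na:na + nb], key[na + nb:]
        total += p * log2(p * p_c[cv] / (p_ac[av + cv] * p_bc[bv + cv]))
    return total


# Hypothetical toy population; coordinates are (Z, X_c, Y, Y_hat).
# Z and N are independent fair bits, X_c = Y = N, and Y_hat = Z XOR N.
Z, XC, Y, YHAT = 0, 1, 2, 3
pmf = {(z, n, n, z ^ n): 0.25 for z in (0, 1) for n in (0, 1)}

statistical_parity_gap = cond_mutual_info(pmf, (Z,), (YHAT,))         # I(Z; Y_hat)
equalized_odds_gap = cond_mutual_info(pmf, (Z,), (YHAT,), (Y,))       # I(Z; Y_hat | Y)
conditional_parity_gap = cond_mutual_info(pmf, (Z,), (YHAT,), (XC,))  # I(Z; Y_hat | X_c)

print(statistical_parity_gap, equalized_odds_gap, conditional_parity_gap)
```

In this toy population the model output is marginally independent of $Z$, so the statistical parity quantity is zero, while both conditional quantities equal one bit. The three measures can therefore disagree on the same model.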

| Measure | Discussion |
|---|---|
| SHAP [15] (Can be adapted for disparity) | |
| Interventional Contribution to disparity | |
| Potential Contribution to disparity | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dutta, S.; Hamman, F. A Review of Partial Information Decomposition in Algorithmic Fairness and Explainability. Entropy 2023, 25, 795. https://doi.org/10.3390/e25050795
