A Multi-Agent Deep Reinforcement Learning-Based Popular Content Distribution Scheme in Vehicular Networks

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

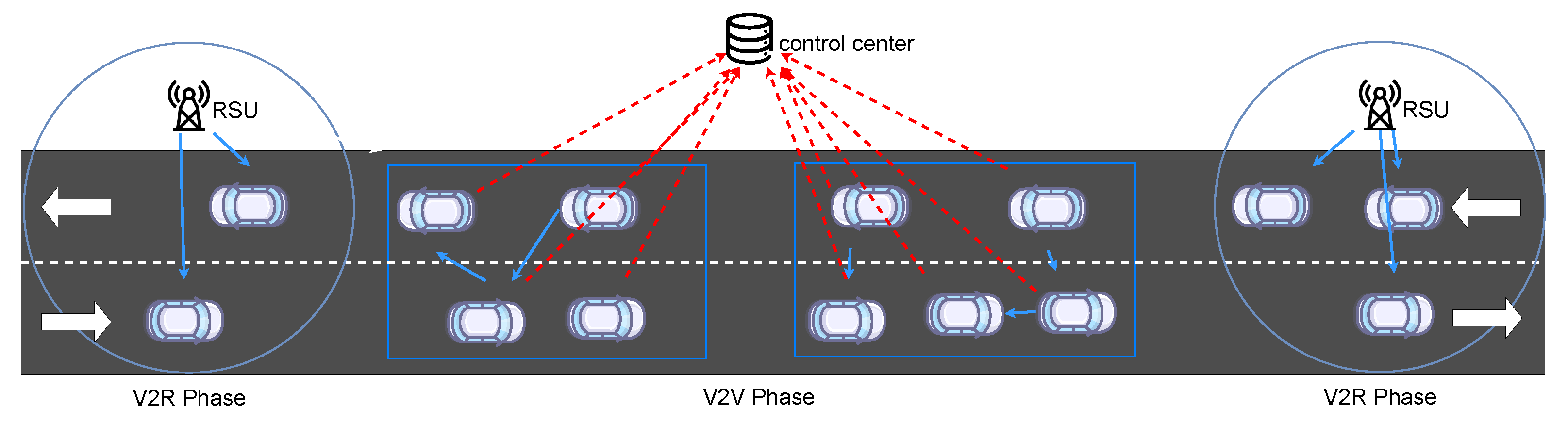

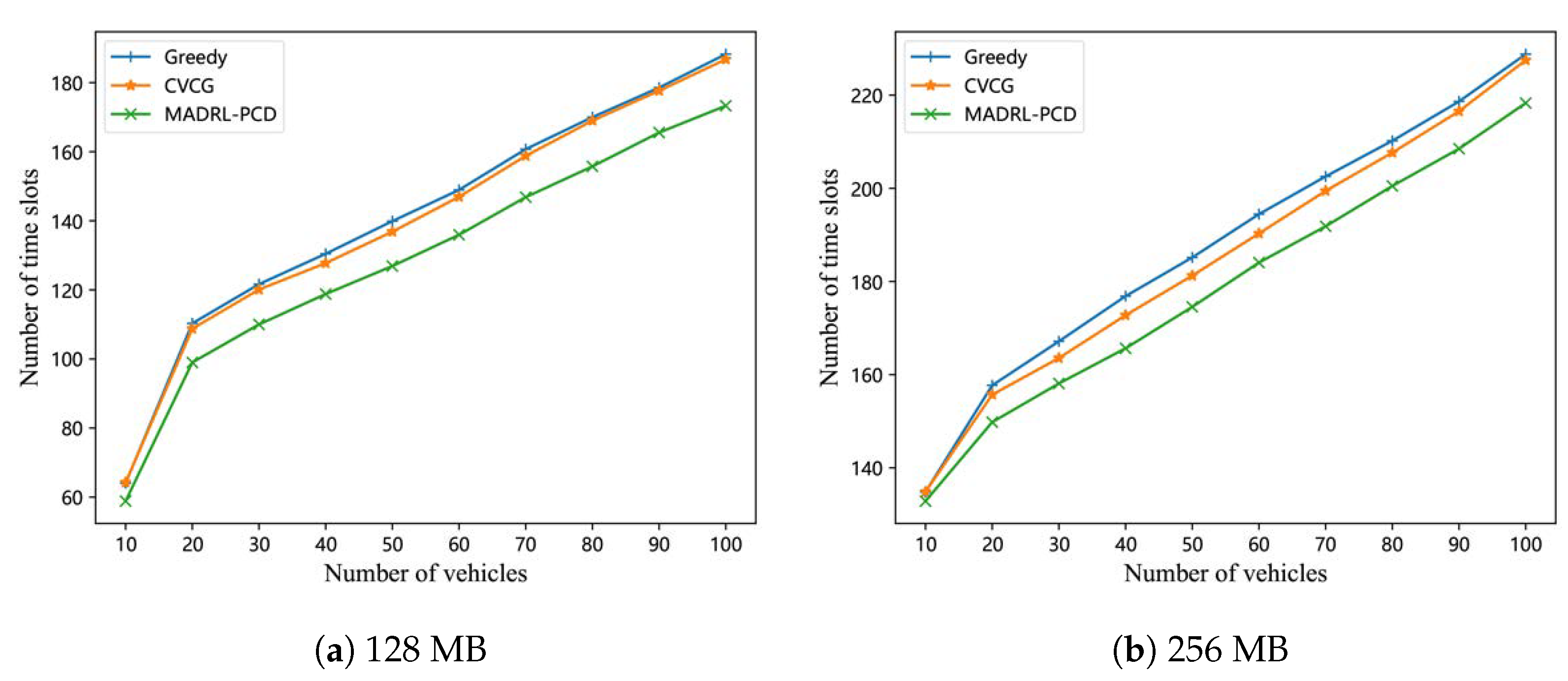

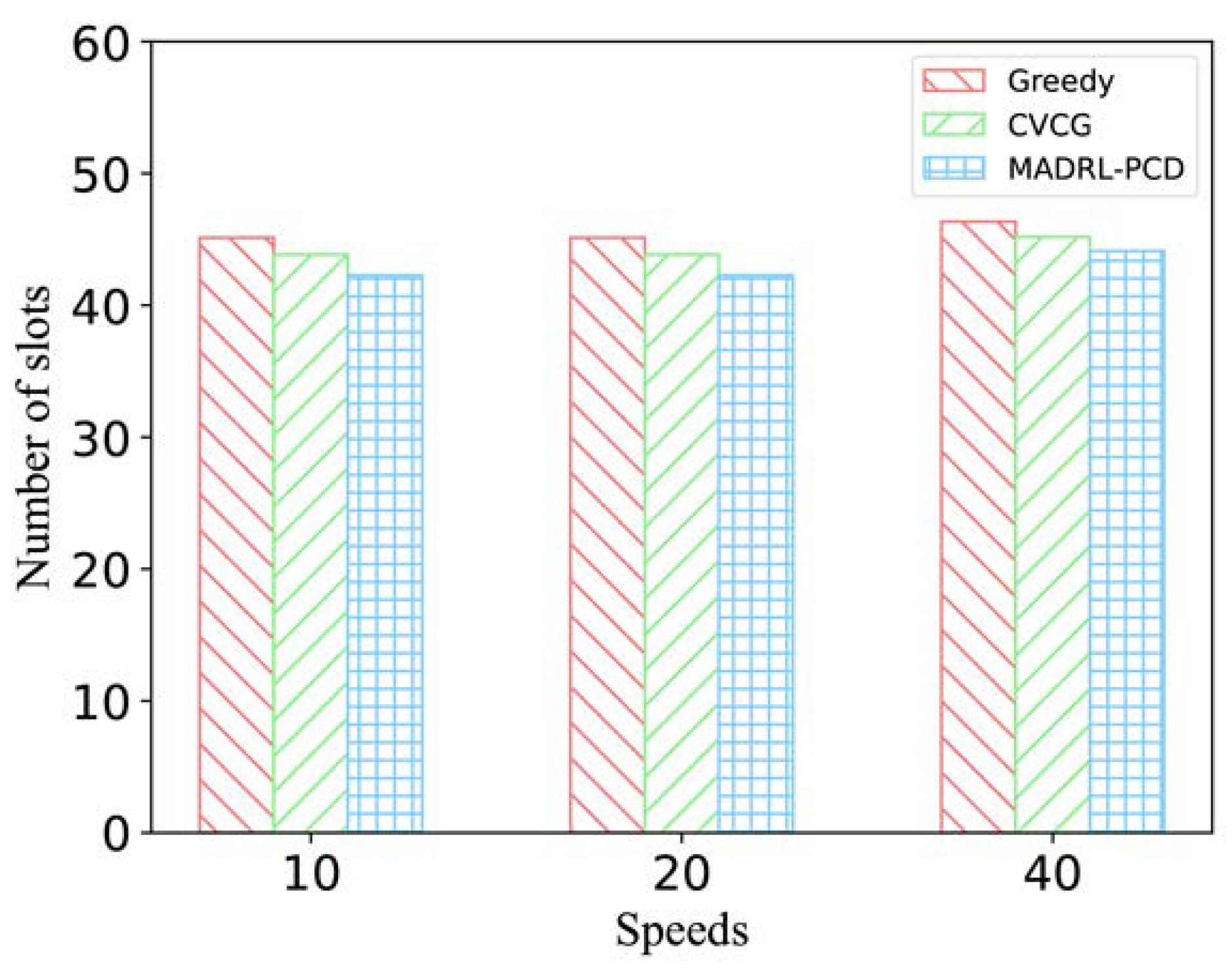

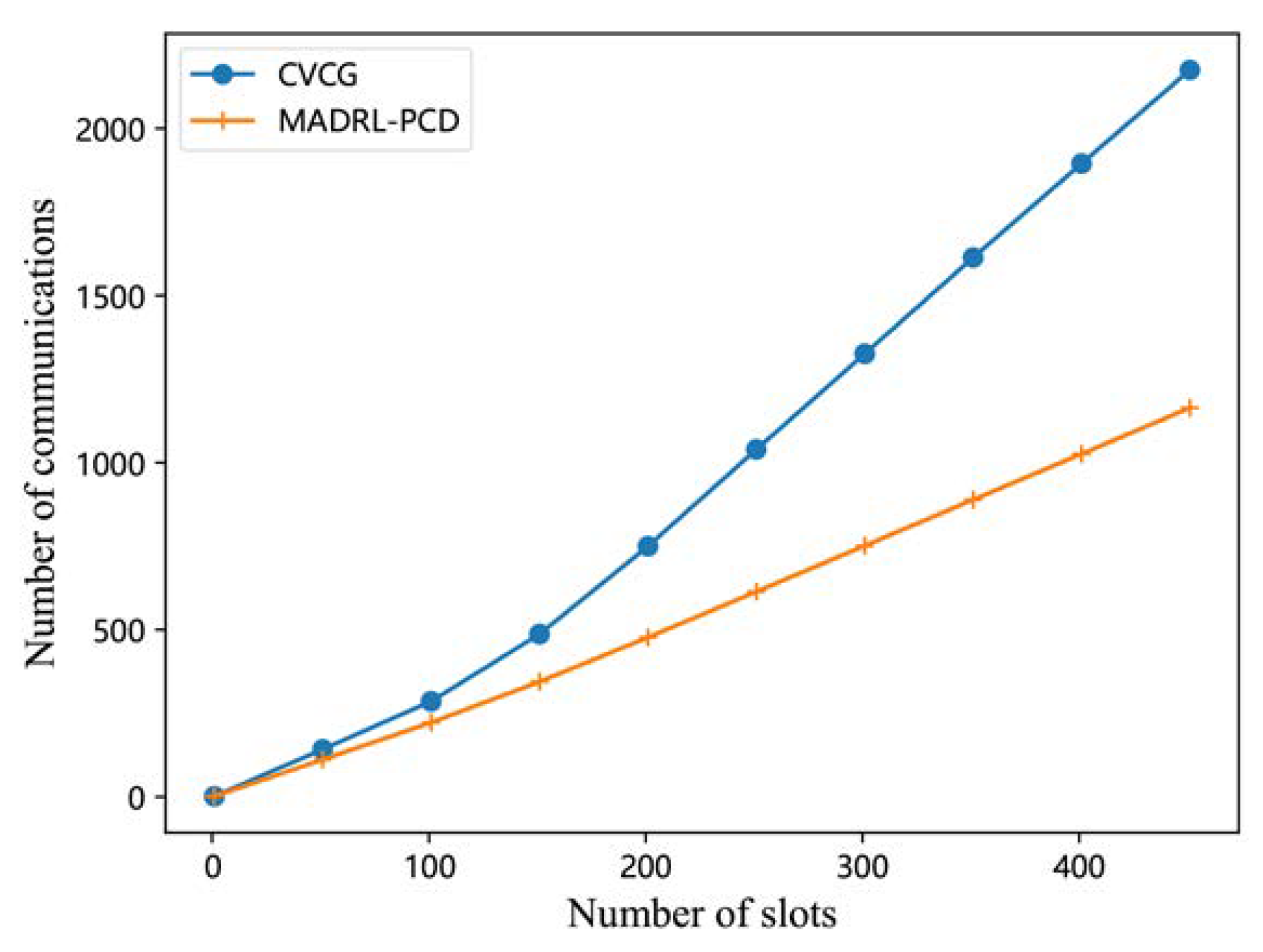

- A multi-agent deep reinforcement learning-based popular content distribution (MADRL-PCD) scheme in vehicular networks is proposed. We divide PCD into two phases. In the V2R phase, vehicles obtain parts of the popular content from a few RSUs. In the V2V phase, vehicles holding different parts of the content form a vehicular ad hoc network (VANET) and share data so that each can acquire the whole popular content. We use multi-agent reinforcement learning to train agents that coordinate data transmissions in the VANET. Simulation results show that our scheme outperforms the greedy-based scheme and the game theory-based CVCG scheme, achieving a lower delivery delay and a higher delivery efficiency.

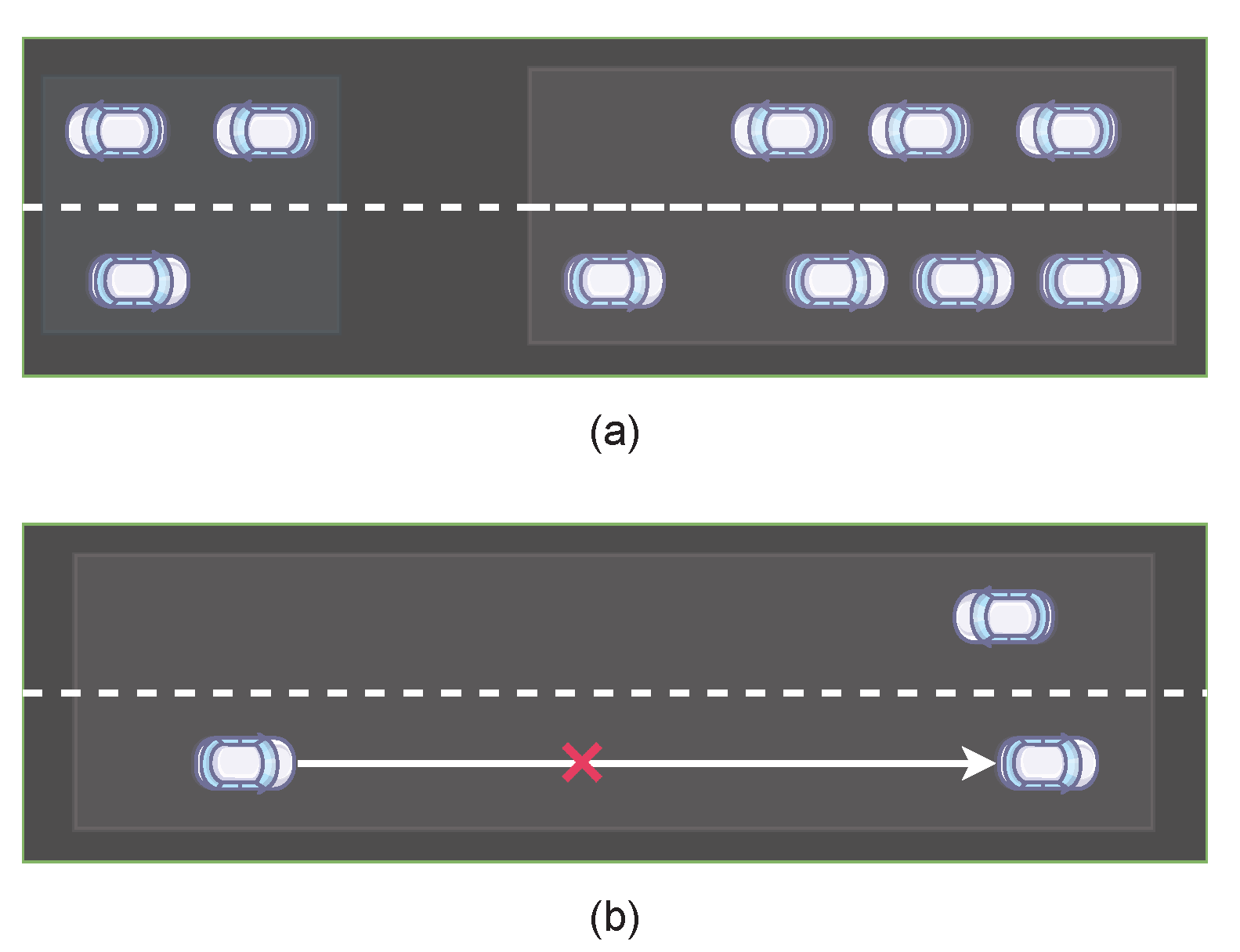

- To reduce the complexity of the learning algorithm, we propose a vehicle clustering algorithm based on spectral clustering that divides all vehicles in the V2V phase into multiple non-interfering groups, so that data exchange occurs only among vehicles within the same group. Moreover, clustering ensures that the number of vehicles in each group does not exceed the number of agents, which allows MADRL to be applied efficiently. Based on this vehicle partition, we abstract a multi-vehicle training environment and train a fixed group of agents using the MAPPO algorithm.

- When applying MADRL, we represent the agent's observation and the environment's state as matrices. Because each agent's decision depends on the correlation between the information of every pair of vehicles, we build the agent's neural network on the self-attention mechanism. Moreover, we use the invalid action masking technique to prevent agents from taking invalid actions and to accelerate training.
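To make the two-phase structure of the first contribution concrete, here is a toy model. It is not the MADRL-PCD scheme: the V2V rule is a simple greedy broadcast (the paper's greedy baseline may be defined differently), and it assumes one fully connected, interference-free group in which exactly one vehicle transmits per slot and every broadcast is received. The helpers `v2r_phase` and `v2v_phase_greedy` are hypothetical names.

```python
import random

def v2r_phase(num_vehicles, num_chunks, chunks_per_vehicle, seed=0):
    """Toy V2R phase (assumption): each vehicle receives a random subset of chunks from RSUs."""
    rng = random.Random(seed)
    return [set(rng.sample(range(num_chunks), chunks_per_vehicle)) for _ in range(num_vehicles)]

def v2v_phase_greedy(holdings, num_chunks, max_slots=1000):
    """Toy V2V phase with a greedy rule (not the MADRL policy).

    Assumes a single fully connected, interference-free group: one vehicle
    broadcasts one chunk per slot and every other vehicle receives it.
    Returns the number of slots until every vehicle holds all chunks,
    or None if that cannot happen within max_slots.
    """
    holdings = [set(h) for h in holdings]  # copy so the caller's sets are untouched
    full = set(range(num_chunks))
    for slot in range(max_slots):
        if all(h == full for h in holdings):
            return slot
        # Pick the chunk (held by someone) that the most vehicles are still missing.
        best_chunk, best_gain = None, 0
        for h in holdings:
            for chunk in sorted(h):
                gain = sum(1 for other in holdings if chunk not in other)
                if gain > best_gain:
                    best_chunk, best_gain = chunk, gain
        if best_chunk is None:
            return None  # some chunk has no holder, so V2V sharing alone cannot finish
        for h in holdings:
            h.add(best_chunk)  # the broadcast reaches every vehicle in the group
    return None

# Usage with illustrative sizes (hypothetical, not the paper's settings):
holdings = v2r_phase(num_vehicles=6, num_chunks=10, chunks_per_vehicle=4, seed=7)
print(v2v_phase_greedy(holdings, num_chunks=10))
```

The returned slot count is only a crude stand-in for delivery delay; in MADRL-PCD, the decision of which vehicles transmit which chunks is made by the trained agents instead of a fixed greedy rule.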

2. System Model and Problem Formulation

2.1. Channel Model
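The body of this subsection is not reproduced here, so the exact path-loss model is not known. As an assumption, the sketch below uses the free-space Friis formula (Friis 1946 appears in the reference list), received power = transmit power × transmit gain × receive gain × (wavelength / (4π × distance))² / system loss, together with quantities named in the simulation parameter table (transmit power, antenna gain, system loss, noise power, SINR threshold) to decide whether a link succeeds. The carrier frequency and all numbers in the usage lines are hypothetical, since the table does not give them.

```python
import math

SPEED_OF_LIGHT = 3.0e8  # m/s

def dbm_to_mw(dbm):
    return 10 ** (dbm / 10)

def friis_received_dbm(pt_dbm, gain_tx_db, gain_rx_db, system_loss_db, freq_hz, distance_m):
    """Received power in dBm under the free-space Friis model (assumed path-loss model)."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    path_gain_db = 20 * math.log10(wavelength / (4 * math.pi * distance_m))
    return pt_dbm + gain_tx_db + gain_rx_db + path_gain_db - system_loss_db

def sinr_db(signal_dbm, noise_dbm, interferer_dbms=()):
    """SINR in dB; noise and interference powers are summed in the linear (mW) domain."""
    denom_mw = dbm_to_mw(noise_dbm) + sum(dbm_to_mw(p) for p in interferer_dbms)
    return 10 * math.log10(dbm_to_mw(signal_dbm) / denom_mw)

def link_ok(signal_dbm, noise_dbm, threshold_db, interferer_dbms=()):
    """A transmission is counted as successful when its SINR reaches the threshold."""
    return sinr_db(signal_dbm, noise_dbm, interferer_dbms) >= threshold_db

# Hypothetical values: 5.9 GHz carrier, 23 dBm transmit power, 0 dB gains and losses, 100 m.
rx = friis_received_dbm(23.0, 0.0, 0.0, 0.0, 5.9e9, 100.0)
print(round(rx, 1), link_ok(rx, noise_dbm=-95.0, threshold_db=10.0))
```

Working in dB keeps the gains and losses additive, while the noise and interference terms must still be converted to linear power before they are summed.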

2.2. Vehicle Clustering

| Algorithm 1: Vehicle clustering algorithm |
|---|
| Input: all vehicle positions, the maximum number of vehicles in a group, and the communication range |
| Output: the partition result |
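The body of Algorithm 1 is not reproduced here. The following is one possible realization of spectral clustering with the stated inputs, assuming a recursive spectral bisection variant: vehicles within the communication range are connected, and any group larger than the maximum size is split by the sign of the Fiedler vector of its normalized Laplacian (see von Luxburg's tutorial in the reference list). It enforces only the group-size cap and does not by itself guarantee the non-interference property described in the contributions. All function names are hypothetical.

```python
import numpy as np

def range_graph(positions, comm_range):
    """Adjacency matrix: 1 if two vehicles are within communication range."""
    pos = np.asarray(positions, dtype=float).reshape(len(positions), -1)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    adj = (dist <= comm_range).astype(float)
    np.fill_diagonal(adj, 0.0)
    return adj

def fiedler_split(adj, idx):
    """Split vertex list idx in two using the sign of the Fiedler vector
    of the symmetric normalized Laplacian of the induced subgraph."""
    sub = adj[np.ix_(idx, idx)]
    deg = sub.sum(axis=1)
    inv_sqrt = np.zeros_like(deg)
    nz = deg > 0  # isolated vehicles keep a zero scaling instead of dividing by zero
    inv_sqrt[nz] = 1.0 / np.sqrt(deg[nz])
    lap = np.eye(len(idx)) - inv_sqrt[:, None] * sub * inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(lap)  # eigenvalues come back in ascending order
    fiedler = vecs[:, 1]
    left = [i for i, v in zip(idx, fiedler) if v >= 0]
    right = [i for i, v in zip(idx, fiedler) if v < 0]
    if not left or not right:  # degenerate sign pattern: fall back to a median split
        order = [idx[k] for k in np.argsort(fiedler)]
        half = len(order) // 2
        left, right = order[:half], order[half:]
    return left, right

def cluster_vehicles(positions, max_group_size, comm_range):
    """Recursively bisect until every group has at most max_group_size vehicles."""
    if max_group_size < 1:
        raise ValueError("max_group_size must be at least 1")
    adj = range_graph(positions, comm_range)
    pending, groups = [list(range(len(positions)))], []
    while pending:
        group = pending.pop()
        if len(group) <= max_group_size:
            groups.append(sorted(group))
        else:
            pending.extend(fiedler_split(adj, group))
    return sorted(groups)

# Two clusters of three vehicles on a road, joined by a single in-range link.
print(cluster_vehicles([0, 10, 20, 60, 70, 80], max_group_size=3, comm_range=45))
```

Recursive bisection is chosen here because it makes the size cap easy to enforce; a k-way spectral clustering followed by a size check would be an equally plausible reading of the algorithm's inputs.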

2.3. Problem Formulation

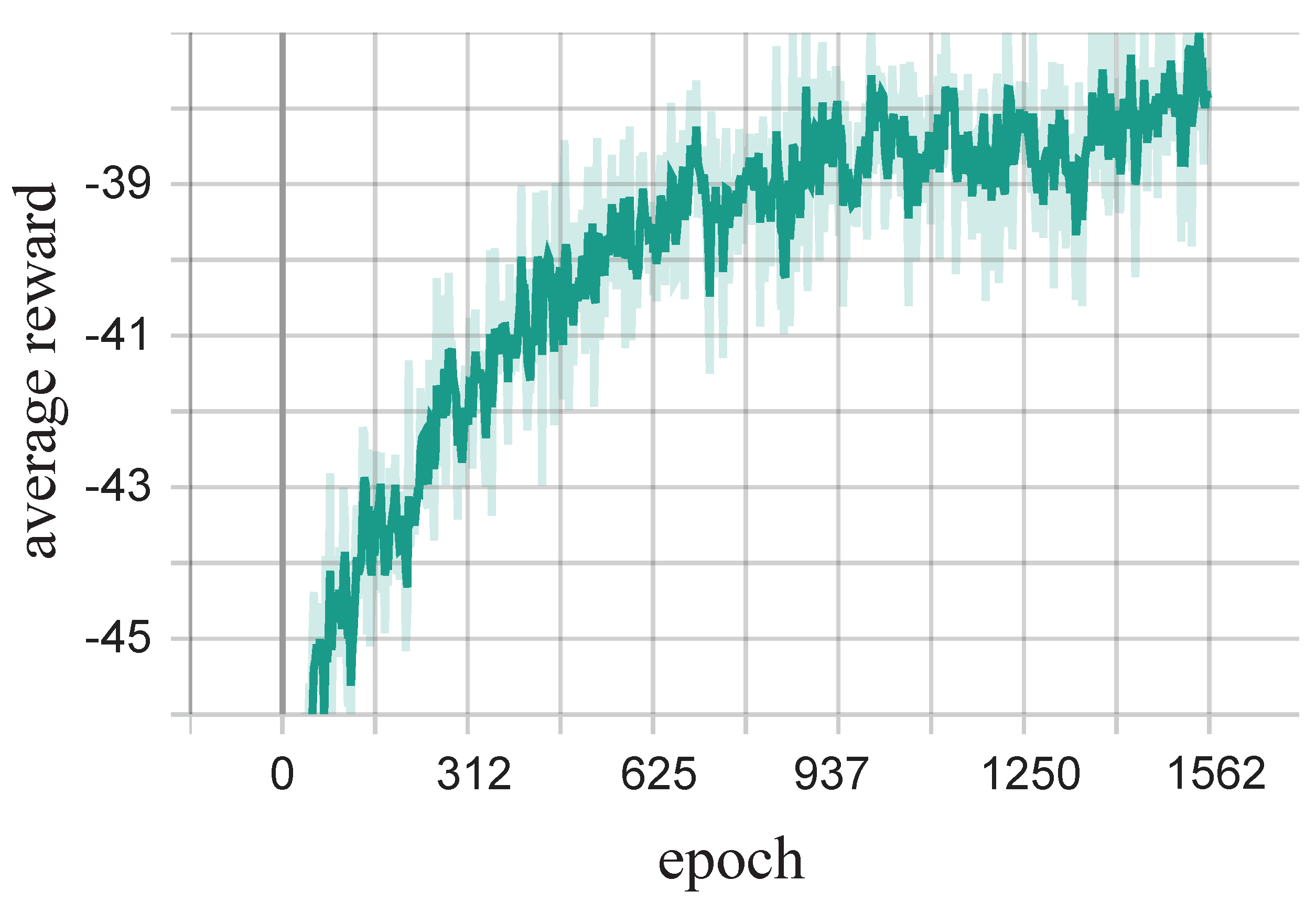

3. Multi-Agent Deep Reinforcement Learning
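MAPPO, named in the contributions, applies PPO's clipped surrogate objective to every agent, typically with a shared actor and a centralized critic that sees the global state. The pure-Python sketch below shows two per-trajectory computations at its core: generalized advantage estimation (GAE) from critic values, and the clipped policy objective; the value loss and entropy bonus are omitted. The discount factor `gamma` corresponds to the discount factor in the parameter table; the GAE parameter `lam`, the clip range `clip_eps`, and the numbers in the usage lines are assumptions.

```python
import math

def gae(rewards, values, last_value, gamma, lam):
    """Generalized advantage estimation over one trajectory segment.

    values[t] is the (centralized) critic's estimate V(s_t); last_value
    bootstraps the state after the final step. No terminal-state masking.
    """
    advantages = [0.0] * len(rewards)
    running, next_value = 0.0, last_value
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # TD error
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages

def clipped_surrogate(new_logp, old_logp, advantages, clip_eps=0.2):
    """PPO clipped objective (to be maximized), averaged over samples.
    With parameter sharing, samples from all agents can be pooled here."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|o) / pi_old(a|o)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)

# Hypothetical three-step trajectory for one agent.
adv = gae([1.0, 0.0, 2.0], [0.5, 0.4, 0.6], last_value=0.0, gamma=0.99, lam=0.95)
print(clipped_surrogate([-0.9, -1.2, -0.3], [-1.0, -1.0, -0.5], adv))
```

Taking the minimum of the clipped and unclipped terms makes the objective pessimistic: large policy changes are never rewarded beyond the clip range, but harmful ones are still fully penalized.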

3.1. Environmental Modelling
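The contributions state that observations and states are matrices and that decisions depend on pairwise relations between vehicles. The exact matrix layout is not given here, so the sketch below assumes a hypothetical one: a chunk-ownership matrix with one row per vehicle, zero-padded up to the number of agents (possible because clustering caps the group size at the agent count), plus a pairwise matrix counting how many chunks each vehicle could give another. The functions `group_observation` and `useful_chunk_matrix` are illustrative names.

```python
def group_observation(holdings, num_chunks, num_agents):
    """Hypothetical observation layout.

    Returns (ownership, valid): ownership has one row per vehicle, zero-padded
    to num_agents rows, and one column per chunk (1 = held); valid marks
    real vehicles (1) versus padding rows (0).
    """
    if len(holdings) > num_agents:
        raise ValueError("group has more vehicles than agents; cluster first")
    ownership = [[1 if c in h else 0 for c in range(num_chunks)] for h in holdings]
    padding = num_agents - len(holdings)
    ownership += [[0] * num_chunks for _ in range(padding)]
    valid = [1] * len(holdings) + [0] * padding
    return ownership, valid

def useful_chunk_matrix(ownership, valid):
    """U[i][j] = number of chunks vehicle i holds that vehicle j lacks
    (0 whenever i or j is a padding row)."""
    n = len(ownership)
    return [
        [
            sum(a & (1 - b) for a, b in zip(ownership[i], ownership[j])) if valid[i] and valid[j] else 0
            for j in range(n)
        ]
        for i in range(n)
    ]

own, valid = group_observation([{0, 1}, {1, 2}], num_chunks=3, num_agents=3)
print(useful_chunk_matrix(own, valid))  # [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
```

Padding to a fixed number of rows lets one set of trained agents handle any group produced by the clustering step, as long as the validity mask is carried along.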

3.2. MADRL-PCD Network Architecture
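A minimal NumPy sketch of the two network ingredients named in the contributions: single-head scaled dot-product self-attention over per-vehicle rows, and invalid action masking in the style discussed by Huang and Ontañón (reference list), where invalid logits are replaced by a large negative number before the softmax. The action space (send one of the held chunks, or stay idle), the random weights, and all function names are assumptions for illustration; the paper's actual architecture is not reproduced here.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, key_valid=None):
    """Single-head scaled dot-product self-attention; one row of x per vehicle.
    key_valid (bool per row) excludes padding rows from being attended to."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if key_valid is not None:
        scores = np.where(key_valid[None, :], scores, -1e9)
    return softmax(scores, axis=-1) @ v

def masked_policy(logits, valid_actions):
    """Invalid action masking: invalid logits become a large negative number,
    so their softmax probability underflows to zero."""
    return softmax(np.where(valid_actions, logits, -1e9), axis=-1)

# Hypothetical setup: 3 agent slots (the last one is padding), 4 chunks,
# actions = "send chunk c" for c in 0..3, plus a final "stay idle" action.
rng = np.random.default_rng(0)
own = np.array([[1, 1, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]], dtype=float)
key_valid = np.array([True, True, False])
d_model, num_chunks = 8, own.shape[1]
w_q, w_k, w_v = (rng.normal(size=(num_chunks, d_model)) for _ in range(3))
w_out = rng.normal(size=(d_model, num_chunks + 1))

h = self_attention(own, w_q, w_k, w_v, key_valid)
logits = h @ w_out
# A vehicle may only send chunks it holds; staying idle is always allowed.
valid = np.concatenate([own.astype(bool), np.ones((own.shape[0], 1), dtype=bool)], axis=1)
probs = masked_policy(logits, valid)
print(np.round(probs, 3))
```

Masking at the logit level keeps the policy a proper distribution over valid actions only, so sampling never wastes steps on actions the environment would reject; this is how masking is generally expected to speed up training.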

4. Performance Evaluation

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yousefi, S.; Mousavi, M.S.; Fathy, M. Vehicular Ad Hoc Networks (VANETs): Challenges and Perspectives. In Proceedings of the 2006 6th International Conference on ITS Telecommunications, Chengdu, China, 21–23 June 2006; pp. 761–766.

- Kaiwartya, O.; Abdullah, A.H.; Cao, Y.; Altameem, A.; Prasad, M.; Lin, C.T.; Liu, X. Internet of Vehicles: Motivation, Layered Architecture, Network Model, Challenges, and Future Aspects. IEEE Access 2016, 4, 5356–5373.

- Yin, J.; ElBatt, T.; Yeung, G.; Ryu, B.; Habermas, S.; Krishnan, H.; Talty, T. Performance Evaluation of Safety Applications over DSRC Vehicular Ad Hoc Networks. In Proceedings of the 1st ACM International Workshop on Vehicular Ad Hoc Networks, VANET’04, Philadelphia, PA, USA, 1 October 2004; Association for Computing Machinery: New York, NY, USA, 2004; pp. 1–9.

- Cheng, H.T.; Shan, H.; Zhuang, W. Infotainment and Road Safety Service Support in Vehicular Networking: From a Communication Perspective. Mech. Syst. Signal Process. 2011, 25, 2020–2038.

- El-Rewini, Z.; Sadatsharan, K.; Selvaraj, D.F.; Plathottam, S.J.; Ranganathan, P. Cybersecurity Challenges in Vehicular Communications. Veh. Commun. 2020, 23, 100214.

- Shahwani, H.; Attique Shah, S.; Ashraf, M.; Akram, M.; Jeong, J.P.; Shin, J. A Comprehensive Survey on Data Dissemination in Vehicular Ad Hoc Networks. Veh. Commun. 2022, 34, 100420.

- Li, M.; Yang, Z.; Lou, W. CodeOn: Cooperative Popular Content Distribution for Vehicular Networks using Symbol Level Network Coding. IEEE J. Sel. Areas Commun. 2011, 29, 223–235.

- Schwartz, R.S.; R. Barbosa, R.R.; Meratnia, N.; Heijenk, G.; Scholten, H. A Directional Data Dissemination Protocol for Vehicular Environments. Comput. Commun. 2011, 34, 2057–2071.

- Di Felice, M.; Bedogni, L.; Bononi, L. Group Communication on Highways: An Evaluation Study of Geocast Protocols and Applications. Ad Hoc Netw. 2013, 11, 818–832.

- Kumar, N.; Lee, J.H. Peer-to-Peer Cooperative Caching for Data Dissemination in Urban Vehicular Communications. IEEE Syst. J. 2014, 8, 1136–1144.

- d’Orey, P.M.; Maslekar, N.; de la Iglesia, I.; Zahariev, N.K. NAVI: Neighbor-Aware Virtual Infrastructure for Information Collection and Dissemination in Vehicular Networks. In Proceedings of the 2015 IEEE 81st Vehicular Technology Conference (VTC Spring), Glasgow, UK, 11–14 May 2015; pp. 1–6.

- Nawaz Ali, G.; Chong, P.H.J.; Samantha, S.K.; Chan, E. Efficient Data Dissemination in Cooperative Multi-RSU Vehicular Ad Hoc Networks (VANETs). J. Syst. Softw. 2016, 117, 508–527.

- Shafi, S.; Venkata Ratnam, D. A Cross Layer Cluster Based Routing Approach for Efficient Multimedia Data Dissemination with Improved Reliability in VANETs. Wirel. Pers. Commun. 2019, 107, 2173–2190.

- Shafi, S.; Venkata Ratnam, D. An Efficient Cross Layer Design of Stability Based Clustering Scheme Using Ant Colony Optimization in VANETs. Wirel. Pers. Commun. 2022, 126, 3001–3019.

- Sun, J.; Dong, P.; Du, X.; Zheng, T.; Qin, Y.; Guizani, M. Cluster-based Cooperative Multicast for Multimedia Data Dissemination in Vehicular Networks. In Proceedings of the 2020 IEEE Wireless Communications and Networking Conference (WCNC), Seoul, Republic of Korea, 25–28 May 2020; pp. 1–6.

- Jeevitha, R.; Bhuvaneswari, N. CPDD: Clustering and Probabilistic based Data Dissemination in Vehicular Adhoc Networks. Indian J. Sci. Technol. 2022, 15, 2303–2316.

- Hasson, S.T.; Abbas, A.T. A Clustering Approach to Model the Data Dissemination in VANETs. In Proceedings of the 2021 1st Babylon International Conference on Information Technology and Science (BICITS), Babil, Iraq, 28–29 April 2021; pp. 337–342.

- Wang, T.; Song, L.; Han, Z.; Jiao, B. Dynamic Popular Content Distribution in Vehicular Networks using Coalition Formation Games. IEEE J. Sel. Areas Commun. 2013, 31, 538–547.

- Hu, J.; Chen, C.; Liu, L. Popular Content Distribution Scheme with Cooperative Transmission Based on Coalitional Game in VANETs. In Proceedings of the 2018 21st International Symposium on Wireless Personal Multimedia Communications (WPMC), Chiang Rai, Thailand, 25–28 November 2018; pp. 69–74.

- Chen, C.; Hu, J.; Qiu, T.; Atiquzzaman, M.; Ren, Z. CVCG: Cooperative V2V-Aided Transmission Scheme Based on Coalitional Game for Popular Content Distribution in Vehicular Ad-Hoc Networks. IEEE Trans. Mob. Comput. 2019, 18, 2811–2828.

- Wang, Y.; Wu, H.; Niu, Y.; Han, Z.; Ai, B.; Zhong, Z. Coalition Game Based Full-Duplex Popular Content Distribution in mmWave Vehicular Networks. IEEE Trans. Veh. Technol. 2020, 69, 13836–13848.

- Zhang, D.G.; Zhu, H.L.; Zhang, T.; Zhang, J.; Du, J.Y.; Mao, G.Q. A New Method of Content Distribution Based on Fuzzy Logic and Coalition Graph Games for VEC. Clust. Comput. 2023, 26, 701–717.

- Huang, W.; Wang, L. ECDS: Efficient Collaborative Downloading Scheme for Popular Content Distribution in Urban Vehicular Networks. Comput. Netw. 2016, 101, 90–103.

- Chen, C.; Xiao, T.; Zhao, H.; Liu, L.; Pei, Q. GAS: A Group Acknowledgment Strategy for Popular Content Distribution in Internet of Vehicle. Veh. Commun. 2019, 17, 35–49.

- Li, Y. Deep Reinforcement Learning: An Overview. arXiv 2017, arXiv:1701.07274.

- Wu, C.; Liu, Z.; Zhang, D.; Yoshinaga, T.; Ji, Y. Spatial Intelligence toward Trustworthy Vehicular IoT. IEEE Commun. Mag. 2018, 56, 22–27.

- Wu, C.; Liu, Z.; Liu, F.; Yoshinaga, T.; Ji, Y.; Li, J. Collaborative Learning of Communication Routes in Edge-Enabled Multi-Access Vehicular Environment. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 1155–1165.

- Mchergui, A.; Moulahi, T.; Nasri, S. Relay Selection Based on Deep Learning for Broadcasting in VANET. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 865–870.

- Lolai, A.; Wang, X.; Hawbani, A.; Dharejo, F.A.; Qureshi, T.; Farooq, M.U.; Mujahid, M.; Babar, A.H. Reinforcement Learning Based on Routing with Infrastructure Nodes for Data Dissemination in Vehicular Networks (RRIN). Wirel. Netw. 2022, 28, 2169–2184.

- Lou, C.; Hou, F. Efficient DRL-based HD map Dissemination in V2I Communications. In Proceedings of the ICC 2022—IEEE International Conference on Communications, Dubrovnik, Croatia, 30 May–3 June 2022; pp. 5041–5046.

- Friis, H. A Note on a Simple Transmission Formula. Proc. IRE 1946, 34, 254–256.

- Rappaport, T.S. Wireless Communications–Principles and Practice, (The Book End). Microw. J. 2002, 45, 128–129.

- He, R.; Renaudin, O.; Kolmonen, V.M.; Haneda, K.; Zhong, Z.; Ai, B.; Hubert, S.; Oestges, C. Vehicle-to-Vehicle Radio Channel Characterization in Crossroad Scenarios. IEEE Trans. Veh. Technol. 2016, 65, 5850–5861.

- Turgut, E.; Gursoy, M.C. Coverage in Heterogeneous Downlink Millimeter Wave Cellular Networks. IEEE Trans. Commun. 2017, 65, 4463–4477.

- Liu, L.; Chen, C.; Qiu, T.; Zhang, M.; Li, S.; Zhou, B. A Data Dissemination Scheme Based on Clustering and Probabilistic Broadcasting in VANETs. Veh. Commun. 2018, 13, 78–88.

- Von Luxburg, U. A Tutorial on Spectral Clustering. Stat. Comput. 2007, 17, 395–416.

- MacKay, D. Information Theory, Inference and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.

- Oliehoek, F.A.; Amato, C. A Concise Introduction to Decentralized POMDPs; Springer: Berlin/Heidelberg, Germany, 2016.

- Huang, S.; Ontañón, S. A Closer Look at Invalid Action Masking in Policy Gradient Algorithms. arXiv 2020, arXiv:2006.14171.

- Lopez, P.A.; Behrisch, M.; Bieker-Walz, L.; Erdmann, J.; Flötteröd, Y.P.; Hilbrich, R.; Lücken, L.; Rummel, J.; Wagner, P.; Wießner, E. Microscopic Traffic Simulation using SUMO. In Proceedings of the 21st IEEE International Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018; IEEE: Piscataway, NJ, USA, 2018.

| Parameter | Value |
|---|---|
| Length of simulation environment | 10,000 m |
| Length of training environment | 250 m |
| RSU coverage | 450 m |
| Size of a popular content chunk | 8 MB |
| Transmit power of RSU (dBm) | |
| Transmit power of vehicles (dBm) | |
| Antenna gain | |
| System loss | |
| Additive white Gaussian noise power (dBm) | |
| Interference rate | |
| SINR threshold (dB) | |
| Learning rate of the actor network | |
| Learning rate of the critic network | |
| Discount factor | |
| Reward factor | |
| Penalty factor | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, W.; Huang, X.; Guan, Q.; Zhao, S. A Multi-Agent Deep Reinforcement Learning-Based Popular Content Distribution Scheme in Vehicular Networks. Entropy 2023, 25, 792. https://doi.org/10.3390/e25050792


Chicago/Turabian Style: Chen, Wenwei, Xiujie Huang, Quanlong Guan, and Shancheng Zhao. 2023. "A Multi-Agent Deep Reinforcement Learning-Based Popular Content Distribution Scheme in Vehicular Networks" Entropy 25, no. 5: 792. https://doi.org/10.3390/e25050792
