Abstract

The existence of the typical set is key for data compression strategies and for the emergence of robust statistical observables in macroscopic physical systems. Standard approaches derive its existence from a restricted set of dynamical constraints. However, given its central role underlying the emergence of stable, almost deterministic statistical patterns, a question arises whether typical sets exist in much more general scenarios. We demonstrate here that the typical set can be defined and characterized from general forms of entropy for a much wider class of stochastic processes than was previously thought. This includes processes showing arbitrary path dependence, long range correlations or dynamic sampling spaces, suggesting that typicality is a generic property of stochastic processes, regardless of their complexity. We argue that the potential emergence of robust properties in complex stochastic systems provided by the existence of typical sets has special relevance to biological systems.

1. Introduction

Many living systems are characterized by a high degree of internal stochasticity and display processes that form organization of growing complexity [1,2,3,4]. Such complexification processes exist on various scales, from the evolutionary scale [5,6] to the scale of single organisms [7]. The increasing complexity of living entities has triggered a debate on whether open-endedness is a defining trait of biological evolution [8,9,10,11,12,13,14], with the resulting challenge of finding a statistical-physics-like characterization of it. At the single-organism scale, the developmental process consists of the emergence of an adult multicellular organism from a single cell [7]. This astonishingly fast process of complexification poses challenges in many directions. One of them is to characterize how the space of configurations available to the system evolves in time. In early embryo morphogenesis, for example, not only does the number of cells increase exponentially, with a corresponding increase in the number of potential configurations, but cells also differentiate into specialized cell types and create collective structures, implying, in statistical physics language, that new states enter the system. This process is almost completely irreversible and, although highly precise, it is known to have a strong stochastic component [15,16,17]. Beyond biology, non-stable configuration spaces and processes of complexification have been identified in systems with innovation [18,19,20]. Conversely, one can consider processes where the number of potential configurations decreases with time: recent advances in decay dynamics in nuclear physics were achieved using a mathematical framework based on the stochastic collapse of the phase space [21,22,23].
In Figure 1 we schematically show the kind of processes we are exploring, all taking place in dynamic phase spaces, that is, processes where the number of available possibilities may change (either grow or decrease) in time. Despite the ubiquity of such phenomena, a comprehensive characterization of systems with dynamic phase spaces, in terms equivalent to the ensemble theory of statistical mechanics, is lacking.

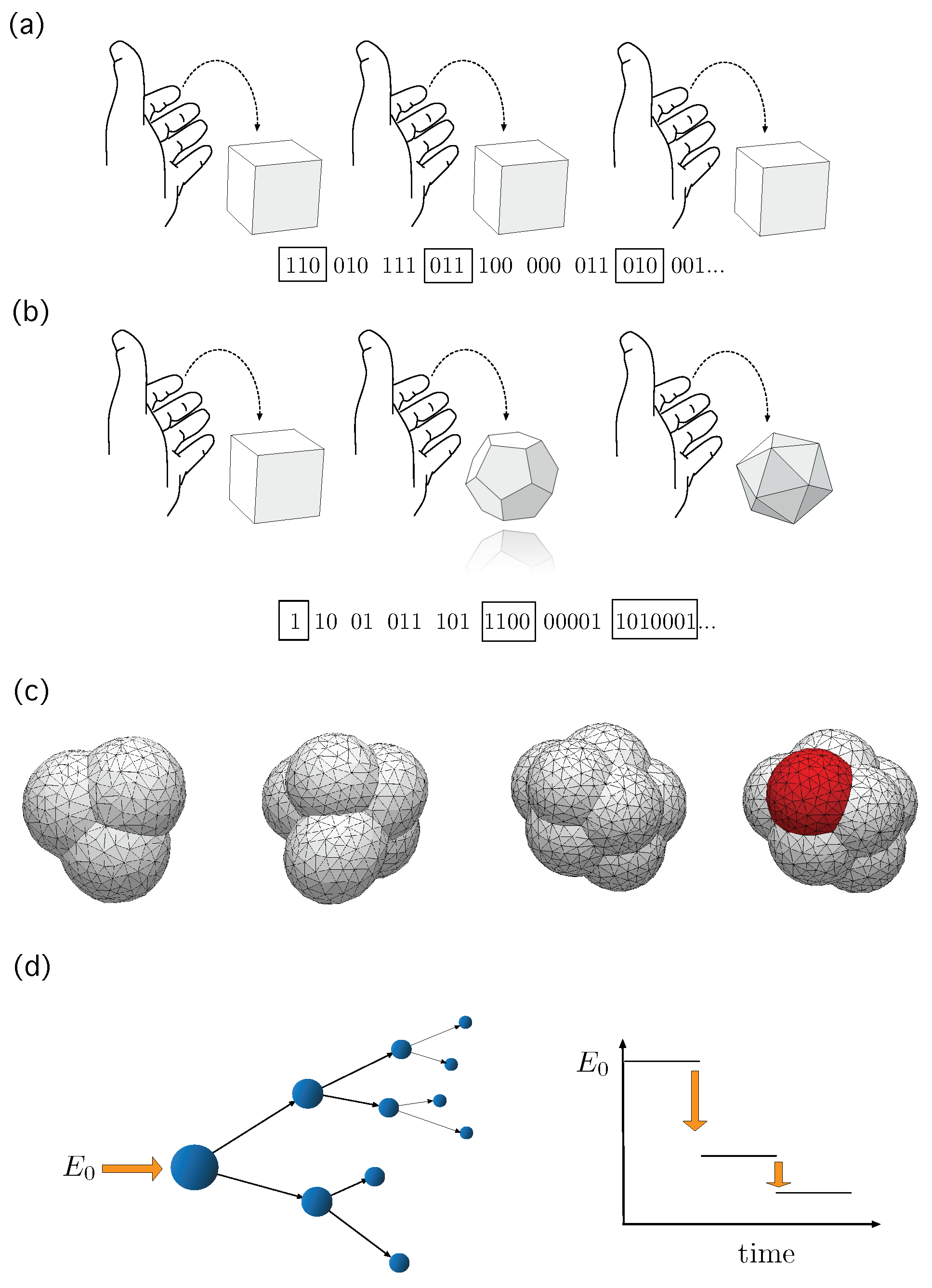

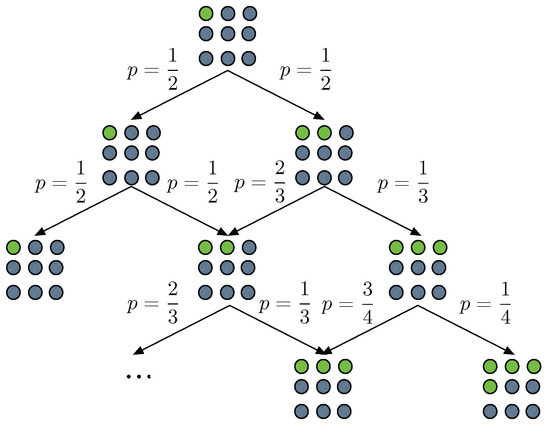

Figure 1.

(a) Independent draws of the same die, either fair or biased, define an i.i.d. stochastic process whose typical set is well defined and grows approximately exponentially [24]. (b) An example of a system whose typical set may show super-exponential growth: at every draw we update the die by adding, e.g., a new face. (c) The potential configurations of early embryo development intuitively resemble the picture of the die with a growing number of faces. In this biological setting, new cells appear and, with them, new configurations; on top of that, cells differentiate into new types (shown here in red), adding states to the system that were not there before. Interestingly, although highly reproducible, the whole process displays a strong stochastic component [15]. (d) Nuclear disintegration can be studied within the framework of collapsing phase spaces [23]. In these processes, the number of potential configurations of the system shrinks as the process unfolds. Toy models of embryo packings in (c) have been drawn using the Evolver software package.

Ensemble formalism in statistical mechanics can be grounded in the concept of typicality [24,25,26,27,28]. Informally speaking, given the set of all potential sequences of events resulting from a stochastic process, a subset, the typical set, carries most of the probability [24,25]. This should not be confused with the set of most probable sequences: in the case of the biased coin, for example, the most probable sequence is not in the typical set. In other words, for long enough sequences, the probability that the observed sequence or state belongs to the subset of sequences forming the typical set goes asymptotically to 1. Generalizing to continuous systems, this is known as the concentration of measure phenomenon [29]. Accordingly, a typical property for a stochastic system is robust and acts as a strong, almost deterministic attractor as the process unfolds [27], and one may expect to observe it in the vast majority of cases. Moreover, if such a typical property exists, one can use this single property to—at least partially—characterize the system, and hence avoid a detailed microscopic description of all of the system’s components. Arguably, considerations based on typicality drive the connection between microscopic dynamics and macroscopic observables and underlie the existence of the thermodynamic limit [28,30,31]. In the context of information theory, the existence of the typical set for a given information source has deep consequences in the context of data compression [24,25].
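The biased-coin remark above can be checked numerically. The following is a minimal sketch, assuming a coin with probability 0.8 of heads and a tolerance of 0.1 (both values, and the function name `typicality_demo`, are illustrative choices and not taken from the text). It estimates the fraction of sampled paths whose normalized log-probability lies close to the Shannon entropy, and compares this with the single most probable path (all heads).

```python
import math
import random

def typicality_demo(p_heads=0.8, t=2000, n_paths=500, eps=0.1, seed=0):
    """Estimate the fraction of sampled coin-flip paths that are eps-typical."""
    rng = random.Random(seed)
    # Shannon entropy (in nats) of a single flip
    h = -(p_heads * math.log(p_heads) + (1 - p_heads) * math.log(1 - p_heads))
    typical = 0
    for _ in range(n_paths):
        heads = sum(rng.random() < p_heads for _ in range(t))
        # -log p(x) for a path with `heads` heads and t - heads tails
        neg_log_p = -(heads * math.log(p_heads)
                      + (t - heads) * math.log(1 - p_heads))
        # eps-typical: -log p(x) / (t * H) is within eps of 1
        if abs(neg_log_p / (t * h) - 1.0) < eps:
            typical += 1
    # Same normalized quantity for the most probable path (all heads)
    ratio_all_heads = (-t * math.log(p_heads)) / (t * h)
    return typical / n_paths, ratio_all_heads

fraction, ratio_all_heads = typicality_demo()
print(f"fraction of eps-typical sampled paths: {fraction:.3f}")
print(f"-log p / (t H) for the all-heads path: {ratio_all_heads:.3f}")
```

Under these assumptions almost every sampled path is typical, while the all-heads path, although individually the most probable, has a normalized log-probability far from 1 and therefore falls outside the typical set.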

The size of the typical set gives us valuable information on how the stochastic process is filling the phase space. In equilibrium systems or for information sources drawing independently from identically distributed (i.i.d.) random variables, the Gibbs–Shannon entropic functional arises naturally in the characterization of the typical set [24,25], establishing a clear connection between thermodynamics and phase space occupation. In systems/processes with collapsing or exploding phase spaces, path dependence or strong internal correlations [4,18,19,20,21,32,33,34,35,36,37], the phase space may grow super- or sub-exponentially, and the emergence of the Gibbs–Shannon entropic functional derived from phase space volume occupancy considerations is no longer guaranteed. The same situation may arise in cases dealing with non-stationary information sources [38,39,40,41,42]. Generalized forms of entropy have been proposed to encompass these more general scenarios [43,44,45,46,47,48,49,50,51], some of them explicitly linking the entropic functional to the expected evolution of the phase space volumes [36,46,52,53,54,55,56]. Despite the notable advances reported also for systems with physical significance [37,57,58], the concept of typicality has not yet been explored for systems/processes with exploding or shrinking phase spaces, which display path dependent dynamics or are subject to emergent internal constraints and correlations.

The purpose of this paper is to fill this important gap in the theory of stochastic processes, providing results with potential implications for the theory of non-equilibrium systems, data compression and coding strategies. As we shall see, the typical set can be defined for processes arbitrarily far from the i.i.d. framework by assuming only a very generic convergence criterion, satisfied by a broad class of stochastic processes that we refer to here as compact stochastic processes.

2. Results

2.1. Compact Stochastic Processes

Let us consider a general class of stochastic processes [59,60]. This class encompasses almost any discrete stochastic process that can be conceived. A realization of t steps of the process is denoted as :

where are random variables themselves. Note that, in different realizations of t steps of the process, the sequence of random variables can be different, as the process may display path dependence, long term correlations, or changes of the state space dynamics itself (either shrinking or expanding). We denote a particular trajectory/path the process may follow as:

being the set of all possible paths of the process up to time t. We focus on the family of stochastic processes where there exists (i) a positive, strictly concave and strictly increasing function in the interval , such that , and (ii) a positive, strictly increasing, , in the interval , by which:

where the convergence is in probability [60]. We will call this family of stochastic processes compact stochastic processes (CSP). Given a CSP , a pair of functions by which Equation (1) is satisfied defines a compact scale of the CSP . Note that these two functions may not be unique for a given process, meaning that the process can, in principle, have several compact scales.

It is straightforward to check that, if is a sequence of i.i.d. random variables , and is t times the Shannon entropy of a single realization, , the above condition holds, as it recovers the standard formulation of the Asymptotic Equipartition Property (AEP) [24,25]. Therefore, the drawing of i.i.d. random variables is a CSP with compact scale . However, the range of potential processes that are CSPs is, in principle, much broader. In consequence, the first question we ask concerns the constraints that the convergence condition (1) imposes on . Assuming that (1) holds, one finds that the candidates to characterize CSPs are the s satisfying the following condition; see Proposition A1 of the Appendix B.2 for details:

Typical candidates for are of the form , where are two positive, real valued constants or, more generally:

where and are positive, real valued constants. In previous approaches, these constants have been identified as scaling exponents, enabling us to classify the different potential growth dynamics of the phase space [54]. We observe that the existence of the inverse function of , , by which , is guaranteed by the assumption made in the definition of CSPs that is a strictly monotonically growing function.
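As a worked illustration of the i.i.d. case discussed above, the following sketch writes the convergence condition in one plausible form and checks it for independent draws. The notation is an assumption of this sketch: $x(t)=x_1,\dots,x_t$ denotes a path, $p(x(t))$ its probability, $H(X)>0$ the Shannon entropy of a single draw, and the condition is taken to be that $\Lambda(1/p)/g(t)$ tends to 1 in probability, which is consistent with the statement that the standard AEP is recovered for $\Lambda=\log$ and $g(t)=t\,H(X)$.

```latex
% Assumed form of the convergence condition (notation is illustrative):
\begin{align*}
\frac{1}{g(t)}\,\Lambda\!\left(\frac{1}{p(x(t))}\right)
  &\xrightarrow[t\to\infty]{} 1 \quad \text{(in probability)}. \\[4pt]
% i.i.d. case: \Lambda = \log, \; g(t) = t\,H(X), \; p(x(t)) = \prod_s p(x_s)
\frac{1}{t\,H(X)}\,\log\frac{1}{p(x_1,\dots,x_t)}
  &= \frac{1}{H(X)}\cdot\frac{1}{t}\sum_{s=1}^{t}\log\frac{1}{p(x_s)}
  \xrightarrow[t\to\infty]{} \frac{H(X)}{H(X)} = 1 ,
\end{align*}
% where the limit follows from the weak law of large numbers, since
% E[\log(1/p(X))] = H(X).
```

The second line is the usual statement of the AEP divided by $H(X)$, so in this reading the i.i.d. process is compact with the pair $(\log,\ t\,H(X))$.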

The convergence condition defining CSPs has a direct consequence on how probabilities are distributed along the set of all potential paths. Indeed, from Equation (1) it follows that there exist two non-increasing sequences of positive numbers , , with , from which one can define a sequence of subsets of paths (such that ) as follows: For all :

and the probability of a given path to belong to is bounded as:

where:

We call the sequence of subsets of the respective sampling spaces a sequence of typical sets of η. Informally speaking, Equation (4) tells us that, for large enough t, the probability of observing a path that does not belong to the typical set becomes negligible. As a consequence, the typical set can be identified for CSPs: given a CSP, the typical set absorbs all the probability in the limit t → ∞. We summarize the above considerations in a theorem:

Theorem 1.

If η is a CSP in , then there exist (a) a non-increasing sequence of positive numbers with limit , associated to η and hence (b) the respective sequence of typical sets by which:

Proof.

Since is a CSP we know, by assumption, that, as also (in probability). We can rewrite this condition by stating that, for every , there exists a by which, for each [60]:

Let be the smallest such . We can then use two arbitrary strictly monotonic decreasing functions and that converge to zero and construct a monotonically increasing sequence of times such that for all it is true that

From that, it is straightforward to define a non-increasing sequence converging to 0, by just taking:

Finally, the condition:

follows as a direct consequence of the construction of the sequence of typical sets , thereby concluding the proof. □

We omitted a direct reference to the process in the notation of the typical set (i.e., ) for the sake of readability, and we will explicitly refer to it only if it is strictly necessary. In the next section we provide more details on the specific bounds in size by studying a subclass of the CSPs, namely, the class of simple CSPs. For them, the characterization of the typical set can be achieved using generalized forms of entropy.

2.2. The Typical Set and Generalized Entropies

Equation (1) can be related to a general form of path entropy:

It can be proven that satisfies three of the four Shannon–Khinchin axioms expected by an entropic functional [25,61,62] in Khinchin’s formulation [62], to be referred to as SK1, SK2, SK3. In particular SK1 states that entropy must be a function of the probabilities, which is satisfied by , by construction. SK2 states that is maximized by the uniform distribution q over , i.e.,

Finally, SK3 states that, if , then does not contribute to the entropy, which implies:

satisfied as well for any considered in the definition of the CSPs.

We further observe that is a monotonically increasing function as well, in the case of uniform probabilities: Let us suppose two CSPs and that sample uniformly their respective sampling spaces, , such that . Let, in consequence, q and be the uniform distributions over and , respectively. Then:

where are the generalized entropies as defined in Equation (5) applied to distributions q and . In Proposition A3 of Appendix C we provide details of the above derivations. We observe that SK4 is not generally satisfied: this axiom states that , and one can only guarantee its validity in the case of Shannon entropy, where . In the general case, this condition may not be satisfied. A different arithmetic rule can substitute SK4 to accommodate other entropic forms [46]. Notice, however, that Shannon (path) entropy, i.e., , may be used in the compact scale of a CSP in a broad spectrum of cases, including systems with correlations or super-exponential sample space growth, as we will see in Section 2.3.
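The properties SK2 and SK3 discussed above can be explored numerically. The sketch below assumes that the generalized path entropy has the form S_Λ = Σ p Λ(1/p) (the displayed definition is not reproduced in this copy, so this form is an assumption), and uses two illustrative choices of Λ: the logarithm, which gives Shannon entropy, and a hypothetical Λ(x) = sqrt(log x), which is concave, increasing and vanishes at x = 1. Whether the second choice meets every condition imposed on Λ in the text is not checked here; only concavity is needed for the comparison shown.

```python
import math
import random

def generalized_entropy(probs, lam):
    """Assumed form S_Lambda = sum_x p(x) * Lambda(1 / p(x)).

    Zero-probability entries are skipped, mirroring SK3."""
    return sum(p * lam(1.0 / p) for p in probs if p > 0.0)

def lam_log(x):
    return math.log(x)              # recovers Shannon entropy (nats)

def lam_sqrt_log(x):
    return math.sqrt(math.log(x))   # hypothetical concave choice, Lambda(1) = 0

def random_distribution(n, rng):
    weights = [rng.random() for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]

rng = random.Random(1)
n = 50
uniform = [1.0 / n] * n
skewed = random_distribution(n, rng)

for name, lam in (("log", lam_log), ("sqrt(log)", lam_sqrt_log)):
    s_uniform = generalized_entropy(uniform, lam)
    s_skewed = generalized_entropy(skewed, lam)
    print(f"{name}: uniform={s_uniform:.4f} "
          f"skewed={s_skewed:.4f} Lambda(n)={lam(n):.4f}")
```

For any concave Λ, Jensen's inequality gives Σ p Λ(1/p) ≤ Λ(Σ p · 1/p) = Λ(n), with equality for the uniform distribution, which is why the uniform value coincides with Λ(n) and bounds the skewed one.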

If the contributions to the above entropy of the paths belonging to the complementary set of , that is, , are negligible in the limit t → ∞, then we call the CSP simple. For simple CSPs with compact scale the following convergence condition holds:

Moreover, in simple CSPs, the typical set has the largest contribution to the entropy. First we define the contribution of the typical set to the entropy, , as:

We then demonstrate the above claim with the following theorem:

Theorem 2.

If η is a simple CSP with compact scale , there exists a non-increasing sequence of positive numbers with limit and its corresponding sequence of typical sets , such that:

Proof.

We will start with the second equality, namely:

From the definition of typical sets we know that, for paths , it is true that:

In consequence, given that , one can bound as:

Since, by construction , this second part of the theorem is proven. From that, the statement of the theorem:

follows directly given the assumption of simplicity. □

In consequence, the typical set can be naturally defined for simple CSPs in terms of the generalized entropy . To see that, we first reword condition (1) for simple CSPs as:

(in probability). We can rewrite the above condition in a more convenient form: Given a simple CSP , there are two non-increasing sequences of positive numbers , , with , by which:

Then, for each there is a set of paths, the typical set , such that for all :

by which . Notice that, now, the typical set is characterized using the generalized entropy .

The next obvious question refers to the cardinality of the typical set . We will see that it can be bounded from above and below in a way analogous to the standard one [24]. The first bound is obtained by observing that:

where is the inverse function of , i.e., , which exists given the assumption that is a monotonically growing function made in the definition of CSPs. From that, it follows that the cardinality of the typical set is bounded from below as:

For the upper bound, we observe that:

leading to:

Given the bounds provided in Equations (8) and (9), one can (roughly) estimate the cardinality of the typical set as:

We present a rigorous version of the above result as a proposition.

Proposition 1.

Let η be a simple CSP in with some typical localizer sequence , then:

Proof.

From Equations (8) and (9), one can derive the following chain of inequalities:

The last term poses no difficulties. To explore the behavior of the first one, we just rename the term:

and rewrite the first term of the inequality:

We know, from Proposition A1, that the functions we are dealing with behave such that:

As a consequence:

Therefore, since also the third term goes trivially to , we can conclude that:

as we wanted to demonstrate. □

The asymptotic equivalence proven above allows us to rewrite the entropy in a Boltzmann-like form:

This identifies the cardinality of the typical set with Boltzmann’s W, the number of alternatives the system can effectively display. Finally, we notice that we can (roughly) approximate the typical probabilities as:

We thus provided a general proof that the typical set exists and that it can be properly defined for a wide class of stochastic processes, the CSPs, those satisfying convergence condition (1). Moreover, we show that its volume can be bounded and fairly approximated as a function of the generalized entropy emerging from the convergence condition, , as defined in Equation (5).
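The reasoning behind the cardinality bounds can be sketched as follows. The notation is assumed for illustration and may differ from the original equations: $A(t)$ is the typical set, $\varepsilon_t$ and $\delta_t$ are the two sequences tending to zero, and $\Lambda^{-1}$ is the inverse of the strictly increasing function $\Lambda$. The typical set is taken to be the set of paths for which $\Lambda(1/p)/g(t)$ lies within $\varepsilon_t$ of 1, with total probability larger than $1-\delta_t$.

```latex
% Assumed notation: A(t) typical set, \varepsilon_t, \delta_t \to 0.
\begin{align*}
x(t)\in A(t)
 &\;\Longrightarrow\;
 (1-\varepsilon_t)\,g(t) < \Lambda\!\left(\frac{1}{p(x(t))}\right)
   < (1+\varepsilon_t)\,g(t) \\
 &\;\Longrightarrow\;
 \frac{1}{\Lambda^{-1}\big((1+\varepsilon_t)\,g(t)\big)} < p(x(t))
   < \frac{1}{\Lambda^{-1}\big((1-\varepsilon_t)\,g(t)\big)} , \\[6pt]
1-\delta_t < \sum_{x(t)\in A(t)} p(x(t))
 &< \frac{|A(t)|}{\Lambda^{-1}\big((1-\varepsilon_t)\,g(t)\big)}
 \;\Longrightarrow\;
 |A(t)| > (1-\delta_t)\,\Lambda^{-1}\big((1-\varepsilon_t)\,g(t)\big) , \\[6pt]
1 \ge \sum_{x(t)\in A(t)} p(x(t))
 &> \frac{|A(t)|}{\Lambda^{-1}\big((1+\varepsilon_t)\,g(t)\big)}
 \;\Longrightarrow\;
 |A(t)| < \Lambda^{-1}\big((1+\varepsilon_t)\,g(t)\big) .
\end{align*}
```

For $\Lambda=\log$ and $g(t)=t\,H(X)$ these reduce to the familiar i.i.d. bounds $(1-\delta_t)\,e^{t H(X)(1-\varepsilon_t)} < |A(t)| < e^{t H(X)(1+\varepsilon_t)}$, which is the sense in which the estimate is analogous to the standard one [24].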

2.3. Example: A Path Dependent Process

We briefly explore the behavior of the typical set and its associated entropic forms through a model displaying both path dependence and unbounded growth of the phase space. The process works as follows: Let us suppose we have a restaurant with an infinite number of tables . At a customer enters the restaurant and sits at table . At time t a new customer enters the restaurant where already tables are occupied; the occupation number of each table is unbounded. The customer can choose either to sit at an already occupied table from the occupied tables, each with equal probability , or at the next unoccupied one, , again with probability . This process is a version of the so-called Chinese restaurant process [33,63], exhibiting a simple form of memory/path dependence. Hence, we refer to it as the Chinese restaurant process with memory (CRPM). In Figure 2 we sketch the rules of this process. Crucially, as t → ∞, the random variable accounting for the number of tables has the following convergent behavior; see Proposition A5 in Appendix D.2 for details:

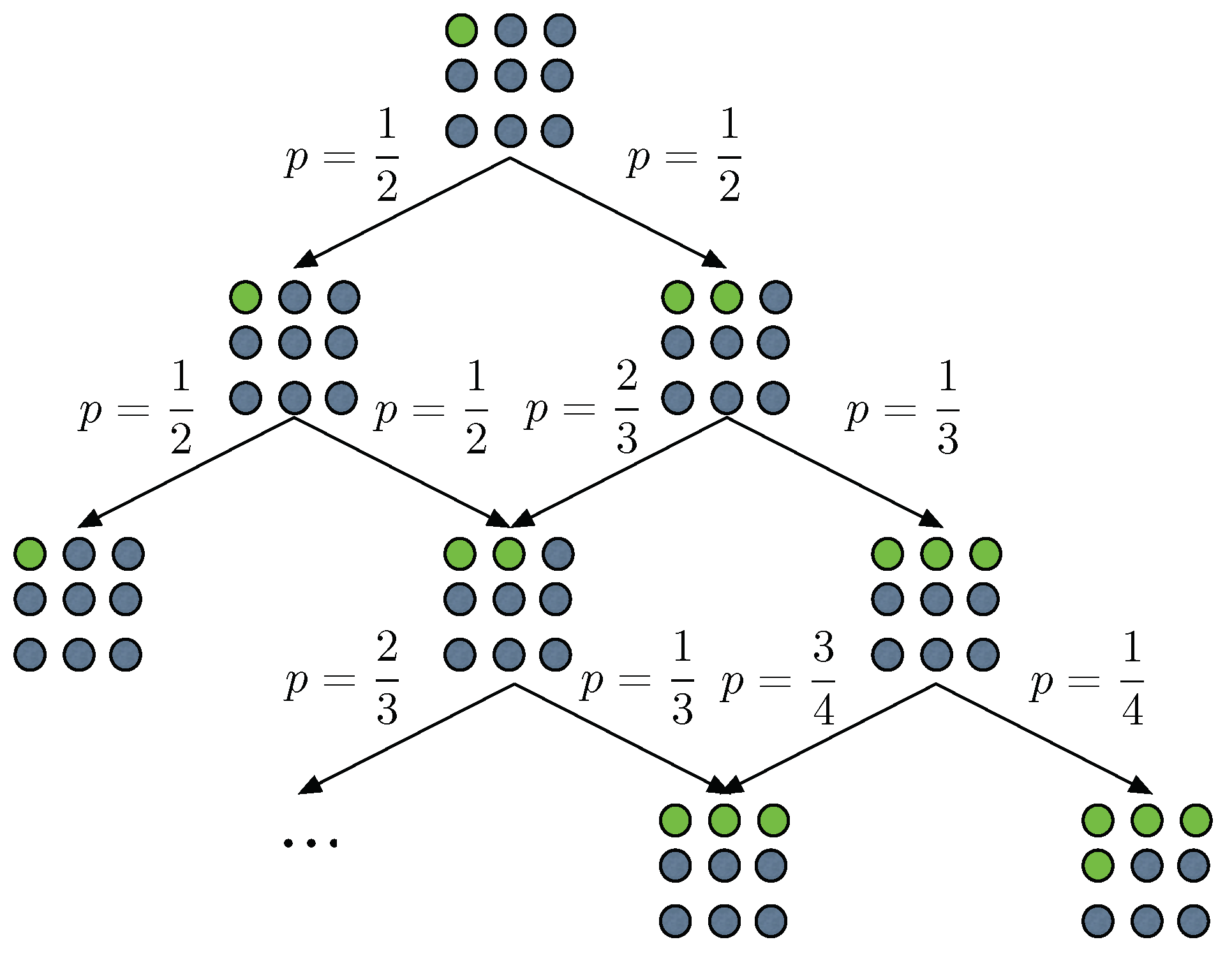

Figure 2.

The rules of the Chinese restaurant process with memory. Here green circles represent occupied tables and grey circles empty tables. Notice that in the mathematical formulation of the problem the number of tables is infinite. Arrows depict the possible transitions of the process and the associated probabilities.

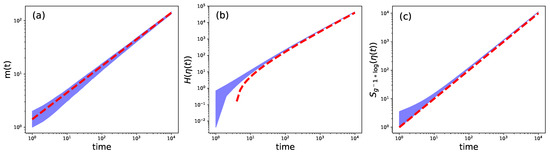

In Figure 3a we see that the prediction is quite accurate when compared to numerical simulations of the process. This property enables us to demonstrate that the CRPM we are studying is actually a CSP with compact scale ; see Theorem A1 of the Appendix D.3. In particular, Equation (1) is satisfied, in this particular case as:

in probability. In addition, the process is simple; see Theorem A2. Since we are using , the entropy form that will arise is Shannon path entropy, by direct application of Equation (5), i.e., , with defined as:

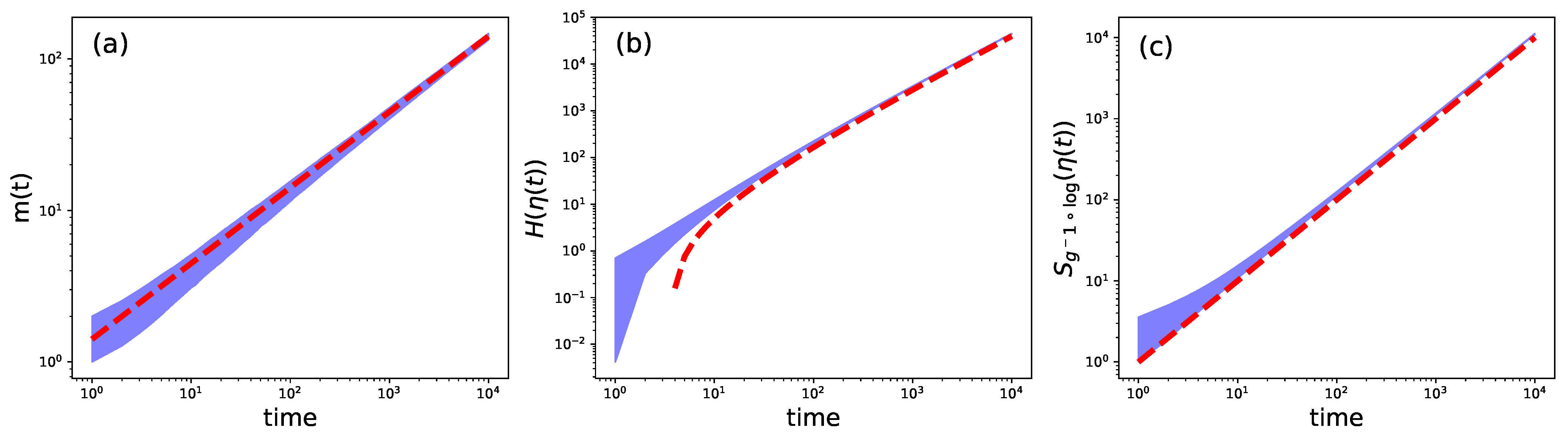

Figure 3.

Numerical simulations for the Chinese restaurant process with memory. The blue cloud represents actual numerical outcomes and the dashed orange line the theoretical prediction. Time is given in arbitrary units, each representing a step in the process. (a) Evolution of the number of occupied tables against the prediction . (b) The evolution of Shannon path entropy for the CRPM, being the prediction given in (11). The dashed red line shows the function . (c) Evolution of the generalized path entropy , with as defined in Equation (14). Numerical outcomes have been obtained from 1000 replicas of the whole CRPM process up to steps.

It directly follows that:

Given the compact scale used, one can estimate the evolution of the size of the typical set as:

where Γ is the standard Γ-function [64]. We see that the growth of the typical set as shown in Equation (13) is clearly faster than exponential. The phenomenon of concentration of measure [29] is clearly manifested here, as the size of the typical set vanishes in relation to the size of the whole potential set of outcomes, . A rough estimation gives , leading to:

despite .

Additionally, in Figure 3b we see that the prediction made in Equation (12) closely matches the numerical realizations of the process. Note that we have shown the dependence on Shannon path entropy for clarity of exposition. Indeed, as pointed out above, a CSP may have several compact scales. For example, taking the compact scale that led to Shannon entropy, , with , one can construct another compact scale for the CRPM by composing (which, by assumption, exists) with both functions. In consequence, one will have a new compact scale , defined as:

where is the positive, real branch of the Lambert W function [64]. In Figure 3c we see that closely matches , supporting the claim that is a compact scale for the CRPM; see also Appendix D.5. We observe that this particular compact scale makes the path entropy extensive when applied to the CRPM.
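The CRPM rules described in this section are simple enough to simulate directly. The following is a minimal sketch, assuming that with N occupied tables each of the N + 1 options (the N occupied tables and the next empty one) is chosen with probability 1/(N + 1). The reference values used for comparison are rough mean-field estimates derived for this sketch by treating N as continuous with dN/dt = 1/(N + 1); they are not formulas quoted from the text, and the function name `simulate_crpm` is hypothetical.

```python
import math
import random

def simulate_crpm(t_max, seed=0):
    """Simulate one CRPM path.

    Returns (number of occupied tables, -log of the path probability)."""
    rng = random.Random(seed)
    tables = 1          # the first customer sits at the first table
    neg_log_p = 0.0     # that first step is deterministic and contributes 0
    for _ in range(2, t_max + 1):
        options = tables + 1             # occupied tables plus the next empty one
        neg_log_p += math.log(options)   # each option has probability 1 / options
        if rng.randrange(options) == tables:
            tables += 1                  # the customer opened a new table
    return tables, neg_log_p

t_max = 100_000
tables, neg_log_p = simulate_crpm(t_max)

# Mean-field estimates (assumptions of this sketch):
# N(t) ~ sqrt(2 t), and -log p ~ (1/2) * [(t - 1) log 2 + log t!]
tables_estimate = math.sqrt(2 * t_max)
estimate = 0.5 * ((t_max - 1) * math.log(2) + math.lgamma(t_max + 1))

print(f"occupied tables: {tables}, mean-field estimate: {tables_estimate:.1f}")
print(f"-log p(path): {neg_log_p:.1f}, mean-field estimate: {estimate:.1f}")
```

Because every transition is uniform over the currently available options, the path probability depends on the path only through the history of the table count, and the leading growth of -log p is of order t log t, faster than the linear growth of the i.i.d. case.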

3. Discussion

We demonstrated that, for a very general class of stochastic processes, which we refer to as compact stochastic processes, the typical set is well defined, as the probability measure tends to concentrate in clearly identifiable regions of the space of possible outcomes of the process. These processes can be path dependent, contain arbitrary internal correlations or display dynamic behavior of the phase space, showing sub- or super-exponential growth of the effective number of configurations the system can achieve. The only requirement is that there exist two functions for which Equation (1) holds. Along with the existence of the typical set, a generalized form of entropy naturally arises, from which, in turn, the cardinality of the typical set can be computed.

The existence of the typical set in systems with arbitrary phase space growth opens the door to a proper characterization, in terms of statistical mechanics, of a number of processes, mainly biological, where the number of configurations and states changes over time. In particular, it paves the path towards the statistical-mechanics-like understanding of processes showing open-ended evolution on the basis of typicality. For example, this could encompass thermodynamic characterizations of—part of—the developmental paths in early stages of embryogenesis. The existence of the typical set, even in some extreme scenarios of stochasticity and phase space behavior, leads us to the speculative hypothesis that typicality may underlie the astonishing reproducibility and precision of some biological processes. In this scenario, stochasticity would drive the system to the set of correct configurations—those belonging to the typical set—with high accuracy. Selection, in turn, would operate on typical sets, thereby promoting certain stochastic processes over others. More specific scenarios are nevertheless required in order to make this intriguing hypothesis more sound. Further works should clarify the potential of the proposed probabilistic framework to accommodate generalized, consistent forms of thermodynamics and explore the complications that can arise due to the loss of ergodicity that is characteristic for some of the processes that are nonetheless compatible with the above description.

Importantly, our results provide a potential starting point for an ensemble formalism for systems with sub- or super-exponential phase space growth. This opens the possibility of extending the concept of thermodynamic limit to these systems without requiring further conditions such as microscopic detailed balance, which cannot be justified in a broad range of out-of-equilibrium processes. Questions such as the definition of free energies or the possible need for extensivity, which have to be answered in order to progress towards a complete and consistent thermodynamic picture, remain open, however. For tentative answers to those questions, connections to early proposals could be drawn, both on thermodynamic grounds (see, e.g., [36,65,66]) and from the perspective of entropy characterization (see, e.g., [37,44,46,53,54]). In this paper we encounter an equivalence relation underlying compact scales, which allows us to transform between compact scales that do not differ too strongly, i.e., by not more than a power, without essentially changing the structure of the typical set sequence. One can use this fact to give the entropic functional particular properties, for instance making the entropy associated with the compact scale extensive, as we demonstrated above for the CRPM. However, this also introduces the possibility that processes may have more than one inequivalent compact scale. We are aware that, in order to demonstrate the full potential of the theory, one should aim at examples more extreme than the Chinese restaurant process we present. However, this would require an additional inquiry into the potentially hierarchical structure of inequivalent compact scales, which, in both technical and conceptual terms, goes beyond the scope of this paper. This intriguing issue may be related to different levels of coarse graining and deserves further investigation.

We finally point out the implications of our results for the study of information sources, given the fundamental role the typical set plays in optimal coding and data compression. The existence of the typical set in this broad class of information sources, where, simply put, the information flow is not constant, may open the possibility of new compression strategies. These strategies could be based, for example, on properties of the specific CSP representing the information source and the compact scale that characterizes the process.

Author Contributions

Conceptualization, R.H. and B.C.-M.; Formal analysis, R.H. and B.C.-M.; Writing–original draft, R.H. and B.C.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors want to thank Petr Jizba and Artemy Kolchinsky for the helpful discussions that enabled us to improve the quality of the manuscript. B.C.-M. wants to acknowledge the hints provided by Daniel R. Amor and the support of the field of excellence Complexity of Life, Basic Research and Innovation of the University of Graz.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

In this appendix we provide the mathematical details required to completely justify the results contained in the main text of the manuscript “The typical set and entropy in stochastic systems with arbitrary phase space growth”.

Appendix B. Compact Categorial Processes

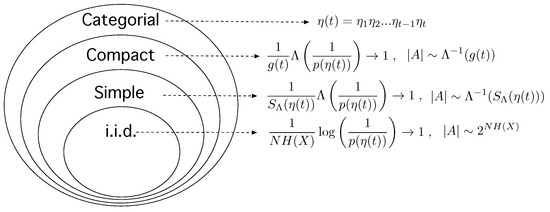

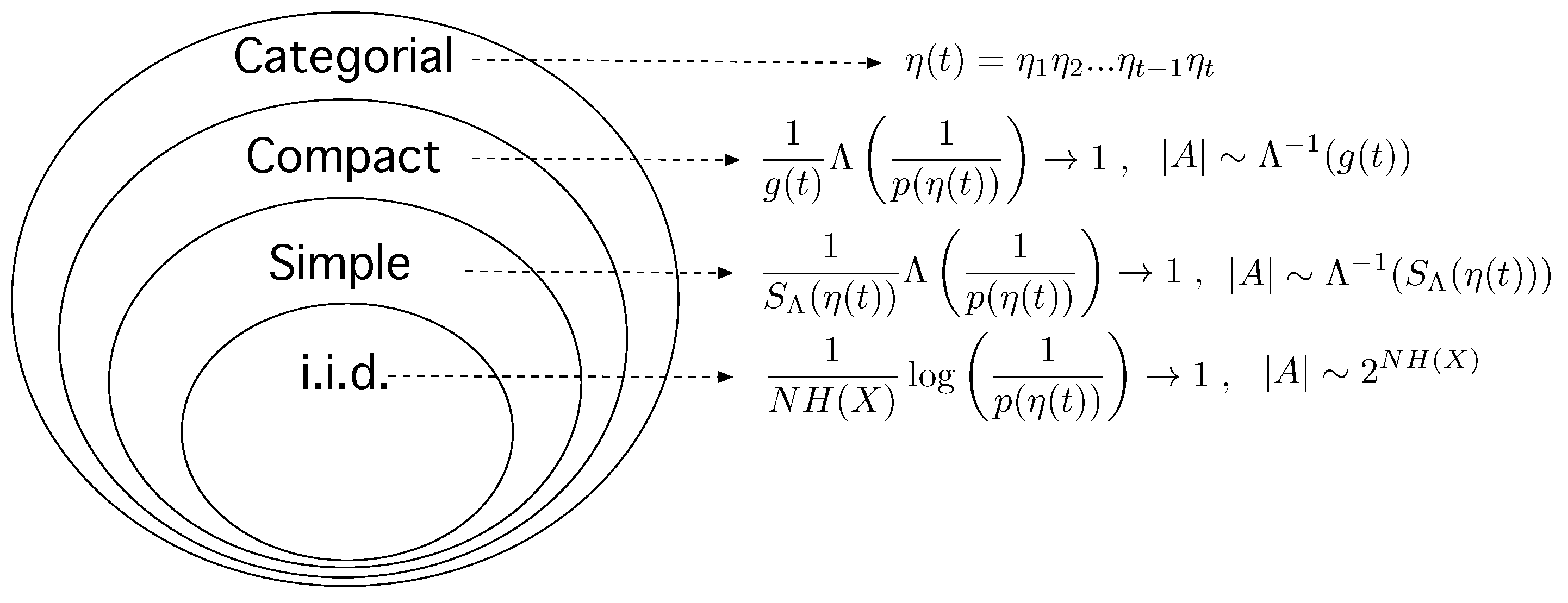

We start by providing a general framework for the processes considered in the paper. In Figure A1, below, we outline the hierarchy that our study induces over stochastic processes.

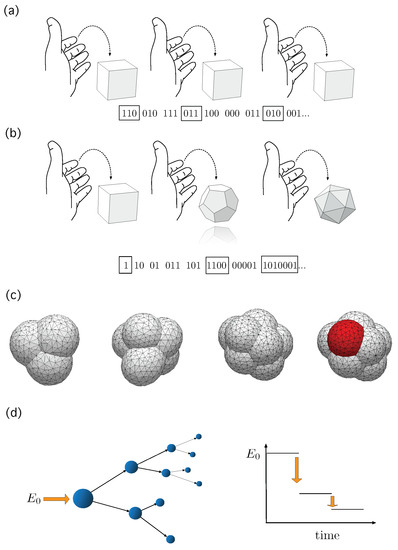

Figure A1.

A potential hierarchy of discrete stochastic processes. The largest class corresponds to the categorial processes, which comprise almost anything that can be conceived as a discrete stochastic process. A subclass of categorial processes are the compact processes, for which the convergence condition stated in Equation (1) of the main text holds and, therefore, a sequence of typical sets can be identified. Inside the compact processes, we identify the subclass of simple processes, where the sequence of typical sets can be defined in terms of a general form of entropy. Finally, the simplest subclass consists of stochastic processes given by sequences of independent, identically distributed random variables , for which the sequence of typical sets can be defined from the entropy in Shannon-like form. In turn, in i.i.d. systems, the path entropy up to time t can be written as t times the contribution of a single event [24]. Note that no assumptions of independence or stability of the sampling space are needed in the first two subclasses; even so, the typical set can be consistently identified. In addition, as we will see in Appendix D, the use of Shannon entropy to characterize the sequence of typical sets is not restricted to i.i.d. systems.


Appendix B.1. Categorial Processes

Categorial processes are processes that at any time t sample one state from a finite number of distinguishable states collected in the set , called the sample space of the process at time t. If we look at a discrete time line we represent the process up to time t as a sequence of random variables , i.e.,

The process neither needs to consist of statistically independent random variables nor does the sample space of the process need to be constant. That is, the local sample spaces of the variable can differ from the sample space of another variable . If we sample this provides us with a particular path:

with , being defined as:

in other words, the Cartesian product of all local sample spaces up to time t.

In principle it could be that also the sample space depends on the path the process has taken up to time . In consequence, could contain many paths that are not possible for the process and, therefore, have zero probability of being sampled. However, in such a case we can always consider the non-empty subset , which contains all paths , and only those paths of , with .

Definition A1.

Let η be a categorial process. We call the probability of paths to be sampled by η and, then, we call:

the well formed interior of or the path sample space of the process.

Let us call the set of states of that can be sampled at time t provided that our trajectory up to time was . The set of potential states that can be visited at time t, will then be:

i.e., contains all states the process could possibly sample at time t after all possible histories the process could have sampled up to time . In this way we can always assume that we can find and its well formed interior by pruning all ill formed sequences from . All information on how the sample space gets sampled then solely resides in the hierarchy of transition probabilities , where and . We therefore get:

In the context of categorial processes, is clearly a monotonic decreasing function in time bounded below by zero, . In consequence, converges for all possible paths of the process . This also means that for almost all paths the probability even though some finite number of paths could have non-zero probabilities even in the limit , although convergence is guaranteed (Unlike for processes, for systems, e.g., particles in a box, it is not guaranteed that adding a new particle, as analog of to a new sample step, . In consequence, for systems, we cannot guarantee convergence of as the system size ). After this general description of categorial processes, we can start by characterizing the subclass of them we are interested in. In the following we provide the condition of compactness that we impose to categorial processes in order to ensure the existence of a typical set.

Definition A2.

Let η be a categorial process and let denote the process up to time t and denote the path sample space of (as discussed above). Let us consider pairs of functions , such that Λ is a twice continuously differentiable, strictly monotonically increasing and strictly concave function on the interval with and ; and g is a twice continuously differentiable, strictly monotonically increasing function on the interval . If we can associate such a pair of functions with the process η, such that:

(in probability), then we call the process η compact in , and a compact scale of η.

We will call these processes Compact Stochastic Processes (CSP). Note that compact scales associated to a given CSP need not be unique (The fact that we can find different pairs of functions, , in which a process is compact, leads to questions related to equivalence relations on the space of pairs, . As it turns out, following up the idea of typical sets, that we are to explore below, a process induces an equivalence relation on this space, partitioning the space into monads of equivalent compact scales, , of the process. At the same time this means that there exist inequivalent compact scales, which can be thought of as different “scales of resolution” to look at a process.).

Appendix B.2. Properties of Λ

The next step is to check what kind of functions can be expected when dealing with CSPs. To that end, we will impose a condition on the CSP, namely, that the CSP is filling. From this very mild condition, we will then check which functions enable the convergence criteria to be fulfilled. First of all, we need to introduce some technical terms.

Definition A3.

Let η be a CSP in . Let be a non-increasing sequence of real numbers such that , from which one can construct a sequence of typical sets of the respective sample spaces such that . We define the upper and lower typical ratios, , in as:

where is the inverse function of Λ, which exists due to the strict monotonicity of Λ.

Note that, by construction, and .

Definition A4.

We call a CSP η filling in if its typical ratios have the property and .

We can now say something about the shape of functions .

Proposition A1.

If η is a filling CSP in , then it follows that:

for all .

Proof.

We note that for filling CSP it is true that:

as . We can rewrite and for we find a such that . Therefore for all we find:

The other case, , we prove analogously using instead of , and the proposition follows. □

To get an idea which kind of functions satisfy this condition we can look at the following example:

Proposition A2.

For any the function has the property for all .

Proof.

We can prove this by direct computation, i.e.,

Since , one can easily read from the last line that the example family of functions fulfills the proposition. □

In general we can say that candidates for of filling CSPs are of the form for some positive constants c and d or even slower growing functions of the form:

where and are positive, real valued constants.

Appendix C. Properties of the Generalized Entropies

The generalized entropy associated to a CSP in is defined as:

Definition A5.

Let η be a CSP in , then we call the measure, of , defined as:

a generalized path entropy associated with η. Note that for a set the generalized entropy measure, , is given by .

In the following proposition we see that the above defined entropy satisfies three of the four Shannon–Khinchin axioms for an entropy measure [62] (SK1, SK2, SK3). The fourth axiom (SK4) is not generally satisfied.

Proposition A3.

The entropy functional defined in Equation (A3) satisfies the first three of the four Shannon–Khinchin axioms for an entropy measure as formulated in [62]:

- SK1 is a continuous function only depending on the probabilities .

- SK2 is maximized if , i.e., equiprobability.

- SK3 If , then: , i.e., events with zero probability have no contribution to the entropy.

Proof.

To demonstrate SK1, it is enough to observe that the entropy is a function only of the probabilities and to take into account that, by assumption, Λ is twice continuously differentiable and, therefore, continuous.

To demonstrate SK2, we need to maximize the functional , defined as:

where is a Lagrangian multiplier implementing the normalization constraint. Maximizing with respect to a yields:

where and is the first derivative of . Note that if the equation does not depend explicitly on and if it has a unique solution then the proposition is proved, since all have the same value. To see that a unique solution exists we need to show that is strictly monotonic. To see that, it is enough to note that the first derivative of f is given by , since, by definition of compactness, and strictly concave; and therefore .

Finally, to demonstrate that the entropy satisfies SK3 we apply l’Hôpital’s rule. First, by defining , one has that:

Then, considering that, by definition, Λ is a strictly increasing and concave function, one is led, after applying l’Hôpital’s rule to the limit, to:

thereby concluding the proof. □

We observe that SK3 enables us to safely perform the sum for the entropy over the whole set of paths , since .
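The axioms SK2 and SK3 can be checked numerically with a minimal sketch. It assumes that the generalized entropy has the trace form $\sum_i p_i \Lambda(1/p_i)$, which is what the steps of the proof above and the Shannon case $\Lambda = \log$ suggest; the function `generalized_entropy` and the second choice $\Lambda(z) = \log(1 + \log z)$ are hypothetical illustrations, not taken from the text.

```python
import math

def generalized_entropy(probs, Lam):
    """Sum of p * Lam(1/p); outcomes with zero probability are skipped (SK3)."""
    return sum(p * Lam(1.0 / p) for p in probs if p > 0.0)

def slow_lambda(z):
    """Hypothetical increasing, concave choice on z >= 1: log(1 + log z)."""
    return math.log(1.0 + math.log(z))

uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

# With Lam = log the functional reduces to the Shannon entropy.
shannon_uniform = generalized_entropy(uniform, math.log)

# SK2: the uniform distribution should score higher than a skewed one.
gap_log = generalized_entropy(uniform, math.log) - generalized_entropy(skewed, math.log)
gap_slow = generalized_entropy(uniform, slow_lambda) - generalized_entropy(skewed, slow_lambda)

# SK3: the contribution p * Lam(1/p) of a vanishing probability tends to zero.
tiny = 1e-12
vanishing_term = tiny * slow_lambda(1.0 / tiny)
```

Both gaps come out positive and the vanishing term is negligible, in line with SK2 and SK3. This is only a spot check on two distributions, not a substitute for the proof.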

Appendix D. The Chinese Restaurant Process

We now turn to analyzing the version of the Chinese restaurant process with memory (CRPM) discussed in the main body of the paper. The version presented here is a variation of the standard Chinese Restaurant process as found in [33,63].

Appendix D.1. Definition and Basics

Suppose a restaurant with an infinite set of tables $T_1, T_2, \ldots$, each with infinite capacity. The first customer enters and sits at the first table $T_1$. The second customer now has a choice to also sit down at the first table $T_1$ together with the first customer or to choose a free table $T_2$, each with probability $1/2$. Let $N_t$ be the number of occupied tables at time $t$. If the $t$-th customer finds that $N_{t-1}$ tables are already occupied by some guests, then again the customer will choose one of the non-empty tables:

$$T_1, T_2, \ldots, T_{N_{t-1}},$$

each with probability $1/(N_{t-1}+1)$, in which case $N_t = N_{t-1}$, or the next empty table $T_{N_{t-1}+1}$, also with probability $1/(N_{t-1}+1)$. In this latter case $N_t = N_{t-1}+1$. The key quantity is therefore the number of occupied tables $N_t$. We observe that the number of occupied tables can be rewritten as a stochastic recurrence:

$$N_t = N_{t-1} + \xi_t,$$

where $\xi_t$ is a random variable for which:

$$P(\xi_t = 1) = \frac{1}{N_{t-1}+1}, \qquad P(\xi_t = 0) = \frac{N_{t-1}}{N_{t-1}+1}.$$

Clearly, with $N_0 = 0$,

$$N_t = \sum_{s=1}^{t} \xi_s$$

is a non-decreasing function in t. Now let us define $X_t$ as a random variable taking values uniformly at random over the set $\{T_1, \ldots, T_{N_{t-1}+1}\}$ at time t. The sequence of random variables describing the CRPM can be written as:

leading to paths of the kind:

We emphasize that the tables visited are distinguishable and can be visited repeatedly. The CRPM however does not fill up , i.e., there exist elements in , that are not potential paths of the CRP. This includes all sequences that select a table without ever having chosen some table , with before. For example, the path is not possible, because is chosen before . The CRPM therefore gives us the opportunity to introduce sampling spaces conditional to a particular well formed path. In this particular case, it is enough to observe that the sampling space some well formed path sees at time t is given by:

where is the number of different tables the well formed CRPM path has sampled at time . By convention, we define .
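The conditional sampling space and the resulting set of well formed paths can be sketched as follows. Tables are represented by the integers 1, 2, ... (an illustrative encoding), and the function names are hypothetical. The sketch assumes, as described above, that the next customer may choose any occupied table or the next empty one.

```python
def sample_space(history):
    """Tables available after a well formed history: occupied ones plus the next empty one."""
    occupied = len(set(history))
    return list(range(1, occupied + 2))

def well_formed_paths(t):
    """Enumerate every CRPM path of length t by extending histories step by step."""
    paths = [()]
    for _ in range(t):
        paths = [h + (table,) for h in paths for table in sample_space(h)]
    return paths

def is_well_formed(path):
    """A table may be chosen only if every lower-numbered table was chosen before."""
    largest = 0
    for table in path:
        if table > largest + 1:
            return False
        largest = max(largest, table)
    return True

paths_4 = well_formed_paths(4)
```

For four customers this yields 15 well formed paths out of the $4^4 = 256$ sequences in the Cartesian product, which shows how sparsely the CRPM populates the product space even for very short paths.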

Appendix D.2. Statistics of the CRPM

We start computing the probability of a particular path .

Proposition A4.

Let process be the CRPM and be a given path of the process up to time t. Let be the number of occupied tables in the restaurant associated with the path at time . Then the probability to observe the particular sequence of tables, is given by:

Proof.

The proposition follows from direct calculation. □
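The direct calculation can be sketched under the assumption, taken from the description of the process, that every step is uniform over the occupied tables plus the next empty one, so that the path probability is a product of factors $1/(N+1)$, with $N$ the number of tables occupied before each step. The integer encoding of tables and the function name are illustrative.

```python
from fractions import Fraction
from itertools import product

def path_probability(path):
    """Exact probability of a table sequence; ill formed sequences get probability 0."""
    prob = Fraction(1)
    occupied = 0                            # convention: no table occupied before t = 1
    for table in path:
        if table > occupied + 1:            # skips an empty table: not a CRPM path
            return Fraction(0)
        prob *= Fraction(1, occupied + 1)   # uniform over occupied tables + next empty one
        if table == occupied + 1:
            occupied += 1
    return prob

t = 4
# Summing over the whole Cartesian product checks normalization on the well formed paths.
total = sum(path_probability(p) for p in product(range(1, t + 1), repeat=t))
```

Exact rational arithmetic is used so that the normalization check is not blurred by rounding. The path that opens a new table at every step, here `(1, 2, 3, 4)`, receives probability $1/4!$.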

Now we will see that the sequence corresponding to the number of occupied tables converges to a tractable functional form.

Proposition A5.

Given the sequence of occupied tables of the CRPM as defined in Equation (A4), then:

in probability.

Proof.

Consider the random variable denoting the amount of steps by which . is a geometric random variable with associated law:

Now we construct a new set of renormalized random variables, as:

In that context, the sum of is the sum of k random variables with mean 1 and . Therefore, there exists a monotonically increasing function, by which, by the law of large numbers, for each pair , there exists such that, for :

Notice that, in this setting, we have that the deviations behave close to a discrete random walk centered at 0 and with step length . Since for all , :

and, since, by construction:

one has that:

where “∼” means asymptotically equivalent, as we wanted to demonstrate. □
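A quick simulation can illustrate the scaling of the number of occupied tables. The sketch assumes that the limit takes the form $N_t/\sqrt{2t} \to 1$, which is what summing the mean waiting times $k+1$ of the geometric variables in the proof suggests; the seed, the horizon and the function name are arbitrary illustrative choices.

```python
import math
import random

def occupied_tables(t, rng):
    """Run the recurrence for t customers and return the number of occupied tables."""
    n = 0
    for _ in range(t):
        # A new table is opened with probability 1 / (n + 1).
        if rng.random() < 1.0 / (n + 1):
            n += 1
    return n

rng = random.Random(0)
t = 200_000
ratio = occupied_tables(t, rng) / math.sqrt(2 * t)
```

For a horizon of this size the ratio is expected to lie within a few percent of one, since the relative fluctuations of $N_t$ decay slowly with $t$.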

Appendix D.3. The Typical Set of the CRP

Theorem A1.

The CRPM is compact with compact scale .

Proof.

We need to demonstrate that:

We first note that, according to the definition of the sequence of the number of occupied tables given in Equation (A4), and the statement of Proposition A4, one can rewrite the logarithmic term of the condition for compactness as:

Now, let us define a new random variable as follows:

with . Notice that, according to Proposition A5, we have that:

We can then rewrite condition of compactness, with , as:

It remains to see that the second term of the sum goes to 0. Clearly, by Proposition A5:

Consequently:

Finally, we need to compute the asymptotic form of . Observing that we have a Riemann sum, one can consider:

thus concluding the proof. □
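The compactness condition can be probed numerically along a sampled path. The sketch below assumes the compact scale $\Lambda = \log$ and $g(t) = \frac{t}{2}\log t$, which is the form suggested by the $\sqrt{2t}$ growth of the number of occupied tables; both the scale and the function name are assumptions of this illustration.

```python
import math
import random

def log_inverse_path_probability(t, rng):
    """Sample one CRPM path and return log(1 / p) accumulated along it."""
    occupied = 0
    total = 0.0
    for _ in range(t):
        total += math.log(occupied + 1)     # each option has probability 1 / (occupied + 1)
        if rng.random() < 1.0 / (occupied + 1):
            occupied += 1
    return total

rng = random.Random(1)
t = 200_000
ratio = log_inverse_path_probability(t, rng) / (0.5 * t * math.log(t))
```

The ratio approaches one only logarithmically: the leading correction is of order $1/\log t$, so values a few percent below one are expected even for long paths.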

Appendix D.4. The Entropy of the CRP

We demonstrate here that the CRPM is simple. In consequence, the typical set can be computed as a function of the entropy. In that case, one can show that a suitable choice is , chosen for the sake of simplicity, implying that the associated entropy is Shannon path entropy. However, we emphasize that this choice is not unique. Given a different choice of g, one could have another by which the process is also simple and, therefore, the typical set could be defined through another form of entropy. We briefly comment on this point in the next section, sketching how another potential pair of functions would work as well.

Theorem A2.

The CRPM is simple in .

Proof.

Since the CRPM is compact with compact scale , we know that there is a typical localizer sequence with associated , such that . The paths belonging to the typical set , are those satisfying:

In addition, the measure associated to the typical set is given by:

In consequence,

Now let us consider that the complement of the typical set , whose measure is:

is completely populated by those paths by which:

therefore, for all paths :

The least probable path is the one that increases the number of tables at every step, having a probability of:

leading to , thanks to the Stirling approximation [64]. In that context:

Collecting the above reasoning, and by observing that the defined entropy is actually the Shannon path entropy, [24]:

one has that:

In consequence, by defining , one is led to:

Since we know that we conclude that:

as we wanted to demonstrate. □
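The Shannon path entropy of the CRPM can be computed exactly for moderate $t$ and compared with the growth function. The sketch relies on the chain rule: since every step is uniform over $N+1$ options, the conditional entropy of a step is $\log(N+1)$, and the path entropy is the sum of its expectations. The choice $g(t) = \frac{t}{2}\log t$ and the function name are assumptions of this illustration.

```python
import math

def shannon_path_entropy(t):
    """Exact path entropy: sum over steps of E[log(N + 1)], N = tables occupied before the step."""
    dist = [1.0]                            # dist[n] = P(N = n); initially N = 0
    entropy = 0.0
    for _ in range(t):
        new = [0.0] * (len(dist) + 1)
        for n, p in enumerate(dist):
            entropy += p * math.log(n + 1)
            new[n] += p * n / (n + 1)       # customer joins an occupied table
            new[n + 1] += p / (n + 1)       # customer opens a new table
        dist = new
    return entropy

t = 1000
ratio = shannon_path_entropy(t) / (0.5 * t * math.log(t))
```

As with the single-path check of compactness, the ratio converges to one slowly, with corrections of order $1/\log t$.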

Appendix D.5. A Different Compact Scale (Λ, g) for the CRP

Finally, we briefly comment on how another compact scale made of different functions could be used to characterize the typical set and the generalized entropy of the CRP. We avoid the technical details, for the sake of simplicity. We start by computing the inverse function of g, :

where is the Lambert function [64], where only the positive, real branch is taken into account. Then we compose it with the log function, thereby defining a new function as:

We observe that as above defined is a strictly growing, concave function with continuous second derivatives. Clearly, . Therefore, as a direct consequence of Theorem A1, the CRPM is compact in :

(in probability). In consequence, the size of the typical set and the typical probabilities can be approximated from the following generalized entropy :

where we explicitly wrote it in terms of the functional form of as defined in Equation (A6).
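The inversion through the Lambert function can be sketched numerically. The sketch assumes $g(t) = \frac{t}{2}\log t$, for which $g^{-1}(y) = \exp(W(2y))$ on the positive real branch, and it takes the new function to be $g^{-1}$ applied to the logarithm; both the form of $g$ and the order of composition are assumptions of this illustration. The Lambert function is evaluated with a plain Newton iteration to keep the example free of external libraries.

```python
import math

def lambert_w(x, tol=1e-12):
    """Positive real branch of the Lambert function for x >= 0, by Newton iteration."""
    w = math.log1p(x)                       # starting point at or above the solution
    for _ in range(100):
        step = (w * math.exp(w) - x) / (math.exp(w) * (w + 1.0))
        w -= step
        if abs(step) < tol:
            break
    return w

def g(t):
    """Assumed growth function of the CRPM compact scale."""
    return 0.5 * t * math.log(t)

def g_inverse(y):
    """Solve (t/2) log t = y for t >= 1, i.e., t = exp(W(2y))."""
    return math.exp(lambert_w(2.0 * y))

def lambda_tilde(z):
    """Hypothetical new scale function: g_inverse composed with log."""
    return g_inverse(math.log(z))

round_trip = g_inverse(g(50.0))
```

The round trip returns the starting value up to numerical precision, which confirms that the closed form with the Lambert function inverts the assumed $g$.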

References

1. Morowitz, H.J. Energy Flow in Biology: Biological Organization as a Problem in Thermal Physics; Academic Press: London, UK, 1968.

2. Bialek, W. Biophysics: Searching for Principles; Princeton University Press: Princeton, NJ, USA, 2012.

3. Solé, R.; Goodwin, B. Signs of Life; Basic Books; Perseus Group: New York, NY, USA, 2000.

4. Corominas-Murtra, B.; Seoane, L.; Solé, R. Zipf’s Law, unbounded complexity and open-ended evolution. J. R. Soc. Interface 2018, 15, 20180395.

5. Maynard Smith, J.; Szathmáry, E. The Major Transitions in Evolution; Freeman: Oxford, UK, 1995.

6. Bonner, J.T. The Evolution of Complexity by Means of Natural Selection; Princeton University Press: Princeton, NJ, USA, 1988.

7. Wolpert, L.; Jessell, T.; Lawrence, P.; Meyerowitz, E.; Robertson, E.; Smith, J. Principles of Development, 3rd ed.; Oxford University Press: Oxford, UK, 2007.

8. Schuster, P. How does complexity arise in evolution? In Evolution and Progress in Democracies. Theory and Decision Library; Götschl, J., Ed.; Springer: Dordrecht, The Netherlands, 1996; Volume 31.

9. Bedau, M.A.; McCaskill, J.S.; Packard, N.H.; Rasmussen, S.; Adami, C.; Green, D.G.; Ikegami, T.; Kaneko, K.; Ray, T.S. Open Problems in Artificial Life. Artif. Life 2000, 6, 363–376.

10. Ruíz-Mirazo, K.; Peretó, J.; Moreno, A. A universal definition of life: Autonomy and open-ended evolution. Orig. Life Evol. Biosph. 2004, 34, 323–346.

11. Ruíz-Mirazo, K.; Umérez, J.; Moreno, A. Enabling conditions for “open-ended” evolution. Biol. Philos. 2008, 23, 67–85.

12. Day, T. Computability, Gödel’s incompleteness theorem, and an inherent limit on the predictability of evolution. J. R. Soc. Interface 2012, 9, 624–639.

13. Packard, N.; Bedau, M.A.; Channon, A.; Ikegami, T.; Rasmussen, S.; Stanley, K.O.; Taylor, T. An Overview of Open-Ended Evolution: Editorial Introduction to the Open-Ended Evolution II Special Issue. Artif. Life 2019, 25, 93–103.

14. Pattee, H.H.; Sayama, H. Evolved Open-Endedness, Not Open-Ended Evolution. Artif. Life 2019, 25, 4–8.

15. Dietrich, J.E.; Hiiragi, T. Stochastic patterning in the mouse pre-implantation embryo. Development 2007, 134, 4219–4231.

16. Maitre, J.L.; Turlier, H.; Illukkumbura, R.; Eismann, B.; Niwayama, R.; Nédélec, F.; Hiiragi, T. Asymmetric division of contractile domains couples cell positioning and fate specification. Nature 2016, 536, 344–348.

17. Giammona, J.; Campàs, O. Physical constraints on early blastomere packings. PLoS Comput. Biol. 2021, 17, e1007994.

18. Tria, F.; Loreto, V.; Servedio, V.D.P.; Strogatz, S.H. The dynamics of correlated novelties. Sci. Rep. 2014, 4, 5890.

19. Loreto, V.; Servedio, V.D.P.; Strogatz, S.H.; Tria, F. Dynamics on expanding spaces: Modeling the emergence of novelties. Creat. Universality Lang. 2016, 59–83.

20. Iacopini, I.; Di Bona, G.; Ubaldi, E.; Loreto, V.; Latora, V. Interacting Discovery Processes on Complex Networks. Phys. Rev. Lett. 2020, 125, 248301.

21. Corominas-Murtra, B.; Hanel, R.; Thurner, S. Understanding scaling through history-dependent processes with collapsing sample space. Proc. Natl. Acad. Sci. USA 2015, 112, 5348–5353.

22. Corominas-Murtra, B.; Hanel, R.; Thurner, S. Sample space reducing cascading processes produce the full spectrum of scaling exponents. Sci. Rep. 2017, 7, 11223.

23. Fujii, K.; Berengut, J.C. Power-Law Intensity Distribution of γ-Decay Cascades: Nuclear Structure as a Scale-Free Random Network. Phys. Rev. Lett. 2021, 126, 102502.

24. Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons: New York, NY, USA, 2012.

25. Ash, R.B. Information Theory; Dover Publications: New York, NY, USA, 2012.

26. Pathria, R.K. Statistical Mechanics; Oxford University Press: Oxford, UK, 2002.

27. Pitowsky, I. Typicality and the Role of the Lebesgue Measure in Statistical Mechanics. In Probability in Physics; Ben-Menahem, Y., Hemmo, M., Eds.; Springer: Berlin, Germany, 2012; pp. 41–58.

28. Lebowitz, J.L. Macroscopic Laws, Microscopic Dynamics, Time’s Arrow and Boltzmann’s Entropy. Physica A 1993, 194, 1–27.

29. Ledoux, M. The Concentration of Measure Phenomenon; American Mathematical Society: Providence, RI, USA, 2005.

30. Batterman, R. The Devil in the Details: Asymptotic Reasoning in Explanation, Reduction, and Emergence; Oxford University Press: Oxford, UK, 2001.

31. Frigg, R. Typicality and the Approach to Equilibrium in Boltzmannian Statistical Mechanics. Philos. Sci. 2009, 76, 997–1008.

32. Kač, M. A history-dependent random sequence defined by Ulam. Adv. Appl. Math. 1989, 10, 270–277.

33. Pitman, J. Combinatorial Stochastic Processes; Springer: Berlin, Germany, 2006.

34. Clifford, P.; Stirzaker, D. History-dependent random processes. Proc. R. Soc. Lond. A 2008, 464, 1105–1124.

35. Biró, T.; Néda, Z. Unidirectional random growth with resetting. Phys. A Stat. Mech. Its Appl. 2018, 499, 335–361.

36. Jensen, H.J.; Pazuki, R.H.; Pruessner, G.; Tempesta, P. Statistical mechanics of exploding phase spaces: Ontic open systems. J. Phys. A Math. Theor. 2018, 51, 375002.

37. Korbel, J.; Lindner, S.D.; Hanel, R.; Thurner, S. Thermodynamics of structure-forming systems. Nat. Commun. 2021, 12, 1127.

38. Gray, R.M.; Davisson, L.D. Source coding theorems without the ergodic assumption. IEEE Trans. Inform. Theory 1974, 20, 502–516.

39. Visweswariah, K.; Kulkarni, S.R.; Verdu, S. Universal coding of nonstationary sources. IEEE Trans. Inf. Theory 2000, 46, 1633–1637.

40. Vu, V.Q.; Yu, B.; Kass, R.E. Information in the Non-Stationary Case. Neural Comput. 2009, 21, 688–703.

41. Boashash, B.; Azemi, G.; O’Toole, J. Time-frequency processing of nonstationary signals: Advanced TFD design to aid diagnosis with highlights from medical applications. IEEE Signal Process. Mag. 2013, 30, 108–119.

42. Granero-Belinchón, C.; Roux, S.G.; Garnier, N.B. Information Theory for Non-Stationary Processes with Stationary Increments. Entropy 2019, 21, 1223.

43. Abe, S. Axioms and uniqueness theorem for Tsallis entropy. Phys. Lett. A 2000, 271, 74–79.

44. Hanel, R.; Thurner, S. A comprehensive classification of complex statistical systems and an axiomatic derivation of their entropy and distribution functions. Europhys. Lett. 2011, 93, 20006.

45. Enciso, A.; Tempesta, P. Uniqueness and characterization theorems for generalized entropies. J. Stat. Mech. Theory Exp. 2017, 12, 123101.

46. Tempesta, P. Beyond the Shannon–Khinchin formulation: The composability axiom and the universal-group entropy. Ann. Phys. 2016, 365, 180–197.

47. Tempesta, P. Formal groups and Z-entropies. Proc. R. Soc. Lond. A 2016, 472, 20160143.

48. Thurner, S.; Corominas-Murtra, B.; Hanel, R. The three faces of entropy for complex systems—Information, thermodynamics and the maxent principle. Phys. Rev. E 2017, 96, 032124.

49. Jizba, P.; Korbel, J. When Shannon and Khinchin meet Shore and Johnson: Equivalence of information theory and statistical inference axiomatics. Phys. Rev. E 2020, 101, 042126.

50. Korbel, J.; Jizba, P. Maximum Entropy Principle in Statistical Inference: Case for Non-Shannonian Entropies. Phys. Rev. Lett. 2019, 122, 120601.

51. Jizba, P.; Korbel, J. On the Uniqueness Theorem for Pseudo-Additive Entropies. Entropy 2017, 19, 605.

52. Hanel, R.; Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 2011, 96, 50003.

53. Jensen, H.J.; Tempesta, P. Group entropies: From phase space geometry to entropy functionals via group theory. Entropy 2018, 20, 804.

54. Korbel, J.; Hanel, R.; Thurner, S. Classification of complex systems by their sample-space scaling exponents. New J. Phys. 2018, 20, 093007.

55. Korbel, J.; Hanel, R.; Thurner, S. Information geometry of scaling expansions of non-exponentially growing configuration spaces. Eur. Phys. J. Spec. Top. 2020, 229, 787–807.

56. Hanel, R.; Thurner, S. Generalized (c,d)-entropy and aging random walks. Entropy 2013, 15, 5324–5337.

57. Nicholson, S.B.; Alaghemandi, M.; Green, J.R. Learning the mechanisms of chemical disequilibria. J. Chem. Phys. 2016, 145, 084112.

58. Balogh, S.G.; Palla, G.; Pollner, P.; Czégel, D. Generalized entropies, density of states, and non-extensivity. Sci. Rep. 2020, 10, 15516.

59. Gardiner, C.W. Handbook of Stochastic Methods for Physics, Chemistry and the Natural Sciences; Springer: Berlin, Germany, 1983.

60. Feller, W. An Introduction to Probability Theory and Its Applications; Wiley: New York, NY, USA, 1991; Volumes 1 and 2.

61. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

62. Khinchin, A. Mathematical Foundations of Information Theory; Dover: New York, NY, USA, 1957.

63. Bassetti, B.; Zarei, M.; Cosentino Lagomarsino, M.; Bianconi, G. Statistical mechanics of the “Chinese restaurant process”: Lack of self-averaging, anomalous finite-size effects, and condensation. Phys. Rev. E 2009, 80, 066118.

64. Abramowitz, M.; Stegun, I. Handbook of Mathematical Functions. National Bureau of Standards; Applied Mathematics Series 55; U.S. Government Printing Office: Washington, DC, USA, 1964.

65. Abe, S.; Martínez, S.; Pennini, F.; Plastino, A. Nonextensive thermodynamic relations. Phys. Lett. A 2001, 281, 126–130.

66. Abe, S. Temperature of nonextensive systems: Tsallis entropy as Clausius entropy. Phys. A Stat. Mech. Its Appl. 2006, 368, 430–434.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).