Abstract

The original formulation of the boson sampling problem assumed that few or no photon collisions occur. However, modern experimental realizations rely on setups where collisions are quite common, i.e., the number of photons M injected into the circuit is close to the number of detectors N. Here we present a classical algorithm that simulates a bosonic sampler: it calculates the probability of a given photon distribution at the interferometer outputs for a given distribution at the inputs. The algorithm is most effective when multiple photon collisions occur, and in those cases it outperforms known algorithms.

1. Introduction

Quantum computers are computational devices that operate using phenomena described by quantum mechanics. Therefore, they can carry out operations that are not available to classical computers. There are practical tasks that quantum computers are expected to solve exponentially faster than classical ones. For example, no classical algorithm is known that solves integer factorization, the problem underlying the widely used RSA cryptosystem, in a polynomial number of operations, whereas Shor’s quantum algorithm [1] does so. Due to the technological challenges of manufacturing quantum computers, whether quantum supremacy (the ability of a quantum computational device to solve problems that are intractable for classical computers) can be achieved in practice remains an open question.

Boson sampling [2] is a good candidate for demonstrating quantum supremacy. Basically, boson samplers are linear-optical devices containing a number of non-classical sources of indistinguishable photons, a multichannel interferometer mixing photons of different sources, and photon detectors at the output channels of the interferometer. A more specific set-up to be addressed in this paper deals with the single photon sources. In this case, there are exactly M single photons injected into some or all N inputs of the interferometer. Performing multiple measurements of the photon counts at the outputs, one characterizes experimentally the many-body quantum statistics after the interferometer [3], given an input state and the interferometer matrix.

Boson samplers are not universal quantum computers; that is, they cannot perform arbitrary unitary rotations in the high-dimensional Hilbert space of a quantum system. Nevertheless, simulating a boson sampler on a classical computer requires a number of operations exponential in M. It was shown [4] that the classical complexity of boson sampling matches the complexity of computing the permanent of a complex matrix. This means that the boson sampling problem is #P-hard [5] and no known classical algorithm solves it in polynomial time. The best known exact algorithm for computing the permanent of an $n \times n$ matrix is the Ryser formula [6], which requires $O(n^2 2^n)$ operations. The Clifford–Clifford algorithm [7] is known to solve the boson sampling problem in $O(M 2^M + \mathrm{poly}(M, N))$ operations. This makes large enough bosonic samplers practically intractable for classical computational devices. Although boson sampling does not allow for arbitrary quantum computations, there are still practical problems that can be solved with it: for example, molecular docking [8], calculating the vibronic spectrum of a molecule [9,10], and certain problems in graph theory [11]. Boson sampling is also useful for statistical modeling [12] and machine learning [13,14].

There are several variants of boson sampling that aim at improving the photon generation efficiency and increasing the scale of implementations. For example, scattershot boson sampling uses many parametric down-conversion (PDC) sources to improve the single-photon generation rate. It has been implemented experimentally using a 13-mode integrated photonic chip and six PDC photon sources [15]. Another variant is Gaussian boson sampling [2,16], which uses Gaussian input states instead of single photons. The Gaussian input states are generated using PDC sources, which allows a deterministic preparation of the non-classical input light. In this variant, the relative input photon phases can affect the sampling distribution. Experiments were reported in [17] and [18]. The latter implementation uses PPKTP crystals as PDC sources and employs an active phase-locking mechanism to ensure a coherent superposition.

Any experimental set-up, of course, differs from the idealized model considered in theoretical modeling. Bosonic samplers suffer from two fundamental types of imperfections. First, the parameters of a real device, such as the reflection coefficients of the beam splitters and the phase rotations, are never known exactly. Varying the interferometer parameters too much can change the sampling statistics drastically so that modeling of an ideal device no longer makes much sense. Assume now that we know the parameters of the experimental set-up with great accuracy. Then what makes the device non-ideal is primarily photon losses, that is, not all photons emitted at the inputs are detected in the output channels. These losses happen because of imperfections in photon preparation, absorption inside the interferometer and imperfect detectors. There are different ways of modeling losses, for example by introducing the extra beam splitters [19] or replacing the interferometer matrix by a combination of lossless linear optics transformations and the diagonal matrix that contains transmission coefficients that are less than one [20].

Imperfections in middle-sized systems make them, in general, easier to emulate with classical computers [21]. It was shown [22] that the complexity of the task decreases as the losses in the system increase. When the number of photons that arrive at the outputs is less than $\sqrt{M}$, the boson sampling problem can be solved efficiently using classical computers. On the other hand, if the losses are low, the problem remains hard for classical computers [23].

Photon collisions are a phenomenon that is present in nearly any experimental realization but was disregarded in the proposal by Aaronson and Arkhipov [4]. Originally it was proposed that the number of interferometer channels is roughly the square of the number of photons in the set-up, $N \approx M^2$. In this situation, all or most of the photons arrive at separate channels, that is, few or no photon collisions occur. In the experimental realizations [18], however, $M \sim N$.

Generally, a large number of photon collisions makes the system easier to emulate. For example, one can consider the extreme case that all photons arrive at a single output channel – the probability of such an outcome can be estimated within a polynomial time. The effect of the photon collisions on the computational complexity of a boson sampling has been previously studied [24]. A measure called the Fock state concurrence sum was introduced and it was shown that the minimal algorithm runtime depends on this measure. There is an algorithm for the Gaussian boson sampling that also takes advantage of photon collisions [25].

In this paper, we present an algorithm aimed at the simulation of bosonic samplers with photon collisions. In the $M \sim N$ regime, our scheme outperforms the Clifford–Clifford method. For example, we consider an output state of the sampler with $M = N$ where one-half of the outputs are empty and each output in the other half is populated with 2 photons. Computing the probability of such an outcome requires $O(N^2 3^{N/2})$ operations. The speedup for states that have more collisions is even greater.

2. Problem Specification

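Consider a linear-optics interferometer with N inputs and N outputs which is described by a given unitary matrix U:

$$\hat{a}_i^{\dagger} = \sum_{j=1}^{N} u_{ij}\, \hat{b}_j^{\dagger}, \qquad (1)$$

where $\hat{a}_i^{\dagger}$ and $\hat{b}_j^{\dagger}$ are the creation operators acting on inputs and outputs, respectively. We will denote the input state as

$$|k_1, k_2, \dots, k_N\rangle = \prod_{i=1}^{N} \frac{1}{\sqrt{k_i!}} \left(\hat{a}_i^{\dagger}\right)^{k_i} |0\rangle, \qquad (2)$$

where $k_i$ is the number of photons in the i-th input. The output state will be denoted as

$$|l_1, l_2, \dots, l_N\rangle = \prod_{j=1}^{N} \frac{1}{\sqrt{l_j!}} \left(\hat{b}_j^{\dagger}\right)^{l_j} |0\rangle.$$

It follows from (1) and (2) that a specific input state corresponds to a set of output states that are observed with different probabilities:

$$|k_1, \dots, k_N\rangle = \sum_{l_1 + \dots + l_N = M} c(l_1, \dots, l_N)\, |l_1, \dots, l_N\rangle, \qquad M = \sum_{i=1}^{N} k_i.$$

The product $\prod_i (\hat{a}_i^{\dagger})^{k_i}$ can be written as

$$\prod_{i=1}^{N} \left(\hat{a}_i^{\dagger}\right)^{k_i} = \prod_{i=1}^{N} \left(\sum_{j=1}^{N} u_{ij}\, \hat{b}_j^{\dagger}\right)^{k_i}. \qquad (3)$$

After expanding, this expression will be a sum of terms that have the following form:

$$A(l_1, \dots, l_N) \prod_{j=1}^{N} \left(\hat{b}_j^{\dagger}\right)^{l_j},$$

where $A(l_1, \dots, l_N)$ is a complex number that consists of the elements of U that correspond to the given output state. Therefore, the probability of observing an output state will be

$$p(l_1, \dots, l_N) = |c(l_1, \dots, l_N)|^2 = |A(l_1, \dots, l_N)|^2\, \frac{\prod_j l_j!}{\prod_i k_i!}.$$

The problem is to determine the probabilities of all of the output states. The main difficulty lies in calculating the number $A(l_1, \dots, l_N)$ for the given input and output states. This paper presents an algorithm that solves this problem using the properties of the Fourier transform.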

3. Algorithm Description

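Let us define a function

$$f(t) = \prod_{i=1}^{N} \left(\sum_{j=1}^{N} u_{ij}\, e^{\mathrm{i} n_j t}\right)^{k_i}, \qquad (4)$$

where $\{n_j\}$ is some fixed set of N natural numbers. The choice of $\{n_j\}$ will later be discussed in detail. This function represents the expression (3), where the creation operators $\hat{b}_j^{\dagger}$ are replaced with exponents that oscillate with frequencies $n_j$.

After expanding the expression (4), we get

$$f(t) = \sum_{l_1, \dots, l_N} A(l_1, \dots, l_N)\, \exp\left(\mathrm{i} t \sum_{j=1}^{N} l_j n_j\right),$$

where the sum is computed over all sets $(l_1, \dots, l_N)$ such that $\sum_j l_j = M$.

Therefore, for each possible output state $|l_1, \dots, l_N\rangle$ there is a harmonic in $f(t)$ that has a frequency of $\nu(l) = \sum_j l_j n_j$ and an amplitude of $A(l_1, \dots, l_N)$. The set of numbers $\{n_j\}$ can be chosen in such a way that the harmonics do not overlap, i.e., there are no two output states $l$ and $l'$ with equal frequencies $\nu(l) = \nu(l')$.

If no harmonics overlap, then any of the numbers $A(l_1, \dots, l_N)$ can be found from the Fourier transform of the function $f(t)$. On the other hand, to calculate the probability of a specific state $l$ it is sufficient to choose $\{n_j\}$ in such a way that the frequency $\nu(l)$ is unique in the spectrum, i.e., the frequency of any other state differs from the frequency of the state in consideration: $\nu(l') \neq \nu(l)$ for all $l' \neq l$.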
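The procedure above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the names `sample_f` and `all_probabilities` are hypothetical, the frequencies are taken as powers of M + 1 (the choice discussed in Section 3.1), and the conversion from amplitude to probability assumes the standard Fock-state normalization $\prod_j l_j! / \prod_i k_i!$.

```python
import itertools
import math

import numpy as np


def sample_f(U, k, n, K):
    """Sample f(t) = prod_i (sum_j U[i, j] * exp(1j * n[j] * t)) ** k[i] on K points of [0, 2*pi)."""
    t = 2 * np.pi * np.arange(K) / K
    phases = np.exp(1j * np.outer(n, t))      # phases[j, m] = exp(i * n_j * t_m)
    inner = U @ phases                        # inner[i, m] = sum_j U[i, j] * phases[j, m]
    return np.prod(inner ** np.asarray(k)[:, None], axis=0)


def all_probabilities(U, k):
    """Return {output state: probability} for input occupation numbers k."""
    N, M = len(k), sum(k)
    n = [(M + 1) ** j for j in range(N)]      # assumed choice: radix-(M+1) frequencies
    K = (M + 1) ** N                          # exceeds every frequency, so no aliasing
    amplitudes = np.fft.fft(sample_f(U, k, n, K)) / K
    norm_in = math.prod(math.factorial(ki) for ki in k)
    probs = {}
    for l in itertools.product(range(M + 1), repeat=N):
        if sum(l) != M:
            continue
        nu = sum(lj * nj for lj, nj in zip(l, n))   # frequency of this output state
        norm_out = math.prod(math.factorial(lj) for lj in l)
        probs[l] = abs(amplitudes[nu]) ** 2 * norm_out / norm_in
    return probs


# Hong-Ou-Mandel check: a 50:50 beam splitter with one photon in each input.
U = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
hom = all_probabilities(U, (1, 1))
print(hom)
```

For the beam splitter the two photons always leave through the same output, so the state with one photon in each output should come out with a probability that vanishes up to rounding.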

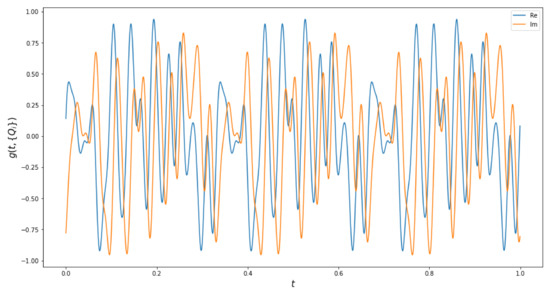

An example of $f(t)$ with non-overlapping harmonics and its spectrum can be seen in Figure 1 (the choice of $\{n_j\}$ used here is described in Section 3.1).

Figure 1.

An example of the function (the top picture) and its spectrum obtained as squared modulus of the Fourier transform of (the bottom picture). A system with is used, the input state is . Each peak in the spectrum corresponds to one of ten possible output states.

3.1. The First Method of Choosing $n_j$

Let us consider the methods of choosing $\{n_j\}$ that satisfy the necessary conditions on the spectrum. The first one is the following: let M be the total number of photons at the inputs, i.e., $M = \sum_i k_i$ for the input state $|k_1, \dots, k_N\rangle$. We choose $n_j = (M+1)^{j-1}$, or $n_1 = 1,\ n_2 = M+1,\ n_3 = (M+1)^2, \dots$. Then for any output state $|l_1, \dots, l_N\rangle$ the sum

$$\nu(l) = \sum_{j=1}^{N} l_j n_j = \sum_{j=1}^{N} l_j (M+1)^{j-1}$$

will be a number that has a representation in a positional numeral system with a radix $M+1$ (since $l_j \le M$). From the uniqueness of representation of numbers in positional numeral systems, it follows that every sum $\nu(l)$ (some number in a positional numeral system with a radix $M+1$) will correspond to exactly one set of numbers $(l_1, \dots, l_N)$ (its representation in this numeral system, with $l_j$ being its digits).

Using this method of choosing $\{n_j\}$ guarantees that the probability of any output state can be calculated from the spectrum of $f(t)$, since the frequencies $\nu(l)$ and $\nu(l')$ are different for any two output states $l \neq l'$.
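The uniqueness argument can be checked directly for a small system. The sketch below assumes the frequencies are the powers $(M+1)^{j-1}$; the helper names are illustrative only. It enumerates all output states, confirms that their frequencies are pairwise distinct, and decodes each frequency back into occupation numbers by reading its digits in radix M + 1.

```python
import itertools


def first_method_frequencies(N, M):
    """Assumed first-method choice: n_j = (M + 1) ** (j - 1) for j = 1..N."""
    return [(M + 1) ** j for j in range(N)]


def output_states(N, M):
    """All occupation tuples (l_1, ..., l_N) with l_1 + ... + l_N = M."""
    return [l for l in itertools.product(range(M + 1), repeat=N) if sum(l) == M]


def decode(nu, N, M):
    """Recover the occupation numbers as the radix-(M+1) digits of nu."""
    digits = []
    for _ in range(N):
        nu, d = divmod(nu, M + 1)
        digits.append(d)
    return tuple(digits)


N, M = 3, 3
n = first_method_frequencies(N, M)
states = output_states(N, M)
freqs = [sum(lj * nj for lj, nj in zip(l, n)) for l in states]
print(len(states), len(set(freqs)))
```

Both printed numbers should agree, which is the statement that no two harmonics overlap.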

3.2. The Second Method of Choosing $n_j$

Another method of choosing $\{n_j\}$ is useful when the goal is to compute the probability of one specific output state $|l_1, \dots, l_N\rangle$ when the input state is given. This method does not guarantee that the frequencies will be different for any two output states, but it guarantees that the frequency of the state in consideration (the target frequency) will be unique in the spectrum. Note that in this case $n_j = n_j(l_1, \dots, l_N)$, i.e., the choice of $\{n_j\}$ depends on the output state.

This method of choosing $\{n_j\}$ can be described in the following way:

$$n_j = \begin{cases} 0, & l_j = 0, \\ \prod_{i<j} (l_i + 1), & l_j \ge 1, \end{cases}$$

where the empty product (for $j = 1$) is equal to 1.

Therefore if all of the outputs in the state $|l_1, \dots, l_N\rangle$ contain photons, then $n_1 = 1$, $n_2 = l_1 + 1$, $n_3 = (l_1 + 1)(l_2 + 1)$, and so on: $n_{j+1}$ is $(l_j + 1)$ times greater than $n_j$.

Let us show that this method actually leads to the target frequency being unique in the spectrum. Let $\{n_j\}$ be the frequencies calculated using the method described above. We need to prove that for any output state $l' \neq l$ it is true that $\nu(l') \neq \nu(l)$, i.e.,

$$\sum_{j=1}^{N} l'_j n_j \neq \sum_{j=1}^{N} l_j n_j.$$

Firstly, let us suppose that some of the outputs in the state $l$ contain 0 photons. Let $j_1 < j_2 < \dots < j_K$ be the indices of the outputs that contain at least one photon: $l_{j_s} \ge 1$. Then the condition becomes

$$\sum_{s=1}^{K} l'_{j_s} n_{j_s} \neq \sum_{s=1}^{K} l_{j_s} n_{j_s},$$

since all the terms corresponding to empty outputs are zero in both sums ($n_i = 0$ if the i-th output contains 0 photons).

Note that we can view it as a “reduced” system with K outputs, in which the output state $l$ contains at least one photon in every output. However, this system has one difference. Previously we considered the possible output states to be all the states that satisfy $l'_j \ge 0$ and $\sum_j l'_j = M$. Now, in this “reduced” system we must consider all the output states such that $l'_{j_s} \ge 0$ and $\sum_s l'_{j_s} \le M$. This happens because the output states $l'$ can have a non-zero number of photons in the outputs that were empty in $l$; such outputs have no effect on the frequency and they remain outside the “reduced” system.

Therefore, instead of a system where some outputs of $l$ can be empty ($l_j \ge 0$) but $\sum_j l'_j = \sum_j l_j$, we can consider the system where $l_j \ge 1$ but $\sum_j l'_j \le \sum_j l_j$. In this system $n_1 = 1$ and $n_{j+1} = n_j (l_j + 1)$.

To prove the correctness of the algorithm, we must prove the following statement:

Theorem 1.

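Let N be some natural number. Let $l_1, \dots, l_N$ and $l'_1, \dots, l'_N$ be natural numbers (including zero) that satisfy the following conditions:

(1) $l_i \ge 1$ for all $i$;

(2) $\sum_{i=1}^{N} l'_i \le \sum_{i=1}^{N} l_i$;

(3) $\sum_{i=1}^{N} l'_i n_i = \sum_{i=1}^{N} l_i n_i$, where $n_{i+1} = n_i (l_i + 1)$ (and $n_1 = 1$).

Then $l'_i = l_i$ for all $i$.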

The proof of this statement can be found in Appendix A.
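For small systems the statement can also be checked by brute force. The sketch below assumes the second-method frequencies are $n_j = \prod_{i<j}(l_i + 1)$ for occupied outputs and $n_j = 0$ for empty ones; the function names are illustrative. It is an exhaustive search over all output states with the same photon number, not a substitute for the proof.

```python
import itertools


def second_method_frequencies(l):
    """Assumed second-method choice: 0 for empty outputs, running product of (l_i + 1) otherwise."""
    n, running = [], 1
    for lj in l:
        n.append(running if lj > 0 else 0)
        running *= lj + 1
    return n


def target_is_unique(l):
    """True if no other output state with the same photon number shares the target frequency."""
    N, M = len(l), sum(l)
    n = second_method_frequencies(l)
    target = sum(lj * nj for lj, nj in zip(l, n))
    for other in itertools.product(range(M + 1), repeat=N):
        if sum(other) != M or other == tuple(l):
            continue
        if sum(oj * nj for oj, nj in zip(other, n)) == target:
            return False
    return True


example = (2, 0, 1, 3)
print(second_method_frequencies(example))  # [1, 0, 3, 6]
print(all(target_is_unique(l)
          for l in itertools.product(range(5), repeat=3) if sum(l) == 4))
```

For the example state the target frequency is 2·1 + 1·3 + 3·6 = 23, which equals (2+1)(0+1)(1+1)(3+1) − 1.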

4. Parameters of the Fourier Transform

To calculate the Fourier transform of the function $f(t)$ we will use a fast Fourier transform (FFT). Firstly, we will define its parameters: the sampling interval $\Delta t$ (or the sampling frequency $\nu_s = 2\pi/\Delta t$) and the number of data points K. The function will be calculated at the points $t_m = m \Delta t$, $m = 0, \dots, K-1$. Since all the frequencies in the spectrum of $f(t)$ are natural numbers, they can be discerned with a frequency resolution of 1. The function therefore will be calculated at points within the interval $[0, 2\pi)$, which contains at least one period of each harmonic.

The sampling frequency is often chosen according to the Nyquist-Shannon theorem: if the Nyquist frequency $\nu_s/2$ is greater than the highest frequency in the spectrum $\nu_{\max}$, then the function can be reconstructed from the spectrum and no aliasing occurs. Therefore, one way of choosing the sampling frequency is $\nu_s = 2\nu_{\max} + 1$. It can be used with both methods of choosing $\{n_j\}$.

Since the function is calculated at points within the interval $[0, 2\pi)$, the number of data points K is equal to the sampling frequency $\nu_s$. Optimization of the algorithm requires lowering the sampling frequency as much as possible.

If the goal is to calculate the probability of one specific state and the second method of choosing $\{n_j\}$ is used, then the sampling frequency can be chosen to be lower than $2\nu_{\max}$. This will lead to aliasing: a peak with frequency $\nu$ will be aliased by the peaks with frequencies $\nu + m\nu_s$, $m \in \mathbb{Z}$. To correctly calculate the probability of the output state $l$ from the spectrum computed this way, the spectrum must not contain frequencies $\nu(l')$, $l' \neq l$, that satisfy $\nu(l') \equiv \nu(l) \pmod{\nu_s}$. Note that it will not be possible to reconstruct the function $f(t)$ from such a spectrum.

We will show that the sampling frequency for calculating the probability of the output state $l$ using the second method of choosing $\{n_j\}$ can be chosen to be greater by one than the target frequency $\nu(l) = \sum_j l_j n_j$:

$$\nu_s = \nu(l) + 1.$$

To prove this statement, we must show that the spectrum of $f(t)$ will not contain frequencies $\nu(l')$, $l' \neq l$, that satisfy $\nu(l') \equiv \nu(l) \pmod{\nu(l) + 1}$. This is shown by a theorem that is analogous to Theorem 1 yet has a weaker condition: the equation in condition (3) is taken modulo $\nu(l) + 1$.

Theorem 2.

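Let N be some natural number. Let $l_1, \dots, l_N$ and $l'_1, \dots, l'_N$ be natural numbers (including zero) that satisfy the following conditions:

(1) $l_i \ge 1$ for all $i$;

(2) $\sum_{i=1}^{N} l'_i \le \sum_{i=1}^{N} l_i$;

(3) $\sum_{i=1}^{N} l'_i n_i \equiv \sum_{i=1}^{N} l_i n_i \pmod{\sum_{i=1}^{N} l_i n_i + 1}$, where $n_{i+1} = n_i (l_i + 1)$ (and $n_1 = 1$).

Then $l'_i = l_i$ for all $i$.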

The proof of this statement can be found in Appendix A.
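A single-state computation with the reduced sampling frequency might look as follows. This is a sketch under stated assumptions rather than the authors' code: `state_probability` is a hypothetical name, the frequencies follow the second method with $n_j = 0$ for empty outputs, the number of samples is the target frequency plus one, and the probability uses the normalization $\prod_j l_j! / \prod_i k_i!$. The random unitary is generated by a QR decomposition purely for the sanity check.

```python
import itertools
import math

import numpy as np


def state_probability(U, k, l):
    """Probability of output occupation l for input occupation k (requires sum(k) == sum(l))."""
    n, running = [], 1
    for lj in l:
        n.append(running if lj > 0 else 0)    # empty outputs get frequency 0
        running *= lj + 1
    nu = running - 1                          # target frequency, equals sum_j l_j * n_j
    K = nu + 1                                # sampling frequency one above the target
    t = 2 * np.pi * np.arange(K) / K
    inner = U @ np.exp(1j * np.outer(n, t))   # inner[i, m] = sum_j U[i, j] * exp(i n_j t_m)
    f = np.prod(inner ** np.asarray(k)[:, None], axis=0)
    amplitude = np.fft.fft(f)[nu] / K         # aliased bin that holds only the target harmonic
    norm = math.prod(map(math.factorial, l)) / math.prod(map(math.factorial, k))
    return abs(amplitude) ** 2 * norm


# Sanity check on a random 3x3 unitary: the probabilities of all outcomes sum to one.
rng = np.random.default_rng(0)
Z = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
U, _ = np.linalg.qr(Z)
k = (1, 1, 1)
states = [l for l in itertools.product(range(4), repeat=3) if sum(l) == 3]
total = sum(state_probability(U, k, l) for l in states)
print(len(states), total)
```

Each call uses its own set of frequencies and its own sample count, so states with more collisions need fewer samples: the state (3, 0, 0) requires 4 points, while (1, 1, 1) requires 8.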

5. Complexity of the Algorithm

Let us consider the computational complexity of this algorithm. The complexity of a fast Fourier transform of a data array of K points is $O(K \log K)$. The total complexity of the algorithm consists of the complexity of calculating $f(t)$ at K points and the complexity of a fast Fourier transform.

Computing $f(t)$ at each point is done in $O(N^2)$ operations: the expression for $f(t)$ consists of at most N factors, each of which can be computed in N additions, N multiplications and N exponentiations. If some of the inputs are empty, there will be fewer factors in the expression, and the resulting complexity will be lower.

When the first method of choosing $\{n_j\}$ is used, the number of data points K is proportional to the highest frequency in the spectrum of $f(t)$, since the sampling frequency is chosen using the Nyquist-Shannon theorem. The frequencies corresponding to the output states, in this case, are equal to $\sum_j l_j (M+1)^{j-1}$. The highest frequency then is $M (M+1)^{N-1}$ and corresponds to the state where the last output contains all the photons. The total complexity of calculating all the probabilities will then be

$$O\left(M (M+1)^{N-1} \left(N^2 + N \log (M+1)\right)\right).$$

When the second method of choosing $\{n_j\}$ is used, the number of data points K depends on the frequency of the output state in consideration. This frequency is highest when photons are spread over outputs evenly. For the system with $M = mN$, this corresponds to the state where each output contains m photons. In this case, the highest frequency is equal to

$$\sum_{j=1}^{N} m (m+1)^{j-1} = (m+1)^N - 1.$$

Therefore, the sampling frequency and the required number of data points will be $(m+1)^N$. The complexity of the algorithm in the worst case will be

$$O\left((m+1)^N \left(N^2 + N \log (m+1)\right)\right).$$

In most states, however, photons will not be spread evenly between outputs, and outputs with a high number of photons will lower the sampling frequency and the complexity for calculating the probability of the state. This means that the more photon collisions are in a state, the better this algorithm performs. Let us consider several specific cases.

1. $M = N$, the goal is to compute the probability of the output state that contains 2 photons in one half of the outputs and 0 photons in the other half. The frequency corresponding to such a state will be

$$\nu = 3^{N/2} - 1,$$

and the complexity of the algorithm will be equal to

$$O\left(N^2\, 3^{N/2}\right).$$

For comparison, the complexity of the Clifford–Clifford algorithm (which is $O(M 2^M + \mathrm{poly}(M, N))$) in this case will be equal to $O(N 2^N)$.

2. $M = mN$, the goal is to compute the probability of the output state that contains $2m$ photons in one half of the outputs and 0 photons in the other half. The frequency corresponding to such a state will be $(2m+1)^{N/2} - 1$, and the algorithm complexity will be

$$O\left((2m+1)^{N/2} \left(N^2 + N \log (2m+1)\right)\right).$$

Again, the complexity of the Clifford–Clifford algorithm, in this case, will be equal to $O(mN\, 2^{mN})$.
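To get a feeling for the first case, one can compare the two leading-order operation counts numerically. The sketch below assumes the counts $N^2 3^{N/2}$ for the Fourier-based method and $N 2^N$ for the Clifford–Clifford algorithm, and ignores the constant factors hidden in the big-O notation, so the crossover point it reports is only indicative.

```python
def fourier_ops(N):
    """Assumed leading-order count N^2 * 3^(N/2) for the state with 2 photons in half of the outputs."""
    return N ** 2 * 3 ** (N / 2)


def clifford_ops(N):
    """Assumed leading-order count N * 2^N for the Clifford-Clifford algorithm with M = N."""
    return N * 2 ** N


# Ratio below 1 means the Fourier-based count is smaller.
for N in (10, 20, 22, 40, 60):
    print(N, fourier_ops(N) / clifford_ops(N))
```

The ratio equals $N (\sqrt{3}/2)^N$, so it first grows with N and then decays exponentially; with these constant-free counts it drops below one between N = 20 and N = 22.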

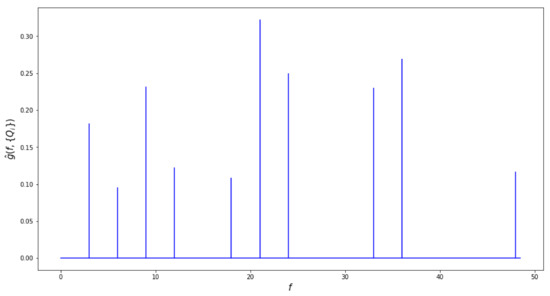

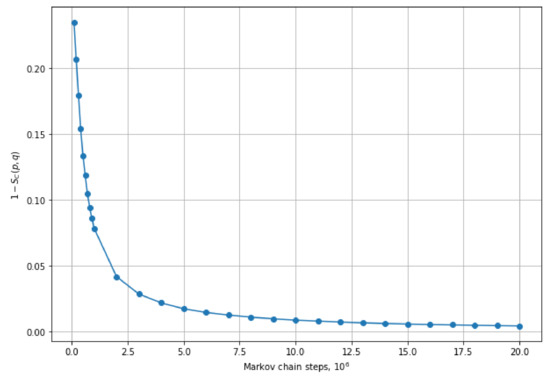

Weighted Average Complexity

We can measure the weighted average computational complexity of the algorithm described above by computing $\sum_i p_i C_i$, where i enumerates the measurement outcomes (output states) of the bosonic sampler, $p_i$ is the probability of observing the i-th state, $C_i$ is the complexity of calculating $p_i$ (assuming the second method of choosing $\{n_j\}$ is used), and the sum is calculated over all possible outcomes.

We have computed this weighted average complexity for the systems with varying N. We set , and as the input state. The interferometer matrices for those systems were randomly generated unitary matrices. Figure 2 shows that the weighted average complexity of the algorithm is significantly lower than the maximum complexity of the algorithm and their ratio decreases as N increases.

Figure 2.

Decrease of the ratio of weighted average complexity to maximum complexity with the increase of N.

6. The Metropolis–Hastings Algorithm

For systems with large N it might be computationally intractable to calculate the exact probability distribution of output states. The number of the possible output states scales with N and M as

$$\binom{N + M - 1}{M}.$$

Sampling from a probability distribution from which direct sampling is difficult can be done using the Metropolis–Hastings algorithm, which uses a Markov process. It allows us to generate a Markov chain in which points appear with frequencies that converge to their probabilities. In our case, the points will be represented by the output states, i.e., sets of numbers $(l_1, \dots, l_N)$ such that $\sum_j l_j = M$.

We will require a transition function that will generate a candidate state from the last state in the chain. When the points are represented by real numbers, a candidate state can be chosen from a Gaussian distribution centered around the last point. In our case, however, the transition function will be more complex.

The transition function (Algorithm 1) must allow the chain to arrive in each of the possible states. It will be convenient to define it in the following way:

| Algorithm 1: Transition function |

|

For the condition of detailed balance to hold, we will require a function $g(l' \mid l)$ which is equal to the probability of $l'$ being the transition function output when the last state in the chain is $l$. It is trivially constructed from the transition function.

Let $u = p(l')/p(l)$ be the ratio of the exact probabilities of the states $l'$ and $l$. The condition of detailed balance will hold if the Markov chain goes from the state $l$ to the state $l'$ with the probability

$$\alpha = \min\left(1,\; u\, \frac{g(l \mid l')}{g(l' \mid l)}\right).$$

Given the Markov chain, we can then calculate the approximate probability of a state by dividing the number of times this state occurs in the chain by the total number of steps of the chain.
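A compact sketch of such a chain is given below. The transition used here (move one photon from a randomly chosen occupied output to a different, uniformly chosen output) is a hypothetical stand-in and is not claimed to be the paper's Algorithm 1; `propose`, `proposal_prob`, `metropolis_hastings` and the toy target `toy_prob` are likewise illustrative names. In an actual simulation `prob` would be replaced by the Fourier-based probability of an output state.

```python
import random
from collections import Counter


def propose(state, rng):
    """Hypothetical transition: move one photon from a random occupied output to another output."""
    N = len(state)
    occupied = [j for j, lj in enumerate(state) if lj > 0]
    src = rng.choice(occupied)
    dst = rng.choice([j for j in range(N) if j != src])
    new = list(state)
    new[src] -= 1
    new[dst] += 1
    return tuple(new)


def proposal_prob(new, old):
    """g(new | old) for the move above; it depends only on how many outputs of old are occupied."""
    occupied = sum(1 for lj in old if lj > 0)
    return 1.0 / (occupied * (len(old) - 1))


def metropolis_hastings(prob, start, steps, seed=0):
    """Run the chain and return the empirical frequency of every visited state."""
    rng = random.Random(seed)
    state, counts = start, Counter()
    for _ in range(steps):
        cand = propose(state, rng)
        u = prob(cand) / prob(state)          # ratio of target probabilities
        accept = min(1.0, u * proposal_prob(state, cand) / proposal_prob(cand, state))
        if rng.random() < accept:
            state = cand
        counts[state] += 1
    return {s: c / steps for s, c in counts.items()}


def toy_prob(state):
    """Toy unnormalized target on states with N = 3, M = 2 (weights sum to 10)."""
    return 1.0 + state[0]


freqs = metropolis_hastings(toy_prob, (2, 0, 0), steps=100_000)
print(sorted(freqs.items()))
```

Only ratios of probabilities enter the acceptance rule, so the target does not need to be normalized. Because the number of occupied outputs changes between states, the proposal is not symmetric and the factor $g(l \mid l')/g(l' \mid l)$ cannot be dropped.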

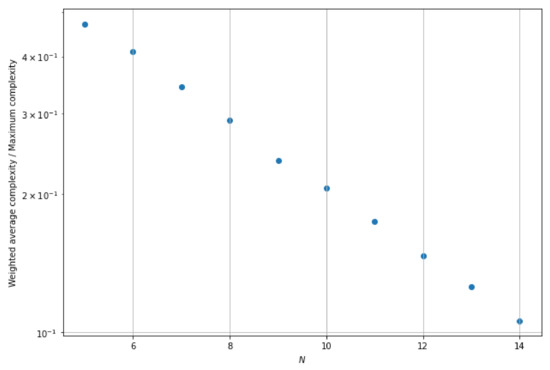

Results

To demonstrate that the frequencies with which states appear in the Markov chain converge to the exact probability distribution, we have tested it on a system with a random unitary matrix as the interferometer matrix. To calculate the distance between the exact and the approximate distribution we used a measure based on the cosine similarity:

$$D(p, q) = 1 - \frac{\sum_i p_i q_i}{\sqrt{\sum_i p_i^2}\, \sqrt{\sum_i q_i^2}},$$

where p and q are some probability distributions. In particular, the value of $D(p, q)$ is 0 when p and q are equal.

Figure 3 shows that $D$ decreases as the Markov chain makes more steps.
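For reference, a distance of this kind can be computed as below. The sketch assumes the measure is one minus the cosine similarity of the two probability vectors, which is consistent with the value 0 for equal distributions; `cosine_distance` is an illustrative name.

```python
import numpy as np


def cosine_distance(p, q):
    """One minus the cosine similarity of two probability vectors of equal length."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return 1.0 - np.dot(p, q) / (np.linalg.norm(p) * np.linalg.norm(q))


exact = [0.3, 0.2, 0.2, 0.1, 0.1, 0.1]
estimate = [0.28, 0.21, 0.2, 0.11, 0.1, 0.1]
print(cosine_distance(exact, exact), cosine_distance(exact, estimate))
```

The measure is insensitive to the overall scale of either vector, so unnormalized visit counts from the chain can be passed in directly.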

Figure 3.

Convergence of the approximate probability distribution to the exact probability distribution.

7. Conclusions

We have presented a new algorithm for calculating the probabilities of the output states in the boson sampling problem. We have shown the correctness of the algorithm and calculated its computational complexity. This algorithm is simple in implementation as it relies heavily on the Fourier transform, which has numerous well-documented implementations.

The performance of this algorithm is better than that of the other algorithms in cases where there are many photon collisions. An example we give is an output state where all the photons are spread equally across one-half of the outputs, with the other half of the outputs empty. In this case (for $M = N$) the algorithm requires $O(N^2 3^{N/2})$ operations, while the Clifford–Clifford algorithm requires $O(N 2^N)$ operations.

We have also proposed a method to approximately calculate the probability distribution in the boson sampling problem. It can be used when the system size is too large and calculating the exact probability distribution is intractable. Our results show that this algorithm indeed produces a probability distribution that converges to the exact probability distribution.

We plan to study further the application of the Metropolis–Hastings algorithm to approximate the boson sampling problem. When losses are modeled in the system, the probability distribution of the output states becomes concentrated. For example, when losses are high, the most probable states are those with many lost photons. When the losses are low, the probability is concentrated in the area where no or a few photons are lost. This property makes the Metropolis–Hastings algorithm especially effective in solving this problem.

Author Contributions

Conceptualization, A.N.R.; Software, M.U.; Formal analysis, M.U.; Investigation, M.U. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was supported by the Russian Roadmap for the Development of Quantum Technologies, contract No. 868-1.3-15/15-2021.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Theorem A1.

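Let N be some natural number. Let $l_1, \dots, l_N$ and $l'_1, \dots, l'_N$ be natural numbers (including zero) that satisfy the following conditions:

(1) $l_i \ge 1$ for all $i$;

(2) $\sum_{i=1}^{N} l'_i \le \sum_{i=1}^{N} l_i$;

(3) $\sum_{i=1}^{N} l'_i n_i = \sum_{i=1}^{N} l_i n_i$, where $n_{i+1} = n_i (l_i + 1)$ (and $n_1 = 1$).

Then $l'_i = l_i$ for all $i$.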

Proof.

We will prove this theorem by induction on N. The base case will be . Both the base case and the induction step will be proven by contradiction.

1. Base case.

Let us assume the opposite: and/or .

The condition (3) will take the form

We expand the brackets:

According to condition (2), . Therefore,

The condition (1) states that . Therefore, .

On the other hand, let us write condition (3) modulo ; the terms containing will be zero:

, since otherwise it follows from condition (3) that which leads to a contradiction (both and ). Therefore, since and , we have . However, previously we have shown that , which leads to a contradiction. This proves the base case.

2. Let us prove some general statements that will help us prove the induction step. Let us assume that the statement of the theorem is true for $N - 1$. Then the following lemma holds for numbers $l_i$, $l'_i$ that satisfy the conditions of the theorem for N:

Lemma A1.

the following is true: , where and are natural numbers and .

Proof.

We will prove this lemma by induction on m. First we prove the base case . Let us write down the expression from condition (3) of the theorem modulo (all terms that contain will turn to zero and only the first ones from each side will remain):

Then

where is an integer. Since , must be natural. Since , the equation is true.

Now let us assume that the statement of this lemma holds for all i such that . Let us write down the expression from condition (3) of the theorem modulo —this will turn to zero all the terms, except for first m on both sides (note that has a form of ):

Then

where is an integer. Using and dividing by , we get

Applying sequentially and dividing by for all i from 2 to we get

where by the assumption of the induction step and since .

Let us specifically consider the case . The expression (A3) will take the following form:

Using condition (3) of the theorem we get

which proves the lemma.

We now go back to the theorem. Suppose the theorem is false for N but true for . Lemma 1 has some corollaries that are used in proving the induction step. Firstly, suppose but . Then the condition (3) will take the form of

which can be divided by to get

Moreover, the condition (2) can be written as

It means that numbers satisfy the conditions of the theorem for , which is assumed to be true. Therefore, which is a contradiction. As a result, ; using (A2) we get .

Secondly, let us write down condition (2) of the theorem with the equation (which is given by Lemma 1):

After expanding it we get

Since ,

However, the condition (1) states that , Lemma 1 states that , and we have shown above that . Therefore and , which means that . We get a contradiction which proves the theorem. □

Theorem A2.

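Let N be some natural number. Let $l_1, \dots, l_N$ and $l'_1, \dots, l'_N$ be natural numbers (including zero) that satisfy the following conditions:

(1) $l_i \ge 1$ for all $i$;

(2) $\sum_{i=1}^{N} l'_i \le \sum_{i=1}^{N} l_i$;

(3) $\sum_{i=1}^{N} l'_i n_i \equiv \sum_{i=1}^{N} l_i n_i \pmod{\sum_{i=1}^{N} l_i n_i + 1}$, where $n_{i+1} = n_i (l_i + 1)$ (and $n_1 = 1$).

Then $l'_i = l_i$ for all $i$.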

Proof.

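We will prove this theorem by contradiction. Suppose there are numbers $l_i$, $l'_i$ that satisfy the conditions of the theorem, but $l'_i \neq l_i$ for some $i$. Let us write down condition (3) of the theorem in the following way:

$$\sum_{i=1}^{N} l'_i n_i = \sum_{i=1}^{N} l_i n_i + q \left(\sum_{i=1}^{N} l_i n_i + 1\right),$$

where $q \in \mathbb{Z}$ and $q \ge 0$ since $\sum_i l'_i n_i \ge 0$. If $q = 0$, then Theorem 1 can be applied and $l'_i = l_i$ for all $i$, which is a contradiction. From now on we will consider the case $q \ge 1$. We can rearrange the expression which is multiplied by q:

$$\sum_{i=1}^{N} l_i n_i + 1 = \sum_{i=1}^{N} \left(n_{i+1} - n_i\right) + n_1 = n_{N+1}, \qquad n_{N+1} = n_N (l_N + 1).$$

Now condition (3) of the theorem can be rewritten as

$$\sum_{i=1}^{N} l'_i n_i = \sum_{i=1}^{N} l_i n_i + q\, n_{N+1}.$$

Let us define $l_{N+1} = q$, $l'_{N+1} = 0$. Then

$$\sum_{i=1}^{N+1} l'_i n_i = \sum_{i=1}^{N+1} l_i n_i. \qquad (A4)$$

The numbers $l_1, \dots, l_{N+1}$ and $l'_1, \dots, l'_{N+1}$ satisfy the conditions of Theorem 1. Condition (1) is satisfied because $l_i \ge 1$ for $i \le N$ and $l_{N+1} = q \ge 1$. Since

$$\sum_{i=1}^{N+1} l'_i = \sum_{i=1}^{N} l'_i \le \sum_{i=1}^{N} l_i < \sum_{i=1}^{N+1} l_i,$$

condition (2) of Theorem 1 is also satisfied. Condition (3) is identical to the expression (A4). Therefore, $l'_i = l_i$ for all $i \le N + 1$; but

$$l'_{N+1} = 0 \neq q = l_{N+1}.$$

This is a contradiction which proves the theorem. □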

References

1. Shor, P.W. Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer. SIAM J. Comput. 1997, 26, 1484–1509.

2. Lund, A.; Laing, A.; Rahimi-Keshari, S.; Rudolph, T.; O’Brien, J.; Ralph, T. Boson Sampling from a Gaussian State. Phys. Rev. Lett. 2014, 113, 100502.

3. Gard, B.T.; Motes, K.R.; Olson, J.P.; Rohde, P.P.; Dowling, J.P. An Introduction to Boson-Sampling. In From Atomic to Mesoscale; World Scientific: Singapore, 2015; pp. 167–192.

4. Aaronson, S.; Arkhipov, A. The Computational Complexity of Linear Optics. arXiv 2010, arXiv:1011.3245.

5. Aaronson, S. A linear-optical proof that the permanent is #P-hard. Proc. R. Soc. A Math. Phys. Eng. Sci. 2011, 467, 3393–3405.

6. Ryser, H.J. Combinatorial Mathematics; American Mathematical Society: Providence, RI, USA, 1963; Volume 14.

7. Clifford, P.; Clifford, R. The Classical Complexity of Boson Sampling. In Proceedings of the 2018 Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), New Orleans, LA, USA, 7–10 January 2018.

8. Banchi, L.; Fingerhuth, M.; Babej, T.; Ing, C.; Arrazola, J.M. Molecular docking with Gaussian Boson Sampling. Sci. Adv. 2020, 6, eaax1950.

9. Huh, J.; Guerreschi, G.G.; Peropadre, B.; McClean, J.R.; Aspuru-Guzik, A. Boson sampling for molecular vibronic spectra. Nat. Photonics 2015, 9, 615–620.

10. Huh, J.; Yung, M.H. Vibronic Boson Sampling: Generalized Gaussian Boson Sampling for Molecular Vibronic Spectra at Finite Temperature. Sci. Rep. 2017, 7, 7462.

11. Brádler, K.; Dallaire-Demers, P.L.; Rebentrost, P.; Su, D.; Weedbrook, C. Gaussian boson sampling for perfect matchings of arbitrary graphs. Phys. Rev. A 2018, 98, 032310.

12. Jahangiri, S.; Arrazola, J.M.; Quesada, N.; Killoran, N. Point processes with Gaussian boson sampling. Phys. Rev. E 2020, 101, 022134.

13. Schuld, M.; Brádler, K.; Israel, R.; Su, D.; Gupt, B. Measuring the similarity of graphs with a Gaussian boson sampler. Phys. Rev. A 2020, 101, 032314.

14. Banchi, L.; Quesada, N.; Arrazola, J.M. Training Gaussian boson sampling distributions. Phys. Rev. A 2020, 102, 012417.

15. Bentivegna, M.; Spagnolo, N.; Vitelli, C.; Flamini, F.; Viggianiello, N.; Latmiral, L.; Mataloni, P.; Brod, D.J.; Galvão, E.F.; Crespi, A.; et al. Experimental scattershot boson sampling. Sci. Adv. 2015, 1, e1400255.

16. Hamilton, C.S.; Kruse, R.; Sansoni, L.; Barkhofen, S.; Silberhorn, C.; Jex, I. Gaussian Boson Sampling. Phys. Rev. Lett. 2017, 119, 170501.

17. Zhong, H.S.; Peng, L.C.; Li, Y.; Hu, Y.; Li, W.; Qin, J.; Wu, D.; Zhang, W.; Li, H.; Zhang, L.; et al. Experimental Gaussian Boson sampling. Sci. Bull. 2019, 64, 511–515.

18. Zhong, H.S.; Wang, H.; Deng, Y.H.; Chen, M.C.; Peng, L.C.; Luo, Y.H.; Qin, J.; Wu, D.; Ding, X.; Hu, Y.; et al. Quantum computational advantage using photons. Science 2020, 370, 1460–1463.

19. Oh, C.; Noh, K.; Fefferman, B.; Jiang, L. Classical simulation of lossy boson sampling using matrix product operators. Phys. Rev. A 2021, 104, 022407.

20. García-Patrón, R.; Renema, J.J.; Shchesnovich, V. Simulating boson sampling in lossy architectures. Quantum 2019, 3, 169.

21. Popova, A.S.; Rubtsov, A.N. Cracking the Quantum Advantage threshold for Gaussian Boson Sampling. arXiv 2021, arXiv:2106.01445.

22. Qi, H.; Brod, D.J.; Quesada, N.; García-Patrón, R. Regimes of Classical Simulability for Noisy Gaussian Boson Sampling. Phys. Rev. Lett. 2020, 124, 100502.

23. Aaronson, S.; Brod, D.J. BosonSampling with lost photons. Phys. Rev. A 2016, 93, 012335.

24. Chin, S.; Huh, J. Generalized concurrence in boson sampling. Sci. Rep. 2018, 8, 6101.

25. Bulmer, J.F.F.; Bell, B.A.; Chadwick, R.S.; Jones, A.E.; Moise, D.; Rigazzi, A.; Thorbecke, J.; Haus, U.U.; Vaerenbergh, T.V.; Patel, R.B.; et al. The boundary for quantum advantage in Gaussian boson sampling. Sci. Adv. 2022, 8, eabl9236.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).