Abstract

Measurements of systems taken along a continuous functional dimension, such as time or space, are ubiquitous in many fields, from the physical and biological sciences to economics and engineering. Such measurements can be viewed as realisations of an underlying smooth process sampled over the continuum. However, traditional methods for independence testing and causal learning are not directly applicable to such data, as they do not take into account the dependence along the functional dimension. By using specifically designed kernels, we introduce statistical tests for bivariate, joint, and conditional independence for functional variables. Our method not only extends the applicability of the Hilbert–Schmidt independence criterion (HSIC) and its d-variate version (dHSIC) to functional data, but also allows us to introduce a test for conditional independence by defining a novel statistic for the conditional permutation test (CPT) based on the Hilbert–Schmidt conditional independence criterion (HSCIC), with an optimised regularisation strength estimated through an evaluation rejection rate. Empirical results on the size and power of these tests on synthetic functional data show good performance, and we then exemplify their application to several constraint- and regression-based causal structure learning problems, including both synthetic examples and real socioeconomic data.

1. Introduction

Uncovering the causal relationships between measured variables, a discipline known as causal structure learning or causal discovery, is of great importance across various scientific fields, such as climatology [1], economics [2], and biology [3]. Doing so from passively collected (‘observational’) data enables the inference of causal interactions between variables without performing experiments or randomised controlled trials, which are often expensive, unethical, or impossible to conduct [4]. Causal structure learning is the inference, under a given set of assumptions, of directed and undirected edges in graphs representing the data-generating process, where the nodes represent variables and the inferred edges capture causal (directed) or non-causal (undirected) relationships between them.

Research in various areas collates functional data consisting of multiple series of measurements observed conjointly over a given continuum (e.g., time, space, or frequency), where each series is assumed to be a realisation of an underlying smooth process ([5], § 3). By viewing the series of measurements as discretisations of functions, the observations are not required to be collected over regular meshes of points along the continuum. If the variables are measured over time, there is a long history of methods developed to infer (time-based) causality between variables. Among those, the classic Granger causality [6] declares that variable ‘X causes Y’ ($X \rightarrow Y$) if predicting the future of Y becomes more accurate with, as compared to without, access to the past of X, conditional on all other relevant variables [7]. However, classic Granger causality assumes that the observed time-series are stationary and that the causal dependency of Y on X is linear. More recently, Sugihara et al. [8] developed convergent cross mapping (CCM), a method that relaxes the assumption of linearity and finds causal relationships based on time-embeddings of the (stationary) time-series at each point. While useful in many situations, Granger causality and CCM can perform poorly when the time-series for X and Y are nonlinearly related or nonstationary, respectively (see Appendix H).

Here, we present a method that uses kernel-based independence tests to detect statistically significant causal relationships by extending constraint- and regression-based causal structure learning to functional data. The key advantages over Granger causality and CCM are the systematic consideration of confounders and the relaxation of assumptions of linearity or stationarity in the data, both of which can lead to different causal conclusions. As a motivating example, consider the relationship between two variables, ‘corruption’ and ‘income inequality’, as measured using the World Governance Indicators (WGIs) [9] and the World Bank [10], respectively. Using data for 48 African countries from 1996 to 2016, Sulemana and Kpienbaareh [2] investigated their cause–effect relationship and found that corruption ‘Granger-causes’ income inequality. We have also confirmed independently that applying CCM to the same data leads to the same conclusion. However, by considering the time-series data as realisations of functions over time, and thus avoiding linearity and stationarity assumptions, our proposed kernel-based approach suggests the reverse result, i.e., a causal influence of income inequality on corruption appears as the statistically more likely direction. Although a bidirectional causal dependency between these two variables might appear more realistic, this conclusion agrees with other quantitative findings that draw on different data sources [11,12,13]. We return to this example in Section 4.2.2, where we analyse causal dependencies between all six WGIs.

Methodologically, our work extends the applicability of two popular paradigms in causal structure learning, constraint-based ([14], § 5) and regression-based methods [15], to functional data. Independence tests play a crucial role in uncovering causal relationships in both paradigms, and kernels provide a powerful framework for such tests by embedding probability distributions in reproducing kernel Hilbert spaces (RKHSs) ([16], § 2.2). Until now, however, related methods for causal learning have only been applicable to univariate and multivariate data, but not to functional data. To address this limitation, we employ recently derived kernels over functions [17] to widen the applicability of kernel-based independence tests to functional data settings. To test for conditional independence, we then use the HSCIC [18] within a conditional permutation test (CPT) [19], and we propose a straightforward search to determine the optimised regularisation strength in the HSCIC.

We structure our paper as follows. Section 2 provides a brief overview of prior literature on functional data analysis, kernel-based independence tests, and causal structure learning methods. Section 3 presents the definition of a conditional independence test for functional data and its applicability to causal structure learning on such data. Then, we empirically analyse the performance of our independence tests and causal structure learning algorithms on synthetic and real-world data in Section 4. We conclude with a discussion in Section 5.

Our main contribution lies in Section 3, where we propose a conditional independence test for functional data that combines a novel test statistic based on the HSCIC with the CPT to generate samples under the null hypothesis. The algorithm also searches for the optimised regularisation strength required to compute the HSCIC, using pre-test permutations to calculate an evaluation rejection rate. We also highlight the following secondary contributions:

- In Section 4.1.2, we extend the historical functional linear model [20] to the multivariate case for regression-based causal structure learning, and we show how a joint independence test can be used to verify candidate directed acyclic graphs (DAGs) ([21], § 5.2) that embed the causal structure of function-valued random variables. This model has been contributed to the Python package scikit-fda [22].

- On synthetic data, we show empirically that our bivariate, joint, and conditional independence tests achieve high test power, and that our causal structure learning algorithms outperform previously proposed methods.

- Using a real-world dataset (World Governance Indicators), we demonstrate how our method can yield insights into cause–effect relationships amongst socioeconomic variables measured in countries worldwide.

- Implementations of our algorithms are made available at https://github.com/felix-laumann/causal-fda/ (accessed on 10 October 2023) in an easily usable format that builds on top of scikit-fda and causaldag [23].

2. Background and Related Work

2.1. Functional Data Analysis

In functional data analysis [5], a variable X is described by a set of n samples (or realisations), $\{x_i(s)\}_{i=1}^{n}$, where each functional sample $x_i(s)$ corresponds to a series of observations over the continuum s, also called the functional dimension. Typical functional dimensions are time or space. In practical settings, the observations are taken at a set of S discrete values $s_1, \dots, s_S$ of the continuum variable s. Examples of functional datasets include the vertical position of the lower lip over time when speaking a given word [20], muscle soreness over the duration of a tennis match [24], and the ultrafine particle concentration in air measured over the distance to the nearest motorway [25].

In applications, the functional samples are usually represented as linear combinations of a finite set of M basis functions $\phi_1, \dots, \phi_M$ (e.g., Fourier or monomial basis functions):

$$x_i(s) = \sum_{m=1}^{M} c_{im}\, \phi_m(s), \tag{1}$$

where the coefficients $c_{im}$ characterise each sample. If the number of basis functions equals the number of observations, $M = S$, then each observed value can be fitted exactly by obtaining the coefficients with standard least squares techniques (provided the $\phi_m$ are valid basis functions); because Equation (1) is linear in the coefficients, this is a linear least squares problem. Equation (1) then allows us to interpolate between any two observations. When the number of basis functions is smaller than the number of observations, $M < S$, as is commonly the case in practice, the basis function expansion (1) provides a smoothed approximation to the set of observations, $\{x_i(s_j)\}_{j=1}^{S}$.
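To make the difference between interpolation ($M = S$) and smoothing ($M < S$) concrete, the following minimal NumPy sketch fits the coefficients of Equation (1) by linear least squares on an irregular mesh. It assumes a Fourier basis with period 1 on $[0, 1)$; the helper names `fourier_basis` and `fit_coefficients` are hypothetical and are not the scikit-fda API.

```python
import numpy as np

def fourier_basis(s, n_basis, period=1.0):
    """Evaluate a Fourier basis at points s: a constant, then sin/cos pairs."""
    cols, k = [np.ones_like(s)], 1
    while len(cols) < n_basis:
        w = 2.0 * np.pi * k / period
        cols += [np.sin(w * s), np.cos(w * s)]
        k += 1
    return np.column_stack(cols[:n_basis])  # design matrix, shape (S, M)

def fit_coefficients(s, x, n_basis, period=1.0):
    """Coefficients c_m minimising sum_j (x(s_j) - sum_m c_m phi_m(s_j))^2."""
    coef, *_ = np.linalg.lstsq(fourier_basis(s, n_basis, period), x, rcond=None)
    return coef

rng = np.random.default_rng(0)
S = 7
s = (np.arange(S) + rng.uniform(0.1, 0.9, size=S)) / S  # irregular mesh in [0, 1)
x = np.sin(2.0 * np.pi * s) + 0.3 * rng.normal(size=S)   # noisy observations

c_exact = fit_coefficients(s, x, n_basis=S)   # M = S: interpolates every observation
c_smooth = fit_coefficients(s, x, n_basis=3)  # M < S: smoothed approximation
x_exact = fourier_basis(s, S) @ c_exact
x_smooth = fourier_basis(s, 3) @ c_smooth
```

With seven Fourier functions (trigonometric polynomials up to degree 3) and seven distinct points in one period, the design matrix is invertible, so `x_exact` reproduces the data. The three-function fit leaves a non-zero residual, which is the smoothing effect described above.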

For the many applications where the continuum is time, the historical functional linear model (HFLM) [20] provides a comprehensive framework to map the relationship between two sets of functional samples. Let 0 and T be the initial and final time points for a set of samples $\{x_i(s)\}_{i=1}^{n}$. The HFLM describes dependencies that can vary over time using a function $\beta(s, t)$, which encapsulates the influence of $x_i(s)$ on another variable $y_i(t)$ at any two points in time, s and t:

$$y_i(t) = \int_{s_0(t)}^{t} \beta(s, t)\, x_i(s)\, ds, \qquad t \in [0, T],$$

where $\delta$ is the maximum allowable lag for any influence of X on Y and $s_0(t) = \max(0, t - \delta)$. Typical choices for $\beta$ are exponential decay and hyperbolic paraboloid (or “saddle”) functions. The continuum need not be time; it can also be space, frequency, or others (see Ramsay and Hooker [26] for an extensive collection of function-to-function models and applications).
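The lagged integral of the HFLM can be evaluated numerically on a grid. The sketch below uses the trapezoidal rule over the window $[s_0(t), t]$ for a single functional sample; the function name `hflm_response` and the saddle-shaped `beta` are illustrative assumptions, not the parameterisation used in the experiments.

```python
import numpy as np

def hflm_response(x, grid, beta, delta):
    """y(t) = integral_{s0(t)}^{t} beta(s, t) x(s) ds with s0(t) = max(0, t - delta).

    x holds one functional sample evaluated on `grid` (assumed to start at 0);
    the integral is approximated with the trapezoidal rule.
    """
    y = np.zeros(len(grid))
    for j, t in enumerate(grid):
        # lag window [s0(t), t]; the tiny tolerance guards against round-off
        window = (grid >= max(0.0, t - delta) - 1e-12) & (grid <= t)
        s = grid[window]
        if s.size < 2:
            continue  # a single grid point: the integral is zero
        f = beta(s, t) * x[window]
        y[j] = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(s))
    return y

grid = np.linspace(0.0, 1.0, 101)
saddle = lambda s, t: (t - 0.5) ** 2 - (s - 0.5) ** 2  # an illustrative hyperbolic paraboloid
y = hflm_response(np.sin(2 * np.pi * grid), grid, saddle, delta=0.2)
```

A quick sanity check: with $x \equiv 1$ and $\beta \equiv 1$, the response is the window length, $y(t) = \min(t, \delta)$.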

2.2. Kernel Independence Tests

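Let $\mathcal{H}_{\mathcal{X}}$ and $\mathcal{H}_{\mathcal{Y}}$ be separable RKHSs with kernels $k_{\mathcal{X}}$ and $k_{\mathcal{Y}}$, such that the tensor product kernel $k_{\mathcal{X}} \otimes k_{\mathcal{Y}}$ is the reproducing kernel of $\mathcal{H}_{\mathcal{X}} \otimes \mathcal{H}_{\mathcal{Y}}$. A distribution $P_X$ is embedded in $\mathcal{H}_{\mathcal{X}}$ by its mean embedding

$$\mu_{P_X} := \mathbb{E}_{X \sim P_X}\left[k_{\mathcal{X}}(X, \cdot)\right] \in \mathcal{H}_{\mathcal{X}}.$$

If this embedding is unique, i.e., the map $P_X \mapsto \mu_{P_X}$ is injective, then $\mu_{P_X}$ captures all information about $P_X$, and we call $k_{\mathcal{X}}$ a characteristic kernel on $\mathcal{X}$ [27]. Characteristic kernels have thus been extensively used in bivariate ($X \perp\!\!\!\perp Y$), joint ($P_{X_1 \cdots X_d} = \prod_{j=1}^{d} P_{X_j}$), and conditional ($X \perp\!\!\!\perp Y \mid Z$) independence tests (e.g., [21,28,29]).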

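For the bivariate independence test, let $P_{XY}$ denote the joint distribution of X and Y. Then, HSIC is defined as

$$\mathrm{HSIC}(X, Y) := \left\| \mu_{P_{XY}} - \mu_{P_X} \otimes \mu_{P_Y} \right\|^{2}_{\mathcal{H}_{\mathcal{X}} \otimes \mathcal{H}_{\mathcal{Y}}}.$$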

We refer to Gretton et al. [28] for the definition of a finite-sample estimator of HSIC, which constitutes the test statistic in the bivariate independence test with null hypothesis $H_0: X \perp\!\!\!\perp Y$. The test statistic is computed on the original data and compared with its values on randomly permuted data, which approximate its distribution under the null hypothesis.
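A minimal sketch of this permutation test, assuming curves sampled on a common regular grid and flattened to vectors, with a Gaussian kernel whose bandwidth is set by the median heuristic. It uses the biased estimator $\operatorname{tr}(KHLH)/n^2$ of Gretton et al.; the function names are hypothetical.

```python
import numpy as np

def gaussian_gram(x):
    """Gaussian Gram matrix of the rows of x, bandwidth from the median heuristic."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    sq = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    sigma2 = np.median(sq[np.triu_indices(len(x), k=1)])
    return np.exp(-sq / (2.0 * sigma2))

def hsic_biased(K, L):
    """Biased empirical HSIC, trace(K H L H) / n^2, with centring matrix H."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return np.trace(K @ H @ L @ H) / n**2

def hsic_permutation_test(x, y, n_perm=500, seed=0):
    """Return (statistic, p-value) for H0: X and Y are independent."""
    rng = np.random.default_rng(seed)
    K, L = gaussian_gram(x), gaussian_gram(y)
    stat = hsic_biased(K, L)
    null = np.empty(n_perm)
    for b in range(n_perm):
        p = rng.permutation(L.shape[0])
        null[b] = hsic_biased(K, L[np.ix_(p, p)])  # shuffling y breaks any dependence
    p_value = (1 + np.sum(null >= stat)) / (1 + n_perm)
    return stat, p_value

rng = np.random.default_rng(1)
x = rng.normal(size=(50, 20))               # 50 curves on a 20-point grid
y_dep = x + 0.1 * rng.normal(size=x.shape)  # strongly dependent on x
y_ind = rng.normal(size=x.shape)            # independent of x
_, p_dep = hsic_permutation_test(x, y_dep)
_, p_ind = hsic_permutation_test(x, y_ind)
```

Permuting the rows and columns of `L` together is equivalent to recomputing the Gram matrix on shuffled `y`, which avoids recomputing kernels inside the loop.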

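For distributions of more than two variables, let $P_{X_1 \cdots X_d}$ denote the joint distribution on $\mathcal{X}_1 \times \cdots \times \mathcal{X}_d$, and let $\mathcal{H}_j$ be the RKHS associated with $X_j$. To test for joint independence, we compute

$$\mathrm{dHSIC}(X_1, \dots, X_d) := \left\| \mu_{P_{X_1 \cdots X_d}} - \mu_{P_{X_1}} \otimes \cdots \otimes \mu_{P_{X_d}} \right\|^{2}_{\mathcal{H}_{1} \otimes \cdots \otimes \mathcal{H}_{d}}.$$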

Pfister et al. [21] derive a numerical estimator, which serves as the basis for a joint independence test on finite samples. Here, the distribution under the null hypothesis of joint independence is generated by randomly permuting the sample sets independently of one another, as in the bivariate independence test.
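The V-statistic estimator of dHSIC can be written with Gram matrices only. The sketch below assumes Gaussian kernels with a median-heuristic bandwidth and shuffles every variable except the first one independently to approximate the null distribution; the function names are hypothetical.

```python
import numpy as np

def gaussian_gram(x):
    """Gaussian Gram matrix of the rows of x, bandwidth from the median heuristic."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    sq = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * np.median(sq[np.triu_indices(len(x), k=1)])))

def dhsic(grams):
    """V-statistic estimate of dHSIC from d Gram matrices of shape (n, n)."""
    term1 = np.prod(np.stack(grams), axis=0).mean()  # (1/n^2) sum_ab prod_j K^j_ab
    term2 = np.prod([K.mean() for K in grams])       # prod_j (1/n^2) sum_ab K^j_ab
    # (1/n^(d+1)) sum_a prod_j sum_b K^j_ab
    term3 = np.prod(np.stack([K.mean(axis=1) for K in grams]), axis=0).mean()
    return term1 + term2 - 2.0 * term3

def dhsic_permutation_test(samples, n_perm=500, seed=0):
    """p-value for joint independence; variables 2..d are shuffled independently."""
    rng = np.random.default_rng(seed)
    grams = [gaussian_gram(v) for v in samples]
    n = grams[0].shape[0]
    stat = dhsic(grams)
    null = np.empty(n_perm)
    for b in range(n_perm):
        shuffled = [grams[0]]
        for K in grams[1:]:
            p = rng.permutation(n)
            shuffled.append(K[np.ix_(p, p)])
        null[b] = dhsic(shuffled)
    return stat, (1 + np.sum(null >= stat)) / (1 + n_perm)
```

For $d = 2$ this estimator coincides with the biased HSIC estimate $\operatorname{tr}(KHLH)/n^2$, which is a convenient consistency check.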

Lastly, the conditional independence test relies on accurately sampling from the distribution under the null hypothesis $H_0: X \perp\!\!\!\perp Y \mid Z$. At the core of the CPT [19] lies a randomisation procedure that generates permutations of X, denoted $X^{\pi}$, without altering the conditional distribution $P_{X \mid Z}$, so that

$$(X^{\pi}, Y, Z) \overset{d}{=} (X, Y, Z)$$

under $H_0$, while breaking any dependence between X and Y. The null distribution can therefore be generated by repeating this procedure multiple times, and we decide whether $H_0$ should be rejected by comparing the test statistic on the original data against its values on the generated null distribution.
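One way to draw such permutations is the parallel pairwise swap sampler described by Berrett et al. [19]: disjoint pairs of samples exchange their X values with a probability that leaves the conditional law of X given Z invariant. The sketch below assumes scalar X and a Gaussian linear model for $P_{X \mid Z}$ fitted by least squares; for functional X, a conditional density model (e.g., on basis coefficients) would have to be supplied instead. All names are hypothetical.

```python
import numpy as np

def gaussian_log_q(x, z):
    """log q(x_k | z_i) up to a constant, for a Gaussian linear model of X given Z.

    Entry [k, i] scores placing the value x_k at sample i.
    """
    Z = np.column_stack([np.ones(len(x)), z])
    coef, *_ = np.linalg.lstsq(Z, x, rcond=None)
    mu = Z @ coef
    var = np.mean((x - mu) ** 2)
    return -((x[:, None] - mu[None, :]) ** 2) / (2.0 * var)

def swap_chain(log_q, perm, n_steps, rng):
    """Parallel pairwise swaps that leave the conditional law of X given Z invariant."""
    perm = perm.copy()
    for _ in range(n_steps):
        order = rng.permutation(len(perm))
        for i, j in zip(order[0::2], order[1::2]):  # disjoint random pairs
            pi, pj = perm[i], perm[j]
            log_odds = log_q[pj, i] + log_q[pi, j] - log_q[pi, i] - log_q[pj, j]
            if rng.random() < 0.5 * (1.0 + np.tanh(0.5 * log_odds)):  # stable sigmoid
                perm[i], perm[j] = pj, pi
    return perm

def cpt_samples(x, z, n_samples, n_steps=50, seed=0):
    """Draw CPT copies of x: a hub permutation first, then independent chains from it."""
    rng = np.random.default_rng(seed)
    log_q = gaussian_log_q(x, z)
    hub = swap_chain(log_q, np.arange(len(x)), n_steps, rng)
    return [x[swap_chain(log_q, hub, n_steps, rng)] for _ in range(n_samples)]
```

Starting all chains from a common hub permutation, rather than from the identity, keeps the observed and resampled data exchangeable under $H_0$, which is what makes the resulting p-values valid.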

The existing literature on kernel-based independence tests is extensive (see, e.g., Berrett et al. [19] for a relevant review), but only a small part of those tests addresses independence among functional variables [30,31]. There have been particularly strong efforts in developing conditional independence tests and understanding the settings in which they apply. The kernel conditional independence test (KCIT) [29], for example, gave promising results on univariate data, but its performance deteriorated as the number of conditioning variables grew. In contrast, the kernel conditional independence permutation test (KCIPT) [32] repurposed the well-established kernel two-sample test [33] for the conditional independence setting and delivered stable results for multiple conditioning variables. However, Lee and Honavar [34] pointed out that, as the number of permutations increases, the power of KCIPT increases but its calibration deteriorates. This issue was overcome by their proposed self-discrepancy conditional independence test (SDCIT), which is based on a modified unbiased estimate of the maximum mean discrepancy (MMD).

2.3. Causal Structure Learning

The aim of causal structure learning, or causal discovery, is to infer the qualitative causal relationships among a set of observed variables, typically in the form of a causal diagram or DAG. Once learnt, such a causal structure can then be used to construct a causal model such as a causal Bayesian network or a structural causal model (SCM) [35]. Causal models are endowed with a notion of manipulation and, unlike a statistical model, do not just describe a single distribution, but many distributions indexed by different interventions and counterfactuals. They can be used for causal reasoning, that is, to answer causal questions such as computing the average causal effect of a treatment on a given outcome variable. Such questions are of interest across many disciplines, and causal discovery is thus a highly topical area. We refer to Glymour et al. [4], Mooij et al. [36], Peters et al. [37], Schölkopf and von Kügelgen [38], Squires and Uhler [39], and Vowels et al. [40] for comprehensive surveys and accounts of the main research concepts. In particular, we focus here on causal discovery methods for causally sufficient systems, in which there are no unobserved confounders influencing two or more of the observed variables. Existing causal discovery methods can roughly be categorised into three families:

- Score-based approaches assign a score, such as a penalised likelihood, to each candidate graph and then pick the highest-scoring graph(s). A common drawback of score-based approaches is the need for a combinatorial enumeration of all DAGs in the optimisation, although greedy approaches have been proposed to alleviate such issues [41].

- Constraint-based methods start by characterising the set of conditional independences in the observed data [14]. They then determine the graph(s) consistent with the detected conditional independences by using a graphical criterion called d-separation, together with the causal Markov and faithfulness assumptions, which establish a one-to-one connection between d-separation and conditional independence (see Appendix B for definitions). When only observational i.i.d. data are available, this yields a so-called Markov equivalence class, possibly containing multiple candidate graphs. For example, the graphs $X \rightarrow Y \rightarrow Z$, $X \leftarrow Y \leftarrow Z$, and $X \leftarrow Y \rightarrow Z$ are Markov equivalent, as they all imply $X \perp\!\!\!\perp Z \mid Y$ and no other conditional independence relations.

- Regression-based approaches directly fit the structural equations $X_i := f_i(\mathrm{PA}_i, N_i)$ of an underlying SCM for each i, where $\mathrm{PA}_i$ denotes the parents of $X_i$ in the causal DAG and the $N_i$ are jointly independent exogenous noise variables. Provided that the function class of the $f_i$ is sufficiently restricted, e.g., by considering only linear relationships [42] or additive noise models [43], the true causal graph is identified as the unique choice of parents for each i such that the resulting residuals $\hat{N}_i = X_i - \hat{f}_i(\mathrm{PA}_i)$ are jointly independent.
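The Markov equivalence example in the constraint-based item can be checked mechanically with the Verma–Pearl criterion: two DAGs are Markov equivalent if and only if they share the same skeleton and the same v-structures. A small standard-library sketch, with hypothetical helper names:

```python
from itertools import combinations

def skeleton(edges):
    """Undirected version of a set of directed edges (a, b) meaning a -> b."""
    return {frozenset(e) for e in edges}

def v_structures(edges):
    """All colliders a -> c <- b whose tails a and b are not adjacent."""
    skel = skeleton(edges)
    parents = {}
    for a, c in edges:
        parents.setdefault(c, set()).add(a)
    return {
        (frozenset((a, b)), c)
        for c, pa in parents.items()
        for a, b in combinations(sorted(pa), 2)
        if frozenset((a, b)) not in skel
    }

def markov_equivalent(g1, g2):
    """Two DAGs are Markov equivalent iff they share skeleton and v-structures."""
    return skeleton(g1) == skeleton(g2) and v_structures(g1) == v_structures(g2)

chain = {("X", "Y"), ("Y", "Z")}     # X -> Y -> Z
reverse = {("Z", "Y"), ("Y", "X")}   # X <- Y <- Z
fork = {("Y", "X"), ("Y", "Z")}      # X <- Y -> Z
collider = {("X", "Y"), ("Z", "Y")}  # X -> Y <- Z
```

The chain, reversed chain, and fork are mutually equivalent, whereas the collider $X \rightarrow Y \leftarrow Z$ introduces a v-structure and therefore belongs to a different equivalence class.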

As can be seen from these definitions, conditional, bivariate, and joint independence tests are an integral part of constraint- and regression-based causal discovery methods. Our main focus in the present work is therefore to extend the applicability of these causal discovery frameworks to functional data by generalising the underlying independence tests to such domains.

3. Methods

In all three of our independence tests (bivariate, joint, conditional), we employ kernels over functions, known as squared-exponential T (SE-T) kernels [17]. Let $\mathcal{X}$ and $\mathcal{Y}$ be real, separable Hilbert spaces with norms $\|\cdot\|_{\mathcal{X}}$ and $\|\cdot\|_{\mathcal{Y}}$, respectively. Then, for a map $T: \mathcal{X} \to \mathcal{Y}$, SE-T kernels are defined as

$$k_T(x, x') = \exp\left(-\frac{1}{2\sigma^2} \left\| T(x) - T(x') \right\|^{2}_{\mathcal{Y}}\right), \qquad x, x' \in \mathcal{X},$$

where $\sigma^2$ is commonly set by the median heuristic, $\sigma^2 = \operatorname{median}\{\|T(x_i) - T(x_j)\|^{2}_{\mathcal{Y}} : 1 \leq i < j \leq n\}$. Replacing any characteristic kernel by SE-T kernels in bivariate independence tests (based on HSIC) and joint independence tests (based on dHSIC) is straightforward and requires no further theoretical investigation beyond a numerical evaluation of the validity and power of the tests (see Section 4). However, applying SE-T kernels in conditional independence tests requires further theoretical results, as we discuss next.
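For curves sampled on a common grid, an SE-T kernel with $T$ equal to the identity can be approximated by replacing the $L^2$ norm with a trapezoidal-rule quadrature. The sketch below makes that assumption; the function names and the random-walk test curves are illustrative only.

```python
import numpy as np

def sq_l2_distances(A, B, grid):
    """Pairwise squared L2 distances between curves (rows) sampled on a common grid."""
    diff2 = (A[:, None, :] - B[None, :, :]) ** 2
    return np.sum(0.5 * (diff2[..., 1:] + diff2[..., :-1]) * np.diff(grid), axis=-1)

def median_heuristic(A, grid):
    """Median of the pairwise squared distances between distinct curves."""
    d2 = sq_l2_distances(A, A, grid)
    return np.median(d2[np.triu_indices(len(A), k=1)])

def se_t_kernel(A, B, grid, sigma2):
    """SE-T kernel with T = identity: exp(-||a - b||^2 / (2 sigma^2))."""
    return np.exp(-sq_l2_distances(A, B, grid) / (2.0 * sigma2))

grid = np.linspace(0.0, 1.0, 100)
rng = np.random.default_rng(0)
curves = np.cumsum(rng.normal(size=(30, grid.size)), axis=1) / 10.0  # rough random curves
sigma2 = median_heuristic(curves, grid)
K = se_t_kernel(curves, curves, grid, sigma2)
```

Because the quadrature weights are positive, the approximated distance is a weighted Euclidean distance, so the resulting Gram matrix remains symmetric and positive semi-definite.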

3.1. Conditional Independence Test on Functional Data

We consider a conditional independence test that generates samples under the null hypothesis with the CPT and uses the sum of HSCICs over all samples, $\sum_{i=1}^{n} \widehat{\mathrm{HSCIC}}(X, Y \mid Z = z_i)$, as its test statistic. The CPT defines a permutation procedure that preserves the dependence of Z on both X and Y while resampling X so as to eliminate any potential dependence between X and Y. This procedure yields samples that satisfy the null hypothesis, $H_0: X \perp\!\!\!\perp Y \mid Z$, and we use it whenever permutations are required in the conditional independence test. Since the HSCIC is computed via kernel ridge regression, it requires a regularisation strength $\lambda$, which was previously chosen manually [18]. Our aim is a type-I error rate close to the allowable false-positive rate $\alpha$. However, choosing $\lambda$ inappropriately may result in an invalid test (type-I error rates exceed $\alpha$ if $\lambda$ is chosen too large) or in a deflated test power (type-I error rates well below $\alpha$ and high type-II error rates if $\lambda$ is chosen too small). We therefore define an algorithm that searches over a range of candidate values for $\lambda$ and assesses each candidate by what we call an evaluation rejection rate.
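The HSCIC at $Z = z$ compares the conditional mean embedding of $(X, Y)$ with the tensor product of the conditional embeddings of X and Y, all estimated with kernel ridge regression weights $w(z) = (K_Z + n\lambda I)^{-1} k_Z(z)$. The sketch below assumes precomputed Gram matrices and the $n\lambda$ regularisation convention; whether the statistic sums HSCIC values or their squares is also an assumption here (this sketch sums the norms). Function names are hypothetical.

```python
import numpy as np

def hscic_sq(Kx, Ky, Kz, lam):
    """Squared empirical HSCIC(X, Y | Z = z_i) for every sample i.

    Conditional mean embeddings use kernel ridge regression weights
    W = (Kz + n * lam * I)^{-1} Kz, whose column i holds w(z_i).
    """
    n = Kz.shape[0]
    W = np.linalg.solve(Kz + n * lam * np.eye(n), Kz)
    KxW, KyW = Kx @ W, Ky @ W
    joint = np.einsum("ai,ab,bi->i", W, Kx * Ky, W)  # w^T (Kx o Ky) w
    cross = np.einsum("ai,ai,ai->i", W, KxW, KyW)    # w^T ((Kx w) o (Ky w))
    marg = np.einsum("ai,ai->i", W, KxW) * np.einsum("ai,ai->i", W, KyW)
    return joint - 2.0 * cross + marg

def hscic_statistic(Kx, Ky, Kz, lam):
    """Test statistic: sum over samples of HSCIC(X, Y | Z = z_i)."""
    return float(np.sum(np.sqrt(np.clip(hscic_sq(Kx, Ky, Kz, lam), 0.0, None))))
```

Each entry of `hscic_sq` is a squared RKHS norm, so it is non-negative up to round-off; the clipping only guards the square root against tiny negative values. A larger `lam` smooths the weights `W` more strongly, which is the trade-off that the search over $\lambda$ has to balance.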

The search proceeds by iterating over a range of values $\lambda \in \Lambda$ to find the optimised value $\lambda^*$, as follows. For each $\lambda$, we start by producing one permutation of the samples of X by the CPT, which we denote as $\tilde{X}$, and we compute its corresponding evaluation test statistic, given by the sum of HSCICs over all samples, $\sum_{i=1}^{n} \widehat{\mathrm{HSCIC}}_{\lambda}(\tilde{X}, Y \mid Z = z_i)$. Then, we apply the usual strategy for permutation-based statistical tests: we produce an additional set of P permuted sample sets of $\tilde{X}$, which we denote $\{\tilde{X}^{(p)}\}_{p=1}^{P}$, and for each p, we determine the sum of HSCICs over $(\tilde{X}^{(p)}, Y, Z)$ to generate a distribution of statistics under the null hypothesis, which we call the evaluation null statistics. Then, we compute the percentile at which the evaluation test statistic on $\tilde{X}$ falls within the distribution of evaluation null statistics on the permutation set $\{\tilde{X}^{(p)}\}_{p=1}^{P}$. This results in an evaluation p-value, which is compared to the allowable false-positive rate $\alpha$ to determine whether $H_0$ can be rejected. Because both the evaluation test statistic and the evaluation null statistics are computed on samples that satisfy the null hypothesis by construction, the fraction of rejections estimates the type-I error rate; we therefore repeat this procedure B times to estimate an evaluation rejection rate for each value of $\lambda$. Having completed this procedure over all values $\lambda \in \Lambda$, we select the $\lambda$ that produces an evaluation rejection rate closest to $\alpha$ as the optimised regularisation strength, $\lambda^*$. Finally, we apply a CPT-based conditional independence test using $\lambda^*$ to test the null hypothesis $H_0: X \perp\!\!\!\perp Y \mid Z$.


This entire procedure is summarised in Algorithm 1. The following Theorem 1 guarantees the consistency of the conditional independence test in Algorithm 1 with respect to the regularisation parameter $\lambda$.

Theorem 1.

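Let $\mathcal{H}_{\mathcal{X}}$ and $\mathcal{H}_{\mathcal{Y}}$ be separable RKHSs with kernels $k_{\mathcal{X}}$ and $k_{\mathcal{Y}}$ such that the tensor product kernel $k_{\mathcal{X}} \otimes k_{\mathcal{Y}}$ is a characteristic kernel on $\mathcal{X} \times \mathcal{Y}$ [44]. If the regularisation parameter $\lambda$ decays to zero as $n \to \infty$ at a slower rate than $n^{-1/2}$, then the test based on the test statistic in Algorithm 1 (lines 19–27) is consistent.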

Proof.

See Appendix A. □

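| Algorithm 1 Search for $\lambda^*$ with subsequent conditional independence test | |
| --- | --- |
| Require: Samples $(x_i, y_i, z_i)_{i=1}^{n}$, Range $\Lambda$, Significance level $\alpha$, Permutation iterations P, Rejection iterations B | |
| Initialise: $\Delta^* \leftarrow \infty$ | |
| 1: | for $\lambda \in \Lambda$ do ▹ Start: Search for $\lambda^*$ |
| 2: | for $b = 1, \dots, B$ do |
| 3: | Permute $X$ by CPT, call them $\tilde{X}^{(b)}$ |
| 4: | $\tilde{T}^{(b)} \leftarrow \sum_{i=1}^{n} \widehat{\mathrm{HSCIC}}_{\lambda}(\tilde{X}^{(b)}, Y \mid Z = z_i)$ |
| 5: | for $p = 1, \dots, P$ do |
| 6: | Permute $\tilde{X}^{(b)}$ by CPT, call them $\tilde{X}^{(b,p)}$ |
| 7: | $\tilde{T}^{(b,p)} \leftarrow \sum_{i=1}^{n} \widehat{\mathrm{HSCIC}}_{\lambda}(\tilde{X}^{(b,p)}, Y \mid Z = z_i)$ |
| 8: | $\tilde{\mathcal{T}}^{(b)} \leftarrow \{\tilde{T}^{(b,p)}\}_{p=1}^{P}$ |
| 9: | $\hat{p}^{(b)} \leftarrow$ percentile of $\tilde{T}^{(b)}$ within $\tilde{\mathcal{T}}^{(b)}$ |
| 10: | if $\hat{p}^{(b)} > \alpha$ then |
| 11: | Fail to reject $H_0$ |
| 12: | $\mathrm{rejects}^{(b)} \leftarrow 0$ |
| 13: | else |
| 14: | Reject $H_0$ |
| 15: | $\mathrm{rejects}^{(b)} \leftarrow 1$ |
| 16: | $r_{\lambda} \leftarrow \frac{1}{B} \sum_{b=1}^{B} \mathrm{rejects}^{(b)}$ |
| 17: | if $\lvert r_{\lambda} - \alpha \rvert < \Delta^*$ then |
| 18: | $\lambda^* \leftarrow \lambda$, $\Delta^* \leftarrow \lvert r_{\lambda} - \alpha \rvert$ |
| 19: | return $\lambda^*$ ▹ End: Search for $\lambda^*$ |
| 20: | ▹ Start: Conditional independence test |
| 21: | for $p = 1, \dots, P$ do |
| 22: | Permute $X$ by CPT, call them $X^{(p)}$ |
| 23: | $T^{(p)} \leftarrow \sum_{i=1}^{n} \widehat{\mathrm{HSCIC}}_{\lambda^*}(X^{(p)}, Y \mid Z = z_i)$ |
| 24: | $\hat{p} \leftarrow$ percentile of $T = \sum_{i=1}^{n} \widehat{\mathrm{HSCIC}}_{\lambda^*}(X, Y \mid Z = z_i)$ within $\{T^{(p)}\}_{p=1}^{P}$ |
| 25: | if $\hat{p} > \alpha$ then |
| 26: | Fail to reject $H_0$ |
| 27: | else |
| 28: | Reject $H_0$ |
| ▹ End: Conditional independence test | |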
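The two stages of Algorithm 1 can be sketched in a few lines if the HSCIC statistic and the CPT sampler are passed in as callables. In this sketch, `statistic(x, y, z, lam)` and `cpt_permute(x, z, rng)` are hypothetical interfaces (for example, the HSCIC and swap-sampler sketches from earlier in this section could be wrapped to fit them), and the defaults for `alpha`, `P`, and `B` are illustrative only.

```python
import numpy as np

def p_value(t_obs, null):
    """Permutation p-value: share of null statistics at least as large as t_obs."""
    null = np.asarray(null)
    return (1 + np.sum(null >= t_obs)) / (1 + null.size)

def evaluation_rejection_rate(x, y, z, lam, statistic, cpt_permute, alpha, P, B, rng):
    """Fraction of B evaluation tests that reject H0 on data where H0 holds by construction."""
    rejections = 0
    for _ in range(B):
        x_eval = cpt_permute(x, z, rng)        # evaluation sample X~
        t_eval = statistic(x_eval, y, z, lam)  # evaluation test statistic
        # evaluation null statistics from P further CPT permutations of X~
        null = [statistic(cpt_permute(x_eval, z, rng), y, z, lam) for _ in range(P)]
        rejections += int(p_value(t_eval, null) < alpha)
    return rejections / B

def search_lambda(x, y, z, lambdas, statistic, cpt_permute, alpha=0.05, P=100, B=20, seed=0):
    """Search stage: keep the lambda whose evaluation rejection rate is closest to alpha."""
    rng = np.random.default_rng(seed)
    rates = {lam: evaluation_rejection_rate(x, y, z, lam, statistic, cpt_permute,
                                            alpha, P, B, rng)
             for lam in lambdas}
    best = min(rates, key=lambda lam: abs(rates[lam] - alpha))
    return best, rates

def cpt_test(x, y, z, lam, statistic, cpt_permute, P=1000, seed=0):
    """Final CPT-based test of H0: X indep. Y | Z with the selected lambda; returns a p-value."""
    rng = np.random.default_rng(seed)
    t_obs = statistic(x, y, z, lam)
    null = [statistic(cpt_permute(x, z, rng), y, z, lam) for _ in range(P)]
    return p_value(t_obs, null)
```

Keeping the statistic and the sampler as arguments separates the search logic from the kernel details, so the same loop can be reused with different kernels or conditional samplers.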

3.2. Causal Structure Learning on Functional Data

To infer the existence of (directed) edges in an underlying causal graph G based on the joint data distribution $P$ over a set of observed variables, we must assume that G and $P$ are intrinsically linked. Spirtes et al. ([14], § 2.3.3) define the faithfulness assumption, from which it follows that if two random variables are (conditionally) independent in the observed distribution $P$, then they are d-separated in the underlying causal graph G ([35], § 1.2.3). Using this fact, constraint-based causal structure learning methods ([14], § 5) use bivariate and conditional independence tests to infer whether two nodes are d-separated in the underlying graph. These methods yield completed partially directed acyclic graphs (CPDAGs), which are graphs with undirected and/or directed edges. In contrast, regression-based methods [15] use the joint independence test to discover DAGs in which all edges are oriented. Next, we describe both approaches in more detail.

3.2.1. Constraint-Based Causal Structure Learning

Constraint-based causal structure learning relies on performing conditional independence tests for each pair of variables, X and Y, conditioned on every possible subset of the remaining variables, that is, on every element of the power set of the remaining $d - 2$ variables. Therefore, for d variables, we need to carry out $2^{d-2}$ conditional independence tests for every pair of variables, which results in $\binom{d}{2}\, 2^{d-2}$ tests in total.
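The count follows from pairing every one of the $\binom{d}{2}$ variable pairs with each of the $2^{d-2}$ subsets of the remaining variables (the empty set gives the bivariate test). A standard-library sketch that enumerates the tests and checks the closed form; the function names are hypothetical.

```python
from itertools import combinations
from math import comb

def ci_tests_to_run(variables):
    """Yield every (X, Y, S) with S a subset of the variables other than X and Y."""
    for x, y in combinations(variables, 2):
        rest = [v for v in variables if v not in (x, y)]
        for k in range(len(rest) + 1):
            for s in combinations(rest, k):
                yield x, y, s

def n_ci_tests(d):
    """Closed form: C(d, 2) pairs times 2^(d-2) conditioning sets each."""
    return comb(d, 2) * 2 ** (d - 2)
```

For example, four variables already require 24 tests and ten variables require 11,520, which is why practical algorithms such as PC prune the search instead of running every test.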

Conditional (in)dependences found in the data can then be used to delete and orient edges in the graph G, as follows. We start with a complete undirected graph with d nodes, where each node corresponds to one of the variables. The edge connecting X and Y is deleted if there exists a subset $\mathbf{S}$ of the remaining variables such that $X \perp\!\!\!\perp Y \mid \mathbf{S}$. If we then find another variable Z that is adjacent to both X and Y, with $Z \notin \mathbf{S}$ and for which $X \not\!\perp\!\!\!\perp Y \mid \mathbf{S} \cup \{Z\}$, we can orient the edges $X - Z$ and $Y - Z$ to form a collider (or v-structure), $X \rightarrow Z \leftarrow Y$.


Based on these oriented edges, Meek's orientation rules [45] (see, e.g., [37], § 7.2.1) can be applied to orient additional edges in certain graphical configurations, as shown in Appendix C. Briefly, these rules follow from avoiding cycles, which would violate the acyclicity of a DAG, and new colliders, which would contradict the conditional independences found. Algorithms that implement these criteria are the SGS algorithm and the more efficient PC algorithm [14], which we summarise in Appendix C.
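The edge-deletion and collider steps can be sketched as a simplified PC-style search in which conditioning sets are drawn from the current neighbours of X, with increasing size. Here `indep(x, y, S)` is a hypothetical callable standing in for the kernel-based conditional independence test (returning True when $H_0$ is not rejected); Meek's propagation rules are omitted for brevity.

```python
from itertools import combinations

def pc_skeleton_and_colliders(nodes, indep):
    """Simplified PC sketch: indep(x, y, S) returns True if X is independent of Y given S.

    Returns the undirected adjacencies and the set of oriented collider edges.
    """
    adj = {v: set(nodes) - {v} for v in nodes}
    sepset = {}
    size = 0
    while any(len(adj[x] - {y}) >= size for x in nodes for y in adj[x]):
        for x in nodes:
            for y in list(adj[x]):
                for s in combinations(sorted(adj[x] - {y}), size):
                    if indep(x, y, set(s)):
                        adj[x].discard(y)
                        adj[y].discard(x)
                        sepset[frozenset((x, y))] = set(s)  # remember the separating set
                        break
        size += 1
    arrows = set()
    for z in nodes:
        for x, y in combinations(sorted(adj[z]), 2):
            # x - z - y with x, y non-adjacent and z not in their separating set
            if y not in adj[x] and z not in sepset.get(frozenset((x, y)), set()):
                arrows |= {(x, z), (y, z)}
    return adj, arrows

def oracle(a, b, s):
    """Independence oracle for the true graph X -> Z <- Y."""
    return {a, b} == {"X", "Y"} and "Z" not in s

adj, arrows = pc_skeleton_and_colliders(["X", "Y", "Z"], oracle)
```

With this oracle, the edge $X - Y$ is removed by the empty conditioning set, and since Z is not in that separating set, the collider $X \rightarrow Z \leftarrow Y$ is recovered.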

3.2.2. Regression-Based Causal Structure Learning

To carry out regression-based causal learning, we choose additive noise models (ANMs), a special class of SCMs in which the noise terms are assumed to be independent and additive. This assumption guarantees that the causal graph can be identified if the functions are nonlinear and the additive noise is Gaussian [43,46], a typical setting for functional data. Our model over the set of random variables $\{X_1, \dots, X_d\}$ is given by

$$X_i = f_i(\mathrm{PA}_i) + N_i, \qquad i = 1, \dots, d,$$

where $\mathrm{PA}_i$ denotes the parents of $X_i$ and the additive noise terms $N_i$ are jointly independent, i.e., each noise term is independent of any collection of the other noise terms, so that their joint distribution factorises:

$$P_{N_1, \dots, N_d} = \prod_{i=1}^{d} P_{N_i}.$$

Based on these assumptions, we follow RESIT (regression with subsequent independence testing [15]), an approach to causal discovery that can be briefly described as follows. If we only have two variables X and Y, we start by assuming that the samples of Y are the effect and the samples of X are the cause. We therefore write Y as a function of X plus some noise, $Y = \hat{f}_Y(X) + \hat{N}_Y$, and test whether the residual $\hat{N}_Y = Y - \hat{f}_Y(X)$ is independent of X. We then exchange the roles of assumed effect and assumed cause to obtain the residual $\hat{N}_X = X - \hat{f}_X(Y)$, which is tested for independence from Y. To overcome issues with finite samples and test power, Peters et al. [15] used the p-value of the independence tests as a measure of the strength of independence, which we follow in our experiments in Section 4. Alternatively, one may determine the causal direction in this bivariate case by comparing both cause–effect directions with respect to a score defined by Bühlmann et al. [47].
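The bivariate RESIT decision can be sketched for scalar variables as follows. As simplifying assumptions, the regression is a cubic polynomial fit (`numpy.polyfit`) rather than a functional regression such as the HFLM, and independence is assessed with an HSIC permutation test using Gaussian kernels. The direction with the larger p-value is returned; all function names are hypothetical.

```python
import numpy as np

def _gram(v):
    """Gaussian Gram matrix of a 1-D sample with median-heuristic bandwidth."""
    d2 = (v[:, None] - v[None, :]) ** 2
    return np.exp(-d2 / (2.0 * np.median(d2[np.triu_indices(len(v), k=1)])))

def hsic_pvalue(a, b, n_perm, rng):
    """Permutation p-value of an HSIC test between 1-D samples a and b."""
    n = len(a)
    H = np.eye(n) - 1.0 / n
    Kc, L = H @ _gram(a) @ H, _gram(b)
    stat = np.sum(Kc * L)  # trace(H K H L), proportional to the biased HSIC
    null = np.empty(n_perm)
    for k in range(n_perm):
        p = rng.permutation(n)
        null[k] = np.sum(Kc * L[np.ix_(p, p)])
    return (1 + np.sum(null >= stat)) / (1 + n_perm)

def resit_bivariate(x, y, degree=3, n_perm=200, seed=0):
    """Regress in both directions; keep the direction whose residuals look most independent."""
    rng = np.random.default_rng(seed)
    res_y = y - np.polyval(np.polyfit(x, y, degree), x)  # hypothesis X -> Y
    res_x = x - np.polyval(np.polyfit(y, x, degree), y)  # hypothesis Y -> X
    p_xy = hsic_pvalue(x, res_y, n_perm, rng)
    p_yx = hsic_pvalue(y, res_x, n_perm, rng)
    return ("X -> Y" if p_xy > p_yx else "Y -> X"), p_xy, p_yx

rng = np.random.default_rng(4)
x = rng.uniform(-2, 2, size=80)
y = x ** 3 + rng.uniform(-1, 1, size=80)
direction, p_xy, p_yx = resit_bivariate(x, y)
```

Comparing p-values rather than making two accept/reject decisions mirrors the choice of Peters et al. described above, and always yields a single direction even when both or neither of the tests reject.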

For d variables, the joint independence test replaces the bivariate independence test. First, we consider the set of candidate causal graphs, $\mathcal{G}$, which contains every potential DAG with d nodes. For each candidate DAG, $G \in \mathcal{G}$, we regress each variable $X_i$ that has parents on its parents $\mathrm{PA}_i^{G}$:

$$X_i = f_i(\mathrm{PA}_i^{G}) + N_i,$$

and we compute the residuals $\hat{N}_i = X_i - \hat{f}_i(\mathrm{PA}_i^{G})$. Then, we apply the joint independence test to all d residuals. The candidate DAG is accepted as the ‘true’ causal graph if the null hypothesis of joint independence amongst the residuals is not rejected. This process is repeated over all candidate causal graphs in $\mathcal{G}$. Because in finite samples this procedure may not lead to exactly one accepted candidate DAG, the one with the highest p-value is chosen [15].
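Enumerating the candidate set $\mathcal{G}$ is only feasible for small d, because the number of labelled DAGs grows super-exponentially (3, 25, 543, and 29,281 DAGs for d = 2, 3, 4, 5). A brute-force standard-library sketch that filters all directed graphs with Kahn's algorithm; the function names are hypothetical.

```python
from itertools import product

def is_acyclic(adj, d):
    """Kahn's algorithm on an adjacency matrix with adj[i][j] = 1 for an edge i -> j."""
    indeg = [sum(adj[i][j] for i in range(d)) for j in range(d)]
    queue = [j for j in range(d) if indeg[j] == 0]
    seen = 0
    while queue:
        i = queue.pop()
        seen += 1
        for j in range(d):
            if adj[i][j]:
                indeg[j] -= 1
                if indeg[j] == 0:
                    queue.append(j)
    return seen == d  # every node was removed, so there is no cycle

def all_dags(d):
    """Enumerate every labelled DAG on d nodes (feasible only for small d)."""
    pairs = [(i, j) for i in range(d) for j in range(d) if i != j]
    for bits in product((0, 1), repeat=len(pairs)):
        adj = [[0] * d for _ in range(d)]
        for (i, j), b in zip(pairs, bits):
            adj[i][j] = b
        if is_acyclic(adj, d):
            yield adj
```

For each candidate `adj`, the parents of node j are `[i for i in range(d) if adj[i][j]]`, which defines the regressions whose residuals enter the joint independence test.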

4. Experiments

In practice, functional data analysis requires samples of continuous functions evaluated over a mesh of discrete observations. In our synthetic data, we consider a fixed interval for the functional dimension s and draw 100 equally spaced points over it to evaluate the functions. For real-world data, we map the domain on which the data live (e.g., the years 1996 to 2020) to this interval and interpolate over the discrete measurement points. We then use this interpolation to evaluate the functional samples on 100 equally spaced points. Unless otherwise mentioned, we henceforth use SE-T kernels with $T = I$, where I is the identity matrix, and with the bandwidth set by the median heuristic, as defined in Section 3.

4.1. Evaluation of Independence Tests for Functional Data

Before applying our proposed independence tests to causal structure learning problems, we use appropriate synthetic data to evaluate their type-I error rate (test size) and type-II error rate (one minus the test power). Type-I errors occur when the samples are independent (achieved by setting $a = 0$ in our experiments below) but the test concludes that they are dependent, i.e., the test falsely rejects the null hypothesis $H_0$. In contrast, type-II errors occur when the samples are dependent (achieved by setting $a > 0$) but the test fails to reject $H_0$. Specifically, we compute the error rates over 200 independent trials, which correspond to different random realisations of the datasets, i.e., synthetically generated datasets with random Fourier basis coefficients, random coefficients of the β-functions, and random additive noise. We fix the significance level $\alpha$ of the tests and approximate the null distribution with 1000 permutations generated by the CPT scheme described above. Note that although our synthetic data are designed to replicate typical behaviour in time-series, the independence tests are not limited to time as the functional dimension and can be used more generally.

4.1.1. Bivariate Independence Test

We consider n functional samples $\{x_i(s)\}_{i=1}^{n}$ of a random variable X defined over the interval of the functional dimension. To generate our samples, we sum M Fourier basis functions $\phi_m$ with a fixed period and coefficients $c_{im}$ randomly drawn from a standard normal distribution:

$$x_i(s) = \sum_{m=1}^{M} c_{im}\, \phi_m(s), \qquad c_{im} \sim \mathcal{N}(0, 1). \tag{10}$$

To mimic real-world measurements, we draw each sample over a distinct, irregular mesh of evaluation points. We then interpolate the samples with splines and generate realisations over a regular mesh of points. Multivariate Gaussian random noise with covariance proportional to the identity matrix I is then added. The samples $y_i$ of the random variable Y are defined through an HFLM [20]:

$$y_i(t) = a \int_{s_0(t)}^{t} \beta(s, t)\, x_i(s)\, ds, \tag{11}$$

where $a \geq 0$ is the dependence factor and $s_0(t) = \max(0, t - \delta)$ for a maximum lag $\delta$. The function $\beta(s, t)$ maps the dependence from $x_i$ at any point s to $y_i$ at any point t, and is defined here as a hyperbolic paraboloid function:

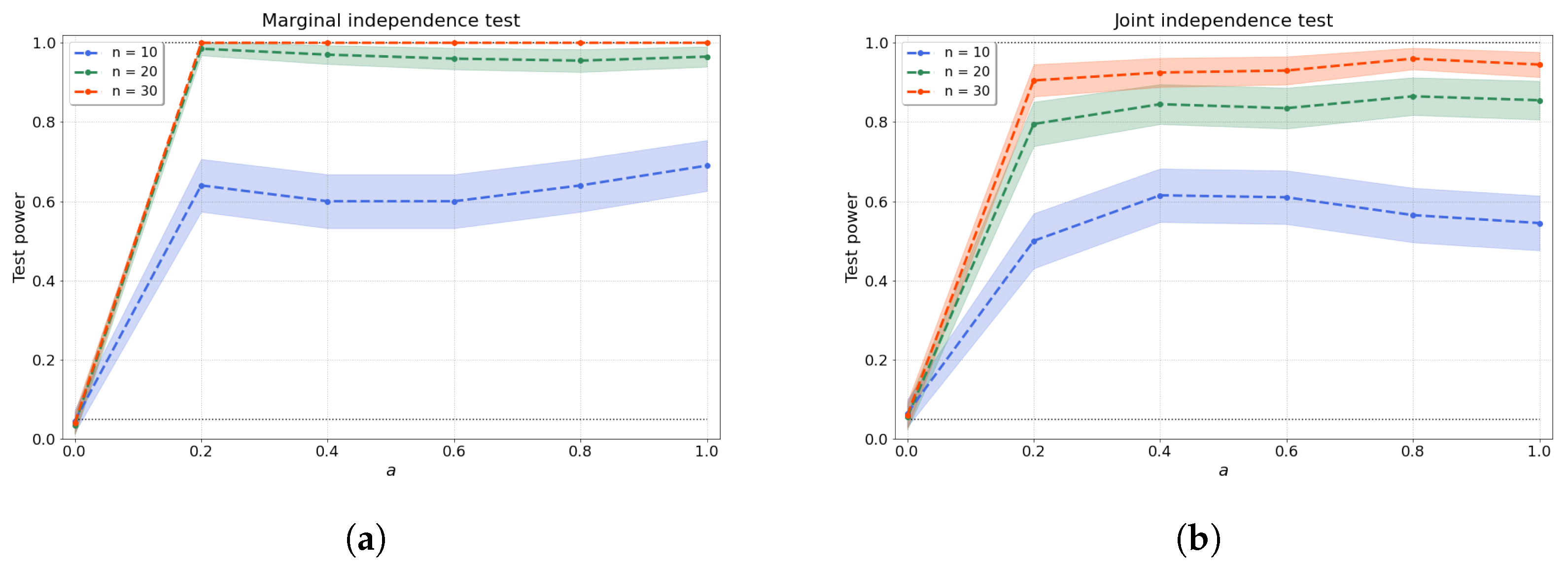

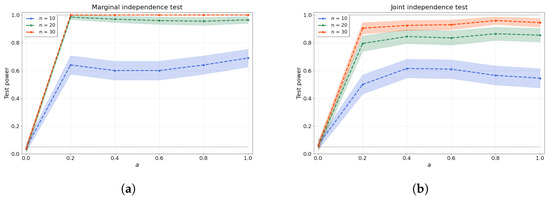

with coefficients drawn independently from a uniform distribution over a fixed interval. Afterwards, the samples $y_i$ are evaluated over a regular mesh of 100 points and random noise is added. Clearly, for $a = 0$, our samples are independent, as the samples $y_i$ are just random noise. As a increases, the dependence between the samples $x_i$ and $y_i$ becomes easier to detect. Figure 1a shows that our test stays within the allowable false-positive rate $\alpha$ and detects the dependence once a is sufficiently large, even with a low number of samples.
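A simplified generator for this bivariate setting is sketched below. It makes several assumptions that are not taken from the experiments: curves are generated directly on a regular grid (skipping the irregular meshes and spline step), the saddle-shaped $\beta$ and its coefficient range are hypothetical, and the period, lag, and noise level are illustrative defaults.

```python
import numpy as np

def fourier_design(grid, n_basis, period):
    """Fourier basis (constant, then sin/cos pairs) evaluated on the grid, shape (S, M)."""
    cols, k = [np.ones_like(grid)], 1
    while len(cols) < n_basis:
        w = 2.0 * np.pi * k / period
        cols += [np.sin(w * grid), np.cos(w * grid)]
        k += 1
    return np.column_stack(cols[:n_basis])

def simulate_pair(n, a, grid, n_basis=5, period=1.0, delta=0.5, noise=0.1, seed=0):
    """x_i: random Fourier curves; y_i(t) = a * int_{s0(t)}^t beta(s, t) x_i(s) ds, plus noise."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, n_basis)) @ fourier_design(grid, n_basis, period).T  # (n, S)
    c1, c2 = rng.uniform(0.5, 1.5, size=2)  # hypothetical coefficient range
    beta = lambda s, t: c1 * (t - 0.5) ** 2 - c2 * (s - 0.5) ** 2  # hypothetical saddle
    Y = np.zeros_like(X)
    for j, t in enumerate(grid):
        w = (grid >= max(grid[0], t - delta) - 1e-12) & (grid <= t)  # lag window
        if w.sum() >= 2:
            f = beta(grid[w], t) * X[:, w]  # integrand for all n samples at once
            Y[:, j] = a * np.sum(0.5 * (f[:, 1:] + f[:, :-1]) * np.diff(grid[w]), axis=1)
    X = X + noise * rng.normal(size=X.shape)
    Y = Y + noise * rng.normal(size=Y.shape)
    return X, Y
```

Setting `a=0` makes `Y` pure noise, which reproduces the test-size setting; increasing `a` strengthens the functional dependence used to measure test power.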

Figure 1.

(a) Bivariate and (b) joint independence tests over various values of the dependence factor a and sample size n. The joint independence test is conducted with d variables.

4.1.2. Joint Independence Test

To produce the synthetic data for joint independence tests, we first generate random dags with d nodes using an Erdös-Rényi model with density 0.5 ([21], § 5.2). For each dag, we first use Equation (10) to produce function-valued random samples for the variables without parents. Then, we generate samples for other variables using a historical functional linear model:

where are the parents of node , and the function is given by Equation (12) with random coefficients and independently generated for each descendant-parent pair indexed by p. After the samples are evaluated on a regular mesh of 100 points within , random noise is added. Note that, again, increasing the factor a should make the dependence structure of the dag easier to detect. Figure 1b shows the test size for a = 0, which results in independent variables, and the test power for a > 0. We evaluate the joint independence test for variables over various values of a and a range of sample sizes.
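The generation of joint-independence data from a random dag can be sketched as below. Sampling an upper-triangular adjacency matrix with edge probability 0.5 and then relabelling the nodes at random is one common way to draw an Erdős–Rényi dag (an assumption about the exact variant used), and `root_sampler` and `mechanism` are hypothetical callables standing in for Equation (10) and for the historical functional linear model with Equation (12).

```python
import numpy as np


def random_er_dag(d, density=0.5, rng=None):
    """Adjacency matrix of a random dag; entry [i, j] = 1 means i -> j."""
    rng = np.random.default_rng(rng)
    upper = np.triu(rng.random((d, d)) < density, k=1).astype(int)
    perm = rng.permutation(d)
    return upper[np.ix_(perm, perm)]  # relabelled nodes; still acyclic


def topological_order(adj):
    """Kahn's algorithm; raises ValueError if the graph has a cycle."""
    indegree = adj.sum(axis=0).copy()
    ready = [j for j in range(len(adj)) if indegree[j] == 0]
    order = []
    while ready:
        node = ready.pop()
        order.append(node)
        for child in np.flatnonzero(adj[node]):
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(int(child))
    if len(order) != len(adj):
        raise ValueError("graph contains a cycle")
    return order


def sample_from_dag(adj, n, m, a, root_sampler, mechanism, noise_std=0.1, rng=None):
    """Array of shape (d, n, m): n curves on m mesh points for every node."""
    rng = np.random.default_rng(rng)
    data = np.zeros((adj.shape[0], n, m))
    for node in topological_order(adj):  # parents are generated before children
        parents = np.flatnonzero(adj[:, node])
        if parents.size == 0:
            data[node] = root_sampler(n, m, rng)
        else:
            # A fresh mechanism draw for every descendant-parent pair, scaled by a.
            for p in parents:
                data[node] += a * mechanism(data[p], rng)
            data[node] += noise_std * rng.standard_normal((n, m))
    return data
```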

4.1.3. Conditional Independence Test

To evaluate whether X is independent of Y given , where may be any element of the power set of the remaining variables (i.e., any subset of them), we generate data samples according to:

where is the cardinality of the set , ’s are given by Equation (12), and noise terms , and , are added to the discrete observation values.

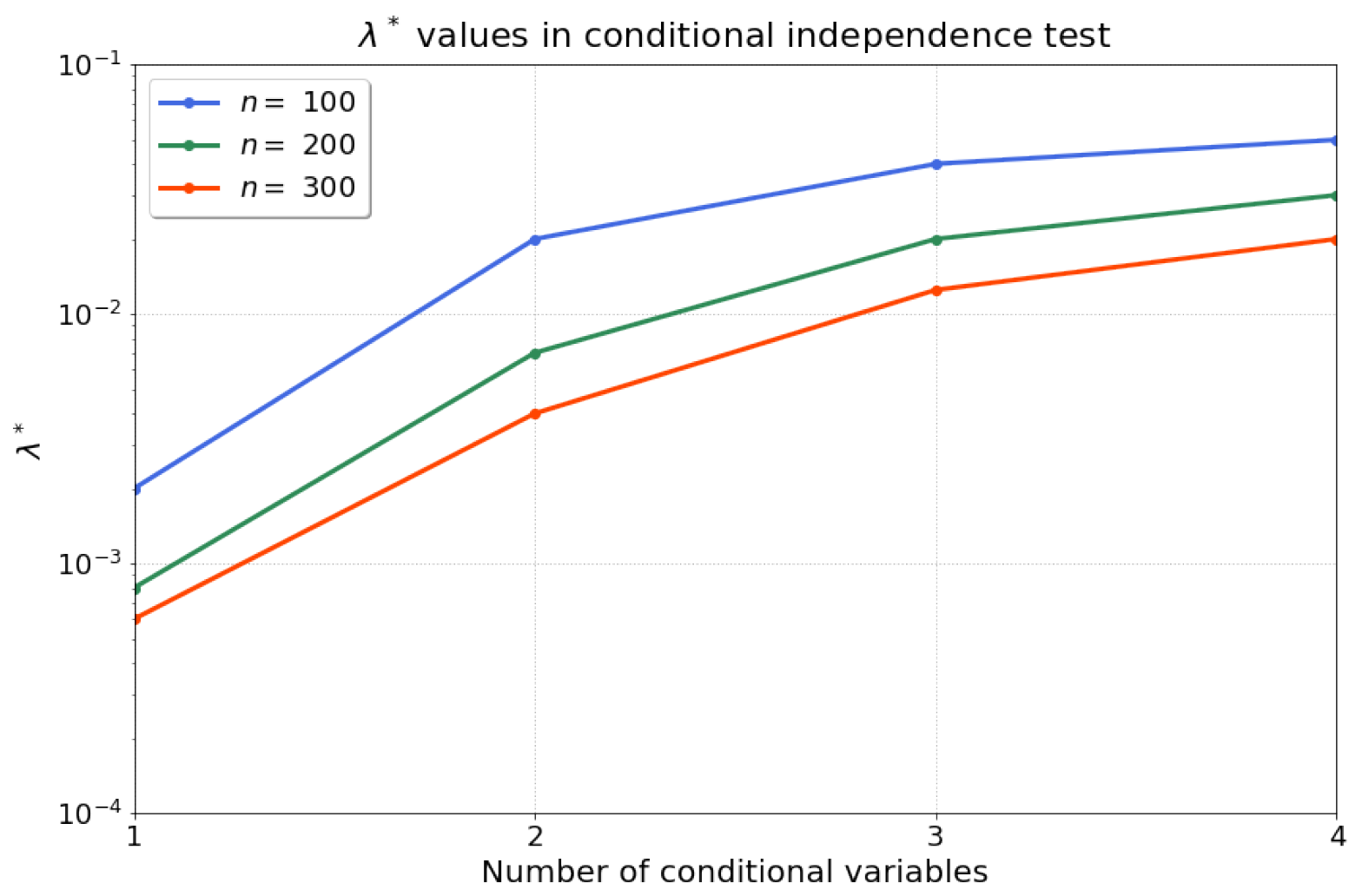

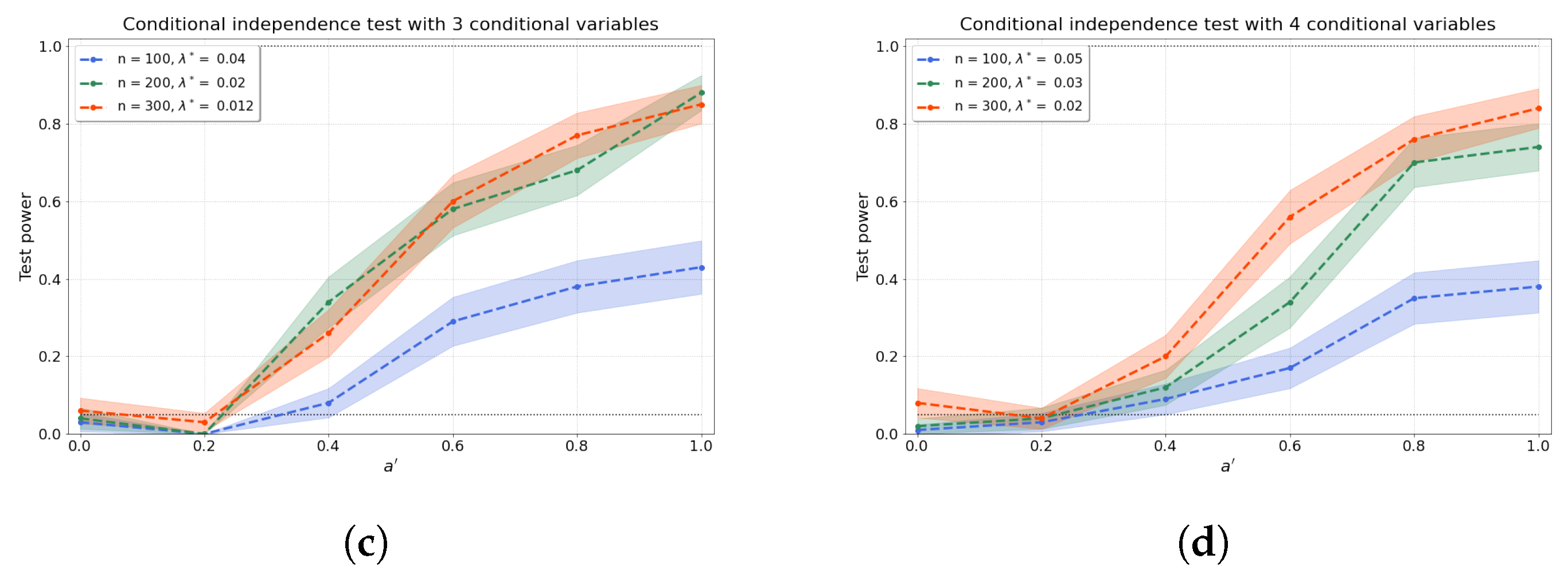

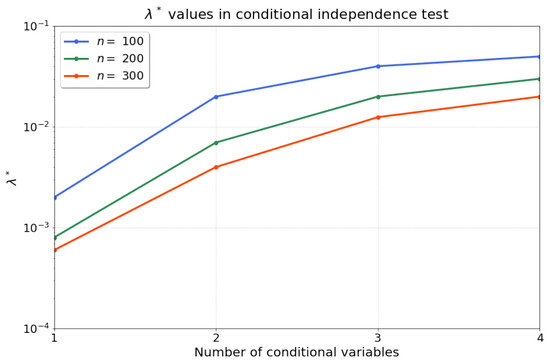

Then, we apply Algorithm 1 to compute the statistics for our conditional independence test. First, we find that the optimised regularisation strength is robust and reproducible across different random realisations with the same sample size and model parameters. Consequently, we search for one optimised regularisation strength (lines 1–18 in Algorithm 1) for each sample size and fix this value for all 200 independent trials. Figure 2 summarises the results for for samples after conducting a grid search over a range of possible values , with permutations, and rejection iterations. Note that the range of values for the regularisation strength can be tuned in stages by the practitioner, e.g., starting with a coarse initial exploration followed by a more fine-grained range. We recommend choosing so that the evaluation rejection rate can be compared easily with the acceptable false-positive rate, . We find that the optimised regularisation strength saturates as the number of conditional variables (the dimension of the conditional set) increases. Given that we perform a kernel ridge regression, a larger number of samples n should require less regularisation, which agrees with our observation that the optimised regularisation strength decreases as the number of samples n increases. In summary, Algorithm 1 optimises the regularisation parameter such that the evaluation rejection rate of the test is closest to the allowable false-positive rate .
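The selection rule of Algorithm 1 (pick the regularisation strength whose evaluation rejection rate is closest to the allowable false-positive rate), combined with the staged coarse-to-fine search mentioned above, can be sketched as follows. `evaluation_rejection_rate` is a hypothetical callable that would run the rejection iterations for a given regularisation strength; the toy rate used in the checks is purely illustrative and says nothing about how the real rejection rate depends on the regularisation.

```python
import numpy as np


def closest_to_alpha(evaluation_rejection_rate, grid, alpha):
    """Index of the grid value whose evaluation rejection rate is closest to alpha."""
    rates = np.array([evaluation_rejection_rate(lam) for lam in grid])
    return int(np.argmin(np.abs(rates - alpha))), rates


def staged_search(evaluation_rejection_rate, alpha, coarse_grid, n_fine=10):
    """Coarse exploration, then a finer log-spaced grid around the best coarse value."""
    coarse_grid = np.asarray(coarse_grid, dtype=float)
    i, _ = closest_to_alpha(evaluation_rejection_rate, coarse_grid, alpha)
    lo = coarse_grid[max(i - 1, 0)]
    hi = coarse_grid[min(i + 1, len(coarse_grid) - 1)]
    fine_grid = np.geomspace(lo, hi, n_fine)
    j, _ = closest_to_alpha(evaluation_rejection_rate, fine_grid, alpha)
    return float(fine_grid[j])
```

Because each evaluation of the rejection rate is itself expensive (it involves permutations and repeated tests), the staged search keeps the number of evaluated grid points small.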

Figure 2.

The optimised regularisation strength for increasing dimension d of the conditional set and different sample sizes .

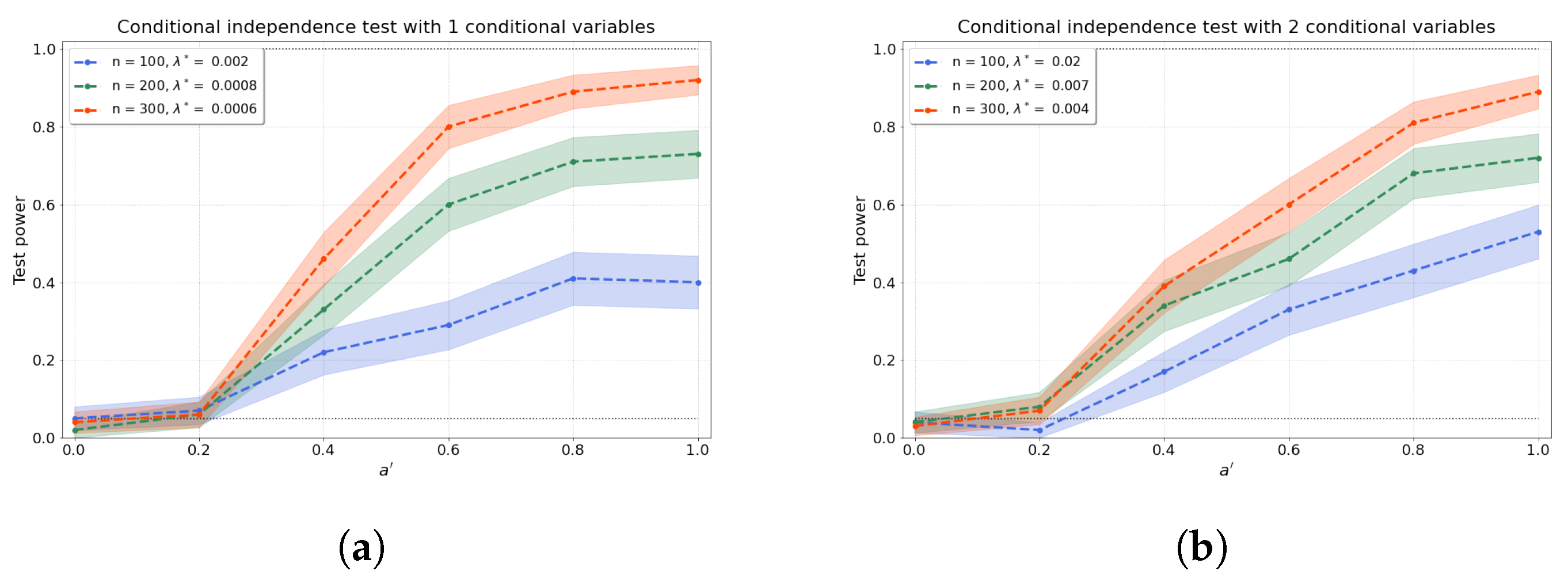

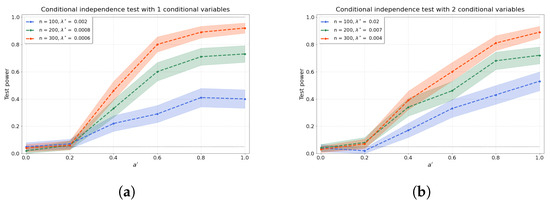

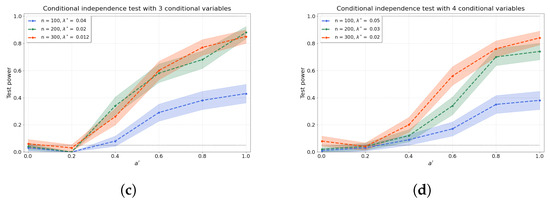

Figure 3a–d shows the results of the conditional independence test for increasing dimension d of the conditional set (i.e., the number of conditional variables). We find that the test power is well preserved as d increases, owing to a concomitant increase in the optimised regularisation strength that partly ameliorates the “curse of dimensionality” [48]. Furthermore, the values of the test power at zero dependence correspond to the type-I error rates.

Figure 3.

The test power of the conditional independence tests over various values for and sample sizes n, from (a–d) 1–4 conditional variables. The regularisation parameter is given in the legend.

4.2. Causal Structure Learning

We use the three independence tests evaluated numerically in Section 4.1 to learn different causal structures among function-valued random variables. We start with regression-based methods for the bivariate case and extend to the multivariate case through regression-, constraint-based, and a combination of constraint- and regression-based causal structure learning approaches.

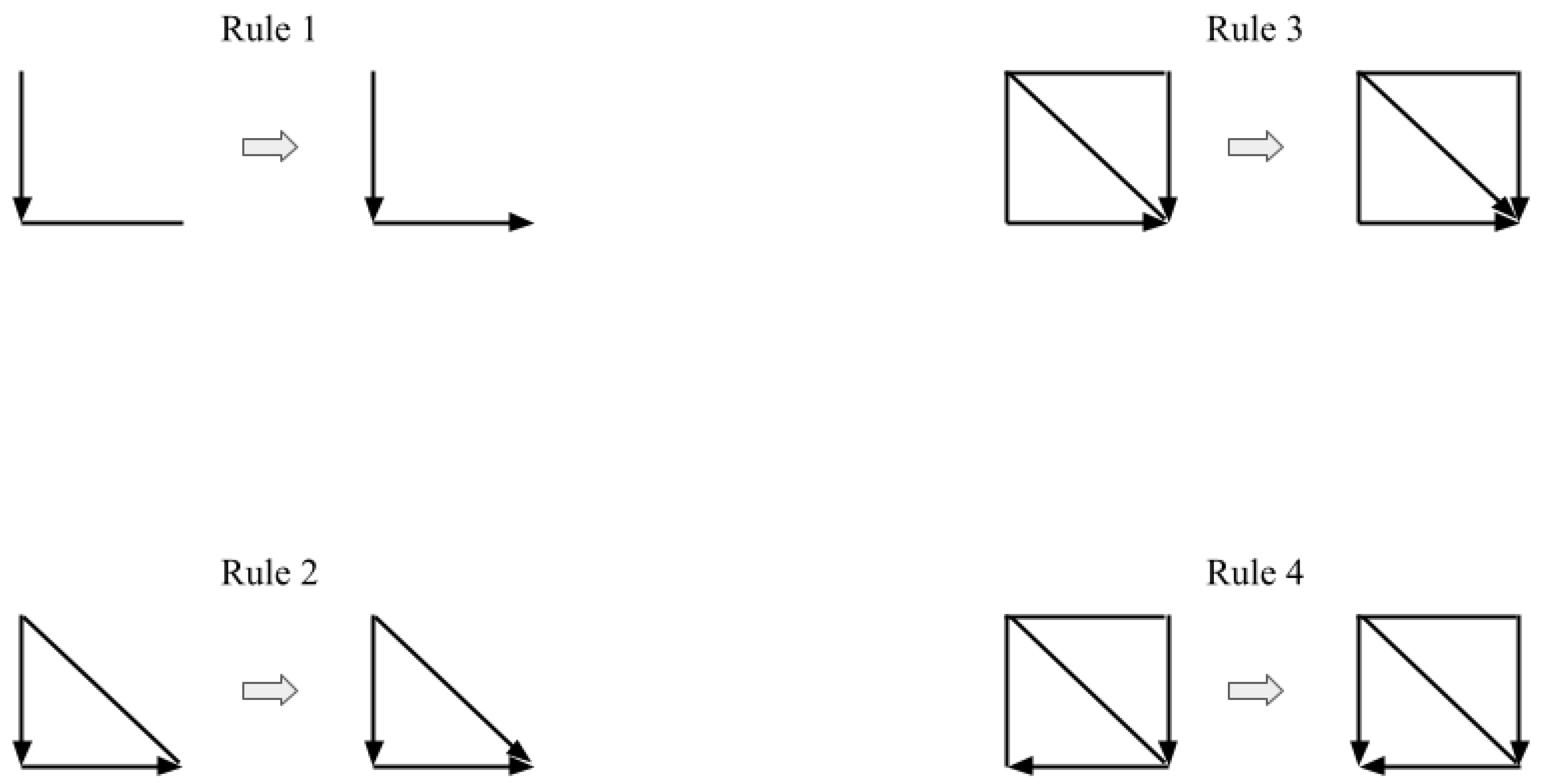

4.2.1. Synthetic Data

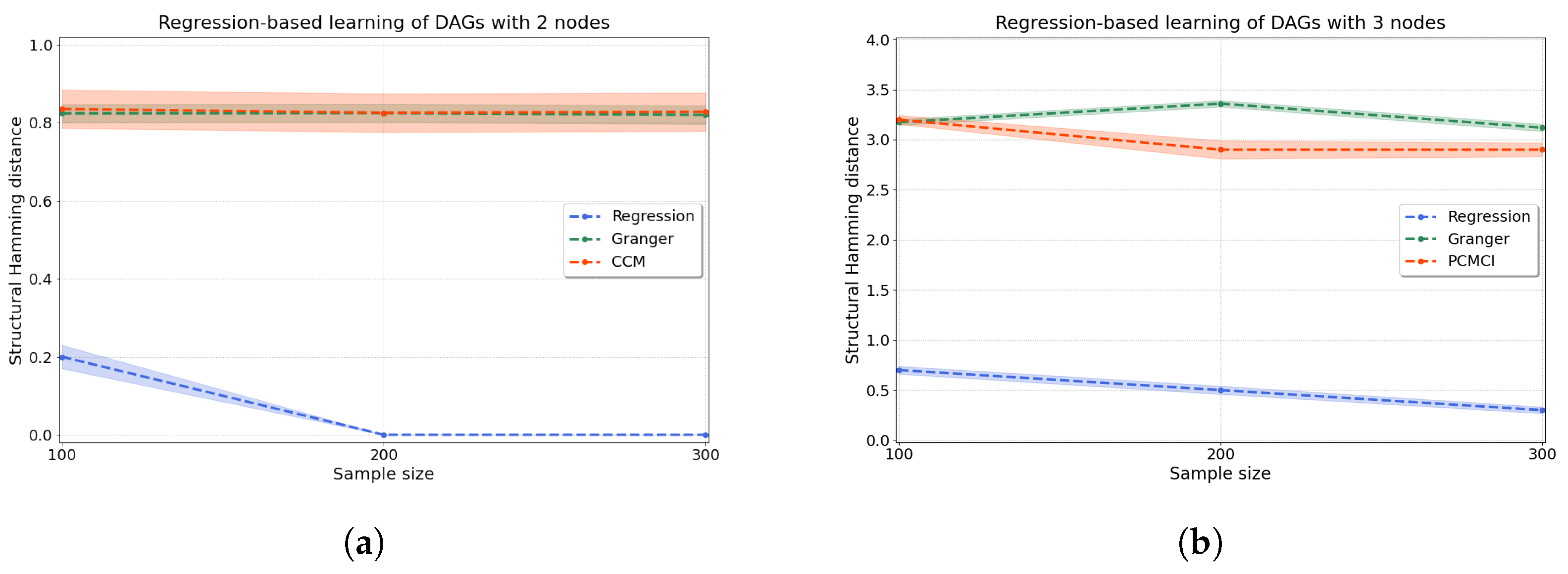

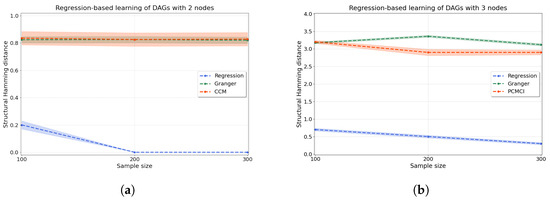

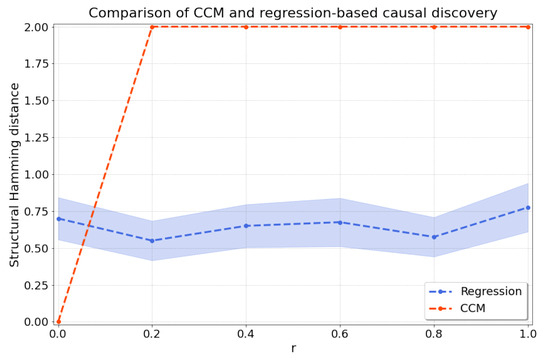

Regression-based causal discovery. We start by evaluating synthetic data for a bivariate system generated according to Equations (10) and (11) with . We generate 200 independent trials, and we score the performance of the method using the structural Hamming distance (shd) (see Appendix D for its definition). As seen in Figure 4a, our regression-based algorithm using resit outperforms two well-known algorithms for causal discovery: Granger causality, which assumes linearity in the relationships [6], and ccm, which allows for nonlinearity through a time-series embedding [8].
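For two variables, the resit decision can be sketched as below: regress each variable on the other, test the residuals for independence from the putative cause, and prefer the direction whose residuals look more independent. This is a simplified stand-in rather than the authors' implementation: the kernel ridge regression between discretised curves, the Gaussian kernel with a median-heuristic bandwidth, the regularisation value and the plain permutation hsic test are all assumptions.

```python
import numpy as np


def gram(z):
    """Gaussian Gram matrix on the rows of z with a median-heuristic bandwidth."""
    sq = np.sum(z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2 * z @ z.T, 0.0)
    positive = d2[d2 > 0]
    h2 = 0.5 * np.median(positive) if positive.size else 1.0
    return np.exp(-d2 / (2 * h2))


def hsic_pvalue(x, y, n_perm=300, rng=None):
    """Permutation p-value of the hsic statistic between the rows of x and y."""
    rng = np.random.default_rng(rng)
    n = x.shape[0]
    H = np.eye(n) - 1.0 / n
    Kc, Lc = H @ gram(x) @ H, H @ gram(y) @ H
    stat = np.sum(Kc * Lc)
    null = [np.sum(Kc * Lc[np.ix_(p, p)]) for p in (rng.permutation(n) for _ in range(n_perm))]
    return (1 + np.sum(np.asarray(null) >= stat)) / (1 + n_perm)


def krr_residuals(x, y, lam=1e-2):
    """Residuals of a kernel ridge regression of the curves y on the curves x."""
    n = x.shape[0]
    K = gram(x)
    fitted = K @ np.linalg.solve(K + n * lam * np.eye(n), y)
    return y - fitted


def resit_two_variables(x, y, lam=1e-2, rng=0):
    """Prefer the direction whose residuals are less dependent on the regressor."""
    p_xy = hsic_pvalue(x, krr_residuals(x, y, lam), rng=rng)  # hypothesis X -> Y
    p_yx = hsic_pvalue(y, krr_residuals(y, x, lam), rng=rng)  # hypothesis Y -> X
    return ("X -> Y" if p_xy >= p_yx else "Y -> X"), p_xy, p_yx
```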

Figure 4.

Accuracy of the regression-based causal discovery using kernel-based joint independence tests among residuals for (a) two and (b) three variables. In the case of two variables (a), we compare our kernel-based method to Granger causality and ccm. In the case of three variables (b), we compare it to multivariate Granger causality and to pcmci. Our method significantly outperforms both Granger causality and ccm in the bivariate setting, with just a few mistakes made for low sample numbers () and no mistakes for higher sample sizes. For three variables, our method is substantially more accurate than pcmci and Granger causality, with an average shd of at versus for pcmci and for Granger causality. The accuracy of the methods is measured in terms of the structural Hamming distance (shd).

Then, we evaluate how the regression-based approach performs in a system of three variables where data are generated according to random dags with three nodes that entail historical nonlinear dependence. Since ccm is commonly applied to bivariate problems only, we compare our method on three-variable systems to multivariate Granger causality [7] and to the PC algorithm with momentary conditional independence (pcmci) [49], in which each variable at each time point is represented as a node in a graph; from this graph we extract a directed graph over the variables. Figure 4b shows substantially improved performance (lower shd) of our regression-based algorithm with respect to both of these methods on three-variable systems. Details of all the methods are given in Appendix E.

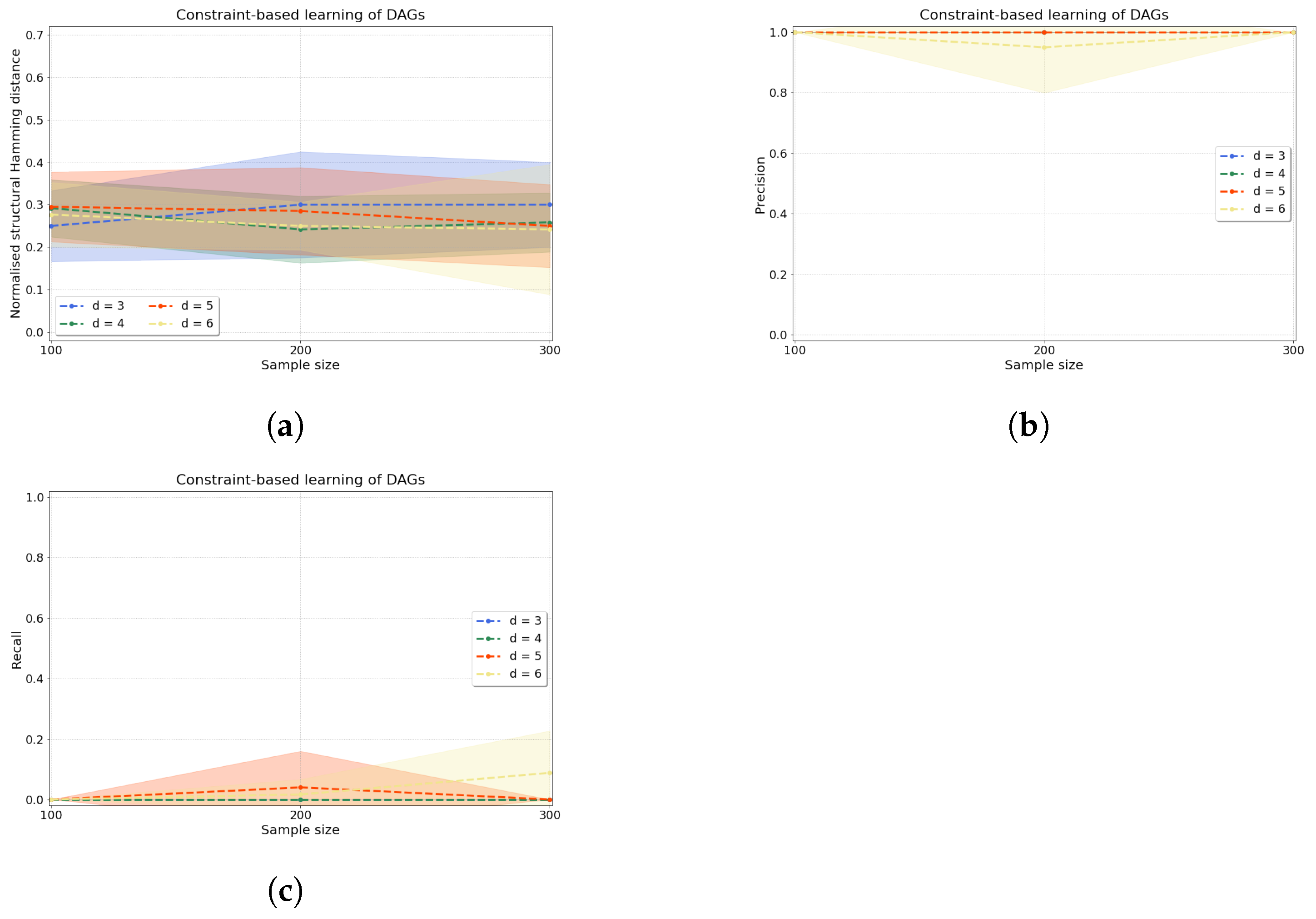

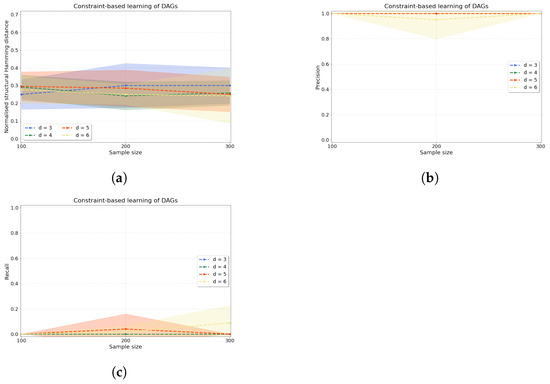

Constraint-based causal discovery. The accuracy of our constraint-based approach is evaluated by computing three metrics: normalised shd (shdnorm), precision, and recall (see Appendix D for definitions). Figure 5 shows the accuracy of our algorithm when applied to variables. The normalised shd in Figure 5a demonstrates consistent accuracy of the constraint-based causal structure learning method across sample sizes for all d. To complement this measure, we can jointly examine precision and recall in Figure 5b,c, where we find that the learnt edges are predominantly unoriented (low values of recall) but that the oriented edges found are indeed correctly oriented (high values of precision).

Figure 5.

Constraint-based causal structure learning experiments. From left to right, we compute (a) the normalised shd, (b) precision and (c) recall over variables and samples with as the dependence between any two variables in the data that are connected by an edge in the true dag.

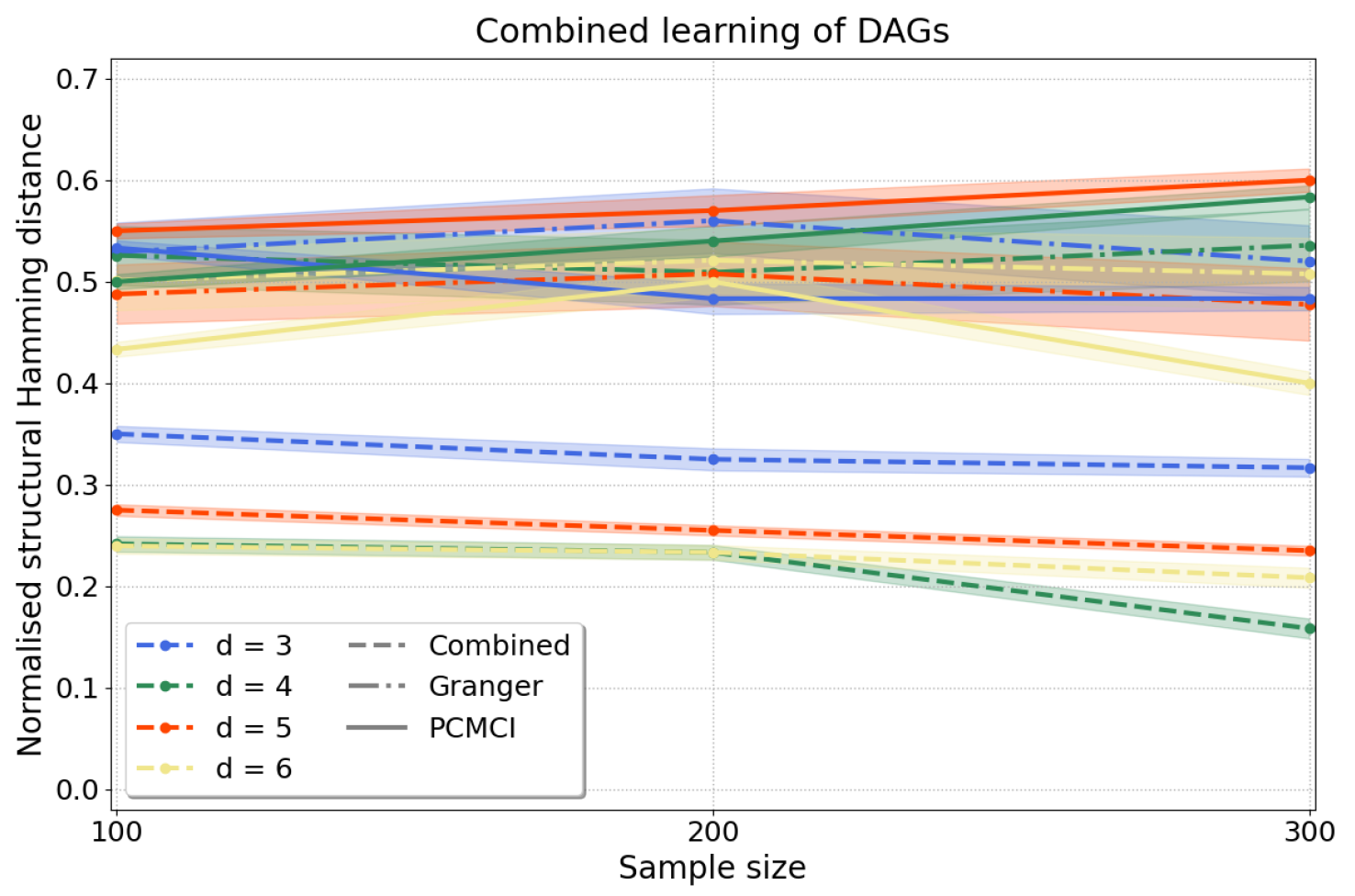

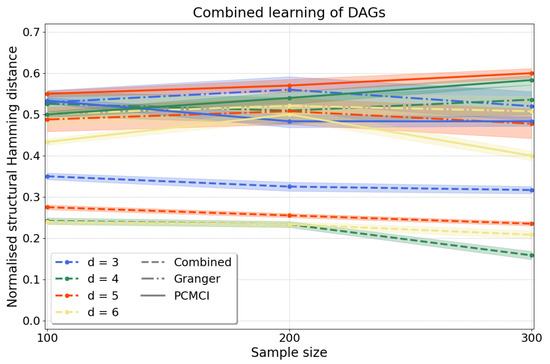

Combined approach. To scale up our causal discovery techniques more efficiently to larger graphs, it is possible to combine constraint- and regression-based causal learning to yield dags. In this “combined” approach, we start with a constraint-based step in which we learn cpdags by applying conditional independence tests to any two variables conditioned on any subset of the remaining variables, and we orient edges according to the algorithm in Appendix C, which finds v-structures, applies Meek’s orientation rules and returns the Markov equivalence class. The Markov equivalence class often contains undirected edges, but we can then take a second step using a regression-based approach, under a different set of assumptions, to orient these edges by applying resit. This two-step process yields a dag, i.e., a graph in which every edge is oriented, in a more scalable manner than applying regression-based causal discovery directly. We then measure the accuracy of our approach by computing the normalised shd (A2) as above. Figure 6 shows that our results for variables compare favourably to pcmci and to multivariate Granger causality, with consistently lower shd for all d.
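The second, regression-based step of the combined approach can be sketched as below, assuming that the cpdag is stored as an adjacency matrix in which an undirected edge has both entries set to 1. `direction_score(i, j)` is a hypothetical callable, e.g. the p-value of the residual independence test for the hypothesis i → j; this pairwise sketch does not itself re-check acyclicity, which a full implementation would need to enforce.

```python
import numpy as np


def orient_remaining_edges(cpdag, direction_score):
    """cpdag[i, j] = cpdag[j, i] = 1: undirected edge; cpdag[i, j] = 1 only: i -> j."""
    dag = np.array(cpdag, copy=True)  # leave the input cpdag untouched
    d = dag.shape[0]
    for i in range(d):
        for j in range(i + 1, d):
            if dag[i, j] and dag[j, i]:
                # Keep the orientation whose residuals look more independent.
                if direction_score(i, j) >= direction_score(j, i):
                    dag[j, i] = 0  # i -> j
                else:
                    dag[i, j] = 0  # j -> i
    return dag
```

Edges already oriented by v-structures and Meek's rules are left unchanged; only the undirected edges of the Markov equivalence class are passed to the regression-based score.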

Figure 6.

Causal structure learning with the “combined” approach, where we first apply the constraint-based method to find the Markov equivalence class, followed by the regression-based method to orient the undirected edges of the Markov equivalence class. We compute the normalised shd over variables and samples with as in Equation (13). Our results (dashed lines) have substantially lower normalised shd than those obtained from Granger causality (dash-dotted lines) and pcmci (solid lines), applied as described in Appendix E.

4.2.2. Real-World Data

To showcase the application of our methods to real data, we now return to the motivating example on socioeconomic indicators mentioned in the Introduction (Section 1). Sulemana and Kpienbaareh [2] tested for the causal direction between corruption and income inequality, as measured using the Control of Corruption index [9] and Gini coefficient [50], respectively. They applied Granger causality with Wald- tests, which statistically test the null hypothesis that an assumed explanatory variable does not have a (linear) influence on other considered variables. Each variable, corruption and income inequality, was tested as the candidate explanatory variable (i.e., the cause) in their bivariate relationship. Their findings showed that income inequality as the explanatory variable results in a p-value of , whereas corruption is more likely to be the cause, with a p-value of . Analysing the same data using ccm, which does not assume linearity, we also find the same cause–effect direction: of the sample pairs validate corruption as the cause, against of pairs which detect the opposite direction. However, our proposed regression-based kernel approach, which assumes neither linearity nor stationarity, yields the opposite result. Applying regression with subsequent independence test (resit), which rejects the null hypothesis that the residuals are independent of the regressor when the regression is performed in the false causal direction, we find that the causal dependence of corruption on income inequality is statistically more likely (p ) than the reverse direction (p).

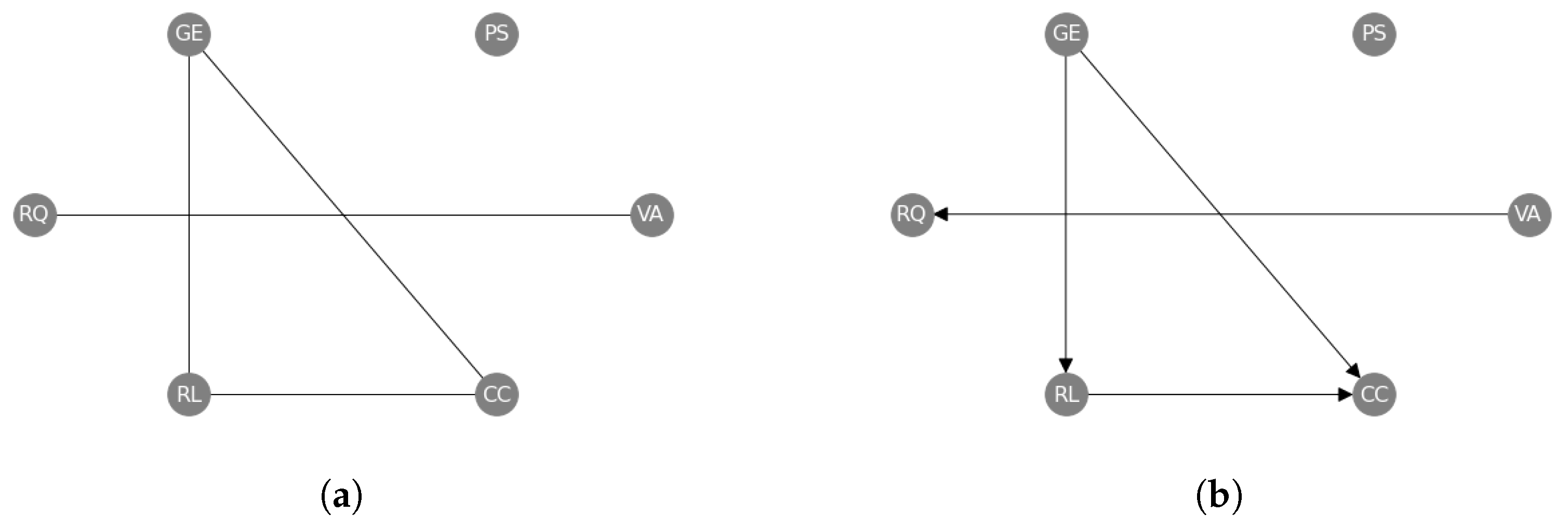

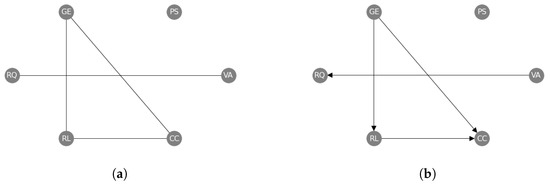

Going beyond pairwise interactions, we have also applied our causal structure learning method to the set of World Governance Indicators (wgis) [9]. The wgis are a collection of six variables, which have been measured in 182 countries over 25 years from 1996 to 2020 (see Appendix I). We view the time-series of the countries as independent functional samples of each variable (so that n = 182 and the functional dimension is time), and we apply our methods as described above to establish the causal structure amongst the six wgis. In this case, we use the “combined” approach by successively applying our constraint- and regression-based causal structure learning methods. We first learn an undirected causal skeleton from the wgis (Figure 7a), and we find a triangle between Government Effectiveness (GE), Rule of Law (RL) and Control of Corruption (CC), and a separate link between Voice and Accountability (VA) and Regulatory Quality (RQ). Then, we orient these edges (Figure 7b) and find that Government Effectiveness (GE) causes both Rule of Law (RL) and Control of Corruption (CC), and Rule of Law (RL) causes Control of Corruption (CC). We also find that Voice and Accountability (VA) causes Regulatory Quality (RQ).

Figure 7.

Undirected (a) and directed (b) causal networks on the World Governance Indicators dataset to evaluate the results of the constraint-based approach alone (a) and the subsequent regression-based approach (b). The labels are abbreviations of the official names: Voice and Accountability (VA), Political Stability (PS), Government Effectiveness (GE), Regulatory Quality (RQ), Rule of Law (RL), and Control of Corruption (CC) (see Appendix I).

5. Discussion and Conclusions

We present a causal structure learning framework for functional data that utilises kernel-based independence tests to extend the applicability of the widely used regression- and constraint-based approaches. The foundation of the framework originates from the functional data analysis literature, which interprets discretely measured observations of a random variable as finite-dimensional realisations of functions.

Using synthetic data, we have demonstrated that our regression-based approach outperforms existing methods such as Granger causality, ccm and pcmci when learning causal relationships between two and three variables. In the bivariate case, we have carried out a more detailed comparison to Granger causality and ccm to explore the robustness of our regression-based approach to nonlinearity and nonstationarity in the data (see Appendix H). We find that while the performance of Granger causality degrades when nonlinearity is introduced in the data, and that of ccm degrades when nonstationarity is introduced, our method remains robust under both nonlinearity and nonstationarity. In addition, as seen in Figure 4a, ccm can have difficulty in detecting strong unidirectional causal dependencies [8,51], where the cause variable X uniquely determines the state of the effect variable Y, inducing “generalised synchrony” [52]. In such cases, ccm can predict samples of Y from X as well as X from Y; hence, ccm cannot distinguish X → Y from Y → X. In contrast, our experiments (Section 4.2.1 and Appendix H) show that our regression-based method is unaffected by the presence of generalised synchrony in the data.

Further, we show that our conditional independence test, which is the cornerstone of the constraint-based causal discovery approach, achieves type-I error rates close to the acceptable false-positive rate and high test power (i.e., low type-II error rates), even as the number of variables in the conditional set increases. Shah et al. [48] rightly state that any conditional independence test can suffer from an undesirable test size or low test power in finite samples, and our method is no exception. However, Figure 3 demonstrates that the optimised regularisation strength counterbalances the “curse of dimensionality”. Indeed, while we would generally expect the test power to diminish with larger numbers of conditional variables, the optimisation of the regularisation strength offsets this reduction, resulting in no significant decrease in test power as the number of conditional variables grows. In principle, a suitable regularisation strength could also be chosen for large conditional sets, although our proposed method is computationally expensive and benefits substantially from large sample sizes.

Moreover, we demonstrate that constraint- and regression-based causal discovery methods can be combined to learn dags with a large number of nodes, which would otherwise be computationally very expensive when relying on regression-based methods alone. By comparing Figure 5a and Figure 6, we see, however, that the combined approach does not necessarily result in a lower shd than the constraint-based approach alone. This is because, after learning the Markov equivalence class through the constraint-based approach, we apply resit to orient the remaining undirected edges, and a mistake in the orientation of an edge adds 2 to the shd, whereas leaving the edge undirected only adds 1. When applied to real-world data, we have utilised this approach to learn a causal graph of the wgis, assuming that each relationship between two variables is unidirectionally identifiable, as suggested by several economic studies [11,12,13].

The presented work contributes to opening the field of causal discovery to functional data. Beyond the results presented here, we believe that more research needs to be conducted to (i) increase the efficiency of the independence tests, so that smaller samples suffice to achieve high test power; (ii) establish upper bounds on the regularisation strength with respect to the sizes of the sample and of the conditional set; (iii) reduce the computational cost of the conditional independence test (see Appendices F and G for a comparison to other methods); and (iv) establish connections to, and investigate differences from, causal structure learning approaches based on transfer entropy [53,54].

Author Contributions

Conceptualization: F.L., J.v.K. and J.P.; Methodology: F.L., J.v.K. and J.P.; Software: F.L.; Validation: F.L., B.S. and M.B.; Formal analysis: F.L.; Data curation: F.L.; Writing—original draft: F.L.; Writing—review and editing: J.v.K., B.S. and M.B.; Supervision: M.B. All authors have read and agreed to the published version of the manuscript.

Funding

F.L. and M.B. acknowledge support by EPSRC grant EP/N014529/1 funding the EPSRC Centre for Mathematics of Precision Healthcare at Imperial, and by the Nuffield Foundation under the project “The Future of Work and Well-being: The Pissarides Review”. J.v.K., J.P. and B.S. acknowledge support by the Tübingen AI Center (FKZ: 01IS18039B) and by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy—EXC number 2064/1—Project number 390727645.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

Proof.

All norms and inner products in this proof are taken with respect to the RKHS in which the normand and the arguments of the inner product reside.

Denote by the true Hilbert–Schmidt conditional independence criterion between the random variables and given , i.e.,

and denote by the empirical estimate of based on samples, i.e.,

where , and are the empirical estimates of the conditional mean embeddings , and obtained with the regularisation parameter .

Note that by the reverse triangle inequality, followed by the ordinary triangle inequality, we have

Here,

where we used the Cauchy–Schwarz inequality in the last inequality. We note that (Park and Muandet [18], Theorem 4.4) ensures that each of , and converges to 0 in probability, hence in probability with respect to the -norm.

Now we show that the empirical average of the empirical HSCIC converges to the population integral of the population HSCIC. Writing as a shorthand for , we see that

Here, the first term converges to 0 thanks to the uniform law of large numbers over the function class in which the empirical estimation of is carried out, and the second term converges to 0, as shown above.

Now, by (Park and Muandet [18], Theorem 5.4), since our kernel is characteristic, we have that is the identically zero function if—and only if—. Hence, if the alternative hypothesis holds, then we have

Observe that, for any , we have

where we used the Portmanteau theorem ([55], p. 6, Lemma 2.2) to get from the second line to the third, using the convergence of to shown above. Hence, our test is consistent. □

Appendix B. Additional Definitions for Causal Learning

Appendix B.1. d-Separation

If , and Z are three pairwise disjoint (sets of) nodes in a dag G, then Z d-separates X from Y in G if Z blocks every possible path between (any node in) X and (any node in) Y in G ([35], § 1.2.3). Then, we write . The symbol is commonly used to denote d-separation.
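d-separation can be checked mechanically with the classical moralisation criterion: X and Y are d-separated by Z if and only if they are disconnected in the moral graph of the ancestral set of X ∪ Y ∪ Z once Z is removed. The sketch below is a self-contained illustration of that criterion, not part of the authors' code; the dag is given as a hypothetical dictionary mapping each node to its parents.

```python
from itertools import combinations


def ancestral_set(parents, nodes):
    """The given nodes together with all of their ancestors."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(parents, xs, ys, zs):
    """True if zs d-separates xs from ys in the dag described by `parents`."""
    xs, ys, zs = set(xs), set(ys), set(zs)
    relevant = ancestral_set(parents, xs | ys | zs)
    # Moralise the ancestral subgraph: drop directions and marry co-parents.
    neighbours = {v: set() for v in relevant}
    for v in relevant:
        pa = list(parents.get(v, ()))
        for p in pa:
            neighbours[v].add(p)
            neighbours[p].add(v)
        for p, q in combinations(pa, 2):
            neighbours[p].add(q)
            neighbours[q].add(p)
    # Remove the conditioning set and search for a path from xs to ys.
    seen, stack = set(xs), list(xs)
    while stack:
        for w in neighbours[stack.pop()] - zs:
            if w in ys:
                return False
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return True
```

For instance, in the chain A → B → C, conditioning on B separates A from C, whereas in the collider A → C ← B, conditioning on C (or on a descendant of C) connects A and B.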

Appendix B.2. Faithfulness Assumption

If two random variables are (conditionally) independent in the observed distribution , then they are d-separated in the underlying causal graph G ([37], Definition 6.33).

Appendix B.3. Causal Markov Assumption

The distribution is Markov with respect to a dag G if for all disjoint (sets of) nodes , where denotes d-separation ([37], Definition 6.21).

Appendix C. Meek’s Orientation Rules, the SGS and the PC Algorithms

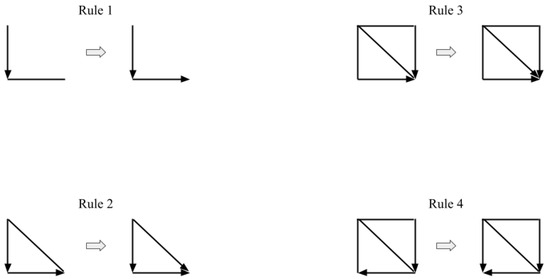

In Figure A1, a node is placed at each end of the edges. Rule 1 states that if a directed edge (here the vertical edge) points towards an undirected edge (the horizontal edge) whose other end is not adjacent to the tail of the directed edge, the undirected edge must point in the same direction as the adjacent directed edge, because the three vertices would otherwise form a v-structure that was not detected from the conditional independence tests. Rule 2 orients the undirected edge along the directed path formed by the two adjacent directed edges, because the opposite orientation would create a directed cycle and violate the acyclicity of the dag. Rules 3 and 4 avoid new v-structures that would come into existence by applying Rule 2 twice if the edge in question were oriented in the opposite direction. These new v-structures would be in the top-left corners of the rectangles.

Based on Meek’s orientation rules, the SGS algorithm tests each pair of variables as follows ([14], § 5.4.1):

- Form the complete undirected graph G from the set of vertices (or nodes) .

- For each pair of vertices X and Y, if there exists a subset S of such that X and Y are d-separated, i.e., conditionally independent (causal Markov condition), given S, remove the edge between X and Y from G.

- Let K be the undirected graph resulting from step 2. For each triple of vertices X, Y, and Z such that the pair X and Y and the pair Y and Z are each adjacent in K (written as ) but the pair X and Z are not adjacent in K, orient as if and only if there is no subset S containing Y that d-separates X and Z.

- Repeat the following steps until no more edges can be oriented:

  - (a) If , Y and Z are adjacent, X and Z are not adjacent, and there is no arrowhead of other vertices at Y, then orient as (Rule 1 of Meek’s orientation rules).

  - (b) If there is a directed path over some other vertices from X to Y, and an edge between X and Y, then orient as (Rule 2 of Meek’s orientation rules).
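Step 4 of the list above (Meek's Rules 1 and 2) can be sketched as a fixed-point loop. The representation (directed edges as ordered pairs, undirected edges as frozensets) is an assumption for illustration, and Rules 3 and 4 are omitted for brevity, so this is not a complete implementation of Meek's procedure.

```python
def apply_meek_rules_1_and_2(directed, undirected):
    """directed: set of (u, v) meaning u -> v; undirected: set of frozenset({u, v})."""
    directed, undirected = set(directed), set(undirected)

    def adjacent(u, v):
        return (u, v) in directed or (v, u) in directed or frozenset((u, v)) in undirected

    changed = True
    while changed:  # repeat until no more edges can be oriented
        changed = False
        for edge in list(undirected):
            u, v = tuple(edge)
            for a, b in ((u, v), (v, u)):
                # Rule 1: x -> a, a - b, x and b not adjacent  =>  a -> b.
                rule1 = any(head == a and x != b and not adjacent(x, b) for x, head in directed)
                # Rule 2: a -> w -> b and a - b  =>  a -> b (b -> a would close a cycle).
                rule2 = any((a, w) in directed and (w, b) in directed for _, w in directed)
                if rule1 or rule2:
                    undirected.discard(edge)
                    directed.add((a, b))
                    changed = True
                    break
    return directed, undirected
```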

Figure A1.

Meek rules to orient edges that remain in the graph after conditional independence tests and edges are oriented based on detected colliders. Proofs that edges cannot be oriented in the opposite direction without violating the acyclicity of the graph and found conditional independencies are given in Meek [45].

The large computational cost of applying the SGS algorithm (especially step 2) to data, due to the large number of combinations of variables that serve as potential conditional sets, opened the door for computationally more efficient methods. The PC algorithm ([14], § 5.4.2) is amongst the most popular alternatives and reduces the computational cost by searching for conditional independence in a structured manner, as opposed to step 2 of the SGS algorithm, which iterates over all possible conditional subsets for any two variables A and B. Starting again with a fully connected undirected graph, the PC algorithm begins by testing for marginal independence between any two variables A and B and deletes the edge connecting A and B, , if . Then, it proceeds with testing for and erases if one conditional variable C is found that makes A and B independent given C. Afterwards, the conditional set is extended to two variables, , and the edge is deleted if this conditional set makes A and B independent. The conditional set is extended, round after round, until no more conditional independencies are found, resulting in the sparse graph K. The saving comes from drawing the candidate conditional sets only from the current neighbours of A and B, which shrink as edges are deleted. Steps 3 and 4 are then pursued as in the SGS algorithm.
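The skeleton phase of the PC algorithm can be sketched as follows. `is_independent(a, b, cond)` is a hypothetical conditional independence oracle (in this paper's setting it would be the kernel-based test); the separating sets are recorded because step 3 uses them to detect v-structures. This is an illustrative sketch, not the implementation used for the experiments.

```python
from itertools import combinations


def pc_skeleton(nodes, is_independent):
    """Returns the neighbour sets of the learnt skeleton and the separating sets."""
    adjacency = {v: set(nodes) - {v} for v in nodes}  # fully connected start
    sepsets = {}
    size = 0
    # Continue while some node still has enough neighbours to form a set of this size.
    while any(len(adjacency[a]) - 1 >= size for a in nodes):
        for a in nodes:
            for b in sorted(adjacency[a]):
                # Candidate conditional sets come only from the current neighbours of a.
                for cond in combinations(sorted(adjacency[a] - {b}), size):
                    if is_independent(a, b, set(cond)):
                        adjacency[a].discard(b)
                        adjacency[b].discard(a)
                        sepsets[frozenset((a, b))] = set(cond)
                        break
        size += 1
    return adjacency, sepsets
```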

Appendix D. Definitions of Performance Metrics

Appendix D.1. shd

For two graphs, and , shd is the sum of the element-wise absolute differences between the adjacency matrices and of and , respectively:

Thus, when a learnt causal graph includes an edge oriented opposite to the corresponding edge in the true graph (i.e., is true), we add 2 to the shd score (“double penalty”). Note that others (e.g., [56]) only penalise by 1 for a learnt edge being directed opposite to the true edge.
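Under the convention that an adjacency matrix has a 1 at entry (i, j) for an edge i → j and 1s at both (i, j) and (j, i) for an undirected edge (an assumption about the encoding), the shd defined above, i.e., the sum over all i, j of the absolute entry-wise differences, reduces to a one-liner; the checks illustrate the double penalty for a reversed edge.

```python
import numpy as np


def shd(adj_learnt, adj_true):
    """Structural Hamming distance as the sum of absolute entry-wise differences."""
    return int(np.abs(np.asarray(adj_learnt) - np.asarray(adj_true)).sum())
```

With a true graph 0 → 1, a learnt reversed edge 1 → 0 scores 2, an undirected edge 0 – 1 scores 1, and a missing edge scores 1.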

Appendix D.2. Normalised shd

We also define the normalised shd, where we divide shd by the number of possible directed edges in a graph of size d, thus allowing comparison across graphs with different number of nodes:
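A sketch of the normalisation, assuming that "possible directed edges" means the d(d − 1) ordered pairs of distinct nodes (self-loops excluded); if the intended denominator differs, only the last line changes.

```python
import numpy as np


def normalised_shd(adj_learnt, adj_true):
    """shd divided by the number of ordered node pairs, d(d - 1) (assumed denominator)."""
    adj_learnt, adj_true = np.asarray(adj_learnt), np.asarray(adj_true)
    d = adj_true.shape[0]
    shd = np.abs(adj_learnt - adj_true).sum()
    return float(shd) / (d * (d - 1))
```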

Appendix D.3. Precision

Let the total number of edges in a learnt graph be the sum of truly and falsely oriented edges, (the true-positives and false-positives, respectively). The truly oriented edges are the edges that correspond to the zero elements in the matrix containing the element-wise differences between the learnt and the true . In contrast, the falsely oriented edges are the elements having a value of 2 (“double penalty”). Precision is the proportion of correctly oriented edges in a learnt graph in comparison to a true graph out of all learnt edges e in :
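One way to compute this precision from adjacency matrices is sketched below. It reads the definition as counting, among the learnt oriented edges (undirected cpdag edges are skipped), those whose orientation matches the true graph (true positives) and those that are reversed (false positives, the "double penalty" cases); learnt edges absent from the true graph are ignored. That reading is our assumption.

```python
import numpy as np


def orientation_precision(adj_learnt, adj_true):
    """Correctly oriented edges divided by correctly plus reversely oriented edges."""
    A, T = np.asarray(adj_learnt), np.asarray(adj_true)
    oriented = (A == 1) & (A.T == 0)  # learnt i -> j that is not undirected
    true_pos = int(np.sum(oriented & (T == 1) & (T.T == 0)))  # same orientation
    false_pos = int(np.sum(oriented & (T == 0) & (T.T == 1)))  # reversed orientation
    total = true_pos + false_pos
    return true_pos / total if total else float("nan")
```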

Appendix D.4. Recall

We distinguish between oriented and unoriented edges, and , respectively, which again sum to the total number of edges, . Recall is the fraction of edges in the cpdag that are oriented:
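With the same adjacency encoding (an undirected edge has 1s in both directions, an assumption), the fraction of cpdag edges that are oriented can be sketched as:

```python
import numpy as np


def orientation_recall(cpdag):
    """Oriented edges divided by all (oriented plus undirected) edges of the cpdag."""
    A = np.asarray(cpdag)
    # Count each undirected edge once via the upper triangle.
    undirected = int(np.sum(np.triu((A == 1) & (A.T == 1), k=1)))
    oriented = int(np.sum((A == 1) & (A.T == 0)))
    total = oriented + undirected
    return oriented / total if total else float("nan")
```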

Appendix E. Other Causal Methods Used for Comparison

Appendix E.1. Granger causality

We implement Granger causality through software provided by Seabold and Perktold [57] for and by Runge et al. [49] for , and define the optimal lag as the first local minimum in the mutual information function over measurement points. Given that Granger causality is applied to a pair of samples, , we iterate over every pair and determine the percentage of pairs that result in against . We follow a similar process for more than two variables.
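The lag rule above (the first local minimum of the mutual information between a series and its lagged copy) can be sketched as follows. The histogram estimator and the number of bins are assumptions, since the estimator is not specified here; the resulting lag could then be passed, for instance, as `maxlag` to `grangercausalitytests` in statsmodels.

```python
import numpy as np


def histogram_mutual_information(a, b, bins=16):
    """Plug-in mutual information estimate (in nats) from a 2-D histogram."""
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nonzero = pxy > 0
    return float(np.sum(pxy[nonzero] * np.log(pxy[nonzero] / (px @ py)[nonzero])))


def first_local_minimum(values):
    """Index of the first local minimum, or of the global minimum if there is none."""
    for k in range(1, len(values) - 1):
        if values[k] < values[k - 1] and values[k] <= values[k + 1]:
            return k
    return int(np.argmin(values))


def mi_lag(series, max_lag=20, bins=16):
    """Lag at the first local minimum of the lagged mutual information."""
    series = np.asarray(series, dtype=float)
    mi = [histogram_mutual_information(series[:-lag], series[lag:], bins)
          for lag in range(1, max_lag + 1)]
    return first_local_minimum(mi) + 1  # entry k corresponds to lag k + 1
```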

Appendix E.2. ccm

We implement ccm through software provided by Javier [58] and define the optimal lag as for Granger causality. The embedding dimensionality is determined by a false nearest neighbour method [59]. As with Granger causality, ccm is applied to a pair of samples, . We take the same summarising approach, iterating over every pair and determining the percentage of pairs that result in against .

Appendix E.3. pcmci

Runge et al. [49] proposed pcmci, a method that produces time-series graphs where each node represents a variable at a certain state in time. In our experiments, we estimate the maximum possible lag as the average of the first local minimum of mutual information of all considered data series. To test for the presence of an edge, we apply distance correlation-based independence tests [60] between the residuals of X and Y that remain after regressing out the influence of the remaining nodes in the graph through Gaussian processes. The distance correlation coefficients of the significant edges between two variables are summed up to measure the prevalence of a causal direction of edges connecting these two variables. The causal direction with the greater sum is considered to be the directed edge, and assessed against the edge between the same two variables in the true dag.

Appendix F. Computational Complexity of Our Proposed Methods

Appendix F.1. Regression-Based Causal Discovery

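One needs to iterate over all possible candidate dags to check whether each of them could be the causal graph that generated the observed data. The total number of candidate dags of a graph with d nodes is given by Robinson's recurrence:

$$a(d) = \sum_{k=1}^{d} (-1)^{k+1} \binom{d}{k}\, 2^{k(d-k)}\, a(d-k), \qquad a(0) = 1.$$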
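The super-exponential growth that makes an exhaustive regression-based search expensive can be checked numerically. The sketch below evaluates Robinson's recurrence for the number of labelled dags on d nodes (the standard count, given here as background rather than taken from the authors' code).

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def count_dags(d):
    """Number of labelled dags on d nodes via Robinson's recurrence."""
    if d == 0:
        return 1
    return sum((-1) ** (k + 1) * comb(d, k) * 2 ** (k * (d - k)) * count_dags(d - k)
               for k in range(1, d + 1))
```

For example, `count_dags(3)` is 25 while `count_dags(6)` is 3781503, which is consistent with Table A1 reporting regression-based running times only up to d = 3.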

Appendix F.2. Constraint-Based Approach

One needs to exhaustively test for conditional independence between any two nodes of the graph given any possible subset of the remaining nodes. Including the empty conditional set, this results in $2^{d-2}$ conditional independence tests for every pair of nodes in the graph. Then, the total number of conditional independence tests is:

$$\binom{d}{2}\, 2^{d-2} = \frac{d(d-1)}{2}\, 2^{d-2}.$$
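The count can be cross-checked by enumerating the (pair, conditional set) combinations directly. The enumeration below is an illustrative sketch; its closed form follows from choosing one of the C(d, 2) pairs and then any of the 2^(d−2) subsets of the remaining d − 2 nodes.

```python
from itertools import combinations
from math import comb


def enumerate_ci_tests(nodes):
    """Yield (a, b, conditional_set) for every pair and every subset of the other nodes."""
    nodes = list(nodes)
    for a, b in combinations(nodes, 2):
        rest = [v for v in nodes if v not in (a, b)]
        for size in range(len(rest) + 1):  # size 0 is the empty conditional set
            for cond in combinations(rest, size):
                yield a, b, cond


def count_ci_tests(d):
    """Closed form: C(d, 2) * 2^(d - 2)."""
    return comb(d, 2) * 2 ** (d - 2)
```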

Appendix G. Comparison of Running Times of Our Proposed Method and the Other Causal Methods

Table A1 shows the running times of the proposed and comparison methods for synthetic data in Section 4. Furthermore, for the bivariate real-world socioeconomic dataset of corruption and income inequality in Section 4.2.2, Granger causality completes the computation in 731 ms, ccm in 537 ms, and the regression-based approach in 19.2 s (according to our Python implementations).

Table A1.

Running times for one trial with 100 samples for our three proposed methods (regression, constraint, combined) and the three comparison methods (Granger, ccm, pcmci) over various graph sizes (number of variables ). The dash “–” implies that the method was not applied in our experiments in Section 4.

| d | Regression-Based | Constraint-Based | Combined | Granger Causality | CCM | PCMCI |
|---|---|---|---|---|---|---|
| 2 | 1 min 57 s | – | – | 998 ms | 1.57 s | – |
| 3 | 30 min 35 s | 1 min 10 s | 3 min 13 s | 43 s | – | 45.5 s |
| 4 | – | 8 min 10 s | 8 min 1 s | 1 min 23 s | – | 1 min 29 s |
| 5 | – | 34 min 33 s | 39 min 8 s | 2 min 44 s | – | 2 min 50 s |
| 6 | – | 1 h 31 min 36 s | 1 h 43 min 54 s | 3 min 20 s | – | 3 min 27 s |

Appendix H. Influence of Nonlinearity and Nonstationarity on Granger Causality and ccm

Appendix H.1. Nonlinearity and Granger Causality:

To examine the increased robustness of our proposed algorithm to nonlinearity, we define a bivariate system with X as the cause and Y as the effect under the assumption of causal sufficiency, i.e., . We consider n functional samples of X defined over the interval generated as described in Equation (10), and the samples of Y defined over by:

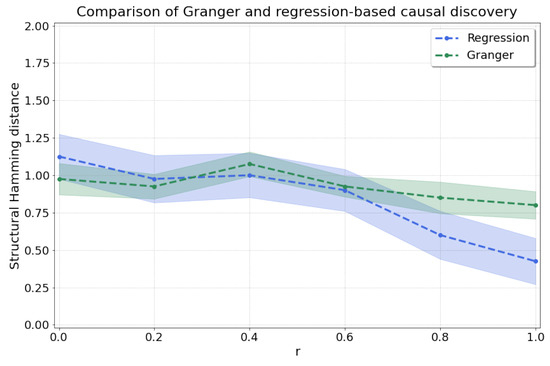

By sweeping , we go from linear dependence between and at to nonlinear dependence at , where we recover the model in Equation (11) with . We thus expect high accuracy from Granger causality at , where there is stationarity and linearity [6]. As r increases, we expect our regression-based approach to achieve more accurate results, whereas the results of Granger causality should degrade because its assumption of linearity is no longer met. As expected, Figure A2 shows the improved prediction accuracy (lower shd) of our regression-based approach as r is increased, reaching shd of (versus for Granger causality) at .

Figure A2.

Comparison between Granger causality and our regression-based method for and various values for r which influence the level of nonlinearity in the dependence from X to Y.

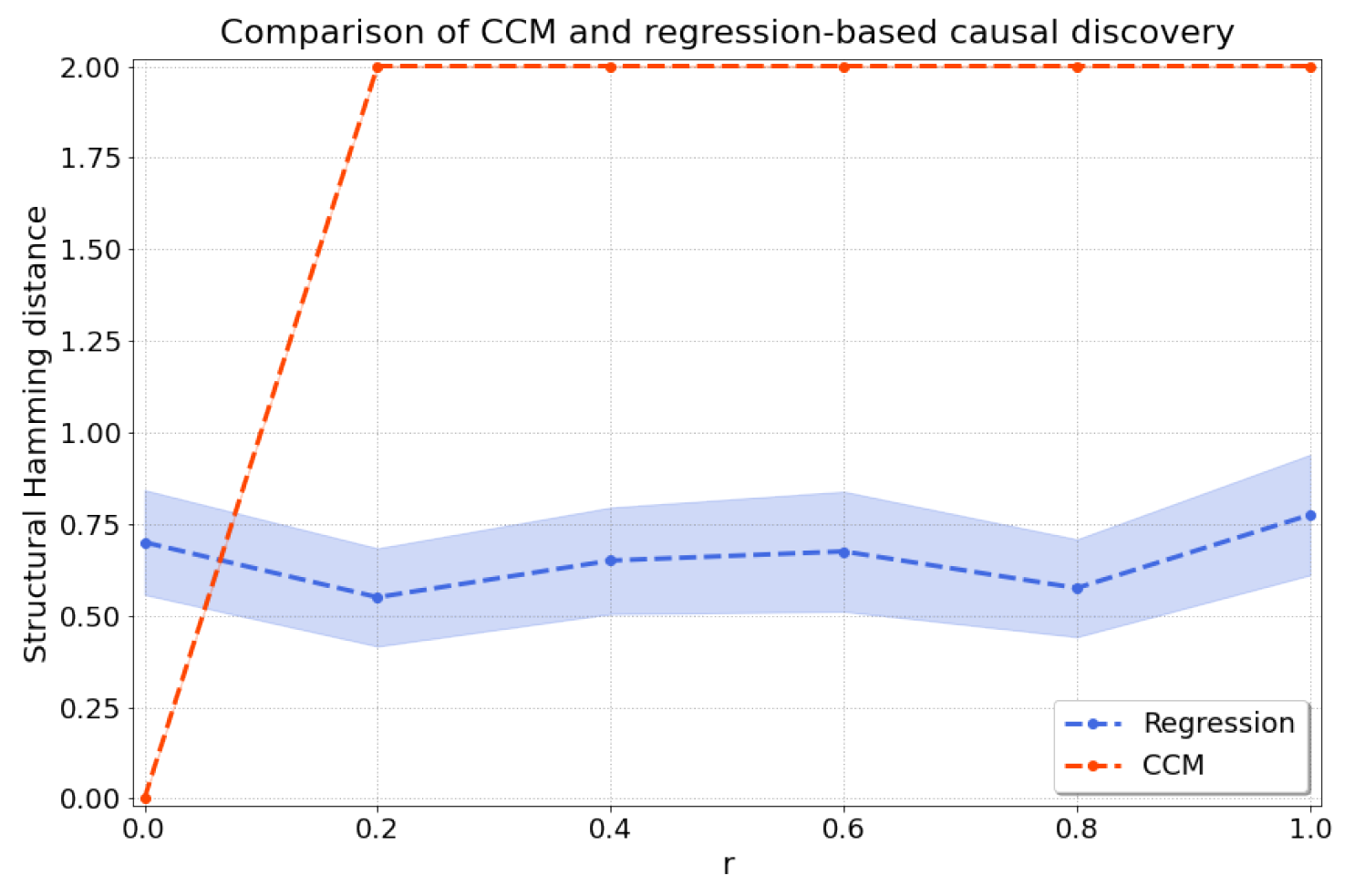

Appendix H.2. Nonstationarity and ccm:

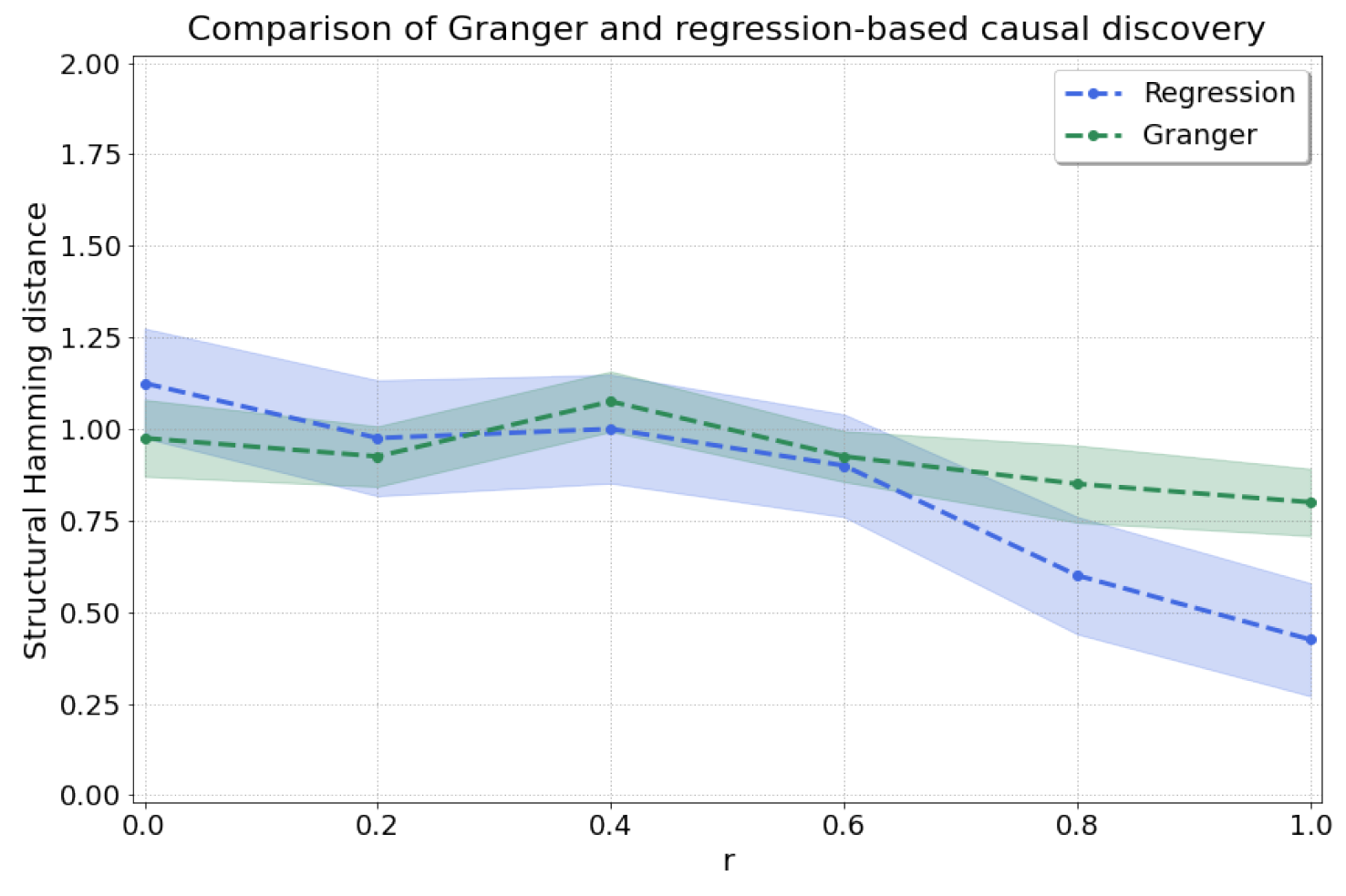

To evaluate the importance of nonstationarity in causal learning, we compare our regression-based method against ccm [8], as a nonstationary component is introduced. We generate samples from a bivariate coupled nonlinear logistic map as defined in Sugihara et al. [8] such that X influences Y more strongly than vice versa and we add nonstationary trends and :

where with , and similarly for . The factor controls the weight of the nonstationarity of the time-series as it is increased.
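A minimal sketch of such data is given below. The coupled logistic map has the form used by Sugihara et al. [8]; the growth rates and coupling strengths (r_x = 3.8, r_y = 3.5, β_xy = 0.02, β_yx = 0.1, chosen so that X drives Y more strongly than the reverse), the initial values and the linear trend scaled by the factor `r` are illustrative assumptions, since the exact trend terms are not reproduced here.

```python
import numpy as np


def coupled_logistic_with_trend(n_steps, r, rx=3.8, ry=3.5, beta_xy=0.02, beta_yx=0.1,
                                x0=0.4, y0=0.2):
    """Coupled logistic map plus a hypothetical linear trend of weight r."""
    x = np.empty(n_steps)
    y = np.empty(n_steps)
    x[0], y[0] = x0, y0
    for t in range(n_steps - 1):
        # beta_yx > beta_xy: X influences Y more strongly than Y influences X.
        x[t + 1] = x[t] * (rx - rx * x[t] - beta_xy * y[t])
        y[t + 1] = y[t] * (ry - ry * y[t] - beta_yx * x[t])
    trend = r * np.linspace(0.0, 1.0, n_steps)  # r = 0 adds no trend
    return x + trend, y + trend
```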

As shown in Figure A3, ccm captures the true causal direction only for , as expected, and loses its detection power as soon as the data become nonstationary (). In contrast, the regression-based method maintains its detection power as the nonstationarity is increased.

Figure A3.

Comparison between ccm and our regression-based method for and various values for r which influence the level of nonstationarity in the data for X and Y.

Appendix I. World Governance Indicators

In Figure 7, we use the official names, as mentioned in Table A2, where the descriptions are taken from https://info.worldbank.org/governance/wgi/ (accessed on 7 June 2023). The dataset consists of six variables (normalised to a range from 0 to 100), each of which is a summary of multiple indicators measured over 25 years from 1996 to 2020 in 182 countries, as described in (Kaufmann et al. [9], §4).

Table A2.

Abbreviations, official names, and descriptions of the variables in the World Governance Indicators dataset.

| Abbreviation | Official Name | Description |
|---|---|---|
| VA | Voice and Accountability | Voice and accountability captures perceptions of the extent to which a country’s citizens are able to participate in selecting their government, as well as freedom of expression, freedom of association, and a free media. |
| PS | Political Stability and No Violence | Political Stability and Absence of Violence/Terrorism measures perceptions of the likelihood of political instability and/or politically-motivated violence, including terrorism. |
| GE | Government Effectiveness | Government effectiveness captures perceptions of the quality of public services, the quality of the civil service and the degree of its independence from political pressures, the quality of policy formulation and implementation, and the credibility of the government’s commitment to such policies. |
| RQ | Regulatory Quality | Regulatory quality captures perceptions of the ability of the government to formulate and implement sound policies and regulations that permit and promote private sector development. |
| RL | Rule of Law | Rule of law captures perceptions of the extent to which agents have confidence in and abide by the rules of society, and in particular the quality of contract enforcement, property rights, the police, and the courts, as well as the likelihood of crime and violence. |
| CC | Control of Corruption | Control of corruption captures perceptions of the extent to which public power is exercised for private gain, including both petty and grand forms of corruption, as well as “capture” of the state by elites and private interests. |
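To make the structure of these data concrete, the sketch below treats each of the six variables as a functional variable with one curve per country (182 curves on a 25-point grid over 1996 to 2020) and computes a biased HSIC statistic with a permutation p-value between two such variables. The Gaussian kernel on discretised L2 distances, the median-heuristic bandwidth, and all function names are assumptions for illustration; they are not necessarily the kernels or the test used in the paper.

```python
import numpy as np


def gaussian_gram(curves, bandwidth=None):
    """Gaussian kernel on discretised L2 distances between curves.

    `curves` has shape (n_samples, n_grid); each row is one curve observed
    on a common, equally spaced grid, so the mean squared difference is
    proportional to the squared L2 distance.
    """
    sq_dists = ((curves[:, None, :] - curves[None, :, :]) ** 2).mean(axis=-1)
    if bandwidth is None:
        # median heuristic on the nonzero pairwise distances
        bandwidth = np.sqrt(np.median(sq_dists[sq_dists > 0]))
    return np.exp(-sq_dists / (2 * bandwidth ** 2))


def hsic_biased(K, L):
    """Biased (V-statistic) HSIC estimate: trace(K H L H) / n^2."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n   # centring matrix
    return np.trace(K @ H @ L @ H) / n ** 2


def hsic_permutation_test(X, Y, n_perm=200, seed=0):
    """Permutation test of independence between two functional variables."""
    rng = np.random.default_rng(seed)
    K, L = gaussian_gram(X), gaussian_gram(Y)
    stat = hsic_biased(K, L)
    null = np.empty(n_perm)
    for i in range(n_perm):
        p = rng.permutation(L.shape[0])   # break the pairing of samples
        null[i] = hsic_biased(K, L[np.ix_(p, p)])
    p_value = (1 + np.sum(null >= stat)) / (1 + n_perm)
    return stat, p_value
```

With two arrays of shape (182, 25), for instance hypothetical `va_curves` and `cc_curves`, `hsic_permutation_test(va_curves, cc_curves)` returns the statistic and its permutation p-value.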

References

1. Runge, J.; Bathiany, S.; Bollt, E.; Camps-Valls, G.; Coumou, D.; Deyle, E.; Glymour, C.; Kretschmer, M.; Mahecha, M.D.; Muñoz-Marí, J.; et al. Inferring causation from time series in Earth system sciences. Nat. Commun. 2019, 10, 2553.

2. Sulemana, I.; Kpienbaareh, D. An empirical examination of the relationship between income inequality and corruption in Africa. Econ. Anal. Policy 2018, 60, 27–42.

3. Finkle, J.D.; Wu, J.J.; Bagheri, N. Windowed Granger causal inference strategy improves discovery of gene regulatory networks. Proc. Natl. Acad. Sci. USA 2018, 115, 2252–2257.

4. Glymour, C.; Zhang, K.; Spirtes, P. Review of causal discovery methods based on graphical models. Front. Genet. 2019, 10, 524.

5. Ramsay, J.O. Functional Data Analysis, 2nd ed.; Springer Series in Statistics; Springer: New York, NY, USA, 2005.

6. Granger, C.W. Investigating causal relations by econometric models and cross-spectral methods. Econom. J. Econom. Soc. 1969, 37, 424–438.

7. Geweke, J. Measurement of linear dependence and feedback between multiple time series. J. Am. Stat. Assoc. 1982, 77, 304–313.

8. Sugihara, G.; May, R.; Ye, H.; Hsieh, C.h.; Deyle, E.; Fogarty, M.; Munch, S. Detecting causality in complex ecosystems. Science 2012, 338, 496–500.

9. Kaufmann, D.; Kraay, A.; Mastruzzi, M. The worldwide governance indicators: Methodology and analytical issues. Hague J. Rule Law 2011, 3, 220–246.

10. World Bank. Gini Index. 2022. Available online: https://data.worldbank.org/indicator/SI.POV.GINI (accessed on 7 June 2023).

11. Jong-Sung, Y.; Khagram, S. A comparative study of inequality and corruption. Am. Sociol. Rev. 2005, 70, 136–157.

12. Alesina, A.; Angeletos, G.M. Corruption, inequality, and fairness. J. Monet. Econ. 2005, 52, 1227–1244.

13. Dobson, S.; Ramlogan-Dobson, C. Is there a trade-off between income inequality and corruption? Evidence from Latin America. Econ. Lett. 2010, 107, 102–104.

14. Spirtes, P.; Glymour, C.N.; Scheines, R.; Heckerman, D. Causation, Prediction, and Search; MIT Press: Cambridge, MA, USA, 2000.

15. Peters, J.; Mooij, J.M.; Janzing, D.; Schölkopf, B. Causal discovery with continuous additive noise models. J. Mach. Learn. Res. 2014, 15, 2009–2053.

16. Muandet, K.; Fukumizu, K.; Sriperumbudur, B.; Schölkopf, B. Kernel mean embedding of distributions: A review and beyond. Found. Trends Mach. Learn. 2017, 10, 1–141.

17. Wynne, G.; Duncan, A.B. A kernel two-sample test for functional data. J. Mach. Learn. Res. 2022, 23, 1–51.

18. Park, J.; Muandet, K. A measure-theoretic approach to kernel conditional mean embeddings. Adv. Neural Inf. Process. Syst. 2020, 33, 21247–21259.

19. Berrett, T.B.; Wang, Y.; Barber, R.F.; Samworth, R.J. The conditional permutation test for independence while controlling for confounders. J. R. Stat. Soc. Ser. B Stat. Methodol. 2020, 82, 175–197.

20. Malfait, N.; Ramsay, J.O. The historical functional linear model. Can. J. Stat. 2003, 31, 115–128.

21. Pfister, N.; Bühlmann, P.; Schölkopf, B.; Peters, J. Kernel-based tests for joint independence. J. R. Stat. Soc. Ser. B Stat. Methodol. 2018, 80, 5–31.

22. Ramos-Carreño, C.; Suárez, A.; Torrecilla, J.L.; Carbajo Berrocal, M.; Marcos Manchón, P.; Pérez Manso, P.; Hernando Bernabé, A.; García Fernández, D.; Hong, Y.; Rodríguez-Ponga Eyriès, P.M.; et al. GAA-UAM/scikit-fda: Version 0.7.1. 2022. Available online: https://zenodo.org/records/5903557 (accessed on 7 June 2023).

23. Squires, C. Causaldag: Creation, Manipulation, and Learning of Causal Models. 2018. Available online: https://github.com/uhlerlab/causaldag (accessed on 7 June 2023).

24. Girard, O.; Lattier, G.; Micallef, J.P.; Millet, G.P. Changes in exercise characteristics, maximal voluntary contraction, and explosive strength during prolonged tennis playing. Br. J. Sport. Med. 2006, 40, 521–526.

25. Zhu, Y.; Kuhn, T.; Mayo, P.; Hinds, W.C. Comparison of daytime and nighttime concentration profiles and size distributions of ultrafine particles near a major highway. Environ. Sci. Technol. 2006, 40, 2531–2536.

26. Ramsay, J.; Hooker, G. Dynamic Data Analysis; Springer: Berlin/Heidelberg, Germany, 2017.

27. Fukumizu, K.; Gretton, A.; Sun, X.; Schölkopf, B. Kernel measures of conditional dependence. In Proceedings of the 20th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007.

28. Gretton, A.; Fukumizu, K.; Teo, C.H.; Song, L.; Schölkopf, B.; Smola, A.J. A kernel statistical test of independence. In Proceedings of the 20th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; pp. 585–592.

29. Zhang, K.; Peters, J.; Janzing, D.; Schölkopf, B. Kernel-based conditional independence test and application in causal discovery. arXiv 2012, arXiv:1202.3775.

30. Lai, T.; Zhang, Z.; Wang, Y.; Kong, L. Testing independence of functional variables by angle covariance. J. Multivar. Anal. 2021, 182, 104711.

31. Górecki, T.; Krzyśko, M.; Wołyński, W. Independence test and canonical correlation analysis based on the alignment between kernel matrices for multivariate functional data. Artif. Intell. Rev. 2020, 53, 475–499.

32. Doran, G.; Muandet, K.; Zhang, K.; Schölkopf, B. A Permutation-Based Kernel Conditional Independence Test. In Proceedings of the Thirtieth Conference on Uncertainty in Artificial Intelligence, Quebec City, QC, Canada, 23–27 July 2014; pp. 132–141.

33. Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Schölkopf, B.; Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 2012, 13, 723–773.

34. Lee, S.; Honavar, V.G. Self-discrepancy conditional independence test. In Proceedings of the 33rd Conference on Uncertainty in Artificial Intelligence, UAI 2017, Sydney, Australia, 11–15 August 2017; Volume 33.

35. Pearl, J. Causality; Cambridge University Press: Cambridge, UK, 2009.

36. Mooij, J.M.; Peters, J.; Janzing, D.; Zscheischler, J.; Schölkopf, B. Distinguishing cause from effect using observational data: Methods and benchmarks. J. Mach. Learn. Res. 2016, 17, 1103–1204.

37. Peters, J.; Janzing, D.; Schölkopf, B. Elements of Causal Inference; The MIT Press: Cambridge, MA, USA, 2017.

38. Schölkopf, B.; von Kügelgen, J. From statistical to causal learning. arXiv 2022, arXiv:2204.00607.

39. Squires, C.; Uhler, C. Causal structure learning: A combinatorial perspective. Found. Comput. Math. 2023, 23, 1781–1815.

40. Vowels, M.J.; Camgoz, N.C.; Bowden, R. D’ya like DAGs? A survey on structure learning and causal discovery. ACM Comput. Surv. 2022, 55, 1–36.

41. Chickering, D.M. Optimal structure identification with greedy search. J. Mach. Learn. Res. 2002, 3, 507–554.

42. Shimizu, S.; Hoyer, P.O.; Hyvärinen, A.; Kerminen, A.; Jordan, M. A linear non-Gaussian acyclic model for causal discovery. J. Mach. Learn. Res. 2006, 7, 2003–2030.

43. Hoyer, P.; Janzing, D.; Mooij, J.M.; Peters, J.; Schölkopf, B. Nonlinear causal discovery with additive noise models. In Proceedings of the 21st International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–10 December 2008.

44. Szabó, Z.; Sriperumbudur, B.K. Characteristic and Universal Tensor Product Kernels. J. Mach. Learn. Res. 2017, 18, 1–29.

45. Meek, C. Complete Orientation Rules for Patterns; Carnegie Mellon, Department of Philosophy: Pittsburgh, PA, USA, 1995.

46. Peters, J.; Mooij, J.; Janzing, D.; Schölkopf, B. Identifiability of causal graphs using functional models. arXiv 2012, arXiv:1202.3757.

47. Bühlmann, P.; Peters, J.; Ernest, J. CAM: Causal additive models, high-dimensional order search and penalized regression. Ann. Statist. 2014, 42, 2526–2556.

48. Shah, R.D.; Peters, J. The hardness of conditional independence testing and the generalised covariance measure. Ann. Stat. 2020, 48, 1514–1538.

49. Runge, J.; Nowack, P.; Kretschmer, M.; Flaxman, S.; Sejdinovic, D. Detecting and quantifying causal associations in large nonlinear time series datasets. Sci. Adv. 2019, 5, eaau4996.

50. Gini, C. On the measure of concentration with special reference to income and statistics. Colo. Coll. Publ. Gen. Ser. 1936, 208, 73–79.

51. Ye, H.; Deyle, E.R.; Gilarranz, L.J.; Sugihara, G. Distinguishing time-delayed causal interactions using convergent cross mapping. Sci. Rep. 2015, 5, 14750.

52. Rulkov, N.F.; Sushchik, M.M.; Tsimring, L.S.; Abarbanel, H.D. Generalized synchronization of chaos in directionally coupled chaotic systems. Phys. Rev. E 1995, 51, 980.

53. Barnett, L.; Barrett, A.B.; Seth, A.K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 2009, 103, 238701.

54. Porta, A.; Faes, L.; Nollo, G.; Bari, V.; Marchi, A.; De Maria, B.; Takahashi, A.C.; Catai, A.M. Conditional self-entropy and conditional joint transfer entropy in heart period variability during graded postural challenge. PLoS ONE 2015, 10, e0132851.

55. Van der Vaart, A.W. Asymptotic Statistics; Cambridge University Press: Cambridge, UK, 2000; Volume 3.

56. Peters, J.; Bühlmann, P. Structural intervention distance for evaluating causal graphs. Neural Comput. 2015, 27, 771–799.

57. Seabold, S.; Perktold, J. Statsmodels: Econometric and statistical modeling with Python. In Proceedings of the 9th Python in Science Conference (SCIPY 2010), Austin, TX, USA, 28–30 June 2010.

58. Javier, P.J.E. Causal-ccm: A Python Implementation of Convergent Cross Mapping. 2021. Available online: https://github.com/PrinceJavier/causal_ccm (accessed on 12 July 2021).

59. Munch, E.; Khasawneh, F.; Myers, A.; Yesilli, M.; Tymochko, S.; Barnes, D.; Guzel, I.; Chumley, M. Teaspoon: Topological Signal Processing in Python. 2022. Available online: https://teaspoontda.github.io/teaspoon/ (accessed on 12 July 2022).

60. Székely, G.J.; Rizzo, M.L.; Bakirov, N.K. Measuring and testing dependence by correlation of distances. Ann. Statist. 2007, 35, 2769–2794.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).