Abstract

We introduce a quantum version of the statistical complexity measure, in the context of quantum information theory, and use it as a signaling function of quantum order–disorder transitions. We discuss the possibility for such transitions to characterize interesting physical phenomena, such as quantum phase transitions or abrupt variations in correlation distributions. We apply our measure to two exactly solvable Hamiltonian models: the 1D Quantum Ising Model (in the single-particle reduced state) and the Heisenberg XXZ spin-1/2 chain (in the two-particle reduced state). We analyze its behavior across quantum phase transitions for finite system sizes, as well as in the thermodynamic limit by using the Bethe Ansatz technique.

1. Introduction

Let us consider a physical, chemical, or biological process such as, for example, the change in the temperature of water, the mixing of two solutions, or the formation of a neural network. It is intuitive to believe that if one could classify all the possible configurations of such systems, described by their ordering and disordering patterns, it would be possible to characterize and control them. For example, when the temperature of water changes, knowing the pattern of ordering and disordering of its molecular structure would make it possible to characterize and completely control its phase transitions.

In information theory, the ability to identify certain patterns of order and disorder of probability distributions enables us to control the creation, transmission, and measurement of information. In this way, the characterization and quantification of the complexity contained in physical systems and their constituent parts is a crucial goal for information theory [1]. One point of consensus in the literature about complexity is that no formal definition of this term exists. Intuition suggests that systems which can be described as “not complex” are readily comprehended: they can be described concisely by means of few parameters or variables, and their information content is low. Complexity quantifiers must satisfy some properties: (i) they must assign a minimum value (possibly zero) to the opposite extremes of order and disorder; (ii) they should be sensitive to transitions of order–disorder patterns; and (iii) they must be computable. There are many ways to define measures of the degree of complexity of physical systems. Among such definitions, we can mention measures based on data compression algorithms of finite size sequences [2,3,4], Kolmogorov or Chaitin measures based on the size of the smallest algorithm that can reproduce a particular type of pattern [5,6], and measures concerning classical information theory [7,8,9,10,11,12,13]. Based on recent progress in defining a general canonical divergence within Information Geometry, a canonical divergence measure was presented with the objective of quantifying complexity for both classical and quantum systems. In the classical realm, it was proven that this divergence coincides with the classical Kullback–Leibler divergence, and in the quantum domain it reduces to the quantum relative entropy [14,15].

The statistical complexity relates the simplicity of a probability distribution to the amount of resources needed to store information [16,17]. Similarly, in the quantum realm, the complexity of a given density matrix could be translated as the resource needed to create, operate, or measure the quantum state of the system [18,19,20]. In addition, the quantum information meaning of complexity could play an important role in the quantification of transitions of order and disorder patterns, which could indicate some quantum physical phenomenon, such as a quantum phase transition.

Regarding the complexity contained in systems, some of the simplest models in physics are the ideal gas and the perfect crystal. In an ideal gas model, the system can be found with the same probability in any of the available micro-states, therefore each state contributes equally to the same amount of information. On the other hand, in a perfect crystal, the symmetry rules restrict the accessible states of the system to only one very symmetric state. These simple models are extreme cases of minimum complexity, in a scale of order and disorder, therefore, there might exist some intermediate state which contains a maximum complexity value in that scale [21].

The main goal of this work is to introduce a quantum version of the statistical complexity measure, based on the physical meaning of the characterization and quantification of transitions between order–disorder patterns of quantum systems. As a by-product, this measure could be applied in the study of quantum phase transitions. Physical properties of systems across a quantum phase transition are dramatically altered, and it is therefore interesting to understand how the complexity of a system behaves under such transitions. In our analysis, we studied the single-particle reduced state of the parametric 1D Ising Model and the two-particle reduced state of the Heisenberg XXZ spin-1/2 model.

The manuscript is organized as follows: In Section 2, we introduce the Statistical Measure of Complexity defined by López-Ruiz et al. in [21], and in Section 3, we introduce a quantum counterpart of this measure: the Quantum Statistical Complexity Measure. We present some properties of this measure (in Section 3.2), and also exhibit a closed expression of this measure for one qubit, written in the Bloch basis (Section 4.1). We also discuss two interesting examples and applications. The first is the 1D Quantum Ising Model (Section 4.2), in which we compute the Quantum Statistical Complexity Measure for the one-qubit state reduced from N spins, in the thermodynamic limit, with the objective of determining the quantum phase transition point. The second is the Heisenberg XXZ spin-1/2 model (Section 4.3), for which we determine the first-order quantum transition point and the continuous quantum phase transition by means of the Quantum Statistical Complexity Measure of the two-qubit reduced state for nearest neighbors, in the thermodynamic limit. Finally, we give concluding remarks in Section 5.

2. Classical Statistical Complexity Measure—CSCM

Consider a system possessing N accessible states $\{x_i\}_{i=1}^{N}$, when observed on a particular scale, with each state having an intrinsic probability given by $\vec{p} = \{p_i\}_{i=1}^{N}$. As discussed before, the candidate function to quantify the complexity of a probability distribution associated with a physical system must attribute zero value to systems with the maximum degree of order, that is, to pure distributions ($p_i = 1$ for a single $i$ and $p_j = 0$ for all $j \neq i$), and must also assign zero to disordered systems, which are characterized by an independent and identically distributed (i.i.d.) vector: $I_i = 1/N$, for all $i = 1, \dots, N$. Let us address the case of ordered (Section 2.1) and disordered (Section 2.2) systems separately.

2.1. Degree of Order

A physical system possessing the maximum degree of order can be regarded as a system with a symmetry of all of its elements. The probability distribution that describes such systems is best represented by a pure vector, which places the system as having only one possible configuration. Physically, this is the case for a gas at zero Kelvin temperature, or a perfect crystal where the symmetry rules restrict the accessible state to a very symmetric one. In order to quantify the degree of order of a given system, the function must assign a maximum value to pure probability distributions, and attribute zero to equiprobable configurations. A function capable of quantifying such a degree of order is the $\ell_1$-distance between the probability distribution and the independent and identically distributed (i.i.d.) vector:

$$D(\vec{p}) = \lVert \vec{p} - \vec{I} \rVert_1 = \sum_{i=1}^{N} \lvert p_i - I_i \rvert, \qquad (1)$$

where $\vec{I}$ is the i.i.d. vector, i.e., the vector with elements $I_i = 1/N$, for all $i = 1, \dots, N$. This function plays the role of the disequilibrium function and it quantifies the degree of order of a probability vector. It consists of the sum of the absolute values of the elements of the vector $\vec{p} - \vec{I}$. The disequilibrium measure D will have zero value for maximally disordered systems and maximum value for maximally ordered systems.

2.2. Degree of Disorder

In contrast with an ordered system, a system possessing the maximum degree of disorder is described by an equiprobable distribution. This means that all of its configurations are equally likely to occur, as in a fair dice game, or in the partition function of an isolated ideal gas. From a statistical point of view, the probability vector that describes this feature is the independent and identically distributed (i.i.d.) vector $\vec{I}$, as defined above. One can define the degree of disorder of a system as a function which assigns a value of zero to pure probability distributions (associated with maximally ordered systems), and a maximum value to the i.i.d. distribution. A well-known function capable of quantifying the degree of disorder of a probability vector is the Shannon entropy:

$$H(\vec{p}) = -\sum_{i=1}^{N} p_i \log p_i. \qquad (2)$$

In this way, the Shannon entropy assigns zero to maximally ordered systems, and a maximal value, equal to $\log N$, to the i.i.d. vector. The logarithm is usually taken in base 2, in order to quantify the amount of disorder in bits.

2.3. Quantifying Classical Complexity

Using the order and disorder quantifiers given in Equations (1) and (2), respectively, López-Ruiz et al. in [21] defined a classical statistical measure of complexity constructed as the product of these two quantifiers. There are intermediate states of order–disorder that may exhibit some interesting physical properties and which can be associated with complex behavior. In this sense, the measure should deal with these intermediate states by quantifying the amount of complexity of a physical system [21].

Definition 1

(Classical Statistical Complexity Measure (CSCM) [21]). Let us consider a probability vector given by $\vec{p} = \{p_i\}_{i=1}^{N}$, associated with a random variable X, representing all possible states of a system. The function $C(\vec{p})$ is a measure of the system’s complexity and can be defined as:

$$C(\vec{p}) = H(\vec{p})\, D(\vec{p}). \qquad (3)$$

The function $C(\vec{p})$ vanishes for “simple systems”, such as the ideal gas model or a crystal, and it should reach a maximum value for some intermediate state.
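As a minimal sketch of Definition 1, assuming the Shannon entropy in base 2 and the ℓ1 disequilibrium with respect to the uniform vector, the product can be evaluated as follows. The function names (`shannon_entropy`, `disequilibrium`, `cscm`) and the sample distributions are illustrative choices, not taken from the original work.

```python
import math

def shannon_entropy(p, base=2.0):
    """Shannon entropy -sum_i p_i log p_i, with the convention 0 log 0 = 0."""
    return sum(-x * math.log(x, base) for x in p if x > 0.0)

def disequilibrium(p):
    """l1 distance between p and the uniform (i.i.d.) vector with entries 1/N."""
    n = len(p)
    return sum(abs(x - 1.0 / n) for x in p)

def cscm(p):
    """Classical statistical complexity: entropy (disorder) times disequilibrium (order)."""
    return shannon_entropy(p) * disequilibrium(p)

# Illustrative distributions (assumed examples, not data from the paper).
pure = [1.0, 0.0, 0.0, 0.0]          # maximally ordered
uniform = [0.25, 0.25, 0.25, 0.25]   # maximally disordered
intermediate = [0.7, 0.1, 0.1, 0.1]  # neither extreme

for name, p in [("pure", pure), ("uniform", uniform), ("intermediate", intermediate)]:
    print(name, cscm(p))
```

Both extremes give zero for different reasons: the pure vector has zero entropy, while the uniform vector has zero disequilibrium. Only the intermediate distribution yields a strictly positive value.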

Definition 2

(Classical Statistical Complexity Measure of Joint Probability Distributions and of Marginal Probability Distributions). Given a known joint distribution of two discrete random variables X and Y, $p(x,y)$, of dimension N, one can define the CSCM of the joint distribution by Equation (4):

$$C(p(x,y)) = H(p(x,y))\, D(p(x,y)), \qquad (4)$$

and in the same way, we define the CSCM of the two marginal distributions, $p(x)$ by Equation (5) and $p(y)$ by Equation (6):

$$C(p(x)) = H(p(x))\, D(p(x)), \qquad (5)$$

$$C(p(y)) = H(p(y))\, D(p(y)), \qquad (6)$$

where $p(x)$ and $p(y)$ are the marginal probability distributions given by $p(x) = \sum_{y} p(x,y)$ and $p(y) = \sum_{x} p(x,y)$.
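The following sketch illustrates Definition 2 on a small joint distribution, again assuming base-2 Shannon entropy and the ℓ1 disequilibrium. The 2×2 joint table and the helper names are hypothetical and chosen only for illustration.

```python
import math

def cscm(p):
    """Entropy (base 2) times l1 distance to the uniform vector of the same length."""
    n = len(p)
    entropy = sum(-x * math.log2(x) for x in p if x > 0.0)
    diseq = sum(abs(x - 1.0 / n) for x in p)
    return entropy * diseq

def marginals(joint):
    """Marginals p(x) = sum_y p(x, y) and p(y) = sum_x p(x, y) of a joint table."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py

# Hypothetical joint distribution of two correlated binary variables.
joint = [[0.4, 0.1],
         [0.1, 0.4]]

px, py = marginals(joint)
c_joint = cscm([v for row in joint for v in row])  # complexity of the flattened joint table
c_x, c_y = cscm(px), cscm(py)                      # complexity of each marginal
print(c_joint, c_x, c_y)
```

In this example both marginals are uniform, so their complexity vanishes, while the joint distribution carries a nonzero value. This is the classical picture behind using reduced (marginal) descriptions to probe correlations.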

Generalizations of the measures defined above to continuous variables are straightforward (cf. Refs. [22,23]). In the same way, we can also generalize Definition 2 in order to deal with conditional probability densities like $p(x|y)$ or $p(y|x)$, which may be useful in some other more general contexts.

The Classical Statistical Complexity Measure depends on the nature of the description associated with a system and on the scale of observation [24]. This function, generalized as a functional of a probability distribution, has a relation with a time series generated by a classical dynamical system [24]. Two ingredients are fundamental in order to define such a quantity. The first one is an entropy function which quantifies the information contained in a system, and which could also be the Tsallis entropy [25], the Escort-Tsallis entropy [26], or the Rényi entropy [27]. The other ingredient is the definition of a distance function in the space of probabilities, which indicates the disequilibrium relative to a fixed distribution (in this case the distance to the i.i.d. vector). For this purpose we can use a Euclidean distance (or some other p-norm), the Bhattacharyya distance [28], or Wootters’ distance [29]. We can also apply a statistical measure of divergence, for example the classical relative entropy [30], the Hellinger distance, or the Jensen–Shannon divergence [31]. Several other generalized versions of complexity measures have been proposed in recent years, and these functions have proven to be useful in some branches of classical information theory [7,32,33,34,35,36,37,38,39,40].

3. Quantum Statistical Complexity Measure—QSCM

3.1. Quantifying Quantum Complexity

The quantum version of the statistical complexity measure quantifies the amount of complexity contained in a quantum system, in an order–disorder scale. For quantum systems, the probability distribution is replaced by a density matrix $\rho$ (positive semi-definite and trace one). As in the classical case, the extreme cases of order and disorder are, respectively, the pure quantum states $|\psi\rangle\langle\psi|$ and the maximally mixed state $\mathbb{I} := I/N$, where N is the dimension of the Hilbert space. Additionally, in analogy with the description for classical probability distributions, the quantifier of quantum statistical complexity must be zero for the maximum degree of order and of disorder. One can define the Quantum Statistical Complexity Measure (QSCM) as a product of an order quantifier and a disorder quantifier: a quantum entropy, which measures the amount of disorder related to a quantum system, and a pairwise distinguishability measure of quantum states, which plays the role of a disequilibrium function. One of the functions that measure the amount of disorder of a quantum system is the von Neumann entropy, given by:

$$S(\rho) = -\mathrm{Tr}[\rho \log \rho], \qquad (7)$$

where $\rho$ is the density matrix of the system. The trace distance between $\rho$ and the maximally mixed state $\mathbb{I}$ quantifies the degree of order:

$$D(\rho, \mathbb{I}) = \frac{1}{2} \lVert \rho - \mathbb{I} \rVert_1 = \frac{1}{2}\, \mathrm{Tr}\!\left[\sqrt{(\rho - \mathbb{I})^{\dagger} (\rho - \mathbb{I})}\right]. \qquad (8)$$

For our purposes here, we define the Quantum Statistical Complexity Measure (QSCM) in Definition 4 by means of the trace distance between the reduced quantum state and the maximally mixed state, which acts as the reference state. The trace distance was chosen in both Definition 3 and Definition 4 because it quantifies how well two states can be distinguished in the Hilbert space, and because it is monotonic under stochastic operations.

Definition 3

(Quantum Statistical Complexity Measure (QSCM)). Let $\rho$ be a quantum state over an N-dimensional Hilbert space. Then we can define the Quantum Statistical Complexity Measure as the following functional of ρ:

$$C(\rho) = S(\rho)\, D(\rho, \mathbb{I}), \qquad (9)$$

where $S(\rho)$ is the von Neumann entropy, and $D(\rho, \mathbb{I})$ is a distinguishability quantity between the state ρ and the normalized maximally mixed state $\mathbb{I}$, defined in the suitable space.

Definition 4

(Quantum Statistical Complexity Measure of the Reduced Density Matrix). Let $\rho_{SE}$ be the state of a global system, or any compound state of a system and its environment, and let $\rho_S = \mathrm{Tr}_E[\rho_{SE}]$ (having dimension N) be the reduced state of this compound state (where $\mathrm{Tr}_E$ is the partial trace over the environment). Then we can define the Quantum Statistical Complexity Measure of the Reduced Density Matrix as:

$$C(\rho_S) = S(\rho_S)\, D(\rho_S, \mathbb{I}), \qquad (10)$$

where $S(\rho_S)$ is the von Neumann entropy of the quantum reduced state $\rho_S = \mathrm{Tr}_E[\rho_{SE}]$, and $D(\rho_S, \mathbb{I})$ is a distinguishability quantity between the quantum reduced state $\rho_S$ and the normalized maximally mixed state $\mathbb{I}$, defined in the suitable space.
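A minimal sketch of the reduced-state construction, under explicit assumptions: the global state is a two-qubit pure state, the "environment" is the second qubit, entropy is measured in bits, and the trace distance carries the standard factor 1/2. All function names are hypothetical. Because the reduced state and the normalized identity commute, both quantities can be computed from the eigenvalues alone.

```python
import math

def reduced_qubit(psi):
    """Trace out the second qubit of a pure state psi = [c00, c01, c10, c11].
    Returns (a, b, d) for the reduced matrix [[a, b], [conj(b), d]]."""
    c00, c01, c10, c11 = psi
    a = abs(c00) ** 2 + abs(c01) ** 2
    d = abs(c10) ** 2 + abs(c11) ** 2
    b = c00 * c10.conjugate() + c01 * c11.conjugate()
    return a, b, d

def eigenvalues_2x2(a, b, d):
    """Eigenvalues of the Hermitian matrix [[a, b], [conj(b), d]]."""
    mean = 0.5 * (a + d)
    radius = math.sqrt((0.5 * (a - d)) ** 2 + abs(b) ** 2)
    return [mean + radius, mean - radius]

def von_neumann_entropy(eigs):
    """Entropy in bits computed from the spectrum (0 log 0 = 0)."""
    return sum(-x * math.log2(x) for x in eigs if x > 1e-15)

def trace_distance_to_mixed(eigs):
    """(1/2) * sum_i |lambda_i - 1/N|: trace distance to the normalized identity."""
    n = len(eigs)
    return 0.5 * sum(abs(x - 1.0 / n) for x in eigs)

def qscm_reduced(psi):
    """QSCM of the one-qubit reduced state of a two-qubit pure state."""
    eigs = eigenvalues_2x2(*reduced_qubit(psi))
    return von_neumann_entropy(eigs) * trace_distance_to_mixed(eigs)

s = 1.0 / math.sqrt(2.0)
theta = math.pi / 8.0
product_state = [1.0, 0.0, 0.0, 0.0]                   # reduced state is pure
bell_state = [s, 0.0, 0.0, s]                          # reduced state is maximally mixed
partial = [math.cos(theta), 0.0, 0.0, math.sin(theta)] # partially entangled
print(qscm_reduced(product_state), qscm_reduced(bell_state), qscm_reduced(partial))
```

The product state and the Bell state sit at the two extremes (pure and maximally mixed reduced states) and give a vanishing measure up to rounding, whereas the partially entangled state gives a positive value.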

In this work, we will use Definition 4 (Quantum Statistical Complexity Measure of the Reduced Density Matrix) as the Quantum Statistical Complexity Measure (QSCM). The reason for this choice is that we will study quantum phase transitions. In the case of the 1D Ising Model (Section 4.2), we will apply the QSCM to the one-qubit state (reduced from N spins) in the thermodynamic limit, and in the case of the Heisenberg XXZ spin-1/2 model (Section 4.3), we will apply the QSCM to the two-qubit state (reduced from N spins), also in the thermodynamic limit. Definition 4 is the quantum analogue of Definition 2, i.e., it is the quantum counterpart of the CSCM defined by means of “marginal probability distributions”. Extensions of the measures defined above to continuous variables are straightforward.

In analogy with the classical counterpart, in the definition of the quantum statistical complexity measure there is carte blanche in choosing the quantum entropy function, such as the quantum Rényi entropy [41] or the quantum Tsallis entropy [42], among many other functions. Similarly, we can choose other disequilibrium functions as a measure of distinguishability of quantum states. It can be some Schatten p-norm [43], a quantum Rényi relative entropy [44], the quantum skew divergence [45], or a quantum relative entropy [46]. Another feature that might generalize the quantities defined in Definition 3 and Definition 4 is to define a more general quantum state as a reference state (rather than the normalized maximally mixed state) in the disequilibrium function. This choice must be guided by some physical symmetry or interest. Some obvious candidates are the thermal mixed quantum state and the canonical thermal pure quantum state [47].

3.2. Some Properties of the QSCM

To complete our introduction of the quantifier of quantum statistical complexity, we should require some properties to guarantee a bona fide information quantifier. The amount of order–disorder, as measured by the QSCM, must be invariant under local unitary operations because it is related to the purity of the quantum reduced states.

Proposition 1

(Local Unitary Invariance). The Quantum Statistical Complexity Measure is invariant under local unitary transformations applied to the quantum reduced state of the system-environment compound. Let $\rho_S$ be the quantum reduced state of dimension N, and let $\rho_{SE}$ be the compound system-environment state, such that $\rho_S = \mathrm{Tr}_E[\rho_{SE}]$; then:

$$C(U_S\, \rho_S\, U_S^{\dagger}) = C(\rho_S), \qquad (11)$$

where $U_S$ is a local unitary transformation acting on $\rho_S$, and $\mathrm{Tr}_E$ is the partial trace over the environment E. The extension of this property to the global state is trivial.

This statement comes directly from the invariance under local unitary transformations of the von Neumann entropy and of the trace distance applied to the quantum reduced state $\rho_S$.

Another important property regards the case of inserting copies of the system in some experimental contexts. Let us consider an experiment in which the experimentalist must quantify the QSCM of a given state by means of a certain number n of copies. In this case, the QSCM of the copies should be bounded by the quantity of only one copy.

Proposition 2

(Sub-additivity over copies). Given a product state , with , the QSCM is a sub-additive function over copies:

Indeed this is an expected property for a measure of information, since the regularized number of bits of information gained from a given system cannot increase just by considering more copies of the same system. The proof of Proposition 2 is in Appendix A, and it comes from the additivity of von Neumann entropy and sub-additivity of trace distance.

It is important to notice that the quantum statistical complexity is not sub-additive over general extensions with quantum states, for example:

i. Extensions with maximally mixed states: let us consider a given state $\rho$ extended with one maximally mixed state $\mathbb{I}$.

Equation (15) presents an upper bound to the QSCM for this extended state. This feature demonstrates that the measure of the compound state is bounded by the quantity of one copy.

ii. In Equation (18) we present the QSCM for a more general extension. This feature shows that the measure of the compound state is also bounded by the quantity of one copy.

iii. As a last example of nonextensivity over general compound states, let us consider the extension with a pure state $|\psi\rangle\langle\psi|$.

As discussed above, the QSCM is a measure that intends to detect changes in properties, such as changes in patterns of order and disorder. Therefore, the measure must be a continuous function of the parameters of the states responsible for its transitional characteristics. Naturally, the quantum complexity is a continuous function, since it is the product of two continuous functions. Due to continuity, it is possible to define the derivative function of the quantum statistical complexity measure:

Definition 5

(Derivative). Let us consider a physical system described by the one-parameter set of states: , for . We can define the derivative with respect to α as:

In the same way as defined in Definition 5, it is possible to obtain higher order derivatives.
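When no closed form is available, the derivatives in Definition 5 can be estimated numerically. The sketch below uses central finite differences on a hypothetical one-parameter family, a depolarized qubit ρ(α) = (1 − α)|0⟩⟨0| + α I/2, whose Bloch-vector length is r = 1 − α. The family, the step sizes, and the conventions (entropy in bits, trace distance with the factor 1/2) are assumptions made for illustration.

```python
import math

def qscm_qubit(r):
    """QSCM of a qubit with Bloch-vector length r: eigenvalues (1 +/- r)/2,
    entropy in bits, trace distance to I/2 equal to r/2 (assumed conventions)."""
    eigs = [(1.0 + r) / 2.0, (1.0 - r) / 2.0]
    entropy = sum(-x * math.log2(x) for x in eigs if x > 0.0)
    return entropy * (r / 2.0)

def complexity(alpha):
    """QSCM along the hypothetical family rho(alpha), for which r = 1 - alpha."""
    return qscm_qubit(1.0 - alpha)

def first_derivative(f, x, h=1e-5):
    """Central-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def second_derivative(f, x, h=1e-4):
    """Central-difference estimate of f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)

for alpha in (0.2, 0.36, 0.6):
    print(alpha, complexity(alpha),
          first_derivative(complexity, alpha),
          second_derivative(complexity, alpha))
```

Along this family the first derivative changes sign between α = 0.2 and α = 0.6, which locates the maximum of the measure between the ordered (pure) and disordered (maximally mixed) ends.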

Definition 6

(Correlation Transition). In many-particle systems a transition of correlations occurs when a system changes from a state that has a certain order, pattern or correlation, to another state possessing another order pattern or correlation.

At low temperatures, physical systems are typically ordered; as the temperature increases, they can undergo phase transitions or order–disorder transitions into less ordered states: solids lose their crystalline form in a solid–liquid transition; iron loses magnetic order if heated above the Curie point in a ferromagnetic–paramagnetic transition, etc. The description of physical systems depends on measurable quantities such as temperature, interaction strength, interaction range, orientation of an external field, etc. These quantities can be described by parameters in a suitable space. For example, let us consider a parameter $\alpha$ describing some physical quantity, and a one-parameter set of states $\rho(\alpha)$.

Phase transitions are characterized by a sharp change in the complexity of the physical system that exhibits such emergent phenomena when a suitable control parameter exceeds a certain critical value. The study of transitions between correlations, with the objective of inferring physical properties of a system, is therefore of great interest. We know that at the phase transition point, the reduced state of N particles undergoes abrupt transitions, going from states that have a certain purity to reduced mixed states. These transitions can indicate a certain type of correlation transition that can be detected. For many-particle and composite systems, a rapid change in the local degree of order–disorder can be associated with a transition in the pattern of correlations. In this way, a detectable change in these parameters may indicate an alteration in the system configuration, which is considered here as a change in the pattern of order–disorder.

Quantum phase transitions are a fundamental phenomenon in condensed matter physics, and are tightly related to quantum correlations. Quantum critical points for Hamiltonian models with external magnetic fields at finite temperatures have been studied extensively. In the Quantum Information scenario, the quantum correlation functions used in these studies of quantum phase transitions concerned almost exclusively concurrence and quantum discord. The behavior of quantum correlations for the Heisenberg XXZ spin-1/2 chain via negativity, information deficit, trace distance discord, and local quantum uncertainty was investigated in [48]. However, other measures of quantum correlations have also been proposed in order to detect quantum phase transitions, such as local quantum uncertainty [49], entanglement of formation [50,51], quantum discord, and classical correlations [52]. The authors of Ref. [53] revealed a quantum phase transition in an infinite 1D XXZ chain by using concurrence and Bell inequalities. The behavior of the quantum discord, quantum coherence, and Wigner–Yanase skew information, and the relations between the phase transitions and symmetry points in the Heisenberg XXZ spin-1 chains, have been broadly investigated in Ref. [54]. The ground state properties of the one-dimensional extended Hubbard model at half filling from the perspective of its particle reduced density matrix were studied in [55], where the authors focused on the reduced density matrix of two fermions and performed an analysis of its quantum correlations and coherence along the different phases of the model.

In an abstract manner, a quantum state following a path that passes through the normalized identity matrix (the quantum analogue of the i.i.d. vector) is an example of such transitions which may have physical meaning, as we will observe later in some examples. Let us suppose that a certain subspace of a quantum system can be interpreted as having a certain order (i.e., a degree of purity of the compound state of N particles), and that there exists a path in which this subspace passes through the identity. This path can be analyzed as having an order–disorder transition. In order to illustrate the formalism of quantum statistical complexity in this context of order–disorder transitions, in Section 4 we apply it to two well-known quantum systems that exhibit quantum phase transitions: the 1D Quantum Ising Model (Section 4.2) and the Heisenberg XXZ spin-1/2 model (Section 4.3).

4. Examples and Applications

In this section we calculate an analytic expression for the Quantum Statistical Complexity Measure (QSCM) of one qubit, written in the Bloch basis (Section 4.1), and apply the QSCM in order to evince quantum phase transitions and correlation ordering transitions for the 1D Quantum Ising Model (Section 4.2) and for the Heisenberg XXZ spin-1/2 model (Section 4.3).

4.1. QSCM of One-Qubit

Let us suppose we have a one-qubit state $\rho = \frac{1}{2}(I + \vec{r} \cdot \vec{\sigma})$, written in the Bloch basis, where $\vec{r}$ is the Bloch vector, $r = |\vec{r}|$ with $0 \leq r \leq 1$, and $\vec{\sigma}$ is the Pauli matrix vector. Equation (22) gives the analytic expression of the Quantum Statistical Complexity Measure of one qubit as a function of $r$.

Normalization constants are omitted in Equation (22) just for aesthetic reasons.

It is interesting to notice that the Quantum Statistical Complexity Measure of one qubit, written in the Bloch basis, is a function dependent only on r. This expression will be useful in the study of quantum phase transitions, for example, in the 1D Ising Model discussed in Section 4.2, where an analytical expression for the state of one qubit reduced from N spins, in the thermodynamic limit, will be obtained. Other useful expressions can be obtained: for example, the trace distance between the state and the normalized identity for one qubit is also a function of r only in the Bloch basis, and therefore the entropy function can be easily written in terms of r by using Equation (22). In addition, we exhibit analytic expressions for the first (Equation (23)) and the second (Equation (24)) derivatives of the QSCM for one qubit, written in the Bloch basis. One can observe that these functions also depend only on r.
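The dependence on r alone can be checked with a short derivation and a numerical comparison. Under assumed conventions (entropy in bits, trace distance with the factor 1/2), the qubit eigenvalues are (1 ± r)/2, the trace distance to I/2 is r/2, and the entropy is (1/2) log2[4/(1 − r²)] + (r/2) log2[(1 − r)/(1 + r)]. Their product gives the closed form used below, which may differ from Equation (22) of the source by normalization constants. Function names are illustrative.

```python
import math

def qscm_from_eigenvalues(r):
    """QSCM of a qubit computed directly from the spectrum (1 +/- r)/2."""
    eigs = [(1.0 + r) / 2.0, (1.0 - r) / 2.0]
    entropy = sum(-x * math.log2(x) for x in eigs if x > 0.0)
    distance = 0.5 * sum(abs(x - 0.5) for x in eigs)
    return entropy * distance

def qscm_closed_form(r):
    """Closed form derived under the assumed conventions, valid for 0 < r < 1."""
    return (r / 4.0) * (math.log2(4.0 / (1.0 - r * r))
                        + r * math.log2((1.0 - r) / (1.0 + r)))

def qscm_derivative(r):
    """Analytic first derivative dC/dr = S(r)/2 + (r/4) log2[(1 - r)/(1 + r)]."""
    entropy = 0.5 * (math.log2(4.0 / (1.0 - r * r))
                     + r * math.log2((1.0 - r) / (1.0 + r)))
    return 0.5 * entropy + (r / 4.0) * math.log2((1.0 - r) / (1.0 + r))

def argmax_r(lo=0.01, hi=0.99, tol=1e-10):
    """Bisection on dC/dr, which decreases monotonically on (0, 1)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if qscm_derivative(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for r in (0.1, 0.5, 0.9):
    print(r, qscm_from_eigenvalues(r), qscm_closed_form(r))
print("maximizing r:", argmax_r())
```

The measure vanishes at r = 0 and r = 1 and has a single interior maximum, which is the one-qubit version of the statement that complexity peaks between perfect order and perfect disorder.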

4.2. Quantum Ising Model

The 1D Quantum Ising Model presents a quantum phase transition and, despite its simplicity, still generates a lot of interest in the research community. One of the motivations lies in the fact that spin chains are of great importance in modelling quantum computers. The Hamiltonian of the 1D Quantum Ising Model is given by:

where the $\sigma$ operators are the Pauli matrices, J is an exchange constant that sets the interaction strength between the pairs of first neighbors $\langle i,j \rangle$, and g is a parameter that represents an external transverse field. Without loss of generality, we can set $J = 1$, since it simply defines an energy scale for the Hamiltonian. The Ising model ground state can be obtained analytically by a diagonalization consisting of three steps:

- A Jordan–Wigner transformation, where $a_j$ and $a_j^{\dagger}$ are the annihilation and creation operators, respecting the anti-commutation relations $\{a_i, a_j^{\dagger}\} = \delta_{ij}$ and $\{a_i, a_j\} = 0$;

- A Discrete Fourier Transform (DFT);

- A Bogoliubov transformation, where the angle $\theta_k$ represents the basis rotation from the Fourier mode representation to the new mode representation. The angles $\theta_k$ are chosen such that the ground state of the Hamiltonian in Equation (25) is the vacuum state in the new mode representation [56].

We can calculate the reduced density matrix of one spin by using the Bloch representation, in which all coefficients are obtained via expectation values of Pauli operators. The one-qubit state at site j, $\rho_j$, can be written as:

where $\langle \sigma_j^{\alpha} \rangle$, with $\alpha \in \{x, y, z\}$, are expectation values in the vacuum state at site j. Note that $\langle \sigma_j^{x} \rangle = \langle \sigma_j^{y} \rangle = 0$, because they combine an odd number of fermions. Therefore, the Bloch vector possesses only the z-component, which will be given by:

As discussed above, the only non-vanishing term will be $\langle \sigma_j^{z} \rangle$, and therefore:

In Equation (28) we exhibit the one-qubit reduced density matrix in the Bloch basis:

where $\theta_k$ is the Bogoliubov rotation angle and k is the summation index. This result is independent of the spin index, as expected for systems that are translationally invariant.

We can now calculate the QSCM for the reduced density matrix analytically. From Equation (22), we simply identify the Bloch vector of the reduced density matrix as having only a z-component, as written in Equation (27). This quantity in the thermodynamic limit can be obtained by taking the limit of the Riemann sums, $N \to \infty$. This Bloch vector component is a function of the field g. Thus, the z-component of the Bloch vector given in Equation (27) goes to the following integral:

This integral can be solved analytically in the thermodynamic limit for some values of the transverse field parameter. For $g = 0$, we can easily obtain $\langle \sigma^{z} \rangle = 0$, which corresponds to a one-qubit maximally mixed reduced state $\mathbb{I} = I/2$. At $g = 1$, i.e., at the critical point, the integral given in Equation (29) can also be solved in closed form in the thermodynamic limit, and the eigenvalues of the one-qubit reduced state at $g = 1$ can likewise be obtained analytically. For other values of g, the integral written in Equation (29) can be written in terms of elliptic integrals of the first and second kinds [57]. By using the result given in Equation (29) in Equation (22), we can thus obtain the quantum statistical complexity measure for the one-qubit reduced density matrix in the thermodynamic limit as a function of the transverse field parameter g.
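The thermodynamic-limit calculation can be sketched numerically. The code below assumes the common textbook convention H = −Σ σˣⱼσˣⱼ₊₁ − g Σ σᶻⱼ with J = 1, for which the transverse magnetization is (1/π)∫₀^π (g − cos k)/√(1 + g² − 2g cos k) dk. The parametrization of Equation (29) in the source may differ. The midpoint rule avoids the removable 0/0 at k = 0 when g = 1, and the one-qubit measure uses entropy in bits and the trace distance r/2, both assumed conventions.

```python
import math

def transverse_magnetization(g, steps=20000):
    """Midpoint-rule estimate of (1/pi) * int_0^pi (g - cos k) / sqrt(1 + g^2 - 2 g cos k) dk."""
    dk = math.pi / steps
    total = 0.0
    for j in range(steps):
        k = (j + 0.5) * dk  # midpoints never hit k = 0 exactly
        total += (g - math.cos(k)) / math.sqrt(1.0 + g * g - 2.0 * g * math.cos(k))
    return total * dk / math.pi

def qscm_qubit(r):
    """QSCM of a qubit with Bloch-vector length r (entropy in bits, distance r/2)."""
    eigs = [(1.0 + r) / 2.0, (1.0 - r) / 2.0]
    entropy = sum(-x * math.log2(x) for x in eigs if x > 0.0)
    return entropy * (r / 2.0)

def qscm_ising(g):
    """QSCM of the one-spin reduced state, whose Bloch vector has only a z-component."""
    return qscm_qubit(abs(transverse_magnetization(g)))

for g in (0.0, 0.5, 1.0, 1.5, 5.0):
    print(g, transverse_magnetization(g), qscm_ising(g))
```

Under this convention the integrand at g = 1 reduces to sin(k/2), so the magnetization there equals 2/π, while g = 0 gives zero magnetization and therefore a maximally mixed one-spin state with vanishing QSCM.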

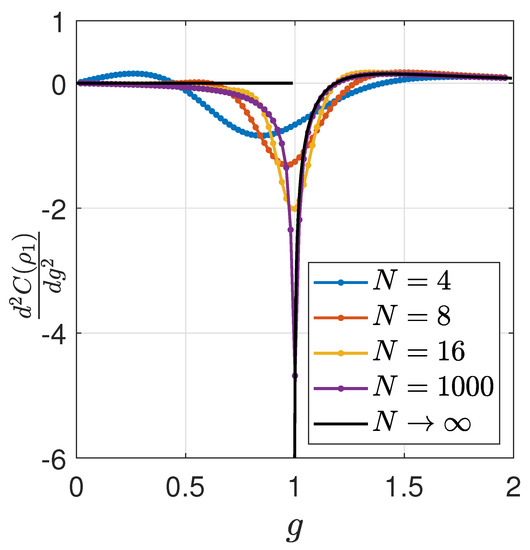

In Figure 1 we present the second derivative of the QSCM with respect to the transverse field parameter g, for different finite system sizes. We also calculated this derivative in the thermodynamic limit by using Equation (24) and Equation (29).

Figure 1.

Second derivative of the QSCM as a function of g. The second derivative of the QSCM with respect to the transverse field parameter g, for different finite system sizes N and for the thermodynamic limit (continuous line).

It is well known that at $g = 1$ there is a quantum phase transition of second order [58]. By observing Figure 1, we can directly recognize the sharp behavior of the measure at the transition point.

4.3. XXZ-½ Model

Quantum spin models such as the XXZ-1/2 model can be simulated experimentally by using Rydberg-excited atomic ensembles in magnetic microtrap arrays [59], and also by low-temperature scanning tunneling microscopy [60], among many other experimental arrangements for quantum simulation. Let us consider a Heisenberg XXZ spin-1/2 model defined by the following Hamiltonian:

with periodic boundary conditions, where the $\sigma$ operators are Pauli matrices, and $\Delta$ is the uni-axial parameter strength, which is the ratio between the zz interactions and the xx (or yy) interactions. This model can interpolate continuously between the classical Ising, quantum XXX, and quantum XY models. At $\Delta = 0$, it turns into the quantum XX (or XY) model, which corresponds to free fermions on a lattice. For $\Delta = 1$ ($\Delta = -1$), the anisotropic XXZ model Hamiltonian reduces to the isotropic anti-ferromagnetic (ferromagnetic) XXX model Hamiltonian. For $\Delta \to \infty$ ($\Delta \to -\infty$), the model goes to an anti-ferromagnetic (ferromagnetic) Ising Model.

The parameter J defines an energy scale and only its sign is important: we observe a ferromagnetic ordering along the xy plane for positive values of J and, for negative ones, the anti-ferromagnetic alignment. The uni-axial parameter strength $\Delta$ distinguishes a planar regime (when $|\Delta| < 1$) from the axial alignment (for $|\Delta| > 1$), cf. [61]. Thereby, it is useful to define two regimes: the Ising-like regime, for $|\Delta| > 1$, and the XY-like regime, for $|\Delta| < 1$, in order to model materials possessing, respectively, easy-axis and easy-plane magnetic anisotropies [62].

Here we are interested in quantum correlations between the nearest and next-to-nearest neighbor spins in the XXZ spin-1/2 chain at zero temperature and zero external field. The matrix elements of the two-qubit reduced state are written as functions of expectation values, namely the correlation functions for nearest neighbors (for $r = 1$) and the correlation functions for next-to-nearest neighbors (for $r = 2$), which are given by a set of integral equations that can be found in Appendix B or in [48,53,63,64,65]. These two-point correlation functions for the XXZ model at zero temperature and in the thermodynamic limit can be derived by using the Bethe Ansatz technique. Due to the symmetry of the Hamiltonian model, the two-qubit reduced density matrix of sites i and $i + r$, in the thermodynamic limit, takes the form presented in Equation (30), written in the basis $|00\rangle$, $|01\rangle$, $|10\rangle$, and $|11\rangle$, where $|0\rangle$ and $|1\rangle$ are the eigenstates of the Pauli z-operator [48]:

where , , and , with , and .

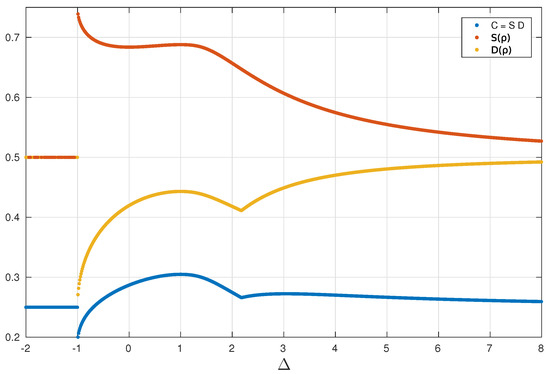

In Figure 2 we show the QSCM, , for nearest neighbors, in contrast with the von Neumann entropy and the trace distance between and the normalized identity matrix, all as functions of the uni-axial parameter strength . The XXZ model possesses two critical points: a first-order transition occurs at , and a continuous phase transition occurs at [66]. An interesting feature of the QSCM is that it reveals points of correlation transitions, related to order–disorder transitions, which are not necessarily connected with phase transitions. In this respect, we note the cusp point in Figure 2, at .

Figure 2.

Comparison between measures. Quantum Statistical Complexity Measure (blue), von Neumann entropy (orange), and the disequilibrium function given by the trace distance (yellow), as functions of . All measures were calculated for the two-qubit reduced density matrix of sites i and , , for , in the thermodynamic limit.
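As a minimal sketch of how the three quantities compared in Figure 2 can be evaluated once the correlation functions are known, the Python snippet below builds a two-qubit state from two correlators and computes its entropy, its trace distance to the maximally mixed state, and their product. It assumes the symmetric form rho = (1/4)[I + c_xx (XX + YY) + c_zz ZZ], a common parameterization of XXZ reduced states whose notation may differ from Equation (30), and it assumes a QSCM given by the entropy normalized by log d times the trace distance. The function names and the arguments `cxx` and `czz` are illustrative only.

```python
import numpy as np

# Pauli matrices and the single-qubit identity.
I2 = np.eye(2, dtype=complex)
SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)


def two_qubit_state(cxx, czz):
    """Assumed form: rho = (1/4)[I + cxx (XX + YY) + czz ZZ]."""
    planar = np.kron(SX, SX) + np.kron(SY, SY)
    axial = np.kron(SZ, SZ)
    return (np.kron(I2, I2) + cxx * planar + czz * axial) / 4.0


def von_neumann_entropy(rho):
    """Entropy in nats; eigenvalues below 1e-12 are treated as zero."""
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]
    return float(-np.sum(evals * np.log(evals)))


def trace_distance_to_mixed(rho):
    """Trace distance between rho and the normalized identity I/d."""
    d = rho.shape[0]
    evals = np.linalg.eigvalsh(rho - np.eye(d) / d)
    return 0.5 * float(np.sum(np.abs(evals)))


def qscm(rho):
    """Assumed definition: (entropy / log d) times the trace distance to I/d."""
    d = rho.shape[0]
    return von_neumann_entropy(rho) / np.log(d) * trace_distance_to_mixed(rho)
```

With this choice both extremes give zero: the maximally mixed state (`cxx = czz = 0`) has vanishing disequilibrium, and a pure state such as the singlet (`cxx = czz = -1`) has vanishing entropy.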

To investigate the cusp point of at , let us consider what happens to the state , given by Equation (30), as varies. This state can be easily diagonalized, so let us study the matrix , which plays an important role in the quantum statistical complexity measure, as already discussed. This matrix has the following eigenvalues: . As increases in the interval , the correlations in the x direction also increase while the correlations in z decrease, reaching the local minimum observed in Figure 2. In this interval, the eigenvalue vanishes for some value of , which should imply a change of orientation of the spin correlations and therefore cause the correlation transition.
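The role of the shifted matrix can be illustrated with a short numerical check. The sketch below assumes the symmetric two-qubit form rho = (1/4)[I + c_xx (XX + YY) + c_zz ZZ], for which rho - I/4 has the closed-form eigenvalues c_zz/4 (twice) and (-c_zz ± 2 c_xx)/4; the notation and ordering may differ from the eigenvalues listed in the text, and the helper names are hypothetical.

```python
import numpy as np


def shifted_matrix(cxx, czz):
    """rho - I/4 in the basis {|00>, |01>, |10>, |11>} for the assumed state."""
    m = np.diag([czz, -czz, -czz, czz]).astype(float) / 4.0
    m[1, 2] = m[2, 1] = cxx / 2.0  # coupling between |01> and |10>
    return m


def shifted_eigenvalues(cxx, czz):
    """Closed-form eigenvalues of rho - I/4, sorted in ascending order."""
    vals = np.array([czz, czz, -czz + 2.0 * cxx, -czz - 2.0 * cxx]) / 4.0
    return np.sort(vals)


def vanishing_lines(cxx):
    """c_zz values at which one of the two non-degenerate eigenvalues is zero."""
    return 2.0 * cxx, -2.0 * cxx
```

Under this assumption, each non-degenerate eigenvalue vanishes along a straight line through the origin of the (c_xx, c_zz) plane, and the trace distance to the maximally mixed state, being half the sum of the absolute eigenvalues, is non-smooth where an eigenvalue changes sign.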

Following this reasoning, to determine the points at which the orientation of the spin correlations changes, it is necessary to solve numerically some integral equations, given by the eigenvalues of Equation (30), which are functions of the expectation values given in Refs. [48,53,63,64,65]. The goal is to determine the values of for which the following integral equation holds: . Because the values of , for this Hamiltonian, the solution of this equation indicates the point where the planar xy-correlation decreases while the z-correlation increases, although we are already in the ferromagnetic phase. For , the system moves towards a configuration that exhibits correlation only in the z-direction. Proceeding in the same way, by solving the other integral equation given in the set of eigenvalues, , we obtain a divergent solution for which .

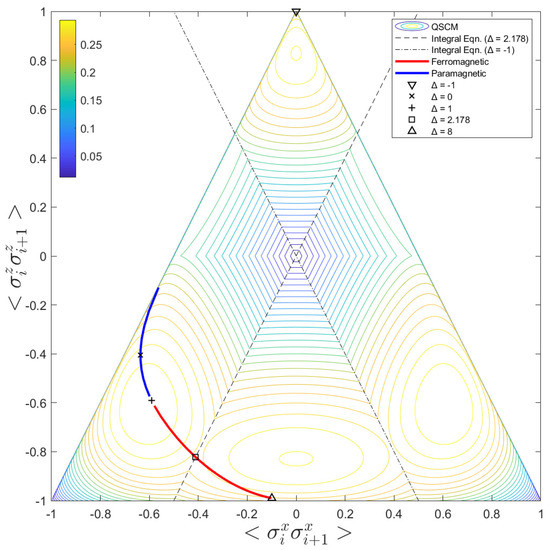

In Figure 3, we show a contour map of as a function of and . The triangular region represents the convex hull of positive semi-definite density matrices. The vertices of this triangle are given by: and . Along with the contour map of the QSCM as a function of the correlation functions in the x and z directions, Figure 3 also shows the integral equations obtained when the two eigenvalues of go to zero. These integral equations are represented by the two inclined straight lines (the dashed and the dash-dot ones). The dashed line describes the integral equation whose solution is . The dash-dot line represents the curve for the solution, for which there exists a divergence point (the phase transition point).

Figure 3.

Contour map. The contour map of the QSCM, , as a function of the correlation functions and . The dashed inclined line represents the integral equation whose solution is , and the dash-dot line represents the curve for , for which there is a divergence point. The path indicated inside the contour map shows the curve traced by the variation of , inside the space of positive semi-definite density matrices, for . The highlighted points are: , (); , (×); , (+); , (☐) and , (Δ). Additionally, the ferromagnetic region (red) and the paramagnetic region (blue) are also indicated along this path.
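The triangular region of valid states can be reproduced with a simple positivity test. The sketch below assumes the symmetric two-qubit form rho = (1/4)[I + c_xx (XX + YY) + c_zz ZZ], whose eigenvalues are (1 + c_zz)/4 (twice) and (1 - c_zz ± 2 c_xx)/4; under that assumption the boundary is a triangle in the (c_xx, c_zz) plane. The vertex list and function names are derived from this assumed parameterization, not quoted from the text.

```python
import numpy as np


def state_eigenvalues(cxx, czz):
    """Eigenvalues of the assumed state rho = (1/4)[I + cxx(XX+YY) + czz ZZ]."""
    return np.array([1.0 + czz,
                     1.0 + czz,
                     1.0 - czz + 2.0 * cxx,
                     1.0 - czz - 2.0 * cxx]) / 4.0


def is_valid_state(cxx, czz, tol=1e-12):
    """True when all eigenvalues are non-negative (up to a small tolerance)."""
    return bool(np.all(state_eigenvalues(cxx, czz) >= -tol))


# Corners where two of the three positivity constraints are saturated,
# given as (cxx, czz) pairs under the assumed parameterization.
TRIANGLE_VERTICES = [(0.0, 1.0), (1.0, -1.0), (-1.0, -1.0)]
```

A pair of correlators that fails this test, for example `(0.9, 0.9)`, does not correspond to any density matrix of the assumed form and would therefore lie outside the contour map.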

As previously mentioned, the QSCM proved to be sensitive to correlation transitions. In Figure 3, the thick colored curve inside the contour map shows the path taken by as the correlations in x and z vary when increases monotonically in the interval . This same path was also presented in Figure 2, as the blue curve. The blue part of the thick curve represents correlation values for which the state is paramagnetic, and the red part indicates the values for the ferromagnetic arrangement. Additionally, we have highlighted some interesting points on this curve with markers: for , (); for , (×); , (+); , (☐) and for , (Δ).

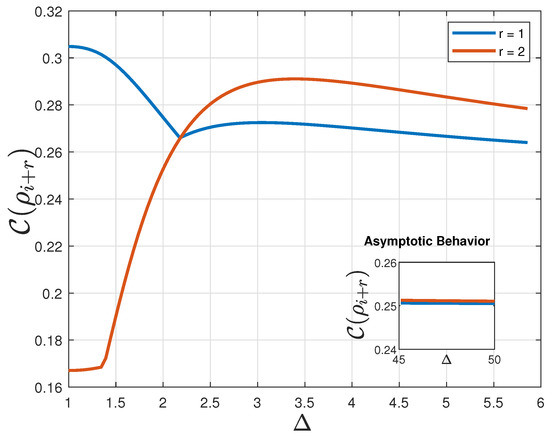

Figure 4 shows the QSCM for nearest neighbors, given by , (blue), and for next-to-nearest neighbors, written as , (orange), both in the thermodynamic limit as functions of the uni-axial parameter strength .

Figure 4.

QSCM for nearest neighbors () and for next-to-nearest neighbors (). for the two-qubit reduced density matrix of sites i and , for (blue) and for (orange), both in the thermodynamic limit as functions of the uni-axial parameter strength . (a) Sub-figure: asymptotic behavior. The sub-figure shows the asymptotic behavior for large for both cases (in fact, both and , when ).

The asymptotic limit of both measures () is also presented in the sub-figure. As , the two correlation functions behave as and , in both cases. In this limit, the density matrix of the system can be written as , in both cases, and thus . Additionally, , which makes , for and for . It is interesting to note that exactly at . The QSCM for nearest neighbors is greater than the QSCM for next-to-nearest neighbors, i.e., , for . For , , until both go to , for large values of . This behavior may indicate an increase in complexity as the order–disorder transition occurs, so the measure could act as a complexity indicator. Since more correlations become possible once second neighbors are taken into account, an increase in complexity at is understandable.

5. Conclusions

We introduced a quantum version of the statistical complexity measure, the Quantum Statistical Complexity Measure (QSCM), and presented some of its properties. The measure has proven to be useful and physically meaningful. It possesses several of the properties expected of a bona fide complexity measure, which suggests that it may also be useful in other areas of quantum information theory.

We presented two applications of the QSCM, investigating the physics of two exactly solvable quantum Hamiltonian models, namely the 1D-Quantum Ising Model and the Heisenberg XXZ spin-1/2 chain, both in the thermodynamic limit. First, we calculated the QSCM for one qubit in the Bloch basis and determined the measure as a function of the magnitude of the Bloch vector r. We computed it in the thermodynamic limit by first calculating analytically the measure for the one-qubit reduced density matrix obtained from N spins. Then, in order to study the quantum phase transition of the 1D-Quantum Ising Model, we took the limit . For the 1D-Quantum Ising Model, we obtained the quantum phase transition point at . In this way, we found that the QSCM can be used to signal quantum phase transitions in this model.
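The one-qubit case can be sketched in a few lines. A qubit with Bloch-vector magnitude r has eigenvalues (1 ± r)/2, and its trace distance to the maximally mixed state I/2 is r/2. The snippet below assumes, as an illustration, a QSCM given by the entropy normalized by log 2 times that trace distance; the normalization and the function name `qubit_qscm` are assumptions and may differ from the closed form derived in the paper.

```python
import numpy as np


def qubit_qscm(r):
    """Assumed one-qubit QSCM for a Bloch-vector magnitude r in [0, 1]."""
    probs = np.array([(1.0 + r) / 2.0, (1.0 - r) / 2.0])
    probs = probs[probs > 1e-12]  # treat 0 * log 0 as zero
    entropy_bits = float(-np.sum(probs * np.log2(probs)))  # entropy / log 2
    disequilibrium = r / 2.0  # trace distance between rho and I/2
    return entropy_bits * disequilibrium
```

Under these assumptions the measure vanishes both for the maximally mixed state (`r = 0`) and for pure states (`r = 1`), and is positive in between, which is the behavior required of a complexity quantifier at the extremes of order and disorder.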

Second, we studied the Heisenberg XXZ spin-1/2 chain, and by means of the QSCM we identified a point at which a correlation transition occurs in this model. Physically, at , the planar xy-correlation decreases while the z-correlation increases, reaching a minimum point, although we are already in the ferromagnetic arrangement. This competition between two different alignments of correlations indicates an order–disorder transition to which the measure was shown to be sensitive.

We have also studied the derivatives of the QSCM, which proved to be sensitive to the quantum transition points. In summary, for the Heisenberg XXZ spin-1/2 chain, the Quantum Statistical Complexity Measure (i) characterizes the first-order quantum phase transition at , (ii) reveals the continuous quantum phase transition at , and (iii) witnesses an order–disorder transition at , related to the alignment of the spin correlations.

Author Contributions

Conceptualization, A.T.C.; methodology, A.T.C., D.L.B.F.; software, A.T.C. and D.L.B.F.; formal analysis, A.T.C. and D.L.B.F.; validation, A.T.C., D.L.B.F., T.O.M., T.D., F.I. and R.O.V.; funding acquisition R.O.V.; Writing—original draft, A.T.C.; Writing—review/editing, A.T.C., T.D., F.I. and R.O.V.; Project administration, R.O.V.; supervision, R.O.V. and T.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Brazilian agency FAPEMIG through Reinaldo O. Vianna’s project: PPM-00711-18.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

This work was partially supported by Brazilian agencies Fapemig, Capes, CNPq and INCT-IQ through the project (465469/2014-0). R.O.V. also acknowledges FAPEMIG project PPM-00711-18. T.D. also acknowledges the support from the Austrian Science Fund (FWF) through the project P 31339-N27. F.I. acknowledges the financial support of the Brazilian funding agencies CNPq (Grant No. 308205/2019-7), FAPERJ (Grant No. E-26/211.318/2019 and No. E-26/201.365/2022). T.O.M. acknowledges financial support from the Serrapilheira Institute (grant number Serra-1709-17173), and the Brazilian agencies CNPq (PQ grant No. 305420/2018-6) and FAPERJ (JCN E-26/202.701/2018).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| CSCM | Classical Statistical Complexity Measure |
| QSCM | Quantum Statistical Complexity Measure |

Appendix A. Sub-Additivity over Copies

Proposition A1.

Given a product state , the QSCM is a sub-additive function:

Equality holds if we choose the disequilibrium function to be the quantum relative entropy, , which is additive under tensor products. In this case, the measure will be additive: .

Proof.

The von Neumann entropy is additive for product states: . This means that the information contained in an uncorrelated system is equal to the sum of the information in its constituent parts. This equality also holds for the Shannon entropy. However, the trace distance is sub-additive with respect to the tensor product: [67]. We prove the proposition by induction, considering only states of the same dimension, i.e., . It is easy to see that the proposition is true for . Suppose now, as the induction hypothesis, that for some arbitrary , .

Using the sub-additivity property for the trace distance: , and also using , for an arbitrary

Therefore □
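The two ingredients of the proof, additivity of the von Neumann entropy and sub-additivity of the trace distance under tensor products, can be checked numerically on random states. The sketch below is only an illustration of those two properties with the maximally mixed state as the reference, not a substitute for the induction argument; the sampling scheme and all function names are assumptions introduced here.

```python
import numpy as np


def random_density_matrix(dim, rng):
    """Random full-rank state obtained by normalizing A A^dagger."""
    a = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = a @ a.conj().T
    return rho / np.trace(rho).real


def von_neumann_entropy(rho):
    """Entropy in nats; eigenvalues below 1e-12 are treated as zero."""
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]
    return float(-np.sum(evals * np.log(evals)))


def trace_distance(rho, sigma):
    """Half the sum of the absolute eigenvalues of rho - sigma."""
    return 0.5 * float(np.sum(np.abs(np.linalg.eigvalsh(rho - sigma))))


def check_product_properties(dim=2, trials=20, seed=0):
    """Check S(r1 x r2) = S(r1) + S(r2) and D(r1 x r2, I x I) <= D(r1, I) + D(r2, I)."""
    rng = np.random.default_rng(seed)
    mixed = np.eye(dim) / dim
    for _ in range(trials):
        rho1 = random_density_matrix(dim, rng)
        rho2 = random_density_matrix(dim, rng)
        joint = np.kron(rho1, rho2)
        s_sum = von_neumann_entropy(rho1) + von_neumann_entropy(rho2)
        if abs(von_neumann_entropy(joint) - s_sum) > 1e-9:
            return False
        d_joint = trace_distance(joint, np.kron(mixed, mixed))
        d_sum = trace_distance(rho1, mixed) + trace_distance(rho2, mixed)
        if d_joint > d_sum + 1e-9:
            return False
    return True
```

Calling `check_product_properties()` returns `True` when both relations hold for every sampled pair of states.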

Appendix B. Correlation Functions for Nearest Neighbors and Next-to-Nearest Neighbors

The correlation functions for nearest-neighbor () spins of the XXZ spin-1/2 model. The two-point correlation functions of this model at zero temperature and in the thermodynamic limit can be derived using the Bethe Ansatz technique [66]. The spin–spin correlation functions between nearest-neighbor spin sites for are given by Takahashi et al. in [63]:

with . For , we have , and for , and (see [53]). For the correlation functions are given by Kato et al. in Ref. [64]:

where .

The correlation functions for next-to-nearest-neighbor spins of the XXZ spin-1/2 model. For next-to-nearest-neighbor spins, in the region , the correlation functions (see [63]) are:

where , , and . For , we have (see [65,68]):

and, also:

References

1. Badii, R.; Politi, A. Complexity: Hierarchical Structures and Scaling in Physics; Cambridge University Press: Cambridge, UK, 1997.
2. Lempel, A.; Ziv, J. On the Complexity of Finite Sequences. IEEE Trans. Inf. Theory 1976, 22, 75–81.
3. Jiménez-Montaño, M.A.; Ebeling, W.; Pohl, T.; Rapp, P.E. Entropy and complexity of finite sequences as fluctuating quantities. Biosystems 2002, 64, 23–32.
4. Szczepanski, J. On the distribution function of the complexity of finite sequences. Inf. Sci. 2009, 179, 1217–1220.
5. Kolmogorov, A.N. Three approaches to the quantitative definition of information. Probl. Inf. Transm. 1965, 1, 1–7.
6. Chaitin, G.J. On the Length of Programs for Computing Finite Binary Sequences. J. ACM 1966, 13, 547–569.
7. Martin, M.T.; Plastino, A.; Rosso, O.A. Statistical complexity and disequilibrium. Phys. Lett. A 2003, 311, 126–132.
8. Lamberti, P.W.; Martin, M.T.; Plastino, A.; Rosso, O.A. Intensive entropic non-triviality measure. Phys. A 2004, 334, 119–131.
9. Binder, P.-M. Complexity and Fisher information. Phys. Rev. E 2000, 61, R3303–R3305.
10. Shiner, J.S.; Davison, M.; Landsberg, P.T. Simple measure for complexity. Phys. Rev. E 1999, 59, 1459–1464.
11. Toranzo, I.V.; Dehesa, J.S. Entropy and complexity properties of the d-dimensional blackbody radiation. Eur. Phys. J. D 2014, 68, 316.
12. Wackerbauer, R.; Witt, A.; Atmanspacher, H.; Kurths, J.; Scheingraber, H. A comparative classification of complexity measures. Chaos Solitons Fractals 1994, 4, 133–173.
13. Zurek, W.H. Complexity, Entropy, and the Physics of Information; Addison-Wesley Pub. Co.: Redwood City, CA, USA, 1990.
14. Felice, D.; Mancini, S.; Ay, N. Canonical Divergence for Measuring Classical and Quantum Complexity. Entropy 2019, 21, 435.
15. Felice, D.; Cafaro, C.; Mancini, S. Information geometric methods for complexity. Chaos 2018, 28, 032101.
16. Crutchfield, J.P.; Ellison, C.J.; Mahoney, J.R. Time’s Barbed Arrow: Irreversibility, Crypticity, and Stored Information. Phys. Rev. Lett. 2009, 103, 094101.
17. Riechers, P.M.; Mahoney, J.R.; Aghamohammadi, C.; Crutchfield, J.P. Minimized state complexity of quantum-encoded cryptic processes. Phys. Rev. A 2016, 93, 052317.
18. Gu, M.; Wiesner, K.; Rieper, E.; Vedral, V. Quantum mechanics can reduce the complexity of classical models. Nat. Commun. 2012, 3, 762–765.
19. Yang, C.; Binder, F.C.; Narasimhachar, V.; Gu, M. Matrix Product States for Quantum Stochastic Modeling. Phys. Rev. Lett. 2018, 121, 260602.
20. Thompson, J.; Garner, A.J.P.; Mahoney, J.R.; Crutchfield, J.P.; Vedral, V.; Gu, M. Causal Asymmetry in a Quantum World. Phys. Rev. X 2018, 8, 031013.
21. López-Ruiz, R.; Mancini, H.L.; Calbet, X. A statistical measure of complexity. Phys. Lett. A 1995, 209, 321–326.
22. Anteneodo, C.; Plastino, A.R. Some features of the López-Ruiz-Mancini-Calbet (LMC) statistical measure of complexity. Phys. Lett. A 1996, 223, 348–354.
23. Catalán, R.G.; Garay, J.; López-Ruiz, R. Features of the extension of a statistical measure of complexity to continuous systems. Phys. Rev. E 2002, 66, 011102.
24. Rosso, O.A.; Martin, M.T.; Larrondo, H.A.; Kowalski, A.M.; Plastino, A. Generalized Statistical Complexity: A New Tool for Dynamical Systems; Bentham Science Publisher: Sharjah, United Arab Emirates, 2013.
25. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.
26. Gell-Mann, M.; Tsallis, C. Nonextensive Entropy-Interdisciplinary Applications; Oxford University Press: Oxford, UK, 2004.
27. Rényi, A. On measures of Entropy and Information; University of California Press: Berkeley, CA, USA, 1961; pp. 547–561.
28. Bhattacharyya, A. On a Measure of Divergence between Two Statistical Populations Defined by Their Probability Distributions. Bull. Calcutta Math. Soc. 1943, 35, 99–109.
29. Majtey, A.; Lamberti, P.W.; Martin, M.T.; Plastino, A. Wootters’ distance revisited: A new distinguishability criterium. Eur. Phys. J. D 2005, 32, 413–419.
30. Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Stat. 1951, 22, 79–86.
31. Majtey, A.P.; Lamberti, P.W.; Prato, D.P. Jensen-Shannon divergence as a measure of distinguishability between mixed quantum states. Phys. Rev. A 2005, 72, 052310.
32. López-Ruiz, R.; Nagy, Á.; Romera, E.; Sañudo, J. A generalized statistical complexity measure: Applications to quantum systems. J. Math. Phys. 2009, 50, 123528.
33. Sañudo, J.; López-Ruiz, R. Statistical complexity and Fisher-Shannon information in the H-atom. Phys. Lett. A 2008, 372, 5283–5286.
34. Montgomery, H.E.; Sen, K.D. Statistical complexity and Fisher–Shannon information measure of H2+. Phys. Lett. A 2008, 372, 2271–2273.
35. Sen, K.D. Statistical Complexity-Applications in Electronic Structure; Springer: Dordrecht, The Netherlands, 2011.
36. Sañudo, J.; López-Ruiz, R. Alternative evaluation of statistical indicators in atoms: The non-relativistic and relativistic cases. Phys. Lett. A 2009, 373, 2549–2551.
37. Moustakidis, C.C.; Chatzisavvas, K.C.; Nikolaidis, N.S.; Panos, C.P. Statistical measure of complexity of hard-sphere gas: Applications to nuclear matter. Int. J. Appl. Math. Stat. 2012, 26, 2.
38. Sánchez-Moreno, P.; Angulo, J.C.; Dehesa, J.S. A generalized complexity measure based on Rényi entropy. Eur. Phys. J. D 2014, 68, 212.
39. Calbet, X.; López-Ruiz, R. Tendency towards maximum complexity in a nonequilibrium isolated system. Phys. Rev. E 2001, 63, 066116.
40. Lopez-Ruiz, R.; Sanudo, J.; Romera, E.; Calbet, X. Statistical Complexity and Fisher-Shannon Information: Applications. In Statistical Complexity; Sen, K., Ed.; Springer: Dordrecht, The Netherlands, 2011.
41. Müller-Lennert, M.; Dupuis, F.; Szehr, O.; Fehr, S.; Tomamichel, M. On quantum Rényi entropies: A new generalization and some properties. J. Math. Phys. 2013, 54, 122203.
42. Petz, D.; Virosztek, D. Some inequalities for quantum Tsallis entropy related to the strong subadditivity. Math. Inequalities Appl. 2015, 18, 555–568.
43. Bhatia, R. Matrix Analysis; Graduate Texts in Mathematics; Springer: New York, NY, USA, 2013.
44. Misra, A.; Singh, U.; Bera, M.N.; Rajagopal, A.K. Quantum Rényi relative entropies affirm universality of thermodynamics. Phys. Rev. E 2015, 92, 042161.
45. Audenaert, K.M.R. Quantum skew divergence. J. Math. Phys. 2014, 55, 112202.
46. Schumacher, B.; Westmoreland, M.D. Relative entropy in quantum information theory. arXiv 2000, arXiv:quant-ph/0004045.
47. Sugiura, S.; Shimizu, A. Canonical Thermal Pure Quantum State. Phys. Rev. Lett. 2013, 111, 010401.
48. Ye, B.-L.; Li, B.; Li-Jost, X.; Fei, S.-M. Quantum correlations in critical XXZ system and LMG model. Int. J. Quantum Inf. 2018, 16, 1850029.
49. Girolami, D.; Tufarelli, T.; Adesso, G. Characterizing Nonclassical Correlations via Local Quantum Uncertainty. Phys. Rev. Lett. 2013, 110, 240402.
50. Werlang, T.; Trippe, C.; Ribeiro, G.A.P.; Rigolin, G. Quantum Correlations in Spin Chains at Finite Temperatures and Quantum Phase Transitions. Phys. Rev. Lett. 2010, 105, 095702.
51. Werlang, T.; Ribeiro, G.A.P.; Rigolin, G. Spotlighting quantum critical points via quantum correlations at finite temperatures. Phys. Rev. A 2011, 83, 062334.
52. Li, Y.-C.; Lin, H.-Q. Thermal quantum and classical correlations and entanglement in the XY spin model with three-spin interaction. Phys. Rev. A 2011, 83, 052323.
53. Justino, L.; de Oliveira, T.R. Bell inequalities and entanglement at quantum phase transitions in the XXZ model. Phys. Rev. A 2012, 85, 052128.
54. Malvezzi, A.L.; Karpat, G.; Çakmak, B.; Fanchini, F.F.; Debarba, T.; Vianna, R.O. Quantum correlations and coherence in spin-1 Heisenberg chains. Phys. Rev. B 2016, 93, 184428.
55. Ferreira, D.L.B.; Maciel, T.O.; Vianna, R.O.; Iemini, F. Quantum correlations, entanglement spectrum, and coherence of the two-particle reduced density matrix in the extended Hubbard model. Phys. Rev. B 2022, 105, 115145.
56. Osborne, T.J.; Nielsen, M.A. Entanglement in a simple quantum phase transition. Phys. Rev. A 2002, 66, 032110.
57. Pfeuty, P. The one-dimensional Ising model with a transverse field. Ann. Phys. 1970, 57, 79–90.
58. Damski, B.; Rams, M.M. Exact results for fidelity susceptibility of the Quantum Ising Model: The interplay between parity, system size, and magnetic field. J. Phys. A Math. Theor. 2014, 47, 025303.
59. Whitlock, S.; Glaetzle, A.W.; Hannaford, P. Simulating quantum spin models using Rydberg-excited atomic ensembles in magnetic microtrap arrays. J. Phys. B At. Mol. Opt. Phys. 2017, 50, 074001.
60. Toskovic, R.; van den Berg, R.; Spinelli, A.; Eliens, I.S.; van den Toorn, B.; Bryant, B.; Caux, J.-S.; Otte, A.F. Atomic spin-chain realization of a model for quantum criticality. Nat. Phys. 2016, 12, 656–660.
61. Franchini, F. Notes on Bethe Ansatz Techniques. Available online: https://people.sissa.it/~ffranchi/BAnotes.pdf (accessed on 26 July 2022).
62. Sarıyer, O.S. Two-dimensional quantum-spin-1/2 XXZ magnet in zero magnetic field: Global thermodynamics from renormalization group theory. Philos. Mag. 2019, 99, 1787–1824.
63. Takahashi, M.; Kato, G.; Shiroishi, M. Next Nearest-Neighbor Correlation Functions of the Spin-1/2 XXZ Chain at Massive Region. J. Phys. Soc. Jpn. 2004, 73, 245–253.
64. Kato, G.; Shiroishi, M.; Takahashi, M.; Sakai, K. Third-neighbour and other four-point correlation functions of spin-1/2 XXZ chain. J. Phys. A Math. Gen. 2004, 37, 5097.
65. Kato, G.; Shiroishi, M.; Takahashi, M.; Sakai, K. Next-nearest-neighbour correlation functions of the spin-1/2 XXZ chain at the critical region. J. Phys. A Math. Gen. 2003, 36, L337.
66. Shiroishi, M.; Takahashi, M. Exact Calculation of Correlation Functions for Spin-1/2 Heisenberg Chain. J. Phys. Soc. Jpn. 2005, 74, 47–52.
67. Wilde, M.M. Quantum Information Theory, 2nd ed.; Cambridge University Press: Cambridge, UK, 2017.
68. Takahashi, M. Thermodynamics of One-Dimensional Solvable Models; Cambridge University Press: Cambridge, UK, 1999.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).