1. Introduction

There has been a considerable amount of research work on modeling offensive and defensive abilities in baseball. In particular, offensive measures are often assessed and analyzed to predict future hitting performance. Further, various measures have been proposed in the past decades through Sabermetrics (known initially as SABRmetrics) to evaluate batters’ hitting performance. As mentioned by [

1], successful free-agent hitters who are expected to produce similar future outcomes are entitled to an enormous amount of money with large contracts. To estimate the parameter of interest,

the probability of success (e.g., the probability of hits or the batting probability of a baseball player), in this scenario, the binomial regression model is a commonly used model with a generic term in conjunction with regression covariates.

Many studies have been conducted on binomial regression models with well-known link functions such as the logistic, probit, and complementary log-log functions. Ref. [

2] discussed hierarchical models for analyzing binomial data in a Bayesian framework. Bedrick et al. [

3] presented extensive Bayesian methodologies for binomial regression models without incorporating random effects. Later, Chenetal [

4] investigated the theoretical properties of the Jeffreys prior to general binomial regression models. Pires and Diniz [

5] and Prasetyo et al. [

6] also analyzed binomial regression models based on the Bayesian perspective. Here, we notice that the conventional binomial regression model is applicable only when a single success probability is associated with players or teams. However, we often encounter situations where two dependent probabilities of success are of interest when analyzing the offensive sides of hitters in baseball. Specifically, the ‘batting average’ is an older well-recognized measure to evaluate batters’ performance. In the modern baseball era, the ‘slugging percentage’ is another crucial measure to see how often power hitters can produce extra-base hits [

7]. Under these circumstances, it is important to investigate which covariates are related to these two dependent measures separately and simultaneously.

The bivariate binomial (BVB) distribution originally proposed by [

8] is one of the remedies for dealing with two success probabilities when nested binary data come into play. Based on the BVB distribution, several theoretical properties, including Jeffreys priors, were investigated by [

9]. Ref. [

10] suggested a bivariate extension of the binomial autoregressive (AR) model and proposed a new class of bivariate binomial AR models based on a binomial thinning operation. Recently, Ref. [

11] conducted a changepoint analysis with the BVB to investigate the streakiness of baseball and basketball players. However, to the best of our knowledge, a regression model for the BVB distribution has not yet been studied in the literature.

In this paper, we utilize the BVB distribution with two inter-linked logit functions to capture the association between two success probabilities based on nested binary data. The proposed model allows the two inter-linked logit functions to share some common covariates. In other words, some covariates are included in both logit functions and some covariates are included in only one of the logit functions. Another novel feature of our proposed model is that unobserved characteristics of individuals or groups that simultaneously affect two success probabilities are taken into account via joint random effects. The joint random effects are unobserved heterogeneity that commonly exists in both dependent success probabilities. We treat the joint random effects as model parameters and estimate them using a Markov Chain Monte Carlo (MCMC) algorithm under the Bayesian paradigm. The direct estimation of the joint random effects is important for two reasons. First, in a regression analysis setting, random effects typically represent subject-specific means in a longitudinal dataset that are assumed to be independent of any covariates. Those random effects are treated as an additional source of regression errors. However, as in our empirical application, the random effects contain valuable information about individuals or groups and can be useful for prediction. For instance, the average performance over the full sample period can differ significantly across batters, and it is likely that these differences among players will persist in the future. Estimating these random effects directly will provide a way to extract useful subject-specific information. Second, by directly estimating the common random effects, we can test if the unobserved heterogeneity of one success probability is associated with its counterpart in the pair of success probabilities. In addition, our direct estimation approach provides empirical results that are robust to potential non-zero correlations between unobserved heterogeneity and the covariates in the two logit functions. When the unobserved heterogeneity is correlated with the covariates in a regression model, it is typically modeled with fixed effects and the unobserved heterogeneity should be explicitly estimated or eliminated before model fitting by data transformations such as differentiating successive observations or demeaning observations by their time-series means. However, these data transformations are difficult to apply when using a logit function. In contrast, our proposed approach can handle the non-zero correlations between covariates and the unobserved heterogeneity through a direct estimation method.

In this work, we consider the Bayesian approach for the parameter estimation, since the Bayesian method is more suitable for the estimation in the proposed model than the classical maximum likelihood (ML) estimation method because high-dimensional parameter spaces are involved in the model. Due to the longitudinal nature of data, the number of model parameters, including individual random effects, increases linearly with the cross-section size. The high-dimensional parameter space involved in the model brings additional difficulties to the parameter estimation process for the ML method. For instance, the ML estimates are mostly not in closed-form and require a numerical method to solve the optimization problem. For high-dimensional parameter spaces, obtaining a reasonable set of initial estimates of the model parameters for the ML estimation method can be challenging. Subsequently, the chance of locating a local maximum instead of the global maxima in the numerical optimization process can be higher. Moreover, the numerical optimization algorithm involved in the ML method could be very unstable when the sample size is small in the time dimension. In application to the baseball data analysis, we consider a panel dataset with (6 months) with (60 players). The reason we choose is because that we want the player performances to be as homogeneous as possible during the sample period, and that a single regular season in Major League Baseball (MLB) consists of about six months. Typically, a baseball player’s performance varies significantly from season to season. Therefore, restricting the sample period to one season keeps the homogeneity of the data and allows us to model the average performance of each player using the random effect. However, the relatively small sample may cause difficulties in the maximum likelihood estimation for the time series dimension. These technical issues in the estimation process can be readily avoided using the Bayesian estimation method by suitably imposing Bayesian priors over the model parameters.

The rest of this paper is organized as follows. In

Section 2, we introduce the mathematical notations and the BVB distribution along with the three proposed BVB regression models.

Section 3 discusses the Bayesian inference based on the proposed BVB regression models and provides the Bayesian MCMC algorithm. A practical data analysis based on the performance data of MLB players is presented in

Section 4. Then, to evaluate the performance of the proposed models and estimation methods, Monte Carlo simulation studies are used and the settings and results are presented in

Section 5. Finally, brief concluding remarks along with future research directions are provided in

Section 6.

2. Models and Notations

We use

to denote the observed sequence of nested bivariate binary data for the

ℓ-th individual/group (

) at time points

with the covariate vector

that contains

K covariates, where the bivariate random vector

with

follows a BVB distribution denoted by

for

. The BVB distribution considered here is based on a two-stage binomial model. For example, in assessing the performance of a baseball player, say the

ℓ-th player at time

t, suppose the probability of hits is

, then the number of hits out of

total at-bats is a random variable

that follows a binomial distribution

. For the same player at time

t, suppose the probability of extra-base hits (two-base hits, three-base hits, and home runs) out of total hits is

. Given the number of hits

, the number of extra-base hits is a random variable

that follows a binomial distribution

. From [

8], the joint probability mass function of

that follows the BVB distribution is given by

Here,

is the number of successes out of

trials with the probability of success

and

is the number of successes out of

trials with probability of success

.

Remark 1. The motivation of our work is based on nested (two-stage) binary data encountered in basketball and baseball, as well as in other fields such as microbiology [8]. The basketball data analyzed in [11] have a similar structure in which m is the number of shoot attempts, is the number of shoots made out of the m attempts, and is the number of three-point field goals out of the shots made. Following the same idea, one can construct a trivariate binomial distribution based on a three-stage binomial model by incorporating another nested stage. For example, in the basketball example, can be the number of 4-point plays (i.e., a player completes a three-pointer while being fouled, which leads to a free-throw) out of the three-point field goals. Wallis [

12] proposed the logit transformation

as an appropriate transformation for percentages, proportions, and probabilities because the logit transformation stabilizes the variance and brings the range of the data from

to

, which improves the performance of normal approximation. Moreover, the logit transformation ensures that the estimates and projections are in the interval

. Since we have two inter-related success probabilities

and

, we consider the following three logistic regression models based on the BVB distribution that can be formulated as follows.

Model 0—Model with no random effect

We consider the following two logit link functions to model the two inter-related success probabilities

and

with the covariates:

for

, where

and

are the vectors of regression coefficients associated with parameters

and

, respectively. The model in Equation (

1) is our benchmark model and hence, we refer it to as

Model 0.

Model 1—Model with joint random effect

We consider the following two logit link functions to model two inter-related success probabilities

and

with the covariates:

for

, where

and

are the vectors of regression coefficients associated with parameters

and

. Here, we assume that

,

,

are independent and identically distributed standard normal random variables representing a random effect, and parameter

indicates a varying deviance between subjects or individuals. The model in Equation (

2) incorporates the joint random effects in the two logit functions. The key model parameter,

, captures the link intensity between the unobserved heterogeneities of the two success probabilities, which allows us to statistically test for the link in a straightforward manner.

Model 2—Model with joint random effect and unobserved heterogeneity

We now extend Model 1 by incorporating an additional random effect term in

. The extended model denoted by

Model 2 is given by

where

is regarded as the joint random effect, and

is the independent random effect of

. We assume that

follows a standard normal distribution, as is the case for

. The implicit assumption behind Model 1 is that the joint random effect term

is a single source of the unobserved heterogeneity for

and

. However, this may not be the case depending on the data of various applications. In one extreme situation, in which

and

are completely independent after controlling for the effects of observed covariates, there will be no term in Model 1 that can reflect the unobserved heterogeneity of

because

should be zero. In the most plausible scenario for which

and

are connected but not perfectly connected, we also need a term that can control for the unobserved heterogeneity in

independent of

. Therefore, Model 2 is designed to handle such cases.

4. Practical Data Analysis

For sports statistics, statistical analysis of baseball data has become more important in professional baseball. In the past two decades, there have been a substantial number of studies on measuring the offensive abilities of MLB players see, for example [

17,

18,

19,

20,

21]. Readers who are interested in the field of sports statistics may refer to the book by [

22]. In this practical data analysis, we consider a dataset on MLB players with several covariates commonly used to evaluate batters’ hitting performances and apply the proposed logistic regression model based on the BVB distribution.

Here are the definitions of the variables considered in this analysis:

Win Probability Added (WPA): The percent change in a team’s chances of winning from one game to the next;

Center percentage (Cent%): The percentage of balls in play that were hit to center fields by batters;

Pull percentage (Pull%): The percentage of balls in play that were pulled by hitters;

Opposite percentage (Oppo%): The percentage of balls in play that were hit to opposite fields by batters;

BABIP (Batting Average on Balls in Play): A statistic indicating how often a ball in play goes for a hit;

Walk to strikeout ratio (BB/K): A batting ratio that shows the ratio of walks for each strikeout. The higher the ratio, the better the performance;

Home run to fly ball ratio (HR/FB): The ratio of how many home runs are hit against a pitcher for every fly ball he/she allows;

Line drive percentage (LD%): The percentage of balls hit into the field of play that are characterized as line drives;

Ground ball percentage (GB%): The percentage of batted balls hit as ground balls against a pitcher;

Fly ball percentage (FB%): The percentage of fly balls hit into the field of play.

There are 30 teams in MLB among which two players from each team are selected. The regular season of MLB starts in early April and finishes at the end of September, during which 162 games are played. We used the data for the year 2021, which can be collected online:

https://www.fangraphs.com/ (accessed on 19 July 2022). In the dataset used here, there are 60 players (i.e.,

) measured at

time points (monthly from April to September). Parameter

p denotes the batting average, and parameter

q denotes the proportion of extra-base hits (two-base hits, three-base hits, and home runs) out of the total hits. In this empirical analysis, we use the demeaned covariates by their time series means so that the intercept coefficients or random effects can be directly translated into a play’s performance.

First, we compare the results with the frequentist approach under Model 0 in (

1). The MLEs can be obtained by maximizing the likelihood function in Equation (

4) without the term

. Let

denote the MLEs of the parameter vectors

. The asymptotic distribution of

is

-variate multivariate normal with mean vector (

,

) and variance-covariance matrix

, where

Here,

is the inverse of the observed Fisher information matrix. We can test the significance of the regression parameter, i.e., testing the hypothesis

vs.

, or

vs.

,

, based on the asymptotic properties of the MLEs. For example, to test

vs.

, we consider the test statistic

with

p-value

, where

Z is the standard normal random variable. In summary, we consider the following covariates for modeling probabilities

p and

q:

Variables influencing both p and q: WPA, Cent%;

Variables influencing p only: BABIP, BB/K, LD%, GB%, Oppo%;

Variables influencing q only: FB%, HR/FB, Pull%.

We define , , , , , , , , , with and .

We also applied the proposed Bayesian estimation method presented in

Section 3 based on Model 0. Regarding the hyperparameters

,

, and

in the normal priors, we set the variances as 100, which leads to vague or weakly informative priors that reflect the lack of prior knowledge of the model parameters. Vague or weakly informative priors have been extensively used in [

23,

24]. To assess the robustness of the results for different variances in the normal priors, we conducted the Bayesian inference under other variances in the normal priors and compared the results (the results are not shown here for the sake of brevity). We found that the results from Bayesian inference are fairly robust to the changes on the hyperparameters.

The Bayes estimates are obtained from 6000 MCMC iterations with 1000 burn-in iterations. In

Table 1, we provide the MLEs, the Bayesian posterior means, 95% HPD intervals, and

p-values for testing if each coefficient is zero based on the procedures described above.

From

Table 1, the frequentist and Bayesian approaches are congruent. Specifically, both approaches yield estimates of the parameters that are close to each other. Moreover, the parameters that have

p-values based on MLEs smaller than 5% are also those for which the corresponding 95% HPD interval does not contain zero.

From variable selection perspectives, WPA turns out to be significant for

p while it was barely not for

q. Notice that the estimates of coefficients associated with WPA have different signs for

p and

q, which implies that WPA has a positive impact on the “batting average” while WPA has a negative impact on producing more extra-base hits when keeping other variables fixed. This is within our expectation, since Model 0 consists of two independent logit functions. The factor BABIP yields a significant

p-value among all the variables in conjunction with the batting average

p. We notice that BABIP is one of the important factors in modern baseball to assess batters’ performance [

25,

26]. The covariate BB/K reveals a decently significant result for modeling the batting average. Both FB% and HR/FB show significant impacts on predicting

q with parameter estimates that have positive signs.

As discussed in

Section 2, Model 0 does not consider random effects, therefore, we apply Model 1 using the Bayesian approach for the baseball data.

Table 2 presents the parameter estimates (posterior mean), the standard deviations (SD) of the estimates, and the 95% HPD interval for Model 1 in (

2). The Bayes estimates are obtained from 6000 MCMC iterations with 1000 burn-in iterations. The assessment of convergence of the Gibbs sampling is carried out based on the methodology proposed by [

27].

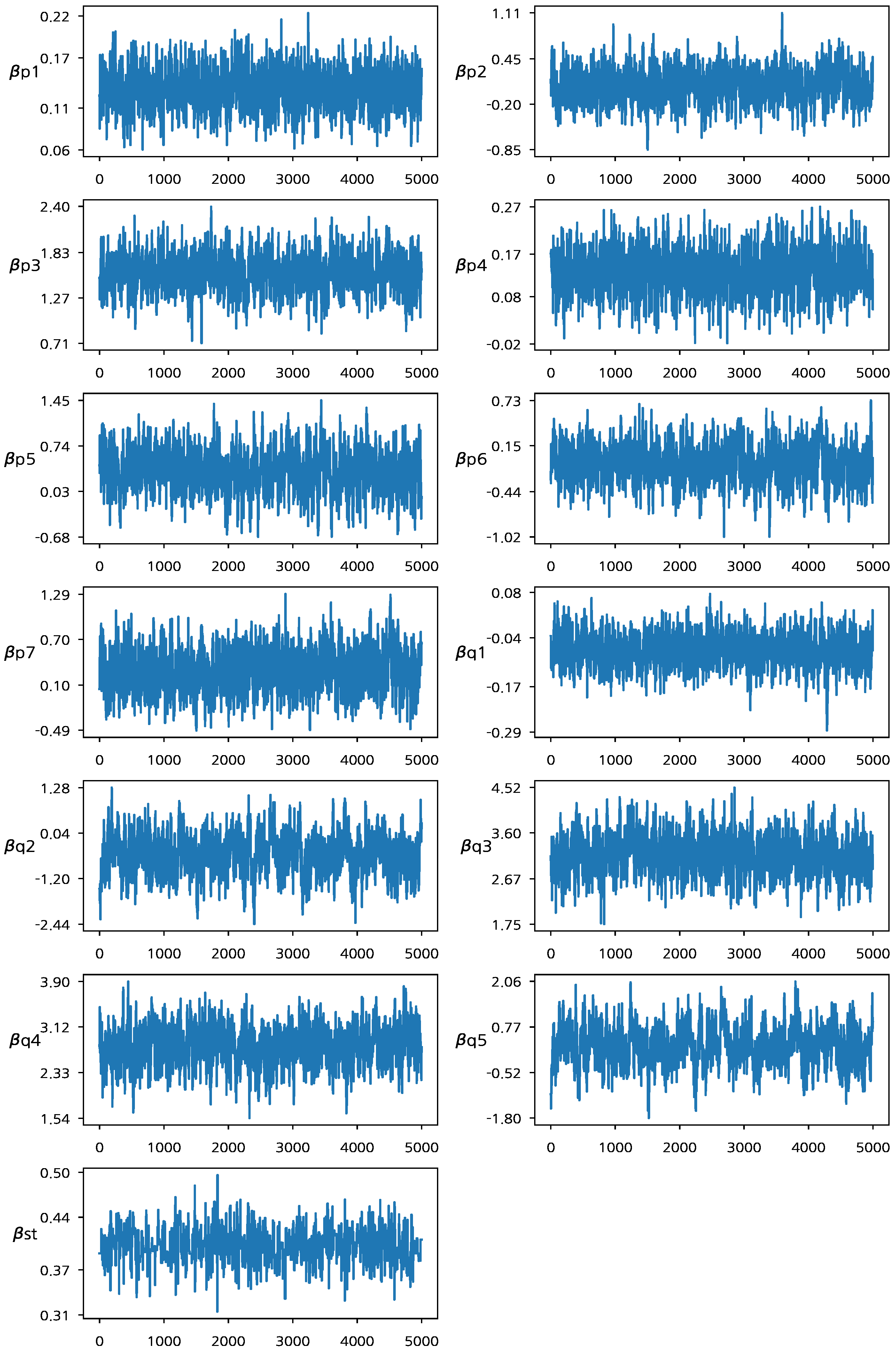

Figure 1 presents the trace plots of the MCMC samples. From

Figure 1, we can see that the MCMC chain of the Bayesian estimation algorithm has converged to the target posterior distribution after 1000 MCMC iterations. When fitting Model 1, we excluded the two intercept terms

and

in order for random effects to capture both the average performances and variabilities between players [

28,

29]. In addition, the exclusion of the intercept terms improves the convergence of the MCMC algorithm (We note that a player’s average performance can be captured by both intercept and random effect terms. Thus, the MCMC samples of the two terms are strongly correlated if they are drawn from their respective conditional posterior distributions which significantly affect each other. This makes the convergence of the proposed algorithm slow). The posterior mean for

is 0.402, and the corresponding 95% HPD interval is (0.3562, 0.4482), which does not contain zero. This reflects that there is a meaningful connection between the two logit functions associated with the joint random effects and provides the basis to claim that the two success probabilities should be jointly investigated to evaluate a player’s underlying performance.

Compared to the results based on Model 0 in

Table 1, the signs of the estimates of regression parameters are the same for all the parameters, though there are slight changes in magnitude. Comparing the results based on Model 0 and Model 1 provides us with an important piece of information on the property of the random effects. The variation in the unobserved heterogeneity may or may not be correlated with the covariates of the BVB regression model.

If variation in the unobserved heterogeneity and the covariates are correlated, then Model 0 that does not contain the individual effects will produce biased estimates which are different from those estimates of Model 1 with the random effect terms. Note that Model 1 directly estimates the random effects, which always produces unbiased estimates regardless of the relation between the unobserved heterogeneity and the covariates. On the other hand, Model 0 produces unbiased estimates only when there is no correlation between the unobserved heterogeneity and the covariates. Thus, the closeness of the parameter estimates based on Model 0 and Model 1 provides suggestive evidence that the unobserved heterogeneity is not associated with any of the covariates included in the regression model.

After considering Model 1, we also apply Model 2 to the baseball dataset. The empirical results of Model 2 are reported in

Figure 2 and

Table 3. The MH algorithm with slight modifications is used to incorporate

in the model. As in fitting Model 1, we fix

and do not include these terms in the computation procedure to avoid slow convergence (In Model 2, the intercept coefficients are redundant given the two random effect terms). We note that Model 2 requires a larger number of iterations for the convergence in the computation due to the additional random effect term

(The traceplot of MCMC samples for Model 2 is not reported in this section for the sake of brevity. The traceplot shows that all model parameters converge to their stationary posterior distributions).

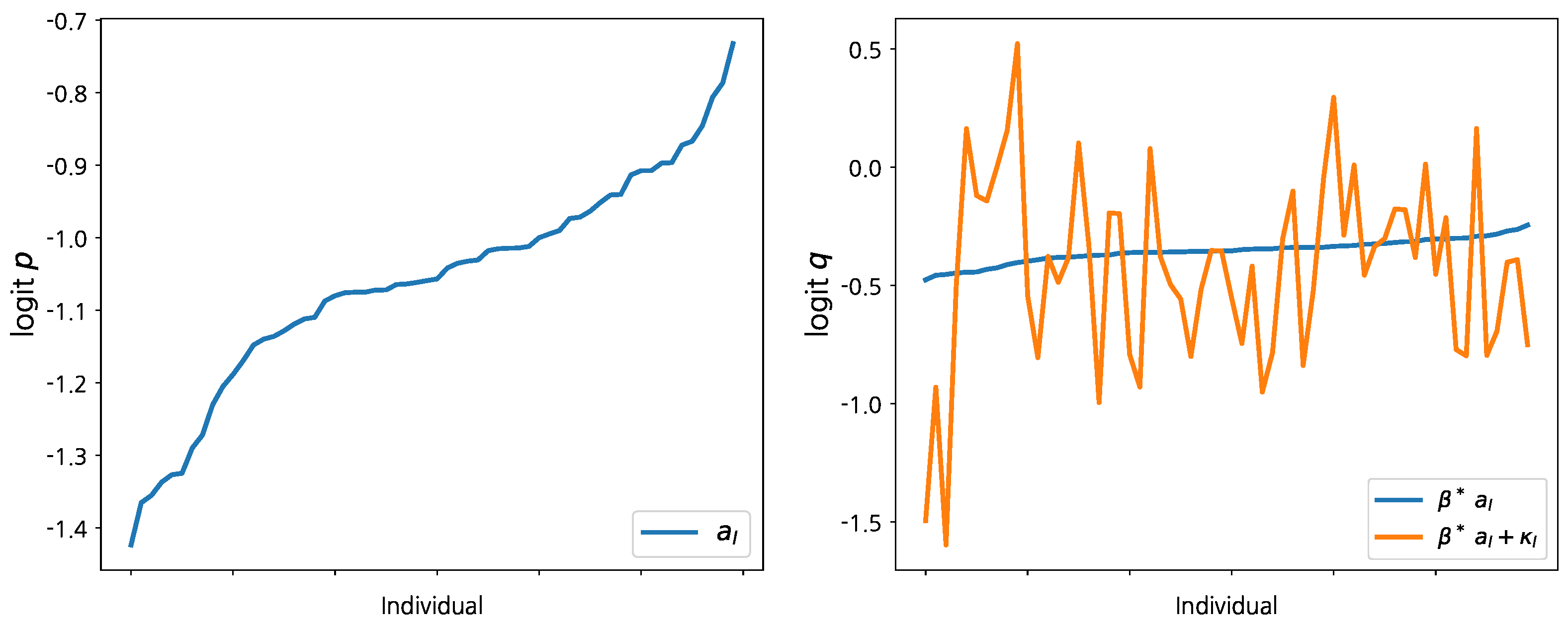

The left panel of

Figure 2 displays the posterior means for

, which is the unobserved heterogeneity in conjunction with success probability

p. To get a meaningful interpretation, the estimates of

are sorted in ascending order and we label each MLB player accordingly. The first player’s average success probability (batting average)

p over the full six-month sample period is estimated as

. (Recall that we use demeaned covariates. Thus, the estimated random effects can be interpreted as the average performance of a player.) The last player’s average success probability

p can be estimated as

. Hence, the average success probabilities of the other MLB players considered in this dataset are in between 0.19 and 0.33 based on this analysis. The right panel of

Figure 2 compares the random effects of the two logit functions based on the player labels according to

. Although the posterior estimates in the second panel are noisy, a similar pattern with

is hidden in the posterior means of

, which represent the unobserved heterogeneity of success probability

q. However, this is only suggestive evidence because the posterior estimates of

vary significantly across players.

To obtain more concrete empirical evidence on the link between

p and

q, we check the posterior estimate of

in the last row of

Table 3. Although the confidence interval for

is wider than that of Model 1, the sign of the estimate is still positive and the 95% HPD interval does not contain zero. From these results, we claim that the two success probabilities are inter-connected even after controlling for the effects of the commonly used covariates. The other Bayes estimates of the model parameters in

Table 3 confirm that the estimated marginal effects are consistent across different model specifications.

5. Monte Carlo Simulation Studies

To empirically demonstrate the performance of the proposed regression model and the Bayesian estimation method, we perform Monte Carlo simulation studies with different settings. We generate 200 sets of data with

,

, and

based on Model 0 and Model 1. We also consider Model 2 in the simulation study, but the simulation results for Model 2 are not presented here, as the algorithm for estimating the parameters in Model 2 is a simple extension of the algorithm for Model 1. For each dataset, we estimate the corresponding model parameters in the two models with the MH algorithm described in

Section 3. For Model 1, the two intercept terms

and

are set to be zero and they are not estimated to increase the speed of the convergence of the estimation algorithm. For Model 0, the true values of the model parameters are set to be

. For Model 1, the true values of the model parameters are set to be

. We have considered other sets of true parameters, and the simulation results lead to qualitatively similar conclusions that the MH algorithm works well. Therefore, the simulation results for other settings are omitted in this paper. The random effect term

is generated from the standard normal distribution, i.e.,

.

For each simulated dataset, we compute the posterior means and HPD intervals of the model parameters. After collecting the posterior estimates, we evaluate the performance of the point estimates based on the average posterior mean, the bias, and the mean squared error (MSE); and evaluate the performance of the interval estimates based on the coverage probability (CP), and average width (AW). The CP represents the proportion that the 95% HPD interval contains the true value. The total and burn-in MCMC iterations of the Bayesian estimation are set to be 6000 and 1000, respectively.

Table 4 presents the simulation results of Model 0. The simulated average posterior means, biases, and MSEs show that the proposed Bayesian estimation procedure performs well for point estimation. The simulated CPs show that the Bayesian 95% credible intervals control the coverage probabilities at or above the nominal level in most cases. Moreover, the simulated AWs show that the 95% credible intervals provides reasonable interval estimates. Overall, the proposed Bayesian estimation procedure with the MH algorithm can accurately estimate the model parameters for Model 0.

The simulation results of Model 1 are presented in

Table 5. Similar conclusions about the performance of the point and interval estimates based on the proposed Bayesian approach can be obtained as they were for Model 0. The major difference between Model 0 and Model 1 is that the amount of uncertainty in the estimation process is larger in Model 1 due to the inclusion of random effects. The increase in uncertainty explains the larger simulated MSEs and AWs of the estimates in Model 1 compared with those in Model 0. These results are a natural consequence of introducing joint random effects to the logit functions. The results in

Table 5 show that the parameter

that plays a special role in our model can be precisely estimated by the proposed Bayesian approach.