Abstract

A new nonparametric test of equality of two densities is investigated. The test statistic is an average of log-Bayes factors, each of which is constructed from a kernel density estimate. Prior densities for the bandwidths of the kernel estimates are required, and it is shown how to choose priors so that the log-Bayes factors can be calculated exactly. Critical values of the test statistic are determined by a permutation distribution, conditional on the data. An attractive property of the methodology is that a critical value of 0 leads to a test for which both type I and II error probabilities tend to 0 as sample sizes tend to ∞. Existing results on Kullback–Leibler loss of kernel estimates are crucial to obtaining these asymptotic results, and also imply that the proposed test works best with heavy-tailed kernels. Finite sample characteristics of the test are studied via simulation, and extensions to multivariate data are straightforward, as illustrated by an application to bivariate connectionist data.

1. Introduction

Ref. [1] proposed the use of cross-validation Bayes factors in the classic two-sample problem of comparing two distributions. Their basic idea is to randomly divide the data into two distinct parts, call them A and B, and to define two models based on kernel density estimates from part A. One model assumes that the two distributions are the same and the other allows them to be different. A Bayes factor comparing the two part A models is then defined from the part B data. In order to stabilize the Bayes factor, Ref. [1] suggest that a number of different random data splits be used, and the resulting log-Bayes factors averaged.

In the current paper we consider a special case of this approach in which the part A data consist of all the available observations save one. If the sample sizes of the two data sets are m and n, then a total of $m+n$ log-Bayes factors may be calculated. The average of these quantities is the test statistic considered here, and is termed ALB.

Although ALB is an average of log-Bayes factors, it does not lead to a consistent Bayes test because each of the log-Bayes factors is based on just a single observation. Ref. [1] suppose that the validation set size grows to ∞, while in our case it remains of size 1. As a result, ALB converges to the Kullback–Leibler divergence of the two densities, and not to ∞ as in the case of [1]. We therefore use frequentist ideas to construct our test. The exact null distribution of ALB conditional on the order statistics is obtained using permutations of the data. Doing so leads to a consistent frequentist test whose size is controlled exactly. The problem of bandwidth selection is dealt with by using leave-one-out likelihood cross-validation applied to the combination of the two data sets. This method is computationally efficient in that the resulting bandwidth is invariant to permutations of the combined data, and therefore has to be computed just once. Our methodology is easily extended to bivariate data, and we do so in a real data example.

Ref. [2] also use a permutation test based on kernel estimates for the two-sample problem, their statistic being based on an $L_2$ distance. Ref. [3] shows how other distances and divergences compare when applied to the general k-sample problem, restricting the comparisons to the one-dimensional case. Our method differs from these procedures mainly by virtue of its Bayesian motivation. The existing methodology that most closely resembles ours is that of [4], who use a kernel-based marginal likelihood ratio to test goodness of fit of parametric models for a distribution. Their marginal likelihood employs a prior for a bandwidth, as does ours.

2. Methodology

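We assume that $X_1,\ldots,X_m$ are independent and identically distributed (i.i.d.) from density f, and independently $Y_1,\ldots,Y_n$ are i.i.d. from density g. We are interested in testing the null hypothesis $H_0: f\equiv g$ on the basis of the data $\mathbf{X}=(X_1,\ldots,X_m)$ and $\mathbf{Y}=(Y_1,\ldots,Y_n)$. Let $\mathbf{z}=(z_1,\ldots,z_k)$ be an arbitrary set of k scalar observations, and define a kernel density estimate by

$$\hat f(x\mid\mathbf{z},h)=\frac{1}{kh}\sum_{i=1}^{k}K\left(\frac{x-z_i}{h}\right),$$

where K is the kernel and $h>0$ the bandwidth.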
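For concreteness, the kernel density estimate just defined, together with its leave-one-out version (the building block of every Bayes factor below), can be sketched in plain Python. This is a minimal illustration only: the Gaussian kernel is an arbitrary choice of K, and the function names are our own.

```python
import math

def gaussian_kernel(u):
    """Standard normal density; an illustrative choice of the kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

def kde(x, z, h, kernel=gaussian_kernel):
    """Kernel density estimate (1 / (k h)) * sum_i K((x - z_i) / h)."""
    k = len(z)
    return sum(kernel((x - zi) / h) for zi in z) / (k * h)

def kde_leave_one_out(z, i, h, kernel=gaussian_kernel):
    """Estimate evaluated at z[i] using every observation except z[i]."""
    rest = list(z[:i]) + list(z[i + 1:])
    return kde(z[i], rest, h, kernel)

z = [0.0, 0.5, 1.5]
print(kde(0.0, z, 1.0))              # full-sample estimate at x = 0
print(kde_leave_one_out(z, 0, 1.0))  # estimate at z[0] without z[0]
```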

2.1. The Test Statistic

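Let $N=m+n$, let $\mathbf{Z}=(Z_1,\ldots,Z_N)$ with $Z_i=X_i$, $i=1,\ldots,m$, and $Z_{m+j}=Y_j$, $j=1,\ldots,n$, and let $\mathbf{Z}_{-i}$ be the vector with all the components of $\mathbf{Z}$ except $Z_i$, $i=1,\ldots,N$. Furthermore, let $\mathbf{X}_{-i}$ be all the components of $\mathbf{X}$ except $X_i$, $i=1,\ldots,m$, and $\mathbf{Y}_{-j}$ all the components of $\mathbf{Y}$ except $Y_j$, $j=1,\ldots,n$. If we assume that f is identical to g, then potential models for f are $\hat f(\cdot\mid\mathbf{Z}_{-i},h)$, $i=1,\ldots,N$. Suppose that $1\le i\le m$. If we allow f and g to differ, then a model for the datum $X_i$ is $\hat f(\cdot\mid\mathbf{X}_{-i},h)$. In this case a legitimate Bayes factor for comparing the two models on the basis of the datum $X_i$ has the form

$$B_i=\frac{\int_0^\infty\hat f(X_i\mid\mathbf{X}_{-i},h)\,\pi(h)\,dh}{\int_0^\infty\hat f(X_i\mid\mathbf{Z}_{-i},h)\,\pi(h)\,dh},$$

where, mainly for convenience, we have assumed that the bandwidth priors are the same in all cases. Likewise, if $m+1\le i\le N$, then $\hat f(\cdot\mid\mathbf{Y}_{-(i-m)},h)$ is a model for the datum $Y_{i-m}$, and a Bayes factor for comparing the two models is

$$B_i=\frac{\int_0^\infty\hat f(Y_{i-m}\mid\mathbf{Y}_{-(i-m)},h)\,\pi(h)\,dh}{\int_0^\infty\hat f(Y_{i-m}\mid\mathbf{Z}_{-i},h)\,\pi(h)\,dh}.$$

When m and n are large, it is expected that $\hat f(\cdot\mid\mathbf{X}_{-i},h)$ will be a good model for f if $1\le i\le m$, and $\hat f(\cdot\mid\mathbf{Y}_{-(i-m)},h)$ a good model for g if $m+1\le i\le N$. Likewise, each of $\hat f(\cdot\mid\mathbf{Z}_{-i},h)$, $i=1,\ldots,N$, will be a good model for the common density on the assumption that f and g are identical. However, none of $B_1,\ldots,B_N$ can provide convincing evidence for either hypothesis, simply because each one uses likelihoods based on a single datum. At first blush one might think that a solution to this problem is to take the average of the log-Bayes factors:

$$\mathrm{ALB}=\frac{1}{N}\sum_{i=1}^{N}\log B_i.\qquad (1)$$

However, this results in a statistic that consistently estimates 0 or a positive constant in the respective cases $f\equiv g$ or $f\ne g$. In neither case does the statistic have the property of Bayes consistency, i.e., the property that the Bayes factor tends to 0 and ∞ when $f\equiv g$ and $f\ne g$, respectively.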

The discussion immediately above points out a fundamental fact that seems not to have been widely discussed: combining a large number of inconsistent Bayes factors does not necessarily lead to a consistent Bayes factor. A guiding principle in [1] was that of averaging log-Bayes factors from different random splits of the data with the aim of producing a more stable log-Bayes factor. However, in order for this practice to yield a consistent Bayes factor, it is important that each of the log-Bayes factors being averaged is consistent. Furthermore, to ensure this consistency, it is necessary that the sizes of both the training and validation sets tend to ∞ with the sample sizes m and n. Obviously this is not the case when the size of each validation set is just 1, as in the current paper.

An advantage of the approach proposed herein is that the practitioner does not have to choose the size of the training sets. The cost is that the resulting statistic does not have the property of Bayes consistency. We thus propose that the statistic be used in frequentist fashion. An appealing way of doing so is to use a permutation test, which (save for certain practical issues to be discussed) leads to a test with exact type I error probability for all m and n. Let $Z_{(1)},\ldots,Z_{(N)}$ be the order statistics for the combined sample. Let $(Z_1^*,\ldots,Z_N^*)$ be a random permutation of $(Z_{(1)},\ldots,Z_{(N)})$, and define $\mathrm{ALB}^*$ to be the statistic (1) when the X-sample is taken to be $(Z_1^*,\ldots,Z_m^*)$ and the Y-sample to be $(Z_{m+1}^*,\ldots,Z_N^*)$. It follows that, under $H_0$ and conditional on the order statistics $Z_{(1)},\ldots,Z_{(N)}$, each of the $N!$ permutations, and hence each of the corresponding $N!$ values of $\mathrm{ALB}^*$, is equally likely. Therefore, if $q_\alpha$ is the $(1-\alpha)$ quantile of the empirical distribution of $\mathrm{ALB}^*$, then the test that rejects $H_0$ when $\mathrm{ALB}>q_\alpha$ will have an (unconditional) type I error probability of α. As will be shown in Appendix A.3, under $H_0$ ALB is negative with probability tending to 1 as $m,n\to\infty$, implying that $q_\alpha$ for any fixed α will be negative for m and n large enough. From an evidentiary standpoint, it is nonsense to reject $H_0$ for a negative value of ALB, since a negative average log-Bayes factor favors $H_0$. We therefore suggest using the critical value $\max(q_\alpha,0)$, which ensures that the test is sensible and has level at most α.
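The permutation calibration just described can be sketched as follows. This is a minimal Python illustration under stated assumptions: the statistic is passed in as a function, `toy_statistic` is a hypothetical stand-in for ALB used only to exercise the code, and the default of 338 random permutations (rather than all $N!$) is an illustrative choice.

```python
import math
import random

def permutation_critical_value(x, y, statistic, alpha=0.05, n_perm=338, seed=0):
    """Return max(q_alpha, 0), where q_alpha is the empirical (1 - alpha)
    quantile of statistic(x*, y*) over random re-splits of the pooled sample
    into groups of sizes len(x) and len(y)."""
    rng = random.Random(seed)
    pooled = list(x) + list(y)
    m = len(x)
    values = []
    for _ in range(n_perm):
        rng.shuffle(pooled)  # a fresh uniformly random permutation each time
        values.append(statistic(pooled[:m], pooled[m:]))
    values.sort()
    # Smallest value with at least (1 - alpha) of the permutation values at or below it.
    idx = min(n_perm - 1, math.ceil((1.0 - alpha) * n_perm) - 1)
    q_alpha = values[idx]
    return max(q_alpha, 0.0)  # never reject H0 for a negative statistic

def toy_statistic(a, b):
    """Hypothetical stand-in for ALB: difference in sample means, shifted down by 1."""
    return sum(a) / len(a) - sum(b) / len(b) - 1.0

x = [0.1, -0.3, 0.8, 0.2, -0.5]
y = [0.4, -0.1, 0.0, 0.6, -0.2, 0.3]
crit = permutation_critical_value(x, y, toy_statistic)
observed = toy_statistic(x, y)
print(crit, observed, observed > crit)  # reject H0 only if observed exceeds crit
```

Because the toy statistic is negative for every split of these data, its permutation quantile is negative and the returned critical value is 0, mirroring the large-sample behavior of ALB under $H_0$.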

2.2. The Effect of Using Scale Family Priors

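Let $\pi_0$ be an arbitrary density with support $(0,\infty)$. A possible family of priors is one that contains all rescaled versions of $\pi_0$. For $b>0$, using the prior $\pi(h)=\pi_0(h/b)/b$ and making the change of variable $u=h/b$ in the denominator of $B_i$, $1\le i\le m$, we have

$$\int_0^\infty\hat f(X_i\mid\mathbf{Z}_{-i},h)\,\frac{1}{b}\,\pi_0\!\left(\frac{h}{b}\right)dh=\frac{1}{(N-1)b}\sum_{j\ne i}L\!\left(\frac{X_i-Z_j}{b}\right),$$

where the kernel L is

$$L(v)=\int_0^\infty\frac{1}{u}\,K\!\left(\frac{v}{u}\right)\pi_0(u)\,du.\qquad (2)$$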

So, by using this type of prior, each marginal likelihood comprising $B_i$ becomes a kernel density estimate with kernel L and bandwidth equal to the scale parameter b of the prior. In one sense this is disappointing, since it means that averaging kernel estimates with respect to a bandwidth prior does not actually sidestep the issue of choosing a smoothing parameter: one has simply traded bandwidth choice for choice of the prior's scale. However, it turns out that there is a quantifiable advantage to using a prior for the bandwidth of K. As detailed in Appendix A.2, likelihood cross-validation is often more efficient when applied to L rather than to K.

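When using a scale family of priors, the result immediately above implies that

$$\mathrm{ALB}=\frac{1}{N}\log\frac{\prod_{i=1}^{m}\hat f_L(X_i\mid\mathbf{X}_{-i},b)\prod_{j=1}^{n}\hat f_L(Y_j\mid\mathbf{Y}_{-j},b)}{\prod_{i=1}^{N}\hat f_L(Z_i\mid\mathbf{Z}_{-i},b)},\qquad (3)$$

where $\hat f_L$ denotes a kernel density estimate that uses kernel L, and so the proposed statistic is proportional to the log of a likelihood ratio. The two likelihoods are cross-validation likelihoods, and the numerator and denominator of the ratio correspond to the hypotheses of different and equal densities, respectively.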
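The likelihood-ratio form lends itself to direct computation: ALB is $1/N$ times the difference between the separate-sample and pooled-sample leave-one-out log-likelihoods at a common bandwidth b. The sketch below assumes a fixed b and uses the Cauchy density purely as a hypothetical heavy-tailed stand-in for L (the paper itself uses Hall's kernel); function names are our own.

```python
import math

def cauchy_kernel(u):
    """Cauchy density; a hypothetical heavy-tailed stand-in for the kernel L."""
    return 1.0 / (math.pi * (1.0 + u * u))

def loo_log_lik(sample, b, kernel):
    """Sum over i of log f_hat_L(sample[i] | sample without i, b)."""
    k = len(sample)
    total = 0.0
    for i, zi in enumerate(sample):
        s = sum(kernel((zi - zj) / b) for j, zj in enumerate(sample) if j != i)
        total += math.log(s / ((k - 1) * b))
    return total

def alb(x, y, b, kernel=cauchy_kernel):
    """(1/N) * log[separate-sample LOO likelihood / pooled-sample LOO likelihood]."""
    n_total = len(x) + len(y)
    separate = loo_log_lik(x, b, kernel) + loo_log_lik(y, b, kernel)
    pooled = loo_log_lik(list(x) + list(y), b, kernel)
    return (separate - pooled) / n_total

x = [0.0, 0.1, 0.2, 0.3]
y = [10.0, 10.1, 10.2, 10.3]
print(alb(x, y, 0.5))                          # well-separated samples
print(alb(x, [0.05, 0.15, 0.25, 0.35], 0.5))   # interleaved samples
```

For the well-separated samples the pooled estimate at each $X_i$ is essentially the separate estimate diluted by the factor $(m-1)/(N-1)$, so the value sits just below $\log(7/3)$. Rescaling the data and b by the same constant leaves ALB unchanged, which is why the null distribution is location and scale invariant.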

In practice one must select both the kernel L and the bandwidth b. For the moment we assume that L is given. The denominator of the likelihood ratio in (3), as a function of b, is the likelihood cross-validation criterion studied by [5], based on the combined sample. We propose using $\hat b$, the maximizer of this denominator. This bandwidth has the desirable property that it is invariant to the ordering of the data in the combined sample. Let $\mathrm{ALB}^*$ be the value of test statistic (1) for a permuted data set. One should use the principle that $\mathrm{ALB}^*$ is the same function of the permuted data as ALB is of the original data. So, in principle, the bandwidth should be selected anew for every permuted data set, but because $\hat b$ is invariant to the ordering of the combined sample, this data-driven bandwidth equals $\hat b$ for every permuted data set. This results in large computational savings relative to a procedure that selects the bandwidth differently for the X- and Y-samples. Using the same bandwidth under both null and alternative hypotheses also fits with the principle espoused by [6].
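A minimal sketch of the pooled likelihood cross-validation bandwidth follows. The grid search is our own assumption (no optimizer is prescribed in the text), and the Cauchy density is again a hypothetical stand-in for L. The point illustrated is that the criterion depends on the combined sample only as a set of values, so the same $\hat b$ serves every permuted data set.

```python
import math

def cauchy_kernel(u):
    """Hypothetical heavy-tailed stand-in for L (not the Hall kernel)."""
    return 1.0 / (math.pi * (1.0 + u * u))

def lcv(sample, b, kernel=cauchy_kernel):
    """Likelihood cross-validation criterion: sum_i log f_hat_L(z_i | z_{-i}, b)."""
    k = len(sample)
    total = 0.0
    for i, zi in enumerate(sample):
        s = sum(kernel((zi - zj) / b) for j, zj in enumerate(sample) if j != i)
        total += math.log(s / ((k - 1) * b))
    return total

def pooled_lcv_bandwidth(x, y, grid):
    """Grid maximizer of the criterion applied to the combined sample; the
    result does not depend on how the pooled values are split or ordered."""
    pooled = list(x) + list(y)
    return max(grid, key=lambda b: lcv(pooled, b))

grid = [0.02 * 1.25 ** j for j in range(30)]
x = [math.sin(3.0 * i) for i in range(20)]
y = [2.0 * math.cos(5.0 * i) for i in range(15)]
b_hat = pooled_lcv_bandwidth(x, y, grid)
print(b_hat)
```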

Concerning L, Ref. [5] showed that kernels must be relatively heavy-tailed in order for them to perform well with respect to likelihood cross-validation. In particular, he showed that likelihood cross-validation fails miserably as a method for choosing the bandwidth of a kde based on a Gaussian kernel. The tails of the kernel must be considerably heavier than those of a Gaussian density in order for likelihood cross-validation to be effective. Proposition A1 in Appendix A.1 shows that under very general conditions L (as defined in (2)) has heavier tails than K. Therefore, the Bayesian notion of averaging commonly used kernel estimates with respect to a prior brings the resulting kernel estimate more in line with the conditions of [5]. This has a substantial benefit for our statistic inasmuch as we use a likelihood cross-validation bandwidth in its construction.

Consider the following kernel proposed by [5]:

$$L_H(u)=\left(8\pi e\,\Phi^2(1)\right)^{-1/2}\exp\left\{-\tfrac12\left[\log(1+|u|)\right]^2\right\},\qquad -\infty<u<\infty,$$

where Φ is the standard normal distribution function. Suppose that a kde is defined using kernel $L_H$ and its bandwidth is chosen by likelihood cross-validation. Ref. [5] shows that, in general, this cross-validation bandwidth will be asymptotically optimal in a Kullback–Leibler sense. We will therefore use $L=L_H$ in all subsequent simulations. Results in Appendix A.2 provide a kernel K and corresponding prior that produce $L_H$.
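As a quick numerical sanity check, the sketch below takes Hall's kernel to be $L_H(u)=c\exp\{-\tfrac12[\log(1+|u|)]^2\}$ with $c=(8\pi e\,\Phi^2(1))^{-1/2}$, confirms that it integrates to 1, and shows that its ratio to the Gaussian density grows in the tails. Integrating in the variable $t=\log(1+u)$ is our own numerical choice.

```python
import math

def std_normal_cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))

# Normalizing constant (8 * pi * e * Phi(1)^2)^(-1/2).
HALL_CONST = 1.0 / math.sqrt(8.0 * math.pi * math.e * std_normal_cdf(1.0) ** 2)

def hall_kernel(u):
    return HALL_CONST * math.exp(-0.5 * math.log1p(abs(u)) ** 2)

def gaussian(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

def hall_total_mass(t_max=12.0, steps=20000):
    """2 * integral_0^inf L_H(u) du, via u = exp(t) - 1 and the midpoint rule."""
    dt = t_max / steps
    total = 0.0
    for j in range(steps):
        t = (j + 0.5) * dt
        total += hall_kernel(math.expm1(t)) * math.exp(t) * dt  # du = e^t dt
    return 2.0 * total

print(hall_total_mass())
for u in (0.0, 2.0, 5.0):
    print(u, hall_kernel(u) / gaussian(u))  # ratio grows: heavier tails than Gaussian
```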

2.3. Further Properties of ALB

In Appendix A.3 we show that the test is consistent in the frequentist sense. In other words, for any alternative, the power of a test of fixed level α tends to 1 as m and n tend to ∞.

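Interestingly, ALB has the property of being sharply bounded above. Writing $\hat f_L$ for the kernel estimate with kernel L and bandwidth b that appears in (3), ALB can be rewritten as follows:

$$\mathrm{ALB}=\frac{1}{N}\sum_{i=1}^{m}\log\frac{\hat f_L(X_i\mid\mathbf{X}_{-i},b)}{\hat f_L(X_i\mid\mathbf{Z}_{-i},b)}+\frac{1}{N}\sum_{j=1}^{n}\log\frac{\hat f_L(Y_j\mid\mathbf{Y}_{-j},b)}{\hat f_L(Y_j\mid\mathbf{Z}_{-(m+j)},b)}.$$

Defining $S_i=\sum_{\ell\ne i}L\{(X_i-X_\ell)/b\}$ and $T_i=\sum_{j=1}^{n}L\{(X_i-Y_j)/b\}$, and noting that $T_i\ge0$,

$$\hat f_L(X_i\mid\mathbf{Z}_{-i},b)=\frac{S_i+T_i}{(N-1)b}\ge\frac{S_i}{(N-1)b}=\frac{m-1}{N-1}\,\hat f_L(X_i\mid\mathbf{X}_{-i},b),$$

and therefore

$$\log\frac{\hat f_L(X_i\mid\mathbf{X}_{-i},b)}{\hat f_L(X_i\mid\mathbf{Z}_{-i},b)}\le\log\frac{N-1}{m-1},\qquad i=1,\ldots,m.$$

A similar bound applies for the other component of ALB, implying that

$$\mathrm{ALB}\le\frac{m}{N}\log\frac{N-1}{m-1}+\frac{n}{N}\log\frac{N-1}{n-1}.\qquad (4)$$

Using the fact that $p\log(1/p)+(1-p)\log\{1/(1-p)\}$ has its maximum, $\log 2$, at $p=1/2$ when $0<p<1$, bound (4) implies that

$$\mathrm{ALB}\le\log 2+\frac{m}{N}\log\frac{m(N-1)}{N(m-1)}+\frac{n}{N}\log\frac{n(N-1)}{N(n-1)}.$$

Unless one of m and n is very small, the last two terms are negligible and the effective bound on ALB is $\log 2$. This reinforces the fact that ALB does not have the property of Bayes consistency. While it is true that ALB is an average of log-Bayes factors, none of these Bayes factors can ever provide compelling evidence in favor of the alternative. To reiterate, this problem is overcome by employing ALB in frequentist fashion.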
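Bound (4) is cheap to evaluate directly. The sketch below compares it with $\log 2$ for several sample sizes, and also computes the split of (4) into the binary entropy of $m/N$ (at most $\log 2$) plus two correction terms that shrink as both samples grow. Function names are hypothetical.

```python
import math

def alb_upper_bound(m, n):
    """Right-hand side of bound (4)."""
    big_n = m + n
    return ((m / big_n) * math.log((big_n - 1) / (m - 1))
            + (n / big_n) * math.log((big_n - 1) / (n - 1)))

def log2_plus_corrections(m, n):
    """log 2 plus the two correction terms; never smaller than bound (4)."""
    big_n = m + n
    return (math.log(2.0)
            + (m / big_n) * math.log(m * (big_n - 1) / (big_n * (m - 1)))
            + (n / big_n) * math.log(n * (big_n - 1) / (big_n * (n - 1))))

for m, n in [(5, 5), (50, 50), (100, 100), (1000, 1000), (100, 10)]:
    print(m, n, round(alb_upper_bound(m, n), 4), round(log2_plus_corrections(m, n), 4))
print("log 2 =", round(math.log(2.0), 4))
```

When $m=n$ the two quantities coincide, and for $m=n=1000$ the bound already agrees with $\log 2$ to about three decimal places.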

While ALB can take on positive values when the null hypothesis is true, our proof of frequentist consistency shows that, under $H_0$, $P(\mathrm{ALB}<0)\to1$ as $m,n\to\infty$. This implies that if 0 is used as a critical value, then the resulting test level tends to 0 as $m,n\to\infty$. So, even though ALB does not tend to ∞ under the alternative, the sign of ALB provides compelling evidence for the hypotheses of interest when the sample sizes are large.

The exact conditional distribution of ALB under the null hypothesis is known, as we use a permutation test. Nonetheless, it is of some interest to have an impression of the unconditional distribution of ALB. To this end, we randomly select two normal mixture densities that differ. The number of components M in the first mixture is between 2 and 20 and chosen from a distribution such that the probability that $M=m$ is proportional to , . Given $M=m$, mixture weights are drawn from a Dirichlet distribution with all m parameters equal to . Given M and the mixture weights, variances of the normal components are a random sample from an inverse gamma distribution with both parameters equal to . Finally, means of the normal components are such that given are independent with , . The second normal mixture is independently selected using exactly the same mechanism. Random selection of densities in this manner for simulation studies has been proposed and explored in [7].

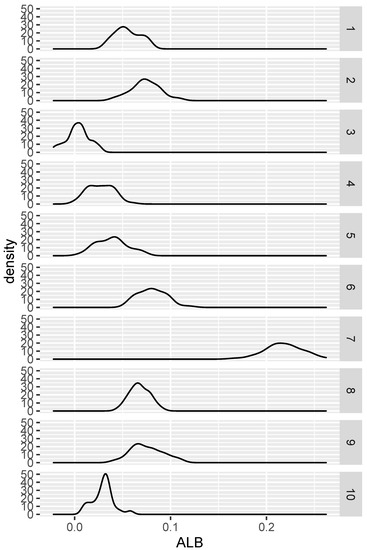

We draw a sample of size 100 from each of the two randomly generated densities (so that $m=n=100$), and then compute ALB. This procedure is replicated 100 times on the same two densities. We then repeat the whole procedure for nine more pairs of randomly selected densities. The results are shown in Figure 1. Save for case 3, the proportion of positive values of ALB is nearly 1 in all cases.

Figure 1.

Distribution of ALB under various alternative hypotheses.

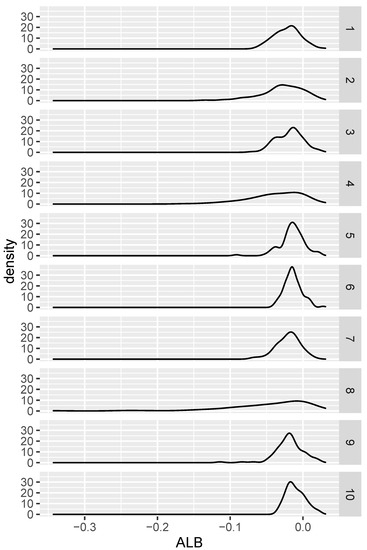

We repeated a similar procedure for the null hypothesis setting. The simulation was exactly the same, except that in each of the ten cases only one density was generated, and a pair of independent samples (of size 100 each) was drawn from this density. The resulting distributions can be seen in Figure 2. The proportions of cases where $\mathrm{ALB}<0$ for the 10 densities were, respectively, 0.89, 0.83, 0.83, 0.84, 0.85, 0.87, 0.91, 0.84, 0.84, and 0.76. These results are consistent with the fact that, under $H_0$, $P(\mathrm{ALB}<0)$ tends to 1 with sample size.

Figure 2.

Distribution of ALB under various null hypotheses.

We feel that ALB has potential for screening variables in a binary classification problem. Since ALB is negative with high probability under $H_0$, we feel that 0 is a naturally interpretable cutoff for variable inclusion. However, we leave this topic for future research.

3. Simulations

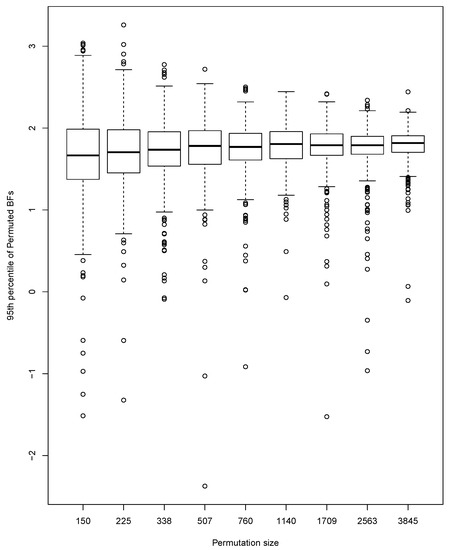

We perform a small simulation study to investigate the size and power of our test. To explore the effect of the number of permutations, we generate 500 pairs of data sets, with one data set being a random sample of size m from a standard normal distribution, and the other a random sample of size n from a normal distribution with mean 0 and standard deviation 2. For each of the 500 pairs of data sets, the 95th percentile of the $\mathrm{ALB}^*$ values is approximated using a range of different numbers (N) of permutations, starting at 100 and increasing by a factor of 1.5 up to 3845. Results are indicated by the boxplots in Figure 3. The percentiles are centered at approximately the same value for all N. Not surprisingly, the variability of the percentiles becomes smaller as N increases. This implies a certain amount of mismatch between percentiles at $N=3845$ and those at smaller N.

Figure 3.

Effect of number of permutations on the 95th percentile of permutation distributions.

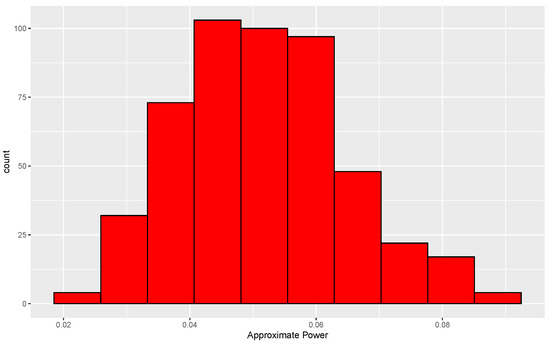

The consequence of the mismatch just alluded to can be investigated by determining the true conditional and unconditional levels of tests based on small N. For the null case, two data sets, each of size 50, are generated from a common normal distribution. Since the distribution of ALB is invariant to location and scale in the null case, we use a standard normal without loss of generality. For each pair of data sets, the data are randomly permuted 338 times, which leads to 338 values of $\mathrm{ALB}^*$. A second set of 3845 permutations is then performed, leading to 3845 more values of $\mathrm{ALB}^*$. The proportion of $\mathrm{ALB}^*$ values from the second set that exceed the 95th percentile of the $\mathrm{ALB}^*$ values formed from the first set is then determined. This proportion is approximately equal to the conditional level of the test based on 338 permutations. The same procedure is used for each of 500 pairs of data sets, and the resulting distribution of approximate levels is shown in Figure 4.

Figure 4.

Distribution of approximate conditional levels of permutation tests under the null hypothesis. Each conditional level is the proportion of 3845 $\mathrm{ALB}^*$ values from permuted data sets that exceed the 95th percentile of $\mathrm{ALB}^*$ values formed from 338 permuted data sets. Results are based on 500 replications in each of which both distributions are standard normal.

The histogram is centered near 0.05, and 87% of the conditional levels are between 0.03 and 0.07. Furthermore, an approximation to the unconditional level is $\bar\alpha=\frac{1}{500}\sum_{i=1}^{500}\hat\alpha_i$, where $\hat\alpha_i$ is the approximate conditional level for the ith data set, $i=1,\ldots,500$. Based on these results, use of only 338 permutations is arguably adequate.
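The level-checking procedure above reduces to a few lines of code. In this sketch the per-replication values are simulated standard normal draws standing in for the $\mathrm{ALB}^*$ values (hypothetical data, chosen only so the example runs quickly), and the empirical-quantile convention is our own choice.

```python
import math
import random

def empirical_quantile(values, prob):
    """Smallest sorted value with at least a fraction prob of values at or below it."""
    s = sorted(values)
    idx = min(len(s) - 1, max(0, math.ceil(prob * len(s)) - 1))
    return s[idx]

def approx_conditional_level(first_set, second_set, prob=0.95):
    """Fraction of second_set values exceeding the prob-quantile of first_set."""
    cutoff = empirical_quantile(first_set, prob)
    return sum(1 for v in second_set if v > cutoff) / len(second_set)

def approx_unconditional_level(levels):
    """Average of the approximate conditional levels over replications."""
    return sum(levels) / len(levels)

# Simulated stand-ins for 338 and 3845 permutation values per replication.
rng = random.Random(1)
levels = []
for _ in range(50):
    first = [rng.gauss(0.0, 1.0) for _ in range(338)]
    second = [rng.gauss(0.0, 1.0) for _ in range(3845)]
    levels.append(approx_conditional_level(first, second))
print(approx_unconditional_level(levels))
```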

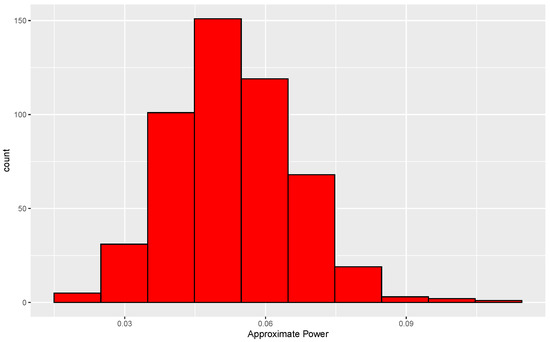

The same experiment is repeated except now the two data sets are drawn from different distributions, a standard normal and a normal with mean 0 and standard deviation 2. Results from this experiment are given in Figure 5.

Figure 5.

Distribution of approximate conditional levels of permutation tests under an alternative hypothesis. Each conditional level is the proportion of 3845 $\mathrm{ALB}^*$ values from permuted data sets that exceed the 95th percentile of $\mathrm{ALB}^*$ values formed from 338 permuted data sets. Results are based on 500 replications in each of which one distribution is standard normal and the other is normal with mean 0 and standard deviation 2.

As in the null case, the conditional levels based on the use of 338 permutations are quite good. Eighty-eight percent of the levels are between 0.03 and 0.07, and the approximate unconditional level is 0.051.

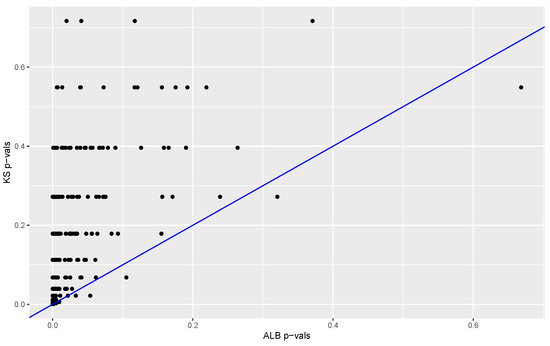

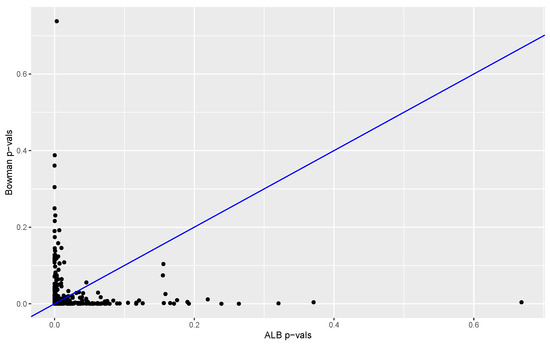

The proportion of $\mathrm{ALB}^*$ values from permuted data sets that are larger than the ALB computed from the original data provides a p-value. The p-values obtained with our method (based on 3845 permutations) are compared with those obtained from the Kolmogorov–Smirnov test and Bowman's two-sample test. Results are summarized in Figure 6 and Figure 7. In 98% of the replications the K-S p-value was larger than the ALB p-value, and in 57% of the cases the Bowman p-value was equal to or larger than the ALB p-value. These results suggest that in this case our test has much better power than the Kolmogorov–Smirnov test and power at least comparable to that of Bowman's test.
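The p-value just defined can be sketched as below, following the text's definition (the proportion of permuted statistics strictly larger than the observed one; some authors instead add 1 to numerator and denominator). The mean difference is a hypothetical stand-in for ALB, used only to exercise the function.

```python
import random

def permutation_p_value(x, y, statistic, n_perm=3845, seed=0):
    """Proportion of permuted-data statistics strictly larger than the observed one."""
    rng = random.Random(seed)
    observed = statistic(x, y)
    pooled = list(x) + list(y)
    m = len(x)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if statistic(pooled[:m], pooled[m:]) > observed:
            exceed += 1
    return exceed / n_perm

def mean_difference(a, b):
    """Hypothetical stand-in for ALB."""
    return sum(a) / len(a) - sum(b) / len(b)

print(permutation_p_value([10, 11, 12], [0, 1, 2], mean_difference, n_perm=1000))
print(permutation_p_value([0, 1, 2], [10, 11, 12], mean_difference, n_perm=1000))
```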

Figure 6.

Kolmogorov–Smirnov p-values versus ALB p-values. Results are based on 500 data sets in each of which one distribution is standard normal and the other is normal with mean 0 and standard deviation 2. The ALB p-value is less than the KS-test p-value in 98% of cases. There are only 183 p-values from the KS-test that are less than 0.05.

Figure 7.

Bowman p-values versus ALB p-values. Results are based on 500 data sets in each of which one distribution is standard normal and the other is normal with mean 0 and standard deviation 2. The numbers of p-values less than 0.05 for Bowman's test and the ALB test are 454 and 458, respectively. The ALB p-value is less than, more than and equal to the Bowman p-value in 49%, 43% and 8% of cases, respectively.

4. A Bivariate Extension of the Two-Sample Test and Application to Connectionist Bench Data

Our method can be extended to the bivariate case by using a bivariate kernel density estimate. Assume now that $\mathbf{X}_1,\ldots,\mathbf{X}_m$ are independent and identically distributed from density f and $\mathbf{Y}_1,\ldots,\mathbf{Y}_n$ are independent and identically distributed from g, where $\mathbf{X}_i$ and $\mathbf{Y}_j$ are each bivariate observations, $i=1,\ldots,m$, $j=1,\ldots,n$.

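A product kernel will be used, i.e., the bivariate kernel is the product of two univariate kernels K. For k arbitrary bivariate observations $\mathbf{z}_i=(z_{i1},z_{i2})$, $i=1,\ldots,k$, $\mathbf{z}=(\mathbf{z}_1,\ldots,\mathbf{z}_k)$, and $\mathbf{x}=(x_1,x_2)$, the kernel estimate is defined by

$$\hat f(\mathbf{x}\mid\mathbf{z},\mathbf{h})=\frac{1}{kh_1h_2}\sum_{i=1}^{k}K\left(\frac{x_1-z_{i1}}{h_1}\right)K\left(\frac{x_2-z_{i2}}{h_2}\right),$$

where $\mathbf{h}=(h_1,h_2)$ is a two-vector of (positive) bandwidths.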
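A minimal sketch of the product-kernel estimate, with a Gaussian K chosen purely for illustration, together with a midpoint-rule check that it integrates to 1 over the plane. The data points and bandwidths are hypothetical.

```python
import math

def gaussian(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

def product_kde(x, z, h, kernel=gaussian):
    """(1 / (k h1 h2)) * sum_i K((x1 - z_i1) / h1) * K((x2 - z_i2) / h2)."""
    h1, h2 = h
    total = sum(kernel((x[0] - zi[0]) / h1) * kernel((x[1] - zi[1]) / h2) for zi in z)
    return total / (len(z) * h1 * h2)

z = [(0.0, 0.0), (1.0, 2.0)]
h = (0.5, 1.0)
step = 0.1
# Midpoint rule over [-5, 6] x [-7, 9], which holds essentially all the mass.
mass = sum(
    product_kde(((i + 0.5) * step, (j + 0.5) * step), z, h) * step * step
    for i in range(-50, 60)
    for j in range(-70, 90)
)
print(mass)
```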

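We will use the same sort of notation as before, i.e., $N=m+n$, $\mathbf{Z}$ is the combined sample, $\mathbf{Z}_{-i}$ is $\mathbf{Z}$ with its ith observation removed, and $\mathbf{X}_{-i}$ is the object with all the components of $\mathbf{X}$ except $\mathbf{X}_i$, $i=1,\ldots,m$. In this case the ith Bayes factor is defined as

$$B_i=\frac{\int_0^\infty\!\int_0^\infty\hat f(\mathbf{X}_i\mid\mathbf{X}_{-i},\mathbf{h})\,\pi(h_1,h_2)\,dh_1\,dh_2}{\int_0^\infty\!\int_0^\infty\hat f(\mathbf{X}_i\mid\mathbf{Z}_{-i},\mathbf{h})\,\pi(h_1,h_2)\,dh_1\,dh_2},\qquad i=1,\ldots,m,$$

and similarly for $i=m+1,\ldots,N$. As before, the test statistic is $\mathrm{ALB}=N^{-1}\sum_{i=1}^{N}\log B_i$.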

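This form may seem daunting, but it reduces to a more familiar form if we take $\pi(h_1,h_2)=\pi_0(h_1/b_1)\,\pi_0(h_2/b_2)/(b_1b_2)$. In this case, proceeding exactly as in Section 2, $B_i$ has the form

$$B_i=\frac{\hat f_L(\mathbf{X}_i\mid\mathbf{X}_{-i},\mathbf{b})}{\hat f_L(\mathbf{X}_i\mid\mathbf{Z}_{-i},\mathbf{b})},\qquad i=1,\ldots,m,$$

and similarly for $i=m+1,\ldots,N$, where $\mathbf{b}=(b_1,b_2)$, $\hat f_L$ is the product-kernel estimate with K replaced by L, and L is defined by (2).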

We will analyze a subset of the connectionist bench data, which consist of measurements obtained by bouncing sonar signals off either rocks or metal cylinders. The data may be found at the UCI Machine Learning Repository [8]. There are 60 variables in the data set, with $m=111$ and $n=97$ measurements of each variable for the metal cylinders and rocks, respectively. Variable numbers (1 to 60) correspond to increasing aspect angles at which signals are bounced off either metal or rock, and each of the 60 numbers is the energy within a particular frequency band, integrated over a certain period of time. We will apply our test to see whether the first two variables (corresponding to the smallest aspect angles) have a different distribution for rocks than they do for metal cylinders. In our analysis K is taken to be φ, the standard normal density, and $\pi_0$ to be of the form (A1). In this event L is a t-density whose degrees of freedom are set by the parameter of (A1). We use a value of this parameter that leads to a fairly heavy-tailed kernel, which is desirable for reasons discussed previously.

The data for each variable lie inherently between 0 and 1, and bivariate kernel estimates display boundary effects along the lines $x=0$ and $y=0$, with the largest bias near the origin. We therefore use a reflection technique to reduce bias along these two lines. Suppose one has k observations $(x_i,y_i)$, $i=1,\ldots,k$, on the unit square. Each observation is reflected to create three new observations: $(-x_i,y_i)$, $(x_i,-y_i)$ and $(-x_i,-y_i)$, $i=1,\ldots,k$. One then simply computes, at points in the unit square, a standard kernel density estimate from the data set of size $4k$, and multiplies it by 4 to ensure integration to 1. The value of ALB is computed as described previously, except that each leave-out estimate leaves out four values: the observation at which the estimate is evaluated plus its three reflected versions. In this way the kde is constructed from data that are independent of the value at which the kde is evaluated.
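The reflection estimator and its leave-four-out version can be sketched as follows. The Gaussian product kernel, the data points and the bandwidths are illustrative assumptions, and the function names are hypothetical. The numerical check confirms that multiplying by 4 gives an estimate that integrates to 1 over the positive quadrant.

```python
import math

def gaussian(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

def reflect(data):
    """Each (x, y) together with its reflections (-x, y), (x, -y) and (-x, -y)."""
    out = []
    for x, y in data:
        out.extend([(x, y), (-x, y), (x, -y), (-x, -y)])
    return out

def reflected_kde(point, data, h, kernel=gaussian):
    """Product-kernel estimate from the 4k reflected points, multiplied by 4."""
    h1, h2 = h
    aug = reflect(data)
    total = sum(kernel((point[0] - a) / h1) * kernel((point[1] - b) / h2) for a, b in aug)
    return 4.0 * total / (len(aug) * h1 * h2)

def reflected_loo(data, i, h, kernel=gaussian):
    """Estimate at data[i] that leaves out data[i] and its three reflections."""
    rest = data[:i] + data[i + 1:]
    return reflected_kde(data[i], rest, h, kernel)

data = [(0.2, 0.3), (0.5, 0.1), (0.05, 0.6)]
h = (0.1, 0.1)
step = 0.01
mass = sum(reflected_kde(((i + 0.5) * step, (j + 0.5) * step), data, h) * step * step
           for i in range(150) for j in range(150))
print(mass)
print(reflected_loo(data, 0, h))
```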

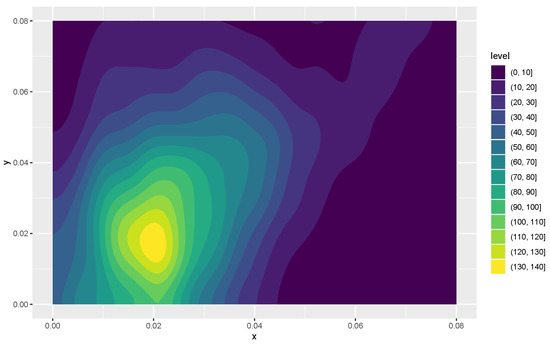

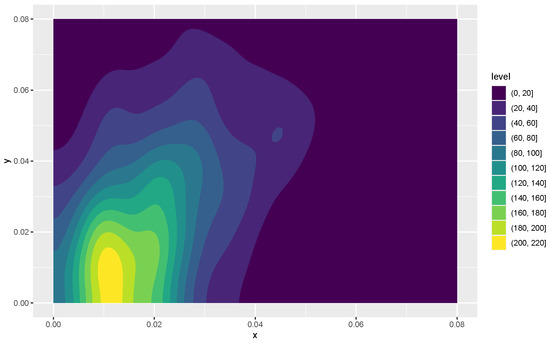

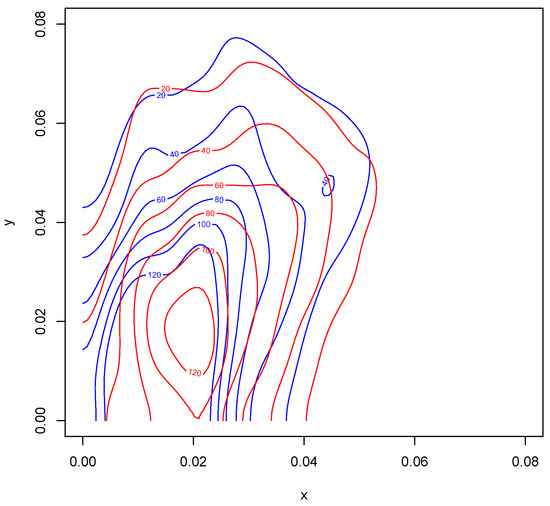

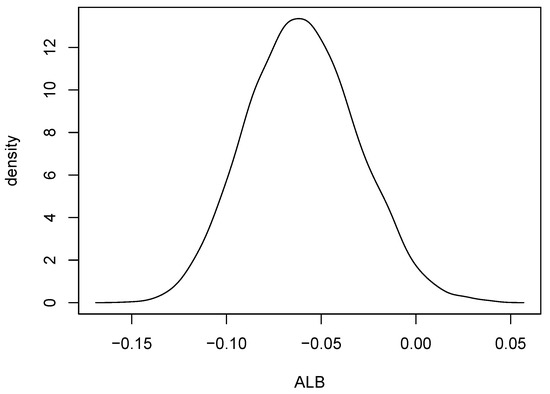

Kernel density estimates for variables 1 and 2, in the form of heat maps, are shown in Figure 8 and Figure 9, and contours of the estimates are given in Figure 10. The latter figure suggests that the distributions for metal cylinders and rocks are different. The value of ALB turned out to be 0.013, and an approximate p-value based on 10,000 permuted data sets was 0.0076. So, there is strong evidence of a difference between the rock and metal bivariate distributions. Interestingly, the percentage of negative $\mathrm{ALB}^*$ values among the 10,000 permutations was . A kernel density estimate based on the 10,000 values of $\mathrm{ALB}^*$ is shown in Figure 11.

Figure 8.

A heat map of the first two variables for the signals bounced off the metal cylinder. Variables x and y correspond to the smallest and next to smallest aspect angles, respectively.

Figure 9.

A heat map of the first two variables for the signals bounced off the rock object. Variables x and y are as defined in Figure 8.

Figure 10.

Contour plots of the first two variables of both rock and cylinder objects. The blue contours correspond to the rock measurements and red to the cylinder measurements. Variables x and y are as defined in Figure 8.

Figure 11.

A kernel density estimate computed using 10,000 values of $\mathrm{ALB}^*$ from permuted data sets. The value of ALB for the original data set was 0.013.

5. Conclusions and Future Work

We have proposed a new nonparametric test of the null hypothesis that two densities are equal. An attractive property of the test is that its critical values are defined by a permutation distribution, allaying essentially any concern about test validity. The fact that the statistic is an average of log-Bayes factors leads to another attractive property: a critical value of 0 leads to a test with type I error probability tending to 0 with sample size. A simulation study showed the new test to have much better power than the Kolmogorov–Smirnov test in a case where the two densities differed with respect to scale. An application to connectionist data illustrated the usefulness of our methodology for bivariate data.

Future work includes efforts to increase the speed of computing the test statistic and its permutation distribution, especially for large data sets. We are also interested in applying the new test to the problem of screening variables prior to performing binary classification. A common method of doing so is to compute a two-sample test statistic for each variable, and to then select variables whose statistics exceed some threshold. An inherent problem in this approach is objectively choosing a threshold. Results of the current paper suggest that 0 would be a natural and effective threshold for variable screening.

Author Contributions

Conceptualization, N.M. and J.D.H.; Methodology, N.M. and J.D.H.; Software, N.M. and J.D.H.; Investigation, N.M. and J.D.H.; Writing, N.M. and J.D.H. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by the Department of Statistics, Texas A&M University.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Relationship of K and L

By far the most popular choice of kernel in practice is the Gaussian kernel, $K(u)=\phi(u)$, $-\infty<u<\infty$, where φ is the standard normal density. For $\nu>0$, define

$$\pi_0(u)=\frac{2(\nu/2)^{\nu/2}}{\Gamma(\nu/2)}\,u^{-(\nu+1)}\exp\left(-\frac{\nu}{2u^2}\right),\qquad u>0.\qquad (A1)$$

If one takes K to be the standard normal kernel and uses prior (A1), then the corresponding kernel L is a t-density with ν degrees of freedom. An interesting aspect of these kernels is that they have heavier tails than the Gaussian kernel. This is especially true for the more diffuse, or noninformative, priors, i.e., those for which ν is small. (The mean and variance of (A1) exist for $\nu>1$ and $\nu>2$, respectively. At $\nu=3$, the two are 1.382 and 1.090, respectively, and as $\nu\to\infty$ they converge to 1 and 0.)
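The moments quoted above and the normal-to-t relationship can be checked numerically. This sketch assumes that (A1) is the density of u when $u^{-2}$ has a Gamma distribution with shape $\nu/2$ and rate $\nu/2$, which is the standard scale-mixture construction of the t-density; the integration range and step count are our own numerical choices.

```python
import math

def prior_a1(u, nu):
    """Assumed form of (A1): density of u when u**-2 ~ Gamma(shape nu/2, rate nu/2)."""
    log_c = math.log(2.0) + 0.5 * nu * math.log(nu / 2.0) - math.lgamma(nu / 2.0)
    return math.exp(log_c - (nu + 1.0) * math.log(u) - nu / (2.0 * u * u))

def prior_mean_var(nu):
    """Closed-form mean (nu > 1) and variance (nu > 2) of the prior."""
    mean = math.sqrt(nu / 2.0) * math.exp(math.lgamma((nu - 1.0) / 2.0) - math.lgamma(nu / 2.0))
    return mean, nu / (nu - 2.0) - mean ** 2

def t_density(v, nu):
    log_c = math.lgamma((nu + 1.0) / 2.0) - math.lgamma(nu / 2.0) - 0.5 * math.log(nu * math.pi)
    return math.exp(log_c - 0.5 * (nu + 1.0) * math.log1p(v * v / nu))

def kernel_l(v, nu, s_lo=-6.0, s_hi=10.0, steps=40000):
    """L(v) = integral_0^inf (1/u) phi(v/u) pi_0(u) du, with u = exp(s) so du/u = ds."""
    ds = (s_hi - s_lo) / steps
    total = 0.0
    for j in range(steps):
        u = math.exp(s_lo + (j + 0.5) * ds)
        total += math.exp(-0.5 * (v / u) ** 2) / math.sqrt(2.0 * math.pi) * prior_a1(u, nu) * ds
    return total

mean, var = prior_mean_var(3.0)
print(round(mean, 3), round(var, 3))
print(kernel_l(1.5, 3.0), t_density(1.5, 3.0))
```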

The fact that the kernel L is more heavy-tailed than K in the previous example is not an isolated phenomenon, as indicated by the following proposition (which is straightforward to prove):

Proposition A1.

If $\pi_0$ has support with and the tails of K decay exponentially, then the tails of L are heavier than those of K in that $L(u)/K(u)\to\infty$ as $|u|\to\infty$.

In principle, many different choices of $\pi_0$ and K could produce the same kernel L. Or, one might ask: given kernel K, what prior would produce a specified L? When K is Gaussian, the latter question is answered by solving an integral equation. Unfortunately, doing so, at least in a general sense, exceeds our mathematical abilities. In the case where K is uniform, though, an elegant solution exists, as shown in Appendix A.2.

Appendix A.2. When K Is Uniform

In the special case where K is uniform on the interval $[-1,1]$, it is easy to check that, for all u,

$$L(u)=\frac12\int_{|u|}^{\infty}\frac{\pi_0(s)}{s}\,ds.\qquad (A2)$$

If $\pi_0$ has support $(0,\infty)$, then L has support $(-\infty,\infty)$, and hence we see again that averaging kernels with respect to a prior leads to a more heavy-tailed kernel.

Since our statistic ends up being a log-likelihood ratio based on kernel L, an interesting question is: what prior gives rise to a specified kernel L? Taking $u>0$, (A2) implies that

$$\pi_0(u)=-2u\,L'(u),\qquad u>0.\qquad (A3)$$

When L is decreasing on $(0,\infty)$, it follows that $\pi_0$ is a density. (Under mild tail conditions on L, and assuming that $L(0)$ exists finite, it is easy to show using integration by parts that (A3) integrates to 1 on $(0,\infty)$.)

Suppose that a kde is defined using the Hall kernel and its bandwidth is chosen by likelihood cross-validation. Ref. [5] shows that, in general, this cross-validation bandwidth will be asymptotically optimal in a Kullback–Leibler sense. In contrast, using cross-validation to choose the bandwidth of a uniform kernel kde will produce a bandwidth that diverges to ∞ as the sample size tends to ∞.

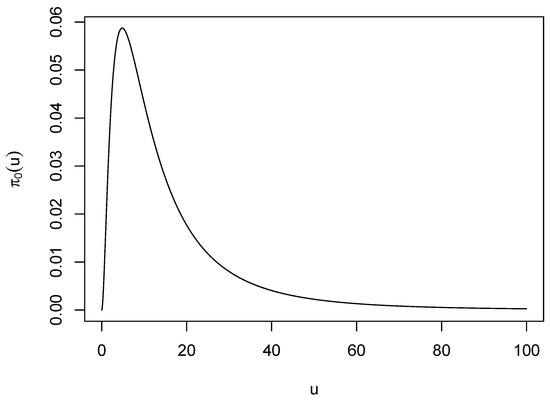

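Using (A3), the prior that produces $L_H$, shown in Figure A1, is

$$\pi_H(u)=2\left(8\pi e\,\Phi^2(1)\right)^{-1/2}\frac{u\log(1+u)}{1+u}\exp\left\{-\tfrac12\left[\log(1+u)\right]^2\right\},\qquad u>0,$$

where Φ is the standard normal distribution function. This shape for the bandwidth prior could be considered canonical inasmuch as $\pi_0$ will be similarly shaped for kernels L that are decreasing on $(0,\infty)$.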
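The relationship between $\pi_H$ and Hall's kernel can be verified numerically. This sketch assumes K is uniform on $[-1,1]$, so that $L(v)=\tfrac12\int_{|v|}^\infty \pi_0(s)/s\,ds$, and takes $L_H(u)=c\exp\{-\tfrac12[\log(1+|u|)]^2\}$ with $c=(8\pi e\,\Phi^2(1))^{-1/2}$; it checks that $\pi_H(u)=-2uL_H'(u)$ integrates to 1 and that mixing uniform kernels over $\pi_H$ reproduces $L_H$ at a test point.

```python
import math

def std_normal_cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))

HALL_CONST = 1.0 / math.sqrt(8.0 * math.pi * math.e * std_normal_cdf(1.0) ** 2)

def hall_kernel(u):
    return HALL_CONST * math.exp(-0.5 * math.log1p(abs(u)) ** 2)

def hall_prior(u):
    """pi_H(u) = -2 u L_H'(u), u > 0."""
    t = math.log1p(u)
    return 2.0 * HALL_CONST * u * t / (1.0 + u) * math.exp(-0.5 * t * t)

def integrate_tail(func, lower, t_max=14.0, steps=40000):
    """Midpoint rule for integral_lower^inf func(s) ds, with s = exp(t) - 1."""
    t_lo = math.log1p(lower)
    dt = (t_max - t_lo) / steps
    total = 0.0
    for j in range(steps):
        t = t_lo + (j + 0.5) * dt
        total += func(math.expm1(t)) * math.exp(t) * dt  # ds = e^t dt
    return total

mass = integrate_tail(hall_prior, 0.0)
v = 0.7
# Uniform K on [-1, 1]: L(v) = (1/2) * integral_{|v|}^inf pi_0(s) / s ds.
l_at_v = 0.5 * integrate_tail(lambda s: hall_prior(s) / s, abs(v))
print(mass, l_at_v, hall_kernel(v))
```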

Figure A1.

The prior that produces the Hall kernel when K is uniform.

Appendix A.3. Consistency

Here we prove

- R1. frequentist consistency of our test, and

- R2. $P(\mathrm{ALB}<0)\to1$ under $H_0$ as $m,n\to\infty$.

Our proof uses the following assumptions.

- A1. Under the null and alternative hypotheses the following integrals exist finite:

  When the alternative hypothesis is true, f and g are assumed to be different in the sense that their total variation distance is positive.

- A2. The kernel L in ALB (expression (3)) is the Hall kernel, $L_H$.

- A3. The combined-data likelihood cross-validation criterion is maximized over an interval of the form , where is an arbitrarily small positive constant. The maximizer of this criterion is denoted $\hat b$.

- A4. The ratio $m/N$ tends to p, $0<p<1$, as $m,n$ tend to ∞.

- A5. The densities f, g and $pf+(1-p)g$ satisfy the conditions of [5] that are needed for the asymptotic optimality of a likelihood cross-validation bandwidth.

- A6. Under the null hypothesis, let $R(k,b)$ be the Kullback–Leibler risk of a kernel density estimate based on sample size k, kernel $L_H$ and bandwidth b. Then R satisfies

  for positive constants a, and with .

Before proceeding to the proof, some remarks about assumption A6 are in order. This condition is needed only in proving R2, and represents a subset of the cases studied by [5]. It has been assumed merely to allow a more concise proof of R2, which remains true under more general conditions on the Kullback–Leibler risk.

The critical values of a test with fixed size α will tend to 0 as m and n tend to ∞ so long as ALB tends to 0 in probability under the null hypothesis. Therefore, the power of the test will tend to 1 if we can show that ALB tends to a positive constant under the alternative. Our proof of consistency thus boils down to showing that, as m and n tend to ∞, ALB converges in probability to 0 and to a positive number under the null and alternative hypotheses, respectively.

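For data $\mathbf{z}=(z_1,\ldots,z_k)$, define

$$\mathrm{CV}(b\mid\mathbf{z})=\frac1k\sum_{i=1}^{k}\log\hat f_L(z_i\mid\mathbf{z}_{-i},b),$$

where $\hat f_L$ is the kernel estimate with kernel $L=L_H$. The statistic ALB may then be written

$$\mathrm{ALB}=\frac{m}{N}\,\mathrm{CV}(\hat b\mid\mathbf{X})+\frac{n}{N}\,\mathrm{CV}(\hat b\mid\mathbf{Y})-\mathrm{CV}(\hat b\mid\mathbf{Z}),$$

where $\hat b$ maximizes $\mathrm{CV}(b\mid\mathbf{Z})$ for b in the interval of A3.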

Now suppose that is a random sample from density d, is the expectation of the Kullback–Leibler loss of and define

where exists finite. Then if d satisfies the conditions of [5] and ,

uniformly in , where is arbitrarily small. By the strong law of large numbers converges to 0 in probability. Furthermore, tends to 0 as . If the maximizer of is in it therefore follows that converges in probability to as .

In the null case, (A4) implies that

uniformly in , where we have used all of A1–A5. Since , (A5) implies that ALB converges to 0 in probability as $m,n\to\infty$, which proves one part of R1.

To prove R2, we first observe that the bias component of is free of sample size, and hence the first order term of (A5) is free of bias components. Along with A3 and A6, this implies that

uniformly in . By A5, is asymptotic in probability to , the minimizer of the Kullback–Leibler risk . Along with (A6), this implies that

By A6, we have

where

Combining the previous results yields

Using the fact that , it now follows that $P(\mathrm{ALB}<0)\to1$ as $m,n\to\infty$.

Turning to the alternative case, we apply (A4) to conclude that , and are consistent for , , and , respectively, where

It follows that is consistent for , where denotes the Kullback–Leibler divergence between and . By the Csiszár-Kemperman-Kullback-Pinsker inequality,

with the last inequality following by assumption. This completes the proof of R1.

References

- Merchant, N.; Hart, J.; Choi, T. Use of cross-validation Bayes factors to test equality of two densities. arXiv 2020, arXiv:2003.06368.

- Bowman, A.W.; Azzalini, A. Applied Smoothing Techniques for Data Analysis: The Kernel Approach with S-Plus Illustrations; OUP Oxford: New York, NY, USA, 1997; Volume 18.

- Baranzano, R. Non-Parametric Kernel Density Estimation-Based Permutation Test: Implementation and Comparisons. Ph.D. Thesis, Uppsala University, Uppsala, Sweden, 2011.

- Hart, J.D.; Choi, T.; Yi, S. Frequentist nonparametric goodness-of-fit tests via marginal likelihood ratios. Comput. Stat. Data Anal. 2016, 96, 120–132.

- Hall, P. On Kullback-Leibler loss and density estimation. Ann. Stat. 1987, 15, 1491–1519.

- Young, S.G.; Bowman, A.W. Non-parametric analysis of covariance. Biometrics 1995, 51, 920–931.

- Hart, J.D. Use of BayesSim and smoothing to enhance simulation studies. Open J. Stat. 2017, 7, 153–172.

- Dua, D.; Graff, C. UCI Machine Learning Repository. School of Information and Computer Sciences, University of California, Irvine. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 15 March 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).