Abstract

Gradient Learning (GL), which aims to estimate the gradient of a target function, has attracted much attention in variable selection problems due to its mild structural requirements and wide applicability. Despite rapid progress, the majority of existing GL works are based on the empirical risk minimization (ERM) principle, which may suffer degraded performance in complex data environments, e.g., under non-Gaussian noise. To alleviate this sensitivity, we propose a new GL model based on the tilted ERM criterion and establish its theoretical support from the function approximation viewpoint. Specifically, the operator approximation technique plays a crucial role in our analysis. To solve the proposed learning objective, a gradient descent method is proposed, and its convergence analysis is provided. Finally, simulated experimental results validate the effectiveness of our approach when the input variables are correlated.

1. Introduction

Data-driven variable selection aims to select informative features related to the response in high-dimensional statistics and plays a critical role in many areas. For example, if the milk production of dairy cows can be predicted from blood biochemical indexes, then doctors are eager to know which indexes drive milk production, because each index is measured independently at additional cost. Therefore, an explainable and interpretable system for selecting the effective variables is critical to convince domain experts. Currently, variable selection methods can be roughly divided into three categories: linear models [1,2,3], nonlinear additive models [4,5,6], and partial linear models [7,8,9]. Although they achieve promising performance in some applications, these methods still suffer from two main limitations. Firstly, the target function of these methods is restricted by the assumption of a specific structure. Secondly, these methods cannot reveal how the coordinates vary with respect to each other. As an alternative, Mukherjee and Zhou [10] proposed the gradient learning (GL) model, which aims to learn the gradient functions and enjoys the model-free property.

Despite its empirical success [11,12,13], the GL model still has some limitations, such as high computational cost, a lack of sparsity in high-dimensional data, and a lack of robustness to complex noise. To this end, several variants of the GL model have been developed for individual purposes. For example, Dong and Zhou [14] proposed a stochastic gradient descent algorithm for learning the gradient and demonstrated that the gradient estimated by the algorithm converges to the true gradient. Mukherjee et al. [15] provided an algorithm to reduce dimension on manifolds for high-dimensional data with few observations. They obtained generalization error bounds for the gradient estimates and revealed that the convergence rate depends on the intrinsic dimension of the manifold. Borkar et al. [16] combined ideas from Spall's Simultaneous Perturbation Stochastic Approximation with compressive sensing and proposed to learn the gradient with few function evaluations. Ye et al. [17] proposed a sparse GL model that addresses sparsity of the estimated gradients for high-dimensional variable selection. He et al. [18] developed a three-step sparse GL method which allows for efficient computation, admits general predictor effects, and attains desirable asymptotic sparsistency. Following the research direction of robustness, Guinney et al. [19] provided a multi-task model which is efficient and robust for high-dimensional data. In addition, Feng et al. [20] provided a robust gradient learning (RGL) framework by introducing a robust regression loss function. They also provided a simple computational algorithm based on gradient descent and analyzed the convergence of the proposed method.

Despite rapid progress, the GL model and its extensions mentioned above are established under the framework of empirical risk minimization (ERM). While enjoying nice statistical properties, ERM usually performs poorly in situations where average performance is not an appropriate surrogate for the problem of interest [21]. Recently, a novel framework named tilted empirical risk minimization (TERM) was proposed to flexibly address the deficiencies of ERM [21]. By using a new loss named the t-tilted loss, it has been shown that TERM (1) can increase or decrease the influence of outliers, respectively, to enable fairness or robustness; (2) has variance reduction properties that can benefit generalization; and (3) can be viewed as a smooth approximation to a superquantile method. Considering these strengths, we propose to investigate GL under the framework of TERM. The main contributions of this paper can be summarized as follows:

- New learning objective. We propose to learn the gradient function under the framework of TERM. Specifically, the t-tilted loss is embedded into the GL model. To the best of our knowledge, this may be the first endeavor on this topic.

- Theoretical guarantees. For the new learning objective, we estimate the generalization bound by error decomposition and the operator approximation technique, and further establish theoretical consistency and the convergence rate. Specifically, the convergence rate recovers the result of traditional GL [10] as t tends to 0.

- Efficient computation. A gradient descent method is provided to solve the proposed learning objective. By showing the smoothness and strong convexity of the learning objective, convergence to the optimal solution is proved.

The rest of this paper is organized as follows: Section 2 proposes the GL with t-tilted loss (TGL) and states the main theoretical results on the asymptotic estimation. Section 3 provides the computational algorithm and its convergence analysis. Section 4 reports numerical experiments on synthetic data sets. Finally, Section 5 closes this paper with some conclusions.

2. Learning Objective

In this section, we introduce TGL and provide the main theoretical results on the asymptotic estimation.

2.1. Gradient Learning with t-Tilted Loss

Let X be a compact subset of and . Assume that is a probability measure on . It induces the marginal distribution on X and conditional distributions at . Denote as the space with the metric . In addition, the regression function associated with is defined as

For , the gradient of is the vector of functions (if the partial derivatives exist)

The relevance between the l-th coordinate and can be evaluated via the norm of its partial derivative , where a large value implies a large change in the function with respect to a sensitive change in the l-th coordinate. This fact gives an intuitive motivation for the GL. In terms of Taylor series expansion, the following equation holds:

for and . Inspired by (1), we denote the weighted square loss of as

where the restriction will be enforced by weights given by with a constant , see, e.g., [10,11,19]. Then, the expected risk of can be given by

As mentioned in [21], the defined in (3) usually performs poorly in situations where average performance is not an appropriate surrogate. Inspired from [21], for , we address the deficiencies by introducing the t-tilted loss and define the expected risk of with t-tilted loss as

Remark 1.

Note that t is a real-valued hyperparameter, and it encompasses a family of objectives that can address fairness (t > 0) or robustness (t < 0) through different choices. In particular, it recovers the expected risk (3) as t → 0.
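The effect of the tilt parameter can be illustrated on a finite sample of losses. The following is a minimal sketch, assuming the t-tilted aggregation of [21], i.e., (1/t) log(mean(exp(t · loss))); the function name `tilted_risk` and the toy loss values are illustrative and not part of the paper.

```python
import numpy as np

def tilted_risk(losses, t):
    """t-tilted aggregation of per-sample losses: (1/t) * log(mean(exp(t * loss))).

    Assumed form, following the TERM framework; t = 0 is treated as the plain mean.
    """
    losses = np.asarray(losses, dtype=float)
    if t == 0.0:
        return float(losses.mean())
    z = t * losses
    zmax = z.max()  # shift for numerical stability (log-sum-exp trick)
    return float((zmax + np.log(np.mean(np.exp(z - zmax)))) / t)

# Toy losses: four typical values and one large outlier (illustrative only).
losses = np.array([0.1, 0.2, 0.15, 0.12, 25.0])

for t in (-1.0, 1e-6, 1.0):
    print(f"t = {t:g}: tilted risk = {tilted_risk(losses, t):.4f}")
```

With a negative t the outlier is strongly down-weighted, with a positive t it dominates, and for t close to 0 the value approaches the ordinary average, which matches the behavior described in Remark 1.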

On this basis, the GL with t-tilted loss is formulated as the following regularization scheme:

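where $\lambda > 0$ is a regularization parameter. Here, $K: X \times X \to \mathbb{R}$ is a Mercer kernel, that is, continuous, symmetric, and positive semidefinite [22,23]. Let $\mathcal{H}_K$ be the RKHS induced by $K$, defined as the closure of the linear span of the set of functions $\{K_x := K(x, \cdot) : x \in X\}$ with the inner product $\langle \cdot, \cdot \rangle_K$ satisfying $\langle K_x, K_u \rangle_K = K(x, u)$. The reproducing property takes the form $\langle K_x, f \rangle_K = f(x)$ for all $x \in X$ and $f \in \mathcal{H}_K$. Then, we denote $\mathcal{H}_K^n$ as an n-fold RKHS with the inner product

$$\langle \vec{f}, \vec{g} \rangle_K = \sum_{l=1}^{n} \langle f^l, g^l \rangle_K$$

and norm $\|\vec{f}\|_K = \langle \vec{f}, \vec{f} \rangle_K^{1/2}$.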
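The first-order Taylor motivation behind the pairwise loss of this subsection can be checked numerically. The sketch below assumes the weighted square loss of [10], w(x, u) · (y − v + g · (u − x))², with Gaussian weights exp(−|x − u|² / (2s²)) / s^(n+2); the test function `f`, its gradient, and all helper names are hypothetical choices made for illustration.

```python
import numpy as np

def f(x):
    # Illustrative smooth target function on R^2 (not from the paper).
    return np.sin(x[0]) + x[1] ** 2

def grad_f(x):
    # Exact gradient of the illustrative target.
    return np.array([np.cos(x[0]), 2.0 * x[1]])

def taylor_residual(x, u):
    # f(x) - f(u) + grad f(x) . (u - x); this is O(|u - x|^2) for smooth f.
    return f(x) - f(u) + grad_f(x) @ (u - x)

def gaussian_weight(x, u, s):
    # Assumed weight form: exp(-|x - u|^2 / (2 s^2)) / s^(n + 2).
    n = x.shape[0]
    return np.exp(-np.sum((x - u) ** 2) / (2.0 * s ** 2)) / s ** (n + 2)

def weighted_square_loss(g, x, y, u, v, s):
    # Loss of a candidate gradient value g at x for the pair (x, y), (u, v).
    return gaussian_weight(x, u, s) * (y - v + g @ (u - x)) ** 2

x = np.array([0.3, -0.5])
for h in (0.1, 0.01):
    u = x + h * np.array([1.0, 0.0])
    print(f"h = {h}: residual = {taylor_residual(x, u):.2e}")
```

Shrinking the distance between the two points by a factor of ten shrinks the residual by roughly a factor of one hundred, which is why the weights concentrate the loss on nearby pairs.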

2.2. Main Results

This subsection states our main theoretical results on the asymptotic estimation of on the space with norm . Before proceeding, we provide some necessary assumptions which have been used extensively in machine learning literature, e.g., [24,25].

Assumption 1.

Supposing that and the kernel K is , there exists a constant such that

Assumption 2.

Assume , almost surely. Suppose that, for some , , the marginal distribution satisfies

and the density of exists and satisfies

Taking the functional derivatives of (5), we know that can be expressed in terms of the following integral operator on the space .

Definition 1.

Let integral operator be defined by

where

The operator has its range in . It can also be regarded as a positive operator on . We shall use the same notation for the operators on these two different domains. Given the definition of integral operator , we can write in the following equation.

Theorem 1.

Given the integral operator , we have the following relationship:

where , and I is the identity operator.

Proof of Theorem 1.

To solve the scheme (5), we take the functional derivative with respect to , apply it to an element of and set it equal to 0. We obtain

Since it holds for any , it is trivial to obtain

and

The desired result follows by rearranging terms. □

On this basis, we propose to bound the error by a functional analysis approach and present the error decomposition in the following proposition. The proof is straightforward and omitted for brevity.

Proposition 1.

For the defined in (5), it holds that

In the sequel, we focus on bounding and , respectively. Before we embark on the proof, we single out an important property regarding that will be useful in later proofs.

Lemma 1.

Under Assumptions 1 and 2, there exist and dependent on t satisfying

Proof of Lemma 1.

Since the kernel K is and , we know from Zhou [26] that is for each l. There exists a constant satisfying . Hence, using Cauchy inequality, we have

By a direct computation, we obtain

The desired result follows. □

Denote and the moments of the Gaussian as , , we establish the following Lemma.

Lemma 2.

Under Assumptions 1 and 2, we have

Proof of Lemma 2.

Taking notice of (10), it follows that

Then, we have

We note that

From Assumptions 1 and 2, we have

The desired result follows. □

As for , the multivariate mean value theorem ensures that there exists , such that

From (14), we can define the integral operator associated with the Mercer kernel K which is related to . Using Lemma 16 and Lemma 18 in [10], we establish the following Lemma.

Lemma 3.

Under Assumption 2, denote and . For any , we have

where is a positive operator on defined by

Proof of Lemma 3.

To estimate (15), we need to consider the convergence of as . Denote the stepping stone

we deduce that

Using the multivariate mean value theorem, there exists , such that

Noticing , we have

Combining the above two estimates, there holds for any ,

Using Lemma 18 in [10] and (16), the desired result follows. □

Since the measure is probability one on X, we know that the operator can be used to define the reproducing kernel Hilbert space [22]. Let be the -th power of the positive operator on with norm having a range in , where . Then, is the range of

The assumption we shall use is . It means that lies in the range of . Finally, we can give the upper bound of the error .

Theorem 2.

Under Assumptions 1 and 2, choose and . For any , there exists a constant such that

Proof of Theorem 2.

Using Cauchy inequality, for , we have

It means that

According to the definitions of and , it is trivial to obtain

Remark 2.

Theorem 2 shows that when , . This means that scheme (5) is consistent. In addition, since and tend to 1 as t tends to 0, the convergence rate of scheme (5) is , which is consistent with the previous result in [10]. Hence, the proposed method can be regarded as an extension of traditional GL.

3. Computing Algorithm

In this section, we present the GL model under TERM and propose to use the gradient descent algorithm to find the minimizer. Finally, the convergence of the proposed algorithm is also guaranteed.

Let a set of observations be drawn independently according to , and assume that the RKHS is rich enough that the kernel matrix is strictly positive definite [27]. According to the Representer Theorem of kernel methods [28], the approximation of has the following form: Let , the empirical version of (4) is formulated as follows:

where

with . For simplicity, we denote

and

The gradients of and at c are given by

and

Correspondingly, scheme (20) can be solved via the following gradient method:

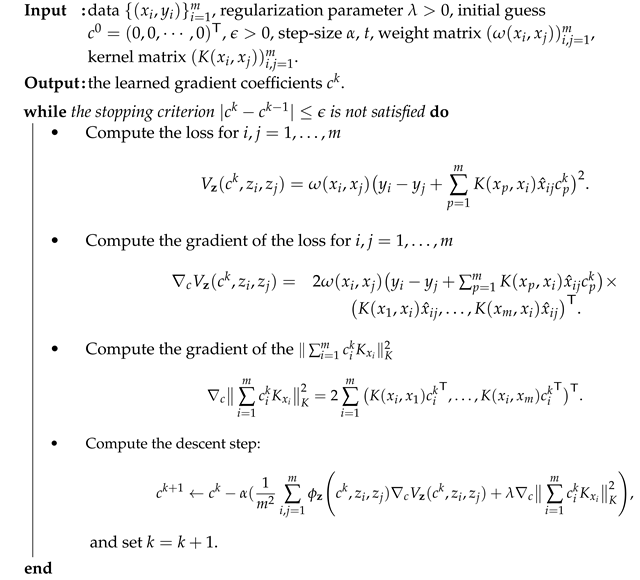

where is the calculated solution at iteration k, and is the step-size. The detailed gradient descent scheme is stated in Algorithm 1. To prove the convergence, we introduce the following lemma derived from Theorem 1 in [29].

Lemma 4.

When has a γ-Lipschitz continuous gradient (γ-smoothness) and is μ-strongly convex, for the basic unconstrained optimization problem , the gradient descent algorithm with a step size of has a global linear convergence rate

Algorithm 1. Gradient descent for the Gradient Learning under TERM.
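A fixed-step gradient descent of this kind can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's exact scheme (20): it assumes the representer form f(x) = Σ_i c_i K(x_i, x) with coefficients c_i in R^n, the pairwise loss of [10] with Gaussian weights, the log-mean-exp tilting of [21], and the regularizer λ Σ_l (c^l)ᵀ K c^l. The Gaussian kernel, the function names, and all parameter values are hypothetical.

```python
import numpy as np

def gaussian_kernel(A, B, sigma=1.0):
    # Gram matrix of an (assumed) Gaussian Mercer kernel.
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def pairwise_weights(X, s):
    # Assumed weights w_ij = exp(-|x_i - x_j|^2 / (2 s^2)) / s^(n + 2).
    n = X.shape[1]
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)
    return np.exp(-d2 / (2.0 * s ** 2)) / s ** (n + 2)

def tgl_objective_and_grad(C, X, y, K, W, t, lam):
    """Tilted empirical objective and its gradient with respect to C (shape m x n)."""
    F = K @ C                                    # F[i] = f(x_i) in R^n
    D = X[None, :, :] - X[:, None, :]            # D[i, j] = x_j - x_i
    R = y[:, None] - y[None, :] + np.einsum("id,ijd->ij", F, D)
    Z = t * W * R ** 2                           # t times the pairwise losses
    zmax = Z.max()
    E = np.exp(Z - zmax)                         # stabilized exponentials
    obj = (zmax + np.log(E.mean())) / t + lam * np.sum(C * (K @ C))
    P = E / E.sum()                              # tilted weights, summing to one
    G_F = np.einsum("ij,ijd->id", 2.0 * P * W * R, D)
    grad = K @ G_F + 2.0 * lam * (K @ C)         # chain rule through F = K C
    return obj, grad

def gradient_descent(C0, X, y, K, W, t, lam, eta, n_iter):
    # Plain gradient descent with a fixed step size eta (assumed small enough).
    C = C0.copy()
    history = []
    for _ in range(n_iter):
        obj, grad = tgl_objective_and_grad(C, X, y, K, W, t, lam)
        history.append(obj)
        C = C - eta * grad
    return C, history
```

A typical call builds `K = gaussian_kernel(X, X)` and `W = pairwise_weights(X, s)` once, then runs `gradient_descent(np.zeros((m, n)), X, y, K, W, t, lam, eta, n_iter)`. The step size must be chosen relative to the smoothness constant of the objective; a value that is too large can make the iteration diverge.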

From Lemma 4, we obtain the following conclusion which states that the proposed algorithm converges to (20) by choosing a suitable step size .

Theorem 3.

Denote by , the maximum and minimum eigenvalues of the kernel matrix , respectively. There exist and dependent on t such that is γ-smooth and μ-strongly convex for any . In addition, let be the minimizer defined in scheme (20) and be the sequence generated by Algorithm 1 with ; then we have

Proof of Theorem 3.

Note that strong convexity and smoothness are both determined by the Hessian matrix. We provide the proof by dividing the Hessian matrix into three parts:

(1) Estimation on : Note that and . It follows that

Hence, we can get the following equation:

Similar to the proof of Lemma 1, for , it directly follows that

Note that, for , has a sole eigenvalue, it means

and we have

It means that the maximum eigenvalue of is . Then, the following inequalities are satisfied

where is the matrix with all elements zero.

(2) Estimation on : Note that can be rewritten as

Similar to (25), we have . It follows

(3) Estimation on : By a direct computation, we have

Setting , we deduce that

Note that the matrix of quadratic form is , then we can obtain

Note as , and it means that is -smooth and -strongly convex. The desired result follows from Lemma 4. □

4. Simulation Experiments

In this section, we carry out simulation studies with the TGL model (t < 0 for robustness) on a synthetic data set in the robust variable selection problem. Let the observation data set with be generated by the following linear equations:

where represents the outliers or noises. To be specific, three different noises are used: Cauchy noise with the location parameter and scale parameter , Chi-square noise with 5 DOF scaled by 0.01 and Gaussian noise . Three different proportions of outliers including , , or are drawn from the Gaussian noise . Meanwhile, we consider two different cases with corresponding to m = n and m < n, respectively. The weighted vector over different dimensions is constructed as follows:

, for and 0, otherwise.

Here, means the number of effective variables. Two situations including uncorrelated variables and correlated variables are implemented for x, where the covariance matrix is given with the th entry .

For the variable selection algorithms, we run the TGL with and compare it with the traditional GL model [10] and the RGL model [20]. For the GL and TGL models, variables are selected by ranking

For the RGL model, variables are selected by ranking

A model that selects more of the effective variables is considered better.
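The ranking step can be sketched in a few lines. Because the ranking criteria are not reproduced above, the sketch assumes a common choice in gradient learning: each variable l is scored by the RKHS norm of its estimated partial derivative, sqrt((c^l)ᵀ K c^l), where c^l is the l-th column of the coefficient matrix. The function names and the toy inputs are hypothetical.

```python
import numpy as np

def rank_variables(C, K):
    """Rank variables by the (assumed) score sqrt(c_l^T K c_l), largest first.

    C has shape (m, n): one coefficient column per input variable.
    K is the (m, m) kernel matrix.
    """
    quad = np.einsum("il,ij,jl->l", C, K, C)
    scores = np.sqrt(np.maximum(quad, 0.0))   # clip tiny negatives from round-off
    order = np.argsort(-scores)
    return order, scores

def count_effective_selected(order, effective, k):
    # Number of truly effective variables among the top-k ranked ones.
    return len(set(order[:k].tolist()) & set(effective))

# Toy example with m = 3 samples and n = 3 variables (illustrative values).
K_toy = np.eye(3)
C_toy = np.array([[3.0, 0.0, 1.0],
                  [0.0, 0.0, 1.0],
                  [0.0, 0.0, 0.0]])
order, scores = rank_variables(C_toy, K_toy)
print(order, count_effective_selected(order, effective={0, 2}, k=2))
```

Averaging `count_effective_selected` over repeated runs gives the kind of summary statistic used to compare models in this section.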

We repeat each experiment 30 times with the observation set generated in each circumstance. The average numbers of selected effective variables for the different circumstances are reported in Table 1, and the best results are marked in bold. Several useful conclusions can be drawn from Table 1.

Table 1. Variable selection results for different circumstances.

(1) When the input variables are uncorrelated, the three models have similar performance under different noise conditions and can provide satisfactory variable selection results (approaching ) without outliers. However, as the proportion of outliers increases, the performance degrades severely for GL and slightly for TGL (t < 0 for robustness), especially in case . In contrast, RGL always provides satisfactory performance. This is consistent with the phenomenon reported in [20].

(2) When the input variables are correlated, the three models also have similar performance under different noise conditions but can select only part of the effective variables, ranging from to . In general, they degrade slowly as the proportion of outliers increases and perform better in case than in . Specifically, the TGL model with gives slightly better selection results than GL and RGL in case . This supports the superiority of TGL to some extent.

(3) It is worth noting that the TGL model with has similar performance to GL. This phenomenon supports both the theoretical conclusion that TGL recovers GL as t → 0 and the algorithmic claim that the proposed gradient descent method converges to the minimizer.

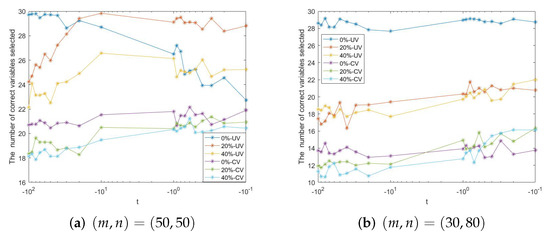

(4) Since the variable selection results of the TGL model differ greatly across values of the parameter t, we conduct further simulation studies to investigate its influence. Figure 1 shows the variable selection results for different parameters t ranging from to . Satisfactory performance is achieved when the parameter t is near . The results deteriorate when is too large. This coincides with our previous discussion that is strongly convex only for limited t.

Figure 1. The influence of different t on the variable selection results.

5. Conclusions

In this paper, we have proposed a new learning objective, TGL, by embedding the t-tilted loss into the GL model. On the theoretical side, we have established its consistency and provided the convergence rate with the help of error decomposition and the operator approximation technique. On the practical side, we have proposed a gradient descent method to solve the learning objective and provided its convergence analysis. Simulated experiments have supported the theoretical conclusion that TGL recovers GL as t → 0 and the algorithmic claim that the proposed gradient descent method converges to the minimizer. In addition, they have demonstrated the superiority of TGL when the input variables are correlated. Along the line of the present work, several open problems deserve further research, for example, using the random feature approximation to scale up the kernel methods [30] and learning with a data-dependent hypothesis space to achieve a tighter error bound [31]. These problems are the subject of our ongoing research.

Author Contributions

All authors have made a great contribution to the work. Methodology, L.L., C.Y., B.S. and C.X.; formal analysis, L.L. and C.X.; investigation, C.Y., Z.P. and C.X.; writing—original draft preparation, L.L., B.S. and W.L.; writing—review and editing, W.L. and C.X.; visualization, C.Y. and Z.P.; supervision, C.X.; project administration, B.S.; funding acquisition, B.S. and W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities of China (2662020LXQD002), the Natural Science Foundation of China (12001217), the Key Laboratory of Biomedical Engineering of Hainan Province (Opening Foundation 2022003), and the Hubei Key Laboratory of Applied Mathematics (HBAM 202004).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schwarz, G. Estimating the Dimension of a Model. Ann. Stat. 1978, 6, 461–464.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least angle regression. Ann. Stat. 2004, 32, 407–499.

- Chen, H.; Guo, C.; Xiong, H.; Wang, Y. Sparse additive machine with ramp loss. Anal. Appl. 2021, 19, 509–528.

- Chen, H.; Wang, Y.; Zheng, F.; Deng, C.; Huang, H. Sparse Modal Additive Model. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 2373–2387.

- Deng, H.; Chen, J.; Song, B.; Pan, Z. Error bound of mode-based additive models. Entropy 2021, 23, 651.

- Engle, R.F.; Granger, C.W.J.; Rice, J.; Weiss, A. Semiparametric Estimates of the Relation Between Weather and Electricity Sales. J. Am. Stat. Assoc. 1986, 81, 310–320.

- Zhang, H.; Cheng, G.; Liu, Y. Linear or Nonlinear? Automatic Structure Discovery for Partially Linear Models. J. Am. Stat. Assoc. 2011, 106, 1099–1112.

- Huang, J.; Wei, F.; Ma, S. Semiparametric Regression Pursuit. Stat. Sin. 2012, 22, 1403–1426.

- Mukherjee, S.; Zhou, D. Learning Coordinate Covariances via Gradients. J. Mach. Learn. Res. 2006, 7, 519–549.

- Mukherjee, S.; Wu, Q. Estimation of Gradients and Coordinate Covariation in Classification. J. Mach. Learn. Res. 2006, 7, 2481–2514.

- Jia, C.; Wang, H.; Zhou, D. Gradient learning in a classification setting by gradient descent. J. Approx. Theory 2009, 161, 674–692.

- He, X.; Lv, S.; Wang, J. Variable selection for classification with derivative-induced regularization. Stat. Sin. 2020, 30, 2075–2103.

- Dong, X.; Zhou, D.X. Learning gradients by a gradient descent algorithm. J. Math. Anal. Appl. 2008, 341, 1018–1027.

- Mukherjee, S.; Wu, Q.; Zhou, D. Learning gradients on manifolds. Bernoulli 2010, 16, 181–207.

- Borkar, V.S.; Dwaracherla, V.R.; Sahasrabudhe, N. Gradient Estimation with Simultaneous Perturbation and Compressive Sensing. J. Mach. Learn. Res. 2017, 18, 161:1–161:27.

- Ye, G.B.; Xie, X. Learning sparse gradients for variable selection and dimension reduction. Mach. Learn. 2012, 87, 303–355.

- He, X.; Wang, J.; Lv, S. Efficient kernel-based variable selection with sparsistency. arXiv 2018, arXiv:1802.09246.

- Guinney, J.; Wu, Q.; Mukherjee, S. Estimating variable structure and dependence in multitask learning via gradients. Mach. Learn. 2011, 83, 265–287.

- Feng, Y.; Yang, Y.; Suykens, J.A.K. Robust Gradient Learning with Applications. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 822–835.

- Li, T.; Beirami, A.; Sanjabi, M.; Smith, V. On tilted losses in machine learning: Theory and applications. arXiv 2021, arXiv:2109.06141.

- Cucker, F.; Smale, S. On the mathematical foundations of learning. Bull. Am. Math. Soc. 2002, 39, 1–49.

- Chen, H.; Wang, Y. Kernel-based sparse regression with the correntropy-induced loss. Appl. Comput. Harmon. Anal. 2018, 44, 144–164.

- Feng, Y.; Fan, J.; Suykens, J.A. A Statistical Learning Approach to Modal Regression. J. Mach. Learn. Res. 2020, 21, 1–35.

- Yang, L.; Lv, S.; Wang, J. Model-free variable selection in reproducing kernel Hilbert space. J. Mach. Learn. Res. 2016, 17, 2885–2908.

- Zhou, D.X. Capacity of reproducing kernel spaces in learning theory. IEEE Trans. Inf. Theory 2003, 49, 1743–1752.

- Belkin, M.; Niyogi, P.; Sindhwani, V. Manifold Regularization: A Geometric Framework for Learning from Labeled and Unlabeled Examples. J. Mach. Learn. Res. 2006, 7, 2399–2434.

- Schölkopf, B.; Smola, A.J.; Bach, F. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2002.

- Karimi, H.; Nutini, J.; Schmidt, M. Linear convergence of gradient and proximal-gradient methods under the Polyak-Łojasiewicz condition. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Riva del Garda, Italy, 19–23 September 2016; Springer: Cham, Switzerland, 2016; pp. 795–811.

- Dai, B.; Xie, B.; He, N.; Liang, Y.; Raj, A.; Balcan, M.F.F.; Song, L. Scalable Kernel Methods via Doubly Stochastic Gradients. In Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; Volume 27.

- Wang, Y.; Chen, H.; Song, B.; Li, H. Regularized modal regression with data-dependent hypothesis spaces. Int. J. Wavelets Multiresolution Inf. Process. 2019, 17, 1950047.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).