1. Introduction

In a time-varying channel, the number of symbol errors per codeword fluctuates randomly, so decoding with fixed parameters wastes computation on codewords that need far less effort. Channel evaluation therefore plays an important role in channel decoding. It is of great significance to analyze the channel reliability in real time from the received code sequence and to adjust the decoding parameters automatically, so that the channel decoder stays efficient and low in redundancy while still meeting the required decoding performance.

Low-complexity chase (LCC) [1] is an excellent algebraic soft-decision decoding (ASD) algorithm for medium-to-high rate Reed–Solomon (RS) codes. It achieves error correction performance comparable to that of other ASD algorithms, e.g., the Kötter–Vardy (KV) algorithm [2] and the bit-level generalized minimum distance (BGMD) algorithm [3], at lower complexity. The main benefit of the LCC decoder is that its single level of multiplicity makes it possible to replace the interpolation and factorization stages with the reformulated inversionless Berlekamp–Massey (RiBM) algorithm [4,5].

However, the LCC algorithm needs to estimate the number of error symbols in the codeword in order to adjust the decoding parameters dynamically; otherwise, it may perform many redundant operations. In recent years, research on the LCC algorithm has mainly focused on the selection of test vectors (TVs) and the design of highly efficient decoders. A unified syndrome computation (USC)-based LCC decoder was proposed in [6]. The hardware of multiplicity assignment (MA) was implemented using the received bit-level magnitudes to evaluate the symbol reliability values [7].

However, the low hardware speed of this module limits the performance of the whole decoder. After that, an early termination algorithm for improving the throughput of the serial LCC decoder was proposed in [8]. In [9], a novel set of TVs derived from the analysis of the symbol error probabilities was applied to the modified LCC decoding. An LCC decoding algorithm using the module basis reduction (BR) interpolation technique [10], namely the LCC-BR algorithm, was proposed to reduce decoding complexity and latency. In addition, the number of 1s in the first syndrome can be used to infer whether the number of errors is even or odd, so as to reduce the number of TVs by half [11].

Thus far, there have been some preliminary studies on decoding over time-varying channels. In [12], three different numbers of unreliable symbol positions are used to adapt to the channel environment; however, no specific channel evaluation scheme is given. An et al. [13] explored a classification decoding method based on deep learning to save decoding time. One of the most critical problems in the time-varying channel is decoding redundancy, which results in a large decoding latency. This latency greatly limits the high-speed processing ability of the LCC decoder for massive data.

This study aimed to dynamically adjust the decoding parameters, reduce the decoding redundancy and improve the real-time communication performance by evaluating the number of errors in the received code sequence. The main contributions of the paper are summarized as follows.

(1) A time-varying channel adaptive LCC decoding algorithm is presented based on the channel soft information. It evaluates the time-varying channel environment and generates a suitable number of TVs and syndromes to reduce decoding redundancy and decoding delay.

(2) To improve the hardware performance of the multiplicity assignment (MA) block, we provide a simplified MA scheme and its hardware architecture. We also propose a new multi-functional block that can implement polynomial selection, Chien search and Forney algorithm (PCF) for saving hardware resources.

(3) A high-performance time-varying channel adaptive LCC decoder is provided. The decoding delay of the decoder is greatly reduced, and the hardware efficiency is significantly improved.

The rest of the paper is organized as follows. Section 2 introduces the conventional LCC decoding algorithm. The time-varying channel adaptive LCC algorithm is presented in Section 3. Section 4 introduces the simplified MA scheme, the PCF block and the proposed LCC decoder. The implementation results are provided in Section 5. Section 6 draws the conclusion.

2. LCC Decoding Algorithm

The (n, k) RS codes over the finite field GF(2^m) are modulated by binary phase-shift keying (BPSK) and transmitted over an additive white Gaussian noise (AWGN) channel, where n is the code symbol length, k is the message symbol length and m denotes the number of bits per symbol. The nonzero field elements are powers of the primitive element of the field. At the MA stage, the LCC decoder compares the reliability of each symbol in one codeword. The reliability of the i-th received symbol is defined from its first hard-decision value and its second hard-decision value.

The smaller the reliability value is, the less reliable the symbol is. The second hard-decision value can be obtained from the first hard-decision value by flipping the bit with the lowest reliability (i.e., the minimum level value). The unreliable symbols with the smallest reliability values are selected to generate multiple TVs that are most likely to achieve successful decoding. The TVs are obtained by taking either the first or the second hard-decision value at each of the unreliable symbol positions. If the TV set (TV1, TV2, ⋯) contains a TV whose error symbol number does not exceed the decoding radius t, the decoding can succeed.
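The test-vector construction described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name, the list-based symbol representation and the parameter `eta` (the number of unreliable positions that are varied) are assumptions introduced here.

```python
from itertools import product


def generate_test_vectors(hd, second_hd, reliability, eta):
    """Build 2**eta test vectors from hard-decision symbols.

    hd, second_hd: first and second hard-decision symbol values (lists).
    reliability:   per-symbol reliability; smaller means less reliable.
    eta:           number of unreliable positions to vary (assumed name).
    """
    # Positions of the eta least reliable symbols.
    unreliable = sorted(range(len(hd)), key=lambda i: reliability[i])[:eta]

    test_vectors = []
    # Each binary pattern picks the first (0) or second (1) hard decision
    # at every unreliable position; all other positions keep the first.
    for pattern in product((0, 1), repeat=eta):
        tv = list(hd)
        for pos, use_second in zip(unreliable, pattern):
            if use_second:
                tv[pos] = second_hd[pos]
        test_vectors.append(tv)
    return unreliable, test_vectors
```

The first vector returned is the plain hard-decision word, which matches the role of TV1 in the text; the remaining vectors differ from it only at the unreliable positions.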

3. Time-Varying Channel Adaptive LCC Algorithm

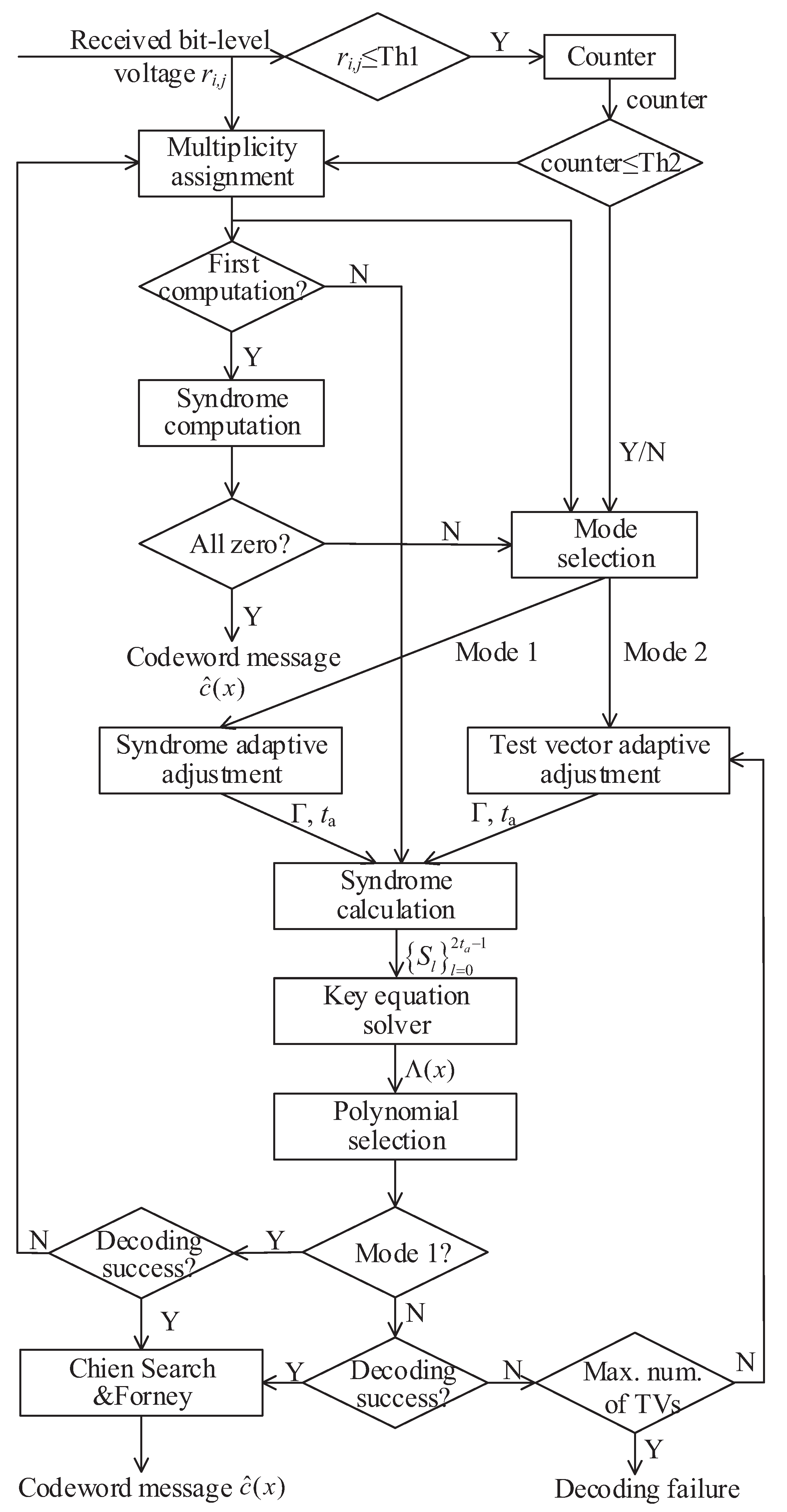

In the time-varying channel, the signal-to-noise ratio (SNR) changes rapidly with time, so an LCC decoding algorithm with fixed parameters causes a great deal of decoding redundancy and delay. To solve this problem, we propose a novel LCC decoding algorithm, given in Algorithm 1, that reduces the number of TVs and syndromes; its flowchart is shown in Figure 1. The main idea of the algorithm is to evaluate the error symbol number of each codeword by counting the number of unreliable bits, and then to adjust dynamically the number of TVs and the number of syndromes used to achieve successful decoding.

In step 1, the error symbol number and the unreliable value of the codeword are evaluated from the received bit-level values, which are indexed by the symbol position i and the bit position j. The number of bits in each codeword whose bit-level value is less than the threshold Th1 (set to 0.3 in this paper) is counted. For each symbol, the bit with the smallest bit-level value is selected, and the unreliable bit position set is updated.
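Step 1 can be illustrated with the following sketch. It assumes that the bit-level values are non-negative magnitudes stored as a list of per-symbol lists; the function name and data layout are hypothetical, and only the threshold 0.3 comes from the text.

```python
TH1 = 0.3  # bit-level threshold Th1 stated in the text


def evaluate_codeword(bit_levels, th1=TH1):
    """Count unreliable bits and find the weakest bit of every symbol.

    bit_levels[i][j] is the bit-level value of bit j in symbol i
    (assumed layout: one inner list of m values per symbol).
    """
    counter = 0
    weakest_bits = []
    for symbol in bit_levels:
        # Bits below Th1 are counted as unreliable.
        counter += sum(1 for level in symbol if level < th1)
        # The bit with the smallest level is the symbol's weakest bit.
        weakest_bits.append(min(range(len(symbol)), key=symbol.__getitem__))
    return counter, weakest_bits
```

The returned counter is the quantity that the later steps compare against Th2, and the list of weakest bits plays the role of the unreliable bit position set.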

In step 2, two modes are set: mode 1 is the syndrome adaptive adjustment, and mode 2 is the TV adaptive adjustment. First, if the syndromes calculated from the first test vector TV1 are all zero, the codeword is correct. Otherwise, if the number of unreliable bits (i.e., the counter) does not exceed Th2, mode 1 is performed. The threshold Th2, which is used to distinguish e ≤ t from e > t, is expressed as a number of bits whose level values are less than Th1, where e is the number of error symbols.

If counter ≤ Th2, which indicates that the number of error symbols does not exceed t, hard-decision decoding (HDD) can be adopted; otherwise, LCC decoding is selected. On the premise of ensuring decoding performance, Th2 can be set to 16 for the standard (255,239) RS codes, as shown in Table 1. If some performance can be traded for decoding speed, the value of Th2 can be increased appropriately. The syndromes S, the error locations and the error values are linked by the syndrome equations of the RS code. When e < t, only the first 2e syndromes need to be calculated to obtain the error vector. The number of equations to be solved is reduced from 2t to 2e, which removes the redundant calculation of 2t − 2e equations. If counter > Th2, the channel soft information should be fully used to achieve a larger decoding radius. The number of TVs to be selected is determined by the counter, following a relationship obtained statistically.
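For reference, the syndrome relations can be written in the usual power-sum form. The expression below is a sketch under stated assumptions: the generator polynomial is taken to have roots $\alpha^1, \dots, \alpha^{2t}$, and $X_l$ and $Y_l$ are names assumed here for the location and value of the $l$-th of the $e$ error symbols. The paper's own indexing may differ.

```latex
S_j \;=\; \sum_{l=1}^{e} Y_l \, X_l^{\,j}, \qquad j = 1, 2, \dots, 2t .
```

With $e$ error symbols there are $2e$ unknowns ($e$ locations and $e$ values), so the first $2e$ relations are already enough to determine the error vector. For the (255,239) code, where $t = (255 - 239)/2 = 8$, a codeword with $e = 3$ errors would need 6 of the 16 syndromes under this assumption.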

Table 1 shows the correspondence between the counter value of the received (255,239) RS code and the maximum possible number of error symbols, and gives the corresponding decoding parameters and the number of TVs. Table 2 shows the parameter correspondence for the (127,119) RS code.
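The mode selection of step 2 can be summarized as a small decision function. This is a hedged sketch: the function name, the returned labels and the structure of `tv_table` are assumptions, and the table entries in the usage note are illustrative placeholders, not the values of Table 1.

```python
def select_decoding_plan(counter, all_syndromes_zero, th2, tv_table):
    """Pick a decoding mode and a TV budget from the unreliable-bit counter.

    tv_table: list of (counter_upper_bound, number_of_tvs) pairs sorted by
              bound; a hypothetical stand-in for the paper's Table 1.
    """
    if all_syndromes_zero:
        # TV1 is already a valid codeword: nothing to decode.
        return ("accept", 0)
    if counter <= th2:
        # Mode 1: hard-decision decoding with a reduced syndrome set.
        return ("syndrome_adaptive", 1)
    # Mode 2: LCC decoding with a counter-dependent number of TVs.
    for upper_bound, num_tvs in tv_table:
        if counter <= upper_bound:
            return ("tv_adaptive", num_tvs)
    # Counter beyond the last bound: use the largest TV budget.
    return ("tv_adaptive", tv_table[-1][1])
```

For example, with the placeholder table `[(20, 2), (24, 4), (28, 8), (32, 16)]` and Th2 = 16, a counter of 12 selects mode 1, while a counter of 22 selects mode 2 with four TVs.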

Algorithm 1: Time-Varying Channel Adaptive LCC Algorithm

In addition, to find a TV that can be decoded successfully with fewer attempts, we introduce two TV selection and sorting methods. After TV1 is determined, TV2 is chosen based on the idea of decoding complementarity; TV3 is then chosen to complement the decoding capability of the previous two TVs, and this rule is repeated to obtain the ordered TV index set {TV1, TV2, …}. It can be concluded from [11] that when the signal-to-noise ratio (SNR) exceeds 6.1 dB, the probability that a symbol error is a 1-bit error is above 99%.

The first syndrome is computed from the received codeword and depends only on the error vector. According to the parity of the number of 1s contained in the first syndrome and the idea of compensation decoding, two index sets, an odd index set and an even index set, are tested.

Table 3 shows the sequence of 16 TVs for decoding (255,239) RS codes obtained through simulation, in which each TV is the best compensation for all previous TVs. The order of the eight TVs for decoding (127,119) RS codes is given in Table 4. In addition, the corresponding optimal test orders for an odd and for an even number of 1s in the first syndrome are given.
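The parity-based choice between the two test orders can be sketched as follows. The first syndrome is assumed to be available as an integer holding the bits of one field element, and `try_decode` is a hypothetical callback that reports whether a given TV decodes successfully; the index lists in the usage note are illustrative only and are not the orders of Table 3 or Table 4.

```python
def popcount_parity(first_syndrome):
    """Return 1 if the binary form of the syndrome has an odd number of 1s."""
    return bin(first_syndrome).count("1") % 2


def pick_test_order(first_syndrome, odd_order, even_order):
    """Choose the TV test order from the parity of 1s in the first syndrome."""
    return odd_order if popcount_parity(first_syndrome) else even_order


def first_successful_tv(order, try_decode):
    """Test TVs in order; return (tv_index, attempts) or (None, attempts)."""
    for attempts, tv_index in enumerate(order, start=1):
        if try_decode(tv_index):
            return tv_index, attempts
    return None, len(order)
```

With the made-up orders `[1, 4, 6, 7]` (odd) and `[2, 3, 5, 8]` (even), a first syndrome of `0b00000111` has three 1s and therefore selects the odd order.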

In step 3, the (n, k) RS code is regarded as an RS code with a smaller error-correction capability, and only the first 2e syndromes are used to complete decoding. The number of iterations for solving the key equation is reduced from 2t to 2e, which saves 2t − 2e iterations. Most of the correspondences between the counter value and the error symbol number are reliable; however, a few counter values cannot accurately reflect the error symbol number of the codeword. Therefore, we introduce the error mode update method in step 4 to reduce the impact of this problem on the decoding performance. Codewords that fail to decode under the syndrome adaptive adjustment are decoded again using the syndromes of TV1.
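The interplay of steps 3 and 4 can be sketched as a try-then-fall-back control flow. This is only an outline under assumptions: two syndromes are used per estimated error symbol, and `solve_key_equation` and `is_valid` are hypothetical callables standing in for the key equation solver and the decoding-success check.

```python
def decode_with_fallback(syndromes, e_est, solve_key_equation, is_valid):
    """Try a reduced syndrome set first, then fall back to all syndromes.

    syndromes: all syndromes of TV1, in order.
    e_est:     estimated number of error symbols (assumed input).
    Returns (result, number_of_syndromes_used).
    """
    # Step 3: use only the first 2 * e_est syndromes.
    reduced = syndromes[: 2 * e_est]
    result = solve_key_equation(reduced)
    if is_valid(result):
        return result, len(reduced)
    # Step 4: the estimate was too optimistic, so decode again
    # with the complete syndrome set of TV1.
    result = solve_key_equation(syndromes)
    return result, len(syndromes)
```

The fallback costs one extra pass only for the few codewords whose counter value misjudges the error count, so the common case keeps the shorter key-equation run.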

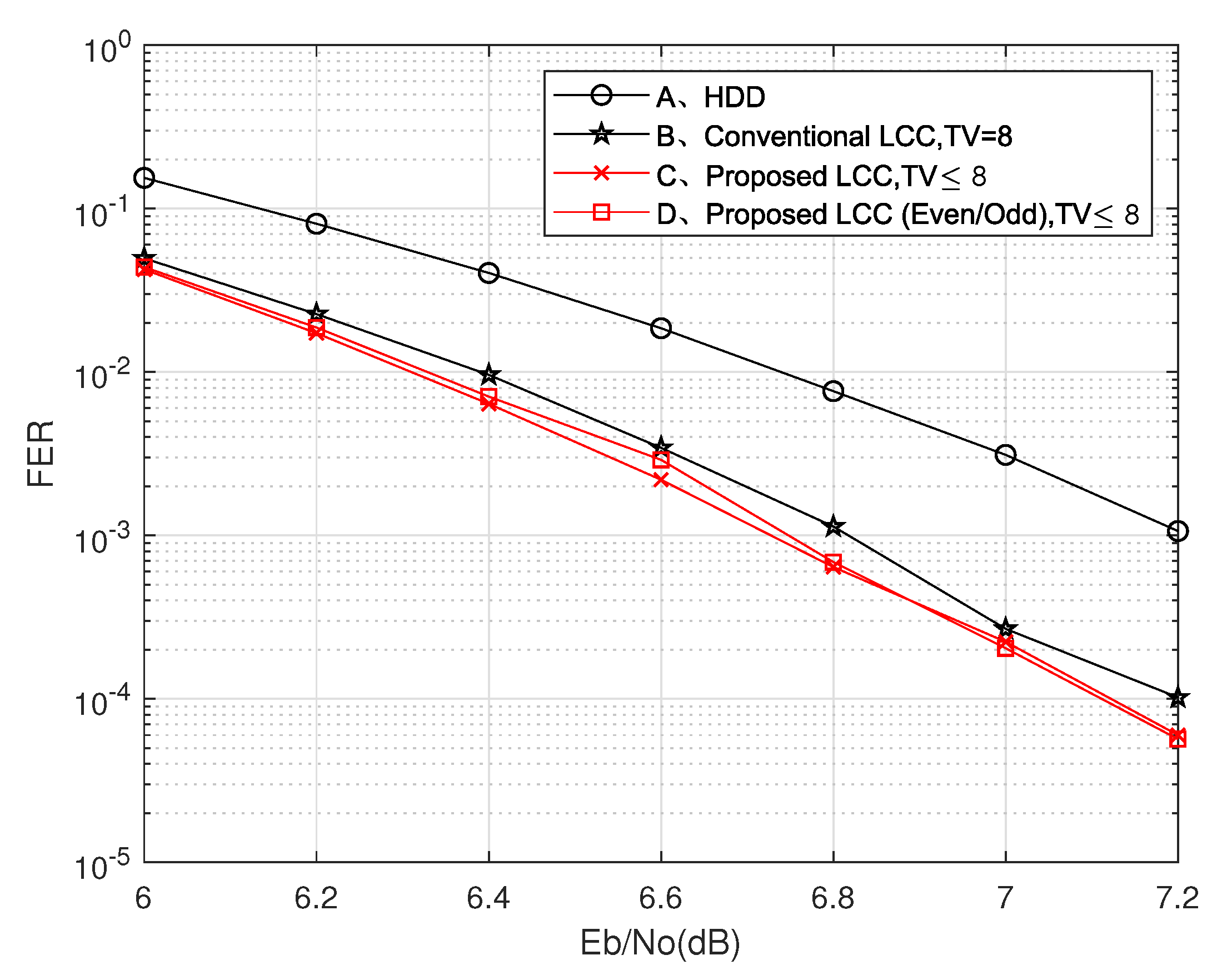

The simulation results of the proposed LCC algorithm for the (255,239) RS code are given and compared with several previous algorithms in Figure 2. The proposed LCC algorithm reduces the decoding complexity by dynamically adjusting the number of TVs. Compared with the current state-of-the-art LCC decoding algorithms [9,10,11], the proposed LCC decoding algorithm achieves equivalent or better decoding performance with fewer TVs, so the amount of computation is greatly reduced. We simulate a time-varying channel whose SNR changes randomly from frame to frame in the range of [6.5, 8] dB and evaluate the decoding performance of the different algorithms on 800,000 codewords.

We give the total number and the average number of TVs required to decode these codewords. As shown in Table 5, compared with the cases of TV = 8 and TV = 16 in [9], the proposed LCC decoding algorithm achieves better decoding performance while reducing TVs by 23.5% and 50.4%, respectively. Compared with the case of TV = 8 in [11], the proposed LCC decoding algorithm achieves a performance gain of 43.1% when the number of TVs is approximately equal.

Figure 3 compares the decoding performance of the proposed decoder with that of other algorithms for (127,119) RS codes. The proposed scheme obtains better performance than the traditional LCC algorithm with fewer TVs. When the channel conditions are poor, the LCC decoding scheme based on a single test set performs better, because multi-bit errors within a symbol become more likely and confuse the error parity judgment. When the channel conditions are good, however, the LCC decoding scheme based on the parity test set performs better. Comparisons in the time-varying channel of [6.5, 8] dB are also provided. As shown in Table 6, the proposed LCC decoding algorithm reduces the number of TVs by 52.4% while achieving more competitive decoding performance than the traditional LCC decoding algorithm.

4. The Proposed Time-Varying Channel Adaptive LCC Decoder

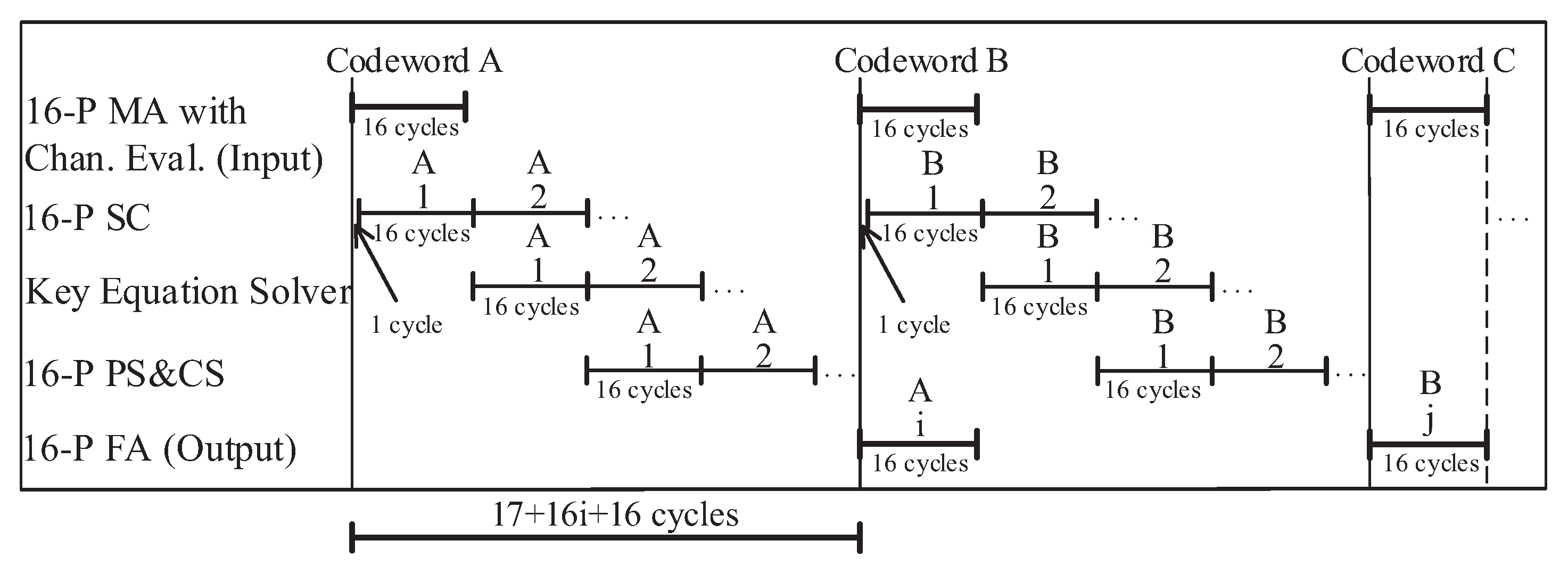

This section presents the architecture of the proposed time-varying channel adaptive LCC decoder, which processes up to 16 test vectors in the set. As shown in Figure 4, the proposed decoder consists of four blocks: a 16-parallel MA block with channel evaluation, a 16-parallel syndrome computation (SC) block, a key equation solver (KES) and a 16-parallel PCF block. A RAM is used to cache the codeword.

The decoding chronogram of the proposed decoder is shown in Figure 5. As the timing diagram shows, when the 16-parallel MA block generates the TVs, the 16-parallel SC block starts working after a delay of only one clock cycle, and both blocks need 16 clock cycles.

Thus, it takes only 17 clock cycles from the input of the first codeword A to the output of the first TV’s syndromes. It takes 16 clock cycles for the KES block to compute the error locator polynomial and the error magnitude polynomial through the RiBM algorithm. At the same time, the 16-parallel SC block calculates the syndromes of the second TV. Then, the 16-parallel PCF block completes polynomial selection (PS) and Chien search (CS) after 16 clock cycles. If the degree of the error locator polynomial is lower than t, the decoder completes the Forney algorithm by reusing the PCF block, which takes 16 clock cycles.

Otherwise, the decoder continues with the next TV. Since the first TV is the best hard-decision value of the received codeword, it can be decoded successfully in most cases. If all the syndromes are zero, the TV is output directly. In the syndrome adaptive adjustment mode, the KES block needs only 2e clock cycles to solve the key equation; however, the other blocks still need 16 clock cycles to complete their functions.

The latency of the proposed LCC decoder is 17 + 16 × i + 16 cycles. When i (i.e., the ordinal number of the TV) takes the maximum value of 16, the maximum latency of the proposed decoder is 17 + 16 × 16 + 16 = 289 cycles. When the minimum value of i is 1, the minimum latency of the proposed decoder is 17 + 16 × 1 + 16 = 49 cycles.
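The latency expression can be checked with a short helper. The parameter names below are assumptions chosen for readability; only the constants 17 and 16 and the formula itself come from the text.

```python
def decoder_latency(i, startup=17, per_tv=16, tail=16):
    """Latency in clock cycles when decoding succeeds on the i-th TV.

    Implements 17 + 16 * i + 16 from the text; the names of the three
    terms are illustrative and not taken from the paper.
    """
    return startup + per_tv * i + tail


best_case = decoder_latency(1)    # decoding succeeds on the first TV
worst_case = decoder_latency(16)  # all 16 TVs are needed
```

Evaluating the two extremes reproduces the 49-cycle minimum and the 289-cycle maximum quoted above.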

4.1. Multiplicity Assignment with Channel Detection

The existing MA scheme [7] needs to perform repeated pairwise comparisons through the comparator to select the most unreliable bit of each symbol, which greatly limits the hardware performance. To solve this issue, we propose a simplified MA scheme that makes full use of the channel soft information. If the level value of a bit is less than Th1, that bit is considered the most unreliable bit of the symbol. Otherwise, the first bit in the symbol is considered unreliable.

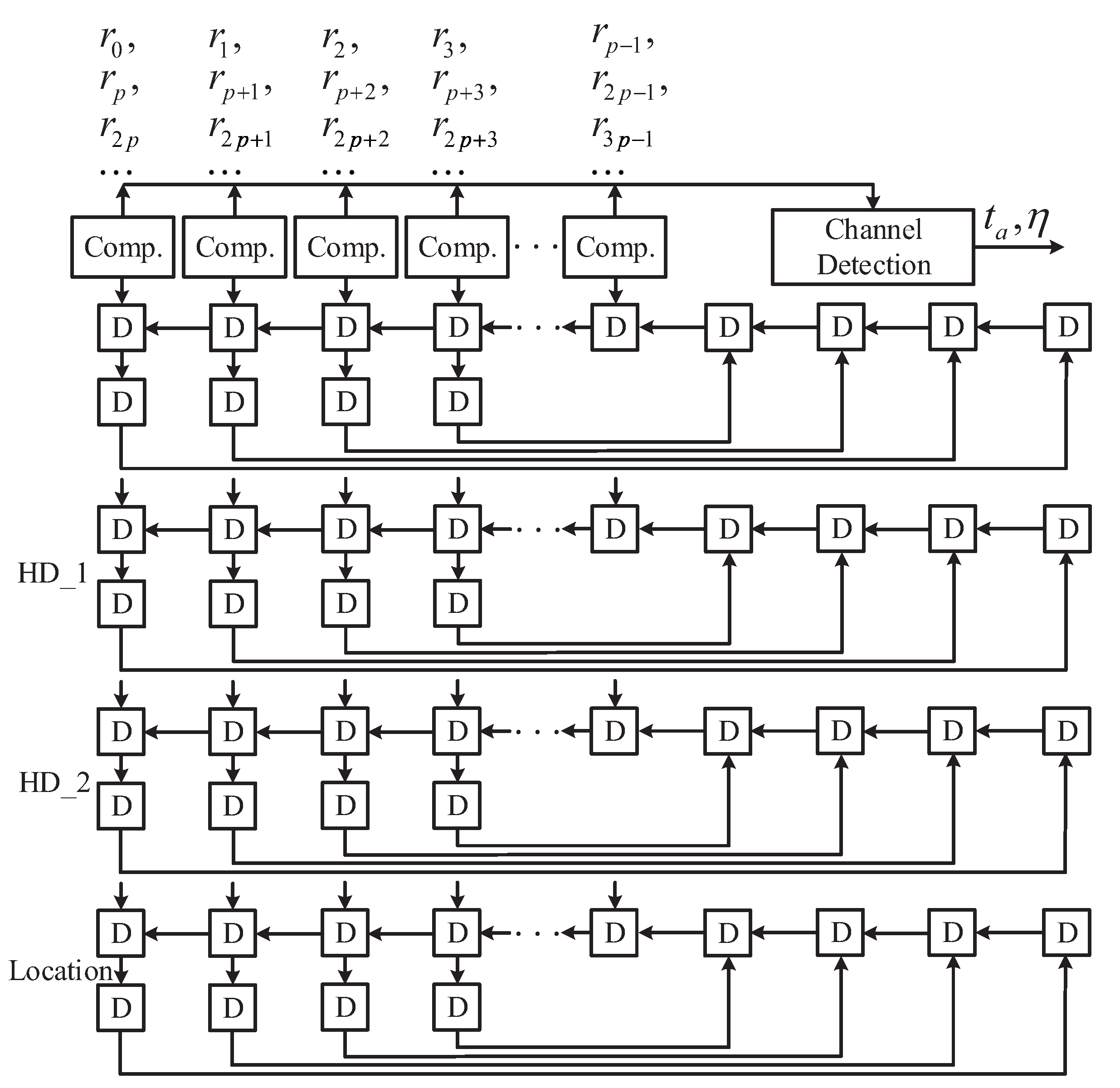

Statistics show that the probability of two or more unreliable bits in a symbol is very small. If multiple unreliable bits appear in a symbol, the last bit less than Th1 is selected as the unreliable bit. Each update of the MA block keeps only the first and second decision values of the p most unreliable elements and their location information.
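The simplified selection rule replaces a minimum search with a single threshold pass over the bits of a symbol. The sketch below illustrates that rule in software; the function name and the list representation of the bit-level values are assumptions, and it is not a model of the hardware in Figure 6.

```python
def select_unreliable_bit(symbol_levels, th1=0.3):
    """Return the index of the bit treated as unreliable in one symbol.

    The last bit whose level is below Th1 is chosen; if no bit is below
    Th1, the first bit (index 0) is chosen by default.
    """
    chosen = 0  # default: first bit of the symbol
    for j, level in enumerate(symbol_levels):
        if level < th1:
            chosen = j  # a later bit under the threshold overrides earlier ones
    return chosen
```

Because each bit is compared only with the fixed threshold, no bit-to-bit comparisons are needed, which is the source of the speed-up claimed for the simplified MA block.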

The simulation results in Table 7 show that the proposed MA block can achieve a maximum clock frequency of 385 MHz in SMIC 0.13 μm CMOS technology, and its throughput is 75% higher than that of the MA in [7]. In addition, the hardware area and power consumption are also reduced. A p-parallel MA architecture including p comparators is shown in Figure 6; with p = 16, it completes MA in the 16 clock cycles shown in Figure 5.

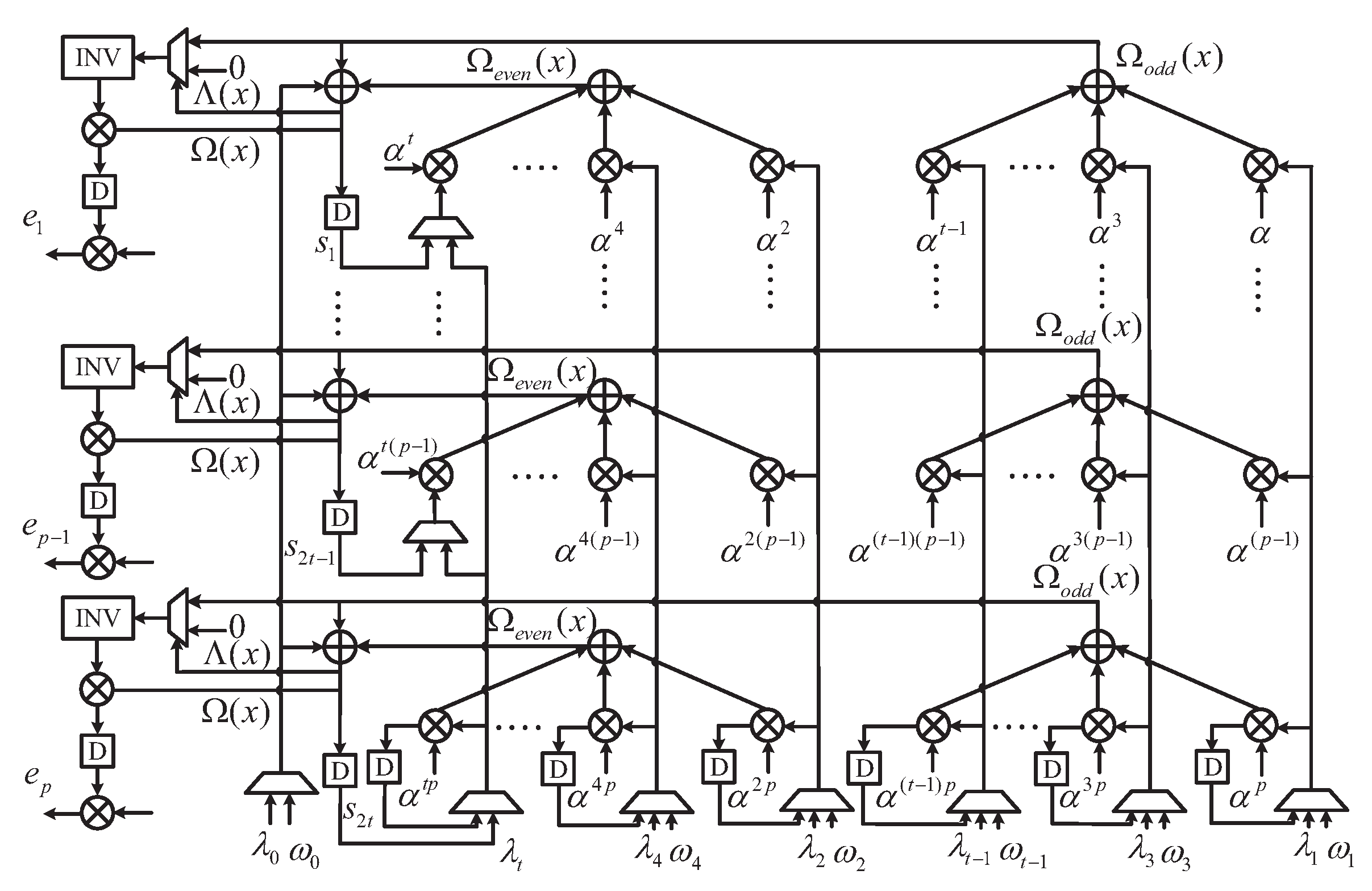

4.2. The Architecture of the Proposed PCF Block

The proposed p-parallel PCF block shown in Figure 7 is an upgraded version of the module in [14], which needs only 16 clock cycles to perform polynomial selection (PS) and Chien search at the same time. If the polynomial is selected correctly, the block can reuse the basic unit to complete the p-way Forney algorithm.

5. Implementation Results

The proposed time-varying channel adaptive LCC decoder for the (255,239) RS code was implemented using 0.13 μm and 65 nm CMOS processes. As shown in Table 8, the maximum clock frequency of the proposed decoder, obtained with Synopsys design tools, is 385 MHz in the 0.13 μm process and 550 MHz in the 65 nm process. Compared with the current state-of-the-art LCC decoders [9,11], the proposed LCC decoder achieves equivalent or better coding gain. However, the high clock frequency and additional hardware resources also increase the power consumption of the proposed decoder.

For the time-varying channel with the range of [6.5, 8] dB, the proposed decoder requires an average of 1.06 TVs to complete decoding. The average latency of the decoder is 17 + 1.06 × 16 + 16 ≈ 50 cycles, which is 81.6% smaller than that of the LCC decoder in [11]. The average throughput of the proposed decoder can be calculated by Formula (5):

Throughput = (n × m × f_max) / Latency   (5)

where f_max is the maximum clock frequency and Latency is the average decoding latency in clock cycles. The estimated throughput is (255 × 8 × 385)/50 = 15.7 Gb/s in the 0.13 μm process and (255 × 8 × 550)/50 = 22.4 Gb/s in the 65 nm process.
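The throughput arithmetic can be reproduced with a few lines of Python. The helper name and its argument names are assumptions; the numbers are the ones given in the text, with the clock frequency in MHz and the latency in cycles so that the quotient is in Mb/s before conversion to Gb/s.

```python
def throughput_gbps(n, m, f_mhz, latency_cycles):
    """Throughput in Gb/s: n symbols of m bits per latency_cycles at f_mhz."""
    mbps = n * m * f_mhz / latency_cycles
    return mbps / 1000.0


# Average latency with 1.06 TVs per codeword; the text rounds it to 50 cycles.
avg_latency = 17 + 1.06 * 16 + 16

tp_130nm = throughput_gbps(255, 8, 385, 50)  # 0.13 um process
tp_65nm = throughput_gbps(255, 8, 550, 50)   # 65 nm process
```

Rounded to one decimal place, the two results agree with the 15.7 Gb/s and 22.4 Gb/s figures quoted above.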

Compared with [7,9,11], the throughput of the proposed decoder given by Formula (5) is increased by 8.8, 4.1 and 1.5 times, respectively. Compared with the state-of-the-art LCC decoder, the proposed decoder has lower latency and higher throughput, which indicates that the proposed decoder has better hardware performance.

6. Conclusions

In this paper, we derived a time-varying channel adaptive LCC algorithm, which can dynamically adjust the decoding parameters through channel detection. The proposed algorithm can greatly reduce the redundant TVs and key equations, thus reducing the decoding complexity and latency. To evaluate the algorithmic performance, we analyzed its effect in the time-varying channel.

Compared to the state-of-the-art LCC algorithm, the number of TVs required was reduced by 50.4%, and the average latency of the decoder was reduced by 81.6%. To reduce the pairwise comparison times of selecting the most unreliable bit for each symbol in the MA block, a simplified scheme assisted by setting a bit-level threshold was proposed to improve its hardware efficiency. In addition, a PCF block was proposed to reduce the consumption of hardware resources.

Based on the above techniques, the hardware architecture of the proposed time-varying channel LCC decoder is presented. Compared to previously published papers, the implementation results show that the proposed LCC decoder achieved at least 150% throughput improvement. Future work will focus on high-performance time-varying LCC decoders.