Multidimensional Feature in Emotion Recognition Based on Multi-Channel EEG Signals

Abstract

1. Introduction

- We adopt a four-dimensional (4D) feature structure that combines the frequency, spatial, and temporal information of EEG signals as the input features for EEG-based emotion recognition.

- We use the FSTception model, based on a depthwise separable convolutional neural network, to address the small number of EEG training samples, the large feature dimensionality, and the difficulty of feature extraction.

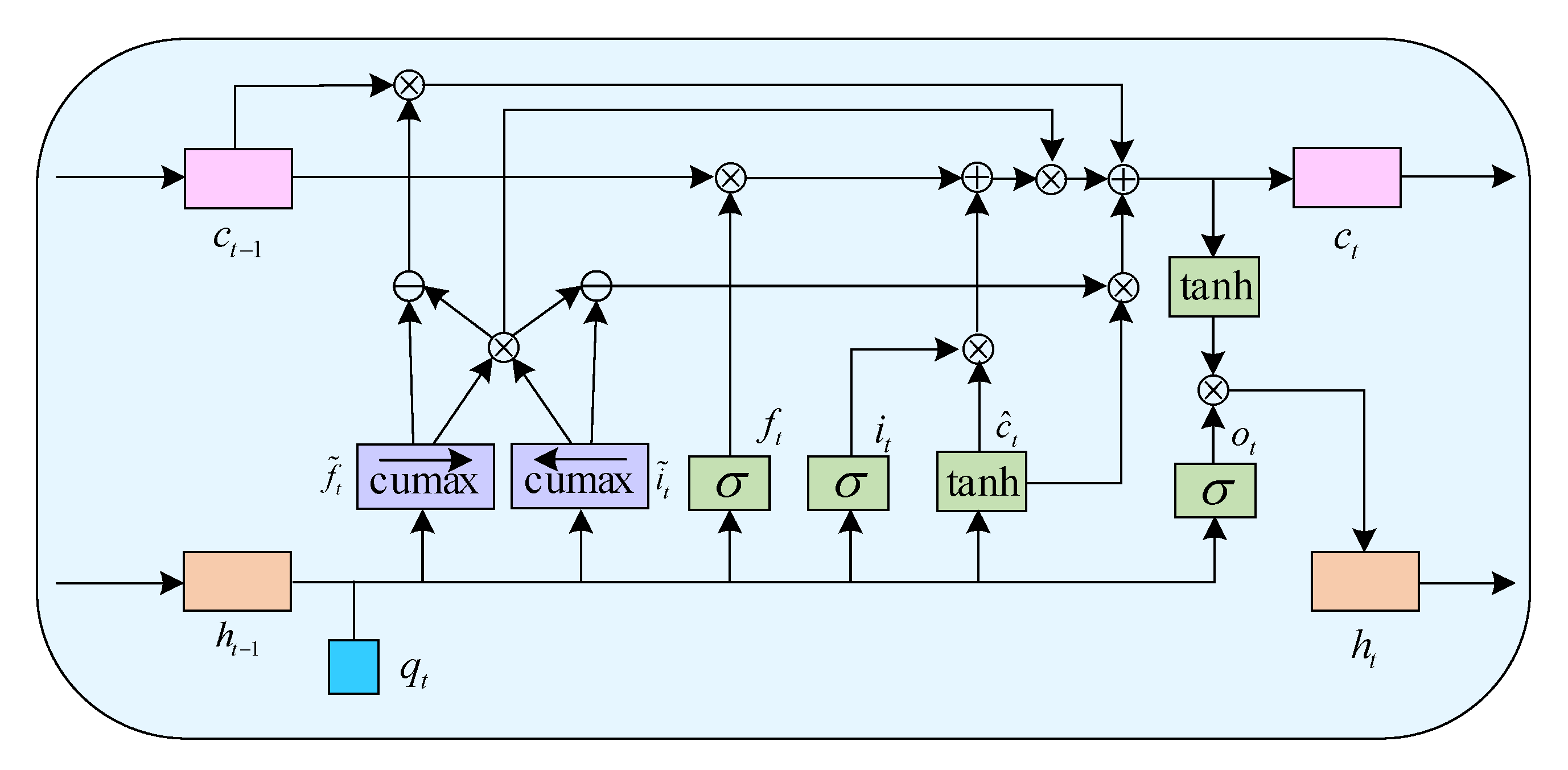

- In particular, we adopt the ON-LSTM structure to extract the deep emotional features hidden in the time series of the 4D input features.
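A minimal NumPy sketch of the 4D frequency-space-time structure from the first contribution follows. It is not the authors' code: it assumes differential entropy (DE) values have already been computed for every time segment, channel and frequency band, stores them in a hypothetical (time, band, height, width) order, and places electrodes on the 8 × 9 map shape reported in the comparison tables through an illustrative, partial electrode-to-grid table (`GRID_POS`) rather than the paper's actual layout.

```python
import numpy as np

# Hypothetical, partial electrode-to-grid placement on an 8 x 9 map (row, col).
# A real mapping would cover every recorded channel.
GRID_POS = {
    "Fp1": (0, 3), "Fp2": (0, 5),
    "F3": (2, 2), "Fz": (2, 4), "F4": (2, 6),
    "C3": (4, 2), "Cz": (4, 4), "C4": (4, 6),
    "P3": (6, 2), "Pz": (6, 4), "P4": (6, 6),
    "O1": (7, 3), "O2": (7, 5),
}
BANDS = ("delta", "theta", "alpha", "beta", "gamma")

def build_4d(de, channels, grid_shape=(8, 9)):
    """Arrange DE features into a (time, band, height, width) array.

    de: array of shape (T, n_channels, n_bands), one DE value per
        time segment, channel and frequency band.
    channels: channel names in the same order as de's second axis.
    Grid cells without an electrode stay at zero.
    """
    t, n_ch, n_bands = de.shape
    if n_ch != len(channels):
        raise ValueError("channel count mismatch")
    out = np.zeros((t, n_bands) + tuple(grid_shape), dtype=de.dtype)
    for idx, name in enumerate(channels):
        row, col = GRID_POS[name]
        # Copy all segments' band values for this electrode into its grid cell.
        out[:, :, row, col] = de[:, idx, :]
    return out

rng = np.random.default_rng(0)
chans = list(GRID_POS)
de = rng.normal(size=(6, len(chans), len(BANDS)))  # 6 segments of synthetic DE values
x4d = build_4d(de, chans)
print(x4d.shape)  # (6, 5, 8, 9)
```

Cells without an electrode remain zero, so each time segment becomes a stack of 8 × 9 scalp images, one per frequency band.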

2. Materials and Methods

2.1. 4D Frequency Spatial Temporal Representation

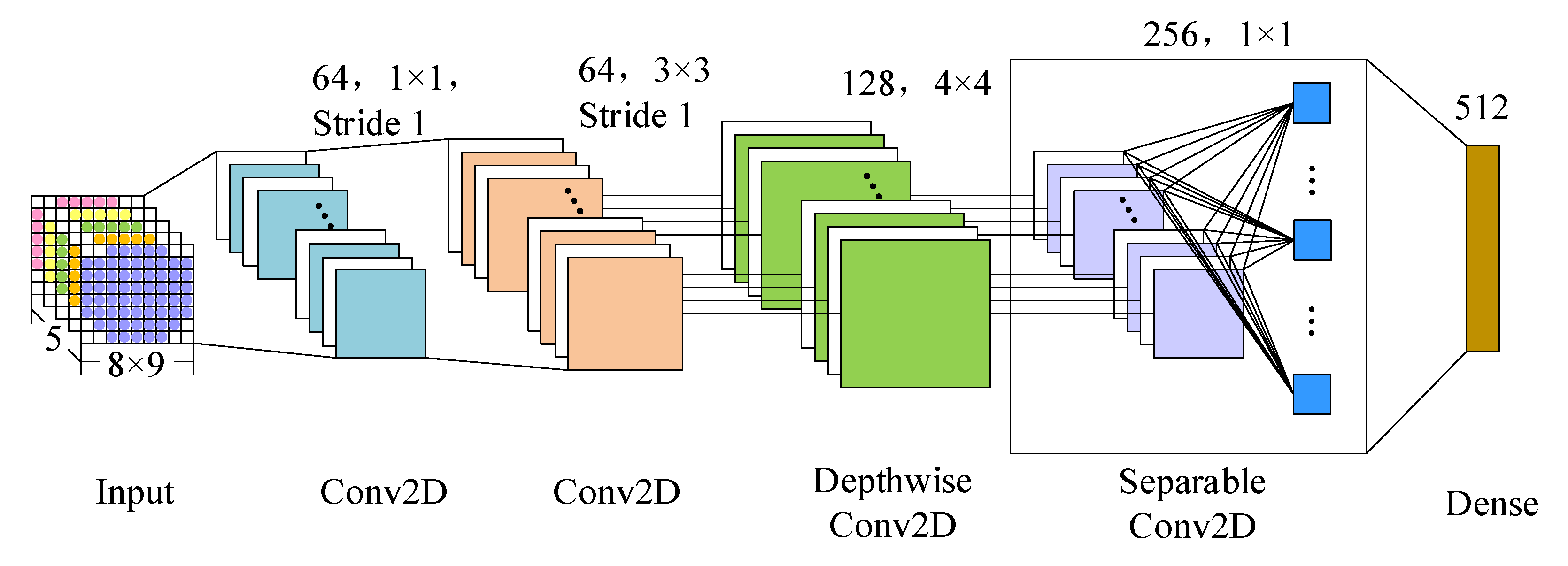

2.2. The Structure of FSTception

2.2.1. Frequency Spatial Characteristic Learning

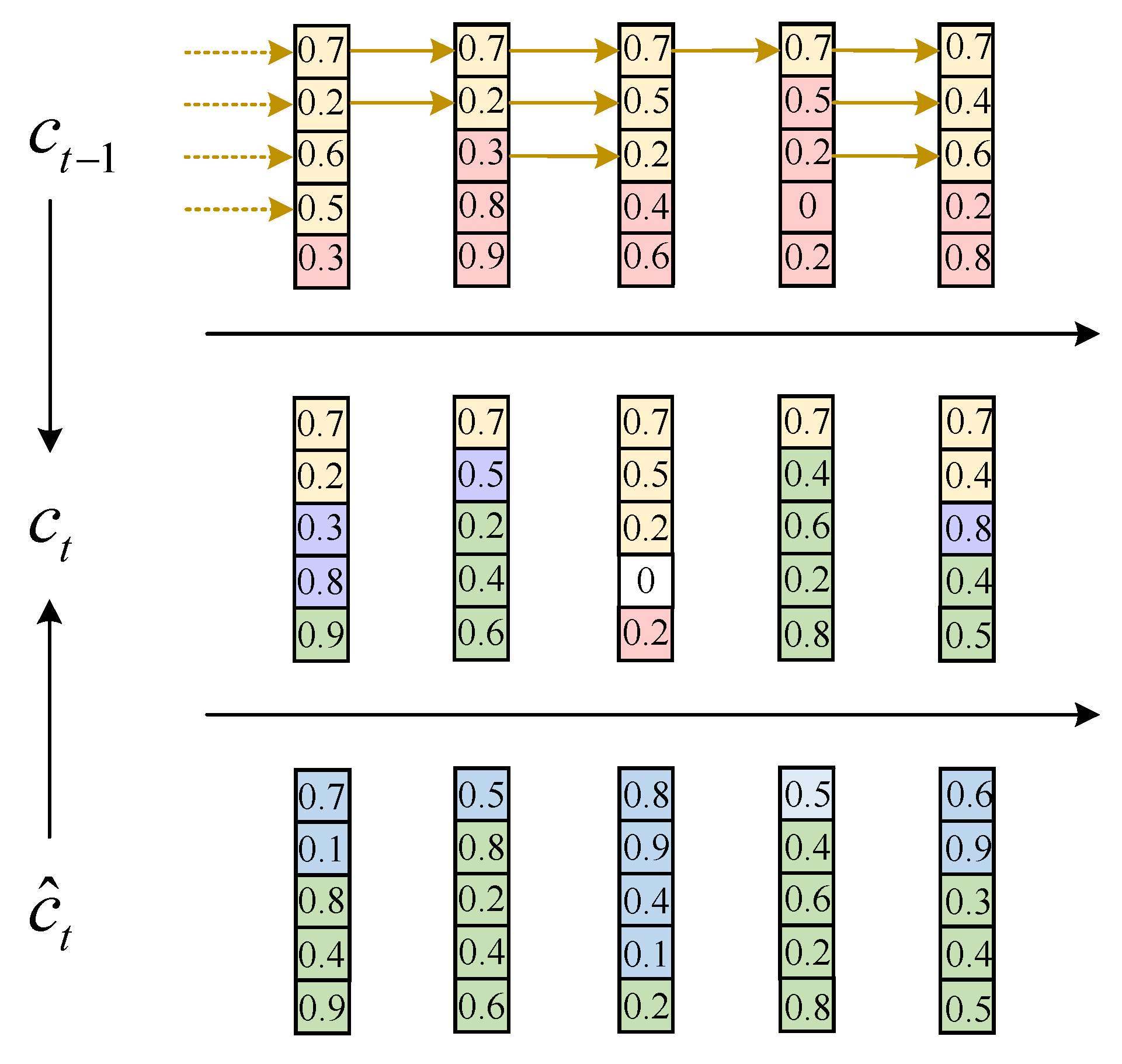
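The introduction attributes FSTception's suitability for small EEG datasets to depthwise separable convolution. The NumPy sketch below illustrates that operation in general terms, not FSTception's actual layers: it assumes stride 1, no padding and no bias, computes cross-correlation as deep learning frameworks do, and uses a 3 × 3 kernel and 16 output channels purely for illustration.

```python
import numpy as np

def depthwise_separable_conv2d(x, dw_kernels, pw_weights):
    """Valid (no padding, stride 1) depthwise separable 2D convolution.

    x:          (C_in, H, W) input, e.g. 5 frequency bands of one 8 x 9 map
    dw_kernels: (C_in, k, k) one spatial filter per input channel (depthwise step)
    pw_weights: (C_out, C_in) 1 x 1 filters that mix channels (pointwise step)
    Returns an array of shape (C_out, H - k + 1, W - k + 1).
    """
    c_in, h, w = x.shape
    k = dw_kernels.shape[-1]
    oh, ow = h - k + 1, w - k + 1
    depthwise = np.zeros((c_in, oh, ow))
    for c in range(c_in):              # each channel is filtered on its own
        for i in range(oh):
            for j in range(ow):
                depthwise[c, i, j] = np.sum(x[c, i:i + k, j:j + k] * dw_kernels[c])
    # Pointwise step: a 1 x 1 convolution is a matrix product over channels.
    return np.einsum("oc,chw->ohw", pw_weights, depthwise)

def param_counts(c_in, c_out, k):
    """Weight counts (no biases) of a standard vs. a depthwise separable k x k conv."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out
    return standard, separable

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8, 9))
y = depthwise_separable_conv2d(x, rng.normal(size=(5, 3, 3)), rng.normal(size=(16, 5)))
print(y.shape)                 # (16, 6, 7)
print(param_counts(5, 16, 3))  # (720, 125)
```

With 5 input and 16 output channels the factorized form needs 125 weights instead of 720, which illustrates why this design is attractive when training samples are scarce.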

2.2.2. Temporal Characteristic Learning
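Temporal learning relies on the ON-LSTM structure named in the introduction (Shen et al. [35]). The sketch below implements one ON-LSTM step in NumPy following the gate equations of that paper, with two simplifications that are assumptions of this example: the master gates are computed per neuron instead of per chunk, and all six gate pre-activations come from one stacked parameter matrix. It illustrates the mechanism only and is not FSTception's temporal module.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cumax(z):
    """Cumulative softmax: non-decreasing values that end at 1."""
    e = np.exp(z - z.max())
    return np.cumsum(e / e.sum())

def on_lstm_step(x, h_prev, c_prev, W, U, b):
    """One ON-LSTM step for a single, unbatched sample.

    W: (6n, d), U: (6n, n), b: (6n,) stack the forget, input and output
    gates, the cell candidate, and the master forget / master input gates.
    """
    n = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    f = sigmoid(z[:n])
    i = sigmoid(z[n:2 * n])
    o = sigmoid(z[2 * n:3 * n])
    c_hat = np.tanh(z[3 * n:4 * n])
    master_f = cumax(z[4 * n:5 * n])          # rises from ~0 to 1
    master_i = 1.0 - cumax(z[5 * n:6 * n])    # falls from ~1 to 0
    omega = master_f * master_i               # overlap of the two master gates
    f_hat = f * omega + (master_f - omega)
    i_hat = i * omega + (master_i - omega)
    c = f_hat * c_prev + i_hat * c_hat
    h = o * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
d, n, T = 10, 8, 6                            # input size, hidden size, time steps
W = rng.normal(scale=0.1, size=(6 * n, d))
U = rng.normal(scale=0.1, size=(6 * n, n))
b = np.zeros(6 * n)
h, c = np.zeros(n), np.zeros(n)
for x_t in rng.normal(size=(T, d)):
    h, c = on_lstm_step(x_t, h, c, W, U, b)
print(h.shape)  # (8,)
```

Because `cumax` rises monotonically, neurons toward the end of the state vector tend to keep their memory longer than those at the start, which is how ON-LSTM separates slowly and quickly changing information.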

2.3. Classification

3. Results

3.1. Experimental Setup

3.2. Dataset

3.2.1. SEED Dataset

3.2.2. DEAP Dataset

3.3. EEG Data Preprocessing

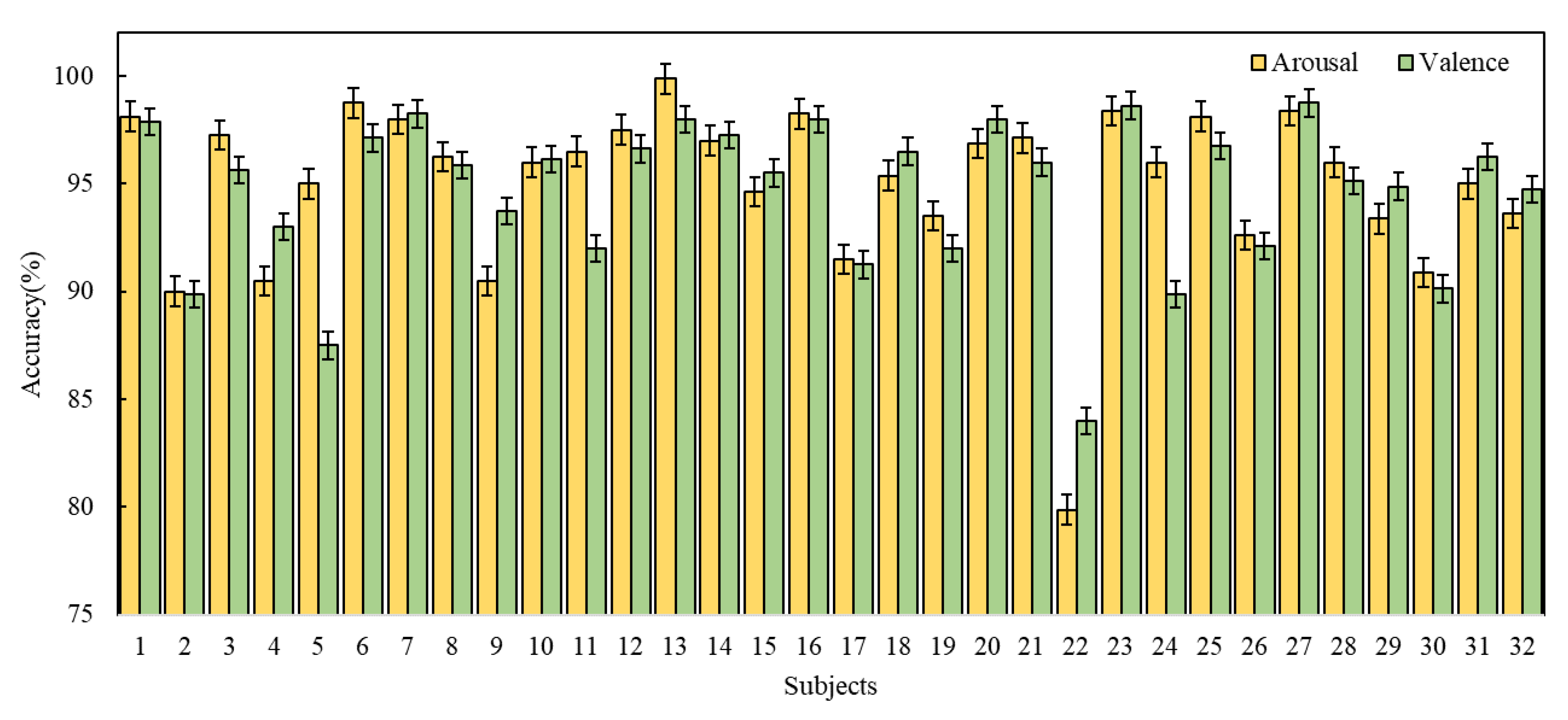

3.4. Results and Discussion

3.5. Method Comparison

- HCNN [38]: It uses a hierarchical CNN for EEG emotion classification, taking two-dimensional maps of EEG differential entropy features as the input of the network, and shows that the β and γ bands are more suitable for emotion recognition. The method considers both the spatial and the frequency information of the EEG signal.

- RGNN [39]: It uses the adjacency matrix of a graph neural network to model the relationships between EEG channels, capturing local and global inter-channel relationships simultaneously. The connections and sparsity of the adjacency matrix are specified manually, guided by neuroscience theories of brain organization. The method shows that the relationships between global and local channels in the left and right hemispheres play an important role in emotion recognition.

- BDGLS [40]: It uses differential entropy features as input data. By combining the advantages of dynamical graph convolutional neural networks and the broad learning system, it improves emotion recognition accuracy on EEG features from the full frequency band. The method considers the frequency and spatial information of the EEG signal simultaneously.

- PCRNN [32]: It first uses a CNN module to extract spatial features from each 2D EEG topographic map, then uses an LSTM to extract temporal features from the sequence of EEG vectors, and finally fuses the spatial and temporal features for emotion classification.

- 4D-CRNN [41]: It first extracts features from the EEG signals to construct a four-dimensional feature structure, then uses the convolutional part of a convolutional recurrent neural network to extract spatial and frequency features, uses an LSTM to extract temporal features from the resulting EEG vector sequences, and finally performs EEG emotion classification.

- 4D-aNN (DE) [42]: It takes as input a 4D spatial-spectral-temporal representation containing the spatial, spectral, and temporal information of the EEG signal, and adds an attention mechanism to both its CNN module and its bidirectional LSTM module. This method also considers the temporal, spatial, and frequency information of the EEG.

- ACRNN [43]: It is a convolutional recurrent neural network based on the attention mechanism. It first uses attention to assign weights across channels, then uses a CNN to extract the spatial information of the EEG, and finally uses an RNN to extract temporal features. The method considers spatial and temporal information for the emotion classification of EEG signals.
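Several of the compared methods weight EEG channels before extracting spatial or temporal features; ACRNN [43], for example, uses attention to distribute weights between channels. The sketch below shows a generic softmax channel attention in NumPy. The scoring network (mean over time, one tanh layer, one linear layer) and the names `w1` and `w2` are assumptions for illustration, not ACRNN's published formulation.

```python
import numpy as np

def channel_attention(x, w1, w2):
    """Re-weight the channels of one EEG segment with softmax attention.

    x:  (channels, samples) segment
    w1: (hidden, channels) and w2: (channels, hidden) are hypothetical
        learned weights of a small scoring network.
    Returns the re-weighted segment and the per-channel weights.
    """
    summary = x.mean(axis=1)             # one scalar per channel (mean over time)
    hidden = np.tanh(w1 @ summary)       # (hidden,)
    scores = w2 @ hidden                 # (channels,) one score per channel
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()             # softmax: positive weights summing to 1
    return weights[:, None] * x, weights

rng = np.random.default_rng(0)
seg = rng.normal(size=(32, 128))         # 32 channels, 1 s at 128 Hz
out, w = channel_attention(seg, rng.normal(size=(8, 32)), rng.normal(size=(32, 8)))
print(out.shape, w.argmax())             # re-weighted segment shape, most weighted channel
```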

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Bota, P.J.; Wang, C.; Fred, A.L.N.; Da Silva, H.P. A review, current challenges, and future possibilities on emotion recognition using machine learning and physiological signals. IEEE Access 2019, 7, 140990–141020.

- [2] Huang, Y.A.; Dupont, P.; Van de Vliet, L.; Jastorff, J.; Peeters, R.; Theys, T.; Van Loon, J.; Van Paesschen, V.; Van Den Stock, J.; Vandenbulcke, M. Network level characteristics in the emotion recognition network after unilateral temporal lobe surgery. Eur. J. Neurosci. 2020, 52, 3470–3484.

- [3] Egger, M.; Ley, M.; Hanke, S. Emotion recognition from physiological signal analysis: A review. Electron. Notes Theor. Comput. Sci. 2019, 343, 35–55.

- [4] Luo, J.; Tian, Y.; Yu, H.; Chen, Y.; Wu, M. Semi-Supervised Cross-Subject Emotion Recognition Based on Stacked Denoising Autoencoder Architecture Using a Fusion of Multi-Modal Physiological Signals. Entropy 2022, 24, 577.

- [5] Yao, L.; Wang, M.; Lu, Y.; Li, H.; Zhang, X. EEG-Based Emotion Recognition by Exploiting Fused Network Entropy Measures of Complex Networks across Subjects. Entropy 2021, 23, 984.

- [6] Keshmiri, S.; Shiomi, M.; Ishiguro, H. Entropy of the Multi-Channel EEG Recordings Identifies the Distributed Signatures of Negative, Neutral and Positive Affect in Whole-Brain Variability. Entropy 2019, 21, 1228.

- [7] Pan, L.; Wang, S.; Yin, Z.; Song, A. Recognition of Human Inner Emotion Based on Two-Stage FCA-ReliefF Feature Optimization. Inf. Technol. Control 2022, 51, 32–47.

- [8] Gao, Z.; Cui, X.; Wan, W.; Gu, Z. Recognition of Emotional States Using Multiscale Information Analysis of High Frequency EEG Oscillations. Entropy 2019, 21, 609.

- [9] Chao, H.; Dong, L.; Liu, Y.; Lu, B. Emotion recognition from multiband EEG signals using CapsNet. Sensors 2019, 19, 2212.

- [10] Catrambone, V.; Greco, A.; Scilingo, E.P.; Valenza, G. Functional Linear and Nonlinear Brain–Heart Interplay during Emotional Video Elicitation: A Maximum Information Coefficient Study. Entropy 2019, 21, 892.

- [11] Krishnan, P.T.; Joseph Raj, A.N.; Rajangam, V. Emotion classification from speech signal based on empirical mode decomposition and non-linear features. Complex Intell. Syst. 2021, 7, 1919–1934.

- [12] Danelljan, M.; Robinson, A.; Shahbaz Khan, F.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 472–488.

- [13] Zhang, J.; Min, Y. Four-Classes Human Emotion Recognition Via Entropy Characteristic and Random Forest. Inf. Technol. Control 2020, 49, 285–298.

- [14] Cao, R.; Shi, H.; Wang, X.; Huo, S.; Hao, Y.; Wang, B.; Guo, H.; Xiang, J. Hemispheric Asymmetry of Functional Brain Networks under Different Emotions Using EEG Data. Entropy 2020, 22, 939.

- [15] Liu, Y.; Sourina, O. Real-time fractal-based valence level recognition from EEG. In Transactions on Computational Science XVIII; Springer: Berlin/Heidelberg, Germany, 2013; pp. 101–120.

- [16] Lin, Y.P.; Wang, C.H.; Jung, T.P.; Wu, T.L.; Jeng, S.K.; Duann, J.R.; Chen, J.H. EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806.

- [17] Altan, G.; Yayık, A.; Kutlu, Y. Deep learning with ConvNet predicts imagery tasks through EEG. Neural Process. Lett. 2021, 53, 2917–2932.

- [18] Subha, D.P.; Joseph, P.K.; Acharya, U.R.; Lim, C.M. EEG signal analysis: A survey. J. Med. Syst. 2010, 34, 195–212.

- [19] Zou, X.; Feng, L.; Sun, H. Compressive Sensing of Multichannel EEG Signals Based on Graph Fourier Transform and Cosparsity. Neural Process. Lett. 2020, 51, 1227–1236.

- [20] Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

- [21] Khalil, R.A.; Jones, E.; Babar, M.I.; Jan, T.; Zafar, M.H.; Alhussain, T. Speech emotion recognition using deep learning techniques: A review. IEEE Access 2019, 7, 117327–117345.

- [22] Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 2018, 13, 55–75.

- [23] Komolovaitė, D.; Maskeliūnas, R.; Damaševičius, R. Deep Convolutional Neural Network-Based Visual Stimuli Classification Using Electroencephalography Signals of Healthy and Alzheimer’s Disease Subjects. Life 2022, 12, 374.

- [24] Thammasan, N.; Fukui, K.I.; Numao, M. Application of deep belief networks in EEG-based dynamic music-emotion recognition. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 881–888.

- [25] Tripathi, S.; Acharya, S.; Sharma, R.D.; Mittal, S.; Bhattacharya, S. Using deep and convolutional neural networks for accurate emotion classification on DEAP dataset. In Proceedings of the Twenty-ninth IAAI Conference, San Francisco, CA, USA, 6–9 February 2017.

- [26] Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Shalaby, M.A.W. EEG-based emotion recognition using 3D convolutional neural networks. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 329–337.

- [27] Yang, Y.; Wu, Q.; Fu, Y.; Chen, X. Continuous convolutional neural network with 3D input for EEG-based emotion recognition. In International Conference on Neural Information Processing; Springer: Cham, Switzerland, 2018; pp. 433–443.

- [28] Riezler, S.; Hagmann, M. Validity, Reliability, and Significance; Springer: Cham, Switzerland, 2022.

- [29] Zheng, W.L.; Lu, B.L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- [30] Meng-meng, Y.A.N.; Zhao, L.V.; Wen-hui, S.U.N. Extraction of spatial features of emotional EEG signals based on common spatial pattern. J. Graph. 2020, 41, 424.

- [31] Liu, C.Y.; Li, W.Q.; Bi, X.J. Research on EEG emotion recognition based on RCNN-LSTM. Acta Autom. Sin. 2020, 45, 1–9.

- [32] Yang, Y.; Wu, Q.; Qiu, M.; Wang, Y.; Chen, X. Emotion recognition from multi-channel EEG through parallel convolutional recurrent neural network. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.

- [33] Duan, R.N.; Zhu, J.Y.; Lu, B.L. Differential entropy feature for EEG-based emotion classification. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; pp. 81–84.

- [34] Shi, L.C.; Jiao, Y.Y.; Lu, B.L. Differential entropy feature for EEG-based vigilance estimation. In Proceedings of the 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Osaka, Japan, 3–7 July 2013; pp. 6627–6630.

- [35] Shen, Y.; Tan, S.; Sordoni, A.; Courville, A. Ordered neurons: Integrating tree structures into recurrent neural networks. arXiv 2018, arXiv:1810.09536.

- [36] Zheng, W.L.; Liu, W.; Lu, Y.; Lu, B.L.; Cichocki, A. EmotionMeter: A multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 2018, 49, 1110–1122.

- [37] Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- [38] Li, J.; Zhang, Z.; He, H. Hierarchical convolutional neural networks for EEG-based emotion recognition. Cogn. Comput. 2018, 10, 368–380.

- [39] Zhong, P.; Wang, D.; Miao, C. EEG-based emotion recognition using regularized graph neural networks. IEEE Trans. Affect. Comput. 2020, 13, 1290–1301.

- [40] Wang, X.H.; Zhang, T.; Xu, X.M.; Chen, L.; Xing, X.F.; Chen, C.P. EEG emotion recognition using dynamical graph convolutional neural networks and broad learning system. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 1240–1244.

- [41] Shen, F.; Dai, G.; Lin, G.; Zhang, J.; Kong, W.; Zeng, H. EEG-based emotion recognition using 4D convolutional recurrent neural network. Cogn. Neurodynamics 2020, 14, 815–828.

- [42] Xiao, G.; Shi, M.; Ye, M.; Xu, B.; Chen, Z.; Ren, Q. 4D attention-based neural network for EEG emotion recognition. Cogn. Neurodynamics 2022, 16, 805–818.

- [43] Tao, W.; Li, C.; Song, R.; Cheng, J.; Liu, Y.; Wan, F.; Chen, X. EEG-based emotion recognition via channel-wise attention and self attention. IEEE Trans. Affect. Comput. 2020.

| Article | Method | Application | Dataset | Accuracy (%) |
|---|---|---|---|---|
| Komolovaitė et al. [23] | EEGNet SSVEP | Alzheimer research | Figshare website | 50.2 |
| Thammasan et al. [24] | Deep Belief Network (DBN) | Emotion recognition in music listening | 15 recruited healthy students (valence/arousal emotion types) | 82.42/88.24 |
| Tripathi et al. [25] | Convolutional Neural Network (CNN) | Automatic emotion recognition | DEAP (valence/arousal emotion types) | 73.36/81.41 |
| Salama et al. [26] | 3-Dimensional Convolutional Neural Networks (3D-CNN) | Emotion recognition | DEAP (valence/arousal emotion types) | 88.49/87.44 |
| Yang et al. [27] | Convolutional Neural Network (CNN) | Automatic emotion recognition | DEAP (valence/arousal emotion types) | 90.24/89.45 |
| Zheng et al. [29] | Deep Belief Network (DBN) | Emotion recognition | 15 recruited subjects | 86.65 |
| Meng-meng et al. [30] | Common Spatial Pattern (CSP) | Emotion recognition | 6 recruited healthy students | 87.54 |
| Liu et al. [31] | Recurrent Convolutional Neural Network and Long Short-Term Memory (RCNN-LSTM) | Automatic emotion recognition | DEAP | 96.63 |

| Patterns | Frequency | Brain State | Awareness |
|---|---|---|---|
| (δ) Delta | 1–4 Hz | Deep sleep pattern | Lower |
| (θ) Theta | 4–8 Hz | Light sleep pattern | Low |
| (α) Alpha | 8–13 Hz | Eyes closed, relaxed state | Medium |
| (β) Beta | 13–30 Hz | Active thinking, focus, high alert, anxious | High |
| (γ) Gamma | 30–50 Hz | Mentally active and hypertensive | Higher |
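Per-band features are computed over band boundaries like those in the table above. A common feature, used by several of the compared methods, is differential entropy (DE), which for a band-limited segment assumed to be Gaussian with variance σ² reduces to 0.5 ln(2πeσ²) [33]. The sketch below is a minimal illustration: it substitutes a crude FFT mask for a proper band-pass filter (the filter design is an assumption, not taken from this paper) and computes one DE value per band.

```python
import numpy as np

# Band edges (Hz) taken from the table above.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 50)}

def band_filter_fft(x, fs, lo, hi):
    """Crude band-pass: zero every FFT bin outside [lo, hi) Hz."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spec[(freqs < lo) | (freqs >= hi)] = 0
    return np.fft.irfft(spec, n=x.shape[-1])

def differential_entropy(x):
    """DE of a segment under a Gaussian assumption: 0.5 * ln(2 * pi * e * var)."""
    return 0.5 * np.log(2 * np.pi * np.e * np.var(x))

def band_de(x, fs):
    """One DE value per frequency band for a single-channel segment."""
    return {name: differential_entropy(band_filter_fft(x, fs, lo, hi))
            for name, (lo, hi) in BANDS.items()}

fs = 128
t = np.arange(fs) / fs                                    # 1 s segment
rng = np.random.default_rng(0)
segment = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=fs)
feats = band_de(segment, fs)
print(max(feats, key=feats.get))  # alpha, the band containing the 10 Hz rhythm
```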

| Name | Size | Contents |
|---|---|---|
| Data | 40 × 40 × 8064 | videos × channels × data |
| Labels | 40 × 4 | videos × labels (valence, arousal, dominance, liking) |
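The DEAP arrays above come from the preprocessed release, which is downsampled to 128 Hz, so each 8064-sample trial is 63 s long: a 3 s pre-trial baseline followed by 60 s of video, with the first 32 of the 40 channels being EEG. The sketch below splits a trial into 1 s windows and subtracts the average baseline window, a baseline-removal scheme common in the DEAP literature; whether this paper used the same scheme, window length, or label threshold is an assumption.

```python
import numpy as np

FS = 128          # sampling rate of the preprocessed DEAP release (Hz)
BASELINE_S = 3    # each trial starts with a 3 s pre-trial baseline

def remove_baseline_and_segment(trial, win=FS):
    """Split one trial (channels, 8064) into baseline-corrected windows.

    Assumed scheme: average the 1 s windows of the baseline and subtract
    that average from every 1 s window of the 60 s stimulus part.
    Returns an array of shape (n_windows, channels, win).
    """
    n_ch = trial.shape[0]
    n_base = BASELINE_S * FS
    base = trial[:, :n_base].reshape(n_ch, n_base // win, win).mean(axis=1)
    stim = trial[:, n_base:]
    n_win = stim.shape[1] // win
    segs = stim[:, :n_win * win].reshape(n_ch, n_win, win) - base[:, None, :]
    return segs.transpose(1, 0, 2)

def binarize_labels(labels, threshold=5.0):
    """Hypothetical high(1)/low(0) split of the valence and arousal ratings."""
    valence = (labels[:, 0] > threshold).astype(int)
    arousal = (labels[:, 1] > threshold).astype(int)
    return valence, arousal

rng = np.random.default_rng(0)
trial = rng.normal(size=(32, 8064))               # EEG channels of one synthetic trial
print(remove_baseline_and_segment(trial).shape)   # (60, 32, 128)
```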

| Subjects | Accuracy | Subjects | Accuracy | Subjects | Accuracy | Subjects | Accuracy |
|---|---|---|---|---|---|---|---|
| 1 | 92.45% | 5 | 95.06% | 9 | 95.89% | 13 | 96.12% |
| 2 | 96.86% | 6 | 97.63% | 10 | 96.83% | 14 | 95.32% |
| 3 | 94.17% | 7 | 93.99% | 11 | 95.83% | 15 | 91.50% |
| 4 | 99.59% | 8 | 96.15% | 12 | 95.03% | | |
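The per-subject results can be summarized by their mean and spread. The snippet below copies the 15 SEED accuracies from the table above; their mean is 95.49%, which matches the SEED accuracy reported for 4D-FSTception in the comparison tables. Using the sample standard deviation (`ddof=1`) is an assumption, and the ± values in the comparison tables may be computed differently, so the printed spread need not match them.

```python
import numpy as np

# Per-subject SEED accuracies (%), subjects 1 to 15, copied from the table above.
seed_acc = np.array([92.45, 96.86, 94.17, 99.59, 95.06, 97.63, 93.99, 96.15,
                     95.89, 96.83, 95.83, 95.03, 96.12, 95.32, 91.50])
mean = seed_acc.mean()
std = seed_acc.std(ddof=1)   # sample standard deviation across subjects
print(f"SEED: {mean:.2f} ± {std:.2f} %")  # the mean part prints as 95.49
```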

| Subjects | Accuracy | Subjects | Accuracy | Subjects | Accuracy | Subjects | Accuracy |
|---|---|---|---|---|---|---|---|
| 1 | 98.13% | 9 | 90.50% | 17 | 91.50% | 25 | 98.13% |
| 2 | 90.00% | 10 | 96.00% | 18 | 95.38% | 26 | 92.63% |
| 3 | 97.25% | 11 | 96.50% | 19 | 93.50% | 27 | 98.38% |
| 4 | 90.50% | 12 | 97.50% | 20 | 96.88% | 28 | 96.00% |
| 5 | 95.00% | 13 | 99.86% | 21 | 97.13% | 29 | 93.38% |
| 6 | 98.75% | 14 | 97.00% | 22 | 79.88% | 30 | 90.88% |
| 7 | 98.00% | 15 | 94.63% | 23 | 98.38% | 31 | 95.00% |
| 8 | 96.25% | 16 | 98.25% | 24 | 96.00% | 32 | 93.63% |

| Subjects | Accuracy | Subjects | Accuracy | Subjects | Accuracy | Subjects | Accuracy |
|---|---|---|---|---|---|---|---|
| 1 | 97.88% | 9 | 93.75% | 17 | 91.25% | 25 | 96.75% |
| 2 | 89.88% | 10 | 96.13% | 18 | 96.50% | 26 | 92.13% |
| 3 | 95.63% | 11 | 92.00% | 19 | 92.00% | 27 | 98.75% |
| 4 | 93.00% | 12 | 96.63% | 20 | 98.00% | 28 | 95.13% |
| 5 | 87.50% | 13 | 98.00% | 21 | 96.00% | 29 | 94.88% |
| 6 | 97.13% | 14 | 97.25% | 22 | 84.00% | 30 | 90.13% |
| 7 | 98.25% | 15 | 95.50% | 23 | 98.63% | 31 | 96.25% |
| 8 | 95.88% | 16 | 98.00% | 24 | 89.88% | 32 | 94.75% |

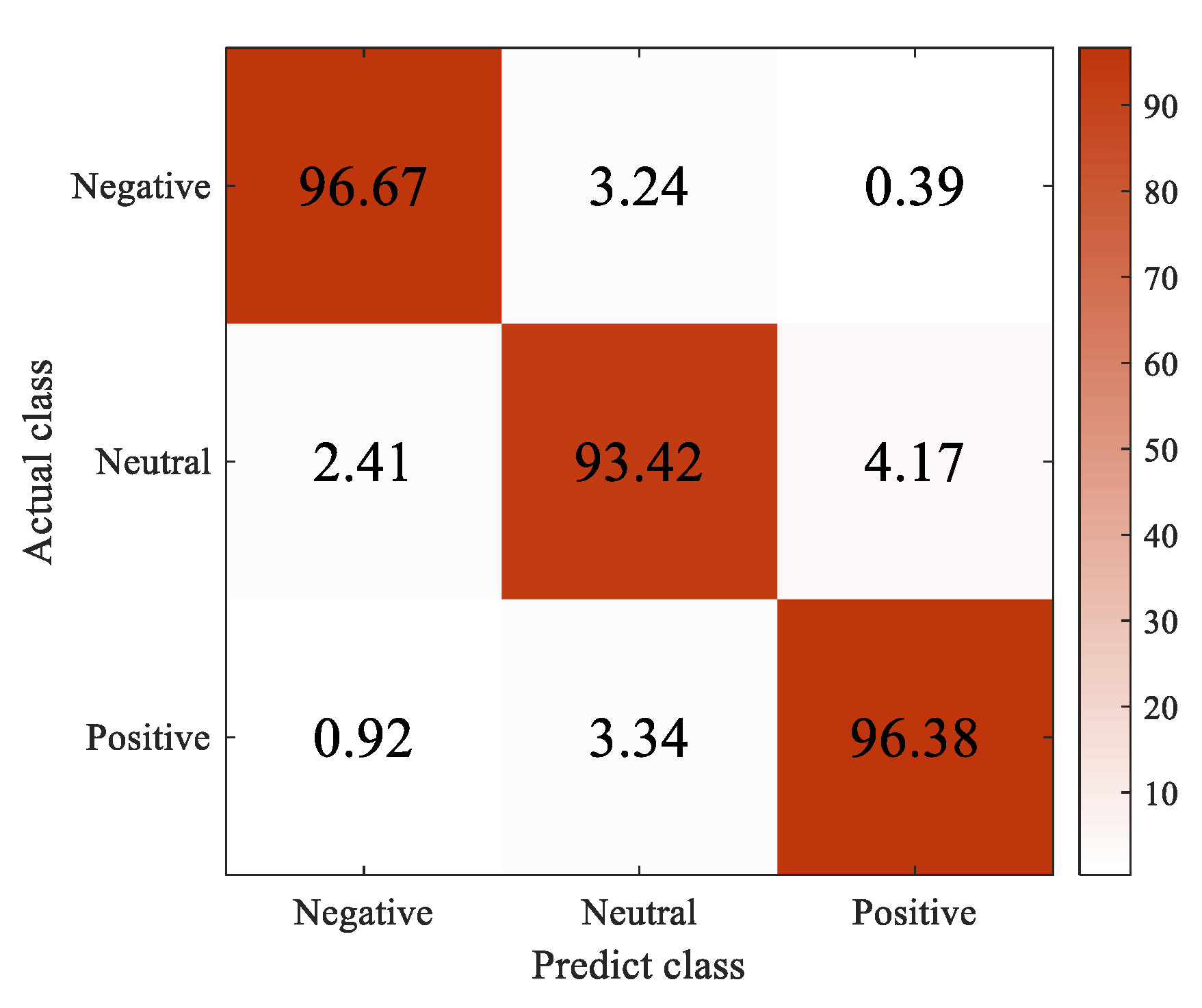

| Classes | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Negative | 96.67% | 94.87% | 92.50% | 93.67% |
| Neutral | 93.42% | 94.29% | 93.94% | 96.12% |
| Positive | 96.38% | 98.96% | 95.00% | 96.94% |
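The precision, recall and F1 columns above are standard one-vs-rest metrics. The sketch below computes them from a confusion matrix; treating per-class accuracy as one-vs-rest accuracy, (TP + TN) / N, is an assumption, since the table does not define it. `f1_from` recomputes F1 as the harmonic mean of precision and recall, which reproduces, for example, the 93.67% F1 of the Negative class.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics from a confusion matrix cm[true, predicted]."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp        # predicted as the class, but wrong
    fn = cm.sum(axis=1) - tp        # belonged to the class, but missed
    tn = total - tp - fp - fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / total    # assumed one-vs-rest accuracy per class
    return accuracy, precision, recall, f1

def f1_from(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

print(round(f1_from(94.87, 92.50), 2))  # 93.67, the Negative row above
```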

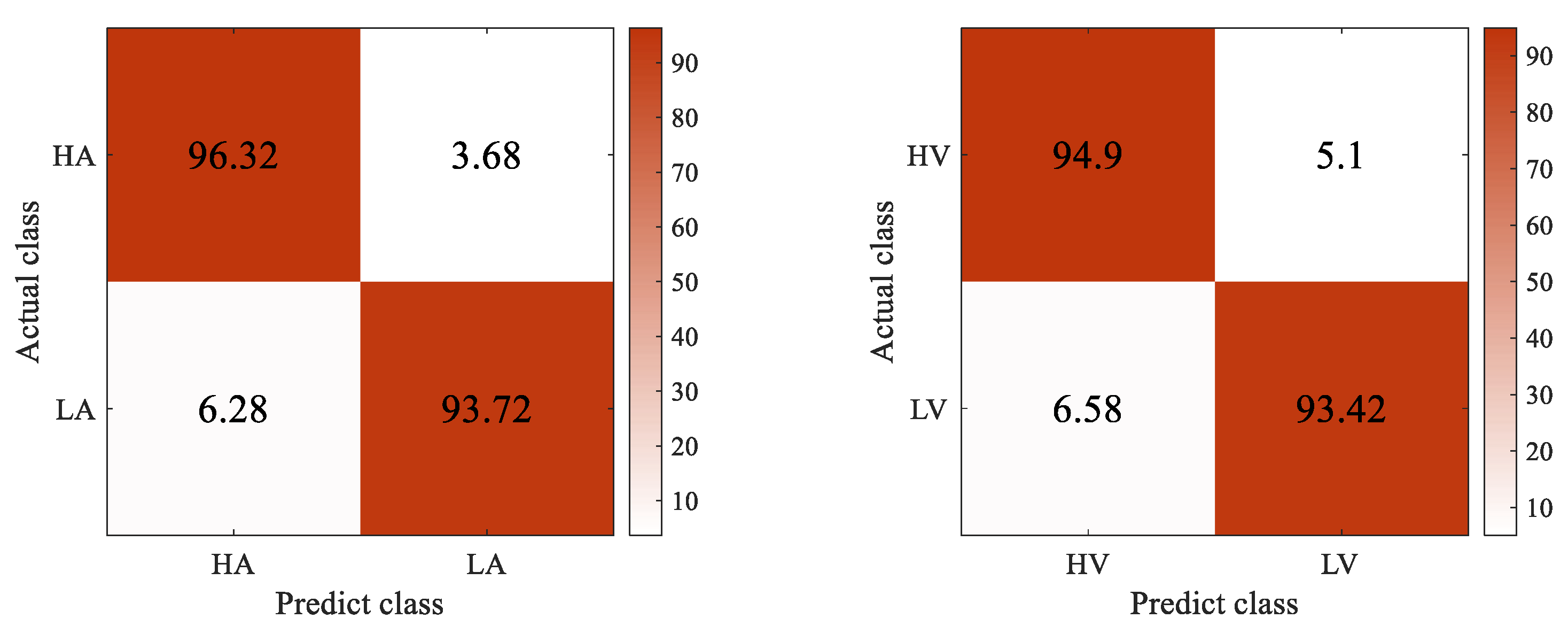

| Valence/Arousal | Class | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Valence | High | 94.90% | 98.48% | 93.94% | 96.47% |
| | Low | 93.42% | 98.41% | 93.18% | 96.12% |
| Arousal | High | 96.32% | 98.02% | 91.67% | 94.74% |
| | Low | 93.72% | 93.30% | 95.37% | 96.71% |

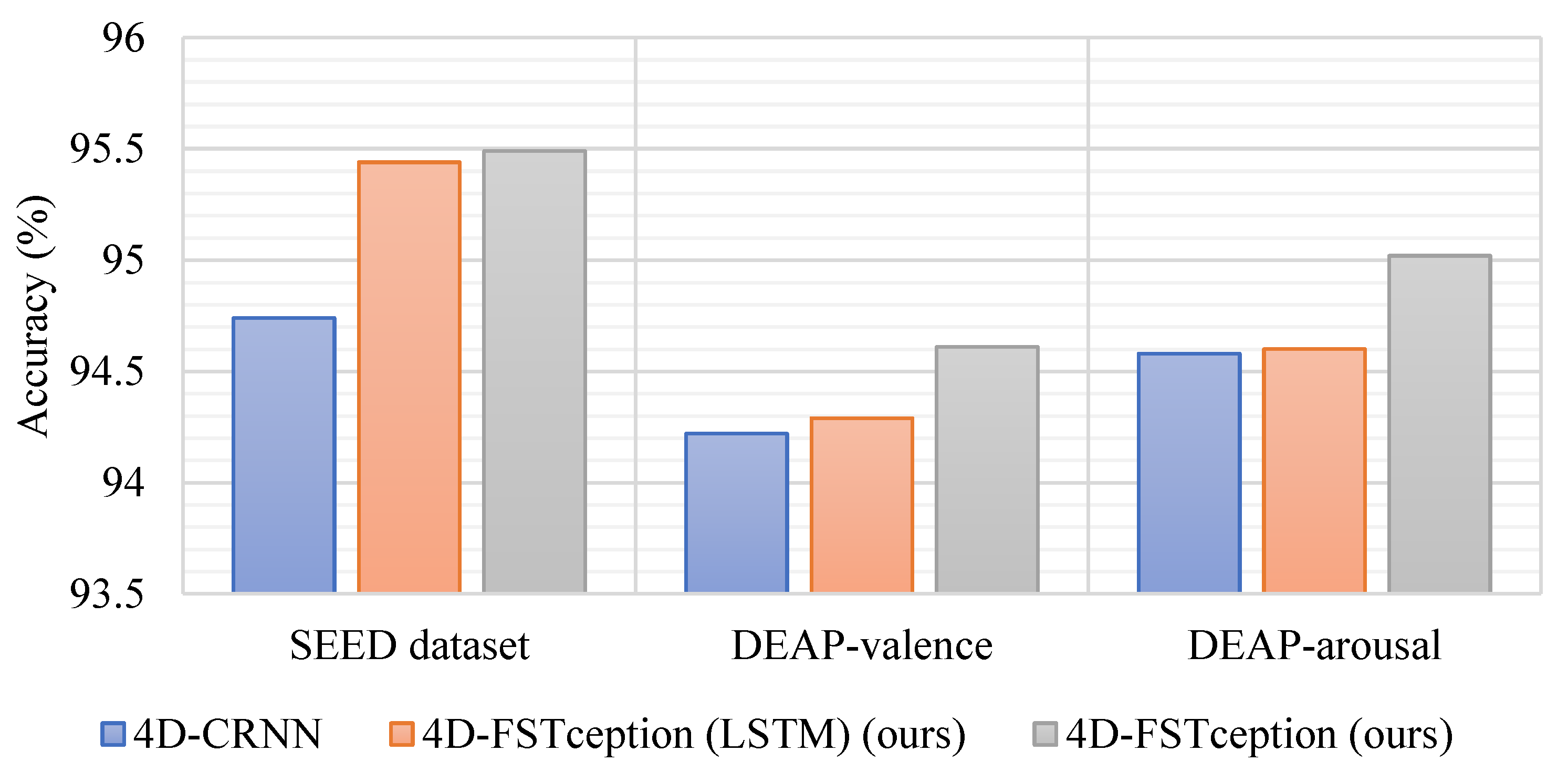

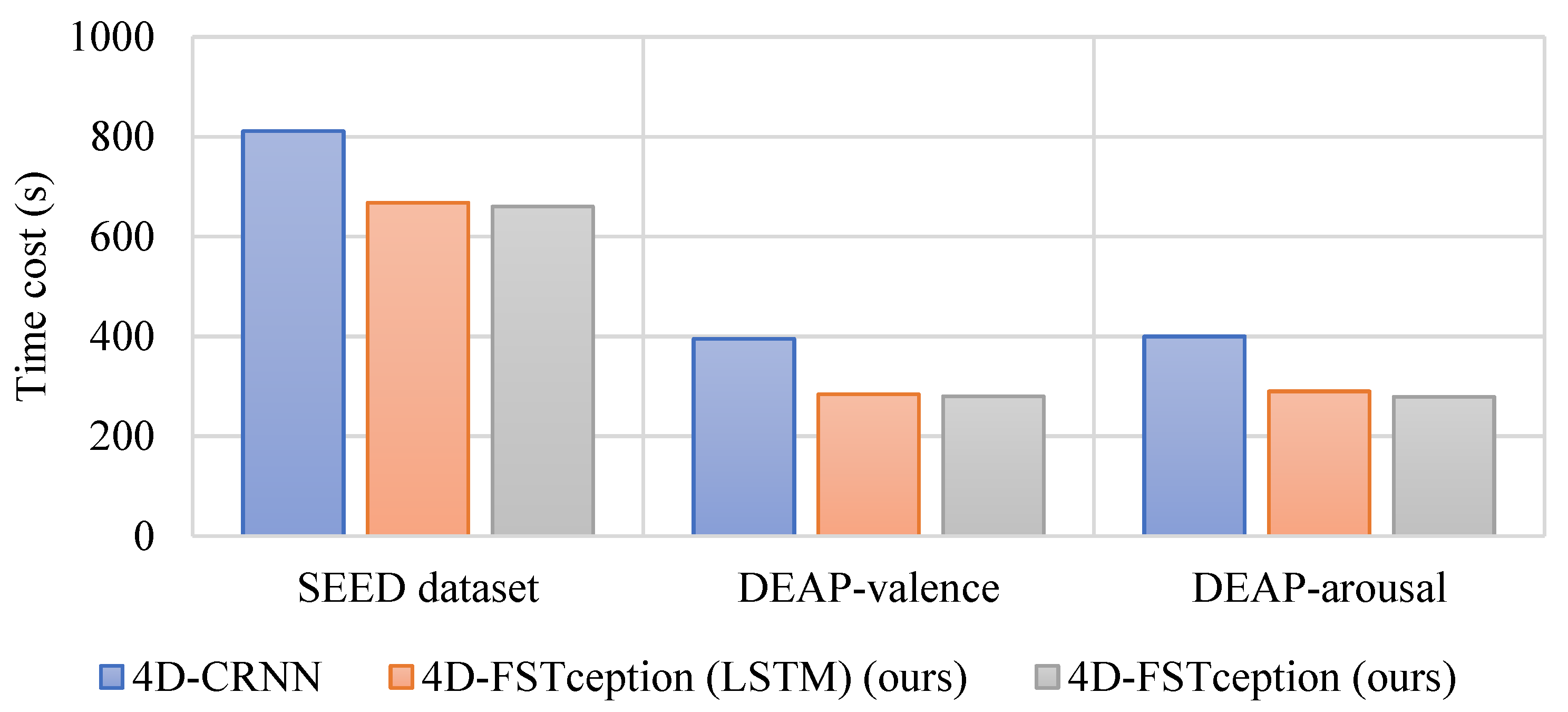

| Method | Map Shape | SEED | DEAP-Valence | DEAP-Arousal | |||||

|---|---|---|---|---|---|---|---|---|---|

| Acc (%) | Time Cost (s) | Acc (%) | Time Cost (s) | Acc (%) | Time Cost (s) | FLOPS (G) | Params (M) | ||

| HCNN [38] | 19 × 19 | 88.60 | 3600 | - | - | - | - | - | - |

| 4D-CRNN [42] | 8 × 9 | 94.74 | 811 | 94.22 | 395 | 94.58 | 400 | 18.63 | 15.8 |

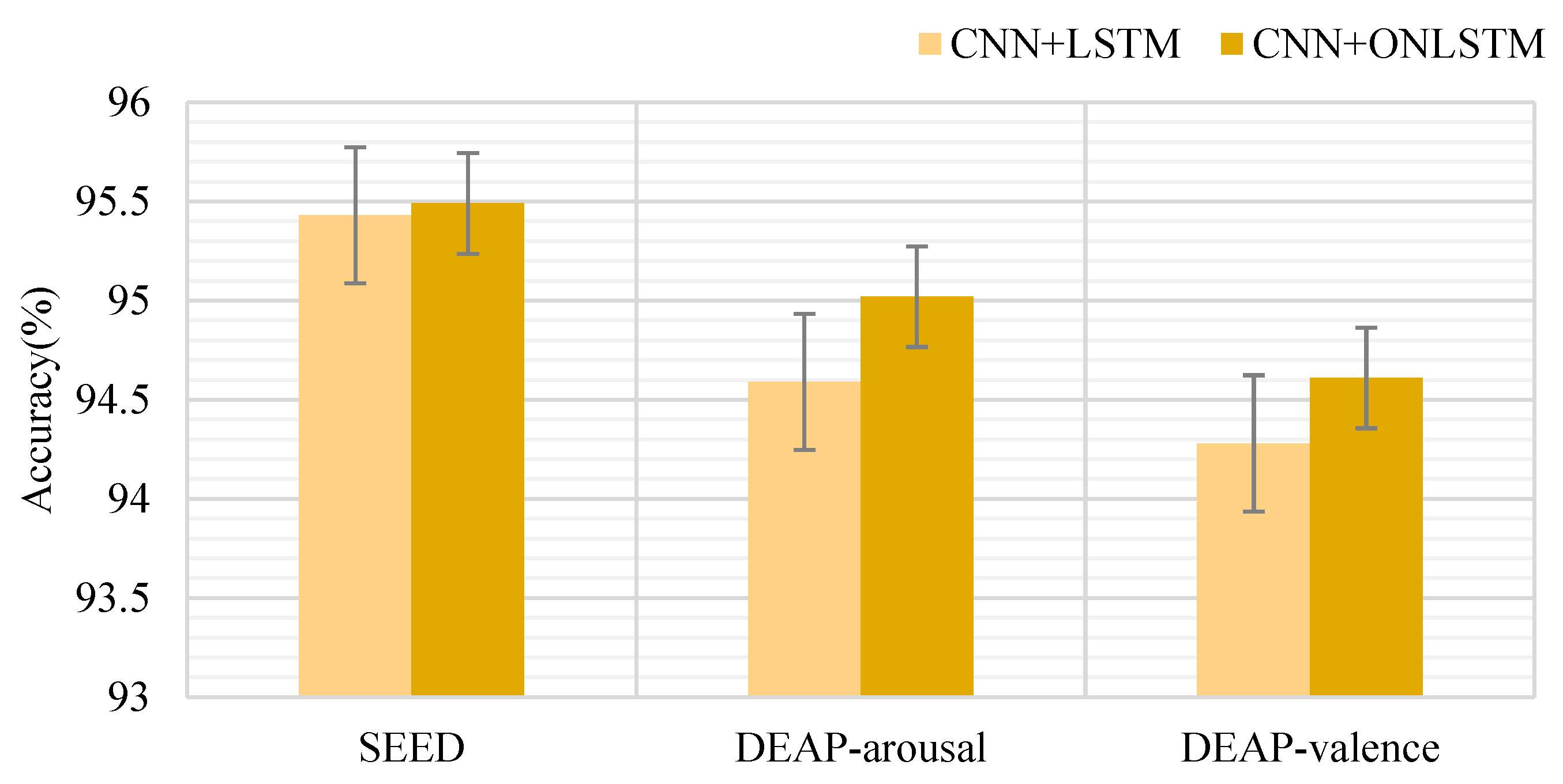

| 4D-FSTception (LSTM) (ours) | 8 × 9 | 95.44 | 668 | 94.29 | 284 | 94.60 | 290 | 19.94 | 14.4 |

| 4D-FSTception (ours) | 8 × 9 | 95.49 | 660 | 94.61 | 280 | 95.02 | 279 | 20.61 | 12.7 |

| Method | Information | SEED ACC ± STD (%) | DEAP-Valence ACC ± STD (%) | DEAP-Arousal ACC ± STD (%) |
|---|---|---|---|---|
| HCNN [38] | Frequency + spatial | 88.60 ± 2.60 | - | - |
| RGNN [39] | Frequency + spatial | 94.24 ± 5.95 | - | - |
| BDGLS [40] | Frequency + spatial | 93.66 ± 6.11 | - | - |
| PCRNN [32] | Spatial + temporal | - | 90.26 ± 2.88 | 90.98 ± 3.09 |
| ACRNN [43] | Spatial + temporal | - | 93.72 ± 3.21 | 93.38 |
| 4D-CRNN [41] | Frequency + spatial + temporal | 94.74 ± 2.32 | 94.22 ± 2.61 | 94.58 ± 3.69 |
| 4D-aNN (DE) [42] | Frequency + spatial + temporal | 95.39 ± 3.05 | - | - |
| 4D-FSTception (LSTM) (ours) | Frequency + spatial + temporal | 95.44 ± 0.32 | 94.29 ± 1.89 | 94.60 ± 2.08 |
| 4D-FSTception (ours) | Frequency + spatial + temporal | 95.49 ± 3.01 | 94.61 ± 2.83 | 95.02 ± 2.85 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Q.; Liu, Y.; Liu, Q.; Zhang, Q.; Yan, F.; Ma, Y.; Zhang, X. Multidimensional Feature in Emotion Recognition Based on Multi-Channel EEG Signals. Entropy 2022, 24, 1830. https://doi.org/10.3390/e24121830
