Abstract

Aiming at the path planning problem of unmanned aerial vehicle (UAV) base stations when performing search tasks, this paper proposes a Double DQN-state splitting Q network (DDQN-SSQN) algorithm that combines state splitting and optimal state to complete the optimal path planning of UAV based on the Deep Reinforcement Learning DDQN algorithm. The method stores multidimensional state information in categories and uses targeted training to obtain optimal path information. The method also references the received signal strength indicator (RSSI) to influence the reward received by the agent, and in this way reduces the decision difficulty of the UAV. In order to simulate the scenarios of UAVs in real work, this paper uses the Open AI Gym simulation platform to construct a mission system model. The simulation results show that the proposed scheme can plan the optimal path faster than other traditional algorithmic schemes and has a greater advantage in the stability and convergence speed of the algorithm.

1. Introduction

Nowadays, with the development of 5G communication technology and the improvement of the mobile Internet of Things, unmanned aerial vehicle (UAV) base stations have begun to be widely used in auxiliary communication and post-disaster rescue tasks [1,2]. The UAV air base station has the characteristics of strong maneuverability, controllable mobility, and convenient deployment and can support the high-speed transmission of communication data, etc. [3]. The application of UAV base stations in disaster relief scenarios improves the problem of communication signals being difficult to reach in complex environments, and also makes it possible to provide all-round coverage of communication signals in disaster relief mission areas. Considering the special nature of UAV base stations and the complexity of the disaster relief environment [4,5], how to use the high flexibility of UAVs to plan an optimal collision-free path in the complex situation with obstacles after a disaster is the most pending problem of UAVs in disaster rescue missions.

In the scenario where UAVs search for location information of disaster victims, the path planning problem of UAVs is the key to accomplish such tasks. Traditional UAV path planning algorithms include the artificial potential field method, heuristic algorithm, ant colony algorithm, etc. The UAV path planning method using an improved artificial potential field was proposed in [6,7], which effectively solved the path planning of multi-UAS and UAV obstacle avoidance against dynamic obstacles by introducing a rotating potential field and Markov prediction model. In [8,9,10], an improved heuristic algorithm was proposed to solve the problem of UAV path planning and mission area coverage in complex environments. To solve the coverage path planning problem for autonomous heterogeneous UAVs over a finite area, an original clustering-based algorithm was designed in [11], which divided the mission area into clusters to obtain the optimal UAV point-to-point path. For the multi-target search and path planning problem in unknown environment, Refs [12,13] proposed an improved artificial bee colony algorithm, which greatly improved the stability of UAV flight and the speed of UAV path planning. In [14], a combination of a pseudospectra algorithm and an ant colony algorithm was used to solve the 3D path planning problem of solar powered UAV. In [14], a combination of the pseudospe-tral and colony algorithms was used to solve the 3D path planning problem of solar powered UAV. For the path planning problem of multiple UAVs, the authors of [15,16] proposed the use of a sparrow search algorithm and a two-level coordination framework approach to achieve path planning for UAV swarms in dynamic obstacle environments, respectively. In order to improve the stability of the algorithm and the efficiency of the task completion during the task execution, designing a new alternative framework for UAV motion performance can effectively reduce the task execution time of UAVs while improving the robustness of the algorithmic framework [17,18]. A non-rigid hierarchical discrete grid structure was proposed in [19] to achieve path planning of UAVs in 3D space. These optimization algorithms based on traditional algorithms were all based on converting the UAV path planning problem into a path optimization problem and solving the optimization model to obtain the optimal flight path. However, these methods often suffer from a low intelligence level, a long solution time and restricted application scenarios, and cannot be applied to post-disaster rescue scenarios with complex environments and changing scenarios.

In recent years, artificial intelligence technology has flourished, and deep reinforcement learning has gradually been applied to solve the path planning problems of UAVs in various complex environments. In [20,21], the use of the DDPG algorithm was proposed to enable UAVs to autonomously avoid threat areas and thus obtain an optimal flight path. The improved DRL algorithm was used in [22,23,24] to implement real-time UAV path planning in the respective application scenarios. In [25], to improve the collection of global and local information during the flight of UAVs, a multi-layer path planning algorithm based on reinforcement learning (RL) technique was proposed, which divided the information into upper and lower layers and then coordinated the processing of the upper and lower layers to finally plan a collision-free path for the UAV. By introducing a UAV mobile edge computing platform in [26,27,28], a better quality of path planning for reinforcement learning algorithms was provided while risk avoidance was achieved. In scenarios where there is no basic communication infrastructure or where communication with the Internet is not possible due to emergencies such as disasters, a reinforcement learning-based path planning scheme for IoT UAVs was proposed in [29] to achieve autonomous path planning for UAVs in unknown environments. A DL-based collision avoidance method for UAV communication networks was proposed in [30], while a series of convex optimization problems were formulated and solved to effectively solve the optimal trajectory planning problem for UAV communication networks. To optimize the flight trajectory and improve the energy management capability of the solar powered aircraft, a neural network controller was trained using the RL method in [31] and used as an integrated controller for aircraft navigation and guidance. In [32,33], the algorithms of dual-latency deep deterministic policy gradient (TD3) participant-critic deep reinforcement learning (DRL) framework and multi-step duel DDQN (multi-step D3QN) were used to implement UAV path design in 3D space, respectively. For the path planning problem of multi-UAV wireless data collection, the process was solved by the DRL method in [34] and an approximate optimal UAV control strategy with unknown environmental data information was implemented.

Traditional reinforcement learning algorithms and traditional path planning algorithms often have good test effects in some simple scenarios with simple environments and small state dimensions of algorithm input, but the execution effect of the algorithms will be greatly reduced in some scenarios with complex environments, many obstacles, and large state information dimensions input by the algorithms.

For the shortcomings of traditional path planning algorithms, this paper studies the path planning problem of UAV base stations collecting location information of trapped people based on deep reinforcement learning technology, proposes the reinforcement learning idea of combining state splitting and optimal value, and also designs the DDQN-SSQN reinforcement learning algorithm to solve the path planning problem of UAVs in performing search and rescue tasks by combining the received signal strength indication (RSSI) collected by UAV base stations in real time during the flight. Finally, the DDQN-SSQN algorithm is used to simulate the built task environment and compare with the traditional reinforcement learning algorithm to demonstrate the advantages of the proposed algorithm. The proposed algorithm improves the network structure of the algorithm on the basis of the traditional DDQN algorithm, and the algorithm classifies the multi-dimensional environmental state and conducts targeted training, which greatly improves the decision-making efficiency of the Agent.

The main contributions of this paper are as follows:

(1) The path decision-making algorithm based on deep reinforcement learning is used to classify and store multi-dimensional state information, and the optimal path information is obtained through targeted training, which greatly improves the efficiency of UAV to complete search and rescue tasks;

(2) Use the self-built virtual environment model to complete the training of the drone, which effectively avoids the training loss of the physical UAV and reduces the cost of model training;

(3) Introduce the received signal strength indication (RSSI) into the algorithm model of deep reinforcement learning and use this to affect the reward obtained by the agent, which reduces the difficulty of UAV decision-making and improves the positioning accuracy of the ground user’s location coordinates.

The structure of this article is as follows: In the second chapter, the construction of a virtual disaster relief scenario is introduced. The construction of the scene model is mainly completed from three aspects: system environment model, obstacle model, and ground user model. In the third chapter, the design ideas of the DDQN-SSQN algorithm proposed in this paper and the network structure of the algorithm are introduced. In the fourth chapter, the relevant parameters of the algorithm simulation are given, and the test effect of the proposed algorithm and the traditional reinforcement learning algorithm is compared to verify the advantages of the proposed new algorithm. The full text is summarized and prospected in Section 5.

2. Model Construction

This chapter mainly introduces the construction of the UAV search ground disaster personnel model. The model describes the environmental information for the UAV search and rescue mission and also provides a training ground for the UAV. The system model transforms the UAV path planning problem into a reward and punishment decision problem by introducing decision theory and a related mathematical model of the cost function. In order to simulate the disaster relief environment of UAV in real situations, the whole mission model is modeled from three aspects: system environment, obstacles, and ground users.

2.1. System Environment Model

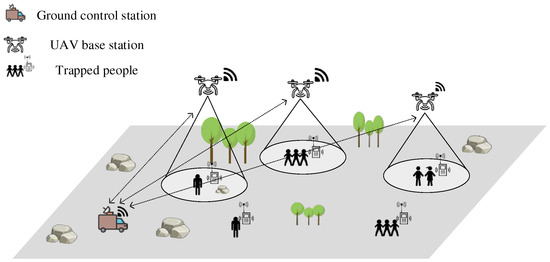

Figure 1 shows the system environment model of the UAV base station performing search and rescue missions for trapped people.

is the area of the entire mission area. The entire scene model is divided into multiple subtask areas according to the number of UAVs, and the area of each subtask area is . , is the number of drones used to perform search and rescue missions in the scenario, , is the number of people affected on the ground. The position coordinates of the UAV change with increasing time and can be expressed as:

Figure 1.

System environment model.

In the equation, denotes the time of flight of the UAV and indicate the time required for the n UAVs to complete the search and rescue mission. Considering that the ground users will be trapped in the debris when the house collapses and the movement of the trapped people is often restricted by the spatial environment, the ground trapped people are set to appear randomly at any location of the scene model and the location coordinates do not change with the increase in time. The spatial coordinates of the trapped people can be expressed as:

Here, . , indicates the number of obstacles in the scene. Since the position of obstacles in the mission scene generally does not move after the house collapses, the location of the set obstacle is fixed in the scene. The coordinates of the spatial location of the obstacle are as follows:

In the formula, denotes the height of the obstacle from the horizontal ground.

The entire area to be searched will be divided into N subtask areas according to the performance of UAVs, and a UAV will be arranged on each subtask area to collect the location information of trapped people, and so as to achieve the coverage of the entire mission area by UAVs. The area of subtask regions is . The area of the scene model can be expressed as:

The size of the subtask area obtained from the scene model segmentation will be set according to the performance of UAVs. The performance of the drone will be judged based on a combination of indicators such as the drone’s endurance, flight speed, and climb rate. The performance of UAVs in the scenario is , The area of the task area obtained by segmentation can be expressed as:

Here, .

2.2. Obstacle Model

UAVs inevitably encounter obstacles in the actual flight process. Since there is randomness in whether an obstacle will appear in the UAV’s line-of-sight range, the obstacle model can be built by random generation. The virtual scene dynamically adjusts the number of obstacles in the environment according to the size of the actual task area and places the obstacles randomly at any location in the scene. Considering the UAV’s own load and the search effect of the UAV, the UAV needs to fly close to the ground when performing rescue missions, which leads to the UAV having to avoid various obstacles such as trees, houses, and ruins during the process of moving. In order to enable the UAV to better detect the surrounding environment, this paper used millimeter-wave radar technology to realize the real-time monitoring and identification of obstacles during the flight of the UAV. The back-end signal processing module of millimeter-wave radar can use the echo signal to calculate the presence, speed, direction, distance, angle and other target information of moving objects. At the same time, millimeter-wave radar also has the characteristics of small size and light weight, so it can be carried around the airframe without affecting the UAV load, and effectively complete the obstacle avoidance task.

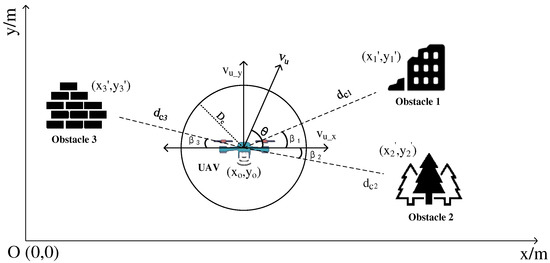

The obstacle avoidance detection of the UAV is shown in Figure 2. As can be seen in the figure, the position coordinates of the UAV in flight are , the distance between the UAV and the obstacle is , and the phase difference is . The two-dimensional coordinates of the obstacle obtained by the UAV monitoring during the flight can be expressed as:

Figure 2.

UAV obstacle avoidance detection.

Here, . In the figure, denotes the velocity of the UAV flight, denotes the component velocity of the UAV along the -axis direction, and denotes the component velocity of the UAV along the -axis direction. The flight speed of the UAV at the moment and the component speeds on the and axes can be expressed as:

In the formula, denotes the flight acceleration of the UAV, and denotes the angle between the actual flight direction of the UAV and the -axis.

The UAV will monitor the location information of surrounding obstacles in real time through the millimeter wave radar mounted around the body during flight. The signal processing module of the UAV will transmit a feedback signal to the UAV based on the distance between the body and the obstacle, and the signal can be expressed as:

Here, denotes the distance between the UAV and the obstacle, and denotes the danger radius where the UAV is at risk of collision. Equation (12) can be seen, when the horizontal distance between the UAV and the obstacle is greater than 15m, the threat of the obstacle to the UAV is very small, at this time can be considered that there is no obstacle around the body, that is, ; when the distance is greater than the danger radius of the UAV is less than 15m, at this time can be considered that the UAV is approaching the obstacle, that is, ; when the distance is less than the danger radius , the UAV may collide with the obstacle at any time, at this time can be considered mission failure, that is, .

2.3. Ground User Model

This scenario model is used to simulate a situation where people are buried when a building collapses. The UAV carries an airborne base station over the mission area and collects in real time the received signal strength between the cell phones of the affected people on the ground and the base station. The formula for calculating RSSI is as follows:

Here, denotes the received signal strength of the UAV to the ground phone during flight, and denotes the power of the received signal.

Received Signal Strength Indication (RSSI) is mainly applied to measure the distance between the transmitter and the receiver, and its range measurement method is implemented mainly based on the principle that the power of the radio wave signal decays with the increase in the propagation distance during the transmission of the radio wave in the medium. Therefore, the transmit signal power and the received signal power of a known node are obtained from the transmitter, and then the distance magnitude between the transmitter and the receiver can be calculated by the attenuation model between the signal power and the distance. The relationship between received signal power and distance can be expressed as:

Here, denotes the power of the received signal, denotes the power of the transmitted signal, denotes the distance between the UAV base station and the ground user, and denotes the propagation factor of the signal. Substituting Equation (14) into Equation (13), the arithmetic expression of the received signal strength versus distance can be obtained as:

The received signal power A between the UAV base station and the ground user’s cell phone at a distance of 1 m can be expressed as:

Substituting Equation (16) into Equation (15) yields:

Since the number of trapped people in the ground scenario is , the RSSI between the cell phone terminal of user and the UAV base station can be expressed as:

Here, denotes the received signal strength of the UAV to the cell phone terminal of ground user during flight, and denotes the distance size between the UAV base station and user .

From Equation (18), the distance between the aerial UAV base station and the ground user can be expressed as:

Here, denotes the absolute value of the received signal strength of the UAV to the ground user cell phone terminal during flight, denotes the received signal power between the UAV base station and the ground user cell phone at a distance of 1 m, and denotes the propagation factor of the signal.

Considering that in the actual house collapse environment, the specific number of buried people, and the location of buried people are unknown, random generation is used to construct the ground user model. From Equation (2), the number of ground users is , where , and the 3D position coordinates of each user are . The UAV base station will collect the received signal strength from the ground user’s cell phone in real time during the flight and calculate the distance between the ground user and the UAV base station at the same time, and finally, the UAV base station will send the search result to the ground control station. The search result is as follows:

Here, denotes the maximum search radius of the UAV base station for ground users. When the received signal strength between the ground user’s cell phone and the base station exceeds the set threshold, i.e., the distance between the UAV base station and the ground user’s cell phone terminal is less than the maximum search radius of the UAV, the ground console can precisely locate the location information of the ground user.

3. DDQN-SSQN UAV Path Planning Algorithm

This chapter first introduces the knowledge of deep reinforcement learning (DRL) algorithms, and then introduces the design ideas and structure of Double DQN-state splitting Q network (DDQN-SSQN) algorithms in detail.

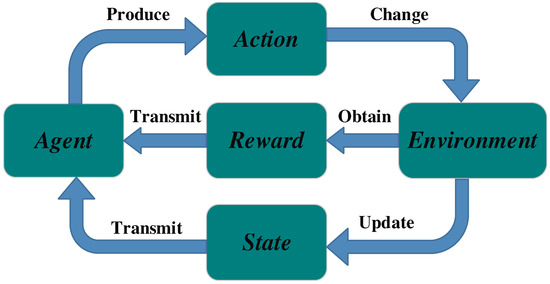

3.1. Deep Reinforcement Learning

Reinforcement learning (RL) is an important branch of artificial intelligence today, and is now widely used in robotics, autonomous driving, computer vision, natural language processing, and other fields. The core of reinforcement learning is oriented to an intelligent body (agent) and puts it into a complex, uncertain environment for interaction. The interaction process between the intelligent body and the environment is shown in Figure 3. The interaction process between agent and environment can be represented by the Markov Decision Process (MDP), and the basic framework of MDP is (S, A, R) [35]. The Agent outputs an action A after acquiring a state S in the environment based on the learned experience. action A, when executed in the environment, outputs the next state of the intelligence and the reward r from that action, and the reward r obtained is superimposed as the intelligence interacts with the environment. The ultimate goal of reinforcement learning is to maximize the value of the reward obtained in long term iterative environmental exploration. Deep Reinforcement Learning (DRL) is a decision control system that combines the ideas of feature extraction and neural networks from Deep Learning (DL) based on reinforcement learning. By inputting multidimensional environmental data information, DRL uses the success and failure experience gained during the exploration process as the basis for updating the decision network and optimizing the network parameters in order to obtain the optimal strategy.

Figure 3.

Reinforcement learning schematic.

Deep Q-network (DQN) is a Q-learning algorithm based on deep learning. The rewards r obtained by taking action A at each state S are usually stored in Q-learning using the form of a table. Since the learning tasks that the Agent has to face in a practical reinforcement learning task are often continuous, there will be an infinite number of state information, which also leads to the problem of dimensional disaster. DQN combines value function approximation and neural network technology and adopts the target network and experience playback method to train the network, which reduces the requirements for storage space and solves the problem of excessive dimension. The value function approximation can be expressed as:

Here, s represents the state , a represents the action , represents the parameter of the network, and represents the actual value function. The target Q-value function y of the DQN algorithm can be expressed as:

Here, represents the reward value obtained and represents the discount factor.

Double DQN (DDQN) is an improvement on DQN. The model structure of DDQN and the model structure of DQN are basically the same; the difference lies in their objective function. The objective function of DDQN can be expressed as:

Here, is the periodic copy of , which represents the target network parameters. When DDQN calculates the target value, it is calculated not according to the parameters of the target Q network such as DQN, but according to the parameters of the current Q network. Therefore, the calculated target value will be a little smaller than the original. This reduces the problem of overvaluation and makes the Q value closer to the true value.

Dueling DQN is also improved on the basis of DQN, and the modified point is at the last layer of the DQN neural network. Originally, the last layer of the DQN neural network was the fully connected layer, and the output after passing through this layer was n Q values. Dueling DQN does not directly train to get these n Q values, it obtains two indirect variable state values V and act ion advantage A through training, and then expresses the Q value through their sum [36]. The calculation formula of the Q function can be expressed as:

where is the convolutional layer parameter, and and are the two-branch fully connected layer parameters. In practice, action advantage A is generally set as a separate action advantage function minus the average of all action dominance functions in state [37], The specific formula is as follows:

This can narrow the range of Q values without affecting the size ordering of the dominant function, which greatly improves the stability of the algorithm.

3.2. DDQN-SSQN Algorithm Design

In this paper, an improved algorithm DDQN-SSQN for split-state Q network is proposed based on Double DQN (DDQN) algorithm and Dueling DQN algorithm. The DDQN-SSQN algorithm was used to solve the path decision problem of UAV in flight process. In this algorithm, the process of executing a task by a UAV base station in a simulated scenario was modeled, and the whole model consists of five parts: intelligent body, state, action, reward, and task completion. The modules were built in the following manner.

(1) Agent: The UAV base station will fly as an Agent in the mission area and collect environmental data information in real time. The UAV will select the next action to be completed according to the current state and the internal policy . When the action is completed, the UAV will be in a new state , after which the next action will be obtained through the policy , and so on until the mission is completed.

(2) State: the state that the UAV is in during flight. Where denotes the two-dimensional coordinates of the location of the UAV ), denotes the two-dimensional location coordinates of the obstacle detected within the sight range of the UAV , denotes the size of the horizontal distance between the UAV and the obstacle, denotes the distance between the UAV base station and the trapped person on the ground, denotes the degree of convergence of the UAV moving toward the trapped person at the current moment compared to the previous moment. The expression for is as follows:

indicates the number of cell phone locations of people trapped on the ground that were successfully searched by the UAV base station.

(3) Action: Since the flight height of the UAV is fixed in the air 10 m away from the ground, the flight action of the UAV can be regarded as a two-dimensional plane flight problem. Flight action consists of . means one step forward, means one step to the left, means one step to the right, and means one step backward.

(4) Reward: The real-time reward obtained by the UAV in the process of completing the mission is determined by the state the UAV is in at that moment and the action to be performed next, so the reward obtained at moment can be expressed as . The reward obtained by the Agent at each moment consists of five components: the reward obtained by approaching the obstacle, the reward obtained by collision, the reward obtained by approaching the target, the reward obtained by completing the task, and the reward obtained by the loss of raw material of the UAV. can be calculated as follows:

Here, , , , and denote the weights of the rewards obtained by completing the corresponding target tasks, respectively, and the sum of all reward weights satisfies:

The UAV can detect the horizontal distance between the airframe and the surrounding obstacles in real time by the millimeter wave radar it carries. Since the detection range of the millimeter wave radar is limited and the threat of the obstacle to the airframe itself is minimal when the horizontal distance between the obstacle and the UAV is more than 10 m, is set. Therefore, the proximity to the obstacle reward can be expressed as:

Here, denotes the reward factor for proximity to an obstacle.

Collision reward is the reward obtained when a UAV collides with an obstacle in its flight path. However, according to the actual knowledge, the UAV base station is costly and easily damaged, and once it collides with an obstacle it will cause huge property damage. Therefore, the collision range is set to 2 m in the collision model, i.e., a collision event is considered to occur when the distance between the UAV and the obstacle is less than 2 m. Then the collision reward can be expressed as:

The RSSI signal data between the airborne base station and the ground personnel’s cell phone are continuously collected by the UAV base station in the course of the disaster relief mission, and are used to obtain the horizontal distance of the affected personnel from the UAV and the convergence degree of the UAV to the location of the affected personnel at the current moment compared to the previous moment. Therefore, the proximity to the target reward can be expressed as:

Here, represents the reward factor close to the target, and represents the number of people trapped on the ground that the UAV searches.

The UAV base station establishes information communication with the trapped person’s cell phone to obtain the received signal strength indication RSSI and determines the direction and specific location of the trapped person’s cell phone according to the change of signal strength. When the distance between the UAV and the ground user’s mobile phone terminal is long due to low communication signal strength, large signal interference, and other reasons, it will be impossible to accurately locate the location of the trapped person, so when the horizontal distance between the UAV base station and the ground mobile phone terminal is less than 5 m, it can be considered that the disaster victim has been successfully found. If the location of all trapped persons is found, the mission is considered complete. Task completion reward can be expressed as:

Here, represents the reward coefficient for task completion.

In the process of performing tasks, drones will inevitably have problems with power consumption and hardware equipment consumption. UAV raw material loss reward can be expressed as:

Here, represents the reward coefficient of the loss of raw materials for the UAV.

(5) Task completion: The completion of UAV flight tasks determined by the maximum number of flight steps p and the number of UAV base stations successfully searched for ground mobile phone terminals c. The expression for task completion is as follows:

Among them, when , it means that the search and rescue mission is still in progress; When , it means that the UAV has completed this search and rescue mission, the indicates the maximum number of flight steps of the UAV, and represents the number of trapped people on the ground.

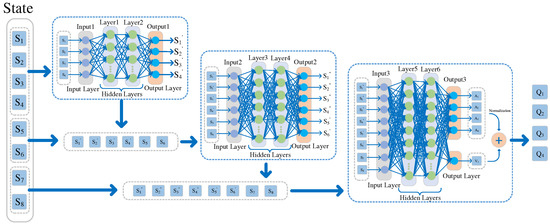

3.3. DDQN-SSQN Algorithm Structure

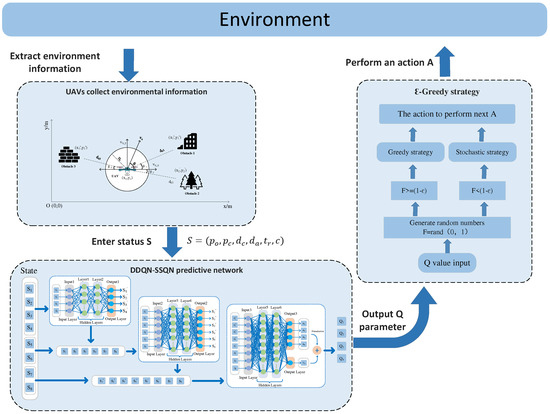

The DDQN-SSQN algorithm proposed in this paper is an improvement on the neural network based on the traditional DDQN algorithm. The network structure of the DDQN-SSQN algorithm is shown in Figure 4, where denotes the state information of the intelligent body, mainly including the position coordinates of the UAV, the position coordinates of the obstacle, the horizontal distance between the obstacle and the UAV, the straight-line distance between the trapped person and the UAV, the convergence degree of the UAV moving toward the trapped person, and the number of people who searched for the trapped person. Before the state information of the drone is input into the neural network, the overall state State is divided into three parts: position state State1 , distance state State2 and other state State3 . State1 is predicted by hidden layer 1 and hidden layer 2 to obtain the position state of UAV and obstacle at the next moment (); State2 and () are predicted by hidden layer 3 and hidden layer 4 to obtain the position state and distance state at the next moment (); State3 and () output action value and state advantage through hidden layer 5 and hidden layer 6, and then sum up the two to finally obtain . represents the value of each action performed by the Agent in the current state and is calculated as:

Here, , s represents the state , a represents the action .

Figure 4.

Network structure of DDQN-SSQN algorithm.

The execution architecture of the DDQN-SSQN algorithm is shown in Figure 5. The action policy used by the algorithm is the -greedy policy [38], i.e., the Agent has a probability of executing the action corresponding to the maximum Q function in the scene model with . The execution action A can be expressed as:

Figure 5.

DDQN-SSQN algorithm execution architecture.

Here, denotes the action corresponding to the maximum value in the function.

The DDQN-SSQN algorithm proposed in this paper is summarized in Algorithm 1, where the input is composed of the UAV’s own flight parameters, agent model, and environmental parameters (step 1), and the output is the set of relevant data parameters obtained during training and the trained agent model (step 29).

In the model training phase, relevant training parameters such as the number of training rounds, discount factor, experience pool capacity, and update frequency are first initialized. Next, set the number of obstacles and trapped people, and clear the target Q network of the agent (step 2).

| Algorithm 1: The Proposed DDQN-SSQN Algorithm Scheme |

|

At the beginning of each round of training, the position coordinates of the obstacles and the position coordinates of the trapped people are randomly reset; the mission environment faced by the drone will reset and obtain the initial state ; even if the reward received by the agent is cleared to zero (step 4). The training of the model will undergo iterations (step 3).

In the process of training, the agent will obtain the next action to be performed according to the current state (step 7), and the selection of actions will be completed using the -greedy strategy (Equation (32)); After the agent executes action , the task environment will be updated, and a new status will be obtained, and the reward obtained for performing the action will be obtained (steps 8–9); During the execution of training, the agent will collect real-time task parameter information, including: task completion, flight step, flight time, and the number of trapped people found (steps 10–13); The collected data information is packaged and sent to the experience pool (step 14) to provide a data set for the agent’s learning. After the above data collection is completed, the system will update the status information and the agent in time, and obtain the sum of the rewards obtained by the agent from the beginning of the first round to the end of the round (steps 15–17). During each turn, the agent repeats the flight mission in the simulation environment until the flight mission is completed, the flight time exceeds the set threshold, or all the difficult personnel are found, and the flight mission is terminated (steps 6–18). After the task is terminated, the various data obtained up to this turn will be packaged and stored in the form of a collection (steps 22–26), and if the number of training rounds meets the system update frequency, the target network will be updated (steps 19–21). After this round of training, a new round of training will be restarted, and training will not stop until the number of training rounds reaches . Finally, the parameter information of various tasks obtained during training will be output, and a trained agent model will also be obtained. After that, the drone will use the trained model to carry out real-time path planning in the disaster relief environment and the location information collection of trapped people.

4. Experimental Simulation and Analysis

This chapter focuses on the experimental simulation design. First, the algorithm is simulated and analyzed using the Open AI Gym simulation platform, and then the DDQN-SSQN algorithm is compared with the traditional DDQN, DQN, and Q-Learning algorithm schemes to verify the superiority of the algorithm proposed in this paper.

4.1. Simulate Experimental Design

The simulation parameters of the scene where the UAV base station collects the location information of the affected personnel were as follows: the flight area of the UAV base station was set to 20 × 20, and the entire area was composed of 400 rectangles with a side length of 5 m, so the task range of the scene was 100 m × 100 m. The flight height of the UAV was fixed in the air at a height of from the ground, the maximum flight speed was set to , and the maximum flight time was set to . The obstacles encountered by the drone during flight randomly appeared in the scene model, the number of generated obstacles in the scene model , and the two-dimensional position coordinates of the obstacles were , of which , , . In the scene model, the ground disaster victims appeared randomly, the number of disaster victims generated in the model was , and the two-dimensional location coordinates of the ground users were , where , , . Table 1 describes the simulation parameters during training.

Table 1.

Training simulation parameters.

4.2. Analysis of Simulation Result

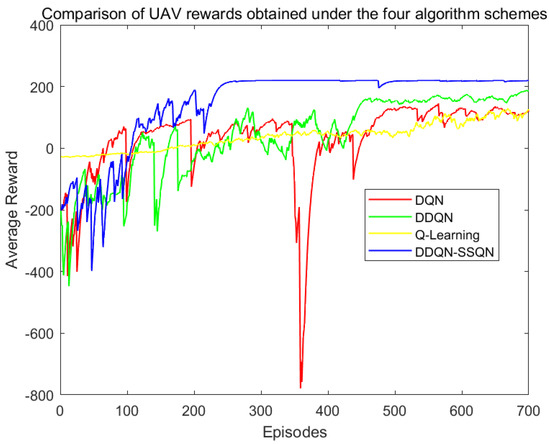

This paper used the PyTorch deep learning architecture to complete the network part of the deep reinforcement learning algorithm. When the drone collided with an obstacle or the flight time of the UAV was greater than , the training round was set to end. The training reward results of the four algorithms in the simulation scenario are shown in Figure 6.

Figure 6.

Comparison chart of rewards earned.

According to Figure 6, in the first 100 rounds of training, the four algorithms were in the environmental exploration stage, and the reward value obtained by the agent oscillated disorderly; In rounds, the rewards obtained by the DDQN-SSQN algorithm began to rise rapidly, the rewards obtained by the DDQN and DQN algorithms rose slowly, and the rewards obtained by the Q-Learning algorithm did not see an upward trend; When the number of training rounds reached 200 rounds, the reward return of the DDQN-SSQN algorithm began to converge and eventually stabilized, while the remaining three algorithms began to converge after the training round reached 400 rounds, and were not completely stable before 500 rounds and still had a large oscillation amplitude. The DDQN-SSQN algorithm proposed in this paper can accelerate the learning process by learning the environmental state, and compared with the other three algorithms, the DDQN-SSQN algorithm can provide the best flight strategy for the UAV in a shorter time and obtain more rewards.

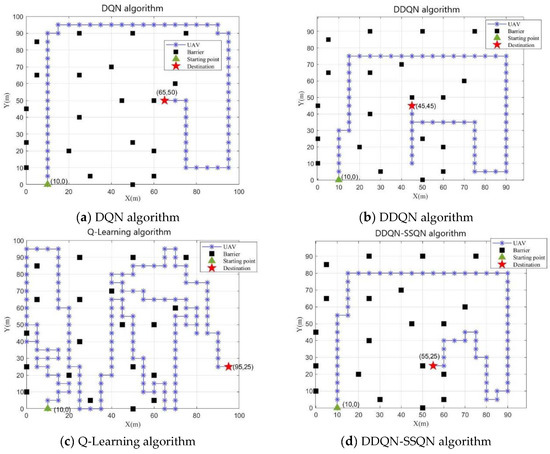

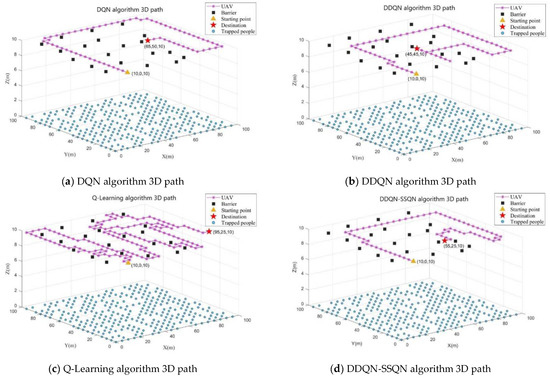

In Figure 7a–d show the obstacle avoidance path diagram obtained by UAV training under the four algorithms of DQN, DDQN, Q-Learning, and DDQN-SSQN, respectively. By observing the above path diagram, it can be seen that the drone can effectively avoid obstacles under the training of the four algorithms, but the path taken by the drone was different. In the obstacle avoidance path diagram of the Q-Learning algorithm shown in Figure 7c, the drone consumed the most steps, and the search strategy selected by the algorithm was to fly along the edge of the obstacle, although this method can avoid obstacles, but also increased the risk of drone crash; Figure 7a shows the obstacle avoidance path of the DQN algorithm, and it can be seen that the UAV calculation strategy was to search away from obstacles, so that although the threat of obstacles can be effectively avoided, the UAV’s search coverage of the mission area was reduced; Figure 7b shows the obstacle avoidance path of the DDQN algorithm, which was significantly better than that of Figure 7a and Figure 7c, but repeated paths appeared during the UAV search process, which increased the energy consumption of the UAV; It can be seen from the UAV obstacle avoidance path diagram under the DDQN-SSQN algorithm shown in Figure 7d that under this scheme, the UAV could complete the entire search task with the least number of flight steps under the condition of ensuring the coverage of the mission area, effectively reducing the flight energy consumption of the UAV. Therefore, the DDQN-SSQN algorithm scheme was better than other schemes.

Figure 7.

UAV obstacle avoidance path diagram under four algorithm schemes.

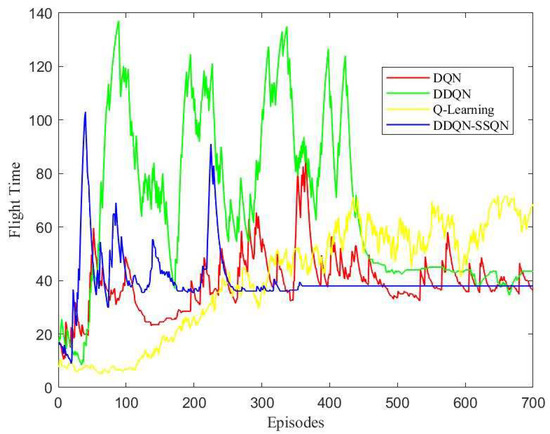

The time taken by the UAV to complete the flight task under the control of four algorithms was compared, and the comparison results are shown in Figure 8.

Figure 8.

Comparison of UAV flight time under four algorithm schemes.

According to Figure 8, in the first 250 rounds, because the UAV needed to explore the environment and gain experience, it took more time for the four algorithms to complete a task cycle in the early stage; When the training reached rounds, the time required for the search and rescue UAV based on the DDQN-SSQN algorithm to complete the task was greatly reduced, and the time consumed after 300 rounds began to stabilize, about . From the perspective of the overall convergence trend, the Q-Learning algorithm scheme took the longest time to complete the task, about , while the time consumed by DDQN and DQN algorithm schemes decreased after 450 rounds, but it was always higher than the algorithm scheme proposed in this paper. Therefore, the DDQN-SSQN algorithm scheme was superior to other schemes, under which the UAV could complete the search and rescue task in the shortest time.

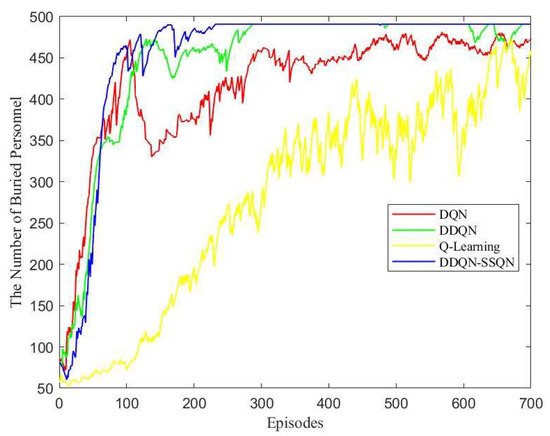

Figure 9 shows the 3D path planning diagram of the UAV under the four algorithms of DQN, DDQN, Q-Learning, and DDQN-SSQN. The UAV made an optimal path planning solution based on the size of the RSSI value and the coordinates of the obstacle’s location. Figure 10 shows the comparison graph of the number of people searched by UAVs under the four algorithms, from which it can be seen that the number of people searched by the three algorithms DQN, DDQNm and DDQN-SSQN in rounds rose rapidly and the Q-Learning algorithm rose very slowly; When the training reached rounds, the rising trend of the DQN and DDQN algorithms moderated and oscillated a little, and the DDQN-SSQN algorithm continued to rise rapidly and started to stabilize after 200 rounds; After 250 rounds the DDQN algorithm started to stabilize gradually, while the DQN, Q-Learning algorithm remained in oscillation until the end of training. From the above analysis, it can be seen that the proposed scheme could search for more affected people in the shortest time, so the DDQN-SSQN algorithm scheme was better than other schemes.

Figure 9.

Three-dimensional path planning of UAV under four algorithm schemes.

Figure 10.

Comparison of the number of UAVs searching for disaster victims under four algorithm schemes.

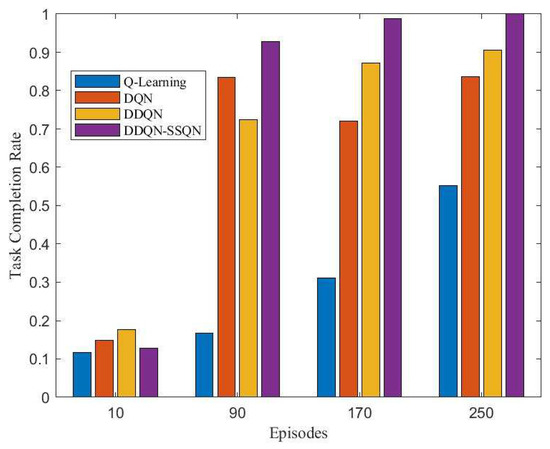

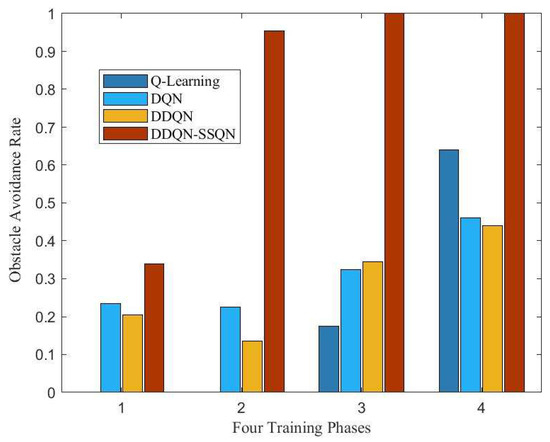

The number of buried people found throughout the search task was used as an indicator of task completion. The more buried people found in a task cycle, then the higher the task completion rate. Figure 11 shows the completion of the search and rescue tasks by the UAV base station under the manipulation of the four algorithms. From the figure, it can be seen that the task completion rate of all four algorithm schemes gradually increased with the increase in training rounds, among which the DDQN-SSQN algorithm scheme had the fastest increase in task completion rate and ccould complete the whole search and rescue task after the training reached 250 rounds. Figure 12 shows a comparison chart of the success rate of UAV obstacle avoidance under four algorithm schemes. The four stages in the figure represent the number of rounds in the training process, 1, 2, 3, 4 correspond to rounds, rounds, rounds, and rounds, respectively. It can be seen from the figure that the success rate of DDQN-SSQN algorithm scheme in obstacle avoidance was much higher than that of the remaining three algorithms during the training process. According to the above analysis, the DDQN-SSQN algorithm enabled the UAV to maximize the completion rate of search and rescue tasks with the shortest number of training rounds without collision. Therefore, the proposed algorithm scheme was better than the other three algorithms.

Figure 11.

Comparison of UAV mission completion rates under four algorithm schemes.

Figure 12.

Comparison of the success rate of UAV obstacle avoidance under four algorithm schemes.

5. Conclusions

This paper investigated the path planning problem in the process of collecting the location information of the affected people by UAV base station based on deep reinforcement learning algorithm and proposed a DDQN-SSQN reinforcement learning algorithm combining state splitting and optimal state to complete the optimal path planning in the process of UAV information collection. In order to realize the collection of the location information of the trapped people on the ground by the UAV base station, we introduced RSSI as the search indication of the disaster victims and input tedit into the algorithm network as status information for learning, which reduced the difficulty of search and rescue decision-making of the UAV base station. In this paper, the virtual disaster relief scenario model was constructed using the Open AI Gym simulation platform, and the network structure of the algorithm was improved on the basis of the DDQN algorithm, and then the new algorithm designed in this paper was compared with the traditional reinforcement learning algorithm through simulation comparison experiments. The simulation experimental results showed that the proposed algorithm could find the optimal search path in the shortest time compared with several other reinforcement learning algorithms. The proposed algorithm was better than the traditional algorithm in many aspects such as training speed, task completion rate, and obstacle avoidance rate. In future research, we plan to consider the constraints related to terrain and environment when dividing the mission area, so as to avoid wasting resources due to UAV search and exploration in uninhabited land. In addition, the location information of the trapped people on the ground collected by the UAV can be further processed to achieve three-dimensional coordinate positioning of the disaster-stricken people.

Author Contributions

Methodology, J.L.; Software, C.W.; Formal analysis, R.L.; Investigation, Z.G.; Writing—original draft, J.Z.; Supervision, W.L.; Project administration, K.Y.; Funding acquisition, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Zhejiang Key Research and Development Project grant number 2022C01048; This research was funded by Zhejiang Province Public Welfare Project grant number LGG22F010012.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, Z.; Guo, J.; Chen, Z.; Yu, L.; Wang, Y.; Rao, H. Robust secure UAV relay-assisted cognitive communications with resource allocation and cooperative jamming. J. Commun. Netw. 2022, 24, 139–153. [Google Scholar] [CrossRef]

- Sun, M.; Xu, X.; Qin, X.; Zhang, P. AoI-Energy-Aware UAV-Assisted Data Collection for IoT Networks: A Deep Rein-forcement Learning Method. IEEE Internet Things J. 2021, 8, 17275–17289. [Google Scholar] [CrossRef]

- Ouahouah, S.; Bagaa, M.; Prados-Garzon, J.; Taleb, T. Deep-Reinforcement-Learning-Based Collision Avoidance in UAV Environment. IEEE Internet Things J. 2022, 9, 4015–4030. [Google Scholar] [CrossRef]

- Shiri, H.; Seo, H.; Park, J.; Bennis, M. Attention-Based Communication and Control for Multi-UAV Path Planning. IEEE Wirel. Commun. Lett. 2022, 11, 1409–1413. [Google Scholar] [CrossRef]

- Sun, R.; Zhao, D.; Ding, L.; Zhang, J.; Ma, H. UAV-Net+: Effective and Energy-Efficient UAV Network Deployment for Extending Cell Tower Coverage with Dynamic Demands. IEEE Trans. Veh. Technol. 2022, 1–13. [Google Scholar] [CrossRef]

- Pan, Z.; Zhang, C.; Xia, Y.; Xiong, H.; Shao, X. An Improved Artificial Potential Field Method for Path Planning and For-mation Control of the Multi-UAV Systems. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 1129–1133. [Google Scholar]

- Feng, J.; Zhang, J.; Zhang, G.; Xie, S.; Ding, Y.; Liu, Z. UAV Dynamic Path Planning Based on Obstacle Position Prediction in an Unknown Environment. IEEE Access 2021, 9, 154679–154691. [Google Scholar] [CrossRef]

- Qadir, Z.; Zafar, M.H.; Moosavi, S.K.R.; Le, K.N.; Mahmud, M.A.P. Autonomous UAV Path-Planning Optimization Using Metaheuristic Approach for Predisaster Assessment. IEEE Internet Things J. 2021, 9, 12505–12514. [Google Scholar] [CrossRef]

- Shao, X.X.; Gong, Y.J.; Zhan, Z.H.; Zhang, J. Bipartite Cooperative Coevolution for Energy-Aware Coverage Path Planning of UAVs. IEEE Trans. Artif. Intell. 2022, 3, 29–42. [Google Scholar] [CrossRef]

- Sanchez-Fernandez, A.J.; Romero, L.F.; Bandera, G.; Tabik, S. VPP: Visibility-Based Path Planning Heuristic for Moni-toring Large Regions of Complex Terrain Using a UAV Onboard Camera. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 944–955. [Google Scholar] [CrossRef]

- Chen, J.; Du, C.; Zhang, Y.; Han, P.; Wei, W. A Clustering-Based Coverage Path Planning Method for Autonomous Het-erogeneous UAVs. IEEE Trans. Intell. Transp. Syst. 2021, 1–11. [Google Scholar] [CrossRef]

- Jinqiang, H.; Husheng, W.; Renjun, Z.; Rafik, M.; Xuanwu, Z. Self-organized search-attack mission planning for UAV swarm based on wolf pack hunting behavior. J. Syst. Eng. Electron. 2021, 32, 1463–1476. [Google Scholar] [CrossRef]

- Zhou, X.; Gao, F.; Fang, X.; Lan, Z. Improved Bat Algorithm for UAV Path Planning in Three-Dimensional Space. IEEE Access 2021, 9, 20100–20116. [Google Scholar] [CrossRef]

- Wang, X.; Yang, Y.; Wu, D.; Zhang, Z.; Ma, X. Mission-Oriented 3D Path Planning for High-Altitude Long-Endurance So-lar-Powered UAVs with Optimal Energy Management. IEEE Access 2020, 8, 227629–227641. [Google Scholar] [CrossRef]

- Liu, Q.; Zhang, Y.; Li, M.; Zhang, Z.; Cao, N.; Shang, J. Multi-UAV Path Planning Based on Fusion of Sparrow Search Algo-rithm and Improved Bioinspired Neural Network. IEEE Access 2021, 9, 124670–124681. [Google Scholar] [CrossRef]

- Cheng, Z.; Zhao, L.; Shi, Z. Decentralized Multi-UAV Path Planning Based on Two-Layer Coordinative Framework for Formation Rendezvous. IEEE Access 2022, 10, 45695–45708. [Google Scholar] [CrossRef]

- Huang, Z.; Chen, C.; Pan, M. Multiobjective UAV Path Planning for Emergency Information Collection and Transmis-sion. IEEE Internet Things J. 2020, 7, 6993–7009. [Google Scholar] [CrossRef]

- Airlangga, G.; Liu, A. Online Path Planning Framework for UAV in Rural Areas. IEEE Access 2022, 10, 37572–37585. [Google Scholar] [CrossRef]

- Wu, X.; Lei, Y.; Tong, X.; Zhang, Y.; Li, H.; Qiu, C.; Guo, C.; Sun, Y.; Lai, G. A Non-rigid Hierarchical Discrete Grid Structure and its Application to UAVs Conflict Detection and Path Planning. IEEE Trans. Aerosp. Electron. Syst. 2022. [Google Scholar] [CrossRef]

- Qie, H.; Shi, D.; Shen, T.; Xu, X.; Li, Y.; Wang, L. Joint Optimization of Multi-UAV Target Assignment and Path Planning Based on Multi-Agent Reinforcement Learning. IEEE Access 2019, 7, 146264–146272. [Google Scholar] [CrossRef]

- Li, B.; Wu, Y. Path Planning for UAV Ground Target Tracking via Deep Reinforcement Learning. IEEE Access 2020, 8, 29064–29074. [Google Scholar] [CrossRef]

- Wei, K.; Huang, K.; Wu, Y.; Li, Z.; He, H.; Zhang, J.; Chen, J.; Guo, S. High-Performance UAV Crowdsensing: A Deep Reinforcement Learning Approach. IEEE Internet Things J. 2022, 9, 18487–18499. [Google Scholar] [CrossRef]

- Zhu, B.; Bedeer, E.; Nguyen, H.H.; Barton, R.; Henry, J. Joint Cluster Head Selection and Trajectory Planning in UAV-Aided IoT Networks by Reinforcement Learning with Sequential Model. IEEE Internet Things J. 2021, 9, 12071–12084. [Google Scholar] [CrossRef]

- Zhu, S.; Gui, L.; Cheng, N.; Sun, F.; Zhang, Q. Joint Design of Access Point Selection and Path Planning for UAV-Assisted Cellular Networks. IEEE Internet Things J. 2019, 7, 220–233. [Google Scholar] [CrossRef]

- Cui, Z.; Wang, Y. UAV Path Planning Based on Multi-Layer Reinforcement Learning Technique. IEEE Access 2021, 9, 59486–59497. [Google Scholar] [CrossRef]

- Wang, Z.; Rong, H.; Jiang, H.; Xiao, Z.; Zeng, F. A Load-Balanced and Energy-Efficient Navigation Scheme for UAV-Mounted Mobile Edge Computing. IEEE Trans. Netw. Sci. Eng. 2022, 9, 3659–3674. [Google Scholar] [CrossRef]

- Zhang, L.; Jabbari, B.; Ansari, N. Deep Reinforcement Learning Driven UAV-assisted Edge Computing. IEEE Internet Things J. 2022. [Google Scholar] [CrossRef]

- Chang, H.; Chen, Y.; Zhang, B.; Doermann, D. Multi-UAV Mobile Edge Computing and Path Planning Platform Based on Reinforcement Learning. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 6, 489–498. [Google Scholar] [CrossRef]

- Liu, R.; Qu, Z.; Huang, G.; Dong, M.; Wang, T.; Zhang, S.; Liu, A. DRL-UTPS: DRL-based Trajectory Planning for Unmanned Aerial Vehicles for Data Collection in Dynamic IoT Network. IEEE Trans. Intell. Veh. 2022, 1–14. [Google Scholar] [CrossRef]

- Hsu, Y.-H.; Gau, R.-H. Reinforcement Learning-Based Collision Avoidance and Optimal Trajectory Planning in UAV Communication Networks. IEEE Trans. Mob. Comput. 2022, 21, 306–320. [Google Scholar] [CrossRef]

- Xi, Z.; Wu, D.; Ni, W.; Ma, X. Energy-Optimized Trajectory Planning for Solar-Powered Aircraft in a Wind Field Using Reinforcement Learning. IEEE Access 2022, 10, 87715–87732. [Google Scholar] [CrossRef]

- Babu, N.; Donevski, I.; Valcarce, A.; Popovski, P.; Nielsen, J.J.; Papadias, C.B. Fairness-Based Energy-Efficient 3-D Path Planning of a Portable Access Point: A Deep Reinforcement Learning Approach. IEEE Open J. Commun. Soc. 2022, 3, 1487–1500. [Google Scholar] [CrossRef]

- Xie, H.; Yang, D.; Xiao, L.; Lyu, J. Connectivity-Aware 3D UAV Path Design with Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2021, 70, 13022–13034. [Google Scholar] [CrossRef]

- Bayerlein, H.; Theile, M.; Caccamo, M.; Gesbert, D. Multi-UAV Path Planning for Wireless Data Harvesting with Deep Reinforcement Learning. IEEE Open J. Commun. Soc. 2021, 2, 1171–1187. [Google Scholar] [CrossRef]

- Li, Y.; Aghvami, A.H.; Dong, D. Path Planning for Cellular-Connected UAV: A DRL Solution with Quantum-Inspired Experience Replay. IEEE Trans. Wirel. Commun. 2022, 21, 7897–7912. [Google Scholar] [CrossRef]

- Khamidehi, B.; Sousa, E.S. Reinforcement Learning-aided Safe Planning for Aerial Robots to Collect Data in Dynamic Environments. IEEE Internet Things J. 2022, 9, 13901–13912. [Google Scholar] [CrossRef]

- Wang, X.; Gursoy, M.C.; Erpek, T.; Sagduyu, Y.E. Learning-Based UAV Path Planning for Data Collection with Inte-grated Collision Avoidance. IEEE Internet Things J. 2022, 9, 16663–16676. [Google Scholar] [CrossRef]

- Li, D.; Yin, W.; Wong, W.E.; Jian, M.; Chau, M. Quality-Oriented Hybrid Path Planning Based on A* and Q-Learning for Unmanned Aerial Vehicle. IEEE Access 2021, 10, 7664–7674. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).