Abstract

In this work, we formulate image in-painting as a matrix completion problem. Traditional matrix completion methods are generally based on linear models and assume that the matrix is low rank. When the original matrix is large and few elements are observed, these methods easily over-fit and their performance degrades significantly. Recently, researchers have tried to apply deep learning and nonlinear techniques to matrix completion. However, most existing deep learning-based methods restore each column or row of the matrix independently, which loses the global structure information of the matrix and therefore does not achieve the expected results in image in-painting. In this paper, we propose a deep matrix factorization completion network (DMFCNet) for image in-painting that combines deep learning with a traditional matrix completion model. The main idea of DMFCNet is to map the iterative updates of variables in a traditional matrix completion model into a fixed-depth neural network. The potential relationships between observed matrix data are learned in a trainable end-to-end manner, which leads to a high-performance and easy-to-deploy nonlinear solution. Experimental results show that DMFCNet provides higher matrix completion accuracy than state-of-the-art matrix completion methods in a shorter running time.

1. Introduction

Matrix completion (MC) [1,2,3,4,5] aims to recover a matrix with missing matrix elements or incomplete data. It has been successfully applied to a wide range of signal processing and image analysis tasks, including collaborative filtering [6,7], image in-painting [8,9,10], image denoising [11,12], and image classification [13,14]. The MC methods assume that the original matrix is low rank and the missing elements of the matrix can be estimated based on rank minimization. It should be noted that the rank minimization problem is generally non-convex and NP-hard [15]. A typical approach to address this issue is to establish a convex approximation of the original non-convex objective function.

Existing approaches for solving the MC problem are mainly based on nuclear norm minimization (NNM) and matrix factorization (MF). The NNM approach [16,17,18] aims to minimize the sum of the matrix singular values, which is a convex relaxation of the matrix rank. Nuclear norm minimization can be solved by singular value thresholding (SVT) algorithms [19], inexact augmented Lagrange multiplier (IALM) methods [16], and the alternating direction method (ADM) [17,20]. One major disadvantage of the NNM approach is that a singular value decomposition (SVD) needs to be performed in each iteration of the optimization process, which has very high computational complexity when the matrix is large. To avoid this problem, matrix factorization (MF), which does not need SVD, has been proposed to solve the MC problem [6,21,22,23]. Assuming that the rank of the original matrix is known, the MF method approximates the matrix by the product of a thin matrix and a short matrix [21,24,25,26] and then reconstructs the missing elements using this low-rank representation. Low-rank matrix fitting (LMaFit) [21] was one of the earliest MF methods. Although LMaFit is able to obtain an exact solution, it is sensitive to the rank estimate and cannot be globally optimized due to its non-convex formulation.

Both the NNM and the MF methods assume the low-rank property of the original matrix. Their performance degrades significantly when this property no longer holds and the data are generated from a nonlinear latent variable model [10,27,28,29]. Recently, encouraged by the remarkable success of deep learning in many computer vision and machine learning tasks [30,31,32,33], researchers have applied deep learning methods to nonlinear MC problems [28,29,30]. For example, the autoencoder-based collaborative filtering (AECF) approach [34] learns an autoencoder network that maps the input matrix into a latent space and then reconstructs the matrix by minimizing the reconstruction error. The deep learning-based matrix completion (DLMC) method [28] learns a stacked autoencoder network with a nonlinear latent variable model. One major disadvantage of these deep learning-based methods is that they are unable to exploit the global structure of the matrix, which degrades their performance in matrix completion, especially in image analysis, where the global structure plays an important role in the restoration process.

In this paper, we propose a deep matrix factorization completion network (DMFCNet) for matrix completion by coupling deep learning with traditional matrix completion methods. Our main idea is to use a neural network to simulate the iterative update of variables in the traditional matrix factorization process and to learn, in an end-to-end manner, the underlying relationship between the input matrix data and the recovered output data after matrix completion. We apply the proposed method to image in-painting to demonstrate its performance.

The main contributions of this paper can be summarized as follows.

- (1) Compared with existing methods, our proposed method is able to address the nonlinear data model problem faced by the traditional MC methods. It is also able to address the global structure problem in existing deep learning-based MC methods.

- (2) The proposed method can be pre-trained to learn the global image structure and underlying relationship between input matrix data with missing elements and the recovered output data. Once successfully trained, the network does not need to be optimized again in the subsequent image in-painting tasks, thereby providing a high-performance and easy-to-deploy nonlinear matrix completion solution.

- (3) To improve the performance of the proposed method, a new algorithm for pre-filling the missing elements of the image is proposed. This new padding method performs global analysis of the matrix data to predict the missing elements as their initial values, which improves the performance of matrix completion and image in-painting.

2. Related Work

In this section, we review existing work related to the proposed method. Specifically, we introduce the mathematical models of the low-rank Hankel matrix factorization (LRHMF) method [35] and the deep Hankel matrix factorization (DHMF) method [36]. The LRHMF method is a low-rank matrix factorization method that avoids singular value decomposition to achieve fast signal reconstruction. The DHMF method [36], inspired by LRHMF, is a complex exponential signal recovery method based on deep learning and Hankel matrix factorization. The image in-painting method proposed in this paper is inspired by both.

As noted in [35], the rank of the Hankel matrix equals the number of exponentials in x, which is a vector of exponential functions. Thus, the low-rank Hankel matrix completion (LRHMC) problem can be solved by exploiting the low-rank property of the Hankel matrix. It can be formulated as:

$$\min_{x}\ \|\mathcal{R}x\|_* + \frac{\lambda}{2}\|y - Ux\|_2^2, \tag{1}$$

where x is the signal to be recovered from the undersampled data y, $\mathcal{R}$ is the operator that converts the signal to the Hankel matrix $\mathcal{R}x$, U denotes the undersampling matrix, and $\lambda$ is the balance parameter. $\|\cdot\|_*$ is the nuclear norm of the matrix, which is used to restrict the rank of the matrix. The second term measures the consistency of the data. However, it is very time-consuming to solve this problem because of the frequent singular value decompositions (SVD). To avoid this problem, the LRHMF method uses matrix factorization [37,38] instead of nuclear norm minimization. Given any matrix $X$, its nuclear norm can be approximated as:

$$\|X\|_* = \min_{P,Q}\ \frac{1}{2}\left(\|P\|_F^2 + \|Q\|_F^2\right) \quad \text{s.t.}\quad X = PQ^H, \tag{2}$$

where $P$ and $Q$ are the two factor matrices, $\|\cdot\|_F^2$ denotes the square of the Frobenius norm of the matrix, and the superscript H denotes the conjugate transpose. If we substitute Equation (2) into optimization problem (1), the optimization problem can be reformulated as:

$$\min_{x,P,Q}\ \frac{1}{2}\left(\|P\|_F^2 + \|Q\|_F^2\right) + \frac{\lambda}{2}\|y - Ux\|_2^2 \quad \text{s.t.}\quad \mathcal{R}x = PQ^H.$$

Since the nuclear norm of $\mathcal{R}x$ is replaced by the Frobenius norms of its matrix factors, it is no longer necessary to calculate the singular value decomposition. To solve this problem effectively, the alternating direction method of multipliers (ADMM) is adopted in LRHMF [35], and the corresponding augmented Lagrangian function is derived as:

$$\mathcal{L}(x,P,Q,D) = \frac{1}{2}\left(\|P\|_F^2 + \|Q\|_F^2\right) + \frac{\lambda}{2}\|y - Ux\|_2^2 + \langle D,\ \mathcal{R}x - PQ^H\rangle + \frac{\beta}{2}\|\mathcal{R}x - PQ^H\|_F^2,$$

where D denotes the Lagrange multiplier, $\langle\cdot,\cdot\rangle$ denotes the inner product operator, and $\beta$ and $\lambda$ are the balance parameters.
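As a brief check of why the factorized form can stand in for the nuclear norm, the following worked step shows that balanced factors built from an SVD reproduce the matrix and attain the nuclear norm exactly. The symbols $A$, $B$, $P$, $Q$ and $\Sigma$ are introduced here only for illustration, and this is a sketch of the standard argument rather than the derivation given in [35]. Let $X = A\Sigma B^H$ be a singular value decomposition with singular values $\sigma_i$, and set $P = A\Sigma^{1/2}$ and $Q = B\Sigma^{1/2}$. Then

$$PQ^H = A\Sigma^{1/2}\Sigma^{1/2}B^H = X,$$

$$\frac{1}{2}\left(\|P\|_F^2 + \|Q\|_F^2\right) = \frac{1}{2}\left(\operatorname{tr}(\Sigma^{1/2}A^HA\Sigma^{1/2}) + \operatorname{tr}(\Sigma^{1/2}B^HB\Sigma^{1/2})\right) = \operatorname{tr}(\Sigma) = \sum_i \sigma_i = \|X\|_*.$$

The factorized objective therefore reaches the nuclear norm at this choice of factors, which is why the factors can be treated as optimization variables without computing an SVD in every iteration.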

3. The Proposed Method

In this section, we construct a deep matrix factorization completion network (DMFCNet) for matrix completion and image in-painting. We derive the mathematical model for our DMFCNet method, discuss the network design, and then introduce two network structures based on different prediction methods for missing elements. Finally, we introduce the loss functions and explain the network training process.

3.1. Mathematical Model of the DMFCNet Method

The proposed DMFCNet is based on low-rank matrix factorization [35,36]. The optimization objective function of our proposed model can be formulated as

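$$\min_{X}\ \|X\|_* + \frac{\lambda}{2}\|(X - Y)\odot M\|_F^2,$$

where $Y \in \mathbb{R}^{m\times n}$ is the observation matrix with missing elements, whose initial values are set to a predefined constant, $X \in \mathbb{R}^{m\times n}$ is the matrix that needs to be recovered from Y, and $\lambda$ is a regularization parameter. $\|X\|_*$ is the nuclear norm of the matrix X, which is used to restrict the rank of X. $\|(X - Y)\odot M\|_F^2$ denotes the reconstruction error of Y, where ⊙ is the Hadamard product. $M \in \{0,1\}^{m\times n}$ is a mask indicating the positions of missing data: if Y is missing data at position $(i,j)$, the value of $M_{ij}$ is 0; otherwise, it is 1. We use matrix factorization instead of the traditional nuclear norm minimization, and the proposed model can be formulated as follows:

$$\min_{U,V,X}\ \frac{1}{2}\left(\|U\|_F^2 + \|V\|_F^2\right) + \frac{\lambda}{2}\|(X - Y)\odot M\|_F^2 \quad \text{s.t.}\quad X = UV^T, \tag{7}$$

where $U \in \mathbb{R}^{m\times r}$ and $V \in \mathbb{R}^{n\times r}$. The augmented Lagrangian function for (7) is given by

$$\mathcal{L}(U,V,X,S) = \frac{1}{2}\left(\|U\|_F^2 + \|V\|_F^2\right) + \frac{\lambda}{2}\|(X - Y)\odot M\|_F^2 + \langle S,\ X - UV^T\rangle + \frac{\beta}{2}\|X - UV^T\|_F^2. \tag{8}$$

Here, $\beta$ is the penalty parameter and S is the Lagrangian multiplier corresponding to the constraint $X = UV^T$. Since it is difficult to solve for U, V, S and X simultaneously in (8), we follow the idea of the alternating direction method of multipliers (ADMM) and update each block variable U, V, S and X in turn while fixing the other blocks at their latest values. Thus, the proposed optimization process becomes: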

where is the step size of the optimization process.
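For reference, the classical (non-learned) iteration that the network later replaces with trainable modules can be sketched in NumPy as follows. This is a minimal sketch under assumptions that are not taken from the paper: the augmented Lagrangian is taken as ½(‖U‖² + ‖V‖²) + (λ/2)‖(X − Y) ⊙ M‖² + ⟨S, X − UVᵀ⟩ + (β/2)‖X − UVᵀ‖², every block is solved in closed form by setting its gradient to zero, the multiplier step size equals β, and the multiplier is updated before X to mirror the order of the modules described in Section 3.1. The function name `admm_mf_complete` and its default values are hypothetical.

```python
import numpy as np

def admm_mf_complete(Y, M, r, lam=1.0, beta=2.0, n_iter=300):
    """Classical ADMM for factorization-based matrix completion (illustrative).

    Y : observed matrix (missing entries may hold any finite constant)
    M : binary mask, 1 = observed, 0 = missing
    r : assumed rank of the factors
    """
    Y0 = M * Y                                   # zero-fill the missing entries
    Uf, s, Vt = np.linalg.svd(Y0, full_matrices=False)
    root = np.sqrt(s[:r])
    U = Uf[:, :r] * root                         # balanced rank-r initialization
    V = Vt[:r].T * root
    X = Y0.copy()
    S = np.zeros_like(Y0)                        # multiplier starts at zero
    I = np.eye(r)
    for _ in range(n_iter):
        T = beta * X + S
        # dL/dU = 0  ->  U (beta V^T V + I) = (beta X + S) V
        U = T @ V @ np.linalg.inv(beta * V.T @ V + I)
        # dL/dV = 0  ->  V (beta U^T U + I) = (beta X + S)^T U
        V = T.T @ U @ np.linalg.inv(beta * U.T @ U + I)
        L = U @ V.T
        # dual ascent on the constraint X = U V^T (step size assumed equal to beta)
        S = S + beta * (X - L)
        # dL/dX = 0, solved element-wise because M is a 0/1 mask
        X = (lam * Y0 + beta * L - S) / (lam * M + beta)
    return U @ V.T
```

A call such as `admm_mf_complete(Y, M, r=50)` returns the low-rank estimate; in DMFCNet the closed-form U, V and X steps are replaced by learned modules, so this sketch only illustrates the iteration being unrolled.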

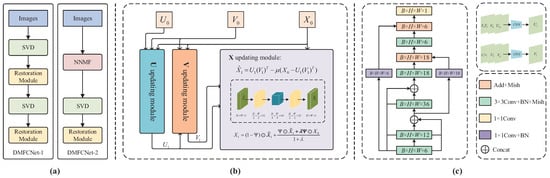

However, solving this optimization problem directly with traditional algorithms has many limitations, so we propose to solve it using a deep learning approach. The main idea is to update the variables using neural network modules. As shown in Figure 1, we construct a deep neural network based on (9), which has three updating modules shown in Figure 1b. A complete restoration module contains the U updating module and the V updating module for updating the matrices U and V, and the X updating module for restoring the incomplete matrix.

Figure 1.

The structure of the DMFCNet network. (a) Network architecture. (b) Restoration Module. (c) U and V updating modules.

3.1.1. U and V Updating Modules

In our proposed DMFCNet method, the input matrix is first processed by U and V updating modules. According to the analysis in [36], U and V are updated as follows:

Note that the variables $X^k$ and $V^k$ appear in the update formula of U, so we add them to the input of the U updating module. To learn as many convolutional features as possible, $U^k$ is also added as an input to the U updating module. The auxiliary matrix variable S in (10) is initialized as a zero matrix; thus, it can be removed from the U and V updating modules. Based on (10), for the U updating module, we concatenate the variables $X^k$, $V^k$ and $U^k$ in the channel dimension as the input and use a convolutional neural network to update U. The V matrix is updated by a similar procedure.

Once $U^{k+1}$ is obtained, we concatenate $X^k$, $U^{k+1}$ and $V^k$ channel-wise to obtain $V^{k+1}$. Thus, the updating formulas for the U and V matrices are:

$$U^{k+1} = f_U\left([X^k, V^k, U^k]\right), \qquad V^{k+1} = f_V\left([X^k, U^{k+1}, V^k]\right),$$

where $f_U$ and $f_V$ denote the convolutional neural networks of the U and V updating modules.

We observe that the final matrix recovery performance is sensitive to the initialization of U and V. To address this issue, we propose to perform the following SVD of to initialize U and V:

where is a diagonal matrix with on the diagonal and zeros elsewhere, . is the i-th singular value of matrix . and are left and right singular vectors, respectively. Then, and are initialized by

where are the first r columns of U, are the first r columns of V, and are the first r rows and first r columns of . In this paper, we set .
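The truncated-SVD initialization described above can be sketched as follows. The exact scaling of the initial factors is not recoverable from the text here, so the balanced split used below (each factor absorbs the square root of the singular values) is an assumption, and the function name `init_factors` is hypothetical. The default `r=50` follows the training settings, which take the first 50 singular values.

```python
import numpy as np

def init_factors(Y_filled, r=50):
    """Initialize U and V from the rank-r truncated SVD of a pre-filled matrix.

    Assumed scaling: U0 = U_r * sqrt(Sigma_r), V0 = V_r * sqrt(Sigma_r),
    so that U0 @ V0.T is the best rank-r approximation of Y_filled.
    """
    Uf, s, Vt = np.linalg.svd(Y_filled, full_matrices=False)
    root = np.sqrt(s[:r])          # square roots of the r largest singular values
    U0 = Uf[:, :r] * root          # first r left singular vectors, scaled
    V0 = Vt[:r].T * root           # first r right singular vectors, scaled
    return U0, V0
```

Because the SVD is taken of the pre-filled matrix, the constant used for the missing entries changes the leading singular vectors, which is consistent with the sensitivity to pre-filling discussed in Section 3.2.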

To retain as much information as possible during the matrix completion process, a dense convolutional structure is used in the network, and a residual structure is added to improve the stability of the training process. The Mish function is chosen as the activation function because it is smooth at almost all points of its curve, which allows more information to flow through the neural network. A batch normalization (BN) layer is added between convolution layers to speed up convergence.
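For readers unfamiliar with the activation, Mish is defined as x · tanh(softplus(x)). The NumPy sketch below only illustrates this definition; in the network itself, the activation would come from the deep learning framework in use, and the function name `mish` is chosen here for illustration.

```python
import numpy as np

def mish(x):
    """Mish activation: x * tanh(softplus(x)), with softplus(x) = log(1 + exp(x))."""
    x = np.asarray(x, dtype=float)
    softplus = np.logaddexp(0.0, x)   # numerically stable log(1 + exp(x))
    return x * np.tanh(softplus)
```

Unlike ReLU, the function is smooth around zero and lets small negative values pass through, which matches the motivation given above.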

3.1.2. X Updating Module

After the updated U and V are obtained from the U and V updating modules, the Lagrange multiplier S can be updated using the following formula:

Then, they will be fed into the X updating module, and will be obtained by the following equation.

To improve the reconstruction performance, we further process the updated X with an autoencoder network. As shown in Figure 1b, the network contains four convolution layers, a batch normalization module, and a final activation layer with the tanh function. For image in-painting applications, to enhance the smoothness of the recovered image, we incorporate the following weighted averaging operation into the network:

Here, the weighting parameter balances the initial matrix against the network output. When a pixel value is missing at a point in the image, the output of the network is assigned directly to the corresponding location. Otherwise, a weighted average of the network output and the pixel value at the corresponding location of the input image is used to obtain the final reconstructed pixel value at that location.
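The weighted averaging rule described above can be sketched as follows. The weighting formula itself is not recoverable from the text, so the convention below (the weight `alpha` multiplies the input pixel and `1 - alpha` multiplies the network output) and the default value of `alpha` are assumptions, and the function name `blend_output` is hypothetical.

```python
import numpy as np

def blend_output(net_out, Y, M, alpha=0.5):
    """Combine the network output with the observed pixels.

    net_out : output of the autoencoder
    Y       : initial (input) matrix
    M       : binary mask, 1 = observed, 0 = missing
    alpha   : assumed weight given to the observed input pixel
    """
    blended = alpha * Y + (1.0 - alpha) * net_out
    # missing pixels take the network output directly; observed pixels are averaged
    return np.where(M == 1, blended, net_out)
```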

3.2. Pre-Filling

Note that the initialization of U and V in the network is obtained from the original incomplete matrix using SVD, so the missing entries in the matrix need to be filled with predefined constants before the singular value decomposition. However, the network is extremely sensitive to the pre-filled constants, and improper filling directly degrades the in-painting performance.

To reduce the effect of filling with an arbitrary constant, we first replace the missing values of the observation matrix with a predefined constant such as 255. Then, singular value decomposition is performed on the filled matrix to obtain the initial U and V, which are input into the restoration module for a preliminary restoration, and the output matrix can be calculated by:

This step is the preliminary inference of the missing values by the restoration module. The predicted values are filled into the missing positions of the observation matrix, and the filled matrix is then used as the input to the second restoration module. Since U and V in the second restoration module are obtained by the singular value decomposition of the newly filled matrix, which largely eliminates the negative effects of constant filling, better restoration results can be obtained by the second restoration module. Algorithm 1 summarizes the DMFCNet-1 algorithm, which uses network-based pre-filling.

Algorithm 1.

DMFCNet-1

However, network-based pre-filling requires an additional singular value decomposition, which takes a relatively long time. To reduce the running time, a new pre-filling algorithm based on the structural characteristics of images, called Nearest Neighbor Mean Filling (NNMF), is presented. It takes the data observed near the location of a missing value as a reference to infer the missing value.

Suppose that we need to fill the missing value at position $(i, j)$. Let $x_l$ be the value of the first non-missing position reached when traversing from $(i, j)$ to the left, $x_r$ the value of the first non-missing position reached when traversing to the right, and similarly let $x_t$ and $x_b$ be the values of the first non-missing positions reached when traversing to the top and to the bottom, respectively. Then, the missing value is filled with the mean of these four values:

$$\hat{x}_{ij} = \frac{x_l + x_r + x_t + x_b}{4}.$$

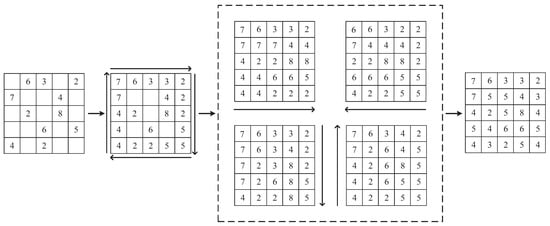

It is a very time-consuming operation to traverse the location of each missing value and then find the four values in turn. In this paper, we design a calculation procedure as shown in Figure 2, which can efficiently calculate the fill values at the locations of all missing data by dynamic programming. As shown in Figure 2, the missing values at the edges are first filled in a clockwise direction; then, four matrices are generated in four directions, and finally, the four generated matrices are summed to find the mean value to obtain the filled matrix.

Figure 2.

Schematic diagram of the operation of the NNMF algorithm.
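The directional passes behind NNMF can be sketched as follows: one sweep per direction carries the most recent observed value forward, so all missing pixels are handled in a single pass over the image per direction instead of a separate search per pixel. This is a minimal sketch, not the procedure of Figure 2. In particular, the paper first fills missing edge pixels in a clockwise order, whereas the sketch simply averages over the directions in which an observed pixel exists (an assumption), and the function names are hypothetical.

```python
import numpy as np

def _nearest_before(img, mask):
    """For every pixel, the nearest observed value strictly to its left (NaN if none)."""
    h, w = img.shape
    out = np.full((h, w), np.nan)
    last = np.full(h, np.nan)                    # running "last observed value" per row
    for j in range(w):
        out[:, j] = last
        last = np.where(mask[:, j] == 1, img[:, j], last)
    return out

def nnmf_fill(img, mask):
    """Fill missing pixels (mask == 0) with the mean of the nearest observed
    values found to the left, right, top and bottom."""
    img = np.asarray(img, dtype=float)
    left = _nearest_before(img, mask)
    right = _nearest_before(img[:, ::-1], mask[:, ::-1])[:, ::-1]
    top = _nearest_before(img.T, mask.T).T
    bottom = _nearest_before(img[::-1].T, mask[::-1].T).T[::-1]
    stack = np.stack([left, right, top, bottom])
    valid = ~np.isnan(stack)
    total = np.where(valid, stack, 0.0).sum(axis=0)
    count = valid.sum(axis=0)
    # fall back to the global observed mean if no direction has an observed pixel
    fallback = img[mask == 1].mean() if np.any(mask == 1) else 0.0
    mean = np.where(count > 0, total / np.maximum(count, 1), fallback)
    return np.where(mask == 1, img, mean)
```

Each of the four sweeps costs time proportional to the number of pixels, which is the efficiency gain that the dynamic-programming formulation is meant to provide.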

Algorithm 2 summarizes the DMFCNet-2 algorithm, which uses NNMF for the pre-filling operation. The matrix obtained by pre-filling the observation matrix with the NNMF algorithm is used as the input of the restoration module. The network framework of the DMFCNet-1 and DMFCNet-2 algorithms, which differ in their pre-filling methods, is shown in Figure 1a. During training, only the weights of the convolutional networks in the U and V updating modules and of the autoencoder are optimized.

Algorithm 2.

DMFCNet-2

3.3. Loss Function

The interior of a general convolutional neural network is effectively a black box, so the network can only be optimized globally by constraining its final output. In contrast, each variable in the interpretable network built in this paper from the iterative model has practical significance. Therefore, in addition to constraining the final output of the restoration module in the loss function, we also constrain the intermediate variables in the module, which makes training more stable and efficient. The Frobenius norm is used to constrain the variables in the network, from which the loss function of a restoration module can be derived as follows:

where is the network parameter of the restoration module, B is the number of samples input to the network, and and are the regular term coefficients. denotes the output of the b-th sample in the restoration module and is the input of the b-th sample of the autoencoder in the X updating module. and are the and of the b-th sample output, and is the complete image corresponding to the b-th sample.
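One way to read the loss described above is sketched below in NumPy. The exact formula is not recoverable from the text, so this sketch makes several assumptions: each of the three constrained quantities (the module output, the autoencoder input, and the product UVᵀ) is compared with the complete image using the squared Frobenius norm, the two regularization coefficients (0.1 and 0.01 in the training settings) weight the autoencoder-input term and the UVᵀ term in that order, and the result is averaged over the B samples. The function and argument names are hypothetical.

```python
import numpy as np

def restoration_loss(X_out, X_ae_in, U, V, X_true, c1=0.1, c2=0.01):
    """Assumed loss of one restoration module for a batch.

    X_out   : (B, m, n) outputs of the restoration module
    X_ae_in : (B, m, n) inputs of the autoencoder in the X updating module
    U, V    : (B, m, r) and (B, n, r) factor matrices
    X_true  : (B, m, n) complete images
    """
    def sq_fro(A):
        # squared Frobenius norm of every sample in the batch
        return np.sum(A ** 2, axis=(1, 2))

    UVt = U @ np.swapaxes(V, 1, 2)               # batched product U V^T
    per_sample = (sq_fro(X_out - X_true)
                  + c1 * sq_fro(X_ae_in - X_true)
                  + c2 * sq_fro(UVt - X_true))
    return per_sample.mean()                     # average over the B samples
```

Constraining the intermediate quantities in this way gives every module a direct training signal rather than relying only on the final output.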

3.4. Training

Because of its diversity, the VOC dataset [39,40] is selected as the training set so that the network adapts to the recovery of more complex images. First, each image is converted into a grayscale image of size 256 × 256, and then some randomly chosen pixel values in the image are replaced by 255.
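The masking step of this data preparation can be sketched as follows, assuming the image has already been converted to a 256 × 256 grayscale array. The function name `make_training_pair` and the choice of drawing an independent random mask per image are assumptions made for illustration.

```python
import numpy as np

def make_training_pair(gray, missing_rate, rng, fill_value=255):
    """Randomly discard pixels of a grayscale image and fill them with a constant.

    Returns the corrupted image Y and the mask M (1 = observed, 0 = missing).
    """
    M = (rng.random(gray.shape) >= missing_rate).astype(np.uint8)
    Y = np.where(M == 1, gray, fill_value)       # missing pixels are set to 255
    return Y, M
```

For example, `make_training_pair(gray, 0.4, np.random.default_rng(0))` yields an image with roughly 40% of its pixels replaced. The mask is returned alongside the image because a filled value of 255 cannot be distinguished from a genuinely white pixel.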

The hyperparameters in training are set as follows. The first 50 singular values are taken when initializing the U and V matrices. Adam is chosen as the optimizer for training the network, and the learning rate is set to , which is reduced to after stabilization and set to for global fine-tuning. is set to and is set to 10 in the X updating module. The loss function canonical term coefficients and are set to 0.1 and 0.01, respectively. The autoencoder in the X updating module contains a total of three hidden layers with the dimensions , and .

To make it more targeted for the recovery of images with missing elements, two models are trained for each of the DMFCNet-1 and DMFCNet-2 networks. The first model uses a dataset containing images with a 30% to 50% missing rate, so this model is mainly used for recovering images with a 50% missing rate and below. The second model uses a dataset containing images with a 50% to 70% missing rate, and this model is used to recover images with a 50% to 70% missing rate.

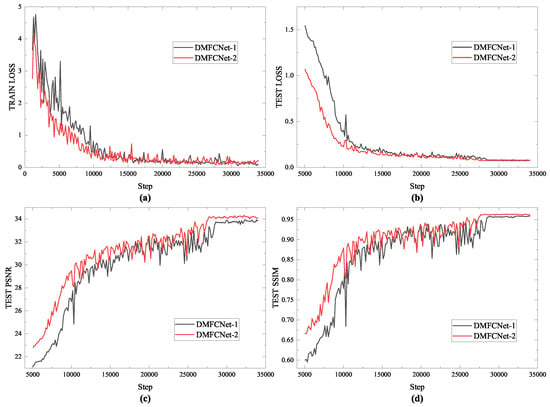

Specifically, DMFCNet-1 is trained with one restoration module as the training unit. The first restoration module is trained, and its weights are frozen after the training is completed. Then, the second restoration module is added and trained, and the weights of the first restoration module are unfrozen for global fine-tuning once the training of the second restoration module is completed. Figure 3 shows the loss convergence during the training period of the two models and the reconstruction results on the test data.

Figure 3.

The loss convergence of the network and the reconstruction results. (a) Loss convergence of the training. (b) Loss convergence of the test set. (c) PSNR values of the reconstructed images in the test set. (d) SSIM values of the reconstructed images in the test set.

4. Experiments

In this section, we first compare the two versions of the DMFCNet model proposed in this paper on image in-painting tasks, and then we compare them with six popular matrix completion methods. These methods are matrix factorization (MF) by LMaFit [21], nuclear norm minimization (NNM) by IALM [16], truncated nuclear norm minimization (TNNM) by ADMM [8], the deep learning-based DLMC method [28], the NC-MC method [41], and the LNOP method by ADMM [42]. The peak signal-to-noise ratio (PSNR) [43] and structural similarity (SSIM) [44] were used in the experiments to evaluate the quality of the restored images.
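For reference, PSNR for 8-bit grayscale images can be computed as in the short sketch below, using the standard definition 10 · log10(peak² / MSE). The function name `psnr` is chosen here for illustration and is not taken from the paper's code.

```python
import numpy as np

def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB between a reference and a restored image."""
    ref = np.asarray(reference, dtype=np.float64)
    res = np.asarray(restored, dtype=np.float64)
    mse = np.mean((ref - res) ** 2)              # mean squared error over all pixels
    if mse == 0:
        return float("inf")                      # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

SSIM involves local means, variances and covariances over sliding windows, so in practice it is usually taken from an image processing library rather than reimplemented.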

4.1. Datasets

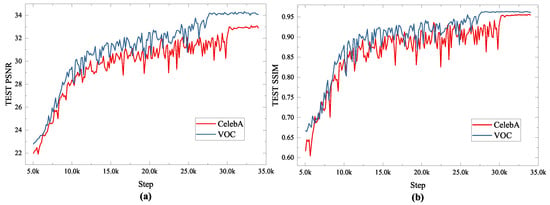

In this part, we discuss how to select the dataset for training the model. The proposed method requires pre-training the network parameters, which needs a large amount of training data. We want the proposed algorithm to handle not only simple low-rank images but also more complex images. Therefore, two datasets are chosen to train the model and to test the effect of the training dataset on the image restoration performance. The first dataset is the CelebFaces Attributes Dataset (CelebA) [45], which is a large-scale face attribute dataset with over 200,000 celebrity images. These images contain some degree of pose variation but remain relatively simple and homogeneous overall. The second dataset is the VOC dataset [39,40], which has a more diverse set of images, including simple low-rank images as well as complex images.

The two datasets are each used to train the DMFCNet-2 model. The images of the datasets are converted to grayscale images of size 256 × 256, and 30% of the pixels are randomly discarded. The test results on complex images for the models trained with the different datasets are shown in Figure 4. It can be seen from Figure 4 that the training loss is smaller when the network is trained on the CelebA dataset than when it is trained on the VOC dataset, because CelebA is relatively simple. However, when tested on complex images, the network trained with the VOC dataset outperformed the network trained with the CelebA dataset in both loss and reconstruction performance. Therefore, to improve the image restoration performance, we recommend using a more targeted dataset.

Figure 4.

Test results of the model on complex images obtained by training with different datasets. (a) Average PSNR values of recovered images (b) Average SSIM values of recovered images.

4.2. Experimental Settings

To obtain the best performance from the six compared methods, the hyperparameters of each method were chosen as follows. In MF, since automatic rank estimation often leads to poor performance in image restoration problems, a fixed rank is chosen for each missing rate: the rank is set to 30 for restoring images with 20% to 30% missing rates, 20 for images with 40% to 50% missing rates, and 10 for images with 60% to 70% missing rates and text masks. In TNNM, the parameter r is uniformly set to 10. In DLMC, the weight decay penalty is set to 0.01, the network contains three hidden layers, and the numbers of hidden units are set to [100 50 100]. In LNOP, p is set to 0.7. Other parameters follow the settings in the original papers.

4.3. Image In-Painting

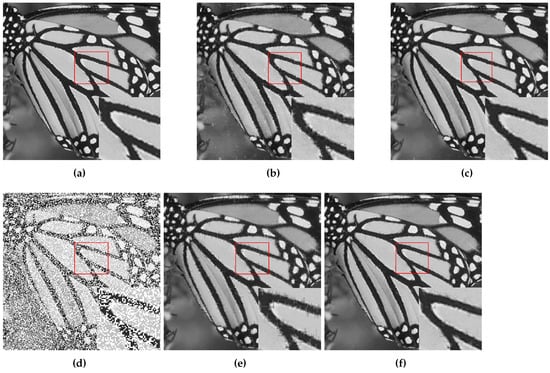

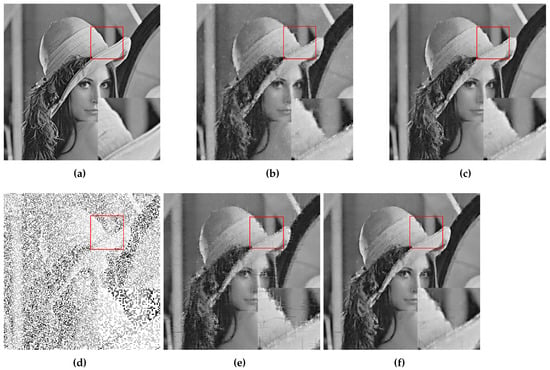

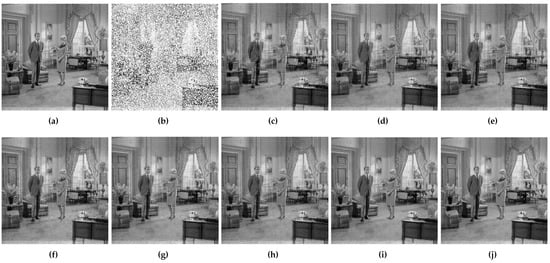

First, DMFCNet-1 and DMFCNet-2 are compared, including their preliminary restoration results (pre-filling results) and their final restoration results. Figure 5 and Figure 6 show the results of the two models on images with 40% and 60% missing rates. As shown in Figure 5e and Figure 6e, the image pre-filled with NNMF achieves relatively good results in the relatively smooth areas of the image, but it produces more obvious vertical stripes in the areas with large variations in pixel values. Figure 5f and Figure 6f show that the vertical stripes have largely disappeared in the image restored by the DMFCNet-2 model and the overall image is smoother, but some spots left by the NNMF pre-filling remain. The DMFCNet-1 model uses the restoration module for the preliminary restoration, as shown in Figure 5b and Figure 6b. As can be seen, although there are no vertical stripes, the image has some white spots and is rougher overall. Figure 5c and Figure 6c show the final restoration result of DMFCNet-1.

Figure 5.

DMFCNet-1 and DMFCNet-2 restore image containing 40% missing rate. (a) Original image. (b) Preliminary restoration result of DMFCNet-1. (c) Final restoration result of DMFCNet-1. (d) Partially missing image. (e) Preliminary restoration result of DMFCNet-2. (f) Final restoration result of DMFCNet-2.

Figure 6.

DMFCNet-1 and DMFCNet-2 restore image containing 60% missing rate. (a) Original image. (b) Preliminary restoration result of DMFCNet-1. (c) Final restoration result of DMFCNet-1. (d) Partially missing image. (e) Preliminary restoration result of DMFCNet-2. (f) Final restoration result of DMFCNet-2.

It can be seen that the second restoration module refines the image on the basis of the preliminary restoration. The white spots in the image basically disappear, but the overall image is slightly rougher than the result restored by DMFCNet-2. In addition, Table 1 shows the recovery results of the two methods at different missing rates, including a comparison of the preliminary restoration ability of the two models. It can be seen from Table 1 that the DMFCNet-2 model, which pre-fills using NNMF, is better at low missing rates, but the preliminary restoration of DMFCNet-1 at high missing rates gives stronger results than pre-filling with NNMF. However, the final recovery of DMFCNet-2 is better than that of DMFCNet-1 because the images obtained by NNMF are smoother overall.

Table 1.

Restoration results of the two methods at different missing rates. For each method, the preliminary restoration result (left) and the final restoration result (right) are given.

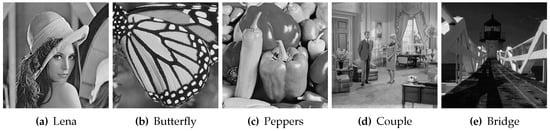

The next step is to compare the proposed methods with other methods of matrix completion. Five images as shown in Figure 7 are selected for the comparison experiments. Two masks are considered in the experiments: the first one is a random pixel mask, where 20% to 70% of the pixels in the image are removed randomly. The second one is a text mask containing English words. Although the DMFCNet-1 model and DMFCNet-2 model are not trained to restore images that contain text masks, the DMFCNet-2 network is used to compare with other methods in the tests containing text masks because of the characteristics of NNMF.

Figure 7.

Five grayscale images of 256 × 256 size for comparison experiments, numbered 1–5 from left to right.

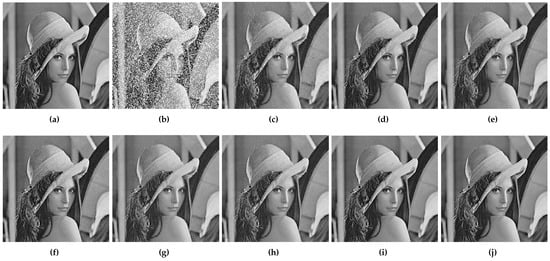

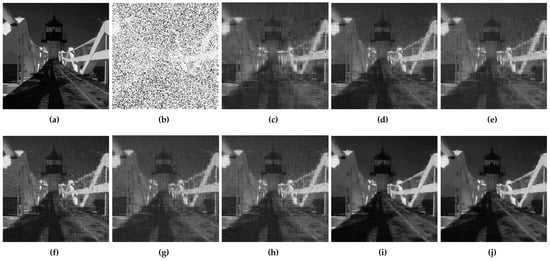

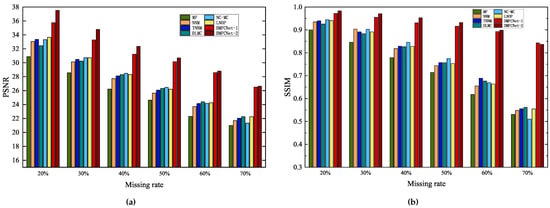

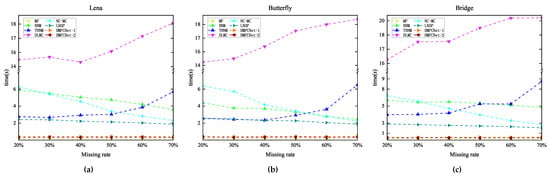

Figure 8, Figure 9 and Figure 10 show the original images, the images with random pixel masks, and examples of the restored images obtained by each of the eight methods. Here, 30%, 50% and 70% of the pixels in the original image are removed randomly, respectively. The images show that the results restored by the MF method are rougher than those obtained by the other methods, and that DMFCNet-1 and DMFCNet-2 give the best restoration results. We also conducted more comprehensive tests on other images, and the experimental results are shown in Table 2. Table 2 shows the PSNR and SSIM values of the images recovered by the eight methods from five images with 20% to 70% missing rates. Figure 11 illustrates the average recovery performance of the eight methods on the five images at different missing rates. Figure 12 shows the execution time of the eight methods for recovering grayscale images of size 256 × 256 at different missing rates. Meanwhile, Table 3 shows the average running times of the eight methods for recovering images of different sizes at different missing rates.

Figure 8.

Image recovery containing a 30% random pixel mask. (a) Complete image of size 256 × 256. (b) Partially missing image (10.52 dB/0.127). (c) Restored result by MF (27.42 dB/0.822/0.099 s). (d) Restored result by NNM (29.37 dB/0.873/5.488 s). (e) Restored result by TNNM (29.676 dB/0.883/2.669 s). (f) Restored result by DLMC (29.354 dB/0.867/15.181 s). (g) Restored result by NC-MC (29.69 dB/0.893/5.399 s). (h) Restored result by LNOP (29.816 dB/0.881/2.391 s). (i) Restored result by DMFCNet-1 (33.29 dB/0.956/0.388 s). (j) Restored result by DMFCNet-2 (34.68 dB/0.971/0.345 s).

Figure 9.

Image recovery containing a 50% random pixel mask. (a) Complete image of size 256 × 256. (b) Partially missing image (8.29 dB/0.076). (c) Restored result by MF (25.69 dB/0.784/0.072 s). (d) Restored result by NNM (26.82 dB/0.805/4.297 s). (e) Restored result by TNNM (27.264 dB/0.820/3.094 s). (f) Restored result by DLMC (27.08 dB/0.812/17.956 s). (g) Restored result by NC-MC (27.63 dB/0.837/3.473 s). (h) Restored result by LNOP (27.29 dB/0.816/2.105 s). (i) Restored result by DMFCNet-1 (29.73 dB/0.898/0.403 s). (j) Restored result by DMFCNet-2 (30.04 dB/0.908/0.346 s).

Figure 10.

Image recovery containing a 70% random pixel mask. (a) Complete image of size 256 × 256. (b) Partially missing image (4.61 dB/0.024). (c) Restored result by MF (24.86 dB/0.614/0.034 s). (d) Restored result by NNM (25.78 dB/0.683/4.836 s). (e) Restored result by TNNM (26.126 dB/0.664/8.630 s). (f) Restored result by DLMC (27.057 dB/0.719/19.767 s). (g) Restored result by NC-MC (26.09 dB/0.663/2.266 s). (h) Restored result by LNOP (26.53 dB/0.68/1.808 s). (i) Restored result by DMFCNet-1 (29.82 dB/0.859/0.449 s). (j) Restored result by DMFCNet-2 (31.47 dB/0.884/0.339 s).

Table 2.

PSNR and SSIM values of the five images recovered by the eight methods at missing rates from 20% to 70%. The best results are highlighted in bold.

Figure 11.

The average restoration performance of the eight methods for five images with different missing rates. The PSNR and SSIM values of the recovered images are shown in (a) and (b), respectively.

Figure 12.

Execution times of eight methods to recover grayscale images of size 256 × 256 containing different missing rates. (a) Lena. (b) Butterfly. (c) Bridge.

Table 3.

Average running time (in seconds) for eight methods to restore images containing different missing rates and different sizes.

The timing results show that MF (LMaFit) takes the shortest time, followed by the methods proposed in this paper. Because a trained network does not need to be optimized again at restoration time, using the trained model for image restoration significantly reduces the time required for the restoration task, and the time does not increase even as the missing rate grows. In contrast, the deep learning-based DLMC method takes the longest time because it needs to optimize the network weights, and its running time grows fastest among all methods when the image size increases. Overall, DMFCNet-1 and DMFCNet-2 achieve better recovery performance than the competing methods with a running time second only to MF, for images with both small and large missing rates. In particular, when the missing rate is large, the recovery performance of the other methods decreases faster, while the proposed methods still achieve satisfactory results.

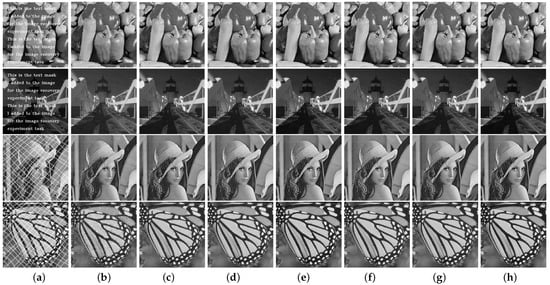

Figure 13 shows examples of images containing text masks and grid masks, together with the recovered images obtained by the seven methods. Table 4 reports the restoration results of the seven methods on the five images containing text masks and grid masks. The data in Table 4 show that the proposed DMFCNet-2 network still performs well on text-masked and grid-masked images, even though it was not trained on such masks, which is attributed to the characteristics of NNMF.
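To make the difference between a structured mask and a random pixel mask concrete, the sketch below builds a simple grid mask. It is only an illustration: the function names and the `spacing` and `width` values are assumptions, and the exact masks used in the experiments are not specified here.

```python
import numpy as np


def grid_mask(shape, spacing=16, width=2):
    """Boolean mask with False on regularly spaced horizontal and vertical lines.

    `spacing` and `width` are illustrative values, not taken from the paper.
    """
    rows, cols = shape
    mask = np.ones(shape, dtype=bool)
    mask[(np.arange(rows) % spacing) < width, :] = False  # horizontal grid lines
    mask[:, (np.arange(cols) % spacing) < width] = False  # vertical grid lines
    return mask


def apply_mask(image, mask, fill=255):
    """Keep observed pixels and paint missing ones with a constant value."""
    return np.where(mask, image, fill)


def missing_rate(mask):
    """Fraction of pixels marked as missing."""
    return 1.0 - mask.mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(256, 256))
    mask = grid_mask(image.shape)
    masked = apply_mask(image, mask)
    print(f"grid mask missing rate: {missing_rate(mask):.4f}")
```

Unlike a random pixel mask, this grid removes entire rows and columns, so the missing entries are strongly correlated in position. That makes such masks a useful test of how a model trained only on random masks generalizes.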

Figure 13.

Image recovery with text mask and grid mask. (a) Images with masks. (b) Restored results by MF. (c) Restored results by NNM. (d) Restored results by TNNM. (e) Restored results by DLMC. (f) Restored results by NC-MC. (g) Restored results by LNOP. (h) Restored results by DMFCNet-2.

Table 4.

Restoration results of seven methods on five images containing text mask and grid mask.

5. Conclusions

In this work, a new end-to-end neural network structure for image restoration, called DMFCNet, is proposed by combining deep learning with traditional matrix completion algorithms. Experimental results on data containing random masks and other masks show that DMFCNet outperforms currently popular methods in image restoration and remains stable even at high missing rates.

Although the proposed methods perform well, there is still room for further improvement. For example, when restoring images with a high missing rate, the restoration result of DMFCNet-1 contains white spots and the restoration result of DMFCNet-2 contains vertical stripes. How to combine these two restoration results to obtain a better one is therefore a problem to be investigated in the future. In addition, tuning the hyperparameters of the method is an important part of future work. Further experiments will also be carried out to apply the methods of this paper to a wider range of settings, such as larger image sizes (e.g., 512 × 512 pixels) or data missing due to other factors (e.g., image processing or transmission).

Author Contributions

Conceptualization, X.M., Z.L. and H.W.; data curation, Z.L.; formal analysis, X.M. and Z.L.; funding acquisition, X.M. and H.W.; investigation, X.M. and Z.L.; methodology, X.M., Z.L. and H.W.; project administration, X.M.; resources, X.M.; software, X.M. and Z.L.; supervision, X.M. and H.W.; validation, Z.L.; visualization, Z.L.; writing (original draft), X.M. and Z.L.; writing (review and editing), X.M., Z.L. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China under Grant Nos. 2020YFB2103604 and 2020YFF0305504, the National Natural Science Foundation of China (Nos. 62072024, 61971290), the Research Ability Enhancement Program for Young Teachers of Beijing University of Civil Engineering and Architecture (No. X21024), the Talent Program of Beijing University of Civil Engineering and Architecture, and the BUCEA Post Graduate Innovation Project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Candes, E.J.; Recht, B. Exact matrix completion via convex optimization. Found. Comput. Math. 2009, 9, 717–772.

- Fan, J.; Chow, T.W. Sparse subspace clustering for data with missing entries and high-rank matrix completion. Neural Netw. 2017, 93, 36–44.

- Liu, G.; Li, P. Low-rank matrix completion in the presence of high coherence. IEEE Trans. Signal Process. 2016, 64, 5623–5633.

- Lu, X.; Gong, T.; Yan, P.; Yuan, Y.; Li, X. Robust alternative minimization for matrix completion. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2012, 42, 939–949.

- Wang, H.; Zhao, R.; Cen, Y. Rank adaptive atomic decomposition for low-rank matrix completion and its application on image recovery. Neurocomputing 2014, 145, 374–380.

- Lara-Cabrera, R.; González-Prieto, A.; Ortega, F.; Bobadilla, J. Evolving matrix-factorization-based collaborative filtering using genetic programming. Appl. Sci. 2020, 10, 675.

- Zhang, D.; Liu, L.; Wei, Q.; Yang, Y.; Yang, P.; Liu, Q. Neighborhood aggregation collaborative filtering based on knowledge graph. Appl. Sci. 2020, 10, 3818.

- Hu, Y.; Zhang, D.; Ye, J.; Li, X.; He, X. Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2117–2130.

- Le Pendu, M.; Jiang, X.; Guillemot, C. Light field inpainting propagation via low rank matrix completion. IEEE Trans. Image Process. 2018, 27, 1981–1993.

- Alameda-Pineda, X.; Ricci, E.; Yan, Y.; Sebe, N. Recognizing emotions from abstract paintings using non-linear matrix completion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5240–5248.

- Yang, Y.; Feng, Y.; Suykens, J.A. Correntropy based matrix completion. Entropy 2018, 20, 171.

- Ji, H.; Liu, C.; Shen, Z.; Xu, Y. Robust video denoising using low rank matrix completion. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1791–1798.

- Cabral, R.; De la Torre, F.; Costeira, J.P.; Bernardino, A. Matrix completion for weakly-supervised multi-label image classification. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 121–135.

- Luo, Y.; Liu, T.; Tao, D.; Xu, C. Multiview matrix completion for multilabel image classification. IEEE Trans. Image Process. 2015, 24, 2355–2368.

- Harvey, N.J.; Karger, D.R.; Yekhanin, S. The complexity of matrix completion. In Proceedings of the Seventeenth Annual ACM-SIAM Symposium on Discrete Algorithms, Alexandria, VA, USA, 9–12 January 2006; pp. 1103–1111.

- Lin, Z.; Chen, M.; Ma, Y. The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv 2010, arXiv:1009.5055.

- Shen, Y.; Wen, Z.; Zhang, Y. Augmented Lagrangian alternating direction method for matrix separation based on low-rank factorization. Optim. Methods Softw. 2014, 29, 239–263.

- Toh, K.C.; Yun, S. An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problem. Pac. J. Optim. 2010, 6, 615–640.

- Cai, J.F.; Candes, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Chen, C.; He, B.; Yuan, X. Matrix completion via an alternating direction method. IMA J. Numer. Anal. 2012, 32, 227–245.

- Wen, Z.; Yin, W.; Zhang, Y. Solving a low-rank factorization model for matrix completion by a nonlinear successive over-relaxation algorithm. Math. Program. Comput. 2012, 4, 333–361.

- Han, H.; Huang, M.; Zhang, Y.; Bhatti, U.A. An extended-tag-induced matrix factorization technique for recommender systems. Information 2018, 9, 143.

- Wang, C.; Liu, Q.; Wu, R.; Chen, E.; Liu, C.; Huang, X.; Huang, Z. Confidence-aware matrix factorization for recommender systems. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, p. 1.

- Luo, Z.; Zhou, M.; Li, S.; Xia, Y.; You, Z.; Zhu, Q.; Leung, H. An efficient second-order approach to factorize sparse matrices in recommender systems. IEEE Trans. Ind. Inform. 2015, 11, 946–956.

- Luo, Z.; Zhou, M.; Li, S.; Xia, Y.; You, Z.; Zhu, Q. A nonnegative latent factor model for large-scale sparse matrices in recommender systems via alternating direction method. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 579–592.

- Cao, X.; Zhao, Q.; Meng, D.; Chen, Y.; Xu, Z. Robust low-rank matrix factorization under general mixture noise distributions. IEEE Trans. Image Process. 2016, 25, 4677–4690.

- Lawrence, N.; Hyvärinen, A. Probabilistic non-linear principal component analysis with Gaussian process latent variable models. J. Mach. Learn. Res. 2005, 6, 2005.

- Fan, J.; Chow, T. Deep learning based matrix completion. Neurocomputing 2017, 266, 540–549.

- Fan, J.; Cheng, J. Matrix completion by deep matrix factorization. Neural Netw. 2018, 98, 34–41.

- Nguyen, D.M.; Tsiligianni, E.; Calderbank, R.; Deligiannis, N. Regularizing autoencoder-based matrix completion models via manifold learning. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 1880–1884.

- Abavisani, M.; Patel, V.M. Deep sparse representation-based classification. IEEE Signal Process. Lett. 2019, 26, 948–952.

- Bobadilla, J.; Alonso, S.; Hernando, A. Deep learning architecture for collaborative filtering recommender systems. Appl. Sci. 2020, 10, 2441.

- Zhang, S.; Yao, L.; Sun, A.; Tay, Y. Deep learning based recommender system: A survey and new perspectives. ACM Comput. Surv. (CSUR) 2019, 52, 1–38.

- Sedhain, S.; Menon, A.K.; Sanner, S.; Xie, L. Autorec: Autoencoders meet collaborative filtering. In Proceedings of the 24th International Conference on World Wide Web, New York, NY, USA, 18–22 May 2015; pp. 111–112.

- Guo, D.; Lu, H.; Qu, X. A fast low rank Hankel matrix factorization reconstruction method for non-uniformly sampled magnetic resonance spectroscopy. IEEE Access 2017, 5, 16033–16039.

- Huang, Y.; Zhao, J.; Wang, Z.; Guo, D.; Qu, X. Complex exponential signal recovery with deep hankel matrix factorization. arXiv 2020, arXiv:2007.06246.

- Signoretto, M.; Cevher, V.; Suykens, J.A. An SVD-free approach to a class of structured low rank matrix optimization problems with application to system identification. In Proceedings of the IEEE Conference on Decision and Control (CDC), Firenze, Italy, 10–13 December 2013.

- Lee, D.; Jin, K.H.; Kim, E.Y.; Park, S.H.; Ye, J.C. Acceleration of MR parameter mapping using annihilating filter-based low rank hankel matrix (ALOHA). Magn. Reson. Med. 2016, 76, 1848–1864.

- Everingham, M.; Gool, L.V.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Everingham, M.; Eslami, S.A.; Gool, L.V.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Nie, F.; Hu, Z.; Li, X. Matrix completion based on non-convex low-rank approximation. IEEE Trans. Image Process. 2019, 28, 2378–2388.

- Chen, L.; Jiang, X.; Liu, X.; Zhou, Z. Robust Low-Rank Tensor Recovery via Nonconvex Singular Value Minimization. IEEE Trans. Image Process. 2020, 29, 9044–9059.

- Gu, K.; Zhai, G.; Yang, X.; Zhang, W. Using free energy principle for blind image quality assessment. IEEE Trans. Multimed. 2014, 17, 50–63.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).