Abstract

Information flow provides a natural measure for the causal interaction between dynamical events. This study extends our previous rigorous formalism of componentwise information flow to the bulk information flow between two complex subsystems of a large-dimensional parental system. Analytical formulas are obtained in closed form, and, under a Gaussian assumption, so are their maximum likelihood estimators. The formulas are validated using subsystems with preset relations, and they yield the causalities expected. By contrast, the commonly used proxies for the characterization of subsystems, such as averages and principal components, generally do not work correctly. This study can help diagnose the emergence of patterns in complex systems and is expected to have applications in many real-world problems in disciplines such as climate science, fluid dynamics, neuroscience, and financial economics.

1. Introduction

When investigating the properties of a complex system, it is often necessary to study the interaction between one subsystem and another, each of which is itself a complex system, usually with a large number of components involved. In climate science, for example, there is much interest in understanding how one sector of the system collaborates with another sector to cause climate change (see [1] and the references therein); in neuroscience, it is important to investigate the effective connectivity from one brain region to another, each with millions of neurons involved (e.g., [2,3]), and the interaction between structures (e.g., [4,5,6]; see more references in a recent review [7]). This naturally raises a question: how can we study the interaction between two subsystems in a large parental system?

An immediate answer might be to study the componentwise interactions by assessing the causalities between the respective components using, for instance, the classical causal inference approaches (e.g., [8,9,10]). This is generally infeasible if the dimensionality is large. Two subsystems with, say, 1000 components each give rise to 1 million causal relations between them, far too many to analyze one by one. In this case, the abundance of detail is not a benefit; the results must themselves be re-analyzed to obtain a big, interpretable picture of the phenomena. Moreover, in many situations such detail is not necessary; one needs only a “bulk” description of the subsystems and their interactions. Examples include the Reynolds equations for turbulence (e.g., [11]) and the thermodynamic description of molecular motions (e.g., [12]). In some fields (e.g., climate science, neuroscience, and geography), a common practice is simply to take the respective averages and study the interactions between the resulting proxies, i.e., the mean properties. A more sophisticated approach is to extract the respective principal components (PCs) (e.g., [13,14,15]) and analyze the interactions between them. As this study shows, however, these approaches may not work satisfactorily; their validity needs to be carefully checked before they are put into application.

During the past 16 years, it has gradually been realized that causality in terms of information flow (IF) is a real physical notion that can be rigorously derived from first principles (see [16]). When two processes interact, IF provides not only the direction but also the strength of the interaction. Thus far, the formalism of the IF between two components has been well established (see [16,17,18,19,20], among others). Extending the formalism to subspaces with many components appears promising. A pioneering effort is [21], where the authors show that the heuristic argument in [17] applies equally to the flow between subsystems in the case with only one-way causality. A recent study on the role of individual nodes in a complex network [22] may be viewed as another effort. (Causality analyses between subspaces with the classical approaches are rare; a few examples are [23,24].) However, a rigorous formalism for more generic problems (e.g., with mutual causality involved) has yet to be established. This is the objective of this study: to study the interactions between two complex subsystems within a large parental system by investigating the “bulk” information flow between them.

2. Information Flow between Two Subspaces of a Complex System

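Consider an n-dimensional dynamical system

$$\frac{d\mathbf{x}_{1\ldots r}}{dt} = \mathbf{F}_{1\ldots r}(\mathbf{x}, t) + \mathbf{B}_{1\ldots r}(\mathbf{x}, t)\,\dot{\mathbf{w}}, \qquad (1)$$

$$\frac{d\mathbf{x}_{r+1\ldots s}}{dt} = \mathbf{F}_{r+1\ldots s}(\mathbf{x}, t) + \mathbf{B}_{r+1\ldots s}(\mathbf{x}, t)\,\dot{\mathbf{w}}, \qquad (2)$$

$$\frac{d\mathbf{x}_{s+1\ldots n}}{dt} = \mathbf{F}_{s+1\ldots n}(\mathbf{x}, t) + \mathbf{B}_{s+1\ldots n}(\mathbf{x}, t)\,\dot{\mathbf{w}}, \qquad (3)$$

where $\mathbf{x} = (x_1, x_2, \ldots, x_n)$ denotes the vector of state variables, $\mathbf{F} = (F_1, F_2, \ldots, F_n)$ are differentiable functions of $\mathbf{x}$ and time t, $\mathbf{w}$ is a vector of m independent standard Wiener processes, and $\mathbf{B} = (b_{ij})$ is an $n \times m$ matrix of stochastic perturbation amplitudes. Here we follow the convention in physics not to distinguish a random variable from its deterministic counterpart. From the components $(x_1, \ldots, x_n)$, we separate out two sets, $(x_1, \ldots, x_r)$ and $(x_{r+1}, \ldots, x_s)$, and denote them as $\mathbf{x}_{1\ldots r}$ and $\mathbf{x}_{r+1\ldots s}$, respectively. The remaining components $(x_{s+1}, \ldots, x_n)$ are denoted as $\mathbf{x}_{s+1\ldots n}$; the same subscript convention applies to $\mathbf{F}$ and to the rows of $\mathbf{B}$. The subsystems formed by $\mathbf{x}_{1\ldots r}$ and $\mathbf{x}_{r+1\ldots s}$ are henceforth referred to as A and B, and the following is a derivation of the information flow between them. Note that, for convenience, A and B are here placed adjacent to each other; if they are not, the equations can always be rearranged to make them so.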
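To make the setting concrete, the following is a minimal sketch of how a sample path of such a stochastic system could be generated numerically with the Euler-Maruyama scheme. The function name `euler_maruyama`, the drift matrix `A`, and the noise amplitude are illustrative assumptions and are not part of the original formalism.

```python
import numpy as np

def euler_maruyama(F, B, x0, dt, n_steps, rng):
    """Integrate dx = F(x, t) dt + B(x, t) dw with the Euler-Maruyama scheme."""
    x = np.empty((n_steps + 1, len(x0)))
    x[0] = x0
    for k in range(n_steps):
        t = k * dt
        Bk = B(x[k], t)                                       # n x m amplitude matrix
        dw = rng.normal(0.0, np.sqrt(dt), size=Bk.shape[1])   # Wiener increments
        x[k + 1] = x[k] + F(x[k], t) * dt + Bk @ dw
    return x

# Hypothetical 3-component linear example: component 1 drives component 2.
A = np.array([[-1.0, 0.0, 0.0],
              [0.5, -1.0, 0.0],
              [0.0, 0.0, -1.0]])
F = lambda x, t: A @ x
B = lambda x, t: 0.1 * np.eye(3)      # additive noise, m = n = 3
path = euler_maruyama(F, B, np.ones(3), dt=0.01, n_steps=1000,
                      rng=np.random.default_rng(0))
```

The resulting array `path` holds one state vector per row; its columns can be grouped into subsystems in the same way that the components are grouped into A and B in the text.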

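Associated with Equations (1)–(3) there is a Fokker–Planck equation governing the evolution of the joint probability density function (pdf) $\rho$ of $\mathbf{x}$:

$$\frac{\partial \rho}{\partial t} + \sum_{i=1}^{n} \frac{\partial (F_i \rho)}{\partial x_i} = \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \frac{\partial^2 (g_{ij} \rho)}{\partial x_i \partial x_j}, \qquad (4)$$

where $g_{ij} = \sum_{k=1}^{m} b_{ik} b_{jk}$, $i, j = 1, \ldots, n$. Without much loss of generality, $\rho$ is assumed to be compactly supported on $\mathbb{R}^n$. The joint pdfs of $\mathbf{x}_{1\ldots r}$ and $\mathbf{x}_{r+1\ldots s}$ are, respectively,

$$\rho_{1\ldots r} = \int_{\mathbb{R}^{n-r}} \rho \, d\mathbf{x}_{r+1\ldots n}, \qquad \rho_{r+1\ldots s} = \int_{\mathbb{R}^{n-s+r}} \rho \, d\mathbf{x}_{1\ldots r} \, d\mathbf{x}_{s+1\ldots n}.$$

With respect to them, the joint entropies are then

$$H_A = -\int_{\mathbb{R}^{r}} \rho_{1\ldots r} \log \rho_{1\ldots r} \, d\mathbf{x}_{1\ldots r}, \qquad H_B = -\int_{\mathbb{R}^{s-r}} \rho_{r+1\ldots s} \log \rho_{r+1\ldots s} \, d\mathbf{x}_{r+1\ldots s}.$$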
To derive the evolution of , integrate out in Equation (4). This yields, by using the assumption of compactness for ,

Similarly,

Multiplication of Equation (7) by , followed by an integration with respect to over , yields

Note that in the second term of the left hand side, the part within the summation is, by integration by parts,

In the derivation, the compactness assumption has been used (variables vanish at the boundaries). By the same approach, the right hand side becomes

Hence,

Likewise, we have

Now consider the impact of the subsystem A on its peer B, written . Following Liang (2016) [16], this is associated with the evolution of the joint entropy of the latter:

where signifies the entropy evolution with the influence of A excluded, which is found by instantaneously freezing as parameters. To do this, examine, on an infinitesimal interval , a system modified from the original Equations (1)–(3) by removing the r equations for , , ..., from the equation set

Notice that the s and s still have dependence on , which, however, appear in the modified system as parameters. By [16], we can construct a mapping , , where means but with appearing as parameters, and study the Frobenius–Perron operator (see, for example, [25]) of the modified system. An alternative approach is given by Liang in [18], which we henceforth follow. Observe that on the interval , corresponding to the modified dynamical system, there is also a Fokker–Planck equation

Here , means the joint pdf of with frozen as parameters. Note the difference between and ; the former has as parameters, while the latter has no dependence on . However, they are equal at time t.

Integration of the above Fokker–Planck equation with respect to gives the evolution of the pdf of subsystem B with A frozen as parameters, written :

Divide Equation (16) by and simplify the notation by to obtain

Discretizing and noticing that , we have (in the following, unless otherwise indicated, the variables without arguments explicitly specified are assumed to be at time step t)

To arrive at , we need to find . Using the Euler–Bernstein approximation,

where, just as the notation ,

and , we have

Take mathematical expectation on both sides. The left hand side is . By Corollary III.I of [16], and noting , and the fact that are independent of , we have

Thus,

Hence, the information flow from to is

Likewise, we can obtain the information flow from subsystem B to subsystem A. These are summarized in the following theorem.

Theorem 1.

For the dynamical system Equations (1)–(3), if the probability density function (pdf) of is compactly supported, then the information flow from to and that from to are (in nats per unit time), respectively,

where , and E signifies mathematical expectation.

When , , (20) reduces to

which is precisely the same as the Equation (15) in [18]; the same holds for Equation (19). These equations are hence verified.

The following theorem forms the basis for causal inference.

Theorem 2.

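If the evolution of subsystem A (resp. B) does not depend on $\mathbf{x}_{r+1\ldots s}$ (resp. $\mathbf{x}_{1\ldots r}$), then the information flow from B to A (resp. from A to B) vanishes, i.e., $T_{B\to A} = 0$ (resp. $T_{A\to B} = 0$).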

Proof.

We only check the formula for . In (20), the deterministic part

Now, is independent of , and note that is also so. Thus, we may integrate within the parentheses directly with respect to , yielding

By the compactness of , the whole deterministic part hence vanishes. Likewise, it can be proved that the stochastic part also vanishes.

This theorem allows us to identify the causality with information flow. Ideally, if the information flow from B to A vanishes, $T_{B\to A} = 0$, then B is not causal to A, and vice versa; the same holds for the flow from A to B, $T_{A\to B}$. □

3. Information Flow between Linear Subsystems and Its Estimation

Linear systems provide the simplest framework which is usually taken as the first step toward a more generic setting. Simple as it may be, it has been demonstrated in practice that linear results often provide a good approximation of an otherwise much more complicated problem. It is hence of interest to examine this special case.

Let

where and are constants. Additionally, suppose that are constants—that is to say, the noises are additive. Then, are also constants. Thus, in Equation (20),

The same holds in Equation (19). Thus, the stochastic parts in both Equations (19) and (20) vanish.

Since a linear system initialized with a Gaussian process will always be Gaussian, we may write the joint pdf of as

where is the population covariance matrix of . By the property of the Gaussian process, it is easy to show

where , is the vector of the means of , and the covariance matrix of . For easy correspondence, we will augment , , and , so that their entries have the same indices as their counterparts in , and . Separate into two parts:

where and correspond to the respective parts in the two square brackets. Thus, has nothing to do with the subspace B. By Theorem 2, this part does not contribute to the causality from A to B, so we only need to consider in evaluating ; that is to say,

The second term in the bracket is . The first term is

Here, is the entry of the matrix

Since, here, only are in question, this is equal to the entry of the matrix

As is symmetric, so is , and hence . Thus,

Substituting back, we obtain a very simplified result for . Likewise, can also be obtained, as shown in the following.

Theorem 3.

where and are also constants. Furthermore, suppose that initially has a Gaussian distribution; then,

where is the entry of , and

where is the entry of .

Given a system such as (1)–(3), we can evaluate in a precise sense the information flows among the components. Now, suppose that instead of the dynamical system, what we have are just n time series with K steps, , . We can estimate the system from the series and then apply the information flow formula to fulfill the task. Assume a linear model as shown above, and assume . Following Liang (2014) [19], the maximum likelihood estimator (mle) of is equal to the least-squares solution of the following over-determined problem:

where ( is the time stepsize), for , . Use an overbar to denote the time mean over the K steps. The above equation is

Denote by the matrix

the vector , and the row vector . Then, . The least square solution of , , solves

Note that is , where is the sample covariance matrix. Thus,

where is the sample covariance between the series and .

Thus, finally, the mle of is

where is the entry of , and

Likewise,

Here,

and is the entry of .
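The least-squares step described above can be sketched as follows: the coefficients of the linear model are obtained by solving a linear system built from the sample covariance matrix and the sample covariances between the series and their Euler forward differences. This is only an illustration under the stated linear assumption; the function name `estimate_linear_coefficients` and the test system `A_true` are hypothetical, and the sketch does not reproduce the bulk estimators of Equations (28) and (30).

```python
import numpy as np

def estimate_linear_coefficients(X, dt=1.0):
    """Least-squares estimate of A in dx/dt = f + A x from a (K, n) array X.

    Row i of the result holds the coefficients of the equation for x_i.
    """
    dX = (X[1:] - X[:-1]) / dt           # Euler forward differences
    X0 = X[:-1]
    Xc = X0 - X0.mean(axis=0)            # remove time means
    dXc = dX - dX.mean(axis=0)
    C = Xc.T @ Xc / len(Xc)              # sample covariance matrix
    Cd = Xc.T @ dXc / len(Xc)            # Cd[j, i] = cov(x_j, dx_i/dt)
    return np.linalg.solve(C, Cd).T

# Hypothetical two-component test system with known coefficients.
rng = np.random.default_rng(1)
A_true = np.array([[-0.5, 0.0],
                   [0.3, -0.6]])
X = np.zeros((20_000, 2))
for k in range(len(X) - 1):
    X[k + 1] = X[k] + A_true @ X[k] + rng.normal(size=2)
A_hat = estimate_linear_coefficients(X)
```

For a long enough series, `A_hat` should be close to `A_true`; the normalization of the covariances cancels in the solve, so only their ratios matter.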

4. Validation

4.1. One-Way Causal Relation

To see if the above formalism works, consider the vector autoregressive (VAR) process:

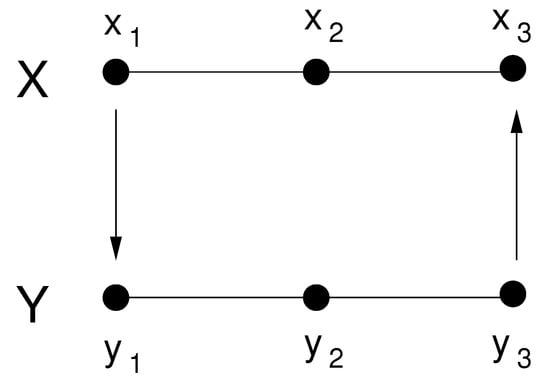

where , , are independent. As schematized in Figure 1, and form two subsystems, written as X and Y, respectively. They are coupled only through the first and third components; more specifically, drives , and Y feeds back to X through coupling with . The strength of the coupling is determined by the parameters and . In this subsection, , so the causality is one-way, i.e., from X to Y without feedback.

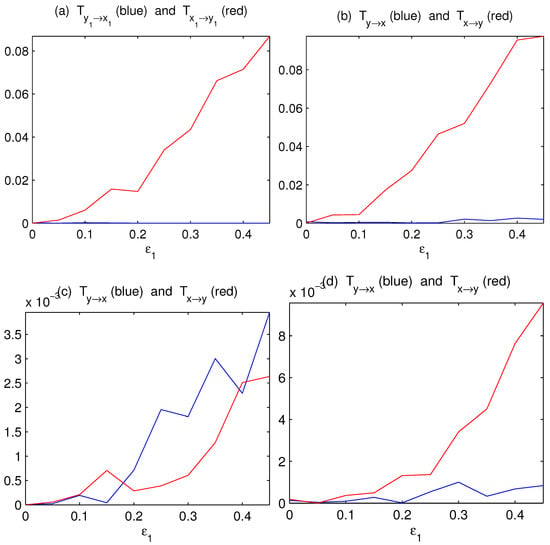

Initialized with random numbers, the process is iterated for 20,000 steps, and the first 10,000 steps are discarded, leaving six time series of 10,000 steps each. Using the algorithm by Liang (e.g., [16,18,19,20]), the information flows between and can be obtained rather accurately. As shown in Figure 2a, the information flow/causality from X to Y increases with , and there is no causality the other way around, just as expected. Since no other coupling exists, the bulk information flows should follow a similar trend. The estimators computed with Equations (28) and (30) indeed do so, as shown in Figure 2b. This demonstrates the success of the above formalism.
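As an illustration of the componentwise step, the sketch below applies the bivariate estimator of Liang (2014) [19] to a hypothetical two-variable process with one-way coupling. The process, its coefficients, and the function name `liang_flow` are assumptions made for this example; they are not the six-dimensional VAR process of this section, and the sketch does not implement the bulk estimators.

```python
import numpy as np

def liang_flow(x_to, x_from, dt=1.0):
    """Bivariate estimate of the information flow from x_from to x_to
    (nats per unit time), assuming a linear model with additive noise."""
    d_to = (x_to[1:] - x_to[:-1]) / dt       # Euler forward difference of the target
    a, b = x_to[:-1], x_from[:-1]
    C = np.cov(a, b)                         # 2 x 2 sample covariance matrix
    C11, C12, C22 = C[0, 0], C[0, 1], C[1, 1]
    C1d = np.cov(a, d_to)[0, 1]              # cov(target, d target / dt)
    C2d = np.cov(b, d_to)[0, 1]              # cov(source, d target / dt)
    return (C11 * C12 * C2d - C12**2 * C1d) / (C11**2 * C22 - C11 * C12**2)

# Hypothetical one-way process: x drives y with strength eps, no feedback.
rng = np.random.default_rng(0)
eps, n_total, n_discard = 0.5, 20_000, 10_000
x = np.zeros(n_total)
y = np.zeros(n_total)
for k in range(n_total - 1):
    x[k + 1] = 0.5 * x[k] + rng.normal()
    y[k + 1] = 0.4 * y[k] + eps * x[k] + rng.normal()
x, y = x[n_discard:], y[n_discard:]          # discard the spin-up steps

t_x_to_y = liang_flow(y, x)   # expected to be clearly nonzero
t_y_to_x = liang_flow(x, y)   # expected to be close to zero
print(abs(t_x_to_y), abs(t_y_to_x))
```

In this toy setting the absolute flow from x to y should stand well above the flow in the reverse direction, mirroring the one-way pattern described for Figure 2a.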

Figure 2.

The absolute information flows between subspaces X and Y as functions of the coupling coefficients (). (a) The componentwise information flows between and ; (b) the bulk information flows between subsystems X and Y computed with Equations (28) and (30); (c) the information flows between and ; (d) the information flows between the first principal components of () and (), respectively (units: nats per time step).

Since averages and principal components (PCs) are widely used in practice to measure the variations of complex subsystems, we also compute the information flows between and , and those between the first PCs of and . The results are plotted in Figure 2c,d, respectively. As can be seen, the principal component analysis (PCA) method works just fine in this case. By comparison, the averaging method yields an incorrect result.
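For reference, the two proxies can be formed as in the following sketch, which takes a subsystem stored as a (K, d) array and returns its mean series and its first principal component series. The function names and the synthetic data are assumptions for illustration; the PCA is done here with a plain singular value decomposition, which may differ in detail from the procedure actually used for the figures.

```python
import numpy as np

def mean_proxy(X):
    """Average the d component series of a subsystem at each time step."""
    return X.mean(axis=1)

def first_pc(X):
    """Project the centered data onto the leading principal direction."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[0]                     # the sign of a PC is arbitrary

# Hypothetical subsystem with three components of unequal variance.
rng = np.random.default_rng(2)
X = rng.normal(size=(1000, 3)) * np.array([3.0, 1.0, 0.5])
m = mean_proxy(X)
pc = first_pc(X)
```

Either proxy reduces the subsystem to a single series, which can then be fed to a bivariate causality estimator; the text evaluates how well such a reduction preserves the true causal pattern.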

The incorrect inference based on averaging is to be expected. In a network with complex causal relations, for example, with a causality from to , the averaging of with is equivalent to mixing with its future state, which is related to the contemporary state of , and hence will yield a spurious causality to . The PCA here functions satisfactorily, perhaps because, in selecting the most coherent structure, it discards most of the influences from other (implicit) time steps. However, the relative success of PCA may not be robust, as evidenced in the following mutually causal case.

4.2. Mutually Causal Relation

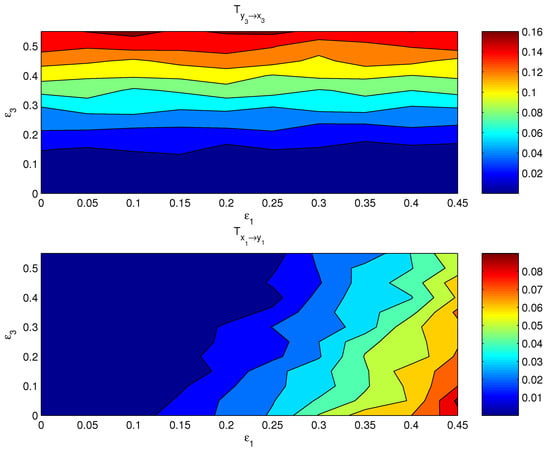

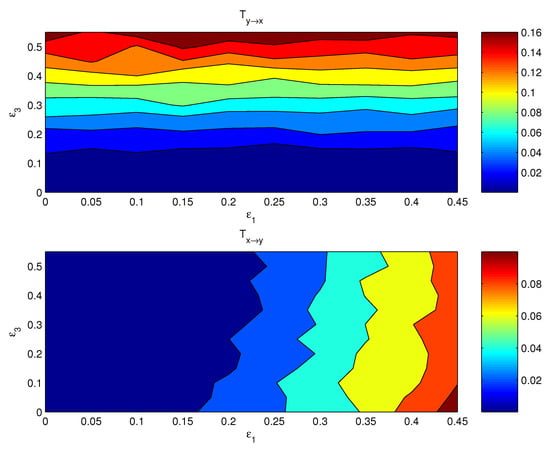

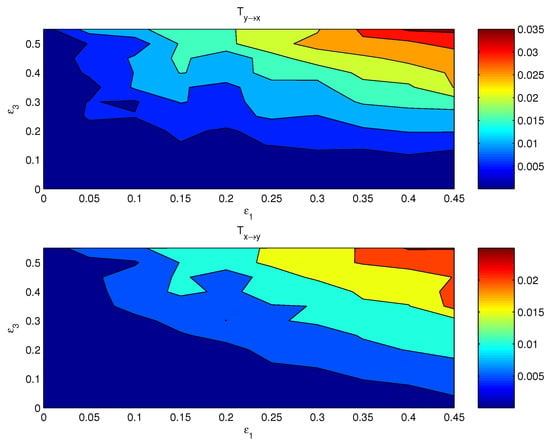

If both coupling parameters, and , are turned on, the resulting causal relation has a distribution on the plane. Figure 3 shows the componentwise information flows (bottom) and (top) on the plane. The other two flows, i.e., their counterparts and , are by computation essentially zero. As argued in the preceding subsection, the bulk information flows should follow the same general pattern, albeit perhaps in a coarser and/or milder form, since they are properties of the subsystems as a whole. This is indeed the case. Shown in Figure 4 are the bulk information flows between X and Y computed using Equations (28) and (30).

Figure 3.

The absolute information flow from to and that from to as functions of and . The units are in nats per time step.

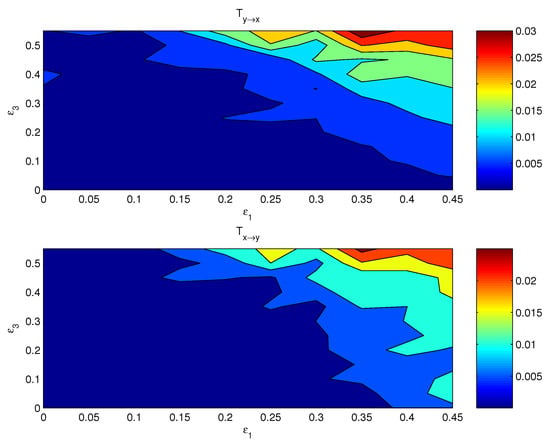

Figure 4.

The absolute bulk information flow from subsystem Y to subsystem X, and that from X to Y. The abscissa and ordinate are the coupling coefficients and , respectively.

Again, as usual, we try the averages and first PCs as proxies for estimating the causal interaction between X and Y. Figure 5 shows the distributions of the information flows between and . The resulting patterns are totally different from what Figure 3 displays; obviously, these patterns are incorrect.

Figure 5.

As Figure 4, but for the information flows between the mean series and .

One may expect that the PCA method should yield more reasonable causal patterns. We have computed the first PCs for and , respectively, and estimated the information flows using the algorithm by Liang [20]. The resulting distributions, however, are no better than those with the averaged series (Figure 6). That is to say, this seemingly more sophisticated approach does not yield the right interaction between the complex subsystems, either.

Figure 6.

As Figure 4, but for the information flows between the first principal component of and that of .

5. Summary

Information flow provides a natural measure of the causal interaction between dynamical events. In this study, the information flows between two complex subsystems of a large dimensional system are studied, and analytical formulas have been obtained in a closed form. For easy reference, the major results are summarized hereafter.

For an n-dimensional

if the probability density function (pdf) of is compactly supported, then the information flows from subsystem A, which are made of , to subsystem B, made of (), and that from B to A are, respectively (in nats per unit time),

where , and E signifies mathematical expectation. Given n stationary time series, and can be estimated. The maximum likelihood estimators under a Gaussian assumption are given in Equations (28) and (30).

We have constructed a VAR process to validate the formalism. The system has a dimension of 6, with two subsystems, denoted by X and Y, each with a dimension of 3. X drives Y via the coupling at one component, and Y feeds back to X via another. The detailed, componentwise causal relation can be easily found using our previous algorithms such as that in [20]. The bulk information flow is expected to follow a similar trend in general, though its structure may be coarser and milder, since it describes an overall property. The above formalism does yield such a result. By contrast, the commonly used proxies for subsystems, such as averages and principal components (PCs), generally do not work. In particular, the averaged series yield wrong results in both cases considered in this study; the PC series do not work for the mutually causal case either, though they give a satisfactory characterization for the case with one-way causality.

The result of this study is applicable to many real-world problems. As explained in the Introduction, it will be of particular use in the related fields of climate science, neuroscience, financial economics, fluid mechanics, etc. For example, it can help clarify the role of greenhouse gas emissions in bridging the climate system and the socioeconomic system (see a review in [26]). Likewise, the interaction between the earth system and public health [27] can also be studied. In short, it is expected to play a role in the frontier field of complexity, namely, multiplex networks or networks of networks (see the references in [28,29,30]). We are therefore working on these applications.

Funding

This research was funded by the Shanghai International Science and Technology Partnership Project (grant number: 21230780200), the National Science Foundation of China (grant number: 41975064), and the 2015 Jiangsu Program for Innovation Research and Entrepreneurship Groups.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Intergovernmental Panel on Climate Change (IPCC). The Sixth Assessment Report, Climate Change 2021: The Physical Science Basis. Available online: https://www.ipcc.ch/report/ar6/wg1/#FullReport (accessed on 15 November 2021).

- Friston, K.J.; Harrison, L.; Penny, W. Dynamic causal modeling. Neuroimage 2003, 19, 1273–1302.

- Li, B.; Daunizeau, J.; Stephan, K.E.; Penny, W.; Hu, D.; Friston, K. Generalised filtering and stochastic DCM for fMRI. Neuroimage 2011, 58, 442–457.

- Friston, K.J.; Ungerleider, L.G.; Jezzard, P.; Turner, R. Characterizing modulatory interactions between V1 and V2 in human cortex with fMRI. Hum. Brain Mapp. 1995, 2, 211–224.

- Friston, K.J.; Kahan, J.; Razi, A.; Stephan, K.E.; Sporns, O. On nodes and modes in resting state fMRI. NeuroImage 2014, 99, 533–547.

- Qiu, P.; Jiang, J.; Liu, Z.; Cai, Y.; Huang, T.; Wang, Y.; Liu, Q.; Nie, Y.; Liu, F.; Cheng, J.; et al. BMAL1 knockout macaque monkeys display reduced sleep and psychiatric disorders. Natl. Sci. Rev. 2019, 6, 87–100.

- Wang, X.-J.; Hu, H.; Huang, C.; Kennedy, H.; Li, C.T.; Logothetis, N.; Lu, Z.-L.; Luo, Q.; Poo, M.-M.; Tsao, D.; et al. Computational neuroscience: A frontier of the 21st century. Natl. Sci. Rev. 2020, 7, 1418–1422.

- Granger, C. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 1969, 37, 424.

- Pearl, J. Causality: Models, Reasoning, and Inference, 2nd ed.; Cambridge University Press: New York, NY, USA, 2009.

- Imbens, G.W.; Rubin, D.B. Causal Inference for Statistics, Social, and Biomedical Sciences; Cambridge University Press: Cambridge, UK, 2015.

- Batchelor, G.K. The Theory of Homogeneous Turbulence; Cambridge University Press: Cambridge, UK, 1953; 197p.

- Landau, L.D.; Lifshitz, E.M. Statistical Physics, 2nd Revised and Enlarged ed.; Pergamon Press: Oxford, UK, 1969.

- Preisendorfer, R. Principal Component Analysis in Meteorology and Oceanography; Elsevier: Amsterdam, The Netherlands, 1998; 418p.

- Friston, K.J.; Frith, C.D.; Liddle, P.F.; Frackowiak, R.S. Functional connectivity: The principal-component analysis of large (PET) data sets. J. Cereb. Blood Flow Metab. 1993, 13, 5–14.

- Friston, K.; Phillips, J.; Chawla, D.; Buchel, C. Nonlinear PCA: Characterizing interactions between modes of brain activity. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2000, 355, 135–146.

- Liang, X.S. Information flow and causality as rigorous notions ab initio. Phys. Rev. E 2016, 94, 052201.

- Liang, X.S.; Kleeman, R. Information transfer between dynamical system components. Phys. Rev. Lett. 2005, 95, 244101.

- Liang, X.S. Information flow within stochastic systems. Phys. Rev. E 2008, 78, 031113.

- Liang, X.S. Unraveling the cause-effect relation between time series. Phys. Rev. E 2014, 90, 052150.

- Liang, X.S. Normalized multivariate time series causality analysis and causal graph reconstruction. Entropy 2021, 23, 679.

- Majda, A.J.; Harlim, J. Information flow between subspaces of complex dynamical systems. Proc. Natl. Acad. Sci. USA 2007, 104, 9558–9563.

- Liang, X.S. Measuring the importance of individual units in producing the collective behavior of a complex network. Chaos 2021, 31, 093123.

- Al-Sadoon, M.M. Testing subspace Granger causality. Econom. Stat. 2019, 9, 42–61.

- Triacca, U. Granger causality between vectors of time series: A puzzling property. Stat. Probab. Lett. 2018, 142, 39–43.

- Lasota, A.; Mackey, M.C. Chaos, Fractals, and Noise: Stochastic Aspects of Dynamics; Springer: New York, NY, USA, 1994.

- Tachiiri, K.; Su, X.; Matsumoto, K.K. Identifying the key processes and sectors in the interaction between climate and socio-economic systems: A review toward integrating Earth-human systems. Progress. Earth Planet. Sci. 2021, 8, 24.

- Balbus, J.; Crimmins, A.; Gamble, J.L.; Easterling, D.R.; Kunkel, K.E.; Saha, S.; Sarofim, M.C. Introduction: Climate Change and Human Health. The Impacts of Climate Change on Human Health in the United States: A Scientific Assessment. U.S. Global Change Research Program: Washington, DC, USA, 2016.

- D’Agostino, G.; Scala, A. Networks of Networks: The Last Frontier of Complexity; Springer: New York, NY, USA, 2014.

- Kenett, D.Y.; Perc, M.; Boccaletti, S. Networks of networks – An introduction. Chaos Solitons Fractals 2015, 80, 1–6.

- DeFord, D.R.; Pauls, S.D. Spectral clustering methods for multiplex networks. Phys. A Stat. Mech. Its Appl. 2019, 533, 121949.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).