Abstract

Causality analysis is an important problem lying at the heart of science, and is of particular importance in data science and machine learning. An endeavor during the past 16 years viewing causality as a real physical notion so as to formulate it from first principles, however, seems to have gone unnoticed. This study introduces to the community this line of work, with a long-due generalization of the information flow-based bivariate time series causal inference to multivariate series, based on the recent advance in theoretical development. The resulting formula is transparent, and can be implemented as a computationally very efficient algorithm for application. It can be normalized and tested for statistical significance. Different from the previous work along this line where only information flows are estimated, here an algorithm is also implemented to quantify the influence of a unit to itself. While this forms a challenge in some causal inferences, here it comes naturally, and hence the identification of self-loops in a causal graph is fulfilled automatically as the causalities along edges are inferred. To demonstrate the power of the approach, presented here are two applications in extreme situations. The first is a network of multivariate processes buried in heavy noises (with the noise-to-signal ratio exceeding 100), and the second a network with nearly synchronized chaotic oscillators. In both graphs, confounding processes exist. While it seems to be a challenge to reconstruct from given series these causal graphs, an easy application of the algorithm immediately reveals the desideratum. Particularly, the confounding processes have been accurately differentiated. Considering the surge of interest in the community, this study is very timely.

1. Introduction

Recent years have seen a surge of interest in causality analysis. The main thrust is the recognition of its increasing importance in machine learning and artificial intelligence, a milestone being the connection of the principle of independent causal mechanisms to semi-supervised learning by Schölkopf et al. [1]. Different methods have been proposed for inferring causality from data, in addition to the classical ones such as Granger causality testing. While causal inference has traditionally been categorized as a subject in statistics, and now also in computer science, it merits mentioning that, during recent decades, contributions from different disciplines have augmented the subject and significant advances have been made. Early efforts since Clive Granger and Judea Pearl (cf. [2]) include, for example, Spirtes and Glymour (1991) [3], Schreiber (2000) [4], Paluš et al. (2001) [5], and Liang and Kleeman (2005) [6]. Recently, due to the rush in artificial intelligence, publications have been growing rapidly, among which are Zhang and Spirtes (2008) [7], Maathuis et al. (2009) [8], Pompe and Runge (2011) [9], Janzing et al. (2012) [10], Sugihara et al. (2012) [11], Schölkopf et al. (2012) [1], Sun and Bollt (2014) [12], Peters et al. (2017) [13], to name but a few; see [13] and [14] for more references.

Although causality has been investigated ever since Granger [15], thanks to the systematic works by Pearl (e.g., [2]) and others, its “mathematization is a relatively recent development,” said Peters, Janzing and Schölkopf (2017) [13]. On the other hand, Liang (2016) [16] argued that it is actually “a real physical notion that can be derived ab initio.” Despite the current rush, this latter line of work, which started some 16 years ago, seems to have gone almost unnoticed. It can be traced back to a discovery of two-dimensional (2D) information flow in 2005 by Liang and Kleeman [6]. With the later efforts of, e.g., Liang (2008) [17] and Liang (2014) [18], a very easy method for bivariate time series causality analysis has been established, validated, and applied successfully to real world problems in different disciplines. More details can be found below in Section 2. Recently, the whole formalism has been put on a rigorous footing [16]; explicit formulas for multidimensional information flow have been obtained in closed form for both deterministic and stochastic systems.

The multivariate time series causality analysis, however, has not been established since Liang (2016)’s comprehensive work [16]. Considering the enormous interest in this field, we intend to fill the gap. The purpose of this study is hence two-fold: (1) to implement Liang (2016)’s theory into the long-due multivariate time series causality analysis; (2) along with the implementation, to present a brief introduction of this line of work.

The remainder of the paper is organized as follows. In Section 2, we first establish the framework, and then take a stroll through the theory of information flow and the information flow-based bivariate time series causality analysis. Section 3 presents an estimate of the information flow rates among multivariate time series, and their significance tests. These information flows can be normalized to reveal the impact of the role in question (Section 4). In order to test the power of the method, in Section 5, it is applied to infer the causal graphs with two extreme processes, one being a network with heavy noise (noise-to-signal ratio exceeding 100), the other being a network of almost synchronized chaotic oscillators. Section 6 closes the paper with a brief summary of the study.

2. An Overview of the Theory of Information Flow-Based Causality Analysis

2.1. Directed Graph, Uncertainty Propagation, and Causality

In this framework, causal inference is based on information flow (rather than the other way around), which has been recognized as a real physical notion that can be put on a rigorous footing (see Liang, 2016 [16]). Consider a graph $G = (V, E)$, where $V$ and $E$ are the sets of vertexes and edges, and the structural causal model on the graph, $\mathfrak{C} = (\mathbf{S}, P_N)$, where $\mathbf{S}$ is a collection of $d$ structural assignments $X_j := f_j(\mathbf{PA}_j, N_j)$, $j = 1, \ldots, d$, with $\mathbf{PA}_j$ indicating the parents or direct causes of $X_j$, and $P_N$ being a joint distribution over the noise variables [2]. The basic idea is that this can be recast within the framework of dynamical systems, and that the causal inference problem can be carried over to that between the coordinates in a dynamical system. This is how Liang and Kleeman (2005) [6] originally conceptualized the problem. Recently, it has also been realized by Røysland (2012) [19], Mooij et al. (2013) [20] and Mogensen et al. (2018) [21].

In physics there is a notion called information flow, which can be readily cast within the dynamical system framework. As Shannon entropy (simply “entropy” hereafter) is by interpretation “self information”, it is natural to measure it with the propagation of entropy or uncertainty, from one component to another. (Other entropies may provide alternative choices. Particularly, a generalized permutation entropy is referred to [22].) In this light, we have the following definition:

Definition 1.

In a dynamical system $(\Omega, \Phi_t)$ on the $d$-dimensional phase space $\Omega$, where $\Phi_t$ may be a continuous-time flow ($t \in \mathbb{R}$) or discrete-time mapping ($t \in \mathbb{Z}$), the information flow from a component/coordinate $X_j$ to another component/coordinate $X_i$, written $T_{j\to i}$, is defined as the contribution of entropy (uncertainty) from $X_j$ per unit time ($t \in \mathbb{R}$) or per step ($t \in \mathbb{Z}$) in increasing the marginal entropy of $X_i$.

With information flow, causality can be defined, and, moreover, quantitatively defined:

Definition 2.

$X_j$ is causal to $X_i$ iff $T_{j\to i} \neq 0$. The magnitude of the causality from $X_j$ to $X_i$ is measured by $|T_{j\to i}|$.

By evaluating the information flow within a dynamical system, the underlying causal graph is henceforth determined.

For this study, we consider only the continuous flow case. The vector field that forms the structural assignments is hence differentiable. Further, we assume a Wiener process for the noise (white noise). Note that some of these assumptions can be easily relaxed, and the generalization is straightforward. However, that is outside the scope of this study.

2.2. A Brief Stroll through the Theory and Recent Advances

This line of work began with Liang and Kleeman (2005) [6] within the framework of 2D deterministic systems. Originally, it was based on a heuristic argument, but it was later made rigorous. Its generalization to multidimensional and stochastic systems, however, was not achieved until the recent theoretical work by Liang (2016) [16]. The following is just a brief review.

We begin by stating an observational fact about causality:

If the evolution of an event, say, $X_1$, is independent of another one, $X_2$, then the information flow from $X_2$ to $X_1$ is zero.

Since it is the only quantitatively stated fact about causality, all previous empirical/half-empirical causality formalisms have attempted to verify it in applications. For this reason, it has been referred to as the principle of nil causality (e.g., [16]). We will soon see below that, within the information flow framework, this principle turns out to be a proven theorem.

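Consider a $d$-dimensional continuous-time stochastic system for $\mathbf{X} = (X_1, \ldots, X_d)$

$$d\mathbf{X} = \mathbf{F}(\mathbf{X}, t)\,dt + \mathbf{B}(\mathbf{X}, t)\,d\mathbf{W}, \qquad (1)$$

where $\mathbf{F} = (F_1, \ldots, F_d)$ may be arbitrary nonlinear functions of $\mathbf{X}$ and $t$, $\mathbf{W}$ is a vector of standard Wiener processes, and $\mathbf{B} = (b_{ij})$ is the matrix of perturbation amplitudes, which may also be any functions of $\mathbf{X}$ and $t$. Assume that $\mathbf{F}$ and $\mathbf{B}$ are both differentiable with respect to $\mathbf{X}$ and $t$. We then have the following theorem [16]: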

Theorem 1.

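For the system (1), the rate of information flowing from $X_j$ to $X_i$ (in nats per unit time) is

$$
\begin{aligned}
T_{j\to i} &= -E\left[\frac{1}{\rho_i}\int_{\mathbb{R}^{d-2}}\frac{\partial (F_i\rho_{\bar j})}{\partial x_i}\,d\mathbf{x}_{\bar i\bar j}\right] + \frac{1}{2}E\left[\frac{1}{\rho_i}\int_{\mathbb{R}^{d-2}}\frac{\partial^2 (g_{ii}\rho_{\bar j})}{\partial x_i^2}\,d\mathbf{x}_{\bar i\bar j}\right] \\
&= -\int_{\mathbb{R}^{d}}\rho_{j|i}(x_j|x_i)\,\frac{\partial (F_i\rho_{\bar j})}{\partial x_i}\,d\mathbf{x} + \frac{1}{2}\int_{\mathbb{R}^{d}}\rho_{j|i}(x_j|x_i)\,\frac{\partial^2 (g_{ii}\rho_{\bar j})}{\partial x_i^2}\,d\mathbf{x}, \qquad (2)
\end{aligned}
$$

where $d\mathbf{x}_{\bar i\bar j}$ signifies the product of all $dx_k$ with $k \neq i, j$, $E$ stands for mathematical expectation, $g_{ii} = \sum_{k=1}^{d} b_{ik}b_{ik}$, $\rho_i = \rho_i(x_i)$ is the marginal probability density function (pdf) of $X_i$, $\rho_{j|i}$ is the pdf of $X_j$ conditioned on $X_i$, and $\rho_{\bar j} = \int_{\mathbb{R}}\rho(\mathbf{x})\,dx_j$.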

For discrete-time mappings, the information flow is in a more complicated form; see [16].

Corollary 1.

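When $d = 2$,

$$T_{2\to 1} = -E\left[\frac{1}{\rho_1}\frac{\partial (F_1\rho_1)}{\partial x_1}\right] + \frac{1}{2}E\left[\frac{1}{\rho_1}\frac{\partial^2 (g_{11}\rho_1)}{\partial x_1^2}\right]. \qquad (3)$$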

This is the early result of Liang (2008) [17] on which the bivariate causality analysis is based; see Theorem 5 below.

There is a nice property for the above information flow:

Theorem 2.

If in (1) neither $F_1$ nor $g_{11}$ depends on $x_2$, then $T_{2\to 1} = 0$.

Note this is precisely the principle of nil causality. Remarkably, here it appears as a proven theorem, while the classical ansatz-like formalisms attempt to verify it in applications.

Moreover, it has been established that [23]:

Theorem 3.

$T_{2\to 1}$ is invariant under arbitrary nonlinear transformation of $(x_3, x_4, \ldots, x_d)$.

This is a very important result, as we will see soon in the causal graph reconstruction. On the other hand, this tells that the obtained information flow should be an intrinsic property in physical world.

For linear systems, the information flow can be greatly simplified.

Theorem 4.

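In (1), if $\mathbf{F}(\mathbf{X}) = \mathbf{f} + \mathbf{A}\mathbf{X}$, and $\mathbf{B}$ is a constant matrix, then

$$T_{j\to i} = a_{ij}\,\frac{\sigma_{ij}}{\sigma_{ii}}, \qquad (4)$$

where $a_{ij}$ is the $(i, j)$th entry of $\mathbf{A}$, and $\sigma_{ij}$ the population covariance between $X_i$ and $X_j$.

Observe that, if $\sigma_{ij} = 0$, then $T_{j\to i} = 0$; but if $T_{j\to i} = 0$, $\sigma_{ij}$ does not necessarily vanish. Contrapositively, this means that correlation does not mean causation. We hence have the following corollary: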

Corollary 2.

In the linear sense, causation implies correlation, but correlation does not imply causation.

This explicit mathematical expression hence provides a solution to the long-standing debate ever since George Berkeley (1710) [24] over correlation versus causation. Note, however, that this is for linear systems only. For nonlinear systems, whether such a relation exists and, if so, what it looks like are yet to be explored. Nonetheless, as proved in [25], this relation indeed holds for some counter-examples in terms of normalized information flow (see Section 4 below).
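To make Corollary 2 concrete, the following is a minimal sketch, assuming the linear information flow of Theorem 4 has the form $T_{j\to i} = a_{ij}\sigma_{ij}/\sigma_{ii}$ and that the stationary covariance of $d\mathbf{X} = \mathbf{A}\mathbf{X}\,dt + \mathbf{B}\,d\mathbf{W}$ satisfies the Lyapunov equation $\mathbf{A}\boldsymbol{\Sigma} + \boldsymbol{\Sigma}\mathbf{A}^T + \mathbf{B}\mathbf{B}^T = 0$. The function names and the 2D example matrix are hypothetical, chosen only for illustration.

```python
import numpy as np

def stationary_covariance(A, B):
    """Solve A S + S A^T + B B^T = 0 for the stationary covariance S."""
    d = A.shape[0]
    I = np.eye(d)
    # With row-major vectorization: vec(A S) = (A kron I) vec(S),
    # and vec(S A^T) = (I kron A) vec(S).
    K = np.kron(A, I) + np.kron(I, A)
    s = np.linalg.solve(K, -(B @ B.T).reshape(-1))
    return s.reshape(d, d)

def linear_information_flow(A, S):
    """T[i, j] = a_ij * sigma_ij / sigma_ii, read as the flow from X_j to X_i."""
    T = A * S / np.diag(S)[:, None]
    np.fill_diagonal(T, 0.0)  # the diagonal is not an information flow
    return T

# Hypothetical example: X_2 drives X_1 (a_12 = 0.5), but not vice versa.
A = np.array([[-1.0, 0.5],
              [0.0, -1.0]])
B = np.eye(2)
S = stationary_covariance(A, B)
T = linear_information_flow(A, S)
print("sigma_12 =", S[0, 1])
print("T_{2->1} =", T[0, 1], " T_{1->2} =", T[1, 0])
```

In this example the two components are correlated ($\sigma_{12} \neq 0$), yet the flow from $X_1$ to $X_2$ vanishes because $a_{21} = 0$, while the flow from $X_2$ to $X_1$ does not.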

In the case with only two time series (no dynamical system is given), we have the following result [18]:

Theorem 5.

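Given two time series $X_1$ and $X_2$, under the assumption of a linear model with additive noise, the maximum-likelihood estimator (mle) of (3) is

$$\hat{T}_{2\to 1} = \frac{C_{11}C_{12}C_{2,d1} - C_{12}^2C_{1,d1}}{C_{11}^2C_{22} - C_{11}C_{12}^2}, \qquad (5)$$

where $C_{ij}$ is the sample covariance between $X_i$ and $X_j$, and $C_{i,dj}$ is the sample covariance between $X_i$ and a series derived from $X_j$ using the Euler forward differencing scheme: $\dot{X}_{j,n} = (X_{j,n+k} - X_{j,n})/(k\Delta t)$, with $k \ge 1$ some integer.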

Equation (5) is rather concise in form; it only involves the common statistics, i.e., sample covariances. In other words, a combination of some sample covariances will give a quantitative measure of the causality between the time series. This makes causality analysis, which otherwise would be complicated with the classical empirical/half-empirical methods, very easy. Nonetheless, note that Equation (5) cannot replace (3); it is just the mle of the latter. A statistical significance test must be performed before a causal inference is made based on the computed . For details, refer to [18].
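The bivariate estimator can be sketched in a few lines. The following is an illustration only, assuming Equation (5) has the covariance form $\hat{T}_{2\to 1} = (C_{11}C_{12}C_{2,d1} - C_{12}^2C_{1,d1})/(C_{11}^2C_{22} - C_{11}C_{12}^2)$ reported in [18]; the function name and its arguments `dt` and `k` are hypothetical.

```python
import numpy as np

def bivariate_information_flow(x1, x2, dt=1.0, k=1):
    """Estimate the information flow rate from x2 to x1 (nats per unit time)."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    dx1 = (x1[k:] - x1[:-k]) / (k * dt)  # Euler forward difference of x1
    x1, x2 = x1[:-k], x2[:-k]            # align the series with dx1
    C = np.cov(np.vstack([x1, x2, dx1]))
    C11, C12, C22 = C[0, 0], C[0, 1], C[1, 1]
    C1d1, C2d1 = C[0, 2], C[1, 2]
    return (C11 * C12 * C2d1 - C12**2 * C1d1) / (C11**2 * C22 - C11 * C12**2)
```

Calling `bivariate_information_flow(x1, x2)` gives the estimated flow from the second series to the first; swapping the arguments gives the opposite direction. As stressed above, a significance test is still needed before any causal claim is made.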

The above formalism has been validated with many benchmark systems such as baker transformation, Hénon map, Kaplan–Yorke map, Rössler system (see [16]), to name a few. Particularly, the concise Equation (5) has been validated with problems where traditional approaches fail. An example is the mysterious anticipatory system problem discovered by Hahs and Pethel [26], which with (5) is successfully fixed in an easy way.

The formalism has been successfully applied to the studies of many real world problems, among them are the El Niño-Indian Ocean Dipole relation [18], global climate change [27], soil moisture–precipitation interaction [28], glaciology [29], and neuroscience problems [30], to name a few. Here, we particularly want to mention the study by Stips et al. [27], who, through examining with (5) the causality between the CO index and the surface air temperature, identified a reversing causal relation with time scale. They found, during the past century, indeed CO emission drives the recent global warming; the causal relation is one-way, i.e., from CO to global mean atmosphere temperature. Moreover, they were able to find how the causality is distributed over the globe, thanks to the quantitative nature of (5). However, on a time scale of 1000 years or over, the causality is completely reversed; that is to say, on a paleoclimate scale, it is global warming that drives the CO concentration to rise.

3. Information Flow among Time Series and Algorithm for Multivariate Causal Inference

We now estimate (2), given observations of the d components, in order to arrive at a practically easy-to-use formula for causal inference. As mentioned in Section 1, this has not been done yet; the available estimator (5) is for (3). Here, we only consider time series, but the approach can be easily extended to other forms of data. We further assume the series are stationary and equally spaced. Without loss of generality, it suffices to examine $T_{2\to 1}$.

As in the bivariate case considered in [18], we estimate the linear version (4). We hence examine a linear stochastic differential equation

where is a constant vector, and and are constant matrices. Initially, if obeys a Gaussian distribution, then it is a Gaussian for ever, i.e., , with and being the mean vector and covariance matrix, respectively. Hence, .

The above results need to be estimated if what we are given are just d time series. That is to say, what we know is a single realization of some unknown system, which, if known, can produce infinitely many realizations. We use maximum-likelihood estimation (e.g., [31]) to achieve the goal. The procedure follows that of [18], which for easy reference, we briefly summarize here. As established before, a further assumption that is diagonal, i.e., , for , and hence , will greatly simplify the problem, while in practice, this is quite reasonable.

Suppose that the series are equal-distanced with a time stepsize , and let N be the sample size. Consider an interval , and let the transition pdf be , where stands for the vector of parameters to be estimated. So, the log likelihood is

As N is usually large, the term can be dropped without causing much error. The transition pdf is, with the Euler–Bernstein approximation (see [18]),

where . This results in a log likelihood functional

where

and $\dot{X}_{1,n}$ is the Euler forward differencing approximation of $dX_1/dt$:

$$\dot{X}_{1,n} = \frac{X_{1,n+k} - X_{1,n}}{k\Delta t}, \qquad (7)$$

with $k \ge 1$. Usually, $k = 1$ should be used to ensure accuracy, but in some cases of deterministic chaos where the sampling is at the highest resolution, one needs to choose $k = 2$. Maximizing the log likelihood, it is easy to find that the maximizer satisfies the following algebraic equation:

where the overline signifies sample mean. After some algebraic manipulations as that in [18], this yields the maximum-likelihood estimators (mle):

where

are the sample covariances, the cofactors of the matrix , and

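By (4), this yields an estimator of the information flow from $X_2$ to $X_1$:

$$\hat{T}_{2\to 1} = \frac{1}{\det\mathbf{C}}\cdot\sum_{j=1}^{d}\Delta_{2j}C_{j,d1}\cdot\frac{C_{12}}{C_{11}}, \qquad (14)$$

where $\Delta_{2j}$ are the cofactors of the sample covariance matrix $\mathbf{C} = (C_{ij})$, and $C_{j,d1}$ is the sample covariance between $X_j$ and the derived series $\dot{X}_1$ as computed by (7). When $d = 2$, it is easy to show that this is reduced to (5), the 2D estimator as obtained in [18].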
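A minimal sketch of the multivariate estimator follows, assuming (14) reads $\hat{T}_{j\to i} = \frac{1}{\det\mathbf{C}}\sum_k \Delta_{jk}C_{k,di}\cdot\frac{C_{ij}}{C_{ii}}$. Rather than forming cofactors explicitly, the sketch solves the linear system $\mathbf{C}\mathbf{a} = \mathbf{c}_{di}$, which is algebraically equivalent by Cramer's rule because $\mathbf{C}$ is symmetric. The function name, the array layout, and the returned diagonal term are assumptions made for illustration.

```python
import numpy as np

def multivariate_information_flow(X, dt=1.0, k=1):
    """Return (T, self_term); T[i, j] estimates the flow from series j to series i.

    X has shape (N, d): N equally spaced samples of d series.
    """
    X = np.asarray(X, dtype=float)
    dX = (X[k:] - X[:-k]) / (k * dt)  # Euler forward differences, one per series
    X0 = X[:-k]
    d = X0.shape[1]
    full = np.cov(np.hstack([X0, dX]), rowvar=False)
    C = full[:d, :d]   # C[i, j]: sample covariance of X_i and X_j
    Cd = full[:d, d:]  # Cd[j, i]: sample covariance of X_j and dX_i
    # Column i of the solution holds the coefficients (a_i1, ..., a_id);
    # transposing gives A_hat[i, j], the estimate of a_ij.
    A_hat = np.linalg.solve(C, Cd).T
    T = A_hat * C / np.diag(C)[:, None]
    self_term = np.diag(A_hat).copy()  # estimated a_ii
    np.fill_diagonal(T, 0.0)
    return T, self_term
```

All $d(d-1)$ flows come out of a single linear solve on a $d \times d$ covariance matrix, which is consistent with the computational efficiency claimed for the method.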

Information flow concerns the influence from one element to another element, i.e., the causal relation between different elements. A relation can also contain two identical elements; this corresponds to a self-loop in a graph. Historically, before establishing the information flow from, say, $X_2$ to another component, say, $X_1$, the contribution of the change of marginal entropy of $X_1$ by itself is first established. This contribution, denoted by $dH_1^*/dt$, proves to be $E(\partial F_1/\partial x_1)$ (cf. [16]). As we can see from above, besides the estimator of information flow, in this study, we actually have also estimated $dH_1^*/dt$, i.e., the influence of a component (here $X_1$) on itself.

Theorem 6.

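Under a linear assumption, the maximum-likelihood estimator of $dH_1^*/dt$ is

$$\widehat{\frac{dH_1^*}{dt}} = \frac{1}{\det\mathbf{C}}\cdot\sum_{j=1}^{d}\Delta_{1j}C_{j,d1}. \qquad (15)$$

Proof.

Since $dH_1^*/dt = E(\partial F_1/\partial x_1)$, which is $a_{11}$ in this case. The mle hence follows. □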

This supplies information not seen in previous causality analysis along this line. As will be clear soon, this helps identify self loops in a causal graph.

Statistical significance tests can be performed for (14) and (15). When N is large, they are approximately normally distributed around their true values with variances and , respectively, thanks to the mle property. Here, and are determined as follows (e.g., [31]). Denote . Compute

to form a matrix , namely, the Fisher information matrix. The inverse is the covariance matrix of , within which are and . Given a significance level, the confidence interval can be found accordingly.

From the above, an algorithm for causal inference hence can be implemented, as shown in Algorithm 1.

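| Algorithm 1: Quantitative causal inference |
| --- |
| Input: $d$ time series |
| Output: a directed graph (DG) $G = (V, E)$, and the information flows (IFs) along its edges |
| 1. Initialize $G$ such that all vertexes are isolated; set a significance level $\alpha$. |
| 2. For each ordered pair $(i, j)$ of vertexes: compute $\hat{T}_{i\to j}$ by (14); if $\hat{T}_{i\to j}$ is significant at level $\alpha$, add the edge $i \to j$ to $G$ and record $\hat{T}_{i\to j}$. |
| 3. Return $G$, together with the IFs. |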
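The edge-selection step of Algorithm 1 can be sketched as follows, assuming the information flows and their standard errors have already been estimated and are approximately normally distributed, so that a two-sided test at level $\alpha$ applies. The function name and the convention that `flows[i][j]` holds the flow from node `j` to node `i` are hypothetical.

```python
from statistics import NormalDist

def build_causal_graph(flows, std_errors, alpha=0.1):
    """Keep the edge j -> i when flows[i][j] differs significantly from zero.

    flows[i][j] is the estimated flow from node j to node i, and
    std_errors[i][j] is its estimated standard error.
    Returns a dict mapping each edge (j, i) to its information flow.
    """
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # two-sided critical value
    d = len(flows)
    edges = {}
    for i in range(d):
        for j in range(d):
            if i == j:
                continue  # self loops are assessed separately
            if abs(flows[i][j]) > z * std_errors[i][j]:
                edges[(j, i)] = flows[i][j]
    return edges

# Hypothetical two-node input: only the flow from node 1 to node 0 is significant.
example_edges = build_causal_graph(
    flows=[[0.0, 0.12], [0.001, 0.0]],
    std_errors=[[0.0, 0.003], [0.003, 0.0]],
)
print(example_edges)
```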

4. Normalization of the Causality among Multivariate Time Series

In many problems, just an assertion of whether a causality exists is not enough; we need to know how important it is. This raises an issue of normalization. The normalization of information flow is by no means as trivial as it looks. Quite different from the case of covariance vs. correlation coefficient, no such relation as the Cauchy–Schwarz inequality exists. Liang [32] listed some difficulties in the problem, and so far this is still an area of research. Hereafter, we follow [32] to propose the normalizer for (14).

The basic idea is that the normalizer for should be related to , as the former is by derivation a part of the contribution to the latter. However, itself cannot be the normalizer, since many terms tend to cancel; sometimes may even completely vanish, just as in the Hénon map case. We now write out the estimator of and see how the problem can be fixed.

By [16], the time rate of change of the marginal entropy of is

In this linear case,

The first term is , i.e., the contribution from itself, and the last term is the effect of noise, written . The remaining parts are the information flows to , just as expected. We may hence propose a normalizer as follows:

Hence, the normalized information flow from to is:

Clearly, lies on . So, when is 100%, has the maximal impact on .

Note that , where is always positive. That is to say, noise always contributes to increase the marginal entropy of , agreeing with our common sense. Obviously, this term is related to the noise-to-signal ratio.
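The normalization step can be illustrated with a short sketch. It assumes, following the reasoning above, that the normalizer is the sum of the absolute values of all contributions to the marginal entropy change of the target: the self term, the information flows into it, and the noise term. This specific form, like the function and argument names, is an assumption of the sketch and should be checked against [32].

```python
def normalize_flows(flows_to_target, self_term, noise_term):
    """Return relative flows tau_j = T_j / Z for a single target component.

    flows_to_target: information flows from the other components to the target.
    self_term: contribution of the target to its own marginal entropy change.
    noise_term: contribution of the noise.
    Assumed normalizer: Z = |self_term| + sum_j |T_j| + |noise_term|.
    """
    z = abs(self_term) + sum(abs(t) for t in flows_to_target) + abs(noise_term)
    if z == 0.0:
        raise ValueError("all contributions vanish; normalizer is zero")
    return [t / z for t in flows_to_target]

# Hypothetical numbers: two parents, a damping self term, and a noise term.
tau = normalize_flows([0.10, -0.05], self_term=-0.60, noise_term=0.25)
print(tau)
```

Because every term enters the normalizer through its absolute value, cancellation between terms cannot shrink it, and each relative flow stays between −1 and 1.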

5. Application to Causal Graph Reconstruction

5.1. A Noisy Causal Network from Autoregressive Processes

Consider the series generated from a d-dimensional vector autoregressive (VAR) process:

where , , , and is a diagonal matrix with diagonal entries , . Here, the errors are independent, and are the amplitudes of stochastic perturbation. Let

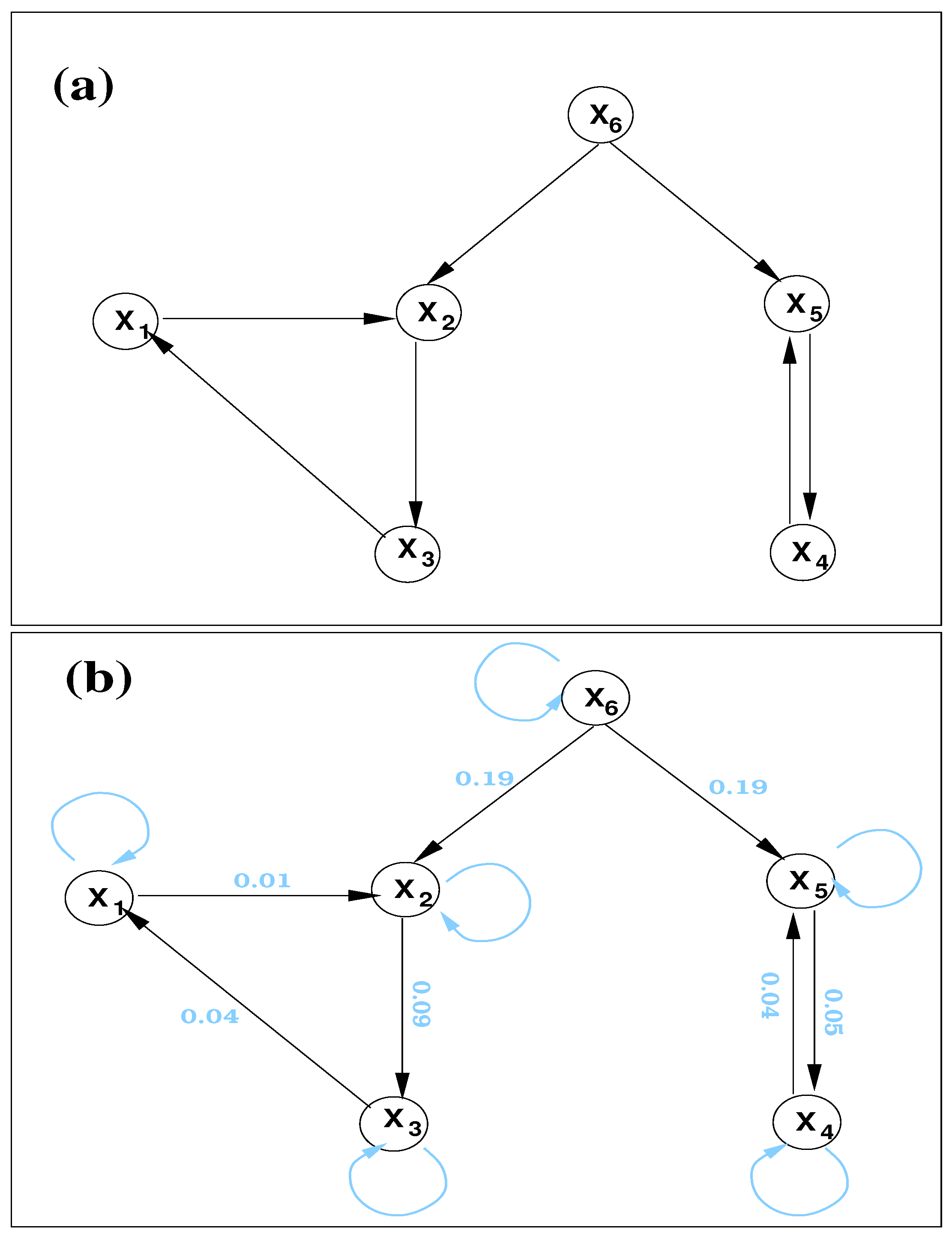

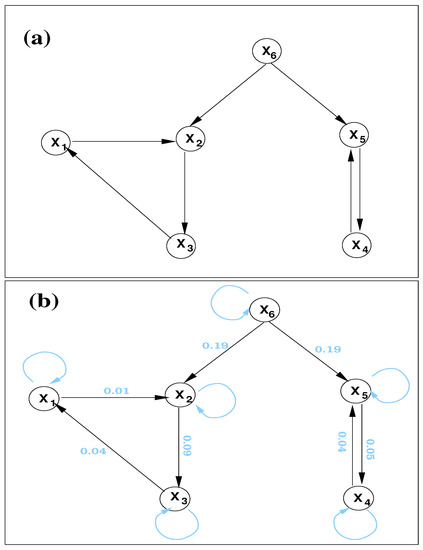

The formed network is as shown in Figure 1a. So, by design, we have two directed cycles (,,) and (,). The former is of length 3, while the latter are parallel edges. These cycles are driven by a common cause or confounder . Since no diagonal entries of is 1, all nodes are self loops (trivial cycles of length 1). The resulting autocorrelation is believed to be a challenge in causal inferences for some techniques. This and the confoundingness of , have been two major issues for many causal inference methods.

Figure 1.

(a) A schematic of the directed network generated with the vector autoregressive processes (21). (b) The directed graph reconstructed from the six time series. Overlaid numbers are the respective significant information flows (in nats per time step); also overlaid are the inferred self loops or trivial cycles of length 1 (in light blue).

First, consider the case . Accordingly, six series of 10,000 steps are generated (randomly initialized).
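Generating such series is straightforward. The sketch below assumes the VAR process has the form $\mathbf{X}(n+1) = \boldsymbol{\alpha} + \mathbf{A}\mathbf{X}(n) + \mathbf{B}\mathbf{e}(n+1)$ with independent standard normal errors and a diagonal $\mathbf{B}$. The three-node coefficient matrix is hypothetical and is not the six-node network of Figure 1a; it only mimics a confounder driving two otherwise unconnected nodes.

```python
import numpy as np

def simulate_var(A, alpha, b, n_steps, seed=0):
    """Simulate X(n+1) = alpha + A X(n) + b * e(n+1), with e ~ N(0, I).

    b holds the diagonal entries of the noise amplitude matrix.
    """
    rng = np.random.default_rng(seed)
    A = np.asarray(A, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    b = np.asarray(b, dtype=float)
    d = A.shape[0]
    X = np.empty((n_steps, d))
    X[0] = rng.standard_normal(d)  # random initialization
    for n in range(n_steps - 1):
        X[n + 1] = alpha + A @ X[n] + b * rng.standard_normal(d)
    return X

# Hypothetical example: node 2 is a common cause of nodes 0 and 1.
A_demo = np.array([[0.4, 0.0, 0.5],
                   [0.0, 0.4, 0.5],
                   [0.0, 0.0, 0.5]])
X_demo = simulate_var(A_demo, alpha=np.zeros(3), b=np.ones(3), n_steps=10000)
print(X_demo.shape)
```

Raising the entries of `b` increases the noise amplitude relative to the signal, which is how a heavy-noise experiment of the kind described in this section could be set up.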

By computation, the information flow rates are (only absolute values are shown), if arranged in a matrix form such that the entry indicates , then the absolute information flow rates are

So, the only significant information flows (numbers in bold) are , , , , , , , as indicated in Figure 1b. (At a 90% confidence level, the maximal error is 0.005, so all these values are significant.) This is precisely the same as designed. So, the causal graph is accurately reconstructed. Also, by (15) can be computed. They are , , , , , , where the errors at a 90% confidence level are shown. So, here all the nodes are self loops (trivial cycles of length 1).

It should be particularly pointed out that the confoundingness of does not make an issue here. As shown in Figure 1, there is no significant information flow between and ; in other words, they are not directly causal to each other. Nor are and . This is actually not a surprise; it is a corollary of the principle of nil causality, as proved before (see Theorem 2). Considering the difficulty of this problem, the performance of this concise Formula (14) is remarkable.

The above information flows can be normalized to understand the impact of one unit on another. For example, , . For another example, in the cycle (, ), the relative information flows are , , in contrast to the almost identical absolute information flows. This is understandable: though is comparable to , the parts contributing to are different from that to , and thus they may have different weights.

Now, consider an extreme case when the signals are buried within heavy noise. Let, , and repeat the above steps. The results are, remarkably, almost the same. So, the Formula (14) is very robust in the presence of noise.

If the time series is short, the performance is still satisfactory. For example, if it has only 500 data points, the above case with heavy noise () results in the following matrix of information flow rates:

with the corresponding errors at the 90% confidence level being:

So, the significant (at the 90% level) information flows are still those as shown in bold face.

Note that we do not mean to compete with the classical method(s) in this application. Granger causality testing, for example, works well here. Nonetheless, the simplicity of Formula (14) and the algorithm greatly improves computational performance. In MATLAB, (14) is by test more than 100 times faster than the built-in function gctest.

5.2. A Network of Nearly Synchronized Chaotic Series

Now, consider the following causal graph made of Rössler oscillators X, Y and Z, where X is a confounder. A Rössler oscillator has three components, so the system actually has a dimension of 9.

We use for this purpose the coupled system investigated by Paluš et al. [33]. The 9 series are generated through the following Rössler systems

Clearly, the first is the driving or “master” system, while the latter two are slaves which are not directly connected. We hence use them to define X, Y and Z. This system is exactly the same as the one studied in [33], except for the addition of another subsystem, Z. The parameters are also chosen to be the same as theirs, and , but with an additional one, . As can be seen, X is coupled with Y and Z through the first component, and the coupling is one-way, i.e., from X to Y and from X to Z. The coupling parameter is left open for tuning.

The above equations are discretized and the system is solved using the second-order Runge–Kutta scheme with a fixed time stepsize. Initialized with random numbers, the state is integrated forward for 50,000 steps. The initial 10,000 steps are discarded, and the 9 time series are formed from the remaining 40,000 data points.
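The integration procedure can be sketched as below. This is an illustration under stated assumptions, not the configuration used for Figure 2: the right-hand side follows the standard Rössler form with diffusive one-way coupling through the first component, and every numerical value (the frequencies in `omega`, the parameters `a`, `b`, `c`, the stepsize `dt`, and the range of the random initial state) is a placeholder. The midpoint rule is used as the second-order Runge–Kutta scheme.

```python
import numpy as np

def coupled_rossler_rhs(s, eps, omega=(1.015, 0.985, 0.95), a=0.15, b=0.2, c=10.0):
    """Right-hand side for master X and slaves Y, Z (all values are assumptions)."""
    x, y, z = s[0:3], s[3:6], s[6:9]
    w1, w2, w3 = omega
    return np.array([
        -w1 * x[1] - x[2],
        w1 * x[0] + a * x[1],
        b + x[2] * (x[0] - c),
        -w2 * y[1] - y[2] + eps * (x[0] - y[0]),  # X drives Y
        w2 * y[0] + a * y[1],
        b + y[2] * (y[0] - c),
        -w3 * z[1] - z[2] + eps * (x[0] - z[0]),  # X drives Z
        w3 * z[0] + a * z[1],
        b + z[2] * (z[0] - c),
    ])

def integrate_rk2(eps, n_steps=50000, n_discard=10000, dt=0.01, seed=0):
    """Integrate with the midpoint (second-order Runge-Kutta) rule."""
    rng = np.random.default_rng(seed)
    s = rng.uniform(-1.0, 1.0, size=9)  # random initial state
    out = np.empty((n_steps, 9))
    for n in range(n_steps):
        k1 = coupled_rossler_rhs(s, eps)
        k2 = coupled_rossler_rhs(s + 0.5 * dt * k1, eps)  # midpoint evaluation
        s = s + dt * k2
        out[n] = s
    return out[n_discard:]  # drop the initial transient
```

With the defaults, `integrate_rk2(eps)` returns an array of 40,000 rows and 9 columns, one column per component of X, Y and Z.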

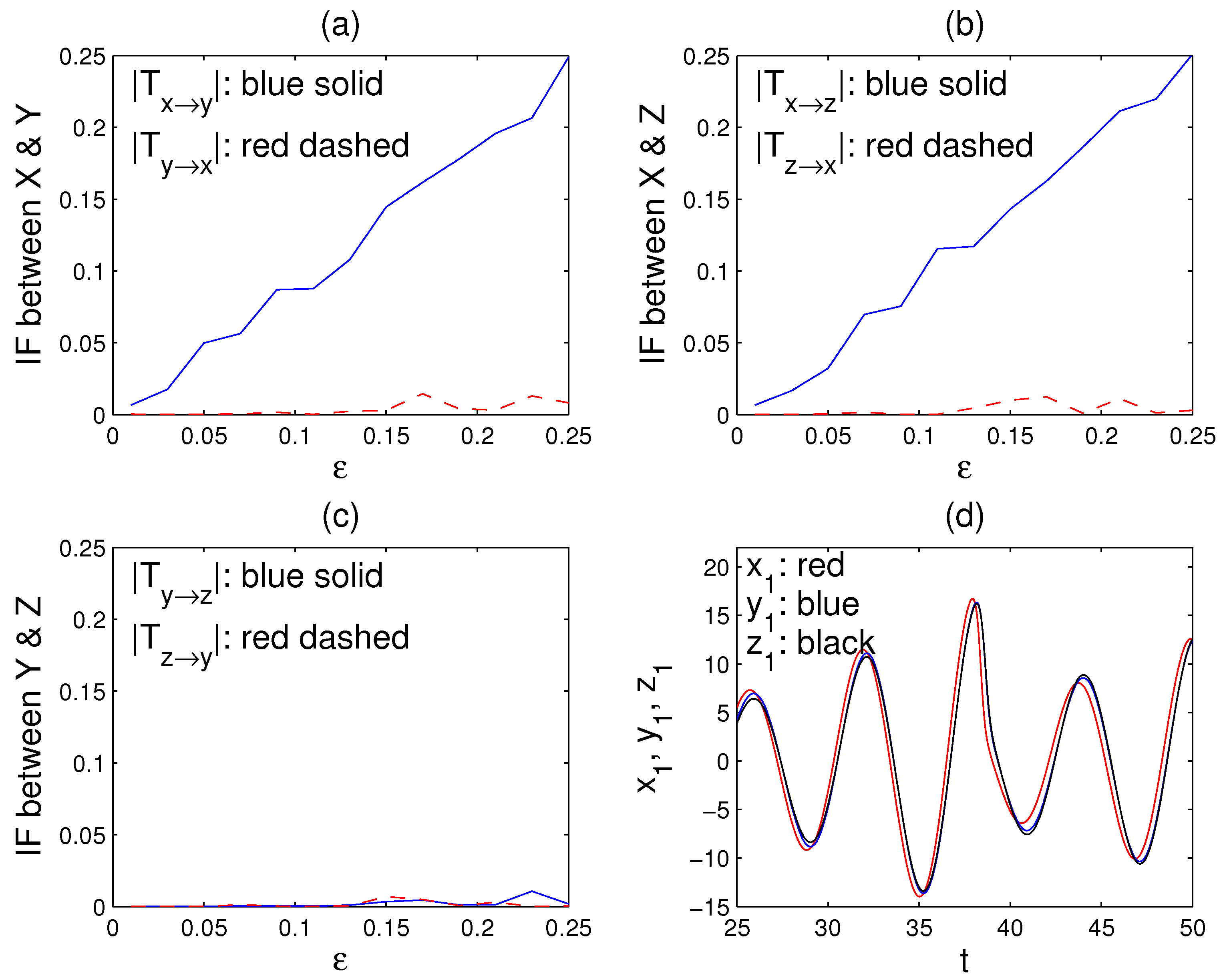

The oscillators are highly chaotic. As increases, the three oscillators gradually become in pace. They become almost synchronized after . Shown in Figure 2d is an episode of the synchronization for .

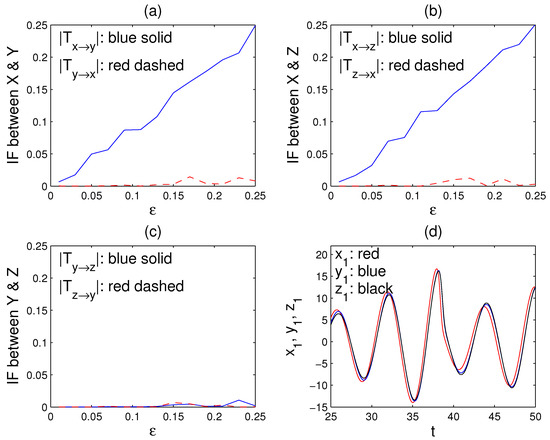

Figure 2.

The information flows among the oscillators X, Y, and Z (in nats/unit time) versus the coupling strength : (a) (blue) and (red); (b) (blue) and (red); (c) (blue) and (red). (d) The series of , , and on a time interval when the coupling parameter . (Note, in solving for , the initial conditions are randomly chosen, some of which may happen to make a highly singular matrix and hence cause large errors. In that case, simply re-run the program.)

We now apply (14) to compute the information flows among X, Y, and Z. Since this is a deterministic chaos problem, choose $k = 2$ in (7) and (14). Following [33], the series of the first components of the three systems are used to represent the three oscillators. Shown in Figure 2a–c are the dependencies of the computed information flows on the coupling strength. Clearly, among the six information flows, only $T_{X\to Y}$ and $T_{X\to Z}$ are significant, indicating (1) that the causal relation between X and Y is unidirectional, from X to Y, (2) that the causality between X and Z is also one-way, i.e., from X to Z, and, most importantly, (3) that no direct causality exists between Y and Z, although they are highly correlated (cf. Figure 2d). So, here the confoundingness is not at all an issue.

After exceeds 0.15, the systems begin to synchronize (see [33]), and it is impossible to infer the causal relation using traditional methods. This is understandable, as the series gradually approach toward one series. Here, however, even with , i.e., even after the series are almost synchronized, in this framework, the inference still performs remarkably well, as clearly seen in Figure 2a–c. This attests to the power of the information flow-based causal inference technique, which is concise and very easy to implement.

6. Conclusions

Recent years have seen a surge of interest in causality analysis. This study introduced a line of work starting some 16 years ago which has gone almost unnoticed, and implemented the state-of-the-art theory [16] into an easy-to-use algorithm. Particularly, this study extended the bivariate time series analysis of [18] to the long-due multivariate time series causal inference.

In a multivariate stochastic system, the information flow from one component to another proves to be (2). When only time series are available, it can be estimated using (14) under a linear assumption. Ideally if it is not zero, then there exists causality between the components, but practically statistical significance needs to be tested. These have been easily implemented as an algorithm for use.

More than just finding the information flows, hence the causalities, among the units (as in [18]), we have also estimated the influence of a unit on itself. This self-influence results in autocorrelation, which becomes an issue in some causal inferences. The consequence is that, in a causal graph, those nodes which have self loops (cycles of length 1) can be easily identified. Also different from previous studies, in a unified treatment, the role of noise has been quantified along with the causality analysis. This quantity has an easy physical interpretation, namely, the ratio of noise to signal. Besides, the obtained causalities can be normalized to measure the importance of the respective parental nodes.

The above very concise and transparent formulas have been applied to examine two problems in extreme situations: (1) a network of multivariate processes with heavy noise (stochastic perturbation amplitude 100 times the signal amplitude); (2) a network with nearly synchronized oscillators. Besides, confounding processes exist in both causal graphs. Case (1) is made of vector autoregressive processes. By applying the algorithm, the causal graph is accurately recovered in a very easy and efficient way. In particular, the confounding processes have been accurately clarified.

Note that Granger causality testing works well in case (1). Nonetheless, the simplicity of Formula (14) allows for an increase in computational performance of at least two orders of magnitude.

In case (2), the network is formed with three chaotic Rössler oscillators. When the coupling coefficient exceeds a threshold, synchronization occurs. However, even with the almost completely synchronized time series, the information flow approach still performs remarkably well, with the causalities accurately inferred, and the causal graph accurately reconstructed. In particular, the one-way causalities between the master–slave systems have been recovered. Moreover, it is accurately shown that the two highly correlated, almost identical series due to the confounder are not causally linked.

It should be mentioned that, in arriving at the concise formula for causal inference, an assumption of linearity has been invoked. For some nonlinear problems, the inference may not be precisely as expected. For example, in Figure 2a,b, the red dashed lines are supposed to be zero, but here they are not. However, qualitatively, the inference is still good, as the one-way causality is clearly seen. Such success has already been evidenced in the bivariate case of [18], where a highly nonlinear problem defying classical approaches is examined. Nonetheless, the power of the information flow-based causality analysis will not be fully realized until the linear assumption is relaxed. To generalize to the fully nonlinear case is hence the goal of future work.

Funding

This research is partially supported by National Science Foundation of China under grant number 41975064.

Data Availability Statement

Not Applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Schölkopf, B.; Janzing, D.; Peters, J.; Sgouritsa, E.; Zhang, K.; Mooij, J.M. On causal and anticausal learning. In Proceedings of the 29th International Conference on Machine Learning (ICML), Edinburgh, Scotland, UK, 26 June–1 July 2012; pp. 1255–1262.

- Pearl, J. Causality: Models, Reasoning, and Inference, 2nd ed.; Cambridge University Press: New York, NY, USA, 2009.

- Spirtes, P.; Glymour, C. An algorithm for fast recovery of sparse causal graphs. Soc. Sci. Comput. Rev. 1991, 9, 62–72.

- Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 2000, 85, 461.

- Paluš, M.; Komárek, V.; Hrnčiř, Z.; Štěrbová, K. Synchronization as adjustment of information rates: Detection from bivariate time series. Phys. Rev. E 2001, 63, 046211.

- Liang, X.S.; Kleeman, R. Information transfer between dynamical system components. Phys. Rev. Lett. 2005, 95, 244101.

- Zhang, J.; Spirtes, P. Detection of unfaithfulness and robust causal inference. Minds Mach. 2008, 18, 239–271.

- Maathuis, M.H.; Colombo, D.; Kalisch, M.; Bühlmann, P. Estimating high-dimensional intervention effects from observation data. Ann. Stat. 2009, 37, 3133–3164.

- Pompe, B.; Runge, J. Momentary information transfer as a coupling measure of time series. Phys. Rev. E 2011, 83, 051122.

- Janzing, D.; Mooij, J.; Zhang, K.; Lemeire, J.; Zscheischler, J.; Daniušis, P.; Steudel, B.; Schölkopf, B. Information-geometric approach to inferring causal dierctions. Artif. Intell. 2012, 182, 1–31. [Google Scholar] [CrossRef]

- Sugihara, G.; May, R.; Ye, H.; Hsieh, C.H.; Deyle, E.; Fogarty, M.; Munch, S. Detecting causality in complex ecosystems. Science 2012, 338, 496–500. [Google Scholar] [CrossRef]

- Sun, J.; Bollt, E. Causation entropy identifies indirect influences, dominance of neighbors, and anticipatory couplings. Physica D 2014, 267, 49–57. [Google Scholar] [CrossRef]

- Peters, J.; Janzing, D.; Schölkopf, B. Elements of Causal Inference: Foundations and Learning Algorithms; The MIT Press: Cambridge, MA, USA, 2017. [Google Scholar]

- Spirtes, P.; Zhang, K. Causal discovery and inference: Concepts and recent methodological advances. Appl. Inform. 2016, 3, 3. [Google Scholar] [CrossRef] [PubMed]

- Granger, C.W.J. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 1969, 37, 424–438. [Google Scholar] [CrossRef]

- Liang, X.S. Information flow and causality as rigorous notions ab initio. Phys. Rev. E 2016, 94, 052201. [Google Scholar] [CrossRef]

- Liang, X.S. Information flow within stochastic dynamical systems. Phys. Rev. E 2008, 78, 031113. [Google Scholar] [CrossRef]

- Liang, X.S. Unraveling the cause-effect relation between time series. Phys. Rev. E 2014, 90, 052150. [Google Scholar] [CrossRef] [PubMed]

- Røysland, K. Counterfactual analyses with graphical models based on local independence. Ann. Stat. 2012, 40, 2162–2194. [Google Scholar]

- Mooij, J.M.; Janzing, D.; Heskes, T.; Schölkopf, B. From ordinary differential equations to structural causal models: The deterministic case. In Proceedings of the 29th Annual Conference on Uncertainty in Artificial Intelligence, Bellevue, WA, USA, 11–15 July 2013; pp. 440–448. [Google Scholar]

- Mogensen, S.W.; Malinksky, D.; Hansen, N.R. Causal learning for partially observed stochastic dynamical systems. In Proceedings of the 34th Conference on Uncertainty in Artificial Intelligence (UAI), Monterey, CA, USA, 6–10 August 2018. [Google Scholar]

- Amigó, J.M.; Dale, R.; Tempesta, P. A generalized permutation entropy for noisy dynamics and random processes. Chaos 2021, 31, 013115. [Google Scholar] [CrossRef] [PubMed]

- Liang, X.S. Information flow with respect to relative entropy. Chaos 2018, 28, 075311. [Google Scholar] [CrossRef] [PubMed]

- Berkeley, G. A Treatise Concerning the Principles of Human Knowledge; Aaron Rhames: Dublin, Ireland, 1710. [Google Scholar]

- Liang, X.S.; Yang, X.-Q. A note on causation versus correlation in an extreme situation. Entropy 2021, 23, 316. [Google Scholar] [CrossRef] [PubMed]

- Hahs, D.W.; Pethel, S.D. Distinguishing anticipation from causality: Anticipatory bias in the estimation of information flow. Phys. Rev. Lett. 2011, 107, 12870. [Google Scholar] [CrossRef]

- Stips, A.; Macias, D.; Coughlan, C.; Garcia-Gorriz, E.; Liang, X.S. On the causal structure between CO2 and global temperature. Sci. Rep. 2016, 6, 21691. [Google Scholar] [CrossRef] [PubMed]

- Hagan, D.F.T.; Wang, G.; Liang, X.S.; Dolman, H.A.J. A time-varying causality formalism based on the Liang-Kleeman information flow for analyzing directed interactions in nonstationary climate systems. J. Clim. 2019, 32, 7521–7537. [Google Scholar] [CrossRef]

- Vannitsem, S.; Dalaiden, Q.; Goosse, H. Testing for dynamical dependence—Application to the surface mass balance over Antarctica. Geophys. Res. Lett. 2019. [Google Scholar] [CrossRef]

- Hristopulos, D.T.; Babul, A.; Babul, S.; Brucar, L.R.; Virji-Babul, N. Dirupted information flow in resting-state in adolescents with sports related concussion. Front. Hum. Neurosci. 2019, 13, 419. [Google Scholar] [CrossRef] [PubMed]

- Garthwaite, P.H.; Jolliffe, I.T.; Jones, B. Statistical Inference; Prentice-Hall: Hertfordshire, UK, 1995. [Google Scholar]

- Liang, X.S. Normalizing the causality between time series. Phys. Rev. E 2015, 92, 022126. [Google Scholar] [CrossRef]

- Paluš, M.; Krakovská, A.; Jakubfk, J.; Chvosteková, M. Causality, dynamical systems and the arrow of time. Chaos 2018, 28, 075307. [Google Scholar] [CrossRef] [PubMed]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).