Abstract

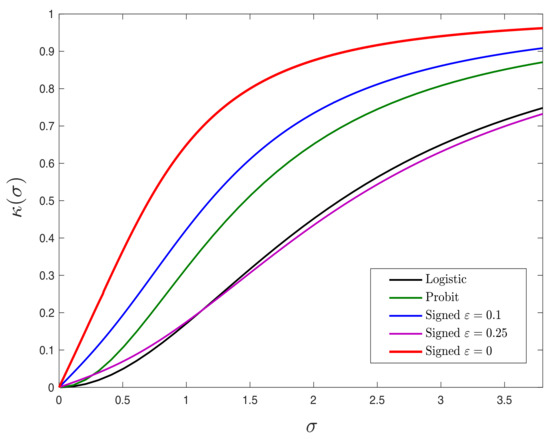

We study convex empirical risk minimization for high-dimensional inference in binary linear classification under both discriminative binary linear models and generative Gaussian-mixture models. Our first result sharply predicts the statistical performance of such estimators in the proportional asymptotic regime under isotropic Gaussian features. Importantly, the predictions hold for a wide class of convex loss functions, which we exploit to prove bounds on the best achievable performance. Notably, we show that the proposed bounds are tight for popular binary models (such as signed and logistic) and for the Gaussian-mixture model by constructing appropriate loss functions that achieve them. Our numerical simulations suggest that the theory is accurate even for relatively small problem dimensions and that it enjoys a certain universality property.

1. Introduction

1.1. Motivation

Classical estimation theory studies problems in which the number of unknown parameters n is small compared to the number of observations m. In contrast, modern inference problems are typically high-dimensional, that is, n can be of the same order as m. Examples are abundant in a wide range of signal processing and machine learning applications such as medical imaging, wireless communications, recommendation systems, etc. Classical tools and theories are not applicable in these modern inference problems [1]. As such, over the last two decades or so, the study of high-dimensional estimation problems has received significant attention.

Perhaps the most well-studied setting is that of noisy linear observations (namely, linear regression). The literature on the topic is vast with remarkable contributions from the statistics, signal processing and machine learning communities. Several recent works focus on the proportional/linear asymptotic regime and derive sharp results on the inference performance of appropriate convex optimization methods (e.g., [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23]). These works show that, albeit challenging, sharp results are advantageous over loose order-wise bounds. Not only do they allow for accurate comparisons between different choices of the optimization parameters, but they also form the basis for establishing optimal such choices as well as fundamental performance limitations (e.g., [12,14,15,16,24,25,26]).

This paper takes this recent line of work a step further by demonstrating that results of this nature can be achieved in binary observation models. While we depart from the previously studied linear regression model, we remain faithful to the requirement and promise of sharp results. Binary models arise in a wide range of signal processing (e.g., highly quantized measurements) and machine learning (e.g., binary classification) problems. We derive sharp asymptotics for a rich class of convex optimization estimators, which include least squares, logistic regression and hinge loss as special cases. Perhaps more interestingly, we use these results to derive fundamental performance limitations and design optimal loss functions that provably outperform existing choices. Our results hold both for discriminative and generative data models.

In Section 1.2, we formally introduce the problem setup. The paper’s main contributions and organization are presented in Section 1.4. A detailed discussion of prior art follows in Section 1.5.

Notation 1.

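The symbols $\mathbb{P}(\cdot)$, $\mathbb{E}[\cdot]$ and $\mathrm{Var}[\cdot]$ denote probability, expectation and variance, respectively. We use boldface notation for vectors. $\|\mathbf{v}\|_2$ denotes the Euclidean norm of a vector $\mathbf{v}$. We write $i \in [m]$ for $i = 1, 2, \ldots, m$. When writing $\mathbf{x}_\star = \arg\min_{\mathbf{x}} f(\mathbf{x})$, we let the operator $\arg\min$ return any one of the possible minimizers of f. For all $x \in \mathbb{R}$, $\Phi(x)$ is the cumulative distribution function of the standard normal and the Gaussian Q-function at x is defined as
$$Q(x) = \frac{1}{\sqrt{2\pi}} \int_x^{\infty} e^{-t^2/2}\, dt.$$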

1.2. Data Models

Consider m data pairs $\{(\mathbf{a}_i, y_i)\}_{i=1}^m$ generated i.i.d. from one of the following two models such that $\mathbf{a}_i \in \mathbb{R}^n$ and $y_i \in \{\pm 1\}$ for all $i \in [m]$.

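Binary models with Gaussian features: Here, the feature/measurement vectors $\mathbf{a}_i$ have i.i.d. Gaussian entries, i.e., $\mathbf{a}_i \sim \mathcal{N}(\mathbf{0}, \mathbf{I}_n)$. Given the feature vector $\mathbf{a}_i$, the corresponding label $y_i$ takes the form
$$y_i = f(\mathbf{a}_i^T \mathbf{x}_0), \qquad (1)$$

for some unknown true signal $\mathbf{x}_0 \in \mathbb{R}^n$ and a label/link function $f: \mathbb{R} \to \{\pm 1\}$, which is a (possibly random) binary function. Some popular examples for the label function f include the following: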

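- (Noisy) Signed: $f(x) = \mathrm{sign}(x)$ with probability $1 - \varepsilon$, and $f(x) = -\mathrm{sign}(x)$ with probability $\varepsilon$.

- Logistic: $f(x) = +1$ with probability $\frac{1}{1 + e^{-s x}}$, and $f(x) = -1$ otherwise.

- Probit: $f(x) = +1$ with probability $\Phi(s x)$, and $f(x) = -1$ otherwise.

We remark that when the signal strength $s \to \infty$, the logistic and Probit label functions approach the signed model (i.e., the noisy-signed function with $\varepsilon = 0$).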
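For simulations, the three label functions can be sampled as in the minimal Python sketch below. It assumes the usual parametrizations: the noisy-signed label flips $\mathrm{sign}(x)$ with probability `eps`, while the logistic and Probit labels equal $+1$ with probability $1/(1+e^{-sx})$ and $\Phi(sx)$, respectively, where `s` is the signal strength. The helper names (`signed`, `logistic`, `probit`, `agreement`) are illustrative and not taken from the paper.

```python
import math
import random

def _sigmoid(z):
    """Numerically stable 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def signed(x, eps=0.0, rng=random):
    """Noisy-signed label: sign(x), flipped with probability eps."""
    y = 1 if x >= 0 else -1
    return -y if rng.random() < eps else y

def logistic(x, s=1.0, rng=random):
    """Logistic label: +1 with probability 1 / (1 + exp(-s * x)), otherwise -1."""
    return 1 if rng.random() < _sigmoid(s * x) else -1

def probit(x, s=1.0, rng=random):
    """Probit label: +1 with probability Phi(s * x), otherwise -1."""
    p = 0.5 * math.erfc(-s * x / math.sqrt(2.0))
    return 1 if rng.random() < p else -1

def agreement(link, n=20000, seed=0, **params):
    """Fraction of labels link(S) that equal sign(S), for S ~ N(0, 1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        hits += link(x, rng=rng, **params) == (1 if x >= 0 else -1)
    return hits / n

for s in (1.0, 10.0, 100.0):
    print(s, agreement(logistic, s=s), agreement(probit, s=s))
```

As `s` grows, the fraction of labels agreeing with $\mathrm{sign}(S)$ approaches one, in line with the remark that both models approach the noiseless signed model.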

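Throughout, we assume that $\|\mathbf{x}_0\|_2 = 1$. This assumption is without loss of generality since the norm of $\mathbf{x}_0$ can always be absorbed in the link function. Indeed, letting $s := \|\mathbf{x}_0\|_2$, we can always write the measurements as $y_i = \tilde{f}(\mathbf{a}_i^T \tilde{\mathbf{x}}_0)$, where $\tilde{\mathbf{x}}_0 := \mathbf{x}_0 / s$ (hence, $\|\tilde{\mathbf{x}}_0\|_2 = 1$) and $\tilde{f}(t) := f(s\,t)$. We make no further assumptions on the distribution of the true vector $\mathbf{x}_0$.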

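Gaussian-mixture model: In Section 5, we also study the following generative Gaussian-mixture model (GMM):
$$\mathbb{P}(y_i = +1) = \pi = 1 - \mathbb{P}(y_i = -1), \qquad \mathbf{a}_i = y_i\,\mathbf{x}_0 + \mathbf{z}_i, \qquad \mathbf{z}_i \sim \mathcal{N}(\mathbf{0}, \mathbf{I}_n). \qquad (2)$$

Above, $\pi$ is the prior of class $+1$ and $\mathbf{x}_0$ is the true signal, which here represents the mean of the features.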

1.3. Empirical Risk Minimization

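We study the performance of empirical-risk minimization (ERM) estimators $\hat{\mathbf{w}}$ of $\mathbf{x}_0$ that solve the following optimization problem for some convex loss function $\ell: \mathbb{R} \to \mathbb{R}$:
$$\hat{\mathbf{w}} := \arg\min_{\mathbf{w} \in \mathbb{R}^n} \frac{1}{m} \sum_{i=1}^{m} \ell(y_i\, \mathbf{a}_i^T \mathbf{w}). \qquad (3)$$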

Loss function. Different choices for ℓ lead to popular specific estimators including the following:

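- Least Squares (LS): $\ell(t) = (t-1)^2$,

- Least-Absolute Deviations (LAD): $\ell(t) = |t-1|$,

- Logistic Loss: $\ell(t) = \log(1 + e^{-t})$,

- Exponential Loss: $\ell(t) = e^{-t}$,

- Hinge Loss: $\ell(t) = \max\{0, 1-t\}$.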
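All of these losses act on the margin $t = y_i\,\mathbf{a}_i^T\mathbf{w}$. The Python sketch below assumes the standard forms $(t-1)^2$, $|t-1|$, $\log(1+e^{-t})$, $e^{-t}$ and $\max\{0, 1-t\}$, and evaluates the empirical risk in (3) on a tiny hand-made dataset; the names `LOSSES` and `empirical_risk` are illustrative.

```python
import math

# Margin-based losses l(t), to be evaluated at t = y * <a, w>.
LOSSES = {
    "LS": lambda t: (t - 1.0) ** 2,
    "LAD": lambda t: abs(t - 1.0),
    # log(1 + exp(-t)), rewritten so that it does not overflow for very negative t
    "logistic": lambda t: max(-t, 0.0) + math.log1p(math.exp(-abs(t))),
    "exponential": lambda t: math.exp(-t),
    "hinge": lambda t: max(0.0, 1.0 - t),
}

def empirical_risk(loss, w, A, y):
    """(1/m) * sum_i loss(y_i * <a_i, w>) for data rows A and labels y in {-1, +1}."""
    margins = [yi * sum(aij * wj for aij, wj in zip(ai, w)) for ai, yi in zip(A, y)]
    return sum(loss(t) for t in margins) / len(margins)

A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1, -1, 1]
w = [0.5, -0.5]          # margins are 0.5, 0.5 and 0.0
for name, loss in LOSSES.items():
    print(f"{name:12s} {empirical_risk(loss, w, A, y):.4f}")
```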

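Performance Measure. We measure the performance of the estimator $\hat{\mathbf{w}}$ by the value of its correlation to $\mathbf{x}_0$, i.e.,
$$\mathrm{corr}(\hat{\mathbf{w}}, \mathbf{x}_0) := \frac{\langle \hat{\mathbf{w}}, \mathbf{x}_0 \rangle}{\|\hat{\mathbf{w}}\|_2\, \|\mathbf{x}_0\|_2}. \qquad (4)$$

Obviously, we seek estimates that maximize correlation. While correlation is the measure of primary interest, our results extend rather naturally to other prediction metrics, such as the classification error given by (e.g., see [27] (Section D.2.))
$$\mathcal{E}(\hat{\mathbf{w}}) := \mathbb{E}\left[\mathbb{1}\{y \neq \mathrm{sign}(\mathbf{a}^T \hat{\mathbf{w}})\}\right]. \qquad (5)$$

The expectation in (5) is taken over a test sample $(\mathbf{a}, y)$ drawn from the same distribution as the training set.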

1.4. Contributions and Organization

As mentioned, our techniques naturally apply to both binary Gaussian and Gaussian-mixture models. For concreteness, we focus our presentation on the former models (see Section 2, Section 3 and Section 4.1). Then, we extend our results to Gaussian mixtures in Section 5. Numerical simulations corroborating our theoretical findings for both models are presented in Section 6.

Now, we state the paper’s main contributions:

- Precise Asymptotics: We show that the absolute value of the correlation of $\hat{\mathbf{w}}$ to the true vector $\mathbf{x}_0$ is sharply predicted by $1/\sqrt{1 + \sigma_\ell^2}$, where the “effective noise” parameter $\sigma_\ell$ can be explicitly computed by solving a system of three non-linear equations in three unknowns. We find that the system of equations (and, thus, the value of $\sigma_\ell$) depends on the loss function ℓ through its Moreau envelope function. Our prediction holds in the linear asymptotic regime in which $m, n \to \infty$ and $m/n \to \delta > 1$ (see Section 2).

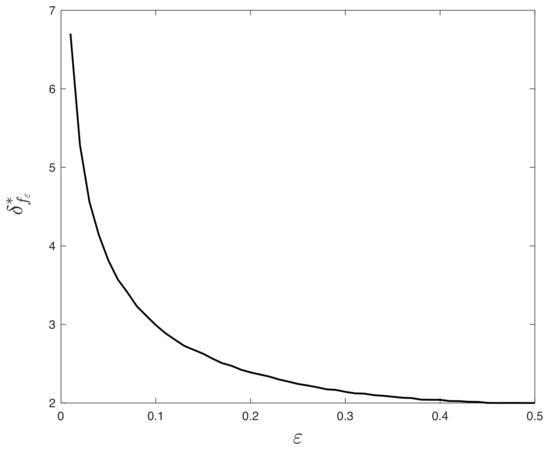

- Fundamental Limits: We establish fundamental limits on the performance of convex optimization-based estimators by computing an upper bound on the best possible correlation performance among all convex loss functions. We compute the upper bound by solving a certain nonlinear equation and we show that such a solution exists for all $\delta > 1$ (see Section 3.1).

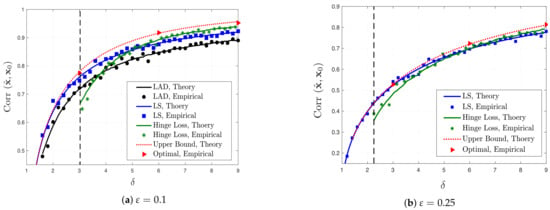

- Optimal Performance and (sub)-optimality of LS for binary models: For certain binary models, including signed and logistic, we find the loss functions that achieve the optimal performance, i.e., they attain the previously derived upper bound (see Section 3.2). Interestingly, for logistic and Probit models with , we prove that the correlation performance of least squares (LS) is at least 0.9972 and 0.9804 times the optimal performance, respectively. However, as $s$ grows large, logistic and Probit models approach the signed model, in which case LS becomes sub-optimal (see Section 4.1).

- Extension to the Gaussian-Mixture Model: In Section 5, we extend the fundamental limits and the system of equations to the Gaussian-mixture model. Interestingly, our results indicate that, for this model, LS is optimal among all convex loss functions for all .

- Numerical Simulations: We conduct numerous experiments that specialize our results to popular models and loss functions, and we provide simulation results that demonstrate the accuracy of the theoretical predictions (see Section 6 and Appendix E).

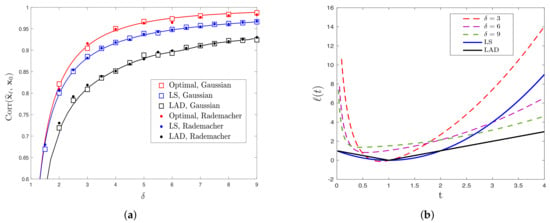

Figure 1 contains a pictorial preview of our results described above for the special case of signed measurements. First, Figure 1a depicts the correlation performance of LS and LAD estimators as a function of the aspect ratio $\delta$. Both theoretical predictions and numerical results are shown; note the close match between theory and empirical results for both i.i.d. Gaussian (shown by circles) and i.i.d. Rademacher (shown by squares) distributions of the feature vectors, even for small dimensions. Second, the red line on the same figure shows the upper bound derived in this paper: no convex loss function results in a correlation exceeding this line. Third, we show that the upper bound can be achieved by the loss functions depicted in Figure 1b for several values of $\delta$. We solve (3) for this choice of loss functions using gradient descent and numerically evaluate the achieved correlation performance. The recorded values are compared in Table 1 to the corresponding values of the upper bound; again, note the close agreement between the values as predicted by the findings of this paper, which suggests that the fundamental limits derived in this paper hold for sub-Gaussian features. We present corresponding results for the logistic and Probit models in Section 6 and for the noisy-signed model in Appendix E.

Figure 1.

(a) Comparison between theoretical (solid lines) and empirical (markers) performance for least-squares (LS) and least-absolute deviations (LAD), as predicted by Theorem 1, and the optimal performance, as predicted by the upper bound of Theorem 2, for the signed model. The squares and circles denote the empirical performance for Gaussian and Rademacher features, respectively. (b) Illustrations of optimal loss functions for the signed model for different values of $\delta$ according to Theorem 3.

Table 1.

Theoretical predictions and empirical performance of the optimal loss function for signed model. Empirical results are averaged over 20 experiments for .
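The experiments summarized in Section 1.4 solve (3) by gradient descent. The Python sketch below shows one way to do this for any differentiable loss, assuming plain fixed-step gradient descent started at zero; the step size, iteration count, problem sizes and seed are illustrative choices, not the settings used for Table 1. It is demonstrated with the LS loss $\ell(t) = (t-1)^2$ on noiseless signed data. A loss whose minimizer can be unbounded on linearly separable data (e.g., the logistic loss on noiseless signed labels, which are always separable by $\mathbf{x}_0$) would not converge to a finite point.

```python
import math
import random

def gd_erm(A, y, dloss, step=0.2, iters=300):
    """Fixed-step gradient descent on (1/m) * sum_i loss(y_i * <a_i, w>), started at w = 0."""
    m, n = len(A), len(A[0])
    w = [0.0] * n
    for _ in range(iters):
        grad = [0.0] * n
        for ai, yi in zip(A, y):
            t = yi * sum(a * b for a, b in zip(ai, w))   # margin of sample i
            g = dloss(t) * yi / m                        # chain rule: loss'(t) * y_i * a_i / m
            for j in range(n):
                grad[j] += g * ai[j]
        w = [wj - step * gj for wj, gj in zip(w, grad)]
    return w

rng = random.Random(0)
n, m = 10, 200                                     # aspect ratio delta = m / n = 20
x0 = [1.0] + [0.0] * (n - 1)                       # unit-norm true signal
A = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
y = [1 if ai[0] >= 0 else -1 for ai in A]          # noiseless signed labels, since <a_i, x0> = a_i[0]
w = gd_erm(A, y, dloss=lambda t: 2.0 * (t - 1.0))  # derivative of the LS loss (t - 1)^2
c = sum(wj * xj for wj, xj in zip(w, x0)) / math.sqrt(sum(wj * wj for wj in w))
print(round(c, 3))
```

Any other differentiable convex loss can be plugged in through `dloss`, provided the minimizer of (3) is bounded and the step size is small enough for the loss curvature.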

A remark on the Gaussianity assumption. Our results on precise asymptotics (on which our study of fundamental limits relies) hold rigorously for the two data models in Section 1.2, in which the feature vectors have i.i.d. standard Gaussian entries. However, we conjecture that the Gaussianity assumption can be relaxed. As partial numerical evidence, note in Figure 1a the close match of our theory with the empirical performance over data in which the feature vectors have i.i.d. Rademacher entries (i.e., taking the values ±1 with probability 1/2 each). Figure 2 shows corresponding results for the Gaussian-mixture model. Our conjecture that the so-called universality property holds in our setting is also in line with similar numerical observations and partial theoretical evidence previously reported for linear regression settings [7,28,29,30,31]. A formal proof of universality of our results is beyond the scope of this paper. However, we remark that, as long as the asymptotic predictions of Section 2 enjoy this property, all our results on fundamental performance limits and optimal loss functions automatically hold under the same relaxed assumptions.

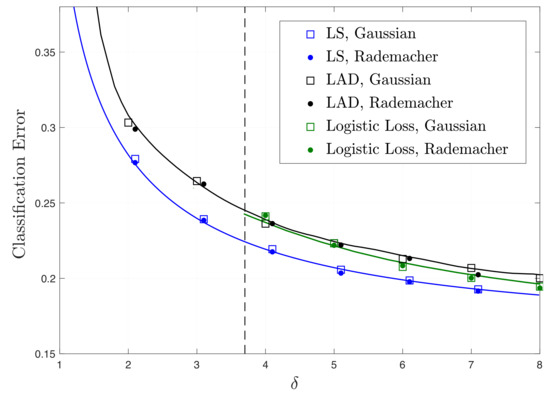

Figure 2.

Theoretical (solid lines) and empirical (markers) results of classification risk in GMM as in Theorem 4 and (39) for LS, LAD and logistic loss functions as a function of for . The vertical line represents the threshold as evaluated by (36). Logistic loss gives unbounded solution if and only if .

1.5. Related Works

Over the past two decades, there has been a long list of works that derive statistical guarantees for high-dimensional estimation problems. Many of these are concerned with convex optimization-based inference methods. Our work is most closely related to the following three lines of research.

(a) Sharp asymptotics for linear measurements.

Most of the results in the literature of high-dimensional statistics are order-wise in nature. Sharp asymptotic predictions have only more recently appeared in the literature for the case of noisy linear measurements with Gaussian measurement vectors. There are by now three different approaches that have been used towards asymptotic analysis of convex regularized estimators: (i) the one that is based on the approximate message passing (AMP) algorithm and its state-evolution analysis (e.g., [5,8,14,20,32,33,34]); (ii) the one that is based on Gaussian process (GP) inequalities, specifically on the convex Gaussian min-max theorem (CGMT) (e.g., [9,10,13,15,18,19]); and (iii) the “leave-one-out” approach [11,35]. The three approaches are quite different from each other, and each comes with its own distinguishing features and disadvantages. A detailed comparison is beyond our scope.

Our results in Theorems 2 and 3 on achieving the best performance across all loss functions are complementary to [12] (Theorem 1) and to the work of Advani and Ganguli [16], who proposed a method for deriving the optimal loss function and measuring its performance, albeit for linear models. Instead, we study binary models. The optimality of regularization for linear measurements was recently studied in [22].

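In terms of analysis, we follow the GP approach and build upon the CGMT. Since the previous works are concerned with linear measurements, they consider estimators that solve minimization problems of the form
$$\hat{\mathbf{w}} := \arg\min_{\mathbf{w} \in \mathbb{R}^n} \sum_{i=1}^{m} \mathcal{L}(y_i - \mathbf{a}_i^T \mathbf{w}). \qquad (6)$$

Specifically, the loss function $\mathcal{L}$ penalizes the residual. In this paper, we show that the CGMT is applicable to optimization problems in the form of (3). For our case of binary observations, (3) is more general than (6). To see this, note that, for $y_i \in \{\pm 1\}$ and popular symmetric loss functions $\mathcal{L}(t) = \mathcal{L}(-t)$, e.g., least squares (LS), (3) reduces to (6) by choosing $\ell(t) = \mathcal{L}(t-1)$ in the former: indeed, since $y_i^2 = 1$, we have $\mathcal{L}(y_i \mathbf{a}_i^T \mathbf{w} - 1) = \mathcal{L}(y_i(\mathbf{a}_i^T \mathbf{w} - y_i)) = \mathcal{L}(y_i - \mathbf{a}_i^T \mathbf{w})$. Moreover, (3) includes several other popular loss functions, such as the logistic loss and the hinge loss, which cannot be expressed in the form (6).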
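The reduction from (3) to (6) for a symmetric residual loss $\mathcal{L}$ can be sanity-checked numerically. In the sketch below (the helper name `check_reduction` is illustrative), random labels $y \in \{\pm 1\}$ and random values $z$ standing in for $\mathbf{a}_i^T\mathbf{w}$ are used to compare $\mathcal{L}(yz - 1)$ with $\mathcal{L}(y - z)$; the identity holds for the symmetric LS and LAD residual losses and fails for an asymmetric one.

```python
import random

def check_reduction(L, trials=1000, seed=0):
    """True if L(y*z - 1) == L(y - z) for random y in {-1, +1} and random z."""
    rng = random.Random(seed)
    for _ in range(trials):
        y = rng.choice([-1, 1])
        z = rng.gauss(0.0, 3.0)          # stands in for <a_i, w>
        if abs(L(y * z - 1.0) - L(y - z)) > 1e-12:
            return False
    return True

print(check_reduction(lambda r: r * r))        # LS residual loss
print(check_reduction(abs))                    # LAD residual loss
print(check_reduction(lambda r: max(r, 0.0)))  # asymmetric, so the reduction fails
```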

(b) One-bit compressed sensing.

Our work naturally relates to the literature on one-bit compressed sensing (CS) [36]. The vast majority of performance guarantees for one-bit CS are order-wise in nature (e.g., [37,38,39,40,41,42]). To the best of our knowledge, the only existing sharp results are presented in [43] for Gaussian measurement vectors, which studies the asymptotic performance of regularized LS. Our work can be seen as a direct extension of the work in [43] to loss functions beyond least-squares (see Section 4.1 for details).

Similar to the generality of our paper, Genzel [41] also studied the high-dimensional performance of general loss functions. However, in contrast to our results, their performance bounds are loose (order-wise); as such, they are not informative about the question of optimal performance which we also address here.

(c) Classification in high-dimensions.

In [44,45], the authors studied the high-dimensional performance of maximum-likelihood (ML) estimation for the logistic model. The ML estimator is a special case of (3), whereas we consider general binary models. In addition, their analysis is based on the AMP framework. The asymptotics of the logistic loss under different classification models were also recently studied in [46]. In yet another closely related recent work [47], the authors extended the results of Sur and Candès [45] to regularized ML by using the CGMT. Instead, we present results for general convex loss functions and for binary linear models. Importantly, we also study performance bounds and optimal loss functions.

We also remark on the following closely related parallel works. While the conference version of this paper was being reviewed, the CGMT was applied by Montanari et al. [48] and Deng et al. [49] to determine the generalization performance of max-margin linear classifiers in a binary classification setting. In essence, these results are complementary to the results of our paper in the following sense. Consider a binary classification setting under the logistic model and Gaussian regressors. As discussed in Section 4.2, the set of minimizers of (3) is bounded with probability approaching one if and only if $\delta > \delta^\star$, for an appropriate threshold $\delta^\star$ determined for the first time in [44] (see also Figure 3a). Our results hold in this regime. In contrast, the papers by Montanari et al. [48] and Deng et al. [49] study the regime $\delta < \delta^\star$.

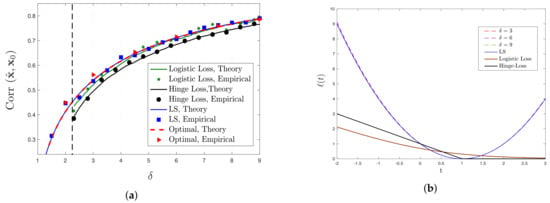

Figure 3.

(a) Comparison between analytical and empirical results for the performance of LS, logistic loss, hinge loss and optimal loss function for logistic model. The vertical dashed line represents , as evaluated by (35). (b) Illustrations of optimal loss functions for different values of , derived according to Theorem 3 for logistic model. To signify the similarity of optimal loss function to the LS loss, the optimal loss functions (hardly visible) are scaled such that and .

We close this section by mentioning works that build on our results and appeared after the initial submission of this paper. The paper by Mignacco et al. [50] studies sharp asymptotics of ridge-regularized ERM with an intercept for Gaussian-mixture models. In [27], we extend the results of this paper on fundamental limits and optimality to the case of ridge-regularized ERM (see also the concurrent work by Aubin et al. [51]).

2. Sharp Performance Guarantees

2.1. Definitions

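Moreau Envelopes. Before stating the first result, we need a definition. We write
$$\mathcal{M}_{\ell}(x; \lambda) := \min_{v \in \mathbb{R}} \frac{1}{2\lambda}(x - v)^2 + \ell(v)$$

for the Moreau envelope function of the loss $\ell: \mathbb{R} \to \mathbb{R}$ at x with parameter $\lambda > 0$. The minimizer (which is unique by strong convexity) is known as the proximal operator of ℓ at x with parameter λ and we denote it as $\mathrm{prox}_{\ell}(x; \lambda)$. A useful property of the Moreau envelope function is that it is continuously differentiable with respect to both x and λ [52]. We denote these derivatives as follows
$$\mathcal{M}'_{\ell,1}(x; \lambda) := \frac{\partial \mathcal{M}_{\ell}(x; \lambda)}{\partial x}, \qquad \mathcal{M}'_{\ell,2}(x; \lambda) := \frac{\partial \mathcal{M}_{\ell}(x; \lambda)}{\partial \lambda}.$$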

2.2. A System of Equations

As we show shortly, the asymptotic performance of the optimization in (3) is tightly connected to the solution of a certain system of nonlinear equations, which we introduce here. Specifically, define random variables G, S and Y as follows:
$$G, S \overset{\text{i.i.d.}}{\sim} \mathcal{N}(0, 1), \qquad Y = f(S), \qquad (7)$$

and consider the following system of non-linear equations in three unknowns $(\alpha, \mu, \lambda)$:

The expectations are with respect to the randomness of the random variables G, S and Y. We remark that the equations are well defined even if the loss function ℓ is not differentiable. In Appendix A, we summarize some well-known properties of the Moreau envelope function and use them to simplify (8) for differentiable loss functions.
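Because the system (8) involves ℓ only through its Moreau envelope, it helps to evaluate $\mathcal{M}_\ell(x;\lambda)$ and $\mathrm{prox}_\ell(x;\lambda)$ (the Moreau envelope and proximal operator of Section 2.1) numerically. A minimal sketch for the logistic loss follows. It assumes the first-order condition $(v - x)/\lambda + \ell'(v) = 0$ for the prox (valid for differentiable convex ℓ) and solves it by bisection, using that $\ell'(v) \in (-1, 0)$ for the logistic loss, so the root lies in $[x, x + \lambda]$. It then checks the standard identities $\partial_x \mathcal{M}_\ell(x;\lambda) = (x - \mathrm{prox}_\ell(x;\lambda))/\lambda$ and $\partial_\lambda \mathcal{M}_\ell(x;\lambda) = -(x - \mathrm{prox}_\ell(x;\lambda))^2/(2\lambda^2)$ by central finite differences. Function names and test points are illustrative.

```python
import math

def logistic_loss(v):
    return max(-v, 0.0) + math.log1p(math.exp(-abs(v)))   # log(1 + exp(-v))

def logistic_deriv(v):
    # l'(v) = -1 / (1 + exp(v)), written to avoid overflow
    if v >= 0:
        e = math.exp(-v)
        return -e / (1.0 + e)
    return -1.0 / (1.0 + math.exp(v))

def prox(x, lam, dloss=logistic_deriv, iters=100):
    """Bisection on g(v) = (v - x)/lam + l'(v), which is increasing; root lies in [x, x + lam]."""
    lo, hi = x, x + lam
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (mid - x) / lam + dloss(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def moreau(x, lam, loss=logistic_loss, dloss=logistic_deriv):
    """M_l(x; lam) = min_v (x - v)^2 / (2 lam) + l(v), evaluated at the prox."""
    p = prox(x, lam, dloss)
    return (x - p) ** 2 / (2.0 * lam) + loss(p)

x, lam, h = 0.7, 1.5, 1e-5
p = prox(x, lam)
dM_dx = (moreau(x + h, lam) - moreau(x - h, lam)) / (2.0 * h)
dM_dlam = (moreau(x, lam + h) - moreau(x, lam - h)) / (2.0 * h)
print(p, dM_dx, (x - p) / lam)
print(dM_dlam, -(x - p) ** 2 / (2.0 * lam ** 2))
```

Bisection is chosen here for robustness; since $g$ is monotone, a Newton iteration would also work for a twice-differentiable loss.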

2.3. Asymptotic Prediction

We are now ready to state our first main result.

Theorem 1.

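(Sharp Asymptotics). Assume data generated from the binary model with Gaussian features and assume $\ell$ is such that the set of minimizers in (3) is bounded and the system of Equations (8) has a unique solution $(\alpha, \mu, \lambda)$, such that . Let $\hat{\mathbf{w}}$ be as in (3). Then, in the limit of $m, n \to \infty$, $m/n \to \delta$, it holds with probability one that
$$\lim_{n \to \infty} \left|\mathrm{corr}(\hat{\mathbf{w}}, \mathbf{x}_0)\right| = \frac{1}{\sqrt{1 + \sigma_\ell^2}}, \qquad \sigma_\ell := \frac{\alpha}{\mu}. \qquad (9)$$

Moreover,
$$\lim_{n \to \infty} \|\hat{\mathbf{w}} - \mu\,\mathbf{x}_0\|_2 = \alpha. \qquad (10)$$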

Theorem 1 holds for any convex loss function. In Section 4, we specialize the result to specific popular choices and also present numerical simulations that confirm the validity of the predictions (see Figure 1a, Figure 3a, Figure 4a and Figure A4a,b). Before that, we include a few remarks on the conditions, interpretation and implications of the theorem. The proof is deferred to Appendix B and uses the convex Gaussian min-max theorem (CGMT) [13,15].

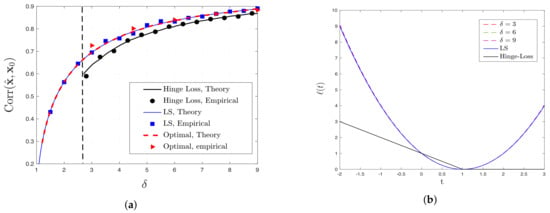

Figure 4.

(a) Comparison between analytical and empirical results for the performance of LS, hinge loss and optimal loss function for Probit model. The vertical dashed line represents , as evaluated by (35). (b) Illustrations of optimal loss functions for different values of derived according to Theorem 3 for Probit model. To signify the similarity of optimal loss function to the LS loss, the optimal loss functions (hardly visible) are scaled such that and .

Remark 1.

(The Role of μ and α). According to (9), the prediction for the limiting behavior of the correlation value is given in terms of an effective noise parameter $\sigma_\ell := \alpha/\mu$, where μ and α are the unique solutions of (8). The smaller the value of $\sigma_\ell$, the larger the correlation value. While the correlation value is fully determined by the ratio of α and μ, their individual roles are clarified in (10). Specifically, according to (10), $\hat{\mathbf{w}}$ is a biased estimate of the true $\mathbf{x}_0$, and μ represents exactly the correlation bias term. In other words, solving (3) returns an estimator that is close to a μ-scaled version of $\mathbf{x}_0$: the $\ell_2$-norm of the difference between $\hat{\mathbf{w}}$ and $\mu\mathbf{x}_0$ converges to α.
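The link between (9) and (10) is elementary geometry: if $\hat{\mathbf{w}} = \mu\mathbf{x}_0 + \alpha\mathbf{u}$ with unit vectors $\mathbf{x}_0 \perp \mathbf{u}$, then $|\mathrm{corr}(\hat{\mathbf{w}}, \mathbf{x}_0)| = |\mu|/\sqrt{\mu^2 + \alpha^2} = 1/\sqrt{1 + (\alpha/\mu)^2}$ and $\|\hat{\mathbf{w}} - \mu\mathbf{x}_0\|_2 = \alpha$. The short sketch below checks this with explicit vectors; the values of μ and α are arbitrary and the helper name is illustrative.

```python
import math

def predicted_corr(mu, alpha):
    """|corr| implied by w = mu*x0 + alpha*u, with unit vectors x0 and u orthogonal (mu != 0)."""
    sigma = alpha / abs(mu)            # effective noise
    return 1.0 / math.sqrt(1.0 + sigma ** 2)

mu, alpha = 0.8, 0.3                  # arbitrary illustrative values
x0 = [1.0, 0.0, 0.0]
u = [0.0, 0.6, 0.8]                   # unit norm, orthogonal to x0
w = [mu * a + alpha * b for a, b in zip(x0, u)]
corr = sum(a * b for a, b in zip(w, x0)) / math.sqrt(sum(a * a for a in w))
dist = math.sqrt(sum((a - mu * b) ** 2 for a, b in zip(w, x0)))
print(corr, predicted_corr(mu, alpha), dist)
```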

Remark 2.

(Why δ > 1). The theorem requires that $\delta > 1$ (equivalently, $m > n$ asymptotically). Here, we show that this condition is necessary for Equations (8) to have a bounded solution. To see this, take squares of both sides of (8c) and divide by (8b) to find that

The inequality follows by applying Cauchy–Schwarz and using the fact that $\mathbb{E}[G^2] = 1$.
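For intuition on the Cauchy–Schwarz step, consider the LS loss $\ell(t) = (t-1)^2$, for which the first-order condition of the Moreau minimization gives $\mathrm{prox}_\ell(x;\lambda) = (x + 2\lambda)/(1 + 2\lambda)$ and hence $\mathcal{M}'_{\ell,1}(x;\lambda) = 2(x-1)/(1+2\lambda)$. The sketch below samples $(G, S, Y)$ as in (7), assuming the noiseless signed link $Y = \mathrm{sign}(S)$, evaluates $Z = \mathcal{M}'_{\ell,1}(\alpha G + \mu S Y; \lambda)$ at this (assumed, illustrative) argument for arbitrary values of $(\alpha, \mu, \lambda)$ that are not solutions of (8), and checks that $(\mathbb{E}[GZ])^2 \le \mathbb{E}[G^2]\,\mathbb{E}[Z^2]$. For this loss the ratio equals $\alpha^2/(\alpha^2 + \mathbb{E}[(\mu S Y - 1)^2])$ independently of λ, with $\mathbb{E}[SY] = \mathbb{E}|S| = \sqrt{2/\pi}$.

```python
import math
import random

def ls_moreau_deriv(x, lam):
    """x-derivative of the Moreau envelope of l(t) = (t - 1)^2, i.e. 2 (x - 1) / (1 + 2 lam)."""
    return 2.0 * (x - 1.0) / (1.0 + 2.0 * lam)

def cs_ratio(alpha, mu, lam, n=200000, seed=0):
    """Monte Carlo (E[GZ])^2 / (E[G^2] E[Z^2]), Z = M'(alpha*G + mu*S*Y; lam), Y = sign(S)."""
    rng = random.Random(seed)
    egz = eg2 = ez2 = 0.0
    for _ in range(n):
        g, s = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        y = 1.0 if s >= 0 else -1.0
        z = ls_moreau_deriv(alpha * g + mu * s * y, lam)
        egz += g * z
        eg2 += g * g
        ez2 += z * z
    return (egz / n) ** 2 / ((eg2 / n) * (ez2 / n))

alpha, mu = 0.5, 0.8                  # illustrative values, not solutions of (8)
closed_form = alpha ** 2 / (alpha ** 2 + mu ** 2 - 2.0 * mu * math.sqrt(2.0 / math.pi) + 1.0)
ratio = cs_ratio(alpha, mu, lam=1.0)
print(ratio, closed_form)
```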

Remark 3.

(On the Existence of a Solution to (8)). While $\delta > 1$ is a necessary condition for the equations in (8) to have a solution, it is not sufficient in general; sufficiency depends on the specific choice of the loss function. For example, in Section 4.1, we show that, for the squared loss $\ell(t) = (t-1)^2$, the equations have a unique solution iff $\delta > 1$. On the other hand, for the logistic loss and the hinge loss, it is argued in Section 4.2 that there exists a threshold value $\delta^\star$ such that the set of minimizers in (3) is unbounded if $\delta < \delta^\star$. In this case, the assumptions of Theorem 1 do not hold. We conjecture that, for these choices of loss, Equations (8) are solvable iff $\delta > \delta^\star$. Justifying this conjecture and further studying more general sufficient and necessary conditions under which Equations (8) admit a solution is left to future work. However, in what follows, we prove that, whenever such a solution exists, it is unique for a wide class of convex loss functions of interest.

Remark 4.

(On the Uniqueness of Solutions to (8)). We show that, if the system of equations in (8) has a solution, then it is unique provided that ℓ is strictly convex, continuously differentiable and its derivative satisfies $\ell'(0) \neq 0$. For instance, this class includes the square, the logistic and the exponential losses. However, it excludes non-differentiable functions such as the LAD and hinge losses. We believe that the differentiability assumption can be relaxed without major modification in our proof, but we leave this for future work. Our result is summarized in Proposition 1 below.

Proposition 1.

(Uniqueness). Assume that the loss function ℓ has the following properties: (i) it is proper and strictly convex; and (ii) it is continuously differentiable and its derivative $\ell'$ is such that $\ell'(0) \neq 0$. Further, assume that the (possibly random) link function f is such that has strictly positive density on the real line. Then, the following statement is true: for any $\delta > 1$, if the system of equations in (8) has a bounded solution, then it is unique.

The detailed proof of Proposition 1 is deferred to Appendix B.5. Here, we highlight some key ideas. The CGMT relates—in a rather natural way—the original ERM optimization (3) to the following deterministic min-max optimization on four variables

In Appendix B.4, we show that the optimization above is convex-concave for any lower semi-continuous, proper and convex function . Moreover, it is shown that one arrives at the system of equations in (8) by simplifying the first-order optimality conditions of the min-max optimization in (11). This connection is key to the proof of Proposition 1. Indeed, we prove uniqueness of the solution (if such a solution exists) to (8), by proving instead that the function above is (jointly) strictly convex in and strictly concave in γ, provided that ℓ satisfies the conditions of the proposition. Next, let us briefly discuss how strict convex-concavity of (11) can be shown. For concreteness, we only discuss strict convexity here; the ideas are similar for strict concavity. At the heart of the proof of strict convexity of F is understanding the properties of the expected Moreau envelope function defined as follows:

Specifically, we prove in Proposition A7 in Appendix A.6 that if ℓ is strictly convex, differentiable and does not attain its minimum at 0, then Ω is strictly convex in and strictly concave in γ. It is worth noting that the Moreau envelope function for fixed and is not necessarily strictly convex. Interestingly, we show that the expected Moreau envelope has this desired feature. We refer the reader to Appendix A.6 and Appendix B.5 for more details.
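For a differentiable convex loss, not attaining the minimum at 0 is the same as $\ell'(0) \neq 0$, which is easy to check numerically. The sketch below uses central differences (the step size is an illustrative choice) on the square loss $(t-1)^2$, the logistic loss and the exponential loss, and on $t^2$, a hypothetical example that is minimized at 0 and therefore falls outside the class covered by the uniqueness result.

```python
import math

LOSSES = {
    "square (t - 1)^2": lambda t: (t - 1.0) ** 2,
    "logistic": lambda t: max(-t, 0.0) + math.log1p(math.exp(-abs(t))),
    "exponential": lambda t: math.exp(-t),
    "t^2 (hypothetical, minimized at 0)": lambda t: t * t,
}

def derivative_at_zero(loss, h=1e-6):
    """Central finite-difference estimate of l'(0)."""
    return (loss(h) - loss(-h)) / (2.0 * h)

for name, loss in LOSSES.items():
    d = derivative_at_zero(loss)
    print(f"{name:36s} l'(0) ~ {d:+.4f}   excluded: {abs(d) < 1e-6}")
```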

3. On Optimal Performance

3.1. Fundamental Limits

In this section, we establish fundamental limits on the performance of (3) by deriving an upper bound on the absolute value of correlation that holds for all choices of loss functions satisfying the assumptions of Theorem 1. The result builds on the prediction of Theorem 1. In view of (9), upper bounding correlation is equivalent to lower bounding the effective noise parameter $\sigma_\ell$. Theorem 2 derives such a lower bound.

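Before stating the theorem, we need a definition. For a random variable H with density $p_H$ that has a derivative $p_H'$, we denote its score function by $\xi_H(h) := \frac{\partial}{\partial h} \log p_H(h) = \frac{p_H'(h)}{p_H(h)}$. Then, the Fisher information of H, denoted by $\mathcal{I}(H)$, is defined as follows (e.g., [53] (Sec. 2)):
$$\mathcal{I}(H) := \mathbb{E}\left[\xi_H^2(H)\right] = \int \frac{\left(p_H'(h)\right)^2}{p_H(h)}\, dh.$$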

Theorem 2.

(Best Achievable Performance). Let the assumptions and notation of Theorem 1 hold and recall the definition of random variables and Y in (7). For , define a new random variable and the function as follows,

Further, define as follows,

Then, for , it holds that .

The theorem above establishes an upper bound on the best possible correlation performance among all convex loss functions. In Section 3.2, we show that this bound is often tight, i.e., there exists a loss function that achieves the specified best possible performance.
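The Fisher information entering Theorem 2 can be evaluated by quadrature whenever the density and its derivative are available. The sketch below (function names, integration range and step are illustrative choices) approximates $\mathcal{I}(H) = \int (p_H')^2 / p_H$ with a Riemann sum and checks it against two known values: $1/v$ for a Gaussian with variance $v$, and $1/3$ for the standard logistic distribution, whose score is $-\tanh(h/2)$.

```python
import math

def fisher_information(pdf, dpdf, lo=-30.0, hi=30.0, step=1e-3):
    """Riemann-sum approximation of the integral of p'(h)^2 / p(h) over [lo, hi]."""
    n = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n + 1):
        h = lo + k * step
        p = pdf(h)
        if p > 0.0:
            total += dpdf(h) ** 2 / p
    return total * step

def gauss_pdf(h, v=4.0):
    return math.exp(-h * h / (2.0 * v)) / math.sqrt(2.0 * math.pi * v)

def gauss_dpdf(h, v=4.0):
    return -h / v * gauss_pdf(h, v)

def logistic_pdf(h):
    e = math.exp(-abs(h))
    return e / (1.0 + e) ** 2

def logistic_dpdf(h):
    return -math.tanh(h / 2.0) * logistic_pdf(h)

print(fisher_information(gauss_pdf, gauss_dpdf))        # close to 1/4
print(fisher_information(logistic_pdf, logistic_dpdf))  # close to 1/3
```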

Remark 5.

Theorem 2 complements the results in [12,14] (Lem. 3.4) and [15] (Rem. 5.3.3), in which the authors considered only linear regression. In particular, Theorem 2 shows that it is possible to achieve results of this nature for the more challenging setting of binary classification considered here.

Proof of Theorem 2.

Fix a loss function ℓ and let be a solution to (8), which by assumptions of Theorem 1 is unique. The first important observation is that the error of a loss function is unique up to a multiplicative constant. To see this, consider an arbitrary loss function and let be a minimizer in (3). Now, consider (3) with the following loss function instead, for some arbitrary constants :

It is not hard to see that is the minimizer for . Clearly, has the same correlation value with as , showing that the two loss functions ℓ and perform the same. With this observation in mind, consider the function such that . Then, notice that

Using this relation in (8) and setting , the system of equations in (8) can be equivalently rewritten in the following convenient form,

Next, we show how to use (14) to derive an equivalent system of equations based on . Starting with (14c), we have

where . Since it holds that , using (A74), it follows that

where in the last step we use

Therefore, we have by (16) that

This combined with (14c) gives Second, multiplying (14c) with and adding it to (14a) yields that,

Putting these together, we conclude with the following system of equations which is equivalent to (14),

Note that, for , exists everywhere. This is because for all : and is continuously differentiable. Combining (19a) and (19c), we derive the following equation which holds for ,

By Cauchy–Schwarz inequality, we have that

Using the fact that (by integration by parts), , and (19b), the right hand side of (20) is equal to

Therefore, we conclude with the following inequality for ,

which holds for all . In particular, (21) holds for the following choice of values for and :

(The choice above is motivated by the result of Section 3.2; see Theorem 3). Rewriting (21) with the chosen values of and yields the following inequality,

where on the right-hand side above, we recognize the function defined in the theorem.

Next, we use (22) to show that defined in (12) yields a lower bound on the achievable value of . For the sake of contradiction, assume that . By the above, . Moreover, by the definition of , we must have that Since and is a continuous function we conclude that for some , it holds that . Therefore, for , we have , which contradicts the definition of . This proves that , as desired.

To complete the proof, it remains to show that the equation admits a solution for all . For this purpose, we use the continuous mapping theorem and the fact that the Fisher information is a continuous function [54]. Recall that, for two independent and non-constant random variables, it holds that [53] (Eq. 2.18). Since G and are independent random variables, we find that which implies that is uniformly bounded for all values of . Therefore,

Furthermore, when . Hence,

Note that , which further yields that for all . Finally, since is a continuous function, we deduce that range of is , implying the existence of a solution to (12) for all . This completes the proof of Theorem 2. □

A useful closed-form bound on the best achievable performance: In general, determining requires computing the Fisher information of the random variable for . If the probability distribution of is continuously differentiable (e.g., logistic model; see Appendix C.1), then we obtain the following simplified bound.

Corollary 1.

(Closed-form Lower Bound on ). Let be the probability distribution of . If is differentiable for all , then,

Proof.

Based on Theorem 2, the following equation holds for

or, equivalently, by rewriting the right-hand side,

Define the following function

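The function h is increasing in the region According to Stam’s inequality [55], for two independent random variables X and Y with continuously differentiable densities $p_X$ and $p_Y$, it holds that
$$\frac{1}{\mathcal{I}(X+Y)} \geq \frac{1}{\mathcal{I}(X)} + \frac{1}{\mathcal{I}(Y)},$$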

where equality is achieved if and only if X and Y are independent Gaussian random variables. Therefore, since by assumption is differentiable on the real line, Stam’s inequality yields

Next, we prove that for all , both sides of (25) are in the region . First, we prove that . By the Cramér–Rao bound (e.g., see [53] (Eq. 2.15)) for the Fisher information of a random variable X, we have that $\mathcal{I}(X) \geq 1/\mathrm{Var}[X]$. In addition, for the random variable , we know that , thus

Using the relation , one can check that the following inequality holds:

Therefore, from (26) and (27), we derive that for all . Furthermore, by the inequality in (25) and the definition of it directly follows that for all

Finally, noting that is increasing in , combined with (25), we have

which after using the relation and further simplification yields the inequality in the statement of the corollary. □
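The two inequalities used in the proof above, Stam's inequality and the Cramér–Rao bound, can be illustrated numerically. The sketch below assumes X follows the standard logistic distribution ($\mathcal{I}(X) = 1/3$, $\mathrm{Var}[X] = \pi^2/3$) and $Y \sim \mathcal{N}(0,1)$ independent of X. It computes the density of X + Y and its derivative by numerical convolution on a grid (grid range and spacing are illustrative) and checks that $\mathcal{I}(X+Y)$ lies strictly between the Cramér–Rao lower bound $1/\mathrm{Var}[X+Y]$ and the Stam upper bound $(1/\mathcal{I}(X) + 1/\mathcal{I}(Y))^{-1}$; strictness is expected because X is not Gaussian.

```python
import math

def logistic_pdf(t):
    e = math.exp(-abs(t))
    return e / (1.0 + e) ** 2

def gauss_pdf(t):
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

dt = 0.1
grid = [-25.0 + k * dt for k in range(501)]      # [-25, 25]
px = [logistic_pdf(t) for t in grid]

# Fisher information of X + Y: integral of p'(z)^2 / p(z), where
# p(z) = int p_X(t) phi(z - t) dt and p'(z) = int p_X(t) * (-(z - t)) phi(z - t) dt.
info = 0.0
for z in grid:
    p = dp = 0.0
    for t, pt in zip(grid, px):
        q = pt * gauss_pdf(z - t)
        p += q
        dp -= (z - t) * q
    p, dp = p * dt, dp * dt
    if p > 0.0:
        info += dp * dp / p * dt

cr_lower = 1.0 / (math.pi ** 2 / 3.0 + 1.0)   # Cramer-Rao: I(X+Y) >= 1 / Var[X+Y]
stam_upper = 1.0 / (3.0 + 1.0)                # Stam: 1/I(X+Y) >= 1/I(X) + 1/I(Y) = 3 + 1
print(cr_lower, info, stam_upper)
```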

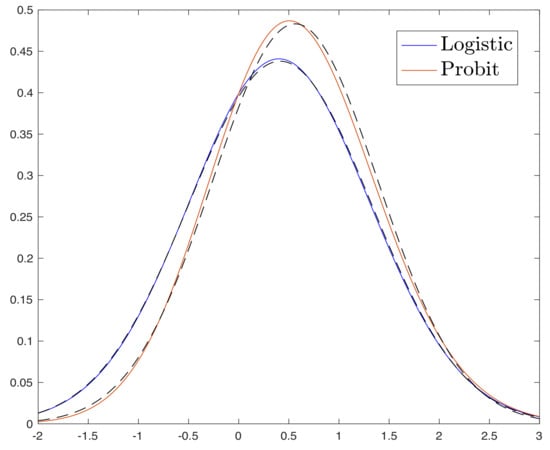

The proof of the corollary reveals that (23) holds with equality when is Gaussian. In Appendix C.1, we compute for the logistic and the Probit models with and numerically show that it is close to the density of a Gaussian random variable. Consequently, the lower bound of Corollary 1 is almost exact when measurements are obtained according to the logistic and Probit models (see Figure A2 in the Appendix C).

3.2. On the Optimal Loss Function

It is natural to ask whether there exists a loss function that attains the bound of Theorem 2. If such a loss function exists, then we say it is optimal in the sense that it maximizes the correlation performance among all convex loss functions in (3).

Our next theorem derives a candidate for the optimal loss function, which we denote . Before stating the result, we provide some intuition about the proof, which builds on Theorem 2. The critical observation in the proof of Theorem 2 is that the effective noise of is minimized (i.e., it attains the value ) if the Cauchy–Schwarz inequality in (20) holds with equality. Hence, we seek so that for some ,

By choosing , integrating and ignoring constants irrelevant to the minimization of the loss function, the previous condition is equivalent to the following It turns out that this condition can be “inverted” to yield the explicit formula for as, Of course, one has to properly choose and to make sure that this function satisfies the system of equations in (19) with . The correct choice is specified in the theorem below. The proof is deferred to Appendix D.1.

Theorem 3.

In general, there is no guarantee that the function as defined in (29) is convex. However, if this is the case, the theorem above guarantees that it is optimal (Strictly speaking, the performance is optimal among all convex loss functions ℓ for which (8) has a unique solution as required by Theorem 2.). A sufficient condition for to be convex is provided in Appendix D.2. Importantly, in Appendix D.2.1, we show that this condition holds for observations following the signed model. Thus, for this case, the resulting function is convex. Although we do not prove the convexity of optimal loss function for the logistic and Probit models, our numerical results (e.g., see Figure 3b) suggest that this is the case. Concretely, we conjecture that the loss function is convex for logistic and Probit models, and therefore by Theorem 3 its performance is optimal.

4. Special Cases

4.1. Least-Squares

By choosing in (3), we obtain the standard least-squares estimate. To see this, note that since , it holds for all i that Thus, is minimizing the sum of squares of the residuals:

For this choice of a loss function, we can solve the equations in (8) in closed form. Furthermore, the equations have a (unique, bounded) solution for any provided that . The final result is summarized in the corollary below (see Appendix F.1 for the proof).

Corollary 2.

Corollary 2 appears in [43] (see also [40,41,56] and Appendix F for an interpretation of the result). However, these previous works obtain results that are limited to the least-squares loss. In contrast, our results are general, and the LS prediction is obtained as a simple corollary of our general Theorem 1. Moreover, our study of fundamental limits allows us to quantify the sub-optimality gap of least-squares (LS) as follows.

On the Optimality of LS. On the one hand, Corollary 2 derives an explicit formula for the effective noise variance of LS in terms of and . On the other hand, Corollary 1 provides an explicit lower bound on the optimal value in terms of and . Combining the two, we conclude that

In terms of correlation,

where the first inequality follows from the fact that Therefore, the performance of LS is at least as good as times the optimal one. In particular, assuming and for logistic and Probit models (for which Corollary 1 holds), we can explicitly compute , respectively. However, we recall that for large logistic and Probit models approach the signed model, and, as Figure 1a demonstrates, LS becomes suboptimal.

Another interesting consequence of combining Corollaries 1 and 2 is that LS would be optimal if were a Gaussian random variable. To see this, recall from Corollary 1 that, if is Gaussian, then:

However, for Gaussian, we can explicitly compute , which leads to

The right-hand side is exactly . Therefore, the optimal performance is achieved by the square loss function if is a Gaussian random variable. Remarkably, for logistic and Probit models with small SNR (i.e., small ), the density of is close to the density of a normal random variable (see Figure A2 in Appendix C), implying the optimality of LS for these models.
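As a quick numerical sanity check of the equivalence used in Section 4.1, the following Python sketch compares the square-loss objective with the residual sum of squares on simulated signed-model data. It assumes the square loss is ℓ(t) = (t − 1)² and labels y_i = sign(a_iᵀw₀) ∈ {±1}, and it measures performance by the normalized correlation ⟨ŵ, w₀⟩/(‖ŵ‖‖w₀‖); the dimensions and helper names are illustrative choices, not taken from the paper.

```python
import numpy as np


def square_loss_objective(A, y, w):
    """Sum of l(y_i * a_i^T w) with the (assumed) square loss l(t) = (t - 1)^2."""
    return float(np.sum((y * (A @ w) - 1.0) ** 2))


def residual_sum_of_squares(A, y, w):
    """Ordinary least-squares objective sum_i (y_i - a_i^T w)^2."""
    return float(np.sum((y - A @ w) ** 2))


def least_squares_estimate(A, y):
    """Minimizer of the residual sum of squares (unique when A has full column rank)."""
    w_hat, *_ = np.linalg.lstsq(A, y, rcond=None)
    return w_hat


def correlation(w_hat, w0):
    """Normalized correlation <w_hat, w0> / (||w_hat|| ||w0||)."""
    return float(w_hat @ w0 / (np.linalg.norm(w_hat) * np.linalg.norm(w0)))


rng = np.random.default_rng(0)
m, n = 1000, 200                       # m observations, n unknowns (m / n = 5)
A = rng.standard_normal((m, n))        # isotropic Gaussian features
w0 = rng.standard_normal(n)
w0 /= np.linalg.norm(w0)
y = np.sign(A @ w0)                    # signed model: labels in {-1, +1}

w_any = rng.standard_normal(n)         # the objectives agree at every w because y_i^2 = 1
gap = abs(square_loss_objective(A, y, w_any) - residual_sum_of_squares(A, y, w_any))
w_ls = least_squares_estimate(A, y)
print(f"objective gap: {gap:.2e}, LS correlation: {correlation(w_ls, w0):.3f}")
```

Since the two objectives coincide at every w (up to rounding), `np.linalg.lstsq` returns the square-loss ERM estimate directly.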

4.2. Logistic and Hinge Loss

Theorem 1 only holds in regimes for which the set of minimizers of (3) is bounded. As we show here, this is not always the case. Specifically, consider non-negative loss functions with the property . For example, the hinge, exponential and logistic loss functions all satisfy this property. We now show that for such loss functions the set of minimizers is unbounded if for some appropriate . First, note that the set of minimizers is unbounded if the following condition holds:

Indeed, if (34) holds, then with , attains zero cost in (3); thus, it is optimal and the set of minimizers is unbounded. To proceed, we rely on a recent result by Candès and Sur [44], who proved that (34) holds iff (To be precise, Candès and Sur [44] proved the statement for measurements that follow a logistic model. Close inspection of their proof shows that this requirement can be relaxed by appropriately defining the random variable Y in (7) (see also [48,49]).)

where and Y are random variables as in (7) and . We highlight that logistic and hinge losses give unbounded solutions in the noisy-signed model with , since the condition (34) holds for . However, their performances are comparable to the optimal performance in both logistic and Probit models (see Figure 3a and Figure 4a).
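Condition (34) is a linear-separability condition, so for a given data set it can be tested as a linear-programming feasibility problem. The sketch below assumes (34) takes the standard form "there exists w with y_i a_iᵀw ≥ 1 for all i" and uses `scipy.optimize.linprog` with the HiGHS solver; the function name and the simulated data are illustrative.

```python
import numpy as np
from scipy.optimize import linprog


def is_linearly_separable(A, y):
    """Check feasibility of {w : y_i * a_i^T w >= 1 for all i} with a linear program.

    If a feasible w exists, then t * w has margins >= t for t >= 1: the hinge loss is
    zero for every such t, and the logistic loss tends to zero as t grows, so neither
    has a bounded set of minimizers.
    """
    m, n = A.shape
    result = linprog(
        c=np.zeros(n),                 # pure feasibility problem: constant objective
        A_ub=-(y[:, None] * A),        # -y_i a_i^T w <= -1  <=>  y_i a_i^T w >= 1
        b_ub=-np.ones(m),
        bounds=[(None, None)] * n,     # w is unconstrained
        method="highs",
    )
    return result.status == 0          # 0: feasible point found, 2: infeasible


rng = np.random.default_rng(1)
w0 = rng.standard_normal(5)
A = rng.standard_normal((500, 5))
y_signed = np.sign(A @ w0)                           # noiseless signed labels
A_noise = rng.standard_normal((400, 2))
y_random = rng.choice([-1.0, 1.0], size=400)         # labels independent of the features
print(is_linearly_separable(A, y_signed), is_linearly_separable(A_noise, y_random))
```

Noiseless signed labels are always separable (a rescaled w₀ is feasible), whereas labels drawn independently of the features are, with overwhelming probability, not separable once m is much larger than n.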

5. Extensions to Gaussian-Mixture Models

In this section, we show that our results on sharp asymptotics and lower bounds on error can be extended to include the Gaussian-mixture model (GMM) presented in Section 1.2. The discussion on the phase transition for the existence of a bounded solution in Section 4.2 applies here as well. We rely on a phase-transition result [49] (Prop. 3.1), which proves that (34) holds if and only if

where and are random variables defined in (7) and . Therefore, for loss functions satisfying this property, e.g., hinge loss and logistic loss, the solution to (3) is unbounded if and only if

5.1. System of Equations for GMM

It turns out that, similar to the generative models, the asymptotic performance of (3) for GMM depends on the loss function ℓ via its Moreau envelope. Specifically, let and be independent Gaussian random variables such that

where

Consider the following system of non-linear equations in three unknowns :

The expectations above are with respect to the randomness of the random variables and .

As we show shortly, the solution to these equations is tightly connected to the asymptotic behavior of the optimization in (3).

5.2. Theoretical Prediction of Error for Convex Loss Functions

Theorem 4.

(Asymptotic Prediction). Assume data generated from the Gaussian-mixture model and assume such that the set of minimizers in (3) is bounded and the system of Equation (38) has a unique solution , such that . Let be as in (3) and . Then, in the limit of , , it holds with probability one that

where denotes the classification test error defined in (5).

Remark 6

(Proof of Theorem 4). The high-level steps of the proof of Theorem 4 closely follow the proof of Theorem 1. In particular, for GMM one can show that the correlation of the ERM estimate with the true vector is predicted by a system of equations as in (38), only with replaced by a non-Gaussian random variable (denoted as in Theorem 1). Specifically, by rotational invariance of the Gaussian feature vectors , we can assume, without loss of generality, that . Then, we can guarantee that with probability one it holds that

where μ and α are specified by (38). To see how this implies (39), we argue as follows. Recalling that , we have

Using this and (40) leads to the asymptotic value of correlation and classification error as presented in (39).
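The step from correlation to classification error can be illustrated with a standard Gaussian computation. The sketch below assumes the mixture takes the form x = yη + z with z ~ N(0, I_n) independent of y and y uniform on {±1} (the exact scaling of the model in Section 1.2 may differ). In that case the test error of sign(xᵀw) is Q(ηᵀw/‖w‖) = Q(‖η‖·corr(w, η)), so it depends on w only through its correlation with η. The mean vector and the stand-in estimate are arbitrary illustrative choices, not the paper's settings.

```python
import math

import numpy as np


def gaussian_tail(t):
    """Q(t) = P(N(0, 1) > t)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))


def gmm_error_formula(w, eta):
    """Test error of sign(x^T w) when x = y * eta + z, z ~ N(0, I).

    y * x^T w = eta^T w + y * z^T w, and y * z^T w ~ N(0, ||w||^2), so the error equals
    P(N(0, 1) < -eta^T w / ||w||) = Q(eta^T w / ||w||).
    """
    return gaussian_tail(float(eta @ w) / float(np.linalg.norm(w)))


def gmm_error_monte_carlo(w, eta, num_samples=100_000, seed=0):
    """Empirical misclassification rate of sign(x^T w) on fresh samples from the mixture."""
    rng = np.random.default_rng(seed)
    y = rng.choice([-1.0, 1.0], size=num_samples)
    x = y[:, None] * eta[None, :] + rng.standard_normal((num_samples, eta.size))
    return float(np.mean(np.sign(x @ w) != y))


rng = np.random.default_rng(2)
eta = rng.standard_normal(20)
eta *= 1.5 / np.linalg.norm(eta)          # hypothetical mean vector with ||eta|| = 1.5
w = eta + 0.3 * rng.standard_normal(20)   # stand-in for an ERM estimate
print(gmm_error_formula(w, eta), gmm_error_monte_carlo(w, eta))
```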

Remark 7.

(On the Uniqueness of Solutions to Equation (38)) Our results in proving the uniqueness of solutions to the equations for generative models (8) in Proposition 1, extend to GMM. Noting that in (38) plays the role of in (8), we straightforwardly deduce the following result for uniqueness of solutions to (38).

Proposition 2.

Assume that the loss function has the following properties: (i) it is proper strictly convex; and (ii) it is continuously differentiable and its derivative is such that . The following statement is true. For any , if the system of equations in (38) has a bounded solution, then it is unique.

5.3. Special Case: Least-Squares

By choosing in (3), we obtain the standard least-squares estimate. To see this, note that since , it holds for all i that

Thus, the estimator is minimizing the sum of squares of the residuals:

For the choice , it turns out that we can solve the equations in (38) in closed form. The final result is summarized in the corollary below and proved in Appendix G.1.

5.4. Optimal Risk for GMM

Next, we characterize the best achievable classification error by different choices of loss function. Considering (39), we see that an optimal choice of ℓ is the one that minimizes . The next theorem characterizes the best achievable among convex loss functions by deriving an equivalent set of equations to (38) and combining them with proper coefficients. Similar to the proof of Theorem 2, a key step in the proof is properly setting up a Cauchy–Schwarz inequality that exploits the structure of the new set of equations. The proof is deferred to Appendix G.2.

Theorem 5.

(Lower Bound on Risk). Under the assumptions of Theorem 4, the following inequality holds for the effective risk parameter () of a loss function ℓ:

Remark 8.

(Optimality of Least-squares for GMM). Theorem 5 provides a lower bound for the asymptotic value of which holds for all and . This result together with Corollary 3 implies that least-squares achieves the least value of risk (i.e., and ) for all and among all convex loss functions ℓ for which the set of minimizers in (3) is bounded.

6. Numerical Experiments

In this section, we present numerical simulations that validate the predictions of Theorems 1–5. To begin, we use the following three popular models as our case study: signed, logistic and Probit. We generate random measurements according to (1). Without loss of generality (due to rotational invariance of the Gaussian measure), we set . We then obtain estimates of by numerically solving (3) and measure performance by the correlation value . Throughout the experiments, we set and the recorded values of correlation are averages over 25 independent realizations. For each label function, we first provide plots that compare results of Monte Carlo simulations to the asymptotic predictions for the loss functions discussed in Section 4, as well as to the optimal performance of Theorem 2. We next present numerical results on optimal loss functions. To empirically derive the correlation of the optimal loss function, we run gradient-descent-based optimization with 1000 iterations. As a general comment, we note that, despite being asymptotic, our predictions appear accurate even for relatively small problem dimensions. For the analytical predictions, we apply Theorem 1. In particular, for solving the system of non-linear equations in (8), we empirically observe (see also [15,47] for a similar observation) that, if a solution exists, then it can be efficiently found by the following fixed-point iteration method. Let and be such that (8) is equivalent to . With this notation, we initialize and for repeat the iterations until convergence.
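The fixed-point scheme described above can be sketched generically. The map below is a placeholder: the right-hand sides of (8) are not reproduced here, so `toy_map` is a hypothetical linear contraction on a triple of unknowns, and the stopping tolerance and iteration cap are assumptions rather than values from the paper.

```python
def fixed_point_iteration(F, x0, tol=1e-10, max_iter=10_000):
    """Iterate x_{k+1} = F(x_k) until successive iterates differ by less than tol.

    F maps a tuple of unknowns (e.g. a triple such as (alpha, mu, tau)) to a tuple of
    the same length. Returns the final iterate and the number of iterations used.
    """
    x = tuple(float(v) for v in x0)
    for k in range(1, max_iter + 1):
        x_next = tuple(float(v) for v in F(x))
        if max(abs(a - b) for a, b in zip(x_next, x)) < tol:
            return x_next, k
        x = x_next
    raise RuntimeError("fixed-point iteration did not converge within max_iter steps")


def toy_map(v):
    """Hypothetical contraction standing in for the right-hand side of (8)."""
    alpha, mu, tau = v
    return (0.5 * mu + 1.0, 0.5 * tau, 0.25 * alpha)


solution, iterations = fixed_point_iteration(toy_map, (1.0, 1.0, 1.0))
print(solution, iterations)   # approaches (16/15, 2/15, 4/15)
```

In practice, `toy_map` would be replaced by a function that evaluates the expectations in (8) (for instance, by Monte Carlo); convergence is then an empirical observation rather than a guarantee, as noted above.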

Logistic model. For the logistic model, comparison between the predicted values and the numerical results is illustrated in Figure 3a. Results are shown for LS, logistic and hinge loss functions. Note that minimizing the logistic loss corresponds to the maximum-likelihood estimator (MLE) for logistic model. An interesting observation in Figure 3a is that in the high-dimensional setting (finite ) LS has comparable (if not slightly better) performance to MLE. Additionally, we observe that in this model, performance of LS is almost the same as the best possible performance derived according to Theorem 2. This confirms the analytical conclusion of Section 4.1. The comparison between the optimal loss function as in Theorem 3 and other loss functions is illustrated in Figure 3b. We note the obvious similarity between the shapes of optimal loss functions and LS which further explains the similarity between their performance.
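A small-scale version of the LS-versus-MLE comparison can be run in a few lines. This sketch assumes the logistic model P(y = +1 | a) = 1/(1 + exp(−aᵀw₀)) with ‖w₀‖ = 1, m/n = 10 and a single realization; these are illustrative settings, not those behind Figure 3a, so its output is not the paper's result. The logistic-loss ERM (the MLE) is computed with `scipy.optimize.minimize` (L-BFGS-B) using an analytic gradient.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import expit


def correlation(w_hat, w0):
    """Normalized correlation <w_hat, w0> / (||w_hat|| ||w0||)."""
    return float(w_hat @ w0 / (np.linalg.norm(w_hat) * np.linalg.norm(w0)))


def logistic_mle(A, y):
    """Minimize sum_i log(1 + exp(-y_i a_i^T w)), i.e. the logistic-loss ERM (the MLE)."""
    def objective(w):
        margins = y * (A @ w)
        value = np.sum(np.logaddexp(0.0, -margins))      # stable log(1 + exp(-t))
        grad = -A.T @ (y * expit(-margins))              # gradient of the sum above
        return value, grad

    result = minimize(objective, np.zeros(A.shape[1]), jac=True, method="L-BFGS-B")
    return result.x


rng = np.random.default_rng(3)
m, n = 1000, 100                                         # m / n = 10 (assumed setting)
A = rng.standard_normal((m, n))
w0 = rng.standard_normal(n)
w0 /= np.linalg.norm(w0)                                 # ||w0|| = 1 (assumed signal strength)
y = np.where(rng.random(m) < expit(A @ w0), 1.0, -1.0)   # logistic labels in {-1, +1}

w_ls, *_ = np.linalg.lstsq(A, y, rcond=None)
w_mle = logistic_mle(A, y)
print(f"LS: {correlation(w_ls, w0):.3f}, logistic MLE: {correlation(w_mle, w0):.3f}")
```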

Probit model. Theoretical predictions for the performance of hinge and LS loss functions are compared with the empirical results and optimal performance of Theorem 2 in Figure 4a. Similar to the logistic model, in this model, LS also outperforms hinge loss and its performance resembles the performance of optimal loss function derived according to Theorem 3. Figure 4b illustrates the shapes of LS, hinge loss and the optimal loss functions for the Probit model. The obvious similarity between the shape of LS and optimal loss functions for all values of explains the close similarity of their performance.

Additionally, by comparing the LS performance for the three models in Figure 1a, Figure 3a and Figure 4a, it is clear that higher (respectively, lower) correlation values are achieved for signed (respectively, logistic) measurements. This behavior is indeed predicted by Corollary 2: correlation performance is higher for higher values of . It can be shown that, for the signed, probit and logistic models (with ), we have , respectively.

Optimal loss function. By putting together Theorems 2 and 3, we obtain a method for deriving the optimal loss function for generative binary models. This requires the following steps.

Note that computing needs the density function of the random variable . In principle can be calculated as the convolution of the Gaussian density with the pdf of . Moreover, it follows from the recipe above that the optimal loss function depends on in general. This is because itself depends on via (12).
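The convolution mentioned above can be approximated on a grid. Because the relevant random variable is not reproduced in this copy, the sketch takes its pdf as an input (`pdf_x`) and returns the density of X + σG with G ~ N(0, 1) independent of X; the function names, grid width and resolution are assumptions. Passing a standard Gaussian pdf gives a simple check, since the result should then be the N(0, 1 + σ²) density.

```python
import numpy as np


def gaussian_pdf(x, sigma=1.0):
    """Density of N(0, sigma^2) evaluated at x."""
    return np.exp(-0.5 * (x / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


def density_of_sum_with_gaussian(pdf_x, sigma=1.0, half_width=10.0, num=4001):
    """Approximate the pdf of X + sigma * G on a grid, with G ~ N(0, 1) independent of X.

    Uses a Riemann-sum discretization of (p_X * phi_sigma)(t) = int p_X(s) phi_sigma(t - s) ds.
    The grid is symmetric with an odd number of points, so mode="same" keeps the output
    aligned with the input grid.
    """
    if num % 2 == 0:
        raise ValueError("num must be odd so that the grid is centered at 0")
    grid = np.linspace(-half_width, half_width, num)
    step = grid[1] - grid[0]
    density = np.convolve(pdf_x(grid), gaussian_pdf(grid, sigma), mode="same") * step
    return grid, density


grid, density = density_of_sum_with_gaussian(gaussian_pdf)
print(density[np.argmin(np.abs(grid))])   # compare with 1 / sqrt(4 * pi), the N(0, 2) pdf at 0
```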

Numerical Experiments for GMM

Theorem 5 implies the optimality of least-squares among convex loss functions in the under-parameterized regime . In Figure 2, we show the classification risk of least-squares alongside two other well-known loss functions, LAD and logistic, for . Solid lines correspond to the theoretical predictions of Theorem 4. For least-squares, we rely on the result of Corollary 3, and for LAD and logistic loss, the system of equations is solved by iterating over the equations, where we observe that after a relatively small number of iterations the triple converges to . We use and samples to compute the expectations in (38) for LAD and logistic loss, respectively. After deriving , the classification risk is obtained according to the formula in (39). Dots correspond to the empirical evaluations of the classification risk of loss functions for and for different values of . The resulting numbers are averaged over 30 independent experiments. As observed, the empirical results closely follow the theoretical predictions of Theorem 4. Furthermore, as predicted by Theorem 5, least-squares has the minimum expected classification risk among the convex loss functions considered, for all .

7. Conclusions

We derive theoretical predictions for the generalization error of estimators obtained by ERM for generative binary models and a Gaussian-mixture model. Furthermore, we use these theoretical characterizations to find the optimal performance and the optimal loss function among all convex losses. Although our analysis holds for Gaussian matrices, we empirically show that the predictions hold for sub-Gaussian matrices as well. As an exciting future direction, we plan to extend our analysis on sharp asymptotics and optimal loss functions to non-isotropic (Gaussian) features with arbitrary covariance. A more challenging, albeit interesting, direction is going beyond the (binary) linear models studied in this paper, by considering asymptotics and optimal error for kernel models and neural networks (see [48,57] for partial progress in this direction).

Author Contributions

Formal analysis, H.T., R.P. and C.T.; Funding acquisition, R.P. and C.T.; Investigation, H.T., R.P. and C.T.; Methodology, H.T., R.P. and C.T.; Project administration, H.T., R.P. and C.T.; Software, H.T.; Supervision, H.T., R.P. and C.T.; Writing—original draft, H.T., R.P. and C.T.; Writing—review & editing, H.T., R.P. and C.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by NSF CNS-2003035, NSF CCF-2009030 and NSF CCF-1909320.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Properties of Moreau Envelopes

Appendix A.1. Derivatives

Recall the definition of the Moreau envelope and proximal operator of a function ℓ:

and .

Proposition A1

(Basic properties of and [52]). Let be lower semi-continuous (lsc), proper and convex. The following statements hold for any .

- (a)

- The proximal operator is unique and continuous. In fact, whenever with .

- (b)

- The value is finite and depends continuously on , with for all x as .

- (c)

- The Moreau envelope function is differentiable with respect to both arguments. Specifically, for all , the following properties are true: If, in addition, ℓ is differentiable and denotes its derivative, then
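The derivative formulas for the Moreau envelope can be checked by finite differences. The sketch assumes the convention M_ℓ(x; λ) = min_v (x − v)²/(2λ) + ℓ(v), for which the standard identities are ∂M/∂x = (x − prox_ℓ(x; λ))/λ and ∂M/∂λ = −(x − prox_ℓ(x; λ))²/(2λ²); when ℓ is differentiable, ∂M/∂x also equals ℓ′(prox_ℓ(x; λ)). It uses the logistic loss ℓ(v) = log(1 + e^{−v}) as an example and computes the proximal operator by bisection; all function names are illustrative.

```python
import math


def logistic_loss(v):
    """l(v) = log(1 + exp(-v)), computed stably for both signs of v."""
    return max(-v, 0.0) + math.log1p(math.exp(-abs(v)))


def logistic_loss_derivative(v):
    """l'(v) = -1 / (1 + exp(v)), which lies in (-1, 0)."""
    return -0.5 * (1.0 - math.tanh(v / 2.0))


def prox(x, lam, iters=200):
    """prox(x; lam) = argmin_v (x - v)^2 / (2 lam) + l(v), found by bisection.

    The optimality condition (v - x) / lam + l'(v) = 0 has a strictly increasing
    left-hand side, and since l' lies in (-1, 0) the root lies in (x, x + lam).
    """
    lo, hi = x, x + lam
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (mid - x) / lam + logistic_loss_derivative(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def moreau_envelope(x, lam):
    p = prox(x, lam)
    return (x - p) ** 2 / (2.0 * lam) + logistic_loss(p)


def derivative_check(x, lam, h=1e-5):
    """Return |central difference - closed form| for the x- and lam-derivatives."""
    p = prox(x, lam)
    dx_fd = (moreau_envelope(x + h, lam) - moreau_envelope(x - h, lam)) / (2.0 * h)
    dlam_fd = (moreau_envelope(x, lam + h) - moreau_envelope(x, lam - h)) / (2.0 * h)
    dx_closed = (x - p) / lam                       # equals l'(p) for differentiable l
    dlam_closed = -((x - p) ** 2) / (2.0 * lam ** 2)
    return abs(dx_fd - dx_closed), abs(dlam_fd - dlam_closed)


for x, lam in [(-2.0, 0.5), (0.3, 1.0), (1.7, 2.0)]:
    print(x, lam, derivative_check(x, lam))
```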

Appendix A.2. Alternative Representations of (8)

Replacing the above relations for derivative of in (8), we can write the equations in terms of the proximal operator. If ℓ is differentiable, then Equations (8) can be equivalently written as follows:

Finally, if ℓ is two times differentiable, then applying integration by parts in Equation (14c) results in the following reformulation of (8c):

Appendix A.3. Examples of Proximal Operators

LAD.

For , the proximal operator admits a simple expression, as follows:

where

is the standard soft-thresholding function.

Hinge Loss.

When , the proximal operator can be expressed in terms of the soft-thresholding function as follows:
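Both proximal operators can be checked against a brute-force grid search. The sketch assumes the LAD loss ℓ(t) = |t| and the hinge loss ℓ(t) = max(1 − t, 0), with prox_ℓ(x; λ) = argmin_v (x − v)²/(2λ) + ℓ(v). For the hinge loss it uses the identity prox(x; λ) = 1 + soft(x − 1 + λ/2; λ/2), which reproduces the three cases x ≥ 1, 1 − λ ≤ x < 1 and x < 1 − λ. If the LAD loss is instead shifted, e.g. |t − 1|, its proximal operator becomes 1 + soft(x − 1; λ). Function names are illustrative.

```python
import numpy as np


def soft_threshold(x, tau):
    """Standard soft-thresholding: sign(x) * max(|x| - tau, 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def prox_lad(x, lam):
    """prox of the (assumed) LAD loss l(t) = |t|: soft-thresholding at level lam."""
    return soft_threshold(x, lam)


def prox_hinge(x, lam):
    """prox of the (assumed) hinge loss l(t) = max(1 - t, 0).

    Piecewise: x if x >= 1, 1 if 1 - lam <= x < 1, and x + lam if x < 1 - lam,
    which equals a shifted soft-thresholding at level lam / 2.
    """
    return 1.0 + soft_threshold(x - 1.0 + lam / 2.0, lam / 2.0)


def hinge(v):
    return np.maximum(1.0 - v, 0.0)


def prox_brute_force(loss, x, lam, half_width=5.0, num=1_000_001):
    """Grid search for argmin_v (x - v)^2 / (2 lam) + loss(v); accurate to about the grid step."""
    v = np.linspace(x - half_width, x + half_width, num)
    return float(v[np.argmin((x - v) ** 2 / (2.0 * lam) + loss(v))])


print(prox_hinge(-3.0, 1.0), prox_brute_force(hinge, -3.0, 1.0))
print(prox_lad(0.3, 0.5), prox_brute_force(np.abs, 0.3, 0.5))
```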

Appendix A.4. Fenchel–Legendre Conjugate Representation

For a function , its Fenchel–Legendre conjugate, is defined as:

The following proposition relates the Moreau envelope of a function to its Fenchel–Legendre conjugate.

Proposition A2.

For and a function h, we have:

where

Proof.

□

Appendix A.5. Convexity of the Moreau Envelope

Lemma A1.

The function defined as follows

is jointly convex in its arguments.

Proof.

Note that the function is jointly convex in . Thus, its perspective function

is jointly convex in [58] (Sec. 2.3.3), which completes the proof. □
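Assuming, as the proof suggests, that the function in Lemma A1 is (x − v)²/(2λ) on λ > 0, the perspective argument can also be verified directly: with u = x − v, the Hessian of (u, λ) ↦ u²/(2λ) is a nonnegative multiple of a rank-one outer product.

```latex
% Assumed form of the function in Lemma A1: H(x, v, \lambda) = (x - v)^2 / (2\lambda), \lambda > 0.
f(u,\lambda) = \frac{u^2}{2\lambda}, \qquad
\nabla^2 f(u,\lambda)
= \begin{pmatrix} \dfrac{1}{\lambda} & -\dfrac{u}{\lambda^2} \\[6pt] -\dfrac{u}{\lambda^2} & \dfrac{u^2}{\lambda^3} \end{pmatrix}
= \frac{1}{\lambda^3}\begin{pmatrix} \lambda \\ -u \end{pmatrix}\begin{pmatrix} \lambda & -u \end{pmatrix} \succeq 0 .
```

Since (x, v, λ) ↦ (x − v, λ) is linear, composing with it preserves convexity, so the function is jointly convex in (x, v, λ).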

Proposition A3.

(a) Ref. [52] (Prop. 2.22) Let be jointly convex in its arguments. Then, the function is convex.

(b) Ref. [58] (Sec. 3.2.3) Suppose is a set of concave functions, with an index set. Then, the function defined as is concave.

Lemma A2.

Let be a lsc, proper, convex function. Then, is jointly convex in .

Proof.

Recall that

where, for compactness, we let denote the triplet . Now, let , and . With this notation, we may write

For the first equality above, we recall the definition of in (A10) and the inequality right after follows from Lemma A1 and convexity of ℓ. Thus, the function G is jointly convex in its arguments. Using this fact, as well as (A11), and applying Proposition A3(a) completes the proof. □

Appendix A.6. The Expected Moreau-Envelope (EME) Function and its Properties

The performance of the ERM estimator (3) is governed by the system of equations (8), in which the Moreau envelope function of the loss function ℓ plays a central role. More precisely, as already hinted by (8) and as becomes clear in Appendix B, what governs the behavior is the function

which we call the expected Moreau envelope (EME). Recall here that . Hence, the EME is the key summary parameter that captures the role of both the loss function and of the link function on the statistical performance of (3).

In this section, we study several favorable properties of the EME. In (A12), the expectation is over . We first study the EME under more general distribution assumptions in Appendix A.6.1, Appendix A.6.2 and Appendix A.6.3 and we then specialize our results to Gaussian random variables G and S in Appendix A.6.4.

Appendix A.6.1. Derivatives

Proposition A4.

Let be a lsc, proper and convex function. Further, let be independent random variables with bounded second moments , . Then, the expected Moreau envelope function is differentiable with respect to both c and λ and the derivatives are given as follows:

Proof.

The proof is an application of the Dominated Convergence Theorem (DCT). First, by Proposition A1(b), for every and any , the function takes a finite value. Second, by Proposition A1(c), is continuously differentiable with respect to both c and :

From this, note that the Cauchy–Schwarz inequality gives

Therefore, the remaining condition to check so that the DCT can be applied is that the term above is integrable. To begin with, we can easily bound A as: Next, by non-expansiveness (Lipschitz property) of the proximal operator [52] (Prop. 12.19), we have that Putting these together, we find that

We consider two cases. First, for fixed and any compact interval , we have that

Similarly, for fixed c and any compact interval on the positive real line, we have that

where we also used boundedness of the proximal operator (cf. Proposition A1(a)). This completes the proof. □

Appendix A.6.2. Strict Convexity

We study convexity properties of the expected Moreau envelope function :

for a lsc, proper, convex function ℓ and independent random variables X and Z with positive densities. Here, and onwards, we let denote a triplet and the expectation is over the randomness of X and Z. From Lemma A2, it is easy to see that is convex. In this section, we prove a stronger claim:

“ If ℓ is strictly convex and does not attain its minimum at 0, then is also strictly convex. ”

This is summarized in Proposition A5 below.

Proposition A5.

(Strict Convexity). Let be a function with the following properties: (i) it is proper strictly convex; and (ii) it is continuously differentiable and its derivative is such that . Further, let be independent random variables with strictly positive densities. Then, the function in (A15) is jointly strictly convex in its arguments.

Proof.

Let , and . Further, assume that and define the proximal operators

for . Finally, denote and . With this notation,

The first inequality above follows by the definition of the Moreau envelope in (A1). The equality in the second line uses the definition of the function in (A10). Finally, the last inequality follows from convexity of H as proved in Lemma A1.

Continuing from (A16), we may use convexity of ℓ to find that

This already proves convexity of (A15). In what follows, we argue that the inequality in (A17) is in fact strict under the assumptions of the proposition.

Specifically, in Lemma A3, we prove that, under the assumptions of the proposition, for , it holds that

Using this in (A16) completes the proof of the proposition. The idea behind the proof of Lemma A3 is as follows. First, we use the fact that and to argue that there exists a non-zero measure set of such that . Then, the desired claim follows by strict convexity of ℓ. □

Lemma A3.

Let be a proper strictly convex function that is continuously differentiable with . Further, assume independent continuous random variables with strictly positive densities. Fix arbitrary triplets such that . Further, denote

Then, there exists a ball of nonzero measure, i.e., , such that , for all . Consequently, for any and , the following strict inequality holds,

Proof.

Note that (A19) holds trivially, with the strict inequality replaced by its non-strict counterpart, due to the convexity of ℓ. To prove that the inequality is strict, it suffices, by strict convexity of ℓ, that there exists a subset that satisfies the following two properties:

- , for all .

- .

Consider the following function :

By Lemma A4, there exists such that

Moreover, by continuity of the proximal operator (cf. Proposition A1(a)), it follows that f is continuous. From this and (A21), we conclude that for sufficiently small there exists a -ball centered at , such that property 1 holds. Property 2 is also guaranteed to hold for , since both have strictly positive densities and are independent. □

Lemma A4.

Let be a proper, convex function. Further, assume that is continuously differentiable and . Let , . Then, the following statement is true


Proof.

We prove the claim by contradiction, but first, let us set up some useful notation. Let denote triplets and further define

and

By Proposition A1, the following is true:

For the sake of contradiction, assume that the claim of the lemma is false. Then,

From this, it also holds that

Recalling (A23) and applying (A24), we derive the following from (A25):

We consider the following two cases separately.

Case 1: : Since , it holds that

However, from (A26) we have that for all . This contradicts (A27) and completes the proof for this case.

Case 2: : Continuing from (A26), we can compute that for all

By replacing from (A26), we derive that:

where

By replacing in (A29), we find that . This contradicts the assumption of the lemma and completes the proof. □

Appendix A.6.3. Strict Concavity

In this section, we study the following variant of the expected Moreau envelope:

for a lower semi-continuous, proper, convex function ℓ and continuous random variable X. The expectation above is over the randomness of X. In Appendix B.4, we show that the function is concave in . Here, we prove the following statement regarding strict-concavity of :

“ If ℓ is convex, continuously differentiable and , then is strictly concave. ”

This is summarized in Proposition A6 below.

Proposition A6.

(Strict concavity). Let be a convex, continuously differentiable function for which . Further, let X be a continuous random variable in with strictly positive density on the real line. Then, the function Γ in (A23) is strictly concave in .

Proof.

Before everything, we introduce the following convenient notation:

Note from Proposition A1 that is differentiable with derivative

We proceed in two steps as follows. First, for fixed and , we prove in Lemma A5 that

This shows that for all

Second, we use Lemma A3 to argue that the inequality is in fact strict for all where and . To be concrete, apply Lemma A3 for . Notice that all the assumptions of the lemma are satisfied; hence, there exists an interval for which and

Hence, from (A32), it follows that

From this, and (A31) we conclude that

Thus, from (A33) and (A34), as well as the facts that and , we conclude that is strictly concave in . □

Lemma A5.

Let be a convex, continuously differentiable function. Fix and denote . Then, for any , it holds that

Moreover, for , the following statement is true:

Proof.

First, we prove (A35). Then, we use it to prove (A36).

Proof of (A35): Consider function defined as follows . By assumption, g is differentiable with derivative . Moreover, g is -strongly convex. Finally, by optimality of the proximal operator (cf. Proposition A1), it holds that and . Using these, it can be computed that and .

In the following inequalities, we combine all the aforementioned properties of the function g to find that

This leads to the desired statement and completes the proof of (A35).

Proof of (A36): We fix and apply (A35) two times as follows. First, applying (A35) for and using the fact that , we find that

Second, applying (A35) for and using again the fact that , we find that

Adding (A37) and (A38), we show the desired property as follows:

□

Appendix A.6.4. Summary of Properties of Ω

Proposition A7.

Let be a lsc, proper, convex function. Let and function such that the random variable has a continuous strictly positive density on the real line. Then, the following properties are true for the expected Moreau envelope function

- (a)

- The function Ω is differentiable and its derivatives are given as follows:

- (b)

- The function Ω is jointly convex and concave on γ.

- (c)

- The function Ω is increasing in α.

For the statements below, further assume that ℓ is strictly convex and continuously differentiable with .

- (d)

- The function Ω is strictly convex in and strictly concave in λ.

- (e)

- The function Ω is strictly increasing in α.

Proof.

Statements (a), (b) and (d) follow directly from Propositions A4–A6. It remains to prove Statements (c) and (e). Let . Then, there exist independent copies of G and such that . Hence, we have the following chain of inequalities:

where the inequality follows from Jensen’s inequality and the convexity of with respect to (see Statement (b) of the proposition). This proves Statement (c). For Statement (e), note that the inequality is strict provided that is strictly convex (see Statement (d) of the proposition). □

Appendix B. Proof of Theorem 1

In this section, we provide a proof sketch of Theorem 1. The main technical tool that facilitates our analysis is the convex Gaussian min-max theorem (CGMT), which is an extension of Gordon’s Gaussian min-max inequality (GMT). We introduce the necessary background on the CGMT in Appendix B.1.

The CGMT has been mostly applied to linear measurements [9,10,13,15,19]. The simple, yet central, idea that allows us to extend it to the binary measurements considered here is a certain projection trick inspired by Plan and Vershynin [40]. Here, we apply a similar trick, but, in our setting, we recognize that it suffices to simply rotate to align with the first basis vector. The simple rotation decouples the measurements from the last coordinates of the measurement vectors (see Appendix B.2). While this is sufficient for LS in [43], to study more general loss functions, we further need to combine this with a duality argument similar to that in [13]. Moreover, while the steps that bring the ERM minimization to the form of a PO (see (A48)) bear the aforementioned similarities to those in [13,43], the resulting AO is different from the one studied in previous works. Hence, the mathematical derivations in Appendix B.3 and Appendix B.4 are different. This also leads to a different system of equations characterizing the statistical behavior of ERM. Finally, in Appendix B.5, we prove uniqueness of the solution of this system of equations using the properties of the expected Moreau envelope function studied in Appendix A.6.
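The rotation step can be made concrete with a Householder reflection, one convenient orthogonal map sending w₀ to ‖w₀‖e₁ (strictly a reflection rather than a rotation, but the argument only uses orthogonality and the rotational invariance of the Gaussian distribution). The sketch below, with illustrative dimensions and the signed model as an example link function, checks that after the change of basis each measurement depends only on the first coordinate of the transformed feature vector.

```python
import numpy as np


def householder_to_e1(w0):
    """Orthogonal, symmetric H with H @ w0 = ||w0|| * e1 (a Householder reflection)."""
    n = w0.size
    e1 = np.zeros(n)
    e1[0] = 1.0
    v = w0 - np.linalg.norm(w0) * e1
    if np.linalg.norm(v) < 1e-12 * np.linalg.norm(w0):   # w0 already points along e1
        return np.eye(n)
    return np.eye(n) - 2.0 * np.outer(v, v) / (v @ v)


rng = np.random.default_rng(4)
n, m = 50, 120
w0 = rng.standard_normal(n)
A = rng.standard_normal((m, n))       # rows a_i are iid N(0, I_n)
H = householder_to_e1(w0)
A_rot = A @ H                         # rows (H a_i)^T are again iid N(0, I_n)

# H is symmetric and orthogonal, so a_i^T w0 = (H a_i)^T (H w0) = ||w0|| * (H a_i)_1:
# the labels y_i = sign(a_i^T w0) depend only on the first column of A_rot.
y = np.sign(A @ w0)
y_from_first_coordinate = np.sign(np.linalg.norm(w0) * A_rot[:, 0])
print(np.array_equal(y, y_from_first_coordinate))
```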

Appendix B.1. Technical Tool: CGMT

Appendix B.1.1. Gordon’s Min-Max Theorem (GMT)

Gordon’s Gaussian comparison inequality [59] compares the min-max values of two doubly indexed Gaussian processes based on how their autocorrelation functions compare. The inequality is quite general (see [59]), but for our purposes we only need its application to the following two Gaussian processes:

where , , , they all have entries iid Gaussian; the sets and are compact; and . For these two processes, define the following (random) min-max optimization programs, which we refer to as the primary optimization (PO) problem and the auxiliary optimization (AO).

According to Gordon’s comparison inequality (To be precise, the formulation in (A42), which is due to [13], is slightly different from the original statement in Gordon’s paper (see [13] for details).), for any , it holds:

In other words, a high-probability lower bound on the AO is a high-probability lower bound on the PO. The premise is that it is often much simpler to lower bound the AO than the PO.

Appendix B.1.2. Convex Gaussian Min-Max Theorem (CGMT)

The proof of Theorem 1 builds on the CGMT [13]. For ease of reference, we summarize here the essential ideas of the framework following the presentation in [15] (please see [15] (Section 6) for the formal statement of the theorem and further details). The CGMT is an extension of the GMT, and it asserts that the AO in (A41b) can be used to tightly infer properties of the original PO in (A41a), including the optimal cost and the optimal solution. According to the CGMT [15] (Theorem 6.1), if the sets and are convex and is continuous convex-concave on , then, for any and , it holds that

In words, concentration of the optimal cost of the AO problem around implies concentration of the optimal cost of the corresponding PO problem around the same value . Moreover, starting from (A43) and under strict convexity conditions, the CGMT shows that concentration of the optimal solution of the AO problem implies concentration of the optimal solution of the PO to the same value. For example, if minimizers of (A41b) satisfy for some , then the same holds true for the minimizers of (A41a): [15] (Theorem 6.1(iii)). Thus, one can analyze the AO to infer corresponding properties of the PO, the premise being, of course, that the former is simpler to handle than the latter.

Appendix B.2. Applying the CGMT to ERM for Binary Classification

In this section, we show how to apply the CGMT to (3). For convenience, we drop the subscript ℓ from and simply write

where the measurements follow (1). By rotational invariance of the Gaussian distribution of the measurement vectors , we assume without loss of generality that . We can rewrite (A44) as a constrained optimization problem by introducing n variables as follows:

This problem is now equivalent to the following min-max formulation:

Now, let us define

such that and are the first entries of and , respectively. Note that in this new notation (1) becomes:

and

where we decompose . In addition, (A45) is written as

or, in matrix form, as

where is a diagonal matrix with on the diagonal, and is an matrix with rows .

In (A48), we recognize that the first term has the bilinear form required by the GMT in (A41a). The rest of the terms form the function in (A41a): they are independent of and convex-concave as desired by the CGMT. Therefore, we express (A44) in the desired form of a PO and for the rest of the proof we analyze the probabilistically equivalent AO problem. In view of (A41b), this is given as follows,

where as in (A41b) and .

Appendix B.3. Analysis of the Auxiliary Optimization

Here, we show how to analyze the AO in (A49). To begin with, note that , therefore and . In addition, let us denote the first entry of as

The first step is to optimize over the direction of . For this, we express the AO as:

Now, denote and observe that for every the objective above is minimized (with respect to to ) at . Thus, it follows by [23] (Lem. 8) that (A50) simplifies to

Next, let and optimize over the direction of to yield

To continue, we utilize the fact that for all , . Hence,

With this trick, the optimization over becomes separable over its coordinates . By inserting this in (A52), we have

Now, we show that the objective function above is convex-concave. Clearly, the function is linear (thus, concave in ). Moreover, from Lemma A1, the function is jointly convex in . The remaining terms are clearly convex, which completes the argument. Hence, after a permissible interchange of the order of min and max, we arrive at the following convenient form. (Here, we skip certain technical details regarding the boundedness of the constraint sets in (A49); while not trivial, they can be handled with the same techniques used in [15,60].)

where we recall the definition of the Moreau envelope in (A1). So far, we have reduced the AO to a random min-max optimization over only four scalar variables in (A53). For fixed , direct application of the weak law of large numbers shows that the objective function of (A53) converges in probability to the following as and :

where and (in view of (A46)). Based on that, it can be shown (similar arguments are developed in [15,60]) that the random optimizers and of (A53) converge to the deterministic optimizers and of the following (deterministic) optimization problem (whenever these are bounded as the statement of the theorem requires):

At this point, recall that represents the norm of and the value of . Thus, in view of (i) (A47); (ii) the equivalence between the PO and the AO; and (iii) our derivations thus far, we have that with probability approaching 1,

where and are the minimizers in (A54). The three equations in (8) are derived from the first-order optimality conditions of the optimization in (A54). We show this next.
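The derivation above uses two facts about the Moreau envelope: its derivative, and the convergence of its empirical average under the weak law of large numbers. The NumPy sketch below illustrates both. It assumes that (A1) is the standard definition M_ℓ(x; λ) = min_v (x − v)²/(2λ) + ℓ(v), and it takes the logistic loss as an example of ℓ. All helper names are hypothetical. The proximal point is found by bisection on the scalar optimality condition.

```python
import numpy as np

def logistic_loss(v):
    # l(v) = log(1 + exp(-v)), evaluated stably
    return np.logaddexp(0.0, -v)

def sigmoid(z):
    # numerically stable logistic function 1 / (1 + exp(-z))
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def prox_logistic(x, lam, iters=100):
    """argmin_v (x - v)^2 / (2 lam) + l(v), by bisection on the optimality condition.

    The condition (v - x) / lam - sigmoid(-v) = 0 has its unique root in [x, x + lam],
    because l'(v) = -sigmoid(-v) lies in (-1, 0).
    """
    lo = np.asarray(x, dtype=float)
    hi = lo + lam
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        negative = (mid - x) / lam - sigmoid(-mid) < 0
        lo = np.where(negative, mid, lo)
        hi = np.where(negative, hi, mid)
    return 0.5 * (lo + hi)

def moreau_envelope(x, lam):
    v = prox_logistic(x, lam)
    return (x - v) ** 2 / (2.0 * lam) + logistic_loss(v)

# 1) Derivative: d/dx M(x; lam) = (x - prox(x; lam)) / lam, checked by central differences.
lam, eps = 1.5, 1e-5
x = np.linspace(-3.0, 3.0, 13)
fd = (moreau_envelope(x + eps, lam) - moreau_envelope(x - eps, lam)) / (2.0 * eps)
analytic = (x - prox_logistic(x, lam)) / lam
print(np.max(np.abs(fd - analytic)))

# 2) Weak law of large numbers: sample means of M(G; lam), G ~ N(0, 1), approach E[M(G; lam)].
grid = np.linspace(-10.0, 10.0, 20001)
density = np.exp(-0.5 * grid**2) / np.sqrt(2.0 * np.pi)
reference = np.sum(moreau_envelope(grid, lam) * density) * (grid[1] - grid[0])
rng = np.random.default_rng(0)
estimates = {n: moreau_envelope(rng.standard_normal(n), lam).mean() for n in (100, 10_000, 200_000)}
print(reference, estimates)
```

Bisection is used here instead of Newton's method because the bracket [x, x + λ] makes it robust for every λ > 0.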

Appendix B.4. Convex-Concavity and First-Order Optimality Conditions

First, we prove that the objective function in (A54) is convex–concave. For convenience, define the function as follows

Based on Lemma A2, it immediately follows that, if ℓ is convex, F is jointly convex in . To prove concavity of F in , it suffices to show that is concave in for all . To show this, we note that

which is the point-wise minimum of linear functions of . Thus, using Proposition A3(b), we conclude that is concave in . This completes the proof of convex-concavity of the function F in (A55) when ℓ is convex. By direct differentiation and applying Proposition A7(a), the first-order optimality conditions of the min–max optimization in (A54) are as follows:

Next, we show how these equations simplify to the following system of equations (same as (8)):

Let . First, (A57a) is immediate from equation (A56a). Second, substituting from (A56c) in (A56d) yields or , which together with (A56b) leads to (A57c). Finally, (A57b) can be obtained by substituting in (A56c) and using the fact that (see Proposition A1):

Appendix B.5. On the Uniqueness of Solutions to (A57): Proof of Proposition 1

Here, we prove the claim of Proposition 1 through the following lemmas. As discussed in Remark 4, the main part of the proof is showing strict convex-concavity of F in (11). Lemma A6 proves that this is the case, and Lemmas A7 and A8 show that this is sufficient for the uniqueness of solutions to (A57). When put together, these complete the proof of Proposition 1.

Lemma A6.

(Strict Convex-Concavity of (A55)). Let be a proper and strictly convex function. Further, assume that ℓ is continuously differentiable with . In addition, assume that has a positive density on the real line. Then, the function defined in (A55) is strictly convex in and strictly concave in γ.

Proof.

The claim follows directly from the strict convexity-concavity properties of the expected Moreau-envelope proved in Propositions A5 and A6. Specifically, we apply Proposition A7. □

Lemma A7.

If the objective function in (A55) is strictly convex in and strictly concave in γ, then (A56) has a unique solution .

Proof.

Let be two different saddle points of (A55). For convenience, let for . By strict concavity in , for fixed values of , the value of maximizing is unique. Thus, if , then it must hold that , which contradicts our assumption that . Similarly, we can use strict convexity to derive that . Then, based on the definition of the saddle point and strict convexity-concavity, the following two relations hold for :

We choose for and for to find

From the above, it follows that and , which is a contradiction. This completes the proof. □

Lemma A8.

If (A56) has a unique solution , then (A57) has a unique solution .

Proof.

First, following the same approach used in Appendix B.4 to derive Equations (A57) from (A56), it is easy to see that existence of a solution to (A56) implies existence of a solution to (A57). Now, for the sake of contradiction to the statement of the lemma, assume that there are two different triplets and with and satisfying (A57). Then, we can show that both such that:

satisfy the system of equations in (A56). However, since , it must be that . This contradicts the assumption of uniqueness of solutions to (A56) and completes the proof. □

Appendix C. Discussions on the Fundamental Limits for Binary Models

- On the Uniqueness of Solutions to Equation

The existence of a solution to the equation is proved in the previous section. However, it is not clear whether the solution to this equation is unique, i.e., whether for any there exists only one such that . If this is the case, then Equation (12) in Theorem 2 can be equivalently written as

Although we do not prove this claim, our numerical experiments in Figure A1 show that is a monotonic function for the noisy-signed, logistic and Probit measurement models, which implies uniqueness of the solution to the equation for all .

Appendix C.1. Distribution of SY in Special Cases

We derive the following densities for for the special cases ():

- Signed:

- Logistic:

- Probit:

In particular, we numerically observe that, for the logistic and Probit models, the resulting densities are similar to the density of a Gaussian distribution derived according to . Figure A2 illustrates this similarity for these two models. As discussed in Corollary 1, this similarity results in the tightness of the lower bound achieved for in Equation (23).
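The comparison in Figure A2 can be reproduced in spirit with a short numerical sketch. Assume S ~ N(0,1) and P(Y = +1 | S = s) = g(s) with g(−s) = 1 − g(s). Then the density of SY is φ(x)g(x) + φ(−x)(1 − g(−x)) = 2φ(x)g(x). The NumPy sketch below uses g(s) = 1{s > 0} (signed), the unit-scale logistic function, and Φ (Probit). The parameter values of the special cases above are not reproduced, so these links are illustrative choices. Each density is compared with the Gaussian of the same mean and variance.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def phi(x):
    # standard normal density
    return np.exp(-0.5 * x**2) / math.sqrt(2.0 * math.pi)

def Phi(x):
    # standard normal CDF
    return 0.5 * (1.0 + _erf(x / math.sqrt(2.0)))

# P(Y = +1 | S = s) for the three illustrative link functions
LINKS = {
    "signed": lambda s: (s > 0).astype(float),
    "logistic": lambda s: 0.5 * (1.0 + np.tanh(0.5 * s)),
    "probit": Phi,
}

def density_SY(x, link):
    # p_{SY}(x) = 2 phi(x) g(x) when g(-s) = 1 - g(s)
    return 2.0 * phi(x) * link(x)

def moments(link, lo=-12.0, hi=12.0, n=40001):
    # total mass, mean and variance of SY by a Riemann sum on a truncated grid
    x = np.linspace(lo, hi, n)
    dx = x[1] - x[0]
    p = density_SY(x, link)
    mass = np.sum(p) * dx
    mean = np.sum(x * p) * dx
    var = np.sum((x - mean) ** 2 * p) * dx
    return mass, mean, var

def max_gap_to_gaussian(link):
    # sup-distance between p_{SY} and the Gaussian density with matched mean and variance
    _, mean, var = moments(link)
    x = np.linspace(-6.0, 6.0, 2001)
    gauss = np.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)
    return float(np.max(np.abs(density_SY(x, link) - gauss)))

for name, link in LINKS.items():
    mass, mean, var = moments(link)
    print(f"{name:8s} mass={mass:.4f} mean={mean:.4f} var={var:.4f} gap={max_gap_to_gaussian(link):.4f}")
```

For the Probit link, the density 2φ(x)Φ(x) is a skew-normal density with mean 1/√π and variance 1 − 1/π, which gives an exact check on the quadrature.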

Figure A1.

The value of as in Theorem 2 for various measurement models. Since is a monotonic function of , the solution to determines the minimum possible value of .

Figure A2.

Probability density function of for the logistic and Probit models () compared with the probability density function of the Gaussian random variable (dashed lines) with the same mean and variance, i.e., .

Appendix D. Proofs and Discussions on the Optimal Loss Function

Appendix D.1. Proof of Theorem 3

We show that the triplet is a solution to Equations (8) for ℓ chosen as in (29). Using Proposition A2 in the Appendix, we rewrite using the Fenchel–Legendre conjugate as follows:

where . For a function f, its Fenchel–Legendre conjugate is defined as:

Next, we use the fact that, for any proper, closed and convex function f, it holds that [61] (Theorem 12.2). Therefore, since is a convex function (see the proof of Lemma A9 in the Appendix), combining this with (A58) yields

Additionally, using Proposition A2, we find that which by (A49) reduces to:

Thus, by differentiation, we find that satisfies (28) with , i.e.,

Next, we establish the desired by directly substituting (A60) into the system of equations in (19). First, using the values of and in (30), as well as the fact that , we have the following chain of equations:

This shows (8b). Second, using again the specified values of and , a similar calculation yields

Recall from (17) that . This, combined with (A62), yields (8c). Finally, we use again (A60) and the specified values of and to find that

However, using (17), it holds that

This, combined with (A63) and (A62), shows that , as required to satisfy (8a). This completes the proof of the theorem.
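The proof above relies on the biconjugation identity f** = f for proper, closed and convex f ([61], Theorem 12.2). The NumPy sketch below illustrates this identity numerically for a stand-in convex function, the logistic loss f(x) = log(1 + e^{−x}). On (−1, 0), its conjugate has the closed form f*(y) = t log t + (1 − t) log(1 − t) with t = −y. The optimal loss of Theorem 3 itself is not reproduced here. Suprema are approximated by maxima over finite grids.

```python
import numpy as np

def logistic_loss(x):
    return np.logaddexp(0.0, -x)

def logistic_conjugate(y):
    # closed form on -1 < y < 0: f*(y) = t log t + (1 - t) log(1 - t), with t = -y
    t = -y
    return t * np.log(t) + (1.0 - t) * np.log(1.0 - t)

def discrete_conjugate(f_vals, grid, s):
    """Approximate sup_x (s*x - f(x)) at each point of s by maximizing over a finite grid of x."""
    return np.max(np.outer(s, grid) - f_vals[None, :], axis=1)

# Conjugate: grid maximization matches the closed form.
x_grid = np.linspace(-8.0, 8.0, 4001)
y_test = np.linspace(-0.95, -0.05, 91)
conj_err = np.max(np.abs(discrete_conjugate(logistic_loss(x_grid), x_grid, y_test)
                         - logistic_conjugate(y_test)))

# Biconjugate: f**(x) = sup_y (x*y - f*(y)) recovers f, since f is proper, closed and convex.
y_grid = np.linspace(-0.9999, -0.0001, 20001)
x_test = np.linspace(-3.0, 3.0, 61)
f_star_star = discrete_conjugate(logistic_conjugate(y_grid), y_grid, x_test)
biconj_err = np.max(np.abs(f_star_star - logistic_loss(x_test)))
print(conj_err, biconj_err)  # both small: only grid-discretization error remains
```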

Appendix D.2. On the Convexity of Optimal Loss Function

Here, we provide a sufficient condition for to be convex.

Lemma A9.

The optimal loss function as defined in Theorem 3 is convex if

Proof.

Using (A9), the optimal loss function can be written in the following form

Next, we prove that is a convex function. We first show that both and are positive for all values of . To this end, note that, since G and are independent random variables, . Therefore,

Additionally, the Cramér–Rao bound [53] for Fisher information yields:

Using this inequality for , we derive that

From (A65) and (A66), it follows that .
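The Cramér–Rao inequality used in (A66) can be illustrated numerically. For a random variable X with a smooth, positive density p, the location Fisher information I(X) = ∫ p′(x)²/p(x) dx satisfies I(X) ≥ 1/Var(X), with equality when X is Gaussian. The NumPy sketch below checks this for a standard Gaussian and for an arbitrarily chosen two-component Gaussian mixture. Integrals are computed by Riemann sums on a truncated grid, and neither density is taken from the paper.

```python
import numpy as np

GRID = np.linspace(-10.0, 10.0, 200001)
DX = GRID[1] - GRID[0]

def gauss(x, m, s):
    # N(m, s^2) density
    return np.exp(-0.5 * ((x - m) / s) ** 2) / (s * np.sqrt(2.0 * np.pi))

def dgauss(x, m, s):
    # derivative of the N(m, s^2) density with respect to x
    return -(x - m) / s**2 * gauss(x, m, s)

def fisher_information(pdf, dpdf):
    """Location Fisher information I = integral of p'(x)^2 / p(x) dx (Riemann sum on GRID)."""
    return float(np.sum(dpdf(GRID) ** 2 / pdf(GRID)) * DX)

def variance(pdf):
    p = pdf(GRID)
    mean = np.sum(GRID * p) * DX
    return float(np.sum((GRID - mean) ** 2 * p) * DX)

# Gaussian: the bound I >= 1/Var holds with equality.
std_normal = lambda x: gauss(x, 0.0, 1.0)
d_std_normal = lambda x: dgauss(x, 0.0, 1.0)

# Hypothetical non-Gaussian example: equal-weight mixture of N(-1, 0.25) and N(1, 0.25).
mix = lambda x: 0.5 * gauss(x, -1.0, 0.5) + 0.5 * gauss(x, 1.0, 0.5)
d_mix = lambda x: 0.5 * dgauss(x, -1.0, 0.5) + 0.5 * dgauss(x, 1.0, 0.5)

I_gauss, var_gauss = fisher_information(std_normal, d_std_normal), variance(std_normal)
I_mix, var_mix = fisher_information(mix, d_mix), variance(mix)
print(I_gauss * var_gauss, I_mix * var_mix)  # first is 1 up to quadrature error; second exceeds 1
```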

Based on the definition of the random variable :

where c is a constant independent of w. By differentiating twice, we see that

is a convex function of w. Therefore, to prove that is a convex function, it suffices to prove that is a convex function or, equivalently, . Substituting the values of and recalling the equation for yields

which implies the convexity of . To obtain the derivative of , we use the result in [61] (Cor. 23.5.1), which states that, for a convex function f,

Therefore, following (A64),

Differentiating again and using the properties of inverse function yields that

where

Note that the denominator of (A68) is nonnegative, since it is the second derivative of a convex function. Therefore, it is evident from (A68) that a sufficient condition for the convexity of is that

or

This condition is satisfied if the statement of the lemma holds for :

where we use (A66) in the last inequality. This concludes the proof. □
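The step based on [61] (Cor. 23.5.1) uses the following fact. For a differentiable, strictly convex f whose derivative maps ℝ onto ℝ, the conjugate satisfies (f*)′ = (f′)^{−1}. By the inverse function rule, it follows that (f*)″(y) = 1/f″((f′)^{−1}(y)). The NumPy sketch below checks both identities by finite differences for a hypothetical example, f(x) = log(1 + e^{−x}) + x²/2. Here f* is evaluated at its maximizer x = (f′)^{−1}(y), which is found by bisection.

```python
import numpy as np

def sigmoid(z):
    return 0.5 * (1.0 + np.tanh(0.5 * z))

# Hypothetical strictly convex example: logistic loss plus a quadratic.
def f(x):
    return np.logaddexp(0.0, -x) + 0.5 * x**2

def f_prime(x):
    return -sigmoid(-x) + x

def f_second(x):
    return sigmoid(x) * sigmoid(-x) + 1.0

def f_prime_inverse(y, iters=100):
    """Solve f'(x) = y by bisection; since x - 1 < f'(x) < x, the root lies in [y, y + 1]."""
    lo = np.asarray(y, dtype=float)
    hi = lo + 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        below = f_prime(mid) < y
        lo = np.where(below, mid, lo)
        hi = np.where(below, hi, mid)
    return 0.5 * (lo + hi)

def f_star(y):
    # sup_x (x*y - f(x)) is attained where f'(x) = y
    x = f_prime_inverse(y)
    return x * y - f(x)

y = np.linspace(-3.0, 3.0, 25)
h = 1e-4
d1 = (f_star(y + h) - f_star(y - h)) / (2.0 * h)
d2 = (f_star(y + h) - 2.0 * f_star(y) + f_star(y - h)) / h**2
err_first = np.max(np.abs(d1 - f_prime_inverse(y)))                    # (f*)' = (f')^{-1}
err_second = np.max(np.abs(d2 - 1.0 / f_second(f_prime_inverse(y))))  # (f*)'' = 1 / f''((f')^{-1})
print(err_first, err_second)
```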

Appendix D.2.1. Provable Convexity of the Optimal Loss Function for Signed Model

For the signed model, it can be proved that the condition of Lemma A9 is satisfied. Since , we derive the probability density of as follows:

where

Direct calculation shows that f is a log-concave function for all . Therefore,

This proves the convexity of optimal loss function derived according to Theorem 3 when measurements follow the signed model.

Appendix E. Noisy-Signed Measurement Model

Consider a noisy-signed label function as follows:

where .

Figure A3.

The value of the threshold in (A69) as a function of the probability of error . For logistic and hinge losses, the set of minimizers in (3) is bounded (as required by Theorem 1) iff .

In the case of signed measurements, i.e., , it can be observed that, for all possible values of , condition (34) in Section 4.2 holds for . This implies that the data are separable, and therefore the solution to the optimization problem (3) is unbounded for all . However, for the noisy-signed label function, whether the solutions to (3) are bounded depends on . As discussed in Section 4.2, the minimum value of for bounded solutions is derived from the following:

where . It can be checked analytically that is a decreasing function of with and .

In Figure A3, we numerically evaluate the threshold value as a function of the probability of error . For , the set of minimizers of (3) with logistic or hinge loss is unbounded.

The performances of the LS, LAD and hinge loss functions for the noisy-signed measurement model with and are shown in Figure A4a,b, respectively. Comparing the performance of the least-squares and hinge loss functions suggests that the hinge loss is robust to measurement corruptions: for moderate to large values of , it outperforms the LS estimator. Theorem 1 opens the way to analytically confirming such conclusions, which is an interesting future direction.

Figure A4.