Abstract

We developed Variational Laplace for Bayesian neural networks (BNNs), which exploits a local approximation of the curvature of the likelihood to estimate the ELBO without the need for stochastic sampling of the neural-network weights. The Variational Laplace objective is simple to evaluate, as it is the log-likelihood plus weight-decay, plus a squared-gradient regularizer. Variational Laplace gave better test performance and expected calibration errors than maximum a posteriori inference and standard sampling-based variational inference, despite using the same variational approximate posterior. Finally, we emphasize the care needed in benchmarking standard VI, as there is a risk of stopping before the variance parameters have converged. We show that early-stopping can be avoided by increasing the learning rate for the variance parameters.

1. Introduction

Neural networks are increasingly being used in safety-critical settings, such as self-driving cars [1] and medical diagnosis [2]. In these settings, it is critical to be able to reason about uncertainty in the parameters of the network, for instance, so that the system is able to call for additional human input when necessary [3]. Several approaches to Bayesian inference in neural networks are available, including stochastic gradient Langevin dynamics [4], Laplace’s method [5,6,7] and variational inference [8,9].

Here, we focus on combining the advantages of Laplace’s method [5,6,7] and variational inference (VI; [10]). In particular, Laplace’s method is very fast, as it begins by finding a mode using a standard gradient descent procedure, and then computes a local Gaussian approximation around the mode by performing a second-order Taylor expansion. However, as the mode is found by standard gradient descent, it may be a narrow mode that generalizes poorly [11]. In contrast, VI [8] is slower, as it requires stochastic sampling of the weights, but that stochastic sampling biases it towards broad, flat modes that presumably generalize better.

We developed a new Variational Laplace (VL) method that combines the best of both worlds, giving a method that finds broad, flat modes even without stochastic sampling. The resulting objective is composed of the log-likelihood, standard weight-decay regularization and a squared-gradient regularizer, which is weighted by the variance of the approximate posterior. VL displayed improved performance over VI and MAP on standard benchmark tasks.

Our squared-gradient regularizer is closely related to the effectiveness of stochastic gradient descent. In particular, recent work has shown that gradient descent implicitly uses a squared-gradient regularizer [12], and that full-batch gradient descent with a squared-gradient regularizer can recover many of the benefits of the implicit regularization in minibatched stochastic gradient descent [13]. Our work implies that these regularizers can be interpreted as a form of approximate inference over the neural-network weights.

2. Background

2.1. Variational Inference (VI) for Bayesian Neural Networks

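To perform variational inference for neural networks, we follow the usual approach [8,14] of using independent Gaussian priors, $P$, and approximate posteriors, $Q$, for all parameters, $\mathbf{w}$:

$$P(\mathbf{w}) = \mathcal{N}(\mathbf{w}; \mathbf{0}, \mathbf{S}) \tag{1}$$

$$Q(\mathbf{w}) = \mathcal{N}(\mathbf{w}; \boldsymbol{\mu}, \boldsymbol{\Sigma}) \tag{2}$$

where $\mathbf{S}$ is the diagonal prior covariance, with $S_{ii} = s_i^2$, $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ are learned parameters of the approximate posterior, and $\boldsymbol{\Sigma}$ is a diagonal matrix, with $\Sigma_{ii} = \sigma_i^2$. We fit the approximate posterior by optimizing the evidence lower bound objective (ELBO) with respect to the parameters of the variational posterior, $\boldsymbol{\mu}$ and $\boldsymbol{\sigma}$:

$$\mathcal{L}(\boldsymbol{\mu}, \boldsymbol{\sigma}) = \mathbb{E}_{Q(\mathbf{w})}\left[\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w}) + \lambda\left(\log P(\mathbf{w}) - \log Q(\mathbf{w})\right)\right] \tag{3}$$

Here, $\mathbf{X}$ is all training inputs, $\mathbf{Y}$ is all training outputs, and $\lambda$ is the tempering parameter, which is 1 for a close approximation to Bayesian inference but is often set to smaller values to “temper” the posterior; this often improves empirical performance [15,16] and has theoretical justification as accounting for the data-curation process [17].

We need to optimize the expectation in Equation (3) with respect to the parameters of $Q(\mathbf{w})$, the distribution over which the expectation is taken. To perform this optimization efficiently, the usual approach is to use the reparameterization trick [8,18,19]: we write $\mathbf{w}$ in terms of $\boldsymbol{\epsilon}$,

$$\mathbf{w} = \boldsymbol{\mu} + \boldsymbol{\sigma} \odot \boldsymbol{\epsilon} \tag{4}$$

where $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ and $\odot$ denotes elementwise multiplication. Thus, the ELBO can be written as an expectation over $\boldsymbol{\epsilon}$:

$$\mathcal{L}(\boldsymbol{\mu}, \boldsymbol{\sigma}) = \mathbb{E}_{P(\boldsymbol{\epsilon})}\left[\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w}(\boldsymbol{\epsilon})) + \lambda\left(\log P(\mathbf{w}(\boldsymbol{\epsilon})) - \log Q(\mathbf{w}(\boldsymbol{\epsilon}))\right)\right] \tag{5}$$

where the distribution over $\boldsymbol{\epsilon}$ is now fixed and does not depend on $\boldsymbol{\mu}$ or $\boldsymbol{\sigma}$. Critically, the expected gradient of the term inside the expectation is now equal to the gradient of $\mathcal{L}$, so we can use samples of $\boldsymbol{\epsilon}$ to obtain unbiased estimates of the gradient.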
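To make the reparameterization argument concrete, here is a minimal plain-Python sketch. The one-parameter Gaussian likelihood, the chosen values and the function names are illustrative assumptions, not the networks used in this paper. It estimates the gradient of an expected log-likelihood with respect to $\mu$ and $\sigma$ from samples of $\epsilon$ and compares the estimates with the closed-form gradients; the prior and entropy terms are left out for brevity.

```python
import random


def reparam_grad_estimate(mu, sigma, y, num_samples, rng):
    """Monte Carlo estimate of the gradients of E_{w ~ N(mu, sigma^2)}[f(w)]
    with respect to mu and sigma, using the reparameterization w = mu + sigma * eps.

    Toy log-likelihood (assumed for illustration): f(w) = -(w - y)^2 / 2,
    a unit-variance Gaussian likelihood for a single observation y, up to a constant.
    """
    grad_mu = 0.0
    grad_sigma = 0.0
    for _ in range(num_samples):
        eps = rng.gauss(0.0, 1.0)    # eps ~ N(0, 1): its distribution does not depend on mu, sigma
        w = mu + sigma * eps          # reparameterized weight sample
        df_dw = -(w - y)              # derivative of the toy log-likelihood at the sampled w
        grad_mu += df_dw              # chain rule with dw/dmu = 1
        grad_sigma += df_dw * eps     # chain rule with dw/dsigma = eps
    return grad_mu / num_samples, grad_sigma / num_samples


def exact_grad(mu, sigma, y):
    """Closed form: E[f(w)] = -((mu - y)^2 + sigma^2) / 2."""
    return -(mu - y), -sigma


rng = random.Random(0)
est_mu, est_sigma = reparam_grad_estimate(mu=0.5, sigma=0.3, y=1.0, num_samples=100_000, rng=rng)
exact_mu, exact_sigma = exact_grad(0.5, 0.3, 1.0)
print(est_mu, exact_mu)        # Monte Carlo vs exact gradient with respect to mu
print(est_sigma, exact_sigma)  # Monte Carlo vs exact gradient with respect to sigma
```

The estimates are unbiased but noisy; with 100,000 samples they agree with the exact values to roughly two or three decimal places.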

2.2. Laplace’s Method

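Laplace’s method [5,6,7] first finds a mode by doing gradient ascent on the log-joint:

$$\mathbf{w}^* = \arg\max_{\mathbf{w}}\left[\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w}) + \log P(\mathbf{w})\right] \tag{6}$$

and uses a Gaussian approximate posterior around that mode,

$$Q(\mathbf{w}) = \mathcal{N}\left(\mathbf{w}; \mathbf{w}^*, (-\mathbf{H})^{-1}\right) \tag{7}$$

where $\mathbf{H}$ is the Hessian of the log-joint at $\mathbf{w}^*$.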
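A one-parameter sketch of Laplace’s method, assuming a toy conjugate Gaussian model (our choice, purely for illustration) and finite-difference derivatives in place of automatic differentiation. Because this log-joint is exactly quadratic, the Laplace approximation recovers the true posterior, which makes the result easy to check.

```python
def laplace_1d(log_joint, w0, lr=0.1, steps=2000, h=1e-3):
    """Minimal one-parameter Laplace approximation (illustrative sketch).

    1. Find the mode w* by gradient ascent on log_joint, using a central
       finite difference for the gradient.
    2. Estimate the second derivative H at the mode by finite differences.
    3. Return the mean and variance of the Gaussian N(w*, -1/H).
    """
    w = w0
    for _ in range(steps):
        grad = (log_joint(w + h) - log_joint(w - h)) / (2 * h)
        w += lr * grad
    hess = (log_joint(w + h) - 2 * log_joint(w) + log_joint(w - h)) / h ** 2
    if hess >= 0:
        raise ValueError("not a local maximum: the Hessian must be negative")
    return w, -1.0 / hess


# Toy conjugate model (assumed for illustration): prior w ~ N(0, 1),
# observations y_n ~ N(w, 1). The log-joint is quadratic, so Laplace is exact here.
ys = [1.0, 2.0, 3.0]


def toy_log_joint(w):
    return -0.5 * sum((y - w) ** 2 for y in ys) - 0.5 * w ** 2


mean, var = laplace_1d(toy_log_joint, w0=0.0)
print(mean, var)  # exact posterior: mean 6/4 = 1.5, variance 1/4 = 0.25
```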

3. Related Work

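There is past work on Variational Laplace [20,21,22], which learns the mean parameters, $\boldsymbol{\mu}$, of a Gaussian approximate posterior,

$$Q(\mathbf{w}) = \mathcal{N}\left(\mathbf{w}; \boldsymbol{\mu}, \left(-\mathbf{H}(\boldsymbol{\mu})\right)^{-1}\right) \tag{8}$$

and obtains the covariance matrix as a function of the mean parameters using the Hessian, as in Laplace’s method. However, instead of taking the approximation to be centered around a MAP solution, $\mathbf{w}^*$, they take the approximate posterior to be centered on learned mean parameters, $\boldsymbol{\mu}$. Importantly, they simplify the ELBO by substituting this approximate posterior into Equation (3) and approximating the log-joint using its second-order Taylor series expansion around $\boldsymbol{\mu}$. Ultimately, they obtain

$$\mathcal{L} \approx \log P(\mathbf{Y} \mid \mathbf{X}, \boldsymbol{\mu}) + \log P(\boldsymbol{\mu}) - \tfrac{1}{2}\log\det\left(-\mathbf{H}(\boldsymbol{\mu})\right) + \tfrac{N}{2}\log 2\pi \tag{9}$$

However, there are two problems with this approach when applied to neural networks. First, the algebraic manipulations required to derive Equation (9) require the full $N \times N$ Hessian, $\mathbf{H}$, for all $N$ parameters, and neural networks have too many parameters for this to be feasible. Second, the $\log\det(-\mathbf{H})$ term in Equation (9) cannot be minibatched, as we need the full sum over minibatches inside the log to compute the Hessian:

$$\log\det\left(-\mathbf{H}\right) = \log\det\left(-\sum_{j=1}^{B}\mathbf{H}_j\right) \tag{10}$$

where $\mathbf{H}_j$ is the contribution to the Hessian from an individual minibatch and $B$ is the number of minibatches. Due to these issues, past Variational Laplace methods did not scale to large neural networks.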
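The minibatching problem can be seen with two hypothetical $2 \times 2$ per-minibatch contributions to the negative Hessian (values chosen only for illustration): the log-determinant of their sum is not the sum of their log-determinants, so it cannot be estimated by accumulating per-minibatch terms.

```python
import math


def logdet_2x2(m):
    """log-determinant of a 2x2 matrix ((a, b), (c, d)) with positive determinant."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det <= 0:
        raise ValueError("expected a positive determinant")
    return math.log(det)


def mat_add(m1, m2):
    """Elementwise sum of two 2x2 matrices."""
    return tuple(tuple(x + y for x, y in zip(r1, r2)) for r1, r2 in zip(m1, m2))


# Hypothetical contributions -H_1, -H_2 of two minibatches to the negative Hessian
# (symmetric positive definite, values chosen only for illustration).
neg_h1 = ((2.0, 0.5), (0.5, 1.0))
neg_h2 = ((1.0, -0.3), (-0.3, 3.0))

full = logdet_2x2(mat_add(neg_h1, neg_h2))           # log det of the summed negative Hessian
per_batch = logdet_2x2(neg_h1) + logdet_2x2(neg_h2)  # sum of per-minibatch log-determinants
print(full, per_batch)  # these differ: the log-determinant does not decompose over minibatches
```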

An alternative deterministic approach to variational inference in Bayesian neural networks approximates the distribution over activities induced by stochasticity in the weights [23]. Unfortunately, for this approximation to be accurate, it must capture the covariance over features induced by stochasticity in the weights. In fully connected networks, this is feasible, as we usually have a small number of features at each layer. However, in convolutional networks, we have a large number of features, given by channels × height × width. In the lower layers of a ResNet, we may have 64 channels and a 32 × 32 feature map, resulting in 64 × 32 × 32 = 65,536 features and a 65,536 × 65,536 covariance matrix. These scalability issues prevented them from applying their approach to convolutional networks. In contrast, our approach is highly scalable and readily applicable to the convolutional setting. Subsequent work such as Haußmann et al. [24] introduced other deterministic approximations, based on decomposing the ReLU into a linear function and a Heaviside step. However, their approach had error rates of ∼30% on CIFAR-10.
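The scale of this feature covariance can be checked with a few lines of arithmetic, assuming a $32 \times 32$ feature map (the size implied by 65,536 / 64) and 4-byte float32 storage. This is per layer and per input; exploiting the symmetry of the matrix would only halve it.

```python
channels, height, width = 64, 32, 32
num_features = channels * height * width     # 65,536 features in one layer
cov_entries = num_features ** 2              # entries in the full feature covariance
bytes_fp32 = cov_entries * 4                 # assuming 4-byte (float32) storage
print(num_features)                          # 65536
print(cov_entries)                           # 4294967296
print(bytes_fp32 / 2 ** 30, "GiB")           # 16.0 GiB
```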

Ritter et al. [7] and MacKay [25] used Laplace’s method in Bayesian neural networks, by first finding the mode by doing gradient ascent on the log-joint probability, and expanding around that mode. As usual for Laplace’s method, they risk finding a narrow mode that generalizes poorly. In contrast, we find a mode using an approximation to the ELBO that takes the curvature into account and hence is biased towards broad, flat modes that presumably generalize better.

Our approach gives a squared-gradient regularizer that is similar to those discovered in past work [12,26]. They showed that squared-gradient regularizers connect to gradient descent, in that approximation errors due to finite step sizes in gradient descent imply an effective squared-gradient regularization. The similarity of our objectives raises profound questions about the extent to which gradient descent can be said to perform Bayesian inference. That said, there are three key differences. First, their approach connects full-batch gradient descent to squared-gradient regularizers. Of course, most neural network training uses stochastic gradient descent based on minibatches. Given that the stationary distribution of SGD under a quadratic loss function is an isotropic Gaussian (Appendix A), we are able to connect stochastic gradient descent to squared-gradient regularizers, and hence to approximate variational inference. This is especially important in light of recent work showing that full-batch gradient descent gives poor performance, but that performance can be improved by including explicit squared-gradient regularization [13]. Our work indicates that explicit squared-gradient regularization mimics the implicit regularization from stochastic gradient descent. Second, our method uses the Fisher (i.e., gradients for data sampled from the model), whereas their approach uses the empirical Fisher (i.e., gradients for the observed data) to form the squared-gradient regularizer [27]. Third, our approach gives a principled method to learn a separate weighting for the squared gradient of each parameter, whereas the connection to SGD forces Barrett and Dherin [12] to use a uniform weighting across all parameters.

Our work differs from, e.g., Khan et al. [28] by explicitly providing a simple-to-implement loss function in terms of a squared-gradient regularizer, instead of working with NTK-inspired approximations to the Hessian.

Other approaches include “Broad Bayesian Learning” [29], which optimizes the architecture of a Bayesian neural network, exploiting information from previously trained but different networks. Of course, quantification of uncertainty for Bayesian neural networks is always fraught [30]. As such, we followed standard practice in the literature of reporting OOD detection performance and a measure of calibration accuracy [31].

4. Methods

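To combine the best of VI and Laplace’s method, we begin by noting that the ELBO can be rewritten in terms of the KL divergence between the approximate posterior and the prior:

$$\mathcal{L}(\boldsymbol{\mu}, \boldsymbol{\sigma}) = \mathbb{E}_{Q(\mathbf{w})}\left[\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w})\right] - \lambda\, D_{\text{KL}}\left(Q(\mathbf{w}) \,\|\, P(\mathbf{w})\right) \tag{11}$$

where the KL divergence can be evaluated analytically:

$$D_{\text{KL}}\left(Q(\mathbf{w}) \,\|\, P(\mathbf{w})\right) = \sum_{i=1}^{N}\frac{1}{2}\left(\frac{\sigma_i^2 + \mu_i^2}{s_i^2} - 1 + \log\frac{s_i^2}{\sigma_i^2}\right) \tag{12}$$

As such, the only term we need to approximate is the expected log-likelihood.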
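The closed-form KL divergence between a diagonal Gaussian approximate posterior and a zero-mean diagonal Gaussian prior can be sketched as follows; the Monte Carlo estimate is included only as a sanity check that no sampling is needed for this term. Parameter values and function names are arbitrary illustrations.

```python
import math
import random


def kl_diag_gaussians(mu, sigma, s):
    """Analytic KL( N(mu, diag(sigma^2)) || N(0, diag(s^2)) ).

    mu, sigma, s: equal-length sequences of posterior means, posterior
    standard deviations and prior standard deviations (one entry per parameter).
    """
    total = 0.0
    for m, sd, prior_sd in zip(mu, sigma, s):
        var, prior_var = sd ** 2, prior_sd ** 2
        total += 0.5 * ((var + m ** 2) / prior_var - 1.0 + math.log(prior_var / var))
    return total


def kl_monte_carlo(mu, sigma, s, num_samples, rng):
    """Sampling estimate of E_Q[log Q(w) - log P(w)], for comparison only."""
    def log_normal(x, mean, sd):
        return -0.5 * math.log(2 * math.pi * sd ** 2) - (x - mean) ** 2 / (2 * sd ** 2)

    total = 0.0
    for _ in range(num_samples):
        for m, sd, prior_sd in zip(mu, sigma, s):
            w = rng.gauss(m, sd)
            total += log_normal(w, m, sd) - log_normal(w, 0.0, prior_sd)
    return total / num_samples


mu, sigma, s = [0.3, -1.2], [0.5, 0.1], [1.0, 1.0]
print(kl_diag_gaussians(mu, sigma, s))
print(kl_monte_carlo(mu, sigma, s, 50_000, random.Random(0)))
```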

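To approximate the expectation, we begin by taking a second-order Taylor series expansion of the log-likelihood around the current setting of the mean parameters, $\boldsymbol{\mu}$:

$$\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w}) \approx \log P(\mathbf{Y} \mid \mathbf{X}, \boldsymbol{\mu}) + \sum_{j=1}^{B}\mathbf{g}_j^T(\mathbf{w} - \boldsymbol{\mu}) + \tfrac{1}{2}(\mathbf{w} - \boldsymbol{\mu})^T\mathbf{H}(\mathbf{w} - \boldsymbol{\mu}) \tag{13}$$

where $B$ is the number of minibatches, $\mathbf{g}_j$ is the gradient for minibatch $j$ and $\mathbf{H}$ is the Hessian for the full dataset:

$$\mathbf{g}_j = \frac{\partial}{\partial\boldsymbol{\mu}}\log P(\mathbf{Y}_j \mid \mathbf{X}_j, \boldsymbol{\mu}) \tag{14}$$

$$\mathbf{H} = \frac{\partial^2}{\partial\boldsymbol{\mu}\,\partial\boldsymbol{\mu}^T}\log P(\mathbf{Y} \mid \mathbf{X}, \boldsymbol{\mu}) \tag{15}$$

Here, $\mathbf{X}$ and $\mathbf{Y}$ are the inputs and outputs for the full dataset, whereas $\mathbf{X}_j$ and $\mathbf{Y}_j$ are the inputs and outputs for minibatch $j$. Now we consider the expectation of each of these terms under the approximate posterior, $Q(\mathbf{w})$. The first term is constant and independent of $\mathbf{w}$. The second (linear) term is zero, because the expectation of $\mathbf{w} - \boldsymbol{\mu}$ under the approximate posterior is zero:

$$\mathbb{E}_{Q(\mathbf{w})}\left[\mathbf{g}_j^T(\mathbf{w} - \boldsymbol{\mu})\right] = \mathbf{g}_j^T\,\mathbb{E}_{Q(\mathbf{w})}\left[\mathbf{w} - \boldsymbol{\mu}\right] = 0 \tag{16}$$

The third (quadratic) term might at first appear difficult to evaluate because it involves $\mathbf{H}$, the $N \times N$ matrix of second derivatives, where $N$ is the number of parameters in the model. However, using the cyclic property of the trace, and noting that the expectation of $(\mathbf{w} - \boldsymbol{\mu})(\mathbf{w} - \boldsymbol{\mu})^T$ is the covariance of the approximate posterior, we obtain

$$\mathbb{E}_{Q(\mathbf{w})}\left[\tfrac{1}{2}(\mathbf{w} - \boldsymbol{\mu})^T\mathbf{H}(\mathbf{w} - \boldsymbol{\mu})\right] = \tfrac{1}{2}\operatorname{Tr}\left(\mathbf{H}\,\mathbb{E}_{Q(\mathbf{w})}\left[(\mathbf{w} - \boldsymbol{\mu})(\mathbf{w} - \boldsymbol{\mu})^T\right]\right) = \tfrac{1}{2}\operatorname{Tr}\left(\mathbf{H}\boldsymbol{\Sigma}\right) \tag{17}$$

Then, writing the trace in index notation and substituting the (diagonal) posterior covariance, with $\Sigma_{ii} = \sigma_i^2$ and zero off the diagonal:

$$\tfrac{1}{2}\operatorname{Tr}\left(\mathbf{H}\boldsymbol{\Sigma}\right) = \tfrac{1}{2}\sum_{i,j}H_{ij}\Sigma_{ji} = \tfrac{1}{2}\sum_{i}H_{ii}\sigma_i^2 \tag{18}$$

Thus, our first approximation of the expected log-likelihood is

$$\mathbb{E}_{Q(\mathbf{w})}\left[\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w})\right] \approx \log P(\mathbf{Y} \mid \mathbf{X}, \boldsymbol{\mu}) + \tfrac{1}{2}\sum_{i}H_{ii}\sigma_i^2 \tag{19}$$

and substituting this into Equation (11) gives

$$\mathcal{L}(\boldsymbol{\mu}, \boldsymbol{\sigma}) \approx \log P(\mathbf{Y} \mid \mathbf{X}, \boldsymbol{\mu}) + \tfrac{1}{2}\sum_{i}H_{ii}\sigma_i^2 - \lambda\, D_{\text{KL}}\left(Q(\mathbf{w}) \,\|\, P(\mathbf{w})\right) \tag{20}$$

This resolves most of the issues with the original Variational Laplace method: it requires only the diagonal of the Hessian; it can be minibatched, as $H_{ii}$ is a sum of per-minibatch contributions that enters the objective linearly rather than inside a log-determinant; and it does not blow up if the Hessian is singular.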
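As a numerical sanity check of the trace argument, the sketch below compares a Monte Carlo estimate of $\mathbb{E}_Q[\tfrac{1}{2}(\mathbf{w}-\boldsymbol{\mu})^T\mathbf{H}(\mathbf{w}-\boldsymbol{\mu})]$ under a diagonal-covariance $Q$ with $\tfrac{1}{2}\sum_i H_{ii}\sigma_i^2$, the quadratic term of Equation (19). The Hessian is a hypothetical $3 \times 3$ matrix with sizeable off-diagonal entries, which do not affect the expectation because the posterior covariance is diagonal.

```python
import random


def quad_form(h, x):
    """x^T H x for a dense matrix h (list of rows) and a vector x."""
    n = len(x)
    return sum(x[i] * h[i][j] * x[j] for i in range(n) for j in range(n))


def expected_quadratic_mc(h, sigma, num_samples, rng):
    """Monte Carlo estimate of E_Q[0.5 (w - mu)^T H (w - mu)], Q = N(mu, diag(sigma^2))."""
    total = 0.0
    for _ in range(num_samples):
        delta = [sd * rng.gauss(0.0, 1.0) for sd in sigma]  # a sample of w - mu
        total += 0.5 * quad_form(h, delta)
    return total / num_samples


def expected_quadratic_diag(h, sigma):
    """Closed form 0.5 * sum_i H_ii sigma_i^2: only the diagonal of H is needed."""
    return 0.5 * sum(h[i][i] * sigma[i] ** 2 for i in range(len(sigma)))


# Hypothetical Hessian with sizeable off-diagonal entries; they do not affect the result.
H = [[-2.0, 1.5, 0.0],
     [1.5, -1.0, 0.7],
     [0.0, 0.7, -3.0]]
sigma = [0.3, 0.5, 0.2]

mc_value = expected_quadratic_mc(H, sigma, 100_000, random.Random(0))
exact_value = expected_quadratic_diag(H, sigma)
print(mc_value, exact_value)  # exact value is -0.275 (up to floating-point rounding)
```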

4.1. Pathological Optima When Using the Hessian

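However, a new issue arises. $H_{ii}$ is usually negative, in which case the approximation in Equation (20) can be expected to work well. However, there is nothing to stop $H_{ii}$ from becoming positive. Usually, if we, e.g., took the log-determinant of the negative Hessian, this would immediately break the optimization process (as we would be taking the logarithm of a negative number). In our context, there is no immediate issue, as Equation (20) takes on a well-defined value even when one or more of the $H_{ii}$ are positive. That said, we rapidly encounter similar issues, as we get pathological optimal values of $\sigma_i^2$. In particular, picking out the terms in the objective that depend on $\sigma_i^2$, absorbing the other terms into the constant, and taking $\lambda = 1$ for simplicity, we have

$$\mathcal{L}(\sigma_i^2) = \tfrac{1}{2}H_{ii}\sigma_i^2 - \frac{\sigma_i^2}{2s_i^2} + \tfrac{1}{2}\log\sigma_i^2 + \text{const} \tag{21}$$

Thus, the gradient with respect to a single variance parameter is

$$\frac{\partial\mathcal{L}}{\partial\sigma_i^2} = \frac{1}{2\sigma_i^2} - \frac{1}{2}\left(\frac{1}{s_i^2} - H_{ii}\right) \tag{22}$$

In the typical case, $H_{ii}$ is negative, so $1/s_i^2 - H_{ii}$ is positive, and we can find the optimum by solving for the value of $\sigma_i^2$ where the gradient is zero:

$$\sigma_i^2 = \frac{1}{1/s_i^2 - H_{ii}} \tag{23}$$

However, if $H_{ii}$ is positive and sufficiently large, $H_{ii} > 1/s_i^2$, then $1/s_i^2 - H_{ii}$ becomes negative, and not only is the mode in Equation (23) undefined, but the gradient is always positive:

$$\frac{\partial\mathcal{L}}{\partial\sigma_i^2} = \frac{1}{2\sigma_i^2} + \frac{1}{2}\left(H_{ii} - \frac{1}{s_i^2}\right) > 0 \tag{24}$$

as both terms in the sum, $1/(2\sigma_i^2)$ and $\tfrac{1}{2}(H_{ii} - 1/s_i^2)$, are positive. As such, when $H_{ii} > 1/s_i^2$, the variance $\sigma_i^2$ grows without bound.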
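The pathology can be reproduced with the scalar terms alone. This sketch, assuming $\lambda = 1$ and an illustrative prior variance $s_i^2 = 1$, evaluates the gradient of the $\sigma_i^2$-dependent part of Equation (20) and the optimum from Equation (23); when $H_{ii} > 1/s_i^2$, plain gradient ascent on $\sigma_i^2$ keeps increasing it.

```python
def variance_grad(var, h_ii, prior_var):
    """dL/d(sigma_i^2) for the sigma_i^2-dependent terms of the objective, lambda = 1."""
    return 1.0 / (2.0 * var) - 0.5 * (1.0 / prior_var - h_ii)


def optimal_variance(h_ii, prior_var):
    """Stationary point 1 / (1/s_i^2 - H_ii), or None when 1/s_i^2 - H_ii <= 0 (no optimum)."""
    precision = 1.0 / prior_var - h_ii
    return 1.0 / precision if precision > 0 else None


prior_var = 1.0  # s_i^2, an arbitrary illustrative value

# Typical case: negative curvature, finite optimum where the gradient vanishes.
v_star = optimal_variance(h_ii=-3.0, prior_var=prior_var)
print(v_star, variance_grad(v_star, -3.0, prior_var))

# Pathological case: H_ii > 1/s_i^2, so gradient ascent on sigma_i^2 never stops.
print(optimal_variance(h_ii=2.0, prior_var=prior_var))
var = 0.1
for _ in range(1000):
    var += 0.1 * variance_grad(var, 2.0, prior_var)  # each step adds more than 0.1 * 0.5
print(var)
```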

4.2. Avoiding Pathologies with the Fisher

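To avoid pathologies arising from the fact that the Hessian is not necessarily negative definite, a common approach is to approximate the Hessian using the (negative) Fisher information matrix:

$$\mathbf{H} \approx -\sum_{j=1}^{B}\mathbb{E}\left[\tilde{\mathbf{g}}_j\tilde{\mathbf{g}}_j^T\right] \tag{25}$$

Importantly, $\tilde{\mathbf{g}}_j$ is the gradient of the log-likelihood for data sampled from the model, $\tilde{\mathbf{Y}}_j$, not for the true data:

$$\tilde{\mathbf{g}}_j = \frac{\partial}{\partial\boldsymbol{\mu}}\log P(\tilde{\mathbf{Y}}_j \mid \mathbf{X}_j, \boldsymbol{\mu}), \qquad \tilde{\mathbf{Y}}_j \sim P(\mathbf{Y}_j \mid \mathbf{X}_j, \boldsymbol{\mu}) \tag{26}$$

and the expectation in Equation (25) is taken over these sampled outputs. This gives us the Fisher, which is a commonly used and well-understood approximation to the Hessian [27]. Importantly, this contrasts with the empirical Fisher [27], which uses the gradient conditioned on the actual data (and not data sampled from the model):

$$\mathbf{H} \approx -\sum_{j=1}^{B}\mathbf{g}_j\mathbf{g}_j^T \tag{27}$$

with $\mathbf{g}_j$ as in Equation (14). The empirical Fisher is problematic because it contains a large rank-1 component in the direction of the mean gradient, which distorts the estimated matrix specifically in the direction of interest for problems such as optimization [27].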
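The difference between the Fisher and the empirical Fisher can be illustrated with a one-parameter logistic regression (toy data and names are assumptions for illustration). For logistic regression, the expected squared score under model-sampled labels equals the negative Hessian exactly (a standard property of the canonical link), so the sampled estimate should match it, whereas the empirical Fisher, computed at the observed labels, can be far off when the model already fits the data well.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def grad_log_lik(w, x, y):
    """d/dw log P(y | x, w) for a one-parameter logistic regression, P(y=1|x,w) = sigmoid(w x)."""
    return (y - sigmoid(w * x)) * x


def fisher_sampled(w, xs, num_samples, rng):
    """Fisher estimate in the spirit of Equation (25), treating each datapoint as its own
    minibatch: squared gradients at labels y_tilde sampled from the model."""
    total = 0.0
    for _ in range(num_samples):
        for x in xs:
            y_tilde = 1.0 if rng.random() < sigmoid(w * x) else 0.0
            total += grad_log_lik(w, x, y_tilde) ** 2
    return total / num_samples


def empirical_fisher(w, xs, ys):
    """Empirical Fisher: squared gradients at the observed labels."""
    return sum(grad_log_lik(w, x, y) ** 2 for x, y in zip(xs, ys))


def neg_hessian(w, xs):
    """Exact -d^2/dw^2 log P(Y | X, w) = sum_n p_n (1 - p_n) x_n^2."""
    return sum(sigmoid(w * x) * (1.0 - sigmoid(w * x)) * x ** 2 for x in xs)


# Toy data (assumed for illustration) that the model at w = 0.8 fits reasonably well.
xs, ys, w = [-2.0, -0.5, 1.0, 3.0], [0, 1, 1, 1], 0.8

fisher = fisher_sampled(w, xs, 50_000, random.Random(0))
print(neg_hessian(w, xs), fisher, empirical_fisher(w, xs, ys))
```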

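Using the Fisher information (Equation (25)) in Equation (19), and estimating the expectation with a sample of $\tilde{\mathbf{Y}}_j$, we obtain an approximate expected log-likelihood:

$$\mathbb{E}_{Q(\mathbf{w})}\left[\log P(\mathbf{Y} \mid \mathbf{X}, \mathbf{w})\right] \approx \log P(\mathbf{Y} \mid \mathbf{X}, \boldsymbol{\mu}) - \tfrac{1}{2}\sum_{j=1}^{B}\sum_{i}\sigma_i^2\,\tilde{g}_{ji}^2 \tag{28}$$

Substituting this into Equation (11) gives us the final VL objective, $\mathcal{L}_{\text{VL}}$, which is an approximation of the ELBO:

$$\mathcal{L}_{\text{VL}} = \sum_{j=1}^{B}\left[\log P(\mathbf{Y}_j \mid \mathbf{X}_j, \boldsymbol{\mu}) - \tfrac{1}{2}\sum_{i}\sigma_i^2\,\tilde{g}_{ji}^2\right] - \lambda\, D_{\text{KL}}\left(Q(\mathbf{w}) \,\|\, P(\mathbf{w})\right) \tag{29}$$

In practice, we typically take the objective for a minibatch, divided by the number of datapoints in a minibatch, $S$:

$$\mathcal{L}_{\text{VL},j} = \frac{1}{S}\log P(\mathbf{Y}_j \mid \mathbf{X}_j, \boldsymbol{\mu}) - \frac{S}{2}\sum_{i}\sigma_i^2\,\hat{g}_{ji}^2 - \frac{\lambda}{BS}D_{\text{KL}}\left(Q(\mathbf{w}) \,\|\, P(\mathbf{w})\right) \tag{30}$$

where $\hat{\mathbf{g}}_j = \tilde{\mathbf{g}}_j / S$ are the gradients of the log-likelihood for the minibatch averaged across datapoints, i.e., the gradient of $\frac{1}{S}\log P(\tilde{\mathbf{Y}}_j \mid \mathbf{X}_j, \boldsymbol{\mu})$. Remember that $B$ is the number of minibatches, so $BS$ is the total number of training datapoints.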
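A minimal plain-Python sketch of evaluating the per-minibatch objective, which we take to be $\frac{1}{S}\log P(\mathbf{Y}_j \mid \mathbf{X}_j, \boldsymbol{\mu}) - \frac{S}{2}\sum_i \sigma_i^2 \hat{g}_{ji}^2 - \frac{\lambda}{BS} D_{\text{KL}}$ (our reading of the normalization described above), for a logistic-regression model. The model, the function name, the $\log\sigma$ parameterization and the use of one sampled label set per minibatch are assumptions made for illustration, not the authors’ implementation.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def vl_minibatch_objective(mu, log_sigma, prior_sd, xb, yb, num_batches, lam, rng):
    """Evaluate the per-datapoint VL objective for one minibatch, for a hypothetical
    logistic-regression model P(y = 1 | x, w) = sigmoid(w . x).

    mu, log_sigma, prior_sd: per-parameter posterior means, log posterior standard
        deviations and prior standard deviations.
    xb, yb: minibatch inputs (list of feature lists) and 0/1 labels.
    num_batches: B, the number of minibatches per epoch; lam: tempering parameter.
    """
    S = len(xb)
    mean_ll = 0.0                 # (1/S) log P(Y_j | X_j, mu)
    g_hat = [0.0] * len(mu)       # gradient of (1/S) log P(Y~_j | X_j, mu)
    for x, y in zip(xb, yb):
        p = sigmoid(sum(m * xi for m, xi in zip(mu, x)))
        mean_ll += (y * math.log(p) + (1 - y) * math.log(1.0 - p)) / S
        # Sample a label from the model: this gives the Fisher, not the empirical Fisher.
        y_tilde = 1.0 if rng.random() < p else 0.0
        for i, xi in enumerate(x):
            g_hat[i] += (y_tilde - p) * xi / S
    var = [math.exp(2.0 * ls) for ls in log_sigma]                 # sigma_i^2
    reg = 0.5 * S * sum(v * g * g for v, g in zip(var, g_hat))    # (S/2) sum_i sigma_i^2 g_hat_i^2
    kl = sum(0.5 * ((v + m * m) / sd ** 2 - 1.0 + math.log(sd ** 2 / v))
             for m, v, sd in zip(mu, var, prior_sd))               # analytic Gaussian KL
    return mean_ll - reg - lam * kl / (num_batches * S)


xb = [[1.0, 0.5], [-0.3, 2.0], [0.8, -1.0]]
yb = [1, 0, 1]
value = vl_minibatch_objective(mu=[0.2, -0.1], log_sigma=[-2.0, -2.0], prior_sd=[1.0, 1.0],
                               xb=xb, yb=yb, num_batches=100, lam=1.0, rng=random.Random(0))
print(value)
```

In practice the objective would be written in an autodiff framework and maximized with respect to $\boldsymbol{\mu}$ and $\log\boldsymbol{\sigma}$. Because $\hat{\mathbf{g}}_j$ depends on $\boldsymbol{\mu}$, differentiating the regularizer requires second derivatives, which is the source of the runtime overhead and the activation-function constraint discussed below.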

4.3. Constraints on the Network Architecture

Importantly, here the regularizer is the squared gradient of the log-likelihood with respect to the parameters. As such, optimizing the objective involves differentiating a gradient, and hence second derivatives of the log-likelihood, so we cannot use piecewise linear activation functions such as ReLU, which have pathological second derivatives. In particular, the second derivative has a delta-function “spike” at zero:

$$\frac{d^2}{dx^2}\operatorname{relu}(x) = \frac{d}{dx}\Theta(x) = \delta(x) \tag{31}$$

where $\operatorname{relu}$ is the ReLU nonlinearity, $\Theta$ is the Heaviside step function, which is zero for $x < 0$ and one for $x > 0$, and $\delta$ is the Dirac delta function. As the function is almost never evaluated at exactly zero, it is not possible to sensibly take into account the contribution of the infinitely high spike in the second derivative at zero. Interestingly, this issue is very similar to the one that arises when differentiating step (i.e., $\Theta$) activations: the derivative is well-defined and zero almost everywhere, but there are delta-function spikes in the gradient at zero that gradient descent cannot reasonably work with. Instead, we used a softplus activation function, but any activation with well-behaved second derivatives is admissible.
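The difference between ReLU and softplus curvature can be seen with finite differences (step sizes and test points are arbitrary choices). Away from zero, ReLU’s second derivative is exactly zero; at zero, the finite-difference estimate is $1/h$, which diverges as $h \to 0$, mirroring the delta-function spike. Softplus has the smooth second derivative sigmoid(x)(1 − sigmoid(x)).

```python
import math


def relu(x):
    return max(x, 0.0)


def softplus(x):
    """log(1 + e^x), written to avoid overflow for large |x|."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def second_derivative(f, x, h=1e-3):
    """Central finite-difference estimate of f''(x) with step h."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / h ** 2


def softplus_second_derivative(x):
    """Exact softplus''(x) = sigmoid(x) * (1 - sigmoid(x)): smooth and strictly positive."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


# Away from zero, ReLU's second derivative is zero, so it carries no curvature information.
print([second_derivative(relu, x) for x in (-1.0, 0.5, 2.0)])
# At zero, the finite-difference estimate is 1/h and diverges as h -> 0: the delta "spike".
print([second_derivative(relu, 0.0, h) for h in (1e-1, 1e-2, 1e-3)])
# Softplus: finite differences agree with the smooth closed form.
print(second_derivative(softplus, 0.0), softplus_second_derivative(0.0))
```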

5. Results

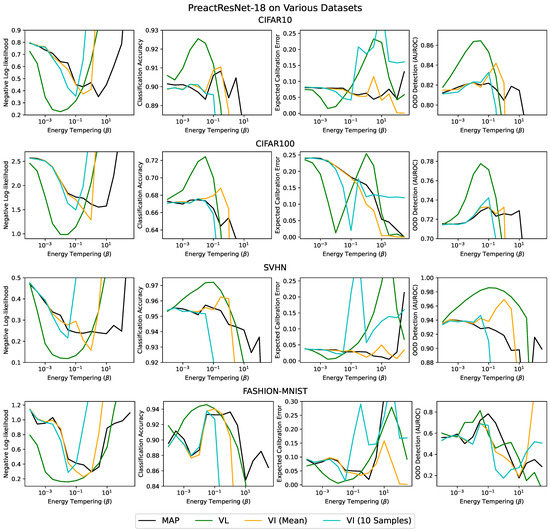

We compared MAP, VI and our method (VL) on four different datasets (CIFAR-10, CIFAR-100 [32], SVHN [33] and Fashion-MNIST [34], which is MIT licensed) using a PreActResNet-18 [35], with an initial learning rate of $10^{-4}$, which was decreased by a factor of 10 after 100 and 150 epochs, and a batch size of 128, with all other optimizer hyperparameters set to their default values. We tried two variants of variational inference: evaluating test performance using the mean network, VI (mean), and evaluating test performance by drawing 10 samples from the approximate posterior, VI (sampled). We swept across different degrees of posterior tempering, $\lambda$. Using $\lambda < 1$ is normatively justified in the Bayesian framework as accounting for the effect of data curation [17]. For many values of $\lambda$, VL gave better test accuracies, test log-likelihoods and expected calibration errors [31,36] than VI or MAP inference (Figure 1). Importantly, for the optimal value of $\lambda$, VL almost always gave better performance on these metrics (Table 1). These experiments took ∼480 GPU hours and were run on a mixture of NVIDIA 1080 and 2080 GPUs in an internal cluster.
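For reference, a common way to compute the expected calibration error [31,36] is to bin predictions by confidence and average the gap between accuracy and mean confidence in each bin, weighted by the fraction of predictions in the bin. This sketch assumes 15 equal-width bins, an illustrative choice; the bin count used for the reported ECEs is not stated here. For VI (sampled), the confidences would be taken from the predictive probabilities averaged over the 10 posterior samples.

```python
import math


def expected_calibration_error(confidences, correct, num_bins=15):
    """Expected calibration error with equal-width confidence bins.

    confidences: predicted probability of the predicted class, each in [0, 1].
    correct: 1 if the corresponding prediction was right, else 0.
    ECE = sum_b (n_b / n) * |accuracy_b - mean_confidence_b|.
    """
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for c, ok in zip(confidences, correct):
        # Bin b covers (b / num_bins, (b + 1) / num_bins]; a confidence of 0 goes in bin 0.
        b = min(max(math.ceil(c * num_bins) - 1, 0), num_bins - 1)
        bins[b].append((c, ok))
    ece = 0.0
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(ok for _, ok in members) / len(members)
            ece += len(members) / n * abs(accuracy - mean_conf)
    return ece


# Overconfident toy predictions: 90% confidence but only 50% accuracy.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 0, 1, 0]))  # 0.4 (up to rounding)
```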

Figure 1.

Training a PreactResNet-18 on various datasets, displaying the test accuracy, test log-likelihood, expected calibration error (ECE) [31,36] and OOD detection metric (AUROC) for CIFAR-10, CIFAR-100, SVHN and fashion MNIST. Downsampled Imagenet [37] was used as OOD data. See Appendix B.

Table 1.

Best values of test NLL, test accuracy and ECE for a variety of datasets, across different values of the tempering parameter, $\lambda$.

The runtimes of the methods are listed in Table 2. VL (or, equivalently, gradient regularization) was around a factor of three slower than either VI or MAP, due to the need to compute second derivatives. It is still eminently feasible, especially in comparison to past methods for deterministic variational inference that have fundamental difficulties in scaling to convolutional networks [23]. Furthermore, we did not find that increasing the number of epochs improved performance for either VI or MAP, as we were already training to convergence.

Table 2.

Time per epoch for different methods on CIFAR-10.

Early-Stopping and Poor Performance in VI

Before performing comparisons where we learn the approximate posterior variance, it is important to understand the pitfalls of optimizing variational Bayesian neural networks using adaptive optimizers such as Adam. In particular, there is a strong danger of stopping the optimization before the variances have converged. To illustrate this risk, note that Adam [38] updates take the form

$$\Delta w = -\eta\,\frac{m}{\sqrt{v} + \epsilon} \tag{32}$$

where $\eta$ is the learning rate, $m$ is an unbiased estimator of the mean gradient, $\langle g \rangle$, $v$ is an unbiased estimator of the squared gradient, $\langle g^2 \rangle$, and $\epsilon$ is a small positive constant to avoid divide-by-zero. The magnitude of the updates, $|\Delta w|$, is maximized by having exactly the same gradient on each step, in which case, neglecting $\epsilon$, we have $|\Delta w| = \eta$. As such, with a learning rate of $\eta = 10^{-4}$, a training set of 50,000 and a batch size of 128, parameters can move at most $10^{-4} \times 50{,}000/128 \approx 0.04$ per epoch. Over the 100 epochs before the first learning rate step, a parameter can therefore change by at most about 4.
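The bound can be checked by running the Adam update on a single scalar parameter that always receives the same gradient. This sketch assumes the usual defaults $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$ and counts 391 steps per epoch (50,000 / 128, rounded up to include the final partial batch).

```python
import math


def adam_total_displacement(grad, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run Adam (with the usual bias corrections) on one scalar parameter that
    receives the same gradient on every step; return the total distance moved."""
    m = v = 0.0
    moved = 0.0
    for t in range(1, steps + 1):
        m = beta1 * m + (1 - beta1) * grad
        v = beta2 * v + (1 - beta2) * grad ** 2
        m_hat = m / (1 - beta1 ** t)   # bias-corrected estimate of <g>
        v_hat = v / (1 - beta2 ** t)   # bias-corrected estimate of <g^2>
        moved += abs(lr * m_hat / (math.sqrt(v_hat) + eps))
    return moved


lr, epochs = 1e-4, 100
steps_per_epoch = math.ceil(50_000 / 128)   # 391 minibatches, counting the final partial batch
total = adam_total_displacement(grad=1.0, lr=lr, steps=steps_per_epoch * epochs)
print(total)                       # just under lr * 39,100 = 3.91
print(lr * 50_000 / 128 * epochs)  # the bound quoted in the text, about 3.9
```

With a constant gradient the bias-corrected ratio $m/\sqrt{v}$ stays at 1, so every step moves the parameter by almost exactly $\eta$; any gradient noise only reduces the total distance.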

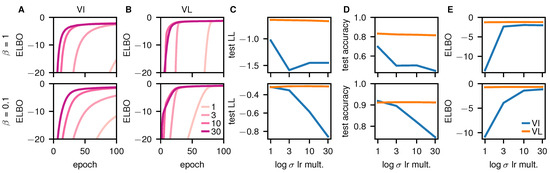

This is fine for the weights, which typically have very small values. However, the underlying parameters used for the variances typically take on larger values. In our case, we use $\log\sigma_i$ as the parameter and initialize it to three less than the log of the prior standard deviation, $\log s_i - 3$. To ensure reasonable convergence, $\log\sigma_i$ should be able to revert back to the prior, implying that it must be able to change by at least three during the course of training. Unfortunately, 3 is very close to the maximum possible change of about 4, raising the possibility that the variance parameters will not actually converge. To check whether early stopping was indeed an issue, we plotted the (tempered) ELBO for VI (Figure 2A) and VL (Figure 2B). For VI (Figure 2A) with the standard setup (lightest line, with a learning rate multiplier of 1), the ELBO clearly has not converged at 100 epochs, indicating early stopping. Notably, this was still an issue with VL (Figure 2B), especially if we were to train for fewer epochs. However, the effect was smaller for VL, which may have been because its gradients were more consistent, as it does not sample the weights. These issues can be rectified by increasing the learning rate specifically for the $\log\sigma_i$ parameters (darker lines).

Figure 2.

Analysis of early stopping in VI and VL. The first row is untempered ($\lambda = 1$), and the second row is tempered ($\lambda < 1$). (A) ELBO over epochs 0–100 (with the highest initial learning rate) for VI. Different lines correspond to networks with learning rate multipliers for $\log\sigma$ of 1, 3, 10 and 30. (B) As (A), but for VL. (C–E) Final test log-likelihood (C), test accuracy (D) and ELBO (E) after 200 epochs for different learning rate multipliers.

We then plotted the test log-likelihood (Figure 2C), test accuracy (Figure 2D) and ELBO (Figure 2E) against the learning rate multiplier. Again, the performance of VL (orange) was reasonably robust to changes in the learning rate multiplier. However, the performance of VI (blue) was very sensitive to the multiplier: as the multiplier increased, test performance fell but the ELBO rose. As we ultimately care about test performance, these results would suggest that we should use the lowest multiplier (1) and accept the possibility of early stopping. That may be a perfectly good choice in many cases. However, VI is supposed to be an approximate Bayesian method, and using an alternative form for the (untempered, $\lambda = 1$) ELBO,

$$\mathcal{L} = \log P(\mathbf{Y} \mid \mathbf{X}) - D_{\text{KL}}\left(Q(\mathbf{w}) \,\|\, P(\mathbf{w} \mid \mathbf{X}, \mathbf{Y})\right) \tag{33}$$

we can see that, because the log marginal likelihood $\log P(\mathbf{Y} \mid \mathbf{X})$ does not depend on $Q$, the ELBO measures the KL divergence between the approximate and true posterior, and hence the quality of our approximate Bayesian inference. As such, very poor ELBOs imply that the KL divergence between the true and approximate posterior is very large, and hence the “approximate posterior” is no longer actually approximating the true posterior. Therefore, if we are to retain a Bayesian interpretation of VI, we need to use larger learning rate multipliers, which give better values for the ELBO (Figure 2E). However, in doing that, we get worse test performance (Figure 2C,D). This conflict between approximate posterior quality and test performance is very problematic: the Bayesian framework would suggest that as Bayesian inference becomes more accurate, performance should improve, whereas for VI, performance gets worse. Concretely, by initializing $\sigma$ to a small value and then stopping early, we leave $\sigma$ at a small value throughout training, in which case VI becomes equivalent to MAP inference with a negligibly small amount of noise added to the weights. We would therefore expect early-stopped VI to behave very similarly to MAP inference.
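The identity behind this argument (the ELBO equals the log marginal likelihood minus the KL divergence to the true posterior) can be checked exactly in a conjugate Gaussian model where every quantity has a closed form. The model, data and approximate posterior below are arbitrary illustrations with $\lambda = 1$.

```python
import math


def log_normal(x, mean, var):
    """log N(x; mean, var) for scalars."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def kl_gauss(m1, v1, m2, v2):
    """KL( N(m1, v1) || N(m2, v2) ) for scalars."""
    return 0.5 * (v1 / v2 + (m1 - m2) ** 2 / v2 - 1.0 + math.log(v2 / v1))


# Conjugate toy model (assumed for illustration): w ~ N(0, s2), y_n | w ~ N(w, t2).
s2, t2 = 2.0, 0.5
ys = [0.3, 1.1, 0.8]

# Exact posterior P(w | Y) = N(post_mean, post_var).
post_var = 1.0 / (1.0 / s2 + len(ys) / t2)
post_mean = post_var * sum(ys) / t2

# Exact log marginal likelihood log P(Y), accumulated as sum_n log P(y_n | y_1..y_{n-1}).
log_evidence, m, v = 0.0, 0.0, s2
for y in ys:
    log_evidence += log_normal(y, m, v + t2)   # posterior predictive for the next point
    v_new = 1.0 / (1.0 / v + 1.0 / t2)           # then condition on y
    m, v = v_new * (m / v + y / t2), v_new


def elbo(q_mean, q_var):
    """Untempered (lambda = 1) ELBO, in closed form for this model."""
    expected_ll = sum(-0.5 * (math.log(2 * math.pi * t2) + ((y - q_mean) ** 2 + q_var) / t2)
                      for y in ys)
    return expected_ll - kl_gauss(q_mean, q_var, 0.0, s2)


q_mean, q_var = 0.2, 0.05  # a deliberately poor approximate posterior
print(log_evidence - elbo(q_mean, q_var), kl_gauss(q_mean, q_var, post_mean, post_var))
```

The two printed numbers coincide, and the ELBO reaches the log marginal likelihood exactly when $Q$ equals the true posterior.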

In subsequent experiments, we chose to use a learning rate multiplier of 10, as this largely eliminated early-stopping (though see VI with ; Figure 2E). Overall, this indicates that we have to be very careful to avoid early stopping when running standard, sampling-based variational inference.

6. Conclusions

We gave a novel Variational Laplace approach to inference in Bayesian neural networks, which combines the best of previous approaches based on variational inference and Laplace’s method. In our benchmarks, this method generally gave better test performance and calibration than both VI and MAP inference.

Author Contributions

Conceptualization, L.A.; methodology, A.U.; software, A.U.; validation, L.A.; formal analysis, L.A.; investigation, A.U.; resources, L.A.; data curation, A.U.; writing—original draft preparation, L.A.; writing—review and editing, L.A.; visualization, A.U.; supervision, L.A.; project administration, L.A.; funding acquisition, L.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Code is available at https://github.com/LaurenceA/fitr (accessed on 2 December 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. The Stationary Distribution of SGD

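We sought to relate these gradient regularizers back to work on SGD. In particular, we looked to work on the stationary distribution of SGD, which noted that under quadratic loss functions, SGD samples an isotropic Gaussian (i.e., with covariance proportional to the identity matrix). In particular, consider a loss function that is closely approximated locally by a quadratic. Without loss of generality, we consider a mode at $\mathbf{w} = \mathbf{0}$,

$$\mathcal{L}(\mathbf{w}) = \frac{P}{2}\mathbf{w}^T\mathbf{F}\mathbf{w} \tag{A1}$$

where $P$ is the total number of datapoints and $\mathbf{F}$ is the per-datapoint Fisher information (equal, at the mode, to the Hessian of the per-datapoint loss). Typically, the objective used in SGD is the average loss over a minibatch of size $S$. Following Mandt et al. [39], we use the Fisher information to identify the noise in the minibatch gradient,

$$\mathbf{g}_t = \mathbf{F}\mathbf{w}_t + \frac{1}{\sqrt{S}}\mathbf{F}^{1/2}\boldsymbol{\xi}_t \tag{A2}$$

where $\boldsymbol{\xi}_t$ is sampled from a standard IID Gaussian. For SGD, this gradient is multiplied by a learning rate, $\eta$,

$$\mathbf{w}_{t+1} = \mathbf{w}_t - \eta\,\mathbf{g}_t = (\mathbf{I} - \eta\mathbf{F})\mathbf{w}_t - \frac{\eta}{\sqrt{S}}\mathbf{F}^{1/2}\boldsymbol{\xi}_t \tag{A3}$$

This is a multivariate Gaussian autoregressive process, so we can solve for the stationary distribution of the weights. In particular, we note that the covariance at time $t+1$ is

$$\boldsymbol{\Sigma}_{t+1} = (\mathbf{I} - \eta\mathbf{F})\boldsymbol{\Sigma}_t(\mathbf{I} - \eta\mathbf{F}) + \frac{\eta^2}{S}\mathbf{F} \tag{A4}$$

Following Mandt et al. [39], when the learning rate is small, the quadratic term, $\eta^2\mathbf{F}\boldsymbol{\Sigma}_t\mathbf{F}$, can be neglected (the stationary covariance turns out to be $O(\eta)$, so this term is $O(\eta^3)$, whereas the remaining terms are $O(\eta^2)$):

$$\boldsymbol{\Sigma}_{t+1} \approx \boldsymbol{\Sigma}_t - \eta\left(\mathbf{F}\boldsymbol{\Sigma}_t + \boldsymbol{\Sigma}_t\mathbf{F}\right) + \frac{\eta^2}{S}\mathbf{F} \tag{A5}$$

We then solve for the steady state, in which $\boldsymbol{\Sigma}_{t+1} = \boldsymbol{\Sigma}_t = \boldsymbol{\Sigma}$,

$$\mathbf{F}\boldsymbol{\Sigma} + \boldsymbol{\Sigma}\mathbf{F} = \frac{\eta}{S}\mathbf{F} \tag{A6}$$

so,

$$\boldsymbol{\Sigma} = \frac{\eta}{2S}\mathbf{I} \tag{A7}$$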
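A quick simulation illustrates the claim that SGD samples an approximately isotropic Gaussian. It assumes a diagonal $\mathbf{F}$, so that each direction evolves independently, and illustrative values for the learning rate, batch size and curvatures: with curvatures differing by a factor of 10, the stationary variances of the SGD iterates are both close to $\eta/(2S)$. The exact stationary variance of this scalar autoregressive process, $\eta/(S(2-\eta f))$, is included to show the size of the neglected higher-order correction.

```python
import random


def stationary_var_exact(lr, f, S):
    """Exact stationary variance of w <- (1 - lr f) w - lr sqrt(f / S) xi (no terms dropped)."""
    return lr / (S * (2.0 - lr * f))


def simulate_sgd_var(lr, f, S, steps, burn_in, rng):
    """Empirical variance of SGD iterates on a 1-D quadratic loss with curvature f,
    where the minibatch-gradient noise has variance f / S."""
    w, total, total_sq = 0.0, 0.0, 0.0
    for t in range(burn_in + steps):
        grad = f * w + (f / S) ** 0.5 * rng.gauss(0.0, 1.0)
        w -= lr * grad                                   # plain SGD step
        if t >= burn_in:
            total += w
            total_sq += w * w
    mean = total / steps
    return total_sq / steps - mean ** 2


lr, S = 0.01, 4
rng = random.Random(0)
estimates = {}
for f in (1.0, 10.0):  # two directions with very different curvature (a diagonal F)
    estimates[f] = simulate_sgd_var(lr, f, S, 200_000, 5_000, rng)
    print(f, estimates[f], stationary_var_exact(lr, f, S), lr / (2 * S))
```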

Appendix B. Varying the Batch Size

Here, we vary the batch size. We used a batch size of 128 in the main text; using batch sizes of 64 and 256 instead did not change the relative performance of the methods.

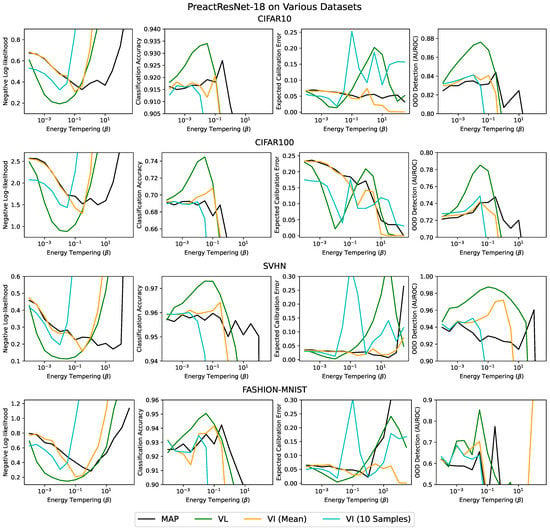

Figure A1.

Replication of Figure 1 with a batch size of 64.

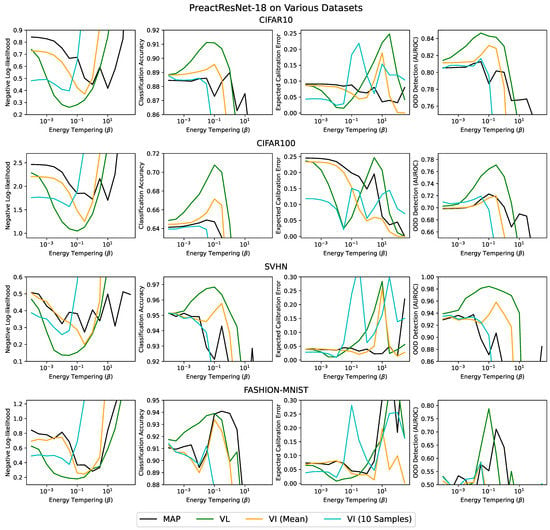

Figure A2.

Replication of Figure 1 with a batch size of 256.

References

1. Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to end learning for self-driving cars. arXiv 2016, arXiv:1604.07316.

2. Amato, F.; López, A.; Peña-Méndez, E.M.; Vanhara, P.; Hampl, A.; Havel, J. Artificial neural networks in medical diagnosis. J. Appl. Biomed. 2013, 11, 47–58.

3. McAllister, R.; Gal, Y.; Kendall, A.; Van Der Wilk, M.; Shah, A.; Cipolla, R.; Weller, A. Concrete problems for autonomous vehicle safety: Advantages of bayesian deep learning. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017.

4. Welling, M.; Teh, Y.W. Bayesian learning via stochastic gradient Langevin dynamics. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 681–688.

5. Azevedo-Filho, A.; Shachter, R.D. Laplace’s Method Approximations for Probabilistic Inference in Belief Networks with Continuous Variables. In Uncertainty Proceedings 1994; Elsevier: Amsterdam, The Netherlands, 1994; pp. 28–36.

6. MacKay, D.J. Information Theory, Inference and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.

7. Ritter, H.; Botev, A.; Barber, D. A scalable laplace approximation for neural networks. In Proceedings of the 6th International Conference on Learning Representations (ICLR 2018), Vancouver, BC, Canada, 30 April–3 May 2018; Volume 6.

8. Blundell, C.; Cornebise, J.; Kavukcuoglu, K.; Wierstra, D. Weight uncertainty in neural networks. arXiv 2015, arXiv:1505.05424.

9. Ober, S.W.; Aitchison, L. Global inducing point variational posteriors for Bayesian neural networks and deep Gaussian processes. arXiv 2020, arXiv:2005.08140.

10. Wainwright, M.J.; Jordan, M.I. Graphical Models, Exponential Families, and Variational Inference; Now Publishers Inc.: Delft, The Netherlands, 2008.

11. Neyshabur, B.; Bhojanapalli, S.; McAllester, D.; Srebro, N. Exploring generalization in deep learning. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5947–5956.

12. Barrett, D.G.; Dherin, B. Implicit Gradient Regularization. arXiv 2020, arXiv:2009.11162.

13. Geiping, J.; Goldblum, M.; Pope, P.E.; Moeller, M.; Goldstein, T. Stochastic training is not necessary for generalization. arXiv 2021, arXiv:2109.14119.

14. Hinton, G.E.; Van Camp, D. Keeping the neural networks simple by minimizing the description length of the weights. In Proceedings of the Sixth Annual Conference on Computational Learning Theory, Santa Cruz, CA, USA, 26–28 July 1993; pp. 5–13.

15. Huang, C.W.; Tan, S.; Lacoste, A.; Courville, A.C. Improving explorability in variational inference with annealed variational objectives. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2018; pp. 9701–9711.

16. Wenzel, F.; Roth, K.; Veeling, B.S.; Swiatkowski, J.; Tran, L.; Mandt, S.; Snoek, J.; Salimans, T.; Jenatton, R.; Nowozin, S. How Good is the Bayes Posterior in Deep Neural Networks Really? arXiv 2020, arXiv:2002.02405.

17. Aitchison, L. A statistical theory of cold posteriors in deep neural networks. arXiv 2020, arXiv:2008.05912.

18. Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.

19. Rezende, D.J.; Mohamed, S.; Wierstra, D. Stochastic backpropagation and approximate inference in deep generative models. arXiv 2014, arXiv:1401.4082.

20. Friston, K.; Mattout, J.; Trujillo-Barreto, N.; Ashburner, J.; Penny, W. Variational free energy and the Laplace approximation. NeuroImage 2007, 34, 220–234.

21. Daunizeau, J.; Friston, K.J.; Kiebel, S.J. Variational Bayesian identification and prediction of stochastic nonlinear dynamic causal models. Phys. D Nonlinear Phenom. 2009, 238, 2089–2118.

22. Daunizeau, J. The Variational Laplace approach to approximate Bayesian inference. arXiv 2017, arXiv:1703.02089.

23. Wu, A.; Nowozin, S.; Meeds, E.; Turner, R.E.; Hernández-Lobato, J.M.; Gaunt, A.L. Deterministic variational inference for robust bayesian neural networks. arXiv 2018, arXiv:1810.03958.

24. Haußmann, M.; Hamprecht, F.A.; Kandemir, M. Sampling-free variational inference of bayesian neural networks by variance backpropagation. In Proceedings of the 35th Uncertainty in Artificial Intelligence Conference, Tel Aviv, Israel, 22–25 July 2019; pp. 563–573.

25. MacKay, D.J. A practical Bayesian framework for backpropagation networks. Neural Comput. 1992, 4, 448–472.

26. Smith, S.L.; Dherin, B.; Barrett, D.G.; De, S. On the Origin of Implicit Regularization in Stochastic Gradient Descent. arXiv 2021, arXiv:2101.12176.

27. Kunstner, F.; Hennig, P.; Balles, L. Limitations of the empirical Fisher approximation for natural gradient descent. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 4156–4167.

28. Khan, M.E.; Immer, A.; Abedi, E.; Korzepa, M. Approximate inference turns deep networks into gaussian processes. arXiv 2019, arXiv:1906.01930.

29. Kuok, S.C.; Yuen, K.V. Broad Bayesian learning (BBL) for nonparametric probabilistic modeling with optimized architecture configuration. Comput.-Aided Civ. Infrastruct. Eng. 2021, 36, 1270–1287.

30. Yao, J.; Pan, W.; Ghosh, S.; Doshi-Velez, F. Quality of uncertainty quantification for Bayesian neural network inference. arXiv 2019, arXiv:1906.09686.

31. Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), Sydney, NSW, Australia, 6–11 August 2017; pp. 1321–1330.

32. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2009.

33. Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading digits in natural images with unsupervised feature learning. In Proceedings of the NIPS 2011 Workshop on Deep Learning and Unsupervised Feature Learning, Granada, Spain, 16–17 December 2011.

34. Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-mnist: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.

36. Naeini, M.P.; Cooper, G.; Hauskrecht, M. Obtaining well calibrated probabilities using bayesian binning. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

37. Chrabaszcz, P.; Loshchilov, I.; Hutter, F. A Downsampled Variant of ImageNet as an Alternative to the CIFAR datasets. arXiv 2017, arXiv:1707.08819.

38. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

39. Mandt, S.; Hoffman, M.D.; Blei, D.M. Stochastic gradient descent as approximate bayesian inference. J. Mach. Learn. Res. 2017, 18, 4873–4907.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).