Abstract

This paper studies the discrete-time Poisson channel and the noiseless binary channel in which, after recording a 1, the channel output is stuck at 0 for a certain period; this period is called the “dead time.” The communication capacities of these channels are analyzed, with the main focus on the regime where the allowed average input power is close to zero, either because the bandwidth is large or because the available continuous-time input power is low.

1. Introduction

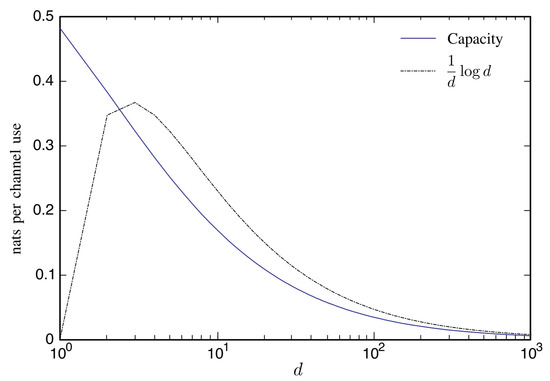

In some detection systems that record arrivals, for example, single-photon avalanche diodes, each recorded arrival is followed by a period during which the detector cannot record any new arrivals. This period is often called the “dead time”; see, e.g., [1,2,3]. Dead time mainly comes in two types. Nonparalyzable (nonextendable) dead time is a fixed period after each recorded arrival. Paralyzable (extendable) dead time follows every arrival that occurs, i.e., an arrival that is not recorded because the detector is already “dead” restarts the dead-time period. The two types of dead time are illustrated in Figure 1.

Figure 1.

Nonparalyzable (top) and paralyzable (bottom) dead time. The colored rectangles indicate the dead-time periods.
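The difference between the two dead-time types can be made concrete with a short event-driven sketch. Given arrival times and a dead-time duration `tau` (our own name), it returns the arrivals that the detector records under each model. As a convention of our own, an arrival that occurs exactly when a dead-time period ends is recorded.

```python
from typing import List


def recorded_nonparalyzable(arrivals: List[float], tau: float) -> List[float]:
    """Dead time of length tau starts only after each *recorded* arrival."""
    recorded = []
    dead_until = float("-inf")
    for a in sorted(arrivals):
        if a >= dead_until:        # detector is live: record, then go dead
            recorded.append(a)
            dead_until = a + tau
        # arrivals during the dead time are lost and change nothing
    return recorded


def recorded_paralyzable(arrivals: List[float], tau: float) -> List[float]:
    """Every arrival, recorded or not, (re)starts a dead time of length tau."""
    recorded = []
    dead_until = float("-inf")
    for a in sorted(arrivals):
        if a >= dead_until:
            recorded.append(a)
        dead_until = a + tau       # a lost arrival still extends the dead time
    return recorded


arrivals = [0.0, 0.5, 1.2, 1.9, 3.5]
print(recorded_nonparalyzable(arrivals, tau=1.0))  # [0.0, 1.2, 3.5]
print(recorded_paralyzable(arrivals, tau=1.0))     # [0.0, 3.5]
```

In the paralyzable case, the closely spaced arrivals at 0.5, 1.2, and 1.9 keep extending the dead time, so only the first and the last arrival are recorded.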

One important example of a communication system where the detector may be affected by dead time is direct-detection optical communication, where the input signal is emitted by a laser or a light-emitting diode. In information theory, such a system is often modeled as a Poisson channel. Some capacity results on the Poisson channel can be found in [4,5,6,7,8,9,10,11]. In this work we are interested in the communication capacity of the discrete-time Poisson channel with detector dead time, which, to our knowledge, is largely unexplored.

Before attacking the Poisson channel, we first look at the simpler problem of the noiseless binary channel, where the input can be 0 or 1, and where, when the channel is not “dead,” the channel output always equals the input. We think of each received 1 as an arrival, and assume that it triggers a dead-time period of d channel uses. This problem has been extensively studied in the literature on run-length limited (RLL) coding [12,13,14,15]; we shall elaborate on this later. The noiseless binary channel with dead time can be thought of as a model for a direct-detection optical channel whose inputs are number states containing zero or one photon.¹ Furthermore, as we shall see, it serves as a benchmark for the Poisson channel.

For both channels, we are primarily interested in the wideband regime, where each channel use corresponds to a short time duration. We believe this regime to be particularly relevant because in it each dead-time period occupies a large number of channel uses. When studying the Poisson channel in the wideband regime, we distinguish between the cases with and without feedback. Closely related to the wideband regime is the low-continuous-time-power regime, in which bandwidth is moderate; we study it as well. In both regimes, the average input power per channel use is small, but in the low-continuous-time-power regime, each dead-time period occupies only a moderate number of channel uses.

For most of the above cases, we determine the asymptotic capacity; in some cases we also determine the second-order term. In these cases, we show that dead time does not affect the dominant term in capacity unless it limits the maximum possible output rate (as compared with the allowed input rate). An exception is the Poisson channel in the wideband regime without feedback, for which we prove a lower bound but have not found a matching upper bound. In this case, we suspect that dead time does incur a penalty on the dominant term in capacity.

After introducing some notation and definitions, we first present our study of the noiseless binary channel and then that of the Poisson channel. At the end of the paper, we summarize the results and discuss some directions for future research.

Some Notation and Definitions

The channel, whether it is noiseless or Poisson, is characterized by two parameters: denotes the maximum allowed average input power per channel use, and d denotes the duration of each dead-time period in channel uses. We further denote

which corresponds to the maximum allowed average input energy per dead-time period.

Capacity refers to the discrete-time channel and is measured in nats per channel use. As usual, it is defined as the supremum over all communication rates for which the probability of a decoding error can be made arbitrarily small. Given the above two parameters and d, we use to denote the capacity of the noiseless binary channel, that of the Poisson channel without feedback, and that of the Poisson channel with feedback. Sometimes, to highlight the fact that we hold the parameter to be fixed, we write in place of d, such as in .

Unless otherwise stated, we use and to describe functions of in the regime where tends to zero, hence a function described as satisfies

and a function described as satisfies

Sometimes the dependence of the function f on may be implicit, and f may be the constant 1.

Throughout this paper, log denotes the natural logarithm, and information is measured in nats.

2. The Noiseless Binary Channel

We consider a noiseless channel with dead time d, where the input X takes values in {0, 1}. The law of the channel for nonparalyzable dead time is described as

For paralyzable dead time, the channel law is given by

Without constraints on the input sequence, the capacity of the channels (4) and (5) is well understood from the theory of RLL codes. For example, it is given by the logarithm of the largest root of [13]

Alternatively, it can be written as

where denotes the binary entropy function:

Now consider the case where an average-power constraint is imposed on the input sequence. Specifically, for a codebook at blocklength n, we require that the average number of 1s contained in a codeword not exceed . The capacity of this power-constrained channel is again an elementary result. For completeness, we present it as a proposition and provide a proof.

Proposition 1.

Proof.

See Appendix A. □
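The unconstrained and power-constrained capacities can be evaluated numerically. The sketch below computes (i) the logarithm of the largest root of z^(d+1) = z^d + 1 by bisection, which we take to be the polynomial referenced from [13], and (ii) a grid maximization of (1 − p·d)·hb(p/(1 − p·d)) over 0 ≤ p ≤ min(eps, 1/(d+1)), which is our reading of the maximization in (9), inferred from the counting argument in Appendix A. Here `hb` is the binary entropy function in nats and `eps` is the allowed average power per channel use; both names are ours.

```python
import math


def hb(x: float) -> float:
    """Binary entropy function in nats (hb(0) = hb(1) = 0)."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log(x) - (1.0 - x) * math.log1p(-x)


def rll_capacity_root(d: int) -> float:
    """log of the largest real root of z**(d+1) - z**d - 1 = 0, by bisection.

    For z > 1, z**d * (z - 1) - 1 is increasing, negative at z = 1 and
    nonnegative at z = 2, so the root lies in (1, 2]. The sign test is done
    in the log domain to avoid overflow of z**d for large d.
    """
    lo, hi = 1.0, 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if d * math.log(mid) + math.log(mid - 1.0) < 0.0:
            lo = mid
        else:
            hi = mid
    return math.log(0.5 * (lo + hi))


def constrained_capacity(eps: float, d: int, grid: int = 200_000) -> float:
    """Grid search of (1 - p*d) * hb(p / (1 - p*d)) over 0 < p <= min(eps, 1/(d+1))."""
    p_max = min(eps, 1.0 / (d + 1))
    best = 0.0
    for i in range(1, grid + 1):
        p = p_max * i / grid
        s = 1.0 - p * d            # share of channel uses not forced to 0
        best = max(best, s * hb(p / s))
    return best


for d in (1, 2, 5):
    # With eps = 1 the power constraint is inactive, so both values should agree.
    print(d, rll_capacity_root(d), constrained_capacity(1.0, d))
print(constrained_capacity(0.01, 10))  # a power-limited example
```

For d = 1 both routes give the logarithm of the golden ratio, the familiar capacity of the (1, ∞) run-length constraint.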

Before proceeding with the asymptotic analysis, we place the above channel model in a continuous-time perspective. To this end, consider a noiseless photon channel where the receiver employs a single-photon detector with a time resolution of t seconds. More precisely, the time axis is divided into slots each lasting t seconds, and the detector declares each detected photon as belonging to a certain slot; it cannot provide finer timing information on the photons. The sender sends a sequence of photons into the channel, each at a chosen time.² We impose an input-power constraint which says that the transmitter can send on average at most photons per second. Assume that each dead-time period lasts seconds, where is an integer multiple of t. We can now think of each t-second slot as one use of a discrete-time noiseless binary channel. The discrete-time input is 1 if and only if the sender sends at least one photon in this slot. (Note that the transmitter’s choice of timing within that slot is irrelevant, because the receiver cannot recover this information. Note as well that it is suboptimal for the transmitter to send more than one photon within a slot, because a second photon cannot be detected.) The discrete-time output is 1 if and only if a photon is detected in this slot. The discrete-time input-power constraint is , and the discrete-time dead-time duration is channel uses.

In the following, we study the wideband regime where t is brought down to zero while is held fixed. In this case, approaches zero proportionally to t, while d grows to infinity proportionally to ; the product remains unchanged. Then we also study the low-continuous-time-power regime where is brought down to zero while t is held fixed. In this case, d is fixed and finite when . For clarity, in the rest of this section we shall stay with the discrete-time picture, and only use the parameters d, , and .
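The mapping from continuous-time to discrete-time parameters is simple arithmetic, sketched below. The names `photon_rate` (allowed average photons per second), `dead_seconds` (length of a dead-time period), and `slot_seconds` (the resolution t) are our own; the function returns the power per channel use `eps`, the dead time `d` in channel uses, and their product `gamma`, the energy per dead-time period.

```python
def discrete_parameters(photon_rate: float, dead_seconds: float, slot_seconds: float):
    """Map continuous-time quantities to (eps, d, gamma) of the discrete-time channel."""
    ratio = dead_seconds / slot_seconds
    d = round(ratio)
    if d < 1 or abs(ratio - d) > 1e-9 * ratio:
        raise ValueError("dead time must be a positive integer multiple of the slot length")
    eps = photon_rate * slot_seconds   # average number of photons per channel use
    return eps, d, eps * d             # gamma = eps * d stays fixed in the wideband regime


# Wideband regime: shrink t while the continuous-time power is fixed.
for t in (1e-9, 1e-10, 1e-11):
    print("wideband ", discrete_parameters(1e7, 50e-9, t))

# Low-continuous-time-power regime: shrink the power while t is fixed.
for rate in (1e6, 1e5, 1e4):
    print("low power", discrete_parameters(rate, 50e-9, 1e-9))
```

In the first loop eps falls and d grows by the same factor, so gamma stays at 0.5; in the second loop d stays at 50 while eps falls.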

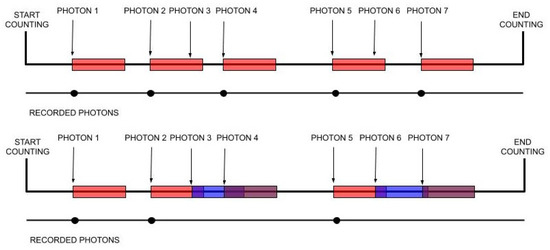

As a reference for comparison, we recall that the capacity of the noiseless binary channel without dead time, in the regime where the average power , is given by and behaves like

We note that (10) has the same dominant term as the asymptotic capacity of the Poisson channel (without dead time), but a better second-order term [9,10]; see also (47) ahead.
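A quick numerical look at this expansion: for average power eps ≤ 1/2, the capacity without dead time is the binary entropy of eps, and the sketch compares it with eps·log(1/eps) + eps, which is how we read the first two terms of (10). The names `hb` and `eps` are ours.

```python
import math


def hb(x: float) -> float:
    """Binary entropy function in nats."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log(x) - (1.0 - x) * math.log1p(-x)


for eps in (1e-2, 1e-4, 1e-6):
    exact = hb(eps)                              # capacity without dead time
    two_terms = eps * math.log(1.0 / eps) + eps  # dominant + second-order term
    # The remainder divided by eps vanishes; it behaves like -eps/2.
    print(f"{eps:.0e}  {exact:.6e}  {two_terms:.6e}  {(exact - two_terms) / eps:+.2e}")
```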

2.1. Wideband Regime

We consider the regime where while is held fixed. As we shall see, the asymptotic capacity has different expressions when and when .

2.1.1. The Case

In this case, the following proposition shows that detector dead time affects the capacity only in the second-order term.

Proposition 2.

In the regime where and is fixed and less than 1,

Proof.

First, note that, when , the condition for the maximization in (9) is equivalent to

When is sufficiently small, one can verify that is monotonically increasing in over all values satisfying (12). Consequently, for sufficiently small , the maximum in (9) is achieved by , and

The expression (11) follows by applying a Taylor series expansion and rearranging terms. □
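The proof can be checked numerically. Writing eps for the power per channel use and gamma = eps·d < 1 (our names), the sketch verifies on a grid that the objective (1 − p·d)·hb(p/(1 − p·d)) is largest at p = eps, which is how we read the maximizer in the proof. It then compares the resulting value (1 − gamma)·hb(eps/(1 − gamma)) with eps·log(1/eps) + (1 + log(1 − gamma))·eps. That two-term expansion is our own Taylor expansion of the closed form; it shows dead time entering only through log(1 − gamma) in the second-order coefficient.

```python
import math


def hb(x: float) -> float:
    """Binary entropy function in nats."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log(x) - (1.0 - x) * math.log1p(-x)


def objective(p: float, d: int) -> float:
    """(1 - p*d) * hb(p / (1 - p*d)), the quantity maximized in our reading of (9)."""
    s = 1.0 - p * d
    return s * hb(p / s)


def check_gamma_below_one(d: int, gamma: float, grid: int = 2000):
    eps = gamma / d                      # power per channel use
    p_max = min(eps, 1.0 / (d + 1))      # equals eps once d >= gamma / (1 - gamma)
    values = [objective(p_max * i / grid, d) for i in range(grid + 1)]
    best = max(range(grid + 1), key=values.__getitem__)
    closed_form = (1.0 - gamma) * hb(eps / (1.0 - gamma))
    expansion = eps * math.log(1.0 / eps) + (1.0 + math.log(1.0 - gamma)) * eps
    return best == grid, closed_form, expansion, eps


at_endpoint, closed_form, expansion, eps = check_gamma_below_one(500_000, 0.5)
print(at_endpoint, closed_form, expansion, (closed_form - expansion) / eps)
```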

2.1.2. The Case

In this case, dead time incurs a penalty on the first-order term of the capacity, as shown by the following proposition.

Proposition 3.

In the regime where and is fixed and greater than or equal to 1,

Proof.

We first note that the condition in (9) always holds. Indeed, when , we have

Hence the average-power constraint is inactive.

We now derive a lower bound on . To this end, we bound

With the specific choice , we obtain

and since when , we arrive at the asymptotic lower bound

Let be the value of that achieves the maximum in (24). The derivative with respect to of the expression to be maximized on the right-hand side of (24) is

Since f is monotonically decreasing (which can be verified by computing its derivative), positive when , and negative at , it has a unique root; therefore, must satisfy

Since

is positive for all , we have

for all . Therefore, for large enough d,

where the second inequality follows by (28). Hence, by (24), (31), and the fact that achieves the maximum in (24), we get

Combining (20) and (32) proves

which, in the asymptotic regime of interest, is equivalent to (15). □

Remark 1.

The converse part of Proposition 3 can also be proven by noting the following. The dead time effectively imposes a constraint on the number of 1s in the output sequence: the proportion of 1s cannot exceed . Hence the capacity of this channel is upper-bounded by that of the noiseless binary channel without dead time but with average-power constraint .
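Because the power constraint is inactive when gamma = eps·d ≥ 1, the capacity in this case equals the dead-time capacity without a power constraint. The sketch below evaluates that capacity for growing d, normalizes it by log(d)/d (our own normalization: with eps = gamma/d, log(d)/d ≈ eps·log(1/eps)/gamma), and checks the upper bound of Remark 1, i.e., the binary entropy of 1/(d+1). The ratio approaches 1 only slowly, roughly like 1 − log log d / log d.

```python
import math


def hb(x: float) -> float:
    """Binary entropy function in nats."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log(x) - (1.0 - x) * math.log1p(-x)


def rll_capacity(d: int) -> float:
    """log of the largest root of z**(d+1) = z**d + 1 (bisection in the log domain)."""
    lo, hi = 1.0, 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if d * math.log(mid) + math.log(mid - 1.0) < 0.0:
            lo = mid
        else:
            hi = mid
    return math.log(0.5 * (lo + hi))


rows = []
for d in (10, 100, 1_000, 10_000, 100_000):
    c = rll_capacity(d)
    ratio = c / (math.log(d) / d)      # creeps toward 1 from below
    bound = hb(1.0 / (d + 1))          # Remark 1 upper bound
    rows.append((d, c, ratio, bound))
    print(f"d={d:>6}  C={c:.4e}  C/(log(d)/d)={ratio:.3f}  bound={bound:.4e}")
```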

2.2. Low-Continuous-Time-Power Regime

In this regime, a fixed dead time affects capacity only from the third-order term onward:

Proposition 4.

In the regime where and d is fixed and finite,
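For fixed d and small eps, and assuming, as in the proof of Proposition 2, that the maximum is attained at p = eps, the capacity is (1 − eps·d)·hb(eps/(1 − eps·d)). Our own expansion of this expression gives a loss of about d·eps² relative to hb(eps), the capacity without dead time; that is, dead time enters only after the eps·log(1/eps) and eps terms. The sketch checks this numerically; the function name is ours.

```python
import math


def hb(x: float) -> float:
    """Binary entropy function in nats."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log(x) - (1.0 - x) * math.log1p(-x)


def capacity_at_p_equal_eps(eps: float, d: int) -> float:
    """(1 - eps*d) * hb(eps / (1 - eps*d)): the maximization evaluated at p = eps."""
    s = 1.0 - eps * d
    return s * hb(eps / s)


d = 3
gaps = []
for eps in (1e-3, 1e-4, 1e-5):
    gap = hb(eps) - capacity_at_p_equal_eps(eps, d)   # loss caused by dead time
    gaps.append(gap / eps ** 2)
    print(f"eps={eps:.0e}  loss/eps^2={gap / eps ** 2:.4f}")   # tends to d
```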

3. The Poisson Channel

To introduce our model of the discrete-time Poisson channel with dead time, we start with a continuous-time picture. As in the noiseless case, we assume that the receiver employs a single-photon detector with a time resolution of t seconds: the time axis is divided into t-second slots, and each detected photon is declared to be in a certain slot. Further assume that a dead-time period lasts seconds, where for an integer d. The transmitter modulates a laser signal by a (properly normalized) nonnegative waveform . Let Y be the number of detected photons within a slot . Assume there is no dark current. Then, provided that the channel is not dead at time ,

where

Note that (42) differs from the channel law of a standard Poisson channel, in which Y can take values larger than 1. This is because we assume the dead time to be longer than one slot, so that no more than one photon can be recorded within a slot.

Thus, formally, the discrete-time Poisson channel with nonparalyzable dead time d has input alphabet [0, ∞), output alphabet {0, 1}, and channel law

To model paralyzable dead time, we introduce an auxiliary sequence , which describes the output of the channel (42) without dead time:

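We then model the output sequence as follows. The auxiliary outputs are generated from the inputs through (42), independently across channel uses. At each channel use, if any auxiliary output in the preceding d channel uses equals 1, then the channel is dead and the output is 0; otherwise, the output equals the current auxiliary output.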

Assume that an average-power constraint is imposed on the continuous-time input waveform . By (43), this implies an average-power constraint on the discrete-time input x. That is, averaged over the codebook and the blocklength,

Remark 2.

The capacities of the two channels (44) and (45a,b) under the constraint (46) are in general not equal. For simplicity of exposition, in the rest of this section we shall focus on nonparalyzable dead time (44). However, all results in this section, namely, Propositions 5–8, hold for paralyzable dead time (45a,b) as well. Indeed, the proofs and derivations in this section apply almost without change to paralyzable dead time; we shall provide additional explanations when needed.
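The two discrete-time models can be simulated directly. The sketch reads the channel law (42) as P(Y = 1) = 1 − exp(−x) for a channel use that is not dead, which is the probability that a Poisson count with mean x is positive. In the paralyzable case every auxiliary arrival restarts the dead time, while in the nonparalyzable case only recorded arrivals do. The function name and the `paralyzable` flag are our own.

```python
import math
import random
from typing import List, Sequence


def poisson_dead_time(x: Sequence[float], d: int, paralyzable: bool,
                      rng: random.Random) -> List[int]:
    """Binary-output discrete-time Poisson channel with a dead time of d uses.

    z is the auxiliary (no-dead-time) output: 1 with probability 1 - exp(-x_k).
    """
    y: List[int] = []
    last_trigger = -(d + 1)     # most recent arrival that started a dead period
    for k, xk in enumerate(x):
        z = 1 if rng.random() < 1.0 - math.exp(-xk) else 0
        dead = k - last_trigger <= d          # within d uses of the last trigger
        y.append(0 if dead else z)
        if z and (paralyzable or not dead):
            # paralyzable: every arrival restarts the dead time;
            # nonparalyzable: only recorded arrivals start it
            last_trigger = k
    return y


rng = random.Random(1)
print(poisson_dead_time([0.3] * 20, d=4, paralyzable=False, rng=rng))
print(poisson_dead_time([0.3] * 20, d=4, paralyzable=True, rng=rng))
```

With a very strong constant input, the nonparalyzable channel records an arrival every d + 1 uses, whereas the paralyzable channel stays dead after its first arrival.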

We next study the capacity of the Poisson channel with nonparalyzable dead time (44) in the regime where is close to zero. As in the previous section, we distinguish between the wideband scenario and the low-continuous-time-power scenario. In the former, , so and with remaining unchanged; in the latter, so while d remains unchanged. In the former, wideband scenario, we further distinguish between the cases where there is feedback and where there is not. Henceforth we shall stay in the discrete-time picture and no longer refer to the continuous-time picture.

For comparison, we note that, for a discrete-time Poisson channel without dead time and with average-power constraint , in the regime where is close to zero, the best known capacity approximation is given by [10]

(The work [10] considers the standard Poisson channel, whose output is not binary. However, the achievability proof in [10] maps the output to a binary random variable, so (47) also applies to the binary-output channel, which coincides with our model in the absence of dead time.) In general, no closed-form capacity expression has been found for this channel. Several capacity bounds and asymptotic results have been obtained in [7,8,9,10,11].

3.1. Wideband Regime, with Feedback

Consider the regime where while is held fixed. Assume that immediate noiseless feedback is available, so the encoder learns the realizations of before producing . We first observe that the capacity of the Poisson channel with feedback cannot exceed that of the noiseless binary channel.

Proposition 5.

Proof.

Assume that an encoder-decoder pair for the Poisson channel with feedback is given. When the encoder is applied to the message , the output vector equals with a certain probability

Now, for the noiseless channel, we construct a random encoder as follows: given the message , the codeword is chosen to be with the probability given by (49). Every such codeword is a possible output sequence of a channel with dead time d, so it passes through the noiseless channel unchanged. Combining this random encoder with the given decoder for the Poisson channel, we then obtain exactly the same error probability for both channels. Further, from (44), the average power in is less than or equal to that in , because the probability of a detection in any channel use cannot exceed the input in that channel use; therefore, our random encoder for the noiseless channel consumes at most as much power as the given encoder for the Poisson channel. Thus, given any code for the Poisson channel with feedback, we can construct a valid code for the noiseless binary channel that has the same performance. □

The next proposition shows that, in the wideband regime, the capacity of the Poisson channel with feedback has the same dominant term as that of the noiseless binary channel.

Proposition 6.

Proof.

The converse part follows immediately from Propositions 2, 3 and 5.

We next prove the achievability part in the case where . We shall describe a random coding scheme. We first note a small technicality: the average input power of the following scheme is larger than and of the form . Hence, to obtain a codebook that satisfies the average-power constraint, one should replace in the following by for some positive , and later let approach zero. For simplicity, we shall ignore this technicality henceforth.

Assume that is sufficiently small. For the first channel use, we choose

For future channel uses, based on the feedback, the transmitter determines whether the channel is dead or not. When it is dead, the transmitter chooses with probability one; otherwise it picks X according to (51) independently of all past inputs and outputs.

From (51) we obtain

and

Since each results in d dead channel uses, recalling , we have by (53) that, as and , the ratio of dead to not-dead channel uses approaches in probability, and hence the proportion of not-dead channel uses among all channel uses approaches in probability. This combined with (52) shows that, as claimed earlier, over all channel uses .
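The renewal argument behind the ratio of dead to not-dead channel uses can be checked by simulation. The sketch uses a hypothetical on-off input (amplitude `amp` with probability `q` on each channel use that is not dead), which is only a stand-in for (51); through feedback, the transmitter sends 0 during dead uses. If r is the probability of a detection in a not-dead use, each detection is followed by d dead uses, so the fraction of not-dead uses should approach 1/(1 + r·d).

```python
import math
import random


def simulate_feedback_scheme(n: int, d: int, q: float, amp: float, seed: int = 0):
    """Return (fraction of not-dead uses, average input power per use)."""
    rng = random.Random(seed)
    p_detect = 1.0 - math.exp(-amp)       # detection probability given a pulse
    dead_left = 0
    live_uses = 0
    energy = 0.0
    for _ in range(n):
        if dead_left > 0:                 # feedback says dead: send 0
            dead_left -= 1
            continue
        live_uses += 1
        if rng.random() < q:              # send a pulse of amplitude amp
            energy += amp
            if rng.random() < p_detect:   # recorded arrival: next d uses are dead
                dead_left = d
    return live_uses / n, energy / n


n, d, q, amp = 500_000, 50, 0.01, 1.0
r = q * (1.0 - math.exp(-amp))            # detection probability on a not-dead use
frac_live, power = simulate_feedback_scheme(n, d, q, amp)
print(frac_live, 1.0 / (1.0 + r * d))     # empirical vs. renewal prediction
print(power, q * amp / (1.0 + r * d))     # empirical vs. predicted average power
```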

The dead channel uses can be identified by both the transmitter and the receiver, so they can be discarded. The remaining channel uses form a memoryless channel, and our inputs on these channel uses are independent and identically distributed (IID). We can hence apply Shannon’s classic result for discrete memoryless channels [17]: over the channel uses that are not dead, we can achieve all rates up to the mutual information. Note that, for small a,

Recalling (53), we then have

From (51) we have

Hence we have

Recalling that the proportion of not-dead channel uses tends to in probability as and completes the proof for the case where .

We now consider the case where . We use a random coding scheme similar to the one above, except that we replace the distribution (51) by the following:

where will be chosen to approach zero later on. Instead of (53), we now have

This implies that, as and , the proportion of not-dead channel uses approaches in probability. This further implies that

so the average-power constraint is satisfied when is small enough.

By the same argument as in the previous case, over the channel uses that are not dead, we can achieve any rate up to

The overall rate is thus given by the above multiplied by , which is

Letting approach zero completes the proof. □

Remark 3.

The coding schemes used in the above proof work for paralyzable dead time as well. When dead time is paralyzable, whether the channel is dead cannot in general be determined from the output sequence. For the proposed schemes, however, it can. Indeed, since the initial state of the channel is known (“not dead”), and since the transmitter always sends 0 when it knows the channel to be dead, one can show by induction that no arrival occurs while the channel is dead; hence dead time is never restarted in the paralyzable case. This implies with probability one.

3.2. Wideband Regime, without Feedback

Again consider the regime where while is held fixed. Now we assume there is no feedback, so the transmitter cannot know exactly when the channel is dead. In the following we analyze a strategy in which the transmitter sends zero whenever the channel may be dead. The strategy employs pulse-position modulation (PPM) [10,18,19].

We fix a positive integer b and specify its value later. We divide the n channel uses into blocks of length . Within each block, we send a PPM symbol in the first b channel uses, and send zeros in the last d channel uses. Specifically, the transmitter uniformly picks one among the first b channel uses, and sends

In all other channel uses in this block, it sends . The pulse positions in different blocks are chosen independently. Clearly, the power constraint (46) is satisfied.

Within a specific block, given the input vector, the output has the following distribution. With probability , at the same position as the input pulse, and elsewhere; and with probability , for the entire block. Furthermore, the channel behaves independently in different blocks (because no pulse is sent in the last d channel uses of each block, a dead-time period cannot extend into the next block). We thus obtain uses of a memoryless b-ary erasure channel with erasure probability and IID uniform inputs. The capacity of this erasure channel is given by

The rate we thus achieve over the Poisson channel is (ignoring the difference between and , whose effect vanishes for large n)

We now choose

for some positive , and recall that . Then (65) becomes

(In the above we ignored the difference between and , the effect of which becomes negligible as .) Letting in the above yields the following asymptotic lower bound.

Proposition 7.

In the above scheme, the transmitter sends 0 whenever there is a nonzero probability that the channel is dead, hence no input energy is wasted. The penalty of this approach is that most of the channel uses are wasted because they are treated as “possibly dead.” Each “possibly dead” period spans d channel uses, and we must use all the energy budget that is “saved” within this period, which equals , before the next “possibly dead” period starts. This means our choice of the amplitude of the pulse in PPM must be at least . For the Poisson channel without dead time, the asymptotically optimal pulse amplitude in PPM approaches zero [10]. That we must choose in place of 0 accounts for the factor in (68) as opposed to 1 in (47). One can check that using on-off keying instead of PPM (while still sending zero whenever the channel may be dead) achieves the same lower bound.
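The rate computation of this section can be reproduced numerically. The sketch assumes that the pulse amplitude is eps·(b + d), so that each block of b + d channel uses carries exactly the allowed energy, and credits each block with the b-ary erasure-channel capacity (1 − exp(−A))·log b. The choice b = delta·d with a small delta is a hypothetical stand-in for (66). With it, the normalized rate stays below (1 − exp(−gamma))/gamma and creeps upward as eps decreases, toward a value that approaches (1 − exp(−gamma))/gamma as delta → 0.

```python
import math


def ppm_rate(eps: float, d: int, b: int) -> float:
    """Nats per channel use of block PPM with d trailing zeros per block.

    Pulse amplitude A = eps*(b + d); a block is erased with probability
    exp(-A) and otherwise delivers log(b) nats.
    """
    amp = eps * (b + d)
    return -math.expm1(-amp) * math.log(b) / (b + d)


gamma, delta = 1.0, 0.01                  # hypothetical parameter choices
limit = -math.expm1(-gamma) / gamma        # (1 - e^{-gamma}) / gamma
ratios = []
for eps in (1e-6, 1e-9, 1e-12):
    d = round(gamma / eps)                 # dead time in channel uses
    b = max(2, round(delta * d))           # stand-in for the choice (66)
    ratio = ppm_rate(eps, d, b) / (eps * math.log(1.0 / eps))
    ratios.append(ratio)
    print(f"eps={eps:.0e}  rate/(eps*log(1/eps))={ratio:.4f}  limit={limit:.4f}")
```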

An alternative approach to the above would be to “waste” energy instead of channel uses, or to find a trade-off between these two resources. At the time of writing this paper, we have not found a scheme along this direction that provides a better asymptotic lower bound than (68). Neither have we found a nontrivial upper bound; the best asymptotic upper bound that we know is the capacity with feedback (50).

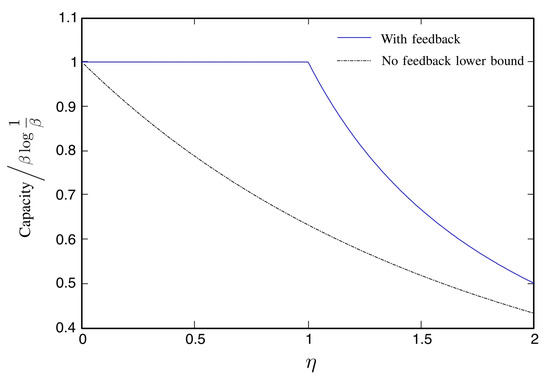

We compare the asymptotic capacity with feedback (50) and the lower bound without feedback (68) in Figure 3.

Figure 3.

The Poisson channel in the wideband regime: comparison between the asymptotic capacity in the presence of feedback (50) and the lower bound when there is no feedback (68). Both expressions are divided by and taken to the limit where , i.e., we compare the scaling constant in front of the dominant term .
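The two curves compared in Figure 3 are simple functions of gamma. The sketch tabulates them, taking the feedback constant to be 1 for gamma < 1 and 1/gamma for gamma ≥ 1 (the dominant terms of the noiseless binary channel from Propositions 2 and 3, which Proposition 6 carries over to the Poisson channel with feedback), and the no-feedback constant to be (1 − exp(−gamma))/gamma, as suggested by the discussion after Proposition 7. Both are our readings of (50) and (68).

```python
import math


def feedback_constant(gamma: float) -> float:
    """Coefficient of eps*log(1/eps) with feedback (our reading of (50))."""
    return 1.0 if gamma < 1.0 else 1.0 / gamma


def no_feedback_constant(gamma: float) -> float:
    """Coefficient of eps*log(1/eps) in the PPM lower bound (our reading of (68))."""
    return -math.expm1(-gamma) / gamma     # (1 - e^{-gamma}) / gamma


for gamma in (0.1, 0.5, 1.0, 2.0, 5.0):
    print(f"gamma={gamma:>4}  feedback={feedback_constant(gamma):.3f}  "
          f"no feedback (lower bound)={no_feedback_constant(gamma):.3f}")
```

The lower bound without feedback lies strictly below the feedback curve for every gamma, and the two meet only in the limits gamma → 0 and gamma → ∞ (in ratio).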

3.3. Low-Continuous-Time-Power Regime

We now consider the regime where the allowed continuous-time input power is low, i.e., where while d is held fixed. We shall not consider feedback, because, as we shall see, the effect of dead time on capacity is rather small even when there is no feedback.

We again consider a PPM scheme. The n channel uses are divided into blocks of length , where we now choose

Within each block, the transmitter uniformly picks one among the first b channel uses, where it sends

For all the other channel uses in this block, the transmitter sends 0. The pulse positions in different blocks are chosen independently of each other. The average-power constraint (46) is satisfied: over all the n channel uses,

As in Section 3.2, we obtain uses of a b-ary erasure channel. For each block, with probability , the output sequence contains only zeros; and with probability , at the same position as the input pulse, and all other outputs in the block are zero. The capacity of this b-ary erasure channel is

The rate we can thus achieve over the original channel is (ignoring the effect of the operation)

Comparing this expression with (47) shows that the influence of d is only in the term. We summarize this observation in the following proposition.
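As a rough numerical illustration of why d matters only in a lower-order term, the sketch compares the block-PPM rate with and without the d trailing zeros for the same b. It uses a hypothetical block length b = A/eps for a fixed amplitude A = 0.5, which need not coincide with the choice (69). The relative loss caused by the d extra channel uses is positive but never exceeds d/(b + d), which is of order eps·d/A.

```python
import math


def ppm_rate(eps: float, d: int, b: int) -> float:
    """Block PPM: b pulse positions plus d zeros, pulse amplitude eps*(b + d)."""
    amp = eps * (b + d)
    return -math.expm1(-amp) * math.log(b) / (b + d)


amp_target, d = 0.5, 5                    # hypothetical choices
losses = []
for eps in (1e-3, 1e-5, 1e-7):
    b = round(amp_target / eps)           # stand-in for the choice (69)
    loss = 1.0 - ppm_rate(eps, d, b) / ppm_rate(eps, 0, b)
    losses.append((loss, d / (b + d)))
    print(f"eps={eps:.0e}  relative loss={loss:.3e}  bound d/(b+d)={d / (b + d):.3e}")
```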

4. Discussion

As a first step toward understanding communication channels with detector dead time, we have studied the noiseless binary channel and the discrete-time Poisson channel with dead time in the asymptotic regime where the allowed average input power approaches zero. Although these channels have memory, the results in this paper were obtained using simple tools; we have not explored information stability [20] or information-spectrum methods [21,22].

In the scenario where bandwidth is fixed and the average continuous-time input power is required to be low, for both channels, dead time has no effect on the first- and second-order terms in capacity. This may not be surprising, as only a vanishing proportion of channel uses are dead. In the scenario where continuous-time input power is fixed and bandwidth grows large, if dead time limits the maximum possible output rate (as compared to the allowed input rate), then it incurs a penalty in the dominant term in capacity for both channels. If dead time does not limit the output rate, then, for the noiseless channel and for the Poisson channel with feedback, it does not affect the dominant term in capacity, even though now a nonvanishing proportion of channel uses would be dead. For the Poisson channel without feedback in this scenario, there is a gap in the dominant term between our best achievability result and the capacity without dead time. Intuitively, this gap is due to the fact that the transmitter must either sacrifice almost all available channel uses (if it sends nothing when the channel “may be dead”), or risk wasting input energy. We conjecture that the dominant term in capacity in this case is indeed smaller than that without dead time, but a nontrivial upper bound remains to be proven.

In our study of the Poisson channel, we have assumed the dark current to be zero. In the presence of a nonzero dark current, detections can occur at the receiver even when no input is provided to the channel, and these detections will trigger the dead time, complicating the problem considerably. We leave this as a topic for future work.

Another potentially interesting problem is the peak-limited continuous-time Poisson channel [4,5,6]. The closed-form capacity expression of this channel is a classic result. However, we are not aware of any capacity results on such a channel with dead time.

Funding

This research received no external funding.

Acknowledgments

Figure 1 was produced by Anastacia Londoño.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| IID | independent and identically distributed |
| PPM | pulse-position modulation |
| RLL | run-length limited |

Appendix A

In this appendix we prove Proposition 1. We first derive the capacity under a stronger constraint, which requires

We then show that this capacity equals .

Let denote the number of length-n sequences that contain q 1s and in which each 1 (except the last) is followed by at least d 0s. The total number of possible distinct output sequences, for both channels (4) and (5) under (A1), is then given by

It follows that the capacities of the channels (4) and (5) under constraint (A1) are equal and given by

To compute , note that the concerned sequences can be generated by distributing q 1s among n − (q − 1)d positions, and then adding d 0s after each 1 except the last. Therefore,

We upper-bound the summation in (A3) by upper-bounding each summand by the largest summand, and bounding the number of summands by n. This gives

On the other hand, clearly

Combining (A3), (A5) and (A6), and noting that as , we obtain

For , define

By (A4) and Equation (11.40) in [17], we have

As n grows large, as defined by (A8) can be arbitrarily close to any real number that satisfies

Thus we can combine (A7) and (A9) to obtain

Finally, we show that for all and d. Since is the capacity subject to a stronger constraint than , we immediately have . For the reverse direction, consider a sequence of length-n codebooks at rate R that satisfies the average-power constraint. Take an arbitrary . Form a new sequence of codebooks by collecting all the codewords in the original codebooks that contain at most 1s. Clearly, the new codebook satisfies the hard constraint for average power . The size of the new codebook must be at least . As n grows large, the influence of the factor on the rate of the codebook vanishes. We thus obtain, for any ,

The proof is completed by noting that is a continuous function.
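The counting step of this appendix, namely that the number of admissible length-n sequences with q ones equals the binomial coefficient "n − (q − 1)d choose q", can be checked by brute force for small n. Its normalized logarithm can also be compared with (1 − p·d)·hb(p/(1 − p·d)) for large n, where hb is the binary entropy in nats. The function names are ours.

```python
import math
from itertools import combinations


def hb(x: float) -> float:
    """Binary entropy function in nats."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log(x) - (1.0 - x) * math.log1p(-x)


def count_brute_force(n: int, q: int, d: int) -> int:
    """Sequences with q ones where consecutive ones are more than d apart."""
    return sum(
        all(b - a > d for a, b in zip(ones, ones[1:]))
        for ones in combinations(range(n), q)
    )


def count_formula(n: int, q: int, d: int) -> int:
    """Place q ones among n - (q-1)*d positions, then insert d zeros after
    every 1 except the last."""
    m = n - (q - 1) * d
    return math.comb(m, q) if m >= 0 else 0


def log_count_per_use(n: int, p: float, d: int) -> float:
    """(1/n) * log count_formula(n, round(p*n), d), computed via log-gamma."""
    q = round(p * n)
    m = n - (q - 1) * d
    return (math.lgamma(m + 1) - math.lgamma(q + 1) - math.lgamma(m - q + 1)) / n


n, p, d = 1_000_000, 0.05, 5
s = 1.0 - p * d
print(log_count_per_use(n, p, d), s * hb(p / s))   # nearly equal for large n
```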

References

- Müller, J.W. Dead-Time Problems. Nucl. Instrum. Methods 1973, 112, 47–57.

- Cantor, B.I.; Teich, M.C. Dead-Time-Corrected Photocounting Distributions for Laser Radiation. J. Opt. Soc. Am. 1975, 65, 786–791.

- Teich, M.C.; Cantor, B.I. Information, Error, and Imaging in Deadtime-Perturbed Doubly Stochastic Poisson Counting Systems. IEEE J. Quantum Electron. 1978, QE-14, 993–1003.

- Kabanov, Y. The Capacity of a Channel of the Poisson Type. Theory Probab. Appl. 1978, 23, 143–147.

- Davis, M.H.A. Capacity and Cutoff Rate for Poisson-Type Channels. IEEE Trans. Inform. Theory 1980, 26, 710–715.

- Wyner, A.D. Capacity and Error Exponent for the Direct Detection Photon Channel—Parts I and II. IEEE Trans. Inform. Theory 1988, 34, 1462–1471.

- Shamai (Shitz), S. Capacity of a Pulse Amplitude Modulated Direct Detection Photon Channel. IEE Proc. Commun. Speech Vis. 1990, 137, 424–430.

- Lapidoth, A.; Moser, S.M. On the Capacity of the Discrete-Time Poisson Channel. IEEE Trans. Inform. Theory 2009, 55, 303–322.

- Lapidoth, A.; Shapiro, J.H.; Venkatesan, V.; Wang, L. The Discrete-Time Poisson Channel at Low Input Powers. IEEE Trans. Inform. Theory 2011, 57, 3260–3272.

- Wang, L.; Wornell, G.W. A Refined Analysis of the Poisson Channel in the High-Photon-Efficiency Regime. IEEE Trans. Inform. Theory 2014, 60, 4299–4311.

- Cheraghchi, M.; Ribeiro, J.A. Improved Upper Bounds and Structural Results on the Capacity of the Discrete-Time Poisson Channel. IEEE Trans. Inform. Theory 2019, 65, 4052–4068.

- Freiman, C.V.; Wyner, A.D. Optimum Block Codes for Noiseless Input Restricted Channels. Inf. Control. 1964, 7, 398–415.

- Ashley, J.J.; Siegel, P.H. A Note on the Shannon Capacity of Run-Length Limited Codes. IEEE Trans. Inform. Theory 1987, 33, 601–605.

- Shouhamer Immink, K.A. A Survey of Codes for Optical Disk Recording. IEEE J. Sel. Areas Commun. 2001, 19, 756–764.

- Peled, O.; Sabag, O.; Permuter, H.H. Feedback Capacity and Coding for the (0, k)-RLL Input-Constrained BEC. IEEE Trans. Inform. Theory 2019, 65, 4097–4114.

- Mandel, L.; Wolf, E. Optical Coherence and Quantum Optics; Cambridge University Press: Cambridge, UK, 1995.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; John Wiley & Sons: New York, NY, USA, 2006.

- Pierce, J. Optical Channels: Practical Limits with Photon Counting. IEEE Trans. Commun. 1978, 26, 1819–1821.

- Massey, J.L. Capacity, Cutoff Rate, and Coding for a Direct-Detection Optical Channel. IEEE Trans. Commun. 1981, 29, 1615–1621.

- Pinsker, M.S. Information and Information Stability of Random Variables and Processes; Holden-Day: San Francisco, CA, USA, 1964.

- Verdú, S.; Han, T.S. A General Formula for Channel Capacity. IEEE Trans. Inform. Theory 1994, 40, 1147–1157.

- Han, T.S. Information Spectrum Methods in Information Theory; Springer: Berlin, Germany, 2003.

| 1 | Number states (Fock states) are pure quantum states that contain deterministic numbers of photons. They are different from coherent states, which are used to model light emitted by lasers. A (nonzero) coherent state is also a pure quantum state, but contains a random number of photons following a Poisson distribution. For more details we refer the reader to [16]. |

| 2 | For physicists, the sender sending a photon at a specific time really means that it sends the one-photon number state in an optical mode centered at that time. Here we assume that the available optical bandwidth is sufficiently large so that each t-second slot accommodates a large number of optical modes, and that the time uncertainty of each mode can be ignored. Note that in the text we use “bandwidth” to refer to 1/t, the reciprocal of the detector’s time resolution, and not to the optical bandwidth. |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).