Privacy-Aware Distributed Hypothesis Testing †

Abstract

1. Introduction

1.1. Background

1.2. Main Contributions

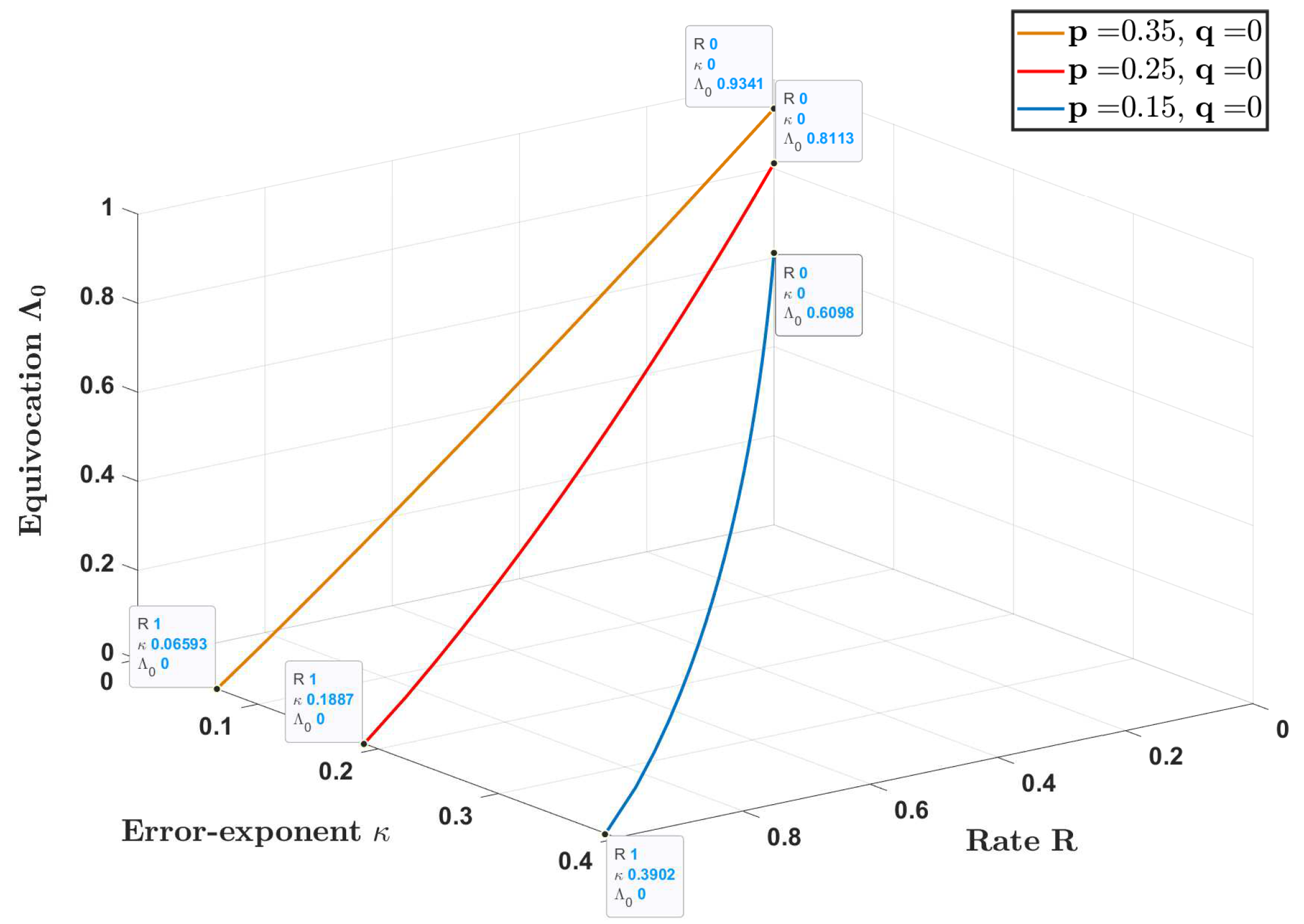

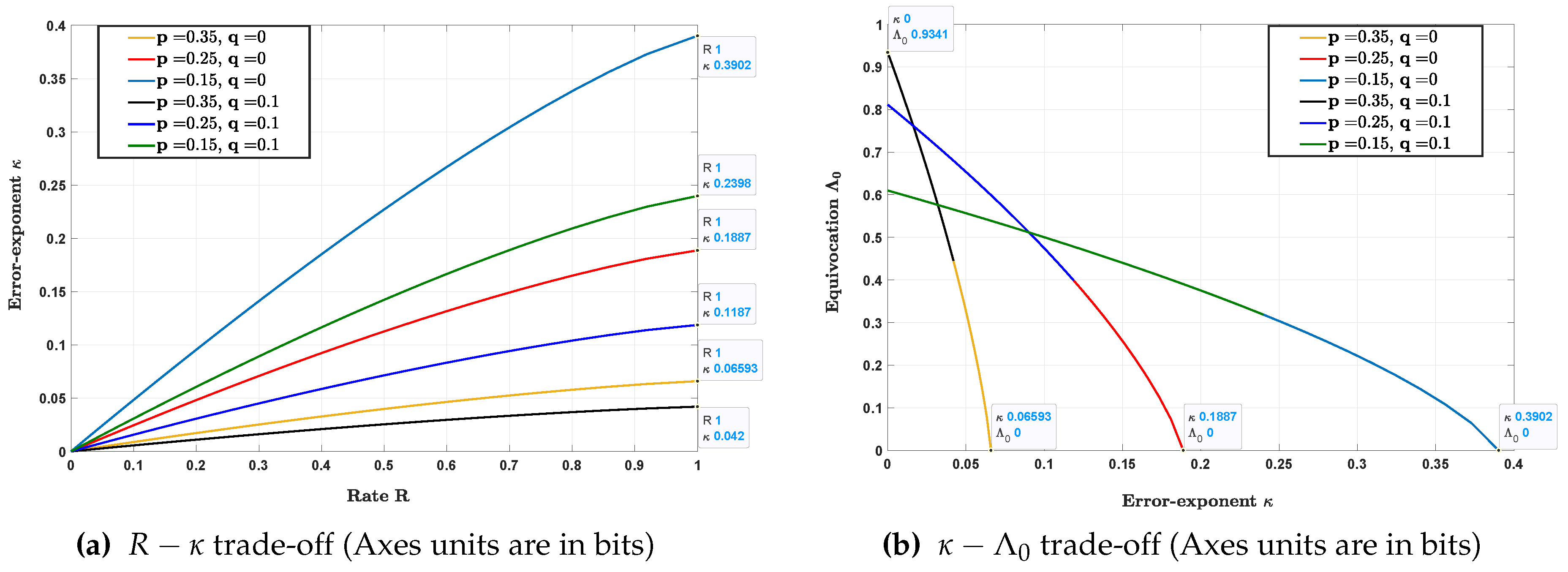

- In Section 3, Theorem 1 (resp. Theorem 2), we establish a single-letter inner bound on the rate-error exponent-equivocation (resp. rate-error exponent-distortion) trade-off for DHT with a privacy constraint. The distortion and equivocation privacy constraints we consider, which is given in (6) and (7), respectively, are slightly stronger than what is usually considered in the literature (stated in (8) and (9), respectively).

- Exact characterizations are obtained for some important special cases in Section 4. More specifically, a single-letter characterization of the optimal rate-error exponent-equivocation (resp. rate-error exponent-distortion) trade-off is established for:

- (a)

- TACI with a privacy constraint (for vanishing type I error probability constraint) in Section 4.1, Proposition 1 (resp. Proposition 2),

- (b)

- DHT with a privacy constraint for zero-rate compression in Section 4.2, Proposition 4 (resp. Proposition 3).

Since the optimal trade-offs in Propositions 3 and 4 are independent of the constraint on the type I error probability, they are strong converse results in the context of HT. - Finally, in Section 5, we provide a counter-example showing that for a positive rate , the strong converse result does not hold in general for TAI with a privacy constraint.

1.3. Organization

2. Preliminaries

2.1. Notations

2.2. Problem Formulation

2.3. Relation to Previous Work

2.4. Supporting Results

3. Main Results

4. Optimality Results for Special Cases

4.1. TACI with a Privacy Constraint

4.2. Zero-Rate Compression

5. A Counter-Example to the Strong Converse

- Quantize to codewords in and send the index of quantization to the detector, i.e., if , send , where j is the index of in . Else, send .

- At the detector, if , declare . Else, declare if for some , and otherwise.

- If , send with probability , where j is the index of in , and with probability , send . If , send .

- At the detector, if , declare . Else, declare if for some , and otherwise.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| HT | Hypothesis testing |

| DHT | Distributed hypothesis testing |

| TACI | Testing against conditional independence |

| TAI | Testing against independence |

| DP | Differential privacy |

| KL | Kullback–Leibler |

| SHA | Shimokawa-Han-Amari |

Appendix A. Proof of Lemma 1

Appendix B. Proof of Lemma 2

Appendix C. Proof of Lemma 4

Appendix D. Proof of Theorems 1 and 2

Appendix E. Proof of Lemma 5

References

- Appari, A.; Johnson, E. Information security and privacy in healthcare: Current state of research. Int. J. Internet Enterp. Manag. 2010, 6, 279–314. [Google Scholar] [CrossRef]

- Gross, R.; Acquisti, A. Information revelation and privacy in online social networks. In Proceedings of the ACM workshop on Privacy in Electronic Society, Alexandria, VA, USA, 7 November 2005; pp. 71–80. [Google Scholar]

- Miyazaki, A.; Fernandez, A. Consumer Perceptions of Privacy and Security Risks for Online Shopping. J. Consum. Aff. 2001, 35, 27–44. [Google Scholar] [CrossRef]

- Giaconi, G.; Gündüz, D.; Poor, H.V. Privacy-Aware Smart Metering: Progress and Challenges. IEEE Signal Process. Mag. 2018, 35, 59–78. [Google Scholar] [CrossRef]

- Ahlswede, R.; Csiszár, I. Hypothesis Testing with Communication Constraints. IEEE Trans. Inf. Theory 1986, 32, 533–542. [Google Scholar] [CrossRef]

- Han, T.S. Hypothesis Testing with Multiterminal Data Compression. IEEE Trans. Inf. Theory 1987, 33, 759–772. [Google Scholar] [CrossRef]

- Shimokawa, H.; Han, T.S.; Amari, S. Error Bound of Hypothesis Testing with Data Compression. In Proceedings of the IEEE International Symposium on Information Theory, Trondheim, Norway, 27 June–1 July 1994. [Google Scholar]

- Shalaby, H.M.H.; Papamarcou, A. Multiterminal Detection with Zero-Rate Data Compression. IEEE Trans. Inf. Theory 1992, 38, 254–267. [Google Scholar] [CrossRef]

- Zhao, W.; Lai, L. Distributed Testing Against Independence with Multiple Terminals. In Proceedings of the 52nd Annual Allerton Conference, Monticello, IL, USA, 30 September–3 October 2014; pp. 1246–1251. [Google Scholar]

- Katz, G.; Piantanida, P.; Debbah, M. Distributed Binary Detection with Lossy Data Compression. IEEE Trans. Inf. Theory 2017, 63, 5207–5227. [Google Scholar] [CrossRef]

- Rahman, M.S.; Wagner, A.B. On the Optimality of Binning for Distributed Hypothesis Testing. IEEE Trans. Inf. Theory 2012, 58, 6282–6303. [Google Scholar] [CrossRef][Green Version]

- Sreekumar, S.; Gündüz, D. Distributed Hypothesis Testing Over Noisy Channels. In Proceedings of the IEEE International Symposium on Information Theory, Aachen, Germany, 25–30 June 2017; pp. 983–987. [Google Scholar]

- Sreekumar, S.; Gündüz, D. Distributed Hypothesis Testing Over Discrete Memoryless Channels. IEEE Trans. Inf. Theory 2020, 66, 2044–2066. [Google Scholar] [CrossRef]

- Salehkalaibar, S.; Wigger, M.; Timo, R. On Hypothesis Testing Against Conditional Independence with Multiple Decision Centers. IEEE Trans. Commun. 2018, 66, 2409–2420. [Google Scholar] [CrossRef]

- Salehkalaibar, S.; Wigger, M. Distributed Hypothesis Testing based on Unequal-Error Protection Codes. arXiv 2018, arXiv:1806.05533. [Google Scholar] [CrossRef]

- Han, T.S.; Kobayashi, K. Exponential-Type Error Probabilities for Multiterminal Hypothesis Testing. IEEE Trans. Inf. Theory 1989, 35, 2–14. [Google Scholar] [CrossRef]

- Haim, E.; Kochman, Y. On Binary Distributed Hypothesis Testing. arXiv 2018, arXiv:1801.00310. [Google Scholar]

- Weinberger, N.; Kochman, Y. On the Reliability Function of Distributed Hypothesis Testing Under Optimal Detection. IEEE Trans. Inf. Theory 2019, 65, 4940–4965. [Google Scholar] [CrossRef]

- Bayardo, R.; Agrawal, R. Data privacy through optimal k-anonymization. In Proceedings of the International Conference on Data Engineering, Tokyo, Japan, 5–8 April 2005; pp. 217–228. [Google Scholar]

- Agrawal, R.; Srikant, R. Privacy-preserving data mining. In Proceedings of the ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 18–19 May 2000; pp. 439–450. [Google Scholar]

- Bertino, E. Big Data-Security and Privacy. In Proceedings of the IEEE International Congress on BigData, New York, NY, USA, 27 June–2 July 2015; pp. 425–439. [Google Scholar]

- Gertner, Y.; Ishai, Y.; Kushilevitz, E.; Malkin, T. Protecting Data Privacy in Private Information Retrieval Schemes. J. Comput. Syst. Sci. 2000, 60, 592–629. [Google Scholar] [CrossRef]

- Hay, M.; Miklau, G.; Jensen, D.; Towsley, D.; Weis, P. Resisting structural re-identification in anonymized social networks. J. Proc. VLDB Endow. 2008, 1, 102–114. [Google Scholar] [CrossRef]

- Narayanan, A.; Shmatikov, V. De-anonymizing Social Networks. In Proceedings of the IEEE Symposium on Security and Privacy, Berkeley, CA, USA, 17–20 May 2009. [Google Scholar]

- Liao, J.; Sankar, L.; Tan, V.; Calmon, F. Hypothesis Testing Under Mutual Information Privacy Constraints in the High Privacy Regime. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1058–1071. [Google Scholar] [CrossRef]

- Liao, J.; Sankar, L.; Calmon, F.; Tan, V. Hypothesis testing under maximal leakage privacy constraints. In Proceedings of the IEEE International Symposium on Information Theory, Aachen, Germany, 25–30 June 2017. [Google Scholar]

- Gilani, A.; Amor, S.B.; Salehkalaibar, S.; Tan, V. Distributed Hypothesis Testing with Privacy Constraints. Entropy 2019, 21, 478. [Google Scholar] [CrossRef]

- Gündüz, D.; Erkip, E.; Poor, H.V. Secure lossless compression with side information. In Proceedings of the IEEE Information Theory Workshop, Porto, Portugal, 5–9 May 2008; pp. 169–173. [Google Scholar]

- Gündüz, D.; Erkip, E.; Poor, H.V. Lossless compression with security constraints. In Proceedings of the IEEE International Symposium on Information Theory, Toronto, ON, Canada, 6–11 July 2008; pp. 111–115. [Google Scholar]

- Mhanna, M.; Piantanida, P. On secure distributed hypothesis testing. In Proceedings of the IEEE International Symposium on Information Theory, Hong Kong, China, 14–19 June 2015; pp. 1605–1609. [Google Scholar]

- Sweeney, L. K-anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002, 10, 557–570. [Google Scholar] [CrossRef]

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. In Theory of Cryptography; Springer: Berlin/Heidelberg, Germany, 2006; pp. 265–284. [Google Scholar]

- Calmon, F.; Fawaz, N. Privacy Against Statistical Inference. In Proceedings of the 50th Annual Allerton Conference, Illinois, IL, USA, 1–5 October 2012; pp. 1401–1408. [Google Scholar]

- Makhdoumi, A.; Salamatian, S.; Fawaz, N.; Medard, M. From the information bottleneck to the privacy funnel. In Proceedings of the IEEE Information Theory Workshop, Hobart, Australia, 2–5 November 2014; pp. 501–505. [Google Scholar]

- Calmon, F.; Makhdoumi, A.; Medard, M. Fundamental limits of perfect privacy. In Proceedings of the IEEE International Symposium on Information Theory, Hong Kong, China, 14–19 June 2015; pp. 1796–1800. [Google Scholar]

- Issa, I.; Kamath, S.; Wagner, A.B. An Operational Measure of Information Leakage. In Proceedings of the Annual Conference on Information Science and Systems, Princeton, NJ, USA, 16–18 March 2016; pp. 1–6. [Google Scholar]

- Rassouli, B.; Gündüz, D. Optimal Utility-Privacy Trade-off with Total Variation Distance as a Privacy Measure. IEEE Trans. Inf. Forensics Secur. 2019, 15, 594–603. [Google Scholar] [CrossRef]

- Wagner, I.; Eckhoff, D. Technical Privacy Metrics: A Systematic Survey. arXiv 2015, arXiv:1512.00327v1. [Google Scholar] [CrossRef]

- Goldwasser, S.; Micali, S. Probabilistic encryption. J. Comput. Syst. Sci. 1984, 28, 270–299. [Google Scholar] [CrossRef]

- Bellare, M.; Tessaro, S.; Vardy, A. Semantic Security for the Wiretap Channel. In Proceedings of the Advances in Cryptology-CRYPTO 2012, Heidelberg, Germany, 19–23 August 2012; pp. 294–311. [Google Scholar]

- Yamamoto, H. A Rate-Distortion Problem for a Communication System with a Secondary Decoder to be Hindered. IEEE Trans. Inf. Theory 1988, 34, 835–842. [Google Scholar] [CrossRef]

- Tandon, R.; Sankar, L.; Poor, H.V. Discriminatory Lossy Source Coding: Side Information Privacy. IEEE Trans. Inf. Theory 2013, 59, 5665–5677. [Google Scholar] [CrossRef]

- Schieler, C.; Cuff, P. Rate-Distortion Theory for Secrecy Systems. IEEE Trans. Inf. Theory 2014, 60, 7584–7605. [Google Scholar] [CrossRef]

- Agarwal, G.K. On Information Theoretic and Distortion-based Security. Ph.D. Thesis, University of California, Los Angeles, CA, USA, 2019. Available online: https://escholarship.org/uc/item/7qs7z91g (accessed on 3 January 2020).

- Li, Z.; Oechtering, T.; Gündüz, D. Privacy against a hypothesis testing adversary. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1567–1581. [Google Scholar] [CrossRef]

- Cuff, P.; Yu, L. Differential privacy as a mutual information constraint. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 43–54. [Google Scholar]

- Goldfeld, Z.; Cuff, P.; Permuter, H.H. Semantic-Security Capacity for Wiretap Channels of Type II. IEEE Trans. Inf. Theory 2016, 62, 3863–3879. [Google Scholar] [CrossRef]

- Sreekumar, S.; Bunin, A.; Goldfeld, Z.; Permuter, H.H.; Shamai, S. The Secrecy Capacity of Cost-Constrained Wiretap Channels. arXiv 2020, arXiv:2004.04330. [Google Scholar]

- Kasiviswanathan, S.P.; Lee, H.K.; Nissim, K.; Raskhodnikova, S.; Smith, A. What can we learn privately? SIAM J. Comput. 2011, 40, 793–826. [Google Scholar] [CrossRef]

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Local Privacy and Statistical Minimax Rates. In Proceedings of the 2013 IEEE 54th Annual Symposium on Foundations of Computer Science, Berkeley, CA, USA, 26–29 October 2013; pp. 429–438. [Google Scholar]

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Privacy Aware Learning. J. ACM 2014, 61, 1–57. [Google Scholar] [CrossRef]

- Wang, Y.; Lee, J.; Kifer, D. Differentially Private Hypothesis Testing, Revisited. arXiv 2015, arXiv:1511.03376. [Google Scholar]

- Gaboardi, M.; Lim, H.; Rogers, R.; Vadhan, S. Differentially Private Chi-Squared Hypothesis Testing: Goodness of Fit and Independence Testing. In Proceedings of the 33rd International Conference on Machine Learning, New York City, NY, USA, 19–24 June 2016; Volume 48, pp. 2111–2120. [Google Scholar]

- Rogers, R.M.; Roth, A.; Smith, A.D.; Thakkar, O. Max-Information, Differential Privacy, and Post-Selection Hypothesis Testing. arXiv 2016, arXiv:1604.03924. [Google Scholar]

- Cai, B.; Daskalakis, C.; Kamath, G. Priv’IT: Private and Sample Efficient Identity Testing. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 635–644. [Google Scholar]

- Sheffet, O. Locally Private Hypothesis Testing. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 4605–4614. [Google Scholar]

- Acharya, J.; Sun, Z.; Zhang, H. Differentially Private Testing of Identity and Closeness of Discrete Distributions. In Advances in Neural Information Processing Systems 31; Curran Associates Inc.: Red Hook, NY, USA, 2018; pp. 6878–6891. [Google Scholar]

- Canonne, C.L.; Kamath, G.; McMillan, A.; Smith, A.; Ullman, J. The Structure of Optimal Private Tests for Simple Hypotheses. In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, Phoenix, Arizona, 23–26 June 2019; pp. 310–321. [Google Scholar]

- Aliakbarpour, M.; Diakonikolas, I.; Kane, D.; Rubinfeld, R. Private Testing of Distributions via Sample Permutations. In Advances in Neural Information Processing Systems 32; Curran Associates Inc.: Red Hook, NY, USA, 2019; pp. 10878–10889. [Google Scholar]

- Csiszár, I.; Körner, J. Information Theory: Coding Theorems for Discrete Memoryless Systems; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

- Wang, Y.; Basciftci, Y.O.; Ishwar, P. Privacy-Utility Tradeoffs under Constrained Data Release Mechanisms. arXiv 2017, arXiv:1710.09295. [Google Scholar]

- Cuff, P. Distributed Channel Synthesis. IEEE Trans. Inf. Theory 2013, 59, 7071–7096. [Google Scholar] [CrossRef]

- Song, E.C.; Cuff, P.; Poor, H.V. The Likelihood Encoder for Lossy Compression. IEEE Trans. Inf. Theory 2016, 62, 1836–1849. [Google Scholar] [CrossRef]

- Wyner, A.D. The Common Information of Two Dependent Random Variables. IEEE Trans. Inf. Theory 1975, 21, 163–179. [Google Scholar] [CrossRef]

- Han, T.S.; Verdú, S. Approximation Theory of Output Statistics. IEEE Trans. Inf. Theory 1993, 39, 752–772. [Google Scholar] [CrossRef]

- Sreekumar, S.; Gündüz, D.; Cohen, A. Distributed Hypothesis Testing Under Privacy Constraints. In Proceedings of the IEEE Information Theory Workshop (ITW), Guangzhou, China, 25–29 November 2018; pp. 1–5. [Google Scholar]

- Tishby, N.; Pereira, F.; Bialek, W. The Information Bottleneck Method. arXiv 2000, arXiv:physics/0004057. [Google Scholar]

- Gamal, A.E.; Kim, Y.H. Network Information Theory; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

- Polyanskiy, Y. Channel Coding: Non-Asymptotic Fundamental Limits. Ph.D. Thesis, Princeton University, Princeton, NJ, USA, 2010. [Google Scholar]

- Yang, W.; Caire, G.; Durisi, G.; Polyanskiy, Y. Optimum Power Control at Finite Blocklength. IEEE Trans. Inf. Theory 2015, 61, 4598–4615. [Google Scholar] [CrossRef]

- Villard, J.; Piantanida, P. Secure Multiterminal Source Coding With Side Information at the Eavesdropper. IEEE Trans. Inf. Theory 2013, 59, 3668–3692. [Google Scholar] [CrossRef][Green Version]

- Gallager, R.G. A simple derivation of the coding theorem and some applications. IEEE Trans. Inf. Theory 1965, 11, 3–18. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sreekumar, S.; Cohen, A.; Gündüz, D. Privacy-Aware Distributed Hypothesis Testing. Entropy 2020, 22, 665. https://doi.org/10.3390/e22060665

Sreekumar S, Cohen A, Gündüz D. Privacy-Aware Distributed Hypothesis Testing. Entropy. 2020; 22(6):665. https://doi.org/10.3390/e22060665

Chicago/Turabian StyleSreekumar, Sreejith, Asaf Cohen, and Deniz Gündüz. 2020. "Privacy-Aware Distributed Hypothesis Testing" Entropy 22, no. 6: 665. https://doi.org/10.3390/e22060665

APA StyleSreekumar, S., Cohen, A., & Gündüz, D. (2020). Privacy-Aware Distributed Hypothesis Testing. Entropy, 22(6), 665. https://doi.org/10.3390/e22060665