Professional or Amateur? The Phonological Output Buffer as a Working Memory Operator

Abstract

1. The Phonological Output Buffer and Its Challenges

2. Sign Language Phonology and Its Distinct Demands on an Output Buffer

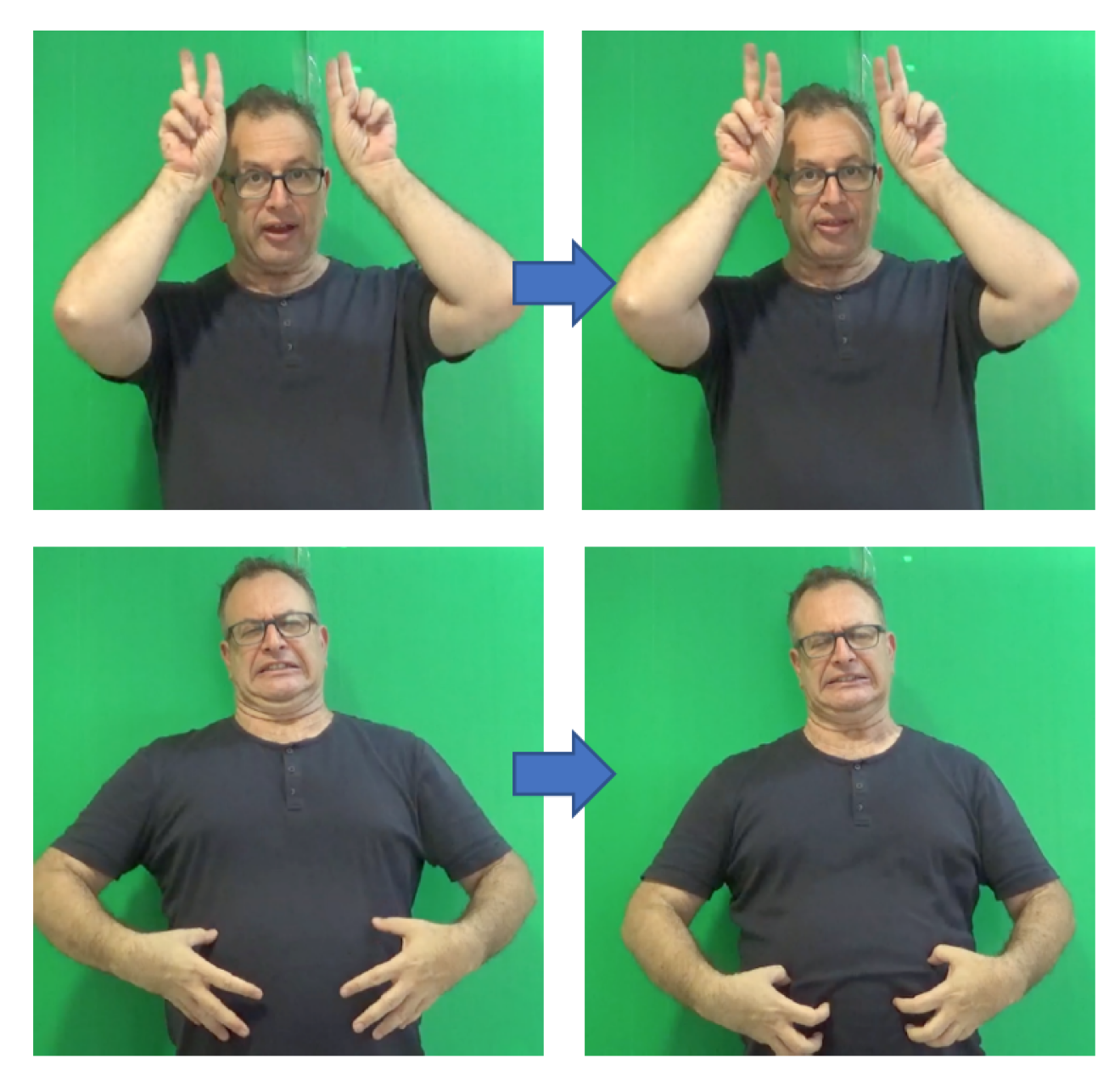

2.1. Markedness of Handshapes

- Unmarked shapes are acquired earlier.

- Unmarked shapes are easier to articulate.

- Unmarked shapes can appear on H2 (the non-dominant hand) even when its handshape differs from that of the dominant hand, while marked shapes cannot.

- Unmarked shapes are more frequent cross-linguistically.

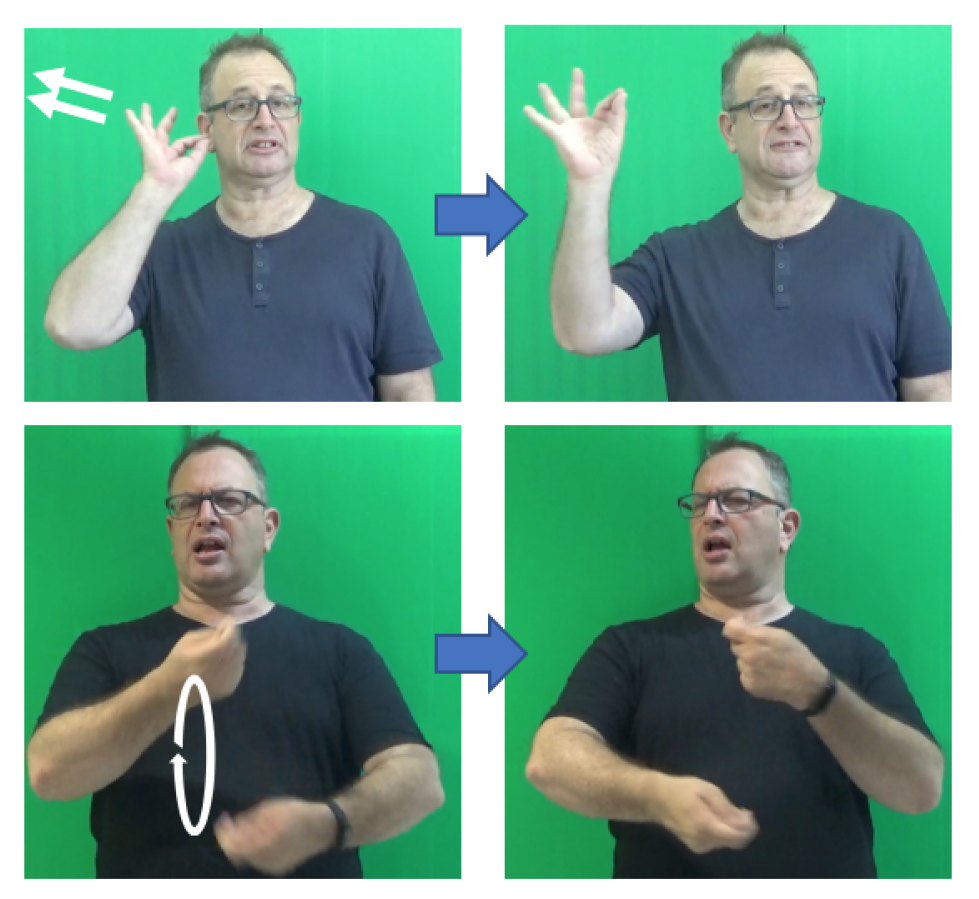

2.2. The Non-Dominant Hand

- type-1: both hands are active, have the same handshape, and perform the same movement.

- type-2: H2 is passive and has the same handshape as the dominant hand.

- type-3: H2 is passive but has a different handshape from the dominant hand.
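The three types amount to a small decision procedure over two features: whether H2 moves, and whether its handshape matches that of the dominant hand. The sketch below is illustrative only; the `TwoHandedSign` record, its field names, and the handshape labels are hypothetical, and a sign with an active H2 that does not mirror the dominant hand is simply rejected, since it falls outside the three types listed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoHandedSign:
    """Hypothetical record of the features relevant to classifying H2."""
    h1_handshape: str            # handshape label of the dominant hand (H1)
    h2_handshape: str            # handshape label of the non-dominant hand (H2)
    h2_active: bool              # True if H2 moves, False if it is passive
    same_movement: bool = False  # True if H2 performs the same movement as H1


def h2_type(sign: TwoHandedSign) -> int:
    """Return 1, 2 or 3 according to the type-1/2/3 classification of H2."""
    same_shape = sign.h1_handshape == sign.h2_handshape
    if sign.h2_active:
        if same_shape and sign.same_movement:
            return 1  # both hands active, same handshape, same movement
        raise ValueError("active H2 outside the three-way classification")
    return 2 if same_shape else 3  # passive H2: same vs. different handshape


print(h2_type(TwoHandedSign("5", "5", h2_active=True, same_movement=True)))  # 1
print(h2_type(TwoHandedSign("B", "B", h2_active=False)))                     # 2
print(h2_type(TwoHandedSign("1", "B", h2_active=False)))                     # 3
```

Only type-3 signs pair two different handshapes, which is where the restriction of H2 to unmarked shapes becomes visible.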

2.3. The Inventory of Phonemes in ISL

2.4. The Syllable in Sign Languages

- Reduction of movement and repetition: the movement and repetition of the individual signs that make up the compound are reduced. This applies most drastically to the first element of the compound.

- Smoothing of the transition between signs: the movement used to transition between the two signs of a compound is reduced spatially and temporally. The two signs are produced closer to each other in signing space, the transition is faster, and the movement between them is smoother.

3. Signing Output Buffer Function and Dysfunction

3.1. Working Memory Mechanisms in Signers of Sign Languages

3.2. Impairments to the POB Acting on Signs

4. A Potts Network Model of Cortically Distributed Compositional Memories

- Its units represent small patches of cortex rather than neurons, each tending to approach one of S local dynamical states, so that attention is focused above the level of the local circuitry and its operations, which are assumed to be largely the same throughout the cortex.

- It assumes that long-term memories of any kind are distributed over many patches, even if localized at the gross system level, so that the network can be analyzed statistically with techniques adapted from statistical physics.

- In certain parameter regimes, it tends to generate spontaneous latching dynamics, i.e., hopping among global cortical states, which can be used to model the endogenous dynamics generated by cognitive processes.
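A toy version of the first two properties can make them concrete: each unit stands for a cortical patch with S active states plus a quiescent null state, and each memory is spread over a sparse subset of units. The sketch below is a minimal, static illustration under stated assumptions, not the authors' model: it uses a covariance (Hebbian) coupling rule of the kind commonly used for Potts networks, zero-temperature synchronous updates, and hypothetical sizes (`N`, `S`, `P`), sparsity `A`, and threshold `U`. It has no adaptation or inhibition, so it retrieves a memory from a degraded cue but cannot latch.

```python
import random

# Hypothetical toy parameters, chosen only so the sketch runs quickly.
N = 200      # Potts units, each standing for a small cortical patch
S = 4        # active local states per unit (state 0 is the quiescent "null" state)
P = 3        # stored long-term memories
A = 0.3      # sparsity: fraction of units active in each memory
U = 0.4      # assumed threshold a field must exceed to leave the null state
NULL = 0


def make_memories(rng):
    """Each memory is distributed over about A*N units, each in a random active state."""
    return [[rng.randint(1, S) if rng.random() < A else NULL for _ in range(N)]
            for _ in range(P)]


def update(state, memories):
    """One synchronous zero-temperature sweep with covariance Potts couplings
    J_ij^{kl} = sum_mu (d(xi_i^mu, k) - A/S) (d(xi_j^mu, l) - A/S) / (N A (1 - A/S))."""
    norm = N * A * (1 - A / S)
    # For each memory, sum over active units j of (d(xi_j, state_j) - A/S).
    totals = [sum((xi[j] == s) - A / S for j, s in enumerate(state) if s != NULL)
              for xi in memories]
    new_state = []
    for i in range(N):
        # Exclude unit i's own contribution (no self-coupling).
        posts = [t - ((xi[i] == state[i]) - A / S if state[i] != NULL else 0.0)
                 for xi, t in zip(memories, totals)]
        fields = [sum(((xi[i] == k) - A / S) * post for xi, post in zip(memories, posts)) / norm
                  for k in range(1, S + 1)]
        best = max(range(S), key=lambda k: fields[k])
        # Winner-take-all among the S active states, or stay null below threshold.
        new_state.append(best + 1 if fields[best] > U else NULL)
    return new_state


def match(state, xi):
    """Fraction of units whose state (including null) agrees with memory xi."""
    return sum(s == x for s, x in zip(state, xi)) / N


rng = random.Random(0)
memories = make_memories(rng)

# Degraded cue: memory 0 with the first 30% of its active units silenced.
cue = list(memories[0])
active = [j for j in range(N) if cue[j] != NULL]
for j in active[: len(active) * 3 // 10]:
    cue[j] = NULL

state = cue
for _ in range(5):
    state = update(state, memories)

print(f"cue match: {match(cue, memories[0]):.2f}, "
      f"retrieved match: {match(state, memories[0]):.2f}")
```

Because each memory is carried by many patches, the silenced ones are restored by the collective field of the rest; making the network hop between memories would additionally require slowly growing adaptation thresholds, which this sketch deliberately leaves out.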

4.1. The Potts Network With Discrete Long-Term Memories

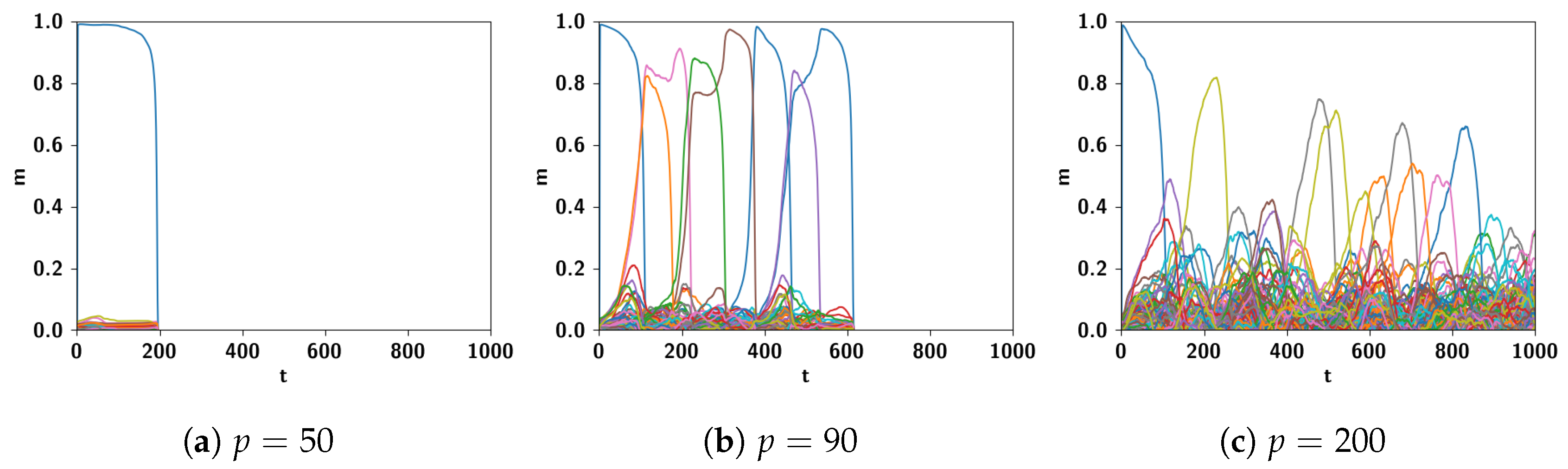

4.2. Potts Model Dynamics

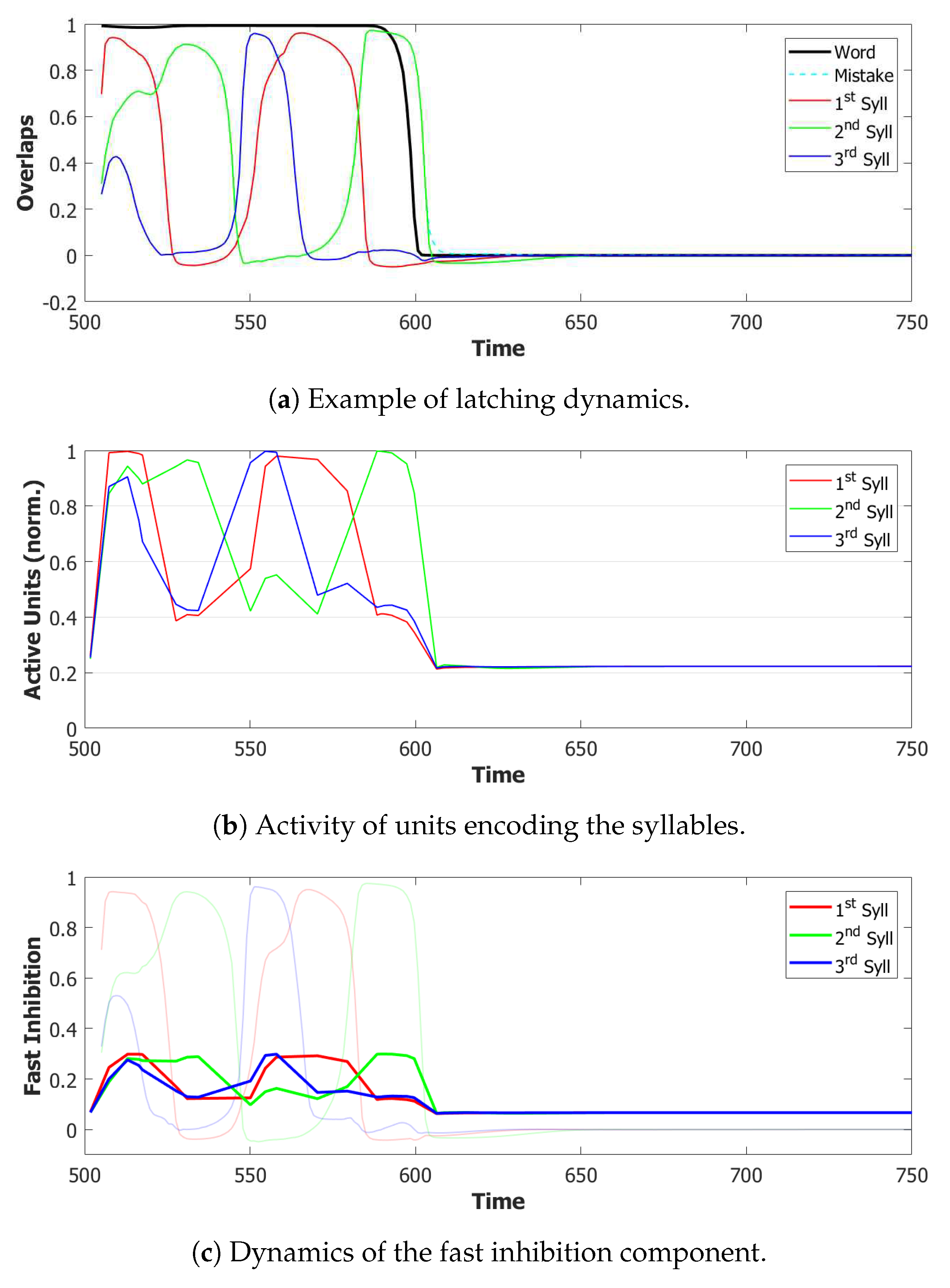

Latching Guided by Heteroassociative Connections

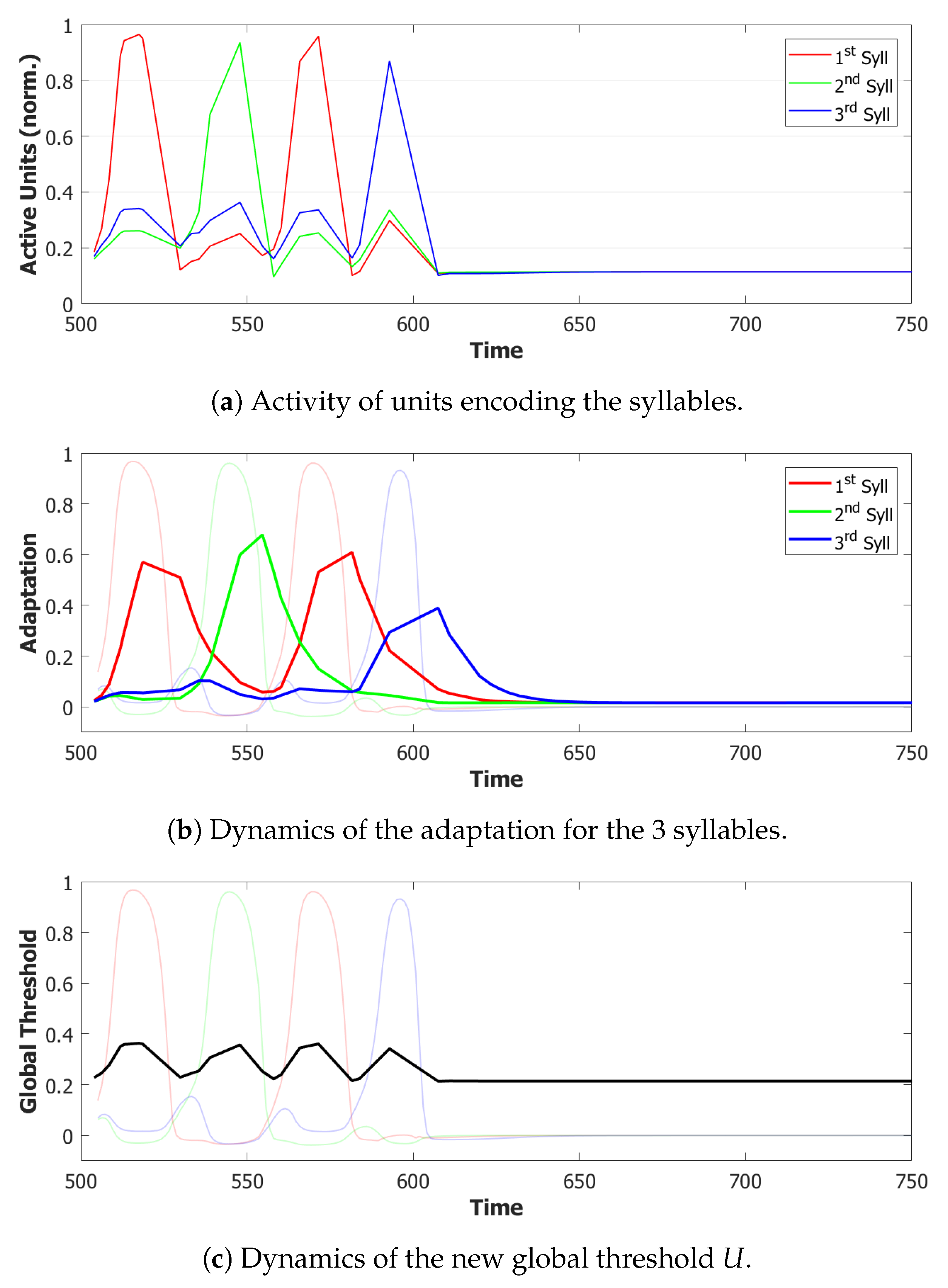

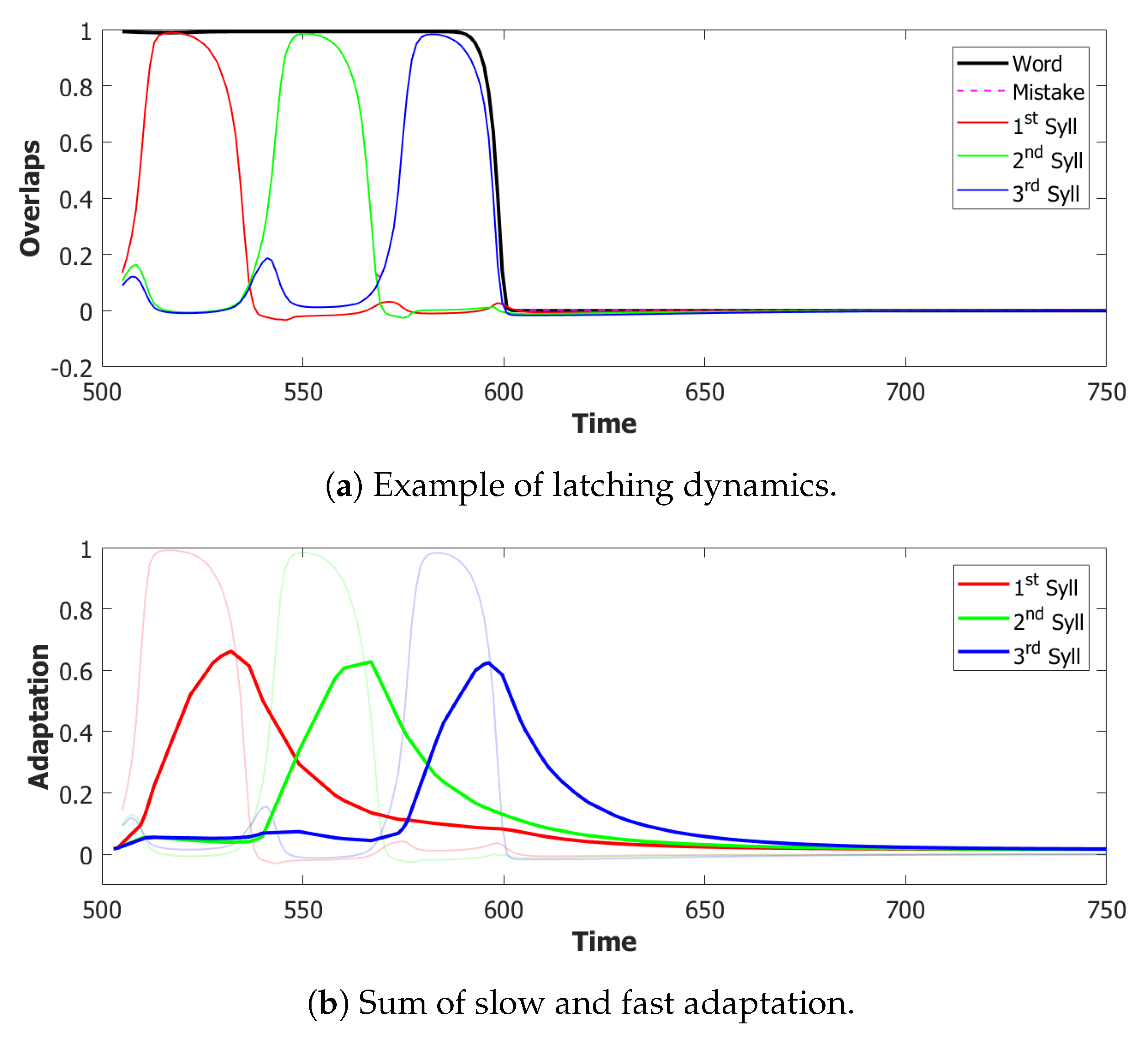

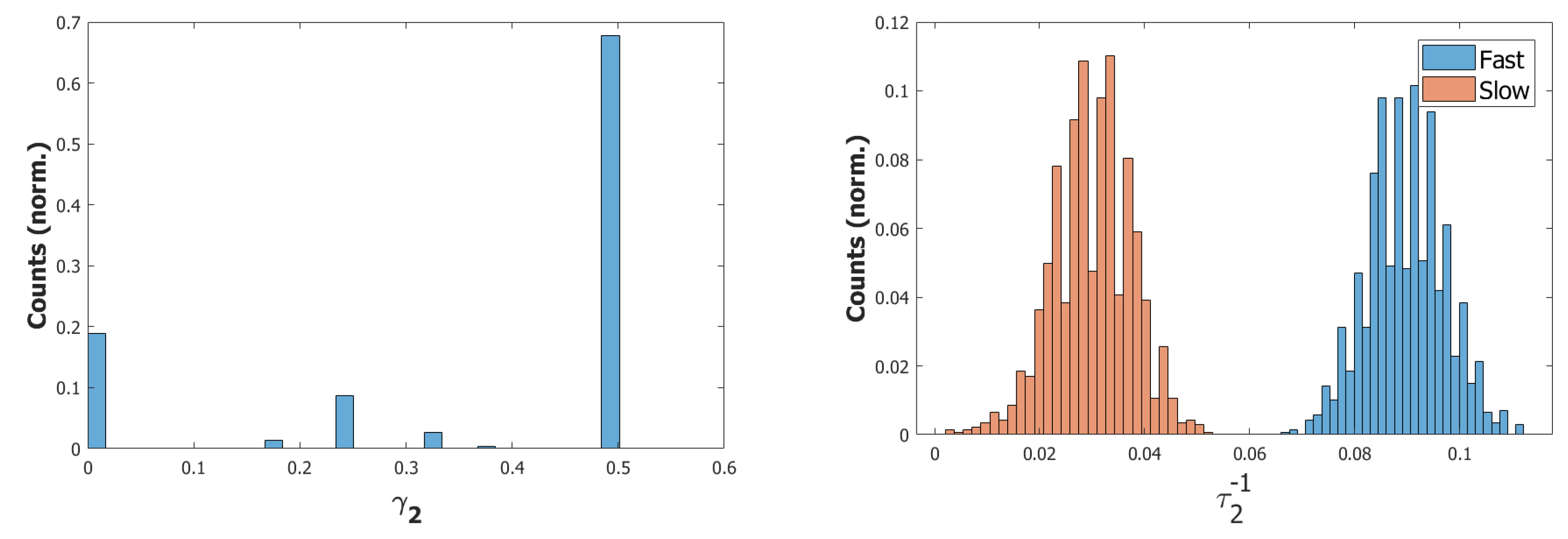

4.3. Short-Term Memory in the Latching Range Extended by Fast and Slow Inhibition

5. A Structured POB Potts Network

5.1. Network Structure

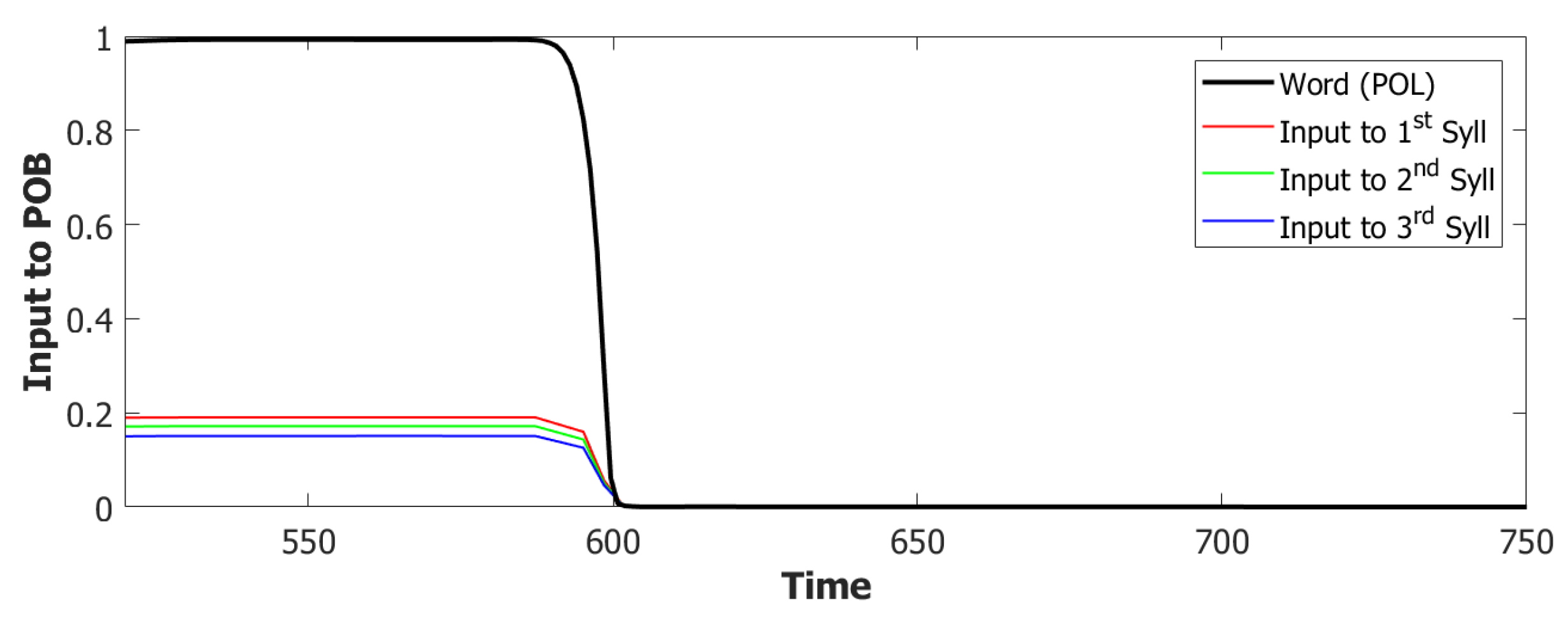

5.1.1. Step 1: Cascade Input

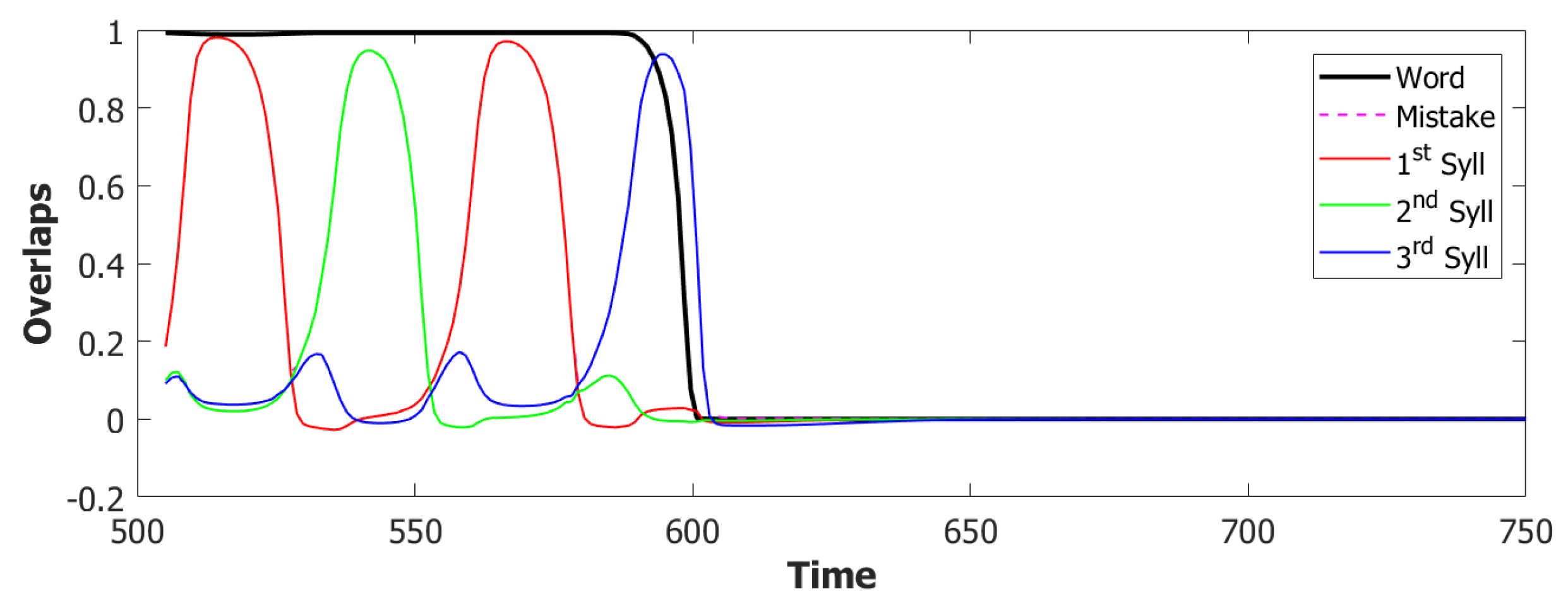

5.1.2. Step 2: Fast Inhibition

5.1.3. Step 3: Dynamic Global Threshold

5.1.4. Step 4: Slow Adaptation

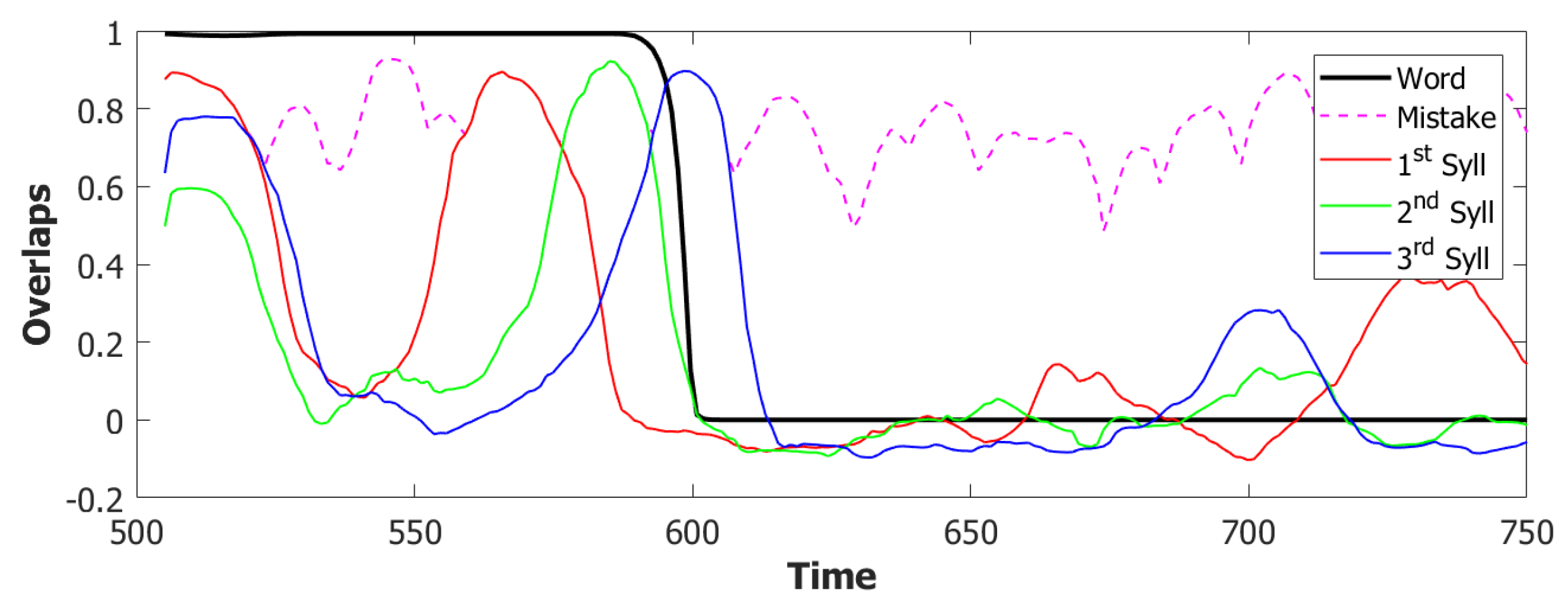

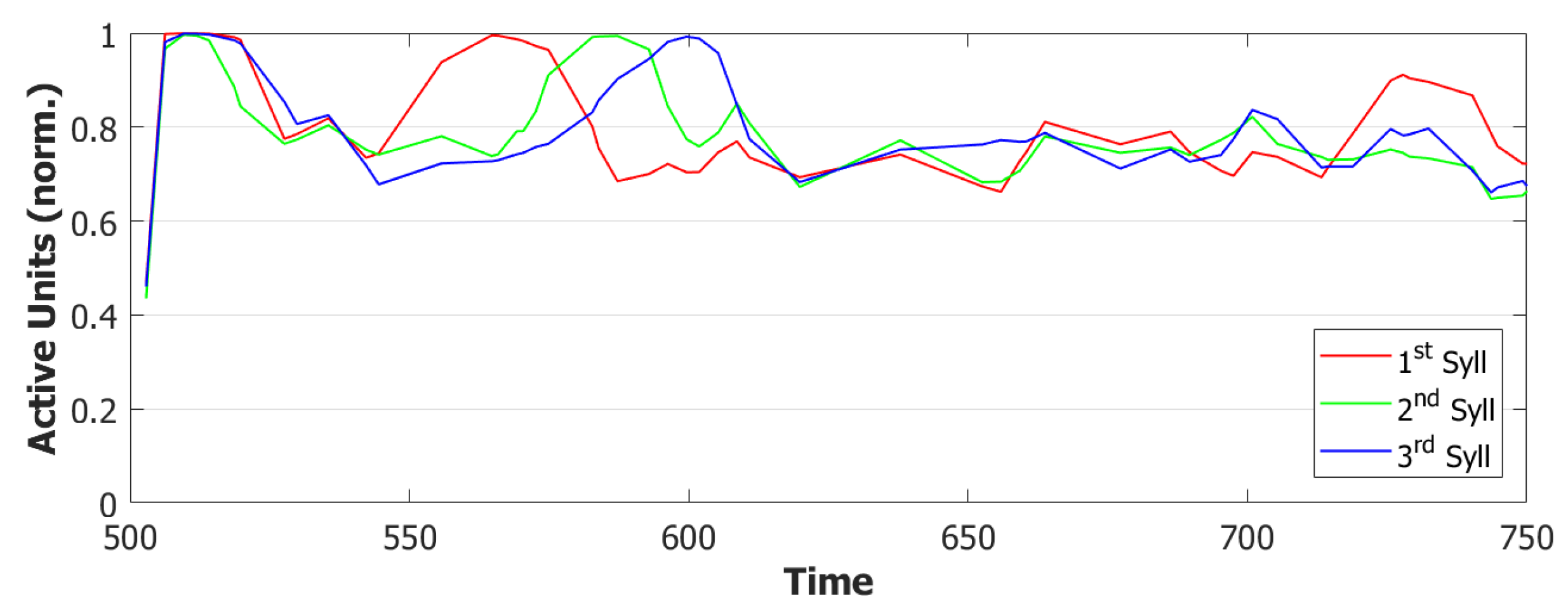

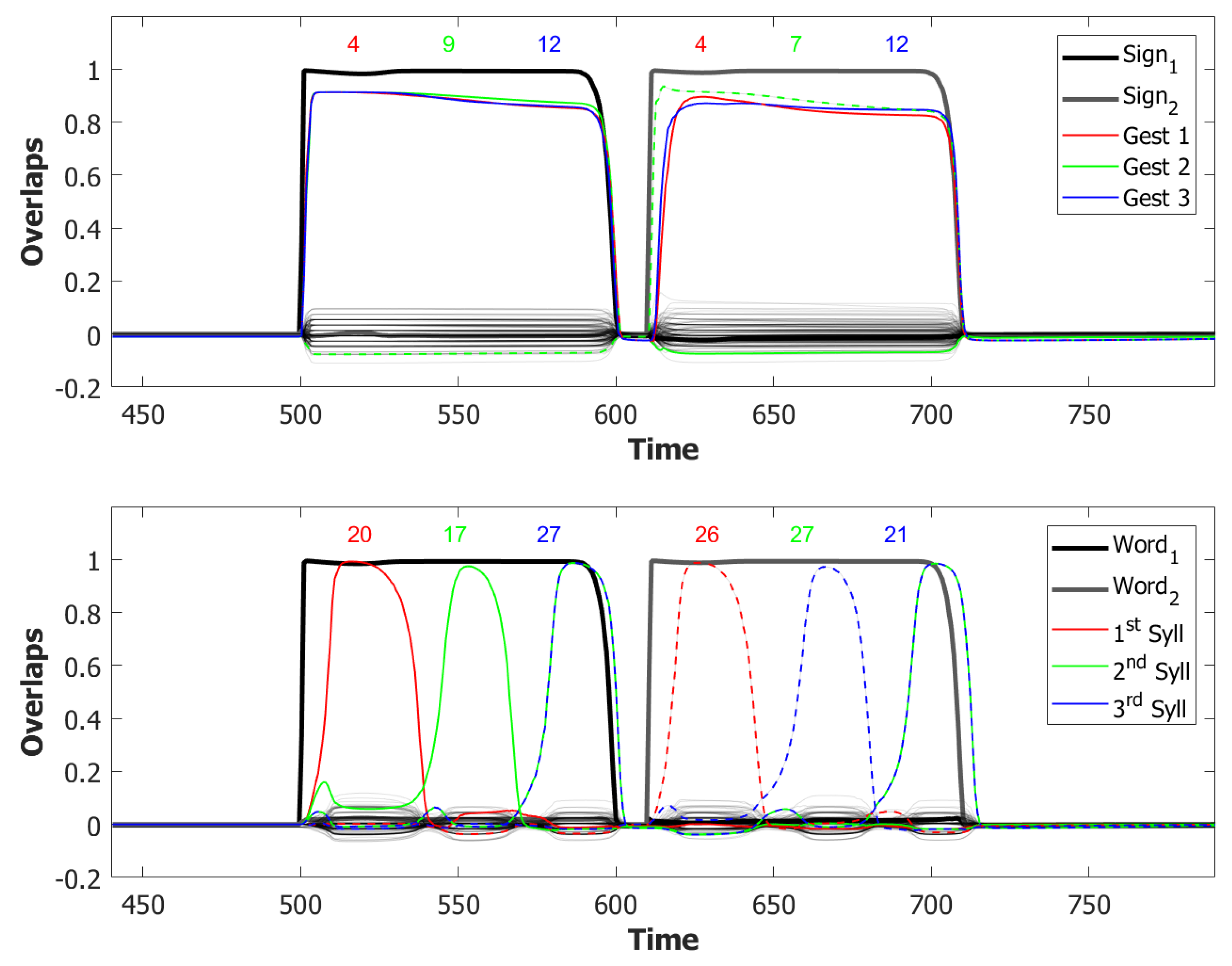

5.2. Simulation Results

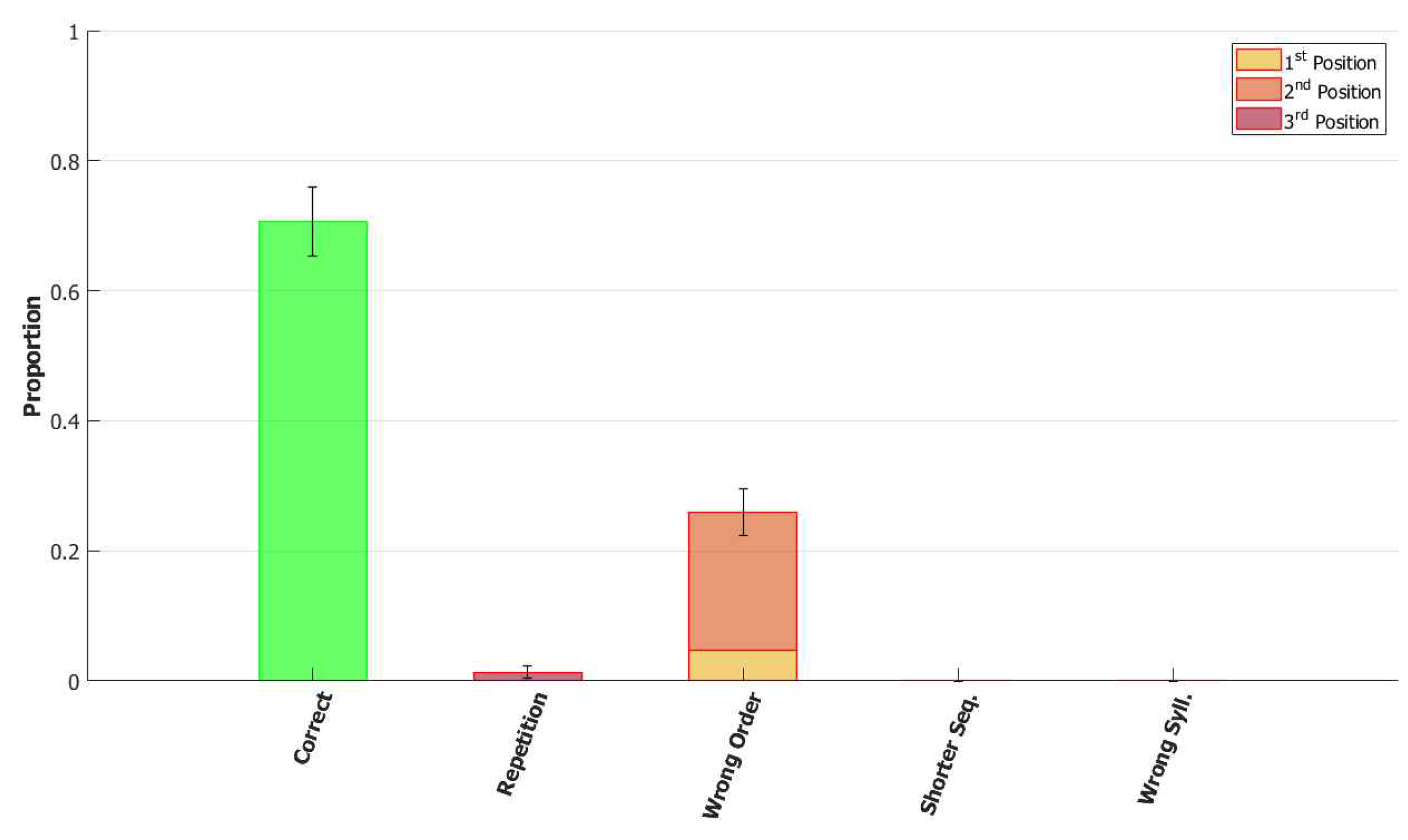

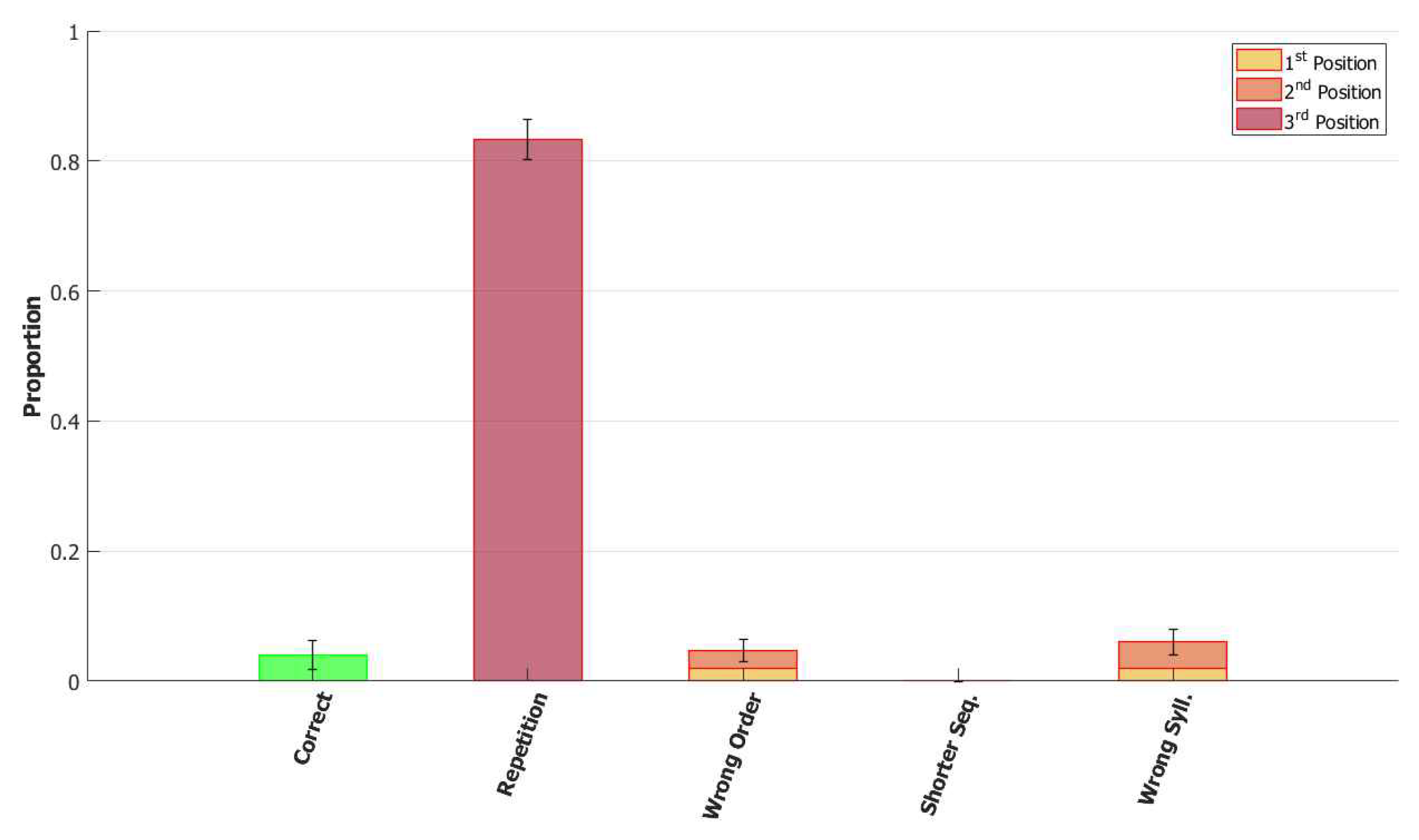

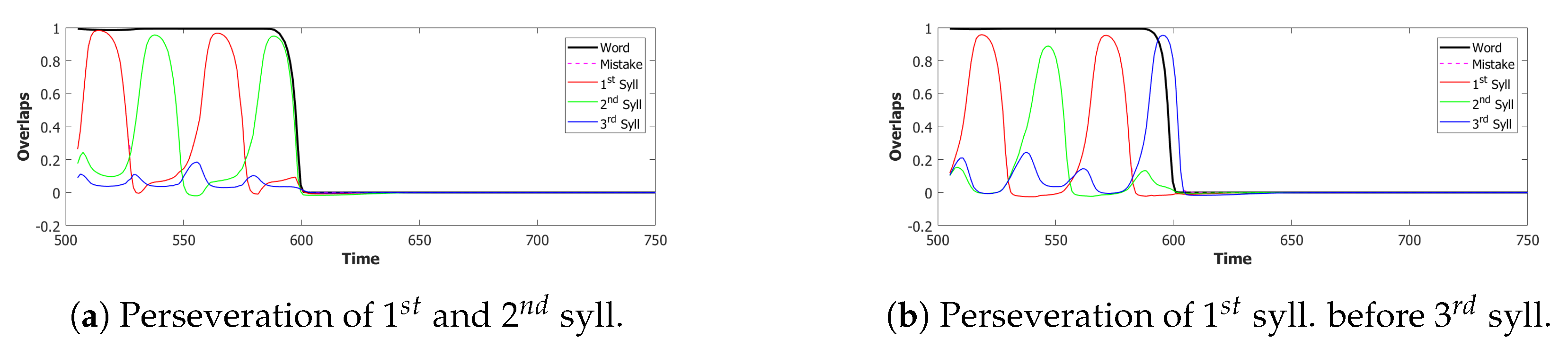

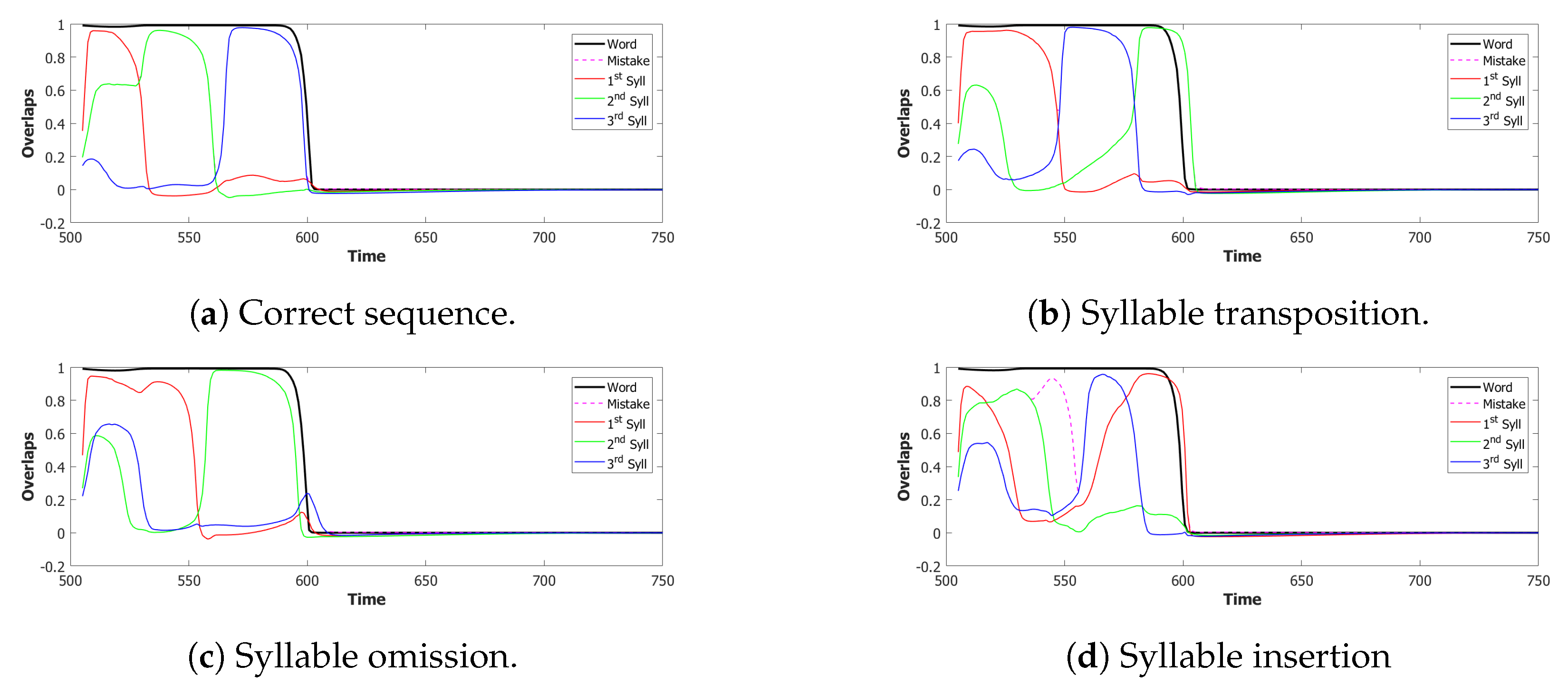

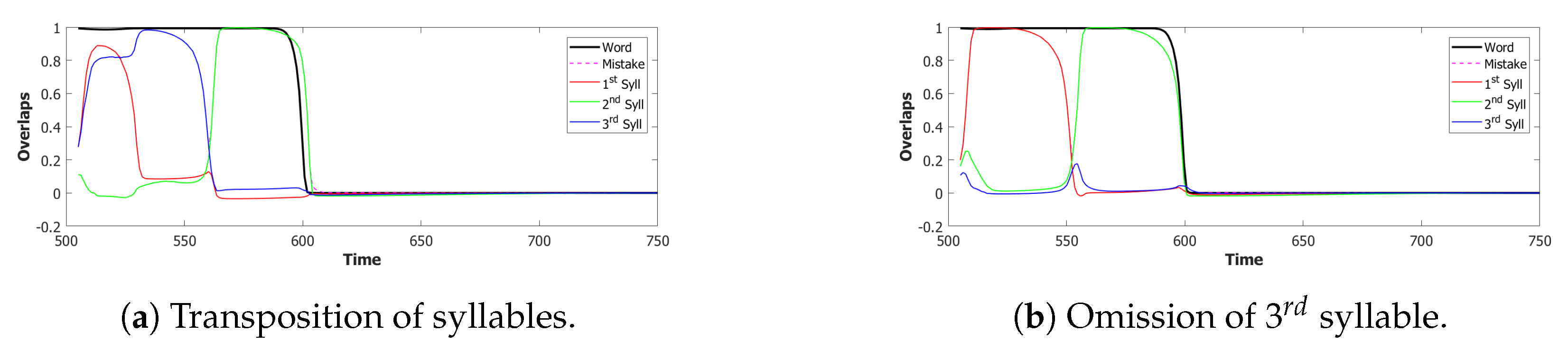

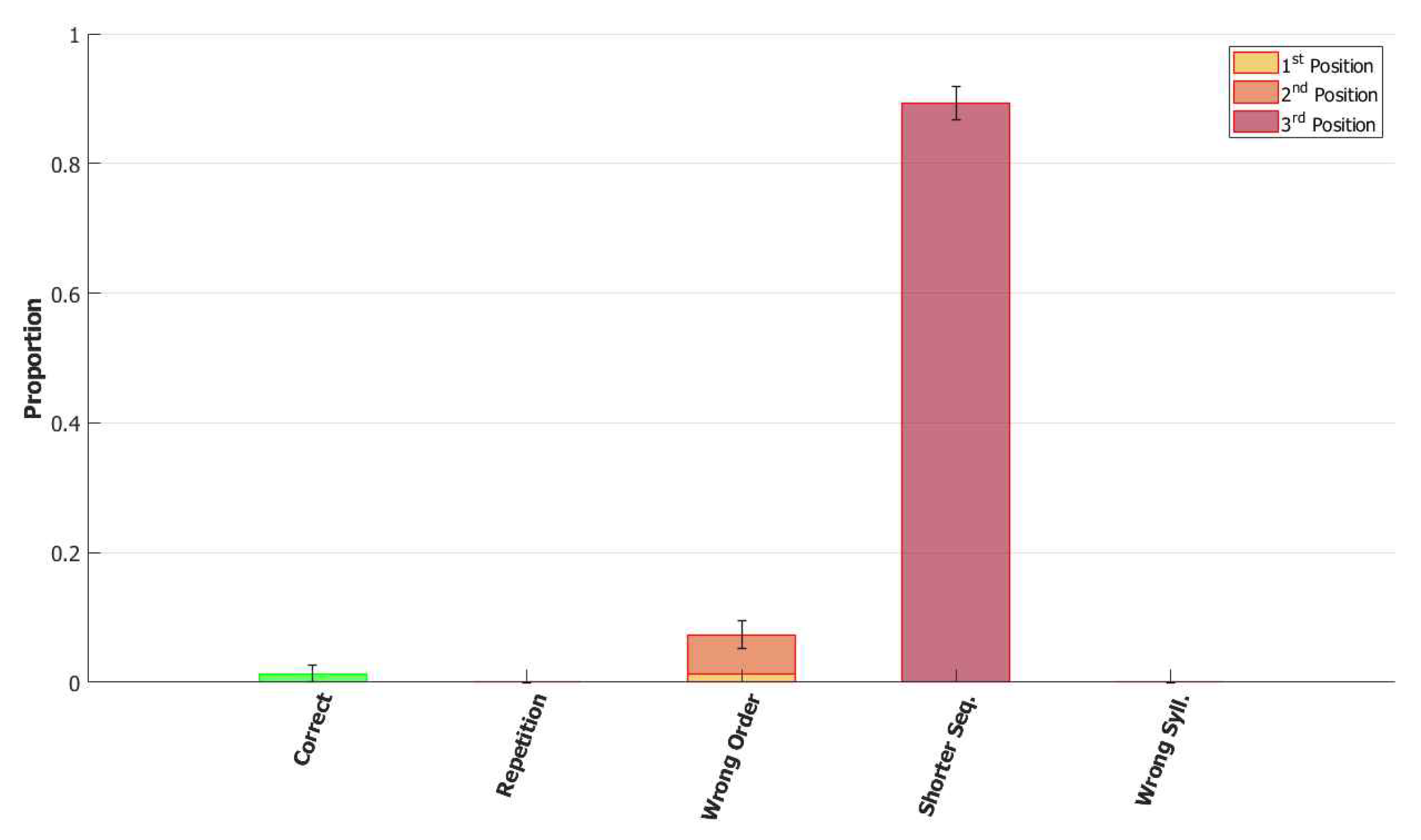

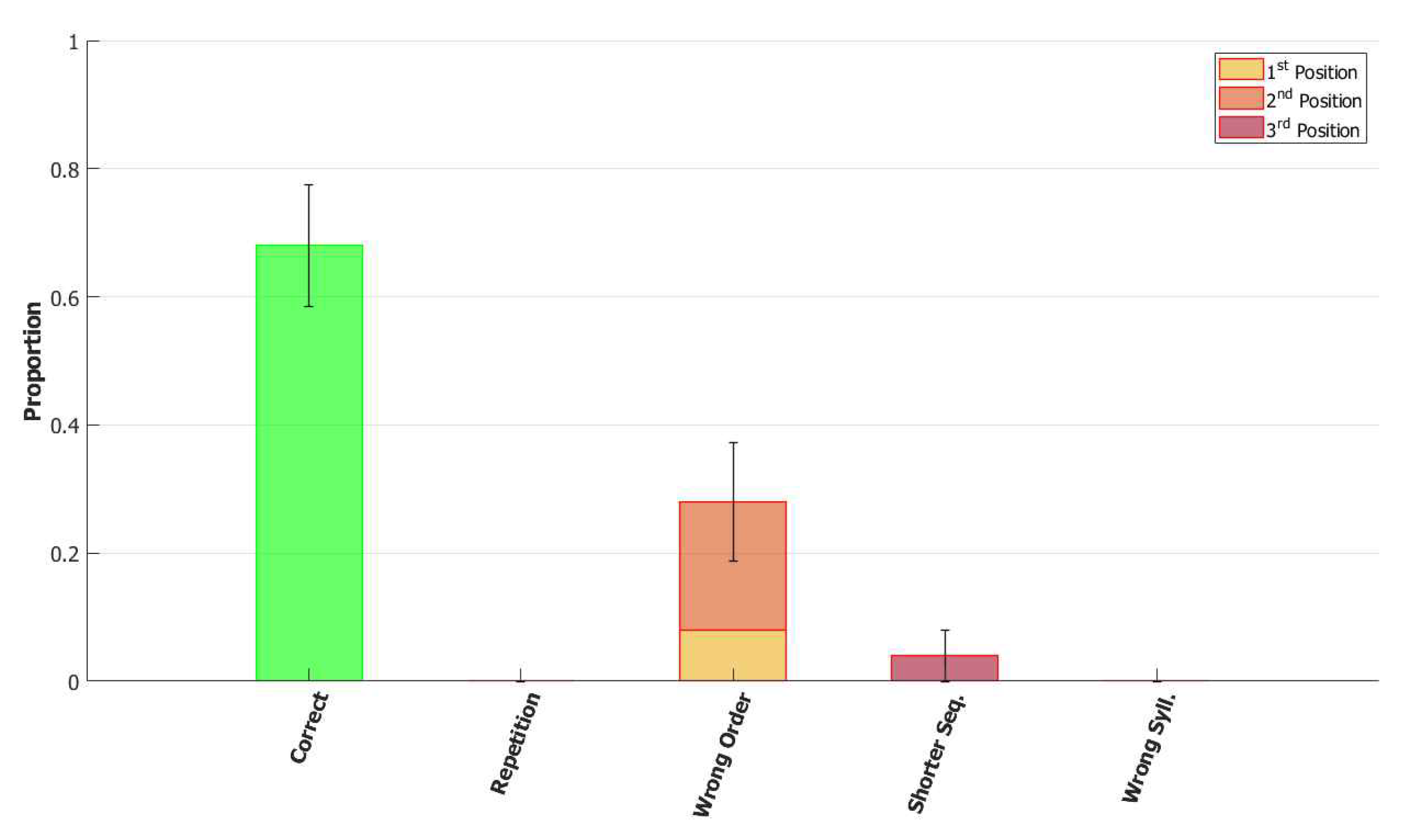

5.2.1. Performance of the POB Model and Points of Failure

5.2.2. Breaking the Network: Analysis of Errors

6. From POB to SOB

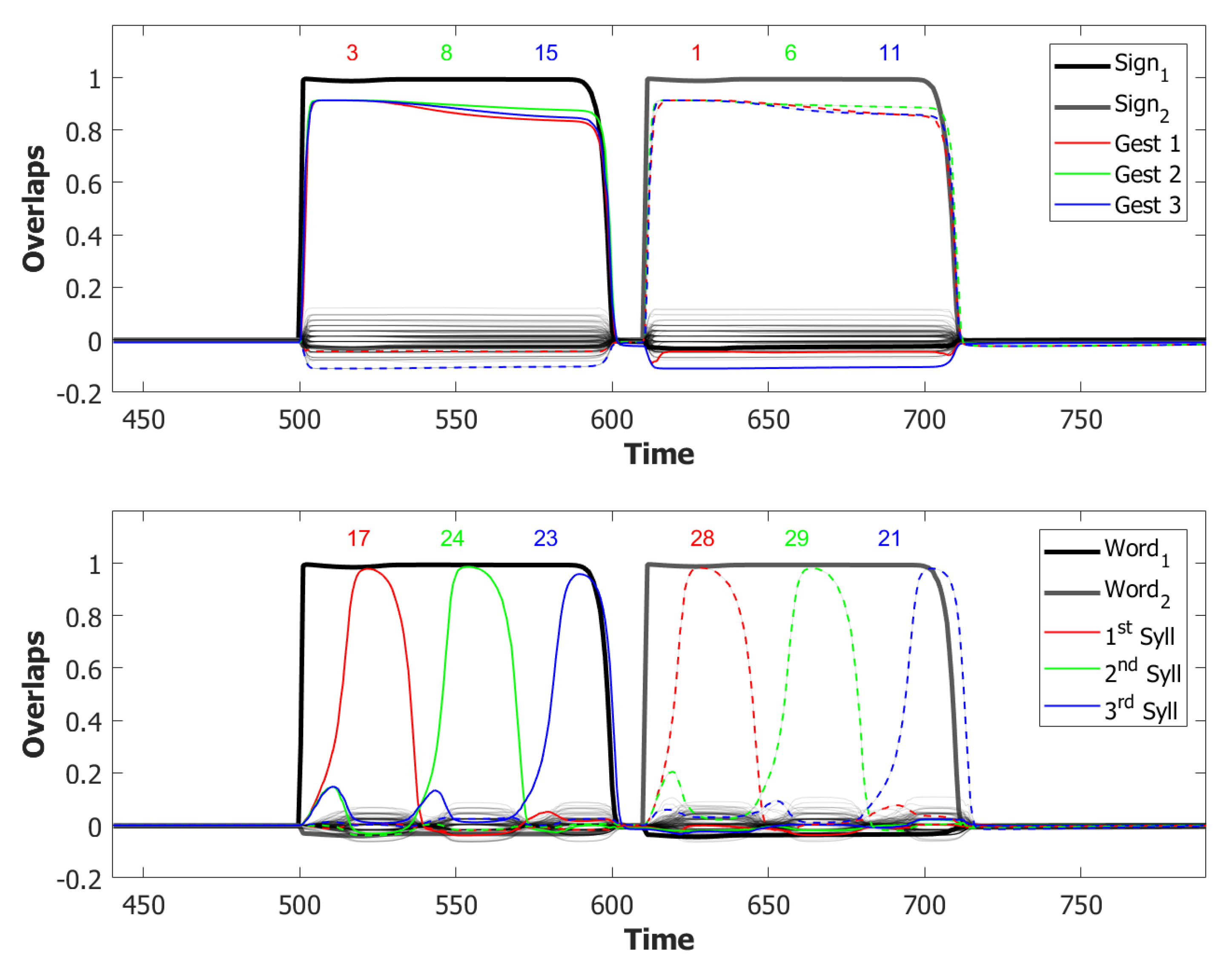

6.1. Sign Patterns

6.2. Inhibition and Adaptation

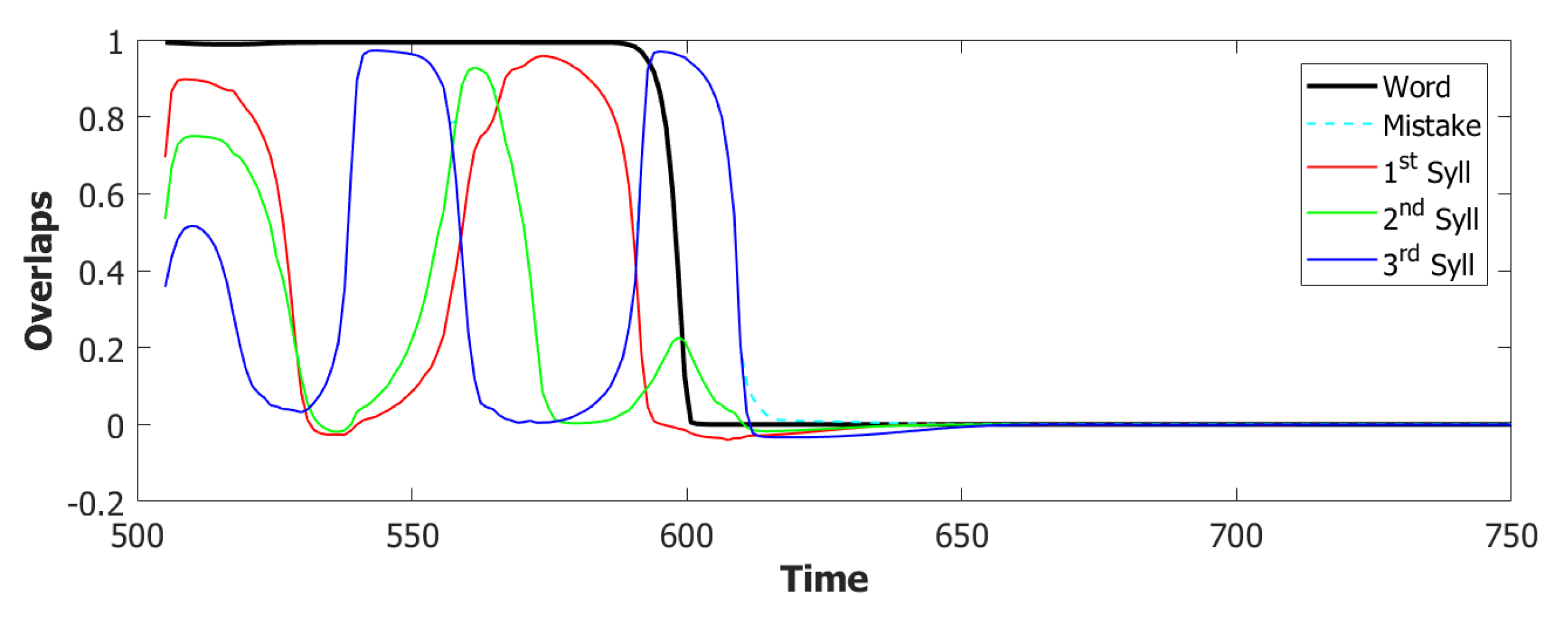

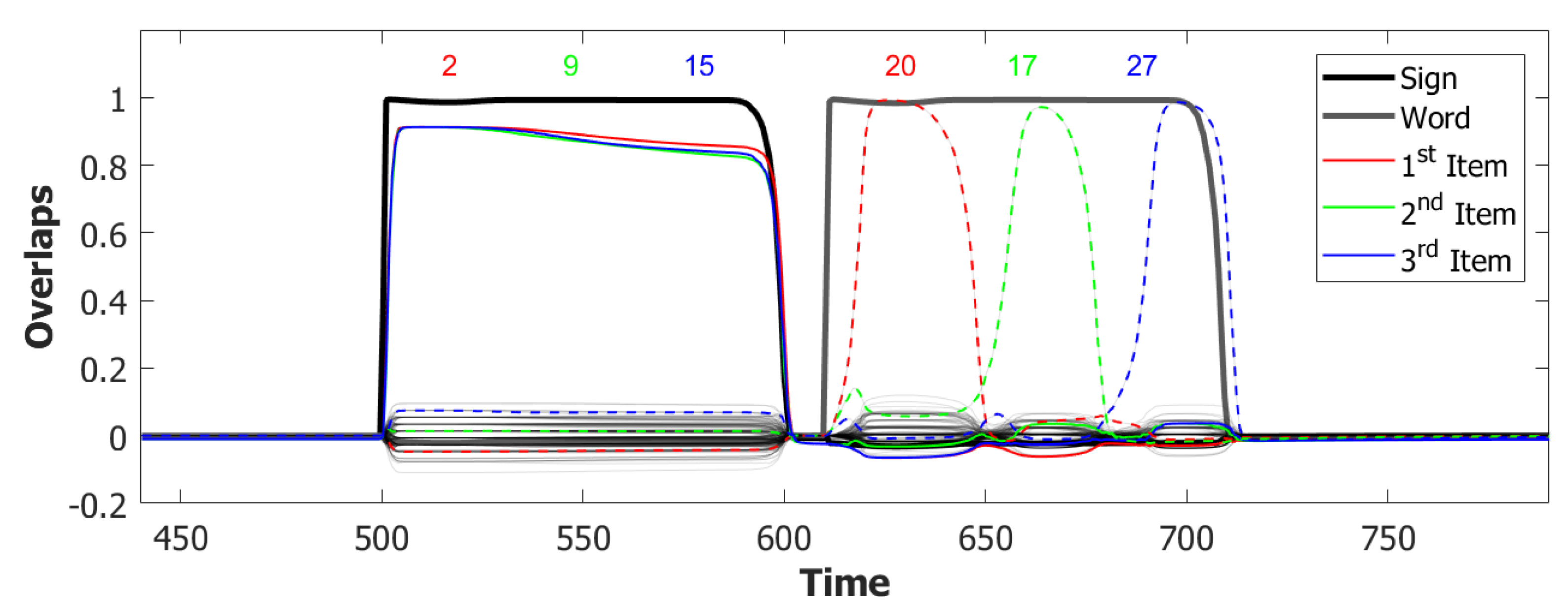

6.3. Simulating the SOB–POB

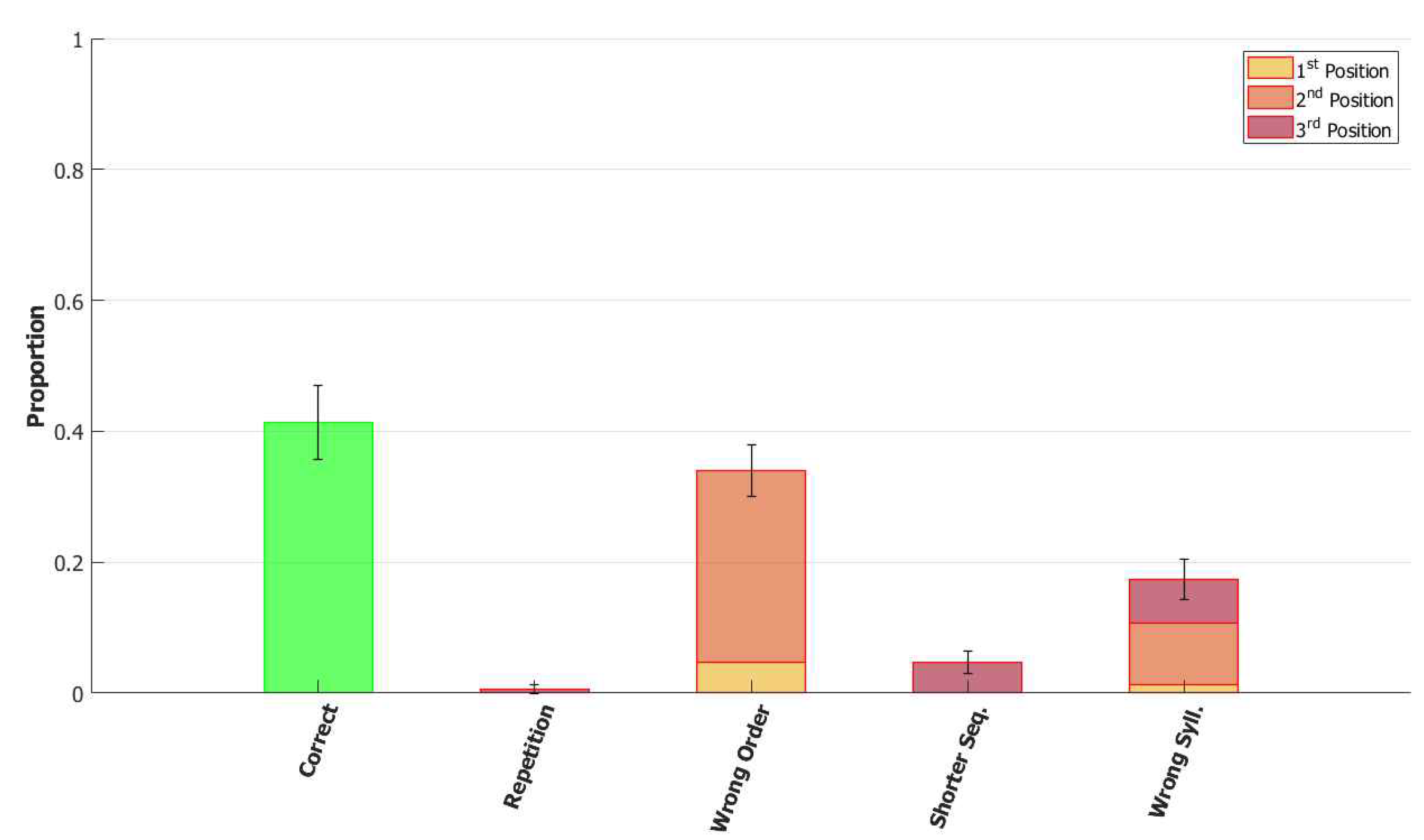

6.4. Signs and Words: Results

7. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Haluts, N.; Trippa, M.; Friedmann, N.; Treves, A. Professional or Amateur? The Phonological Output Buffer as a Working Memory Operator. Entropy 2020, 22, 662. https://doi.org/10.3390/e22060662