1. Introduction

In recent years, we have witnessed increasing attention to the distributed cooperative control of dynamic multi-agent systems due to their vast applications in various fields. In many situations, groups of dynamic agents need to interact with each other, and their goal is to reach an agreement (consensus) on a certain task. Examples include birds flocking during migration so that they eventually reach their destinations [1,2], and robot teams synchronized in order to accomplish their collective tasks [3,4]. The main challenge in the distributed cooperative control of multi-agent systems is that the interaction between agents is based only on local information. There already exists a vast literature concerning first-order [3,5], second-order [6,7], and fractional-order [8,9,10] networks. For a survey of recent results, we refer the reader to [11]. Among the different approaches to the consensus problem in multi-agent networks, one can find continuous-time evolution of the agents' states (the state trajectory is a continuous curve) [3,5,12,13], discrete-time evolution of the agents' states (the state trajectory is a sequence of values) [14,15,16,17,18,19], and evolution covering both the continuous and the discrete case (the domain of the state trajectory is an arbitrary time scale) [20,21,22,23]. An important question connected with the consensus problem is whether the communication topology is fixed or time-varying, that is, whether communication channels are allowed to change over time [24]. The latter case seems more realistic; therefore, researchers mostly focus on it. Going further, in real-world situations it may happen that the agents' states are continuous, but the exchange of information between agents occurs only at discrete time instants (update times). This issue has already been addressed in the literature [25]. In this paper, we also investigate such a situation. However, our approach is new and more challenging: we consider the case when agents exchange information with each other at random instants of time. Another question to be answered is whether the consensus problem is considered with or without a leader. Based on the existence of a leader, there are two kinds of consensus problems: leaderless consensus and leader-following consensus. The latter relies on establishing conditions under which, through local interactions, all the agents reach an agreement upon a common state (consensus), which is defined by the dynamic leader. A great number of works have already been devoted to the consensus problem with a leader (see, e.g., [24,26,27,28] and the references therein).

In the present paper, we investigate leader-following consensus for multi-agent systems. It is assumed that the agents' state variables are continuous, but the exchange of information between them occurs only at discrete time instants (update times) appearing randomly. In other words, the consensus control law is applied only at those update times. We analyze the case when the update times form a sequence of random variables and the waiting time between two consecutive updates is exponentially distributed. To avoid unnecessary complexity, we assume that the update times are the same for all agents. Combining the continuity of the state variables with discrete random communication times requires introducing, for each agent, an artificial state variable that evolves in continuous time and is allowed to have discontinuities; the primary state variable is continuous for all time. Between update times, both the original state and the artificial variable evolve continuously according to some specified dynamics. At randomly occurring update times, the state variable keeps its current value, while the artificial variable is updated using the state values received from other agents, including the leader. It is worth noting that, in the case of deterministic update times known in advance, the idea of artificial state variables was applied in [15,16,25]. The presence of randomly occurring update times in the model completely changes the behavior of the solutions: they change from deterministic real-valued functions to stochastic processes. This requires combining results from probability theory with those from the theory of ordinary differential equations with impulses. In order to analyze the leader-following consensus problem, we define the state error system and a sample path solution of this system. Since solutions to the studied model of a multi-agent system with discrete-time updates at random times are stochastic processes, asymptotically reaching leader-following consensus is understood in the sense of the expected value.

The paper is organized as follows. In Section 2, we describe the multi-agent system of interest in detail. Some necessary definitions, lemmas, and propositions from probability theory are given in Section 3. Section 4 contains our main results. First, we describe a stochastic process that is a solution to the continuous-time system with communications at random times. Next, we prove sufficient conditions for global asymptotic leader-following consensus in a continuous-time multi-agent system with discrete-time updates occurring at random times. In Section 5, illustrative examples with numerical simulations are presented to verify the theoretical results. Some concluding remarks are given in Section 6.

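Notation: For a given vector $x \in \mathbb{R}^n$, $\|x\|$ stands for its Euclidean norm $\|x\| = \sqrt{\sum_{i=1}^{n} x_i^2}$. For a given square matrix $A \in \mathbb{R}^{n \times n}$, $\|A\|$ stands for its spectral norm $\|A\| = \max_i \sqrt{\mu_i}$, where $\mu_i$ are the eigenvalues of $A^{T}A$. We have and .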

2. Statement of the Model

We consider a multi-agent system consisting of N agents and one leader. The state of agent i is denoted by , , and the state of the leader by , where is a given initial time. Without information exchange between agents, the leader has no influence on the other agents (see Example 1, Case 1.1, and Example 2, Case 2.1). In order to analyze the influence of the leader on the behavior of the other agents, we assume that there is information exchange between agents but it occurs only at random update times. In other words, the model is set up as the continuous-time multi-agent system with discrete-time communications/updates occurring only at random times.

Let us denote by $(\Omega, \mathcal{F}, P)$ a probability space, where $\Omega$ is the sample space, $\mathcal{F}$ is a $\sigma$-algebra on $\Omega$, and $P$ is the probability on $\mathcal{F}$. Consider a sequence of independent, exponentially distributed random variables $\{\tau_k\}_{k=1}^{\infty}$ with parameter $\lambda > 0$ such that $\sum_{k=1}^{\infty} \tau_k = \infty$ with probability 1. Define the sequence of random variables $\{\xi_k\}_{k=1}^{\infty}$ by

$$\xi_k = T_0 + \sum_{i=1}^{k} \tau_i, \qquad k = 1, 2, \dots, \tag{1}$$

where $T_0$ is a given initial time. The random variable $\tau_k$ measures the waiting time of the k-th controller update after the (k-1)-st controller update occurs, and the random variable $\xi_k$ is the random time at which the k-th controller update occurs, so that $\xi_k - T_0$ is the length of time until k controller updates occur. At each time $\xi_k$, agent i updates its state variable according to the following equation:

where is the control input function for the i-th agent. Here, is the difference between the value of the state variable of the i-th agent after the update and before it. The state of the leader remains unchanged; that is,

For each agent i, we consider the control law at the random update times, based on the information it receives from its neighboring agents and the leader:

where the inter-agent weights and the leader weights are the entries of the weighted connectivity matrix at time t:

and the leader weight of agent i is positive if the virtual leader is available to agent i at time t, and zero otherwise. Between two consecutive update times, any agent i has information only about its own state. More precisely, the dynamics of agent i are described by
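The random update times described above are straightforward to generate numerically: each update time is the initial time plus a sum of independent, exponentially distributed waiting times with parameter λ. The following Python sketch is only an illustration, not code from this paper; the function name `sample_update_times` is hypothetical, and the waiting times are drawn with the standard library's `random.expovariate`. It also checks empirically that the number of updates in a window of length T averages about λT, as expected for exponential waiting times.

```python
import random

def sample_update_times(t0, lam, horizon, rng):
    """Update times t0 + tau_1 + ... + tau_k that fall in (t0, t0 + horizon].

    Each waiting time tau_k is drawn independently from an exponential
    distribution with rate lam (mean 1 / lam).
    """
    times = []
    t = t0
    while True:
        t += rng.expovariate(lam)  # add the next waiting time
        if t > t0 + horizon:
            return times
        times.append(t)

rng = random.Random(0)  # fixed seed: one reproducible sample path
xi = sample_update_times(t0=0.0, lam=2.0, horizon=10.0, rng=rng)
print(len(xi), xi[:3])

# Averaged over many sample paths, the number of updates in a window of
# length 10 should be close to lam * 10 = 20 (a Poisson-distributed count).
counts = [len(sample_update_times(0.0, 2.0, 10.0, rng)) for _ in range(2000)]
print(sum(counts) / len(counts))
```

Fixing the seed of a dedicated `random.Random` instance makes a single sample path reproducible, which is convenient when comparing several sample path solutions.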

The leader for the multi-agent system is an isolated agent with constant reference state

Observe that the model described above can be written as a system of differential equations with impulses at the random update times, with exponentially distributed waiting times between two consecutive updates, as follows:

with initial conditions

We introduce an additional (artificial) variable for each state variable, which is allowed to have discontinuities at the random update times. These variables allow us to keep the state of each agent as a continuous function of time. Between two update times, the evolution of the state variables and the artificial variables is given by

Then, by Equations (2) and (4), we obtain

Consequently, we get the following system:

At each update time we set:

The initial conditions for (5) and (6) are:

Observe that the dynamics described by (5) lead to a decrease in the absolute difference between the state variable and the artificial variable of each agent. By contrast, according to (6), the value of the artificial variable is updated using the information received, while the state variable remains unchanged. Therefore, Equations (5) and (6) provide a formal description of the multi-agent system with continuous-time agent states and information exchange between agents occurring at discrete time instants.
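To make the roles of the state variable and the artificial variable concrete, the following minimal Python sketch computes one sample path. The specific dynamics are assumptions chosen for illustration, not the paper's Equations (5) and (6): between updates each state x_i relaxes toward its artificial variable y_i via x_i' = -(x_i - y_i) while y_i is frozen, and at each update time only y_i is reset by the consensus-type rule y_i <- x_i + sum_j a_ij (x_j - x_i) + b_i (x_leader - x_i). The names `flow`, `simulate_sample_path`, `a`, `b`, and `leader` are hypothetical. Passing a fixed list of waiting times corresponds to choosing one value of each random waiting time, i.e., one sample path.

```python
import math
import random

def flow(x, y, dt):
    """Exact solution of x_i' = -(x_i - y_i) with y frozen, advanced by dt."""
    w = math.exp(-dt)
    return [yi + (xi - yi) * w for xi, yi in zip(x, y)]

def simulate_sample_path(x, y, leader, a, b, waiting_times, t_end):
    """Return the agents' states at time t_end for one sample path.

    Time starts at 0 and update k happens after the k-th waiting time.
    The state x stays continuous; only the artificial variable y jumps.
    """
    x, y = list(x), list(y)
    n = len(x)
    t = 0.0
    for tau in waiting_times:
        if t + tau > t_end:
            break  # the next update would fall after t_end
        x = flow(x, y, tau)  # continuous evolution up to the update time
        t += tau
        # impulsive reset of the artificial variables (x itself is unchanged)
        y = [x[i]
             + sum(a[i][j] * (x[j] - x[i]) for j in range(n))
             + b[i] * (leader - x[i])
             for i in range(n)]
    return flow(x, y, t_end - t)  # evolve from the last update to t_end

# Three agents on a complete graph with weight 0.1; the leader (state 1.0)
# is available to every agent with weight 0.5 at each update.
a = [[0.0, 0.1, 0.1], [0.1, 0.0, 0.1], [0.1, 0.1, 0.0]]
b = [0.5, 0.5, 0.5]
x0 = [3.0, -2.0, 0.5]
rng = random.Random(1)
taus = [rng.expovariate(1.0) for _ in range(400)]  # waiting times, rate 1

x_end = simulate_sample_path(x0, x0, 1.0, a, b, taus, t_end=100.0)
print(max(abs(xi - 1.0) for xi in x_end))  # small: the agents track the leader

# No information exchange at all: the states never move.
zeros = [[0.0] * 3 for _ in range(3)]
x_free = simulate_sample_path(x0, x0, 1.0, zeros, [0.0] * 3, taus, t_end=100.0)
print(x_free)
```

Under these assumed dynamics, the errors shrink toward zero when the leader is available to every agent, while without any exchange the states stay at their initial values, which mirrors the qualitative contrast between Case 1.1 and Case 1.2 in Section 5.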

Let us define the errors between each state variable or artificial variable and the leader state at time t. Then, by (5)–(7), one gets the following error system:

where the coefficients are the entries of the matrix

Now let us introduce the matrices

Then, in vector notation, we can write error system (8) in the following matrix form:

where
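Whether the impulsive resets contract the leader-tracking error can be checked numerically. Under the hypothetical reset rule y_i <- x_i + sum_j a_ij (x_j - x_i) + b_i (x_leader - x_i) (an assumption for illustration; the paper's matrices in (9) are not reproduced here), the errors e_i = x_i - x_leader are mapped by M = I - L - diag(b), where L is the graph Laplacian built from the weights a_ij. The sketch below builds M and computes its spectral norm (the matrix norm fixed in the Notation paragraph) by power iteration on M^T M, using only the standard library. The function names are hypothetical.

```python
import math

def error_update_matrix(a, b):
    """M = I - L - diag(b) for the assumed reset rule (a[i][i] must be 0)."""
    n = len(b)
    return [[1.0 - sum(a[i]) - b[i] if i == j else a[i][j] for j in range(n)]
            for i in range(n)]

def spectral_norm(m, iters=500):
    """Largest singular value of a square matrix m, via power iteration on m^T m.

    The fixed start vector is fine for the examples below; in general a
    random start vector is safer.
    """
    n = len(m)
    v = [1.0] + [0.0] * (n - 1)  # unit start vector
    sigma_sq = 0.0
    for _ in range(iters):
        mv = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        w = [sum(m[i][j] * mv[i] for i in range(n)) for j in range(n)]  # m^T (m v)
        norm_w = math.sqrt(sum(c * c for c in w))
        if norm_w == 0.0:
            return 0.0
        sigma_sq = norm_w  # ||m^T m v|| with ||v|| = 1 approaches sigma_max^2
        v = [c / norm_w for c in w]
    return math.sqrt(sigma_sq)

a = [[0.0, 0.1, 0.1], [0.1, 0.0, 0.1], [0.1, 0.1, 0.0]]
with_leader = spectral_norm(error_update_matrix(a, [0.5, 0.5, 0.5]))
no_leader = spectral_norm(error_update_matrix(a, [0.0, 0.0, 0.0]))
print(with_leader, no_leader)  # approximately 0.5 and 1.0
```

When the leader is unavailable to every agent (all b_i = 0), M maps the vector of ones to itself, so its spectral norm cannot be below 1 and the resets alone cannot remove a common offset from the leader. This is in line with Remark 3, by which (A1) requires the leader to be available to each agent at every update time.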

4. Leader-Following Consensus

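Consider the sequence of points $\{t_k\}_{k=1}^{\infty}$, where the point $t_k$ is an arbitrary value of the corresponding random waiting time $\tau_k$. Define the increasing sequence of points $\{T_k\}_{k=1}^{\infty}$ by $T_1 = T_0 + t_1$ and $T_{k+1} = T_k + t_{k+1}$ for $k = 1, 2, \dots$, where $T_0$ is the initial time.

Remark 1. Note that if $t_k$ is a value of the random variable $\tau_k$, $k = 1, 2, \dots$, then $T_k$ is a value of the random update time $\xi_k$, $k = 1, 2, \dots$, correspondingly.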

Since the multi-agent system with the leader described by system (2)–(3) is equivalent to system (9), we focus on initial value problem (9).

Let us consider the following system of impulsive differential equations with fixed points of impulses and fixed length of action of the impulses:

or its equivalent matrix form

Note that system (11) is a system of impulsive differential equations with impulses at deterministic time moments. For a deeper discussion of impulsive differential equations, we refer the reader to [31] and the references therein. The solution to (11) depends not only on the initial condition but also on the moments of impulses, i.e., on the arbitrarily chosen values of the random waiting times, and is given by

The set of all solutions of the initial value problems of type (11), for all values of the random waiting times, generates a stochastic process with an uncountable state space. We call this process a solution to initial value problem (9). Following the idea of a sample path of a stochastic process [29,32], we define a sample path solution of the studied system (9).

Definition 2. For any given values of the random waiting times, the solution of the corresponding initial value problem (10) is called a sample path solution of initial value problem (9).

Definition 3. A stochastic process with an uncountable state space is said to be a solution of initial value problem (9) if, for any values of the random waiting times, the corresponding function is a sample path solution of initial value problem (9).

Let a stochastic process with an uncountable state space be a solution of the initial value problem with random impulses (9).

Definition 4. We say that the leader-following consensus is reached asymptotically in multi-agent system (2) if, for any and any , where .

Remark 2. Observe that since , , and initial value problem (2)–(3) is equivalent to initial value problem (9), equality (12) means that , where .
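Because solutions are stochastic processes, asymptotic leader-following consensus is understood in the sense of the expected value, as stated in the Introduction. A Monte Carlo sketch makes this concrete: average the error norm over many independently sampled sequences of update times. The dynamics below are hypothetical and chosen only for illustration (the error e relaxes toward an auxiliary error z between updates, and z <- M e at each exponentially distributed update time, with an illustrative matrix M); the function names and parameters are assumptions, not the paper's model.

```python
import math
import random

def error_at(t_end, e0, m, lam, rng):
    """Error vector at time t_end along one randomly sampled sample path.

    Assumed dynamics: between updates e_i' = -(e_i - z_i) with z frozen;
    at each update (exponential waiting times with rate lam) z <- m e.
    Initially z = e, so nothing moves before the first update.
    """
    e, z = list(e0), list(e0)
    n = len(e)
    t = 0.0
    while True:
        tau = rng.expovariate(lam)
        dt = min(tau, t_end - t)
        w = math.exp(-dt)
        e = [z[i] + (e[i] - z[i]) * w for i in range(n)]
        if tau >= t_end - t:
            return e  # the next update falls at or after t_end
        t += tau
        z = [sum(m[i][j] * e[j] for j in range(n)) for i in range(n)]

def expected_error_norm(t_end, e0, m, lam, paths=500, seed=0):
    """Monte Carlo estimate of E[||e(t_end)||] over independent sample paths."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(paths):
        e = error_at(t_end, e0, m, lam, rng)
        total += math.sqrt(sum(c * c for c in e))
    return total / paths

m = [[0.3, 0.1, 0.1], [0.1, 0.3, 0.1], [0.1, 0.1, 0.3]]
e0 = [2.0, -3.0, -0.5]
for t in (0.0, 2.0, 10.0):
    print(t, expected_error_norm(t, e0, m, lam=1.0))
```

The estimate starts at the norm of the initial error and decreases as t grows, which is the kind of behavior Definition 4 asks for, here observed for one illustrative model only.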

Now we prove the main results of the paper, which are sufficient conditions for the leader-following consensus in a continuous-time multi-agent system with discrete-time updates occurring at random times.

Theorem 1. Assume that:

- (A1)

The inequalities (13) hold, and there exists a real number such that (14) and (15) hold.

- (A2)

The random variables , are independently exponentially distributed with the parameter λ such that .

Then, for any initial point, the solution of the initial value problem with random moments of impulses (9) is given by formula (16), and the expected value of the solution satisfies the inequality

Proof. Fix an arbitrary initial time. According to (A1), we have

and

For each k, we choose an arbitrary value of the k-th random waiting time and define the corresponding increasing sequence of points. By Remark 1, for any natural k, the k-th point of this sequence is a value of the random variable describing the k-th update time. Consider the initial value problem of impulsive differential equations with fixed points of impulses (11). The solution of initial value problem (11) is given by the formula

Then we get the following estimation:

The solutions of (11) generate the continuous stochastic process defined by (16). It is a solution to the initial value problem of impulsive differential equations with random moments of impulses (9). According to Proposition 2, Proposition 3, and inequality (17), we get

Therefore, applying Corollary 1, we obtain

□
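The expectation step can be illustrated with a standard fact about exponential waiting times: if the waiting times are independent and exponentially distributed with parameter λ, the number of updates in an interval of length s is Poisson distributed with mean λs, so the probability of exactly k updates is $e^{-\lambda s}(\lambda s)^k / k!$. Suppose, hypothetically, that a sample path bound has the form $q^k e^{\gamma s}$ whenever exactly k updates have occurred, with illustrative constants $q \in (0,1)$ and $\gamma$ (these are not the quantities in (13)–(17), and Propositions 2 and 3 of Section 3 are not reproduced here). Averaging over k gives the closed form $e^{(\gamma - \lambda(1-q))s}$, which decays exactly when $\lambda(1-q) > \gamma$, i.e., when updates are frequent enough; this is a condition on the rate λ, the same kind of parameter that (A2) constrains. The Python sketch below checks the series against the closed form; the function names are hypothetical.

```python
import math

def averaged_bound(q, gamma, lam, s, kmax=200):
    """Sum over k of P(k updates in time s) * q**k * exp(gamma * s).

    The number of updates in a window of length s is Poisson(lam * s)
    when the waiting times are i.i.d. exponential with rate lam.
    """
    p = math.exp(-lam * s)  # P(no update in the window)
    total = 0.0
    for k in range(kmax + 1):
        total += p * q ** k
        p *= lam * s / (k + 1)  # P(k + 1 updates) from P(k updates)
    return total * math.exp(gamma * s)

def closed_form(q, gamma, lam, s):
    """exp((gamma - lam * (1 - q)) * s), the exact value of the series."""
    return math.exp((gamma - lam * (1.0 - q)) * s)

for s in (1.0, 5.0, 20.0):
    print(s, averaged_bound(0.5, 0.2, 1.0, s), closed_form(0.5, 0.2, 1.0, s))
```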

Remark 3. The inequalities (14) and (15) can be satisfied only if the leader is available to every agent at every update time, that is, only if no leader weight vanishes at any update time. Indeed, assume that there exist an agent and an update time at which the leader weight is zero. Then inequality (14) reduces to . If , then from (14) it follows that , i.e., , which is not possible since . Therefore, assume that and . Hence which is again a contradiction with the assumption that .

Theorem 2. If the assumptions of Theorem 1 are satisfied, then leader-following consensus for multi-agent system (2) is reached asymptotically.

Proof. The claim follows from Theorem 1, Remark 1, the equality , and the inequalities for . □

According to Remark 3, condition (A1) is satisfied only when the leader is available to each agent at every random update time. This situation can be interpreted as follows. The leader can be viewed as the root node of the communication network; if there exists a directed path from the root to each agent (device), then all the agents can track the objective successfully. Since the leader can perceive more information in order to guide the whole group to complete the task (consensus), it seems reasonable to require that the leader be available to each follower at every random update time.
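The graph-theoretic interpretation above (the leader as the root of the communication network, with a directed path to every agent) can be checked with a breadth-first search. This minimal Python sketch uses a hypothetical adjacency convention stated in its docstring, and the function name is also hypothetical. It tests only this weaker reachability property; by Remark 3, condition (A1) of Theorem 1 is more demanding, since it requires the leader to be available to each agent at every update time.

```python
from collections import deque

def leader_reaches_all(a, b):
    """True if every agent can be reached from the leader by a directed path.

    Assumed convention: a[i][j] > 0 means agent i receives information from
    agent j (edge j -> i); b[i] > 0 means agent i receives from the leader.
    """
    n = len(b)
    reached = [b[i] > 0 for i in range(n)]  # direct followers of the leader
    queue = deque(i for i in range(n) if reached[i])
    while queue:
        j = queue.popleft()
        for i in range(n):
            if a[i][j] > 0 and not reached[i]:
                reached[i] = True
                queue.append(i)
    return all(reached)

# Chain: leader -> agent 0 -> agent 1 -> agent 2
chain = [[0, 0, 0], [1, 0, 0], [0, 1, 0]]
print(leader_reaches_all(chain, [1, 0, 0]))  # True
print(leader_reaches_all(chain, [0, 0, 0]))  # False: no agent hears the leader
```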

5. Illustrative Examples

In this section, numerical examples are given to verify the effectiveness of the proposed sufficient conditions for a multi-agent system to asymptotically achieve leader-following consensus. In all examples, we consider a sequence of independent, exponentially distributed random waiting times with parameter λ (specified in each example) and the sequence of random update times defined by (1).

Example 1. Let us consider a system of three agents and the leader. In order to illustrate the meaningfulness of the studied model and the obtained results, we consider three cases.

Case 1.1. There is no information exchange between agents, and the leader is not available.

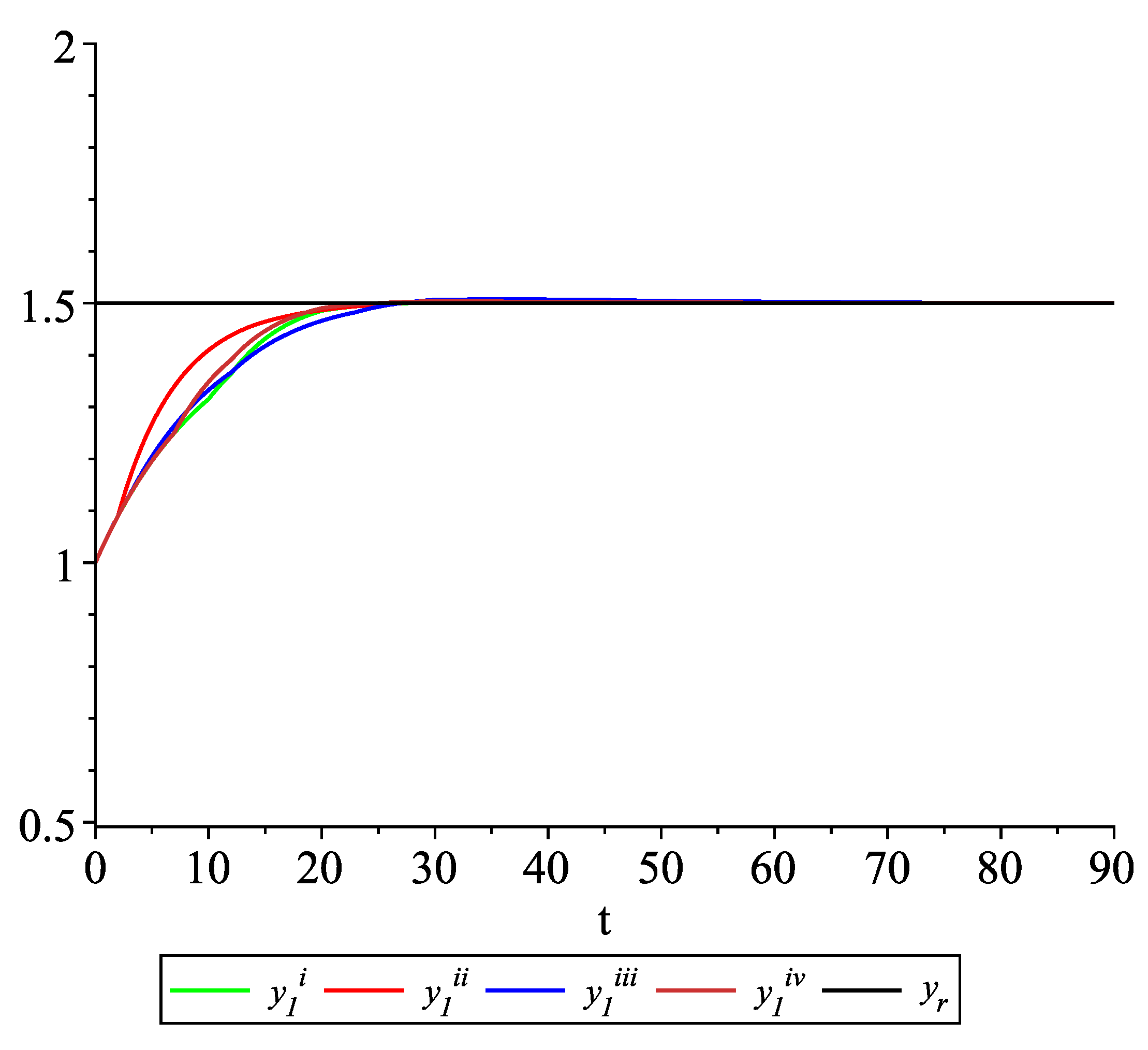

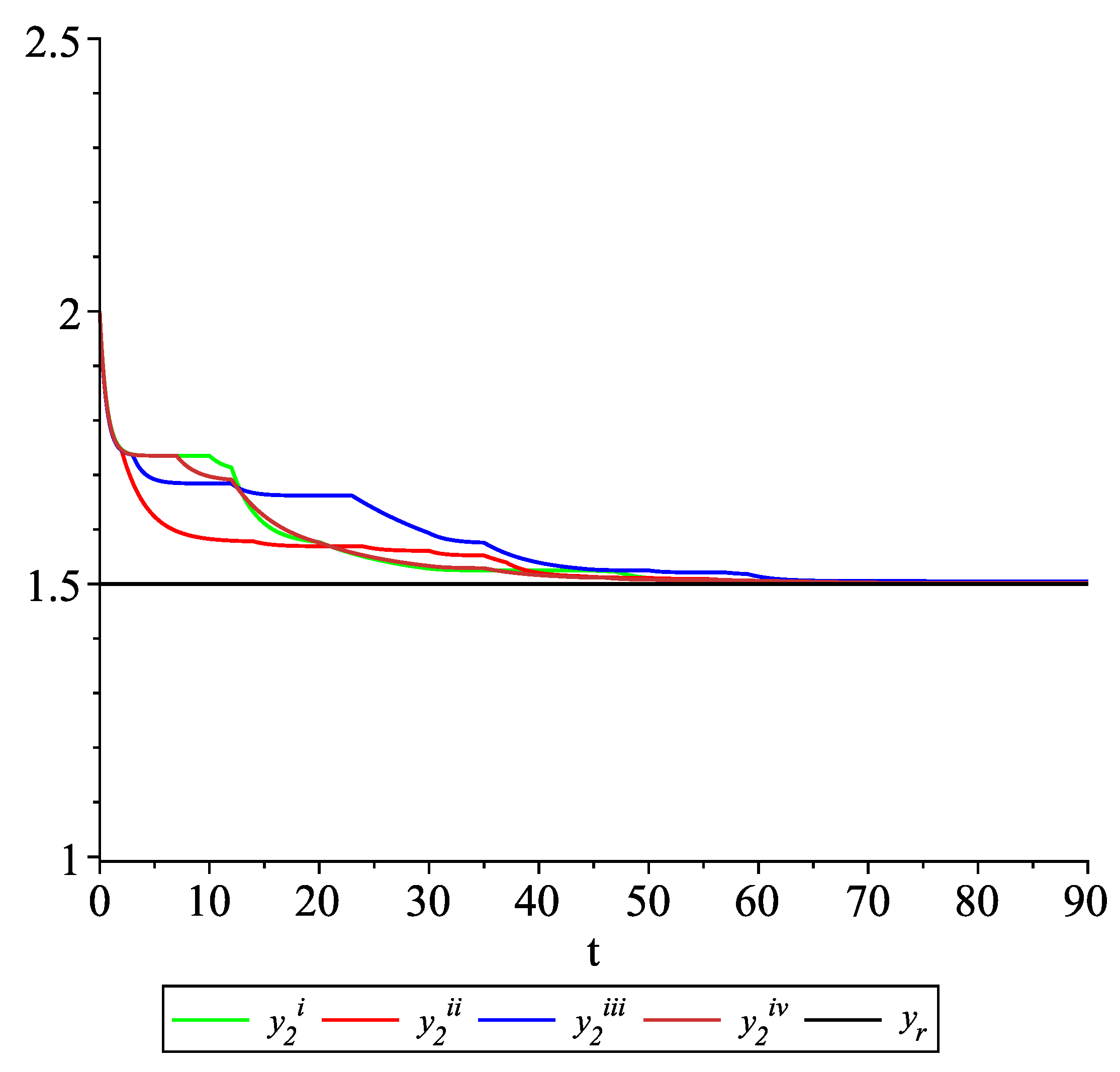

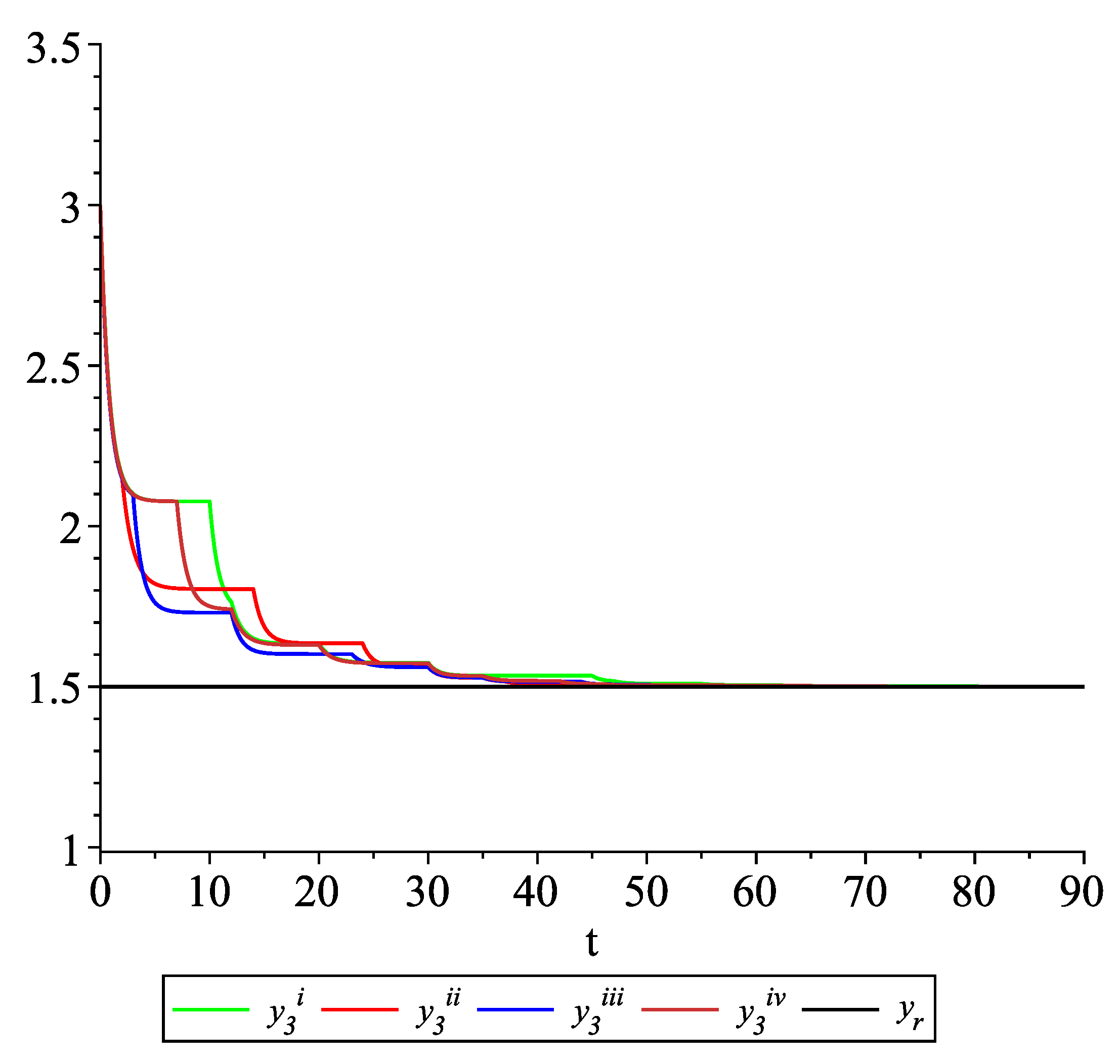

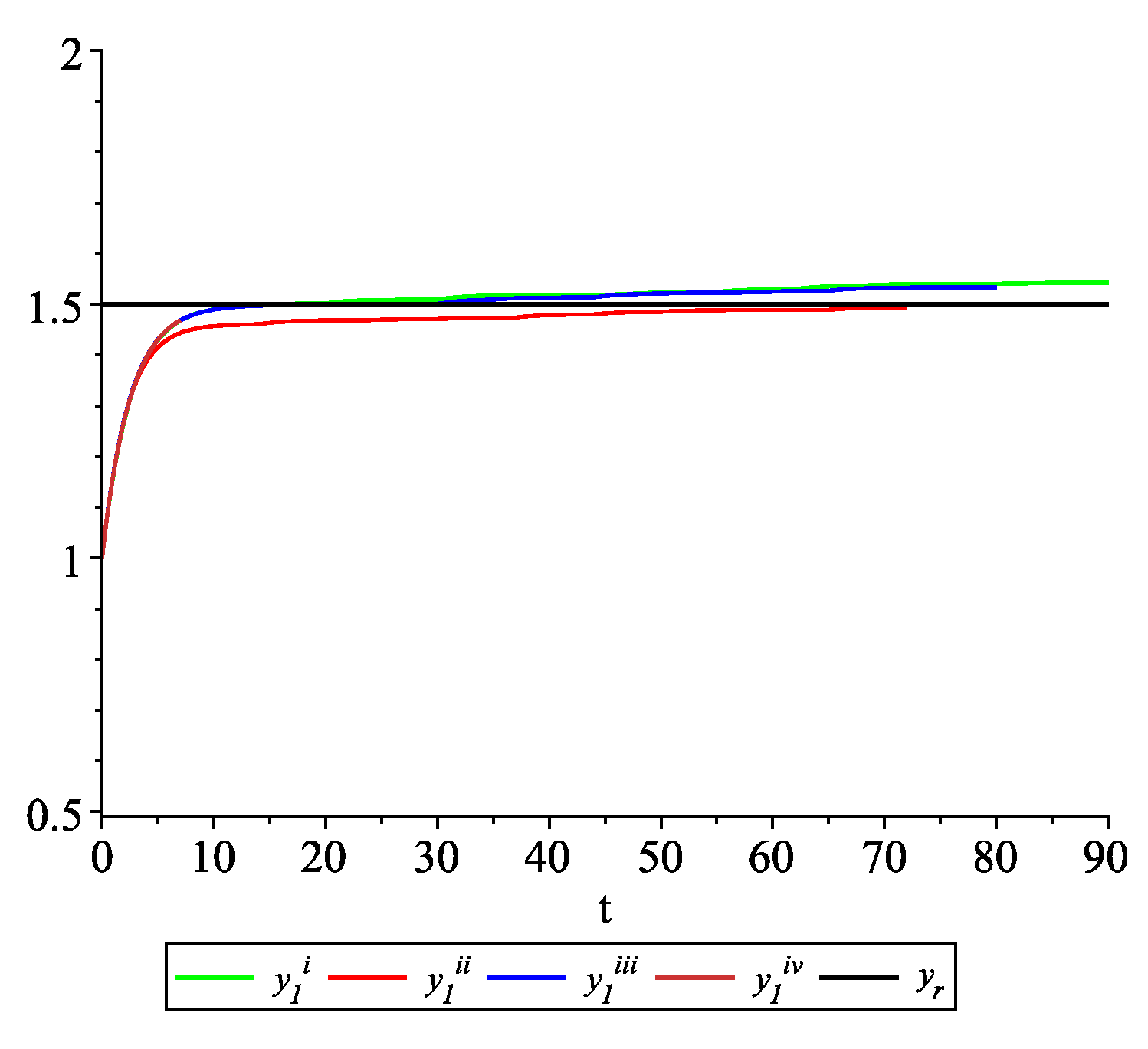

The dynamics of the agents are given by (18). Figure 1 shows the solution to system (18) with the chosen initial values of the three agents and the leader. From the graphs in Figure 1, it can be seen that leader-following consensus is not reached.

Case 1.2. There is information exchange between agents (including the leader) occurring at random update times.

The dynamics of each agent and of the leader between two update times are given by (19) (compare with (18)). The consensus control law at any update time is given by (20). Observe that Assumption (A1) of Theorem 1 is fulfilled. With the chosen value of λ, Assumption (A2) of Theorem 1 also holds. Therefore, by Theorem 2, leader-following consensus for multi-agent system (19) with consensus control law (20) at every update time is reached asymptotically. To illustrate the behavior of the solutions of the model with impulses occurring at random times, we consider several sample path solutions. We fix the initial values of the agents and the leader, and choose different values of the random waiting times in the following way:

- (i)

;

- (ii)

;

- (iii)

;

- (iv)

.

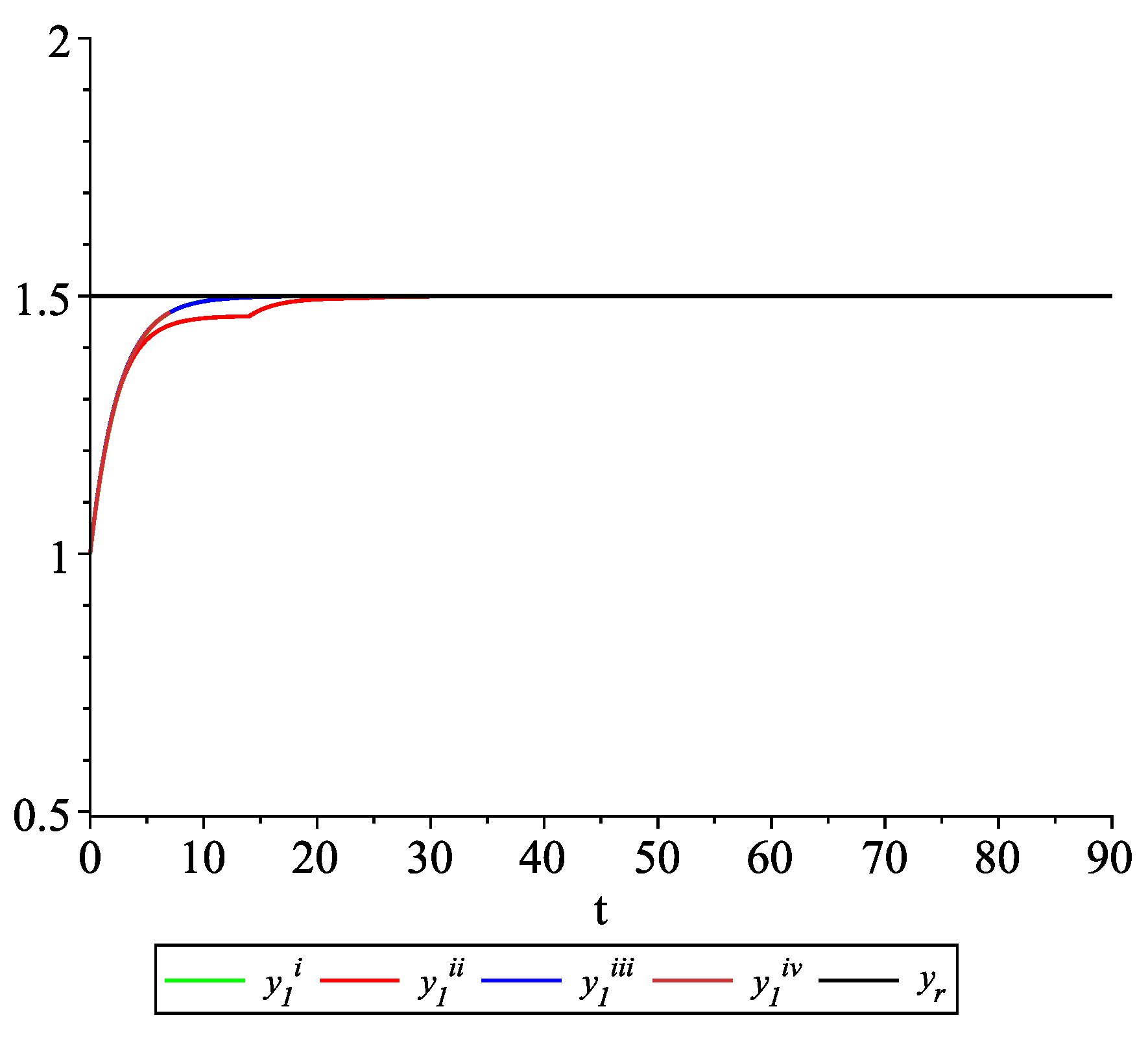

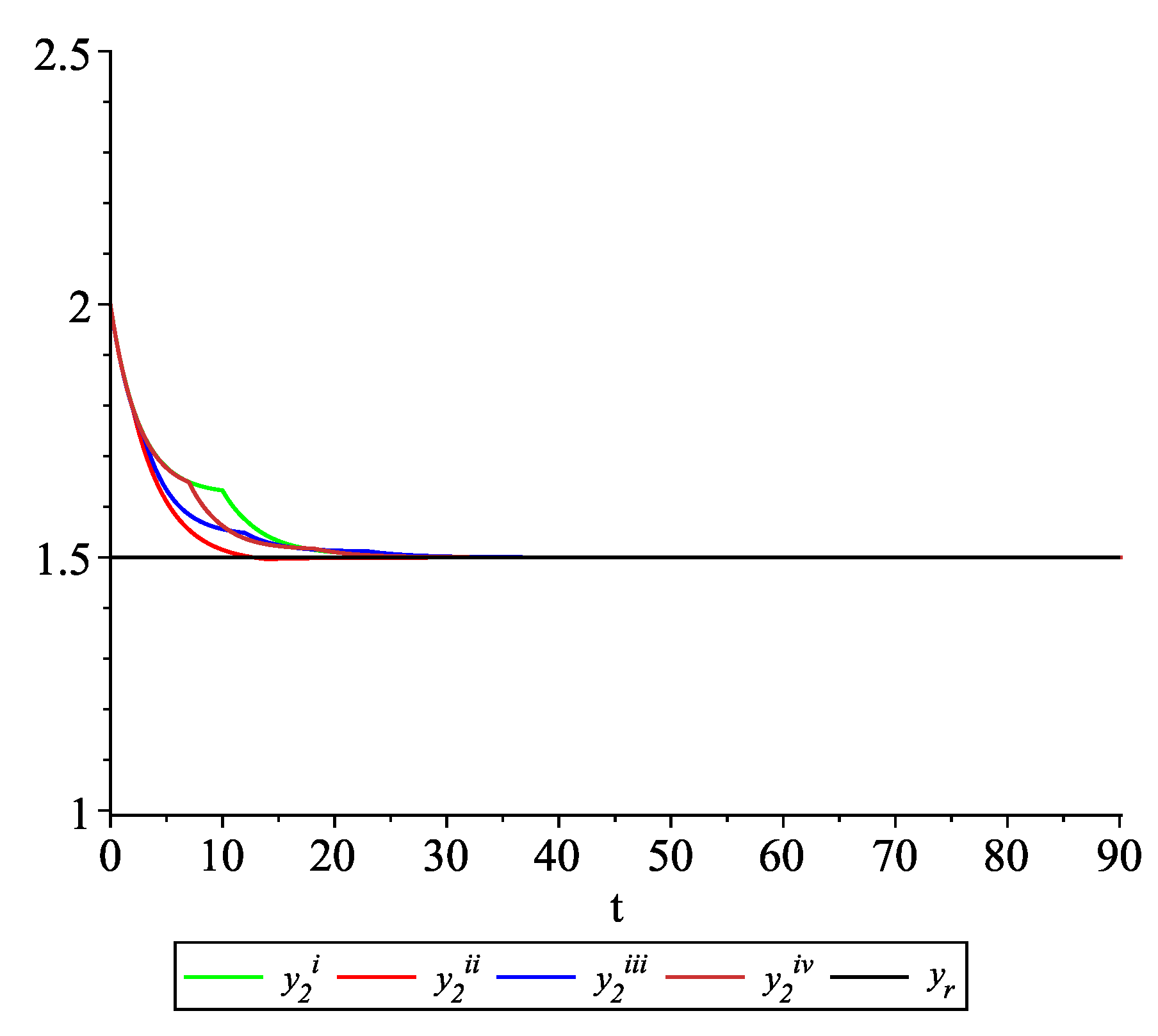

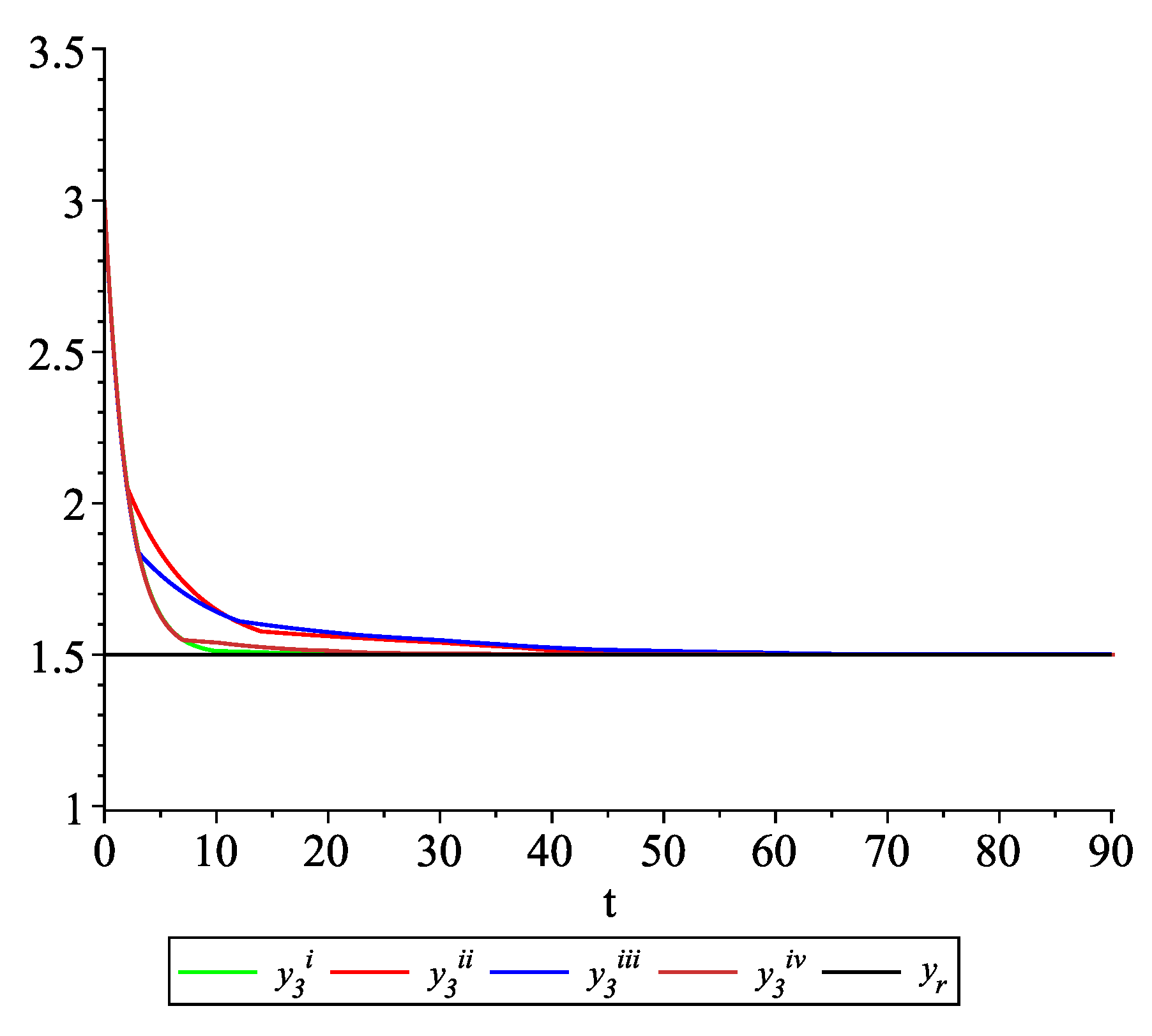

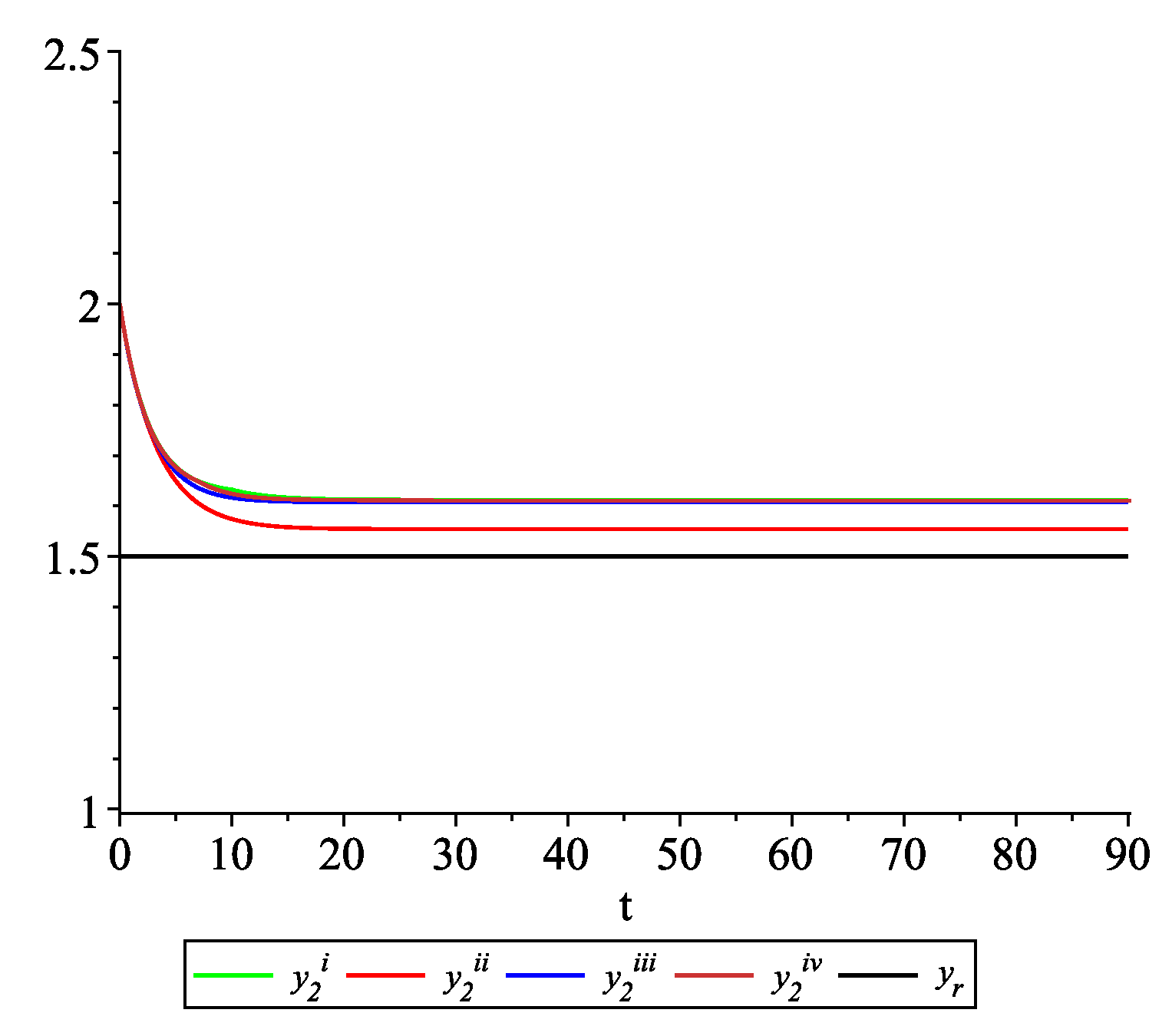

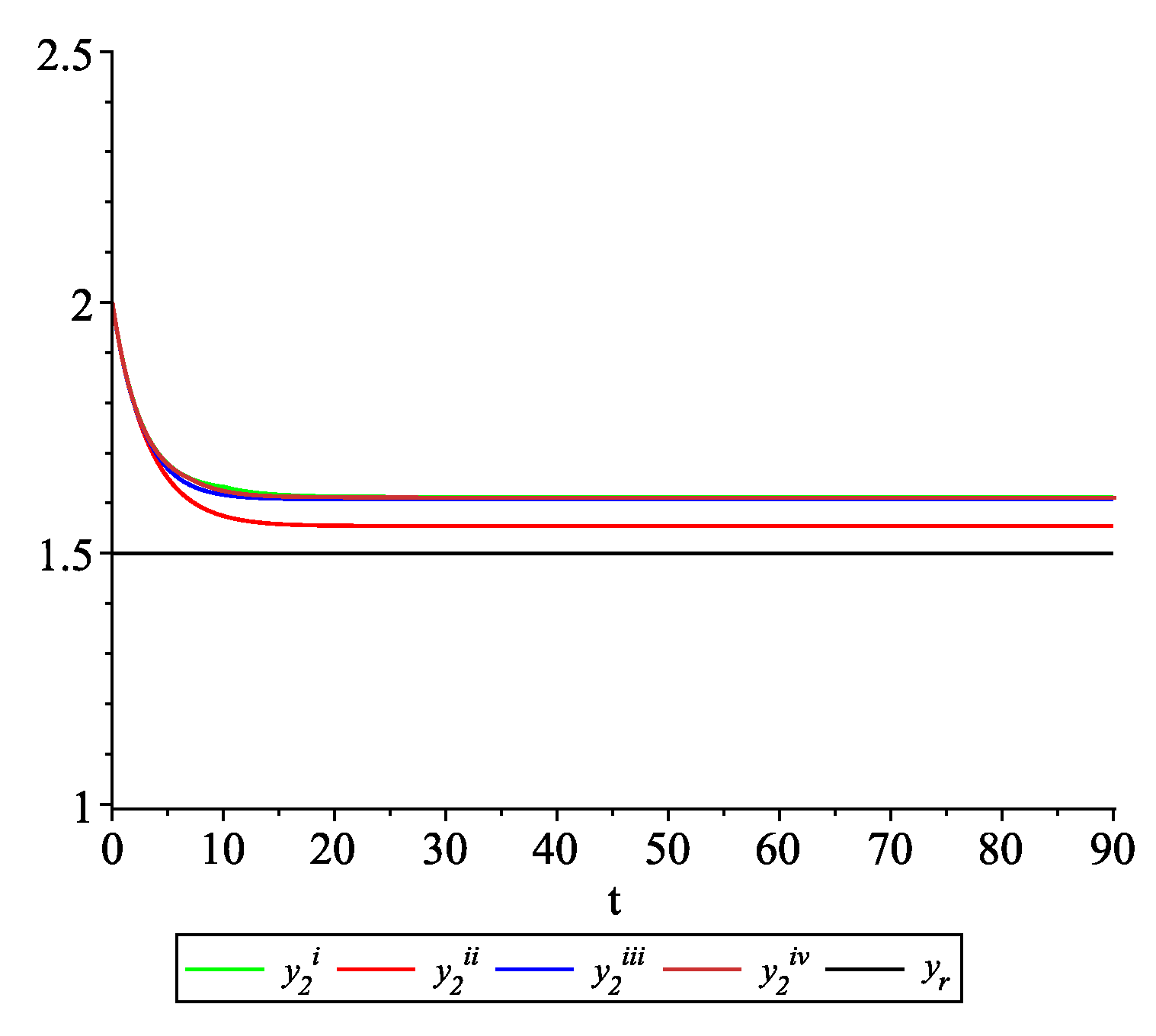

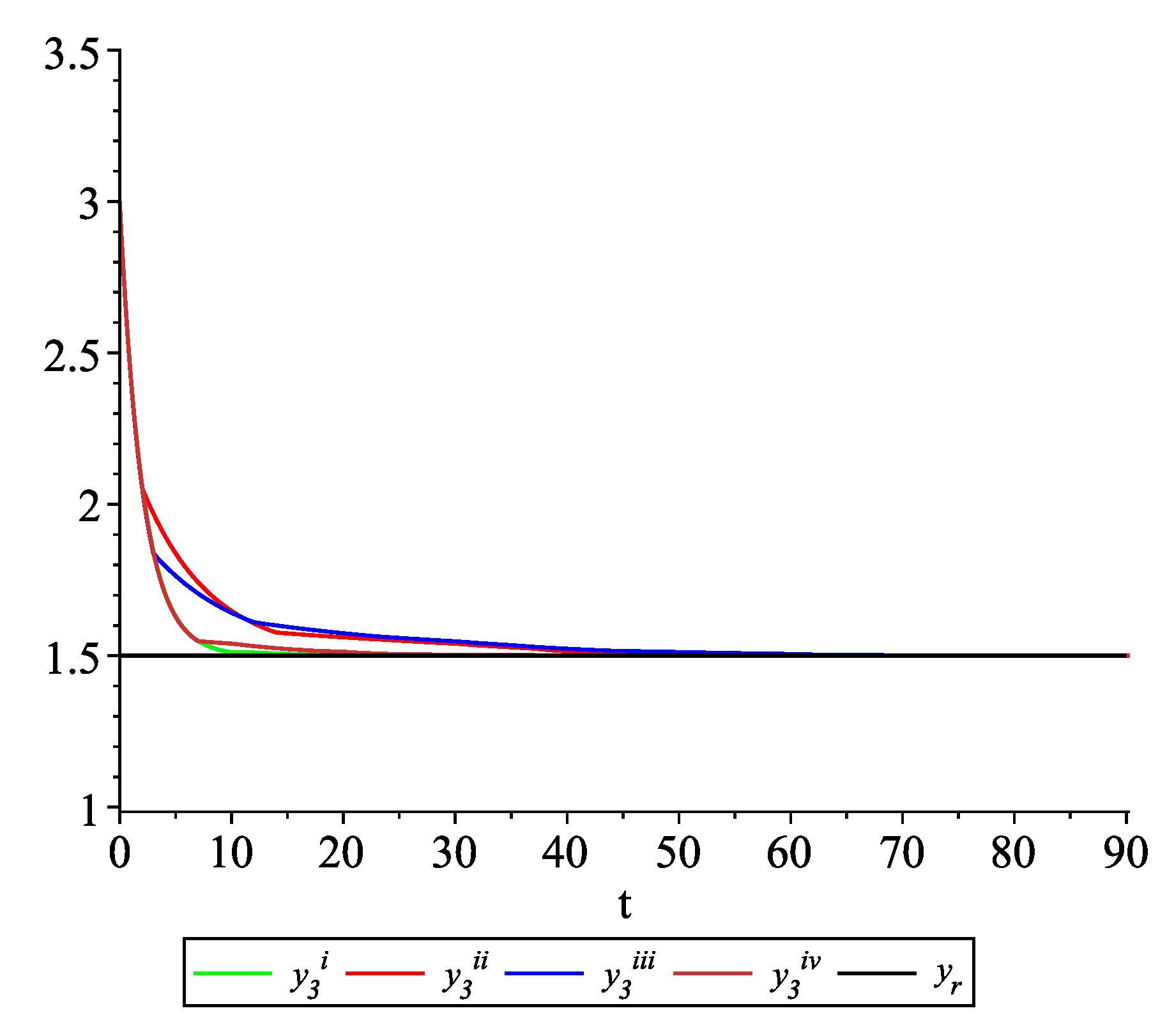

Clearly, the leader state is constant. For each choice (i)–(iv) of the values of the random waiting times, we get a system of impulsive differential equations with fixed points of impulses of type (11) with the matrices given above. Figures 2, 3 and 4 present the state trajectories of the leader and of agents 1, 2 and 3, respectively. It can be seen that leader-following consensus is reached for all considered sample path solutions.

Case 1.3. At any random update time, only the leader is available to each agent, and there is no information exchange between agents.

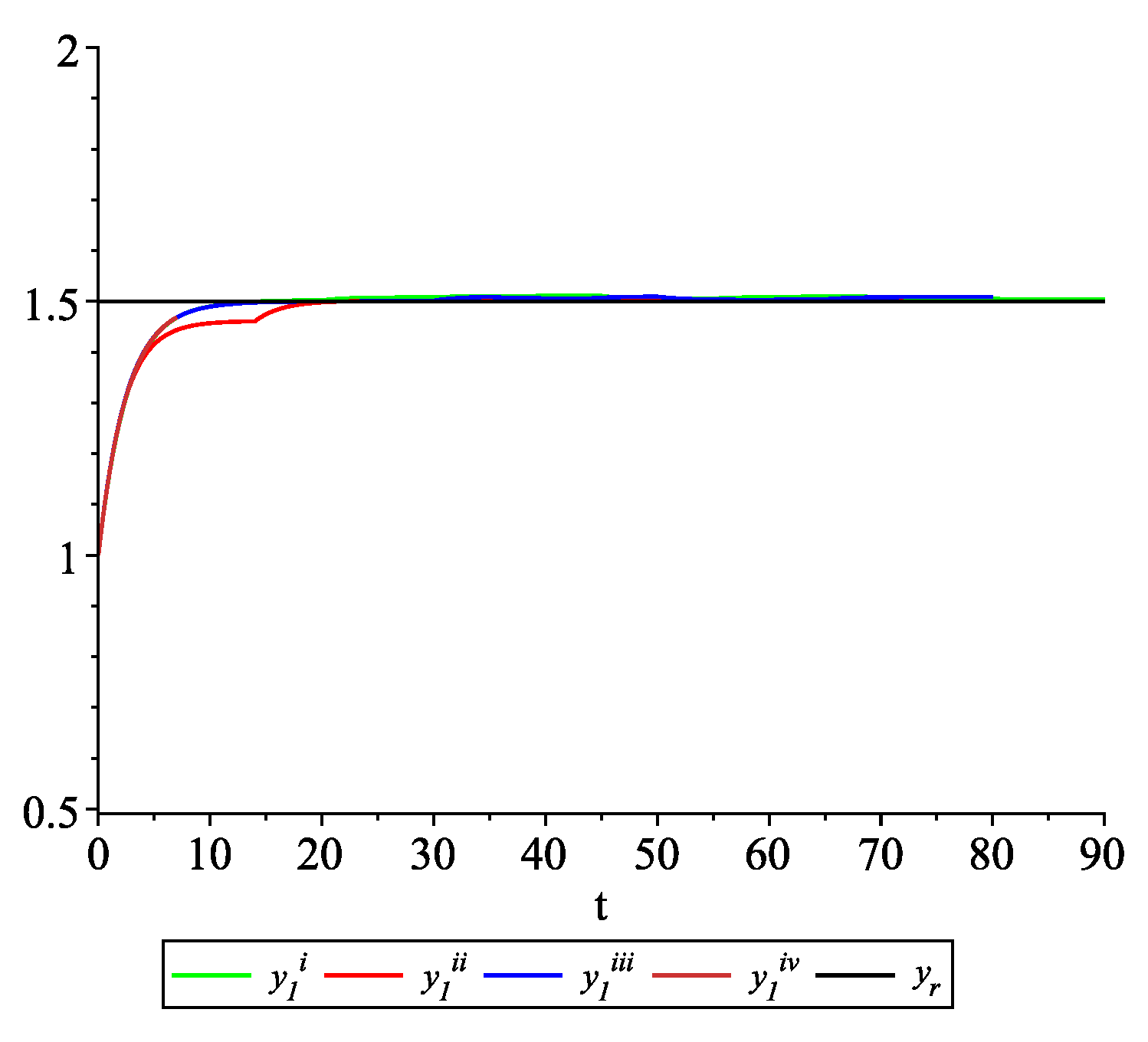

The dynamics of each agent between two update times are given by (19), and at update times the control law (21) is applied, with the remaining parameter taken as in Case 1.2. It is easy to check that assumptions (A1) and (A2) are fulfilled for the chosen parameters. According to Theorem 2, leader-following consensus for multi-agent system (19) with consensus control law (21) at every update time is reached asymptotically. To illustrate the behavior of the solutions of the model with impulses occurring at random times, we consider sample path solutions with the same data as in Case 1.2. Figures 5, 6 and 7 present the state trajectories of the leader and of agents 1, 2 and 3, respectively. It can be seen that leader-following consensus is reached in all considered sample path solutions.

Example 2. Let the system consist of four agents and the leader. In order to illustrate the meaningfulness of the studied model and the obtained results, we consider four cases.

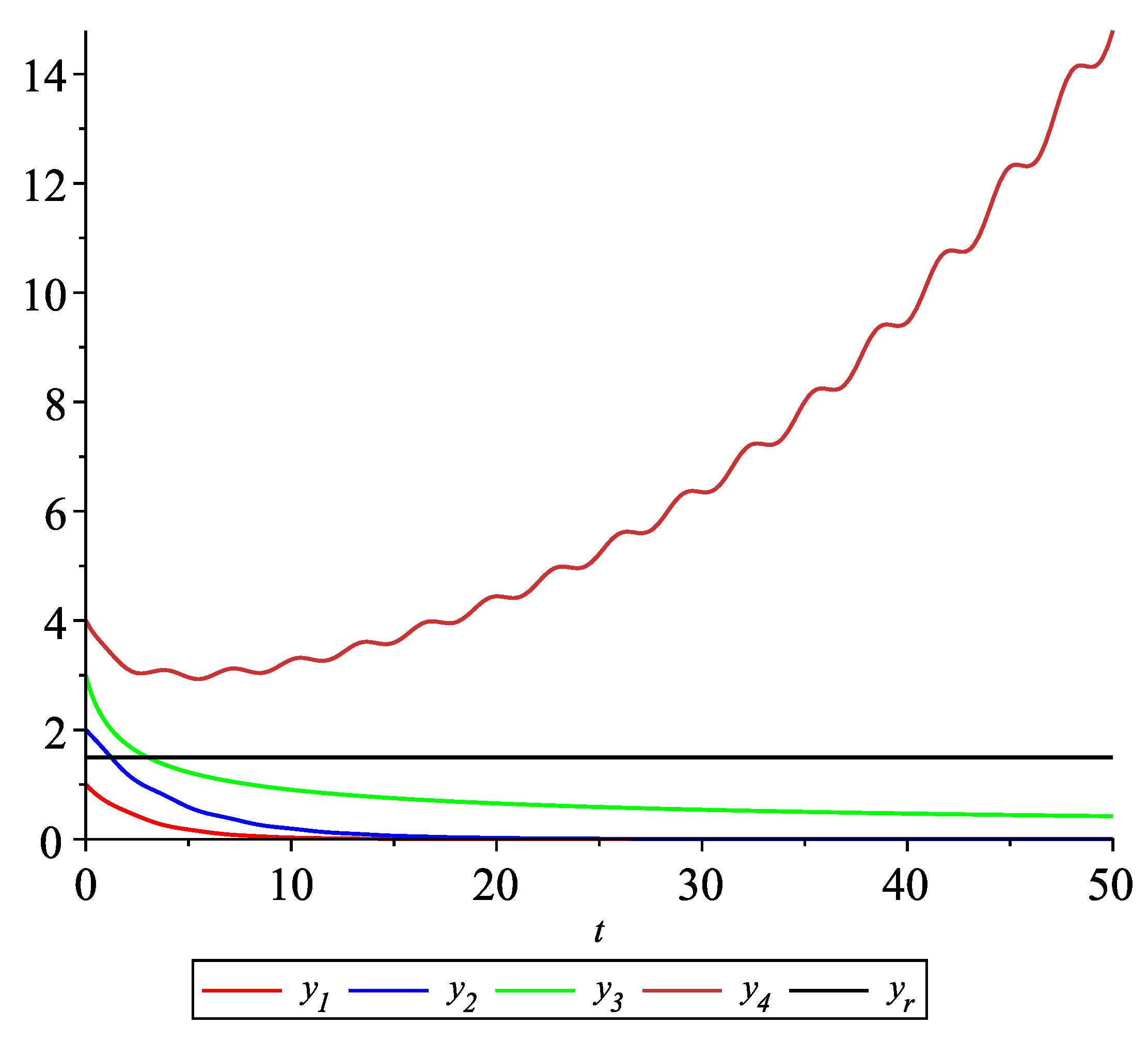

Case 2.1. There is no information exchange between agents, and the leader is not available.

The dynamics of the agents are given by (22). Figure 8 shows the solution to system (22) with the chosen initial values of the four agents and the leader. It can be seen that leader-following consensus is not reached.

Case 2.2. There is information exchange between agents occurring at random update times, and the leader is available to the agents.

The dynamics of each agent and of the leader between two update times are given by (23). At update times, the control law (24) is applied. Hence, Assumption (A1) of Theorem 1 is fulfilled. With the chosen value of λ, Assumption (A2) of Theorem 1 holds. Therefore, by Theorem 2, leader-following consensus for multi-agent system (23) with consensus control law (24) at every update time is reached asymptotically. To illustrate the behavior of the solutions of the model with impulses occurring at random times, we consider several sample path solutions. We fix the initial values and choose different values of the random waiting times in the following way:

- (i)

;

- (ii)

;

- (iii)

;

- (iv)

.

Clearly, the leader state is constant.

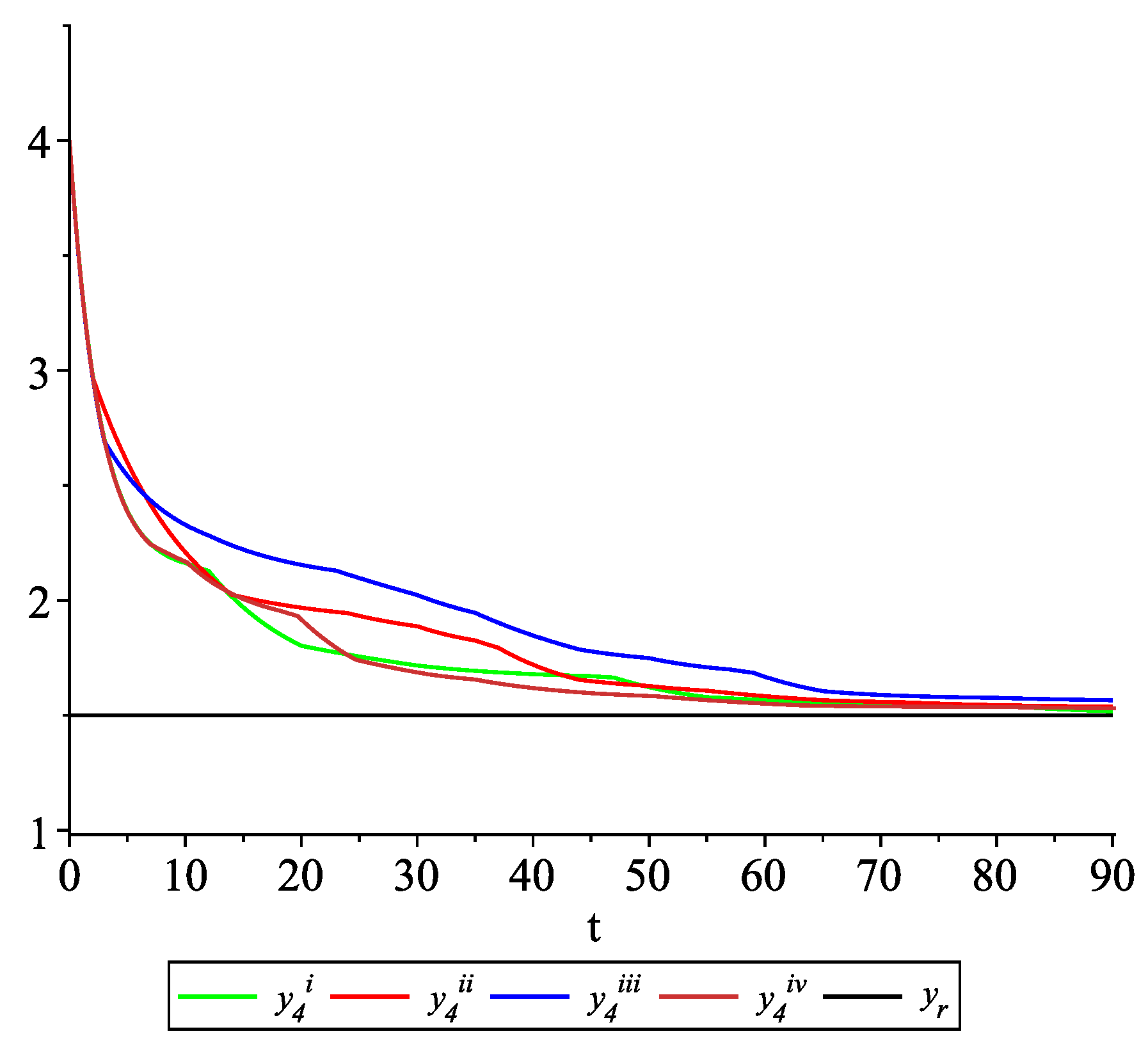

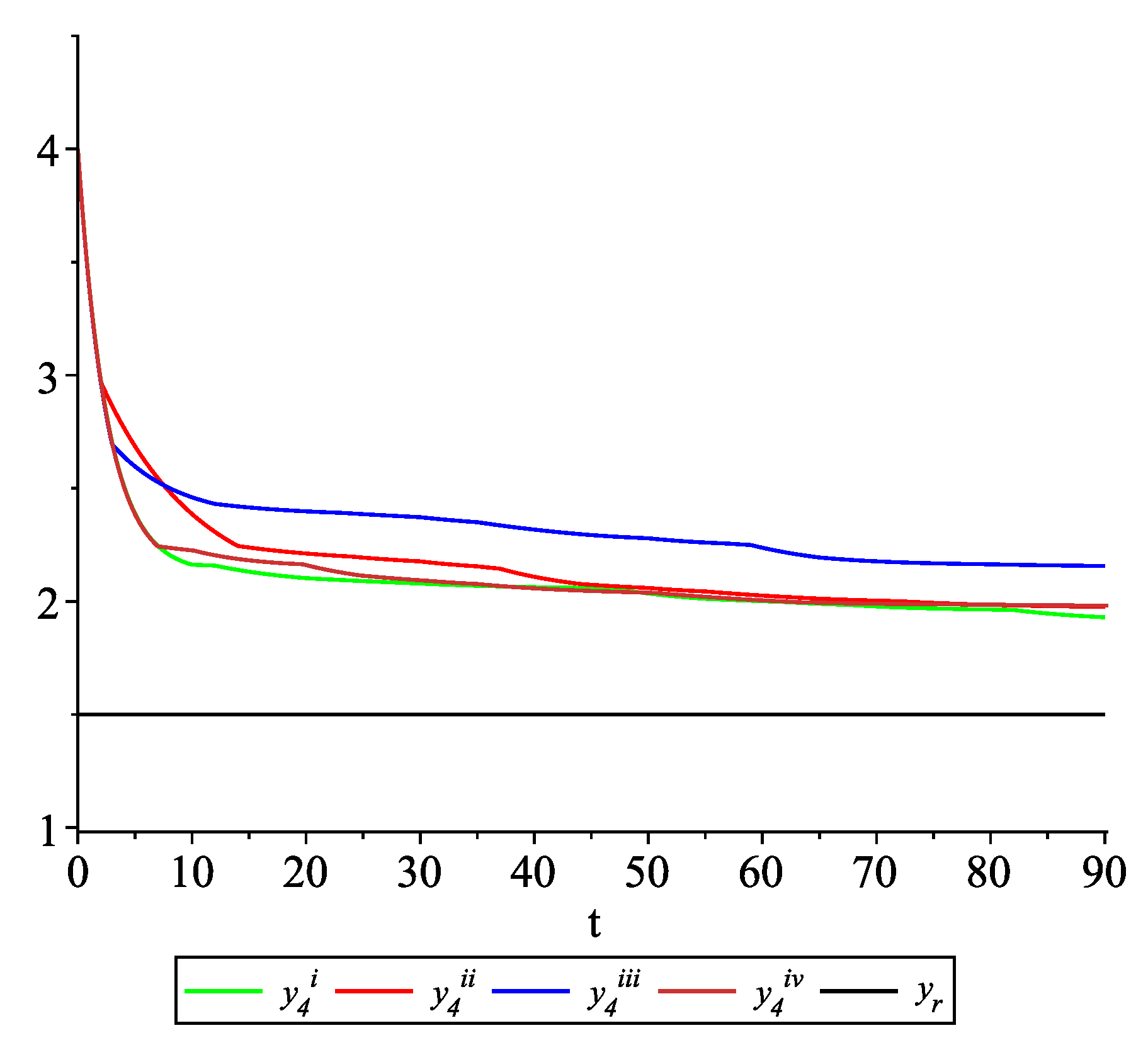

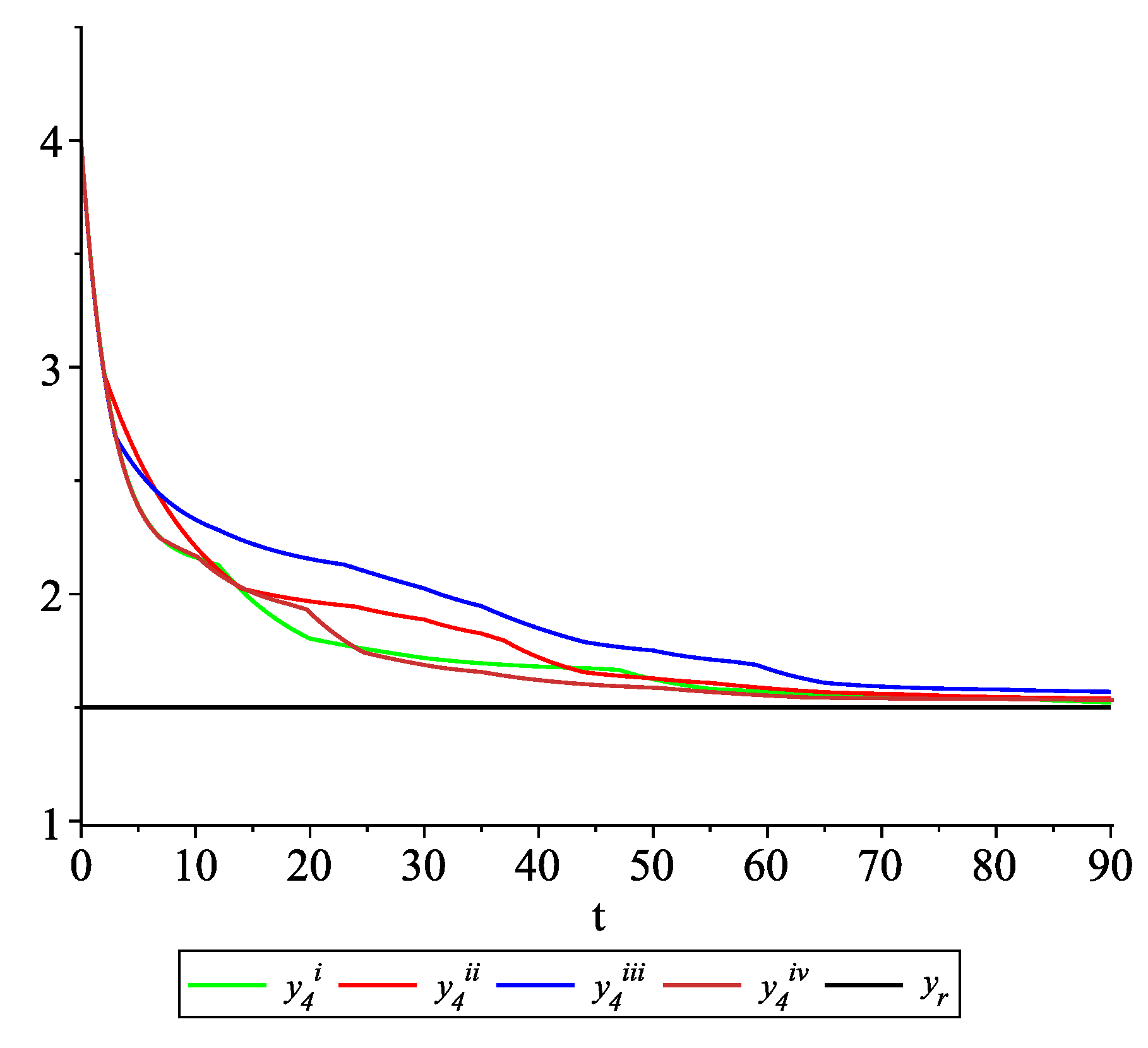

Figures 9, 10, 11 and 12 present the state trajectories of the leader and of agents 1, 2, 3 and 4, respectively. It can be seen that leader-following consensus is reached for all considered sample path solutions.

Case 2.3. There is information exchange between agents occurring at random update times, but the leader is not available to the agents.

The dynamics of each agent between two update times are given by (23), and at update times the consensus control law is applied with all leader weights equal to zero, since the leader is not available. In this case, inequalities (13) and (15) are satisfied, but, according to the observation in Remark 3, Assumption (A1) is not fulfilled. To illustrate the behavior of the solutions of the model with impulses occurring at random times, we consider sample path solutions with the same data as in Case 2.2. Figures 13, 14, 15 and 16 present the state trajectories of the leader and of agents 1, 2, 3 and 4, respectively. Observe that leader-following consensus is not reached in any of the considered sample path solutions.

Case 2.4. The leader is never available to one of the agents.

The dynamics of each agent between two update times are given by (23), and at update times the corresponding control law is applied. Since the leader weight of one agent is zero, by Remark 3, Assumption (A1) is not fulfilled.

To illustrate the behavior of the solutions of the model with impulses occurring at random times, we consider sample path solutions with the same data as in Case 2.2.

Figures 17, 18, 19 and 20 present the state trajectories of the four agents and the leader. Observe that leader-following consensus is not reached in any of the considered sample path solutions. This is visible in Figure 18, where the state trajectories of the second agent for various values of the random variables are presented. This shows the importance of Assumption (A1).

However, we emphasize that in the model considered in this paper, information exchange between agents is possible only at discrete random update times, and the waiting time between two consecutive updates is exponentially distributed (as in queueing theory). Clearly, if the leader is continuously available to the agents, then leader-following consensus is reached. In this paper, however, we consider the situation in which the leader is available only from time to time, at random times, and thus not continuously. We provide conditions under which, despite the lack of a continuous information flow from the leader to the agents, leader-following consensus is still reached. Both examples illustrate that interaction between the leader and the other agents only at random update times significantly changes the behavior of the agents. If conditions (A1) and (A2) are satisfied, then leader-following consensus is reached in multi-agent system (2).